Abstract

The use of Artificial Intelligence (AI) in healthcare is expanding rapidly, including in oncology. Although generic AI development and implementation frameworks exist in healthcare, no effective governance models have been reported in oncology. Our study reports on a Comprehensive Cancer Center’s Responsible AI governance model for clinical, operations, and research programs. We report our one-year AI Governance Committee results with respect to the registration and monitoring of 26 AI models (including large language models), 2 ambient AI pilots, and a review of 33 nomograms. Novel management tools for AI governance are shared, including an overall program model, model information sheet, risk assessment tool, and lifecycle management tool. Two AI model case studies illustrate lessons learned and our “Express Pass” methodology for select models. Open research questions are explored. To the best of our knowledge, this is one of the first published reports on Responsible AI governance at scale in oncology.


Introduction

Artificial intelligence (AI) is disrupting healthcare, and oncology care is no exception. Research is currently underway evaluating the implementation of AI at all stages of a patient’s cancer journey, for clinical trial matching, care team decision support, and the full breadth of cancer research domains1,2,3,4. The Food and Drug Administration’s (FDA) registry for AI and Machine Learning-enabled medical devices contains 949 “devices” as of August 20245, with a growing number that are oncology-specific (75 registered, 40 added in the last 3 years)3. Cancer centers are also testing Generative AI in non-clinical settings6. Our institution is deliberately testing and deploying numerous forms of AI, such as Generative, Ambient, Intelligent Automation, and traditional AI, Machine Learning (ML), and natural language processing (NLP)7,8,9,10,11,12,13,14,15.

Oncology-specific frameworks for Responsible AI (RAI) have yet to be established. While numerous RAI frameworks and regulations for best practices in design and deployment are emerging (https://train4health.ai/)5,16,17,18,19,20, none addresses the specific opportunities and challenges of implementation in oncology. Despite this, the need for quality assurance and local model validation in oncology is pervasive and significant21,22. Oncology has unique use cases for AI, such as predicting tumor evolution and treatment response, tumor genomic decision support, pathology image analysis, radiation oncology treatment planning, improving treatment time on chemotherapy, and chemotherapy toxicity prediction. Oncology AI might exacerbate risks to equitable cancer care given the prevailing disparities in screening, access, and treatment outcomes23. It is unknown what level of resourcing (e.g., staffing, infrastructure, digital tools) might constitute minimally responsible oversight of these efforts. There are potentially new regulations to consider for AI-enabled personalized therapies24. Therefore, RAI in oncology requires organizations to decide whether to create new governance structures or to treat AI like any other new technological tool.

AI models developed for use in general populations are not uniformly transportable into oncology settings. Few rigorous studies of large language models (LLMs) have been done in cancer care25. Off-the-shelf models developed on general patient populations may need significant tuning21,26. Although tools like Ambient AI are being evaluated in general care domains27,28, rigorous studies are pending in oncology. While general deterioration models may have utility in oncology29,30, we found that such models require significant tuning for oncology inpatients. New evidence is emerging regarding when not to use certain AI tools31.

Our experience addressing the aforementioned open questions forms the basis for this study. We report on a Comprehensive Cancer Center’s working RAI governance model to improve patient care, research, and operations. Our objectives were to assess RAI in oncology in two phases: (1) the design and development of a responsible AI governance approach for oncology, and (2) the implementation of lessons learned. We discuss the results for each phase and lessons learned through one year of real-world AI governance experience across clinical, operations, and research settings. The novel management tools we developed, and how they have been implemented in practice, are also described. Two AI model case studies are shared (built vs. acquired), and we also discuss open questions for future research.

Results

The reusable frameworks, processes, and tools that resulted from the design and development phase of our study are shared in this Results section. Using these tools in the implementation phase of the study, we registered, evaluated, and monitored 26 AI models (including large language models), and 2 ambient AI pilots. We also conducted a retrospective review of 33 live nomograms using these approaches. Nomograms are clinical decision aids that generate numeric or graphical predictions of clinical events based on an underlying statistical model with various prognostic variables. Descriptive statistics and use cases shared in this section highlight how these frameworks, processes, and tools come together to support our RAI governance approach in action.

Phase 1 – Design & development: AI task force results

The AI Task Force (AITF) work resulted in our overall AI program framework (see Fig. 1) and the identification of 4 main challenges to be overcome: 1) high-quality data for AI model development, 2) high-performance computing power, 3) AI talent capacity, and 4) policies and procedures. The initial AI Model Inventory completed by the AITF in Q4 2023 identified 87 active projects (66 research (76%); 15 clinical (17%); 6 operations (7%)) spanning 9 program domains. The leading AI development teams for clinical and operational models at MSK are Strategy & Innovation (9 models), Medical Physics (3 models), and Computational Pathology (3 models). The AITF subgroups identified 22 priorities for future AI-related investment, which were consolidated into 5 high-level strategic goals. The AITF developed a partnership model (see Fig. 1, right column) for use in evaluating potential AI vendors. The AITF also recommended expanding high-quality curated data by accelerating curation with AI-enabled methods.

Overall AI program structure developed by Memorial Sloan Kettering’s AI Task Force with three programmatic AI domains (Research, Clinical, Operations), supported by governance, partnerships, and underlying infrastructure, skills and processes. MSK = Memorial Sloan Kettering; AI/ML artificial intelligence/machine learning, LLM large language model, HR human resources, EHR electronic health record, ML Ops machine learning operations. Source: Memorial Sloan Kettering.

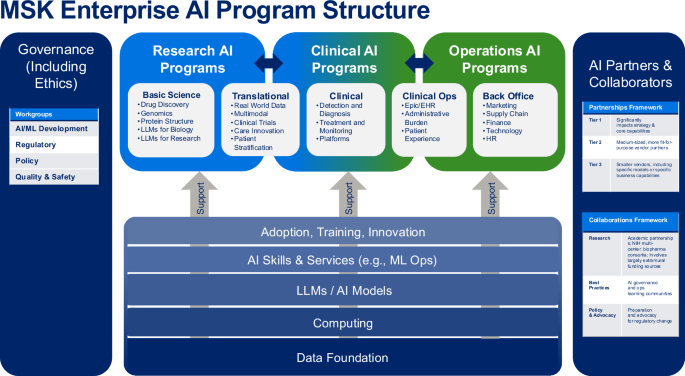

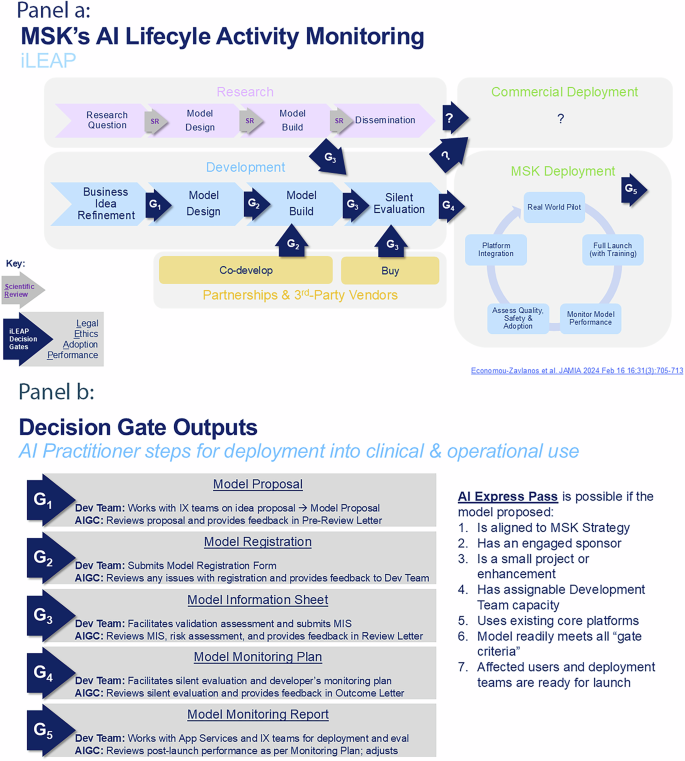

Phase 1 – Design & development: AI Governance Committee Results

The AI Governance Committee (AIGC) work resulted in our novel oncology AI Lifecycle Management operating model, iLEAP, and its decision gates (“Gx”; see Fig. 2, Panel a). iLEAP stands for Legal, Ethics, Adoption, Performance. It has three main paths for AI practitioners: 1) research (purple path), 2) home-grown build (blue path), and 3) acquired or purchased (orange path). Models developed for research projects are out of scope for the AIGC and are governed by existing scientific review processes via MSK’s IRB to preserve scientific freedom and velocity; the AIGC acts as a consultant to the IRB in this path. However, if AI practitioners bring research models forward for translation into clinical or operational use, the AIGC review process is initiated, entering no later than G3. Decision gate definitions and Express Path criteria are described in Fig. 2, Panel b. The “embedded” AIGC is positioned within the enterprise digital governance structure as shown in Fig. 3. The AIGC developed a Model Information Sheet (MIS, aka “nutrition card”), which is now used to prospectively register in our Model Registry all models headed for silent-evaluation mode, pilot, or full production deployment (see Table 1 for MIS details). Anticipatable AI adverse events (“aiAEs”) are captured at G2 and formalized in the MIS at G3, pre-launch. The Model Registry currently contains 21 MISs. The AIGC AI/ML workgroup adapted and launched a risk assessment model for reviewing acquired and in-house-built AI models (see Table 2)32. Model risk scores balance the averaged risk factors in the upper part of Table 2 against the averaged mitigation measures in the lower part of Table 2. Individual risk factor scores are 1 (Low), 2 (Medium), or 3 (High); mitigation measures are binary, 1 (present) or 0 (absent). We also began implementing TrAAIT, a validated tool we developed previously for measuring clinician trust in AI33, as part of the G5 evaluation toolkit. This trust assessment is used, when relevant, in the “Assess Quality, Safety & Adoption” step within iLEAP G5, as shown in Fig. 2, Panel a.
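The averaging scheme for risk factors and mitigation measures can be illustrated with a short Python sketch. The text does not state how the averaged mitigation score offsets the averaged risk score, nor the cut-points that map the result to “low”, “medium”, or “high”; the subtraction rule, the thresholds `LOW_MAX` and `MEDIUM_MAX`, and all factor names below are hypothetical assumptions, not the contents of Table 2.

```python
from statistics import mean

# Hypothetical cut-points on the net score; the AIGC's actual thresholds are not stated in the text.
LOW_MAX = 0.75
MEDIUM_MAX = 1.5


def average_risk(factor_scores: dict[str, int]) -> float:
    """Average the individual risk factors, each scored 1 (Low), 2 (Medium), or 3 (High)."""
    for name, score in factor_scores.items():
        if score not in (1, 2, 3):
            raise ValueError(f"risk factor {name!r} must be 1, 2, or 3, got {score}")
    return mean(list(factor_scores.values()))


def average_mitigation(measures: dict[str, int]) -> float:
    """Average the binary mitigation measures: 1 = present, 0 = absent."""
    for name, present in measures.items():
        if present not in (0, 1):
            raise ValueError(f"mitigation {name!r} must be 0 or 1, got {present}")
    return mean(list(measures.values()))


def risk_tier(factor_scores: dict[str, int], measures: dict[str, int]) -> tuple[float, float, str]:
    """Return (averaged risk, averaged mitigation, tier).

    Assumption: mitigation offsets risk by simple subtraction before thresholding.
    """
    risk = average_risk(factor_scores)
    mitigation = average_mitigation(measures)
    net = risk - mitigation
    if net <= LOW_MAX:
        tier = "low"
    elif net <= MEDIUM_MAX:
        tier = "medium"
    else:
        tier = "high"
    return round(risk, 2), round(mitigation, 2), tier


# Illustrative inputs only: 15 hypothetical factors (14 Low, 1 Medium) and
# 5 hypothetical mitigations (4 present), which average to 1.07 and 0.8.
factors = {f"factor_{i:02d}": 1 for i in range(1, 16)}
factors["factor_15"] = 2
mitigations = {f"mitigation_{i}": 1 for i in range(1, 5)}
mitigations["mitigation_5"] = 0
print(risk_tier(factors, mitigations))  # (1.07, 0.8, 'low')
```

Keeping the two averages separate, rather than folding them into one number early, preserves the reviewer's view of how much of a tier comes from inherent risk versus the safeguards around it.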

a End-to-end AI model lifecycle management model employed by the AI Governance Committee with three paths to entry into enterprise-wide deployment (research-purple path; in-house development-blue path; 3rd-party co-development or purchase-orange path). b Decision gate definitions, the outputs of each decision gate, and express path criteria used by the AI Governance Committee for review of each registered model. iLEAP Legal, Ethics, Adoption, Performance, MSK Memorial Sloan Kettering, IX Informatics, Dev Development, MIS Model Information Sheet, App Application, AIGC AI Governance Committee. Source: Memorial Sloan Kettering.

Positioning of the AI Governance Committee as embedded within overall digital governance structures, along with goals, primary activities, and key collaborations with other related committees needed for successful governance of AI models throughout their lifecycle. IRB Institutional Review Board, DAC Digital Advisory Committee, RAI Responsible Artificial Intelligence, MSK Memorial Sloan Kettering, IT Information Technology. Source: Memorial Sloan Kettering.

Phase 2 – Implementation: AI Model Portfolio Management Results

The AIGC treats the overall collection of AI models at various stage gates of development as an actively managed “portfolio”. The Model Registry enables tracking which models are at each iLEAP gate as they mature. Part of our iterative refinement process over the last year has been to clarify for AI practitioners the specific entry and exit criteria for each gate, so that they are easily explained and achievable. For example, a key transition point is from G3 (Model Information Sheet) into G4 (Model Monitoring Plan), the point at which models are “productionized” after a period of validation in a testing environment (see Fig. 2, Panel a). For AI models exiting G3, sponsors and leads, working in collaboration with the AIGC and the AI/ML Solutions Engineering team, must have a) completed model registration in the registry, b) specified responses to the online questionnaire for the Model Information Sheet (including FDA Software-as-a-Medical Device (SaMD) screening questions), c) completed a risk assessment with our risk management tool, and d) received an AIGC Review Letter approving the move of the model from the testing environment into enterprise production systems. Meeting all the criteria for an “Express Pass” enables a more rapid review by the AIGC and deployment into production (see Fig. 2, Panel b), especially if the AI practitioner proposes to deploy their model through existing, approved core platforms. A visual of this Express Pass process flow is depicted for Case Study #1 below.
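The four G3 exit requirements amount to an all-or-nothing checklist. The sketch below encodes them as a hypothetical Python dataclass; the class and field names are illustrative and are not taken from MSK's registry or questionnaire. Express Pass eligibility depends on the separate criteria in Fig. 2, Panel b, which are not reproduced here.

```python
from dataclasses import dataclass, fields


@dataclass
class G3ExitChecklist:
    """Hypothetical record of the four G3 exit criteria (a) to (d)."""

    registered_in_model_registry: bool = False   # (a) model registration
    mis_questionnaire_completed: bool = False    # (b) includes FDA SaMD screening questions
    risk_assessment_completed: bool = False      # (c) risk management tool
    aigc_review_letter_received: bool = False    # (d) approves move from testing to production

    def missing_criteria(self) -> list[str]:
        """Names of criteria not yet met, in the order (a) to (d)."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def ready_for_g4(self) -> bool:
        """True only when every G3 exit criterion is satisfied."""
        return not self.missing_criteria()


status = G3ExitChecklist(registered_in_model_registry=True, mis_questionnaire_completed=True)
print(status.ready_for_g4())       # False
print(status.missing_criteria())   # ['risk_assessment_completed', 'aigc_review_letter_received']
```

Reporting the missing items, not just a pass/fail flag, mirrors the stated goal of making gate criteria easy to explain to AI practitioners.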

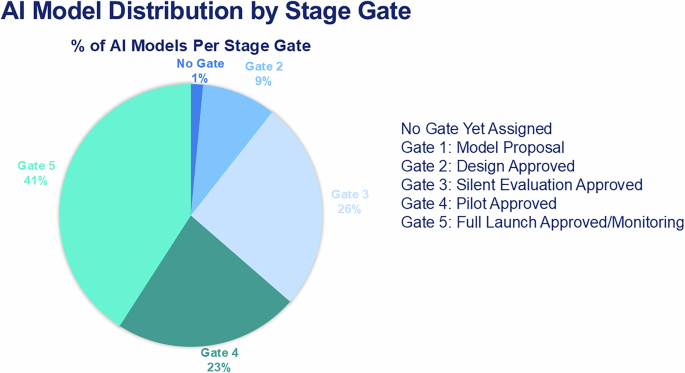

Our Model Registry allows us to track the distribution of models across the iLEAP stage gates (see Fig. 4). The AI/ML Solutions Engineering team has supported the development of 19 models year-to-date (see Table 3) and conducted 17 acquired-model risk assessments. The AIGC has provided 2 formal “Express Path” Review Letters to sponsors (one each to our Radiology and Medical Physics departments). We reviewed G5 success metrics for 2 Ambient AI pilots and approved one of these to move to an expanded deployment. We retrospectively reviewed 33 live clinical nomograms with sponsors as part of G5 monitoring, which resulted in sunsetting 2 models because the clinical evidence had evolved. The Quality & Safety workgroup is planning for the interception and remediation of aiAEs for models in the G4-5 stages, which will guide the “Assess Quality, Safety & Adoption” step in G5. This will leverage existing voluntary reporting and departmental quality assurance infrastructure to avoid duplicative processes. Trend analysis shows that overall AI demand is rising: AI project intake volume was up 63% in calendar year 2024 vs. 2023. Five proposals reviewed for deployment in 2024 were 3rd-party vendor models, compared with 15 vendor models proposed and reviewed for deployment in 2025.

Monitoring the performance and impact of models that go live in production is mission critical in a mature RAI governance framework. The AIGC adopted four main components of evaluation in G5 (Monitoring Report), which are prospectively planned in G4 (Monitoring Plan) with the sponsors and leads for each model. The main components of G5 monitoring are: (1) model performance in “production” compared with “the lab” (e.g., precision, recall, and F-measure for monitoring drift), (2) adoption (using the TrAAIT tool), (3) attainment of pre-specified success metrics, and (4) rate of aiAEs for safety monitoring. Each model is assigned a date for formal AIGC review, usually 6–12 months after go-live or a major revision. Evaluation criteria in G5 are tailored to a model’s risk profile and to what it accomplishes in the workflow. One example in practice is inpatient clinical deterioration in oncology. Our home-grown Prognosis at Admission model predicts 45-day mortality10. Its success metrics focus on goal-concordant care, such as goals-of-care documentation compliance, appropriate referral to hospice, and rate of “ICU days” near the end of life. Of note, this model is planned for re-launch within our new EHR and will be compared with an EHR-supplied deterioration model later this year on these metrics and reviewed by the AIGC. A second example is our Ambient AI deployment: we will assess documentation quality, “pajama time”, adoption rate, and cognitive burden, and the AIGC will use that evaluation to determine whether Ambient AI’s use should be further expanded.
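The first G5 component, comparing production performance with the lab baseline, can be sketched for a binary classifier as below. The 0.05 tolerance and the function names are hypothetical assumptions; the text does not specify how large a drop counts as drift, and real monitoring would run on far larger samples than this toy example.

```python
def precision_recall_f1(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Binary precision, recall, and F1 (F-measure with beta = 1) for 0/1 labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be the same length")
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def drifted_metrics(lab: dict[str, float], production: dict[str, float],
                    tolerance: float = 0.05) -> list[str]:
    """Metrics whose production value fell more than `tolerance` (hypothetical) below the lab baseline."""
    return sorted(name for name in lab if lab[name] - production[name] > tolerance)


lab = precision_recall_f1([1, 1, 0, 0], [1, 1, 0, 0])    # validation in the testing environment
prod = precision_recall_f1([1, 1, 1, 0], [1, 0, 0, 0])   # prospective cases after go-live
print(drifted_metrics(lab, prod))  # ['f1', 'recall']
```

Here precision is unchanged while recall and F1 fall, the kind of pattern that would prompt an AIGC review before the scheduled 6–12 month checkpoint.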

Phase 2 – Implementation: Case studies of RAI governance in action

To demonstrate our governance approach in real-world use, a recent AIGC agenda (October 2024) and two recent AI model review case studies are described below. Typical AIGC agendas include programmatic updates, 1-2 key topics, new business generated from our intake process, and follow-up and monitoring of models previously reviewed and deployed into production. Our two case studies below are selected to illustrate how our Express Pass works, and to highlight some of the issues of “build vs. buy” using one example of each. Of note, only these 2 of 19 live models (10.5% of models approved for G5 deployment) have met Express Path criteria as of the end of this study period.

The October 2024 AIGC agenda included new business, a key topic, model follow-ups, and a review of the results of two Ambient AI pilots and associated decisions. The AIGC made two decisions for the Ambient AI pilots: (1) obtaining informed 2-party consent from patients and care teams for these pilots was approved as consistent with our guiding AI ethics principles (even though New York State law only requires 1-party consent for recordings), and (2) an expanded Ambient AI pilot was recommended based on the initial G5 Monitoring Report. The new business review included 1 model at G1, 20 models at G2, and 3 models at G3 (5 developed at MSK; 19 vendor-developed). Our key topic involved finalizing updates to the language in our charter on decision rights to pause or terminate models with degraded performance or safety issues. Lastly, we initiated proactive updates, via the Policy workgroup, to existing policies and procedures on allowed and prohibited uses of AI.

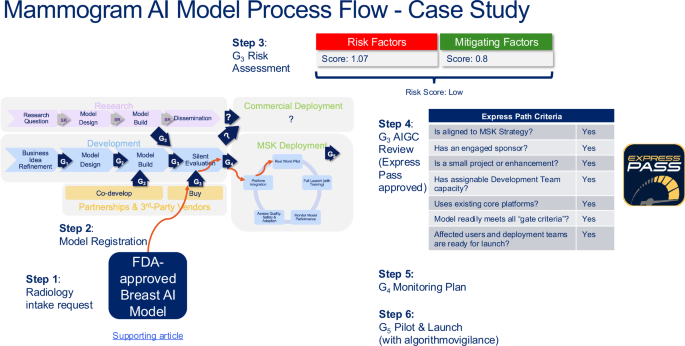

The first case study describes our AI governance process flow for an FDA-approved radiology AI model from a 3rd-party vendor. This AI model uses image-based analysis to identify possible breast cancers. The second case study describes an AI model built internally by our Medical Physics department: a tumor segmentation model for brain metastases built on MRI images.

Our first case study was a “buy/acquire” AI model example from a 3rd-party vendor. Our Radiology Department identified an FDA-approved AI model from a new 3rd-party vendor that assists radiologists in triaging mammograms, using image-based analysis to identify possible breast cancers. Radiology asked for an “Express Pass” review (see Fig. 2, Panel b). The model operates on top of our enterprise Picture Archiving and Communication System (PACS) as an aid to the interpreting radiologist. The idea proposal submitted through intake was reviewed and refined by the AIGC with the Radiology team, Informatics, and Application Services. Radiology at MSK has experience developing and implementing AI. The Chair of the department is the Sponsor, and the Chief of the Breast Service is the Lead. The overall risk assessment score using our tool in Table 2 was “low”, driven by an averaged risk factor score of 1.07 and mitigated by the presence of human-in-the-loop review by the interpreting radiologist, post-go-live monitoring reports provided by the model vendor, and FDA approval for the intended use (averaged mitigation measure score 0.8). The Radiology Department has an established quality assurance program, which it will leverage in its post-go-live monitoring plan, and an internal education plan. The AIGC recommended proceeding to bring this model live, with ongoing monitoring (next G5 checkpoint 6–12 months post-live). The turnaround time for the “Express Pass” review by the AIGC was 2 weeks. MSK’s enterprise Application Services deployed the model for Radiology. A visual summarizing this model’s progression through the iLEAP model, including the Risk Assessment and Express Pass approach, is depicted in Fig. 5.

Real-world example of an acquired FDA-approved radiology AI model from a 3rd-party vendor, and the steps on the path it followed through the lifecycle management stage gates. iLEAP Legal, Ethics, Adoption, Performance; FDA Food and Drug Administration, AIGC AI Governance Committee. Source: Memorial Sloan Kettering.

Our second case study was a “build” AI model example, developed in-house. Our Medical Physics department has a long history of developing and implementing in-house clinical AI methods for radiation oncology normal tissue contouring, tumor segmentation, and treatment planning, with over 10,000 patient treatment plans facilitated by their in-house AI tools13,34. Medical Physics requested an “Express Pass” review from the AIGC for an in-house model they developed for brain metastasis tumor segmentation built on MRI images. The idea proposal submitted through intake was reviewed and refined by the AIGC with the Medical Physics team, Informatics, and the AI/ML Platform Group. The Chair of the department is the Sponsor, and the Vice Chair is the Lead. The risk assessment score was “medium”, driven by use with live patients but mitigated by Medical Physics’ established, human-in-the-loop quality assurance program for software deployment35. The AIGC recommended proceeding to bring this model live, with ongoing monitoring (next G5 checkpoint 6–12 months post-live). The turnaround time for the “Express Pass” review by the AIGC was 2 weeks. MSK’s Medical Physics department will deploy the model.

Discussion

This study is one of the first published reports on RAI governance at scale in oncology. We demonstrated that governance and quality assurance of oncology AI models are feasible at scale but require key components for success. This is especially true because our Model Registry data show that AI intake volume increased from 2023 to 2024 and is projected to increase further in 2025.

Our study is distinguished from prior research on non-clinical explorations6 by the description of our experience governing clinical and operations AI. Our RAI governance approach is aligned with but distinguished from more general guidance in previously released recommendations (https://train4health.ai/)5,16,17,18,19,20 in that we provide a deeper level of specificity and lessons learned about how we implemented real-world RAI. We also reported on novel tools and processes that can be used in practice by others in oncology, and perhaps more broadly. We extended prior work in life-cycle operating models36,37 with our novel model iLEAP, adding explicit on-ramps for 3rd party vendor models, and a clinician trust evaluation step with our TrAAIT assessment tool33. We also developed a novel partnership model at the overall program level. Our risk-based assessment approach is a novel adaptation for application in oncology. Our Model Information Sheet questions screen for FDA SaMD compliance as a key consideration (see Table 1). However, our RAI approach was also designed to be broader to handle many other types of AI models that are not considered SaMD. This is to be expected given the various “intensities” of AI model development in comprehensive cancer centers compared to general healthcare organizations. These disease-specific “intensities” include, for example, predicting tumor evolution and treatment response, genomic decision support, pathology image analysis, radiation oncology treatment planning, improving patient time on treatment, and chemotherapy toxicity prediction.

Improving our AI governance process is an ongoing learning endeavor. We are revisiting AIGC “decision rights” and any revisions needed to our charter. One of our central concerns is balancing the two sides of our main AIGC guiding principle: “promoting use” AND “responsible use”. As with an IRB, we anticipate the AIGC will be asked to exercise authority to recommend acceleration (e.g., for breakthrough AI tools), deceleration (e.g., for models that failed to meet exit criteria after silent-mode testing in G4), sunsetting (e.g., for models that drift and demonstrate worsening performance or non-adoption in G5), or outright rejection (e.g., in the case of duplication of an existing model, or significant misalignment with strategy, policy, or guiding principles). Our “Express Pass” is one of our current approaches to striking this balance: if AI practitioners adhere to its criteria, review of their models can be expedited. Evaluation of the clinical and operational impact of each of our models is outside the scope of the current study and will be part of future research based on our G5 monitoring.

A limitation of this study is that it represents a single Comprehensive Cancer Center’s view on RAI governance, which raises concerns about generalizability. Not all organizations will have AI/ML development teams for internal AI model builds, or Machine Learning Operations teams to support silent evaluations and model monitoring at scale. Some will depend exclusively on their vendor partners for clinical and operational AI. As such, we propose the following minimum RAI program components as part of a “pragmatic RAI governance approach”: (1) a multi-disciplinary governance committee, (2) an intake process for new AI model ideas, (3) a risk assessment framework, (4) a model registry, (5) access to vendor monitoring data where possible (e.g., off-the-shelf dashboards, if available), (6) linkage into the hospital’s existing safety event reporting processes and tools, and (7) support from leadership for the authority of the governance committee. The relative effectiveness and financial value of a more robust RAI governance model vs. a more pragmatic one is a reasonable subject for future study, perhaps using implementation science as a conceptual framework.

Open questions for further applied research include: (1) governance of built models going straight to commercialization, (2) learning additional best practices from internal “centers of excellence” in AI (e.g., Strategy, Medical Physics, and Radiology at MSK), (3) refining pre-launch risk assessment criteria, (4) reliable handling of aiAEs to rapidly mitigate and prevent harm, (5) rationalizing the minimum policies and safety guardrails needed for faster execution of an “Express Pass”, (6) addressing the talent shortage, (7) pedagogy for future AI-enabled oncologists, (8) staffing levels to support IRB-approved research studies with AI-based interventions, (9) effective communication articulating the value of RAI governance, (10) validated approaches for improving clinician trust in AI (perhaps leveraging our TrAAIT tool33), and (11) pragmatic approaches to determine which models actually need governance review. This last question is perhaps the most important, since many AI models are increasingly available as simply configured “system features” (easily toggled on or off by a systems analyst), as opposed to stand-alone models requiring more extensive Machine Learning Operations and high-performance compute support. This increase in “system feature” volume will rapidly outstrip RAI governance capacity. One question for future research is whether a tiered review process (e.g., full review, expedited review, or exemption from review, as with an IRB) can enable throughput while effectively preserving safety. MSK’s AIGC members have developed preliminary risk criteria that trigger review of such “system features” under limited conditions, which is our effort to strike the balance between promotion and responsible use of these tools.
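A tiered review process of the kind proposed above could be expressed as a simple triage function. MSK's preliminary risk criteria for “system features” are not described here, so every rule, parameter, and name in this sketch is a hypothetical placeholder meant only to show the shape of such a process, not an endorsed policy.

```python
from enum import Enum


class ReviewTier(Enum):
    FULL = "full review"
    EXPEDITED = "expedited review"
    EXEMPT = "exempt from review"


def triage_system_feature(
    affects_clinical_decisions: bool,
    human_in_the_loop: bool,
    patient_facing_output: bool,
    uses_phi: bool,
) -> ReviewTier:
    """Assign a review tier to a configurable "system feature".

    All rules below are hypothetical placeholders, not MSK's preliminary criteria.
    """
    # Clinical influence without a human check, or output shown directly to patients.
    if (affects_clinical_decisions and not human_in_the_loop) or patient_facing_output:
        return ReviewTier.FULL
    # Clinician-reviewed clinical use, or any processing of PHI.
    if affects_clinical_decisions or uses_phi:
        return ReviewTier.EXPEDITED
    # Non-clinical, no PHI, nothing patient-facing: no committee review under these rules.
    return ReviewTier.EXEMPT


print(triage_system_feature(True, True, False, True).value)  # expedited review
```

Ordering the checks from most to least restrictive ensures a feature that meets any full-review condition can never fall through to a lighter tier.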

Methods

We employed a consensus-based approach to develop and implement our novel socio-technical AI governance framework and management tools. This study is an assessment of this approach in two programmatic phases: (1) design and development of the governance approach, and (2) real-world implementation experience and outputs of our responsible AI program over its first year in action. Each phase had two programmatic workstreams which are described below. Results are reported as descriptions of novel tools developed for AI model governance, descriptive statistics of the management of our AI program, and illustrative use cases. The impact of the individual AI models on clinical and operational outcomes is beyond the scope of this report and will form the basis of future empirical research. However, we describe below how productionized models are evaluated for performance and impact as an explicit step in our novel AI model lifecycle management process.

Phase 1: Design & Development of an AI strategic roadmap

Our first phase involved assessing the current state of AI development and use across our organization and developing a rational plan for future strategic investment and support. Previous work at Memorial Sloan Kettering (MSK) on Ethical AI guiding principles was completed in 2022. The release of new LLMs and generative AI in late 2022 led our executive leadership to commission an updated strategy and roadmap. An AI Task Force (AITF) was created in Q1 2023 to address this need. Membership and a timeline of activities and outputs of the AITF are detailed in Table 4, including our Ethical AI principles. A prospective enterprise inventory of active AI projects was created and reviewed. The multidisciplinary AITF identified key, oncology-specific strategic priorities over a five-year time horizon. To ensure responsible and effective execution of that plan, the AITF recommended that an “AI governance body” be created to address the volume of AI projects underway and the rapid pace of change in the field.

Phase 1: Design & development of the AI Governance Committee (AIGC)

In response to the AITF recommendation to establish a governing body for our AI program, the AI Governance Committee (AIGC) was commissioned in Q4 2023. The AIGC launched in Q1 2024 and has been meeting monthly for 11 months at the time of this report. Membership was intentionally multi-disciplinary to ensure relevant, enterprise-wide experience and perspectives: members represent AI development, clinical medicine, nursing, quality and safety, ethics, legal, compliance, translational research, and technology, with informatics orchestrating the committee. The AIGC developed a charter focused on “promoting the responsible use of AI”. Our charter was guided by six ethical AI principles developed previously at MSK (see Table 4). The AIGC is intentionally positioned as an “embedded” committee in our overall digital governance structure. “Embedded” means that it is not a standalone governance process isolated from other enterprise digital activities and inputs. The AIGC’s rationale was to acknowledge AI’s new risks without creating a separate structure and process likely to stifle innovation, duplicate existing governance processes, and become unsustainable. Therefore, the AIGC takes prioritization guidance from existing digital advisory committees and is consulted by (and consults with) the Institutional Review Board (IRB) and the Research Data Governance Committee (RDG). The AIGC has had four workgroups to date: lifecycle governance, regulatory, policy, and quality & safety. A timeline of key activities and outputs of the AIGC is detailed further in Table 4.

Phase 2: Implementation – technical support methods

The second phase of our RAI approach focused on implementation, including establishing a centralized AI technical support team in a hub-and-spoke model across our organization. AI/ML Solutions Engineering is a centralized engineering team that was initiated in 2021. Its core capabilities include end-to-end technical support of the AI lifecycle (i.e., initial design through post-launch monitoring) and of AI-enabled applications. The team supports approved projects and information-secure sandbox environments for AI discovery and testing. It consists of 11 staff (data scientists, AI/ML engineers, strategy and program support), with an additional 10 off-shore consultants augmenting the team as needed. The AI/ML Solutions Engineering team partners with several other AI practitioner groups housed in different departments across the organization, whose scopes range from AI models for hospital operations to grant-funded AI research.

As shown in Fig. 6, the AI/ML Solutions Engineering team supports an end-to-end platform for the AI model lifecycle, both on-premises and in the public cloud. The technology stack spans machine learning/deep learning (ML/DL), tools to transfer models into production, and cognitive, large language model (LLM), and vision language model (VLM) support.

High-level description of the technology stack employed by the central AI/ML team to support AI practitioners across MSK. AI/ML artificial intelligence/machine learning, ML machine learning, DL deep learning, LLM large language model, VLM vision language model, EHR electronic health record. Source: Memorial Sloan Kettering Cancer Center.

There are two main workstreams within the AI/ML Solutions Engineering group: 1) the AI/ML Clinical Research Group, and 2) the AI/ML Platform Group. The AI/ML Clinical Research Group works with researchers on grants, publications, and retrospective studies. In contrast, the AI/ML Platform Group supports enterprise-wide use cases and deployments into full production with ongoing monitoring (i.e., Machine Learning Operations (MLOps)). The Research Group often helps AI practitioners incubate ideas that are then picked up by the Platform Group for production deployment. Both groups assist in validating model performance. The Clinical Research Group uses retrospective hold-out data and applies k-fold cross-validation with bootstrapping for confidence intervals. The Platform Group uses AI/ML platform tooling to measure model performance and drift, comparing the model’s prospective predictions on new patient data against ground truth data. For example, these groups supported MSK’s Digital Pathology team in novel biomarker detection and quantification from digital images14. These groups also supported MSK’s Radiology Informatics team in developing a novel fusion model (vision and language) to analyze brain metastases.
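The retrospective validation pattern described above, k-fold cross-validation with a bootstrap confidence interval, can be sketched in pure Python. The one-feature threshold “model”, the toy data, and the choice to bootstrap pooled out-of-fold correctness (rather than, say, per-fold scores) are illustrative assumptions, not the Clinical Research Group's actual pipeline.

```python
import random


def kfold_indices(n: int, k: int, seed: int = 0):
    """Yield (train, test) index lists for k shuffled, near-equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i, test in enumerate(folds):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, test


def fit_threshold(xs: list[float], ys: list[int]) -> float:
    """Toy stand-in for a real model: the cut-point on one feature with best training accuracy."""
    return max(sorted(set(xs)), key=lambda c: sum((x >= c) == (y == 1) for x, y in zip(xs, ys)))


def out_of_fold_correct(xs: list[float], ys: list[int], k: int = 5, seed: int = 0) -> list[int]:
    """1/0 correctness for every case, each predicted by a model that never saw it in training."""
    correct = [0] * len(xs)
    for train, test in kfold_indices(len(xs), k, seed):
        cut = fit_threshold([xs[i] for i in train], [ys[i] for i in train])
        for i in test:
            correct[i] = int((xs[i] >= cut) == (ys[i] == 1))
    return correct


def bootstrap_ci(values: list[int], n_boot: int = 2000, alpha: float = 0.05, seed: int = 0):
    """Point estimate plus a percentile-bootstrap (1 - alpha) CI for the mean of `values`."""
    rng = random.Random(seed)
    resamples = (rng.choices(values, k=len(values)) for _ in range(n_boot))
    stats = sorted(sum(s) / len(s) for s in resamples)
    lo = stats[int(alpha / 2 * n_boot)]
    hi = stats[int((1 - alpha / 2) * n_boot) - 1]
    return sum(values) / len(values), lo, hi


xs = list(range(20))            # toy single feature
ys = [0] * 10 + [1] * 10        # toy labels
acc, lo, hi = bootstrap_ci(out_of_fold_correct(xs, ys))
print(f"accuracy {acc:.2f} (95% CI {lo:.2f} to {hi:.2f})")
```

Bootstrapping the pooled out-of-fold predictions uses every retrospective case exactly once as unseen test data, which gives a tighter interval than resampling only the k fold-level scores.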

These groups also provide active support for deep-learning-based multimodal modeling. The Platform Group has enabled two Generative AI large language model sandbox environments, approved by Information Security, Privacy, and Compliance, for use by MSK’s AI practitioners. One sandbox supports discovery on de-identified data (stripped of protected health information (PHI) and personally identifiable information (PII)). The second is a secure, private environment that supports discovery on PHI (with appropriate Institutional Review Board approval for research). The current focus of these groups is enabling the additional technical support required for MLOps (i.e., technical support of models over time, including productionization, integration, and monitoring for model drift).
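Monitoring for drift can take several forms. As one illustrative option (an assumption, not necessarily what MSK's platform tooling computes), the population stability index (PSI) compares the distribution of a model's scores on prospective data with a baseline distribution, without needing ground-truth labels. The bin edges, the `eps` floor for empty bins, and the 0.1/0.25 cut-offs below are common rules of thumb, not validated thresholds.

```python
import bisect
import math


def bin_proportions(values, edges, eps=1e-6):
    """Share of values in each bin; out-of-range values are clamped into the end bins."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        counts[min(max(i, 0), n_bins - 1)] += 1
    total = len(values)
    # eps keeps an empty bin from producing log(0) or a division by zero
    return [max(c / total, eps) for c in counts]


def population_stability_index(baseline, current, edges):
    """PSI = sum over bins of (current - baseline) * ln(current / baseline)."""
    b = bin_proportions(baseline, edges)
    c = bin_proportions(current, edges)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))


def drift_level(psi):
    """Rule-of-thumb cut-offs; each model would need locally agreed thresholds."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate"
    return "major"


# Toy usage: baseline scores spread evenly over [0, 1); prospective scores shifted upward.
edges = [i / 10 for i in range(11)]
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]
psi_same = population_stability_index(baseline, baseline, edges)
psi_shifted = population_stability_index(baseline, shifted, edges)
```

A distribution check like this flags that inputs or outputs have moved; confirming that performance has actually degraded still requires comparing predictions against ground truth once outcomes are known.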

Our prospective enterprise AI Model Registry was developed by the Data Science team in Strategy Innovation & Technology Development. It is built on widely available management technology that simplifies how modelers submit their Model Information Sheet. Registry data are surfaced in a dashboard that lets MSK’s AI practitioners and compliance staff explore information about these models and their operational status.
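A registry of this kind is essentially a keyed store of model records plus summary views. The sketch below is a hypothetical data model, not MSK's implementation: the `RegistryEntry` fields, the status vocabulary, and the `dashboard` summary are assumptions chosen to mirror the information the text says the dashboard exposes (models and their operational status).

```python
from collections import Counter
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RegistryEntry:
    """Hypothetical subset of Model Information Sheet fields held in the registry."""
    name: str
    model_type: str  # e.g. "LLM", "ML", "nomogram"
    source: str      # "in-house" or "acquired"
    status: str      # e.g. "in development", "live", "retired"


class ModelRegistry:
    def __init__(self):
        self._entries = {}

    def register(self, entry: RegistryEntry) -> None:
        # One record per model name, so status changes go through update_status
        if entry.name in self._entries:
            raise ValueError(f"{entry.name!r} is already registered")
        self._entries[entry.name] = entry

    def update_status(self, name: str, status: str) -> None:
        # Records are immutable; store a copy with the new status
        self._entries[name] = replace(self._entries[name], status=status)

    def dashboard(self) -> dict:
        """Counts a dashboard might display: models by status and by type."""
        entries = list(self._entries.values())
        return {
            "by_status": Counter(e.status for e in entries),
            "by_type": Counter(e.model_type for e in entries),
        }


# Toy usage with hypothetical model names.
registry = ModelRegistry()
registry.register(RegistryEntry("readmission-risk", "ML", "in-house", "in development"))
registry.register(RegistryEntry("survival-nomogram", "nomogram", "in-house", "live"))
registry.update_status("readmission-risk", "live")
summary = registry.dashboard()
```

Keeping each record immutable and replacing it on status changes makes it straightforward to add an audit trail later, which a governance committee would likely want.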

Phase 2: Implementation – responsible AI governance methods

Our next step in Phase 2 was to implement and mature our RAI governance model through iterative refinement based on data and AI practitioner feedback. The primary goal of the RAI governance model was to balance “promotion of AI” with “responsible use of AI”. This required balancing several objectives: giving appropriate priority to AI projects aligned with strategy, ensuring that AI practitioners develop and employ AI responsibly, giving AI practitioners easy access to approved development and deployment resources, and preserving scientific freedom.

Key activities of the AIGC to date include defining which clinical and operations AI is considered “in scope” for active governance and updating the model intake process (aka “demand intake”). As detailed below in the Results section, we developed or adapted the following novel tools: a “Model Information Sheet” (MIS; aka “Model Card”); a Model Registry (a repository of MIS information about our AI models, including nomograms); a risk-assessment process for both acquired and in-house-built AI models; and an AI model lifecycle management process called iLEAP, based on a modified version of the Algorithm-Based Clinical Decision Support (ABCDS) model from Duke AI Health36,37. Nomograms are clinical decision aids that generate numeric or graphical predictions of clinical events from an underlying statistical model with various prognostic variables. We also conducted a full review of live models for compliance with FDA Software as a Medical Device (SaMD) regulations. Finally, we created a criteria-based “Express Pass” that speeds deployment of high-priority, high-impact models for AI practitioners who follow best practices.
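To illustrate what sits underneath a nomogram, the sketch below assumes the underlying statistical model is a logistic regression (one common choice; the text does not specify which models MSK's nomograms use). The intercept, coefficients, variable names, and axis ranges are all hypothetical. It shows the usual construction: each predictor's contribution is rescaled to "points" so that the widest-ranging predictor spans 0 to 100, and the total points map back to the same predicted probability as the direct formula.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Predictor:
    name: str
    beta: float  # hypothetical log-odds change per unit
    lo: float    # smallest value drawn on the nomogram axis
    hi: float    # largest value drawn on the nomogram axis

    @property
    def ref(self) -> float:
        # The axis end that contributes zero points (depends on the coefficient's sign)
        return self.lo if self.beta >= 0 else self.hi


INTERCEPT = -4.0  # hypothetical
PREDICTORS = [
    Predictor("age_decades", 0.35, 2.0, 9.0),
    Predictor("tumor_size_cm", 0.50, 0.0, 10.0),
    Predictor("albumin_g_dl", -0.80, 2.0, 5.0),
]

# Log-odds represented by one point: the widest-ranging predictor spans 0-100 points
SCALE = max(abs(p.beta) * (p.hi - p.lo) for p in PREDICTORS) / 100.0


def probability(values):
    """Direct logistic-model prediction: 1 / (1 + exp(-(b0 + sum(b_i * x_i))))."""
    lp = INTERCEPT + sum(p.beta * values[p.name] for p in PREDICTORS)
    return 1.0 / (1.0 + math.exp(-lp))


def points(values):
    """Total nomogram points; each term is non-negative by construction of ref."""
    return sum(p.beta * (values[p.name] - p.ref) for p in PREDICTORS) / SCALE


def probability_from_points(total_points):
    """Read the probability off the 'total points' axis."""
    base = INTERCEPT + sum(p.beta * p.ref for p in PREDICTORS)
    return 1.0 / (1.0 + math.exp(-(base + total_points * SCALE)))


# Toy usage with a hypothetical patient.
patient = {"age_decades": 6.5, "tumor_size_cm": 3.0, "albumin_g_dl": 3.5}
total = points(patient)
risk = probability(patient)
```

The points scale is only a reparameterization of the linear predictor, which is why a nomogram review can focus on the underlying model's coefficients, calibration, and intended population.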

The AIGC prioritizes the safety of the models in our portfolio as part of responsible use. Therefore, model developers are required to provide, as part of their MIS, a credible list of anticipated adverse events that might be caused by use of the AI model. The quality and safety workgroup is currently prototyping an approach to post-go-live algorithmovigilance38 that will collect AI-induced adverse events (aiAEs) through our voluntary reporting system. As part of any MIS, model developers are also required to describe the expected impact and benefits for relevant users (including clinicians, administrators, researchers, or patients), as well as their plan for monitoring the use and impact of these AI models. Expected impact helps in assessing the overall value and prioritization of all models in the registry.
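The MIS requirements above lend themselves to a simple completeness check at intake. The sketch below is hypothetical: the field names in `REQUIRED_MIS_FIELDS` are assumptions that paraphrase the requirements in the text (anticipated adverse events, expected impact and benefits, relevant users, monitoring plan), and a real MIS template would contain more fields and human review of content quality, not just presence.

```python
# Hypothetical field names paraphrasing the MIS requirements described in the text
REQUIRED_MIS_FIELDS = (
    "anticipated_adverse_events",
    "expected_impact",
    "intended_users",
    "monitoring_plan",
)


def missing_mis_fields(mis: dict) -> list:
    """Return required fields that are absent, empty, or contain only blank entries."""
    missing = []
    for field in REQUIRED_MIS_FIELDS:
        value = mis.get(field)
        if isinstance(value, str):
            value = value.strip()
        elif isinstance(value, (list, tuple)):
            value = [v for v in value if str(v).strip()]
        if not value:
            missing.append(field)
    return missing


def ready_for_review(mis: dict) -> bool:
    return not missing_mis_fields(mis)


# Toy usage with hypothetical content.
complete = {
    "anticipated_adverse_events": ["missed deterioration alert", "alert fatigue"],
    "expected_impact": "Earlier palliative care referral for eligible patients",
    "intended_users": ["clinicians"],
    "monitoring_plan": "Quarterly performance and usage review",
}
incomplete = dict(complete, anticipated_adverse_events=[""], monitoring_plan="  ")
```

A presence check like this only gates submission; whether the listed adverse events are credible remains a judgment for the reviewing committee.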

Lastly, we have initiated an awareness campaign for the AIGC and its new processes, with presentations to key stakeholders across the organization. See Table 4 for additional details on our methods and timeline for the year before, and the period since, the launch of the AIGC.

Data availability

No datasets were generated or analyzed during the current study.

Code availability

Not applicable.

References

Riaz, I. B., Khan, M. A. & Haddad, T. C. Potential application of artificial intelligence in cancer therapy. Curr. Opin. Oncol. 36, 437–448 (2024).

Ferber, D. et al. GPT-4 for Information retrieval and comparison of Medical Oncology Guidelines. NEJM AI 1, AIcs2300235 (2024).

Luchini, C., Pea, A. & Scarpa, A. Artificial intelligence in oncology: current applications and future perspectives. Br. J. Cancer 126, 4–9 (2022).

Wiest, I. C., Gilbert, S. & Kather, J. N. From research to reality: The role of artificial intelligence applications in HCC care. Clin. Liver Dis. 23, e0136 (2024).

Food and Drug Administration (FDA). Artificial Intelligence and Machine Learning (AI/ML)-Enabled Medical Devices (2024). https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-aiml-enabled-medical-devices.

Umeton, R. et al. GPT-4 in a Cancer Center - Institute-wide deployment challenges and lessons learned. NEJM AI 1, (2024).

Finch, L. et al. ChatGPT compared to national guidelines for management of ovarian cancer: Did ChatGPT get it right? - A Memorial Sloan Kettering Cancer Center Team Ovary study. Gynecol. Oncol. 189, 75–79 (2024).

Geevarghese, R. et al. Extraction and classification of structured data from unstructured hepatobiliary pathology reports using large language models: a feasibility study compared with rules-based natural language processing. J. Clin. Pathol. 78, 135–138 (2024).

Ghahremani, P., Marino, J., Dodds, R. & Nadeem, S. DeepLIIF: An online platform for quantification of clinical pathology slides. Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 2022, 21399–21405 (2022).

Herskovits, A. Z. et al. Comparing clinician estimates versus a statistical tool for predicting risk of death within 45 days of admission for cancer patients. Appl. Clin. Inf. 15, 489–500 (2024).

Hung, T. K. W. et al. Performance of retrieval-augmented large language models to recommend head and neck cancer clinical trials. J. Med. Internet Res. 26, e60695 (2024).

Jee, J. et al. Automated real-world data integration improves cancer outcome prediction. Nature 636, 728–736 (2024).

Jiang, J. et al. Artificial intelligence-based automated segmentation and radiotherapy dose mapping for thoracic normal tissues. Phys. Imaging Radiat. Oncol. 29, 100542 (2024).

Pareja, F. et al. A genomics-driven Artificial Intelligence-based model classifies breast invasive lobular carcinoma and discovers CDH1 inactivating mechanisms. Cancer Res. 84, 3478–3489 (2024).

Swinburne, N. C. et al. Foundational segmentation models and clinical data mining enable accurate computer vision for lung cancer. J. Imaging Inform. Med. 38, 1552–1562 (2024).

Coalition for Health AI (CHAI). Assurance Standards Guide and Reporting Checklist (2024). https://www.chai.org/workgroup/responsible-ai/responsible-ai-checklists-raic.

European Union (EU). European Union Artificial Intelligence Act. Official Journal of the European Union (2024). https://artificialintelligenceact.eu/.

NIST. National Institute of Standards and Technology, Artificial Intelligence Risk Management Framework (AI RMF1.0) (2023). https://www.nist.gov/itl/ai-risk-management-framework.

Office of the National Coordinator (ONC). Health Data, Technology, and Interoperability: Certification Program Updates, Algorithm Transparency, and Information Sharing (2024). https://www.federalregister.gov/documents/2024/01/09/2023-28857/health-data-technology-and-interoperabilitycertification-program-updates-algorithm-transparency-and.

Solomonides, A. E. et al. Defining AMIA’s artificial intelligence principles. J. Am. Med. Inf. Assoc. 29, 585–591 (2022).

Longhurst, C. A., Singh, K., Chopra, A., Atreja, A. & Brownstein, J. S. A Call for Artificial Intelligence implementation science centers to evaluate clinical effectiveness. NEJM AI 1, AIp2400223 (2024).

Shah, N. H. et al. A nationwide network of Health AI assurance laboratories. JAMA 331, 245–249 (2024).

Chen, R. J. et al. Algorithmic fairness in artificial intelligence for medicine and healthcare. Nat. Biomed. Eng. 7, 719–742 (2023).

Derraz, B. et al. New regulatory thinking is needed for AI-based personalised drug and cell therapies in precision oncology. NPJ Precis. Oncol. 8, 23 (2024).

Bedi, S. et al. Testing and evaluation of health care applications of large language models: a systematic review. JAMA 333, 319–328 (2024).

Edelson, D. P. et al. Early warning scores with and without Artificial Intelligence. JAMA Netw. Open 7, e2438986 (2024).

Liu, T. L. et al. Does AI-powered clinical documentation enhance clinician efficiency? A longitudinal study. NEJM AI 1, (2024).

Tierney, A. A. et al. Ambient Artificial Intelligence scribes to alleviate the burden of clinical documentation. NEJM Catal. 5, CAT.23.0404 (2024).

Chan, A. S. et al. Palliative referrals in advanced cancer patients: utilizing the supportive and palliative care indicators tool and Rothman Index. Am. J. Hosp. Palliat. Care 39, 164–168 (2022).

Peterson, K. J. et al. Evaluation of the Rothman Index in predicting readmission after colorectal resection. Am. J. Med. Qual. 38, 287–293 (2023).

Soroush, A. et al. Large language models are poor medical coders — benchmarking of medical code querying. NEJM AI 1, AIdbp2300040 (2024).

Yeung, L. Guidance for the Development of AI Risk and Impact Assessments. (Center for Long-Term Cybersecurity, University of California, Berkeley, 2021).

Stevens, A. F. & Stetson, P. Theory of trust and acceptance of artificial intelligence technology (TrAAIT): An instrument to assess clinician trust and acceptance of artificial intelligence. J. Biomed. Inf. 148, 104550 (2023).

Elguindi, S. et al. In-depth timing study of AI-Assisted OAR contouring with a single institution expert. Int. J. Radiat. Oncol. Biol. Phys. 120, e621 (2024).

Moran, J. M. et al. A safe and practical cycle for team-based development and implementation of in-house clinical software. Adv. Radiat. Oncol. 7, 100768 (2022).

Bedoya, A. D. et al. A framework for the oversight and local deployment of safe and high-quality prediction models. J. Am. Med. Inf. Assoc. 29, 1631–1636 (2022).

Economou-Zavlanos, N. J. et al. Translating ethical and quality principles for the effective, safe and fair development, deployment and use of artificial intelligence technologies in healthcare. J. Am. Med. Inf. Assoc. 31, 705–713 (2024).

Embi, P. J. Algorithmovigilance - advancing methods to analyze and monitor Artificial Intelligence-driven health care for effectiveness and equity. JAMA Netw. Open 4, e214622 (2021).

Acknowledgements

The authors acknowledge the significant contributions of the members of Memorial Sloan Kettering’s AI Task Force, whose seminal work led to and supported the formation of MSK’s AI Governance Committee. The Task Force members included Michael Berger, Avijit Chatterjee, Steven Cleaver, Joseph Deasy, Kojo Elenitoba-Johnson, Rémy Evard, Steven Loguidice, Janet Mak, Quaid Morris, Todd Neville, Anaeze Offodile, Dana Pe’er, Paul Sabbatini, Lawrence Schwartz, Sohrab Shah, Peter Stetson, and Louis Voigt. We also acknowledge Liz Herkelrath for her work on digital governance within DigITs (Digital Informatics & Technology Services) at MSK. This research was funded in part through the NIH/NCI Cancer Center Support Grant P30 CA008748.

Author information


Contributions

All authors reviewed the manuscript. Specific contributions: 1. P.D.S.: conception and design of the work; acquisition, analysis, and interpretation of data; drafted the work and substantively revised it. 2. J.C.: conception and design of the work; acquisition, analysis, and interpretation of data; drafted the work and substantively revised it. 3. N.S.: conception and design of the work; acquisition, analysis, and interpretation of data; creation of new software used in the work; drafted the work and substantively revised it. 4. A.B.M.: conception and design of the work; drafted the work and substantively revised it. 5. J.M.: conception and design of the work. 6. A.C.: conception and design of the work; acquisition, analysis, and interpretation of data. 7. K.K.: conception and design of the work; substantively revised the work. 8. C.K.: conception and design of the work; substantively revised the work. 9. P.S.: conception and design of the work. 10. J.H.: conception and design of the work; drafted the work and substantively revised it. 11. L.V.: conception and design of the work; acquisition, analysis, and interpretation of data; drafted the work and substantively revised it. 12. J.J.: conception and design of the work; substantively revised the work. 13. J.iF.: conception and design of the work; substantively revised the work. 14. M.C.: conception and design of the work. 15. R.H.: conception and design of the work; acquisition, analysis, and interpretation of data; substantively revised the work. 16. R.E.: conception and design of the work. 17. A.C.O. 2nd: conception and design of the work; substantively revised the work.


Ethics declarations

Competing interests

Anaeze C. Offodile, 2nd, is a board member of the Peterson Health Technology Institute. All other authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Stetson, P.D., Choy, J., Summerville, N. et al. Responsible Artificial Intelligence governance in oncology. npj Digit. Med. 8, 407 (2025). https://doi.org/10.1038/s41746-025-01794-w

DOI: https://doi.org/10.1038/s41746-025-01794-w
