Abstract

The scarcity of experimental training data restricts the integration of machine learning into catalysis research. Here, we report on the effectiveness of graph convolutional network (GCN) models pretrained on a molecular topological index, which is not used in typical organic synthesis, for estimating the catalytic activity, a task that usually requires high levels of human expertise. For pretraining, we used custom-tailored virtual molecular databases that can be readily constructed using either a systematic generation method or a molecular generator developed in our group. Although 94%–99% of the employed virtual molecules are unregistered in the PubChem database, the resulting pretrained GCN models improve the prediction of catalytic activity for real-world organic photosensitizers. The results demonstrate the efficiency of the present transfer-learning strategy, which leverages readily obtainable information from self-generated virtual molecules.

Similar content being viewed by others

Introduction

The prediction of catalytic properties using machine learning (ML) has become an intriguing challenge, offering new opportunities for data-driven catalyst design and screening1,2,3,4. However, when applying ML to real-world catalysis research, the limited availability of training data poses a considerable hurdle. To address this issue, transfer learning (TL), which consists of transferring the knowledge acquired from one task to another for enhanced model performance with minimal data, has emerged as a promising strategy5,6,7. Although TL methods are crucial and widely used in molecular science8,9,10, further diversification and refinement of TL strategies tailored to molecular catalysis are still important.

Over the past decade, numerous photocatalytic organic reactions have been reported11,12,13,14. Subsequently, ML methods capable of predicting, for instance, substrate reactivity and catalytic activity in photoreactions have been developed15,16,17,18,19,20,21. In particular, the catalytic properties of photosensitizers are critical in determining the success of photoreactions, rendering their ML prediction compelling15,16,18. Inevitably, the development of TL methods with improved performance for the prediction of the photocatalytic activity has become essential. Recently, we have reported a TL strategy based on domain adaptation for predicting the catalytic activity of organic photosensitizers (OPSs), which was implemented using gradient-boosting models22. This TL method is not based on deep learning (DL)23,24,25, with both the source and target tasks focusing on similar yield predictions from OPS properties.

Meanwhile, DL-based TL has also gained prominence as a prediction tool for catalytic reactions26,27,28; however, its application to the prediction of the photocatalytic activity remains underdeveloped. In general, fine-tuning, which is a widely used DL-based TL method, first requires pretraining on a large, existing dataset. Then, the parameters of the pretrained model are transferred and fine-tuned to adapt efficiently to a new task. The interesting aspect of this DL-based approach is that it can use information that is not directly related to target tasks of predicting catalytic properties, unlike non-DL-based TL methods. For example, Sunoj has used a transformer-based model pretrained on SMILES strings of molecules from ChEMBL27, while King-Smith has employed a graph neural network-based model pretrained on crystal structures from the CCDC database28. These studies demonstrate that DL leverages textual or structural data to enhance the predictive performance for yield and enantiomeric excess. In addition to these ChEMBL and CCDC databases, various molecular databases, such as QM9, ZINC, Open Reaction Database (ORD), and USPTO, have been developed on the basis of extensive quantum chemical calculations, experiments, and surveys of commercial sources29,30,31,32,33,34. However, these databases still represent only a fraction of the whole chemical space, which is estimated to contain more than 1060 molecules35, leaving a considerably larger number of “latent” organic molecules unregistered36. Obviously, the use of these molecular groups through DL could lead to the diversification and enhancement of ML strategies targeting molecules.

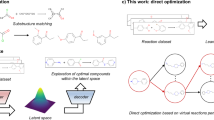

In this study, to explore the utility of these potentially countless unused molecules for TL in catalysis research, we investigated the transferability of information from a custom-tailored virtual molecular database for the prediction of the catalytic activity of real-world OPSs (Fig. 1). While elegant precedents have been reported in TL for molecular catalysis as mentioned earlier, a primary contribution of our approach lies in the creation of virtual molecular databases composed of OPS-like fragments, constructed using both a systematic generation method and a molecular generator developed in our group. The preparation of pretraining labels constitutes a major challenge when using custom-tailored databases. When constructing large databases, obtaining chemical properties that can be used as prediction targets through quantum chemical calculations or experiments is often impractical owing to excessive operational costs. To address this issue and to facilitate the use of virtual molecules, we used molecular topological indices as pretraining labels, which are not directly related to photocatalytic activity but can be prepared in a much more cost-efficient manner than values derived from quantum chemical calculations or experiments. Typically, compound properties are estimated on the basis of related properties of known compounds. In this study, we also attempted to enhance this prediction process by incorporating intuitively unrelated information from diverse unrecognized compounds using DL.

a Graphical overview and b detailed workflow of the TL process. In this study, graph convolutional network-based deep-learning (DL) models, pretrained on a topological index of virtual molecules, are used to construct machine-learning (ML) models for predicting the catalytic activity of organic photosensitizers (OPSs).

Results and discussion

Generation of virtual molecular databases

First, we constructed virtual databases by combining molecular fragments. We prepared 30 donor fragments, 47 acceptor fragments, and 12 bridge fragments (Figs. S1–S3), partially referring to the report by Liu and Wang37. The donor fragments are primarily based on aryl or alkyl amino groups, carbazolyl groups with various substituents, and aromatic rings with electron-donating groups, supplemented with fluorenyl, silafluorenyl, and diphenylphosphino groups. The acceptor fragments consist of nitrogen-containing heterocyclic rings and aromatic rings with electron-withdrawing groups, as well as tricyclic aromatic rings such as phenanthrene and benzo[1,2-b;4,5-b’]difuran. The bridges include simpler π-conjugated fragments such as benzene, acetylene, ethylene, furan, thiophene, and pyrrole, as well as ether and thioether fragments. To prevent the database from being excessively biased by preferences of organic chemists, several fragments whose boundaries among donor, bridge, and acceptor types are ambiguous were also included.

Using these fragments, we constructed Database A by systematically combining fragments at predetermined positions and Databases B–D by using a molecular generator developed in this research. In Database A, different bonding positions of the same acceptor fragments were also applied (Fig. S3). As a result, from 30 donor fragments (D), 65 acceptor fragments (A), and 12 bridge fragments (B), we generated 25,350 molecules composed of two to five fragments, including D–A, D–B–A, D–A–D, and D–B–A–B–D structures (Fig. 2a).

a Rules for the construction of databases. Molecules in Database A were generated by systematically combining donor (D), bridge (B), and acceptor (A) fragments at predetermined positions. Databases B, C, and D were created with an in-house molecular generator. b Chemical space representation of each database, derived from the UMAP dimensionality reduction on Morgan fingerprint. c Molecular-weight distributions across each database.

The molecular generator used to construct Databases B–D was based on a tabular reinforcement learning (RL) system (Fig. 2a). RL is a type of ML wherein an agent learns to make decisions by interacting with an environment. The agent takes actions, receives feedback, i.e., rewards or penalties, and adjusts its behavior to maximize the cumulative reward throughout the training process. RL can guide molecular generation in a specific direction, and we implemented the Q-function, which is crucial for policy setting, using a tabular representation. For calculating a reward, we used the Tanimoto coefficient (TC), which estimates molecular similarity through the Morgan fingerprint of each generated molecule. The TCs of each newly generated molecule were calculated against all previously generated molecules and averaged. We used the inverse of the averaged TC (avgTC) as a reward for RL, which assigns higher rewards to molecules dissimilar to the previously generated molecules. Error settings were important to ensure that molecules in Databases B–D did not deviate too much from those in Database A. Specifically, molecules with molecular weights <100 or >1000 and those consisting of at least six fragments were assigned negative rewards (for details, see Supplementary Note 1). Compounds with negative rewards or duplicate canonical SMILES were removed after the generation of molecules. As part of the policy settings, we used the ε-greedy method to balance exploration and exploitation, and applied three different ε values. For Database B, we set ε = 1, meaning that the molecular generation was based only on random exploration, without exploiting any knowledge obtained from the previous action. In contrast, we set ε = 0.1 for Database C, prioritizing exploitation (90%) while allowing for some random exploration (10%). For Database D, ε started at 1 and gradually decreased to 0.1, progressively increasing the reliance on exploitation. We thus generated <30,000 molecules using each method. Differences in the behavior of molecular generation according to the reward and policy settings are summarized in the Supplementary Information (Figs. S4 and S5).

Selection of pretraining labels and comparison of database properties

The selection of pretraining labels that are both effective and cost-efficient was crucial to our approach. Therefore, we focused on features such as molecular topological indices available in the RDKit and Mordred descriptor sets, henceforth referred to as RDKit and Mordred, respectively. From these descriptor sets, we picked up the following 16 candidates as pretraining labels: Kappa2, PEOE_VSA6, BertzCT, Kappa3, EState_VSA3, fr_NH0, VSA_EState3, GGI10, ATSC4i, BCUTp-1l, Kier3, AATS8p, Kier2, ABCGG, AATSC3d, and ATSC3d. A SHAP-based analysis confirmed their significant contribution as descriptors for predicting the product yield in various cross-coupling reactions within in-house datasets (Figs. S6–S8). Details in the process for selecting pretraining labels are provided in the “Methods” section. After removing molecules for which any pretraining label could not be calculated, Database A became the smallest dataset, containing 25,286 molecules. For consistency, Databases B–D were randomly sampled to match this size.

Next, we compared the chemical spaces and molecular-weight distributions of the databases (Fig. 2b, c). Chemical spaces were visualized using uniform manifold approximation and projection (UMAP) on the Morgan-fingerprint-based descriptors. While Database C, which is characterized by a higher proportion of exploitation, resulted in a narrower chemical space than Database B, molecules with higher molecular weights were more frequently generated in Database C. Thus, there is a trade-off between the breadth of the Morgan-fingerprint-based chemical space and the frequency of generating molecules with high molecular weights in our system. Meanwhile, the chemical space of Database D appeared similar to that of Database B, but its molecular-weight distribution was distinct from those of both Databases B and C (Fig. S14). Although Database A was relatively similar to Database B in terms of molecular-weight distributions, it exhibited a significantly narrower chemical space. The developed system could generate molecules with diverse complexity while maintaining a broader Morgan-fingerprint-based chemical space compared to systematic generation even with the same molecular fragments.

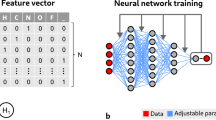

TL for predicting photocatalytic activity in C–O bond forming reactions

In this study, the yield obtained in photoreactions was the target of ML predictions, with the exception of the supervised pretraining stage. Since the product yield of each photoreaction is determined by the employed OPS, the term catalytic activity is also used as a proxy for the yield in the subsequent discussion. To compare the effectiveness of databases for the prediction of the photocatalytic activity, we first constructed pretrained DL models based on the graph convolutional network (GCN), a type of graph neural network that captures the relationships between atoms (nodes) and bonds (edges) within a molecule through graph convolution operations38. We generated features applicable to GCN using the MolGraphConvFeaturizer from DeepChem, an open-source Python toolkit39. For supervised pretraining, we employed a DL model consisting of four GCN layers, one max pooling layer, and three fully connected layers to predict the aforementioned molecular properties derived from RDKit and Mordred, using graph-based features generated from the SMILES strings of the molecules in each virtual database as input. Details of the DL architecture and this pretraining process are provided in the Supplementary Information (Fig. S9) and the “Methods” section.

Next, we performed fine-tuning to predict the catalytic activity of OPSs, i.e., the product yield, using the pretrained GCN models (Fig. 3). We used an in-house database consisting of 100 real-world OPSs, including compounds that have been used as OPSs and their derivatives featuring various substituents (for details, see Supplementary Note 2). The catalytic activities of these OPSs in C–O bond-forming reactions, which are promoted by an inorganic nickel salt and an OPS, were investigated in our previous study22. Given the importance of phenol synthesis40, this reaction system could be a valuable target for ML research. We randomly split the OPS dataset into two halves, 50% for the training set and 50% for the test set, and compared the mean R2 scores over 10 runs, with different training-test splits.

a List of target C–O bond-forming reactions. b Violin plots showing the yield distribution for each photoreaction. c Table summarizing the predictive performance. For GCN models, BertzCT was used as the pretraining label for all tasks. Mean R2 represents the R2 score averaged over 10 runs on the test set, with the standard deviation (SD) shown in parenthesis. The training and test data were randomly split in a 50:50 ratio. The corresponding RMSE values are summarized in Table S18.

For the first fine-tuning target, we used data on C–O bond formation with 4-bromobenzonitrile (1) as the substrate (CO-a in Fig. 3a). This reaction was performed for 1.5 h under visible-light irradiation. As a benchmark, we conducted yield-prediction tasks using ML models based on random forest (RF) combined with RDKit and Mordred, as well as a GCN model without pretraining. The resulting mean R2 scores were 0.49 (RF/RDKit), 0.57 (RF/Mordred), and 0.48 (GCN w/o pretraining). A preliminary investigation identified the model pretrained on BertzCT as the most effective (Table S10). While prediction accuracy improved in all cases, the most notable improvement was observed when Database B was used for pretraining (R2 = 0.74 for Database A; R2 = 0.80 for Database B; R2 = 0.69 for Database C; R2 = 0.75 for Database D). Furthermore, effective pretrained models such as the model pretrained on the BertzCT values of molecules in Database B yielded reduced variability in R2 scores (Fig. 3c and Table S10).

We performed further case studies for yield predictions in other C–O bond-forming reactions. The second fine-tuning target, denoted as CO-b, also used substrate 1 but with an extended reaction time (7.5 hours) compared to CO-a (Fig. 3a). Meanwhile, the third target, denoted as CO-c, used 4-chlorobenzonitrile (2) as the substrate (Fig. 3a). For CO-a, 54 data points with 0% yield and only eight data points with ≥80% yield were obtained. In contrast, CO-b showed 27 data points with 0% yield and 25 data points with ≥80% yield. Although CO-c did not contain too many data points with 0% yield (23 data points), the overall product yields were low owing to the poorer reactivity of 2 compared with that of 1, resulting in only five data points with ≥60% yield. These data distributions are presented as violin plots in Fig. 3b. The benchmark R2 scores averaged over 10 runs were 0.68 (RF/RDKit), 0.56 (RF/Mordred), and 0.56 (GCN w/o pretraining) for CO-b and 0.58 (RF/RDKit), 0.52 (RF/Mordred), and 0.51 (GCN w/o pretraining) for CO-c.

When fine-tuning was performed for the yield prediction in CO-b, the model pretrained on Database D achieved the best performance (R2 = 0.76 for Database A; R2 = 0.75 for Database B; R2 = 0.74 for Database C; R2 = 0.77 for Database D). To further improve predictive performance, we attempted to concatenate two databases, finding that the model pretrained on Database E, consisting of 50,572 molecules from Databases A and C, predicted the yield in CO-b more effectively (R2 = 0.81). Next, we investigated the effectiveness of our TL strategy for yield predictions in CO-c. Although models pretrained on Databases A–D were less effective for yield predictions in CO-c than in CO-a and CO-b, the model pretrained on Database E demonstrated strong performance (R2 = 0.64 for Database A; R2 = 0.66 for Database B; R2 = 0.64 for Database C; R2 = 0.65 for Database D; R2 = 0.77 for Database E). As with CO-a, BertzCT was the most effective pretraining label for the yield predictions in both CO-b and CO-c (Tables S11 and S12).

Although Databases A and C exhibit narrower Morgan-fingerprint-based chemical spaces than Database B (Fig. 2b), the chemical space of Database E appears broader than that of Database B as a result of their concatenation (Fig. S15a). In addition, Database E contains molecules spanning a wide range of molecular weights (Fig. S15b). Meanwhile, we found 323 duplicate molecules (0.6%) in Database E, but removing these duplicates did not improve the predictive performance (Table S15).

Moreover, we examined models pretrained on the same number of molecules obtained from the ZINC15 database instead of Databases A–E as those used for each task, i.e., 25,286 for CO-a and 50,572 for CO-b and CO-c (Fig. 3c). Although ZINC is composed of commercially available drug-like molecules30, these pretrained models were less effective in fine-tuning than those pretrained on Databases B and E (R2 = 0.72 for CO-a; R2 = 0.74 for CO-b; R2 = 0.65 for CO-c). We also tested ReactionT5 (Fig. 3c), a transformer-based model constructed through a two-stage pretraining process using the ZINC and ORD databases, respectively41. Using fine-tuned ReactionT5 models for yield prediction, the mean R2 scores obtained for CO-a, CO-b, and CO-c were 0.39, 0.54, and 0.49, respectively. Although transformer-based models are powerful options for TL, our findings demonstrate that the proposed GCN-based system performs effectively in specialized tasks in which small training datasets can be used, even compared with transformer-based approaches.

Application of the TL strategy to other photocatalytic reactions

To investigate the applicability of the proposed TL strategy, we tried to predict the catalytic activity of OPSs in other photoreactions. In the previous report, we also collected yield data on the C–S bond formation (CS), C–N bond formation (CN), and [2 + 2] cycloaddition (CA) (Fig. 4a)22. For these reactions, the RF models and the GCN model without pretraining exhibited the lower predictive performance than those for the C–O bond formation (Fig. 4b; R2 = 0.11 to 0.21 for CS; R2 = −0.02 to 0.19 for CN; R2 = −0.04 to 0.15 for CA).

a List of target reactions. b Table summarizing the predictive performance. For the GCN models, ABCGG was used as the pretraining label in CS and CA, while Kappa3 was used in CN. For pretraining, Database E was used in CS and CA, and Database F was used in CN. Mean R2 represents the R2 score averaged over 10 runs on the test set, with the standard deviation (SD) shown in parenthesis. The training and test data were randomly split in a 50:50 ratio. The corresponding RMSE values are summarized in Table S23.

When performing supervised pretraining, we used the molecular data from Database E with ABCGG as the pretraining label for CS and CA, while for CN, we employed the data from a combined dataset of Databases B and D (referred to as Database F) with Kappa3 as the pretraining label. As a result of fine-tuning, improvements in the predictive performance were observed across all cases (R2 = 0.34 for CS; R2 = 0.46 for CN; R2 = 0.43 for CA), particularly in comparison with the GCN models without pretraining, but the overall prediction accuracy remained unsatisfactory (Fig. 4b). These GCN models pretrained on virtual databases exhibited the superior predictive performance to GCN models pretrained on ZINC-derived datasets and ReactionT5 (Table S22).

The above-mentioned findings indicate that while the improvement in predictions by the proposed TL method led to satisfactory performance for the C–O bond formation, it failed to deliver adequate performance when the construction of accurate ML models based on SMILES-derived information was intrinsically challenging.

Insights into effective and ineffective pretraining labels

In this study, ABCGG, BertzCT, and Kappa3 were employed as pretraining labels to enhance the performance of GCN models. ABCGG, BertzCT, and Kappa3 represent the Graovac-Ghorbani atom-bond connectivity index, Bertz complexity index, and Hall-Kier third-order kappa index, respectively, providing information on molecular topology, such as complexity, shape, and branching42,43,44. Although these topological indices are derived from different computational formulas, they were found to be highly correlated across the 100 real-world OPSs, as shown in Fig. 5a (for molecules in Databases A–D, see Figs. S16–S19). In addition, each of these topological indices exhibited relatively high correlation coefficients with yields in C–O bond forming reactions (Fig. 5b; 0.52–0.72), and showed moderate correlation coefficients with those in CS, CN, and CA (Fig. 5b; 0.41–0.54).

In contrast, TL based on pretraining using BCUTp-1l as the label did not lead to improved predictive performance compared to models without TL (Tables S10–S12 and S19–S21). BCUTp-1l, which represents first lowest eigenvalue of Burden matrix weighted by polarizability45, showed low correlation coefficients with ABCGG, BertzCT, and Kappa3 (Fig. 5a; 0.09–0.17). Moreover, the poor TL performance appears to be consistent with the lower correlation observed between BCUTp-1l and the product yields (Fig. 5b; 0.04–0.36).

As described in the “Methods” section, the pretraining labels were initially selected based on their high SHAP values in ML models constructed for related photoreactions. Meanwhile, the above-mentioned results suggest that selecting molecular properties showing strong correlation with the prediction target, i.e., product yield, could also be an effective strategy for estimating useful pretraining labels. The ability to flexibly choose task-relevant labels for supervised pretraining is an advantage of the proposed approach. In contrast, while the SHAP-based analysis and correlation analysis can be useful for estimating promising candidates, identifying the single most effective pretraining label remains a challenging task.

Additional information on virtual molecular databases

Figure 6 displays 10 molecules randomly selected from Databases A and B (for Databases C and D, see Figs. S21 and S22). Since the molecules derived from our developed molecular generator were generated without labeling each fragment as the donor, bridge, or acceptor, not all the molecules in Database B include typical donor–acceptor combinations. Meanwhile, Database A contains more systematically structured molecules but also molecules with bonds that are not suitable for OPSs, such as P–S and N–O bonds. In other words, although the molecules constituting the databases are constructed using OPS-like fragments, many of them contain unnatural bonds or fragment combinations different from those in typical donor–acceptor-type OPSs such as 4CzIPN and Acr+-Mes46,47,48. Indeed, as of May 16, 2025, 84 out of 100 OPSs in our dataset could be found in the PubChem database, whereas 94–99% of the molecules in the virtual databases remained unregistered (97.5% in Database A; 94.1% in Database B; 98.9% in Database C; 96.6% in Database D). In addition, while topological indices such as ABCGG, BertzCT, and Kappa3 exhibit moderate to high correlations with the prediction target, i.e., photocatalytic activity, they are not necessarily closely related in terms of their chemical meanings from an organic-chemistry perspective. Nevertheless, it is noteworthy that GCN models pretrained on these topological indices of less realistic molecules outperformed even GCN- and transformer-based approaches that use molecules in existing databases for pretraining.

Moreover, the time required to generate the molecules constituting Database B, which could be the most efficiently constructed, and calculate their BertzCT values using a personal computer (single-core, Intel Xeon w3-2423 2.11 GHz, 64 GB RAM) was approximately 40 minutes (2529 s). Given the efficiency in constructing virtual databases, our protocol provides a data-efficient TL strategy to improve the predictive performance in catalysis research, which lacks sufficient experimental training data.

Potential limitations and future trials

In the proposed strategy, virtual databases were constructed from molecular fragments, which is an approach considered effective for molecule-related tasks. However, a potential limitation is that this method may not be applicable to chemical domains in which such building blocks for database construction cannot be clearly defined, or where readily computable labels, such as topological indices, are unavailable.

Our study also revealed that while the proposed TL method improved prediction accuracy, it still fell short of achieving satisfactory performance for tasks that are inherently challenging for constructing accurate ML models, e.g., CS, CN, and CA. In such cases, it may be necessary to incorporate more directly relevant information, such as physical properties derived from quantum chemical calculations, either as descriptors or as pretraining labels. Additionally, the most effective molecular database varied depending on the target reaction.

Overall, an investigation into the design of custom-tailored virtual molecular databases that are broadly effective for the universal prediction of photocatalytic activity, or applicable to a wider range of reactions and chemical phenomena, remains an important direction for future research. In addition, comparing the current pretraining method with supervised multitask learning or self-supervised learning could offer valuable insights for refining TL strategies based on virtual molecules. Moreover, it is worth exploring whether the proposed TL strategy could be effectively applied to more practical scenarios, such as enhancing surrogate models in ML-based reaction optimization frameworks, including Bayesian optimization.

Conclusions

We have demonstrated the conceptual effectiveness of TL from custom-tailored virtual molecular databases constructed by ourselves, rather than relying on additional experiments or existing databases, in predicting photocatalytic activity, for which experimental training data is insufficient. Databases generated using various policy settings of the molecular generator can provide a data-efficient TL approach, either when used alone or in combination with another database. Notably, molecules with chemically unconventional structures but containing OPS-like fragments provided better performance than molecules extracted from existing databases. In addition, despite not being intuitively related to photocatalytic activity, readily calculated topological indices such as ABCGG, BertzCT, and Kappa3 emerged as effective pretraining labels. We are convinced that this study can be expected to open new avenues for database-assisted strategies in molecule-related sciences.

Methods

Selection of pretraining labels

Pretraining labels were selected from the RDKit and Mordred descriptors. Specifically, we constructed ML models to predict the product yields of related but different photoreactions using either RDKit or Mordred as the descriptor set, and selected descriptors with high SHAP values as pretraining labels. For this process, histogram-based gradient boosting models were employed, using the yield data from 100 OPSs in the nickel/photocatalytic C–O, C–S, or C–N bond formation as the dataset. These reactions correspond to CO-a, CS, and CN, respectively, as described in the main text. Consequently, the following descriptors were identified as candidate pretraining labels: fr_NH0, VSA_EState3, and Kappa3 (from CO-a/RDKit); ABCGG, AATSC3d, and ATSC3d (from CO-a/Mordred); Kappa2, PEOE_VSA6, and BertzCT (from CS/RDKit); GGI10, ATSC4i, and BCUTp-1l (from CS/Mordred); Kappa2, Kappa3, and EState_VSA3 (from CN/RDKit); and Kier3, AATS8p, and Kier2 (from CN/Mordred). Meanwhile, the pretraining label used for CO-a, CO-b, and CO-c, i.e., BertzCT, was derived from the SHAP-based analysis of CS, while that for CS and CA, i.e., ABCGG, and that for CN, i.e., Kappa3, were from CO-a. Thus, all pretraining labels were selected using reactions distinct from the fine-tuning tasks. Detailed results of the SHAP-based analyses are provided in Figs. S6–S8.

Protocol for transfer learning

As pretraining, we performed supervised learning to predict topological indices, such as ABCGG, BertzCT, and Kappa3, for the molecules contained in the virtual database. The dataset was split into training, validation, and test sets with a ratio of 7:1.5:1.5. Model training was conducted for up to 100 epochs, with early stopping applied if the validation loss did not improve for 30 consecutive epochs. In the subsequent performance comparison, 50% of the entire dataset was used for training, while the remaining data was used for testing. Fine-tuning was carried out by replacing the head FC layer with a new one, followed by updating only the parameters of the FC layers while keeping all GCN layers frozen. To mitigate the impact of variations in the splitting pattern between the training and test datasets on predictive performance, we tested 10 different training-test splits with random partitioning and compared the average and standard deviation of the R2 and RMSE scores.

Data availability

The datasets supporting the findings of this study are available within the Supplementary Information, and files summarizing SMILES of molecules used for pretraining and their properties derived from RDKit and Mordred descriptor sets are available at our GitHub repository (https://github.com/Naoki-Noto/P7-20240809/tree/main/Database%20construction/Make_database_adapt1/data/result) and Zenodo49. Source data of all figures is provided as Supplementary Data 1.

Code availability

All code necessary for this research is available at our GitHub repository (https://github.com/Naoki-Noto/P7-20240809) and Zenodo49.

References

Ahneman, D. T., Estrada, J. G., Lin, S., Dreher, S. D. & Doyle, A. G. Predicting reaction performance in C–N cross-coupling using machine learning. Science 360, 186–190 (2018).

Zahrt, A. F. et al. Prediction of higher-selectivity catalysts by computer-driven workflow and machine learning. Science 363, eaau5631 (2019).

Reid, J. P. & Sigman, M. S. Holistic prediction of enantioselectivity in asymmetric catalysis. Nature 571, 343–348 (2019).

Singh, S. et al. A unified machine-learning protocol for asymmetric catalysis as a proof of concept demonstration using asymmetric hydrogenation. Proc. Natl. Acad. Sci. USA117, 1339–1345 (2020).

Pan, S. J. & Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 22, 1345–1359 (2009).

Weiss, K., Khoshgoftaar, T. M. & Wang, D. A survey of transfer learning. J. Big Data 3, 9 (2016).

Tan, C. et al. A survey on deep transfer learning. In Proc. Artificial Neural Networks and Machine Learning–ICANN 2018 270–279 (Springer, 2018).

Cai, C. et al. Transfer learning for drug discovery. J. Med. Chem. 63, 8683–8694 (2020).

Dou, B. et al. Machine learning methods for small data challenges in molecular science. Chem. Rev. 123, 8736–8780 (2023).

van Tilborg, D. et al. Deep learning for low-data drug discovery: Hurdles and opportunities. Curr. Opin. Struct. Biol. 86, 102818 (2024).

Romero, N. A. & Nicewicz, D. A. Organic photoredox catalysis. Chem. Rev. 116, 10075–10166 (2016).

Strieth-Kalthoff, F., James, M. J., Teders, M., Pitzer, L. & Glorius, F. Energy transfer catalysis mediated by visible light: principles, applications, directions. Chem. Soc. Rev. 47, 7190–7202 (2018).

Chan, A. Y. et al. Metallaphotoredox: the merger of photoredox and transition metal catalysis. Chem. Rev. 122, 1485–1542 (2022).

Pitre, S. P. & Overman, L. E. Strategic use of visible-light photoredox catalysis in natural product synthesis. Chem. Rev. 122, 1717–1751 (2022).

Buglak, A. A. et al. Quantitative structure–property relationship modelling for the prediction of singlet oxygen generation by heavy-atom-free BODIPY photosensitizers. Chem. Eur. J. 27, 9934–9947 (2021).

Li, X. et al. Combining machine learning and high-throughput experimentation to discover photocatalytically active organic molecules. Chem. Sci. 12, 10742–10754 (2021).

Kariofillis, S. K. et al. Using data science to guide aryl bromide substrate scope analysis in a Ni/photoredox-catalyzed cross-coupling with acetals as alcohol-derived radical sources. J. Am. Chem. Soc. 144, 1045–1055 (2022).

Noto, N., Yada, A., Yanai, T. & Saito, S. Machine-learning classification for the prediction of catalytic activity of organic photosensitizers in the nickel(II)-salt-induced synthesis of phenols. Angew. Chem. Int. Ed. 62, e202219107 (2023).

Li, X. et al. Sequential closed-loop Bayesian optimization as a guide for organic molecular metallophotocatalyst formulation discovery. Nat. Chem. 16, 1286–1294 (2024).

Schlosser, L., Rana, D., Pflüger, P., Katzenburg, F. & Glorius, F. EnTdecker—A machine learning-based platform for guiding substrate discovery in energy transfer catalysis. J. Am. Chem. Soc. 146, 13266–13275 (2024).

Dai, L. et al. Harnessing electro-descriptors for mechanistic and machine learning analysis of photocatalytic organic reactions. J. Am. Chem. Soc. 146, 19019–19029 (2024).

Noto, N. et al. Transfer learning across different photocatalytic organic reactions. Nat. Commun. 16, 3388 (2025).

Shim, E. et al. Predicting reaction conditions from limited data through active transfer learning. Chem. Sci. 13, 6655–6668 (2022).

Zhang, Z.-J. et al. Data-driven design of new chiral carboxylic acid for construction of indoles with C-central and C–N axial chirality via cobalt catalysis. Nat. Commun. 14, 3149 (2023).

Xu, X.-Y., Liu, L.-G., Xu, L.-C., Zhang, S.-Q. & Hong, X. Transfer learning-enabled ligand prediction for Ni-catalyzed atroposelective Suzuki–Miyaura cross-coupling based on mechanistic similarity: leveraging Pd knowledge for Ni discovery. J. Am. Chem. Soc. 147, 15318–15328 (2025).

Schwaller, P., Vaucher, A. C., Laino, T. & Reymond, J.-L. Prediction of chemical reaction yields using deep learning. Mach. Learn.2, 015016 (2021).

Singh, S. & Sunoj, R. B. A transfer learning protocol for chemical catalysis using a recurrent neural network adapted from natural language processing. Digit. Discov. 1, 303–312 (2022).

King-Smith, E. Transfer learning for a foundational chemistry model. Chem. Sci. 15, 5143–5151 (2024).

Raghunathan, R., Pavlo, D., Matthias, R. & von Lilienfeld, O. A. Quantum chemistry structures and properties of 134 kilo molecules. Sci. Data 1, 140022 (2014).

Sterling, T. & Irwin, J. J. ZINC 15—Ligand discovery for everyone. J. Chem. Inf. Model. 55, 2324–2337 (2015).

Irwin, J. J. et al. free ultralarge-scale chemical database for ligand discovery. J. Chem. Inf. Model. 60, 6065–6073 (2020).

Kearnes, S. M. et al. The open reaction database. J. Am. Chem. Soc. 143, 18820–18826 (2021).

Suzgun, M., Melas-Kyriazis, L., Sarkar, S. K., Kominers, S. D. & Shieber, S. M. The Harvard USPTO patent dataset: a large-scale, well-structured, and multi-purpose corpus of patent applications. In Advances in Neural Information Processing Systems 36 (NeurIPS, 2023).

Zdravzil, B. et al. The ChEMBL database in 2023: a drug discovery platform spanning multiple bioactivity data types and time periods. Nucleic Acids Res. 52, D1180–D1192 (2024).

Bohacek, R. S., McMartin, C. & Guida, W. C. The art and practice of structure-based drug design: a molecular modeling perspective. Med. Res. Rev. 16, 3–50 (1996).

Reymond, J. L. The chemical space project. Acc. Chem. Res. 48, 722–730 (2015).

Xu, S. et al. Self-improving photosensitizer discovery system via Bayesian search with first-principle simulations. J. Am. Chem. Soc. 143, 19769–19777 (2021).

Kipf, T. & Welling, N. M. Semi-supervised classification with graph convolutional networks. https://doi.org/10.48550/arXiv.1609.02907 (2016).

Ramsundar, B., Eastman, P., Walters, P. & Pande, V. Deep Learning for the Life Sciences: Applying Deep Learning to Genomics, Microscopy, Drug Discovery, and More (O’Reilly Media, 2019).

Rappoport, Z. The Chemistry of Phenols (Wiley-VCH, 2003).

Sagawa, T. & Kojima, R. ReactionT5: a pre-trained transformer model for accurate chemical reaction prediction with limited data. J. Cheminform. 17, 126 (2025).

Graovac, A. & Ghorbani, M. A New version of atom-bond connectivity index. Acta Chim. Slov. 57, 609–612 (2010).

Bertz, S. H. The first general index of molecular complexity. J. Am. Chem. Soc. 103, 3599–3601 (1981).

Hall, L. H. & Kier, L. B. The molecular connectivity chi indexes and kappa shape indexes in structure-property modeling. Rev. Comput. Chem. 367, 422 (1991).

Burden, F. R. Molecular identification number for substructure searches. J. Chem. Inf. Comput. Sci. 29, 225–227 (1989).

Fukuzumi, S. et al. Electron-transfer state of 9-mesityl-10-methylacridinium ion with a much longer lifetime and higher energy than that of the natural photosynthetic reaction center. J. Am. Chem. Soc. 126, 1600–1601 (2004).

Uoyama, H., Goushi, K., Shizu, K., Nomura, H. & Adachi, C. Highly efficient organic light-emitting diodes from delayed fluorescence. Nature 492, 234–238 (2012).

Shang, T.-Y. et al. Recent advances of 1,2,3,5-tetrakis(carbazol-9-yl)-4,6-dicyanobenzene (4CzIPN) in photocatalytic transformations. Chem. Commun. 55, 5408–5419 (2019).

Noto, N. et al. Transfer learning from custom-tailored virtual molecular databases to real-world organic photosensitizers for catalytic activity prediction, P7-20240809. https://zenodo.org/records/16928661 (2025).

Acknowledgements

This research was supported by the JSPS/MEXT Grants-in-aid for Transformative Research Areas (A) Digi-TOS (24H01071 to N.N., 24H01096 to M.F., and 21H05221 to R.K.), JSPS/MEXT Grants-in-aid for Young Scientists (23K13744 to N.N.), an AI Research Grant from the Public Foundation of the Chubu Science and Technology Center (N.N.), JSPS/MEXT Grants-in-aid for Specially Promoted Research (23H05404 to S.S.), JSPS/MEXT Grants-in-aid for Transformative Research Areas (A) Green Catalysis Science (23H04904 to S.S.), JSPS/MEXT Grants-in-aid for International Leading Research (22K21346 to S.S.), and JST CREST (JPMJCR22L2 to S.S.).

Author information

Authors and Affiliations

Contributions

Building on the in-house molecular generator developed by T.N., N.N. conceptualized this study and conducted the experiments in collaboration with M.F. and R.K. under the supervision of S.S. The original draft was written by N.N. and edited by all authors.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Chemistry thanks An Su and the other anonymous reviewers for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Noto, N., Nagano, T., Fujinami, M. et al. Transfer learning from custom-tailored virtual molecular databases to real-world organic photosensitizers for catalytic activity prediction. Commun Chem 8, 288 (2025). https://doi.org/10.1038/s42004-025-01678-w

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s42004-025-01678-w