Abstract

Ukraine’s war-exposed youth face many barriers to receiving mental health services, perhaps most notably a dearth of mental health professionals. Experts recommend evaluating digital mental health interventions (DMHIs), which require minimal clinician support. Based on the content of empirically supported treatments for war-exposed youth (e.g., Teaching Recovery Techniques), one strategy that might be useful is self-calming (e.g., paced breathing, progressive muscle relaxation). In this pre-registered randomized controlled trial (ClinicalTrials.gov Record: NCT06217705; first submitted January 12, 2024), we assessed the acceptability, utility, and clinical efficacy of one such DMHI (Project Calm) relative to a usual schoolwork control among a sample of Ukrainian students in grades 4–11. We analyzed outcomes for the full sample and for subsamples with elevated symptoms at baseline. Although Calm was perceived favorably, there were no significant between-group differences in the full sample (N = 626). In subsample analyses, internalizing, externalizing, and trauma symptoms held steady in the Calm group, whereas control participants’ symptoms decreased. Through a focus group with school staff, we generated potential explanations for these results (e.g., interference with youths’ natural coping skills or with fear extinction). Given that we found no evidence that calming skills taught via DMHI are effective for Ukrainian youth, we suggest that researchers test other strategies delivered by DMHI and that calming skills continue to be taught in provider-guided formats.


Globally, one in six children live in places affected by political violence and armed conflict1. Russia’s invasion of Ukraine in February 2022 marked an escalation in the Russo-Ukrainian War that has deeply affected the nation’s children, who are confronted by a myriad of stressors, including displacement and loss of loved ones. As of June 2024, more than 12,000 Ukrainian civilians had been killed, and tens of thousands of civilians had been injured since the escalation of the war2. In the first 100 days, more than five million children were left in need of humanitarian aid3, and in the first year, air raid sirens sounded 16,000 times nationwide, for a total of 22,995 h4.

These stressors exacerbated a mental health crisis, putting 7.5 million children’s mental health at risk1. Indeed, recent reports on Ukrainian adolescents have estimated rates of moderate-to-severe depression at 32%, anxiety at 18%5, and rates of PTSD among school-aged youths at 13%6.

These mental health challenges require clinical intervention, but most children in war-affected countries, including Ukraine, do not receive psychotherapy. Stigma and cultural conceptions of mental health play a role7,8, but the primary barrier is the limited mental health workforce. The provider workforce has been inundated with demand and stretched by war-related disruption of transportation systems and displacement within Ukraine and internationally9,10.

Creative interventions, such as one leveraging community art-based workshops and a brief, hybrid (face-to-face and online) version of the empirically supported Teaching Recovery Techniques (TRT)11, have demonstrated promising results12,13. TRT, a widely accepted approach to treating large numbers of children in need of clinical intervention in low-resource settings, is a manualized treatment based on trauma-focused cognitive behavioral therapy (CBT) that includes seven sessions for youths and two for their caregivers. The youth sessions cover psychoeducation, affective modulation skills, cognitive coping and processing, trauma narratives, in vivo mastery of trauma reminders, and enhancing future safety and development; the caregiver sessions cover psychoeducation about trauma, information about what children learn during their sessions, and discussion of how caregivers can help their children cope effectively. TRT has been tested in many clinical trials, with evidence suggesting that it reduces youths’ grief14, as well as symptoms of PTSD14,15,16,17 and depression14,17. Unfortunately, current treatments such as TRT depend on the availability of human support (e.g., clinicians and lay providers).

One partial solution involves implementing digital mental health interventions (DMHIs) that require little-to-no contact with a clinician. DMHIs can be accessed at convenient times and places, and evidence supports their effectiveness in reducing symptoms of mental health conditions including anxiety, depression, and conduct problems18,19,20,21,22, even in as little as a single session23,24. DMHIs are not a panacea (e.g., they cannot achieve the individual tailoring that psychotherapy can provide25, and they require devices and Internet access that may be unavailable in some war-affected regions26), but they may still help narrow the treatment gap.

The Global Resources fOr War-affected youth (GROW) Network, an international group of experts in emergency and disaster relief, child and adolescent mental health, and digital mental health, recently reviewed the research literature on DMHIs for young people affected by war27. Their systematic search yielded ~7000 hits but only six relevant studies. Most relevant interventions were tested in natural disaster settings. For example, “Bounce Back Now,” a modular CBT intervention for adolescents and their parents targeting PTSD, depression, and substance use, was tested in a randomized controlled trial (RCT) with youths affected by tornadoes in the Southern U.S.; the researchers found small improvements in PTSD and depressive symptoms28. Another study evaluated “Brave Online,” a clinician-guided, CBT-based toolkit focused on youth anxiety symptoms via a non-controlled trial involving youth who had lived through earthquakes in New Zealand; the researchers found that the intervention was associated with reductions in anxiety disorder diagnoses, anxiety symptoms, mood symptoms, and improvements in quality of life from pre- to post-treatment29. Yet only one study evaluated a DMHI for youths affected by war30. Specifically, in a single-arm trial with 125 Syrian adolescent refugees in Lebanon, the researchers evaluated a toolkit called “Happy Helping Hand” with simulations intended to help build coping skills in a series of life-like scenarios related to trauma or stressful experiences (e.g., war, displacement); the intervention was associated with significant improvement in anxiety and depression, as well as increases in well-being from pre- to post-treatment, but this study was not an RCT30. Overall, given the dearth of studies testing DMHIs for war-exposed youths, the GROW Network concluded that there is a clear need for development and rigorous testing of DMHIs for this population.

We developed Project Calm (herein, “Calm”), a self-guided DMHI based on an empirically supported therapeutic principle of change featured in treatments for youth exposed to war such as TRT (i.e., self-calming/emotion regulation31,32,33) and tested it (adapted and translated) via RCT in a region of Ukraine frequently targeted by Russian attacks. Pre-registered aims included evaluating intervention acceptability and comparing changes in mental health symptoms from baseline to 2-month follow-up (when the control group received Calm), and from 2- to 4-month follow-up. We analyzed outcomes separately for the full sample and the subsamples with elevated symptoms at baseline to determine whether Calm might be beneficial as a universal and/or targeted intervention for symptomatic youths. We hypothesized that youths would perceive Calm as acceptable and useful, and that participants randomly assigned to Calm would experience greater reductions in mental health symptoms, as well as greater improvements in calming skills and life satisfaction, compared to participants assigned to the control condition, for the full sample and youths with elevated symptoms at baseline.

Methods

This study was pre-registered on ClinicalTrials.gov (ClinicalTrials.gov Record: NCT06217705; first submitted January 12, 2024). All procedures were approved by the Harvard University IRB and partner school administrators. Pre-registered analyses, statistical code, and de-identified data are publicly available (https://osf.io/9c2b3; minor deviations from the pre-registered plan are described herein). No incidental adverse events were reported by participants or identified by the researchers during the study period.

School partnership

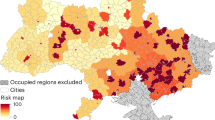

This study was conducted between January and May 2024 in collaboration with four schools in a region of Ukraine that is frequently targeted by Russian attacks (e.g., missile and rocket bombardment). Per community-engaged research guidelines34, study procedures were designed and implemented in partnership with key stakeholders: in each school, one teacher or psychologist served as a “champion,” liaising between the research team and students, other teachers, families, and administrators. To minimize procedural complexity and burden, we used passive (i.e., opt-out) consent procedures. Each champion provided caregivers with the passive consent form and informed them that they had one week to complete an online survey if they wished to have their child excluded from the research; all other students were invited to participate and could decide for themselves whether to assent.

Participants

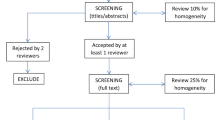

Students in grades 4–11 at our four partner schools were invited to participate. Exclusion criteria were (i) a caregiver opting their child out of the study or the child not assenting, and (ii) the child being unable to engage with the Ukrainian-language program (implicit in assenting, given that the assent form was written in Ukrainian at a reading level similar to that of the intervention). All students in our partner schools were provided with a computer or tablet to participate, and they could use a smartphone if they owned one. In total, 781 students assented, and 626 students (303 randomized to receive Project Calm initially and 323 randomized to the control group, who received Project Calm after 2 months) were included in the intent-to-treat sample (Fig. 1).

Note. The intent-to-treat (ITT) sample was derived from the group of randomized participants, after removing participants who violated randomization. There were five reasons for exclusion from the ITT analyses. Exclusion criteria 1–4 apply to participants who were never randomized. Exclusion criterion 5 applies to participants who violated randomization, namely those who were randomized more than once, often because they restarted the baseline survey after an air raid siren interrupted it. We also excluded participants who were randomized to Project Calm twice, because they received additional exposure to the intervention. At the 2-month assessment, if participants completed Project Calm twice, we excluded their 3- and 4-month responses but retained their 2-month responses. Participants who were randomized more than once but only ever to the control group were retained, because they received only the questionnaires (they did not complete usual schoolwork more than once); for these participants, we retained the first complete survey or, if none was complete, the most complete survey.
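The retention rules in the note above can be expressed as a small decision function. The sketch below is illustrative only: it assumes that a participant's repeated baseline attempts have already been grouped under one hypothetical `participant_id`, and that each attempt records its assigned arm, chronological order, and the proportion of items answered. It does not cover the separate rule for participants who completed Project Calm twice at the 2-month assessment, and it is not the authors' cleaning code (which is posted on OSF).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Attempt:
    """One baseline attempt (hypothetical record layout)."""
    participant_id: str   # hypothetical identifier linking a participant's attempts
    arm: str              # "calm" or "control"
    order: int            # chronological order of the attempt
    completeness: float   # proportion of baseline items answered, 0.0 to 1.0


def resolve_participant(attempts: list[Attempt]) -> Optional[Attempt]:
    """Return the attempt to keep for one participant, or None if excluded."""
    if not attempts:
        raise ValueError("no attempts supplied")
    attempts = sorted(attempts, key=lambda a: a.order)
    arms = {a.arm for a in attempts}
    if len(attempts) == 1:
        return attempts[0]   # randomized once: keep
    if len(arms) > 1:
        return None          # re-randomized into a different arm: exclude
    if arms == {"calm"}:
        return None          # received Project Calm more than once: exclude
    # Randomized to control more than once: keep the first complete survey,
    # otherwise the most complete one (max() keeps the earliest on ties).
    complete = [a for a in attempts if a.completeness >= 1.0]
    if complete:
        return complete[0]
    return max(attempts, key=lambda a: a.completeness)
```

Keeping every rule in one function makes each exclusion decision easy to audit against the note.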

Procedures

In total, 143 caregivers indicated that their child should be excluded. All students who were opted out by a caregiver and those who refused assent completed an alternative activity selected by school personnel. Assenting students completed online questionnaires and were randomized 1:1 within Qualtrics to receive Project Calm or to receive only questionnaires, after which point those who did not receive Project Calm engaged in independent schoolwork (e.g., completing math problems) selected by their teachers with the aim of maximizing ecological validity. School personnel were not explicitly informed of which participants were assigned to which condition, but this may reasonably have been inferred based on the amount of time that each student took to complete their assigned online activity in the presence of school personnel (i.e., questionnaires plus Project Calm versus only questionnaires). All participant-facing interactions were facilitated by school personnel (who met with researchers based in the U.S., England, and Poland by Zoom to review study procedures ahead of time); thus, no members of the research team responsible for designing the intervention, the study procedures, or cleaning and analyzing the data interacted with study participants unless participants or their caregivers contacted these individuals by email with questions about participation. Because randomization was performed automatically, through Qualtrics, no one was able to influence condition allocation. All outcome assessments were completed independently by participants via Qualtrics.

In several schools, air raid sirens interrupted participants’ attempts to begin the study, in some cases on up to three consecutive attempts; each time, all students had to stop the intervention and seek shelter in bunkers. We allowed all interrupted participants to resume the study when they were able to do so, but many resumed on different devices than those they had used originally, so their progress was lost (although their partially completed responses remained available to the researchers and were used for data cleaning and exclusion). As a result, some participants restarted the baseline survey from the beginning and were re-randomized. If the new assignment conflicted with a participant’s previously assigned group, their data were excluded from analyses.

Participants were invited to complete online questionnaires 1 month (4 weeks), 2 months, 3 months, and 4 months post-intervention. At the 2-month assessment time point, students who did not receive Project Calm at baseline received Project Calm following the standard questionnaires, and those who received Project Calm at baseline received only the questionnaires. Students completed all follow-up assessments during class time to maximize response quality and completion rates. As compensation, we donated funds to participating students’ schools in amounts proportional to the number of students who participated. Study procedures ensured that all students had the choice to participate or to engage in an alternate activity, preventing teachers from influencing recruitment for personal gain. While teachers and administrators received a fixed payment for facilitating the study, the only participation-based compensation was a school-wide donation for resources, ensuring no individual staff member had a personal incentive to attempt to sway student participation.

At the end of the study, we conducted an exploratory (not pre-registered) focus group with school personnel involved with the study to solicit feedback on the study procedures and to ask for their putative explanations for the results based on their experience carrying out the study procedures and interacting with their students.

Intervention

Project Calm (https://osf.io/b5vt4) is a ~30-min self-guided DMHI built in Qualtrics. The intervention was developed by researchers in the U.S. based on other DMHIs that are effective for adolescent mental health23,24,35,36, and it was refined through feasibility and pilot testing with Canadian and American youths. The intervention was then adapted (with help from school personnel and a Ukrainian psychiatrist specializing in treating trauma-exposed youths), translated, and back-translated with help from a professional translation service. Project Calm uses exercises and graphics to teach youths strategies for calming themselves when they experience overwhelming negative emotions. It begins by asking youths to select a challenge that they have dealt with or might deal with in the future: (a) “I feel stressed, nervous, and/or worried a lot,” (b) “I often feel tense, unhappy, and/or bored,” or (c) “I feel angry, argue, and get in trouble more than I would like to.” Depending on the answer selected, the youth is told that the challenge is common and can make various aspects of daily life harder. The youth then rates how they feel on a scale from 1 (extremely calm) to 10 (extremely tense) before learning the first calming strategy: slow, deep breaths. In this exercise, youths follow along with an animation to practice slow, deep breathing: they breathe in through the nose, exhale for slightly longer than they inhaled, and place a hand on their stomach to feel it inflate and deflate like a balloon.

Project Calm then asks youths to note the sensations that they feel in their bodies when stressed (e.g., tense muscles, chest pain, tingling, and sweating), and informs them that calming skills can help to regulate these responses to stress, even if they are unable to change the situation directly. Next, users learn about a research study in which other youths who practiced calming skills reported improvements in the mental health problem initially selected by the user, and they read a vignette about another young person who used calming skills to address the same issue. Participants then learn how to picture a peaceful place and mindfully notice the sensations (e.g., sights and sounds) associated with that place. Additionally, participants learn how to perform progressive muscle relaxation by listening to audio that instructs them to tense and relax different parts of their body while slowing their breathing; participants can download this audio file and an associated transcript for future reference. Finally, participants learn a skill called “Quick Calming” that combines the aforementioned strategies and can be done relatively quickly: youths are instructed to breathe in through their nose and hold the breath for a few seconds, picture their calming place and hold that image in their mind while they hold their breath, relax one part of their body that feels tense, and breathe out as slowly as possible. Youths listen to an audio vignette of another youth who uses the Quick Calming strategy, and then they practice the skill by following step-by-step instructions that appear on the screen.

After each exercise, participants rate their level of tension and are informed that the more they practice the more helpful these skills are, that different skills work for different people, and that they should experiment with different skills. At the end of the intervention, youths advise a hypothetical younger student who wants their help with staying calmer (e.g., selecting which skill they should practice and when they should practice it), and the intervention concludes with the youth making a plan to practice a calming strategy in their daily life.

Because the program was originally designed in English, we worked with a professional translation service to translate (English to Ukrainian) the intervention and then to back-translate it (Ukrainian to English) to assess drift from the original intended meaning, after which point, we made a final round of edits to align the Ukrainian version with the original English version. We also worked with Ukrainian colleagues to ensure that the intervention content was culturally appropriate for Ukrainian youth.

To maximize ecological validity, we used a usual schoolwork control condition in which typical school activities were selected by our champions—teachers and psychologists at our partner schools. We asked teachers to select activities that they believed most closely aligned with those in which students would be participating on a typical school day (e.g., working independently on math problems or completing assignments to prepare for their next class period). With this design, we aimed to construct a comparison condition that was feasible for schools to execute, while also establishing a baseline for what symptom trajectories might look like in the absence of any psychological intervention to control for the passage of time and environmental stressors (namely, those related to the war) that would theoretically be experienced similarly between groups.

Primary outcome measures

The Internalizing Subscale of the Behavior and Feeling Survey (BFS37) is a six-item measure used to evaluate mental health symptoms (namely, symptoms of anxiety and depression) at all time points. All items are rated on a scale from 0 (not a problem) to 4 (a very big problem). Items include statements such as “I feel down or depressed” and “I feel nervous or afraid.” The BFS has been validated in a sample of youths ages 7–15, with results indicating a robust factor structure, internal consistency, test-retest reliability, convergent and discriminant validity with similar measures, and utility for tracking changes in symptoms over time37. In our study, McDonald’s ωt38 computed at pre-intervention, 1-, 2-, 3-, and 4-month follow-ups were 0.89, 0.94, 0.97, 0.98, and 0.95, respectively. As recommended by experts39, we used McDonald’s ωt as our reliability coefficient because Cronbach’s α has been criticized consistently (e.g.,40). One major issue with Cronbach’s α is its assumption of tau-equivalence, that is, that all items load equally onto a single factor, an assumption rarely met in practice. McDonald’s ωt is based on factor-analytic techniques and does not require tau-equivalence41. The main advantages of ωt over α are as follows: (1) ωt makes fewer and more realistic assumptions than α (e.g., α is biased when tau-equivalence is violated, which is the common case in practice); (2) inflation and attenuation of internal consistency estimates are far less likely with ωt; (3) “ωt if item deleted” computed in a sample is more likely to reflect the population reliability that would result from removing a given item; and (4) reporting ωt with a confidence interval better reflects the variability in the estimation process, conveying a more accurate degree of confidence in the consistency of a scale’s administration.
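To make the contrast between ωt and α concrete, the sketch below computes both for a single-factor (congeneric) model. Here ω = (Σλ)² / [(Σλ)² + Σθ], where λ are the item loadings and θ the unique (error) variances, and α is computed from the model-implied covariance matrix. The loadings are made-up illustrative values, not estimates from this study; in practice, loadings would come from a fitted factor model, and the ωt values reported above may be based on a more general model than this one-factor case.

```python
from typing import Sequence


def omega_total(loadings: Sequence[float], uniquenesses: Sequence[float]) -> float:
    """McDonald's omega for a one-factor model: (sum lambda)^2 / ((sum lambda)^2 + sum theta)."""
    common = sum(loadings) ** 2
    return common / (common + sum(uniquenesses))


def implied_covariance(loadings: Sequence[float], uniquenesses: Sequence[float]) -> list[list[float]]:
    """Model-implied item covariance matrix: lambda * lambda^T + diag(theta)."""
    k = len(loadings)
    return [
        [loadings[i] * loadings[j] + (uniquenesses[i] if i == j else 0.0) for j in range(k)]
        for i in range(k)
    ]


def cronbach_alpha(cov: list[list[float]]) -> float:
    """Cronbach's alpha from an item covariance matrix."""
    k = len(cov)
    total_variance = sum(sum(row) for row in cov)    # variance of the sum score
    item_variances = sum(cov[i][i] for i in range(k))
    return k / (k - 1) * (1 - item_variances / total_variance)


# Illustrative standardized loadings that violate tau-equivalence (not study data)
lam = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4]
theta = [1 - x ** 2 for x in lam]
cov = implied_covariance(lam, theta)
print(f"omega = {omega_total(lam, theta):.3f}, alpha = {cronbach_alpha(cov):.3f}")
# omega = 0.822, alpha = 0.811
```

With unequal loadings α falls below ω; setting all loadings equal (tau-equivalence) makes the two coincide.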

Perceived program acceptability and helpfulness were assessed immediately post-intervention via the Program Feedback Scale42. This scale consists of seven items (see Supplemental Table 26) rated on a 1–5 scale, with higher scores indicating greater acceptability and helpfulness. Items include statements such as “I enjoyed the activity” and “I agreed with the program’s message.” In line with prior work23,42 and our pre-registration, scores greater than or equal to 3.5/5.0 were considered acceptable. McDonald’s ωt computed at post-intervention in the group that received Project Calm immediately was 0.94, and 0.97 for the group that received Project Calm after a delay.
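The section does not specify whether the 3.5 cutoff is applied to each item's sample mean or to the overall scale mean, so this minimal sketch reports both. The rating layout (one row per participant, one column per item) is an assumption, and the ratings in the usage line are invented for illustration.

```python
from statistics import mean


def acceptability_summary(ratings: list[list[float]], threshold: float = 3.5) -> dict:
    """ratings[i][j] is participant i's 1-5 rating on feedback item j."""
    n_items = len(ratings[0])
    item_means = [mean(row[j] for row in ratings) for j in range(n_items)]
    overall = mean(item_means)
    return {
        "item_means": item_means,
        "item_acceptable": [m >= threshold for m in item_means],
        "overall_mean": overall,
        "overall_acceptable": overall >= threshold,
    }


# Invented ratings from three participants on three items
summary = acceptability_summary([[4, 5, 3], [4, 4, 3], [5, 4, 3]])
print(summary["item_acceptable"], summary["overall_acceptable"])
# [True, True, False] True
```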

Secondary outcome measures

The Externalizing Subscale of the BFS37 is a six-item measure used to evaluate mental health symptoms at all time points. All items are rated on a scale from 0 (not a problem) to 4 (a very big problem). Sample statements include “I break rules at home or at school” and “I am rude or disrespectful to people.” In our study, McDonald’s ωt computed at pre-intervention, 1-, 2-, 3-, and 4-month follow-ups were 0.89, 0.94, 0.98, 0.98, and 0.95, respectively.

The Short PTSD Rating Interview (SPRINT) is a brief, global assessment of post-traumatic stress disorder (PTSD) that has demonstrated good test-retest reliability, internal consistency, and convergent and divergent validity with other assessments of PTSD43. We used a slightly modified version of the SPRINT in which youths were asked to endorse any applicable potentially traumatic events, generated with help from a Ukrainian child psychiatrist with extensive experience providing clinical care to displaced Ukrainian children; these events and the frequencies with which they were endorsed at baseline are summarized in Table 1. After endorsing one or more potentially traumatic events, youths rated four items corresponding to the four PTSD symptom clusters (i.e., intrusion, avoidance, numbing, and hyperarousal), as well as four additional items assessing somatic distress, being upset by stressful events, interference with school/work or daily activities, and interference with relationships with family or friends. Each item is rated on a five-point scale ranging from 0 (not at all) to 4 (very much). McDonald’s ωt computed at pre-intervention, 1-, 2-, 3-, and 4-month follow-ups were 0.90, 0.93, 0.97, 0.98, and 0.94, respectively.

The ability to regulate one’s emotions using calming skills was assessed using an original three-item measure, with each item rated on a scale from 0 (completely disagree) to 10 (completely agree). Items are as follows: “When I feel tense or nervous, I can calm myself,” “When I feel scared, I can calm myself,” and “When I feel angry, I can calm myself.” McDonald’s ωt computed at pre-intervention, 1-, 2-, 3-, and 4-month follow-ups were 0.88, 0.91, 0.94, 0.95, and 0.94, respectively.

The functional Top Problems Assessment (TPA44) collects severity ratings (from 0 [not a problem] to 4 [a very big problem]) for the three functional problems that the youth identifies as most important to them. Psychometric analyses have demonstrated that the TPA has strong test-retest reliability, convergent and discriminant validity with standardized measures of youth mental health, and sensitivity to change during treatment44. To protect participants’ privacy and comply with our IRB-approved data safety plan, we used anonymous survey links (i.e., links that are not tied to any given participant and can be reused across multiple participants). Because of this requirement and technical limitations of our survey software, we were unable to carry forward youths’ top three problems to be re-rated at subsequent time points, as has been done in other studies using the TPA (e.g.,45). Instead, participants selected their top three functional problems anew at each time point and rated their severity; these problems may or may not have matched those endorsed at baseline. McDonald’s ωt computed at pre-intervention, 1-, 2-, 3-, and 4-month follow-ups were 0.90, 0.88, 0.89, 0.89, and 0.88, respectively.

Life satisfaction was evaluated using the Brief Multidimensional Students’ Life Satisfaction Scale—Peabody Treatment Progress Battery version, a six-item measure with sound psychometric properties (BMSLSS-PTPB46). This measure evaluates youths’ self-reported overall life satisfaction, as well as satisfaction with their family life, friendships, school experience, self, and where they live (using a five-point scale with anchors ranging from very unhappy to very happy). McDonald’s ωt computed at pre-intervention, 1-, 2-, 3-, and 4-month follow-ups were 0.91, 0.94, 0.94, 0.97, and 0.96, respectively.

Sensory processing sensitivity (SPS) is increasingly recognized as a key factor influencing stress susceptibility, coping, and responses to therapeutic interventions47, particularly among youth exposed to severe stressors48,49. Given its association with heightened stress responses and long-term health outcomes50,51, SPS was included as a pre-registered outcome to examine its malleability in response to intervention. The short version of the Highly Sensitive Person Scale (HSP-10)52,53 is a 10-item scale validated in studies of people aged 12–25. It was developed from the U.S. Highly Sensitive Person Scale54 by selecting the items with the highest informational value based on item response theory analyses. The HSP-10 can be used to compare age groups and to assess change over time52,53. Sample items from the version used in this study include “Loud noises make me feel uncomfortable” and “I don’t like it when things change in my life.” Agreement with these statements is rated on a seven-point Likert scale ranging from 1 (not at all) to 7 (definitely). McDonald’s ωt computed at pre-intervention, 1-, 2-, 3-, and 4-month follow-ups were 0.88, 0.91, 0.96, 0.97, and 0.93, respectively.

Immediately prior to receiving Project Calm (either at baseline or at the 2-month post-baseline time point), participants rated their expectations of the intervention using an original measure of treatment expectations25. This four-item measure evaluates participants’ pre-intervention expectations regarding various aspects of the intervention (e.g., “How much do you think you will like doing the online wellness activity?”; “How much do you think the online wellness activity will help you at school?”). These items were rated from 0 (not at all) to 10 (a lot). McDonald’s ωt computed at pre-intervention for the group that received Project Calm immediately was 0.94 and 0.97 for the group that received Project Calm after a delay.

Analysis plan

For our primary test of intervention effects (i.e., beta-distributed mixed-effects models examining the time [baseline, 1- or 2-month follow-up] × condition [Calm or control] interaction), an a priori power analysis indicated that 865 participants were needed to detect the smallest effect size of interest (SESOI; d = 0.20) using two-tailed tests with α = 0.05. Pragmatically, however, we were limited by the number of students enrolled in our partner schools and by the number of these students and their caregivers who were willing to participate. In practice, our intent-to-treat sample (N = 626) was smaller than our target, albeit with substantially less attrition than expected. We were therefore underpowered relative to our original estimate, and we could not recruit additional students during the school year because every student in our partner schools whose caregivers had not opted them out had already been invited to participate.
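For intuition about the SESOI, the sketch below applies the textbook normal-approximation formula for a two-group comparison of means, n per group = 2(z_α/2 + z_β)² / d². It is deliberately simplified: it ignores repeated measures, clustering within schools, and the beta-distributed outcome, and the section does not state the target power, so 80% is an assumption. It therefore does not reproduce the pre-registered figure of 865, which came from the authors' own power analysis.

```python
from math import ceil, sqrt
from statistics import NormalDist

_Z = NormalDist()  # standard normal distribution


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-group n for a two-sided, two-sample comparison of means (normal approximation)."""
    z_alpha = _Z.inv_cdf(1 - alpha / 2)
    z_beta = _Z.inv_cdf(power)
    return ceil(2 * ((z_alpha + z_beta) / d) ** 2)


def approx_power(d: float, n1: int, n2: int, alpha: float = 0.05) -> float:
    """Approximate two-sided power, ignoring the negligible opposite tail."""
    z_alpha = _Z.inv_cdf(1 - alpha / 2)
    return _Z.cdf(d / sqrt(1 / n1 + 1 / n2) - z_alpha)


print(n_per_group(0.20))                       # 393
print(round(approx_power(0.20, 303, 323), 2))  # 0.71
```

Under these simplifying assumptions, roughly 393 participants per group would be needed, and the achieved arm sizes (303 and 323) give about 71% power for d = 0.20.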

Response rates for follow-up assessments (68.37% to 90.26%) were higher than those in related research55. We used standard available-case analysis following an intent-to-treat approach, computing scale scores as the mean of available items to account for item-level missingness. For the generalized linear mixed-effects models (GLMMs; see below), the maximum likelihood implementation in the glmmTMB package uses all available data56,57. We tested whether the proportions of participants who failed to respond to follow-up surveys were equal across conditions. These tests yielded no significant between-condition differences in response rates at either primary assessment time point (attrition of 9.90% in Calm vs. 9.60% in control at 1 month [p = 0.999] and 10.89% in Calm vs. 9.91% in control at 2 months [p = 0.785]).
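The attrition comparison is a test of equal proportions in a 2 × 2 table (condition × responded or not). The sketch below implements a Pearson chi-square test with Yates' continuity correction, which is the default for 2 × 2 tables in R's `chisq.test` and `prop.test`; whether the authors used this exact test is an assumption. The non-responder counts are back-calculated from the reported percentages and arm sizes (e.g., 9.90% of 303 ≈ 30), so they may not match the raw data exactly.

```python
from math import sqrt
from statistics import NormalDist


def chisq_2x2_yates(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Chi-square test of equal proportions with Yates' correction.

    x = non-responders, n = participants randomized, per arm.
    Returns (chi-square statistic, p-value on 1 df).
    """
    a, b = x1, n1 - x1   # arm 1: missing, responded
    c, d = x2, n2 - x2   # arm 2: missing, responded
    n = n1 + n2
    # Continuity correction, floored at zero so it never overshoots the observed difference
    diff = max(0.0, abs(a * d - b * c) - n / 2)
    chi2 = n * diff ** 2 / (n1 * n2 * (a + c) * (b + d))
    # With 1 df, chi-square equals z squared, so p = 2 * (1 - Phi(sqrt(chi2)))
    p = 2 * (1 - NormalDist().cdf(sqrt(chi2)))
    return chi2, p


# Back-calculated counts (assumption): Calm n = 303, control n = 323
_, p_1month = chisq_2x2_yates(30, 303, 31, 323)
_, p_2month = chisq_2x2_yates(33, 303, 32, 323)
print(f"1-month p = {p_1month:.3f}; 2-month p = {p_2month:.3f}")
```

With these reconstructed counts, the 2-month comparison gives p ≈ 0.785, in line with the reported value; at 1 month the correction absorbs the entire difference and gives p = 1.0 rather than the reported 0.999, so the raw counts may differ slightly.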

To determine whether the conditions were balanced on demographic factors and clinical measures (e.g., the internalizing subscale of the BFS), we compared baseline characteristics. No significant differences were detected between conditions for age (t = −0.44, p = 0.661), gender (χ²(2, N = 625) = 0.34, p = 0.843), internalizing symptoms (t = −1.08, p = 0.282), externalizing symptoms (t = −0.52, p = 0.606), top problem severity (t = 1.45, p = 0.147), trauma symptoms (t = −0.61, p = 0.545), self-reported ability to calm oneself (t = −1.11, p = 0.266), life satisfaction (t = 0.23, p = 0.816), or sensory sensitivity (t = −1.10, p = 0.270). Given the balance between groups, as well as the lack of pre-registered, theory-driven covariates, no covariates were included in the main analyses.

In line with standardized measures of youth mental health (e.g.,58), we defined the elevated baseline symptoms subsample for each measure as participants scoring >1 SD above the sample mean on that measure at baseline (or >1 SD below the sample mean for measures on which higher scores indicate better functioning, e.g., life satisfaction).
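The subsample rule above can be sketched as a simple flagging function. Two details are assumptions not stated in the text: the sample (n − 1) standard deviation is used, and baseline scores are already item-level means with no missing values. The example scores are hypothetical.

```python
from statistics import mean, stdev


def elevated_flags(scores: list[float], higher_is_better: bool = False) -> list[bool]:
    """Flag participants more than 1 SD from the sample mean in the 'worse'
    direction: above mean + SD for symptom measures, below mean - SD for
    measures where higher scores indicate better functioning."""
    m, sd = mean(scores), stdev(scores)   # stdev = sample SD (assumption)
    if higher_is_better:
        return [s < m - sd for s in scores]
    return [s > m + sd for s in scores]


# Hypothetical baseline item-mean scores
scores = [0.2, 0.5, 0.8, 1.0, 1.2, 2.6]
print(elevated_flags(scores))                         # symptom-style measure
print(elevated_flags(scores, higher_is_better=True))  # e.g., life satisfaction
```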

To examine the effects of Project Calm on outcome trajectories within the full sample and the elevated subsamples, we implemented beta-distributed linear mixed-effects models (belonging to the GLMM class) using the glmmTMB package in RStudio56,59. In each between-groups model (i.e., baseline to 2-month follow-up), the outcome was predicted by a Condition * Time interaction (Condition * Time * Moderator in the exploratory analyses). The within-group models covered baseline to 4-month follow-up for the group who received Project Calm initially (to test whether Project Calm's effects held over time) and 2-month (i.e., pre-intervention) to 4-month follow-up for the group who received Project Calm after a delay; for these models, the data were subset to include only the immediate-Project Calm group or the delayed-Project Calm group, and each outcome was predicted by time alone. To account for individual differences in baseline scores, we specified random intercepts for participants and recruitment sites in all models. We initially attempted to fit models with random slopes for time to capture individual variability in trajectories. However, these models frequently produced convergence failures or singularity warnings, which are common indicators of overfitting when the data do not sufficiently support the model's complexity60. This problem was most pronounced in analyses involving subgroups and interaction effects. Failure to converge indicates that a model is too complex to be properly supported by the data, and our beta-distributed models (which were necessary to accommodate the skewed, double-bounded distributions of our response variables; see below) were already more complex than Gaussian models. We therefore adopted a more conservative random-effects structure to avoid model misspecification.
Moreover, because our study was underpowered, we prioritized conserving statistical power: including random slopes can reduce statistical power, especially when random slope variances are small or data are limited61.
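To make the model structure concrete, the sketch below simulates data from a beta mixed model of the kind described: a logit link, categorical time, a Condition * Time fixed-effect structure, and random intercepts (no random slopes) for participants nested within sites. It does not fit the model; fitting was done with glmmTMB in R, where an illustrative (not the authors') specification would look roughly like `y ~ condition * time + (1 | site) + (1 | id)` with `family = beta_family()`. All parameter values are hypothetical, and the mean-precision parameterization Beta(μφ, (1 − μ)φ) is a common choice assumed here.

```python
import math
import random


def inv_logit(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def simulate(n_sites: int = 4, n_per_site: int = 25, phi: float = 12.0, seed: int = 2024) -> list[dict]:
    """Simulate outcomes in (0, 1) from a beta GLMM with random intercepts
    for participants nested in sites. All effect sizes are hypothetical."""
    rng = random.Random(seed)
    intercept = -1.0                          # logit-scale baseline mean
    b_cond = 0.05                             # condition difference at baseline
    b_time = {0: 0.0, 1: -0.15, 2: -0.25}     # 0 = baseline, 1 = 1 month, 2 = 2 months
    b_inter = {0: 0.0, 1: 0.0, 2: 0.0}        # Condition * Time terms (null here)
    sd_site, sd_person = 0.2, 0.6             # random-intercept SDs
    rows = []
    for site in range(n_sites):
        u_site = rng.gauss(0.0, sd_site)
        for k in range(n_per_site):
            pid = f"s{site}-p{k}"
            u_person = rng.gauss(0.0, sd_person)
            cond = rng.randint(0, 1)          # 0/1 condition indicator
            for t in (0, 1, 2):
                eta = (intercept + b_cond * cond + b_time[t]
                       + b_inter[t] * cond + u_site + u_person)
                mu = inv_logit(eta)           # mean on the (0, 1) scale
                y = rng.betavariate(mu * phi, (1.0 - mu) * phi)
                rows.append({"site": site, "id": pid, "condition": cond, "time": t, "y": y})
    return rows


if __name__ == "__main__":
    data = simulate()
    print(len(data), min(r["y"] for r in data), max(r["y"] for r in data))
```

Adding a random slope would mean drawing a person-specific time effect as well; the text explains why that extra variance component was dropped.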

We used beta-distributed linear mixed-effects models (belonging to the GLMM class), rather than the pre-registered Gaussian linear mixed-effects models, because the distributions of our outcome variables violated the normality assumption62,63. Because our data were right-skewed with natural boundaries at the scale endpoints, we transformed them to a (0,1) scale to fit the beta-distributed models (using the default logit link function). The glmmTMB package reports beta-model parameters on the logit scale by default; to increase interpretability, we exponentiated these parameters to convert them to odds ratios (ORs)64. The distance between an OR and 1 indicates the magnitude of the effect (values further from 1 in either direction indicate larger effects). To keep the direction of improvement consistent across outcomes, we adjusted which condition served as the reference category: Project Calm was the reference category for outcomes on which lower scores are better (e.g., depression and anxiety symptoms on the BFS), and the control condition was the reference category for outcomes on which higher scores are better (e.g., life satisfaction on the Peabody measure). Consequently, for all between-groups comparisons, ORs greater than 1 represent improvement in the Project Calm condition relative to control, and ORs less than 1 represent improvement in the control condition relative to Project Calm.
For all within-group analyses, ORs greater than 1 represent improvement within a given group (taking into account whether higher or lower scores on a given outcome measure represent improvement), and ORs less than 1 represent relative worsening within that group.
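The scale transformation and OR conventions described above can be sketched in a few helper functions. The text does not say which (0, 1) transformation was used; the `(p * (n - 1) + 0.5) / n` squeeze below is a commonly used option and is an assumption here. The orientation rule for within-group ORs (inverting the OR when lower scores are better) is likewise an assumed reading that appears consistent with the reported values, e.g., a raw odds ratio of 1.75 for rising symptoms would be reported as about 0.57.

```python
import math


def squeeze_to_open_unit(y: float, lo: float, hi: float, n: int) -> float:
    """Map a score on [lo, hi] into the open interval (0, 1).

    Rescales to [0, 1], then applies (p * (n - 1) + 0.5) / n, which pulls
    the endpoints slightly inward so the beta likelihood is defined.
    n is the sample size. (Assumed transformation.)
    """
    p = (y - lo) / (hi - lo)
    return (p * (n - 1) + 0.5) / n


def logit_to_or(coef: float) -> float:
    """Exponentiate a logit-scale coefficient to obtain an odds ratio."""
    return math.exp(coef)


def swap_reference(or_value: float) -> float:
    """Swapping the reference category flips the sign of the logit
    coefficient, which inverts the odds ratio."""
    return 1.0 / or_value


def orient_within_group(or_time: float, lower_is_better: bool) -> float:
    """Orient a within-group OR so that values > 1 mean improvement."""
    return 1.0 / or_time if lower_is_better else or_time


if __name__ == "__main__":
    # Hypothetical 0-4 item-mean score in a sample of 626
    print(squeeze_to_open_unit(0, 0, 4, 626), squeeze_to_open_unit(4, 0, 4, 626))
    print(swap_reference(0.40))               # same effect, other reference
    print(orient_within_group(1.75, True))    # symptoms rose: reported as < 1
```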

As a sensitivity analysis, we examined whether the results of the between-groups analyses reported above held up when models were re-run with a reduced sample of 582 students (NCalm = 259) who spent at least 15 min completing Calm, and thus were unlikely to have rushed through the intervention.

Given our school champions’ hypothesis that older students may have benefited more from Calm than younger students, we also examined the interaction between condition (Calm vs control), time, and participant age (numeric). We also re-ran our between-group analyses (full sample and elevated subsamples) with participant age at baseline included as a covariate.
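A Condition * Time * Age model expands to every main effect and every interaction among the three terms; the three-way term is the one that asks whether the Calm-versus-control difference in change over time depends on age. The sketch below lists those terms and counts the fixed-effect coefficients, assuming (not stated explicitly in this paragraph) that time is a three-level categorical factor (baseline, 1 month, 2 months), condition has two levels, and age enters as a single numeric column.

```python
from itertools import combinations


def factorial_terms(factors: list[str]) -> list[str]:
    """List the terms of a full-factorial formula (e.g., Condition * Time * Age):
    all main effects, then all two-way, three-way, ... interactions."""
    terms = []
    for k in range(1, len(factors) + 1):
        for combo in combinations(factors, k):
            terms.append(":".join(combo))
    return terms


def n_fixed_effects(factors: list[str], cols: dict[str, int]) -> int:
    """Count fixed-effect coefficients including the intercept. cols gives the
    number of design columns per factor (levels - 1 for categorical, 1 for numeric)."""
    total = 1  # intercept
    for k in range(1, len(factors) + 1):
        for combo in combinations(factors, k):
            width = 1
            for f in combo:
                width *= cols[f]
            total += width
    return total


if __name__ == "__main__":
    factors = ["Condition", "Time", "Age"]
    print(factorial_terms(factors))
    print(n_fixed_effects(factors, {"Condition": 1, "Time": 2, "Age": 1}))
```

Under these coding assumptions the model has 12 fixed-effect coefficients, two of which belong to the Condition:Time:Age term (one per follow-up time point).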

Additionally, given that many participants (NCalm = 73) were disrupted by air raid sirens during their initial attempts to complete the baseline session, we examined whether those who were interrupted reported worse outcome trajectories than those who were not. Specifically, we examined the interaction effect between disruption status (disrupted vs not disrupted) and time among immediate-Calm participants.

Further, we examined whether participants’ pre-intervention expectancies of Calm explained symptom trajectories. We speculated that youths with higher expectations of Calm may have been disappointed with the intervention’s impact, and this mismatch between expectancies and perceived impact may have made youths less hopeful that their symptoms would ever improve. Thus, we examined the interaction effect between time and participant expectations among immediate-Calm participants.

Finally, given the possibility that youths who perceived Calm less favorably in terms of acceptability and helpfulness may have been less likely to implement self-calming skills in daily life, and therefore less likely to experience symptom improvements, we explored the association between post-intervention ratings (Program Feedback Scale) and outcome trajectories. Specifically, we examined the interaction effect between post-intervention ratings and time among immediate-Calm participants.

Ethics and inclusion statement

This study was conducted in collaboration with Ukrainian school administrators, psychologists, teachers, and researchers to ensure cultural relevance, ethical integrity, and participant safety. Ethical approval was obtained from the Harvard University IRB and Ukrainian school administrators, and all procedures were developed in collaboration with our interdisciplinary team and adhered to international ethical standards. The intervention was adapted in consultation with Ukrainian colleagues and translated by professional translators, and findings were contextualized with local input through a focus group with school personnel.

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Results

Sample characteristics

Participant demographics and the frequency with which participants reported experiencing potentially traumatic events are shown in Table 1. Average internalizing scores across conditions and time for the full sample are shown in Table 2 and model output can be found in Table 3; average internalizing scores across conditions and time for the elevated subsample are shown in Table 4 and model output can be found in Table 5. Average scores across conditions and time for other outcome measures can be found in the Supplemental Materials.

Primary analyses

Students rated Calm (median completion time = 18.40 min) as acceptable and useful, as indexed by a mean item-level rating above 3.5 out of 5.0 (M = 3.86, SD = 0.92; Supplemental Table 26).

In the full sample, participants who received Calm at baseline (“immediate-Calm”) did not report significantly greater reductions in internalizing symptoms than control participants at either assessment (i.e., 1 or 2 months) in our model with a Condition * Time interaction (Tables 2 and 3, Fig. 2).

Note. Mean scores in the figure fall within a standardized range between 0 and 1 after transforming the data for beta regression, whereas Table 2 presents the mean scores on the original scale. Internalizing symptoms were assessed via the internalizing subscale of the Behavior and Feelings Survey (BFS). Blue dots indicate data points for participants in the intervention group (Project Calm) and red dots indicate data points for participants in the control condition; the same color scheme is used for trajectory lines.

Within-condition analyses for immediate-Calm participants (i.e., baseline through 4 months) revealed that, compared to baseline, internalizing symptoms were significantly higher at 2 months (Odds Ratio [OR] = 0.57, 95% CI [0.46, 0.69], p < 0.001) and at 3 months (OR = 0.44, 95% CI [0.35, 0.54], p < 0.001; Supplemental Table 27).

Within-condition analyses for delayed-Calm participants (i.e., 2 months [immediately pre-intervention] through 4 months) revealed that internalizing symptoms were lower at 4 months than at 2 months (OR = 1.45, CI [1.18, 1.78], p < 0.001; Supplemental Table 35).

Control participants with elevated internalizing symptoms at baseline reported a significantly greater reduction in symptoms relative to Calm participants (whose symptoms remained relatively steady across the first 2 months) at 1-month follow-up (OR = 0.40, CI [0.22, 0.74], p = 0.003; Tables 4 and 5, Fig. 3).

Note. Mean scores in the figure fall within a standardized range between 0 and 1 after transforming the data for beta regression, whereas Table 4 presents the mean scores on the original scale. Internalizing symptoms were assessed via the internalizing subscale of the Behavior and Feelings Survey (BFS). Blue dots indicate data points for participants in the intervention group (Project Calm) and red dots indicate data points for participants in the control condition; the same color scheme is used for trajectory lines.

Within-condition analyses for immediate-Calm participants with elevated internalizing symptoms at baseline revealed that—compared to baseline—internalizing symptoms were significantly lower at 4-month follow-up (OR = 1.66, CI [1.01, 2.75], p = 0.048; Supplemental Table 28).

Within-condition analyses for delayed-Calm participants with elevated internalizing symptoms at baseline revealed that internalizing symptoms were lower at 4-month than at 2-month follow-up (OR = 2.35, CI [1.49, 3.72], p < 0.001; Supplemental Table 36).

Secondary analyses

Immediate-Calm participants did not report significantly greater reductions in externalizing symptoms than control participants at either assessment (i.e., 1- or 2-months; Supplemental Tables 1 and 2, Supplemental Fig. 1).

Control participants in the elevated subsample reported significantly greater reductions in externalizing symptoms relative to immediate-Calm participants (whose symptoms remained relatively steady across the first 2 months) at 1-month follow-up (OR = 0.48, CI [0.23, 0.99], p = 0.046; Supplemental Tables 3 and 4, Supplemental Fig. 2).

Within-condition analyses for the entire group of immediate-Calm participants revealed that externalizing symptoms were significantly higher than baseline at 2 months (OR = 0.59, CI [0.48, 0.72], p < 0.001) and at 3 months (OR = 0.50, CI [0.40, 0.62], p < 0.001; Supplemental Table 29). We do not report the results of within-condition, elevated subsample analyses for secondary outcomes in-text, but additional details can be found in the Supplemental Materials (starting on page 28).

Within-condition analyses for delayed-Calm participants revealed that externalizing symptoms were lower at 4 months than at 2 months (OR = 1.51, CI [1.23, 1.86], p < 0.001; Supplemental Table 37).

Immediate-Calm participants did not report significantly greater reductions in trauma symptoms than control participants at either assessment (Supplemental Tables 5 and 6, Supplemental Fig. 3).

Control participants in the elevated subsample reported significantly greater reductions in trauma symptoms relative to immediate-Calm participants (whose symptoms remained relatively steady across the first 2 months) at 1 month (OR = 0.45, CI [0.25, 0.82], p = 0.008) and at 2 months (OR = 0.54, CI [0.30, 0.98], p = 0.043; Supplemental Tables 7 and 8, Supplemental Fig. 4).

Within-condition analyses for immediate-Calm participants revealed that trauma symptoms were significantly higher than baseline at 2 months (OR = 0.62, CI [0.51, 0.76], p < 0.001) and at 3 months (OR = 0.49, CI [0.39, 0.61], p < 0.001; Supplemental Table 30).

Within-condition analyses for delayed-Calm participants revealed that, relative to 2 months, trauma symptoms were higher at 3 months (OR = 0.77, CI [0.62, 0.94], p = 0.012) but lower at 4 months (OR = 1.81, CI [1.46, 2.24], p < 0.001; Supplemental Table 38).

Unlike the other measures used in this study, the measure of trauma symptoms (the SPRINT) has a previously established clinical cutoff score of 14, which has shown 95% sensitivity for detecting PTSD, 96% specificity for ruling out the diagnosis, and 96% overall accuracy of correct assignment43. We therefore repeated the elevated subsample analyses using this cutoff instead of the criterion used in the original subsample analyses (i.e., >1 SD above the sample mean at baseline). With this reclassification, 27.5% of our sample scored above the clinical cutoff at baseline (compared to 15.8% designated as “elevated” based on statistical deviation from the mean). Note that the original elevated subsample analysis used item-level mean scores, which are robust to item-level missingness, whereas the SPRINT cutoff applies to sum scores, which are sensitive to item-level missingness. The results of the re-analysis were comparable: as in the initial elevated subsample analysis, the clinically elevated group showed relatively greater improvement in PTSD symptoms in the control group than in the intervention group at 1-month follow-up. However, the significant condition difference favoring the control condition at 2-month follow-up in the original elevated subsample analysis was not statistically significant in the corresponding re-analysis (p = 0.199; Supplemental Tables 8 and 9, Supplemental Figs. 4 and 5).

Immediate-Calm participants did not report significantly greater improvements in their self-reported ability to calm themselves when experiencing unpleasant emotions than control participants at either assessment in the full sample (Supplemental Tables 10 and 11, Supplemental Fig. 6) or in the elevated subsample (i.e., those with worse self-calming abilities at baseline; Supplemental Tables 12 and 13, Supplemental Fig. 7).

Within-condition analyses for immediate-Calm participants revealed that self-calming skills were significantly higher than baseline at 2 months (OR = 1.59, CI [1.28, 1.98], p < 0.001) and at 3 months (OR = 1.46, CI [1.17, 1.84], p < 0.001; Supplemental Table 31).

Within-condition analyses for delayed-Calm participants revealed that self-calming skills were lower at 4 months (OR = 0.59, CI [0.47, 0.73], p < 0.001) than at 2 months (Supplemental Table 39).

Immediate-Calm participants did not report significantly greater improvements in life satisfaction than control participants at either assessment in the full sample (Supplemental Tables 14 and 15, Supplemental Fig. 8) or in the elevated subsample (i.e., those with lower life satisfaction at baseline; Supplemental Tables 16 and 17, Supplemental Fig. 9).

Within-condition analyses for immediate-Calm participants revealed that life satisfaction was significantly higher than baseline at 2 months (OR = 1.30, CI [1.09, 1.54], p < 0.001) and at 3 months (OR = 1.55, CI [1.28, 1.88], p < 0.001; Supplemental Table 32).

Within-condition analyses for delayed-Calm participants revealed that, relative to 2 months, life satisfaction was higher at 3 months (OR = 1.37, CI [1.13, 1.65], p = 0.001) but lower at 4 months (OR = 0.72, CI [0.59, 0.86], p < 0.001; Supplemental Table 40).

Immediate-Calm participants did not report significantly greater reductions in the severity of their idiographically reported top problems than control participants at either assessment in the full sample (Supplemental Tables 18 and 19, Supplemental Fig. 10) or in the elevated subsample (i.e., those with greater average top problem severity at baseline; Supplemental Tables 20 and 21, Supplemental Fig. 11).

Within-condition analyses for immediate-Calm participants revealed that, relative to baseline, the severity of idiographically reported top problems was significantly higher at 1 month (OR = 0.74, CI [0.57, 0.95], p = 0.018) and lower at 3 months (OR = 1.42, CI [1.08, 1.86], p = 0.012; Supplemental Table 33).

Within-condition analyses for delayed-Calm participants revealed no significant change relative to the 2-month time point.

Immediate-Calm participants did not report significantly greater reductions in sensory sensitivity than control participants at either assessment in the full sample (Supplemental Tables 22 and 23, Supplemental Fig. 12) or in the elevated subsample (i.e., those with greater sensory sensitivity at baseline; Supplemental Tables 24 and 25, Supplemental Fig. 13).

Within-condition analyses for immediate-Calm participants revealed that sensory sensitivity was significantly higher than baseline at 2 months (OR = 0.56, CI [0.48, 0.65], p < 0.001) and at 3 months (OR = 0.43, CI [0.36, 0.50], p < 0.001; Supplemental Table 34).

Within-condition analyses for delayed-Calm participants revealed that, relative to 2 months, sensory sensitivity was higher at 3 months (OR = 0.77, CI [0.65, 0.91], p = 0.002) and lower at 4 months (OR = 1.98, CI [1.67, 2.35], p < 0.001; Supplemental Table 41).

Exploratory sensitivity and moderator analyses

We conducted exploratory sensitivity and moderator analyses (Condition * Time * Moderator and Time * Moderator) to assess the robustness of the results, to determine whether Calm might be differentially effective for students of varying baseline characteristics, and to further explain the findings noted above. We also tested several hypotheses that arose during a focus group with our school champions in which we solicited feedback on the study procedures and asked for their interpretations of the findings (see Supplemental Materials [starting on page 34] and the “Summary of results” section below).

Summary of results

Project Calm was rated as acceptable and useful, although there were no significant Calm-versus-control differences at either primary assessment time point on any outcome in the full sample or in most elevated subsample analyses. However, in the subsamples of participants with elevated internalizing, externalizing, and trauma symptoms at baseline, these symptoms held relatively steady in the Calm group, whereas control participants’ symptoms decreased. Exploratory analyses revealed the following. First, these results were generally robust to quality checks (i.e., using survey completion time to restrict analyses to participants who spent a sufficient amount of time on the intervention). Second, the null results of the full-sample, between-group analyses were replicated when age was included as a covariate, but in the elevated subsample analyses the condition difference was no longer significant for externalizing symptoms at the 1-month follow-up (Original Model: OR = 0.48, CI [0.23, 0.99], p = 0.046; Covariate-adjusted Model: OR = 0.51, CI [0.25, 1.05], p = 0.067) or for trauma symptoms at the 2-month follow-up (Original Model: OR = 0.54, CI [0.30, 0.98], p = 0.043; Covariate-adjusted Model: OR = 0.57, CI [0.32, 1.03], p = 0.064); relatedly, in a moderation analysis, older participants demonstrated better externalizing symptom trajectories than younger participants. Third, participants who were disrupted by air raid sirens during the intervention reported less favorable trajectories of internalizing, externalizing, and trauma symptoms over the first two follow-up assessments. Fourth, participants with lower pre-intervention expectations of the intervention demonstrated less favorable trajectories of internalizing, externalizing, and trauma symptoms than those with higher expectations. Finally, post-intervention ratings of acceptability and utility did not significantly moderate study outcomes.

Discussion

In this study, to our knowledge the first RCT testing a brief DMHI among youths in a country actively at war, we tested Project Calm against a usual schoolwork control condition. We found that Calm was not associated with a significant change in mental health outcomes relative to control in the full sample; further, in the subsamples with elevated internalizing, externalizing, and trauma symptoms at baseline, control participants demonstrated a significant decline in symptoms relative to intervention participants, who reported relatively little change.

Although surprising to us, these results are not entirely at odds with the broader literature. Classroom-based interventions are thought to be suitable for promoting resilience in children living in adverse environments, but there have been few high-quality evaluations65, and much rarer are evaluations of classroom-based DMHIs, specifically. Further, recent evidence suggests that some aspects of school-based mental health interventions can increase distress and mental health symptoms relative to control activities (e.g., students may become more aware of existing symptoms without receiving sufficient information to address these symptoms66). Nonetheless, some universal, school-based mental health interventions, including DMHIs (e.g., 23), have demonstrated mental health benefits67; indeed, other researchers argue that the risk of iatrogenic harm may not outweigh potential benefits of carefully designed school-based interventions, and that this risk is not specific to school-based mental health interventions68.

Amid this controversy and calls for the development of DMHIs that might improve the mental health of war-exposed youth27, we propose several possible explanations for our findings, derived from discussions with our team of researchers and from a focus group of school psychologists and teachers in schools that carried out the study. It is important to note that this focus group was not a pre-registered component of this evaluation, and although the meeting was recorded and reviewed by multiple researchers, themes were not extracted using a formal coding manual.

The most straightforward interpretation of the null between-groups effect in the full sample is that the intensity and chronicity of the stress Ukrainian children have endured since Russia’s invasion, including frequent enemy attacks, may be too severe to be ameliorated by a brief DMHI that teaches only one skill (Explanation I: Stressor Severity Hypothesis). The notion that Calm was not sufficient to overpower contextual effects is supported by the fact that symptom measures (e.g., internalizing, externalizing, trauma) followed the same trajectories over time across groups; this uniformity may imply that symptom fluctuations were driven by external factors, such as ebbs and flows in war-related stressors, rather than by the intervention (Supplemental Materials [starting on page 43]). More specifically, the intervention may not have been sufficiently tailored to these youths’ needs, a possibility raised by our school champions, who remarked that treatment content more specific to each child’s life circumstances may have been beneficial. Indeed, the intervention was relatively static, with simple display logic that allowed minimal customization to participants’ particular mental health concerns. In addition, because the intervention did not provide formal opportunities to practice skills in daily life, youths may have been unlikely to use the skills outside the initial session, and without tailored feedback they may not have known whether they were using the skills appropriately. As further testament to the severity of the stressors Ukrainian children are facing, many participants (NCalm = 73) were initially interrupted by air raid sirens, and these youths reported worse internalizing, externalizing, and trauma symptom outcomes (only at 2-month follow-up) than those who were not interrupted.
The need to pause the brief intervention on multiple occasions and take shelter at the sound of air raid sirens is a solemn reminder of the severity of this context and highlights the possibility that this low-intensity intervention may not have been sufficient to overpower the environmental stressors affecting youths’ mental health.

Perhaps teaching calming strategies pulled focus from other coping skills, thereby disrupting pre-existing strategies that developed naturally as a result of facing war-related stressors. This disruption of youths’ naturally developed skills may explain the null results in the full sample analyses and/or the surprising results in the subsample analyses (Explanation II: Skill Disruption Hypothesis). Previous qualitative work has demonstrated that Ukrainian youth use several strategies to foster psychological resilience, including positive thinking, trying to regain a sense of control, practicing emotion awareness and regulation, relying on close friends and relatives, and seeking support from the broader community69. While it is unclear how effective any given strategy may be in this context, perhaps teaching calming skills caused youth to replace pre-existing strategies, and some youth may have replaced relatively effective strategies with a less effective one. This hypothesis draws on the idea that not all interventions (even those supported by evaluations in a given context) will be optimal in different contexts. The importance of context-to-intervention match (that is, choosing the most relevant intervention for a particular context) has been noted in recent global mental health work (e.g., an intervention teaching study skills to Kenyan adolescents was more effective following an unanticipated, government-mandated Covid shutdown that forced three years of schoolwork into two years, thus increasing school-related stress70).

In support of the possibility that Calm disrupted youths’ naturally developed skills, possibly due to a poor context-to-intervention match, we found that, although there was no significant between-condition difference in perceived calming skills, immediate-Calm participants reported increases in perceived calming abilities concurrent with increases in mental health symptoms (internalizing and externalizing), whereas delayed-Calm participants demonstrated the inverse pattern: perceived calming abilities decreased alongside decreases in symptoms. Had perceived calming abilities not changed, we could say little about whether changes in calming skills were associated with changes in mental health symptoms. Instead, mental health symptoms failed to improve despite positive movement on the proposed mechanism (i.e., self-calming), and improved despite negative movement on it, which might cast doubt on the promise of calming skills for addressing this population’s needs.

Calm may have inadvertently increased youths’ awareness of times when they are physiologically tense, perhaps by implicitly suggesting that a state of calmness is desirable; youths may then have become vigilant for non-calm states and felt worse as a result (Explanation III: Symptom Awareness Hypothesis). This explanation is related to an argument made in a prominent critique of school-based mental health interventions66, whose authors posited that such interventions may inadvertently cause adolescents to ruminate on their negative thoughts and emotions and possibly to label themselves accordingly (e.g., “I have anxiety”), which can have downstream effects on behavior (e.g., avoidance of anxiety-inducing situations). By analogy, if Calm increased youths’ vigilance to ‘undesirable’ physiological arousal (which they may previously have ignored, for example through emotional blunting), and the intervention was not sufficient to address these feelings, the result may have been a lack of improvement in mental health. Further, the significant difference favoring the control group over the Calm group among participants with elevated internalizing, externalizing, and trauma symptoms at baseline might be explained by the possibility that youths with elevated symptoms are more physiologically dysregulated, and therefore encounter this ‘undesirable’ state more frequently without being able to address it sufficiently using calming skills.

Perhaps Calm dampened fear extinction learning that otherwise would have occurred after repeated exposure to stressors; control youths may have become further desensitized to stressors because they were not attempting to calm themselves when confronted with them (Explanation IV: Habituation Interference Hypothesis). Systematic desensitization71 and inhibitory learning72 models of exposure therapy emphasize reducing reliance on safety behaviors (i.e., actions taken to reduce anxiety73) to maximize the effectiveness of exposure-based learning. Accordingly, experts discourage the use of safety behaviors during exposures, including calming strategies like progressive muscle relaxation, because they reduce anxiety and thereby minimize treatment gains74 (e.g., by minimizing expectancy violation in the inhibitory learning model72). Thus, teaching youth calming skills may have been less effective than allowing them to tolerate (“sit with”) uncomfortable emotions.

Exposure therapy is intended to target fears that are disproportionate to real risk (e.g., interoceptive exposures to bring on panic symptoms when a feared outcome is dying from panic attacks), but the anxiety-provoking situations in Ukraine are quite real, and fear is often proportional to the objective level of danger. Nonetheless, it is possible that using calming skills too frequently dampened youths’ ability to tolerate even mild-to-moderate negative emotions and served as a safety behavior that increased unhelpful or unnecessary avoidance.

Finally, perhaps Calm youths were disappointed that Calm did not improve their mental health and they felt more hopeless about getting better, thus leading to worse mental health outcomes compared to control youths (Explanation V: Hopelessness Hypothesis). We speculated that children with higher expectations of Calm at baseline may have been disappointed that the intervention did not have a more positive impact on their symptoms, and this mismatch may have made youths less hopeful that their symptoms would improve. Surprisingly, we found that participants with lower expectations demonstrated less favorable trajectories for internalizing, externalizing, and trauma symptoms compared to those with higher expectations at the 3-month follow-up. An alternative explanation aligned with these results is that those with lower expectations of Calm may have been less engaged with the intervention, or they may have been less hopeful, more globally, which may be indicative of psychopathology such as depression.

Our findings should be interpreted in the context of certain limitations. First, we did not use a multi-informant approach to assessing outcomes, such as asking teachers and caregivers to evaluate youth mental health outcomes, given our desire to minimize procedural burden in a resource-limited context. Because we only obtained students’ reports of study outcomes, we do not know whether outcomes may differ across informants, as has been found frequently in previous research75,76,77. The use of multiple, structurally different informants can facilitate probing contextual differences in treatment response78,79,80.

Additionally, the final intent-to-treat sample (N = 626) was smaller than planned. Although attrition was lower than anticipated, the smaller sample reduced our statistical power to detect small intervention effects, particularly in subgroup and moderator analyses. Future studies should consider larger multi-site collaborations or study designs aimed at maximizing power (e.g., multi-wave stepped wedge designs).
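To make the power limitation concrete, the sketch below computes the smallest standardized mean difference (Cohen's d) that a simple two-group comparison could detect with 80% power at a two-sided α of .05, using the normal approximation. It assumes an equal split of the N = 626 sample and ignores repeated measures, school-level clustering, and covariates, so it is only a rough illustration; the function name and the subgroup size are hypothetical and are not taken from the study's analysis plan.

```python
from math import sqrt
from statistics import NormalDist


def minimum_detectable_d(n1: int, n2: int, alpha: float = 0.05, power: float = 0.80) -> float:
    """Smallest Cohen's d detectable in a two-sided, two-sample comparison
    (normal approximation).

    Hypothetical helper for illustration: it ignores repeated measures,
    clustering within schools, covariates, and attrition, all of which
    change the power of a real trial.
    """
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for a two-sided test
    z_beta = z.inv_cdf(power)           # quantile matching the desired power
    return (z_alpha + z_beta) * sqrt(1 / n1 + 1 / n2)


# Assumed equal split of the intent-to-treat sample (N = 626).
full_sample = minimum_detectable_d(313, 313)
# Hypothetical subgroup, one quarter the size of each arm.
subgroup = minimum_detectable_d(78, 78)
print(f"full sample: d >= {full_sample:.2f}")  # full sample: d >= 0.22
print(f"subgroup:    d >= {subgroup:.2f}")     # subgroup:    d >= 0.45
```

Under these assumptions, the full sample can detect effects only slightly larger than d = 0.2, conventionally a small effect, whereas a subgroup a quarter that size would need an effect roughly twice as large, which is consistent with the concern about subgroup and moderator analyses above.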

Further, given the lack of mental health outcome measures validated in Ukrainian, we used translated versions of measures whose psychometric properties among Ukrainian youths are unknown. Relatedly, we developed an original three-item measure of calming skills because we could not identify a relevant validated measure. It is possible that youths were unable to report accurately on their ability to calm themselves, or that they were reluctant to report their true negative feelings due to social desirability bias; a more naturalistic assessment (e.g., behavioral observation) is needed. Additionally, although our measure of trauma symptoms (the SPRINT43) has strong psychometric properties, its items do not map directly onto DSM-5 or ICD-11 criteria for PTSD. Moreover, most of our outcome measures, which were selected because they are very brief and sensitive to change, lack validated cutoff scores for clinical significance, let alone cutoffs derived from research among war-exposed youth. We therefore used deviation from the mean to construct elevated subsamples (i.e., youths elevated relative to other youths with comparable levels of exposure to the stressors of war). As a result, however, measurement insensitivity may have influenced the results.
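The mean-deviation approach to defining elevated subsamples can be sketched as follows, assuming that a youth counts as elevated when their baseline score is at least k standard deviations above the sample mean. The threshold k, the function name, and the toy scores are hypothetical illustrations; they are not the study's exact rule or data.

```python
from statistics import mean, stdev


def elevated_subsample(scores: dict[str, float], k: float = 1.0) -> set[str]:
    """Return the IDs whose baseline score is at least k SDs above the sample mean.

    Hypothetical rule for illustration: k = 1.0 is an assumed default,
    not the threshold used in the study.
    """
    values = list(scores.values())
    # The cutoff is relative to this sample, not an external clinical norm.
    cutoff = mean(values) + k * stdev(values)  # stdev uses the n - 1 denominator
    return {pid for pid, score in scores.items() if score >= cutoff}


# Toy baseline scores (illustrative only, not study data).
baseline = {"p1": 2, "p2": 4, "p3": 6, "p4": 8, "p5": 20}
print(sorted(elevated_subsample(baseline)))         # ['p5']
print(sorted(elevated_subsample(baseline, k=0.0)))  # ['p4', 'p5']
```

Because the cutoff is computed from the sample's own distribution, it flags youths who are elevated relative to their peers rather than youths who meet an external clinical threshold, which is why measurement insensitivity remains a concern.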

Finally, we encourage readers to interpret the results of our exploratory analyses (Supplemental Materials [starting on page 34]) and the corresponding hypotheses with caution, given that they were not pre-registered. However, we hope that these analyses can be useful to the literature by suggesting areas for future research.

We hope that others will build on this work, and we therefore suggest several future directions. First, because implementing a large-scale RCT of a DMHI in Ukraine was feasible, and youths viewed the intervention as acceptable and helpful despite its failure to improve clinical outcomes, it would be prudent to continue conducting RCTs of interventions composed of different treatment elements to better understand which components might be more effective in this and similar contexts. For example, although teaching calming skills did not demonstrate effectiveness, other skills, such as those from third-wave CBT (e.g., cognitive defusion81), might be more effective. Second, our exploratory analyses suggest that effects may differ by participant characteristics. For instance, older participants showed greater improvements from Calm, and controlling for age in some between-group analyses eliminated statistically significant differences favoring the control group. Such moderators can be probed further in future, more adequately powered DMHI studies and in studies of traditional psychotherapies for war-exposed youths; evaluating the moderating role of participant age in TRT trials might be a fruitful starting point. Alternatively, entirely new interventions might need to be developed, given that most empirically supported interventions were not designed for this context. An initial step might be to further evaluate the coping skills that war-exposed youths spontaneously report using69 and to explore, observationally, the relationships between these coping strategies and mental health outcomes. Interventions drawing on the coping strategies associated with better mental health outcomes could then be tested experimentally.

We found that a DMHI teaching calming skills did not improve mental health outcomes relative to a usual schoolwork control. Further, we found that youth with elevated internalizing, externalizing, and trauma symptoms in the control condition reported reduced symptoms over time compared to those who received the intervention. Through a focus group discussion with our school partners, we generated putative explanations for these findings, evaluated them through exploratory analyses where possible, and suggested complementary lines of future research that may inform our understanding of how to design effective interventions for war-exposed youth. Given that we found no evidence that calming skills taught via DMHI are effective for Ukrainian youth, yet implementing a large-scale RCT was feasible and the intervention was well-received by youths, we suggest that researchers transition to testing the effects of other therapeutic strategies delivered by DMHI—beginning with those that comprise empirically supported treatment packages such as TRT or those that Ukrainian youth identify as being helpful; however, at this time, calming skills should continue to be taught to war-exposed youths only in clinician- and lay-provider-led formats.

Data availability

The data reported in this manuscript have not been published previously. This study was pre-registered on ClinicalTrials.gov (NCT06217705), and the de-identified data are available on Open Science Framework (OSF; https://osf.io/9c2b3).

Code availability

The statistical code (along with the associated de-identified data) is available on Open Science Framework (OSF; https://osf.io/9c2b3).

References

Elvevåg, B. & DeLisi, L. E. The mental health consequences on children of the war in Ukraine: a commentary. Psychiatry Res. 317, 114798 (2022).

Gadzo, M. Record high deaths in the Russia-Ukraine war: what you should know. Al Jazeera https://www.aljazeera.com/news/2024/10/16/russia-ukraine-wartime-deaths (2024).

UNICEF. One hundred days of war in Ukraine have left 5.2 million children in need of humanitarian assistance. https://www.unicef.org/press-releases/one-hundred-days-war-ukraine-have-left-52-million-children-need-humanitarian (2022).

Save the Children International. Over 900 hours underground: children in Ukraine endure life in bunkers as war enters second year. https://www.savethechildren.net/news/over-900-hours-underground-children-ukraine-endure-life-bunkers-war-enters-second-year (2023).

Goto, R. et al. Mental health of adolescents exposed to the war in Ukraine. JAMA Pediatr. 178, 480–488 (2024).

Martsenkovskyi, D. et al. Parent-reported posttraumatic stress reactions in children and adolescents: findings from the mental health of parents and children in Ukraine study. Psychol. Trauma 16, 1269–1275 (2024).

Danese, A., McLaughlin, K. A., Samara, M. & Stover, C. S. Psychopathology in children exposed to trauma: detection and intervention needed to reduce downstream burden. Br. Med. J. 371, m3073 (2020).

Gulliver, A., Griffiths, K. M. & Christensen, H. Perceived barriers and facilitators to mental health help-seeking in young people: a systematic review. BMC Psychiatry 10, 1–9 (2010).

Danese, A. & Martsenkovskyi, D. Editorial: measuring and buffering the mental health impact of the war in Ukraine in young people. J. Am. Acad. Child Adolesc. Psychiatry 62, 294–296 (2023).

Martsenkovskyi, D., Martsenkovsky, I., Martsenkovska, I. & Lorberg, B. The Ukrainian paediatric mental health system: challenges and opportunities from the Russo-Ukrainian war. Lancet Psychiatry 9, 533–535 (2022).

Yule, W., Dyregrov, A., Raundalen, M. & Smith, P. Children and war: the work of the Children and War Foundation. Eur. J. Psychotraumatol. 4, 1–8 (2013).

Lukito, S. et al. Evaluation of pilot community art-based workshops designed for Ukrainian refugee children. Front. Child Adolesc. Psychiatry 2, 1260189 (2023).

Yavna, K., Sinelnichenko, Y., Zhuravel, T., Yule, W. & Rosenthal, M. Teaching Recovery Techniques (TRT) to Ukrainian children and adolescents to self-manage post-traumatic stress disorder (PTSD) symptoms following the Russian invasion of Ukraine in 2022—the first 7 months. J. Affect Disord. 351, 243–249 (2024).

Barron, I. G., Abdallah, G. & Smith, P. Randomized Control Trial of a CBT trauma recovery program in Palestinian schools. J. Loss Trauma 18, 306–321 (2013).

Barron, I., Abdallah, G. & Heltne, U. Randomized Control Trial of teaching recovery techniques in rural occupied Palestine: effect on adolescent dissociation. J. Aggress. Maltreat. Trauma 25, 955–973 (2016).

Pityaratstian, N. et al. Randomized Controlled Trial of group cognitive behavioural therapy for post-traumatic stress disorder in children and adolescents exposed to tsunami in Thailand. Behav. Cogn. Psychother. 43, 549–561 (2015).

Sarkadi, A. et al. Teaching Recovery Techniques: evaluation of a group intervention for unaccompanied refugee minors with symptoms of PTSD in Sweden. Eur. Child Adolesc. Psychiatry 27, 467–479 (2018).

Ebert, D. D. et al. Internet and computer-based cognitive behavioral therapy for anxiety and depression in youth: a meta-analysis of randomized controlled outcome trials. PLoS ONE 10, e0119895 (2015).

Florean, I. S., Dobrean, A., Păsărelu, C. R., Georgescu, R. D. & Milea, I. The efficacy of internet-based parenting programs for children and adolescents with behavior problems: a meta-analysis of Randomized Clinical Trials. Clin. Child Fam. Psychol. Rev. 23, 510–528 (2020).

Pennant, M. E. et al. Computerised therapies for anxiety and depression in children and young people: a systematic review and meta-analysis. Behav. Res. Ther. 67, 1–18 (2015).

Venturo-Conerly, K. E., Fitzpatrick, O. M., Horn, R. L., Ugueto, A. M. & Weisz, J. R. Effectiveness of youth psychotherapy delivered remotely: a meta-analysis. Am. Psychol. 77, 71–84 (2022).

Wu, Y. et al. Efficacy of internet-based cognitive-behavioral therapy for depression in adolescents: a systematic review and meta-analysis. Internet Interv. 34, 100673 (2023).

Fitzpatrick, O. M. et al. Project SOLVE: randomized, school-based trial of a single-session digital problem-solving intervention for adolescent internalizing symptoms during the coronavirus era. Sch. Ment. Health 15, 955–966 (2023).

Osborn, T. L. et al. Single-Session digital intervention for adolescent depression, anxiety, and well-being: outcomes of a randomized controlled trial with Kenyan adolescents. J. Consult. Clin. Psychol. 88, 657–668 (2020).

Steinberg, J. S. et al. Is there a place for cognitive restructuring in brief, self-guided interventions? Randomized Controlled Trial of a Single-Session, digital program for adolescents. J. Clin. Child Adolesc. Psychol. https://doi.org/10.1080/15374416.2024.2384026 (2024).

Nature Editorial Team. Restore Internet access in war-torn Sudan. Nature 634, 514 (2024).

Danese, A. et al. Scoping review: digital mental health interventions for children and adolescents affected by war. J. Am. Acad. Child Adolesc. Psychiatry 64, 226–248 (2025).

Ruggiero, K. J. et al. Web intervention for adolescents affected by disaster: population-based Randomized Controlled Trial. J. Am. Acad. Child Adolesc. Psychiatry 54, 709–717 (2015).

Stasiak, K. & Moor, S. 21.6 Brave-Online: introducing online therapy for children and adolescents affected by a natural disaster. J. Am. Acad. Child Adolesc. Psychiatry 55, S33 (2016).

Schuler, B. R. & Raknes, S. Does group size and blending matter? Impact of a digital mental health game implemented with refugees in various settings. Int. J. Migr. Health Soc. Care 18, 83–94 (2022).

Fitzpatrick, O. M. et al. Empirically supported principles of change in youth psychotherapy: exploring codability, frequency of use, and meta-analytic findings. Clin. Psychol. Sci. 11, 326–344 (2023).

Weisz, J. R. & Bearman, S. K. Principle-Guided Psychotherapy for Children and Adolescents: The FIRST Program for Behavioral and Emotional Problems. (The Guilford Press, 2020).

Weisz, J., Bearman, S. K., Santucci, L. C. & Jensen-Doss, A. Initial test of a principle-guided approach to transdiagnostic psychotherapy with children and adolescents. J. Clin. Child Adolesc. Psychol. 46, 44–58 (2017).

Key, K. D. et al. The continuum of community engagement in research: a roadmap for understanding and assessing progress. Prog. Community Health Partnersh. 13, 427–434 (2019).

Schleider, J. L., Dobias, M. L., Sung, J. Y. & Mullarkey, M. C. Future directions in single-session youth mental health interventions. J. Clin. Child Adolesc. Psychol. 49, 264–278 (2020).

Schleider, J. & Weisz, J. A single-session growth mindset intervention for adolescent anxiety and depression: 9-month outcomes of a randomized trial. J. Child Psychol. Psychiatry 59, 160–170 (2018).

Weisz, J. R. et al. Efficient monitoring of treatment response during youth psychotherapy: the behavior and feelings survey. J. Clin. Child Adolesc. Psychol. 49, 737–751 (2020).

McDonald, R. P. Test Theory: A Unified Treatment. https://doi.org/10.4324/9781410601087 (2013).

Revelle, W. & Zinbarg, R. E. Coefficients alpha, beta, omega, and the glb: comments on Sijtsma. Psychometrika 74, 145–154 (2009).

Sijtsma, K. On the use, the misuse, and the very limited usefulness of Cronbach’s alpha. Psychometrika 74, 107 (2008).

Dunn, T. J., Baguley, T. & Brunsden, V. From alpha to omega: a practical solution to the pervasive problem of internal consistency estimation. Br. J. Psychol. 105, 399–412 (2014).

Schleider, J. L., Dobias, M., Sung, J., Mumper, E. & Mullarkey, M. C. Acceptability and utility of an open-access, online single-session intervention platform for adolescent mental health. JMIR Ment. Health 7, e20513 (2020).

Connor, K. M. & Davidson, J. R. T. SPRINT: a brief global assessment of post-traumatic stress disorder. Int. Clin. Psychopharmacol. 16, 279–284 (2001).

Weisz, J. R. et al. Youth Top Problems: using idiographic, consumer-guided assessment to identify treatment needs and to track change during psychotherapy. J. Consult. Clin. Psychol. 79, 369–380 (2011).

Bailin, A. et al. Principle-Guided Psychotherapy for Children and Adolescents (FIRST): study protocol for a randomized controlled effectiveness trial in outpatient clinics. Trials 24, 1–14 (2023).

Athay, M. M., Kelley, S. D. & Dew-Reeves, S. E. Brief Multidimensional Students’ Life Satisfaction Scale-PTPB Version (BMSLSS-PTPB): psychometric properties and relationship with mental health symptom severity over time. Adm. Policy Ment. Health 39, 30–40 (2012).

Benham, G. The Highly Sensitive Person: stress and physical symptom reports. Pers. Individ. Dif. 40, 1433–1440 (2006).