Abstract

We introduce an artificial intelligence model to personalize treatment in major depression, which was deployed in the Artificial Intelligence in Depression: Medication Enhancement Study. We predict probabilities of remission across multiple pharmacological treatments, validate model predictions, and examine them for biases. Data from 9042 adults with moderate to severe major depression from antidepressant clinical trials were used to train a deep learning model. On the held-out test-set, the model demonstrated an AUC of 0.65, outperformed a null model (p = 0.01). The model increased population remission rate in hypothetical and actual improvement testing. While the model identified escitalopram as generally outperforming other drugs (consistent with the input data), there was otherwise significant variation in drug rankings. The model did not amplify potentially harmful biases. We demonstrate the first model capable of predicting outcomes for 10 treatments, intended to be used at or near the start of treatment to personalize treatment selection.

Similar content being viewed by others

Introduction

With over 300 million people affected worldwide1, major depressive disorder (MDD) is a leading cause of disability. MDD poses an estimated socioeconomic burden of $326.2 billion per year in the U.S2. While several effective MDD treatments are available, only roughly one third of patients will remit with the first treatment they try3. This means that many patients must undergo a “trial and error” approach to treatment selection, which prolongs the duration of illness and leads to worse outcomes4. In order to improve outcomes in a cost- and time-effective manner, it would be useful to have a tool available which can help personalize treatment choice at the point of care, without requiring external imaging or genetic testing which can add time, expense, and complexity5,6,7.

One can cast this challenge as one of pattern recognition: determining which patterns of patient features are predictive of outcomes with specific treatments. Machine learning (ML) methods are well-suited for this kind of pattern detection, and we have shown in previous work that deep learning ML models can be used to provide treatment-specific remission predictions and that they may provide advantages over other machine learning model types5,6,7,8. A recent review9 of deep learning models for depression remission prediction found that some of the studies reviewed incorporated external testing, such as functional and structural magnetic resonance imaging, genetics, and epigenetics as features for the models. However, it remains infeasible to collect these measures routinely in clinical practice; we argue that a model that is to have a scalable impact should focus on measures that can be captured quickly and without specialized equipment, such as clinical and demographic variables10. One important limitation of most studies predicting remission in MDD is that they aim to differentiate outcomes between two treatments or predict the outcome of one treatment at a time9,11. This is not clinical reality; the reality is patients and clinicians must choose from over 20 antidepressants, psychological therapies, and neuromodulation treatments12,13. Another potential limitation of treatment selection machine learning models concerns the propagation of harmful biases (here defined as predictions which disadvantage a patient because of their socio-demographic background)7,8,14. In addition, clinicians may be apprehensive about the interpretability of the predictions of so-called “black box” machine learning models, obscuring the drivers of any given model-based recommendation15. Another important consideration is the data used to train models. Clinical trial datasets can prove difficult to work with because of the multitude of different symptom scales and outcome measures used across trials, as well as sample size and diversity limitations. On the other hand, data contained within electronic medical records requires that modelers contend with the biases inherent in observational data16,17 as well as, in many cases, the lack of clear outcome measures.

These limitations have likely hindered the development and deployment of machine learning models into real clinical environments8,17. In previous work, we demonstrated deep learning models which can perform differential treatment benefit prediction for more than two treatments, which outperform other modelling approaches, and which are predicted to improve population remission rates7,18,19. We have also demonstrated methods for assessing model bias and for unifying clinical trial datasets in order to enable model training8,19. Finally, we have implemented versions of these models in simulation center and in-clinic feasibility studies in order to determine if clinicians and patients are accepting of these models, if they are easy to use in clinical practice, and if they are perceived by clinicians and patients to offer a clinical benefit14,15,20,21. Based on these initial studies, we developed an updated version of a differential treatment benefit prediction model which includes 10 pharmacological treatment options (8 first line antidepressants and 2 common combinations of first line antidepressants). This model, intended to be used by clinicians treating adult patients with MDD, was developed and validated using the methods we will describe below, and was then deployed as a static model in the Aifred Clinical Decision Support System (Aifred CDSS)14,15,17,20,21 which served as the active intervention in the Artificial Intelligence in Depression – Medication Enhancement (AID-ME) study (NCT04655924), reported separately. Here we will report on the development and validation of the model used in this study (hereinafter referred to as the AID-ME model), in adherence to the Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (TRIPOD) reporting guidelines for prediction model development and validation22.

Our objectives herein are to 1) develop a model capable of predicting probabilities of remission across multiple pharmacological treatments for adults with at least moderate MDD and 2) validate model predictions and examine them for amplification of harmful biases. It is important to note that the results presented here could not be published prior to the conclusion of the AID-ME study in order to avoid potential unblinding of participants.

Methods

We will now discuss the methods used to develop and validate the AID-ME model (see also6,7,8). Ethical approval was provided by the research ethics board of the Douglas Research Center and the research was conducted in accordance with the Declaration of Helsinki. Ethical approval information for the studies from which the data was derived can be found in their original publications and regulatory documentation.

Source of data and study selection

The data used to develop the AID-ME model was derived from clinical trials of antidepressant medications. Clinical trial data was selected primarily to reduce confounds due to uncontrolled clinician behavior which can change over time23, biases in treatment selection, or access to care24,25; to reduce data missingness; and most importantly, because clinical trials contain clear clinical outcomes. All of the studies we used either randomized patients to treatment or assigned all patients to the same treatment (e.g., first level of STAR*D3), eliminating treatment selection bias operating at the individual clinician level. In addition, clinical trials have inclusion and exclusion criteria, which allow some assessment of potential selection biases which may be more covert in naturalistic datasets26,27, though clinical trials are not free of selection bias28. Studies came from three primary sources: the National Institutes of Mental Health (NIMH) Data Archive; data provided from clinical researchers in academic settings; and data provided by GlaxoSmithKline and Eli Lilly by way of de-identified data requests administered through the clinical study data request (CSDR) platform. Results from a model trained on the pharmaceutical dataset are available8. Studies included were not restricted based on whether the study was successful or its endpoints were met, but we did not include participants from studies who were taking experimental drugs which were later not approved by regulatory agencies and therefore not available on the market.

Studies were selected in order to be representative of the intended use population: an adult population experiencing an acute episode of major depressive disorder, with demographics reflective of North America and Western Europe. These geographic regions were selected based on the availability of data and the sites likely to participate in the AID-ME study. These would be patients, much like those in the STAR*D dataset, who 1) could be seen in either primary or psychiatric care, 2) could have new onset or recurrent depression, and 3) would likely have other psychiatric comorbidities in addition to depression. The population would be primarily outpatient and would have primary MDD -not depressive symptoms secondary to another medical condition, such as a stroke.

In order to achieve a set of studies that was consistent with this intended use population, we examined study protocols, publications, and clinical study reports. We excluded : 1) populations under age 18; 2) patients with bipolar depression/bipolar disorder; 3) studies in which the treatment of a major depressive episode was not the main objective (for example, studies of patients with dysthymia or with depressive symptoms without meeting criteria for an MDE in the context of fibromyalgia); 4) studies where the MDE is caused by another medical condition; and 5) studies of patients with only mild depression (though studies including patients with both mild and more severe depression were included, with mildly depressed patients later excluded). Given that the AID-ME study was planned to follow patients for 12 weeks, and that guidelines such as CANMAT suggest assessing remission after 6–8 weeks13, we included studies which had lengths between 6 and 14 weeks.

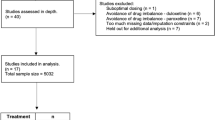

Studies were conducted between 1991 and 2016. After working to secure as many studies as possible, we began with 57 studies for consideration. After reducing the number of studies based on the criteria noted above, as well as excluding some studies to ensure that specific drugs were not excessively over-represented, we were left with 22 studies. See PRISMA diagram (Fig. 1). Important guidance in the selection of treatments of depression is provided by the Canadian Network for Mood and Anxiety (CANMAT) treatment guidelines for MDD in adults13. These CANMAT guidelines were used to identify treatments to be included in the model and were referred to during interpretation of model results. Only patients with in arms with medication doses reaching minimum effective doses as per the CANMAT guidelines were included.

Participants

Participants were all aged 18 or older and were of both sexes. They were treated in primary care or psychiatric settings. Participants were excluded if, at study baseline they had a depression of less than moderate severity based on the study’s depression rating tool. They were also excluded if they were in their respective study for less than two weeks, given that it is unlikely that an adequate determination of remission or non-remission could be made in this timeframe. Patients who remained in their studies for at least two weeks were included even if they dropped out of the study early.

Treatments

While a number of treatments were used across the studies included, we could only include treatments in the final model if there were sufficient observations of the treatment available for the model to learn to predict its outcome. In line with our previous work, we did not generate predictions for treatments provided to fewer than 100 patients in the dataset7. In addition, participants taking less than the dose range recommended in the CANMAT guidelines13 were also eliminated from the dataset, as the CANMAT guidelines formed an integral part of the AID-ME study. As our modeling technique does not depend on a placebo comparator and no placebo treatment was to be recommended by the model in clinical practice, patients who received placebo were not included. The included medications are noted in Table 1. These include a diverse array of commonly used first-line treatments as well as two combinations of first line medications which are commonly used as augmentation strategies29. After these exclusions, 9042 patients were included in the final analysis across both datasets. Patients were not excluded if they received other non-pharmacological interventions; the variability of available information in our data made it impossible to fully account for non-pharmacological treatments, but this variability is also in line with what is seen in clinical practice.

Outcome

The predicted outcome was remission, cast as a binary variable so that the model could predict remission or non-remission as a label, and provide a probability of remission. Remission was chosen as it is the gold-standard outcome in treatment guidelines; achieving remission is important because the presence of significant residual symptoms increases relapse risk13. Remission was derived from treatment scores on standardized questionnaires and binarized based on cutoffs derived from the literature. These included the Montgomery-Asberg Depression rating scale (MADRS; remission defined as a study exit score < 11)30,31,32, the Quick Inventory of Depression Scale - Self Report (QIDS-SR-16; remission defined as a score < 6) and the Hamilton Depression Rating Scale (HAMD; remission defined as a score of < 8)30,33. Remission was measured at the latest available measurement for each patient in order to preserve the most information for each patient (such that patients who remit but then become more symptomatic are not incorrectly classified as being in remission)7. Given this approach, and the fact that all patients were in their respective studies for at least two weeks, no patients were missing outcomes and outcomes were not imputed. Model developers and assessors were not blinded to the selected outcome given the limited size of the team and the need to carefully specify remission variables and cutoffs; however, final testing on the test set was carried out by a team member who was not involved in the development of the AID-ME model.

Data preprocessing and creation of stratified data splits

A full method description for the transformation pipeline and quality control measures is available in ref. 8. Briefly, we created a “transformation pipeline” to combine different questions across datasets to prepare as input variables for feature selection. First, we developed a custom taxonomy inspired by the HiTOP taxonomy system34 to categorize the study questionnaire data across clinical and sociodemographic dimensions. Standard versions of each question were tagged with at least one taxonomic category which allowed for items with the same semantic meaning to be grouped together and created into a “transformed question” representative of this category. Questions with categorical response values were grouped together and values were scaled with either linear equating or equipercentile equating, depending on the response value distributions35. No transformation was required if all response values were binary, but if there was a mix of categorical and binary questions, the categorical values were “binarized” to either 0 or 1 based on the response value text and how it compared to the response value text of the natively binary questions.

To address potential inconsistencies in input data ranges, which is crucial to consider for neural networks36, we applied a standardization process during data preprocessing. After equipercentile equating transformed responses into a unified standard across similar question groups, we standardized all input features to ensure consistency in scale and range. This approach mitigates the risk of variability in feature magnitudes negatively impacting model performance. Additionally, for features with imputed values, we added a small constant uniformly to avoid zero-valued inputs, which can destabilize the training process. This step further supported stable and effective model training. The standardization ensures that all input features are appropriately scaled for the model, aligning with best practices for neural networks37.

To generate our data splits, we stratified each division using the binary remission and treatment variables. The sequential splitting method was used to generate the three data splits: utilizing the train_test_split function from scikit-learn38, we first separated our aggregate dataset into a test set with a 90-10 stratified split. The leftover 90% was further divided to form the train and validation sets, comprising 80% and 10%, respectively, of the original data. This process yielded an 80-10-10 split for our datasets. The test set was held out and not used in any way during model training; it was only used for testing after the final model was selected.

Missing data

We handled missing data as follows. First, features with excessive missingness (over 50%; see ref. 8) were removed from the dataset. After this data was imputed using multiple imputation by chained equations (MICE) provided by the package Autoimpute39, which was built based on the R mice function. To reduce the number of variables available to feed into each variable’s imputation, we used multicollinearity analysis40,41. The variance inflation factor of each variable was used to measure the ratio of the variance of the entire model to the variance of a model with only that specific feature. The threshold for exclusion was set at 5, the default. This enabled us to have a reduced set of variables to feed into the MICE algorithm without extreme multicollinearity as would be expected given the number of available variables. To use the MICE algorithm we generated 5 datasets and took the average between all datasets for the variables using the least-squares or the predictive mean matching (PMM) strategy (continuous variables) and the mode for binary or ordinal variables42. Race/ethnicity, sex, treatment, and outcome (remission) were not imputed; all patients included had data for these variables.

In order to facilitate the deployment and interpretation of our model, we chose to pursue a single-dataset strategy when it came to imputation. However, it is commonly recognized that capturing the variability inherent in imputing a single variable during multiple imputation is important. Our approach therefore aimed to compromise between a standard multiple imputation approach and a single imputation approach by averaging our imputed datasets, as was done in a recent paper examining dementia data42.

Feature selection

As a neural network was used for the classification model, we chose to also use neural networks for feature selection43. To accomplish this we implemented a new layer called CancelOut44. CancelOut is a fully-connected layer, allowing us to create a classification model with the same task of training with a target of remission. The CancelOut layer has a custom loss function that works as a scoring method so that by the end of training we can view and select features based on their score. The retained features were input into our Bayesian optimization framework, which was used for selection of the optimal hyperparameters.

Final predictors

Predictors, also known as features, were clinical and demographic variables which were selected by the feature selection process. These features are listed in Table 1. The team developing and validating the model was not blinded to features used for outcome prediction.

Nominally, 1415 predictors were available in the data provided by the NIMH and other researchers, and 624 predictors were available in the data derived from the pharmaceutical databases. However, only 183 of these features were available in common across these data sources. Furthermore, after removing variables with excess missingness, 41 features remained. After running these predictors through our feature selection process during model development, 26 features remained (Table 1).

Model development

A deep learning model was prepared by arranging a set of hyperparameters (including, for example, the number of layers, number of nodes per layer, activation function, learning rate, number of training epochs, etc). During the training process, the model’s hyperparameters were refined using a Bayesian optimization process (BoTorch package)45. The number of layers and the Bayesian optimization targets (AUC and F1 score in the validation data) were provided to the Bayesian optimization in a fixed manner. Early stopping, within standard ranges for epoch number between 150 and 300, was used to prevent overfitting46. Our modelling pipeline additionally relied on a series of open source Python packages detailed in supplementary resources. Due to contractual obligations, data from pharmaceutical companies needed to be kept separate from data from other sources during training. As such, models were trained and their performance was optimized using the validation datasets within each data source separately. The best performing architectures were selected and then merged to produce a final model. This final merged model was then run on the test set, which was comprised of data from all data sources. This test set was not used in any way during model training.

Selection of deep learning as the modelling approach

While deep learning has been foundational to the success of many recent advances in computer science (such as computer vision), its utility in comparatively simple datasets, such as the tabular dataset used here, compared to more traditional approaches is not guaranteed (see47). In this paper our objective is to report the results of the version of our model which was included in the AID-ME trial. However, in previous work, we have demonstrated that deep learning approaches can provide advantages with the data used here. In a comparison with a gradient boosting approach that used elastic net for feature selection48, we have shown that a deep learning approach can provide roughly equivalent performance while improving generalizability of predictions6,7. In more recent work using the same approach adopted in this paper, we demonstrated that our deep learning approach improves upon results from a logistic regression model8. This, in addition to the potential for deep learning to incorporate more complex data types5 (such as images, other biosignals, or natural language) in future work, led us to pursue a deep learning approach for the current model.

Model usage after freezing

When the model is used for a new patient after training and validation is completed and the model is made static, data provided for a patient for the selected features is inputted into a forward pass of the model, and run for each of the possible medications, generating a remission probability for each treatment. We additionally use the saliency map algorithm to generate the interpretability report that provides the top 5 most salient variables for each inference performed7. Finally, we calculate the average remission probabilities across all predicted treatments. The results are then packaged and returned to the physician to make their determination (see below and Fig. 5). During clinical use, data is provided by patient via self- report questionnaires and by clinicians using a clinician questionnaire with built-in instructions, meaning that users require only minimal training to provide the data. Missing responses are not permitted and so no missing data is provided to the model when a new patient uses it.

Primary metrics

The model evaluation was primarily driven by optimizations across the Area under the Receiver Operating Curve (AUC), as well as the F1 score (where remission was predicted if the model estimated a remission probability of 50% or greater). As the AUC is scale- and classification-threshold-invariant, it provides a holistic and well-rounded view of model performance (Huang, 2005). Since the F1 Score is the harmonic mean of precision and recall it helps us assess our model’s handling of imbalanced data (Raschka, 2014). In addition, Accuracy, Positive Predictive Value (PPV), Negative Predictive Value (NPV), Sensitivity, and Specificity were also assessed, as these are commonly utilized in clinical studies and are meaningful to both machine learning engineers and clinicians. NPV and PPV especially are important in helping clinicians understand the value of positive and negative predictions.

Another important metric was model calibration (specifically expected and maximum calibration error)49. This metric reflects the relationship between the outcome predictions of the candidate model and the observed outcomes of the grouped sample. A model is said to be well calibrated if, for a group of patients with a mean predicted remission rate of x%, close to x% individuals have reached remission. Since our data doesn’t allow our models to extend their prediction certainties into the outer bounds of confidence (close to 0 or 100%), we limited our calibration evaluation towards less extreme boundaries of remission likelihood.

There was no difference in metrics used to assess the model during training and testing. A pre-specified model performance of an AUC of at least 0.65 was set as a target. This number was chosen with the following reasoning. Previous models we and others have produced had AUCs close to or near 0.76,7, and these models had previously been predicted to potentially improve treatment outcomes. The current dataset, while larger in terms of total number of subjects and available treatments than any model we have previously produced, also had fewer available high-quality features going into feature selection than our earlier models. This was a result of the need to use the common available features across disparate studies, and we were aware that certain metrics, such as education and income, which we had previously shown to be predictive of outcome6 were no longer available. As such, we expected that there might be a reduction in AUC below previous results; however, results lower than 0.65 would not be likely to be clinically useful. Therefore, 0.65 was selected as a realistic estimate that would still provide benefit over chance allocation.

Secondary metrics

To estimate clinical utility we use two analysis techniques: the “naive” and “conservative” analyses, which are described in detail in ref. 7. The naive analysis estimates the improvement in population remission rates, by taking the mean of the highest predicted remission rate for each patient from the test set from among the 10 drugs, and taking the mean of these predicted remission rates. The conservative analysis only examines predicted and actual remission rates for patients who actually received optimal treatment, utilizing a bootstrap procedure performed on the combined training and validation sets50. Both metrics allow estimation of predicted improvements in population remission rate, and we set a 5% minimum absolute increase in population remission rate, consistent with the benefit provided by other decision support systems such as pharmacogenetics51.

Interpretability report generation

We generated interpretability reports using the saliency method52 to assess the importance of the input data for each prediction produced. Using GuidedBackprop, we generated a numerical output that designates the importance of a feature in determining the output53, providing a list of prediction-specific important features which are similar to lists of ‘risk’ and ‘protective’ factors which clinicians are used to. The top 5 features in this list are provided to clinicians7 (see Fig. 6 for an example).

Bias testing

We utilized a bias testing procedure we have previously described in ref. 8 to determine if the model has learned to amplify harmful biases. For race, sex and age groupings, we create a bar graph depicting true remission rates for each group, as well as the mean predicted remission probability for that group. This allows us to assess if the model has learned to propagate any harmful biases, and to examine disparities in outcomes based on sociodemographics in the raw data (e.g., worse outcomes for non-caucasian groups). We also verify the model’s performance with respect to the observed remission rates for each treatment. The subgroups for age were 18–25.9; 26–40.9; 41–64.9; 65 + . The subgroups for race/ethnicity were Caucasian, African Descent, Asian, Hispanic, Other. Finally, subgroups for sex were male and female.

Sensitivity analyses

Sensitivity analysis was used to probe model behavior in response to manipulation of specific variables to determine if the model responds in a manner that is consistent with previous evidence. For example, we can plot how the remission probability changes in response to artificially increasing suicidality; the model should produce a clear trend towards lower remission probabilities as suicidality increases10.

Results

Participants

The final analysis dataset included 9042 participants. Their demographics, by data split (train, test, validation), are presented in Table 2. As expected with depression statistics, the sample was predominantly female (63.6%)54. The remission rate of the entire sample was 43.2%, reasonably in line with what would be expected in clinical trial data for remission in depression55. The data splits were stratified in order to ensure that remission rate and percentage allocated to each drug was similar between the splits. As noted, overall data missingness is described in the supplementary material. Due to the data preprocessing employed, no participants included in this dataset were missing outcomes.

Final model characteristics

Our Bayesian optimization procedure identified an optimal model featuring two fully-connected hidden layers, each with 40 nodes, leveraging exponential linear units (ELU) and a dropout rate of 0.15 to enhance generalization. The model’s prediction layer utilized the softmax function to calculate remission probabilities. The Adam optimization algorithm, set at a learning rate of 0.001, was employed for adjusting network parameters during the training phase. To avoid overfitting, the model incorporated an early stopping mechanism. The maximum training duration was set at 300 epochs, with a patience threshold of 100 for early stopping. The model completed the full 300 epochs of training.

Model performance

The model performance metrics are provided in Table 3 for the training, validation and test sets. As can be seen, the model generalized well to the test set (AUC is similar in the test and training set). Overall accuracy and AUC are somewhat low, but in line with results of other models in these kinds of datasets (see discussion below). In Fig. 2, we present the calibration plot for the test set; plots for the other sets are available in the supplementary material. The model was reasonably well calibrated, with an Expected Calibration Error (ECE) of 0.034 and a Maximum Calibration Error (MCE) of 0.087 (lower values are better). The model outperformed a null model (which always predicts non-remission, the modal outcome) on the test set (p = 0.01).

In Supplementary Tables 5 and 6 we present the frequency with which each drug was ranked in each possible place (from 1st to 10th). As these tables demonstrate, the model has learned to most often predict the highest remission rate for escitalopram. This is consistent with the input data (See Fig. 3) wherein escitalopram had the highest remission rate of all drugs. This is also consistent with the CANMAT guidelines, which note escitalopram among the more effective first-line antidepressants, and with a previous metanalysis which had similar treatments included56 and found that escitalopram had among the highest effectiveness ranking and superior tolerability compared to other antidepressants (we did not have access to data regarding mirtazapine monotherapy). However, as S5 and S6 demonstrate, beyond escitalopram there was significant variation in drug rankings, and at the aggregate level the rankings are reasonably consistent with previous metanalyses12,56. In addition, we provide in the supplementary material a listing of model performance for each drug.

As for the assessment of clinical utility, the naïve analysis suggest an absolute improvement in population remission rate from 43.15% to 53.99% on the test data. The average difference between the remission rate predicted for the best and worst drug, per patient, is 10.8, suggesting a wide spread between treatments. There was additionally an absolute improvement from 43.21 to 55.08% improvement under the conservative analysis on the testing data (when considering real remission rates). See Table 4 for numerical results of both analyses. The bootstrap sampling done in these analyses allows us to determine if model-directed treatment was significantly better than random allocation; improvement in predicted remission rates for those receiving model-optimal treatment was statistically significant (p < 0.001) using the procedure from46. See supplementary material for further details.

Model bias assessment and sensitivity analysis

In Fig. 3, we present results for our analysis aimed at identifying if the model has learned to amplify harmful biases. Figure 3 demonstrates, for subgroups relating to age, sex, and race/ethnicity, the observed and predicted remission rates for each subgroup. As the figure shows, for all subgroups for which more than a small number of patients were available, there are no cases in which the model predicts a significantly worse outcome (>5% below observed rate8) than is observed. In previous work8, we argued that an under-prediction of remission rate would be potentially harmful for a subgroup, and this analysis was intended to identify such under-predictions.

In Fig. 4, we present example results from our sensitivity analyses. These show remission rates decreasing with increasing severity of baseline suicidal ideation and increasing with the severity of weight loss. These are consistent with previous literature in which increased suicidality has been linked to increased severity of illness and a reduction in treatment response57,58. In addition, decreased weight as a symptom has previously been linked to an increase in antidepressant treatment response with fluoxetine59.

Model interface

Once we completed model testing and validation, the final model was saved in a frozen state to prevent further updating and uploaded into the Aifred CDSS platform. This platform will be fully described in the accompanying clinical trial results paper. In Fig. 5 and Box 1, we demonstrate how the results of the model are provided to clinicians. Probabilities, rather than class labels, are provided in order to avoid being overly prescriptive and to encourage clinicians to think of the prediction as ‘one more piece of information’ rather than as a directive60.

Top: Example result for (A) escitalopram and (B) duloxetine prediction. Information displayed includes drug class, typical dose, effective dose range, minimum/maximum dose range, dosing tips, clinical pearls, patient-specific remission probability for escitalopram, patient-specific remission probability across treatments, and population baseline. [Note: Duloxetine is an SNRI; this was a known error which was intended to be fixed in a software patch to be done after this screenshot was taken]. Bottom: Example interpretability report for (A) Escitalopram and (B) Duloxetine that provides the top 5 most salient variables for each treatment prediction. In this example, a question related to gastrointestinal symptoms is ranked first, which is interesting given that escitalopram is known to have superior tolerability, and the patient is noting significant gastrointestinal symptoms at baseline- a situation in which an SSRI with a more favorable tolerability profile would be preferred. In the second example, for duloxetine, some features are similar (e.g., gastrointestinal sensitivity) but is an interesting difference: hypochondriasis is included, which may be consistent with duloxetine’s known benefit for somatic symptoms13.

Discussion

In this paper we have presented results for a differential treatment prediction model of 10 antidepressant treatments, using solely clinical and demographic features. While combining clinical and demographic variables with other modalities, such as genetic information or imaging has previously demonstrated superior performance9, the clinical reality, in the majority of settings in which depression is treated, is that clinicians and patients will likely only have access to simple clinical and demographic information. As such, one of the main strengths of our model is that it can be easily used in any setting where an internet connection is available, with results available as soon as the straightforward questionnaire is completed and without requiring equipment other than a computer or mobile phone. This model was generated in order to be used in a clinical trial which has recently concluded (NCT04655924).

As this is one of the first treatment outcome prediction models to be deployed in a clinical environment in psychiatry, we carefully selected the decision for which the model could be of assistance, in order to avoid a situation where an incorrect model prediction could cause significant harm. For this reason, we elected to help differentiate between commonly used first line treatments. When the model makes accurate predictions, it can help improve treatment success rates and assist clinicians and patients in making decisions between the many available treatments; when the model makes incorrect predictions, patients still receive first line treatments for depression which are known to be safe and effective. This effort to mitigate risk via a careful selection of use-case notwithstanding, we attempted to examine our model thoroughly to ensure that it had the most potential to provide benefit while avoiding possible harm.

In order to assess potential benefits, we assessed potential clinical utility by determining projected improvements in remission rates and by assessing the extent the model was able to differentiate between treatments. We were able to demonstrate model metrics and potential improvements in remission rates generally consistent with our and others’ previous work6,7,61. We note some reduction in AUC compared to our previous models, likely related to the reduced feature set available as a result of merging together a large number of datasets, resulting in reduced feature availability (see below). The benefit of including this large array of datasets, however, was to allow us to make predictions for 10 different individual treatments, more than any model in MDD of which we are aware. Given the large number of treatments available, sufficient treatment coverage is crucial in order to produce a clinically useful treatment prediction model. Importantly, the model did develop a preference for escitalopram as the most effective drug and this drug is thus a main driver of the predicted improvements in population remission rate.

While the preference for escitalopram (Tables S5, S6) is consistent with both the input data and previous meta-analysis12,56, one might be concerned about the potential impact on clinician decision-making. However it is important to note that the treatments aside from escitalopram show significant variation, such that clinicians and patients, should they decide escitalopram is an inappropriate option because of cost, medication interactions, or having previously tried the medication, would likely benefit from the differential prediction between the other 9 treatments. Another beneficial aspect of our model is its interpretability report, which has in previous work been shown to support clinician trust in model predictions and, in turn, use of these predictions in treatment decisions in a simulation study15.

We recognize that, likely due to systemic factors62,63, certain subgroups may have lower observed rates of remission; this is true in the data available, and can be seen in the observed remission rates noted in Fig. 3. In order to assess potential harms of our model, we examined the performance of the model in different sub-groups to ensure that it did not amplify these existing biases. This is especially important given, as we note below, that our dataset was missing important socioeconomic information which may help explain differences in subgroup outcomes62. As noted, we did not find any signs of amplified negative bias. While this holds true in the intended use population, it is important to note the model would need to be re-validated and potentially re-trained if used in different populations. In addition, we demonstrated model validity not only by assessing performance metrics, but also by determining, through sensitivity analyses, that associations learned by the model were consistent with previous literature.

With respect to the chosen interpretability method (example results of which are available in Fig. 5), we did not want to obscure the complex nonlinear relationships that a deep learning model would harness with a method that linearizes the interactions (e.g., SHAP64). To avoid the process of linear approximations, we chose to employ a gradient based method, GuidedBackProp65 for per-prediction feature importance. To better understand global feature interactions, we employed sensitivity analysis to better understand the directionality and magnitude of prediction effects for each feature. In future experimentation, we plan to explore combinations of methods such as DeepLift66 or Generalized DeepSHAP67.

There are a number of significant limitations to the present work. The majority of these limitations are related to the quantity and quality of data available. The limitations of our approach to merging data across studies are discussed here8. There was a significant amount of missing data, and as such predictions can only be as valid as the imputation procedure (see ref. 8 for further discussion). The data used to train the model, while large in the context of psychiatric research, did have some important lacunae. For example, it did not include all first line treatments (e.g., vortioxetine, mirtazapine monotherapy). This data was not available to us, and if it was, it would likely have resulted in a model with more varied options for the most effective treatment as shown in meta-analyses12,13,56.

In addition, the dataset did not contain a great number of socio-demographic variables, which we have previously shown to be predictive of remission6,7. Our model would likely have improved performance if more features were available. Additionally, we included data mostly from North America and Western Europe, due to data availability as well as our intent to use the models in these populations; future work would be needed to further validate the model in other populations. Finally, our data only covers initial treatment choice and is not capable of adjusting predictions after treatment failure - an important area to explore in future work. It is important to consider that one of the strengths of AI systems is in their ability to learn and be updated over time as more data becomes available; while we argue that the current model has clinical utility, clinicians involved in the clinical testing of our previous models have noted their interest in seeing utility improve over time in successive versions of the model.

While this paper focuses on the documentation of the development and validation process for the model used in the AID-ME trial, future extensions or updates to this model would benefit from thorough benchmarking against other modelling approaches. This future work should first compare the deep learning model presented here to simpler modelling approaches which have been shown to be effective in datasets resembling ours in size and feature set. Aside from identifying optimal models, the benefit of this benchmarking would be to determine if simpler modelling approaches can provide comparative advantages in interpretability. The traditional models which would serve as comparators would include gradient boosting (which often performs well in datasets such as ours47); penalized logistic regression models, random forests, and support vector machines. In addition, it would be interesting to compare these modelling approaches as well as our deep learning approach against novel model types which were not available at the time of our model’s creation, but which promise improvements in performance, such as transformer-based foundation models adapted to tabular data47.

We have demonstrated that it is possible to create models which can provide differential treatment benefit predictions for a large number of treatments, without requiring complex or expensive biological testing. We present a process by which such models can be developed and validated, and describe the manner in which their results can be presented to clinicians. This model has since been field-tested in a clinical trial, representing, we hope, a step forward for the use of predictive tools in mental health clinical practice. Future focus on improving the breadth of features available for machine learning model training will likely further accelerate the clinical utility of machine learning models. Similar approaches could potentially be used to assist decision making in other illnesses.

Data availability

The authors do not have permission to share the data used in this project as it does not belong to them; those interested in using the data should contact the GlaxoSmithKline, Eli Lilly, NIMH, or the University of Pittsburgh. All GlaxoSmithKline and Eli Lilly data were obtained via the Clinical Study Data Request (CSDR) platform—we do not own any of the clinical trial data. Studies with the following IDs were utilized in this paper: gsk_29060_128, gsk_29060_115, US-HMCR, gsk_ak130939, gsk-WXL101497, gsk_29060_874, lilly_F1J-MC-HMCQ, lily_F1J-AA-HMCV, gsk_29060_810, gsk_AK1113351, lilly_FJ1-MC-HMAYa, lilly_FJ1-MC-HMAYb, lilly_FJ1-MC-HMATA, lilly_FJ1-MC-HMATb, lilly_FIJ-MC-HMAQb, lilly_F1J-MC-HMBV, lilly_FJ1-MC-HMAQa. Further detail on the pharmaceutical data model can be found in a separate publication8. All information about the model from that publication, including model weights and scaling factors, can be found at the following link: https://github.com/Aifred-Health/pharma_research_model The rest of the data used for this project was kindly provided by the NIMH as well as the IRL-GREY investigators and the University of Pittsburgh. Data and/or research tools used in the preparation of this manuscript were obtained from the National Institute of Mental Health (NIMH) Data Archive (NDA).NDA is a unified informatics platform generated and maintained by the National Institutes of Health to provide a national, publicly available resource to facilitate and accelerate research in mental health, and support the open science movement. Dataset identifier(s): Sequenced Treatment Alternatives to Relieve Depression (STAR*D) #2148, Combining Medications to Enhance Depression Outcomes (CO-MED) #2158, Research Evaluating the Value of Augmenting Medication with Psychotherapy (REVAMP) #2153, Establishing Moderators/Biosignatures of Antidepressant Response - Clinical Care (EMBARC) MDD Treatment and Controls #2199.

Code availability

Previous versions of the pipeline used to construct the model are available here: The old Vulcan (https://github.com/Aifred-Health/VulcanAI) and a full version of the model trained used the pharmaceutical data is available here: (https://github.com/Aifred-Health/pharma_research_model). Code describing model evaluation and generation of results is available upon request. The full protocol is proprietary, but its major elements are reproduced in this paper. This study was not registered but the accompanying clinical trial was (NCT04655924). People with lived experience of anxiety and depression were involved in the creation of the models described.

References

Health Organization, W. Depression and other common mental disorders: global health estimates. (2017).

Greenberg, P. E. et al. The Economic Burden of Adults with Major Depressive Disorder in the United States (2010 and 2018). Pharmacoeconomics 39, 653–665 (2021).

Rush, A. J. et al. Acute and longer-term outcomes in depressed outpatients requiring one or several treatment steps: a STAR*D report. Am. J. Psychiatry 163, 1905–1917 (2006).

Kraus, C., Kadriu, B., Lanzenberger, R., Zarate, C. A. Jr & Kasper, S. Prognosis and improved outcomes in major depression: a review. Transl. Psychiatry 9, 127 (2019).

Benrimoh, D. et al. Aifred Health, a Deep Learning Powered Clinical Decision Support System for Mental Health. in The NIPS ’17 Competition: Building Intelligent Systems 251–287 (Springer International Publishing, 2018).

Mehltretter, J. et al. Analysis of Features Selected by a Deep Learning Model for Differential Treatment Selection in Depression. Front Artif Intell 2, 31 (2019).

Mehltretter, J. et al. Differential treatment Benet prediction for treatment selection in depression: A deep learning analysis of STAR*D and CO-MED data. Comput. Psychiatr. 4, 61 (2020).

Perlman, K. et al. Development of a differential treatment selection model for depression on consolidated and transformed clinical trial datasets. medRxiv https://doi.org/10.1101/2024.02.19.24303015 (2024).

Squarcina, L., Villa, F. M., Nobile, M., Grisan, E. & Brambilla, P. Deep learning for the prediction of treatment response in depression. J. Affect. Disord. 281, 618–622 (2021).

Perlman, K. et al. A systematic meta-review of predictors of antidepressant treatment outcome in major depressive disorder. J. Affect. Disord. 243, 503–515 (2019).

Iniesta, R. et al. Combining clinical variables to optimize prediction of antidepressant treatment outcomes. J. Psychiatr. Res. 78, 94–102 (2016).

Cipriani, A. et al. Comparative efficacy and acceptability of 21 antidepressant drugs for the acute treatment of adults with major depressive disorder: a systematic review and network. meta-analysis. Lancet 391, 1357–1366 (2018).

Kennedy, S. H. et al. Canadian Network for Mood and Anxiety Treatments (CANMAT) 2016 Clinical Guidelines for the Management of Adults with Major Depressive Disorder: Section 3. Pharmacological Treatments. Can. J. Psychiatry 61, 540–560 (2016).

Tanguay-Sela, M. et al. Evaluating the perceived utility of an artificial intelligence-powered clinical decision support system for depression treatment using a simulation center. Psychiatry Res. 308, 114336 (2022).

Benrimoh, D. et al. Using a simulation centre to evaluate preliminary acceptability and impact of an artificial intelligence-powered clinical decision support system for depression treatment on the physician-patient interaction. BJPsych open 7, e22 (2021).

Hripcsak, G. & Albers, D. J. Next-generation phenotyping of electronic health records. J. Am. Med. Inform. Assoc. 20, 117–121 (2013).

Golden, G. et al. Applying artificial intelligence to clinical decision support in mental health: What have we learned?. Health Policy Technol. 13, 100844 (2024).

Kleinerman, A. et al. Treatment selection using prototyping in latent-space with application to depression treatment. PLoS One 16, e0258400 (2021).

Benrimoh, D. et al. Towards Outcome-Driven Patient Subgroups: A Machine Learning Analysis Across Six Depression Treatment Studies. Am. J. Geriatr. Psychiatry 32, 280–292 (2024).

Popescu, C. et al. Evaluating the clinical feasibility of an artificial intelligence-powered, web-based clinical decision support system for the treatment of depression in adults: longitudinal feasibility study. JMIR formative research 5, e31862 (2021).

Qassim, S. et al. A mixed-methods feasibility study of a novel AI-enabled, web-based, clinical decision support system for the treatment of major depression in adults. Journal of Affective Disorders Reports 14, 100677 (2023).

Collins, G. S., Reitsma, J. B., Altman, D. G. & Moons, K. G. M. Transparent Reporting of a multivariable prediction model for Individual Prognosis or Diagnosis (TRIPOD): the TRIPOD statement. Ann. Intern. Med. 162, 55–63 (2015).

Donohue, J. et al. Changes in physician antipsychotic prescribing preferences, 2002-2007. Psychiatr. Serv. 65, 315–322 (2014).

Merino, Y., Adams, L. & Hall, W. J. Implicit Bias and Mental Health Professionals: Priorities and Directions for Research. Psychiatr. Serv. 69, 723–725 (2018).

Chen, S., Ford, T. J., Jones, P. B. & Cardinal, R. N. Prevalence, progress, and subgroup disparities in pharmacological antidepressant treatment of those who screen positive for depressive symptoms: A repetitive cross-sectional study in 19 European countries. Lancet Reg Health Eur 17, 100368 (2022).

Collins, R., Bowman, L., Landray, M. & Peto, R. The Magic of Randomization versus the Myth of Real-World Evidence. N. Engl. J. Med. 382, 674–678 (2020).

Leon, A. C. Evaluation of psychiatric interventions in an observational study: issues in design and analysis. Dialogues Clin. Neurosci. 13, 191–198 (2011).

Mulder, R. et al. The limitations of using randomised controlled trials as a basis for developing treatment guidelines. Evid. Based. Ment. Health 21, 4–6 (2018).

Stahl, S. M. & Stahl, S. M. Essential Psychopharmacology: Neuroscientific Basis and Practical Applications. (Cambridge University Press, 2000).

McIntyre, R. S. et al. Measuring the severity of depression and remission in primary care: validation of the HAMD-7 scale. CMAJ 173, 1327–1334 (2005).

Asberg, M., Montgomery, S. A., Perris, C., Schalling, D. & Sedvall, G. A comprehensive psychopathological rating scale. Acta Psychiatr. Scand. Suppl. 5–27 (1978).

Kaneriya, S. H. et al. Predictors and Moderators of Remission With Aripiprazole Augmentation in Treatment-Resistant Late-Life Depression: An Analysis of the IRL-GRey Randomized Clinical Trial. JAMA Psychiatry 73, 329–336 (2016).

Rush, A. J. et al. An evaluation of the quick inventory of depressive symptomatology and the hamilton rating scale for depression: a sequenced treatment alternatives to relieve depression trial report. Biological psychiatry 59, 493–501 (2006).

Waszczuk, M. A., Kotov, R., Ruggero, C., Gamez, W. & Watson, D. Hierarchical structure of emotional disorders: From individual symptoms to the spectrum. J. Abnorm. Psychol. 126, 613–634 (2017).

Kolen, M. J. & Brennan, R. L. Test Equating, Scaling, and Linking. (Springer New York).

Sola, J. & Sevilla, J. Importance of input data normalization for the application of neural networks to complex industrial problems. IEEE Trans. Nucl. Sci. 44, 1464–1468 (1997).

Novak, R., Bahri, Y., Abolafia, D. A., Pennington, J. & Sohl-Dickstein, J. Sensitivity and generalization in neural networks: An empirical study. arXiv [stat.ML] (2018).

Pedregosa, F. et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Welcome to autoimpute! — autoimpute documentation. https://autoimpute.readthedocs.io/en/latest/.

Sheather, S. A Modern Approach to Regression with R. (Springer Science & Business Media, 2009).

Kim, J. H. Multicollinearity and misleading statistical results. Korean J. Anesthesiol. 72, 558–569 (2019).

McCombe, N. et al. Practical Strategies for Extreme Missing Data Imputation in Dementia Diagnosis. IEEE J Biomed Health Inform 26, 818–827 (2022).

Figueroa Barraza, J., López Droguett, E. & Martins, M. R. Towards Interpretable Deep Learning: A Feature Selection Framework for Prognostics and Health Management Using Deep Neural Networks. Sensors 21, (2021).

Borisov, V., Haug, J. & Kasneci, G. CancelOut: A layer for feature selection in deep neural networks. in Lecture Notes in Computer Science 72–83 (Springer International Publishing, Cham, 2019).

Snoek, J., Larochelle, H. & Adams, R. P. Practical Bayesian optimization of machine learning algorithms. Adv. Neural Inf. Process. Syst. 4, 2960–2968 (2012).

Caruana, R., Lawrence, S. & Lee Giles, C. Overfitting in neural nets: Backpropagation, conjugate gradient, and early stopping. Adv. Neural Inf. Process. Syst. 381–387 (2000).

Hollmann, N. et al. Accurate predictions on small data with a tabular foundation model. Nature 637, 319–326 (2025).

Chekroud, A. M. et al. Cross-trial prediction of treatment outcome in depression: a machine learning approach. Lancet Psychiatry 3, 243–250 (2016).

Moons, K. G. M. et al. Transparent Reporting of a multivariable prediction model for Individual Prognosis or Diagnosis (TRIPOD): explanation and elaboration. Ann. Intern. Med. 162, W1–W73 (2015).

Kapelner, A. et al. Evaluating the Effectiveness of Personalized Medicine With Software. Front Big Data 4, 572532 (2021).

Greden, J. F. et al. Impact of pharmacogenomics on clinical outcomes in major depressive disorder in the GUIDED trial: A large, patient- and rater-blinded, randomized, controlled study. J. Psychiatr. Res. 111, 59–67 (2019).

Adebayo, J. et al. Sanity Checks for Saliency Maps. Adv. Neural Inf. Process. Syst. 9525–9536 (2018).

Springenberg, J. T., Dosovitskiy, A., Brox, T. & Riedmiller, M. Striving for Simplicity: The All Convolutional Net. arXiv [cs.LG] (2014).

Bromet, E. et al. Cross-national epidemiology of DSM-IV major depressive episode. BMC Med. 9, 90 (2011).

Mendlewicz, J. Towards achieving remission in the treatment of depression. Dialogues Clin. Neurosci. 10, 371–375 (2008).

Cipriani, A. et al. Comparative efficacy and acceptability of 12 new-generation antidepressants: a multiple-treatments meta-analysis. Lancet 373, 746–758 (2009).

Keilp, J. G. et al. Suicidal ideation and the subjective aspects of depression. J. Affect. Disord. 140, 75–81 (2012).

Lopez-Castroman, J., Jaussent, I., Gorwood, P. & Courtet, P. Suicidal Depressed patients respond less well to antidepressants in the short term. Depress. Anxiety 33, 483–494 (2016).

Keers, R. & Aitchison, K. J. Gender differences in antidepressant drug response. Int. Rev. Psychiatry 22, 485–500 (2010).

Benrimoh, D. et al. Editorial: ML and AI safety, effectiveness and explainability in healthcare. Front. Big Data 4, 727856 (2021).

Ermers, N. J., Hagoort, K. & Scheepers, F. E. The Predictive Validity of Machine Learning Models in the Classification and Treatment of Major Depressive Disorder: State of the Art and Future Directions. Front. Psychiatry 11, 472 (2020).

Lesser, I. M. et al. Ethnicity/race and outcome in the treatment of Depression: Results from STAR*D. Med. Care 45, 1043–1051 (2007).

Fortuna, L. R., Alegria, M. & Gao, S. Retention in depression treatment among ethnic and racial minority groups in the United States. Depress. Anxiety 27, 485–494 (2010).

Lundberg, S. & Lee, S.-I. A unified approach to interpreting model predictions. arXiv [cs.AI] (2017).

Springenberg, J. T., Dosovitskiy, A., Brox, T. & Ried- Miller, M. Striving for Simplicity: The All Con- Volutional Net. (2014).

Shrikumar, A., Greenside, P. & Kundaje, A. Learning important features through propagating activation differences. arXiv [cs.CV] (2017).

Chen, H., Lundberg, S. M. & Lee, S.-I. Explaining a series of models by propagating Shapley values. Nat. Commun. 13, 4512 (2022).

Acknowledgements

This manuscript reflects the views of the authors and may not reflect the opinions or views of the data sharing platforms or initial submitters of the trial data. Funding was provided by Aifred Health; the ERA PERMED Vision 2020 grant supporting IMADAPT; MEDTEQ; The National Research Council via the IRAP program; the MITACS program; Investissement Quebec; and the Quebec Ministry of Economy and Innovation. No other funder had a role in the development or reporting of this research.

Author information

Authors and Affiliations

Contributions

D.B. helped conceptualize the study, provided supervision, and wrote and revised the manuscript. R.F., J.M., C.A. helped conceptualize the analyses, performed the analyses and helped write the manuscript; S.I., J.F.K., K.H., and S.P. reviewed the manuscrip; K.P. helped conceptualize the taxonomy used in the dataset, and helped write the manuscript; G.T. provided data access and supervision; A.K. helped conceptualize the analyses and helped write the manuscript. All authors have read and approved the manuscript

Corresponding author

Ethics declarations

Competing interests

D.B., C.A., K.P., R.F., J.M., S.I. were employees and/or shareholders of Aifred Health and supported this research in the context of their work for Aifred Health. DB receives a salary award from the Fonds de recherche du Québec – Santé (FRQS). A.K., S.P. have previously received an honorarium from Aifred Health. J.F.K. has been provided options in Aifred Health. K.H. and G.T. do not report any competing interests. All authors have read and approved the manuscript.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Benrimoh, D., Armstrong, C., Mehltretter, J. et al. Development of the treatment prediction model in the artificial intelligence in depression – medication enhancement study. npj Mental Health Res 4, 26 (2025). https://doi.org/10.1038/s44184-025-00136-8

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s44184-025-00136-8

This article is cited by

-

The Use of Artificial Intelligence for Personalized Treatment in Psychiatry

Current Psychiatry Reports (2025)