Abstract

Learning involves reducing the uncertainty of incoming information: does it reflect meaningful change (volatility) or random noise? Normative accounts of learning capture the interdependence of these sources of uncertainty: learning increases when changes are perceived as meaningful (volatility) and decreases when changes are seen as noise. Misestimating uncertainty, especially volatility, may contribute to psychotic symptoms, yet studies often overlook its interdependence with noise. We developed a block-design task that manipulated both noise and volatility using inputs from ground-truth distributions, with incentivised trial-wise estimates. Across three general population samples (online Ns = 580/147; in-person N = 19), participants showed normative learning overall. However, psychometric schizotypy and delusional ideation were linked to non-normative patterns. Paranoia was associated with poorer performance and reduced insight. All traits showed inflexible adaptation to changing uncertainty. Computational modelling suggested that non-normative learning may reflect difficulties inferring noise, which could lead one to misinterpret randomness as meaningful. Capturing joint uncertainty estimation offers insights into psychosis and supports clinically relevant computational phenotyping.

Introduction

As a fundamental survival mechanism, humans adapt their behaviour based on the outcomes they experience. However, neither sensory data nor prior knowledge of the world is completely reliable. A key question in cognitive science is how we learn effectively in the face of such uncertainty. It has been proposed that we navigate this challenge by estimating the properties of uncertainty itself, including the volatility (i.e. changeability) and noisiness (i.e. stochasticity) of outcomes1,2,3,4. In line with normative accounts of statistical inference, noise and volatility influence the speed of learning in opposite ways but must be estimated jointly for accurate inference3,4,5.
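The opposing effects of volatility and noise on learning speed can be illustrated with a Kalman filter, the textbook normative learner for tracking a randomly drifting mean. This is a swapped-in illustrative model, not the HGF used in this study; the parameter names `volatility_var` (q) and `noise_var` (r) are assumptions for the sketch. The learning rate is the gain applied to each prediction error.

```python
def steady_state_learning_rate(volatility_var: float, noise_var: float,
                               n_iter: int = 1000) -> float:
    """Steady-state Kalman gain when tracking a randomly drifting mean.

    volatility_var (q): variance of the trial-to-trial change in the mean.
    noise_var (r): variance of outcomes around the current mean.
    The gain is the learning rate in: estimate += gain * prediction_error.
    """
    post_var = 1.0                                   # initial uncertainty about the mean
    gain = 0.0
    for _ in range(n_iter):
        prior_var = post_var + volatility_var        # volatility inflates uncertainty
        gain = prior_var / (prior_var + noise_var)   # noise shrinks the gain
        post_var = (1.0 - gain) * prior_var          # uncertainty after updating
    return gain


for q, r in [(0.01, 0.01), (0.10, 0.01), (0.01, 0.10)]:
    lr = steady_state_learning_rate(q, r)
    print(f"volatility={q:.2f} noise={r:.2f} -> learning rate {lr:.3f}")
```

With equal volatility and noise the gain settles near 0.62; raising volatility tenfold pushes it to about 0.92, whereas raising noise tenfold pulls it down to about 0.27. This is the sense in which a learner must estimate both quantities jointly: the same observed prediction error should be weighted very differently depending on which source of uncertainty is believed to dominate.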

While recent research has emphasised the importance of considering both dimensions of uncertainty explicitly4,6, existing probabilistic learning tasks and computational modelling approaches are yet to fully disentangle the simultaneous estimation of volatility and noise. Studies that manipulate only one type of uncertainty and then use models attributing all dynamic learning rate (LR) effects to that specific uncertainty may be prone to misinterpretation. What appears to be an abnormality in volatility processing may, in fact, reflect misidentified disturbances in noise processing, and vice versa4. As such, novel assays that dissociate these two types of uncertainty will be central to developing more accurate computational phenotypes for prediction, assessment, and improved clinical interventions for individuals with altered learning processes.

Despite this, studies linking psychosis symptoms to aberrant learning under uncertainty have increased in recent years7,8,9. Findings consistently show that while performing probabilistic learning tasks, individuals across the psychosis spectrum tend to switch their choices unnecessarily and update their beliefs more rapidly than their peers10. Computational modelling suggests that this occurs due to aberrant processing of task volatility, where an overestimation of, and uncertainty about, volatility leads to miscalculations in the saliency of internal sensations and random external events (Schizophrenia: refs. 11,12; Clinical-High-Risk: ref. 13; First Episode Psychosis: refs. 14,15; Psychosis-like-traits: Delusions—refs. 16,17; Paranoia—refs. 18,19,20; Schizotypy—refs. 21,22). This arguably produces a subjective psychological perception of a changeable and unpredictable world filled with significant experiences that require elaborate and often bizarre explanations (e.g. delusional ideation)23,24,25,26. However, this body of work has yet to examine this mechanism in the context of the full problem facing the learner: the joint estimation of both volatility and noise.

To address this issue, we developed a task and analytical protocol designed to delineate the contributions of noise and volatility during learning, and thus to examine statistically normative learning in relation to psychosis-like traits. The novel “Jumping Uncertainty Task” (Space JUnc Task) provided a narrative in which participants, stranded on a planet, used a rover and beam to predict where the next piece of space junk would fall from unseen spacecraft overhead (see “Methods” for full details). Locations for the falling space junk were drawn from ground-truth probability distributions to simulate noise/stochasticity (standard deviation) and volatility (mean changes). On each trial, participants needed to infer both the location (mean) and width (standard deviation (SD)) of the spacecraft to make accurate predictions and receive maximum points/monetary rewards. This design provided direct trial-by-trial measures of participants’ estimates of noise and volatility, the errors in their predictions, and their speed of learning (see Table 1 for a full list of directly accessible metrics). To assess normative learning, uncertainty was manipulated across four experimental blocks, each representing a different learning environment (Fig. 1B). To compare metrics between these learning environments, we used planned contrasts in which each block was statistically compared to the preceding one (Fig. 1B). We also adopted an online format to enhance scalability and accessibility, and incorporated gamification to increase participant engagement27. Although we focused on collecting direct behavioural measures, we also employed computational modelling to aid interpretation of our findings in the context of the existing literature.
To this end, we used a version of the Hierarchical Gaussian Filter (HGF), the Jumping Gaussian Estimation Task (JGET) model, which tracks both the mean and the noise (SD) of an underlying distribution independently28.

A Illustration of the trial structure. After the cue has been displayed (for a maximum of 5000 ms), participants are instructed to estimate where the next piece of junk will fall by moving the rover along the x axis. Participants must also assess the noise/stochasticity in the environment and reflect this in their choice of beam width, keeping in mind that the score depends on the size of the beam used to catch the junk (smaller beam = higher points if caught). Feedback, i.e. another piece of space junk drawn from the same distribution, is then presented (1000 ms) alongside a written indication of success (green) or failure (red). The score is displayed in the right-hand corner and reflects both the success of the prediction and the size of the beam used to catch the junk (maximum of ten points per trial). B The underlying “ground-truth” contingencies from which the junk locations were calculated. The x axis indicates the trial number. The black solid line shows the mean(s) used for each block (see Supplementary Table S1 for further details), indicating both the stability of the mean over trials (x axis) and the location of the mean on the play screen area (y axis). The standard deviation is used to simulate noise (high vs low) and is shown by the black dashed line; the width of the dashed line around the mean indicates both the SD used and the area of the play screen from which space junk locations could be drawn. The red dots show the actual space junk positions that participants were exposed to, in accordance with the underlying probability distributions. The arrows indicate a key aspect of our analytical approach: planned contrasts were used to assess normative learning, with each block statistically compared to the preceding block, e.g. volatility vs noise.

To explore the connection with psychosis, we capitalised on natural variation in psychotic-like symptoms and general susceptibility to psychosis within the general population. This approach allowed us to assess the association between normative learning and symptom measures free from confounds such as medication use29,30. In the first sample (N = 580), we focused on the psychotic-like symptoms that have shown a link with altered associative learning: paranoia, delusional ideation, and psychometric schizotypy. To assess whether the identified learning deficits were specific to psychotic-like symptoms or susceptibility, we also examined their relationship with symptoms of depression and anxiety31. In the second sample, we pre-screened participants to obtain groups with high (n = 81) and low (n = 66) psychometric schizotypy, allowing a more focused investigation of psychosis susceptibility. A sensitivity analysis was also conducted in an in-person cohort (N = 19) to establish the reliability of the online findings.

We hypothesised that most participants would learn in accordance with normative principles, exhibiting increased updating in response to volatility relative to noise. In contrast, individuals with psychotic-like symptoms or high psychometric schizotypy were expected to show non-normative updating patterns—either through heightened sensitivity to volatility or generalised over-updating across conditions, potentially reflecting elevated volatility effects on learning.

To test these predictions, we focused on both direct behavioural readouts—such as LR, performance error, and beam width—and computational modelling parameters. The latter included: κx, indexing the coupling between higher and lower-level belief updating about the mean (i.e. volatility); κa, indexing coupling between levels for updating about variance (i.e. noise); and β1, reflecting the extent to which participants relied on their noise belief when adjusting beam width. Normative learning would be reflected by higher LRs and performance errors in volatile blocks, wider beam use in high-noise blocks, and appropriate calibration of these parameters to environmental structure. Non-normative learning, particularly in individuals high in psychosis-relevant traits, was expected to manifest as reduced LR calibration, inappropriate beam use, diminished β1, or elevated κx across all conditions—potentially reflecting a belief that the environment is more volatile than it actually is.

Methods

Ethical approval was obtained through King’s College London’s REMAS process (reference MRA-19/20-19444). Individuals were given information detailing the study and provided informed consent via Gorilla. Data collected via Gorilla were compliant with the EU GDPR (2016) and Gorilla’s “Data Processing Agreement” (consistent with NIHR guidance).

Across all experiments, data were subject to quality control screening to identify participants who were not attending to the task in the expected or necessary way. Exclusion criteria were: technology failure; failure to move the beam and rover in at least 10% of trials (n = 21); and/or completion of at least 10% of trials in less than 1000 ms.
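The three exclusion rules can be expressed as a single per-participant check. This is a minimal sketch under stated assumptions: the `Trial` fields are hypothetical (a real Gorilla export will be structured differently), and a trial is counted as "not moved" only when neither the rover nor the beam changed, which is one reading of the criterion above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Trial:
    # Hypothetical per-trial fields; not the actual Gorilla export format.
    rover_moved: bool
    beam_moved: bool
    rt_ms: float


def should_exclude(trials: List[Trial], tech_failure: bool = False,
                   threshold: float = 0.10, min_rt_ms: float = 1000.0) -> bool:
    """Apply the three quality-control rules to one participant's trials."""
    if tech_failure or not trials:          # no usable data counts as a technology failure
        return True
    n = len(trials)
    # Assumption: "not moved" means neither rover nor beam changed on that trial.
    no_move = sum(not (t.rover_moved or t.beam_moved) for t in trials) / n
    too_fast = sum(t.rt_ms < min_rt_ms for t in trials) / n
    return no_move >= threshold or too_fast >= threshold
```

Expressing the thresholds as proportions keeps the rule independent of how many trials a participant completed.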

Population

For Experiments 1 and 2, eligibility criteria were: age between 18 and 40 years; UK residence; English as a first language; no history of head injury; and no diagnosis of cognitive impairment/dementia or autism spectrum disorder. Participants were recruited and assessed over a 7-day period. In Experiment 2, psychometric schizotypy scores were collected from 1000 participants using the Schizotypal Personality Questionnaire—Brief (SPQ) on the online platform Qualtrics. The top and bottom 10% of scorers were selected and invited to take part in further cognitive testing on the online platform Gorilla (see gorilla.sc).
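The extreme-groups screening step amounts to ranking screening scores and taking each tail. A minimal sketch, assuming scores are held in a dictionary keyed by a hypothetical participant ID; ties at the cut-off are broken by Python's stable sort rather than by any rule stated in the study.

```python
import math
from typing import Dict, List, Tuple


def extreme_groups(scores: Dict[str, float],
                   fraction: float = 0.10) -> Tuple[List[str], List[str]]:
    """Return (high, low) participant IDs from the top and bottom `fraction` of scorers."""
    ranked = sorted(scores, key=lambda pid: scores[pid])   # ascending SPQ score
    k = math.floor(len(ranked) * fraction)                 # size of each tail
    low = ranked[:k]
    high = ranked[-k:] if k > 0 else []
    return high, low


# Illustrative use with 1000 made-up screening scores.
demo_scores = {f"p{i}": float(i) for i in range(1000)}
high_ids, low_ids = extreme_groups(demo_scores)
```

With 1000 screened participants each tail holds 100 people; the final group sizes reported in the Introduction are smaller because not everyone invited went on to complete testing.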

Experiment 3 was conducted in person with participants who had previously taken part in Experiment 1 (n = 16) or Experiment 2 (n = 3). Participants were re-contacted through Prolific to attend on-campus experimental sessions. Recruitment and assessment took place over 1 month at the Institute of Psychiatry, Psychology and Neuroscience.

Procedures

Prolific Academic (see prolific.ac), an online crowd-sourcing platform, was used to recruit all participants anonymously from the UK. Participants were then invited to complete the experiment via Gorilla, an online behavioural research platform, using either a computer/laptop or a mobile phone. Before proceeding with the study, participants provided informed consent and completed a baseline demographic survey. To minimise fatigue effects, participants were randomised to complete either the symptom questionnaires or the Space JUnc Task first. The demographic questionnaire included a question on how much time participants spent gaming per week, because success in a gamified cognitive task may be influenced by general familiarity with gaming. Two attention checks were implemented during the experiment, in which participants answered a simple question about the colour of a square. On completion of the study, participants were debriefed and received an average of £6.50 per hour spent on the tasks, plus a performance-dependent bonus payment of up to £2 in the Space Game. They were also invited to share any feedback or comments regarding the study.

In the on-campus study, all participants used the same laptop to complete the experiment via Gorilla. Upon completion, they were awarded a £40 Amazon voucher, comprising £35 for attendance and a £5 performance-based bonus. Participants travelling from outside London also received additional compensation for travel expenses.

Materials

Psychotic-like experiences, specifically paranoia and delusional ideation, were assessed using the Green Paranoid Thoughts Scale (GPTS) and the Peters Delusion Inventory (PDI), respectively. The GPTS includes subscales for ideas of social reference and persecutory ideas, along with associated concern, conviction, and stress32. The PDI evaluates endorsement, distress, conviction, and preoccupation related to various delusional beliefs33. Psychosis susceptibility was operationalised as high psychometric schizotypy, measured through the SPQ. The SPQ assesses nine subscales based on DSM-III-R criteria, including odd beliefs, unusual perceptual experiences, and paranoid ideation34. Sample 1 used the 22-item brief version35, while sample 2 employed the full 74-item version36. Depression and anxiety symptoms were evaluated using the 62-item Mood and Anxiety Symptom Questionnaire, which measures general distress, anxious arousal, and anhedonia37. Depression-related and anxiety-related subscales were combined into single measures. Two questions concerning suicide and death were excluded due to ethical considerations. Symptom scales correlated with each other moderately (see Supplementary Fig. 3 and Supplementary Table 4).

Learning under uncertainty was assessed using the Jumping Uncertainty Task (Space JUnc Task), a novel gamified paradigm designed to probe participants’ ability to infer and adapt to changes in environmental statistics. The task comprised 219 trials (intertrial interval: 1000–5000 ms), divided into two practice and four task blocks, each manipulating volatility and/or noise via changes to the underlying probability distribution of stimuli (see Supplementary Table 1 and Fig. 1B).

The first block required participants to reach a minimal threshold of success to continue. The second block introduced stable conditions (no mean shifts) with either high (SD = 0.12) or low (SD = 0.03) noise. Block three (“Noise block”; n = 40 trials) kept the mean fixed while manipulating noise alone. Block four (“Volatility block”; n = 40 trials) introduced volatility by switching the distribution mean approximately every 5 trials (p = 0.25), with noise held stable. Blocks five (“Combined Uncertainty”; n = 84 trials) and six (“Bivalent Combined Uncertainty”; n = 40 trials) varied both the mean and the SD approximately every 10 trials, featuring both novel and previously seen means. These two blocks differed only in reward structure: the bivalent condition included potential point losses for incorrect predictions. Task order was counterbalanced across participants (i.e. forward/reverse order of the volatility and noise blocks). The exact means and SDs used, alongside the rules by which the distributions changed, are displayed in Supplementary Table 1; these distributions were modelled after prior work38. The task inputs (space junk locations) were sampled from these distributions. This sampling was repeated over 100,000 simulated replications, and the set of inputs that was most normally distributed was chosen. This ensured that the inputs did not deviate from normality by chance, e.g. by including outliers that would confuse participants.
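The input-generation procedure (a mean that switches with a fixed per-trial probability, Gaussian noise around it, and selection of the most normal-looking replication) can be sketched as follows. Several details are assumptions rather than the study's settings: the candidate means are illustrative, not those in Supplementary Table 1; "most normally distributed" is operationalised here as the smallest sum of absolute skewness and absolute excess kurtosis of the standardised deviations; and the example uses far fewer than 100,000 replications.

```python
import numpy as np


def simulate_block(means, sd, n_trials, p_switch, rng):
    """Draw junk locations whose mean switches with probability p_switch per trial.

    Requires at least two candidate means when p_switch > 0.
    """
    current = rng.choice(means)
    mus = np.empty(n_trials)
    for t in range(n_trials):
        if t > 0 and rng.random() < p_switch:
            # Switch to a different mean (a change point).
            current = rng.choice([m for m in means if m != current])
        mus[t] = current
    return mus, rng.normal(mus, sd)


def non_normality(mus, xs, sd):
    """|skewness| + |excess kurtosis| of standardised deviations (assumed criterion)."""
    z = (xs - mus) / sd
    z = (z - z.mean()) / z.std()
    return abs(np.mean(z ** 3)) + abs(np.mean(z ** 4) - 3.0)


def most_normal_block(means, sd, n_trials, p_switch, n_reps=1000, seed=0):
    """Keep the replication whose deviations look most Gaussian."""
    rng = np.random.default_rng(seed)
    best, best_score = None, np.inf
    for _ in range(n_reps):
        mus, xs = simulate_block(means, sd, n_trials, p_switch, rng)
        score = non_normality(mus, xs, sd)
        if score < best_score:
            best, best_score = (mus, xs), score
    return best, best_score


# Illustrative volatility block: 40 trials, low noise, switch probability 0.25.
(block_mus, block_xs), block_score = most_normal_block(
    [0.2, 0.5, 0.8], sd=0.03, n_trials=40, p_switch=0.25, n_reps=200)
```

A switch probability of 0.25 implies an expected run length of 4 trials between change points, so an "approximately every 5 trials" description reflects an approximate rather than exact schedule.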

Participants aimed to “catch” falling space junk, emitted from hidden spacecraft, by adjusting an electromagnetic rover’s horizontal position (x axis) and beam width (y axis). Smaller beams conserved fuel and yielded higher (monetary) rewards, incentivising precise inference of the spacecraft’s location and variability. Limited information about the size, location, and frequency of the unseen spacecraft was given at the start of every block (see Supplementary Table 2). On the first trial of each block, participants were shown where the last piece of space junk fell and instructed to use this location to predict where the next piece of junk would fall. The falling junk was presented on each trial for 5000 ms. To indicate their prediction, participants adjusted the rover and beam (which appeared at a random location on the x axis at the start of each trial) using the arrow keys (or WASD) either on their keyboard or on screen (left/right for the rover; up/down for the beam). Once the participant had indicated their prediction, they were shown where the junk actually fell, from which a prediction error could be derived and used to inform the prediction on the next trial.

Primary performance was indexed by trial-wise scores (0–10), integrating prediction accuracy (catch success) and beam precision (smaller beams rewarded). Beam width served as an auxiliary measure of inferred variance. Derived metrics included performance error (the distance between the participant’s estimate and the true mean) and LR, quantified via the beta coefficients from a linear model predicting the signed prediction error (participant rover position – space junk position at t1) from the signed rover position change (participant rover position t1 – participant rover position t2).
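A minimal sketch of the two derived metrics follows. One assumption should be stated plainly: with the sign conventions above, a delta-rule learner (rover at t2 = rover at t1 + LR × (junk at t1 − rover at t1)) satisfies update = LR × prediction error, so this sketch regresses the update on the prediction error, the conventional direction in which the slope equals the LR. Performance error is taken as the mean absolute distance, which is also an assumption.

```python
import numpy as np


def learning_rate(rover, junk):
    """Slope of the trial-wise rover update on the signed prediction error.

    rover[t]: rover position on trial t; junk[t]: where the junk fell on trial t.
    PE_t = rover_t - junk_t and update_t = rover_t - rover_{t+1}, so a delta-rule
    learner gives update_t = LR * PE_t.
    """
    rover, junk = np.asarray(rover, float), np.asarray(junk, float)
    pe = rover[:-1] - junk[:-1]
    update = rover[:-1] - rover[1:]
    X = np.column_stack([np.ones_like(pe), pe])      # intercept + prediction error
    coef, *_ = np.linalg.lstsq(X, update, rcond=None)
    return float(coef[1])


def performance_error(rover, true_mean):
    """Mean absolute distance between rover estimates and the generative mean."""
    rover, true_mean = np.asarray(rover, float), np.asarray(true_mean, float)
    return float(np.mean(np.abs(rover - true_mean)))
```

Fitting the slope separately within each block gives block-wise LRs that can then enter the mixed-effects models described under Analysis.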

Participant feedback was presented trial-wise in terms of success, beam efficiency, and cumulative score, with financial bonuses tied to performance. Bonus points were awarded for every 250 points, and each bonus point earned the participant an additional 50p on top of the payment for completing the experiment.
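The bonus rule is simple arithmetic: 50p per complete block of 250 points. A minimal sketch, assuming the £2 ceiling stated for the online studies applies; amounts are kept in integer pence to avoid floating-point rounding.

```python
def bonus_pence(total_points: int, points_per_bonus: int = 250,
                pence_per_bonus: int = 50, cap_pence: int = 200) -> int:
    """Online performance bonus in pence: 50p per complete 250 points, capped at £2."""
    earned = (total_points // points_per_bonus) * pence_per_bonus
    return min(earned, cap_pence)
```

For example, 760 points yields three complete bonus points, i.e. £1.50, and any total of 1000 points or more reaches the £2 ceiling.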

Analysis

Analysis was carried out using R 4.2.1 (GUI 1.79 High Sierra build 8095). Full analysis code can be made available. Linear mixed-effects models (LMEs) were computed for the task’s main outcome measures. The data included both within- and between-subject factors, including nested elements, so the models needed to include multiple nested and non-nested random effects. The R package “lme4” was used, and random slopes and intercepts were fitted for relevant variables to account for the structure of the data. Specifically, all models included random effects of (i) participant and (ii) the counterbalancing condition (forward, reverse, priming block high or low), nested within block (practice blocks excluded). These terms (i) accounted for the non-independence of observations from the same participant, and (ii) allowed data from each counterbalancing condition (e.g. forward vs reverse) to differ. Fixed effects were selected for each dependent variable through model selection, so the included fixed effects vary slightly between models; these are fully described in the Supplement.

Each task block represented a different learning environment. To compare metrics between learning environments, backward difference contrasts were used, in which each block was statistically compared to the preceding block: the Volatility Block was compared to the Noise Block, and the Combined Uncertainty Block to the Volatility Block. The final block introduced a change in reward policy, so the Bivalent Combined Uncertainty Block was compared to the Combined Uncertainty Block. In line with all other analyses, backward contrasts were also used to compare the extracted computational parameters. However, because priors for κx and κa were not estimated in the Noise and Volatility Blocks, respectively, the LME contrasts for the κx model set did not include the Noise Block, and those for the κa model set did not include the Volatility Block.
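Backward (successive) difference coding can be built explicitly as a k × (k − 1) contrast matrix. The sketch below uses made-up block means purely to show that, with balanced data, each fitted contrast coefficient equals the difference between a block and the block before it, and the intercept equals the grand mean. In R, `MASS::contr.sdif` provides the same successive-differences coding.

```python
import numpy as np


def backward_difference_contrasts(k: int) -> np.ndarray:
    """k x (k-1) backward difference coding matrix.

    Column j (1-based) compares level j+1 with level j; every column sums to 0.
    """
    C = np.empty((k, k - 1))
    for j in range(1, k):            # contrast index (1-based)
        for i in range(1, k + 1):    # factor level (1-based)
            C[i - 1, j - 1] = -(k - j) / k if i <= j else j / k
    return C


blocks = ["Noise", "Volatility", "Combined", "Bivalent"]
C = backward_difference_contrasts(len(blocks))
block_means = np.array([7.0, 6.4, 5.8, 5.3])     # illustrative values, not study results
X = np.column_stack([np.ones(len(blocks)), C])   # intercept + three contrasts
coef = np.linalg.solve(X, block_means)
# coef[1:] are Volatility - Noise, Combined - Volatility, Bivalent - Combined.
```

In the mixed-effects models the same coding is applied to the block factor, so each block coefficient is read directly as the change from the preceding learning environment.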

Model selection was carried out to identify the best model for each metric. Models were compared using an information-theoretic approach, the Akaike information criterion (AIC)39, which is considered conservative because it penalises model complexity (e.g. additional fixed effects) and can be used to compare nested models40,41. Because the candidate models differed in their fixed effects and the data were nested, models were compared using maximum likelihood (ML) rather than restricted maximum likelihood42; REML was then used to estimate the best model once it had been identified43. Reported results come from the model with the lowest AIC among those compared, a set that always included the simplest (null) model with fixed effects set to 1 (intercept only), so the selected models were more informative than their simpler alternatives. A chi-square difference (likelihood-ratio) test was also used to test whether each model significantly improved on baseline, i.e. the null model; such tests are appropriate for nested models that differ only in their fixed effects44. Details of the model selection process for the linear mixed models and outcomes for each model set are in the Supplement (Supplementary Tables 5–11 for Experiment 1; Supplementary Tables 12–18 for Experiment 2; and Supplementary Tables 20–23 for Experiment 3).
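The two model-comparison statistics can be computed directly from fitted log-likelihoods. This is a minimal standard-library sketch: the chi-square tail probability uses closed forms for integer degrees of freedom, and the log-likelihoods passed in are assumed to come from ML (not REML) fits, as described above.

```python
import math


def aic(log_likelihood: float, n_params: int) -> float:
    """Akaike information criterion: 2k - 2 ln L (lower is better)."""
    return 2 * n_params - 2 * log_likelihood


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability of a chi-square distribution with integer df >= 1."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    y = x / 2.0
    if df % 2 == 0:
        # Even df: Q(m, y) = exp(-y) * sum_{i<m} y^i / i!, with m = df / 2.
        return math.exp(-y) * sum(y ** i / math.factorial(i) for i in range(df // 2))
    # Odd df: start from Q(1/2, y) = erfc(sqrt(y)) and step up in unit increments.
    total = math.erfc(math.sqrt(y))
    for i in range(1, (df - 1) // 2 + 1):
        total += math.exp(-y) * y ** (i - 0.5) / math.gamma(i + 0.5)
    return total


def likelihood_ratio_test(ll_null, ll_full, k_null, k_full):
    """Chi-square difference test for nested models fitted by ML."""
    stat = 2.0 * (ll_full - ll_null)
    df = k_full - k_null
    return stat, df, chi2_sf(stat, df)
```

In R the same comparison is what `anova()` reports for two nested `lmer` fits (it refits REML models with ML before comparing).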

Given this statistical approach, there were seven model sets overall, each predicting a different dependent variable of interest. Model comparison was conducted within model sets (see Supplement for full model comparison details and results). Because this process carried a risk of false positives, a global false discovery rate (FDR) correction was applied45: p values from all comparisons across models and model sets were pooled into a single list and corrected together. This is more conservative than correcting within each model set, as it tends to adjust p values upwards and yield fewer significant results (i.e. more false negatives but stronger error control). This approach was appropriate because we wanted to control the FDR across the entire experiment and avoid inflation of false discoveries overall46,47.
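A global correction means running one FDR procedure over the pooled list of p values. The specific procedure is not named here, so this sketch assumes the widely used Benjamini–Hochberg step-up method (equivalent to R's `p.adjust(method = "BH")`).

```python
import numpy as np


def fdr_bh(p_values):
    """Benjamini-Hochberg adjusted p values for one pooled list of tests."""
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)                                  # ascending p values
    ranked = p[order] * m / np.arange(1, m + 1)            # p_(i) * m / i
    adjusted = np.minimum.accumulate(ranked[::-1])[::-1]   # enforce monotonicity
    out = np.empty(m)
    out[order] = np.minimum(adjusted, 1.0)                 # restore original order
    return out
```

Because m counts every comparison in the experiment rather than those within one model set, each raw p value is multiplied by a larger factor, which is why the pooled correction is the more conservative choice.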

To reflect the parallel learning demands of the task, our computational modelling approach was designed to estimate learning about the mean and the variance of the input distribution independently. This was achieved using an extension of the standard HGF (perceptual model; cf. refs. 2,28). In short, the HGF approximates Bayesian learning using (prediction) error-based learning rules. Crucially, higher-level beliefs determine the weight given to lower-level prediction errors, which in turn update higher-level beliefs. For instance, if a subject believes the environment to be stable (low volatility belief), prediction errors are “explained away” and do not lead to strong updates at lower levels. Conversely, strong updating takes place when volatility is believed to be high. For a more in-depth explanation of the HGF, please see the original publications2,28.

The HGF JGET model (as implemented in the TAPAS toolbox of the TNU48) was applicable to our paradigm because it is a two-branched HGF that “learns” about (1) the current mean value (x1) and (2) the variance (α1) of the input stream (u). It is also hierarchical: there are volatility “parents” for the variation of mean values (x2) and of the variance (α2) (see Fig. 4A). As in “classic” HGF applications, the same free parameters capture inter-individual differences, e.g. κ for the coupling between levels, or the level-wise ω determining the constant step size for trial-wise updating2,28. Unlike previous HGF applications, the streams for learning about x and α are combined in an additional, common level that brings together the beliefs about x1 and α1 relevant for trial-by-trial behaviour. Further, each stream has its own set of parameters (e.g. κx for mean learning and κa for SD learning). In the decision model (a modified version of the ‘tapas_gaussian_obs’ script48), we used the belief trajectories to predict trial-by-trial responses: the belief about the mean, x1, predicted rover position, and the belief about noise, α1, weighted by β1, predicted beam width. We introduced this additional free coefficient, β1, to capture an individual’s general tendency to use their acquired noise belief when adapting beam width (see Fig. 4). We did not fit an equivalent regression coefficient weighting the effect of the mean belief on rover position, as there was no variance in this parameter when setting up the modelling. This might indicate that participants directly translated their mean belief into rover positioning, whereas the translation of noise inference into beam width might have been more complex. Note that the MATLAB-based HGF toolbox fits each participant individually using a quasi-Newton optimisation algorithm.
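The role of β1 can be illustrated with a deliberately simplified response model. This sketch assumes a hypothetical linear mapping, beam width = β1 × noise belief + Gaussian error, with the belief trajectory treated as fixed; it is not the modified `tapas_gaussian_obs` script, and the toolbox instead estimates perceptual and response parameters together by quasi-Newton optimisation. Under these assumptions the maximum-likelihood β1 has a closed form.

```python
import numpy as np


def fit_beta1(noise_belief, beam_width):
    """ML estimate of beta1 under beam_t = beta1 * noise_belief_t + Gaussian error.

    With Gaussian response noise, maximising the likelihood is equivalent to
    least squares through the origin: beta1 = sum(a * w) / sum(a ** 2).
    """
    a = np.asarray(noise_belief, float)
    w = np.asarray(beam_width, float)
    beta1 = float(a @ w / (a @ a))
    resid = w - beta1 * a
    sigma2 = float(np.mean(resid ** 2))          # ML estimate of response noise variance
    n = a.size
    loglik = float(-0.5 * n * (np.log(2 * np.pi * sigma2) + 1.0))
    return beta1, sigma2, loglik
```

A participant who largely ignores their own noise inference when setting the beam would show a β1 close to zero, whereas one who scales the beam closely to their belief would show a larger, well-estimated β1.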

To assess the test–retest reliability of the key outcome measures of our task, intraclass correlations (ICCs) were calculated for overall score, beam width, prediction errors, performance errors, and LRs. The average amount of time between the online and in-person assessments was 14.32 months, with a range of 11.60–27.20 months. ICCs were computed using a two-way mixed-effects model to assess absolute agreement among single measurements. To further assess the validity of our learning measures, we compared data from our in-person sample with performance on the probabilistic reversal learning (PRL) task by Reed et al.19, which was collected in the same experimental session. This task was selected because reversal learning and related instrumental learning tasks have consistently shown alterations in individuals with psychosis31, making them strong reference points for our volatility-related measures—unlike tasks that more directly target learning about means or noise. Despite differences in format (categorical choices in PRL vs continuous predictions in our task), both tasks require belief updating in dynamic environments. Whereas the PRL manipulates uncertainty via probabilistic feedback, our task presents it explicitly through noise and volatility. We focused on PRL block 2 (80–40–20% reward probabilities), which most closely resembles the volatility and combined uncertainty conditions in our task. Given that the PRL assesses strategy-level adaptation (e.g. win-switch, lose-stay rates) and does not include an explicit noise component, we focused on our volatility-related outcome measure: LR. As noise is continuous and embedded in all levels of our task, but block-specific and probabilistic in the Reed task, overall performance reflects different types of uncertainty. Therefore, comparisons are most appropriate at the strategy level, although we have included performance correlations for completeness.
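The absolute-agreement, single-measurement ICC described above can be computed from the mean squares of an n-participant × k-session table (McGraw and Wong's ICC(A,1), whose formula is the same for two-way mixed and two-way random designs). A minimal sketch; in R, `irr::icc(..., model = "twoway", type = "agreement", unit = "single")` targets the same quantity.

```python
import numpy as np


def icc_a1(data):
    """ICC for absolute agreement, single measurements.

    data: n_participants x k_sessions array (e.g. online vs in-person LRs).
    """
    x = np.asarray(data, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_rows = k * np.sum((x.mean(axis=1) - grand) ** 2)   # between participants
    ss_cols = n * np.sum((x.mean(axis=0) - grand) ** 2)   # between sessions
    ss_err = np.sum((x - grand) ** 2) - ss_rows - ss_cols
    ms_r = ss_rows / (n - 1)
    ms_c = ss_cols / (k - 1)
    ms_e = ss_err / ((n - 1) * (k - 1))
    return (ms_r - ms_e) / (ms_r + (k - 1) * ms_e + k * (ms_c - ms_e) / n)
```

Absolute agreement penalises systematic shifts between sessions: if every participant scored exactly one unit higher at retest, a consistency ICC would be 1, but this ICC falls below 1, which matters given the long interval between the online and in-person assessments.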

Results

Experiment 1

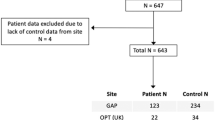

We recruited 631 individuals (M = 30.60 years; SD = 5.91) from Prolific, a widely available recruitment platform, of whom 51 were excluded based on predetermined criteria (Supplementary Fig. 2), resulting in a final sample of 580 participants for analysis (see Table 2). On average, participants required 16.07 min (SD = 5.96) to complete the experimental task.

Participants effectively tracked the falling space junk through their rover placements (Fig. 2A) and demonstrated clear learning about the mean of the underlying distribution. This was evidenced by increased performance and prediction errors on trials where the mean changed (Fig. 2B). Notably, after these mean shifts, performance error decreased over the subsequent three trials (Fig. 2C), suggesting that participants recognised the shift in the underlying distribution, adapted accordingly, and used the rover to reflect their updated estimate of the mean. As expected, high noise conditions prompted participants to use a wider beam (β(SE) = −8.57(1.52), p < 0.001; Fig. 2D, E), reflecting appropriate adjustments in response to increased stochasticity.

A Rover positions matched the underlying mean. B Prediction/performance errors increased immediately after mean changes and C decreased as junk positions stabilised after changes. D shows the average beam width for each trial across all participants (black). The background is shaded purple and green to represent high and low noise conditions, respectively. The light grey and dark grey lines depict cumulative standard deviation estimates at two levels of coarseness, computed over 5 trials (light grey) and 15 trials (dark grey). Dotted lines indicate block changes, with trial numbers on the x axis. E shows the residual beam width variance, unexplained by covariates, compared between high and low noise conditions across the whole task. F shows average learning rates by block. G Top: for the volatile, combined, and bivalent combined conditions, learning rates are compared between high (purple) and low (green) noise conditions. Bottom: average block-wise learning rates compared between trials without a mean change (lime green) and mean change trials (pink).

We hypothesised that participants would display higher LRs during periods of volatility than during periods of low volatility, stability, or noise4. A main effect of block was found: average LRs were significantly higher in the Volatility Block than in the Noise Block (β(SE) = 0.299(0.01), p < 0.001; Fig. 2F). In accordance with a normative account of learning, LRs changed little between high and low noise conditions and were higher on mean change-point trials than on trials without a mean change (Fig. 2G).

Planned contrasts between blocks revealed that scores decreased significantly as the game became more difficult across blocks (Noise vs Volatility Block: β(SE) = −0.57(0.22), p = 0.050; Volatility vs Combined Uncertainty Block: β(SE) = −0.67(0.11), p < .001). Score decreases also persisted across blocks with differing reward structures, as shown by the comparison between the Combined Uncertainty Block and the Bivalent Combined Uncertainty Block (β(SE) = −0.50(0.19), p = 0.001). Males had significantly higher average scores (β(SE) = 0.41(0.09), p < 0.001).

In line with a normative account of learning, the Volatility Block was associated with significantly higher performance errors than the Noise Block (β(SE) = 0.50(0.30), p < 0.001). In the more complex uncertainty environment, the Combined Uncertainty Block had significantly higher errors than the Volatility Block (β(SE) = 0.10(0.03), p = 0.036). Using a mobile device significantly increased performance errors overall (β(SE) = 0.16(0.02), p < 0.001).

Individuals with higher self-reported paranoia achieved significantly lower scores overall than those with lower paranoia (β(SE) = −0.01(0.00), p = 0.040; Fig. 3A). No other psychosis-related symptom scale was related to score, either overall or in any of the planned block contrasts.

A shows the relationship between average block-wise score and paranoia scores, including standard error. B shows the relationship between average block-wise performance error and paranoia scores, including standard error. C shows the relationship between average block-wise performance error and delusional ideation scores for the Noise (blue) and Volatility (brown) Blocks. This panel illustrates the interaction found for individuals scoring at the upper and lower ends of the delusional ideation scale. D shows the relationship between averaged block-wise beam widths and paranoia scores in high and low noise conditions: individuals with higher paranoia used a significantly wider beam in low noise and a narrower beam in high noise. E shows the relationship between learning rates and psychometric schizotypy for each block. Significance is indicated for the planned Noise Block vs Volatility Block contrast, showing that high and low schizotypy scorers differed significantly in how their learning rates changed between these blocks. F Left: learning rates in stable and volatile blocks. Each dot is one person, and each person's data are joined between conditions by a straight black line. The mean for each condition is shown in red. Right: as in the left panel, but comparing noisy and volatile trials. G shows each individual's learning rate difference between volatile and stable blocks, correlated with the psychosis-trait symptom scores for paranoia (red), delusional ideation (light blue), and psychometric schizotypy (purple). H As in (G), but for the learning rate difference between volatile and noisy blocks (volatile − noise).

Individuals with higher paranoia scores made significantly greater performance errors throughout the game, with no interactions with any specific block, i.e. their estimates were consistently further from the mean (β(SE) = 0.01(0.00), p = 0.022; Fig. 3B). There was a significant interaction between delusional ideation and block: those with greater delusional ideation had significantly higher performance errors during the Noise Block than during the Volatility Block (β(SE) = 0.01(0.00), p = 0.017; Fig. 3C). By contrast, those with low delusional ideation showed the expected increase in performance errors as the task moved into a high volatility environment (Noise Block to Volatility Block). Regarding beam use, elevated paranoia was associated with a significantly narrower beam in high noise conditions than in low noise conditions across the task as a whole, indicating poor calibration of beam width to statistical noise (β(SE) = −0.05(0.02), p = 0.050; Fig. 3D).

As expected, participants used higher LRs in volatile environments than in stable and noisy ones (Figs. 2F, G and 3F). LME models showed an interaction between psychometric schizotypy and LRs when comparing the Volatility and Noise Blocks (β(SE) = −0.01(0.01), p = 0.002; Fig. 3E). This indicated that high schizotypy scorers used higher LRs under noise than under volatility (a non-normative pattern). To investigate LR calibration further, we followed previous research (Browning et al.49) and calculated the differences in LRs (LRds) between volatile and stable environments and between volatile and noisy environments. The LRd indexes how much participants adjusted their LRs between uncertainty environments. Normatively, LRs should increase with volatility, so larger LRds indicate better calibration. However, Spearman's rank correlations revealed a negative correlation between paranoia scores and LR calibration when moving from stable or noisy to volatile environments (Stable–Volatility: r = −0.08, p = 0.006; Fig. 3G; Noise–Volatility: r = −0.10, p = 0.025; Fig. 3H). Similarly, psychometric schizotypy was significantly negatively correlated with LR calibration between Noise and Volatility (r = −0.12, p = 0.001; Fig. 3H), but not between stable and volatile environments. Delusional ideation also showed decreased calibration between Noise and Volatility environments (r = −0.09, p = 0.037; Fig. 3H). It also showed a negative relationship with the Stable–Volatility LRd that reached only trend level (r = −0.08, p = 0.060; Fig. 3G).
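The sketch below illustrates the LRd calibration analysis under two assumptions. First, each participant's LRd is their mean LR in the more volatile environment minus their mean LR in the comparison environment. Second, LRds are correlated with a symptom score using Spearman's rank correlation, with average ranks for ties. The participant data are made up, and the helper names are hypothetical:

```python
import math
from typing import Dict, List, Sequence


def average_ranks(xs: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    ranks = [0.0] * len(xs)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and xs[order[j + 1]] == xs[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho as the Pearson correlation of average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def lr_difference(block_lrs: Dict[str, float], high: str, low: str) -> float:
    """LRd: mean LR in the more volatile environment minus the comparison one."""
    return block_lrs[high] - block_lrs[low]


# Made-up participants: mean LR per environment plus a symptom score.
participants = [
    ({"Stable": 0.20, "Noise": 0.30, "Volatility": 0.70}, 2.0),
    ({"Stable": 0.25, "Noise": 0.35, "Volatility": 0.60}, 5.0),
    ({"Stable": 0.30, "Noise": 0.40, "Volatility": 0.50}, 9.0),
]
lrd_noise_vol = [lr_difference(lrs, "Volatility", "Noise") for lrs, _ in participants]
scores = [score for _, score in participants]
rho = spearman(lrd_noise_vol, scores)  # negative: higher scores, smaller LRd
```

`scipy.stats.spearmanr` returns the same coefficient together with a p-value. The pure-Python version is shown only to make the rank-based computation explicit.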

Scores were significantly higher in individuals with elevated depression symptoms (β(SE) = 0.01(0.00), p < 0.001). A significant interaction between depression symptoms and the Combined Uncertainty vs Bivalent Block contrast indicated that this advantage was particularly pronounced in the Bivalent Block relative to the Combined Uncertainty Block, which had no “punishments” (β(SE) = 0.01(0.00), p = 0.001). This performance benefit was underpinned by good acquisition of the underlying statistics. It was reflected in lower overall performance errors across the game (β(SE) = −0.01(0.00), p < 0.001) and a significantly smaller beam size overall (β(SE) = 0.07(0.03), p = 0.035). These individuals also showed a significant negative interaction with high noise conditions, indicating that they calibrated their beam widths appropriately by using a wider beam in higher noise conditions (β(SE) = −0.07(0.03), p < 0.002). Individuals with elevated depressive symptoms also used higher LRs overall (β(SE) = 0.01(0.01), p = 0.020). For anxiety, significant negative correlations were found with LR calibration (LRds) between stable and volatile environments (r = −0.13, p = 0.003) and between Noise and Volatility environments (r = −0.09, p = 0.045; Supplementary Fig. 3).

Fig. 4A, B shows that the simulated predictions for each block capture the data well, both for the (median) participant's estimates of the junk positions and for their (median) beam widths. In total, 47 individuals were excluded due to poor fit, leaving 533 participants (exclusions: Noise Block n = 6; Volatility Block n = 18; Combined Uncertainty Block n = 11; Bivalent Combined Uncertainty Block n = 13). Parameter recovery analyses showed that recoverability differed between levels (see Supplementary Fig. 6A, B for heatmaps and level-wise scatter plots). The recoverability of the κ parameters was poor for the Volatility and Noise Blocks (r < 0.5), and these parameters were prone to extreme values. The recoverability of the noise belief regression weight β1 ranged from poor (r = 0.4 in the Volatility Block) to good (r = 0.7 in the Combined Uncertainty Block).
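Parameter recovery works in three steps. It simulates synthetic participants with known parameters, refits the model to their simulated responses, and correlates the true values with the recovered ones; that correlation is the r reported above. The sketch below illustrates only this logic. It swaps the JGET HGF for a one-parameter delta rule with a fixed learning rate, and all settings (hazard rate, noise levels, grid) are illustrative assumptions:

```python
import math
import random
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def simulate_outcomes(n_trials: int, rng: random.Random,
                      noise_sd: float = 10.0, hazard: float = 0.1) -> List[float]:
    """Gaussian outcomes whose mean jumps with probability `hazard` per trial."""
    mean, outcomes = rng.uniform(-100, 100), []
    for _ in range(n_trials):
        if rng.random() < hazard:
            mean = rng.uniform(-100, 100)
        outcomes.append(rng.gauss(mean, noise_sd))
    return outcomes


def delta_rule(outcomes: Sequence[float], alpha: float) -> List[float]:
    """Predictions made before each outcome by a fixed-learning-rate agent."""
    m, preds = 0.0, []
    for o in outcomes:
        preds.append(m)
        m += alpha * (o - m)
    return preds


def fit_alpha(outcomes: Sequence[float], responses: Sequence[float],
              grid: Sequence[float]) -> float:
    """Least-squares grid search (maximum likelihood under Gaussian response noise)."""
    def sse(a: float) -> float:
        return sum((r - p) ** 2 for r, p in zip(responses, delta_rule(outcomes, a)))
    return min(grid, key=sse)


def recovery_r(n_agents: int = 30, n_trials: int = 100,
               response_sd: float = 5.0, seed: int = 1) -> float:
    """Simulate agents with known alpha, refit, and correlate true vs fitted."""
    rng = random.Random(seed)
    grid = [i / 100 for i in range(1, 100)]
    true_alpha, fitted_alpha = [], []
    for _ in range(n_agents):
        alpha = rng.uniform(0.1, 0.9)
        outcomes = simulate_outcomes(n_trials, rng)
        responses = [p + rng.gauss(0.0, response_sd) for p in delta_rule(outcomes, alpha)]
        true_alpha.append(alpha)
        fitted_alpha.append(fit_alpha(outcomes, responses, grid))
    return pearson(true_alpha, fitted_alpha)
```

With a richer hierarchical model, the same loop runs once per parameter. Weakly identified parameters, such as coupling terms, tend to show low true-vs-recovered correlations and outlying fits.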

A shows a depiction of the Jumping Gaussian Estimation Task (JGET) modelling process used in this study. This version of an extended HGF model captures learning about the mean (x) and variance (a) independently. Pentagons represent trial-by-trial inputs (yx = rover positions; ya = beam widths). Squares represent the modelling parameters, which relate separately to learning about the mean (x) and noise (a). θ is the constant step size in volatility learning (µ2), ω is the equivalent on the lower-level belief, and κ determines the coupling strength between levels. Parameters for u refer to the lowest level, where beliefs about noise and mean are combined into a common prediction about the mean. The decision model includes ζx and ζa, which set the decision noise (SD) when drawing from a Gaussian distribution around the respective belief (µ1) about the mean and the noise. The β1 parameter captures how much an individual uses her belief about noise when adapting the beam width. HGF outputs for the Volatility Block are shown as an example of the model capturing and predicting the anticipated behaviour. B compares participant estimates with estimates from model simulations across blocks. The x axis displays the trial number for each block. The y axis shows the screen position scale: 0 indicates the middle of the screen, and positive and negative values indicate the right- and left-hand sides of the screen, respectively. In this way, mean estimates (rover positions) and noise estimates (beam widths) can both be displayed as positions on the screen. Median participant estimates of the mean, i.e. rover positions, are indicated by the black solid lines, and median simulated estimates of the mean from the JGET HGF model are shown in orange. The median participant's noise estimate (beam width) for each trial is shown as a width along the screen position axis in black/grey, and the median simulated noise estimate is shown as a width in orange. C, D show the relationship between the decision model parameter “use of noise belief” (β1) and delusional ideation scores. (C) shows the Noise Block (purple) and the Volatility Block (pink), and (D) shows the Combined Uncertainty Block (gold) and the Bivalent Combined Uncertainty Block (red). E shows the relationship between scores on the psychometric schizotypy scale and β1 values in the Combined Uncertainty Block (gold) and the Bivalent Combined Uncertainty Block (red).

Further analyses assessed how three parameters of interest varied with symptom scales. The first was the noise belief weight (β1), i.e. how strongly the belief about noise influenced the decision. The second was mean kappa (κ, kax), i.e. trial-wise belief updating relating to the mean/volatility. The third was variance kappa (κ, kaa), i.e. trial-wise updating relating to the underlying variance. Planned contrasts excluded blocks in which priors had been provided (the Noise Block for kax and the Volatility Block for kaa).
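The Fig. 4 caption describes the decision model as Gaussian draws around the beliefs µ1 about the mean and the noise, with SDs ζx and ζa, and with β1 weighting the noise belief when setting the beam width. A minimal sketch of the resulting response log-likelihood follows. The linear link with an intercept `beta0` is an assumption made for illustration, and the argument names are hypothetical. The JGET implementation may parameterise or transform the beliefs differently:

```python
import math
from typing import Sequence


def gaussian_logpdf(y: float, mu: float, sd: float) -> float:
    """Log density of N(mu, sd**2) at y."""
    return -0.5 * math.log(2.0 * math.pi * sd ** 2) - (y - mu) ** 2 / (2.0 * sd ** 2)


def response_log_likelihood(
    rover: Sequence[float], beam: Sequence[float],
    mu_mean: Sequence[float], mu_noise: Sequence[float],
    zeta_x: float, zeta_a: float, beta0: float, beta1: float,
) -> float:
    """Log-likelihood of rover positions and beam widths given model beliefs.

    Hypothetical form: rover ~ N(mu_mean, zeta_x) and
    beam ~ N(beta0 + beta1 * mu_noise, zeta_a).
    """
    ll = 0.0
    for y_x, y_a, m_x, m_a in zip(rover, beam, mu_mean, mu_noise):
        ll += gaussian_logpdf(y_x, m_x, zeta_x)                 # rover around mean belief
        ll += gaussian_logpdf(y_a, beta0 + beta1 * m_a, zeta_a)  # beam around scaled noise belief
    return ll
```

Under this form, β1 = 0 means that beam width ignores the noise belief. This is the sense in which a lower β1 reflects less use of beliefs about noise.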

The kaa parameter significantly increased from the Noise Block to the Combined Uncertainty Block (β(SE) = 0.23(0.03), p < 0.001) and from the Combined Uncertainty Block to the Bivalent Block (β(SE) = 0.05(0.03), p = 0.032). It was also negatively correlated with overall mean scores across the task (r = −0.17, p < 0.001). The kax parameter significantly increased between the Combined Uncertainty Block and the Bivalent Block (β(SE) = 0.13(0.04), p = 0.032).

Higher paranoia scores were associated with higher overall kax values (β(SE) = 0.01(0.00), p = 0.032). Individuals with higher delusional ideation showed a trend towards lower kax values in the Bivalent Block than in the Combined Uncertainty Block (β(SE) = −0.01(0.00), p = 0.059; trend-level after FDR correction). The kax parameter was negatively correlated with overall mean scores across the task (r = −0.14, p < 0.001).
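Several results in this section are described as trend-level after FDR correction, but the procedure is not named. The Benjamini-Hochberg step-up method is the most widely used FDR procedure. The sketch below assumes it and uses made-up p-values:

```python
from typing import List, Sequence


def benjamini_hochberg(pvals: Sequence[float]) -> List[float]:
    """Benjamini-Hochberg adjusted p-values, returned in input order.

    Step-up rule: the adjusted p for the i-th smallest value is the minimum,
    over j >= i, of p_(j) * m / j (never above 1).
    """
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):  # walk from the largest p-value to the smallest
        idx = order[rank - 1]
        running_min = min(running_min, pvals[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


# Made-up p-values from a family of four planned contrasts.
print(benjamini_hochberg([0.01, 0.04, 0.03, 0.5]))
```

In this example, the contrast with p = 0.03 is adjusted to about 0.053. This shows how a nominally significant effect can become trend-level once a family of contrasts is corrected.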

The noise belief (β1) parameter significantly increased across all block comparisons: Noise vs Volatility Block (β(SE) = 0.03(0.01), p < 0.001), Volatility Block vs Combined Uncertainty Block (β(SE) = 0.05(0.01), p < 0.001), and Combined Uncertainty vs Bivalent Block (β(SE) = 0.03(0.01), p < 0.001). The picture was more mixed for psychotic-like experiences. Higher delusional ideation was associated with significantly lower β1 in two planned block comparisons (Noise vs Volatility: β(SE) = −0.01(0.01), p = 0.032; Fig. 4C; Combined Uncertainty vs Bivalent Block: β(SE) = −0.01(0.01), p = 0.029; Fig. 4D). Schizotypy, in contrast, was associated with significantly higher β1, over and above the main effect, in the Combined Uncertainty vs Bivalent Block comparison (β(SE) = 0.02(0.01), p = 0.016; Fig. 4E). β1 was significantly negatively correlated with overall mean scores (r = −0.17, p < 0.001).

Experiment 2

The top and bottom 10% of scorers on the Schizotypal Personality Questionnaire were selected out of 1000 participants. After excluding 18 individuals for failing to meet inclusion criteria (see Supplementary Notes, 2.4), 147 participants remained (high psychometric schizotypy = 81, low psychometric schizotypy = 66; see Table 3).

As in Experiment 1, participants effectively tracked the falling junk with their rover placements (Supplementary Fig. 4A). They learned the mean of the space junk distributions, as shown by increased performance and prediction errors at mean change points that rapidly decreased afterwards, indicating improved accuracy (Supplementary Fig. 4B, C). Noise levels (high/low) significantly influenced beam usage, with participants using a wider beam during high noise periods compared to volatile ones (β(SE) = −10.15(1.027), p < 0.001; Supplementary Fig. 4D, E), suggesting appropriate beam adjustment. In line with our previous findings, averaged block-wise scores decreased between all blocks, i.e. as the task increased in difficulty (Noise Block vs Volatility Block: β(SE) = −0.37(0.11), p = 0.013; Volatility Block vs Combined Uncertainty Block: β(SE) = −0.83(0.12), p < 0.001; Combined Uncertainty vs Bivalent Block: β(SE) = −0.80(0.11), p < 0.001). Consistent with Experiment 1, average performance error was significantly higher during the Volatility Block than during the Noise Block (β(SE) = 0.47(0.04), p < 0.001; Supplementary Fig. 4B). As expected, LRs increased in the Volatility Block compared with the Noise Block (β(SE) = 0.19(0.01), p < 0.001). LRs were also higher in the Combined Uncertainty Block than in the Volatility Block (β(SE) = 0.14(0.01), p < 0.001), and higher in the Bivalent Block than in the Combined Uncertainty Block (β(SE) = 0.09(0.01), p < 0.001; Supplementary Fig. 4F). Females displayed significantly higher LRs overall than males (β(SE) = 0.04(0.01), p = 0.032).

No significant effects or interactions involving psychometric schizotypy group were found for overall performance (score), performance error, beam width, or LR. LRs in the Volatility Block were significantly higher than in both noisy (low volatility) and stable environments (β(SE) = 0.27(0.02), p < 0.001; Supplementary Fig. 5A, B). There was no significant relationship between the extent of LR calibration and schizotypy (Noise–Volatility: β(SE) = 0.02(0.03), p = 0.503; Stable–Volatile: β(SE) = 0.06(0.05), p = 0.273).

Figure 5A shows that the simulated predictions for each block capture the data well, both for the (median) participant's estimates of the junk positions and for their (median) beam widths. Model comparison indicated that the task manipulation worked as expected (see Supplementary Notes 2.1). In total, 14 individuals were excluded from further analysis due to poor fit, leaving 139 participants (removed block-wise: Noise Block n = 7; Volatility Block n = 6; Combined Uncertainty Block n = 4; Bivalent Combined Uncertainty Block n = 1). As in Experiment 1, the recoverability of the kappa parameters ranged from low (r = 0.3 in the Combined Uncertainty Block) to good (r = 0.7 in the Bivalent Block), and these parameters were again prone to outliers. The recoverability of the noise belief coefficient weight β1 ranged from poor (r = 0.3 in the Volatility Block) to good (r = 0.8 in the Combined Uncertainty Block) (see Supplementary Fig. 7A, B).

A compares participant estimates with estimates from model simulations across blocks. The x axis displays the trial number for each block. The y axis shows the screen position scale: 0 indicates the middle of the screen, and positive and negative values indicate the right- and left-hand sides of the screen, respectively. In this way, mean estimates (rover positions) and noise estimates (beam widths) can both be displayed as positions on the screen. Median participant estimates of the mean, i.e. rover positions, are indicated by the black solid lines, and median simulated estimates of the mean from the JGET HGF model are shown in orange. The median participant's noise estimate (beam width) for each trial is shown as a width along the screen position axis in black/grey, and the median simulated noise estimate is shown as a width in orange. B shows the pattern of noise belief parameter change across blocks. C shows noise belief parameter change across blocks, split by psychometric schizotypy group (high and low).

The kax parameter significantly decreased between the Volatility Block and the Combined Uncertainty Block (β(SE) = −0.61(0.21), p = 0.019), with no group effects observed. It was also significantly negatively correlated with overall participant mean scores (r = −0.14, p < 0.001), as was the kaa parameter (r = −0.23, p < 0.001). The noise belief (β1) parameter significantly increased between the Noise and Volatility Blocks (β(SE) = 0.03(0.01), p < 0.001), the Volatility and Combined Uncertainty Blocks (β(SE) = 0.06(0.01), p < 0.001), and the Combined Uncertainty and Bivalent Blocks (β(SE) = 0.04(0.01), p < 0.001; Fig. 5B). The high psychometric schizotypy group showed lower β1 values overall, although this main effect was only at trend level once strict multiple-comparison corrections were applied (β(SE) = −0.01(0.01), p = 0.051). The same trend towards lower β1 in the high schizotypy group appeared between the Volatility and Combined Uncertainty Blocks (trend-level after FDR correction; β(SE) = −0.02(0.01), p = 0.063; Fig. 5C). The β1 parameter was negatively correlated with overall mean scores (r = −0.2, p < 0.001).

Experiment 3

A total of 19 participants completed the experiment in person. None were excluded, as all met the quality control standards (age M = 30.42 years, SD = 6.53; female = 57.9%; for full sample characteristics, see Supplementary Table 19). The average completion time was 13.63 min (SD = 2.92), ranging from 8.32 to 18.13 min.

In accordance with Experiments 1 and 2, participants effectively tracked the falling space junk with their rover placements (Supplementary Fig. 7A). Performance errors increased at mean change points and rapidly decreased following these changes, reflecting increased accuracy (Supplementary Fig. 7B, C). Also as in Experiments 1 and 2, average performance error was significantly higher during the Volatility Block than during the Noise Block (β(SE) = 0.36(0.04), p < 0.001). Noise levels (high/low) significantly affected beam usage, with participants using a wider beam during high noise periods compared to volatile ones (β(SE) = 10.68(1.86), p < 0.001). LRs also increased in the Volatility Block compared with the Noise Block (β(SE) = 0.11(0.02), p < 0.001), and increased further as the task grew in complexity (Volatility Block vs Combined Uncertainty Block: β(SE) = 0.07(0.02), p = 0.008). Block-wise scores decreased as the task increased in difficulty (Volatility Block vs Combined Uncertainty Block: β(SE) = −0.44(0.11), p < 0.001; Combined Uncertainty Block vs Bivalent Block: β(SE) = −0.51(0.10), p < 0.001).

Individuals with higher psychometric schizotypy and paranoia scores exhibited significantly poorer performance (Schizotypy: β(SE) = −0.14(0.05), p < 0.001; Paranoia: β(SE) = −0.05(0.01), p = 0.002), and higher schizotypy was also associated with the use of wider beams (β(SE) = 2.60(0.55), p < 0.001). In contrast, individuals with higher levels of delusional ideation showed the opposite pattern, with better performance (β(SE) = 0.03(0.01), p < 0.001) and narrower beam widths (β(SE) = −0.61(0.08), p < 0.001) overall. No significant effects or interactions were observed between symptom measures and performance error or LRs. A sensitivity analysis excluding one participant with an unusually high delusional ideation score yielded similar task validation results (see Supplementary Table 25). Given the small sample size and the validation-focused nature of Experiment 3, these symptom associations should be treated as preliminary until they are tested in larger in-person samples.

To establish the reliability of the online findings, a sensitivity analysis was conducted on an in-person cohort drawn from participants who had previously taken part in Experiment 1 or 2. Intraclass correlations between the online and in-person results ranged from 0.6 to 0.78, indicating moderate-to-good reliability (see Supplementary Fig. 7D–G), despite differences in context such as setting and device.
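The text does not state which ICC form was used. The sketch below shows two common Shrout-Fleiss single-measure forms, computed from a participants × sessions table using two-way ANOVA mean squares. The example LRs are made up:

```python
from typing import Dict, Sequence


def icc_two_way(ratings: Sequence[Sequence[float]]) -> Dict[str, float]:
    """Shrout-Fleiss single-measure ICCs from an n-subjects x k-sessions table.

    ICC(2,1): two-way random effects, absolute agreement.
    ICC(3,1): two-way mixed effects, consistency.
    """
    n, k = len(ratings), len(ratings[0])
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between participants
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between sessions
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    icc2 = (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )
    icc3 = (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)
    return {"ICC(2,1)": icc2, "ICC(3,1)": icc3}


# Made-up LRs: each row is one participant (online, in-person).
example = [[0.30, 0.34], [0.45, 0.41], [0.62, 0.66], [0.51, 0.49]]
print(icc_two_way(example))
```

ICC(3,1) ignores a constant shift between online and in-person values, whereas ICC(2,1) penalises it. When there is a systematic offset between settings, the two forms can differ noticeably.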

To examine convergent validity in the in-person sample, we correlated LRs from each block of our task with behavioural strategy measures (win-switch and lose-stay rates) and with total scores from block 2 of the probabilistic reversal learning (PRL) task19. LRs in the volatility and combined uncertainty blocks showed strong negative correlations with win-switch rates (both r = –0.53) and moderate positive correlations with lose-stay rates (r = 0.43 and 0.50, respectively). These patterns align with adaptive behaviour in volatile environments: lower win-switching and greater lose-staying reflect stability in response to probabilistic losses rather than overreaction to noise19. In contrast, LRs in the noise-only block showed weak correlations with PRL strategy measures (win-switch: r = 0.25; lose-stay: r = 0.16), consistent with the idea that updating is less adaptive under pure noise. The bivalent combined uncertainty block showed moderate correlations (win-switch: r = –0.30; lose-stay: r = 0.28), although this block differs structurally by raising the stakes, making the relationship less direct. Correlations between total scores in our task and the PRL were weak to moderate across blocks (r = 0.35, 0.35, 0.33, and 0.28). This suggests some overlap in overall performance, but the comparison is difficult to interpret directly. The PRL score primarily reflects reward maximisation under probabilistic reversal, whereas our task's score also reflects sensitivity to environmental changes and noise. For preliminary symptom findings, please see the Supplement (Supplementary Notes 2.5 and Supplementary Fig. 8). Task effect findings across all three experiments can be found in Supplementary Table 24.
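Win-switch and lose-stay rates are conditional switching probabilities. The sketch below uses the usual definitions, which we assume match those of the PRL task19. The win-switch rate is the proportion of rewarded trials followed by a change of deck, and the lose-stay rate is the proportion of unrewarded trials followed by the same deck. Names and example data are hypothetical:

```python
import math
from typing import Sequence, Tuple


def win_switch_lose_stay(choices: Sequence[int],
                         rewarded: Sequence[bool]) -> Tuple[float, float]:
    """Return (win-switch rate, lose-stay rate) from a sequence of deck choices.

    win-switch = P(choice[t+1] != choice[t] | trial t rewarded)
    lose-stay  = P(choice[t+1] == choice[t] | trial t not rewarded)
    A rate is NaN when its conditioning event never occurs.
    """
    wins = win_switches = losses = lose_stays = 0
    for t in range(len(choices) - 1):
        stayed = choices[t + 1] == choices[t]
        if rewarded[t]:
            wins += 1
            win_switches += not stayed
        else:
            losses += 1
            lose_stays += stayed
    win_switch = win_switches / wins if wins else math.nan
    lose_stay = lose_stays / losses if losses else math.nan
    return win_switch, lose_stay


# Decks 0-2 (three decks, as in the PRL task); made-up choices and feedback.
print(win_switch_lose_stay([0, 1, 1, 1, 2], [True, False, True, False, True]))  # (0.5, 0.5)
```

The final trial is not counted, because no subsequent choice is available to classify it as a stay or a switch.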

Discussion

This study introduced an experimental learning paradigm in which participants were motivated to estimate two types of uncertainty: noise (stochasticity) and volatility (changeability). The paradigm was tested across three independent experiments (online: N = 580 and N = 147; in-person: N = 19). The results provided strong evidence that participants followed a normative learning framework and demonstrated that the novel paradigm effectively captured these behaviours across all contexts. This was reflected in directly observable task outcomes: adaptation to volatility (performance error), adaptation to noise/stochasticity (beam width), and LR calibration. Consistent with a normative learning account, participants showed significant increases in LR and in performance and prediction errors in volatile environments compared with stable and noisy ones. They accurately estimated underlying noise and distinguished it from volatility, as demonstrated by their use of the beam. The computational modelling approach further disentangled the effects of noise and mean changes, confirming that participants' priors aligned with the task instructions.

In the first experiment, directly accessible task measures revealed a non-normative learning pattern in individuals with higher psychometric schizotypy and delusional ideation. These individuals exhibited higher LRs or performance errors in noisy environments than in volatile ones. This is contrary to what is expected for successful learning and to what was seen in those with lower psychotic-like symptoms and susceptibility. Additionally, all psychosis-like traits tested in Experiment 1 (paranoia, delusional ideation, and schizotypy) were linked to reduced LR adaptation when transitioning from noisy to volatile environments, i.e. their LR updates were smaller despite increased volatility. For schizotypy, individuals additionally showed poor adaptation between stable and noisy environments. This inflexible learning strategy has been found across psychosis and anxiety disorders, but not depression31,49,50,51, and in those studies it led to suboptimal decision-making strategies when learning under volatility. Interestingly, our findings mirrored this pattern: anxiety, but not depression, was associated with reduced LR calibration. Although we observed less flexible updating in response to changes in uncertainty, as well as evidence of non-normative learning, only paranoia was associated with poorer overall performance in our study. While findings relating to direct task metrics remained stable in Experiment 2, we were unable to replicate the symptom findings in a preselected schizotypy sample. Possible reasons are explored in the limitations section below. A post hoc power analysis suggested that this may relate to insufficient power, and replication is needed (see Supplementary Notes 2.3 and Supplementary Materials: S3.2.4 for the full power analysis).

Computational modelling showed that participants used their belief about the noise in the environment (β1) increasingly across the task blocks (as complexity increased). However, those scoring high in delusional ideation (block-specific) and pre-selected psychometric schizotypy (Experiment 2; main effect) presented with lower noise belief values, i.e. they used their beliefs about the noise in the environment less than others when making decisions. Aberrant belief updating has been linked to abnormalities in processing volatility in psychosis12,19,52. However, previous tasks and models have not fully integrated beliefs about both noise and volatility within a unified framework. This is crucial because dysfunction in one area will trigger compensatory changes in the other. Consequently, the literature has produced mixed findings on volatility beliefs, with some studies suggesting over-updating, while others report reduced updating, flexibility, or overconfidence53,54. By considering both types of uncertainty, our findings suggest that psychosis-susceptibility and delusional ideation may be more closely related to impaired noise detection. This impairment seems to lead to a compensatory increase in perceived volatility, resulting in inflexible updating and non-normative learning patterns. For example, symptoms may disrupt an individual’s ability to accurately infer environmental noise, leading them to misinterpret noise (random, meaningless information) as volatility (meaningful change) and adjust their beliefs inappropriately. This rise in superfluous information may increase the likelihood of perceiving illusory patterns, potentially leading to maladaptive responses. A less flexible adaptation to noise during volatility may heighten feelings of uncertainty and strangeness, potentially triggering dysfunctional compensatory strategies such as apophenia, hypermentalisation, and jumping to conclusions55,56,57.
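The normative claim at stake is that volatility speeds learning while noise slows it. The discussion's hypothesis is that misreading noise inflates updating. Both can be illustrated with a standard Kalman filter for a Gaussian random walk. This is a textbook model, not the JGET HGF used in this study, and the variances below are arbitrary:

```python
import math


def steady_state_gain(volatility_var: float, noise_var: float) -> float:
    """Steady-state Kalman gain (learning rate) for a Gaussian random walk.

    volatility_var (q): variance of the hidden mean's trial-to-trial drift.
    noise_var (r): variance of observations around the hidden mean.
    Solves M = M*r/(M + r) + q for the prior variance M, then K = M/(M + r).
    """
    q, r = volatility_var, noise_var
    prior_var = (q + math.sqrt(q * q + 4.0 * q * r)) / 2.0
    return prior_var / (prior_var + r)


def gain_by_iteration(volatility_var: float, noise_var: float, n: int = 200) -> float:
    """Same quantity obtained by iterating the Kalman variance recursion."""
    posterior_var, gain = noise_var, 0.0
    for _ in range(n):
        prior_var = posterior_var + volatility_var
        gain = prior_var / (prior_var + noise_var)
        posterior_var = (1.0 - gain) * prior_var
    return gain


true_noise, believed_noise, volatility = 4.0, 1.0, 1.0
print(round(steady_state_gain(volatility, true_noise), 3))      # 0.39 (normative)
print(round(steady_state_gain(volatility, believed_noise), 3))  # 0.618 (noise underestimated)
```

With these numbers, an agent that believes observation noise is a quarter of its true value updates with a gain of about 0.62 instead of the normative 0.39. In effect, it treats part of the randomness as meaningful change.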

Individuals with higher paranoia scores showed globally reduced performance (score), underpinned by a poorer grasp of the underlying statistical contingencies regardless of the environment (e.g. consistently inaccurate beam use and mean estimation). Poor global performance on probabilistic learning tasks is common in psychotic disorders8,9,11,58,59,60,61,62. However, it is unclear whether these findings reflect impaired task comprehension, or whether these individuals perceive the world as highly changeable and therefore respond more “randomly” (temperature parameter)53,63. This task was intentionally designed to reduce cognitive load, providing limited uncertainty-related information via instructions before each block within a gamified context (see Supplementary Table 2). Moreover, mid-to-high scores across all experiments suggest the task was not overly difficult. Given this, poorer performance may have been due to a reduced ability to learn the statistical components of the task (e.g. larger performance errors and inappropriate beam width). This may in turn have led to a failure to adapt to changes in volatility, both from stable to volatile environments and from noisy to volatile ones. While replication is needed, computational modelling suggested that this may be related to generally higher trial-wise estimates of volatility (kax). For individuals with higher paranoia, the type of uncertainty seems less relevant: a general overestimation of volatility may lead to unstable internal models and noisier, less optimal behaviour. These findings suggest that aberrant inference under uncertainty may differ subtly between symptoms within disorders, which may be important for moving towards more clinically useful computational phenotyping.

Including symptom scales that captured commonly co-occurring symptoms such as anxiety and depression proved fruitful. The overlap between symptom scales was reflected in moderately sized correlation coefficients (see Supplementary Table 4), highlighting the importance of including potentially comorbid symptoms in analysis. While our modelling approach captured some of the variance associated with these symptoms, this could be improved by dimension reduction techniques such as clustering64. Some findings warrant further investigation. For example, individuals with higher depression scores showed an overall performance benefit, supported by more accurate estimates of the mean (performance error) and variance (beam width) and by higher overall LRs. This advantage was further increased in the bivalent block, indicating that these individuals extended their lead when learning was driven by punishment as well as reward.

We note several limitations and avenues for future research. First, regarding task design and model assumptions: although beam width closely tracked the underlying standard deviation across conditions, it was also linked to reward. Thus, our computational β1 parameter may partially reflect risk aversion rather than noise sensitivity alone. Additionally, the task employed a novel computational framework (JGET) with untested priors and model fit issues in a subset of participants. While block-wise fits and simulations were encouraging, future work should compare this model with more established alternatives (e.g. refs. 4,6,65), especially for modelling stochasticity-related learning, which remains underexplored (but see ref. 5). Extending trial counts could support more complex hierarchical models, though this must be balanced against practical limitations in clinical and online settings.

Second, our task elicited consistent group-level effects across samples and moderate-to-good intraclass correlations between online and in-person settings. Even so, the individual-level parameters may not yet meet the reliability thresholds required for clinical use66. Because parameter recovery sets an upper bound on reliability, we note that our computational parameters of interest failed to meet these psychometric standards in at least some task blocks. This is in line with a previous study investigating the psychometric properties of volatility-based learning with the HGF model66. In particular, our schizotypy findings for the noise belief coefficient in the Volatility Block should be treated with caution. Technically, this might be due to a mismatch between the task (no variation in noise) and the computational model (the noise belief affects the variance in response). At the same time, it highlights the difficulty of experimentally assessing the non-normative/aberrant learning that we hypothesise for the psychosis-prone state. Longitudinal or repeated-measures designs are needed to establish the stability of learning metrics and model parameters over time.

It is also important to consider convergent validity and generalisability. We compared outputs from the Space JUnc task with those of an established paradigm well known for capturing aberrant learning under uncertainty in psychosis, a probabilistic reversal learning (PRL) task19. We found a moderate relationship between comparable task blocks (i.e. the volatility and combined uncertainty levels). However, as Eckstein et al.67 highlight, the interpretation of computational parameters is highly context-dependent, and their generalisability across tasks is often limited67. Although both tasks compared here require participants to update their beliefs about a hidden, changing environment, our comparison is limited by differences in task format, e.g. categorical choices in the PRL task (selecting one of three decks) vs continuous predictions in our task (inferring a numeric mean), and by the absence of an explicit measure of trial-wise noise estimates in the PRL task. As such, the comparison focused primarily on strategy-level responses, and we were unable to compare noise estimation directly across the two tasks. Future work should include further cross-task comparisons to establish construct validity. In the meantime, it remains unclear whether task-derived indices such as β1 or κx capture domain-general learning processes.

Effect sizes and clinical utility are another important consideration. The observed associations between psychosis-relevant traits and task metrics were statistically significant but small (r ≈ 0.10–0.15), explaining approximately 1–2% of behavioural variance. While this magnitude is typical of computational psychiatry studies68,69, it limits immediate clinical utility. Rather than simply calling for replication, future work should focus on developing task designs and modelling approaches that increase the signal-to-noise ratio and produce more substantial individual differences. Approaches may include adaptive task designs, richer incentive structures, or integration of multimodal data (e.g. neuroimaging, ecological momentary assessment) to better capture variance relevant for diagnosis or prognosis. From a theoretical perspective, these small effects provide only modest support for volatility- or noise-based accounts of psychosis. While the findings are broadly consistent with hypotheses of aberrant uncertainty processing25,53, they explain a limited portion of the variance and should be interpreted accordingly. Nonetheless, small but consistent effects can serve as building blocks for more comprehensive mechanistic models when interpreted in conjunction with other data sources (e.g. pharmacology, genetics, neuroimaging).
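For a simple bivariate Pearson correlation, the proportion of shared variance is r². The short sketch below makes the arithmetic behind the 1–2% figure explicit; it assumes plain bivariate correlations, whereas for regression models with covariates the relevant quantity would be a (semi-)partial R².

```python
def variance_explained(r: float) -> float:
    """Proportion of variance shared by two variables with Pearson correlation r."""
    return r ** 2


for r in (0.10, 0.15):
    share = variance_explained(r)
    print(f"r = {r:.2f} -> r^2 = {share:.4f} ({100 * share:.2f}%)")
# r = 0.10 -> r^2 = 0.0100 (1.00%)
# r = 0.15 -> r^2 = 0.0225 (2.25%)
```

The upper end of the range therefore sits just above 2%, consistent with the approximate figure given in the text.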

Online, digital data collection methods are becoming increasingly common, particularly in gamified formats27,70, and offer benefits such as increased anonymity71; however, their limited control over participants' attention must be acknowledged. To understand the impact of the setting, we tested the task in both in-person and online environments and found replicable effects across online and in-person samples, as well as indications of consistent engagement.
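Intraclass correlations between online and in-person settings can be computed in several forms, and this section does not state which form was used. As an illustrative assumption, the sketch below implements the two-way random-effects, absolute-agreement, single-measure ICC(2,1) of Shrout and Fleiss for a participants × settings matrix of scores; the matrix layout and the toy data are hypothetical.

```python
import numpy as np


def icc_2_1(scores) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single measure.

    `scores` is an (n participants x k settings) array, e.g. one column for an
    online session and one for an in-person session (hypothetical layout).
    """
    scores = np.asarray(scores, dtype=float)
    n, k = scores.shape
    grand_mean = scores.mean()

    # Sums of squares for participants (rows), settings (columns) and residual
    ss_rows = k * np.sum((scores.mean(axis=1) - grand_mean) ** 2)
    ss_cols = n * np.sum((scores.mean(axis=0) - grand_mean) ** 2)
    ss_total = np.sum((scores - grand_mean) ** 2)
    ss_error = ss_total - ss_rows - ss_cols

    # Mean squares from the two-way ANOVA decomposition
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return float(
        (ms_rows - ms_error)
        / (ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n)
    )


print(round(icc_2_1([[1, 1], [2, 2], [3, 3]]), 3))  # identical sessions -> 1.0
print(round(icc_2_1([[1, 2], [2, 3], [3, 4]]), 3))  # constant shift -> 0.667
```

Because this form measures absolute agreement, a systematic offset between settings lowers the ICC even when the participant ranking is preserved; a consistency form such as ICC(3,1) would ignore that offset.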

Finally, in our preselected schizotypy sample, we did not replicate the symptom effects; however, a post hoc power analysis indicated only 36% power to detect the effects observed in Experiment 1 (see Supplementary Notes 2.2 and Supplementary Fig. 3), suggesting that the null result may reflect low power rather than a true absence of effects72. This highlights the broader challenge in computational psychiatry of detecting subtle individual differences, especially when trait variance is limited66. As Hedge et al.73 note, tasks yielding strong group effects may still lack reliability at the individual level73. Our task likely captures group-level learning but may not reliably distinguish between individuals. Future research should prioritise task reliability and repeated measures to better detect individual variability in uncertainty processing.
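The method behind the reported power estimate is described in the Supplementary Notes and is not reproduced here. As a hedged illustration of how power for a correlation depends on effect size and sample size, the sketch below uses the standard Fisher z approximation (atanh(r) is approximately normal with standard error 1/√(n − 3)); the function name and any inputs passed to it are illustrative assumptions, not the values used in this study.

```python
import math
from statistics import NormalDist


def correlation_power(r: float, n: int, alpha: float = 0.05) -> float:
    """Approximate two-sided power to detect a Pearson correlation r with n participants.

    Uses the Fisher z-transformation: atanh(r) is approximately normal with
    standard error 1 / sqrt(n - 3).
    """
    std_normal = NormalDist()
    z_effect = math.atanh(r) * math.sqrt(n - 3)
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    # Probability that the test statistic falls beyond either critical value
    return std_normal.cdf(z_effect - z_crit) + std_normal.cdf(-z_effect - z_crit)
```

As a sanity check, `correlation_power(0.3, 85)` is about 0.80, the conventional benchmark, while effects of r ≈ 0.10–0.15 require several hundred participants to reach comparable power.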

In sum, while our findings offer a promising framework for studying uncertainty estimation in relation to psychosis-like traits, future work should (1) assess test–retest reliability, (2) validate task parameters against other paradigms, (3) explore alternative models of uncertainty processing, and (4) test clinical groups with designs optimised for individual-level prediction.

In conclusion, this study demonstrates that joint uncertainty estimation can be effectively captured using a novel method. Once the joint estimation of uncertainty during learning is accounted for, our results indicate that psychotic traits are not primarily driven by an overestimation of volatility; rather, aberrant belief updating may stem from the misattribution of noisy (non-meaningful) information as volatile (meaningful). This deficit in uncertainty estimation could heighten the risk of information overload and contribute to symptom development at a premorbid stage. Accurate assessment of the joint estimation of uncertainty has the potential to produce reliable, transdiagnostic, and clinically meaningful computational phenotypes that can be measured in a minimally invasive and engaging way.

Data availability

De-identified data will be made available on request. All code relating to the analysis is available here: https://github.com/ToniGibbsDean/Gibbs-Dean-Katthagen-et-al.-2024.git.

Code availability

The code base for all analyses can be found at https://github.com/ToniGibbsDean/Gibbs-Dean-Katthagen-et-al.-2024.git.

References

Behrens, T. E. J., Woolrich, M. W., Walton, M. E. & Rushworth, M. F. S. Learning the value of information in an uncertain world. Nat. Neurosci. 10, 1214–1221 (2007).

Mathys, C., Daunizeau, J., Friston, K. J. & Stephan, K. E. A Bayesian foundation for individual learning under uncertainty. Front. Hum. Neurosci. 5, 39 (2011).

Pulcu, E. & Browning, M. The misestimation of uncertainty in affective disorders. Trends Cogn. Sci. 23, 865–875 (2019).

Piray, P. & Daw, N. D. A model for learning based on the joint estimation of stochasticity and volatility. Nat. Commun. 12, 1–16 (2021).

Mikus, N., Lamm, C. & Mathys, C. Computational phenotyping of aberrant belief updating in individuals with schizotypal traits and schizophrenia. Biol. Psychiatry https://doi.org/10.1016/J.BIOPSYCH.2024.08.021 (2024).

Piray, P. & Daw, N. D. Computational processes of simultaneous learning of stochasticity and volatility in humans. Nat. Commun. 15, 1–16 (2024).

Fletcher, P. C. & Frith, C. D. Perceiving is believing: a Bayesian approach to explaining the positive symptoms of schizophrenia. Nat. Rev. Neurosci. 10, 48–58 (2008).

Kaplan, C. M. et al. Estimating changing contexts in schizophrenia. Brain 139, 2082–2095 (2016).

Katthagen, T., Kaminski, J., Heinz, A., Buchert, R. & Schlagenhauf, F. Striatal dopamine and reward prediction error signaling in unmedicated schizophrenia patients. Schizophr. Bull. 46, 1535–1546 (2020).

Griffin, J. D. et al. Distinct alterations in probabilistic reversal learning across at-risk mental state, first episode psychosis and persistent schizophrenia. Sci. Rep. 14, 1–18 (2024).

Schlagenhauf, F. et al. Striatal dysfunction during reversal learning in unmedicated schizophrenia patients. Neuroimage 89, 171–180 (2014).

Deserno, L. et al. Volatility estimates increase choice switching and relate to prefrontal activity in schizophrenia. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 5, 173–183 (2020).

Cole, D. M. et al. Atypical processing of uncertainty in individuals at risk for psychosis. Neuroimage Clin. 26, 102239 (2020).

Diaconescu, A. O., Hauke, D. J. & Borgwardt, S. Models of persecutory delusions: a mechanistic insight into the early stages of psychosis. Mol. Psychiatry 24, 1258–1267 (2019).

Hauke, D. J. et al. Altered perception of environmental volatility during social learning in emerging psychosis. Comput. Psychiatry 8, 1 (2024).

Fromm, S., Katthagen, T., Deserno, L., Heinz, A., Kaminski, J. & Schlagenhauf, F. Belief updating in subclinical and clinical delusions. Schizophr. Bull. Open 4, sgac074 (2023).

Sheffield, J. M., Smith, R., Suthaharan, P., Leptourgos, P. & Corlett, P. R. Relationships between cognitive biases, decision-making, and delusions. Sci. Rep. 13, 1–16 (2023).

Suthaharan, P. et al. Paranoia and belief updating during the COVID-19 crisis. Nat. Hum. Behav. 5, 1190–1202 (2021).

Reed, E. J. et al. Paranoia as a deficit in non-social belief updating. eLife 9, 1–55 (2020).

Nour, M. M. et al. Dopaminergic basis for signaling belief updates, but not surprise, and the link to paranoia. Proc. Natl. Acad. Sci. USA 115, E10167–E10176 (2018).

Stuke, H., Weilnhammer, V. A., Sterzer, P. & Schmack, K. Delusion proneness is linked to a reduced usage of prior beliefs in perceptual decisions. Schizophr. Bull. 45, 80–86 (2019).

Zhao, W. & Cannon, T. D. Moral learning and positive schizotypy: social cognitive mechanisms in psychosis-proneness. Schizophr. Res. 266, 156–164 (2024).

Kapur, S. Psychosis as a state of aberrant salience: a framework linking biology, phenomenology, and pharmacology in schizophrenia. Am. J. Psychiatry 160, 13–23 (2003).

Sterzer, P. et al. The predictive coding account of psychosis. Biol. Psychiatry 84, 634–643 (2018).

Adams, R. A. In Computational Psychiatry: Mathematical Modeling of Mental Illness (eds Anticevic, A. & Murray, J. D.) 175–195 (Academic Press, 2018).

Howes, O. D., Hird, E. J., Adams, R. A., Corlett, P. R. & McGuire, P. Aberrant salience, information processing, and dopaminergic signaling in people at clinical high risk for psychosis. Biol. Psychiatry 88, 304–314 (2020).

Lumsden, J., Edwards, E. A., Lawrence, N. S., Coyle, D. & Munafò, M. R. Gamification of cognitive assessment and cognitive training: a systematic review of applications and efficacy. JMIR Serious Games 4, e5888 (2016).

Mathys, C. D. et al. Uncertainty in perception and the Hierarchical Gaussian Filter. Front. Hum. Neurosci. 8, 825 (2014).

Johns, L. C. & van Os, J. The continuity of psychotic experiences in the general population. Clin. Psychol. Rev. 21, 1125–1141 (2001).

van Os, J., Linscott, R. J., Myin-Germeys, I., Delespaul, P. & Krabbendam, L. A systematic review and meta-analysis of the psychosis continuum: evidence for a psychosis proneness-persistence-impairment model of psychotic disorder. Psychol. Med. 39, 179–195 (2009).

Gibbs-Dean, T. et al. Belief updating in psychosis, depression and anxiety disorders: a systematic review across computational modelling approaches. Neurosci. Biobehav. Rev. 147, 105087 (2023).

Freeman, D. et al. The revised Green et al., Paranoid Thoughts Scale (R-GPTS): psychometric properties, severity ranges, and clinical cut-offs. Psychol. Med. 51, 244–253 (2021).

Peters, E. R., Joseph, S. A. & Garety, P. A. Measurement of delusional ideation in the normal population: introducing the PDI (Peters et al. Delusions Inventory). Schizophr. Bull. 25, 553–576 (1999).

American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders (3rd Revised) (APA, 1987).

Raine, A. & Benishay, D. The SPQ-B: a brief screening instrument for schizotypal personality disorder. J. Pers. Disord. 9, 346–355 (1995).

Raine, A. The SPQ: a scale for the assessment of schizotypal personality based on DSM-III-R criteria. Schizophr. Bull. 17, 555–564 (1991).

Clark, L. A. & Watson, D. Tripartite model of anxiety and depression: psychometric evidence and taxonomic implications. J. Abnorm. Psychol. 100, 316–336 (1991).

Berniker, M., Voss, M. & Kording, K. Learning priors for Bayesian computations in the nervous system. PLoS ONE 5, e12686 (2010).

Akaike, H. In Selected Papers of Hirotugu Akaike. Springer Series in Statistics (eds Parzen, E., Tanabe, K. & Kitagawa, G.) 199–213 (Springer, 1998).

Wagenmakers, E. J. & Farrell, S. AIC model selection using Akaike weights. Psychon. Bull. Rev. 11, 192–196 (2004).

Vrieze, S. I. Model selection and psychological theory: a discussion of the differences between the Akaike information criterion (AIC) and the Bayesian information criterion (BIC). Psychol. Methods 17, 228–243 (2012).

Verbyla, A. P. A note on model selection using information criteria for general linear models estimated using REML. Aust. N. Z. J. Stat. 61, 39–50 (2019).

Corbeil, R. R. & Searle, S. R. Restricted maximum likelihood (REML) estimation of variance components in the mixed model. Technometrics 18, 31–38 (1976).

Schermelleh-Engel, K., Moosbrugger, H. & Müller, H. Evaluating the fit of structural equation models: tests of significance and descriptive goodness-of-fit measures. Methods Psychol. Res. 8, 23–74 (2003).

Benjamini, Y. & Hochberg, Y. On the adaptive control of the false discovery rate in multiple testing with independent statistics. J. Educ. Behav. Stat. 25, 60–83 (2000).

Benjamini, Y. & Yekutieli, D. The control of the false discovery rate in multiple testing under dependency. Ann. Stat. 29, 1165–1188 (2001).

Leek, J. T. & Storey, J. D. A general framework for multiple testing dependence. Proc. Natl. Acad. Sci. USA 105, 18718–18723 (2008).

Frässle, S. et al. TAPAS: an open-source software package for translational neuromodeling and computational psychiatry. Front. Psychiatry 12, 680811 (2021).

Browning, M., Behrens, T. E., Jocham, G., O’Reilly, J. X. & Bishop, S. J. Anxious individuals have difficulty learning the causal statistics of aversive environments. Nat. Neurosci. 18, 590–596 (2015).

Hernaus, D., Gold, J. M., Waltz, J. A. & Frank, M. J. Impaired expected value computations coupled with overreliance on stimulus-response learning in schizophrenia. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 3, 916–926 (2018).

Huang, H., Thompson, W. & Paulus, M. P. Computational dysfunctions in anxiety: failure to differentiate signal from noise. Biol. Psychiatry 82, 440–446 (2017).

Henco, L. et al. Aberrant computational mechanisms of social learning and decision-making in schizophrenia and borderline personality disorder. PLoS Comput. Biol. 16, e1008162 (2020).

Baker, S. C., Konova, A. B., Daw, N. D. & Horga, G. A distinct inferential mechanism for delusions in schizophrenia. Brain 142, 1797–1812 (2019).

Nassar, M. R., Waltz, J. A., Albrecht, M. A., Gold, J. M. & Frank, M. J. All or nothing belief updating in patients with schizophrenia reduces precision and flexibility of beliefs. Brain 144, 1013 (2021).

Broome, M. R. et al. Delusion formation and reasoning biases in those at clinical high risk for psychosis. Br. J. Psychiatry Suppl. 51, s38–s42 (2007).

Fyfe, S., Williams, C., Mason, O. J. & Pickup, G. J. Apophenia, theory of mind and schizotypy: perceiving meaning and intentionality in randomness. Cortex 44, 1316–1325 (2008).

Whitson, J. A. & Galinsky, A. D. Lacking control increases illusory pattern perception. Science 322, 115–117 (2008).