Abstract

Building facade structures form the foundation for 3D model reconstruction, making the extraction of facade structures from 3D point clouds a key research area. A method for extracting building facade structures from image-based laser point clouds by considering semantic information is proposed. First, point cloud segmentation and clustering are applied to organize the data into distinct planes. Second, semantic images and corresponding semantic image laser point cloud models are generated from each plane. Finally, an enhanced method named SemColorED extracts the facade structures, followed by optimization based on building morphology. Evaluation of the method using measured 3D laser point cloud data and the Semantic3D dataset shows improved accuracy, recall, and integrity compared with existing methods.

Introduction

As urban informatization rapidly advances, precise and high-quality three-dimensional (3D) building modeling has become a critical component of smart cities. The framework for constructing 3D building models is provided by building facades, which consist of edge segments such as doors, windows, balconies, columns, eaves, staircases, and wall segmentation lines. Therefore, building facade structures are significant across various disciplines, such as refined 3D building modeling1,2, damage monitoring3,4, reconstruction5,6,7, and renovation8,9. Furthermore, 3D laser point cloud data (LPCD) possesses advantages, such as easy access, compactness, and accurate positioning, which can represent the building facade structures4,10,11. At present, the extraction of facade structures from 3D LPCD represents a key research priority for the advancement of fine 3D building modeling12,13.

Methods for extracting facade structures from building 3D LPCD fall mainly into direct extraction, image-assisted extraction, and semantic-based extraction. In the direct extraction method, the coordinate information and reflectance intensity of 3D LPCD are used directly to calculate the gradient, normal vectors, and curvature of the point cloud14,15. Features corresponding to corners or boundary structures within the facade point cloud are then identified and extracted based on these computed attributes16,17,18. The direct extraction method requires no additional data and incurs no conversion errors. However, noise in point clouds and variability in building styles hinder its accuracy and effectiveness. A deeper exploration of point cloud data is needed.

The image-assisted extraction method leverages details in two-dimensional (2D) images, such as color and texture, to facilitate the extraction of facade features from building 3D LPCD19,20. 2D images contain precise indexing details and rich textural attributes. Integrating LPCD with detailed image indexing allows information about building facade structures to be gathered accurately. However, the extraction results are affected by the abundant textures that building exteriors produce in the images21. Further exploration and research are required for the image-assisted extraction method.

Semantic extraction methods build a semantic descriptive model from the contextual information of the various structures within the building 3D LPCD7,22. A training set is configured manually, and the semantic features of its point clouds are analyzed. The distinct features among the training sets are then used to recognize the structural information of building facades23. The method enhances the accuracy of structural detail extraction, providing a more precise reflection of the actual condition of buildings. However, the effectiveness of semantic extraction depends on selecting an appropriate training set. Accurately delineating the scope of structural lines is challenging, potentially resulting in redundant or sparse extraction outcomes. Therefore, obtaining an accurate and continuous facade structure using semantic extraction methods requires further discussion.

Overall, all three extraction methods exhibit respective shortcomings that make accuracy improvement challenging. Meanwhile, the advantages of these three methods can effectively complement one another. 3D laser point clouds offer precise coordinate attributes, semantic information aids in delineating structural boundaries, and 2D images provide topological information and texture details. Integrating these data has promising potential to improve building facade structure extraction accuracy. Therefore, extracting building facade structures accurately and comprehensively requires a data model that includes semantic, 2D images, and 3D laser point clouds.

An image-based laser point cloud building facade structure extraction method that considers semantic information is proposed in this study. The method uses the region-growing approach for point cloud segmentation and clustering to determine plane membership for the building 3D LPCD. The semantic image laser point cloud (SILPC) model is constructed based on the semantic images generated from the corresponding plane point clouds. Subsequently, an improved SemColorED method based on the ColorED detector is proposed within the data model framework to enhance the accuracy of facade structure extraction24. Finally, accurate and comprehensive extraction results are obtained through an optimization method that takes into account the morphological characteristics of building facade structures. “Methods” introduces the basic idea and details of the proposed method. “Results” conducts an extraction case study using three groups of 3D building LPCD (i.e., the Teaching Building in Gannan University of Science and Technology, the Civil and Surveying & Mapping Engineering College Building (CSMECB) in Jiangxi University of Science and Technology (JXUST), and the partial building 3D LPCD named Stgallen3 from the open-source Semantic3D data set)25. “Evaluation and validation” discusses the effectiveness, value setting of parameters, and potential limitations of the proposed method. Finally, “Discussion” presents the conclusion of the proposed method.

Methods

Basic idea and overall design

2D images provide precise topological relationships and abundant texture information, semantic information aids in delineating structural boundaries, and 3D laser point clouds provide accurate coordinate information. The complementary advantages of these data can potentially improve the accuracy of extracting building facade structures from building 3D LPCD. However, existing methods suffer from suboptimal extraction outcomes due to the lack of a suitable data model that integrates 2D images, semantic information, and 3D laser point clouds. This limitation hampers the ability to fully exploit the potential offered by semantic, textural, and spatial information. Furthermore, building structures exhibit prominent morphological characteristics that can be leveraged to optimize the extracted facade structure line segments. Therefore, developing a data model that incorporates semantics, 2D images, and 3D laser point clouds is anticipated to improve the accuracy of building facade extraction.

The proposed method mainly involves three steps (Fig. 1). (1) Constructing the SILPC model. After determining the scale of the building 3D LPCD, the input point clouds are segmented and clustered based on region-growing strategies. Semantic images are generated from the LPCD of the respective planes to construct the comprehensive SILPC model. (2) Extracting the facade structure based on the point cloud model. Based on the constructed SILPC data model, the SemColorED method is proposed for extracting building 3D facade structures. 3D facade structures are restored from 2D line segments after filtering non-conforming edge pixels, considering the assigned semantic labels. (3) Optimizing the extracted structure based on morphological features. To make the structural lines complete and accurate, an optimization strategy is proposed based on the morphological characteristics of building facade structures.

SILPC model construction

Integrating semantic information, 2D images, and 3D laser point clouds allows the three data sources to complement one another, thereby mitigating the influence of point cloud texture and noise. Therefore, constructing the SILPC model, which extends our recently proposed image-based LPCD model19, is crucial for the accurate extraction of building facades. The semantic information used in this study is obtained with our improved CANUPO method23, proposed in 2024.

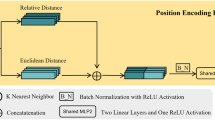

A concept called point cloud scale \({P}_{{Scale}}\) is proposed to achieve an accurate and objective construction of point cloud models suitable for most buildings. \({P}_{{Scale}}\) is defined as the comprehensive distance between each point and its neighbors in the point cloud, as shown in Fig. 2. Given a point cloud set P = {\({P}_{0},{\,P}_{1},{\,P}_{2}\cdots {P}_{i}\cdots {P}_{k}\)}, the three nearest neighboring points (\({P}_{n1}\), \({P}_{n2}\), \({P}_{n3}\)) are obtained for each point \({P}_{i}\) in P. The average distance between \({P}_{i}\) and its nearest neighbors, \({P}_{n1}\), \({P}_{n2}\), and \({P}_{n3}\), is computed to determine \({P}_{{Scale}}\) of the corresponding point, as shown in Formula 1.
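The definition above can be illustrated with a minimal sketch. Formula 1 is not reproduced in this text, so the sketch assumes it is the plain mean \(\frac{1}{3}\sum_{j=1}^{3}\Vert {P}_{i}-{P}_{nj}\Vert\). The function name `point_scales`, the brute-force neighbor search, and the toy point cloud are illustrative assumptions, not part of the original method.

```python
import math

def point_scales(points, k=3):
    """Return, for each point, the mean distance to its k nearest neighbours.

    Assumes the cloud holds at least k + 1 points.
    """
    scales = []
    for i, p in enumerate(points):
        # Brute-force neighbour search; a spatial index would be used on real scans.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        nearest = dists[:k]
        scales.append(sum(nearest) / len(nearest))
    return scales

# Hypothetical toy cloud: four corners of a unit square in the z = 0 plane.
cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
scales = point_scales(cloud)
```

For each corner of the toy square, the three neighbors lie at distances 1, 1, and \(\sqrt{2}\), so every per-point scale is their mean.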

A region-growing-based point cloud segmentation clustering method is proposed to solve the problem of crucial structural information loss, as shown in Algorithm 1. Building 3D LPCD is a 3D dataset that comprises multiple planes. Hence, directly projecting the complete building 3D LPCD into a 2D image would result in data loss. The proposed method is divided into point cloud segmentation and subsequent clustering. First, the point cloud is sorted in ascending order based on curvature. Second, similarity metrics, such as the angle between normals, color information, and distance, are used to assess similarity between points, as shown in Formula 2. Finally, point cloud segmentation for building planes is accomplished by evaluating whether the distance and angle conform to predefined threshold values, as shown in Fig. 3.

where \({{\bf{n}}}_{{\rm{i}}}\) and \({{\bf{n}}}_{{\rm{j}}}\) are the normals of the added points and the points under consideration, respectively, and θ is the angle threshold, set to 15°. \({{\bf{Col}}}_{{\rm{i}}}\) and \({{\bf{Col}}}_{{\rm{j}}}\) refer to the hue-saturation-value (HSV) colors of the added points and the points under consideration, respectively, where H ranges from 0 to 255, and S and V range from 0 to 100. \({{\bf{P}}}_{{\rm{i}}}\) and \({{\bf{P}}}_{{\rm{j}}}\) are the coordinates of the added points and the points under consideration, respectively. Colth is the color threshold, with a value of 250, while th is the parallel distance threshold, set to 30 \({P}_{{Scale}}\).
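A minimal sketch of how the three similarity conditions might be tested for one pair of points follows. Formula 2 is not reproduced in this text, so the exact form is assumed: the color deviation is taken as the Euclidean distance between HSV triples, and the parallel distance is interpreted as the offset of the candidate point from the plane through the added point along its normal. The function name `is_similar` and both interpretations are assumptions.

```python
import math

THETA_DEG = 15.0      # angle threshold from the text
COL_TH = 250.0        # colour threshold from the text
DIST_FACTOR = 30.0    # distance threshold is 30 * P_Scale in the text

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def is_similar(n_i, n_j, col_i, col_j, p_i, p_j, p_scale):
    """Check the three growth conditions for unit normals n_i and n_j."""
    # Angle between normals; abs() treats flipped normals as the same plane.
    cos_angle = min(1.0, abs(_dot(n_i, n_j)))
    angle_ok = math.degrees(math.acos(cos_angle)) < THETA_DEG
    # Colour deviation as Euclidean distance between HSV triples (assumption).
    color_ok = math.dist(col_i, col_j) < COL_TH
    # Offset of p_j from the plane through p_i with normal n_i (assumption).
    offset = abs(_dot(n_i, [b - a for a, b in zip(p_i, p_j)]))
    dist_ok = offset < DIST_FACTOR * p_scale
    return angle_ok and color_ok and dist_ok

# Hypothetical pairs: one coplanar with matching normals, one perpendicular.
same_plane = is_similar((0, 0, 1), (0, 0, 1), (10, 50, 50), (12, 50, 50),
                        (0, 0, 0), (1, 0, 0), 0.01)
tilted = is_similar((0, 0, 1), (1, 0, 0), (10, 50, 50), (12, 50, 50),
                    (0, 0, 0), (1, 0, 0), 0.01)
```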

Algorithm 1 Region Growing

Input: thAngle: Threshold angle for normal deviation
       pointData: 3D point cloud data
       pcaInfos: PCA information for each point
Output: regions: List of detected regions (clusters)

    thNormal ← cos(thAngle) ▷ Compute normal threshold
    Sort points by curvature λ0 in ascending order
    for each point in sorted list do
        if point is not used then
            Initialize a cluster with the current point
            for each neighboring point do
                Compute normal deviation
                if normal deviation ≥ thNormal then
                    Continue
                end if
                Compute distance and color deviation
                if distance or color deviation ≥ threshold then
                    Continue
                end if
                Add point to the cluster and mark it as used
            end for
        end if
    end for
    return regions ▷ Return the detected regions
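The growth loop of Algorithm 1 can be sketched in runnable form as follows. This is a simplified illustration under stated assumptions: neighbor lists are taken as precomputed input, only the normal test is applied (the distance and color tests are omitted for brevity), and the normal deviation is expressed as a cosine, so a candidate is skipped when the cosine falls below `th_normal`. The function name and the toy data are hypothetical.

```python
import math
from collections import deque

def region_growing(normals, curvatures, neighbors, th_angle_deg=15.0):
    """Group point indices into regions of similar normal direction.

    normals: unit normals per point; curvatures: per-point curvature;
    neighbors: list of neighbour index lists (hypothetical precomputed input).
    """
    th_normal = math.cos(math.radians(th_angle_deg))
    used = [False] * len(normals)
    regions = []
    # Seed from the flattest points first (ascending curvature).
    for seed in sorted(range(len(normals)), key=lambda i: curvatures[i]):
        if used[seed]:
            continue
        used[seed] = True
        cluster, queue = [seed], deque([seed])
        while queue:
            current = queue.popleft()
            for nb in neighbors[current]:
                if used[nb]:
                    continue
                cos_dev = abs(sum(a * b for a, b in zip(normals[current], normals[nb])))
                if cos_dev < th_normal:   # normals differ by more than th_angle
                    continue
                used[nb] = True
                cluster.append(nb)
                queue.append(nb)
        regions.append(cluster)
    return regions

# Hypothetical chain of four points: two on a floor, two on a wall.
normals = [(0, 0, 1), (0, 0, 1), (1, 0, 0), (1, 0, 0)]
curvatures = [0.0, 0.1, 0.0, 0.1]
neighbors = [[1], [0, 2], [1, 3], [2]]
regions = region_growing(normals, curvatures, neighbors)
```

The sketch appends each finished cluster to `regions` explicitly, a bookkeeping step that the pseudocode leaves implicit.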

Strict control of the growth threshold is necessary during the point cloud segmentation process to ensure accuracy. As a result, over-segmentation occurs inevitably. A point cloud clustering strategy is proposed to mitigate the excessive fragmentation of planes, as shown in Algorithm 2. Adjacent point cloud planes with similar colors and normal vectors are merged. The region-growing-based point cloud clustering method employs similar principles and similarity metrics as segmentation but differs in transitioning from point growth to neighboring regions26. An initial seed region is selected, and neighboring regions resembling the seed region are subsequently incorporated, as shown in Fig. 4.

Algorithm 2 Region Clustering

Input: thAngle: Threshold angle for plane deviation
       regions: Input regions
Output: regions: Merged regions

    Step 1: Fit plane for each region using PCA
    for i ∈ 1…regions.size() do
        Extract points for region i
        Apply PCA to extract plane information for region i
        Calculate average scale scaleAvg and update patches[i].scale
    end for
    Step 2: Label each point with its region
    for i ∈ 1…regions.size() do
        Label each point in regions[i] with its region index
    end for
    Step 3: Find adjacent patches
    for i ∈ 1…patches.size() do
        for each neighboring point in patches[i] do
            Check if the neighboring point belongs to an adjacent patch
            if true then
                Add it to the list of adjacent patches for patches[i]
            end if
        end for
    end for
    Step 4: Merge adjacent patches
    for i ∈ 1…patches.size() do
        if patch i is not merged then
            Start a new cluster with patch i
            while not all adjacent patches processed do ▷ visit each adjacent patch
                Check if merge conditions (angle, distance, color) are met
                if conditions are met then
                    Add patch to the cluster
                end if
                if cluster size exceeds thRegionSize then
                    Stop merging
                end if
            end while
        end if
    end for
    return regions

The point cloud clustering strategy mainly involves three steps.

(1) Fitted planes are computed for the segmented point clouds, along with their normal vectors, colors, and distribution scale. A label is then assigned to each point within the respective region to facilitate subsequent indexing.

(2) Based on the point cloud labels, the neighboring regions of each plane region are retrieved (i.e., regions whose adjacent points carry a different label). The suitability of neighboring regions for clustering is then determined based on a predefined similarity criterion. Point cloud regions that meet the clustering requirements are merged and assigned a unified label.

(3) These steps are repeated iteratively until no region in the dataset meets the clustering requirements.
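The three clustering steps can be sketched as an iterative merge over region labels. The sketch below swaps in a union-find structure for the label bookkeeping, which the text does not specify, and takes the similarity criterion as a caller-supplied function. The names `merge_regions` and `can_merge` and the toy adjacency are hypothetical. In a fuller implementation the plane of a merged region would be refitted after each merge, which is why the outer loop repeats until nothing changes.

```python
def merge_regions(num_regions, adjacency, can_merge):
    """Iteratively merge adjacent regions until no pair qualifies.

    adjacency: set of (i, j) index pairs of touching regions;
    can_merge: callable(i, j) -> bool, a stand-in for the similarity test.
    """
    label = list(range(num_regions))

    def find(i):
        while label[i] != i:
            label[i] = label[label[i]]   # path halving
            i = label[i]
        return i

    changed = True
    while changed:                        # repeat until no merge happens
        changed = False
        for i, j in sorted(adjacency):
            ri, rj = find(i), find(j)
            if ri != rj and can_merge(i, j):
                label[max(ri, rj)] = min(ri, rj)
                changed = True
    return [find(i) for i in range(num_regions)]

# Hypothetical case: four regions in a row; regions 1 and 2 are dissimilar.
merged_labels = merge_regions(
    4, {(0, 1), (1, 2), (2, 3)}, lambda i, j: (i, j) != (1, 2)
)
```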

The semantic image is a 2D image containing semantic information, created by projecting the point clouds onto their respective planes. The image consists of RGB color and semantic label layers, as shown in Fig. 5. To generate a semantic image, a projection strategy is used to map clustered point cloud planes onto a 2D image by establishing a correspondence between 3D spatial coordinates and 2D pixels. The projection strategy mainly involves three steps. First, the points that belong to each plane are projected onto the corresponding plane. Second, the grid method is used to transform the 2D coordinates into pixel coordinates. The grid width is set to the maximum value of the point cloud scale within the corresponding plane, as shown in Formula (3).

where \({u}_{i}\) and \({v}_{i}\) are the pixel coordinates, and P (\({x}_{i},\,{\,y}_{i}\)) is the 2D coordinate. P (\({x}_{min},\,{\,y}_{{m}{i}{n}}\)) is the minimum point within the 2D coordinate system. \({S}_{\max }\) is the maximum value of the point cloud scale within the plane.

Finally, the semantic image is created by merging the RGB color and semantic label layers using the semantic labels and RGB color properties of the point cloud. Furthermore, the point cloud, semantic image, and projection matrix are recorded to construct the SILPC model, as shown in Fig. 6.
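The grid mapping and layer construction described above can be sketched as follows. Formula (3) is not reproduced in this text, so the sketch assumes the common form \({u}_{i}=\lfloor ({x}_{i}-{x}_{min})/{S}_{\max }\rfloor\) and \({v}_{i}=\lfloor ({y}_{i}-{y}_{min})/{S}_{\max }\rfloor\). The function names, the sparse dictionary layers, and the label strings are illustrative assumptions.

```python
import math

def to_pixels(points_2d, s_max):
    """Map in-plane 2D coordinates to integer pixel coordinates."""
    x_min = min(x for x, _ in points_2d)
    y_min = min(y for _, y in points_2d)
    return [(math.floor((x - x_min) / s_max), math.floor((y - y_min) / s_max))
            for x, y in points_2d]

def rasterize(points_2d, colors, labels, s_max):
    """Build sparse RGB and semantic-label layers keyed by pixel."""
    rgb_layer, label_layer = {}, {}
    for (u, v), color, label in zip(to_pixels(points_2d, s_max), colors, labels):
        rgb_layer[(u, v)] = color      # last point falling in a cell wins
        label_layer[(u, v)] = label
    return rgb_layer, label_layer

# Hypothetical in-plane points with a grid width of 0.25.
pts = [(2.0, 1.0), (2.25, 1.0), (2.5, 1.5)]
pixels = to_pixels(pts, 0.25)
rgb, lab = rasterize(pts, [(255, 0, 0)] * 3, ["wall", "wall", "window"], 0.25)
```

Keeping the pixel-to-point correspondence alongside the two layers is what later allows 2D line segments to be restored to 3D.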

Facade structure extraction based on point cloud model

An extraction method called SemColorED is introduced to detect and extract facade structures from the SILPC model. SemColorED improves on the ColorED detector24 by adding an expanded input interface that brings semantic information into the decision-making framework of the algorithm. The relevance of the edge pixels extracted by the ColorED detector is determined from the semantic label layer of the image. Only the pixels that lie within the semantic range and meet the predefined threshold are kept, as shown in Fig. 7. The distance threshold for finding the nearest labeled point in the semantic layer to a given target pixel is adjusted dynamically based on \({P}_{{Scale}}\).

The proposed method involves three key steps. (1) Construction of the semantic image index. A kd-tree index is constructed using the semantic label and RGB color layers of the point cloud model. (2) Extraction of the facade structure point cloud. The ColorED detector extracts edge pixels from the RGB color layer. Then, a thorough search is conducted to determine which edge pixels are adjacent to the semantic regions corresponding to building facades. (3) Fitting of structural line segments. The edge pixels are fitted to create facade line segments. The projection matrix is then used to restore the 3D structure from the 2D facade in the model.
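The semantic filtering in step (2) can be sketched as below. The sketch does not reproduce the ColorED detector itself; it takes edge pixels as given and keeps those close to a facade-labeled pixel. A brute-force nearest search stands in for the kd-tree index named in the text, and the function name, label strings, and threshold value are hypothetical.

```python
import math

def filter_edges_by_semantics(edge_pixels, label_layer, facade_labels, max_dist):
    """Keep edge pixels lying within max_dist of a pixel with a facade label."""
    facade_pixels = [px for px, lab in label_layer.items() if lab in facade_labels]
    kept = []
    for e in edge_pixels:
        # Brute-force nearest labelled pixel; the text uses a kd-tree index here.
        nearest = min((math.dist(e, f) for f in facade_pixels), default=math.inf)
        if nearest <= max_dist:
            kept.append(e)
    return kept

# Hypothetical label layer and edge pixels.
label_layer = {(0, 0): "window", (5, 5): "vegetation"}
edges = [(1, 0), (5, 4)]
kept_edges = filter_edges_by_semantics(edges, label_layer, {"window"}, 1.5)
```

In the method described above, `max_dist` would be derived from \({P}_{{Scale}}\) rather than fixed by hand.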

Extraction structure optimization based on morphological feature

To improve the accuracy of extracting facade structures, an optimization method based on the morphological characteristics of building facades is proposed. This method mitigates the adverse effects of scattered and noisy line segments, which are short and inconsistent with the main direction. The optimization process consists of two parts. (1) Scattered line segments are merged and consolidated by considering the vectors and distances between them. (2) Noise line segments that deviate from the prominent structural direction of the building are filtered out based on the primary orientation.

Three scenarios must be assessed to determine whether scattered structural line segments need optimizing, as shown in Fig. 8. (1) The vectors are approximately parallel, the segments meet the length threshold, and the extension of one line segment intersects with another. (2) The line segments intersect, and the vectors are approximately parallel with a suitable distance. (3) The vectors are parallel, and the spaces between the segments meet the length threshold. The approximate parallel angle threshold is set at 10°, and the distance threshold adjusts dynamically based on \({P}_{{Scale}}\).
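A simplified merge test in the spirit of these scenarios is sketched below. It checks only two conditions: the segments are approximately parallel within the 10° threshold, and their closest endpoints are within a gap that scales with \({P}_{{Scale}}\). The multiplier `gap_factor` is a hypothetical value, since the text states only that the distance threshold depends on \({P}_{{Scale}}\), and the sketch assumes segments of nonzero length.

```python
import math

def angle_between(seg_a, seg_b):
    """Unsigned angle in degrees between two 2D segments, in [0, 90]."""
    (ax0, ay0), (ax1, ay1) = seg_a
    (bx0, by0), (bx1, by1) = seg_b
    da, db = (ax1 - ax0, ay1 - ay0), (bx1 - bx0, by1 - by0)
    cos_t = abs(da[0] * db[0] + da[1] * db[1]) / (math.hypot(*da) * math.hypot(*db))
    return math.degrees(math.acos(min(1.0, cos_t)))

def endpoint_gap(seg_a, seg_b):
    """Smallest distance between any endpoint of seg_a and any of seg_b."""
    return min(math.dist(p, q) for p in seg_a for q in seg_b)

def should_merge(seg_a, seg_b, p_scale, angle_th=10.0, gap_factor=5.0):
    # gap_factor is a hypothetical multiplier; the text only says the
    # distance threshold scales with P_Scale.
    return (angle_between(seg_a, seg_b) <= angle_th
            and endpoint_gap(seg_a, seg_b) <= gap_factor * p_scale)

# Hypothetical segments: a and b are nearly collinear, c is perpendicular.
a = ((0.0, 0.0), (1.0, 0.0))
b = ((1.02, 0.0), (2.0, 0.01))
c = ((1.02, 0.0), (1.02, 1.0))
merge_ab = should_merge(a, b, p_scale=0.01)
merge_ac = should_merge(a, c, p_scale=0.01)
```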

Most buildings are constructed with a rectangular structure supplemented by triangular frameworks to ensure structural stability. Therefore, the noise line segment filtering method groups parallel structural vectors into one category. The two categories that contain the largest number of line segments and whose vector directions are mutually perpendicular are assigned as the primary building orientation. The building structure lines are divided into primary orientation structures, non-primary orientation long structures, and non-primary orientation fragmented structures (noisy line segments), as shown in Fig. 9. An optimized filtering of extraction results is accomplished by removing fragmented non-primary orientation structures. The line segment length threshold adjusts dynamically with \({P}_{{Scale}}\).
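One way to sketch this filtering is to bin segment directions, pick the perpendicular pair of bins holding the most segments, and drop short segments outside that pair. The 10° bin width, the function names, and the fixed `min_len` are assumptions made for illustration; in the method above the length threshold would follow \({P}_{{Scale}}\).

```python
import math
from collections import Counter

def orientation_bin(seg, bin_deg=10):
    """Direction of a 2D segment, folded to [0, 180) and snapped to a bin."""
    (x0, y0), (x1, y1) = seg
    angle = math.degrees(math.atan2(y1 - y0, x1 - x0)) % 180.0
    return int(round(angle / bin_deg) * bin_deg) % 180

def filter_noise_segments(segments, min_len, bin_deg=10):
    """Drop short segments that are off the two primary orientations."""
    bins = [orientation_bin(s, bin_deg) for s in segments]
    counts = Counter(bins)
    # Pick the perpendicular pair of bins holding the most segments.
    first = max(counts, key=lambda b: counts[b] + counts.get((b + 90) % 180, 0))
    primary = {first, (first + 90) % 180}
    return [s for s, b in zip(segments, bins)
            if b in primary or math.dist(*s) >= min_len]

# Hypothetical facade: two horizontal, two vertical, and two diagonal segments.
short_diag = ((0.0, 0.0), (0.1, 0.1))
long_diag = ((0.0, 0.0), (3.0, 3.0))
segments = [((0, 0), (2, 0)), ((0, 1), (2, 1)),
            ((0, 0), (0, 2)), ((2, 0), (2, 2)),
            short_diag, long_diag]
filtered = filter_noise_segments(segments, min_len=1.0)
```

The long diagonal survives as a non-primary orientation long structure, while the short diagonal is treated as noise.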

Evaluation metrics

The extraction results of building facade structures can be categorized into two distinct scenarios: accurate extraction and inaccurate extraction. A binary classification is used to represent the extraction results. Positive results are labeled as 1 and negative as 0. Table 1 shows four distinct states based on the truth and extraction values of the final results.

Three metrics, namely, Accuracy, Recall, and Integrities, are used to evaluate the facade extraction results based on the confusion matrix.

(1) Accuracy refers to the ratio of correctly extracted values to the total number of values, comprehensively evaluating the overall extraction correctness for all extracted values.

(2) Recall corresponds to the ratio of correctly extracted values to the total number of true values, a statistical measure to evaluate the effectiveness of building structure extraction.

(3) Integrities is the ratio between the accurately extracted structural line length and the true length, which is a crucial metric for evaluating the completeness of facade structure line extraction.

where \({L}_{{total}}\) is the total length of line segments obtained through the facade extraction method, and \({L}_{{true}}\) is the true length of manually extracted building facade structures.
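The three metrics can be sketched as below. The formulas are not reproduced in this text, so the sketch assumes the standard confusion-matrix definitions for Accuracy and Recall and takes Integrities as \({L}_{{total}}/{L}_{{true}}\). If Accuracy is instead meant as the share of extracted values that are correct, it would be computed as TP / (TP + FP). All counts and lengths in the example are hypothetical.

```python
def accuracy(tp, tn, fp, fn):
    """Share of all decisions that were correct."""
    return (tp + tn) / (tp + tn + fp + fn)

def recall(tp, fn):
    """Share of true structures that were extracted."""
    return tp / (tp + fn)

def integrity(l_total, l_true):
    """Extracted line length relative to the manually extracted true length."""
    return l_total / l_true

# Hypothetical counts and lengths, for illustration only.
acc = accuracy(tp=6, tn=2, fp=1, fn=1)
rec = recall(tp=6, fn=1)
integ = integrity(l_total=9.0, l_true=10.0)
```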

Data set

Three groups of 3D building LPCD are used as test data, as shown in Fig. 10. Groups 1 and 2 were collected with the RIEGL VZ-1000 3D laser scanner at JXUST. Group 1 was collected by setting up scanners on the west side of the CSMECB, and the point cloud was colored using the transformation matrix obtained through the registration process between the scanner and the camera. The CSMECB contains mostly regular line segment facade structures, such as rectangular doors, windows, cylindrical pillars, and awnings. Group 2 was collected by setting up a scanner to the south of the Administration Building, and the point cloud was colored using the transformation matrix calculated from manually selected points. Issues, such as inaccurate color positioning and texture deformation in CSMECB, serve as a practical test to validate the robustness of the proposed method. Group 3, named Stgallen3 and taken from the open-source Semantic3D data set, covers a small building with multiple complex structures of line and curve segments25. Details of the test data are shown in Table 2.

Results

Analysis

This study employs combined data utilizing measured and open-source data to validate the effectiveness and robustness of the proposed method in extracting building facade structures. Three distinct building facade point clouds, namely, the CSMECB, the Administration Building, and the Stgallen3, are meticulously selected to facilitate the extraction of building facade structures. The extraction and optimization results of the test data set are shown in Fig. 11. The windows, pillars, canopies, staircases, and complex wall shapes are all accurately extracted. Complex curved structures, such as the outlines of doors and windows on the Stgallen3 facade, are also extracted accurately by the proposed method. The deformation of windows in the CSMECB can affect extraction results, but the overall framework of extracted structures remains intact without errors.

To quantitatively evaluate the effectiveness of the extracted building facade structures, Accuracy, Recall, and Integrities are used to assess excerpts of the extraction results from the test data set, as shown in Fig. 12. For CSMECB, the Accuracy is 0.87, Recall is 0.91, and Integrities is 0.94. Accuracy is 0.78, Recall is 0.80, and Integrities is 0.92 for the Administration Building. For the Stgallen3, Accuracy is 0.87, Recall is 0.90, and Integrities is 0.91. For the total extraction results, the average Accuracy is 0.85, the average Recall is 0.87, and the average Integrities is 0.92, as shown in Table 3. The proposed method has extracted 87% of building facades with 85% accuracy while achieving 92% completeness of line segments. The facade structures are well extracted using the proposed method according to the extraction and evaluation results.

Evaluation and validation

To comprehensively evaluate the effectiveness and validity of the proposed method, a qualitative comparison was first conducted across four methods for building structure extraction: the Optical image method, Range-image method, Wang method21, and Lu method26, as shown in Fig. 13. Among the four methods, the Lu method demonstrated the most competitive performance. Therefore, it was selected for further quantitative comparison with the proposed method.

To further validate the effectiveness and robustness of the proposed method, a comparative experiment is conducted using the “Lu method.” The extraction results of the Lu method and the proposed method on three test sets are shown in Figs. 14, 15, and 16. For the proposed method, linear and curvilinear structures of building facades are extracted with utmost completeness and accuracy, as shown in the green area of Fig. 16. The extracted structural line segments are continuous, robust, and resistant to interference from non-structural lines, as shown in the red area of Figs. 14, 15, 16. The Lu method does not accurately distinguish between wall textures and structural lines, resulting in incomplete door and window structure extractions.

To evaluate the extraction results of the two methods quantitatively, three metrics, namely, Accuracy, Recall, and Integrities, are used to assess the local extraction structures, as presented in Fig. 17 and Table 4. The proposed method achieves Accuracy of 0.87, 0.78, and 0.87 on the three datasets, whereas the Lu method obtains 0.85, 0.23, and 0.32. The Lu method produces numerous erroneous structural lines because of its reliance on texture information; on the Administration Building and Stgallen3 datasets, its Accuracy drops to 0.23 and 0.32, respectively. The proposed method achieves Recall of 0.91, 0.80, and 0.90, whereas the Lu method achieves 0.85, 0.42, and 0.87. The minimum Recall of the Lu method is only 0.42 because of the difficulty of handling texture deformations in the Administration Building dataset. The proposed method achieves Integrities of 0.94, 0.90, and 0.92, whereas the Lu method achieves only 0.78, 0.15, and 0.71. This gap stems from an inherent shortcoming of the Lu method, in which the extracted line segments are trivial. Therefore, the proposed method outperforms the Lu method and enables accurate, efficient, and comprehensive extraction of building facade structures.

a The Ground truth of CSMECB; b The proposed method of CSMECB; c The Lu method of CSMECB; d The Ground truth of Administration Building; e The proposed method of Administration Building; f The Lu method of Administration Building; g The Ground truth of Stgallen3; h The proposed method of Stgallen3; i The Lu method of Stgallen3.

Discussion

The generation of semantic images relies on a pivotal parameter, the grid width, which determines the quality of the generated semantic images; the accuracy of the extracted facade structures is therefore sensitive to it. To analyze the influence of the grid width on the accuracy of structure extraction, four sets of extraction results, each using a different grid width, are shown in Fig. 18.

Figure 18 demonstrates that larger grid widths mean rougher extracted structures. By contrast, smaller grid widths mean finer extracted structures. The grid width determines the resolution of semantic images. If the width is too small, then empty grid cells will lead to discontinuities in the extracted structures. Therefore, the empirical value of the grid width is set to the max value of the \({P}_{{Scale}}\) collection in this study.

Some limitations compromising the accuracy and effectiveness of the extraction results exist within the proposed method. Terrain occlusion inevitably occurs during the building point cloud scanning process, thereby resulting in point holes in the scan results. Therefore, finding a suitable method to fit and repair holes in point clouds can solve missed detections and false alarms in building 3D LPCD.

An image-based laser point cloud building facade structure extraction method that considers semantic information is proposed in this study to achieve accurate and comprehensive facade extraction results. The proposed method makes three main contributions. First, the building 3D LPCD is segmented and clustered using region-growing strategies. The semantic images and SILPC model are generated based on the clustered point cloud. Second, the SemColorED method is proposed for extracting edge structures from the semantic image based on the SILPC model. The extracted 2D line segments are transformed into 3D with the projection matrix. Third, a facade structure line segment optimization method is proposed based on morphological features, which enhances the extraction process. With the proposed method, an accurate and complete building facade structure can be extracted for refined modeling.

An extraction case study is conducted using three groups of test data to verify the accuracy and effectiveness of the proposed method. In future work, efforts will be made to address the point cloud holes resulting from terrain occlusion by refining the extracted building structure line segments. Additionally, further investigation into viewpoint selection based on visibility is planned. The application of this method to urban reconstruction will also be explored.

Data availability

The data covering the core buildings of Jiangxi University of Science and Technology that support the findings of this study are available from the corresponding author upon reasonable request. The open-source data can be found at https://www.semantic3d.net/.

Code availability

The code that supports the findings of this study is available from the corresponding author upon reasonable request.

References

Zuo, Z. & Li, Y. A framework for reconstructing building parametric models with hierarchical relationships from point clouds. Int. J. Appl. Earth Obs. 119, 103327 (2023).

Zhao, Q., Zhou, L. & Lv, G. A 3D modeling method for buildings based on LiDAR point cloud and DLG. Comput. Environ. Urban 102, 101974 (2023).

Luo, R., Zhou, Z., Chu, X., Ma, W. & Meng, J. 3D deformation monitoring method for temporary structures based on multi-thread LiDAR point cloud. Measurement 200, 111545 (2022).

Yuan, Y. et al. Three dimensional deformation measurement method based on image guided point cloud registration. Opt. Laser Eng. 161, 107399 (2023).

Haghighatgou, N., Daniel, S. & Badard, T. A method for automatic identification of openings in buildings facades based on mobile LiDAR point clouds for assessing impacts of floodings. Int. J. Appl. Earth Observ. Geoinf. 108, 102757 (2022).

Lu, Y. et al. A deep learning method for building façade parsing utilizing improved SOLOv2 instance segmentation. Energ. Build. 295, 113275 (2023).

Su, Y. et al. DLA-Net: Learning dual local attention features for semantic segmentation of large-scale building facade point clouds. Pattern Recognit. 123, 108372 (2022).

Gao, X. et al. Ancient Chinese architecture 3D preservation by merging ground and aerial point clouds. ISPRS J. Photogramm. 143, 72–84 (2018).

Yin, Y. & Antonio, J. Application of 3D laser scanning technology for image data processing in the protection of ancient building sites through deep learning. Image Vis. Comput. 102, 103969 (2020).

Feng, H. et al. Automated extraction of building instances from dual-channel airborne LiDAR point clouds. Int. J. Appl. Earth Obs. 114, 103042 (2022).

Hamid-Lakzaeian, F. Structural-based point cloud segmentation of highly ornate building façades for computational modelling. Autom. Constr. 108, 102892 (2019).

Maru, M. B., Wang, Y., Kim, H., Yoon, H. & Park, S. Improved building facade segmentation through digital twin-enabled RandLA-Net with empirical intensity correction model. J. Build. Eng. 107520 (2023).

Zolanvari, S. M. I., Laefer, D. F. & Natanzi, A. S. Three-dimensional building façade segmentation and opening area detection from point clouds. ISPRS J. Photogramm. Remote Sens. 143, 134–149 (2018).

Dong, X., Xiao, Y., Chen, Z., Yao, J. & Guo, X. GPU-based supervoxel segmentation for 3D point clouds. Comput. Aided Geometr. Des. 93, 102080 (2022).

Poux, F., Mattes, C., Selman, Z. & Kobbelt, L. Automatic region-growing system for the segmentation of large point clouds. Autom. Constr. 138, 104250 (2022).

Khaloo, A. & Lattanzi, D. Robust normal estimation and region growing segmentation of infrastructure 3D point cloud models. Adv. Eng. Inform. 34, 1–16 (2017).

Kim, M., Lee, D., Kim, T., Oh, S. & Cho, H. Automated extraction of geometric primitives with solid lines from unstructured point clouds for creating digital buildings models. Autom. Constr. 145, 104642 (2023).

Wang, Y. et al. Laser point cloud registration method based on iterative closest point improved by Gaussian mixture model considering corner features. Int. J. Remote Sens. 43, 932–960 (2022).

Wang, Y., Hu, X., Zhou, T., Ma, Y. & Li, Z. Efficient building facade structure extraction method using image-based laser point cloud. Trans. GIS 27, 1145–1163 (2023).

Xie, Y., Tian, J. & Zhu, X. X. A co-learning method to utilize optical images and photogrammetric point clouds for building extraction. Int. J. Appl. Earth Obs. Geoinf. 116, 103165 (2023).

Wang, Y., Ma, Y., Zhu, A., Zhao, H. & Liao, L. Accurate facade feature extraction method for buildings from three-dimensional point cloud data considering structural information. ISPRS J. Photogramm. Remote Sens. 139, 146–153 (2018).

Zhang, H. X. & Zou, Z. Quality assurance for building components through point cloud segmentation leveraging synthetic data. Autom. Constr. 155, 105045 (2023).

Hu, X., Wang, Y., Zhou, T. & Mwakapesa, D. S. Building facade structure extraction method based on three-dimensional laser point cloud by considering semantic information. Ann. GIS 30, 291–305 (2024).

Akinlar, C. & Topal, C. ColorED: Color edge and segment detection by Edge Drawing (ED). J. Vis. Commun. Image Represent. 44, 82–94 (2017).

Hackel, T. et al. Semantic3D.net: a new large-scale point cloud classification benchmark. ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci. IV-1/W1 (2017).

Lu, X., Liu, Y. & Li, K. Fast 3D line segment detection from unorganized point cloud. Preprint at http://arxiv.org/abs/1901.02532 (2019).

Acknowledgements

This study was partly supported by the National Natural Science Foundation of China (No. 42471472) and the Youth Jinggang Scholars Program in Jiangxi Province (No. QNJG2020046). The authors also thank Jacqueline Wah for checking the spelling and grammar of this paper.

Author information

Contributions

X.H. designed the study, undertook the experimental work and data collection, and wrote the draft manuscript. Y.W. contributed to the study design and to drafting the manuscript. W.Y. contributed to the design of the experiments and worked on both the draft and the final manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hu, X., Wang, Y. & Yu, W. Image-based laser point cloud building facade structure extraction method by considering semantic information. npj Herit. Sci. 13, 160 (2025). https://doi.org/10.1038/s40494-025-01728-5

DOI: https://doi.org/10.1038/s40494-025-01728-5