Abstract

Single-pixel imaging (SPI) faces significant challenges in reconstructing high-quality images under complex real-world degradation conditions. This paper presents a degradation model for the physical processes in SPI, providing the first comprehensive and quantitative analysis of the noise sources encountered in real-world SPI applications. In particular, methods for modeling pattern-dependent global noise propagation and object jitter in SPI are proposed. A deep-blind neural network is then developed that compensates for real-world image degradation without requiring the parameters of the individual degradation factors, while significantly improving the resolution and fidelity of SPI reconstruction. Training of the network is guided by the proposed degradation model, which describes real-world SPI impairments and enables the network to generalize across a wide range of degradation combinations. Experiments validate its performance in real-world SPI at ultra-low sampling rates. The proposed method holds great potential for applications in remote sensing, biomedical imaging, and privacy-preserving surveillance.

Introduction

Single-pixel imaging (SPI) is a cost-effective imaging technology that uses a single-pixel detector and specific light-field modulation patterns to capture spatial information and reconstruct images1,2,3. The technique is especially useful where pixel array detectors are impractical because of high cost or fabrication challenges, particularly in certain non-visible wavebands4. SPI also offers significant advantages under low-light conditions, where pixel array detectors struggle to perform well5. It has been applied in various fields, including image compressive sensing6,7, remote sensing8, three-dimensional imaging9, privacy protection10, moving object trajectory tracking11,12, and multispectral imaging13,14.

The role of a degradation model in image recovery is of paramount importance15. Creating a comprehensive model that closely mimics the actual circumstances can lead to remarkable improvements in image quality16. However, the degradation model for SPI is markedly different from that of a conventional pixel array camera. Compared with conventional imaging, SPI includes distinct operations, such as coded illumination modulation, intensity integration across the entire scene and computational image reconstruction. The detailed comparison of the degradation processes between the conventional passive imaging and SPI is provided in Supplementary Section 1 and Fig. S1.

Several studies have addressed SPI degradation modeling. In 2017, Lyu et al.17 proposed a neural network that maps noisy computational ghost imaging (CGI) reconstructions to high-quality images; however, this method does not generalize to different degradation types. In 2021, Hu et al.18 developed a generative adversarial network (GAN) to enhance Fourier SPI degraded by frequency truncation at low sampling ratios, which reduces the ringing artefacts in the reconstructed images.

In many real-world scenarios, the imaged objects are not perfectly static. Because SPI acquires its measurements sequentially, even minor temporal fluctuations, such as object motion, platform jitter, or illumination instability, can cause significant inconsistencies across measurements. These inconsistencies introduce complex, global artefacts in the reconstructed image, highlighting the need for a degradation model that faithfully captures the temporal sensitivity of SPI systems. In 2020, Huang et al.19 investigated temporal fluctuations in SPI caused by object or platform trembling and proposed treating the movement as a sequence of intermediate static states. However, this approach requires extensive computation.

Scattering-like degradations, such as haze, fog, optical defocus, and mild underwater turbidity, are ubiquitous in real-world environments and can severely reduce the effectiveness of SPI applications. Le et al.20 conducted underwater SPI to examine the robustness of CGI reconstruction under scattering and attenuation. Huang et al.21 extended their analysis to wind conditions by modeling the optical wavefront with airflow-induced phase perturbations.

Although some degradation factors in SPI have been studied, the pattern-dependent global noise propagation process and object jitter modeling remain to be further investigated. More importantly, most existing degradation compensation methods compensate for only one degradation factor and require accurate degradation parameters. A comprehensive study and a feasible solution for complex real-world degradations are needed in the SPI field.

This study addresses the challenge of robust reconstruction in SPI under complex and unknown degradations by proposing a pair of physically grounded and generalizable degradation modeling and compensation approaches. A comprehensive physical degradation model and two analytic approaches are developed, including: (1) a pattern-dependent global noise propagation mechanism, which describes how the local measurement noise in SPI propagates globally in the reconstructed image; and (2) a boundary-aware multiplicative noise model, which simulates jitter-induced signal instability in SPI imaging. This physical model enables the learning of a deep-blind reconstruction network that performs robustly under real-world SPI degradations and low sampling rates. The proposed approach is compatible with various structured illumination schemes, including Hadamard and Fourier-based SPI22,23. It is applicable to diverse domains such as remote sensing, biomedical imaging, and privacy-preserving surveillance.

Results

Structured degradation modeling and blind compensation architecture

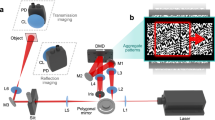

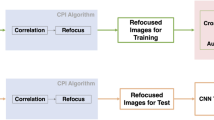

As depicted in Fig. 1, an analytic and comprehensive degradation model is developed to train a deep-blind degradation compensation neural network that can compensate for different types of degradations at the same time. The object is illuminated by a series of Hadamard patterns, and the corresponding reflected intensities are captured by a single-pixel detector. Then, the object image is reconstructed by correlating the known illumination patterns with the measured intensities through CGI24. Details of the CGI algorithm are shown in Supplementary Section 2. After low-resolution image reconstruction via the CGI method, the proposed neural network, named Blind Super-Resolution Single-Pixel Imaging (BSRSPI), compensates for complex real-world degradations and improves the image resolution.
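As a rough illustration of the correlation step, the sketch below reconstructs an image from simulated single-pixel measurements using the standard second-order correlation \(\langle {D}_{m}{P}_{m}(x)\rangle -\langle {D}_{m}\rangle \langle {P}_{m}(x)\rangle\). It assumes a Sylvester-ordered Hadamard basis mapped to binary {0, 1} patterns and a noise-free detector. The function names `hadamard_patterns` and `cgi_reconstruct` are illustrative and are not taken from the paper, whose exact CGI algorithm is given in Supplementary Section 2.

```python
import numpy as np

def hadamard_patterns(side):
    """Return side*side binary {0, 1} Hadamard patterns of shape (side, side).

    Uses the Sylvester construction, so side*side must be a power of two.
    """
    n = side * side
    h = np.array([[1.0]])
    while h.shape[0] < n:
        h = np.block([[h, h], [h, -h]])
    if h.shape[0] != n:
        raise ValueError("side*side must be a power of two")
    # Map the +1/-1 entries to 1/0 so each row is a physical on/off pattern.
    return ((h + 1.0) / 2.0).reshape(n, side, side)

def cgi_reconstruct(patterns, measurements):
    """Second-order correlation <D P(x)> - <D><P(x)>, averaged over patterns."""
    m = patterns.shape[0]
    weighted = np.tensordot(measurements, patterns, axes=(0, 0)) / m
    return weighted - measurements.mean() * patterns.mean(axis=0)

rng = np.random.default_rng(0)
scene = rng.random((8, 8))
patterns = hadamard_patterns(8)
# Noise-free bucket signal: total reflected intensity under each pattern.
measurements = np.tensordot(patterns, scene, axes=([1, 2], [0, 1]))
recon = cgi_reconstruct(patterns, measurements)
```

With the full binary basis, `recon` equals the scene scaled by 1/4 at every pixel except the first, which the mean subtraction sets to zero. When only a small fraction of the patterns is measured, the same correlation yields a coarse estimate that a subsequent network has to refine.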

Fig. 1: A comprehensive degradation model is studied for a variety of real-world image impairments. The reconstruction process begins with computational ghost imaging (CGI) applied to single-pixel measurements, yielding a coarse, low-resolution image. A neural network trained on the physical degradation models then restores and generates high-resolution images.

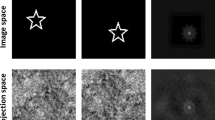

The degradation process of SPI is illustrated in Fig. 2a. The illumination patterns from the projector undergo scattering and non-ideal focus, which introduces blur during the illumination stage. The modulated light pattern is projected onto the object, where spatial downsampling occurs due to the limited resolution of the patterns. Mechanical jitter between the object and the projection system during acquisition introduces relative misalignment, leading to multiplicative fluctuations in the measurement. After modulation, the reflected light may experience additional degradation along the detection path due to scattering imperfections, resulting in further blur. Finally, photon shot noise and electronic noise affect the detection process. Owing to the quantum nature of light, the photon shot noise is modeled as a Poisson-distributed random variable.

Fig. 2: a Schematic illustration of the single-pixel imaging system with key degradation sources. b Schematic visualization of the boundaries of the binary illumination patterns. The purple boxes mark the regions sensitive to spatial jitter during data acquisition. c Normalized boundary length of 16,384 Hadamard patterns, defined as the total length of bright–dark transition edges in each binary pattern. Patterns with longer illuminated boundaries are more susceptible to spatial jitter during acquisition, as reflected in the corresponding variance of the multiplicative Gaussian noise model.

The forward measurement of SPI under illumination pattern \({P}_{m}\left(x\right)\) is modeled as:

Here:

- \(x\): Location of each pixel;
- \({GT}\left(x\right)\): Ground-truth reflectance or transmittance from the scene;
- \({{\rm{\varepsilon }}}_{\mathrm{illum},m}\sim {\mathcal{N}}(1,{\sigma }_{\text{illum}}^{2})\): Multiplicative Gaussian noise due to illumination fluctuations;
- \({{\mathcal{B}}}_{1}\left(\cdot \right)\): Blur kernel of illumination-path degradations (e.g., scattering, defocus);
- \({\downarrow }_{s}\left(\cdot \right)\): Downsampling of factor \(s\);
- \({{\mathcal{B}}}_{2}\left(\cdot \right)\): Blur kernel of detection-path degradations (primarily scattering);
- \({P}_{m}\left(x\right)\): mth illumination pattern;
- \({D}_{m}\): Measurement result of the mth pattern;
- \({\delta }_{m}\sim {\mathcal{N}}\left(0,{\sigma }_{\text{jitter},m}^{2}\right)\): Multiplicative Gaussian noise due to object or pattern jitter for the mth pattern;
- \({\varepsilon }_{\mathrm{add},m}\sim {\mathcal{N}}\left(0,{\sigma }_{{\rm{P}},m}^{2}+{\sigma }_{\mathrm{AG}}^{2}\right)\): Combined additive Gaussian noise, where \({\sigma }_{{\rm{P}},m}^{2}\) approximates the variance of Poisson-distributed photon noise for the mth measurement. When the number of detected photons is sufficiently large, Poisson noise can be approximated by a signal-dependent Gaussian distribution with variance proportional to the signal intensity. The second term \({\sigma }_{{\rm{AG}}}^{2}\) represents the variance of signal-independent additive Gaussian noise.

The detailed analysis of each degradation factor is listed in the following sections.
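To make the roles of the noise terms concrete, the following sketch simulates noisy bucket signals from a scene and a stack of patterns. It is one plausible composition under stated assumptions rather than the authors' implementation: the scene is taken to be already blurred and downsampled to the pattern grid, the jitter term enters as \(\left(1+{\delta }_{m}\right)\), the photon-noise variance is taken as \({\omega }^{2}\) times the signal, and the name `simulate_measurements` together with all parameter values is hypothetical.

```python
import numpy as np

def simulate_measurements(scene, patterns, sigma_illum, sigma_jitter,
                          sigma_ag, omega, rng):
    """Simulate noisy bucket signals D_m for a stack of patterns.

    scene:        (H, W) reflectance, assumed already blurred and downsampled
    patterns:     (M, H, W) illumination patterns
    sigma_jitter: scalar or (M,) per-pattern jitter standard deviation
    """
    m = patterns.shape[0]
    # Source flicker: one multiplicative factor per pattern, with mean 1.
    eps_illum = rng.normal(1.0, sigma_illum, size=m)
    clean = np.tensordot(patterns, scene, axes=([1, 2], [0, 1]))
    signal = eps_illum * clean
    # Jitter: zero-mean multiplicative perturbation of the integrated signal.
    delta = rng.normal(0.0, sigma_jitter, size=m)
    signal = signal * (1.0 + delta)
    # Photon noise in the Gaussian limit: variance proportional to the signal.
    var_photon = omega**2 * np.clip(signal, 0.0, None)
    eps_add = rng.normal(0.0, np.sqrt(var_photon + sigma_ag**2))
    return signal + eps_add

rng = np.random.default_rng(1)
scene = rng.random((4, 4))
patterns = (rng.random((16, 4, 4)) > 0.5).astype(float)
noiseless = simulate_measurements(scene, patterns, 0.0, 0.0, 0.0, 0.0, rng)
noisy = simulate_measurements(scene, patterns, 0.01, 0.02, 0.05, 0.1, rng)
```

Setting every noise level to zero recovers the ideal inner product between each pattern and the scene, which gives a simple sanity check before noise is switched on.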

Noise

Because SPI is an active imaging procedure, its noise sources are more diverse than those of conventional passive imaging, and the degradation types and the order in which they occur also differ. Another key difference lies in how errors propagate. In conventional imaging, additive noise typically affects each pixel within a small local region. In SPI, the single-pixel detector integrates the light collected from the entire scene, so noise in any single photodetector readout can spread across the entire image after reconstruction. This section elaborates on the noise factors in SPI, including multiplicative Gaussian noise, additive Gaussian noise, and Poisson noise.

Multiplicative Gaussian noise (MGN) \({N}_{{\rm{MG}}}\)

During SPI acquisition, random jitter of the object or the illumination platform can degrade the quality of image reconstruction19. As this degradation is directly proportional to the illumination intensity, it is modeled as MGN:

\[{D}_{m}={S}_{m}\left(1+{\delta }_{m}\right)\]

Here, \({S}_{m}\) represents the original signal for the mth pattern, \({D}_{m}\) denotes the resulting signal for that pattern, and \({\delta }_{m}\) is the MGN. This noise is characterized as a Gaussian random process with zero mean and standard deviation \({\sigma }_{\text{jitter},m}\). Its probability density function (PDF) is given by:

\[p\left({\delta }_{m}\right)=\frac{1}{\sqrt{2\pi }{\sigma }_{\text{jitter},m}}\exp \left(-\frac{{\delta }_{m}^{2}}{2{\sigma }_{\text{jitter},m}^{2}}\right)\]

As Fig. 2b shows, the jitter noise arises primarily at the illuminated boundaries of the projection patterns: the longer these boundaries are, the more pronounced the noise becomes. To describe this relationship quantitatively, the noise variance \({\sigma }_{\text{jitter},m}^{2}\) is defined to be proportional to the boundary length of the mth illumination pattern:

\[{\sigma }_{\text{jitter},m}^{2}=\alpha {B}_{m}\]

where \({B}_{m}\) denotes the normalized boundary length of the mth pattern and \(\alpha\) is a global scaling coefficient. In this study, the illuminated boundary lengths are taken from the 128 × 128 cake-cutting Hadamard illumination patterns25 employed in the experiments. The results are depicted in Fig. 2c, where the x-axis indicates the order of the projection patterns and the y-axis indicates the length of the illuminated boundaries.
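The boundary-length measure can be sketched as a count of bright-dark transitions between neighboring pixels. The example below is a minimal illustration, assuming 4-connected neighbors and normalization by the largest boundary length in the pattern set; the paper does not spell out its normalization here, and the helper names and the value of `alpha` are hypothetical.

```python
import numpy as np

def boundary_length(pattern):
    """Count bright-dark transitions between horizontally and vertically adjacent pixels."""
    p = np.asarray(pattern).astype(bool)
    horizontal = np.count_nonzero(p[:, 1:] != p[:, :-1])
    vertical = np.count_nonzero(p[1:, :] != p[:-1, :])
    return horizontal + vertical

def jitter_variance(patterns, alpha):
    """Return alpha * B_m, with B_m normalized by the largest boundary length in the set."""
    lengths = np.array([boundary_length(p) for p in patterns], dtype=float)
    peak = lengths.max()
    normalized = lengths / peak if peak > 0 else lengths
    return alpha * normalized

uniform = np.ones((2, 2))
stripes = np.array([[0, 1], [0, 1]])
checker = np.array([[0, 1], [1, 0]])
variances = jitter_variance([uniform, stripes, checker], alpha=0.04)
```

In this toy set the uniform pattern has no boundary and therefore no jitter variance, while the checkerboard has the longest boundary and receives the full `alpha`.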

In addition to the jitter-induced multiplicative Gaussian noise discussed above, the light source itself may introduce fluctuations in illumination intensity due to electrical instability or temporal flicker. These fluctuations manifest as multiplicative noise applied before projection and are independent of the object or pattern structure. This is modeled as a scalar multiplicative perturbation \({\varepsilon }_{\mathrm{illum},m}\sim {\mathcal{N}}\left(1,{{\sigma }_{\mathrm{illum}}}^{2}\right)\), where \({{\sigma }_{{\rm{illum}}}}^{2}\) reflects the fluctuation level of the illumination source. This noise acts globally across the entire field of view, modifying the projected pattern intensity \({P}_{m}\left(x\right)\) as:

\[{P}_{m}\left(x\right)\to {\varepsilon }_{\mathrm{illum},m}{P}_{m}\left(x\right)\]

Additive Gaussian noise (AGN) \({N}_{{\rm{AG}}}\)

In SPI systems, additive Gaussian white noise can arise at various stages of the signal acquisition pipeline. Major sources include readout noise from the photodetector and analog front-end circuitry (e.g., charge-to-voltage conversion, amplifier noise, analog-to-digital converter quantization), noise due to ambient light interference or background leakage, and thermal noise and electronic fluctuations in the power and amplification circuits. The distribution of this noise can be expressed as

\[{N}_{{\rm{AG}}}\sim {\mathcal{N}}\left(0,{\sigma }_{{\rm{AG}}}^{2}\right)\]

Here, \({N}_{{\rm{AG}}}\) denotes the AGN, while \({\sigma }_{{\rm{AG}}}\) represents its standard deviation26.

Poisson noise (PN) \({N}_{{\rm{P}}}\)

In single-pixel imaging, photon detection is governed by the quantum nature of light: photon arrivals are stochastic events that follow a Poisson distribution. When the number of photons is sufficiently large, the photon statistical noise can be approximated as additive Gaussian noise \(\omega {\sigma }_{m}{\varepsilon }_{m}\):

\[{D}_{m}=\sum _{x}{P}_{m}\left(x\right){GT}\left(x\right)+\omega {\sigma }_{m}{\varepsilon }_{m}\]

In this model, \({D}_{m}\) is the measured signal corresponding to the mth illumination pattern, \(x\) is the location of each pixel, \({P}_{m}\left(x\right)\) denotes the intensity distribution of the mth pattern, and \({GT}\left(x\right)\) is the ground-truth reflectance or transmittance from the scene. The noise term is \(\omega {\sigma }_{m}{\varepsilon }_{m}\), where \({\varepsilon }_{m}\sim {\mathcal{N}}\left(\mathrm{0,1}\right)\) denotes standard Gaussian white noise. \({\sigma }_{m}\) denotes the baseline standard deviation of the photon noise, which is proportional to the square root of the signal intensity, in accordance with Poisson statistics. The scalar \(\omega\) represents the overall noise level or system-specific noise scaling factor17,27.

The variance of the noise term is given by:

\[{\sigma }_{{\rm{P}},m}^{2}=\mathrm{Var}\left(\omega {\sigma }_{m}{\varepsilon }_{m}\right)={\omega }^{2}{\sigma }_{m}^{2}\]

In this formulation, the Poisson-distributed photon noise is approximated by a Gaussian distribution with a signal-dependent variance. This approximation is valid in the high-photon regime, where the Poisson distribution converges toward a Gaussian according to the central limit theorem. Despite being signal-dependent, the additive form of this noise allows it to be jointly modeled with signal-independent Gaussian noise in a unified formulation. The combined variance of these additive Gaussian components is given by:

\[{\sigma }_{\mathrm{add},m}^{2}={\sigma }_{{\rm{P}},m}^{2}+{\sigma }_{\mathrm{AG}}^{2}\]

where \({\sigma }_{{\rm{P}},m}^{2}\) denotes the photon noise variance of the mth measurement, and \({\sigma }_{\text{AG}}^{2}\) represents the variance of the signal-independent additive Gaussian noise.
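A short numerical check can illustrate both the Gaussian approximation and the additivity of the variances. The sketch below assumes a hypothetical mean photon count of 1000, a hypothetical signal-independent noise level of 5 counts, and \(\omega =1\); none of these values comes from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
mean_photons = 1000.0   # hypothetical expected photon count for one measurement
sigma_ag = 5.0          # hypothetical signal-independent noise level
n_trials = 200_000

# Exact photon-counting statistics.
poisson_counts = rng.poisson(mean_photons, size=n_trials)

# Gaussian surrogate: same mean, standard deviation sqrt(mean).
gaussian_counts = mean_photons + np.sqrt(mean_photons) * rng.standard_normal(n_trials)

# Adding independent Gaussian noise: the two variances should add.
total = gaussian_counts + sigma_ag * rng.standard_normal(n_trials)

print("Poisson variance: ", poisson_counts.var())
print("Gaussian variance:", gaussian_counts.var())
print("Combined variance:", total.var(), "expected about", mean_photons + sigma_ag**2)
```

Both sample variances land close to the mean photon count, and the combined variance is close to the sum of the photon and signal-independent terms, which is what allows the two sources to be merged into a single additive Gaussian term.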

Downsampling

In SPI, each measurement intrinsically corresponds to the spatial integration of light reflected or transmitted from the scene and modulated by the projected pattern. Therefore, to maintain physical consistency with the data acquisition process, downsampling is modeled as a uniform averaging operation over the high-resolution (HR) image.

Let \({I}_{{\rm{HR}}}\) denote a high-resolution image of size M × M, and \({I}_{{\rm{LR}}}\) the corresponding low-resolution image of size N × N, where M = kN and k is an integer downsampling factor. Under this model, each pixel in the low-resolution image is computed as the average of a k × k block in the HR image:

\[{I}_{{\rm{LR}}}\left(i,j\right)=\frac{1}{{k}^{2}}\sum _{a=ki}^{ki+k-1}\sum _{b=kj}^{kj+k-1}{I}_{{\rm{HR}}}\left(a,b\right)\]

for \(i,j\) = 0,1,…,N − 1. Here, \(i,j\) represent the row and column indices of pixels in the low-resolution image \({I}_{{\rm{LR}}}\), and \(a,b\) denote the row and column indices of pixels in the high-resolution image \({I}_{{\rm{HR}}}\). This formulation ensures that the simulated downsampling process faithfully reflects the spatial integration nature of SPI measurements.
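The block-averaging model maps directly onto an array reshape. The following is a minimal sketch; the function name `block_average` is illustrative and not taken from the paper.

```python
import numpy as np

def block_average(hr, k):
    """Downsample a 2D image by averaging non-overlapping k x k blocks."""
    rows, cols = hr.shape
    if rows % k or cols % k:
        raise ValueError("image size must be divisible by k")
    # Split each axis into (block index, offset within block), then average the offsets.
    return hr.reshape(rows // k, k, cols // k, k).mean(axis=(1, 3))

hr = np.arange(16, dtype=float).reshape(4, 4)
lr = block_average(hr, 2)
# lr is [[2.5, 4.5], [10.5, 12.5]]
```

Because every HR pixel contributes with equal weight, the mean intensity of the image is preserved, which matches the integrating behavior of the detector.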

Blur

In prior studies, SPI blur is often modeled by an isotropic Gaussian blur kernel28, which effectively approximates uniform defocus and symmetric scattering effects—such as those induced by thin mist layers or ground-glass diffusers. However, in practical optical systems, various factors—such as mild lens misalignment, directional defocus, and the anisotropic distribution or geometry of scattering media—can introduce direction-dependent blurring29. As such, it is necessary to incorporate anisotropic blur kernels into the degradation model.

In this study, two distinct Gaussian blurring processes are incorporated. The first employs an isotropic Gaussian kernel, denoted as \({B}_{{\rm{iso}}}\), which assumes uniform blurring in all directions. The second uses an anisotropic Gaussian kernel, denoted as \({B}_{{\rm{aniso}}}\)30. The anisotropic kernel accounts for direction-dependent blurring and thus provides a more detailed, and potentially more accurate, representation of real-world optical degradations than isotropic modeling. Their formulations are as follows:

\[{B}_{{\rm{iso}}}\left(x,y\right)=\frac{1}{2\pi {\sigma }_{{\rm{iso}}}^{2}}\exp \left(-\frac{{x}^{2}+{y}^{2}}{2{\sigma }_{{\rm{iso}}}^{2}}\right)\]

\[{B}_{{\rm{aniso}}}\left(x,y\right)=\frac{1}{2\pi {\sigma }_{x}{\sigma }_{y}}\exp \left(-\frac{{x}^{2}}{2{\sigma }_{x}^{2}}-\frac{{y}^{2}}{2{\sigma }_{y}^{2}}\right)\]

For the isotropic Gaussian kernel, \({\sigma }_{{\rm{iso}}}\) represents the standard deviation. In the case of the anisotropic Gaussian kernel, \({\sigma }_{x}\) and \({\sigma }_{y}\) denote the standard deviations in the x and y directions, respectively. The variables x and y indicate the horizontal and vertical distances from the center of the kernel.
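A discrete version of both kernels can be generated with one helper, since the isotropic case is the anisotropic one with equal standard deviations. The sketch below assumes axis-aligned kernels without rotation and normalizes each kernel so that its samples sum to one, a common discrete convention that may differ from the analytic prefactor; the kernel size and the sigma values are hypothetical.

```python
import numpy as np

def gaussian_kernel(size, sigma_x, sigma_y=None):
    """Return a size x size Gaussian kernel; isotropic when sigma_y is omitted."""
    if sigma_y is None:
        sigma_y = sigma_x
    half = (size - 1) / 2.0
    coords = np.arange(size) - half
    # x varies along columns and y along rows (default 'xy' indexing).
    x, y = np.meshgrid(coords, coords)
    kernel = np.exp(-(x**2 / (2 * sigma_x**2) + y**2 / (2 * sigma_y**2)))
    # Normalize so that blurring preserves the total intensity.
    return kernel / kernel.sum()

b_iso = gaussian_kernel(7, 1.5)
b_aniso = gaussian_kernel(7, 2.5, 0.8)
```

With a larger standard deviation along x, the anisotropic kernel spreads further horizontally than vertically, which is the direction-dependent behavior that the isotropic kernel cannot represent.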

Network structure

To address the challenges of signal-dependent global degradation in SPI, a GAN-based super-resolution architecture is constructed, comprising a generator and a multi-scale discriminator. The generator employs a densely connected residual backbone and progressive upsampling to recover high-resolution images from CGI inputs (LR image). The discriminator has hierarchical downsampling and skip-connected upsampling to achieve both global consistency and local texture fidelity.

The network is trained under a composite loss function that combines a pixel-wise image loss, an adversarial loss, and a perceptual loss. While the pixel-wise L1 loss enforces pixel-level fidelity, it often leads to over-smoothed results under blind or severe degradations. To overcome this problem, a perceptual loss computed in the feature space of a Visual Geometry Group (VGG) network31 is introduced to encourage visually realistic textures and high-frequency details. The adversarial loss further regularizes the output distribution toward natural image statistics. More details of the network are provided in Supplementary Section 3 and Fig. S2.

Simulation results

To evaluate the robustness of the proposed degradation compensation framework under complex, unfavorable conditions, simulation experiments are conducted on representative degradations commonly found in SPI: (1) blur, using isotropic and anisotropic Gaussian kernels with varying standard deviations to simulate different degrees of defocus and scattering; (2) noise, encompassing both additive and multiplicative types, further categorized into pattern-dependent noise (e.g., signal-dependent Poisson noise and jitter-induced multiplicative noise) and pattern-independent noise (e.g., sensor readout noise and illumination fluctuations), with the variance of each source drawn from a predefined interval; and (3) sampling ratios, randomly selected within the range of 5.0% to 6.25% to simulate ultra-low acquisition conditions. This structured stochastic degradation strategy exposes the network to a broad distribution of degradation conditions during training, thereby enhancing its generalization to blind and real-world SPI scenarios. The details of data generation are provided in Supplementary Section 4. The definitions of degradation levels are provided in Supplementary Section 5.
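The stochastic degradation strategy can be pictured as drawing one random configuration per training sample. In the sketch below, only the sampling-ratio range (5.0% to 6.25%) and the 128 × 128 pattern resolution come from the text; every other interval is a placeholder chosen for illustration, since the actual ranges are defined in the Supplementary Sections, and the function name `sample_degradation` is hypothetical.

```python
import numpy as np

N_PIXELS = 128 * 128  # 16,384 Hadamard patterns at full sampling

def sample_degradation(rng):
    """Draw one random degradation configuration.

    All ranges except the sampling ratio are placeholders, not the paper's values.
    """
    ratio = rng.uniform(0.05, 0.0625)
    return {
        "sampling_ratio": ratio,
        "n_patterns": int(round(ratio * N_PIXELS)),
        "anisotropic_blur": bool(rng.random() < 0.5),
        "blur_sigma": rng.uniform(0.5, 3.0),     # placeholder interval
        "sigma_illum": rng.uniform(0.0, 0.05),   # placeholder interval
        "alpha_jitter": rng.uniform(0.0, 0.05),  # placeholder interval
        "sigma_ag": rng.uniform(0.0, 0.05),      # placeholder interval
        "omega": rng.uniform(0.0, 0.1),          # placeholder interval
    }

rng = np.random.default_rng(42)
configs = [sample_degradation(rng) for _ in range(100)]
```

For a 128 × 128 image, the stated sampling range corresponds to between 819 and 1024 of the 16,384 patterns.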

The proposed method is compared against several state-of-the-art approaches that incorporate recent deep learning technologies, including diffusion models, dual-branch CNNs, and adversarial frameworks. Specifically, DDPMGI (Denoising Diffusion Probabilistic Model Ghost Imaging)32, PCM-DRGI (Photon Contribution Model-based Degradation-Guided Ghost Imaging)33, and GAN-SRSPI (GAN-based Super-Resolution Single-Pixel Imaging)34 are selected as representative baselines. A combination of DIV2K (ref. 35; 900 images) and Flickr2K (ref. 36; 2650 images), two datasets widely used for training single-image super-resolution models, is used for the comparison, giving 3550 diverse high-resolution images in total. To avoid overfitting and ensure reliable evaluation, 10% of the images (355) are reserved as a held-out test set. The synthesized measurements are generated using the proposed physically grounded SPI degradation model.

A comparison of the SPI results under the comprehensive degradations described above is presented in Fig. 3. More details of the degradation composition and the SPI results under each individual degradation factor are provided in Supplementary Section 5 (Figs. S3–S6). The reconstruction quality is evaluated using four metrics: peak signal-to-noise ratio (PSNR), Structural Similarity Index Measure (SSIM), Multi-Scale Structural Similarity Index Measure (MS-SSIM), and Learned Perceptual Image Patch Similarity (LPIPS). PSNR quantifies pixel-wise fidelity, while SSIM and MS-SSIM assess structural similarity at local and multi-scale levels, respectively. LPIPS evaluates semantic-level similarity by computing differences between features extracted by a convolutional neural network pretrained on a large-scale image dataset (AlexNet on ImageNet)37. Lower LPIPS values indicate better perceptual similarity. Together, these metrics reflect both the numerical accuracy and the semantic consistency of the reconstructed images. In the simulation results, the proposed method consistently outperforms the comparative methods across all the evaluation metrics and degradation levels. Notably, the performance gap becomes more pronounced under severe degradation conditions, highlighting the method's robustness and generalization capability.
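Of the four metrics, PSNR has the simplest closed form, \(10{\log }_{10}\left({\mathrm{MAX}}^{2}/\mathrm{MSE}\right)\). The sketch below is a minimal implementation assuming images scaled to the range [0, 1]; the function name and the `data_range` parameter are illustrative, and the paper's own evaluation code is not shown in the text.

```python
import numpy as np

def psnr(reference, estimate, data_range=1.0):
    """Peak signal-to-noise ratio in dB for images with the given dynamic range."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range**2 / mse))

reference = np.zeros((4, 4))
estimate = np.full((4, 4), 0.1)
value = psnr(reference, estimate)
```

A uniform error of 0.1 on a unit-range image gives a mean squared error of 0.01 and hence a PSNR of 20 dB. Since PSNR depends only on pixel-wise error, it is typically reported alongside structural and perceptual metrics such as SSIM and LPIPS.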

Composite degradations are applied to images from the Set5 (ref. 38) and Set14 (ref. 39) datasets to simulate complex real-world conditions. An image from the Set5 dataset is used to visualize representative reconstruction results, as shown in Fig. 4a. The model is also tested on the Tsinghua University logo, with the result shown in Fig. 4b. Additionally, to verify the model's generalization capability, several images from the biomedical field are tested: a mitochondrion in a rod cell of a mouse retina40, a neuron in the molecular layer of a mouse cerebellum41, and the immunological synapse between a human cytotoxic T lymphocyte and a target cell42. The cerebellar neuron result is shown in Fig. 4c, with two additional examples provided in Supplementary Section 6, Fig. S8. These biomedical images are not part of the training dataset. In this domain-shift test, the proposed method consistently achieves the best reconstruction results across all metrics and test samples. This generalization performance is attributed to the incorporation of the physical degradation model of single-pixel imaging, which enables the network to learn degradation-robust representations.

Fig. 4: The comparison includes PCM-DRGI33, DDPMGI32, and GAN-SRSPI34. Three representative cases are shown: a Set14 (ref. 39) No.009, b Tsinghua University logo, c Neuron in the molecular layer of a mouse cerebellum41. Their corresponding composite degradation settings and sampling rates are: a level 2 degradation, 5% sampling; b level 2 degradation, 6% sampling; c level 2 degradation, 6.25% sampling. BSRSPI achieves the best perceptual quality across all conditions.

Experimental results

The performance of different reconstruction methods, including PCM-DRGI33, DDPMGI32, and GAN-SRSPI34, is evaluated on real-world degraded scenes in Fig. 5. All the tested samples are experimental single-pixel images collected under realistic conditions involving mist, jitter, and sensor noise. Reconstruction results are compared using LPIPS, a full-reference metric that measures image similarity in the deep feature space of a pretrained network. As shown in Fig. 5, the proposed method consistently achieves the lowest LPIPS scores across all samples, demonstrating superior semantic-level reconstruction quality under complex real-world degradations.

As shown in Fig. 5, the color image reconstruction performance of PCM-DRGI33, DDPMGI32, GAN-SRSPI34, and the proposed BSRSPI is also compared on simulated color SPI data. The color measurements are generated using Hadamard-Bayer illumination patterns, which spatially encode the RGB channels within a single pattern to enable full-color acquisition43. To ensure fairness and consistency with the experimental encoding scheme, all compared neural networks are fine-tuned under the corresponding physical model. Quantitative evaluation based on the LPIPS metric confirms the advantage of the proposed BSRSPI, which consistently obtains the lowest LPIPS values, indicating greater perceptual consistency with the ground truth in terms of high-level image features. Additional experimental results are provided in Supplementary Section 6 (Fig. S9). The framework also supports varying levels of prior knowledge. In our experiment, the network operates in a fully blind setting with no knowledge of the degradation type. If the parameters of the degradation sources are known, the network can also be fine-tuned for better image reconstruction quality.

Discussion

This work proposes a comprehensive degradation model for SPI. The model systematically integrates multiple physical degradation factors, such as downsampling, jitter, scattering, and readout noise. Two SPI degradation mechanisms are proposed: a pattern-dependent global noise propagation model that describes the non-ideal intensity variations in structured illumination, and a jitter-aware multiplicative noise model that accounts for the relative motion between the object and the projection system. Based on this structured degradation formulation, a deep-blind reconstruction network is trained to compensate for complex degradation combinations without requiring prior knowledge of degradation parameters. Experimental validation on both simulated and real-world data demonstrates that the proposed method consistently outperforms existing approaches across a wide range of degradation levels, confirming its robustness and effectiveness in practical SPI scenarios.

The proposed method serves as a general-purpose framework for modeling complex, compound degradations in real-world imaging systems. Its modular design allows degradation components to be inserted or adjusted based on application-specific requirements. For example, photobleaching or nonlinear scattering effects can be incorporated into the degradation model to compensate for artifacts that are difficult to parameterize and often lack aligned high-resolution ground truth. Beyond biomedical imaging, the method is adaptable to tasks such as remote sensing, surveillance, or industrial inspection, where unknown degradations commonly arise. By learning from a wide variety of simulated degradation patterns, the model maintains strong generalization capacity during deployment, even without large-scale retraining. These attributes highlight the framework’s practical value across diverse imaging scenarios.

Materials and methods

Experimental setup and real-world test

The experimental setup for verifying the reconstructed image quality of SPI is illustrated in Fig. 2a. A photograph of the actual experimental setup is provided in Supplementary Section 6, Fig. S7. The projector (JMGO G7) projects Hadamard basis illumination patterns, arranged in cake-cutting order25, onto the printed target object. Simultaneously, the single-pixel detector (Thorlabs DET100A2) records the intensity of the reflected light. The analog output from the single-pixel detector is digitized using a digital oscilloscope (Rigol DS7014) and transmitted to a computer for image reconstruction. In the experiments, three types of real-world degradation are introduced: noise, blur, and jitter. Noise is added to the collected single-pixel intensity signals to simulate sensor and photon-related fluctuations. Blur is induced by placing an ultrasonic humidifier (Jisulife HU18) between the optical system and the object to create a thin, scattering mist layer. Recent studies44,45,46 often use ultrasonic humidifiers to generate micrometer-scale water droplets that mimic the optical degradation caused by fog, haze, or thin condensation layers. Jitter is introduced by manually perturbing the object position during acquisition to simulate mechanical instability or object movement in real-world scenarios. Moreover, recent advances in computational imaging highlight that deep learning provides powerful tools to model and compensate for such degradations47.

Network training details

All training and inference procedures are implemented in PyTorch 2.4.1 (cu121) with CUDA 12.1 and executed on a Windows 10 workstation equipped with an NVIDIA RTX 3090 GPU. The proposed model is trained for 100,000 iterations. Our average inference time per 128 × 128 image is ~60 milliseconds. For the proposed model, the hyperparameters of the loss function are as follows: \({\alpha }_{{\rm{img}}}=1,{{\alpha }}_{{\rm{per}}}=0.1,{{\alpha }}_{{\rm{adv}}}=0.1\). These values are selected based on an ablation study discussed in Supplementary Section 7.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

1. Edgar, M. P., Gibson, G. M. & Padgett, M. J. Principles and prospects for single-pixel imaging. Nat. Photonics 13, 13–20 (2019).

2. Liu, Z. H. et al. Adaptive super-resolution networks for single-pixel imaging at ultra-low sampling rates. IEEE Access 12, 78496–78504 (2024).

3. Zhang, Y. F. et al. Single-pixel imaging robust to arbitrary translational motion. Opt. Lett. 49, 6892–6895 (2024).

4. Shrekenhamer, D., Watts, C. M. & Padilla, W. J. Terahertz single pixel imaging with an optically controlled dynamic spatial light modulator. Opt. Express 21, 12507–12518 (2013).

5. Morris, P. A. et al. Imaging with a small number of photons. Nat. Commun. 6, 5913 (2015).

6. Wang, P. & Yuan, X. SAUNet: spatial-attention unfolding network for image compressive sensing. In Proceedings of the 31st ACM International Conference on Multimedia. Ottawa, ON, Canada: Association for Computing Machinery, 5099–5108 (2023).

7. Wang, P. et al. Proximal algorithm unrolling: flexible and efficient reconstruction networks for single-pixel imaging. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Nashville, TN, USA: IEEE, 411–421 (2025).

8. Ma, S. et al. Ghost imaging LiDAR via sparsity constraints using push-broom scanning. Opt. Express 27, 13219–13228 (2019).

9. Yang, X. et al. Phase-coded modulation 3D ghost imaging. Optik 220, 165184 (2020).

10. Tsoy, A. et al. Image-free single-pixel keypoint detection for privacy preserving human pose estimation. Opt. Lett. 49, 546–549 (2024).

11. Zhang, H. et al. Ultra-efficient single-pixel tracking and imaging of moving objects based on geometric moment. In Proceedings of SPIE 12617, 9th Symposium on Novel Photoelectronic Detection Technology and Applications. Hefei, China: SPIE, 126177N (2022).

12. Zhang, H. et al. Prior-free 3D tracking of a fast-moving object at 6667 frames per second with single-pixel detectors. Opt. Lett. 49, 3628–3631 (2024).

13. Wu, J. R. et al. Experimental results of the balloon-borne spectral camera based on ghost imaging via sparsity constraints. IEEE Access 6, 68740–68748 (2018).

14. Zhang, Y. et al. Physics and data-driven alternative optimization enabled ultra-low-sampling single-pixel imaging. Adv. Photonics Nexus 4, 036005 (2025).

15. Qu, G., Wang, P. & Yuan, X. Dual-scale transformer for large-scale single-pixel imaging. In Proceedings of 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Seattle, WA, USA: IEEE, 25327–25337 (2024).

16. Zhang, K. et al. Designing a practical degradation model for deep blind image super-resolution. In Proceedings of 2021 IEEE/CVF International Conference on Computer Vision. Montreal, QC, Canada: IEEE, 4771–4780 (2021).

17. Lyu, M. et al. Deep-learning-based ghost imaging. Sci. Rep. 7, 17865 (2017).

18. Hu, Y. D. et al. Optimizing the quality of Fourier single-pixel imaging via generative adversarial network. Optik 227, 166060 (2021).

19. Huang, X. W. et al. Ghost imaging for detecting trembling with random temporal changing. Opt. Lett. 45, 1354–1357 (2020).

20. Le, M. N. et al. Underwater computational ghost imaging. Opt. Express 25, 22859–22868 (2017).

21. Huang, X. W. et al. Ghost imaging influenced by a supersonic wind-induced random environment. Opt. Lett. 46, 1009–1012 (2021).

Zhang, Z. B., Ma, X. & Zhong, J. G. Single-pixel imaging by means of Fourier spectrum acquisition. Nat. Commun. 6, 6225 (2015).

Zhang, Z. B. et al. Hadamard single-pixel imaging versus Fourier single-pixel imaging. Opt. Express 25, 19619–19639 (2017).

Bromberg, Y., Katz, O. & Silberberg, Y. Ghost imaging with a single detector. Phys. Rev. A 79, 053840 (2009).

Yu, W. K. Super sub-Nyquist single-pixel imaging by means of cake-cutting Hadamard basis sort. Sensors 19, 4122 (2019).

Bian, L. H. et al. Experimental comparison of single-pixel imaging algorithms. J. Opt. Soc. Am. A 35, 78–87 (2018).

Katkovnik, V. & Astola, J. Compressive sensing computational ghost imaging. J. Opt. Soc. Am. A 29, 1556–1567 (2012).

Gao, Z. Q. et al. Computational ghost imaging in scattering media using simulation-based deep learning. IEEE Photonics J. 12, 6803115 (2020).

Zhang, K. H. et al. Deep image deblurring: a survey. Int. J. Comput. Vis. 130, 2103–2130 (2022).

Bell-Kligler, S., Shocher, A. & Irani, M. Blind super-resolution kernel estimation using an internal-GAN. In Proceedings of the 33rd International Conference on Neural Information Processing Systems. Vancouver, BC, Canada: Curran Associates Inc., 26 (2019).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 3rd International Conference on Learning Representations. San Diego, CA, USA: ICLR, 1–14 (2015).

Mao, S. et al. High-quality and high-diversity conditionally generative ghost imaging based on denoising diffusion probabilistic model. Opt. Express 31, 25104–25116 (2023).

Gao, Z. Q. et al. Extendible ghost imaging with high reconstruction quality in strong scattering medium. Opt. Express 30, 45759–45775 (2022).

Liu, Z. H. et al. GAN-SRSPI: super-resolution single-pixel imaging using generative adversarial networks. In Proceedings of SPIE 12617, 9th Symposium on Novel Photoelectronic Detection Technology and Applications. Hefei, China: SPIE, 2022,

Agustsson, E. & Timofte, R. NTIRE 2017 challenge on single image super-resolution: dataset and study. In Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, HI, USA: IEEE, 1122–1131 (2017).

Lim, B. et al. Enhanced deep residual networks for single image super-resolution. In Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops. Honolulu, HI, USA: IEEE, 1132–1140 (2017).

Deng, J. et al. ImageNet: a large-scale hierarchical image database. In Proceedings of 2009 IEEE Conference on Computer Vision and Pattern Recognition. Miami, FL, USA: IEEE, 248–255 (2009).

Bevilacqua, M. et al. Low-complexity single-image super-resolution based on nonnegative neighbor embedding. In Proceedings of the British Machine Vision Conference 2012. Surrey, UK: BMVA Press, 135.1–135.10 (2012).

Zeyde, R., Elad, M. & Protter, M. On single image scale-up using sparse-representations. In Proceedings of the 7th International Conference on Curves and Surfaces. Avignon, France: Springer, 711–730 (2010).

Fox, D. & Perkins, G. CCDB:54, mus musculus, mitochondrion, photoreceptor/cone. Cell Centered Database (CCDB). https://doi.org/10.7295/W9CCDB54 (2001).

Ellisman, M., Sosinsky, G. & Jones, Y. CCDB:3649, rat, neuropil. Cell Centered Database (CCDB). https://doi.org/10.7295/W9CCDB3649 (2004).

Fuller, S. et al. CCDB:3632, Homo sapiens, CTL immunological synapse, cytotoxic T lymphocyte. Cell Centered Database (CCDB). https://doi.org/10.7295/W9CCDB3632 (2004).

Ye, Z. Y. et al. Simultaneous full-color single-pixel imaging and visible watermarking using Hadamard-Bayer illumination patterns. Opt. Lasers Eng. 127, 105955 (2020).

Chen, B. C. et al. Thermowave: a new paradigm of wireless passive temperature monitoring via mmwave sensing. In Proceedings of the 26th Annual International Conference on Mobile Computing and Networking. London, UK: Association for Computing Machinery, 27 (2020).

Mondal, I. et al. Cost-effective smart window: transparency modulation via surface contact angle controlled mist formation. ACS Appl. Mater. Interfaces 15, 3613–3620 (2023).

Heifetz, A. et al. Millimeter-wave scattering from neutral and charged water droplets. J. Quant. Spectrosc. Radiat. Transf. 111, 2550–2557 (2010).

Luo, X. Y. et al. Revolutionizing optical imaging: computational imaging via deep learning. Photonics Insights 4, R03 (2025).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (62305184); the Science, Technology and Innovation Commission of Shenzhen Municipality (JCYJ20241202123919027, WDZC20220818100259004); and the Basic and Applied Basic Research Foundation of Guangdong Province (2023A1515012932).

Author information

Contributions

Z.L. and Z.G. contributed equally to this work. Z.L. and Z.G. conceived the research, developed the model, and conducted the simulations. Z.L., B.Y., Y.Z., M.K.C., F.L., and Z.G. contributed to experimental implementation, data analysis, and theoretical comparisons. M.K.C., F.L., J.S., X.Y., and Z.G. supervised the research and provided technical support. Z.G. guided the conceptual development and provided technical direction throughout the project, and contributed substantially to manuscript preparation and coordination. All the authors have accepted responsibility for the entire content of this submitted manuscript and approved the submission. All authors analyzed the results and contributed to preparing the manuscript and discussions.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, Z., Yang, B., Zhang, Y. et al. Comprehensive compensation of real-world degradations for robust single-pixel imaging. Light Sci Appl 14, 365 (2025). https://doi.org/10.1038/s41377-025-02021-7

DOI: https://doi.org/10.1038/s41377-025-02021-7