Abstract

Major depressive disorder (MDD) often goes undiagnosed due to the absence of clear biomarkers. We sought to identify voice biomarkers for MDD and separate biomarkers indicative of MDD predisposition from biomarkers reflecting current depressive symptoms. Using a two-stage meta-analytic design to remove confounds, we tested the association between features representing vocal pitch and MDD in a multisite case-control cohort study of Chinese women with recurrent depression. Sixteen features were replicated in an independent cohort, with absolute association coefficients (beta values) from the combined analysis ranging from 0.24 to 1.07, indicating moderate to large effects. The statistical significance of these associations remained robust, with P values ranging from 7.2 × 10–6 to 6.8 × 10–58. Eleven features were significantly associated with current depressive symptoms. Using genotype data, we found that this association was driven in part by a genetic correlation with MDD. Significant voice features, reflecting a slower pitch change and a lower pitch, achieved an AUC-ROC of 0.90 (sensitivity of 0.85 and specificity of 0.81) in MDD classification. Our results return vocal features to a more central position in clinical and research work on MDD.

Similar content being viewed by others

Introduction

Changes in human pitch and tone of speech have been noted as an important sign of depression for over a century [1, 2]. Although not contained in symptomatic criteria for major depressive disorder (MDD) in DSM-III [3], DSM-IIIR [4], DSM-IV [5], or DSM-5 [6], they are found in 26 out of 28 detailed clinical descriptions of melancholia published from 1880-1900 [1] and in 19 out of 21 of such descriptions of depression published in the 20th century [2]. Given the current challenges in diagnosing MDD [2, 7, 8], where a large proportion of cases (ranging from 50 to 90%) remain untreated [9,10,11], the transformation of voice phenomena into diagnostic biomarkers could aid in both clinical and research arenas.

Clinical observations describe the speech patterns of depressed patients as slow, weak, low-pitched, and monotonous [1, 2, 12, 13]. These phenomena are typically quantified by increased pause time, lower volume, lower pitch, and reduced pitch variability [14,15,16]. Many studies have sought to develop features from pitch as a biomarker for depression [17,18,19,20,21,22,23,24,25,26], but none have, to date, achieved sufficient accuracy and precision for clinical utility. The large number of both vocal features and confounds [24, 25] imposes a multi-testing burden that requires large sample sizes which few studies have obtained [16]. Also, a critical distinction between current mood and susceptibility to MDD on effects on voice has never been addressed [27,28,29]. Furthermore, MDD is likely heterogeneous [30, 31]: studies not accounting for this may be underpowered [16].

Our study of the relationship between voice features and MDD was designed to be well-powered, using thousands of subjects, to be more robust to heterogeneity by analyzing cases with recurrent depression of one sex only, and able to separate susceptibility to MDD from the effect of current mood on voice features by using genetic data. We used a large case-control study of MDD where we could replicate findings in an independent sample, both from China. Since, of the four groups of speech features (source, spectral, prosodic, and formant features [16]), the association between depression and a key component of prosody, pitch, has repeatedly been observed [15, 16], our analysis was restricted to examining pitch-related features. Our results return vocal features to a more central position in clinical and research work on MDD.

Results

Subjects

We used recordings conducted as part of the CONVERGE [32] (China, Oxford, and VCU Experimental Research on Genetic Epidemiology) study (3968 cases and 4354 controls). CONVERGE recruited only women with recurrent MDD from hospital settings and compared them with matched controls with no history of MDD, thus reducing heterogeneity in both genetic and vocal signals [33, 34]. A summary of demographic data from cases and controls is provided in Table S1; the relation of these to depression is reported in earlier publications [31, 35,36,37,38,39,40]. All recordings, obtained during diagnostic interviews, were listened to, and segments that contained only the patient’s voice at an adequate quality for the analyses (see the Method section in Supplementary for details) were identified. In this study, a “segment” refers to the longest continuous portion of an audio recording that contains only the patient’s voice, uninterrupted by other speakers. Segments are not split at pauses within the patient’s speech but are instead defined by changes in the speaker, ensuring that each segment represents uninterrupted speech from the patient alone. It can be as short as a single word or as long as a complex sentence or multiple sentences. This resulted in 364,929 voice segments with a duration greater than two seconds from 7654 subjects. The selection of subjects for each component of the study is shown in Fig. S1, which provides an overview of the design of the project (Fig. S1a), and a PRISMA diagram to indicate how many cases and controls were discarded at different stages and pathways of analyses (Fig. S1b).

Feature identification

The perceptual attribute of pitch corresponds to the physical measurement of fundamental frequency, or F0 [15]. Our interest in prosodic features of speech, particularly pitch (hereafter referred to as the F0) and change in pitch (ΔF0), led us to choose the INTERSPEECH 2016 Computational Paralinguistics Evaluation (COMPARE16) feature set [41, 42]. The primary application for the feature set has been depression detection [24, 43,44,45,46,47] and it captures typical temporal information and long-term information either statically, through the use of utterance level statistics/functionals, or dynamically, through frame-based delta (ΔF0) coefficients, reflecting differences between the adjacent vectors’ feature coefficients [48, 49]. Its feature extraction process is well documented [50], with standardized and well-referenced methodologies that facilitate reproducibility and validation by other researchers in many languages (including Chinese) [51,52,53,54].

The COMPARE16 feature set contains 83 F0/ΔF0-based features, many of which are highly correlated (Fig. S2). Implementing a feature selection process to remove redundancy (described in Supplementary Methods), we extracted 30 voice features (Table S2, their distributions are in Fig. S3 and Fig. S4), providing a comprehensive characterization of the speaker’s prosodic patterns, pitch, and intonation [41, 42] We calculated statistics and functions based on the time series of F0, and its differential values, namely pitch change speed (ΔF0). These statistics and functions include mean values, quartiles, range, and regression coefficients, which capture pitch trends and dynamics in speech.

A two-stage meta-analysis identifies 20 features associated with MDD

Our association analysis had to account for a number of potential confounds. Not everyone in the study spoke the same language: 60% of the subjects spoke in standard Mandarin, whereas the rest spoke either local languages or Mandarin with local accents. This might not matter if language differences were randomized with respect to case status, but uneven case/control ratios between hospitals could confound the analysis. Similarly, the quality of the recordings varied, potentially confounding the association testing. While we made every attempt to ensure that the location of interviews was comparable (in outpatient departments) and that the interviews were carried out in the same way by clinically experienced interviewers that we had trained (Supplementary Methods), these and other, unknown confounders might impact the voice features.

We dealt with these issues as follows. First, during the process of identifying the patients’ voice segments, we annotated background noise and language. The noise was categorized into five levels, and a binary indicator tagged whether the subjects’ speech was in standard Mandarin or not. We included these features as covariates in our analyses.

Second, to account for differences between hospitals, we implemented a two-stage meta-analysis, in which associations were first calculated at the hospital level, including demographic features as covariates (Table S1), noise levels, and speech indicators, and subsequently pooling results using a random-effects model. We selected 27 hospitals with at least 100 individuals, yielding a total subject count of N = 5681 (Fig. S1). By analyzing cases and controls within each hospital first and then combining the results in a meta-analysis, we alleviated the risk of site-specific confounders. We identified 20 features significantly associated with MDD at a 5% FDR threshold (Table 1; a description of the vocal features is given in Table S2). All features were standardized (to give a standard deviation of 1) before analysis so that beta coefficients can be compared and interpreted. Eighteen features were significant under a family-wise error rate control using Bonferroni (P value < 0.0017), with 14 features showing absolute β coefficients greater than 0.3. The most significantly associated feature is a ΔF0 measure (interquartile range; β = –1.07, SE = 0.07, PFDR=1.1 × 10–49).

To test the sensitivity of our results to differences between cases and controls, we compared analyses with and without the inclusion of 20 genetic principal components (PCs). The results of this analysis are presented in Table S3, and a comparison of the betas with and without adjusting for genetic PCs is presented in Fig. S5. The correlation between betas in the two analyses was r = 0.99, P = 8.7 × 10–28. These results demonstrate a high degree of consistency in the estimated association effects, irrespective of the adjustment for genetic PCs. There is no overall decrease in significance with the inclusion of the genetic covariates, implying that cases and controls are overall adequately matched by location, which implicitly also means matching by accent.

Replication in an independent sample

We evaluated the 20 associated features in an independent sample. The replication sample was collected six years after the discovery CONVERGE data, using the same selection criteria and interview protocol (described in Supplementary Methods). The replication sample collected data from three new hospitals, and one that was part of the CONVERGE sample. It used none of the same interviewers and the participants did not overlap with the discovery sample. A description of the sample is given in Table S4. While the replication sample is smaller than the discovery sample (1084, Fig. S1), power to detect twelve of the features was greater than 80% (Table 1). Again, we listened to all recordings, annotated them for quality and accent, extracted prosodic features, analyzed the association within each hospital, and combined results by meta-analysis. As Table 1 shows, 14 features exceeded a Bonferroni corrected threshold of 0.0025 (0.05/20) and 16 exceeded an FDR 5% threshold. We argue that this strong replication finding in an independent sample, excludes systematic bias in the way recordings were made, the way interviews were conducted, and differences in accent among subjects and differences between hospitals.

Finally, we jointly analyzed the discovery and replication samples by meta-analysis and show the results in Table 1. Eighteen features exceeded the Bonferroni corrected threshold and all exceeded the 5% FDR threshold. The three most significantly associated features were ΔF0 interquartile range \(({{{\rm{\beta }}}}=-1.07,{{{\rm{SE}}}}=0.07,{{{{\rm{P}}}}}_{{{{\rm{FDR}}}}}=6.8\times {10}^{-58})\), which measures the range between the 25th and 75th percentile of pitch change speed (ΔF0), ΔF0 maximum \(({{{\rm{\beta }}}}=-0.97,{{{\rm{SE}}}}=0.07,{{{{\rm{P}}}}}_{{{{\rm{FDR}}}}}=1.8\times {10}^{-48})\), which measures the highest value of pitch change speed, and time with F0>90th percentile \(({{{\rm{\beta }}}}=-0.80,{{{\rm{SE}}}}=0.06,{{{{\rm{P}}}}}_{{{{\rm{FDR}}}}}=1.3\times {10}^{-44})\), which measures the amount of time the pitch stays above the 90th percentile of its range. We found that there was no significant heterogeneity in the association effects between Mandarin speakers and non-Mandarin speakers (Supplementary Results).

Differences between case and control interviews do not account for the associations

We considered next one additional potential confound: the possible impact of the questions asked at interview. The interview for cases is typically more than twice as long as for controls, as we ask about past occurrences of depression and associated stressful life events. Could the emotion associated with this questioning alter speech in such a way as bias our findings? We conducted a sensitivity analysis on responses to neutral questions to check if the effects remained consistent across contexts.

We selected two questions from the demographic section of the interview based on their high response rates (Table S5) and neutral nature. These questions (D2.A: “What is your date of birth?” and D10: “How much do you weigh while wearing indoor clothing?”) were chosen because they are unlikely to trigger emotional differences between MDD cases and controls. We identified 533 subjects with voice responses to question D2.A and 617 to question D10. The average segment durations were 3.37 s (SD = 3.05), and 8.47 s (SD = 2.69), respectively. For each question, we used the corresponding segments to extract the 16 pitch features that were associated with MDD in our main analysis. Using the two-stage meta-analysis method again, we re-estimated their associations. Due to the small sample sizes, power to detect effects was low so we used a one-sided binomial sign test to test consistency in the direction of association effects between the two analyses.

The estimated association effects in context-constrained analysis are reported in Table S6. We found that for question D10, three out of 16 pitch features maintained significant associations with MDD at FDR < 0.05. Remarkably, 15 out of 16 features showed the same direction of association effects, a fraction significantly higher than chance (Binomial P = 0.00026). For D2.A, despite the average duration being only 3.37 s, three features achieved nominal significance for associations (uncorrected P < 0.05), and 12 out of 16 pitch features showed consistent directions of association effects (Binomial P = 0.038). In total, 12 out of 16 voice features showed consistent directions of association effects across all four analyses. We conclude that the findings from the main analyses are not biased by the context of the interview.

As a summary for these analyses, Fig. S6 shows the effect sizes (beta coefficients) and the 95% confidence intervals for the association between 16 voice F0/ΔF0 features and MDD in the discovery (CONVERGE), replication and single segment analyses.

Genetic correlations between pitch features and MDD

Cases for the CONVERGE study were identified as those who have a history of recurrent MDD, and though all were ascertained through hospitals, many were in remission. This raises the important question as to what the association with voice features represents: does it reflect their current low mood, compared to controls, or does it reflect their history of MDD? We addressed this question in the following way.

To see if any voice features correlated with current mood, we used a standard assessment of current mood for subjects, the depressive symptom checklist (SCL) [55]. These data were only available for the replication sample. The distributions of SCL scores for cases and controls are presented in Fig. S7. Of the 16 pitch features, 11 showed a significant association with current depressive symptoms, after FDR correction (Table 2).

These results confirm that most of the features we found to be associated with MDD are correlated with current mood (the relatively smaller sample for this analysis cannot exclude the possibility that all features are thus associated). To examine whether the association reflected a genetic effect common to both variability in vocal features and susceptibility to MDD we estimated the SNP-based heritability for each of the 16 pitch features (this analysis was carried out with CONVERGE data, the only group for which there are genetic data [32]). Results are presented in Table 3. Four features were heritable at FDR < 0.05. We repeated the heritability analyses adjusting for more genetic PCs to determine whether population structure might contribute to the correlation and found that the heritability remained significant even after adjusting for as many as 60 genetic PCs (Table S7). While we cannot rule out the possibility that all vocal features are to some extent heritable (our sample size is too small to confidently detect heritabilities of less than 10%), Table 3 shows that SNP-based heritability varies significantly: the estimated confidence interval of the heritability for two, ΔF0_maxPos and ΔF0_qregc1, lie outside those for ΔF0_iqr1-3. The low heritability of these features indicates the genetic effects are unlikely to be the only contributing factor to the association with MDD. We did not find any genome-wide significant SNPs for these heritable features (Supplementary Results), presumably owing to the limited sample size.

We estimated the genetic correlation with MDD for the four features with evidence of heritability and found that three ΔF0 features had significant genetic correlations (Table 3). They were: (1) the interquartile range (IQR1-3), quantifying the variation of speed in pitch change; (2) the kurtosis, signaling the extremity of speed in pitch change; and (3) the maximum, representing the speed of the fastest pitch change. There was no detectable genetic correlation with MDD for one heritable feature, ΔF0_kurtosis. Again, while we cannot exclude the possibility of some degree of genetic correlation for this and other features, our results indicate that genetic effects alone cannot explain the association with MDD for all features. The vocal features index a composite of heritable and non-heritable contributions to mood change.

Associations between pitch features and MDD symptoms, risk factors, and comorbidities

The deep set of phenotypes available in CONVERGE, which includes MDD symptoms, environmental risk factors, comorbid disease, and suicidality, permit us to explore other associations for the vocal features associated with MDD. For these exploratory analyses we included all 30 voice features and tested association with 33 traits (detailed in Table S8). The results of within-case two-stage meta-analysis are shown in Table S8. We categorized the traits into six classes (MDD symptoms, MDD clinical features, suicidal features, co-morbid psychiatric disease, neuroticism and stressful life events) as we were interested in determining the effects on these categories.

110 associations are significant at an uncorrected 5% significance threshold (where 50 are expected by chance). Surprisingly, features assessing stressful life events showed the greatest enrichment of low P values. After applying a Bonferroni correction for the 990 tests (P < 0.05/990 = 5.1 × 10–5) six associations were significant, four for stressful life events, one for the personality trait neuroticism, and one for premenstrual syndrome score. Two features replicated (corrected threshold P < 0.05/6 = 0.008). Both associations were between the total number of stressful life events and ΔF0 features, including the IQR1-3 of ΔF0 (16 hospitals in CONVERGE, total N = 2,064, β = - 0.21, SE = 0.03, uncorrected P=1.9 × 10–11; four hospitals in the replication, total N = 295, β = –0.20, SE = 0.07, uncorrected P = 0.0078) and maximum of ΔF0 (16 hospitals in CONVERGE, total N = 2064, β = –0.19, SE = 0.03, uncorrected P = 6.5 × 10–10; four hospitals in the replication, total N = 295, β = –0.20, SE = 0.08, uncorrected P = 0.0074).

Classification performance

If the voice features are to have any clinical utility, they must not just be associated with MDD, but they must predict it accurately. We took advantage of access to our two independently collected samples (discovery and replication), using the discovery group for training data (n = 7654) and the replication sample (n = 1189) to test the classification performance.

We compared the classification performance of a full model against a null model. The null model was a logistic regression (LR) model trained on the covariates. We then trained a full model using the covariates together with the identified voice features in discovery, based on the same LR method. Table 4 illustrates these comparisons. Integrating voice data significantly enhanced the predictive accuracy of our models. Adding voice features to the LR model increased the AUC-ROC from 0.70 to 0.83 and the accuracy from 0.63 to 0.76.

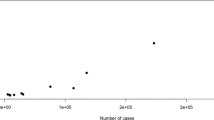

We then tested whether the classification results were robust to different machine learning methods. We evaluated this on three established methods suitable for our dataset, support vector machine (SVM), extreme gradient boosting (XGBoost), and multi-layer perceptron (MLP). Results improved prediction, with XGBoost delivering an AUC of 0.90 (sensitivity of 0.85, and specificity of 0.81). Figure 1 plots the ROC curves for a null model (using covariates only to classify depression) and the four models using voice features. The precision-recall curve of these models are in Fig. S8.

The figure shows the ROC for four models for predicting depression from voice features, and a null model, a logistic regression model trained on demographic covariates only (LR-Covar). The full models are logistic regression (LR), support vector machine (SVM), multi-layer perceptron (MLP), and extreme gradient boosting (XGBoost), trained on voice and covariates (Covar+Voice). AUC: area under the curve.

Discussion

We set out to find voice pitch features associated with MDD. By using a large and homogeneous case-control cohort, a two-stage meta-analysis and an independent replication, we provide robust evidence that certain pitch features distinguished MDD cases from matched controls. The associated features were a slower change in pitch and a particularly uneven distribution of these variations. Features measuring the variability and extremity of pitch change speed were heritable and had genetic correlations with MDD, which we interpret to mean that at least some of the association between variation in pitch and susceptibility to depression is genetic in origin. Classification of those with and without depression, based on vocal features, was achieved with an AUC of 0.90, highlighting the potential use of these features as biomarkers for MDD detection and secondary prevention.

Establishing a robust, replicable association between voice features and MDD is difficult because of the numerous confounds that could potentially introduce systematic differences between cases and controls and thus corrupt our findings. We addressed this concern by using a large, and as far as possible homogeneous sample of depression. Our study used only women with recurrent MDD in a population where many comorbid disorders, such as smoking, alcohol, and drug abuse, are rare or practically non-existent [32]. By adopting a two-stage meta-analysis to take into account variation between hospitals, and using an independent replication sample, our results are unlikely to be explained by differences between hospitals, location, accent, quality of the recording or the interview questions.

Our findings support and extend previous studies which have indicated potential links between pitch patterns and MDD, but were limited by smaller sample sizes or more heterogeneous cohorts [15, 16]. First, our large sample size provided adequate power to test several pitch features from a standardized features set, providing more fine-grained quantitative evidence for the descriptions of the monotonous speech pattern in MDD than in previous studies. Previous studies have found that depressed people speak more slowly with lower pitch and decreased variability [16, 25]. Here, our study showed MDD was negatively associated with features measuring how fast pitch changes (the maximum, the 3rd quartile, and the root quadratic mean of ΔF0, Table 1), indicating that the reduced rate of change in pitch is a characteristic of voice in MDD patients. We also found that MDD patients spend less time in their upper vocal range (Time with F0>90th percentile, Table 1), affirming the “low-pitched” pattern.

Second, our results indicate that MDD’s pitch dynamics involve more than reduced variability, showing a broader pitch range and more extreme values (range and kurtosis of F0, Table 1). Our analysis also revealed an uneven distribution of the speed with which an MDD patient’s pitch changes, as shown by the negative association between MDD and the flatness of ΔF0 and the positive association with the kurtosis of ΔF0 (Table 1). Overall, these various features enrich our understanding of pitch dynamics, demonstrating a pattern of slower change in pitch, yet with more frequent occurrences of extreme values and pitch change speed.

Third, our research examined the relationship between voice features and the effects of current low mood, and the effects of susceptibility to MDD. Some vocal features might be more reflective of a person’s underlying propensity towards developing MDD, while others could be more indicative of a current depressive state. We found that some vocal features were indeed heritable, but still correlated with changes in current mood: individuals with an increased genetic risk of MDD may have a smaller value of speed for the fastest pitch change, thus being unable to speak as fast as those without depression. They may show a narrower IQR of pitch change speeds and more frequently occurring extreme changes of pitch (higher kurtosis). Shared genetic effects exist between at least some ΔF0 features and MDD, but while our low power to detect heritability and genetic correlations raises the possibility that the other features may also be associated in this way, our findings are consistent with vocal features’ association with both current low mood and susceptibility to MDD.

We also found that two heritable voice features were associated with the number of stressful life events. The reason for these associations is unclear, but suggests the possibility that stressful life events reveal a latent predisposition to depression [56, 57], evidenced through a change in vocal features.

It is interesting to consider physiological interpretations of our findings. Possibly, changes in pitch could reflect tiredness, or the psychomotor retardation that characterizes an episode of MDD. We do not have physiological assessments of our subjects that characterize these features (all of our data are from interviews, and therefore reflect the subjects’ perceptions) but it is worth pointing out that at some level physiological and psychological contributions will be confounded. For example retardation of thought (psychological) can result in a slowness of expression (motor effect), so that in many cases the distinction may not be relevant.

Could the features we identified provide clinically useful predictions? A key aim of our research was to find vocal biomarkers that could take on this role. Applying XGBoost to the independent test dataset we obtained an AUC-ROC of 0.90, and a level of accuracy indicating that the features could be useful in identifying cases of MDD. We applied three models for classification (SVM, XGBoost, and MLP) because relying on a single model would not provide sufficient evidence for the robustness and generalizability of the voice features across different machine learning approaches. SVM excels at handling high-dimensional data and constructing optimal decision boundaries, but it assumes that the data is linearly separable in the kernel-transformed feature space [58]. If the relationship between the features and depression states is highly non-linear, SVM may struggle to find an optimal solution. In contrast, XGBoost [59], an ensemble of decision trees, can capture complex feature interactions and handle non-linear relationships. However, it may not be as effective as deep learning models like MLP in capturing hierarchical representations of the data. MLP, with its deep learning architecture, can learn intricate patterns and hierarchical representations, but it is prone to overfitting, particularly when the dataset is small or the network is overly complex [60]. By applying the voice features and geographical covariates to all three models, we provide a robust justification for the usefulness and generalizability of the voice features in depression classification.

Our results should be assessed with respect to several limitations. First, we only recruited Han Chinese women with recurrent MDD. Our results may not extrapolate to men, those with single episode MDD, or to non-Chinese speakers. Second, our analysis focused solely on pitch features, and future studies should explore other feature types. Neural network-based approaches, which can learn directly from raw audio signals, also hold promise for detecting depression but face challenges in portability and interpretability, particularly when addressing complex confounding factors [61] (Supplementary Results). Third, although our context-constrained analysis demonstrates that the signals we found are persistent across speech content, we cannot separate pitch differences due to word choice from pitch differences due to emotional content without additional experiments directly controlling the linguistic context.

While we don’t know how far results will generalize outside the female Chinese cohort, the findings reveal that vocal features can be used to identify MDD cases with high accuracy and we expect that with improvements, such as the inclusion of additional voice features, even higher predictive accuracy may be obtainable. Our hope is that these findings will further encourage efforts to assess changes in the voice, long understood by experienced clinicians to be a valuable sign, returning it to a more central position in clinical and research work on MDD.

Methods

Participants

We used data from the CONVERGE [32] study, in which women with recurrent MDD were recruited from 58 provincial mental health centers and psychiatric departments of general medical hospitals in 45 cities and 23 provinces of China. Participants were aged between 30 and 60, with two or more episodes of MDD that met the DSM-IV criteria [5], with the first episode occurring between ages 14–50. Cases were excluded if they had pre-existing bipolar disorder, nonaffective psychosis, smoking/nicotine dependence (alcohol and substance abuse were virtually absent in this study, so it was not assessed), or mental retardation. Control subjects, screened to exclude a history of MDD, were recruited from patients undergoing minor surgical procedures at general hospitals and individuals attending local community centers. The replication study [43] used the same inclusion/exclusion criteria as CONVERGE, and recruited samples from 20 different hospitals in China, with a final sample size of 1189 (Fig. S1). This study was approved by Institutional Review Boards at UCLA, Bio-x Center, Shanghai Jiao Tong University (M16033), and local hospitals. All participants provided written informed consent.

Data collection

All subjects went through a semi-structured interview using a computerized assessment system as outlined previously [43] and described in Supplementary Methods. Recordings for cases were obtained in outpatient clinics. Controls were recorded in outpatient clinics and in community health centers. Recordings were not standardized and varied in quality and content. All participants provided DNA samples for genetic analysis. Details of DNA sequencing and genotype imputation have been previously reported [32] and described briefly in Supplementary Methods.

Covariates

The covariates were five demographic variables and two recording quality variables. The demographic variables were age, education level, occupation, marital status, and social class. The recording quality referred to noise level and accent. The noise level and accent label were determined subjectively by the listeners during the process of identifying the patients’ voice segments. The noise was categorized into four levels: (1) No noise; (2) Slight noise but the subject’s speech was clear; (3) Noise present but the content of the subject’s speech could be clearly heard; (4) High noise levels and unclear speech. Note that noise level 4 means that the speech, although difficult to understand, can still be comprehended with extra effort. And samples were excluded during quality controls stage if the speech is not able to comprehended at all. The accent was a binary label that indicated whether the subjects’ speech was in standard Mandarin or not.

Voice data preprocessing

In total, 8322 subjects the subjects recruited in CONVERGE had interview recordings (Fig. S1). To obtain the subjects’ utterances, a group of undergraduates listened to the recordings to identify any voice segments from the subjects with a duration >2 s. Audio samples were excluded where the noise was so prominent that the content of the subject’s speech could not be understood, which yielded 7654 subjects with available segments. All segments from the same subject were concatenated in the order in which they occur in the interview and down sampled to 8 kHz. Two postgraduate psychological students listened to all the segments to ensure that no speech voice other than the subjects was included in the segments and that no words were cut off mid-way.

The preprocessing procedure in the replication study was the same as in CONVERGE. Of the initially recruited 1301 participants (551 cases), 1189 subjects (including 490 cases) had available voice segments (Fig. S1). All segments from the same subject were concatenated into one, to extract voice features for replicating the association between voice and MDD and identifying the voice features associated with SCL (see distribution of the audio segment length in Fig. S9). The speech segments in the replication study were further annotated to indicate the specific question that prompted each spoken response (Table S5). This additional level of data analysis was introduced to better understand the relationship between the interview content and the participants’ speech patterns. We additionally extracted the same voice features on the non-concatenated segments corresponding to a single question for a sensitivity analysis, which we referred to as, the context-constrained analysis (described below).

Voice features

We used the INTERSPEECH 2016 Computational Paralinguistics Evaluation [41, 42]. Calculations were implemented in the openSMILE python package v2.4.2 [62] and described in Supplementary Methods. Given that many of the features were highly correlated (for example, the arithmetic and root-quadratic mean of F0, as shown in Fig. S2), we removed redundant features (described in Supplementary Methods), resulting in a set of 15 F0-based features and 15 ΔF0-based features. We provide in Supplementary Table S2 technical definitions of the 30 features used, along with non-technical explanations of what each feature measures.

Two-stage meta-analysis

We used a two-stage meta-analytic framework to take into account differences between hospitals. In the first stage, for each hospital a linear regression model was fitted for each F0-related feature as the dependent variable using MDD and covariates as the predictor variables. We applied rank-based inverse normal transformation to the voice features. At stage 2, beta coefficients for MDD and standard errors from stage 1 were pooled using random-effects meta-analysis [63], assuming that the true effect sizes in different sites are not exactly the same but are drawn from a distribution of effect sizes. P values were FDR-adjusted [64]. In the second stage, we repeated the analyses in four hospitals with sample sizes ≥100 (N = 1084, Fig. S1). We performed the same procedure as in the two-stage meta-analysis above. We combined results from both discovery and replication cohorts using random-effects meta-analysis [63].

Heritability and genetic correlations

Heritability and genetic correlations were estimated on the 7654 subjects in CONVERGE (Fig. S1). The SNP-based heritability used a generalized REML (restricted maximum likelihood) method implemented in LDAK [65]. We applied rank-based inverse normal transformation to the voice features and incorporated the above covariates and 20 genetic PCs. P values were FDR-adjusted. For heritable voice features, we estimated their genetic correlation with MDD, adjusting for these same covariates and 20 genetic PCs. The genetic correlation was calculated through a bivariate GREML analysis implemented in GCTA [66, 67]. P values were FDR-adjusted based on the number of heritable voice features.

Associations between pitch features and current mood

To identify biomarkers for current mood, subjects in the replication cohort were given a 16-item, self-administered questionnaire assessing the severity of depression-related symptoms on a five-point distress scale over the past 30 days (subscales for depression symptom checklist, SCL) [55]. We used the same two-stage meta-analysis method to estimate the association between the 16 voice features and SCL scores. All four hospitals from the replication cohort with sample sizes ≥100 were selected (N = 1084, Fig. S1). At stage 1, for each hospital, a linear regression model was fitted for each pitch feature as the dependent variable using SCL scores and the covariates as the predictor variables. At stage 2, beta coefficients for SCL and standard errors from stage 1 were pooled using random-effects meta-analyses [63]. SCL scores were standardized using rank-based inverse normal transformation. P values were FDR-adjusted.

Classification model

We employed a logistic regression model using seven covariates (age, education level, occupation, marital status, social class, noise level, and accent) to establish a null model. Full models that incorporated both these covariates and voice features were then developed. We included all 20 voice features identified as associated with MDD during the discovery stage, including those not replicated. We used samples available in the discovery group for training data (n = 7654). For the test data set we used the replication sample (n = 1189). There was no re-estimation of weights in the test sample.

We compared the results of the full model with the null model based on logistic regression. Then we tested the classification performances using SVM, XGBoost, and MLP as the classifiers. The performance of models was evaluated across several metrics: accuracy, sensitivity, specificity, AUC-ROC, AUC-PR, and F1-score. To identify the best hyperparameters for each model, a grid search with 5-folds cross-validation was employed on the training dataset. The above process was implemented in python with package scikit-learn [68] v1.2.2 and xgboost [59] v2.0.3.

Associations between pitch features and MDD symptoms, risk factors, and comorbidities

We examined the relationship between the 30 voice F0/ΔF0 features with 33 variables related to MDD, including eight risk factor variables, 11 comorbidity variables, seven symptoms, three variables about suicidality, age of onset, number of MDD episodes, neuroticism, and premenstrual syndrome score (summarized in Table S8). We again employed the two-stage meta-analysis procedure. At stage 1, for each hospital, a multivariate linear regression model was fitted for each pitch feature as the dependent variable using one of the above variables and covariates as the independent variables. At stage 2, we used the Q statistics to measure the heterogeneity of the pooled beta coefficients and standard errors. If the heterogeneity test is significant (P < 0.05), we then used the random-effects model for meta-analyses, otherwise we used fixed-effects [64]. We applied a Bonferroni correction to obtain a 5% significance threshold. Due to the high endorsement rates for certain variables within some hospitals, the hospitals included in the meta-analysis varied depending on the variable being analyzed (for example, if all cases from one hospital did not have suicidal attempts, this hospital would be excluded for the analysis of suicidal attempts at stage 1). We reported the number of hospitals and sample size for each association along with the meta-results.

Context-constrained analysis

We counted the total number of available voice segments for each question in the replication cohort and selected the two most frequently answered questions from the demographic section of the interview: D2.A (“What is your date of birth?”) and D10 (“How much do you weigh while wearing indoor clothing?”). For each question, we used the corresponding segments to extract the 16 voice features that were associated with MDD in our previous analysis. Finally, we re-assessed the associations between these voice features and MDD through the two-stage meta-analysis method. The limited voice duration and small sample size reduced power to detect a significant signal. We applied the one-sided binomial sign test to determine whether the number of voice features demonstrating consistent directions of association effects between the concatenated segments and the context-constrained segments was greater than expected by chance (that is, a one-sided test of whether this fraction is greater than 0.5).

Data availability

Site-level summary statistics necessary for generating the final meta-analysis results, as well as labels and model predictions required to reproduce the ROC and precision-recall curves are available from the corresponding author upon reasonable request.

Code availability

Scripts to run the two-stage meta-analysis and classification modeling are available from the corresponding author upon reasonable request.

References

Kendler KS. The genealogy of major depression: symptoms and signs of melancholia from 1880 to 1900. Mol Psychiatry. 2017;22:1539–53.

Kendler KS. The phenomenology of major depression and the representativeness and nature of DSM criteria. AJP. 2016;173:771–80.

American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, Third Edition. Washington, D.C: American Psychiatric Association; 1980.

American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, Revised Third Edition. Washington, D.C: American Psychiatric Association; 1987.

American Psychiatric Association. Diagnostic and statistical manual of mental disorders, Fourth Edition. Washington, D.C: American Psychiatric Association; 1994.

American Psychiatric Association. Diagnostic and statistical manual of mental disorders (DSM-5®). Washington, D.C: American Psychiatric Association; 2013.

Lux V, Kendler KS. Deconstructing major depression: a validation study of the DSM-IV symptomatic criteria. Psychol Med. 2010;40:1679–90.

Hyman SE. Can neuroscience be integrated into the DSM-V? Nat Rev Neurosci. 2007;8:725–32.

Kessler RC, Berglund P, Demler O, Jin R, Koretz D, Merikangas KR, et al. The epidemiology of major depressive disorder results from the national comorbidity survey replication (NCS-R). JAMA. 2003;289:3095–105.

Lu J, Xu X, Huang Y, Li T, Ma C, Xu G, et al. Prevalence of depressive disorders and treatment in China: a cross-sectional epidemiological study. Lancet Psychiatry. 2021;8:981–90.

Thornicroft G, Chatterji S, Evans-Lacko S, Gruber M, Sampson N, Aguilar-Gaxiola S, et al. Undertreatment of people with major depressive disorder in 21 countries. Br J Psychiatry. 2017;210:119–24.

Guislain J Orales sur Les Phrénopathies, ou Traitê Thêorique Et Pratique Des Maladies Mentales: Cours Donné A La Clinique Des Êtablissements D’Aliénés A Gand. Vol. 1. Paris, & Bonn,: Gand; 1852.

Kraepelin E Manic-depressive insanity and paranoia. Edinburgh: E. & S. Livingstone; 1921.

Sobin C Psychomotor Symptoms of Depression. A m J Psychiatry. 1997;15.

Cummins N, Scherer S, Krajewski J, Schnieder S, Epps J, Quatieri TF. A review of depression and suicide risk assessment using speech analysis. Speech Commun. 2015;71:10–49.

Low DM, Bentley KH, Ghosh SS. Automated assessment of psychiatric disorders using speech: A systematic review. Laryngoscope Investig Otolaryngol. 2020;5:96–116.

Nilsonne Å. Acoustic analysis of speech variables during depression and after improvement. Acta Psychiatr Scand. 1987;76:235–45.

Nilsonne Å. Speech characteristics as indicators of depressive illness. Acta Psychiatr Scand. 1988;77:253–63.

Mundt JC, Snyder PJ, Cannizzaro MS, Chappie K, Geralts DS. Voice acoustic measures of depression severity and treatment response collected via interactive voice response (IVR) technology. J Neurolinguist. 2007;20:50–64.

Mundt JC, Vogel AP, Feltner DE, Lenderking WR. Vocal acoustic biomarkers of depression severity and treatment response. Biol Psychiatry. 2012;72:580–7.

Kuny S, Stassen HH. Speaking behavior and voice sound characteristics in depressive patients during recovery. J Psychiatr Res. 1993;27:289–307.

Cannizzaro M, Harel B, Reilly N, Chappell P, Snyder PJ. Voice acoustical measurement of the severity of major depression. Brain Cogn. 2004;56:30–5.

Alpert M, Pouget ER, Silva RR. Reflections of depression in acoustic measures of the patient’s speech. J Affect Disord. 2001;66:59–69.

Pan W, Flint J, Shenhav L, Liu T, Liu M, Hu B, et al. Re-examining the robustness of voice features in predicting depression: Compared with baseline of confounders. Li Z, editor. PLoS ONE. 2019;14:e0218172.

Wang J, Zhang L, Liu T, Pan W, Hu B, Zhu T. Acoustic differences between healthy and depressed people: a cross-situation study. BMC Psychiatry. 2019;19:300.

Schultebraucks K, Yadav V, Shalev AY, Bonanno GA, Galatzer-Levy IR. Deep learning-based classification of posttraumatic stress disorder and depression following trauma utilizing visual and auditory markers of arousal and mood. Psychol Med. 2022;52:957–67.

Di Y, Wang J, Liu X, Zhu T. Combining polygenic risk score and voice features to detect major depressive disorders. Front Genet. 2021;12:2451.

Flint J The genetic basis of major depressive disorder. Mol Psychiatry [Internet]. 2023 Jan 26 [cited 2023 Jan 31]; Available from: https://www.nature.com/articles/s41380-023-01957-9

Hasler G, Drevets WC, Manji HK, Charney DS. Discovering endophenotypes for major depression. Neuropsychopharmacol. 2004;29:1765–81.

Kendler KS, Aggen SH, Neale MC. Evidence for multiple genetic factors underlying DSM-IV criteria for major depression. JAMA Psychiatry. 2013;70:599–607.

Peterson RE, Cai N, Dahl AW, Bigdeli TB, Edwards AC, Webb BT, et al. Molecular genetic analysis subdivided by adversity exposure suggests etiologic heterogeneity in major depression. AJP. 2018;175:545–54.

CONVERGE consortium. Sparse whole-genome sequencing identifies two loci for major depressive disorder. Nature. 2015;523:588–91.

Andrianopoulos MV, Darrow KN, Chen J. Multimodal standardization of voice among four multicultural populations: fundamental frequency and spectral characteristics. J Voice. 2001;15:194–219.

Kendler KS, Gardner C, Neale M, Prescott C. Genetic risk factors for major depression in men and women: similar or different heritabilities and same or partly distinct genes? Psychol Med. 2001;31:605.

Tao M, Li Y, Xie D, Wang Z, Qiu J, Wu W, et al. Examining the relationship between lifetime stressful life events and the onset of major depression in Chinese women. J Affect Disord. 2011;135:95–9.

Gao J, Li Y, Cai Y, Chen J, Shen Y, Ni S, et al. Perceived parenting and risk for major depression in Chinese women. Psychol Med. 2012;42:921–30.

Gan Z, Li Y, Xie D, Shao C, Yang F, Shen Y, et al. The impact of educational status on the clinical features of major depressive disorder among Chinese women. J Affect Disord. 2012;136:988–92.

Yang F, Li Y, Xie D, Shao C, Ren J, Wu W, et al. Age at onset of major depressive disorder in Han Chinese women: Relationship with clinical features and family history. J Affect Disord. 2011;135:89–94.

Shi J, Zhang Y, Liu F, Li Y, Wang J, Flint J, et al. Associations of educational attainment, occupation, social class and major depressive disorder among Han Chinese women. PLOS ONE. 2014;9:e86674.

Li Y, Shi S, Yang F, Gao J, Li Y, Tao M, et al. Patterns of co-morbidity with anxiety disorders in Chinese women with recurrent major depression. Psychol Med. 2012;42:1239–48.

Schuller B, Steidl S, Batliner A, Hirschberg J, Burgoon JK, Baird A, et al. The INTERSPEECH 2016 Computational Paralinguistics Challenge: Deception, Sincerity & Native Language. In: Interspeech 2016 [Internet]. ISCA; 2016 [cited 2023 Apr 19]. p. 2001–5. Available from: https://www.isca-speech.org/archive/interspeech_2016/schuller16_interspeech.html

Weninger F, Eyben F, Schuller BW, Mortillaro M, Scherer KR On the Acoustics of Emotion in Audio: What Speech, Music, and Sound have in Common. Front Psychol [Internet]. 2013 [cited 2021 Dec 20];4. Available from: http://journal.frontiersin.org/article/10.3389/fpsyg.2013.00292/abstract

Di Y, Wang J, Li W, Zhu T. Using i-vectors from voice features to identify major depressive disorder. J Affect Disord. 2021;288:161–6.

Afshan A, Guo J, Park SJ, Ravi V, Flint J, Alwan A Effectiveness of Voice Quality Features in Detecting Depression. Interspeech 2018 [Internet]. 2018 Sep [cited 2023 Apr 19]; Available from: https://par.nsf.gov/biblio/10098305-effectiveness-voice-quality-features-detecting-depression

Alghowinem S, Goecke R, Epps J, Wagner M, Cohn J Cross-Cultural Depression Recognition from Vocal Biomarkers. In: Interspeech 2016 [Internet]. ISCA; 2016 [cited 2023 May 23]. p. 1943–7. Available from: https://www.isca-speech.org/archive/interspeech_2016/alghowinem16_interspeech.html

Quatieri TF, Malyska N Vocal-source biomarkers for depression: a link to psychomotor activity. In: Interspeech 2012 [Internet]. ISCA; 2012 [cited 2022 Jul 7]. p. 1059–62. Available from: https://www.isca-speech.org/archive/interspeech_2012/quatieri12_interspeech.html

Syed ZS, Schroeter J, Sidorov K, Marshall D Computational Paralinguistics: Automatic Assessment of Emotions, Mood and Behavioural State from Acoustics of Speech. In: Interspeech 2018 [Internet]. ISCA; 2018 [cited 2023 Nov 27]. p. 511–5. Available from: https://www.isca-speech.org/archive/interspeech_2018/syed18_interspeech.html

Schuller B, Batliner A, Steidl S, Seppi D. Recognising realistic emotions and affect in speech: State of the art and lessons learnt from the first challenge. Speech Commun. 2011;53:1062–87.

Schuller B, Steidl S, Batliner A, Burkhardt F, Devillers L, Müller C, et al. Paralinguistics in speech and language—State-of-the-art and the challenge. Comput Speech Lang. 2013;27:4–39.

Eyben F Real-time speech and music classification by large audio feature space extraction. Springer; 2015.

Mao K, Wu Y, Chen J. A systematic review on automated clinical depression diagnosis. npj Ment Health Res. 2023;20:1–17.

Xu S, Yang Z, Chakraborty D, Chua YHV, Tolomeo S, Winkler S, et al. Identifying psychiatric manifestations in schizophrenia and depression from audio-visual behavioural indicators through a machine-learning approach. Schizophr. 2022;8:1–13.

Ringeval F, Schuller B, Valstar M, Cummins Ni, Cowie R, Tavabi L, et al. AVEC 2019 Workshop and Challenge: State-of-Mind, Detecting Depression with AI, and Cross-Cultural Affect Recognition. arXiv:190711510 [cs, stat] [Internet]. 2019 Jul 10 [cited 2021 Jan 21]; Available from: http://arxiv.org/abs/1907.11510

Hansen L, Rocca R, Simonsen A, Olsen L, Parola A, Bliksted V, et al. Speech- and text-based classification of neuropsychiatric conditions in a multidiagnostic setting. Nat Ment Health. 2023;1:971–81.

Derogatis LR. SCL-90: an outpatient psychiatric rating scale-preliminary report. Psychopharmacol Bull. 1973;9:13–28.

Kendler KS, Karkowski-Shuman L. Stressful life events and genetic liability to major depression: genetic control of exposure to the environment? Psychol Med. 1997;27:539–47.

Kendler KS, Kessler RC, Walters EE, MacLean C, Neale MC, Heath AC, et al. Stressful life events, genetic liability, and onset of an episode of major depression in women. FOC. 2010;8:459–70.

Suthaharan S Support Vector Machine. In: Suthaharan S, editor. Machine Learning Models and Algorithms for Big Data Classification: Thinking with Examples for Effective Learning [Internet]. Boston, MA: Springer US; 2016. p. 207–35. Available from: https://doi.org/10.1007/978-1-4899-7641-3_9

Chen T, Guestrin C XGBoost: A Scalable Tree Boosting System. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining [Internet]. San Francisco California USA: ACM; 2016 [cited 2024 May 15]. p. 785–94. Available from: https://dl.acm.org/doi/10.1145/2939672.2939785

Glorot X, Bengio Y Understanding the difficulty of training deep feedforward neural networks. In: Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics [Internet]. JMLR Workshop and Conference Proceedings; 2010 [cited 2024 May 16]. p. 249–56. Available from: https://proceedings.mlr.press/v9/glorot10a.html

Wang J, Ravi V, Flint J, Alwan A. Speechformer-CTC: sequential modeling of depression detection with speech temporal classification. Speech Commun. 2024;163:103106. Sep 1

Eyben F, Wöllmer M, Schuller B Opensmile: the munich versatile and fast open-source audio feature extractor. In: Proceedings of the 18th ACM international conference on Multimedia [Internet]. Firenze Italy: ACM; 2010 [cited 2023 May 24]. p. 1459–62. Available from: https://dl.acm.org/doi/10.1145/1873951.1874246

Viechtbauer W. Conducting Meta-Analyses in R with the metafor Package. J Stat Softw. 2010;36:1–48.

Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc Ser B (Methodol). 1995;57:289–300.

Speed D, Cai N, Johnson MR, Nejentsev S, Balding DJ. Reevaluation of SNP heritability in complex human traits. Nat Genet. 2017;49:986–92.

Lee SH, Yang J, Goddard ME, Visscher PM, Wray NR. Estimation of pleiotropy between complex diseases using single-nucleotide polymorphism-derived genomic relationships and restricted maximum likelihood. Bioinformatics. 2012;28:2540–2.

Yang J, Lee SH, Goddard ME, Visscher PM. GCTA: a tool for genome-wide complex trait analysis. Am J Hum Genet. 2011;88:76–82.

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, et al. Scikit-learn: machine learning in python. J Mach Learn Res. 2011;12:2825–30.

Funding

This work was supported by R01-MH122596 from the National Institute of Mental Health, 200176/A/15/Z from the Wellcome Trust, and a philanthropic donation from Shirley and Walter Wang. The funding agencies and donors were not involved in the conduct, analysis, or reporting of this work.

Author information

Authors and Affiliations

Contributions

Conceived and designed the study: JF, TZ, AA, ER; Analysed the data: YD, JM, JW, VR, AG. Wrote the paper: YD, KSK, JF. All authors contributed to the interpretation of data, provided feedback on drafts, and approved the final draft.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval and consent to participate

This study was approved by Institutional Review Boards at UCLA, Bio-x Center, Shanghai Jiao Tong University (M16033), and local hospitals. All participants provided written informed consent. All methods were performed in accordance with relevant guidelines and regulations.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Di, Y., Rahmani, E., Mefford, J. et al. Unraveling the associations between voice pitch and major depressive disorder: a multisite genetic study. Mol Psychiatry 30, 2686–2695 (2025). https://doi.org/10.1038/s41380-024-02877-y

Received:

Revised:

Accepted:

Published:

Version of record:

Issue date:

DOI: https://doi.org/10.1038/s41380-024-02877-y

This article is cited by

-

Sprachmodelle in der Diagnostik und Therapie psychischer Erkrankungen

DNP – Die Neurologie & Psychiatrie (2025)