Abstract

Quantum generative models provide inherently efficient sampling strategies and thus show promise for achieving an advantage using quantum hardware. In this work, we investigate the barriers to the trainability of quantum generative models posed by barren plateaus and exponential loss concentration. We explore the interplay between explicit and implicit models and losses, and show that using quantum generative models with explicit losses such as the KL divergence leads to a new flavor of barren plateaus. In contrast, the implicit Maximum Mean Discrepancy loss can be viewed as the expectation value of an observable that is either low-bodied and provably trainable, or global and untrainable depending on the choice of kernel. In parallel, we find that solely low-bodied implicit losses cannot in general distinguish high-order correlations in the target data, while some quantum loss estimation strategies can. We validate our findings by comparing different loss functions for modeling data from High-Energy-Physics.

Similar content being viewed by others

Introduction

The advent of quantum computing has opened up new avenues for solving classically intractable problems1,2,3,4. Naturally, researchers gravitate towards finding the first high-value applications that could be tackled with near- and mid-term quantum devices5. This includes not only speed-ups3,6,7,8 but potentially superior memory efficiency9 or concrete qualitative improvements10,11. Quantum machine learning (QML) is one of the domains that attracts this attention2. Quantum systems, in being inherently probabilistic, are particularly well suited to generative modeling tasks12. Generative models aim to learn the underlying distribution of a dataset and thereby provide a means of generating new data samples that are similar to the original data. As well as providing a naturally efficient means of generating samples, quantum generative models can provably encode probability distributions that are out of reach for classical models13,14,15, and have been proposed for various applications, such as handwritten digits16, finance17, or High-Energy-Physics (HEP)18,19.

Despite the excitement surrounding the potential of generative QML, there remain substantial questions concerning its scalability. This is non-trivial to assess since implementations are constrained by hardware limitations to small-scale proof-of-principle problems16,17,20,21,22. Thus analytic results are essential to guide the successful development of this field. Of particular concern is the growing body of literature on cost function concentration and barren plateaus (BPs)23,24,25,26,27,28,29,30, where loss function values can exponentially concentrate around a fixed value and loss gradients vanish exponentially with growing problem size. This phenomenon, which exponentially increases the resources required for training, originates from different sources23,25,31,32,33,34,35,36,37,38,39, and has been studied in a number of architectures23,25,30,40,41,42,43,44,45 as well as classes of cost function32,37,40. However, its impact on quantum generative modeling thus far has, except for the odd notable exception46, and very recent developments47, been largely overlooked.

In this work, we provide a thorough study of trainability barriers and opportunities in quantum generative modeling. Critical to our analysis is the distinction between explicit and implicit models and losses. Explicit models provide efficient access directly to the model probabilities, whereas implicit models only provide samples drawn from their distribution48. Quantum circuit Born machines (QCBMs)49, the focus of this work, encode a probability distribution in an n-qubit pure state and thus are a paradigmatic example of an implicit model. Mirroring the capabilities of the models, explicit losses are those that are formulated explicitly in terms of the model and target probabilities, whereas implicit losses compare samples from the model and the training distribution. The most commonly used explicit loss for quantum generative models is the Kullbach-Leibler (KL) divergence50. Other examples include the Jensen-Shannon divergence (JSD), the total variation distance (TVD) and the classical fidelity. The Maximum Mean Discrepancy (MMD)51 on the other hand is one of the leading examples of an implicit loss.

Here we argue that the tension between using an implicit generative model (providing only samples) with an explicit loss (requiring access to probabilities) leads to a new flavor of BP. This result disqualifies all before-mentioned explicit losses, and crucially the KL divergence, for efficient training of QCBMs without a strong inductive bias towards the target distribution. In contrast, the MMD as an implicit loss exhibits more nuanced behavior and can be either trainable or untrainable. By viewing the classical MMD loss as the expectation value of a quantum observable, we show that varying the bandwidth parameter of a Gaussian kernel interpolates the MMD loss between a loss composed of predominantly global terms and one composed of low-bodied terms with either exponentially or polynomially decaying loss variances in the number of qubits. In particular, we derive a polynomial lower bound on the loss for a wide family of different classes of structured and unstructured models that depends only on the effective entanglement light cone of the circuit. These results are summarized in Table 1.

In parallel, we provide insights into how the globality of a generative loss affects the types of correlations in a dataset that can reliably be learned. In particular, we show that a k-bodied loss (see Fig. 5) cannot distinguish between distributions that agree on all k-marginals but disagree about higher-order correlations. Hence we argue that in the context of quantum generative modeling, it is advantageous to train on full-bodied losses, that is losses containing both low and high-bodied terms, rather than the purely local losses advocated elsewhere in QML. The MMD is then a promising candidate choice for the training of QCBMs as its bodyness can be controlled via the bandwidth parameter.

We additionally expand the pool of viable loss functions by proposing a new local quantum fidelity (LQF)-type loss which leverages what we call a quantum strategy for evaluating losses. This is to be contrasted with the conventional measurement strategy which simply uses samples from the model distribution in the computational basis. We provide an efficient training protocol using the LQF loss with provable trainability guarantees.

Finally, we support our analysis with a comparison of the performance of the KL divergence, MMD, and LQF losses for modeling HEP data. Specifically, we consider electron energy depositions in the electromagnetic calorimeter (ECAL) part of detectors involved in a typical proton-proton collision experiment at the LHC. We learn to generate hits in the detector as black and white images of various sizes, with up to 16 qubits. We confirm that the properly-tuned MMD and the LQF losses remain trainable using a restrictive shot budget, while training with the KL divergence becomes increasingly futile.

Results

Framework

The goal of generative modeling is to use samples from a target distribution p(x) to learn a model of p(x) which can be used to generate new samples. More concretely, as sketched in Fig. 1, a generative model takes as input a training dataset \(\tilde{P}\) consisting of \(M=| \tilde{P}|\) samples drawn from the target distribution p(x). This training set can be used to construct the empirical probability distribution \(\tilde{p}({\boldsymbol{x}})\) for all samples \({\boldsymbol{x}}\in \tilde{P}\). The training dataset, or the training distribution, is then used to train the variational parameters θ of a parameterized probability distribution qθ(x). If successful, the output of the algorithm is a set of optimized parameters θopt such that the trained model \({q}_{{{\boldsymbol{\theta }}}_{{\bf{opt}}}}({\boldsymbol{x}})\) well-approximates the unknown target distribution p(x). The trained model \({q}_{{{\boldsymbol{\theta }}}_{{\bf{opt}}}}({\boldsymbol{x}})\) can then be used to generate new and previously unseen data. For compactness, we use the notation p and qθ to denote the target and model distributions respectively.

Given a training dataset \(\tilde{P}\) with distribution \(\tilde{p}({\boldsymbol{x}})\) over discrete data samples x, the goal of a QCBM is to learn a distribution qθ(x) which models the real-world distribution p(x) from which the training data itself was sampled. This is done by tuning the parameters θ of a parametrized quantum circuit such that the QCBM minimizes a loss function that estimates the distance between the model and the training distribution. The QCBM is an implicit model and can thus in general not be paired with an explicit loss function, but it may be trainable using an implicit loss. In contrast to the conventional loss estimation strategy (solid lines) of generating a set of samples \({\tilde{Q}}_{{\boldsymbol{\theta }}}\) and forming an empirical distribution \({\tilde{q}}_{{\boldsymbol{\theta }}}({\boldsymbol{x}})\), strategies that are `more quantum' (dashed lines) can be employed with the aim of allowing QCBMs to be trained with loss functions which conventionally appear explicit.

The process of training requires a loss function \({\mathcal{L}}({\boldsymbol{\theta }})\) which estimates the distance between the model distribution qθ(x) and the training distribution \(\tilde{p}({\boldsymbol{x}})\). For typical choices in loss function (detailed further in Section “Loss functions”), the loss is minimized when the model parameters θ are tuned such that the model distribution perfectly matches the empirical distribution obtained from the training data. That is, \({\mathcal{L}}({\boldsymbol{\theta }})=0\) if and only if \({q}_{{\boldsymbol{\theta }}}({\boldsymbol{x}})=\tilde{p}({\boldsymbol{x}})\) over the entire data space \({\mathcal{X}}\). Thus, by perfectly minimizing the loss, one perfectly learns the empirical distribution \(\tilde{p}({\boldsymbol{x}})\) but not the true target distribution p(x). This scenario is commonly called overfitting (In contrast, discriminative machine learning models can be perfectly minimized on the training data and not be overfitted.). To allow for generalization52, whereby the model can generate novel data with similar properties to the training data, one seeks to significantly reduce (but not perfectly minimize) the training loss. While generalization is the end-all goal of generative models, it is not the focus of this work. Instead, we focus on the training component of the generative framework, as failing to train also prohibits generalization.

Quantum circuit models

One prototypical quantum generative model is the quantum circuit Born machine (QCBM)13,49,53,54. Owing its name to the Born rule of quantum mechanics, a QCBM encodes a probability distribution over discrete data (here bitstrings) in an n-qubit pure quantum state that depends on a parameterized unitary U(θ),

Here \(\left\vert {\boldsymbol{x}}\right\rangle\) is a computational basis state corresponding to a bitstring x and, without loss of generality, an initial state can be chosen as \(\left\vert {\boldsymbol{0}}\right\rangle ={\left\vert 0\right\rangle }^{\otimes n}\). We note that estimating qθ(x) is equivalent to finding the expectation value of a global projector \(|\boldsymbol{x}\rangle\langle \boldsymbol{x}|\). More fundamentally, QCBMs enable the encoded distribution to be efficiently sampled simply by measuring in a chosen computational basis. That is, every measurement of the quantum state provides an unbiased sample from the encoded distribution (in an ideal noise-free setting). This is a very desirable property in generative models that many (classical) generative models do not share with the QCBM. Sampling techniques for classical generative models are often unreliable and may break down for certain distributions, as is the case for restricted Boltzmann machines (RBMs)55,56. Born machines represent an effort to create a powerful, flexible, and efficient generative model for classical discrete data, and as well as numerous ‘standard’ digital quantum implemenations16,17,20,21,22, they have been widely implemented using tensor networks57,58,59,60, continuous variable hardware61, in a conditional setting18,62, with non-linearities63.

An important, but rather subtle, distinction in generative modeling is that between explicit and implicit generative models48,64. Explicit generative models are ones that allow efficient access to the model probability qθ(x) for any data sample x. Here, “efficient” means that the probabilities can be computed in a time and memory that are polynomial in the size of the data samples, i.e., \({\mathcal{O}}({\rm{poly}}(n))\) resources. Explicit (classical) generative models include for example auto-regressive models65, RNNs66, tensor networks without loops (which includes tensor network Born machines)57,58, and many forms of density estimators. In contrast, implicit models lack this property and instead offer efficient access to samples from qθ(x), which some forms of explicit models may struggle with. A popular example of an implicit generative model is Generative Adversarial Networks (GANs)67 that leverage an implicit training scheme to learn powerful generators.

In the case of QCBMs implemented on quantum devices, it becomes evident that we do not have (efficient) explicit access to qθ(x), but only to samples of the distribution in the computational basis. Consequently, QCBMs can be classified as implicit generative models. In this work, we study the trainability issues that QCBMs suffer from as a result.

Loss functions

Similarly to the distinction between explicit and implicit generative models, we draw a distinction between explicit and implicit loss functions. In broad terms, explicit losses are those that can only be formulated explicitly in terms of the target and model probabilities, whereas implicit losses are those that can be formulated in terms of an average over model and training data samples. This distinction at the level of loss functions thus mirrors the capabilities and limitations of explicit and implicit generative models.

More concretely, we define an explicit loss as a loss function \({\mathcal{L}}\) that can be written solely as a function of the probabilities of the target and model distributions, without any dependence on the data itself. Explicit losses thus take the general form

where f(⋅) is a function that depends on the target probabilities p(xi) and model probabilities qθ(xi) for data variables \({{\boldsymbol{x}}}_{i}\in {\mathcal{X}}\) with i = 1, …, r. For this loss to be useful, the function f should be chosen such that it measures the distance between the probability distributions p and qθ. Crucially, the function f does not take the data values x themselves as arguments.

While in full generality explicit losses could compare multiple copies of the target and model probabilities (i.e., we can have r > 1), in practice, they usually take the simpler form

We call such losses pairwise explicit losses since they compare the model and target probabilities on the same data samples, or in our case, bitstrings. The pairwise explicit loss covers all so-called f-divergences68, including the commonly encountered KL divergence (KLD)69,

the reverse-KLD,

the Jensen-Shannon divergence (JSD)70,

and the total variation distance (TVD),

Another example of loss function that can be written in this form is the classical fidelity,

Notably, any non-data-dependent post-processing of an explicit loss retains its explicit character. Thus, any non-data-dependent function of an explicit loss (Eq. (2)) may also be considered an explicit loss. For example, the Rényi divergence71

with 0 < α < ∞ and α ≠ 1 can be classified as an explicit loss function.

On the other hand, we define an implicit loss as one that can be written as an average over samples drawn from the target and model distributions. That is, an implicit loss function can be expressed as

where g(x1, …, xr) is some function that depends on the data (but not probabilities), and the expectation is taken over data variables x1, …, xr sampled either from the data distribution p or the model distribution qθ.

As a key example of an implicit loss, we focus on the commonly used Maximum Mean Discrepancy (MMD)51 loss. The MMD takes the form

where K(x, y) is a freely chosen kernel function. We consider the popular choice of a classical Gaussian kernel, which is defined as

Here, ∥. ∥2 is the 2-norm, σ > 0 is the so-called bandwidth parameter, and xi, yi are the values of bit i in bitstring x, y, respectively. This kernel in effect provides a continuous measure of the distance between target and model bitstrings.

Interestingly, an implicit loss can always additionally be expressed in a form where it contains the target and model probabilities. Taking the MMD loss in Eq. (11) as a concrete example, the loss can be re-written as

However, we stress that due to the data dependence in the kernel K(x, y), the MMD loss function can in general not be classified as an explicit loss.

Nonetheless, this brings us to the subtle point that explicitness and implicitness are in fact not strictly mutually exclusive, i.e., one may be able to find a loss function that satisfies both Eq. (2) and Eq. (10) in specific cases. For example, for the MMD this occurs if the kernel is chosen to be a Kronecker delta function, K(x, y) = δxy. However, such hybrid losses are very much rare edge cases, and the overwhelming majority of losses are either explicit or implicit. A more detailed discussion of the technical nuances of the explicit and implicit loss distinction is provided in Supplementary Note I.

Loss measurement strategies

Central to the trainability of quantum generative models is the measurement strategy used to estimate the loss. Here we draw a distinction between conventional and quantum measurement strategies. For simplicity we now restrict our discussion to implicit quantum generative models such as the QCBM.

The conventional measurement strategy, which can be employed by both classical and quantum implicit models, starts by collecting sample data from the target and model distributions in the bases in which the data distribution is modeled, e.g., the computational basis for the case of classical data. For an implicit loss these samples can then be directly used to evaluate the loss function in Eq. (10). For an explicit loss, this is not possible, and instead one needs to use the collected samples to recreate an empirical estimate \({\tilde{q}}_{{\boldsymbol{\theta }}}\) of the true model distributions qθ.

More formally, as sketched in Fig. 1, consider the set of bitstrings \({\tilde{Q}}_{{\boldsymbol{\theta }}}\) obtained after collecting N samples from the model and the empirical model distribution \({\tilde{q}}_{{\boldsymbol{\theta }}}({\boldsymbol{x}})\) constructed from these samples. Then, the statistical estimate of the pairwise explicit loss function \(\tilde{{\mathcal{L}}}({\boldsymbol{\theta }})\) in Eq. (3) can be expressed as

Crucially, since this proxy is all we have access to, the properties of this statistical estimate are what determine the trainability of an explicit loss function when evaluated via the conventional strategy. We note that zero-estimates of the model probabilities with \({\tilde{q}}_{{\boldsymbol{\theta }}}({\boldsymbol{x}})=0\) are often “clipped” with a small regularization parameter ϵ ≪ 1 in order to avoid numerical instabilities in the loss computation.

This conventional strategy is somewhat classical in the sense that after sampling is performed on the quantum model, the post processing required to compute the cost is entirely classical. However, “more quantum” measurement strategies are also possible. In this case, a quantum circuit is used to compute functions of the probabilities, potentially more directly and/or collectively.

For example, rather than computing the classical fidelity in Eq. (8) by explicitly computing the probabilities qθ(x), one could encode the target distribution in a quantum state \(\left\vert \phi \right\rangle ={\sum }_{{\boldsymbol{x}}}\sqrt{\tilde{p}({\boldsymbol{x}})}\left\vert {\boldsymbol{x}}\right\rangle\) and compute the quantum fidelity

Up to arbitrary global phase factors (and a mod-square) this is equivalent to the classical fidelity. However, it can be computed via coherent strategies—namely a Loschmidt echo circuit72,73,74,75 or a SWAP test76,77. We note that in this case quantum generative modeling is equivalent to a state learning problem. While this expression seemingly requires the entire training dataset to be loaded into a wavefunction, we present an approach in Sec. II B 5 to estimate this cost using pairwise Hadamard tests.

More generally, it remains an open question if/when commonly encountered losses for generative modeling can be computed using quantum strategies and whether or not this brings any advantages (Beyond QCBMs, Quantum Generative Adversarial Networks (QGANs)78 trained with classical discriminators79,80,81 in effect use a conventional measurement strategy, whereas their variant with quantum discriminators82 use a quantum strategy.). Nonetheless, we suggest that this is an interesting avenue for future research.

Exponential concentration and barren plateaus

For a quantum generative model to be trained successfully, the loss landscape must be sufficiently featured to enable a solution to be found. There is a growing awareness of the importance of BPs, and its sister phenomenon exponential concentration, for QML23,24,25,26,27,28,29,30. A BP is a loss landscape where the magnitudes of gradients vanish exponentially with growing problem size23,25,26,27,28,29,31,32,33,34,35,36,37. Closely related and equally problematic is exponential concentration where the loss is shown to concentrate with high probability to a single fixed value24. This, with high probability, results in poorly trained models using a polynomial number of measurement shots (regardless of the optimization method employed)27. More precisely, exponential concentration can be formally defined as follows.

Definition 1

(Exponential concentration). Consider a quantity X(α) that depends on a set of variables α and can be measured from a quantum computer as the expectation of some observable. X(α) is said to be deterministically exponentially concentrated in the number of qubits n towards a certain fixed value μ if

for some b > 1 and all α. Analogously, X(α) is probabilistically exponentially concentrated if

for b > 1. That is, the probability that X(α) deviates from μ by a small amount δ is exponentially small for all α.

A number of causes of exponential concentration and BPs have been identified including using parameterized circuits that are too expressive23,25,31,43 or too entangling33,34,44. Hardware noise35,36,83 has also been shown to exponentially flatten the loss landscapes, which strongly hinders the potential of current noisy quantum devices. The exponential concentration can also happen due to randomness in the training dataset37,38,39. In addition, there are studies on the exponential concentration in different QML models including dissipative parametrized quantum circuits44 as well as quantum kernel-based models30.

Finally, the choice of loss function can also induce these phenomena. Thus far, loss concentration has predominantly been studied in the context of losses of the form

where ρ is an n-qubit input state and O is a Hermitian operator. In particular, it has been shown that “global”32 losses, i.e., those where O acts non-trivially on \({\mathcal{O}}(n)\) qubits, induce loss concentration even for very shallow random circuits. Conversely, local losses where O acts non-trivially on at most log(n) adjacent qubits (and more generally low-body losses where the adjacency constraint is lifted—see panel a) of (Fig. 5) have been shown to enjoy trainability guarantees32,40 with shallow unstructured circuits. Furthermore, we note that how BPs affect parametrized quantum circuits with a non-linear loss in the discriminative QML setting has been studied in ref. 37.

Here we study exponential concentration for generative modeling tasks on classical discrete data using implicit quantum generative models, and use our insights to establish guidelines of how best to train such models. Crucially, in this generative modeling context, the fixed points of the model probabilities tend to be exponentially small and the loss function contains the sum over exponentially many terms. These two together render previously used tools not directly applicable for studying the trainability of quantum generative models.

Large gradient variances are not enough

The presence or absence of BPs is usually diagnosed by computing the variance of the loss over a given parameter distribution. Crucially this is usually computed for the exact loss, i.e., not including the effect of shot noise. Here we argue that this approach can fail in the context of quantum generative modeling. In particular, if one computes the variance of the KLD loss then the loss variance can be non-exponentially vanishing even for very deep circuits. However, as we will argue in this section, the KLD loss is untrainable for both deep and shallow unstructured circuits if the model is implicit (i.e., only gives efficient access to samples and not to the probabilities).

We now show that the variance of the exact KL divergence depends directly on the support of the target distribution and hence can be polynomially large. This is quantified by the following proposition which we prove in Supplementary Note III.

Proposition 1

Consider the KLD loss as defined in Eq. (4). Assume access to the exact target distribution p(x) and the model distribution qθ(x). Then, we have

-

For deep (Haar random) parametrized circuit U(θ), the variance of the loss scales asymptotically (2n ≫ 1) as

$${{\rm{Var}}}_{{\boldsymbol{\theta }}}[{{\mathcal{L}}}^{{\rm{KLD}}}({\boldsymbol{\theta }})]=\frac{{\pi }^{2}}{6}\sum _{{\boldsymbol{x}}}{p}^{2}({\boldsymbol{x}}).$$(20) -

For a random tensor product circuit \(U({\boldsymbol{\theta }}){ = \bigotimes }_{i = 1}^{n}{U}_{i}({\theta }_{i})\) where Ui(θi) is a random single-qubit unitary, the variance of the loss scales as

$${{\rm{Var}}}_{{\boldsymbol{\theta }}}[{{\mathcal{L}}}^{{\rm{KLD}}}({\boldsymbol{\theta }})]=n-\frac{{\pi }^{2}}{6}\sum _{{\boldsymbol{x}},{{\boldsymbol{x}}}^{{\prime} }}p({\boldsymbol{x}})p({{\boldsymbol{x}}}^{{\prime} }){\left\Vert {\boldsymbol{x}}-{{\boldsymbol{x}}}^{{\prime} }\right\Vert }_{H},$$(21)where ∥ ⋅ ∥H is a Hamming distance.

It follows that the variance of the exact KLD can be non-exponentially vanishing even for a deep circuit, where one would generally expect a BP23, if the purity ∑x p2(x) of the target distribution is non-exponentially vanishing. For this condition to be met, all we need is that at least one probability p(x) of the target distribution is non-exponentially vanishing. This is captured by the following corollary.

Corollary 1

Under the same assumption as in Proposition 1, for the target distribution, at least one probability is at least polynomially large. Then, the variance of the KLD loss function does not vanish exponentially with the system’s size. That is, \(\exists {\boldsymbol{x}}:\,p({\boldsymbol{x}})\in \Omega \left(\frac{1}{{\rm{poly}}(n)}\right)\), we have

for some constant b > 1.

We note that any distribution with support on at most D bit strings necessarily has at least one probability that is 1/D large. Thus the support of a distribution lower bounds the variance of the exact KLD. This is reflected in Fig. 2. For the GHZ dataset, which has \({\mathcal{O}}(1)\) support, we observe a strong evidence for non-vanishing variance for all circuit depths. For linear and quadratic support datasets, the variances moderately decrease as the number of qubits increases for deep circuits.

Numerical evidence that the exact KLD loss can have a non-vanishing loss variance even when model probabilities exhibit exponential concentration. We study the loss concentration in randomly initialized line-topology circuits for various datasets, and increasing the number of qubits n and circuit depth. We emphasize that the model probabilities qθ(x) where evaluated exactly and in the absence of shot noise. We also show the infinite layer results beyond 6 qubits that are generated using Eq. (20). The GHZ dataset consists of the all-0 and all-1 bitstrings (\({\mathcal{O}}(1)\) support), the \({\mathcal{O}}(n)\) and \({\mathcal{O}}({n}^{2})\) datasets consist of n and n2 random bitstrings, respectively, and the cardinality dataset contains all bitstrings with \(\frac{n}{2}\) cardinality (\({\mathcal{O}}({2}^{n})\) support). There appears to be a strong data dependence for the magnitude of the loss variance, which could lead to exponential concentration.

Thus we see that for certain target probability distributions, the KLD does not exhibit a BP for explicit models. This suggests that quantum-inspired models that can provide direct access to probabilities (e.g., tensor network Born machines57,58) might be trainable with the KLD. However, current generative models running on quantum devices only provide access to samples from a distribution via measurements and, as we will argue in the next section, the large variance of the exact loss, in contrast to standard VQE-style losses, does not translate to substantial loss gradients in practise.

Trainability analysis on loss functions

In this section, we analyze the trainability of different loss functions used in quantum generative modeling.

Pairwise explicit losses

Part of the power of quantum generative models is that they can be used to continuously parameterize and express distributions over discrete data with exponential support. That is, an n-qubit model can be used to model distributions over 2n different n-bitstrings. However, while the true target distribution may have exponential support, the amount of training data \(\tilde{P}\) is in practise restricted. More precisely, for large n (e.g., n > 50), it is reasonable to assume that the number of bitstrings in the training dataset scales at most polynomially in n. Similarly, the number of bitstrings samples obtained from the model must also scale at most polynomially in n. That is, \(| \tilde{P}| ,| {\tilde{Q}}_{{\boldsymbol{\theta }}}| \in {\mathcal{O}}({\rm{poly}}(n))\).

This discrepancy between the polynomial support of the training data and the exponential support of the model, can make it highly challenging to train implicit models using pairwise explicit loss functions. In loose terms, the problem is that the only bitstrings that contribute to the evaluation of a statistical estimate of an explicit cost are those corresponding to bitstrings \(\tilde{P}\) in the training data. To estimate the loss one thus needs good estimates of the model distributions over the support of \(\tilde{P}\). However, for an implicit model these estimates are obtained via sampling and the set \(\tilde{P}\) contains an exponentially small proportion of the total number of bitstrings. As such, for generic models (i.e., those using no information about the particular dataset at hand), the probability of measuring any bitstring in the training set will also be exponentially small (as sketched in Fig. 3), leading to a poor statistical estimate of the loss. This observation was in fact one of the original motivations for moving away from the KLD and introducing the MMD loss in a quantum context in ref. 54 or follow-up works such as ref. 13.

In the space with 2n unique n-bit bitstrings, samples x generated from an uninformed model with high probability do not coincide with any of the training bitstrings. In other words, the empirical model distribution \({\tilde{q}}_{{\boldsymbol{\theta }}}({\boldsymbol{x}})\) and the training distribution \(\tilde{p}({\boldsymbol{x}})\) do not both have non-zero probabilities for any bitstring x. On the other hand, an implicit loss function such as the MMD provides a continuous measure of distance between the distributions by use of a Gaussian kernel with bandwidth σ.

Concentration of pairwise explicit losses

To make this line of argument more concrete, the first family of models we will consider are those where the individual model probabilities qθ(x) are exponentially concentrated over different values of θ. This is the case for a large family of unstructured parameterized quantum circuits. Since estimating qθ(x) is equivalent to computing the expectation value of the global projector \(| \boldsymbol{x}\rangle\langle \boldsymbol{x}|\), the concentration of qθ(x) can be viewed as resulting from the global-measurement-induced BP phenomenon32. In this case, concentration is observed even for an ansatz that is comprised of only a single layer of single-qubit rotations. However, alternative phenomena (e.g., noise35 or expressibility31) can also lead to the exponential concentration of qθ(x). More formally, the following proposition holds.

Proposition 2

(Concentration of model). For all possible bitstrings \({\boldsymbol{x}}\in {\mathcal{X}}\), the underlying probability qθ(x) of the quantum model exponentially concentrates towards some exponentially small fixed point μ ∈ O(1/bn) for b > 1 if the quantum generative model is constructed with:

-

A single layer of random single qubit gates \(U({\boldsymbol{\theta }}){ = \bigotimes }_{i = 1}^{n}{U}_{i}({{\boldsymbol{\theta }}}_{i})\). Or, more precisely, if \({\{{U}_{i}({{\boldsymbol{\theta }}}_{i})\}}_{{{\boldsymbol{\theta }}}_{i}}\) forms a local 2-design on qubit i32.

-

L layers of random k-local 2-designs, i.e., \(U({\boldsymbol{\theta }})=\mathop{\prod }\nolimits_{l = 1}^{L}\mathop{\bigotimes}\nolimits_{j=1}^{n/k}{U}_{l,j}({{\boldsymbol{\theta }}}_{l,j})\) with each Ul,j(θl,j) acting on k qubits and \({\{{U}_{l,j}({{\boldsymbol{\theta }}}_{l,j})\}}_{{{\boldsymbol{\theta }}}_{l,j}}\) forming a k-local 2-design over θl,j32.

-

A parameterized quantum circuit U(θ) such that its ensemble over θ i.e., {U(θ)}θ forms an approximate 2-design on n qubits23,31. This holds even for the problem-inspired circuits25.

-

A linear-depth quantum circuit subject to local Pauli noise between each layer35.

Proposition 2 provides examples of cases where the model probabilities exponentially concentrate over all bitstrings in \({\mathcal{X}}\). However, we find that in fact trainability difficulties arise even if model probabilities are only exponentially concentrated over the training dataset (but perhaps not on points outside the dataset). That is, all that is required for untrainability is that the probability of measuring a sample that is also in the dataset is practically zero. This is likely to be the case even for highly structured quantum circuits if the generative model is built without a strong inductive bias. We formalize this intuition in Supplementary Note II B.

We now argue that the exponential concentration of probabilities qθ(x) over the dataset causes \(\tilde{{\mathcal{L}}}({\boldsymbol{\theta }})\) to also exponentially concentrate. To understand why, let us look at the probability of measuring one specific bitstring (e.g., x0—the all-zero bitstring) and assume that qθ(x0) is exponentially concentrated towards some exponentially small value μ. Then, for any given parameter constellation, it is highly likely that qθ(x0) is exponentially close to μ. To estimate qθ(x0) on a quantum computer we sample N bitstrings from the quantum model and record the observations. The chance that none of the sampled bitstrings are the specific bitstring that we are interested in is \({(1-{q}_{{\boldsymbol{\theta }}}({{\boldsymbol{x}}}_{0}))}^{N}\approx 1-N\mu\). However, the number of circuits N that can be efficiently run is necessarily limited—here we will assume N ∈ poly(n). Thus we have that the probability of not measuring the bitstring we are interested in is exponentially close to 1. That is, the statistical estimate of \({\tilde{q}}_{{\boldsymbol{\theta }}}({{\boldsymbol{x}}}_{0})\) is almost always zero. We can then generalize this intuition for a single bitstring to the estimation of each of the (polynomially many) target bitstrings and therefore the whole loss function. The following theorem formalizes this argument.

Theorem 1

(Concentration of pairwise explicit loss for concentrated models). Consider the loss function of the form in Eq. (3). Assume that for all bitstrings in the training dataset, \({\boldsymbol{x}}\in \tilde{P}\), the quantum generative model qθ(x) exponentially concentrates towards some exponentially small value (as defined in Definition 1). Suppose that \(N\in {\mathcal{O}}({\rm{poly}}(n))\) samples are collected from the quantum model corresponding to the set of sampled bitstrings \({\tilde{Q}}_{{\boldsymbol{\theta }}}\), and that the training dataset \(\tilde{P}\) contains \(M\in {\mathcal{O}}({\rm{poly}}(n))\) samples. We define the fixed point of the loss as

with \({\mathcal{P}}\) (and \({{\mathcal{Q}}}_{{\boldsymbol{\theta }}}\)) being a set of unique bitstrings in \(\tilde{P}\) (and \({\tilde{Q}}_{{\boldsymbol{\theta }}}\)). Then, the probability that the estimated value \(\tilde{{\mathcal{L}}}({\boldsymbol{\theta }})\) is equal to \({{\mathcal{L}}}_{0}(\tilde{P},{\tilde{Q}}_{{\boldsymbol{\theta }}})\) is exponentially close to 1, i.e.,

with \(\delta \in {\mathcal{O}}\left(\frac{{\rm{poly}}(n)}{{c}^{n}}\right)\) for some c > 1.

As a direct consequence of Theorem 1, the following corollary gives the concentration points of some specific explicit loss functions mentioned in this work.

Corollary 2

(Concentration points of common explicit loss functions). Under the same conditions as in Theorem 1, the following loss functions concentrate at

-

KL-divergence:

$${{\mathcal{L}}}_{0}^{{\rm{KLD}}}(\tilde{P},{\tilde{Q}}_{{\boldsymbol{\theta }}})=\sum _{{\boldsymbol{x}}\in {\mathcal{P}}}\tilde{p}({\boldsymbol{x}})\log \left(\frac{\tilde{p}({\boldsymbol{x}})}{\epsilon }\right).$$(25)Here ϵ ≪ 1 is a clipping value, which is common practice to avoid the singularity of the logarithm at qθ(x) = 0.

-

Classical fidelity:

$${{\mathcal{L}}}_{0}^{{\rm{CF}}}(\tilde{P},{\tilde{Q}}_{{\boldsymbol{\theta }}})=1.$$(26) -

Reverse KL-divergence:

$${{\mathcal{L}}}_{0}^{{\rm{rev}}-{\rm{KLD}}}(\tilde{P},{\tilde{Q}}_{{\boldsymbol{\theta }}})=\sum _{{\boldsymbol{x}}\in {{\mathcal{Q}}}_{{\boldsymbol{\theta }}}}{\tilde{q}}_{{\boldsymbol{\theta }}}({\boldsymbol{x}})\log \left(\frac{{\tilde{q}}_{{\boldsymbol{\theta }}}({\boldsymbol{x}})}{\epsilon }\right).$$(27) -

Total variation distance:

$${{\mathcal{L}}}_{0}^{{\rm{TVD}}}(\tilde{P},{\tilde{Q}}_{{\boldsymbol{\theta }}})=2.$$(28)

Looking at the expressions for the fixed points given above, in the case of the KL divergence, classical fidelity and total variational distance, the fixed point is independent of θ. Thus it is clear that the costs cannot be used to train the quantum circuit model. In the case of the reverse KL divergence, the fixed point depends on θ but is independent of the training data and thus the reverse KL also cannot be used to train the model to learn the target distribution.

More generally, for all explicit losses of the form Eq. (3), the concentration point \({{\mathcal{L}}}_{0}(\tilde{P},{\tilde{Q}}_{{\boldsymbol{\theta }}})\), Eq. (23), can be separated into two terms: (i) the term that involves only \(\tilde{P}\) and (ii) the other that involves only \({\tilde{Q}}_{{\boldsymbol{\theta }}}\). In other words, the θ dependence of the estimator of the loss is independent of the target distribution and thus the estimate of the loss is worthless for training the generative model. This no-go result is rigorously established in Corollary 3. Our approach is to show that the loss function at two arbitrary parameter values θ1 and θ2, contains no information about the training distribution.

Corollary 3

(Untrainability of pairwise explicit loss functions). Under the same conditions as in Theorem 1, the probability that the difference between the two statistical estimates of the loss function at θ1 and θ2 does not contain any information about the training distribution is exponentially close to 1. Particularly, we have

with \(\delta \in {\mathcal{O}}\left(\frac{{\rm{poly}}(n)}{{c}^{n}}\right)\) for some c > 1, \({\tilde{Q}}_{{{\boldsymbol{\theta }}}_{1}}\) (and \({\tilde{Q}}_{{{\boldsymbol{\theta }}}_{2}}\)) is a set of sampling bitstrings obtained from the quantum generative model at the parameter value θ1 (and θ2), as well as

with \({{\mathcal{Q}}}_{{{\boldsymbol{\theta }}}_{1}}\) (and \({{\mathcal{Q}}}_{{{\boldsymbol{\theta}}}_{2}}\)) being a set of unique bit-strings in \({\tilde{Q}}_{{{\boldsymbol{\theta }}}_{1}}\) (and \({\tilde{Q}}_{{{\boldsymbol{\theta}}}_{2}}\)). Crucially, \(\Delta {{\mathcal{L}}}_{0}({\tilde{Q}}_{{{\boldsymbol{\theta }}}_{1}},{\tilde{Q}}_{{{\boldsymbol{\theta }}}_{2}})\) does not depend on any \(\tilde{p}({\boldsymbol{x}})\in \tilde{P}\).

To support our analytic claims we further conducted a numerical study of the exponential concentration of pairwise explicit costs. For concreteness, we here decided to focus on the KL divergence. In Fig. 4, we plot the mean and variance (over θ) of the KL divergence for the target distribution \(\tilde{p}({\boldsymbol{0}})=1\) as a function of the number of measurement shots and qubits. For simplicity we take our model to be a (Haar) random product state.

Concentration of the KL divergence loss as a function of the number of measurements and qubits for random product state circuits. Here we take the target distribution to be \(\tilde{p}({\boldsymbol{0}})=1\) and take the cutoff of the KLD to be ϵ = 10−14. Vertical lines indicate where the number of measurements equal 2n. Thus, we see that the the KLD estimate is biased upwards with any finite number of measurements, and the number of measurements required to achieve a reasonable level of uncertainty increases exponentially with the number of qubits n.

We see in (Fig. 4a) that with a polynomial number of measurements, as per Eq. (25), the empirical estimate of the loss concentrates at \(\log (1/\epsilon )\approx 32.2\) for ϵ = 10−14. Correspondingly, with a polynomial number of measurements the variance in (Fig. 4b) is exponentially close to zero. Using an exponential number of measurements, the estimate of the KL tends towards its true value and the variance is again small. The transition between these two regimes is marked by a very high variance corresponding to the case where the measurement count is high enough for there to be some overlap between the sampled bits strings and the 0 bitstring, but not enough overlap to obtain a reliable estimate of qθ(0). This results in the loss estimate to sporadically fluctuate between \(\log (1/\epsilon )\) and \(\log (1/{q}_{{\boldsymbol{\theta }}}({\boldsymbol{x}}))\) with qθ(x) > 0. While in Fig. 4 the target dataset consists of a single bitstring, larger datasets only shift the curves to the left by a polynomial amount.

Broader Implications. While our results above are formulated for training QCBMs with pairwise explicit costs, we argue that the underlying problem is more general and immune to simple solutions. One approach, for example, might be to take non-data-dependent functions of pairwise explicit losses, as in the case of the Rényi-divergence in Eq. (9). However, such loss functions exponentially concentrate in the same manner as the explicit losses themselves when employing the conventional measurement strategy. A more promising but challenging approach would be to attempt to measure such losses via quantum strategies. We discuss this further in Section “Quantum strategies: quantum fidelity”.

More generally, while we provide strict no-go results only for pairwise explicit losses, we believe that any explicit losses in the general form of Eq. (2) will suffer from concentration or exponential imprecision due to the inherent inability of implicit models to accurately estimate the model probabilities in polynomial time (A possible exception is if a particular implicit model instead allows for efficient estimation of gradients of an explicit loss function, as it is the case for RBMs training on the KL divergence loss function.). We are however not aware of any practical explicit loss function that cannot be brought into the pairwise explicit form.

We further stress that our results hold for unstructured ansätze or ansätze that lack an appropriate inductive initial bias. Thus, while explicit losses such as the KLD will not work at scale with implicit models straight out-of-the-box, our no-go theorems could be side-stepped using clever initialization strategies in conjunction with specialized ansätze. For example, while we argue in Supplementary Note II B that initializing the quantum circuit model on a subset of training states will not alleviate the fundamental issue when using a generic ansatz, this may work if one leverages a quantum circuit that constrains the model to the symmetry sector of the data. Among other hard constraints, this is conceivable if the data consists only of samples with a certain hamming weight or cardinality, as it can be the case in certain financial applications84,85. However, many real-world datasets may not contain strong symmetries that one can leverage so straightforwardly. It is therefore critically important to study the effect of strong parameter initializations and inductive biases using explicit losses—both theoretically and experimentally.

Implicit losses: maximum mean discrepancy

In the previous section we saw that an explicit loss function, used in conjunction with an implicit generative model and the conventional sampling strategy, exhibits exponential concentration and hence is untrainable. The root cause was, at least in part, a miss-match between using an explicit loss function with an implicit model. Thus it is natural to ask whether an implicit quantum loss would fare better.

Here we focus on analyzing the MMD loss function (see Eqs. (11) and (13)), which is a commonly-used implicit loss. In contrast to the pairwise explicit losses discussed previously, each bitstring drawn from the model is generally compared with all training bitstrings, with the kernel function K(x, y) controlling the contribution of each comparison. With a poor choice in kernel it is clear that the MMD will be susceptible to exponential concentration. For example, the Gaussian kernel with the bandwidth σ → 0 is equivalent to a delta function kernel, K(x, y) = 〈x, y〉 = δxy. In this case the MMD reduces to the pairwise explicit loss \({\sum }_{{\boldsymbol{x}}\in {\mathcal{X}}}{(p({\boldsymbol{x}})-{q}_{{\boldsymbol{\theta }}}({\boldsymbol{x}}))}^{2}\) (see Supplementary Note I for details), and consequently is subject to our no-go result in Theorem 1. This thus prompts the question of how exactly σ affects trainability.

Properties of the MMD loss. To study the properties of the MMD loss, it is helpful to note that each term in the MMD can be viewed as the expectation value of an observable whose properties depend on the choice of σ. This change in perspective allows us to leverage existing knowledge from the VQA trainability literature. In particular, prior no-go results on VQAs with observable-type loss functions are now directly applicable here, including those on cost function induced32, expressiblity-induced23,31, and noise-induced35 BPs.

Specifically, each term in the MMD can be written as

where we have defined the MMD observable

This observable acts on 2n qubits, namely n qubits corresponding to the QCBM, \({\rho }_{{\boldsymbol{\theta }}}=| \psi ({\boldsymbol{\theta }})\rangle\langle \psi ({\boldsymbol{\theta }})|\), and n qubits corresponding to the dataset, \({\rho }_{\tilde{p}}={\sum }_{{\boldsymbol{y}}}\tilde{p}({\boldsymbol{y}})| \boldsymbol{y}\rangle\langle \boldsymbol{y}|\). For the first term in the MMD, both x and y are sampled from the QCBM and we have \(\rho ={\rho }^{{\prime} }={\rho }_{{\boldsymbol{\theta }}}\). The cross-term instead has ρ = ρθ and \({\rho }^{{\prime} }={\rho }_{\tilde{p}}\), and the final term has \(\rho ={\rho }^{{\prime} }={\rho }_{\tilde{p}}\).

In the Pauli basis, the MMD observable \({O}_{{\rm{MMD}}}^{(\sigma )}\) takes the elegant form

where D2l are normalized 2l-body diagonal operators (defined explicitly in Supplementary Note IV A), and

are Bernoulli-distributed weights with effective probability

Thus estimating the MMD loss function in Eq. (11) using a batch of measurements \(\tilde{Q}\) is equivalent to using the same measurements to estimate a weighted expectation of the observables D2l.

The properties of the MMD observable clearly depend on the distribution of the terms of different bodyness through the wσ(l) factor. Figure 5 shows how wσ(l) are distributed for different σ. Owing to the Bernoulli-distributed weights, we can straightforwardly provide the average bodyness of \({O}_{{\rm{MMD}}}^{(\sigma )}\), which is given by

and the variance in the bodyness, which is

From these expressions it follows that the MMD loss is predominantly composed of global operators when \(\sigma \in {\mathcal{O}}(1)\). More concretely the following proposition holds.

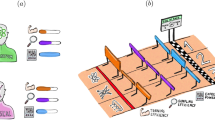

a We illustrate the difference between “low-body”, “local”, “global” and “full-body” operators. An operator O is low-bodied if it acts non-trivially on at most \({\mathcal{O}}(\log (n))\) qubits. If a low-bodied operator acts on qubits that are adjacent to each other, then O is said to be local. On the other hand, O is global if it acts non-trivially on Θ(n) qubits. Lastly, a full-body operator consists of the sum of several operators that are low-body/local and global. b We depict the weight wσ(k) for the terms in the MMD operator as a function of their bodyness k for n = 50, 100, and 200 qubits and the bandwidths σ = 0, 1, and n/4. The average weight over these three σ values is also shown. For small σ, the MMD operator is a sum of predominantly global operators, i.e., with \(\sigma \in {\mathcal{O}}(1)\) the mean bodyness is Θ(n). In contrast, σ ∈ Θ(n) results in predominantly low-bodied operators. c Sketch of the expected landscapes for low-body, global and full-body losses respectively. Because low-body and global operators are exclusively sensitive to low-body and global features, respectively, their loss landscapes exhibit spurious minima, which don’t coincide with the minimum of the true target distribution. A full-body loss on the other hand should have a single optimal solution solution where all its constituent operator’s minima align.

Proposition 3

(MMD consists largely of global terms for \(\sigma \in {\mathcal{O}}(1)\)). For \(\sigma \in {\mathcal{O}}(1)\), the average bodyness of the MMD operator containing Pauli terms with weight wσ(l) is

Similarly, the variance in the bodyness is given by

This shows that with fixed-size bandwidths σ, as is commonly done (e.g., ref. 54), the MMD suffers from global loss function-induced BPs32 and hence is untrainable. This practice of using constant bandwidths is carried over from classical ML literature86,87,88, but Proposition 3 shows that this is fundamentally incompatible with quantum generative models using unstructured circuits.

In contrast, we show that if the bandwidth scales linearly in the number of qubits, σ ∈ Θ(n), the MMD loss function is approximately low-bodied. We recall that being low-bodied is more general than being local, the latter corresponding to the case where an operator is low-bodied and each term only acts non-trivially on adjacent qubits. The following proposition formalizes this relation by quantifying the error made when truncating the MMD observable after a certain bodyness.

Proposition 4

(MMD consists largely of low-body terms for σ ∈ Θ(n)). Let \({\tilde{{\mathcal{L}}}}_{{\rm{MMD}}}^{(\sigma ,k)}({\boldsymbol{\theta }})\) be a truncated MMD loss with a truncated operator \({\tilde{O}}_{{\rm{MMD}}}^{(\sigma ,k)}\) that contains up to the 2k-body interactions in \({O}_{{\rm{MMD}}}^{(\sigma )}\),

where wσ(l) are Bernoulli-distributed weights defined in Eq. (34). For σ ∈ Θ(n), the difference between the exact and local approximation of the loss is bounded as

with

for some positive constant c.

This implies that one can view the MMD loss with a bandwidth σ ∈ Θ(n) as composed almost exclusively of low-body contributions. We therefore expect, given the results of refs. 32,40, that the the MMD is trainable for σ ∈ Θ(n) for quantum generative models which employ shallow quantum circuits. We note that there appears to be no merit in increasing σ beyond Θ(n), as that simply increases the relative weight of the constant l = 0 term in Eq. (33). That is, the MMD operator tends towards the trivial identity measurement for σ → ∞.

To probe this further, and get a better understanding of the effect of σ on the trainability of the MMD loss, we start by considering the case of QCBM with a product ansatz. This allows us to find a closed-form expression of the MMD variance as a function of the circuit parameters (Supplemental Proposition 2) from which we can study the concentration of the MMD for different σ values. Our findings are summarized by the following Theorem (proven in Supplementary Note IV B 3).

Theorem 2

(Product ansatz trainability of MMD, informal). Consider the MMD loss function \({{\mathcal{L}}}_{{\rm{MMD}}}^{(\sigma )}({\boldsymbol{\theta }})\) as defined in Eq. (11), which uses the classical Gaussian kernel as defined in Eq. (12) with the bandwidth σ > 0, and a quantum circuit generative model that is comprised of a tensor-product ansatz \(U{ = \bigotimes }_{i}^{n}{U}_{i}({\theta }_{i})\) with \({\{{U}_{i}({\theta }_{i})\}}_{{\theta }_{i}}\) being single-qubit (Haar) random unitaries. Given a training dataset \(\tilde{P}\), the asymptotic scaling of the variance of the MMD loss depends on the value of σ.

For \(\sigma \in {\mathcal{O}}(1)\), we have

with some b > 1.

On the other hand, for σ ∈ Θ(n), we have

We numerically verify Theorem 2 in Fig. 6. In panel (a) we show that the analytical predictions for different bandwidths coincide perfectly with the numerical estimates. The exponentially vanishing loss variances observed for σ ∈ O(1) are expected to render the loss untrainable. This is demonstrated in panel (b), where we further train a QCBM with σ = n/4 (which approximately maximizes the variance) and σ = 1. We find that a QCBM with σ = n/4 can be successfully trained even for n = 1000 qubits. In contrast, the training starts to fail to learn the \(\left\vert {\boldsymbol{0}}\right\rangle\) target state after n ≈ 50 and is fully untrainable at n = 100 when σ = 1 is used.

a Comparison of the MMD variance between the analytical prediction (see Eq. (182) in Supplementary Information for an exact analytical expression) and empirical variance using 100 measurements from a product state ansatz. b Training a product state ansatz on the \(\left\vert {\boldsymbol{0}}\right\rangle\) target state for σ = 1 and σ = n/4 using the CMA-ES113 optimizer and 512 measurements. In both cases the QCBM ansatz consists of a single layer of Ry rotations on each qubit.

It is interesting to note that the approximately optimal bandwidth \(\sigma \sim \frac{n}{4}\) for the product state ansatz coincides with the so-called median heuristic51 from classical ML literature. For random circuits, the median (hamming) distance between bitstrings is in fact \(\frac{n}{2}\), which we satisfy with the factor of 2 in our kernel convention.

To go towards more practical generative modeling, we recall that ref. 40 proves that cost functions of the form of Eq. (19) using 2k-body observables with \(k\in {\mathcal{O}}(\log (n))\) are trainable using 1D-random \(\log (n)\) depth circuits. Since Proposition 4 implies that the MMD is well approximated by a \(\log (n)\)-body cost, it should follow that the MMD is also trainable at \(\log (n)\) depths. There are a few technical caveats associated with constructing a full proof. For example, the first term of the MMD requires working with 4-designs instead of 2-designs, and the second term depends on the target distribution, leading to additional subtleties. However, there is no strong reason to expect that these technicalities make the MMD untrainable.

To extend the trainability result beyond a simple tensor product ansatz, we consider a generic Pauli rotation ansatz of the form UPQC(θ) = U(α)Utensor(β) where

such that the generators \({\{{G}_{k}\}}_{k = 1}^{M}\) are some n-qubit Pauli strings \({G}_{k}\in {\{{\mathbb{1}},X,Y,Z\}}^{n}\) and \({\{{V}_{k}\}}_{k = 1}^{M}\) is a set of non-parametrized Clifford gates, and \({U}_{{\rm{tensor}}}({\boldsymbol{\beta }}){ = \bigotimes }_{i = {1}^{n}}{U}_{i}({\beta }_{i})\) is a layer of random single qubit rotations Ui(βi). We additionally assume all parameters are uncorrelated. Then, we have the variance of the MMD loss scales as follows.

Theorem 3

(General Pauli rotation ansatz trainability of MMD, informal). Consider a general Pauli rotation ansatz of the form in Eq. (45) and the MMD loss function as defined in Eq. (11) using the Gaussian kernel in Eq. (12) with the bandwidth σ ∈ Θ(n). As long as the average light cone of the back-propagated MMD observable with \({U}^{{\prime} }({\boldsymbol{\alpha }})\) remains in the order of \(\log (n)\), then the QCBM is trainable in the sense that

Theorem 3 indicates that in practice one has to only determine the average light cone of the effective MMD observable back-propagated by a given ansatz (see Eq. (243) in Supplementary Note IV C for a formal definition) to have a trainability guarantee. In Supplementary Note IV C, we provide further details on how to compute this average light cone for the Pauli rotation ansatz. An example of an ansatz satisfying the few-body light cone condition is a shallow depth circuit with nearest neighbor connectivity (which could be either hardware efficient or problem inspired). Crucially, we emphasize that this light cone argument goes beyond the 2-design assumption and is expected to work even more generally to any ansatz that may not even be in the Pauli rotation form.

It is worth noting that as few mild technical assumptions are required to more formally state Theorem 3. These, along with our proof are provided in Supplementary Note IV C. We further remark that although some proof techniques are similar to ref. 89, the main technical challenges here are to analytically show that the covariance between different terms which involve higher moments vanish and compute the exact form of the variance lower bound of the purity term. We further remark that although some proof techniques are similar to ref. 89, the main technical challenges here have arisen from dealing with the two system registers of the MMD which involves computing the exact form of the higher moments.

Theorem 3 is further supported by our numerical evidence for the trainability of the MMD for deeper circuits and more realistic datasets shown in Fig. 7. Here we plot the loss variance as a function of circuit depth L and the number of qubits n for σ = n/4 on four datasets from four different target distributions. We observe that the polynomial scaling of the loss variance does in fact extend beyond product states to shallow circuits, i.e., \(L\in {\mathcal{O}}(\log (n))\). However, for sufficiently deep circuits, i.e., L ∈ Ω(n), the MMD variance appears to decay exponentially. This aligns with expressibility-induced BPs observed in other VQA applications, which occur even for maximally local loss functions, i.e., k = 1.

Numerical evidence that the MMD loss with Gaussian bandwidth parameter σ = n/4 does not exhibit global or explicit loss function barren plateaus, but does exhibit loss concentration with deep quantum circuits. We study the loss concentration in randomly initialized line-topology circuits for various datasets, and increasing number of qubits n and circuit depth. The infinite layers results were generated by drawing wavefunctions from the Porter-Thomas distribution114. The GHZ dataset consists of the all-0 and all-1 bitstrings (\({\mathcal{O}}(1)\) support), the \({\mathcal{O}}(n)\) and \({\mathcal{O}}({n}^{2})\) datasets consist of n and n2 random bitstrings, respectively, and the cardinality dataset contains all bitstrings with \(\frac{n}{2}\) cardinality (\({\mathcal{O}}({2}^{n})\) support). There does not appear to be a strong data-dependence for the magnitude of the loss variance.

The role of local minima

Our results so far appear to indicate that picking a single bandwidth σ ∈ Θ(n) maximizes the trainability of the MMD loss function with a Gaussian kernel. While it is true that this choice maximizes the expected magnitude of initial gradients for a QCBM, non-vanishing gradients are a necessary condition but not sufficient to guarantee reliable training performance. And in fact it turns out that while low-body losses exhibit large gradients they come with other limitations. Particularly, we show that the bodyness of a generative loss function defines the maximal order of marginals of the target distribution that can be distinguished. That is, the model only learns to match the target distribution on subsets of bits, i.e., on its marginals. This introduces a continuous family of minima which are indistinguishable from the true minimum when using a low-bodied loss function, but which are systematically wrong for the purposes of generative modeling. The worry is that the non-vanishing loss gradients in low-bodied losses are predominantly due to the presence of such spurious minima and do not point in the direction of the true global minimum. This is sketched in Fig. 5.

Formally, let qθ(xA) denote the marginal model distribution on a subset A ⊆ {1, 2, …, n} of qubits, and \(\tilde{p}({{\boldsymbol{x}}}_{A})\) the marginal target distribution on that same subset. For more details we refer to Eq. (368) and Eq. (370) in Supplementary Note IV D. The connection between the bodyness of the loss operator and the marginals of the model and target distributions is then formalized in the following Proposition.

Proposition 5

(The truncated MMD loss is not faithful). Consider a distribution qθ(x) that agrees with the training distribution \(\tilde{p}({\boldsymbol{x}})\) on all the marginals up to k bits, but disagrees on higher-order marginals. The distribution qθ(x) minimizes the truncated MMD loss. That is, suppose

for all A ⊆ {1, 2, …, n} with ∣A∣ ⩽ k, then

Crucially, this is true even if for some B ⊆ {1, 2, …, n} with ∣B∣ > k

In other words, if the MMD operator can be approximated well by a truncated operator with at most 2k-body terms, model distributions that match the target distribution exactly up to k-body marginals or higher cannot be distinguished from ones that match fully. As an example of such distributions, consider the uniform distribution over the bitstrings [001, 011, 101, 110], where the third bit is the bit-wise addition of the previous two bits. Using only second-order marginals, it is not possible to distinguish this correlated distribution from the uniform distribution over all eight possible outcomes.

Notably, long-range correlations in the data can still be learned by the low-bodied MMD loss, just not ones that are particularly high-order (Note that this is in contrast to a loss composed purely of local terms which would be restricted to learning local/short-range correlations.). Not all distributions will however exhibit such higher-order correlations and thus some distributions will be learnable using losses composed of low-body terms. Viewing this result through the lens of generalization to the underlying distribution, there are two opposing consequences that would need to be studied in future works. On the one hand, convergence to the systematically wrong low-order minima is likely to impede generalization. On the other hand, one could also imagine that being ignorant to very high-order marginals of the training set could reduce overfitting and thus enhance generalization.

Proposition 5 thus establishes that to fulfill the promise of quantum generative models, that is to be able to learn both long-range and many-body correlations, one cannot use exclusively low-body losses. However, such a requirement is in immediate tension with the low-bodyness required for the trainability guarantees (see Theorem 2). In particular, in Proposition 4 we show that for σ ∈ Θ(n) the contribution of k ∈ Θ(n) terms are exponentially small in n. Thus, although the loss is still strictly faithful given an infinite shot budget, with a reasonable shot budget we will not be able to resolve the contribution from the exponentially small high-body terms. Hence, there can be spurious minima that we cannot resolve from the true minimum and therefore for all practical purposes the loss is effectively not truly faithful.

One approach to resolving this tension would be to adapt the initial value of σ from Θ(n), where the loss exhibits large gradients but predominantly learns low-order marginals, towards \({\mathcal{O}}(1)\) to also learn high-order correlations as the model improves. This is in line with studies from the classical ML literature showing that bandwidths for optimal MMD performance are oftentimes smaller than the so-called median heuristic90,91,92, which coincides with our result of σ ∈ Θ(n). Another approach, which is also already employed in classical ML literature, is to use a kernel that averages the effects of several σ86,87,88. That is, the kernel is taken to be

for a set of bandwidths c = {σ1, σ2, …}. The resulting weight of each 2k—body term of the new MMD observable is an average of the weightings corresponding to each σi in c as shown in Fig. 5. Theorem 2 shows that for a QCBM without inductive bias to not fall prey to exponential concentration, at least one of the σi needs to be Θ(n). But the results of Proposition 5 suggest that for data sets exhibiting high-order correlations a small bandwidth \({\sigma }_{i}\in {\mathcal{O}}(1)\) is required for correct convergence. It stands to reason that the optimal set c contains a spectrum of bandwidths that both enable trainability and faithful convergence to the target distribution (as sketched in Fig. 5c). How successful this strategy is in practice remains to be determined.

Broader Implications. Our work highlights that one can treat classical machine learning losses as quantum observables to study their properties. This implies that our results transfer to other types of quantum generative models beyond the QCBM that will also be affected by the fundamental limitations described by Proposition 5. In fact, we show in Supplementary Note IV E that any generative modeling loss function for classical data that can be brought into the form \({\mathcal{L}}({\boldsymbol{\theta }})={\rm{Tr}}[{\mathcal{M}}{\rho }_{{\boldsymbol{\theta }}}]\), with a diagonal measurement operator \({\mathcal{M}}\), faces the same tension described above. That is, if \({\mathcal{M}}\) contains at most k-body terms in the Pauli basis representation, then the loss cannot distinguish two distributions that agree on all k-order marginals but disagree on higher-order marginals. Thus losses composed exclusively of local terms (with the conventional measurement strategy) cannot be used in generative modeling to learn complex higher-order correlations.

With a little thought it becomes clear that an exclusively global loss is also undesirable. Not only do such losses exhibit exponential concentration for unstructured circuits, they will also in general possess spurious minima in virtue of only probing global properties of the distribution (i.e., the average global parity), as shown in Fig. 5. Instead we advocate using full-body losses which contain both low and high-body terms, such as those obtained by averaging in Fig. 5. Even then, global contributions cannot be vanishingly small or else they will not be possible to resolve with a realistic shot budget.

For another example, one may aim to train a quantum generative model using a QGAN framework, where a Discriminator D provides a score D(x) to every sample. The corresponding operator can then be written as \({\mathcal{M}}={\sum }_{{\boldsymbol{x}}}D({\boldsymbol{x}})| \boldsymbol{x}\rangle\langle \boldsymbol{x}|\). The Discriminator may have to initially implement an effectively low-bodied operator to facilitate initial gradients, but later in training become higher-bodied to learn global features. That is not to say that the Discriminator should only classify marginals of the bitstring such as in ref. 93. Rather, the architecture and initialization should be such that the operator \({\mathcal{M}}\) in the Pauli basis initially contains low-body terms but can include high-body terms during convergence. Interestingly, the interpolation from trainable to faithful could be naturally full-filled during training when the Generator and Discriminator are optimized in tandem.

Fine-tuning the interplay between the loss function gradients, density of local minima and the faithfulness of a generative loss is beyond the scope of this work, but is an important direction for future research. We especially emphasize the necessity to evaluate the implications of our results on models and datasets of practical relevance. In Section “Training on a HEP dataset” we take steps in this direction by investigating training a QCBM to model real data from the HEP domain.

Quantum strategies: quantum fidelity

While the classical fidelity in Eq. (8) is an explicit cost function, the quantum fidelity, defined in Eq. (15), allows for a simple known quantum estimation strategy. Key to the quantum fidelity loss is to interpret the training distribution as a target state \(\left\vert \phi \right\rangle ={\sum }_{{\boldsymbol{x}}}\sqrt{\tilde{p}({\boldsymbol{x}})}\left\vert {\boldsymbol{x}}\right\rangle\). The QCBM model loss can then be rewritten as the expectation of an observable, e.g., in the form of Eq. (19), with \(\rho = |\phi\rangle \langle\phi|\) and \(O=|\boldsymbol{0}\rangle \langle \boldsymbol{0}|\) being the all-zero projective measurement. Crucially, as \(O=|\boldsymbol{0}\rangle \langle \boldsymbol{0}|\) is a global projector, the quantum fidelity is subject to a globality-induced BPs32 and the loss exponentially concentrates towards one24. That is, we have

This global-measurement-induced BP can however be avoided by localizing \({{\mathcal{L}}}_{QF}({\boldsymbol{\theta }})\). That is, we replace the global projective measurement \(|0\rangle \langle 0|\) with its local version \({H}_{L}=\frac{1}{n}\mathop{\sum }\nolimits_{i = 1}^{n}| 0_i \rangle \langle 0_i| \otimes {{\mathbb{1}}}_{\bar{i}}\), where \(\bar{i}\) indicates all qubits except qubit i. The new localized version of the quantum fidelity loss is given by

This local loss is faithful to its global variant for product state training in the sense that it vanishes under the same conditions94, i.e., when the QCBM distribution matches the data distribution exactly. However, it enjoys trainability guarantees via the results of ref. 32. This implies that, unlike the MMD and other classical losses that utilize the conventional measurement strategy, the LQF can effectively distinguish between high-order marginals even at k = 1 bodyness. However, although the local loss function can evade global measurement-induced BPs, it still suffers under BPs from other sources, such as expressibility or noise. Additionally, it is not yet explored how practical a fidelity loss is for the purposes of generalizing from training data.

Figure 8 depicts numerical variance results for the fidelity loss on a range of datasets, circuit depths, and numbers of qubits. For all datasets, the LQF exhibits only polynomially decaying variance over random parameters when the quantum circuits are not too deep. As a reference, we additionally depict the global quantum fidelity which exponentially decays for all circuit depths.

Numerical evidence that the local quantum fidelity loss function does not exhibit global or explicit loss function barren plateaus. It does however exhibit expressivity-induced barren plateaus with deeper and deeper circuits, as it is the case for all generic VQA-type loss functions in the form of Eq. (19). In contrast, the global quantum fidelity variance decays exponentially at all circuit depths. The numerical setup is the same as for the MMD in Fig. 7, and the infinite layers results were generated by drawing wavefunctions from the Porter-Thomas distribution114.

The challenge now becomes how to estimate \({{\mathcal{L}}}_{QF}^{(L)}({\boldsymbol{\theta }})\) using measurements from the quantum computer. The seemingly straight-forward approach is to prepare the initial state \(\left\vert \phi \right\rangle\), evolve it under U†(θ), and then evaluate the observable defined by HL through measurements in the computational basis. However, loading classical data into a quantum state \(\left\vert \phi \right\rangle\) is not expected to be feasible in general. In Supplementary Note V, we propose an approach that can be used to estimate \({{\mathcal{L}}}_{QF}^{(L)}({\boldsymbol{\theta }})\) using a series of Hadamard tests without needing to prepare \(\left\vert \phi \right\rangle\). We note that, while in theory our approach requires a number of Hadamard tests that scales with the amount training data, we expect stochastic techniques, such as stochastic gradient descent95, to be sufficient in practice.

Broader Implications. In this section, we have presented one example of a quantum strategy to measure a fidelity-based loss for quantum generative modeling. While this approach puts more load on the quantum computer as compared to losses employing the conventional measurement strategy, it enjoys simultaneous trainability and faithfulness to the target distribution.

An interesting extension would be to explore other quantum approaches for efficiently training QML models. One could for example attempt to compute the KL divergence or other explicit losses directly on the quantum computer. Although the implementation of non-linear operations on quantum computers has been demonstrated in refs. 96,97,98, we are not yet aware of quantum strategies beyond one related demonstration for the Rènyi divergence in ref. 46. One alternative approach would be to attempt to indirectly turn the QCBM into an explicit generative model by estimating its probabilities using amplitude amplification or other techniques. As discussed in Section “Large gradient variances are not enough”, with access to exact probabilities the KL divergence can provably avoid BPs even with unstructured circuits for certain target distributions.

Training on a HEP dataset

In this section, we perform realistic training of QCBMs on a more practical dataset which is derived from HEP colliders experiments. We compare the implicit cost functions MMD and LQF with the explicit KL divergence for an increasing number of circuit depth L and the number of qubits n, and across several measurement budgets. To summarize our results, we observe that the presence of shot noise causes the training with KLD to fail, while the MMD and LQF both hold up significantly better.

Dataset. We consider a dataset consisting of energy depositions in an ECAL99. The data was generated using a Monte Carlo approach (theGeant4 toolkit100), which accurately describes the ECAL detector behavior under a typically proton- proton collision at a LHC experiment. The dataset consists of the energy deposition on a 25 × 25 × 25 grid, that we downsized to a two-dimensional grid of various sizes. The images are converted to a black and white scale by considering the pixel ‘hit’ if the energy deposition exceeds a certain threshold, which is chosen as one tenth of the mean energy deposit. We map each pixel to a qubit and take the state \(\left\vert 1\right\rangle\) to represent a hit. This dataset naturally has a polynomial support and thus is precisely the type of dataset that we might hope to learn using QML.

Training. We use a parametrized quantum circuit of the form

where R(θl) is a layer of arbitrary single qubit unitaries that can be parameterized using 3n Euler angles, \(W({{\boldsymbol{\alpha }}}_{l}):= \mathop{\prod }\nolimits_{i = 1}^{n-1}{{\rm{CX}}}_{i,i+1}{{\rm{RY}}}_{i}({\alpha }_{l}^{i}){{\rm{CX}}}_{i,i+1}\) acts as parametrized entangling gate with CXi,j a CNOT gate between qubits i and j and \({\rm{RY}}_{i}({\alpha }_{l}^{i})\) a single qubit rotation of qubit i around the y-axis, and the parameters θ = {θl, αl}. We use the TVD, see Eq. (7), as a common metric to assess the performance of each loss function. To verify performance accurately, we compute the TVD using exact simulation. The gradients for each loss are computed using the parameter shift rule101 which provides estimates of the analytical gradient, and the parameters are updated with the ADAM102 optimizer with a decaying learning rate lr(t) = \(\max (0.01{e}^{-\beta t},1{0}^{-5})\), where t is the optimization step and β = 0.005 the decaying rate. The computation of the KLD is stabilized using a regulariser of ϵ = 10−6, which has been tuned through trial and error. To follow best-practices with the MMD, we average the gradient estimation over several different bandwidths

which incurs no additional quantum resources. This makes the loss full-bodied and thus keeps the model trainable while aiding convergence. We note that these are likely not the optimal bandwidths to average over but it demonstrates the best-practice approach.

Results. Figure 9 shows the TVD, computed with infinite statistics, on the training curve of the QCBMs with varying numbers of qubits n ∈ {4, 9, 12} (rows) and layers (symbols), where the gradients are computed with different numbers of shots (columns) for different loss function (colors). The lines denote the median over ten random parameter initialization while the shaded area denotes the 25% to 75% percentile. We observe that the performance of the KLD quickly deteriorates as the number of shots is reduced while the MMD and LQF remain more stable. We further observe that increasing the expressivity of the QCBM from \({\log }_{2}n\) to n layers does not lead to a significant increase in performance for a low number of shots.