Abstract

A pervasive dilemma in brain-wide association studies1 (BWAS) is whether to prioritize functional magnetic resonance imaging (fMRI) scan time or sample size. We derive a theoretical model showing that individual-level phenotypic prediction accuracy increases with sample size and total scan duration (sample size × scan time per participant). The model explains empirical prediction accuracies well across 76 phenotypes from nine resting-fMRI and task-fMRI datasets (R2 = 0.89), spanning diverse scanners, acquisitions, racial groups, disorders and ages. For scans of ≤20 min, accuracy increases linearly with the logarithm of the total scan duration, suggesting that sample size and scan time are initially interchangeable. However, sample size is ultimately more important. Nevertheless, when accounting for the overhead costs of each participant (such as recruitment), longer scans can be substantially cheaper than larger sample size for improving prediction performance. To achieve high prediction performance, 10 min scans are cost inefficient. In most scenarios, the optimal scan time is at least 20 min. On average, 30 min scans are the most cost-effective, yielding 22% savings over 10 min scans. Overshooting the optimal scan time is cheaper than undershooting it, so we recommend a scan time of at least 30 min. Compared with resting-state whole-brain BWAS, the most cost-effective scan time is shorter for task-fMRI and longer for subcortical-to-whole-brain BWAS. In contrast to standard power calculations, our results suggest that jointly optimizing sample size and scan time can boost prediction accuracy while cutting costs. Our empirical reference is available online for future study design (https://thomasyeolab.github.io/OptimalScanTimeCalculator/index.html).

Similar content being viewed by others

Main

A fundamental question in systems neuroscience is how individual differences in brain function are related to common variation in phenotypic traits, such as cognitive ability or physical health. Following recent work1, we define BWAS as studies of the associations between phenotypic traits and common interindividual variability of the human brain. An important subclass of BWAS seeks to predict individual-level phenotypes using machine learning. Individual-level prediction is important for addressing basic neuroscience questions and is critical for precision medicine2,3,4,5,6,7.

Many BWAS are underpowered, leading to low reproducibility and inflated prediction performance8,9,10,11,12,13. Larger sample sizes increase the reliability of brain–behaviour associations14,15 and individual-level prediction accuracy16,17. Indeed, reliable BWAS typically requires thousands of participants1, although certain multivariate approaches might reduce sample-size requirements15.

In parallel, other studies have emphasized the importance of a longer fMRI scan time per participant during both resting and task states, which leads to improved data quality and reliability12,18,19,20,21,22,23, as well as new insights into the brain24,25,26,27. When sample size is fixed, increasing resting-state fMRI scan time per participant improves the individual-level prediction accuracy of some cognitive measures28.

Thus, in a world with infinite resources, fMRI-based BWAS should maximize both sample size and scan time for each participant. However, in reality, BWAS investigators have to decide between scanning more participants (for a shorter duration) or fewer participants (for a longer duration). Furthermore, there is a fundamental asymmetry between sample size and scan time per participant owing to inherent overhead cost associated with each participant that can be quite substantial, for example, when recruiting from a rare population. Notably, the exact trade-off between sample size and scan time per participant has not been comprehensively characterized. This trade-off is not only relevant for small-scale studies, but also important for large-scale data collection, given competing interests among investigators and limited participant availability.

Here we systematically characterize the effects of sample size and scan time of fMRI on BWAS prediction accuracy, using the Adolescent Brain and Cognitive Development (ABCD) study and the Human Connectome Project (HCP). To derive a reference for future study design, we also considered the Transdiagnostic Connectome Project (TCP), Major Depressive Disorder (MDD), Alzheimer’s Disease Neuroimaging Initiative (ADNI) and the Singapore Geriatric Intervention Study to Reduce Cognitive Decline and Physical Frailty (SINGER) datasets (Extended Data Table 1; see the ‘Datasets, phenotypes and participants’ section of the Methods). We find that, to increase prediction power, longer scans and larger sample sizes can yield substantial cost savings compared with increasing only sample size.

Sample-size and scan-time interchangeability

For each participant in the HCP and ABCD datasets, we calculated a 419 × 419 resting-state functional connectivity (RSFC) matrix using the first T minutes of fMRI29,30 (see the ‘Image processing’ section of the Methods). T was varied from 2 min to the maximum scan time in each dataset in intervals of 2 min. The RSFC matrices (from the first T minutes) served as input features to predict a range of phenotypes in each dataset using kernel ridge regression (KRR) through a nested inner-loop cross-validation procedure (see the ‘Prediction workflow’ section of the Methods). The analyses were repeated with different numbers of training participants (that is, different training sample size N). Within each cross-validation loop, the test participants were fixed across different training set sizes, so that the prediction accuracy was comparable across different training set sizes (Extended Data Fig. 1). The whole procedure was repeated multiple times and averaged. The sample sizes and maximum scan times of all datasets are provided in Extended Data Table 1.

We first considered the cognitive factor score because the cognitive factor score was predicted the best across all phenotypes31. Figure 1a shows the prediction accuracy (Pearson’s correlation) of the ABCD cognitive factor score (HCP results are shown in Supplementary Fig. 1). Along a black iso-contour line, prediction accuracy is (almost) constant even though scan time and sample size are changing. Consistent with previous literature16,32, increasing the number of training participants (when scan time per participant is fixed) improved prediction performance. Similarly, increasing the scan time per participant (when sample size is fixed) also improved prediction performance28.

a, The prediction accuracy (Pearson’s correlation) of the cognitive factor score as a function of the scan time T used to generate the functional connectivity matrix, and the number of training participants N used to train the predictive model in the ABCD dataset. Increasing the number of training participants and scan time both improved the prediction performance. The asterisk indicates that all of the available participants were used and the sample size is therefore close to, but not exactly, the number shown. b, The cognitive factor prediction accuracy (Pearson’s correlation) in the ABCD and HCP datasets. There are 30 dots in this plot. Each dot represents the prediction accuracy in each dataset for a particular pair of sample size and scan time per participant. The Pearson’s correlation between the 30 pairs of dots was 0.98.

Although cognitive factor scores are not necessarily comparable across datasets (due to population and phenotypic differences), prediction accuracies were highly similar between the ABCD and HCP datasets (Pearson’s r = 0.98; Fig. 1b). Similar conclusions were also obtained when we measured the prediction accuracy using the coefficient of determination (COD) instead of Pearson’s correlation (Supplementary Fig. 2), computed RSFC using the first T minutes of uncensored data (Supplementary Fig. 3), did not perform censoring of high motion frames (Supplementary Fig. 4) or used linear ridge regression (LRR) instead of KRR (Supplementary Figs. 5 and 6).

Notably, the prediction accuracy of the cognitive factor score increased with the total scan duration (number training participants × scan time per participant) in both the ABCD (Spearman’s ρ = 0.99) and HCP (Spearman’s ρ = 0.96) datasets (Fig. 2a). In both datasets, there were diminishing returns of sample size and scan time, whereby each unit increase in sample size or scan duration resulted in progressively smaller gains in prediction accuracy (Fig. 2a and Supplementary Table 1).

a, The prediction accuracy (Pearson’s correlation) of the cognitive factor score as a function of the total scan duration (defined as the number of training participants × scan time per participant). There are 90 dots in the ABCD plot (left) and 174 dots in the HCP plot (right). Each colour shade represents a different total number of participants used to train the prediction algorithm. The asterisk indicates that all available participants were used and the sample size is therefore close to, but not exactly, the number shown. In both datasets, there were diminishing returns of both sample size and scan time, whereby each unit increase in sample size or scan duration resulted in progressively smaller gains in prediction accuracy. In the HCP dataset, the diminishing returns of scan time were more prominent beyond 30 min (Supplementary Table 1). b, The normalized (norm.) prediction accuracy of the two cognitive factor scores and 34 other phenotypes versus log2[total scan duration], ignoring data beyond 20 min of scan time. Cognitive, mental health, personality, physicality, emotional and well-being measures are shown in shades of red, grey, blue, yellow, green and pink, respectively. The black line shows that the logarithm of total scan duration explained prediction performance well across phenotypic domains and datasets. The Pearson’s correlation was computed between the log of total scan duration and normalized prediction performance based on 2,520 dots in the panel (16 total scan durations × 90 ABCD phenotypes + 18 total scan durations × 60 HCP phenotypes = 2,520). P values were computed using subsampling (to ensure independence) and 1,000 permutations (Supplementary Table 1). Attn prob, attention problems; cog, cognition; cryst, crystalized; disc, discounting; emo match, emotional face matching; ep mem, episodic memory; exec funct, executive function; flex, flexibility; int, intelligence; mem, memory; orient, orientation; proc spd, processing speed; PSQI, Pittsburgh Sleep Quality Index; rel proc, relational processing; satis, satisfaction; SusAttn (spec), sustained attention (specificity); vocab, vocabulary; vs, visuospatial.

In the HCP dataset, we also observed diminishing returns of scan time relative to sample size, especially beyond 30 min (Fig. 2a and Supplementary Table 1). For example, starting from an accuracy of 0.33 with 200 participants × 14 min scans, a 3.5× larger sample (N = 700) increased the accuracy to 0.45, whereas a 4.1× longer scan (T = 58 min) raised it only to 0.40.

Beyond the cognitive factor scores, we focused on 29 (out of 59) HCP phenotypes and 23 (out of 37) ABCD phenotypes with maximum prediction accuracies of r > 0.1 (Supplementary Table 2). In total, 90% of HCP phenotypes (that is, 26 out of 29) and 100% of ABCD phenotypes (that is, 23 out of 23) exhibited prediction accuracies that increased with the total scan duration (Spearman’s ρ = 0.85). Diminishing returns of scan time (relative to the sample size) were observed for many HCP phenotypes, especially beyond 20 min (Supplementary Table 1). This phenomenon was less pronounced for the ABCD phenotypes, potentially because the maximum scan time was only 20 min (Supplementary Table 1).

A logarithmic pattern between prediction accuracy and total scan duration was evident in 73% (19 out of 26) HCP and 74% (17 out of 23) of ABCD phenotypes (Supplementary Table 2 and Supplementary Figs. 7 and 8). To quantify the logarithmic relationship, for each of the 19 HCP and 17 ABCD phenotypes, we fitted a logarithm curve (with two free parameters) between prediction accuracy and total scan duration (ignoring data beyond 20 min per participant; see the ‘Fitting the logarithmic model’ section of the Methods). Overall, total scan duration explained prediction accuracy across HCP and ABCD phenotypes very well (COD or R2 = 0.88 and 0.89, respectively; Supplementary Table 3).

The logarithm fit allowed phenotypic measures from both datasets to be plotted on the same normalized prediction performance scale (Fig. 2b and Extended Data Fig. 2). The logarithm of the total scan duration explained prediction accuracy very well (r = 0.95; P = 0.001). This suggests that sample size and scan time are broadly interchangeable, in the sense that a larger sample size can compensate for a smaller scan time and vice versa. The exact degree of interchangeability is characterized in the next section.

The logarithm curve was also able to explain prediction accuracy well across different prediction algorithms (KRR and LRR) and different performance metrics (COD and r), as illustrated for the cognitive factor scores in Supplementary Fig. 9.

Diminishing returns of scanning longer

We have observed diminishing returns of scan time relative to sample size. To examine this phenomenon more closely, we considered the prediction accuracy of the HCP factor score as we progressively increased scan time per participant in 10 min increments while maintaining 6,000 min of total scan duration (Fig. 3a). The prediction accuracy decreased with increasing scan time per participant, despite maintaining 6,000 min of total scan duration (Fig. 3a). However, the accuracy reduction was modest for short scan times (Fig. 3a and Supplementary Table 1). Similar conclusions were obtained for all 19 HCP and 17 ABCD phenotypes that followed a logarithmic fit (Extended Data Fig. 3). These results indicate that, while longer scan times can offset smaller sample sizes, the required increase in scan time becomes progressively larger as scan duration extends.

a, The prediction accuracy of the HCP cognition factor score when total scan duration is fixed at 6,000 min, while varying the scan time per participant. N refers to the sample size and T refers to the scan time per participant. We repeated a tenfold cross-validation 50 times. Each violin plot shows the distribution of prediction accuracies across 50 random repetitions (that is, there were 50 datapoints in each violin with each dot corresponding to the average accuracy for a particular cross-validation split). The boxes inside violins represent the interquartile range (IQR; from the 25th to 75th percentile) and whiskers extend to the most extreme datapoints not considered outliers (within 1.5× IQR). Two-tailed paired-sample corrected-resampled t-tests58 were performed between the largest sample size (N = 600, T = 10 min) and the other sample sizes. Each corrected resampled t-test was performed on 500 pairs of prediction accuracy values. P values were as follows: 7.9 × 10−3 (N = 600 versus N = 120) and 9.8 × 10−4 (N = 600 versus N = 100). The asterisks indicate statistical significance after false discovery rate (FDR) correction; q < 0.05. P values of all tests and details of the statistical tests are provided in Supplementary Table 1. b, Prediction accuracy against total scan duration for the cognitive factor score in the HCP dataset. The curves were obtained by fitting a theoretical model to the prediction accuracies of the cognitive factor score. There are 174 datapoints in the panel. The theoretical model explains why the sample size is more important than scan time (see the main text).

To gain insights into this phenomenon, we derived a closed-form mathematical relationship relating prediction accuracy (Pearson’s correlation) with scan time per participant T and sample size N (see the ‘Fitting the theoretical model’ section of the Methods). To provide an intuition for the theoretical derivations, we note that phenotypic prediction can be theoretically decomposed into two components: one component relating to an average prediction (common to all participants) and a second component relating to a participant’s deviation from this average prediction.

The uncertainty (variance) of the first component scales as 1/N, like a conventional standard error of the mean. For the second component, we note that the prediction can be written as regression coefficients × functional connectivity (FC) for linear regression. The uncertainty (variance) of the regression coefficient estimates scales with 1/N. The uncertainty (variance) of the FC estimates scales with 1/T (that is, reliability improves with T). Thus, the uncertainty of the second component scales with 1/NT. Overall, our theoretical derivation suggests that prediction accuracy can be expressed as a function of 1/N and 1/NT with three free parameters.

The theoretical derivations do not tell us the relative importance of the 1/N and 1/NT terms. We therefore fitted the theoretical model to actual prediction accuracies in the HCP and ABCD datasets. The goal was to determine (1) whether our theoretical model (despite the simplifying assumptions) would still explain the empirical results, and (2) to determine the relative importance of 1/N and 1/NT (see the ‘Fitting the theoretical model’ section of the Methods).

We found an excellent fit with actual prediction accuracies for the 19 HCP and 17 ABCD phenotypes that followed a logarithmic fit (Fig. 3b and Supplementary Figs. 10 and 11): R2 = 0.89 for both datasets (Supplementary Table 2). When T was small, the 1/NT term dominated the 1/N term, which explained the almost one-to-one interchangeability between the scan time and the sample size for shorter scan times. The existence of the 1/N term ensured that sample size was still slightly more important than scan time even for small T. The FC reliability eventually saturated with increasing T. Thus, the 1/N term eventually dominated the 1/NT term, so the sample size became much more important than the scan time.

For 20-min scans, the logarithmic and theoretical models performed equally well with equivalent goodness of fit (R2) across the 17 ABCD phenotypes (P = 0.57; Supplementary Table 1). For longer scan times, the theoretical model exhibited better fit than the logarithmic model across the 19 HCP phenotypes (P = 0.002; Supplementary Table 1 and Supplementary Fig. 12). Furthermore, prediction accuracy under the logarithmic model will exceed a correlation of one for sufficiently large N and T, which should not be possible. We therefore use the theoretical model in the remaining portions of the study.

Predictability increases model adherence

To explore the limits of the theoretical model, recall that the 17 ABCD phenotypes and 19 HCP phenotypes were predicted with maximum prediction accuracies of Pearson’s r > 0.1, and that the theoretical model was able to explain their prediction accuracies with an average COD or R2 of 89% (Supplementary Table 2). If we loosened the prediction threshold to include phenotypes of which the prediction accuracies (Pearson’s r) were positive in at least 90% of all combinations of sample size N and scan time T (Supplementary Table 2), the model fit was lower but still relatively high with an average COD or R2 of 76% and 73% in ABCD and HCP datasets, respectively (Supplementary Table 2).

More generally, phenotypes with high overall prediction accuracies adhered to the theoretical model well (an example is shown in Extended Data Fig. 4a), while phenotypes with poor prediction accuracies resulted in poor adherence to the model (an example is shown in Extended Data Fig. 4b). Indeed, the model fit was strongly correlated with prediction accuracy across phenotypes in both datasets (Spearman’s ρ = 0.90; P = 0.001; Extended Data Fig. 4c,d). These findings suggest that the imperfect fit of the theoretical model for some phenotypes may be due to their poor predictability, rather than true variation in prediction accuracy with respect to sample size and scan time.

Non-stationarity weakens model adherence

As noted above, some phenotypes probably fail to match the theoretical model owing to intrinsically poor predictability. However, there were also phenotypes that were reasonably well predicted, yet still exhibited a poor fit to the theoretical model. For example, ‘Anger: Aggression’ was reasonably well predicted in the HCP dataset. While the prediction accuracy increased with larger sample sizes (Spearman’s ρ = 1.00), extending the scan duration did not generate a similarly consistent effect for this phenotype (Spearman’s ρ = 0.21; Extended Data Fig. 5a).

This suggests that fMRI–phenotype relationships might be non-stationary for certain phenotypes, which violates an assumption in the theoretical model. To put this in more colloquial terms, the assumption is that the FC–phenotype relationship is the same (that is, stationary) regardless of whether FC was computed based on 5 min of fMRI from the beginning, middle or end of the MRI session. We note that, for both HCP and ABCD datasets, fMRI was collected over four runs. To test for non-stationarity, we randomized the fMRI run order independently for each participant and repeated the FC computation (and prediction) using the first T min of resting-state fMRI data under the randomized run order (see the ‘Non-stationarity analysis’ section of the Methods). The run randomization improved the goodness of fit of the theoretical model (P < 4 × 10−5), suggesting the presence of non-stationarities (Extended Data Fig. 5b,c).

Arousal changes between or during resting-state scans are well established33,34,35,36,37; we therefore expect fMRI scans, especially longer-duration scans, to be non-stationary. However, as run randomization affected some phenotypes more than others, this suggests that there is an interaction between fMRI non-stationarity and phenotypes, that is, the fMRI–phenotype relationship is also non-stationary.

Higher overhead costs favour longer scans

We have shown that investigators have some flexibility in attaining a specified prediction accuracy through different combinations of sample size and scan time per participant (Fig. 1). Furthermore, the theoretical model suggests that the sample size is more important than the scan time (Fig. 3). However, when designing a study, it is important to consider the fundamental asymmetry between sample size and scan time per participant owing to the inherent overhead cost associated with each participant. These overhead costs might include recruitment effort, manpower to perform neuropsychological tests, additional MRI modalities (for example, anatomical T1, diffusion MRI), other biomarkers (for example, positron emission tomography (PET) or blood tests). Thus, the overhead cost can often be higher than the cost of the fMRI scan itself.

To derive a reference for future studies, we considered four additional resting-state datasets (TCP, MDD, ADNI and SINGER; see the ‘Datasets, phenotypes and participants’ section of the Methods). In total, 34 phenotypes exhibited good fit to the theoretical model (Supplementary Table 3 and Supplementary Figs. 13–16). We also considered task-FC of the three ABCD tasks, and found that the number of phenotypes with a good fit to the theoretical model ranged from 16 to 19 (Supplementary Table 3 and Supplementary Figs. 17–19).

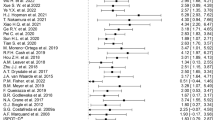

In total, we considered nine datasets: six resting-fMRI datasets and three ABCD task-fMRI datasets. We fitted the theoretical model to 76 phenotypes in the nine datasets, yielding an average COD or R2 of 89% (Supplementary Table 1). These datasets span multiple fMRI sequences (single-echo single-band, single-echo multiband, multi-echo multiband), coordinate systems (fsLR, fsaverage, MNI152), racial groups (Western and Asian populations), mental health conditions (healthy, neurological and psychiatric) and age groups (children, young adults and older individuals). More dataset characteristics are shown in Supplementary Table 5.

For each phenotype, the fitted model was normalized to the phenotype’s maximum achievable accuracy (estimated by the theoretical model), yielding a fraction of maximum achievable prediction accuracy for every combination of sample size and scan time per participant. The fraction of maximum achievable prediction accuracy was then averaged across the phenotypes under a hypothetical tenfold cross-validation scenario (Fig. 4a). Note that the Pearson’s correlation between Figs. 4a and 1a across corresponding sample sizes and scan durations was 0.97 (Supplementary Table 1).

a, The fraction of maximum prediction accuracy as a function of sample size and scan time per participant averaged across 76 phenotypes from nine datasets (six resting-fMRI and three ABCD task-fMRI datasets). We assumed that 90% of participants were used for training the predictive model, and 10% for model evaluation (that is, tenfold cross-validation). b, The optimal scan time (minimizing costs) across 108 scenarios. Given three possible accuracy targets (80%, 90% or 95% of the maximum achievable accuracy), 2 possible overhead costs (US$500 or US$1,000 per participant) and 2 possible scan costs per hour (US$500 or US$1,000), there were 3 × 2 × 2 = 12 conditions. In total, we had 9 datasets × 12 conditions = 108 scenarios. For 85% of scenarios, the cost-optimal scan time was ≥20 min (red dashed line). c, The normalized cost inefficiency (across the 108 scenarios) as a function of fixed scan time per participant, relative to the optimal scan time in b. In practice, the optimal scan time in b is not known in advance, so this plot seeks to derive a fixed optimal scan time generalizable to most situations. Each box plot contains 108 datapoints (corresponding to 108 scenarios). The box limits show the IQR, the horizontal lines show the median values and the whiskers span non-outlier extremes (within 1.5 ×IQR). For visualization, box plots were normalized by subtracting the cost inefficiency of the best possible fixed scan time (30 min in this case), so that the normalized cost inefficiency of the best possible fixed scan time is centred at zero. d, The cost savings relative to 10 min of scan time per participant. The greatest cost saving (22%) was achieved at 30 min.

Given a scan cost per hour (for example, US$500) and overhead cost per participant (for example, US$500), we can find all pairs of sample sizes and scan times that fit within a particular fMRI budget (for example, US$1 million). We can then use Fig. 4a to find the optimal sample size and scan time leading to the largest fraction of maximum prediction accuracy (see the ‘Optimizing within a fixed fMRI budget’ section of the Methods). Extended Data Fig. 6 illustrates the prediction accuracy that is achievable with different fMRI budgets, costs per hour of scan time and overhead costs per participant. Extended Data Table 2 shows the optimal scan times for a wider range of fMRI budgets, scan costs per hour and overhead costs per participant.

Larger fMRI budgets, lower scan costs and lower overhead costs enable larger sample sizes and scan times, leading to a greater achievable prediction accuracy (Extended Data Fig. 6). From the curves, we can determine the optimal scan time to achieve the greatest prediction accuracy within a fixed scan budget (Extended Data Fig. 6 (solid circles)). The optimal scan time increases with larger overhead costs, lower fMRI budget and lower scan costs. As the scan time per participant increases, all curves exhibit a steep initial ascent, followed by a gradual decline. The asymmetry of the curves suggests that it is better to overshoot than undershoot optimal scan time (Supplementary Table 1).

For example, consider a US$2.5 million US National Institutes of Health (NIH) R01 grant. Assuming an fMRI budget of US$1 million, a scan cost of US$500 per hour and an overhead cost of US$500 per participant, the optimal scan time would be 34.5 min per participant. Suppose PET data were also collected, then the overhead cost might increase to US$5,000 per participant, resulting in an optimal scan time of 159.3 min per participant.

30-min scans are the most cost-effective

Beyond optimizing scan time to maximize prediction accuracy within a fixed scan budget (previous section), the model fits shown in Fig. 4a can also be used to optimize scan time to minimize the study cost to achieve a fixed accuracy target. For example, suppose we want to achieve 90% of the maximum achievable accuracy, we can find all pairs of sample size and scan time per participant along the black contour line corresponding to 0.9 in Fig. 4a. For every pair of sample size and scan time, we can then compute the study cost given a particular scan cost per hour (for example, US$500) and a particular overhead cost per participant (for example, US$1,000). The optimal scan time (and sample size) with the lowest study cost can then be obtained (see the ‘Optimizing to achieve a fixed accuracy’ section of the Methods).

Here we considered three possible accuracy targets (80%, 90% or 95% of maximum accuracy), two possible overhead costs (US$500 or US$1,000 per participant) and two possible scan costs per hour (US$500 or US$1,000). In total there were 3 × 2 × 2 = 12 conditions. As there were nine datasets, this resulted in 12 × 9 = 108 scenarios. In the vast majority (85%) of these 108 scenarios, the optimal scan time was at least 20 min (Fig. 4b).

However, during study design, the optimal scan time is not known in advance. We therefore also aimed to identify a fixed scan time that is cost-effective in most situations. Figure 4c shows the normalized cost inefficiency of various fixed scan times relative to the optimal scan time for each of 108 scenarios. Many consortium BWAS collect 10 min fMRI scans, which is highly cost inefficient. On average across resting and task states, 30 min scans were the most cost-effective (95% bootstrapped confidence interval (CI) = 25–40; Extended Data Fig. 7 and Supplementary Table 1), yielding 22% cost savings over 10 min scans (Fig. 4d). We again note the asymmetry in the cost curves, so it is cheaper to overshoot than undershoot the most cost-effective scan time. For example, 50-min scans overshoot the optimum by 20 min, but still incur 18% cost savings over 10 min scans (which undershoot the optimum by 20 min).

Minimizing task-fMRI costs

Across the six resting-state datasets (Fig. 5a), the most cost-effective scan time was the longest for ABCD (60 min; CI = 40–100) and shortest for the TCP and ADNI datasets (20 min; TCP, CI = 10–35; ADNI, CI = 15–35). However, a scan time of 30 min was still relatively cost-effective for all datasets, owing to a flat cost curve near the optimum and the asymmetry of the cost curve. For example, even for the TCP dataset, which had the shortest most cost-effective scan time of 20 min, over-scanning with 30-min scans led to only a 3.7% higher cost relative to 20 min, compared with a 7.3% higher cost for under-scanning with 10 min scans.

a, Cost inefficiency as a function of the scan time per participant for the six resting-state datasets. This plot provides the same information as Fig. 4c, but shown for each dataset separately. b, Cost inefficiency for ABCD resting-state and task-state fMRI. c, Cost inefficiency when scans collected in two separate sessions versus one session (based on the HCP dataset). Similar to Fig. 4c, for visualization, each curve is normalized by subtracting the cost inefficiency of the best possible fixed scan time (of each curve), so that the normalized cost inefficiency of the best possible fixed scan time is centred at zero. For a–c, the numbers in brackets indicate the number of phenotypes. The arrows indicate the most cost-effective scan time. 95% bootstrapped CIs are reported in Supplementary Table 1.

Previous studies have shown that task-FC yields better prediction performance for cognitive measures38,39. Here we extend previous results, finding that the most cost-effective scan time was shorter for ABCD task-fMRI than ABCD resting-state fMRI (Fig. 5b and Supplementary Table 1). Among the three tasks, the most cost-effective scan time was the shortest for N-back at 25 min (CI = 20–35), but 30 min scans led to only a 0.9% higher cost (relative to 25 min), compared with a 16.1% higher cost for 10 min scans.

These task results suggest that the most cost-effective scan time is sensitive to brain state manipulation. Task-based fMRI may preferentially engage cognitive and physiological mechanisms that are closely tied to the expression of specific phenotypes (for example, processing speed), thereby enhancing the specificity of functional connectivity estimates for phenotypic prediction. Tasks may also facilitate shorter, more-efficient scan durations by aligning brain states across individuals in a controlled manner, thereby reducing spurious non-stationary influences that could otherwise obscure reliable modelling of interindividual differences. This alignment might be better achieved in tasks that present stimuli and conditions with identical timing across participants—whether using event-related or block designs.

Non-stationarity may also be potentially increased by distributing resting-state fMRI runs across multiple sessions. As the HCP dataset was collected on two different days (sessions), we were also able to directly compare the effect of a two-session versus a one-session design. The most cost-effective scan time for the two-session design was only slightly longer than for the original HCP analysis (Fig. 5c): 40 min (CI = 30–55) versus 30 min (CI = 25–40).

Overall, these results suggest that state manipulation can influence the most cost-effective scan time, and that a relatively large state manipulation (for example, task fMRI) can significantly influence the cost-effectiveness.

Variation across phenotypes and scan parameters

There were clear variations across phenotypes. For example, there were phenotypes that could be predicted well and demonstrated prediction gains up to the maximum amount of data per participant (for example, age in the ADNI dataset; Supplementary Fig. 16). However, there were also other phenotypes that were predicted less well (for example, BMI in the SINGER dataset; Supplementary Fig. 13) but showed prediction gains up to the maximum amount of data per participant. As single phenotypes are not easily interpreted, we grouped the phenotypes into seven phenotypic domains to study phenotypic variation in more detail.

For five out of the seven phenotypic domains, the most cost-effective scan times ranged from 25 min to 40 min (Extended Data Fig. 8a). The most cost-effective scan time for the emotion domain was exceptionally long, but this outlier was driven by a single phenotypic measure, so should not be overinterpreted. For the PET phenotypic domain, our original scenarios assumed overhead costs of US$500 or US$1,000 per participant, which was unrealistic. Assuming a more realistic overhead PET cost per participant (US$5,000 or $10,000) yielded 50 min as the most cost-effective scan time.

Although there was a strong relationship between phenotypic prediction accuracy and goodness-of-fit to the theoretical model (Extended Data Fig. 4), we did not find an obvious relationship between phenotypic prediction accuracy and optimal scan time (Extended Data Fig. 8b and Supplementary Table 1). Recent studies have also demonstrated that phenotypic reliability is important for BWAS power40,41. In our theoretical model, phenotypic reliability directly impacts the overall prediction accuracy but does not directly contribute to the trade-off between sample size and scan time. Indeed, there was not an obvious relationship between phenotypic test–retest reliability and optimal scan time (Extended Data Fig. 8c and Supplementary Table 1).

There was also not an obvious relationship between optimal scan time and temporal resolution, voxel resolution or scan sequence (Extended Data Figs. 8d–f and Supplementary Table 1). We emphasize that we are not claiming that scan parameters do not matter, but that other variations between datasets (for example, phenotypes, populations) might exert a greater impact than common variation in scan parameters.

Consistent with the previous sections, we note that, for the vast majority of phenotypes and scan parameters, the optimal scan time was at least 20 min and, on average, the most cost-effective scan time was 30 min (Fig. 4c).

Minimizing costs of subcortical BWAS

Our main analyses involved a cortical parcellation with 400 regions and 19 subcortical regions, yielding 419 × 419 RSFC matrices. We also repeated the analyses using 19 × 419 subcortical-to-whole-brain RSFC matrices. The most cost-effective scan time for subcortical RSFC was about double that of whole-brain RSFC (Fig. 6a, Supplementary Table 1 and Extended Data Fig. 7). This might arise due to the lower signal-to-noise ratio (SNR) in subcortical regions, resulting in the need for a longer scan time to achieve a better estimate of subcortical FC.

a, Cost inefficiency as a function of scan time per participant with subcortical-to-whole-brain FC versus whole-brain FC. For visualization, similar to Fig. 4c, the curves are normalized by subtracting the cost inefficiency of the best possible fixed scan time (of each curve), so that the normalized cost inefficiency of the best possible fixed scan time is centred at zero. The numbers in brackets indicate the number of phenotypes in each condition. The arrows indicate the most cost-effective scan time. 95% bootstrapped CIs are reported in Supplementary Table 1. b, The optimal scan time for predicting the cognitive factor score as a function of simulated Gaussian noise with s.d. of σ. c, The optimal scan time for predicting the cognitive factor score as a function of the cortical parcellation resolution. For b and c, consistent with Fig. 4c, there were 12 conditions, resulting in 12 curves, but some curves overlap, so are not obvious. SC, subcortical.

To explore the effects of fMRI SNR, for each parcel time course, we z-normalized the fMRI time course, so the resulting s.d. of the time course was equal to one. We then added zero mean Gaussian noise with s.d. of σ. Even doubling the noise (σ = 1) had very little impact on the optimal scan time (Fig. 6b and Supplementary Table 1). As a sanity check, we added a large quantity of noise (σ = 3), which led to a much longer optimal scan time (Fig. 6b and Supplementary Table 1).

Intuitively, this is not surprising because a lower SNR means that a longer scan time is necessary to get an accurate estimate of individual-level FC. It is interesting that a large SNR change is necessary to make a noticeable difference in optimal scan time, which might explain the robustness of optimal scan times across the common scan parameters that we explored in the previous section (Extended Data Fig. 8). Thus, even with small to moderate technological improvements in SNR, the most cost-effective scan time is unlikely to substantially deviate from our estimate. However, a major increase in SNR could shorten the most cost-effective scan time from the current estimates.

We also varied the resolution of the cortical parcellation with 200, 400, 600, 800 or 1,000 parcels for predicting the cognitive factor scores in the HCP and ABCD datasets. There was a weak trend in which higher parcellation resolution led to slightly lower optimal scan time, although there was a big drop in the optimal scan time from 200 parcels to 400 parcels in the ABCD dataset (Fig. 6c and Supplementary Table 1). Given that subcortical-to-whole-brain FC has fewer edges (features) than whole-brain FC, this could be another reason why subcortical-to-whole-brain FC requires longer optimal scan time.

Accuracy versus reliability

Finally, we examine the effects of sample size and scan time per participant on the reliability of BWAS1 using a previously established split-half procedure14,15 (Supplementary Fig. 20; see the ‘Brain-wide association reliability’ section of the Methods). For both univariate and multivariate BWAS reliability, diminishing returns of scan time (relative to sample size) occurred beyond 10 min per participant (Supplementary Figs. 21–29), instead of 20 min for prediction accuracy (Fig. 2). We note that reliability is necessary but not sufficient for validity21,42. For example, hardware artifacts may appear reliably in measurements without having any biological relevance. Thus, reliable BWAS features do not guarantee accurate prediction of phenotypic measures. As such, we recommend that researchers prioritize prediction accuracy.

Longer scans are more cost-effective

To summarize, 30 min scans are on average the most cost-effective across resting-state and task-state whole-brain BWAS (Fig. 4c). The cost curves are also asymmetric, so it is cheaper to overshoot than undershoot the optimum (Fig. 4d). Thus, even when the most-effective scan time is shorter than 30 min (for example, N-back task or TCP dataset), 30-min scans incur only a small penalty relative to knowing the true optimal scan time a priori. Furthermore, for subcortical BWAS, the most cost-effective scans are much longer than 30 min.

Our results present a compelling case for moving beyond traditional power analyses, of which the only inputs are sample size, to inform BWAS design. Such power analyses can only point towards maximizing the sample size, so the scan time becomes implicitly minimized under budget constraints. Our findings show that we can achieve higher prediction performance by increasing both the sample size and the scan time, while generating substantial cost-savings compared with increasing the sample size alone.

Our results complement recent advocation for larger sample sizes to increase BWAS reproducibility1. Consistent with previous studies43, when sample size is small, there is a high degree of variability across cross-validation folds (Fig. 3a). Furthermore, large sample sizes are still necessary for high prediction accuracy. To achieve 80% of the maximum prediction accuracy with 30-min scans, a sample size of about 900 is necessary (Fig. 4a), which is much larger than typical BWAS1. To achieve 90% of the maximum prediction accuracy with 30-min scans, a sample size of around 2,500 is necessary (Fig. 4a).

In addition to increasing the sample size and scan time, BWAS effect sizes can also be enhanced through innovative study designs. Recent work showed that U-shaped population sampling can enhance the strength of associations between functional connectivity and phenotypic measures44. However, more complex screening procedures will increase the overhead costs per participant, which might lengthen optimal scan time.

The current analysis was focused on high target accuracies (80%, 90% or 95%) and relatively low overhead costs (US$500 or US$1,000). Lower target accuracies (in smaller-scale studies) and higher overhead costs (for example, PET, multisite data collection) will lead to longer cost-effective scan time (Extended Data Fig. 6). In practice, scans are also more likely to be spuriously shortened (for example, due to participant discomfort) than to be spuriously extended. We therefore recommend a scan time of at least 30 min.

Overall, 10 min scans are rarely cost-effective, and the optimum scan time is at least 20 min in most BWAS (Fig. 4c). Among the datasets that we analysed, four included scans of at least 20 min, providing robust evidence to support this conclusion across multiple datasets. By contrast, we could identify only one dataset (HCP) with scans exceeding 30 min and a sufficiently large sample size for inclusion in our study. Similarly, although the ABCD task-fMRI scans are among the longest in existing large-scale datasets, the longest scan duration is less than 13 min. This limitation underscores the importance of our findings, emphasizing the need for BWAS to prioritize longer scans.

Non-economic considerations

Beyond economic considerations, the representativeness of the data sample and the generalizability of predictive models to subpopulations are also important factors when designing a study45,46,47,48,49,50. One approach would be to aim for a larger sample size (potentially at the expense of scan time) to ensure sufficient sample sizes for subpopulations. Alternatively, one could also make the participant-selection criteria more stringent to maintain the representativeness of a subpopulation. However, this would drive up the recruitment cost for the subpopulation, so our results suggest that it might be more economically efficient to scan harder-to-recruit subpopulations longer. For example, instead of 20 min resting-state scans for all ABCD participants, perhaps subpopulations (for example, Black participants) could be scanned for a longer period of time.

In other situations, the sample size is out of the investigator’s control, for example, if the investigator wants to scan an existing cohort. In the case of the SINGER dataset, the sample size was determined by the power calculation of the actual lifestyle intervention51 with the imaging data included to gain further insights into the intervention. As another example, in large-scale prospective studies (for example, the UK Biobank), the sample size is determined by the fact that only a small proportion of participants will develop a given condition in the future52. In these situations, the scan time becomes constrained by the overall budget and fitting all phenotyping efforts within a small number of sessions (to avoid participant fatigue). Nevertheless, even in these situations in which the sample size is predetermined, Fig. 4a can still provide an empirical reference on the marginal gains in prediction accuracy as a function of scan time.

Finally, some studies may necessitate extensive scan time per participant by virtue of the scientific question. For example, when studying sleep stages, it is not easy to predict how long a participant would need to enter a particular sleep stage. Conversely, some phenomena of interest might be inherently short-lived. For example, if the goal is to characterize the effects of a fast-acting drug (for example, nitrous oxide), then it might not make sense to collect long fMRI scans. Furthermore, not all studies are interested in cross-sectional relationships between brain and non-brain-imaging phenotypes. For example, in the case of personalized brain stimulation53,54 or neurosurgical planning55, a substantial quantity of resting-state fMRI data might be necessary for accurate individual-level network estimation24,56,57.

A web application for study design

Beyond our broad recommendation of scan times of at least 30 min, we recognize that investigators might be interested in achieving the optimal sample size and scan time specific to their study’s constraints. We therefore built a web application to help to facilitate flexible study design (https://thomasyeolab.github.io/OptimalScanTimeCalculator/index.html). The web application includes additional constraints that were not analysed in the current study. For example, certain demographic and patient populations might not be able to tolerate longer scans, so an additional factor will be the maximum scan time in each MRI session. Furthermore, our analysis was performed on participants whose data survived quality control. We have therefore also provided an option on the web application to allow researchers to specify their estimate of the percentage of participants whose data might be lost due to poor data quality or participant drop out. Overall, our empirically established guidelines provide actionable insights for significantly reducing costs, while improving BWAS individual-level prediction performance.

Methods

Datasets, phenotypes and participants

Following previous studies, we considered 58 HCP phenotypes59,60 and 36 ABCD phenotypes15,39. We also consider a cognition factor score derived from all phenotypes from each dataset31, yielding a total of 59 HCP and 37 ABCD phenotypes (Supplementary Table 4).

In this study, we used resting-state fMRI from the HCP WU-Minn S1200 release. We filtered participants from a previously reported set of 953 participants60, excluding participants who did not have at least 40 min of uncensored data (censoring criteria are discussed in the ‘Image processing’ section) or did not have the full set of the 59 non-brain-imaging phenotypes (hereafter, phenotypes) that we investigated. This resulted in a final set of 792 participants of whom the demographics are described in Supplementary Table 5. The HCP data collection was approved by a consortium of institutional review boards (IRBs) in the USA and Europe, led by Washington University in St Louis and the University of Minnesota (WU-Minn HCP Consortium).

We also considered resting-state fMRI from the ABCD 2.0.1 release. We filtered participants from a previously reported set of 5,260 participants15. We excluded participants who did not have at least 15 min of uncensored resting-fMRI data (censoring criteria are discussed in the ‘Image processing’ section) or did not have the full set of the 37 phenotypes that we investigated. This resulted in a final set of 2,565 participants of whom the demographics are described in Supplementary Table 5. Most ABCD research sites relied on a central IRB at the University of California, San Diego, for the ethical review and approval of the research protocol, while the others obtained local IRB approval.

We also used resting-state fMRI from the SINGER baseline cohort. We filtered participants from an initial set of 759 participants, excluding participants who did not have at least 10 min of resting-fMRI data or did not have the full set of the 19 phenotypes that we investigated (Supplementary Table 4). This resulted in a final set of 642 participants of whom the demographics described in Supplementary Table 5. The SINGER study has been approved by the National Healthcare Group Domain-Specific Review Board and is registered under ClinicalTrials.gov (NCT05007353) with written informed consent obtained from all participants before enrolment into the study.

We used resting-state fMRI from the TCP dataset. We filtered participants from an initial set of 241 participants, excluding participants who did not have at least 26 min of resting-fMRI data or did not have the full set of the 19 phenotypes that we investigated (Supplementary Table 4). This resulted in a final set of 194 participants of whom the demographics are described in Supplementary Table 5. The participants from the TCP study provided written informed consent following guidelines established by the Yale University and McLean Hospital (Partners Healthcare) IRBs.

We used resting-state fMRI from the MDD dataset. We filtered participants from an initial set of 306 participants. We excluded participants who did not have at least 23 min of resting-fMRI data or did not have the full set of the 20 phenotypes that we investigated (Supplementary Table 4). This resulted in a final set of 287 participants of whom the demographics are described in Supplementary Table 5. The MDD dataset was collected from multiple rTMS clinical trials, and all data were obtained at the pretreatment stage. These trials include ChiCTR2300067671 (approved by the Institutional Review Boards of Beijing Anding Hospital, Henan Provincial People’s Hospital, and Tianjin Medical University General Hospital); NCT05842278, NCT05842291 and NCT06166082 (all approved by the IRB of Beijing HuiLongGuan Hospital); and NCT06095778 (approved by the IRB of the Affiliated Brain Hospital of Guangzhou Medical University).

We used resting-state fMRI from the ADNI datasets (ADNI 2, ADNI 3 and ADNI GO). We filtered participants from an initial set of 768 participants with both fMRI and PET scans acquired within 1 year of each other. We excluded participants who did not have at least 9 min of resting-fMRI data or did not have the full set of the six phenotypes that we investigated (Supplementary Table 4). This resulted in a final set of 586 participants of whom the demographics are described in Supplementary Table 5. The ADNI study was approved by the IRBs of all participating institutions with informed written consent from all participants at each site.

Moreover, we considered task-fMRI from the ABCD 2.0.1 release. We filtered participants from a previously described set of 5,260 participants15. We excluded participants who did not have all three task-fMRI data remaining after quality control, or did not have the full set of the 37 phenotypes that we investigated. This resulted in a final set of 2,262 participants, of whom the demographics are described in Supplementary Table 5.

Image processing

For the HCP dataset, the MSMAll ICA-FIX resting state scans were used61. Global signal regression (GSR) has been shown to improve behavioural prediction60, so we further applied GSR and censoring, consistent with our previous studies16,60,62. The censoring process entailed flagging frames with either FD (framewise displacement) > 0.2 mm or DVARS (differential variance) > 75. The frames immediately before and after flagged frames were marked as censored. Moreover, uncensored segments of data consisting of less than five frames were also censored during downstream processing.

For the ABCD dataset, the minimally processed resting state scans were used63. Processing of functional data was performed consistent with our previous study39. Specifically, we additionally processed the minimally processed data with the following steps. (1) The functional images were aligned to the T1 images using boundary-based registration64. (2) Respiratory pseudomotion filtering was performed by applying a bandstop filter of 0.31–0.43 Hz (ref. 65). (3) Frames with FD > 0.3 mm or DVARS > 50 were flagged. The flagged frame, as well as the frame immediately before and two frames immediately after the marked frame were censored. Furthermore, uncensored segments of data consisting of less than five frames were also censored. (4) Global, white matter and ventricular signals, six motion parameters and their temporal derivatives were regressed from the functional data. Regression coefficients were estimated from uncensored data. (5) Censored frames were interpolated with the Lomb–Scargle periodogram66. (6) The data underwent bandpass filtering (0.009–0.08 Hz). (7) Lastly, the data were projected onto FreeSurfer fsaverage6 surface space and smoothed using a 6 mm full-width half-maximum kernel. Task-fMRI data were processed in the same way as the resting-state fMRI data.

For the SINGER dataset, we processed the functional data with the following steps. (1) Removal of the first four frames. (2) Slice time correction. (3) Motion correction and outlier detection: frames with FD > 0.3 mm or DVARS > 60 were flagged as censored frames. 1 frame before and 2 frames after these volumes were flagged as censored frames. Uncensored segments of data lasting fewer than five contiguous frames were also labelled as censored frames. Runs with over half of the frames censored were removed. (4) Correcting for susceptibility-induced spatial distortion. (5) Multi-echo denoising67. (6) Alignment with structural image using boundary-based registration64. (7) Global, white matter and ventricular signals, six motion parameters and their temporal derivatives were regressed from the functional data. Regression coefficients were estimated from uncensored data. (8) Censored frames were interpolated with the Lomb–Scargle periodogram66. (9) The data underwent bandpass filtering (0.009–0.08 Hz). (10) Lastly, the data were then projected onto FreeSurfer fsaverage6 surface space and smoothed using a 6 mm full-width half-maximum kernel.

For the TCP dataset, the details of data processing can be found elsewhere68. In brief, the functional data were processed by following the HCP minimal processing pipeline with ICA-FIX, followed by GSR. The processed data were then projected onto MNI space.

For the MDD dataset, we processed the functional data with the following steps. (1) Slice time correction. (2) Motion correction. (3) Normalization for global mean signal intensity. (4) Alignment with structural image using boundary-based registration64. (5) Linear detrending and bandpass filtering (0.01–0.08 Hz). (6) Global, white matter and ventricular signals, six motion parameters and their temporal derivatives were regressed from the functional data. (7) Lastly, the data were then projected onto FreeSurfer fsaverage6 surface space and smoothed using a 6 mm full-width half-maximum kernel.

For the ADNI dataset, we processed the functional data with the following steps. (1) Slice time correction. (2) Motion correction. (3) Alignment with structural image using boundary-based registration64. (4) Global, white matter and ventricular signals, six motion parameters, and their temporal derivatives were regressed from the functional data. (5) Lastly, the data were then projected onto FreeSurfer fsaverage6 surface space and smoothed using a 6 mm full-width half-maximum kernel.

We derived a 419 × 419 RSFC matrix for each participant of each dataset using the first T minutes of scan time. The 419 regions consisted of 400 parcels from the Schaefer parcellation30, and 19 subcortical regions of interest69. For the HCP, ABCD and TCP datasets, T was varied from 2 to the maximum scan time in intervals of 2 min. This resulted in 29 RSFC matrices per participant in the HCP dataset (generated from using the minimum amount of 2 min to the maximum amount of 58 min in intervals of 2 min), 10 RSFC matrices per participant in the ABCD dataset (generated from using the minimum amount of 2 min to the maximum amount of 20 min in intervals of 2 min) and 13 RSFC matrices per participant in the TCP dataset (generated from using the minimum amount of 2 min to the maximum amount of 26 min in intervals of 2 min).

In the case of the MDD dataset, the total scan time was an odd number (23 min), so T was varied from 3 to the maximum of 23 min in intervals of 2 min, which resulted in 11 RSFC matrices per participant. For SINGER, ADNI and ABCD task-fMRI data, as the scans were relatively short (around 10 min), T was varied from 2 min the maximum scan time in intervals of 1 min. This resulted in 9 RSFC matrices per participant in the SINGER datasets (generated from using the minimum amount of 2 min to the maximum amount of 10 min), 8 RSFC matrices per participant in the ADNI datasets (generated from using the minimum amount of 2 min to the maximum amount of 9 min), 9 RSFC matrices per participant in the ABCD N-back task (from using the minimum amount of 2 min to the maximum amount of 9.65 min), 11 RSFC matrices per participant in the ABCD SST task (from using the minimum amount of 2 min to the maximum amount of 11.65 min) and 10 RSFC matrices per participant in the ABCD MID task (from using the minimum amount of 2 min to the maximum amount of 10.74 min).

We note that the above preprocessed data were collated across multiple laboratories and, even within the same laboratory, datasets were processed by different individuals many years apart. This led to significant preprocessing heterogeneity across datasets. For example, raw FD was used in the HCP dataset because it was processed many years ago, while the more recently processed ABCD dataset used a filtered version of FD, which has been shown to be more effective. Another variation is that some datasets were projected to fsaverage space, while other datasets were projected to MNI152 or fsLR space.

Prediction workflow

The RSFC generated from the first T minutes was used to predict each phenotypic measure using KRR16 with an inner-loop (nested) cross-validation procedure.

Let us illustrate the procedure using the HCP dataset (Extended Data Fig. 1). We began with the full set of participants. A tenfold nested cross-validation procedure was used. The participants were divided in ten folds (Extended Data Fig. 1 (first row)). We note that care was taken so siblings were not split across folds, so the ten folds were not exactly the same sizes. For each of ten iterations, one fold was reserved for testing (that is, test set), and the remainder was used for training (that is, the training set). As there were 792 HCP participants, the training set size was roughly 792 × 0.9 ≈ 700 participants. The KRR hyperparameter was selected through a tenfold cross-validation of the training set. The best hyperparameter was then used to train a final KRR model in the training set and applied to the test set. Prediction accuracy was measured using Pearson’s correlation and COD39.

The above analysis was repeated with different training set sizes achieved by subsampling each training fold (Extended Data Fig. 1 (second and third rows)), while the test set remained identical across different training set sizes, so the results are comparable across different training set sizes. The training set size was subsampled from 200 to 600 (in intervals of 100). Together with the full training set size of approximately 700 participants, there were 6 different training set sizes, corresponding to 200, 300, 400, 500, 600 and 700.

The whole procedure was repeated with different values of T. As there were 29 values of T, there were in total 29 × 6 sets of prediction accuracies for each phenotypic measure. To ensure robustness, the above procedure was repeated 50 times with different splits of the participants into ten folds to ensure stability (Extended Data Fig. 1). The prediction accuracies were averaged across all test folds and all 50 repetitions.

The procedure for the other datasets followed the same principle as the HCP dataset. However, the ABCD (rest and task) and ADNI datasets comprised participants from multiple sites. Thus, following our previous studies31,39, we combined ABCD participants across the 22 imaging sites into 10 site-clusters and combined ADNI participants across the 71 imaging sites into 20 site-clusters (Supplementary Table 5). Each site-cluster has at least 227, 156 and 29 participants in the ABCD (rest), ABCD (task) and ADNI datasets respectively.

Instead of the tenfold inner-loop (nested) cross-validation procedure in the HCP dataset, we performed a leave-three-site-clusters-out inner-loop (nested) cross-validation (that is, seven site-clusters are used for training and three site-clusters are used for testing) in the ABCD rest and task datasets. The hyperparameter was again selected using a tenfold CV within the training set. This nested cross-validation procedure was performed for every possible split of the site clusters, resulting in 120 replications. The prediction accuracies were averaged across all 120 replications.

We did not perform a leave-one-site-cluster-out procedure because the site-clusters are ‘fixed’, so the cross-validation procedure can only be repeated ten times under a leave-one-site-cluster-out scenario (instead of 120 times). Similarly, we did not go for leave-two-site-clusters-out procedure because that will only yield a maximum of 45 repetitions of cross-validation. On the other hand, if we left more than three site clusters out (for example, leave-five-site-clusters-out), we could achieve more cross-validation repetitions, but at the cost of reducing the maximum training set size. We therefore opted for the leave-three-site-clusters-out procedure, consistent with our previous study39.

To be consistent with the ABCD dataset, for the ADNI dataset, we also performed a leave-three-site-clusters-out inner-loop (nested) cross-validation procedure. This procedure was performed for every possible split of the site clusters, resulting in 1,140 replications. The prediction accuracies were averaged across all 1,140 replications.

We also performed tenfold inner-loop (nested) cross-validation procedure in the TCP, MDD and SINGER datasets. Although the data from the TCP and MDD datasets were acquired from multiple sites, the number of sites was much smaller (2 and 5, respectively) than that of the ABCD and ADNI datasets. We were therefore unable to use the leave-some-site-out cross-validation strategy because that would reduce the training set size by too much. We therefore ran a tenfold nested cross-validation strategy (similar to the HCP). However, we regress sites from the target phenotype in the training set, which were then applied to the test set. In other words, our prediction was performed on the residuals of phenotypes after site regression. Site regression was unnecessary for the SINGER dataset as the data were collected from only a single site. The rest of the prediction workflow was the same as the HCP dataset, except for the number of repetitions. As TCP, MDD and SINGER datasets had smaller sample sizes than the HCP dataset, the tenfold cross-validation was repeated 350 times. The prediction accuracies were averaged across all test folds and all repetitions.

Similar to the HCP, the analyses were repeated with different numbers of training participants, ranging from 200 to 1,600 ABCD (rest) participants (in intervals of 200). Together with the full training set size of approximately 1,800 participants, there were 9 different training set sizes. The whole procedure was repeated with different values of T. As there were 10 values of T in the ABCD (rest) dataset, there were in total 10 × 9 values of prediction accuracies for each phenotype. In the case of ABCD (task), the sample size was smaller with maximum training set size of approximately 1,600 participants, so there were only eight different training set sizes.

The ADNI and SINGER datasets had less participants than the HCP dataset, so we decided to sample the training set size more finely. More specifically, we repeated the analyses by varying the number of training participants from the minimum sample size of 100 to the maximum sample size in intervals of 100. For SINGER, the full training set size is around 580 participants, so there were 6 different training set sizes in total (100, 200, 300, 400, 500 and ~580). For ADNI, the full training set size is around 530, so there were also 6 different training set sizes in total (100, 200, 300, 400, 500 and ~530).

Finally, TCP and MDD datasets were the smallest, so the training set size was sampled even more finely. More specifically, we repeated the analyses by varying the number of training participants from the minimum sample size of 50 to the maximum sample size in intervals of 25. For TCP, the full training set size is ~175, so there 6 training set sizes in total (50, 75, 100, 125, 150 and 175). For MDD, the full training set size is ~258, so there 10 training set sizes in total (50, 75, 100, 125, 150, 175, 200, 225, 250 and 258).

Current best MRI practices suggest that the model hyperparameter should be optimized70, so in the current study, we did not consider the case where the hyperparameter was fixed. As an aside, we note that for all analyses, the best hyperparameter was selected using a tenfold cross-validation within the training set. The best hyperparameter was then used to train the model on the full training set. Thus, the full training set was used for hyperparameter selection and for training the model. Furthermore, we needed to select only one hyperparameter, while training the model required fitting many more parameters. We therefore do not expect the hyperparameter selection to be more dependent on the training set size than training the actual model itself.

We also note that our study focused on out-of-sample prediction within the same dataset, but did not explore cross-dataset prediction71. For predictive models to be clinically useful, these models must generalize to completely new datasets. The best way to achieve this goal is by training models from multiple datasets jointly, so as to maximize the diversity of the training data72,73. However, we did not consider cross-dataset prediction in the current study because most studies are not designed with the primary aim of combining the collected data with other datasets.

A full table of prediction accuracies for every combination of sample size and scan time per participant is provided in the Supplementary Information.

Fitting the logarithmic model

By plotting prediction accuracy against total scan duration (number of training participants × scan duration per participant) for each phenotypic measure, we observed diminishing returns of scan time (relative to sample size), especially beyond 20 min per participant.

Furthermore, visual inspection suggests that a logarithmic curve might fit well to each phenotypic measure when scan time per participant is 20 min or less. To explore the universality of a logarithmic relationship between total scan duration and prediction accuracy, for each phenotypic measure p, we fitted the function yp = zplog2(tp) + kp, where yp was the prediction accuracy for phenotypic measure p, and tp is the total scan duration. zp and kp were estimated from data by minimizing the square error, yielding \({\hat{z}}_{p}\) and \({\hat{k}}_{p}\).

In addition to fitting the logarithmic curve to different phenotypic measures, the fitting can also be performed with different prediction accuracy measures (Pearson’s correlation or COD) and different predictive models (KRR and LRR). Assuming the datapoints are well explained by the logarithmic curve, the normalized accuracies \(({y}_{p}-{\hat{k}}_{p})/{\hat{z}}_{p}\) should follow a standard log2(t) curve across phenotypic measures, prediction accuracies, predictive models and datasets. For example, Supplementary Fig. 9a shows the normalized prediction performance of the cognitive factors for different prediction accuracy measures (Pearson’s correlation or COD) and different predictive models (KRR and LRR) across HCP and ABCD datasets.

Here we have chosen to use KRR and linear regression because previous studies have shown that they have comparable prediction performance, and also exhibited similar prediction accuracies as several deep neural networks16,39. Indeed, a recent study suggested that linear dynamical models provide a better fit to resting-state brain dynamics (as measured by fMRI and intracranial electroencephalogram) than nonlinear models, suggesting that, due to the challenges of in vivo recordings, linear models might be sufficiently powerful to explain macroscopic brain measurements. However, we note that, in the current study, we are not making a similar claim. Instead, our results suggest that the trade-off between scan time and sample size are similar for different regression models, and phenotypic domains, scanners, acquisition protocols, racial groups, mental disorders, age groups, as well as resting-state and task-state functional connectivity.

Fitting the theoretical model

We observed that sample size and scan time per participant did not contribute equally to prediction accuracy, with sample size having a more important role than scan time. To explain this observation, we derived a mathematical relationship relating the expected prediction accuracy (Pearson’s correlation) between noisy brain measurements and non-brain-imaging phenotype with scan time and sample size.

Based on a linear regression model with no regularization and assumptions including (1) stationarity of fMRI (that is, autocorrelation in fMRI is the same at all timepoints), and (2) prediction errors are uncorrelated with errors in brain measurements, we found that

where \(E(\widehat{\rho })\) is the expected correlation between the predicted phenotype estimated from noisy brain measurements and the observed phenotype. K0 is related to the ideal association between brain measurements and phenotype, attenuated by phenotypic reliability. K1 is related to the noise-free ideal association between brain measurements and phenotype. K2 is related to brain–phenotype prediction errors due to brain measurement inaccuracies. Full derivations are provided in Supplementary Methods 1.1 and 1.2.

On the basis of the above equation, we fitted the following function \({y}_{p}={K}_{0,p}\sqrt{\frac{1}{1+{K}_{1,p}/N+{K}_{2,p}/(NT)}}\), where yp is the prediction accuracy for phenotypic measure p, N is the sample size and T is the scan time per participant. K0,p,K1,p and K2,p were estimated by minimizing the mean squared error between the above function and actual observation of yp using gradient descent.

Non-stationarity analysis

In the original analysis, FC matrices were generated with increasing time T based on the original run order. To account for the possibility of fMRI-phenotype non-stationarity effects, we randomized the order in which the runs were considered for each participant. As both the HCP and ABCD datasets contained 4 runs of resting-fMRI, we generated FC matrices from all 24 possible permutations of run order. For each cross-validation split, the FC matrix for a given participant was randomly sampled from 1 of the 24 possible permutations. We note that the randomization was independently performed for each participant.

To elaborate further, let us consider an ABCD participant with the original run order (run 1, run 2, run 3, run 4). Each run was 5 min long. In the original analysis, if scan time T was 5 min, then we used all the data from run 1 to compute FC. If scan time T was 10 min, then we used run 1 and run 2 to compute FC. If scan time T was 15 min, then we used runs 1, 2 and 3 to compute FC. Finally, if scan time T was 20 min, we used all 4 runs to compute FC.

On the other hand, after run randomization, for the purpose of this exposition, let us assume that this specific participant’s run order had become run 3, run 2, run 4, run 1. In this situation, if the scan time T was 5 min, then we used all data from run 3 to compute FC. If scan time T was 10 min, then we used run 3 and run 2 to compute FC. If scan time T was 15 min, then we used runs 3, 2 and 4 to compute FC. Finally, if T was 20 min, we used all 4 runs to compute FC.

Optimizing within a fixed fMRI budget

To generate Extended Data Fig. 6, we note that given a particular scan cost per hour S and overhead cost per participant O, the total budget for scanning N participants with T min per participant is given by (T/60 × S + O) × N. Thus, given a fixed fMRI budget (for example, US$1 million), scan cost per hour (for example, US$500) and overhead cost per participant (for example, US$500), we increase scan time T in 1 min intervals from 1 to 200 and, for each value of T, we can find the largest sample size N, such that the scan costs stayed within the fMRI budget. For each pair of sample size N and scan time T, we can then compute the fraction of maximum accuracy based on Fig. 4a.

Optimizing to achieve a fixed accuracy

To generate Figs. 4b,c, 5 and 6, suppose we want to achieve 90% of maximum achievable accuracy, we can find all pairs of sample size and scan time per participant along the 0.9 black contour line in Fig. 4a. For every pair of sample size N and scan time T, we can then compute the study cost given a particular scan cost per hour S (for example, US$500) and a particular overhead cost per participant O (for example, US$1,000): (T/60 × S + O) × N. The optimal scan time (and sample size) with the lowest study cost can then be obtained.

Brain-wide association reliability

To explore the reliability of univariate brain-wide association analyses (BWAS)1, we followed a previously established split-half procedure14,15.

Let us illustrate the procedure using the HCP dataset (Supplementary Fig. 20a). We began with the full set of participants, which were then divided into ten folds (Supplementary Fig. 20a (first row)). We note that care was taken so siblings were not split across folds, so the ten folds were not exactly the same sizes. The ten folds were divided into two non-overlapping sets of five folds. For each set of five folds and each phenotype, we computed the Pearson’s correlation between each RSFC edge and phenotype across participants, yielding a 419 × 419 correlation matrix, which was then converted into a 419 × 419 t-statistic matrix. Split-half reliability between the (lower triangular portions of the symmetric) t-statistic matrices from the two sets of five folds was then computed using the intraclass correlation formula14,15.

The above analysis was repeated with different sample sizes achieved by subsampling each fold (Supplementary Fig. 20a (second and third rows)). The split-half sample sizes were subsampled from 150 to 350 (in intervals of 50). Together with the full sample size of approximately 800 participants (corresponding to a split-half sample size of around 400), there were 6 split-half sample sizes corresponding to 150, 200, 250, 300, 350 and 400 participants.

The whole procedure was also repeated with different values of T. As there were 29 values of T, there were in total 29 × 6 univariate BWAS split-half reliability values for each phenotype. To ensure robustness, the above procedure was repeated 50 times with different split of the participants into 10 folds to ensure stability (Supplementary Fig. 20a). The reliability values were averaged across all 50 repetitions.

The same procedure was followed in the case of the ABCD dataset, except as previously explained, the ABCD participants were divided into ten site-clusters. Thus, the split-half reliability was performed between two sets of five non-overlapping site-clusters. In total, this procedure was repeated 126 times as there were 126 ways to divide 10 site-clusters into two sets of 5 non-overlapping site-clusters.

Similar to the HCP, the analyses were repeated with different numbers of split-half participants, ranging from 200 to 1,000 ABCD participants (in intervals of 200). Together with the full training set size of approximately 2,400 participants (corresponding to a split-half sample size of approximately 1,200 participants, there were 6 split-half sample sizes, corresponding to 200, 400, 600, 800, 1,000, 1,200.

The whole procedure was also repeated with different values of T. As there were 10 values of T in the ABCD dataset, there were in total 10 × 6 values univariate BWAS split-half reliability values for each phenotype.

Previous studies have suggested the Haufe-transformed coefficients from multivariate prediction are significantly more reliable than univariate BWAS14,15. We therefore repeated the above analyses by replacing BWAS with the multivariate Haufe-transform.

A full table of split-half BWAS reliability for each given combination of sample size and scan time per participant is provided in the Supplementary Information.

Statistical analyses