Abstract

As a relatively new form of sport, esports offers unparalleled data availability. Our work aims to open esports to a broader scientific community by supplying raw and pre-processed files from StarCraft II esports tournaments. These files can be used in statistical and machine learning modeling tasks and compared to laboratory-based measurements. Additionally, we open-sourced and published all the custom tools that were developed in the process of creating our dataset. These tools include PyTorch and PyTorch Lightning API abstractions to load and model the data. Our dataset contains replays from major and premiere StarCraft II tournaments since 2016. We processed 55 “replaypacks” that contained 17930 files with game-state information. At the time of publication, our dataset is one of the few large, publicly available sources of StarCraft II data. Analysis of the extracted data holds promise for further Artificial Intelligence (AI), Machine Learning (ML), psychological, Human-Computer Interaction (HCI), and sports-related studies in a variety of supervised and self-supervised tasks.


Background & Summary

Electronic sports (esports) are a relatively new and exciting multidisciplinary field of study1,2. There are multiple groups of stakeholders involved in the business of esports3, as well as interest from the academic community.

From the perspective of sports competition, esports is both in its infancy and at the forefront of using analytics to optimize training and performance. New training methods are derived from an ever-increasing pool of data and research aimed at generating actionable insights, mostly by applying methods from sport science4,5,6,7,8,9. Rule changes in sports come at varying time intervals and frequently with unpredictable effects on their dynamics. Sharing esports data is especially relevant for assessing rapid changes in game design and their impact on professional players, given the relatively unstructured nature of esports competition and development10,11. Regarding academia, esports have been utilized in several fields for diverse purposes. Advancements in Artificial Intelligence (AI) and Machine Learning (ML) have shown that Reinforcement Learning (RL) agents are capable of outmatching human players in many different types of games, including esports12,13,14,15. Psychological research on neuroplasticity has also shown the potential of esports and video games in general, such as their propensity for inducing structural brain adaptation16. Further, previous studies have shown that playing video games can enhance cognitive functioning in a wide range of domains, including perceptual, attentional and spatial ability17,18.

As such, esports provide a platform for studying complex task performance in both humans and complex AI systems. Data obtained from esports titles - gathered from high-level tournament performance - may provide a path to improving the quality and reproducibility of research in these fields, owing to the stability of such data relative to that gathered in the wild. A lower technical overhead and greater data availability for modeling could assist further research19,20,21. Despite the digital nature of esports, which is their greatest asset with respect to data gathering, there seems to be a lack of high-quality pre-processed data published for scientific and practical use. The goal of our work is to mitigate this issue by publishing datasets containing StarCraft II replays and pre-processed data from esports events classified as “Premiere” and “Major” by Liquipedia (https://liquipedia.net/starcraft2/Portal:Leagues) in the timeframe from 2016 until 2022.

StarCraft II is a widely played and longstanding esports title, which can briefly be described as follows:

“StarCraft II: Legacy of The Void (SC2) contains various game modes: 1v1, 2v2, 3v3, 4v4, Archon, and Arcade. The most competitive and esports related mode (1v1) can be classified as a two-person combat, real-time strategy (RTS) game. The goal of the game for each of the competitors is either to destroy all of the opponent’s structures or to make them resign.” Moreover, StarCraft II contains multiple matchmaking options: “Ranked game - Players use a built-in system that selects their opponent based on Matchmaking Rating (MMR) points. Unranked game - Players use a built-in system that selects their opponent based on a hidden MMR - such games do not affect the position in the official ranking. Custom game - Players join the lobby (game room), where all game settings are set and the readiness to play is verified by both players - this mode is used in tournament games. Immediately after the start of the game, players have access to one main structure, which allows for further development and production of units.”22.

While reviewing StarCraft II related sources, we found two publicly available datasets: “SkillCraft1”23 from 2013 and “MSC”24 from 2017. These datasets are related to video games and in that regard could be classified as “gaming” datasets. However, it is not clear what percentage of the games included in these datasets involve actively competing esports players. Our efforts aim to remedy this while also providing tools to make such data more easily accessible and usable.

A summary of the contributions stemming from this work is as follows: (1) the development of a set of four tools to work with StarCraft II data25,26,27,28; (2) the publication of a collection of raw replays from various public sources after pre-processing29; (3) the processing of raw data and publication of the results as a dataset30; and (4) the preparation of an official API to interact with our data using PyTorch and PyTorch Lightning for ease of experimentation in further research31.

Related work

In Table 1, we compare two other StarCraft II datasets with our own. The authors of the SkillCraft1 dataset distinguished between player skill levels based on the data. They proposed a new feature in the form of the Perception-Action Cycle (PAC), which was calculated from the game data. This research can be viewed as the first step toward developing new training methods and analytical depth in electronic sports. It provided vital information describing different levels of gameplay and optimization in competitive settings32.

There are existing datasets for other games. Due to the major differences in game implementations, these could not be directly compared to our work. Despite that, such publications build upon a similar idea of sharing gaming or esports data with a wider scientific audience and should be mentioned. Out of all related work, the STARDATA dataset is notable in that it comes from the prior generation of the StarCraft game. This dataset seems to be the largest StarCraft: Brood War dataset available33. Moreover, a multimodal dataset including physiological data is available for League of Legends34.

Related publications focused on in-game player performance analyses and psychological, technical, mechanical, or physiological indices. These studies were conducted using various video games such as: Overwatch35,36, League of Legends37,38,39,40,41, Dota 242,43,44,45,46, StarCraft47,48,49, StarCraft II50,51,52,53,54, Heroes of the Storm42, Rocket League55, and Counter-Strike: Global Offensive56,57,58,59,60, among others61. In some cases, a comparison between professional and recreational players was conducted.

Most studies did not provide data as part of their publication. In other cases, the authors used replays that were provided by the game publishers or were publicly available online - such data collections in their proprietary format are unsuitable for immediate data modeling tasks without prior pre-processing. When dealing with StarCraft II, researchers most often used raw files in MPQ (SC2Replay) format with custom code built upon existing specifications - s2client-proto62. Other studies solved technical problems that are apparent when working with esports data and different sensing technologies, including visualization, but did not publish their data63,64,65,66,67. Some researchers have attempted to measure tiredness in an undisclosed game via electroencephalography (EEG)68, and player burnout using a multimodal dataset that consisted of EEG, electromyography (EMG), galvanic skin response (GSR), heart rate (HR), eye-tracking, and other physiological measures in esports69.

Methods

Dataset sources

The files used in the presented information extraction process were publicly available thanks to a StarCraft II community effort. Tournament organizers and tournament administrators for events classified as “Premiere” and “Major” made the replays available immediately after each tournament to share the information with the broader StarCraft II community for research, manual analysis, and in-game improvement. Sources that were searched for replaypacks include Liquipedia, Spawning Tool, Reddit, Twitter, and tournament organizer websites - these are fully specified in the supplemental file. All replaypacks required to construct the dataset were identified and downloaded manually from the publicly available sources. Replaypack sources did not provide any further information on redistribution. It is customary for tournament administrators to act as judges/referees in case of any issues and to have full access to the players’ PCs. Replay recording is automatic and enabled for all users who join a game lobby unless it is deliberately turned off. Recording replays is most often mandatory for tournament gameplay in case of potential disputes between players or technical problems. In case of technical difficulties in tournaments, StarCraft II has a built-in mechanism to resume a game from a recorded replay. After the tournament is finished, the administrators with access to all of the replays share them with the StarCraft II community for use at will. Ownership of all data before processing with additional software is subject to Blizzard’s EULA and other Blizzard licenses. Therefore, as the tournament organizers shared these data with the public, we deemed that no additional permissions were required. The entirety of our processing conformed with the “Blizzard StarCraft II AI and Machine Learning License”, and Blizzard representatives were contacted before the release of the processed data.

SC2ReSet description

Our raw data release “SC2ReSet: StarCraft II Esport Replaypack Set”29 is subject to the original End User License Agreement (EULA) published by Blizzard, and depending on use case can be processed under the “Blizzard StarCraft II AI and Machine Learning License”.

The raw-data release contains replaypacks in .zip format, with each compressed archive representing a single tournament. Within each archive, all files with the “.SC2Replay” extension are MPQ archives (a custom Blizzard format) and hold all the information needed to recreate a game with the game engine or to acquire the game-state data with a replay parsing library. Additionally, each archive has a “.json” metadata file containing a mapping between the current file hashes and the previous directory structure, which may contain information about the tournament stage for a given replay file.

Dataset pre-processing

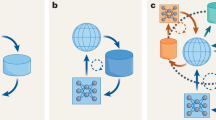

Dataset pre-processing required the use of a custom toolset. Initially, the Python programming language was used to process the directory structure which held additional tournament stage information. We include this information in the dataset in a separate file for each tournament, effectively mapping the initial directory structure onto the resulting unique hashed filenames. Moreover, a custom tool for downloading the maps was used; only the maps that were used within the replays were downloaded26. Finally, to ensure proper translation to English map names in the final data structures, a custom C++ tool implementation was used. Information extraction was performed on map files that contained all necessary localization data27. The entirety of our processing pipeline is visualized in Fig. 1.

Data processing

Custom software was implemented in the Go programming language (Golang) and built upon authorized and public GitHub repositories endorsed by the game publisher - s2prot. The tool was used to perform information extraction from files in MPQ format with the SC2Replay extension. Information extraction was performed for each pre-processed directory that corresponded to a single tournament. Depending on the use case, different processing approaches are possible by providing command line arguments25.

Data parsing and integrity

The parsing capabilities of the tooling were defined with a Golang high-level parser API available on GitHub - s2prot. After initial data-structures were obtained, the next step checked the integrity of the data. This was accomplished by comparing information available across different duplicate data structures that corresponded to: the number of players, map name, length of the player list, game version, and Blizzard map boolean (signifying whether a map was published by Blizzard). If a replay parser or custom integrity check failed, the replay was omitted.
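A minimal Python sketch of this integrity check is shown below. It is an illustration only, not the Go implementation in SC2InfoExtractorGo: the keys `playerCount`, `players`, `mapName`, `gameVersion`, and `isBlizzardMap`, and the idea of comparing two parsed "views" of one replay, are hypothetical stand-ins for the duplicate data structures returned by the parser.

```python
from __future__ import annotations

from typing import Any

# Hypothetical names for values that the parser exposes in more than one structure.
CHECKED_FIELDS = ("mapName", "gameVersion", "isBlizzardMap")


def is_consistent(view_a: dict[str, Any], view_b: dict[str, Any]) -> bool:
    """Return True if two parsed views of one replay agree on duplicated fields."""
    # The number of players reported by both structures must match.
    if view_a.get("playerCount") != view_b.get("playerCount"):
        return False
    # The length of the player list must equal the reported number of players.
    if len(view_a.get("players", [])) != view_a.get("playerCount"):
        return False
    return all(view_a.get(field) == view_b.get(field) for field in CHECKED_FIELDS)


def filter_replays(parsed: dict[str, tuple[dict, dict]]) -> list[str]:
    """Keep replay names whose views are consistent; the rest are omitted."""
    return [name for name, (a, b) in parsed.items() if is_consistent(a, b)]
```

Omitting a replay on the first mismatch mirrors the rule stated above: a failed parse or a failed integrity check means the replay is not included.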

Data filtering and restructuring

Filtering for different game modes was omitted, as the collected replay files were part of esports tournament matches. Most often, StarCraft II tournament matches are played in a one-versus-one format. Therefore, it was assumed that filtering for the number of players was not required at this step. Custom data structures were created and populated at this stage. This allowed for more control over the processing, summary generation, and final output. Data structures containing duplicate information were merged where applicable.

Summarization and JSON Output to zip archive

Replay summarization was required in order to provide information that can be accessed without unpacking the dataset. Finally, the data was converted from Golang data structures into JavaScript Object Notation (JSON) format, and compressed into a zip archive.
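The summarization and output step could look roughly like the following Python sketch (the actual tool is written in Go). The function names and the single `gameVersionHistogram` summary entry are hypothetical, and the sketch assumes `header.version` holds a hashable value such as a string. The summary is returned separately from the archive so that it can be read without unpacking the replay data.

```python
from __future__ import annotations

import io
import json
import zipfile
from collections import Counter


def summarize(replays: dict[str, dict]) -> dict:
    """Build a small summary that can be read without unpacking the replays."""
    versions = Counter(replay["header"]["version"] for replay in replays.values())
    return {"replayCount": len(replays), "gameVersionHistogram": dict(versions)}


def pack(replays: dict[str, dict]) -> tuple[bytes, str]:
    """Return (zip bytes with one JSON file per replay, summary as a JSON string)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for name, replay in replays.items():
            archive.writestr(f"{name}.json", json.dumps(replay))
    return buffer.getvalue(), json.dumps(summarize(replays))
```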

Data Records

Dataset description

The final dataset “SC2EGSet: StarCraft II Esport Game State Dataset”30 is indexed on Zenodo and published under the CC BY 4.0 International license, conforming with the “Blizzard StarCraft II AI and Machine Learning License”. This dataset was processed using tools that are a derivative work of the officially endorsed and published replay parsing specification. Blizzard representatives were contacted before the release of the processed data to ensure that there were no issues with the dataset publication with respect to potential licensing infringement.

Each tournament in the dataset is a .zip file. Within each archive there are 5 files: (1) a nested .zip with the processed data named “ReplaypackName_data.zip”; (2) the main log of the processing tool used for extraction, named “main_log.log”; (3) a secondary log in JSON format with two fields, “processedFiles” and “failedToProcess”, listing the files that were parsed successfully and those that failed, e.g. “processed_failed_0.json”; (4) a mapping between the current file hashes and the previous directory structure, named “processed_mapping.json”, as introduced in Section SC2ReSet Description; (5) a JSON file with descriptive statistics named “ReplaypackName_summary.json”, containing information such as: game version histogram, dates at which the observed matches were played, server information, picked race information, match length, detected spawned units, and race picked versus game time histogram.
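Because the processed replays sit in a nested archive, they can be streamed without extracting anything to disk. The sketch below assumes only the layout described above (an inner file ending in `_data.zip` that contains one JSON file per replay); `iter_replays` is a hypothetical helper, not part of the official API.

```python
from __future__ import annotations

import io
import json
import zipfile
from typing import Iterator


def iter_replays(tournament_zip) -> Iterator[tuple[str, dict]]:
    """Yield (file name, parsed JSON) for every replay in one tournament archive.

    `tournament_zip` may be a filesystem path or a binary file-like object.
    """
    with zipfile.ZipFile(tournament_zip) as outer:
        # Processed data lives in the nested "<ReplaypackName>_data.zip" archive;
        # the logs, mapping, and summary files next to it are skipped.
        for nested_name in (n for n in outer.namelist() if n.endswith("_data.zip")):
            with zipfile.ZipFile(io.BytesIO(outer.read(nested_name))) as inner:
                for name in inner.namelist():
                    if name.endswith(".json"):
                        yield name, json.loads(inner.read(name))
```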

Dataset properties

The collected dataset consisted of 55 tournaments. Within the available tournaments, 18309 matches were processed. The final processing yielded 17895 files. While inspecting the processed data, we observed three major game versions. The critical properties of our work are as follows:

-

To secure the availability of the raw replays for further research and extraction with other toolsets built by the community, the SC2ReSet: StarCraft II Esport Replaypack Set was created29.

-

The replays were processed under the licenses provided by the game publisher: “End User License Agreement (EULA)”, and “Blizzard StarCraft II AI and Machine Learning License”.

-

Our dataset is released under CC BY 4.0 International to comply with Blizzard EULA and the aforementioned “Blizzard StarCraft II AI and Machine Learning License”.

Data fields

The top-level fields available in each JSON file represent a single StarCraft II replay. All of the available events and fields not listed here are described in the API documentation for the JSON parser in our repository - https://github.com/Kaszanas/SC2_Datasets, which loads the data for further experiments31. Interpretations of some fields are not included due to the limitations of the official publisher documentation - s2protocol documentation. Additionally, some of the fields acquired from the parsing steps are left as duplicates and can be used to verify the soundness of the provided data.

details

Field containing arbitrary “details” on the processed StarCraft II game.

-

gameSpeed: Game speed setting as set in the game options. Can be one of “slower”, “slow”, “normal”, “fast”, or “faster”. Typically, competitive or ranked games are played on the “faster” setting. Additional information is available at: https://liquipedia.net/starcraft2/Game_Speed,

-

isBlizzardMap: Specifies whether the map used in the replay was approved and published by Blizzard (game publisher),

-

timeUTC: Denotes the time at which the game was started.

header

Field containing details available in the header of the processed StarCraft II match.

-

elapsedGameLoops: Specifies how many game loops (game-engine ticks) the game lasted,

-

version: Specifies the game version that players used to play the game.
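The `elapsedGameLoops` field counts engine ticks rather than seconds. The sketch below converts it to real-time seconds under the assumption that a replay was played on the “faster” game speed, where the engine runs 16 game loops per in-game second at 1.4x real time, i.e. 22.4 loops per real-time second. The helper names and the short-replay filter are illustrative and not part of the dataset API; the filter is one way to act on the Limitations note about replays that ended prematurely.

```python
from __future__ import annotations

# Assumption: "faster" game speed = 16 game loops per in-game second x 1.4,
# i.e. 22.4 game loops per real-time second.
LOOPS_PER_REAL_SECOND = 22.4


def loops_to_seconds(elapsed_game_loops: int) -> float:
    """Convert header.elapsedGameLoops into real-time seconds."""
    return elapsed_game_loops / LOOPS_PER_REAL_SECOND


def duration_mm_ss(elapsed_game_loops: int) -> str:
    """Format a match duration as MM:SS, rounded to the nearest second."""
    minutes, seconds = divmod(round(loops_to_seconds(elapsed_game_loops)), 60)
    return f"{minutes:02d}:{seconds:02d}"


def drop_short_replays(replays: dict[str, dict], min_seconds: float) -> dict[str, dict]:
    """Keep replays lasting at least `min_seconds` of real time."""
    min_loops = min_seconds * LOOPS_PER_REAL_SECOND
    return {
        name: replay
        for name, replay in replays.items()
        if replay["header"]["elapsedGameLoops"] >= min_loops
    }
```

Under this assumption, the 9-minute threshold used in the Technical Validation experiments corresponds to 9 × 60 × 22.4 = 12,096 game loops. If PlayerStats tracker events are emitted every 160 game loops, as is commonly reported for the replay format, the same conversion gives about 7.1 s, which would be consistent with the sampling rate of approximately 7 seconds mentioned in the Modelling section.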

initData

Field containing details on the game initialization.

-

GameDescription: contains information such as the GameOptions.

metadata

Field that contains some game “metadata”. Available fields are:

-

baseBuild: build number of the game engine,

-

dataBuild: number of the build,

-

gameVersion: game version number,

-

mapName: name of the map the game was played on.

gameEvents

Field that contains a list of game events with additional information on player actions. For a full description of nested fields in each of the available events please refer to the official API documentation as referenced in the API repository provided along with the dataset - https://github.com/Kaszanas/SC2_Datasets31. Available events are:

-

CameraSave: user saved the camera location to be accessible by a hotkey,

-

CameraUpdate: user updated the camera view,

-

ControlGroupUpdate: user updated a control group,

-

GameUserLeave: denotes that a user left the game,

-

UserOptions: denotes the game settings that the user has set,

-

Cmd,

-

CmdUpdateTargetPoint,

-

CommandManagerState,

-

SelectionDelta.

messageEvents

Field that contains a list of events with additional information on player messages. Available events are:

-

Chat: denotes that a player wrote something in the in-game chat.

trackerEvents

Field that contains a list of “tracker” events. Available types of events are:

-

PlayerStats: information on the economy of the players,

-

PlayerSetup: contains basic information mapping userId to playerId to slotId,

-

UnitInit: when the player initializes production of a unit,

-

UnitBorn: when the unit spawns,

-

UnitDied: when the unit stops existing,

-

Upgrade: when an upgrade finishes,

-

UnitDone,

-

UnitOwnerChange,

-

UnitPositions,

-

UnitTypeChange.
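The event lists can be inspected with a few lines of Python. The sketch below counts events per type; it assumes that each event is a JSON object whose type name is stored under an `evtTypeName` key, which should be verified against the API documentation referenced above before use.

```python
from __future__ import annotations

from collections import Counter
from typing import Iterable

# Assumption: the event type name is stored under this key in each event object.
EVENT_TYPE_KEY = "evtTypeName"


def count_event_types(events: Iterable[dict], key: str = EVENT_TYPE_KEY) -> Counter:
    """Count events by type name, e.g. for replay["trackerEvents"]."""
    return Counter(event.get(key, "<missing>") for event in events)
```

For example, `count_event_types(replay["trackerEvents"])["UnitBorn"]` would give the number of spawned units in a replay, provided the key assumption holds.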

ToonPlayerDescMap

Field that contains an object mapping the unique player ID to additional information on the player’s metadata.
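Since filtering for the number of players was not applied during processing (see Data filtering and restructuring), users who need strictly one-versus-one matches can check this themselves. The sketch below assumes that `ToonPlayerDescMap` holds one entry per participating player and no observers; the helper names are hypothetical.

```python
from __future__ import annotations


def is_one_versus_one(replay: dict) -> bool:
    """Assumes ToonPlayerDescMap has exactly one entry per player (no observers)."""
    return len(replay.get("ToonPlayerDescMap", {})) == 2


def keep_one_versus_one(replays: dict[str, dict]) -> dict[str, dict]:
    """Drop replays that do not describe exactly two players."""
    return {name: replay for name, replay in replays.items() if is_one_versus_one(replay)}
```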

Technical Validation

Technical validation description

Technical validation for the raw replay files contained in “SC2ReSet: StarCraft II Esport Replaypack Set”29 is built into the tools that were used to create the dataset and was introduced in the Methods section as processing steps. We assume that all of the replays that went through our data extraction process are correct and conform to Blizzard’s shared specification for replay parsing.

Validating the “SC2EGSet: StarCraft II Esport Game State Dataset”30 included manual and visual verification and scripted checks for the soundness of obtained data. Processing logs are attached to each of the manipulated replaypacks. Figure 2 depicts the frequency with which each of the races played against the other and the distribution of races observed within the tournaments. Figure 3 depicts the distribution of match times that were observed.

The oldest observed tournament was IEM 10 Taipei, which was played in 2016. The most recent observed tournament was IEM Katowice, which finished on 2022.02.27. The game contains different “races” that differ in the mechanics required for gameplay. Figure 4 shows visible differences in the distribution of match time for players that picked different races.

Technical validation experiments

To further ensure the technical validity of our data, we performed two sets of classification experiments using different model implementations.

Data preparation

Matches were initially filtered to only include those with a length of at least 9 minutes, which is approximately the 25th percentile of match length values. Next, a set of features was generated from the available economy-related indicators. Additional features were generated by combining mineral and vespene indicators into summed resource indicators. Match data were then aggregated by averaging across match time for each player, resulting in 22,230 samples of averaged match data (from 11,115 unique matches). Standard deviations were computed in addition to averaged values where applicable. Further features were then generated by computing ratios of resources killed/resources lost for army, economy and technology contexts, along with a ratio of food made to food used. As a final step, prior to feature standardization, values of each feature that exceeded an upper limit of 3 standard deviations above the feature mean were treated as outliers and replaced with the median.
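The preparation steps above can be sketched with the Python standard library. This is an illustration under stated assumptions, not the code from the experiments repository: per-snapshot dictionaries stand in for a player's economy indicators, the `SD` suffix and `resources*` names are hypothetical, the population standard deviation is assumed, and returning 0.0 for a ratio with a zero denominator is an arbitrary choice.

```python
from __future__ import annotations

import statistics


def add_resource_sums(snapshot: dict[str, float]) -> dict[str, float]:
    """Add resources<X> = minerals<X> + vespene<X> for every matching indicator pair."""
    out = dict(snapshot)
    for key, value in snapshot.items():
        if key.startswith("minerals"):
            suffix = key[len("minerals"):]
            partner = "vespene" + suffix
            if partner in snapshot:
                out["resources" + suffix] = value + snapshot[partner]
    return out


def aggregate_player(snapshots: list[dict[str, float]]) -> dict[str, float]:
    """Average each indicator across match time and add its (population) SD."""
    features: dict[str, float] = {}
    for key in snapshots[0]:
        values = [snap[key] for snap in snapshots]
        features[key] = statistics.fmean(values)
        features[key + "SD"] = statistics.pstdev(values)
    return features


def safe_ratio(numerator: float, denominator: float) -> float:
    """Ratio such as resources killed / resources lost; 0.0 if nothing was lost."""
    return numerator / denominator if denominator else 0.0


def replace_upper_outliers(values: list[float], k: float = 3.0) -> list[float]:
    """Replace values above mean + k * SD with the median of the feature."""
    limit = statistics.fmean(values) + k * statistics.pstdev(values)
    median = statistics.median(values)
    return [median if v > limit else v for v in values]
```

Only the upper tail is clipped here, matching the one-sided limit described above.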

Feature selection

The feature count was reduced by first computing point-biserial correlations between features and match outcome, selecting features with a statistically significant (α = 0.001) coefficient whose absolute value exceeded 0.050. Next, a matrix of correlations was computed for the remaining features and redundant features were removed. As a result, 17 features remained after this process, of which 8 were basic features (mineralsLostArmy, mineralsKilledArmy, mineralsLostEconomy, mineralsKilledEconomy, and the SD for each).
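The point-biserial coefficient is numerically equal to the Pearson correlation between a feature and a 0/1 outcome, so both selection steps can be sketched with one helper. The sketch omits the significance test (α = 0.001); `scipy.stats.pointbiserialr` returns both the coefficient and its p-value if that step is needed. The redundancy threshold `max_inter_r = 0.9` and the greedy "keep the strongest feature first" rule are hypothetical, since the exact redundancy criterion is not stated above.

```python
from __future__ import annotations

import math
import statistics


def pearson(a: list[float], b: list[float]) -> float:
    """Pearson correlation; with a 0/1 vector as `b` it equals the point-biserial r."""
    mean_a, mean_b = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # constant input: correlation undefined, treat as uninformative
    return cov / math.sqrt(var_a * var_b)


def select_features(
    features: dict[str, list[float]],
    outcome: list[int],
    min_abs_r: float = 0.05,
    max_inter_r: float = 0.9,  # hypothetical redundancy threshold
) -> list[str]:
    # Step 1: keep features whose |r_pb| with the outcome exceeds min_abs_r,
    # ordered from the strongest to the weakest association.
    r_pb = {name: pearson(values, outcome) for name, values in features.items()}
    candidates = sorted(
        (name for name in r_pb if abs(r_pb[name]) > min_abs_r),
        key=lambda name: abs(r_pb[name]),
        reverse=True,
    )
    # Step 2: drop a candidate that is strongly correlated with a feature already kept.
    kept: list[str] = []
    for name in candidates:
        if all(abs(pearson(features[name], features[k])) <= max_inter_r for k in kept):
            kept.append(name)
    return kept
```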

Modelling

Modelling was conducted on features (economic indicators) that represented the global average gamestate, in which all time points were aggregated into a single state, and also as a time series in which the gamestate was represented at a sampling rate of approximately 7 seconds. Three algorithms were chosen for comparative purposes: Logistic Regression (sklearn.linear_model.LogisticRegression), Support Vector Machine (sklearn.svm.SVC)70,71, and Extreme Gradient Boosting (xgboost.XGBClassifier)72. Each algorithm was initiated with settings aimed at binary classification and with typical starting hyperparameters. A 5-fold cross validation procedure was implemented across the models.
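A minimal scikit-learn sketch of the cross-validated comparison is shown below, with synthetic data standing in for the 17 selected features. The hyperparameters are library defaults (plus `max_iter=1000` for the logistic model) and are assumptions, not the settings used in the experiments. `xgboost.XGBClassifier` implements the scikit-learn estimator interface and could be added to the `models` dictionary in the same way.

```python
from __future__ import annotations

from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def compare_models(X, y, n_splits: int = 5, seed: int = 0) -> dict[str, float]:
    """Mean k-fold cross-validated accuracy for each binary classifier."""
    models = {
        "logistic": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
        "svm": make_pipeline(StandardScaler(), SVC()),
    }
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    return {
        name: cross_val_score(model, X, y, cv=cv, scoring="accuracy").mean()
        for name, model in models.items()
    }


if __name__ == "__main__":
    # Synthetic stand-in for the averaged single-player economic features.
    X_demo, y_demo = make_classification(n_samples=500, n_features=17, random_state=0)
    print(compare_models(X_demo, y_demo))
```

Placing `StandardScaler` inside the pipeline fits the standardization on the training folds only, which keeps test-fold statistics out of training.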

Two sets of models were trained for the average gamestate and one for the gamestate as a time series. In the first averaged set of models the input features represented the economic gamestate of a single player without reference to their opponent, with the model output representing outcome prediction accuracy for that player - a binary classification problem on scalar win/loss classes. The second averaged set of models differed in that it used the averaged economic gamestate of both players as input features, and attempted to predict the outcome of “Player 1” for each match. Finally, the time series models consisted of a feature input vector containing the economic gamestate at 7 second intervals - the task here was also to predict the outcome of a match based on only a single player’s economic features, as in the single-player averaged set of models.

Label counts were equalized to the minimal label count prior to generating the data folds, resulting in 10,744 samples of “Win” and “Loss” labels each for the single-player averaged models and the time series models. For the two-player set of averaged models (containing the features of both players in a given match), the total number of matches used was 10,440. Accuracy was chosen as the model performance evaluation metric in all three cases. Computation was performed on a standard desktop-class PC without additional resources.
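Equalizing label counts amounts to randomly downsampling each class to the size of the smallest one before the folds are generated. A standard-library sketch, with a hypothetical `balance_labels` helper and a fixed seed for reproducibility:

```python
from __future__ import annotations

import random
from collections import defaultdict


def balance_labels(samples: list, labels: list, seed: int = 0) -> tuple[list, list]:
    """Randomly downsample every class to the size of the smallest class."""
    by_label = defaultdict(list)
    for sample, label in zip(samples, labels):
        by_label[label].append(sample)
    n_min = min(len(group) for group in by_label.values())
    rng = random.Random(seed)
    out_x, out_y = [], []
    # Iterate classes in sorted label order so the output layout is deterministic.
    for label, group in sorted(by_label.items()):
        for sample in rng.sample(group, n_min):
            out_x.append(sample)
            out_y.append(label)
    return out_x, out_y
```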

Results

As the results indicate (see Table 2), good outcome prediction can be achieved from economic indicators only, even without exhaustive optimization of each model’s hyperparameters. For the one-player averaged set of models, SVM and XGBoost displayed similar performance, with the logistic classifier lagging slightly behind. For the two-player averaged set of models, all three algorithms performed essentially equally well. Feature importances were taken from a single-player XGBoost model (with identical hyperparameters) that was applied to the entire dataset for illustrative purposes. Figure 5 depicts the top five features by importance. It is interesting to note that importance was more heavily centered around mineral-related features than those tied to vespene, which is likely tied to how mineral and vespene needs are distributed across unit/building/technology costs. Further feature investigation is required to verify this tendency.

Figure 6 depicts the time series application of these models as an illustration of outcome prediction accuracy over time. It should be noted that these time series results are not based on any form of data aggregation, and as such only basic economic features could be used for classification (18 features in total).

Each timepoint contains the average accuracy for 5-fold cross validation, with a minimum match length of 9 minutes and a maximum match length of approximately 15 minutes. All three algorithms provided similar performance over time, although this may be an effect of the minimal hyperparameter optimization that was performed. Further, it is interesting to note that all three algorithms reach a classification performance asymptote at approximately the same match time (550 seconds), which may indicate that this is where economic indicators begin to lose their predictive power and (presumably) other factors such as army size, composition, and their application become the primary determinants. The code for our experiments is available at a dedicated GitHub repository: https://github.com/Kaszanas/SC2EGSet_article_experiments.
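The time series evaluation can be sketched as training a separate classifier at every sampled timepoint and recording its mean cross-validated accuracy. The sketch assumes a hypothetical array layout `(n_samples, n_timepoints, n_features)` in which all matches share the same number of timepoints, and uses logistic regression only as an example; it is not the code from the experiments repository.

```python
from __future__ import annotations

import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def accuracy_over_time(X: np.ndarray, y: np.ndarray, folds: int = 5) -> np.ndarray:
    """Mean k-fold accuracy of a classifier fitted separately at each timepoint.

    X has shape (n_samples, n_timepoints, n_features); y holds 0/1 outcomes.
    """
    model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
    # cross_val_score clones the model for every fold, so reusing it is safe.
    return np.array([
        cross_val_score(model, X[:, t, :], y, cv=folds, scoring="accuracy").mean()
        for t in range(X.shape[1])
    ])
```

Plotting the returned array against timepoint index (multiplied by the sampling interval) gives an accuracy-over-time curve of the kind described above.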

Usage Notes

Dataset loading

Interacting with the dataset is possible via PyTorch73 and PyTorch Lightning74 abstractions. Our API implementation exposes a few key features: (1) automatic dataset downloading and extraction from the Zenodo archive; (2) custom validators that filter or verify the integrity of the dataset; (3) the ability to load and use any other dataset that was pre-processed using our toolset.

The disk space required to successfully download and extract our dataset is approximately 170 gigabytes. We showcase an example use of our API in Fig. 7. Please note that the API31 is subject to change, and users should refer to the official documentation referenced in the API repository for the latest release features and usage information - https://github.com/Kaszanas/SC2_Datasets. An additional listing showcasing the use of a pre-defined SC2EGSetDataset class through our API is shown in Fig. 8.
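For readers who prefer to work with extracted JSON files directly, the sketch below shows a minimal map-style dataset with an optional validator that filters replays when the dataset is built. It is not the official SC2_Datasets API (refer to the repository documentation for that), and the class name is hypothetical. It implements `__len__` and `__getitem__`, the protocol that `torch.utils.data.DataLoader` expects from a map-style dataset, without importing torch itself.

```python
from __future__ import annotations

import json
from pathlib import Path
from typing import Callable, Optional


def _load(path: Path) -> dict:
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


class ReplayJSONDataset:
    """Hypothetical map-style dataset over a directory of extracted replay JSON files."""

    def __init__(self, root: str, validator: Optional[Callable[[dict], bool]] = None):
        paths = sorted(Path(root).glob("*.json"))
        if validator is not None:
            # Filtering reads every file once at construction time.
            paths = [p for p in paths if validator(_load(p))]
        self.paths = paths

    def __len__(self) -> int:
        return len(self.paths)

    def __getitem__(self, index: int) -> dict:
        # Replays are loaded lazily, one file per item.
        return _load(self.paths[index])
```

Because replays differ in length, the default collation may fail to batch them; passing `collate_fn=lambda batch: batch` to `DataLoader` returns each batch as a plain list of replay dictionaries.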

Limitations

We acknowledge that our work is not without limitations. The design and implementation of our dataset do not consider the ability to obtain StarCraft II data through game-engine simulation at a much higher resolution. Because of this, the extracted dataset cannot reflect exact unit positioning. Replays in their original MPQ (SC2Replay) format contain all the information necessary to recreate a game using the game-engine API. Therefore, we plan to continue our research and provide more datasets that will expand the scientific possibilities within gaming and esports. Further, it should be noted that the experiments described here are more illustrative than investigative in nature, and could be greatly expanded upon in future work. Additionally, due to many changes to the game over time, some data fields may be set to a value of zero; in such cases, any further usage of this dataset needs to take into consideration that the representation of a game changed over time. We recommend that further research use SC2ReSet29 to compute game-engine simulated information. We do not provide simulation observation data, which would allow more detailed spatiotemporal information to be extracted at a higher computational cost. Moreover, it is worth noting that the dataset completeness was dependent on which tournament organizers and tournament administrators decided to publish replaypacks.

Additionally, we would like to note that this dataset consists only of tournament-level esports replays and their data. Consequently, it does not support more generalized analyses that take into consideration players of varying skill levels.

Future authors may want to filter out replays that ended prematurely due to unknown reasons. Our dataset may contain replays that are undesirable for esports research. We have decided against the deletion of replays to preserve the initial distributions of data. Additionally, as filtering was omitted (besides that performed for the purposes of the described experiments), there is a risk that the dataset contains matches that were a part of the tournament itself but did not count towards the tournament standings. Due to the timeframe of the tournaments and game version changes, despite our best efforts, some information might be missing or corrupted and is subject to further processing and research.

Code availability

Our dataset was created by using open-source tools that were published with separate digital object identifiers (DOIs) minted for each of the repositories. These tools are indexed on Zenodo25,26,27.

We have made available a PyTorch73 and PyTorch Lightning74 API, published to PyPI, for accessing our dataset and performing various analyses. Additionally, our API is accessible in the form of a GitHub repository - https://github.com/Kaszanas/SC2_Datasets, which is available on Zenodo with a separate DOI. All of the instructions for accessing the data and specific field documentation are published there31.

The code used for technical validation experiments is available for preview in a GitHub repository: https://github.com/Kaszanas/SC2EGSet_article_experiments.

In the process of preparing this article, PyTorch Lightning changed its name to Lightning. We have decided to use the old form of the name, following the citation template provided by the Lightning project on GitHub74.

GitHub Links to the tooling used in the dataset preparation:

• https://github.com/Kaszanas/SC2InfoExtractorGo,

• https://github.com/Kaszanas/SC2DatasetPreparator,

• https://github.com/Kaszanas/SC2MapLocaleExtractor,

The official dataset API is available at the following repository: https://github.com/Kaszanas/SC2_Datasets.

Additional tooling for potential anonymization tasks with data from private collections is available at: https://github.com/Kaszanas/SC2AnonServerPy.

References

Reitman, J. G., Anderson-Coto, M. J., Wu, M., Lee, J. S. & Steinkuehler, C. Esports Research: A Literature Review. Games and Culture 15, 32–50, https://doi.org/10.1177/1555412019840892 (2020).

Chiu, W., Fan, T. C. M., Nam, S.-B. & Sun, P.-H. Knowledge Mapping and Sustainable Development of eSports Research: A Bibliometric and Visualized Analysis. Sustainability 13, https://doi.org/10.3390/su131810354 (2021).

Scholz, T. M. A Short History of eSports and Management, 17–41 (Springer International Publishing, Cham, 2019).

Pustišek, M., Wei, Y., Sun, Y., Umek, A. & Kos, A. The role of technology for accelerated motor learning in sport. Personal and Ubiquitous Computing https://doi.org/10.1007/s00779-019-01274-5 (2019).

Giblin, G., Tor, E. & Parrington, L. The impact of technology on elite sports performance. Sensoria: A Journal of Mind, Brain & Culture 12, https://doi.org/10.7790/sa.v12i2.436 (2016).

Baerg, A. Big Data, Sport, and the Digital Divide: Theorizing How Athletes Might Respond to Big Data Monitoring. Journal of Sport and Social Issues 41, 3–20, https://doi.org/10.1177/0193723516673409 (2017).

Chen, M. A., Spanton, K., van Schaik, P., Spears, I. & Eaves, D. The Effects of Biofeedback on Performance and Technique of the Boxing Jab. Perceptual and Motor Skills 128, 1607–1622, https://doi.org/10.1177/00315125211013251. PMID: 33940988 (2021).

Rajšp, A. & Fister, I. Jr. A Systematic Literature Review of Intelligent Data Analysis Methods for Smart Sport Training. Applied Sciences 10, https://doi.org/10.3390/app10093013 (2020).

Kos, A. & Umek, A. Smart sport equipment: SmartSki prototype for biofeedback applications in skiing. Personal and Ubiquitous Computing 22, https://doi.org/10.1007/s00779-018-1146-1 (2018).

Seif El-Nasr, M., Drachen, A. & Canossa, A. (eds.) Game Analytics: Maximizing the Value of Player Data (Springer London, London, 2013).

Su, Y., Backlund, P. & Engström, H. Comprehensive review and classification of game analytics. Service Oriented Computing and Applications 15, 141–156, https://doi.org/10.1007/s11761-020-00303-z (2021).

Vinyals, O. et al. Grandmaster level in StarCraft II using multi-agent reinforcement learning. Nature 575, 350–354, https://doi.org/10.1038/s41586-019-1724-z (2019).

Jaderberg, M. et al. Human-level performance in 3D multiplayer games with population-based reinforcement learning. Science 364, 859–865, https://doi.org/10.1126/science.aau6249 (2019).

Silver, D. et al. A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play. Science 362, 1140–1144, https://doi.org/10.1126/science.aar6404 (2018).

Berner, C. et al. Dota 2 with large scale deep reinforcement learning. arXiv preprint arXiv:1912.06680 (2019).

Kowalczyk-Grębska, N. et al. Real-time strategy video game experience and structural connectivity - A diffusion tensor imaging study. Human Brain Mapping 39, https://doi.org/10.1002/hbm.24208 (2018).

Green, C. S. & Bavelier, D. Action video game modifies visual selective attention. Nature 423, 534–537, https://doi.org/10.1038/nature01647 (2003).

Green, C. S. & Bavelier, D. Learning, attentional control, and action video games. Current Biology 22, R197–R206, https://doi.org/10.1016/j.cub.2012.02.012 (2012).

Alfonso, F. et al. Data Sharing: A New Editorial Initiative of the International Committee of Medical Journal Editors. Implications for the Editors’ Network. Revista Portuguesa de Cardiologia 36, 397–403, https://doi.org/10.1016/j.repc.2017.02.001 (2017).

Ghasemaghaei, M. Does data analytics use improve firm decision making quality? The role of knowledge sharing and data analytics competency. Decision Support Systems 120, 14–24, https://doi.org/10.1016/j.dss.2019.03.004 (2019).

Zuiderwijk, A. & Spiers, H. Sharing and re-using open data: A case study of motivations in astrophysics. International Journal of Information Management 49, 228–241, https://doi.org/10.1016/j.ijinfomgt.2019.05.024 (2019).

Białecki, A., Gajewski, J., Białecki, P., Phatak, A. & Memmert, D. Determinants of victory in Esports - StarCraft II https://doi.org/10.1007/s11042-022-13373-2 (2022).

Blair, M., Thompson, J., Henrey, A. & Chen, B. SkillCraft1 Master Table Dataset. UCI Machine Learning Repository. Accessed: 2022-06-03 (2013).

Wu, H., Zhang, J. & Huang, K. MSC: A Dataset for Macro-Management in StarCraft II https://doi.org/10.48550/ARXIV.1710.03131 (2017).

Białecki, A., Krupiński, L. & Białecki, P. Kaszanas/SC2InfoExtractorGo: 1.2.1 SC2InfoExtractorGo Release. Zenodo https://doi.org/10.5281/zenodo.5296788 (2022).

Białecki, A., Białecki, P. & Krupiński, L. Kaszanas/SC2DatasetPreparator: 1.2.0 SC2DatasetPreparator Release. Zenodo https://doi.org/10.5281/zenodo.5296664 (2022).

Białecki, A. & Białecki, P. Kaszanas/SC2MapLocaleExtractor: 1.1.1 SC2MapLocaleExtractor Release. Zenodo https://doi.org/10.5281/zenodo.4733264 (2021).

Białecki, A. & Białecki, P. Kaszanas/SC2AnonServerPy: 1.0.1 SC2AnonyServerPy Release. Zenodo https://doi.org/10.5281/zenodo.5138313 (2021).

Białecki, A. SC2ReSet: StarCraft II Esport Replaypack Set. Zenodo https://doi.org/10.5281/zenodo.5575796 (2022).

Białecki, A. et al. SC2EGSet: StarCraft II Esport Game State Dataset. Zenodo https://doi.org/10.5281/zenodo.5503997 (2023).

Białecki, A., Białecki, P., Szczap, A. & Krupiński, L. Kaszanas/SC2_Datasets: 1.0.0 SC2_Datasets Release. Zenodo https://doi.org/10.5281/zenodo.6629005 (2022).

Thompson, J. J., Blair, M., Chen, L. & Henrey, A. J. Video Game Telemetry as a Critical Tool in the Study of Complex Skill Learning. PLoS ONE 8, https://doi.org/10.1371/journal.pone.0075129 (2013).

Lin, Z., Gehring, J., Khalidov, V. & Synnaeve, G. STARDATA: A StarCraft AI Research Dataset. Proceedings of the AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment 13, 50–56 (2021).

Smerdov, A., Zhou, B., Lukowicz, P. & Somov, A. Collection and Validation of Psychophysiological Data from Professional and Amateur Players: a Multimodal eSports Dataset https://doi.org/10.48550/ARXIV.2011.00958 (2020).

Braun, P. et al. Game Data Mining: Clustering and Visualization of Online Game Data in Cyber-Physical Worlds. Procedia Computer Science 112, 2259–2268, https://doi.org/10.1016/j.procs.2017.08.141 (2017).

Glass, J. & McGregor, C. Towards Player Health Analytics in Overwatch. In 2020 IEEE 8th International Conference on Serious Games and Applications for Health (SeGAH), 1–5, https://doi.org/10.1109/SeGAH49190.2020.9201733 (2020).

Blom, P. M., Bakkes, S. & Spronck, P. Towards Multi-modal Stress Response Modelling in Competitive League of Legends. In 2019 IEEE Conference on Games (CoG), 1–4, https://doi.org/10.1109/CIG.2019.8848004 (2019).

Ani, R., Harikumar, V., Devan, A. K. & Deepa, O. Victory prediction in League of Legends using Feature Selection and Ensemble methods. In 2019 International Conference on Intelligent Computing and Control Systems (ICCS), 74–77, https://doi.org/10.1109/ICCS45141.2019.9065758 (2019).

Aung, M. et al. Predicting skill learning outcomes in a large, longitudinal MOBA dataset. In Proceedings of the IEEE Computational Intelligence in Games, https://doi.org/10.1109/CIG.2018.8490431 (IEEE, 2018).

Maymin, P. Z. Smart kills and worthless deaths: eSports analytics for League of Legends. Journal of Quantitative Analysis in Sports 17, 11–27, https://doi.org/10.1515/jqas-2019-0096 (2021).

Lee, H., Hwang, D., Kim, H., Lee, B. & Choo, J. DraftRec: Personalized Draft Recommendation for Winning in Multi-Player Online Battle Arena Games. In Proceedings of the ACM Web Conference 2022, WWW ’22, 3428–3439, https://doi.org/10.1145/3485447.3512278 (Association for Computing Machinery, New York, NY, USA, 2022).

Gourdeau, D. & Archambault, L. Discriminative neural network for hero selection in professional Heroes of the Storm and DOTA 2. IEEE Transactions on Games 1–1, https://doi.org/10.1109/TG.2020.2972463 (2020).

Hodge, V. et al. Win Prediction in Esports: Mixed-Rank Match Prediction in Multi-player Online Battle Arena Games https://doi.org/10.48550/ARXIV.1711.06498 (2017).

Hodge, V. et al. Win Prediction in Multi-Player Esports: Live Professional Match Prediction. IEEE Transactions on Games 1–1, https://doi.org/10.1109/TG.2019.2948469 (2019).

Cavadenti, O., Codocedo, V., Boulicaut, J.-F. & Kaytoue, M. What Did I Do Wrong in My MOBA Game? Mining Patterns Discriminating Deviant Behaviours. In 2016 IEEE International Conference on Data Science and Advanced Analytics (DSAA), 662–671, https://doi.org/10.1109/DSAA.2016.75 (2016).

Pedrassoli Chitayat, A. et al. WARDS: Modelling the Worth of Vision in MOBA’s. In Arai, K., Kapoor, S. & Bhatia, R. (eds.) Intelligent Computing, 63–81, https://doi.org/10.1007/978-3-030-52246-9_5 (Springer International Publishing, Cham, 2020).

Sánchez-Ruiz, A. A. & Miranda, M. A machine learning approach to predict the winner in StarCraft based on influence maps. Entertainment Computing 19, 29–41, https://doi.org/10.1016/j.entcom.2016.11.005 (2017).

Stanescu, M., Barriga, N. & Buro, M. Using Lanchester Attrition Laws for Combat Prediction in StarCraft. Proceedings of the AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment 11, 86–92, https://doi.org/10.1609/aiide.v11i1.12780 (2021).

Norouzzadeh Ravari, Y., Bakkes, S. & Spronck, P. StarCraft Winner Prediction. Proceedings of the AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment 12, 2–8, https://doi.org/10.1609/aiide.v12i2.12887 (2021).

Helmke, I., Kreymer, D. & Wiegand, K. Approximation Models of Combat in StarCraft 2 https://doi.org/10.48550/ARXIV.1403.1521 (2014).

Lee, D., Kim, M.-J. & Ahn, C. W. Predicting combat outcomes and optimizing armies in StarCraft II by deep learning. Expert Systems with Applications 185, 115592, https://doi.org/10.1016/j.eswa.2021.115592 (2021).

Lee, C. M. & Ahn, C. W. Feature Extraction for StarCraft II League Prediction. Electronics 10, https://doi.org/10.3390/electronics10080909 (2021).

Cavadenti, O., Codocedo, V., Boulicaut, J.-F. & Kaytoue, M. When cyberathletes conceal their game: Clustering confusion matrices to identify avatar aliases. In 2015 IEEE International Conference on Data Science and Advanced Analytics (DSAA), 1–10, https://doi.org/10.1109/DSAA.2015.7344824 (2015).

Volz, V., Preuss, M. & Bonde, M. K. Towards Embodied StarCraft II Winner Prediction. In Cazenave, T., Saffidine, A. & Sturtevant, N. (eds.) Computer Games, 3–22, https://doi.org/10.1007/978-3-030-24337-1_1 (Springer International Publishing, Cham, 2019).

Mathonat, R., Boulicaut, J.-F. & Kaytoue, M. A Behavioral Pattern Mining Approach to Model Player Skills in Rocket League. In 2020 IEEE Conference on Games (CoG), 267–274, https://doi.org/10.1109/CoG47356.2020.9231739 (2020).

Khromov, N. et al. Esports Athletes and Players: A Comparative Study. IEEE Pervasive Computing 18, 31–39, https://doi.org/10.1109/MPRV.2019.2926247 (2019).

Koposov, D. et al. Analysis of the Reaction Time of eSports Players through the Gaze Tracking and Personality Trait. In 2020 IEEE 29th International Symposium on Industrial Electronics (ISIE), 1560–1565, https://doi.org/10.1109/ISIE45063.2020.9152422 (2020).

Smerdov, A., Burnaev, E. & Somov, A. eSports Pro-Players Behavior During the Game Events: Statistical Analysis of Data Obtained Using the Smart Chair. In 2019 IEEE SmartWorld, Ubiquitous Intelligence & Computing, Advanced & Trusted Computing, Scalable Computing & Communications, Cloud & Big Data Computing, Internet of People and Smart City Innovation (SmartWorld/SCALCOM/UIC/ATC/CBDCom/IOP/SCI), 1768–1775, https://doi.org/10.1109/SmartWorld-UIC-ATC-SCALCOM-IOP-SCI.2019.00314 (2019).

Xenopoulos, P., Freeman, W. R. & Silva, C. Analyzing the Differences between Professional and Amateur Esports through Win Probability. In Proceedings of the ACM Web Conference 2022, WWW ’22, 3418–3427, https://doi.org/10.1145/3485447.3512277 (Association for Computing Machinery, New York, NY, USA, 2022).

Jonnalagadda, A., Frosio, I., Schneider, S., McGuire, M. & Kim, J. Robust Vision-Based Cheat Detection in Competitive Gaming. The Proceedings of the ACM in Computer Graphics and Interactive Techniques 4, https://doi.org/10.1145/3451259 (2021).

Galli, L., Loiacono, D., Cardamone, L. & Lanzi, P. L. A cheating detection framework for Unreal Tournament III: A machine learning approach. In 2011 IEEE Conference on Computational Intelligence and Games (CIG'11), 266–272, https://doi.org/10.1109/CIG.2011.6032016 (2011).

Wang, X. et al. SCC: an efficient deep reinforcement learning agent mastering the game of StarCraft II. CoRR abs/2012.13169, https://arxiv.org/abs/2012.13169 (2020).

Bednárek, D., Krulis, M., Yaghob, J. & Zavoral, F. Data Preprocessing of eSport Game Records - Counter-Strike: Global Offensive. 269–276, https://doi.org/10.5220/0006475002690276 (2017).

Feitosa, V. R. M., Maia, J. G. R., Moreira, L. O. & Gomes, G. A. M. GameVis: Game Data Visualization for the Web. In 2015 14th Brazilian Symposium on Computer Games and Digital Entertainment (SBGames), 70–79, https://doi.org/10.1109/SBGames.2015.21 (2015).

Afonso, A. P., Carmo, M. B. & Moucho, T. Comparison of Visualization Tools for Matches Analysis of a MOBA Game. In 2019 23rd International Conference Information Visualisation (IV), 118–126, https://doi.org/10.1109/IV.2019.00029 (2019).

Stepanov, A. et al. Sensors and Game Synchronization for Data Analysis in eSports. 2019 IEEE 17th International Conference on Industrial Informatics (INDIN) 1, 933–938, https://doi.org/10.1109/INDIN41052.2019.8972249 (2019).

Korotin, A. et al. Towards Understanding of eSports Athletes’ Potentialities: The Sensing System for Data Collection and Analysis. In 2019 IEEE SmartWorld, Ubiquitous Intelligence & Computing, Advanced & Trusted Computing, Scalable Computing & Communications, Cloud & Big Data Computing, Internet of People and Smart City Innovation (SmartWorld/SCALCOM/UIC/ATC/CBDCom/IOP/SCI), 1804–1810, https://doi.org/10.1109/SmartWorld-UIC-ATC-SCALCOM-IOP-SCI.2019.00319 (2019).

Melentev, N. et al. eSports Players Professional Level and Tiredness Prediction using EEG and Machine Learning. In 2020 IEEE SENSORS, 1–4, https://doi.org/10.1109/SENSORS47125.2020.9278704 (2020).

Smerdov, A., Somov, A., Burnaev, E., Zhou, B. & Lukowicz, P. Detecting Video Game Player Burnout With the Use of Sensor Data and Machine Learning. IEEE Internet of Things Journal 8, 16680–16691, https://doi.org/10.1109/JIOT.2021.3074740 (2021).

Pedregosa, F. et al. Scikit-learn: Machine Learning in Python. Journal of Machine Learning Research 12, 2825–2830 (2011).

Buitinck, L. et al. API design for machine learning software: experiences from the scikit-learn project. In ECML PKDD Workshop: Languages for Data Mining and Machine Learning, 108–122 (2013).

Chen, T. & Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’16, 785–794, https://doi.org/10.1145/2939672.2939785 (Association for Computing Machinery, New York, NY, USA, 2016).

Paszke, A. et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Wallach, H. et al. (eds.) Advances in Neural Information Processing Systems, vol. 32 (Curran Associates, Inc., 2019).

Falcon, W. & The PyTorch Lightning team. PyTorch Lightning. Zenodo https://doi.org/10.5281/zenodo.3828935 (2019).

Acknowledgements

We would like to acknowledge contributions from members of the technical and research community, with special thanks to Timo Ewalds (DeepMind, Google), Anthony Martin (Sc2ReplayStats), Mateusz “Gerald” Budziak, and András Belicza for answering our technical questions. We also thank the StarCraft II esports community for sharing their experiences, playing together, and discussing key aspects of gameplay in various esports, especially Mikołaj “Elazer” Ogonowski, Konrad “Angry” Pieszak, Igor “Indy” Kaczmarek, Adam “Ziomek” Skorynko, Jakub “Trifax” Kosior, Michał “PAPI” Królikowski, and Damian “Damrud” Rudnicki. Open Access fees for this study were jointly financed by the Faculty of Electronics and Information Technology at Warsaw University of Technology and by the Polish Ministry of Education and Science in 2023–2024 under University Research Project No. 3 at Józef Piłsudski University of Physical Education in Warsaw, “Postural assessment and accelerometric characterisation of movement technique in selected sports disciplines”.

Author information

Contributions

Conceptualization: A.B.; methodology: A.B., N.J., P.D., P.B., L.K.; formal analysis: A.B., N.J., P.D.; investigation: A.B., N.J., P.D., P.B., R.B.; writing (original draft): A.B.; writing (review and editing): A.B., P.D., A.S., P.B., R.B., J.G.; data curation: A.B., A.S.; technical oversight: P.B.; software: A.B., L.K.; technical documentation: A.B., A.S.; supervision: A.B., J.G.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Białecki, A., Jakubowska, N., Dobrowolski, P. et al. SC2EGSet: StarCraft II Esport Replay and Game-state Dataset. Sci Data 10, 600 (2023). https://doi.org/10.1038/s41597-023-02510-7

DOI: https://doi.org/10.1038/s41597-023-02510-7