Abstract

Handwritten signatures in biometric authentication leverage unique individual characteristics for identification, offering high specificity through dynamic and static properties. However, this modality faces significant challenges from sophisticated forgery attempts, underscoring the need for enhanced security measures in common applications. To address forgery in signature-based biometric systems, integrating a forgery-resistant modality, namely, noninvasive electroencephalography (EEG), which captures unique brain activity patterns, can significantly enhance system robustness by leveraging multimodality’s strengths. By combining EEG, a physiological modality, with handwritten signatures, a behavioral modality, our approach capitalizes on the strengths of both, significantly fortifying the robustness of biometric systems through this multimodal integration. In addition, EEG’s resistance to replication offers a high-security level, making it a robust addition to user identification and verification. This study presents a new multimodal SignEEG v1.0 dataset based on EEG and hand-drawn signatures from 70 subjects. EEG signals and hand-drawn signatures have been collected with Emotiv Insight and Wacom One sensors, respectively. The multimodal data consists of three paradigms based on mental, & motor imagery, and physical execution: i) thinking of the signature’s image, (ii) drawing the signature mentally, and (iii) drawing a signature physically. Extensive experiments have been conducted to establish a baseline with machine learning classifiers. The results demonstrate that multimodality in biometric systems significantly enhances robustness, achieving high reliability even with limited sample sizes. We release the raw, pre-processed data and easy-to-follow implementation details.

Similar content being viewed by others

Background & Summary

In biometrics, user identification refers to establishing unique credentials or attributes within a system, while user verification confirms the claimed identity through interaction with the system. In terms of biometrics, user identification involves establishing the identity of an individual from a larger dataset, while user verification focuses on confirming the claimed identity of an individual through a one-to-one match. Various physical traits, such as retina, iris, sclera, ear, hand, palm geometry, fingerprints, and face, along with behavioral characteristics like keystroke pattern, voice, signature, and gait, have been utilized in the design of biometric systems1.

Signature-based biometric systems2,3 are preferred due to their convenience, non-invasiveness, cost-effectiveness, wide adoption, cancelable nature, and legal recognition. Biometric authentication4 via handwritten signatures leverages the distinct characteristics inherent in an individual’s signature, offering a unique method for identification and verification, particularly in commercial contexts like bank check processing and legal agreements. The intricate process and dynamic motion patterns of each handwritten signature confer a high level of individual specificity, rendering it an effective biometric modality. However, forgery attempts pose a substantial challenge in signature-based biometrics. These forgeries are sophisticated efforts to replicate an individual’s unique signature style, encompassing its dynamic and static characteristics.

To mitigate forgery risks, a viable strategy involves incorporating an additional, user-specific data modality that is inherently resistant to forgery. Simultaneously, this approach would capitalize on the strengths of multimodality, thereby enhancing the overall robustness of the biometric system. Incorporating EEG as a physiological trait and handwritten signatures as a behavioral trait, our approach effectively utilizes the distinct yet complementary aspects of the same individual. This multimodal integration substantially strengthens the robustness of biometric systems, emphasizing the synergy between physiological and behavioral modalities.

EEG-based biometric systems provide a high level of security against forgery due to the inherent difficulty of replicating an individual’s unique brain activity patterns5. Unlike signatures or physical biometrics, EEG signals are challenging to forge, making them a reliable and robust method for identity verification.

EEG-based biometric systems6 use electroencephalogram (EEG) signals for the automatic recognition of people’s identities. These systems have been successfully used in various states, such as resting, visual stimuli, mental tasks, and emotional stimuli. The extraction of features from EEG signals is a crucial processing step, and a higher quality of identity information is necessary to improve the distinctiveness between subjects in EEG-based biometric systems. EEG-based biometric systems6 have several advantages over other neuroimaging techniques. They use relatively simple and inexpensive equipment for signal acquisition and can be used in most environments. The feature extraction and classification techniques of these systems have demonstrated high accuracy rates, even as the number of channels is reduced, and the signal acquisition process becomes less complicated. Given the susceptibility of signature-based biometric systems to sophisticated forgery, integrating EEG capturing unique brainwave patterns impervious to replication would make the biometric system more robust against such fraudulent attempts. There are a few research works focused on integrating the EEG with online7 and offline8 signatures with confidential data. In addition to the lack of data availability, they investigate EEG in limited settings.

This study integrates brain signals (EEG) and hand-drawn signatures within a multimodal biometric framework to develop SignEEG v1.0 dataset, considering multiple EEG paradigms with different tasks while focusing on two primary objectives that have been defined, namely, user identification and verification.

The existing research7,8 on integrating hand-drawn signatures and EEG signals is scarce and constrained to a single paradigm. Furthermore, the unavailability of publicly accessible data from these studies hinders researchers from further investigations. These EEG and signature-based multimodal works are summarized in Table 1.

SignEEG v1.0 consists of three paradigms, unlike others where only one paradigm is used. The proposed dataset gives the opportunity to develop multimodal biometric systems as it covers different paradigms and hand-drawn signatures. By incorporating these three paradigms, a multimodal approach to user authentication can be established, combining the strengths of mental imagery, motor imagery, and physical execution. This comprehensive approach enhances the security and reliability of the biometric system by capturing multiple facets of the individual’s unique signature-related brain activity and behavior. To conclude, our key contributions can be summarized as:

-

Our study establishes a novel multimodal approach for developing robust biometric systems by comprehensively encompassing an individual’s traits from both the behavioral aspect, as evidenced by handwritten signatures, and the physiological aspect, as reflected in EEG patterns.

-

We develop a multimodal biometric dataset (SignEEG v1.0) with EEG and hand-drawn signatures from 70 healthy participants collected over several months.

The rest of the paper is organized as follows. Section Methods describes the data acquisition process, including details of participants, tools used, recording setup, experimental paradigms, data acquisition protocol, and objectives, followed by data pre-processing. Sections Data records, Baseline Results and Discussion, and Conclusion discuss the physical structure of the data, results, and conclusion, respectively.

Methods

In this section, we describe in detail the data acquisition process, including devices used, participants (subjects), the experimental paradigm, recording setup, and acquisition protocol.

Participants

The dataset development involved 70 enrolled participants (anonymized). All 70 participants were healthy individuals, comprising five females and sixty-five males, aged between seventeen and thirty-six years, and without any neurological disorders or brain-related medications/surgery. None of the participants had prior experience with EEG. Participants were instructed not to consume alcohol or tobacco for two days prior to the data collection. After the data collection, the participants independently rated their satisfaction, boringness, horribleness, and calmness regarding their experience, and the average scores (out of 10) were 9.56, 1.43, 1.17, and 9.50, respectively. Written informed consent was obtained from all participants before they participated in the experiment; a sample consent form may be provided as supplementary material if required. The ethical approval was obtained from the ethical review board - REC Sonbhadra, U.P., India, with reference number:72/13/REC/SONBH/2021.

Device and software details

The Emotiv Insight headset9 is a wireless EEG device designed for research and personal use. It has five channels (AF3, AF4, T7, T8, Pz) for recording EEG signals and two references (CMS, DRL), with a sampling rate of 128 Hz and a resolution of 14 bits. It uses a built-in digital 5th order Sinc filter for antialiasing. The electrode placement according to the 10-20 international system10 is shown in Fig. 1. Colors indicate the quality of the electrical signal passing through the sensors and the reference; colors correspond to the impedance measured by Emotiv’s patented system for both the contact and the signal quality. The impedance ranges between 5-20kΩ, where green and red colored electrodes indicate the lowest and highest impedance values, respectively. The Emotiv Insight headset used Semi-dry polymer material in their sensors; a primer fluid may be used to improve the contact quality with skin9.

Emotiv Insight headset9 (left) and Electrode placement (right) according to the 10-20 international system10. The green and orange colors indicate the good and moderate (OK) quality of the signal, respectively. While red and black colors indicate bad and very bad signal quality, respectively. Colors correspond to the impedance measurements. Please refer to the technical specifications for more details9.

The headset is lightweight, comfortable, and easy to set up, making it suitable for brain-computer interfaces (BCI) research. The Emotiv Insight requires an EmotivPRO software toolkit11; it allows researchers to record, visualize, and analyze EEG data collected from the headset. The software provides a user-friendly interface for easy data analysis and visualization. Additionally, the toolkit is quite useful in the development of custom applications and experiments using the Emotiv headset.

The Wacom One Display Pen Tablet12 has a 13.3-inch Full HD display with a resolution of 1920 × 1080 pixels. It supports up to 4096 levels of pressure sensitivity and has a battery-free pen with tilt support. The tablet has a viewing angle of 170 degrees and a color gamut of 72% NTSC (National Television System Committee). It has a durable and scratch-resistant surface and comes with an adjustable stand for comfortable use. The tablet also features HDMI and USB Type-C ports for connectivity. Online signatures drawn on the tablet were captured using the Wacom SignatureScope software tool13. The software captures real-time signature data and provides trajectory information, including coordinates (x and y), timestamp, stroke, pressure, azimuth, altitude of the pen, and other related information. This information is captured at a sampling rate of 200 frames per second.

Recording setup

To ensure a controlled environment for data collection, a physical recording studio was set up, which is illustrated in Fig. 2(ii). The studio is designed with acoustic walls to minimize external noise and is maintained at a constant temperature of 20°C. The subjects are seated comfortably and provided with a monitor screen and a Wacom tablet with a stylus for online signature recording. Audio instructions are provided through a speaker to ensure consistency across all subjects. The dataset curator is responsible for monitoring and recording the EEG and online signature data, while an assistant helps the subjects with the EEG headset placement. Both the curator and assistant maintain a safe distance from the subject to avoid any interference with the data collection process. In addition, the studio is thoroughly cleaned and disinfected to ensure hygiene, and the power supply is checked to ensure a stable recording environment before the start of each recording session.

Experimental paradigms

The multimodal dataset SignEEG v1.0 consists of three paradigms based on mental & motor imagery, and physical execution: (i) thinking the signature’s image, (ii) drawing the signature mentally, and (iii) drawing the signature physically. These three paradigms are of interest to neuroscience, BCI, and the machine learning research community. During mental & motor imagery in the first two paradigms, participants have their eyes closed while performing the task. In the third paradigm, participants perform the signature on the Wacom tab with open eyes. The acquisition protocol also includes auditory instructions and a rest period before each EEG activity (with eyes closed), as depicted in Fig. 3. The three paradigms facilitate researchers in developing unimodal EEG-based biometric systems while having hand-drawn signatures extends the research toward developing multimodal biometric systems. The paradigms are discussed below (also depicted in Fig. 2(i)).

-

Closed eyes, thinking the signature’s image: This paradigm involves mental imagery14– 16, the creation of a sensory experience in the absence of external stimuli. When a person closes their eyes and thinks of the signature, they are engaging in mental imagery. This is the simplest of the three paradigms, as it requires minimal motor activity and cognitive effort as compared to others. The main focus is on generating a visual image in the mind’s eye.

-

Closed eyes, drawing the signature mentally: This paradigm also involves mental imagery14,15; however, it requires more complex cognitive processes such as spatial reasoning and motor planning. The person needs to create a mental representation of the signature and then simulate the motor movements needed to draw it. This can activate additional brain regions involved in motor planning and execution. This paradigm may also be seen as a motor imagery task from the conventional definition of motor imagery16. The detailed discussion can be found in16,17.

-

Open eyes, drawing a signature physically: This paradigm involves actual motor execution18,19 and visual perception20. The person needs to visually perceive the signature and then use motor planning and execution to draw it. This paradigm can activate additional brain regions involved in sensorimotor integration, feedback control, and visual perception. This paradigm requires coordination between the motor and visual systems and engages higher-level cognitive processes such as planning, attention, and working memory.

Different regions of the brain may activate differently in different paradigms; therefore, these paradigms can be analyzed individually or combined.

Acquisition Protocol

The Emotiv Insight headset9 and Wacom One tab12 have been used to collect the multimodal SignEEG v1.0 dataset. Wacom tablets (Wacom One and earlier versions) have been used often in handwriting and signature verification research. However, Emotiv Insight is a relatively new headset for BCI research. Researchers have used Emotiv Insight and developed many applications. The study21 explored emotion recognition using EEG signals, focusing on the identification of happy and sad states through the analysis of brain signal features and power spectral density derived from two EEG channels. The research utilized data from 26 subjects, applying preprocessing and Empirical Mode Decomposition, followed by classification via an artificial neural network with an accuracy of 88.5%. Similarly, Olivares-Figueroa et al.22 proposed a BCI method to monitor emotional states in drone pilots, using EEG data and a database classifying ‘Quiet’ and ‘Very Tense’ states with an algorithm for real-time artifact removal and feature extraction for classification through K-Nearest Neighbors (KNN) and Support Vector Machine (SVM), achieving notable accuracy in emotional state differentiation. Zabcikova23 evaluated the Emotiv Insight headset for measuring brain responses to visual and auditory stimuli, utilizing the Emotiv Xavier ControlPanel software. Results indicated significant variations in engagement, stress, and excitement levels among participants responding to different stimuli. The findings indicated that the Emotiv Insight device is well-suited for entertainment applications.

The acquisition protocol is designed based on mental & motor imagery and physical execution of the activity performed by the subjects. This would allow researchers to develop EEG-based unimodal systems and multimodal systems with signatures included. An acquisition protocol has been established to facilitate the SignEEG v1.0 multimodal dataset collection. The protocol employed for recording both EEG and online signature data is illustrated in Fig. 3. Initially, subjects sit in a calm/resting state; the subject’s head is fitted with the EEG headset, and electrodes are placed on the scalp according to the 10-20 system10. After the channel and EEG signal quality indicators reach a stable state (indicated by the green color in the Emotiv Pro software), the EEG data acquisition is initiated following the procedure outlined in Fig. 3.

During the EEG data collection, except for the period when the subjects are providing their physical signatures, their eyes are closed. The subjects are instructed via audio commands throughout the data collection process, with the audio instructions playing simultaneously with the EEG recording. At the start of the data collection, the audio instructs the subjects to ‘Welcome to the Brain Research. Please close your eyes, sit in the Rest position Now’. Following this audio instruction, a marker with a value of 4 is inserted into the EEG recording, signifying the beginning of the rest period. During this rest period, which lasts for 10 seconds, the subjects are instructed to maintain a resting position with their eyes closed.

Subsequently, the audio command instructs the subjects to ‘Please visualize the signature Image Now,’ and a second marker is inserted into the EEG recording. During the following 10 seconds, the subjects are instructed to visualize the image of their signature in their minds’ eye, and if the image disappears, they are to recall it in their minds again. After the 10 seconds have elapsed, the audio instruction advises the subjects to ‘Please relax, keep your eyes closed Now.’ Following this instruction, a third marker is inserted into the EEG recording, and the subjects are instructed to remain in a resting position for an additional 10 seconds.

Subsequently, the audio instruction directs the subjects to ‘Please visualize the signature Trajectory Now.’ For the signature trajectory task, the subjects are instructed beforehand to simulate the act of signing on paper in their minds. They are required to envision the process of producing the signature from start to finish as if they were signing on a physical sheet of paper. Following this instruction, a fourth marker is inserted into the EEG recording, and the subjects simulate the signature as many times as they can within the next 10 seconds. After the 10 seconds have passed, the audio command advises the subjects to ‘Please relax, keep your eyes closed Now.’ Following this instruction, a fifth marker is inserted into the EEG recording, and the subjects are required to remain in a resting position for a further 10 seconds.

Following the resting period, the audio instruction prompts the subjects to ‘Please open your eyes, take the Wacom pen in your hand, do the signature Now.’ After the audio instruction, the subjects opened their eyes and picked up the Wacom stylus in their hand. Once they are ready to perform the signature on the Wacom tablet, a sixth marker is inserted into the EEG recording. The subjects proceed to sign on the Wacom tablet, and the final marker (7th time) is inserted into the EEG recording, indicating that the signature process has been completed. At this point, the dataset curator saves both the EEG and online signature data into permanent storage.

All markers inserted into the EEG recordings, except for the last one (7th marker), indicate the beginning of the EEG activities, whether it is the resting period or related to the signature tasks. It is noteworthy that all markers in the EEG recordings are represented by the digit 4. Additionally, in an EEG recording, each of the EEG activities, except for the one corresponding to the physical signature task, has a duration of 10 seconds. The final EEG activity associated with the physical signature has a duration equivalent to the time spent performing the signature on the Wacom tablet.

Objectives and Tasks

The complete data acquisition process intends to explore two major objectives: user identification and verification. User Identification involves establishing the identity of an individual from a larger dataset. To identify who the subject is from the pool, given the input data. User Verification focuses on confirming the claimed identity of an individual through a one-to-one match. Given input data, it aims to verify if the subject is Genuine or Forged. The following tasks are designed to evaluate the above-mentioned objectives for different experimental paradigms :

-

Task 1: This task aims to develop a biometric system based on paradigm one that corresponds to the EEG signals of mental imagery of the participant’s signatures.

-

Task 2: This task aims to develop a biometric system based on paradigm two that corresponds to the EEG signals of motor imagery of the participant’s signatures.

-

Task 3: This task aims to develop a biometric system based on paradigm three that corresponds to the EEG signals of the physical execution of the signatures.

-

Task 4: This task aims to develop a biometric system combining paradigm three and the Wacom signatures.

These tasks encourage the researchers to develop unimodal and multimodal biometric systems with EEG signals and Wacom signatures.

Data pre-processing

The pre-processing of the EEG data was done with EEGLAB24 (a MATLAB-based toolbox) following the EEG data pre-processing pipeline as depicted in Fig. 4. Epoching refers to segmenting the continuous EEG data into smaller segments for further analysis. The EEG data obtained using the acquisition protocol underwent further processing before being subjected to signal processing. For each subject, there were instances of genuine and forgery attempts (approximately 30 in total) recorded. EEG activities corresponding to rest and signatures were extracted by identifying inserted markers (marker value = 4). Each of the EEG activities related to the first six markers had a duration of 10 seconds, equivalent to 1280 frames starting from the marker’s position, given that the EEG headset had a sampling rate of 128 frames per second. The duration of the last EEG activity varied depending on when the subject completed the signature on the Wacom tablet.

Data Records

The data are available in Zenodo25. In total, 2’047 (×3, edf, csv, mat) instances have been recorded and pre-processed. Each subject is given a unique number (e.g., 200894). The directory structure ‘200894\ Genuine’ and ‘200894\ Forged’ consists of instances of genuine and forgery attempts for the subject 200894, respectively. The file naming convention is as follows.

EDF: SubjectID_{‘Genuine\ Forged’}_SignerID_d.edf. This is a recorded EEG datum in standard EDF format. The digit d represents the instance number. A signer (SignerID) performs the EEG and signatures for the subject (SubjectID). SubjectID and SignerID are the same for a Genuine attempt, and they differ for a forgery (Forged) attempt. The EDF file contains the EEG signals corresponding to the channels in the Emotiv Insight headset, along with other EEG-related information.

CSV: SubjectID_ ‘Genuine\ Forged’}_SignerID_d.csv. This is a recorded Wacom signature datum in standard CSV (comma-separated value) format. It follows the same naming convention as EDF except for the extension. It consists of the pen data (Index, Stroke, Btn, coordinate X, coordinate Y, Timestamp (T), Pressure, Azimuth, Altitude) per frame.

MAT: SubjectID_{‘Genuine\Forged’}_SignerID_d_epochLength_diff.mat. This is a MATLAB structure array (unordered struct, with MATLAB variable name subject) consisting of data related to EEG and Wacom signature after pre-processing. It follows the same naming convention as EDF; however, it has additional information in the filename. epochLength is the epoch length, and diff is an averaged absolute difference of EEG signals before and after applying ICA. The fields of the MATLAB structure and their description are given in Table 2.

Out of 70, the data of 5 subjects is kept aside for robustness analysis in anticipated future research.

Technical Validation

It is recommended that the epoch length be consistent for a specific type of EEG activity. Therefore, the duration of the last EEG activity, which corresponds to physical signatures, is constant in all instances (genuine and forged) for a given subject. The instances corresponding to the last EEG activity for each subject were interpolated26 to match the length of the longest genuine instance for that subject. This means that all instances (genuine and forged) for a particular subject have the same length (epoch length = 5 × 10 × 128 +k), where k is the duration of the last EEG activity. The value of k varies among subjects. Once the EEG data has been segmented using epoching, a bandpass filter is applied to the data, with a frequency range of 0.1-60 Hz, followed by the baseline removal. The purpose of baseline removal is to eliminate non-neural activity in the EEG signal by subtracting the mean of the signal from its data points. This process results in a more refined signal that reflects the neural activity under investigation. Following epoching and filtering, Independent Component Analysis (ICA) is utilized to remove artifacts resulting from various sources such as eye movements, muscle activities, and environmental noise.

Toa et al.27 and Heunis28 have also used ICA on Emotiv Insight data to remove the noise from the EEG signals. By applying ICA, the components associated with the artifacts, as well as the brain activity, are identified. The components with a substantial amount of artifacts are eliminated, while the components linked with the brain activity are kept to produce the final pre-processed signals. The brain maps (corresponding to components (IC) 2, 3, and 4) for two subjects are depicted in Fig. 5. It can be noticed from the figure that substantial brain data is preserved while the artifacts are substantially eliminated. A signal before (black) and after (red) the application of ICA is shown in Fig. 6, indicating that artifacts are substantially reduced. Besides traditional ICA-based methods, deep learning-based approaches29,30 can also be used for artifact removal from EEG signals.

Baseline results and discussion

Models and features

Table 3 summarizes the performance of several models on two independent objectives, namely identification and verification.

For verification, Table 3 represents the combined accuracy across all the subjects, i.e., combining all the true positives, true negatives, and so on. The models include Gaussian Naive Bayes (NB), K-Nearest Neighbors (KNN), Linear Support Vector Machine (SVM), Random Forest, Gradient Boost, and XGBoost. These models were evaluated using different sets of features derived from electroencephalogram (EEG) data (statistical and wavelet)31, Wacom tablet data32, or a combination of both. Feature extraction is a process in which relevant information is extracted from raw data to represent it in a more compact and meaningful form. Feature extraction refers to the process of identifying and quantifying distinctive patterns or characteristics that are informative for both identification and verification objectives.

The feature extraction process from EEG signals involves normalizing (Z-score normalization) the data channel-wise to ensure consistency. Several statistical features31 are derived from the normalized data, including the sum of values, energy, standard deviation, root mean square (RMS), skewness, and kurtosis. These features capture the overall activity, power, variability, magnitude, asymmetry, and peakedness of the signals. Wavelets have also been used to extract wavelet-based features31,33 from EEG signals. In this work, the DB4 wavelet is used for the wavelet decomposition of the EEG signals. The resulting wavelet coefficients are normalized, and the same statistical features are extracted from these coefficients. This approach captures both time-domain and frequency-domain characteristics, providing a comprehensive set of features that enhance the analysis and classification of EEG data.

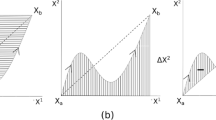

The feature extraction process from Wacom signature data involves calculating various statistical and dynamic measures32,34 to comprehensively capture the signature’s characteristics. Firstly, the raw data, including X and Y coordinates, pressure, azimuth, and altitude, is normalized (Z-score normalization) to standardize the measurements across different sessions. From the normalized data, the first derivatives, representing speed, and the second derivatives, representing acceleration, are calculated for the X and Y coordinates. This allows for the assessment of the movement dynamics. Several statistical features are then extracted from these measures. Specifically, for each of the five parameters-speed, acceleration, pressure, azimuth, and altitude-the mean, standard deviation, and maximum value are computed. The mean provides an average value, reflecting the general level of the measure. The standard deviation indicates the variability or dispersion, showing how much the values fluctuate. The maximum value captures the peak level attained, indicating the most extreme observed value. Additionally, specific features such as the maximum speed and maximum acceleration are explicitly calculated to highlight the highest rates of change in position and velocity. Experiments on EEG and Wacom data have been conducted in different feature settings, as stated in the Table 3 header.

Results and discussion

The data has been split into train (60%), validation (20%), and test (20%) sets for the experiments. For the statistical features derived from EEG signals in time domain, the Gaussian (NB), (KNN), Linear SVM, and random forest models exhibit similar performance with accuracy in the low 90% range. Gradient boost and XGBoost also perform well, with accuracies of 92.88% and 93.93%, respectively. Among these, XGBoost performs the best. While on the wavelet features, the performance of KNN is unsatisfactory. Of all the rest of the models, gradient boost and XGBoost perform relatively better, with an accuracy of around 90%. Compared to EEG signal-based features, the model performs less optimally for features from the Wacom signal. Random forest gives the highest accuracy at 55.25%. The combination of statistical and Wacom features drastically improves the performance of all models. XGBoost shows the highest accuracy at 98.68%, followed closely by Random Forest at 97.63%. All other models also exhibit significant improvement in their accuracies. Similar improvements in performance can be observed when wavelet and Wacom features are taken into account. However, KNN does not seem to benefit significantly from this combination, maintaining a low accuracy of 51.98%. The combination of EEG statistical, EEG wavelet, and Wacom features gives most models the highest accuracy, with random forest delivering the best results (98.94%). The only exception is KNN, which only achieves 59.10% accuracy.

In general, it has been noted that the accuracy of KNN is suboptimal compared to alternative models. This may be attributed to the selection of the parameter K, which denotes the number of neighbors to be taken into account during the prediction process. In order to ensure fair evaluation, a consistent value of K (i.e., 5) has been utilized across all features. However, this may prove inadequate for wavelet and Wacom features, resulting in a decline in performance. On the other hand, XGBoost and random forest excel for most of the experiments, whether statistical or wavelet features. XGBoost offers several advantages that may explain its superior performance in handling various types of features, such as in-built L1 and L2 regularization and cross-validation, tree pruning, capability to handle missing as well as sparse data, and many more. The random forest ensemble learning method shows a strong performance due to its ability to mitigate overfitting through the aggregation of results from multiple decision trees. The pattern in the performance across different models and features seen during identification also appears during verification. Random forest and gradient boost both achieve the highest accuracy when using statistical, wavelet, and Wacom features combined, with accuracies of 94.89% and 93.40%, respectively.

This paper focuses on multimodal biometrics with noninvasive EEG-based brain signals and hand-drawn signatures. However, the medical community has used invasive methods for brain-to-text communication. In35, authors used an intracortical Brain-Computer Interface (BCI) that decodes attempted handwriting movements from neural activity in the motor cortex and translates it to text in real-time, using a recurrent neural network decoding approach. They have achieved typing speeds of 90 characters per minute with 94.1% accuracy for individuals whose hand was paralyzed from spinal cord injury.

Besides, noninvasive methods with EEG have also been developed in robotic research. In36, authors developed a noninvasive approach using EEG to control a robotic device, which greatly enhances learning and control in realistic tasks, namely Discrete Trial (DT) and Continuous Pursuit (CP). A repeated-measures two-way ANOVA test has been performed (with 22 subjects) to analyze the significance of the performances of these tasks, where CP-based learning was found to be more effective than DT.

Limitation

In this study, we propose the SignEEG v1.0 dataset combining behavioral (handwritten signatures) with physiological (EEG) data to develop robust biometric identification and verification systems. Alongside traditional unimodal tasks, the SignEEG v1.0 dataset uniquely includes a task dedicated to the development of a multimodal biometric system. The results from our extensive empirical evaluation conclusively demonstrate that the integration of signatures and EEG in a multimodal framework significantly enhances system robustness, effectively utilizing the combined strengths of both modalities. Our study reveals that the combination of behavioral biometrics, exemplified by handwritten signatures, with physiological metrics, represented by EEG, confers a significant advantage to our multimodal approach. The interplay between these two distinct modalities not only complements but also reinforces each other, underscoring the efficacy of this integrated system.

The longitudinal temporal dynamics of EEG are yet to be explored. Therefore, in the future, we will intensively explore the temporal dynamics of EEG and handwritten signatures in a multimodal context through prolonged, longitudinal data collection from the same subjects. Emphasizing the temporal aspect, this approach aims to reveal how these biometric modalities evolve and interact over time, offering critical insights for advancing multimodal biometric system accuracy and reliability.

Code availability

The data and source code used in this study are distributed under the Creative Commons Attribution 4.0 International (CC BY 4.0) License (https://creativecommons.org/licenses/by/4.0/) and are available at Zenodo25.

References

Jain, A., Hong, L. & Pankanti, S. Biometric identification. Communications of the ACM 43, 90–98 (2000).

Tolosana, R. et al. Icdar 2021 competition on on-line signature verification. In Document Analysis and Recognition–ICDAR 2021: 16th International Conference, Lausanne, Switzerland, September 5–10, 2021, Proceedings, Part IV 16, 723–737 (Springer, 2021).

Tolosana, R. et al. Svc-ongoing: Signature verification competition. Pattern Recognition 127, 108609 (2022).

Dutta, S., Saini, R., Kumar, P. & Roy, P. P. An efficient approach for recognition and verification of on-line signatures using pso. In 2017 4th IAPR Asian Conference on Pattern Recognition (ACPR), 882–887 (IEEE, 2017).

Palaniappan, R. Two-stage biometric authentication method using thought activity brain waves. International journal of neural systems 18, 59–66 (2008).

Rodrigues, J. D. C., Filho, P. P. R., Damaeviius, R. & Albuquerque, V. H. C. EEG-based biometric systems. In Neurotechnology: Methods, advances and applications (2020).

Kumar, P., Saini, R., Kaur, B., Roy, P. P. & Scheme, E. Fusion of neuro-signals and dynamic signatures for person authentication. Sensors 19, 4641 (2019).

Saini, R. et al. Don’t just sign use brain too: A novel multimodal approach for user identification and verification. Information Sciences 430, 163–178 (2018).

Inc, E. Insight User Manual — emotiv.gitbook.io. https://emotiv.gitbook.io/insight-manual/ [Accessed 06-Jun-2023] (2020).

Sharbrough, F. et al. American electroencephalographic society guidelines for standard electrode position nomenclature. Clinical Neurophysiology 8, 200–202 (1991).

Inc, E. Insight User Manual — emotiv.gitbook.io. https://emotiv.gitbook.io/emotivpro-v3/ [Accessed 06-Jun-2023] (2023).

Wacom. Wacom User Help (DTC133) — 101.wacom.com. http://101.wacom.com/UserHelp/en/TOC/DTC133.html [Accessed 06-Jun-2023] (2020).

Wacom. Signature scope - developer support. https://developer-support.wacom.com/hc/en-us/sections/9304704351895-Signature-Scope (Accessed on 06/06/2023). (2020).

Kosslyn, S. M., Thompson, W. L. & Ganis, G.The case for mental imagery. (Oxford University Press, New York, NY, US, 2006).

Ganis, G., Thompson, W. L. & Kosslyn, S. M. Brain areas underlying visual mental imagery and visual perception: an fmri study. Cognitive Brain Research 20, 226–241 (2004).

Nanay, B. Mental Imagery (Stanford Encyclopedia of Philosophy) — plato.stanford.edu. https://plato.stanford.edu/entries/mental-imagery/#MentImagVsMotoImag [Accessed 09-May-2023] (1997).

Jeannerod, M. The representing brain: Neural correlates of motor intention and imagery. Behavioral and Brain sciences 17, 187–202 (1994).

Frenkel-Toledo, S., Bentin, S., Perry, A., Liebermann, D. G. & Soroker, N. Dynamics of the eeg power in the frequency and spatial domains during observation and execution of manual movements. Brain research 1509, 43–57 (2013).

Rimbert, S., Al-Chwa, R., Zaepffel, M. & Bougrain, L. Electroencephalographic modulations during an open-or closed-eyes motor task. PeerJ 6, e4492 (2018).

Taylor, P. C. J. & Thut, G. Brain activity underlying visual perception and attention as inferred from tms–eeg: A review. Brain stimulation 5, 124–129 (2012).

Halim, N., Fuad, N., Marwan, M. & Nasir, E. Emotion state recognition using band power of eeg signals. In Proceedings of the 6th International Conference on Electrical, Control and Computer Engineering: InECCE2021, Kuantan, Pahang, Malaysia, 23rd August, 939–950 (Springer, 2022).

Olivares-Figueroa, J. D., Cruz-Vega, I., Ramírez-Cortés, J., Gómez-Gil, P. & Martínez-Carranza, J. A compact approach for emotional assessment of drone pilots using bci. In 12th international micro air vehicle conference, Puebla, México, 57–62 (2021).

Zabcikova, M. Visual and auditory stimuli response, measured by emotiv insight headset. In MATEC Web of Conferences, vol. 292, 01024 (EDP Sciences, 2019).

Delorme, A. & Makeig, S. Eeglab: an open source toolbox for analysis of single-trial eeg dynamics including independent component analysis. Journal of neuroscience methods 134, 9–21 (2004).

Mishra, A. R. et al. SignEEG v1.0 : Multimodal Electroencephalography and Signature Database for Biometric Systems. In Zenodo, https://doi.org/10.5281/zenodo.8332198 (2023).

Gribbon, K. T. & Bailey, D. G. A novel approach to real-time bilinear interpolation. In Proceedings. DELTA 2004. Second IEEE international workshop on electronic design, test and applications, 126–131 (IEEE, 2004).

Toa, C. K., Sim, K. S. & Tan, S. C. Emotiv insight with convolutional neural network: Visual attention test classification. In Advances in Computational Collective Intelligence: 13th International Conference, ICCCI 2021, Kallithea, Rhodes, Greece, September 29–October 1, 2021, Proceedings 13, 348–357 (Springer, 2021).

Heunis, C. Export and analysis of emotiv insight eeg data via eeglab. Developing Alternative Stroke Rehabilitation to Reinforce Neural Pathways and Synapses of Middle Cerebral Artery Stroke Patients 2016, 1–11 (2016).

Zhang, H. et al. Eegdenoisenet: A benchmark dataset for deep learning solutions of eeg denoising. Journal of Neural Engineering 18, 056057 (2021).

Yu, J., Li, C., Lou, K., Wei, C. & Liu, Q. Embedding decomposition for artifacts removal in eeg signals. Journal of Neural Engineering 19, 026052 (2022).

Saini, R. et al. Imagined object recognition using eeg-based neurological brain signals. In Recent Trends in Image Processing and Pattern Recognition, 305–319 (Springer International Publishing, 2022).

Hasan, M. A. M., Shin, J. & Maniruzzaman, M. Online kanji characters based writer identification using sequential forward floating selection and support vector machine. Applied Sciences 12, 10249 (2022).

Crasto, N. & Upadhyay, R. Wavelet decomposition based automatic sleep stage classification using eeg. In Bioinformatics and Biomedical Engineering: 5th International Work-Conference, IWBBIO 2017, Granada, Spain, April 26–28, 2017, Proceedings, Part I 5, 508–516 (Springer, 2017).

Khalid, M., Yusof, R. & Mokayed, H. Fusion of multi-classifiers for online signature verification using fuzzy logic inference. International Journal of Innovative Computing 7, 2709–2726 (2011).

Willett, F. R., Avansino, D. T., Hochberg, L. R., Henderson, J. M. & Shenoy, K. V. High-performance brain-to-text communication via handwriting. Nature 593, 249–254 (2021).

Edelman, B. J. et al. Noninvasive neuroimaging enhances continuous neural tracking for robotic device control. Science robotics 4, eaaw6844 (2019).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mishra, A.R., Kumar, R., Gupta, V. et al. SignEEG v1.0: Multimodal Dataset with Electroencephalography and Hand-written Signature for Biometric Systems. Sci Data 11, 718 (2024). https://doi.org/10.1038/s41597-024-03546-z

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41597-024-03546-z