Abstract

Pollution sources release contaminants into water bodies via sewage outfalls (SOs). Interpreting SOs from high-resolution images is laborious and expensive because it requires specialised knowledge and must be done by hand. Integrating unmanned aerial vehicles (UAVs) with deep learning could support an automated SOs detection tool that learns this specialised knowledge. Achieving this objective requires high-quality image datasets for model training and testing. However, no satisfactory dataset of SOs exists. This study presents a high-quality dataset named the images for sewage outfalls objective detection (iSOOD). The 10481 images in iSOOD were captured using UAVs and handheld cameras in river basins in China. The images were carefully annotated to ensure accuracy and consistency. The iSOOD has undergone technical validation using the YOLOv10 series of object detection models. Our study provides a high-quality SOs dataset for improving deep-learning models deployed with UAVs, in support of efficient and intelligent river basin management.

Background & Summary

Large volumes of sewage and wastewater are being released into natural water bodies, resulting in water scarcity and environmental issues such as eutrophication, excessive metal contamination and plastic pollution1,2,3. Sewage outfalls (SOs), found extensively along both banks of rivers, are the specific channels through which pollutants from various sources are released into water bodies4. Many managers recognize the importance of locating, blocking, and regulating SOs to protect natural water bodies5,6. High-resolution images are suitable for the analysis and interpretation of SOs. Currently, the interpretation must be performed by individuals with specialised environmental science knowledge. This over-reliance on professionals makes the work time-consuming and labour-intensive, which has impeded the widespread identification of SOs in large-scale river basins7,8.

Deep learning has advanced computer vision, enabling computers to acquire the expertise of image interpreters and become intelligent tools capable of detecting SOs in large-scale river basins9. For SOs monitoring to benefit from deep learning, a high-resolution image set of SOs that can be used for model training, validation, and testing is a necessary prerequisite10,11. A high-quality SOs image dataset is therefore important for engineers, scientists, and managers engaged in SOs identification. However, no satisfactory dataset of SOs exists.

Several previous studies can serve as references for creating image datasets for SOs objective detection, such as those by Xu et al.12 and Huang et al.10. Xu et al. used UAVs operating at low altitudes to capture high-resolution images of SOs. However, only about 600 photos were obtained, which is too few to meet the requirements of deep learning12. Huang et al. operated UAVs at a significant elevation and obtained around 7000 images of SOs10. Nevertheless, these images have several disadvantages that reduce the accuracy of identifying SOs: (i) long-distance shooting makes the SOs too small in the field of view to be identified; (ii) vertical photography makes it easy to miss sewage outlets that protrude only slightly. In recent years, China’s official administration has conducted a comprehensive survey of SOs to determine their exact spatial locations and capture images. This effort has accumulated a significant number of pictures. Nevertheless, these photos are unrefined and lack annotations, which makes them difficult to use directly for deep learning models. This study thoroughly examines these materials and establishes a standard SOs dataset. Moreover, the YOLOv10 series, one of the state-of-the-art object detection models, is used to evaluate the performance of the SOs dataset in this study13.

The main contribution of this study is the development, for the first time, of a high-quality dataset named the images for sewage outfalls objective detection (iSOOD). The construction of iSOOD is guided by the following criteria14:

(i) Diversity. Images are acquired in diverse geographical locations and lighting situations, encompassing various kinds of SOs;

(ii) Accuracy. Our research team has completed and repeatedly checked the annotation work to ensure accuracy;

(iii) Consistency. The annotation of the images follows the standard YOLOv10 format15;

(iv) Extensibility. The images match specific attribute information, such as the category.

The iSOOD dataset consists of 10481 images and 10481 records of specific attribute information. We hope to encourage researchers to build advanced deep learning models with the iSOOD dataset and to collaborate with us. Our mission is to promote the implementation of advanced detection technologies for SOs globally and thereby enhance the intelligent management capabilities of river basins.

Methods

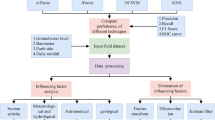

Figure 1 illustrates the essential steps involved in the development of the iSOOD dataset.

Data collection

A total of 21246 images and 24466 attribute records were acquired from original field investigations conducted in the Yangtze River and Yellow River basins in China. The field investigations used both UAVs and handheld cameras, which made it possible to identify hidden and frequently unnoticed SOs.

Image processing and attribute creation

After the original data were acquired, redundant and low-resolution images were eliminated. Annotation guidelines for the iSOOD dataset were then established16. This study used the Autodistill tool package in the Roboflow platform for the labelling work17. This tool automatically applies pre-annotations to the SOs. The preliminary annotations allow our researchers to locate the SOs in the photos efficiently and to correct unsatisfactory annotations. To guarantee the quality of iSOOD, this study implemented a multi-level quality inspection approach. Each image was reviewed by at least two independent groups of researchers. Potential labelling mistakes were further addressed through regular reviews. When confronted with ambiguous or contentious annotations, the researchers discussed them thoroughly until they reached a consensus. Finally, the first generation of the iSOOD dataset was released, consisting of 10481 high-quality images together with their corresponding attributes.

The quantities of images and attributes differ and change for the following reasons. (i) The difference between the 21246 initial image files and the 24466 original attribute records arises because certain images contain multiple SOs. This study merged the attributes to achieve a one-to-one correspondence between the SOs and the attributes. Consequently, the iSOOD dataset contains both single-SO and multiple-SO images. (ii) The reduction from the original to the final set of SOs arises because some images were excluded, namely images of objects mistaken for SOs, such as drinking water pipes, and blurred images.

Technical validation

The iSOOD dataset of 10481 images was split into a training set (80%), a test set (10%), and a validation set (10%). These sets were used to train and evaluate the YOLOv10 series models, and the technical validation is reported on the basis of the resulting performance.
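The 80/10/10 split described above can be sketched as follows. This is a minimal illustration, not the authors' script: the function name `split_dataset`, the fixed random seed, the truncating rounding rule, and the `1.jpg`-style file names are all assumptions made for the example.

```python
import random


def split_dataset(image_names, train_frac=0.8, test_frac=0.1, seed=0):
    """Randomly split image names into train, test and validation subsets."""
    names = list(image_names)
    rng = random.Random(seed)  # fixed seed is an assumption, for reproducibility
    rng.shuffle(names)
    n_train = int(len(names) * train_frac)  # truncation is an assumed rounding rule
    n_test = int(len(names) * test_frac)
    train = names[:n_train]
    test = names[n_train:n_train + n_test]
    val = names[n_train + n_test:]  # the remainder goes to validation
    return train, test, val


# Hypothetical file names following the sequential numbering of the dataset.
names = [f"{i}.jpg" for i in range(1, 10482)]
train, test, val = split_dataset(names)
print(len(train), len(test), len(val))
```

Because the subsets are slices of one shuffled list, they are disjoint and together cover every image exactly once.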

Data Records

The iSOOD is freely shared via the Zenodo platform18. The dataset consists of images with annotation files in YOLO format, accompanied by attribute information in Excel format. Each row of the attribute file represents a single record of a sewage outfall at a specific location. The columns are as follows:

1. Image_name: Corresponds to the image file name in the dataset (sequentially numbered starting with 1, such as 1.jpg, 2.jpg).

2. Outfall_code: Every outfall has a unique code.

3. basin: River basin affiliation (1 = Yangtze River, 2 = Yellow River).

4. typ: Type of sewage outfall (1 = Combined sewer, 2 = Rainwater, 3 = Industrial wastewater, 4 = Agricultural drainage, 5 = Livestock breeding, 6 = Aquaculture, 7 = Surface runoff, 8 = Wastewater treatment plant, 9 = Domestic wastewater (e.g., wastewater not collected by wastewater treatment plants), 10 = Other).
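As a reading aid, the integer codes in the `basin` and `typ` columns can be mapped back to their labels with two lookup tables. The sketch below assumes each attribute row has already been loaded into a dictionary keyed by the column names above; the helper `decode_record` and the example outfall code are hypothetical.

```python
# Code tables copied from the column descriptions above.
BASIN = {1: "Yangtze River", 2: "Yellow River"}
OUTFALL_TYPE = {
    1: "Combined sewer",
    2: "Rainwater",
    3: "Industrial wastewater",
    4: "Agricultural drainage",
    5: "Livestock breeding",
    6: "Aquaculture",
    7: "Surface runoff",
    8: "Wastewater treatment plant",
    9: "Domestic wastewater",
    10: "Other",
}


def decode_record(record):
    """Return a copy of one attribute row with integer codes replaced by labels."""
    decoded = dict(record)
    decoded["basin"] = BASIN[int(record["basin"])]
    decoded["typ"] = OUTFALL_TYPE[int(record["typ"])]
    return decoded


# Hypothetical row: the outfall code is a made-up placeholder, not a real record.
row = {"Image_name": "1.jpg", "Outfall_code": "EXAMPLE-0001", "basin": 1, "typ": 3}
print(decode_record(row))
```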

Statistics and examples of the dataset

The iSOOD dataset contains a total of 10481 SOs images in YOLO format, collected from the Yangtze River (9285 SOs images) and the Yellow River (1196 SOs images) in China (Fig. 2). The dataset covers ten types of SOs, as shown in Fig. 6. The images span a range of pixel dimensions (Fig. 3), and approximately 95.1% of them are high-resolution (Fig. 7). Figure 4 displays a heatmap of the distribution of annotation box centres in the iSOOD dataset. In 77.3% of the images the SOs are located near the centre of the picture, while in the remaining 22.7% they lie around the edge area (Fig. 8). Large-sized SOs objects, with pixel areas greater than 96 × 96, are the most numerous, accounting for 80.0% of the iSOOD dataset. Medium-sized SOs objects are the second most numerous (18.6%), and small-sized SOs objects, with pixel areas less than 32 × 32, are the fewest (1.4%) (Fig. 5). The original sizes of the annotation boxes and SOs are shown in Fig. 9.
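The size categories above can be derived from a YOLO-format label line, which stores `class x_center y_center width height` with coordinates normalised to the image size. The sketch below is an illustration under assumptions: the function names are hypothetical, the image dimensions are example values, and boxes whose area equals a threshold exactly are treated as medium.

```python
def parse_yolo_line(line):
    """Parse one YOLO label line: class x_center y_center width height (0-1)."""
    cls, xc, yc, w, h = line.split()
    return int(cls), float(xc), float(yc), float(w), float(h)


def size_category(line, img_w, img_h):
    """Classify a box as small, medium or large by its area in pixels."""
    _, _, _, w, h = parse_yolo_line(line)
    area = (w * img_w) * (h * img_h)  # convert normalised size to pixel area
    if area < 32 * 32:
        return "small"
    if area > 96 * 96:
        return "large"
    return "medium"  # assumed handling of everything in between


# Example labels on a hypothetical 1920 x 1080 image.
for label in ("0 0.5 0.5 0.1 0.1", "0 0.5 0.5 0.03 0.05", "0 0.5 0.5 0.01 0.02"):
    print(label, "->", size_category(label, 1920, 1080))
```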

Technical Validation

Environment settings

Given the potential application scenario of using UAVs for real-time detection of SOs, the deep learning model must recognise targets rapidly and remain compatible with low-specification hardware. Therefore, the YOLOv10 series, one of the state-of-the-art object detection models, was used to evaluate the performance of the iSOOD dataset. Compared with previous YOLO versions, the YOLOv10 series offers faster speed and higher accuracy13. The iSOOD dataset, comprising 10481 images of SOs, was randomly split into a training set (80%), a test set (10%) and a validation set (10%)19. The YOLOv10 series was trained on a personal computer with an RTX 3090 24GB GPU using the default hyper-parameters, a batch size of 16 and 100 training epochs. Before training, the iSOOD images were randomly translated, flipped, and scaled.
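When an image is flipped during augmentation, its YOLO-format boxes must be transformed with it. The sketch below shows only the horizontal-flip case on normalised boxes; it is an illustration, not the augmentation code used in this study, and the function names and the flip probability `p` are assumptions.

```python
import random


def hflip_box(box):
    """Mirror one normalised YOLO box (cls, xc, yc, w, h) about the vertical axis."""
    cls, xc, yc, w, h = box
    return (cls, 1.0 - xc, yc, w, h)  # only the x centre changes


def random_hflip(boxes, p=0.5, rng=random):
    """Flip all boxes of an image with probability p; also report whether it flipped."""
    if rng.random() < p:
        return [hflip_box(b) for b in boxes], True
    return list(boxes), False


boxes = [(0, 0.25, 0.4, 0.1, 0.2)]
flipped, did_flip = random_hflip(boxes, p=1.0)
print(flipped, did_flip)
```

The image pixels must be mirrored in the same call so that boxes and image stay aligned; translation and scaling follow the same pattern with different coordinate updates.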

Evaluation metrics

This study evaluates the efficacy of combining the iSOOD dataset with the YOLOv10 series. We used pycocotools to compute the average precision (AP) and average recall (AR) metrics20. The AP metric evaluates a model’s capacity to identify relevant objects accurately by quantifying the proportion of detections that are true positives. The AR metric evaluates the model’s ability to detect all relevant cases by measuring the proportion of ground-truth objects that are correctly detected. Both metrics are computed by comparing the bounding boxes predicted by the model with the annotated bounding boxes of the SOs21. Higher AP and AR values indicate better performance. The Intersection over Union (IoU) threshold is a critical parameter that significantly affects the evaluation results. The AP@50:5:95 is averaged over IoU thresholds from 0.50 to 0.95 with a step size of 0.05. The AP50 and AP75 are calculated at IoU thresholds of 0.50 and 0.75, respectively22. Furthermore, the study assessed the detection performance for SOs of various sizes. The AR01, AR10, and AR100 denote the AR obtained when at most 1, 10, and 100 detections per image are allowed, averaged over all IoU thresholds.
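To make the role of the IoU threshold concrete, the sketch below computes the IoU of two boxes and then precision and recall at a single threshold using greedy one-to-one matching. It is a simplified illustration and not the pycocotools procedure, which additionally ranks detections by confidence and integrates the precision-recall curve; the function names and example boxes are assumptions.

```python
def iou(a, b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


# The ten thresholds 0.50, 0.55, ..., 0.95 used for AP@50:5:95.
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def precision_recall(detections, ground_truths, thr):
    """Greedy one-to-one matching of detections to ground truths at one threshold."""
    unmatched = list(ground_truths)
    tp = 0
    for det in detections:
        best = max(unmatched, key=lambda g: iou(det, g), default=None)
        if best is not None and iou(det, best) >= thr:
            unmatched.remove(best)  # each ground truth is matched at most once
            tp += 1
    precision = tp / len(detections) if detections else 0.0
    recall = tp / len(ground_truths) if ground_truths else 0.0
    return precision, recall


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(0, 0, 10, 8), (50, 50, 60, 60)]
for thr in (0.50, 0.75, 0.85):
    print(thr, precision_recall(dets, gts, thr))
```

In this example the first detection overlaps its ground truth with an IoU of 0.8, so it counts as a true positive at thresholds 0.50 and 0.75 but not at 0.85, which shows how stricter thresholds lower both metrics.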

Performance evaluation

Table 1 shows the performance of the YOLOv10 series at different IoU thresholds. The training, validation and testing performances indicate that there was no over-fitting. On the test dataset, the AP and AR metrics range over 0.626–0.883 and 0.597–0.785, respectively, which are better than the results of previous studies10,12. These results indicate that the iSOOD dataset is suitable for developing deep learning models for effective SOs objective detection in natural environments. Table 2 shows the performance of the YOLOv10 series for different sizes of SOs objects. On the test dataset, the AP and AR metrics for small-sized SOs objects range over 0.078–0.196 and 0.236–0.336, respectively, indicating relatively low accuracy in identifying small-sized objects. This is attributed not to a limitation of the models but to the very sparse feature information contained in small-sized SOs images. To ensure the precision of SOs detection in practical applications, one effective method is to operate the UAVs close to the river bank to take high-resolution images of SOs23.

Usage Notes

This study presents the first fine-grained dataset for SOs objective detection in natural environments. The iSOOD includes 10481 images captured by UAVs and handheld cameras. Our researchers meticulously annotated the iSOOD to assign labels to SOs. The iSOOD has been publicly released after desensitization to promote interdisciplinary collaboration and accelerate advances in intelligent watershed management. We expect the iSOOD dataset to inspire further research on SOs detection and on the control of pollution migration paths, and to serve as a fundamental resource for applying advanced deep learning vision technology in environmental monitoring.

Implications of the iSOOD for intelligent watershed management

The importance of SOs inspection in improving the water ecological environment has gradually gained recognition among policymakers. There is a significant demand for iSOOD and related technology in watershed management. For example, the Chinese administration is initiating an in-depth investigation into SOs across the country. China has allocated billions of dollars and employed tens of thousands of knowledgeable staff in the upper and middle reaches of the Yangtze River and Yellow River basins alone. Nevertheless, the ongoing investigation of the SOs constitutes only about 10% of the overall effort. Internationally, other countries can also draw on the “China model” to investigate the SOs within their river basins and safeguard the ecological safety of their waters. These extensive application scenarios mean that iSOOD and related intelligent technologies have great potential to replace manual labour in SOs detection, significantly reducing costs and enhancing efficiency.

The most important recommendation is to implement artificial intelligence technologies related to iSOOD on the UAV platform for watershed management. The specific details are as follows. (i) There is a requirement for UAVs that can operate at low altitudes and be easily navigated, along with flight control algorithms that are compatible with these platforms. This study found that the precision of identifying small SOs is relatively low. To cope with this challenge, a reliable UAV platform is required to acquire high-resolution images of SOs within its field of view using automated cruise and close-up flights. (ii) The YOLO series algorithm architecture is the primary focus of application in artificial intelligence for automatically identifying SOs. Object detection techniques can be classified into two-stage and one-stage methods. As a one-stage algorithm, the YOLO series offers the benefit of rapid processing, making it particularly well-suited for real-time surveillance24,25. Nevertheless, compared with the standard two-stage approach, YOLO also has the drawback of reduced detection accuracy26,27,28. Hence, further research is needed to enhance the detection speed and accuracy of algorithms built upon YOLO. (iii) The iSOOD dataset could continuously gather and accumulate images to enhance its performance in tasks associated with SOs detection worldwide.

Code availability

The Python 3.9 scripts used for generating statistics presented in the article, as well as the code for validating the completeness of the dataset (including YOLOv5 and YOLOv10), are available at https://github.com/Daniel00ll/iSOOD-code.

References

1. Woodward, J., Li, J., Rothwell, J. & Hurley, R. Acute Riverine Microplastic Contamination Due to Avoidable Releases of Untreated Wastewater. Nature Sustainability. 4, 793–802 (2021).

2. Tong, Y. et al. Decline in Chinese Lake Phosphorus Concentration Accompanied by Shift in Sources Since 2006. Nat. Geosci. 10, 507–511 (2017).

3. Shao, P. et al. Mixed-Valence Molybdenum Oxide as a Recyclable Sorbent for Silver Removal and Recovery From Wastewater. Nat. Commun. 14, 1365 (2023).

4. Xu, J. et al. Response of Water Quality to Land Use and Sewage Outfalls in Different Seasons. Sci. Total Environ. 696, 134014 (2019).

5. Mendonça, A., Losada, M. Á., Reis, M. T. & Neves, M. G. Risk Assessment in Submarine Outfall Projects: The Case of Portugal. J. Environ. Manage. 116, 186–195 (2013).

6. Alkhalidi, M. A., Hasan, S. M. & Almarshed, B. F. Assessing Coastal Outfall Impact On Shallow Enclosed Bays Water Quality: Field and Statistical Analysis. Journal of Engineering Research. (2023).

7. Wang, Y. et al. Automatic Detection of Suspected Sewage Discharge From Coastal Outfalls Based On Sentinel-2 Imagery. Sci. Total Environ. 853, 158374 (2022).

8. Zhang, J., Zou, T. & Lai, Y. Novel Method for Industrial Sewage Outfall Detection: Water Pollution Monitoring Based On Web Crawler and Remote Sensing Interpretation Techniques. J. Clean. Prod. 312, 127640 (2021).

9. Wu, X., Sahoo, D. & Hoi, S. C. H. Recent Advances in Deep Learning for Object Detection. Neurocomputing. 396, 39–64 (2020).

10. Huang, Y. & Wu, C. Evaluation of Deep Learning Benchmarks in Retrieving Outfalls Into Rivers with UAS Images. IEEE T. Geosci. Remote. 61, 1–12 (2023).

11. Cao, Z., Kooistra, L., Wang, W., Guo, L. & Valente, J. Real-Time Object Detection Based On UAV Remote Sensing: A Systematic Literature Review. Drones. 7, 620 (2023).

12. Xu, H. et al. UAV-ODS: A Real-Time Outfall Detection System Based On UAV Remote Sensing and Edge Computing. IEEE, 2022:1-9.

13. Wang, A. et al. YOLOv10: Real-Time End-to-End Object Detection. Ithaca: Cornell University Library, arXiv.org, 2024.

14. Gong, Y., Liu, G., Xue, Y., Li, R. & Meng, L. A Survey On Dataset Quality in Machine Learning. Inform. Software Tech. 162, 107268 (2023).

15. Jiang, P., Ergu, D., Liu, F., Cai, Y. & Ma, B. A Review of YOLO Algorithm Developments. Procedia Computer Science. 199, 1066–1073 (2022).

16. Everingham, M., Van Gool, L., Williams, C. K. I., Winn, J. & Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vision. 88, 303–338 (2010).

17. Lin, Q., Ye, G., Wang, J. & Liu, H. Roboflow: A Data-Centric Workflow Management System for Developing AI-Enhanced Robots. 5th Conference on Robot Learning (CoRL 2021). London, UK, 2021.

18. Tian, Y., Deng, N., Xu, J. & Wen, Z. A Fine-Grained Dataset Named iSOOD for Sewage Outfalls Objective Detection in Natural Environments. Zenodo https://doi.org/10.5281/zenodo.10903574 (2024).

19. Vayssade, J., Arquet, R., Troupe, W. & Bonneau, M. Cherrychèvre: A Fine-Grained Dataset for Goat Detection in Natural Environments. Scientific Data. 10, 689 (2023).

20. Lin, T., Maire, M., Belongie, S., Bourdev, L. & Girshick, R. Microsoft COCO: Common Objects in Context, 2014.

21. Wang, Y. et al. Remote Sensing Image Super-Resolution and Object Detection: Benchmark and State of the Art. Expert Syst. Appl. 197, 116793 (2022).

22. Padilla, R., Netto, S. L. & Da Silva, E. A. B. A Survey On Performance Metrics for Object-Detection Algorithms. IEEE, 2020:237-242.

23. Chen, C. & Lyu, F. Unmanned-System-Based Solution for Coastal Submerged Outfall Detection. IEEE, 2021:1768-1771.

24. Redmon, J., Divvala, S., Girshick, R. & Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. IEEE, 2016:779-788.

25. Sun, X., Wang, P., Wang, C., Liu, Y. & Fu, K. PBNet: Part-Based Convolutional Neural Network for Complex Composite Object Detection in Remote Sensing Imagery. ISPRS J. Photogramm. 173, 50–65 (2021).

26. Liu, Z., Gao, Y., Du, Q., Chen, M. & Lv, W. YOLO-Extract: Improved YOLOv5 for Aircraft Object Detection in Remote Sensing Images. IEEE Access. 11, 1742–1751 (2023).

27. Li, Y., Wang, J. & Shi, B. Comparison of Two Target Detection Algorithms Based On Remote Sensing Images. International Conference on Computer Information Science and Artificial Intelligence. Kunming, China, 2021.

28. Li, W., Feng, X. S., Zha, K., Li, S. & Zhu, H. S. Summary of Target Detection Algorithms. Journal of Physics: Conference Series. 1757, 12003 (2021).

Acknowledgements

This work was supported by the National Key Research and Development Program of China (2023YFC3205501).

Author information

Contributions

Yuqing Tian: Conceptualization, Methodology, Formal analysis, Investigation, Data annotation, Data curation, Writing - original draft, Writing - review & editing, Visualization, Validation, Funding acquisition. Ning Deng: Acquisition, Methodology, Data annotation, Visualization, Validation. Jie Xu: Supervision, Investigation. Zongguo Wen: Supervision, Project administration.

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tian, Y., Deng, N., Xu, J. et al. A fine-grained dataset for sewage outfalls objective detection in natural environments. Sci Data 11, 724 (2024). https://doi.org/10.1038/s41597-024-03574-9

DOI: https://doi.org/10.1038/s41597-024-03574-9
