Abstract

3D-ARM-Gaze is a public dataset designed to provide natural arm movements together with visual and gaze information when reaching objects in a wide reachable space from a precisely controlled, comfortably seated posture. Participants were involved in picking and placing objects in various positions and orientations in a virtual environment, whereby a specific procedure maximized the workspace explored while ensuring a consistent seated posture by guiding participants to a predetermined neutral posture via visual feedback from the trunk and shoulders. These experimental settings enabled to capture natural arm movements with high median success rates (>98% objects reached) and minimal compensatory movements. The dataset regroups more than 2.5 million samples recorded from 20 healthy participants performing 14 000 single pick-and-place movements (700 per participant). While initially designed to explore novel prosthesis control strategies based on natural eye-hand and arm coordination, this dataset will also be useful to researchers interested in core sensorimotor control, humanoid robotics, human-robot interactions, as well as for the development and testing of associated solutions in gaze-guided computer vision.

Similar content being viewed by others

Background & Summary

Every object manipulation starts with a natural arm movement designed to reach and grasp it. Most of the time, this object is recognized based on visual information gathered during gaze-fixation. Understanding eye-hand coordination during reaching movements is a great challenge in human sensorimotor control, with huge applications in motor rehabilitation, humanoid robotics, human-robot interactions, and prosthesis control.

With respect to prosthesis control, recent developments include movement-based control strategies whereby the movement of the prosthetic limb is controlled based on the motion of the residual limb and its natural coordination with the missing joints1,2,3,4,5,6,7. These methods employ arm motion data to train nonlinear regression techniques, such as artificial neural networks (ANNs), radial basis function networks, or locally weighted regressions, in order to learn and use the coordination between residual and missing joints. Several public datasets exist containing arm movement data, which could be used to model and exploit this natural coordination (for a comprehensive review, see8). Noteworthy among these are the Kit Whole-Body Human Motion dataset9 and the U Limb dataset10. The former is a valuable resource for human motion analysis, with its applications leaning towards modeling the entire body11,12,13 due to its comprehensive coverage of whole-body motion. The latter, instead, primarily focuses on arm motion, making it particularly valuable for characterizing upper limb kinematics post-stroke and comparing it to natural motion14,15, training movement-based prosthesis control systems16, analyzing muscle synergies17, and evaluating trajectory optimization frameworks for rehabilitation robots18. However, these datasets primarily contain recordings of daily life activities, with only a few selected objects poses, as depicted in Fig. 2 of19. None of these datasets were designed to maximize the workspace spanned by participants with their arm (i.e., the set of positions and orientations reachable depending on their morphologies and ranges of motion). Additionally, the overall body postures from which arm movements were produced were generally left free or poorly controlled. Any difference in posture or limit in the workspace explored in those databases as compared to the use case would therefore inevitably translate into malfunctioning control for those posture or workspace areas.

Furthermore, although 6D pose estimation is a very active area of computer vision20,21,22,23 with applications far beyond prosthesis control (e.g., augmented reality, healthcare or industrial robotics), precise determination of object pose from first person view/egocentric videos with gaze information acquired in natural context is still an open research issue24. Here again, several public datasets exist onto which object 6D pose estimation could be trained on and tested25,26, but none of those include realistic, egocentric visual information like gaze, obtained in a functionally relevant context (i.e., with natural arm movements that require reaching and displacing objects).

We provide here a dataset designed to overcome these aforementioned limitations. First, the workspace was maximized by a procedure that initially covers the widest plausible workspace (i.e., set by the maximal range of motion of participants), and then subsequently uses a self-organizing map to best represent the space actually covered by participants producing natural arm movements within this space (i.e., filtering out unachieved configurations)6. Second, we recorded head and gaze motion in addition to movements of the trunk, shoulders, and arm joints, thereby collecting the entire kinematic chain between the object the participant is gating (and gazing) at and the hand moved to reach it. This information, combined with the VR technology, enables reconstructing visual data with gaze from which computer vision would need to extract 6D pose of the object of interest in a real-life application. Because the ground truth of the object pose is known by design in VR, and the visual environment could be replayed and manipulated at wish, this dataset can also be used to generate synthetic data consistent with physiological head, gaze, and arm movements, and necessary to develop and test 6D pose estimation algorithms geared toward realistic contexts. Additionally, the initial body posture from which arm movements were produced was precisely controlled by guiding participants to a predetermined neutral posture between trials via visual feedback from the trunk and shoulders in a virtual reality (VR) environment. Although a comfortable seated posture was used here to ensure that the produced arm movements were as natural as possible, the specificity to this controlled posture might represent a limitation of the resulting dataset. This limitation, however, could be addressed by collecting data from a variety of starting postures using the method described herein.

Besides the specific goal of providing information relevant to movement-based prosthesis control1,2,3,4,5,6,7, and in particular those missing in6 to get closer to a real-life setting, we believe that the present dataset could be useful to a range of broader contexts.

This dataset can be used to revisit several issues lying at the core of human sensorimotor control. First, our 3D recordings of the entire kinematic chain (shoulder, elbow, wrist) are clearly relevant to assess how multiple joints are coordinated in a system exhibiting redundant degrees of freedom (DOF). For instance, this dataset may help to establish whether hand movements are better accounted for by a model in which planning is performed in (intrinsic) joint space or through vectorial coordinates in (extrinsic) task space27,28. One may also be interested in further testing of the Optimal Feedback Control theory29 or the Uncontrolled Manifold hypothesis30,31, two approaches suggesting that fluctuations in joint kinematics across trials are constrained differently by the nervous system along task-relevant and task-irrelevant dimensions. We believe that the current dataset would be particularly relevant to assess to what extent these two approaches account with similar accuracy for movements that unfold in different regions of the workspace, regions that may rely differently on proximal/distal joints and that may provide different levels of joint redundancy. Because we recorded the maximal range of motion at each individual joint, our dataset is also suitable to investigate to what extent arm configurations can be accounted for by cost functions using postural comfort as a key variable (i.e. maximizing postures away from extreme joint angles32,33). As our dataset was acquired in a large workspace, it can also be used to fuel ongoing debates about the straightness and smoothness of hand movements in 3D space27,28,34,35,36,37. Because our dataset also includes gaze recordings, it can be used to address issues regarding eye-head-arm coordination38,39,40. Finally, how final arm posture may depend on initial arm posture (and initial hand/object position) can also be addressed with the current dataset28.

On a more applied side, the current dataset offers a benchmark of natural arm movements that can be used in motor rehabilitation contexts (e.g., to generate assistance with upper limb exoskeleton for stroke rehabilitation41), or for arm control in humanoid robotics42,43,44. In the context of human-robot interactions, the production of natural arm movements is important as it increases movement predictability, which is known to positively impact our capacity to collaborate with robots45,46. In line with those attempts, the present dataset can be used either to feed methods aiming at reproducing natural arm movement on robotic platforms, or to evaluate the human-likeness of these methods in a wide workspace (see47 for a review of various methods for human-like arm motion generation).

The following sections describe the methods and content of the dataset, acquired while participants were performing a pick-and-place task illustrated in Video 1, with a precisely controlled posture the participants had to return to periodically. The dataset is available on an open repository which also contains basic code for the analyses presented here, as well as a data player allowing both to replay/visualize the data in 3D (Video 2), and to generate new video data with various fictitious visual backgrounds and various objects (Video 3). This last capability will be particularly attractive to computer vision scientists aiming to develop and test efficient 6D pose estimation algorithms from egocentric vision with gaze. Indeed, being able to increase the variability of objects and backgrounds in the learning phrase can prevent over-fitting.

Methods

Participants

The study was conducted on twenty participants (six males and fourteen females), aged 19–44 (mean 25.1; SD 6.46) with normal or corrected-to-normal vision. Participants’ handedness was assessed using the Edinburgh Handedness Inventory (EHI)48. All participants were able-bodied, right-handed (mean EHI 81; SD 23) and none of them suffered from any mental or motor disorder that could interfere with the performance of the task. All participants signed an informed consent, and the study was approved by local ethics committee (CPP Est II: n°2 019- A02890-57).

Experimental setup

The participant sat on a stool and wore the headset (ViveTM Pro, HTC Corporation) and five motion trackers (ViveTM Tracker, HTC Corporation), attached to the trunk, the main segments (upper arm, forearm, and hand) of the right arm and the upper arm of the left arm, using straps, as shown in Fig. 1a. The trunk tracker was positioned at the center of the chest. The upper arm tracker was placed at the midpoint of the upper arm, on the lateral side, to avoid contact with the torso during arm movements. The forearm tracker was positioned on the frontal side of the forearm, in the middle of the segment, to prevent contact with the hand tracker during wrist flexion. The hand tracker was centered over the metacarpal bones. Please note that the method used for joint center estimation (cf. Calibration) does not depend on the precise placement of the trackers and should effectively identify and maintain accurate joint center estimation as long as the trackers do not slip during or after the calibration process. After placing the trackers, participants were therefore instructed to move their arms to ensure that the trackers did not come into contact with each other, and that all straps were secured tightly to ensure stability during movements.

Each tracker as well as the headset provided measurements of their 3D position and orientation relative to the virtual environment’s reference frame. Two cameras, placed at the corners of the room, received signals from the trackers and the headset at 90 Hz (sampling rate) using SteamVR (Valve Corporation) as middleware. The Unity engine (Unity Technologies, version 2022.2.1) was employed to manage the virtual scene’s contents and interaction with the participant. The main extensions and modifications made to the Unity engine to conduct the experiment, as well as the virtual objects and the virtual arm, are available in the Zenodo repository49 under the folder code_unity. Custom scripts were developed to control the experiment phases and virtual object behaviors. The virtual environment, an empty room surrounded by four walls, was scaled to match real-world dimensions via the HTC Vive calibration process: the ground plane was set at the same height as the actual floor and centered on the stool, where the participant was seated. At the onset of each experiment, participants, already equipped with the headset and immersed in the virtual scene, were instructed to look forward. Following this, adjustments were made to the position and orientation of the virtual room to align with those of the participant’s head along both the horizontal and frontal axes.

Calibration

After the participant was equipped with the trackers and the headset, the software for eye tracking was calibrated throughout the method already included in SteamVR (https://www.vive.com/ca/support/vive-pro-eye/category_howto/calibrating-eye-tracking.html). Then, a calibration procedure was carried out to associate the virtual arm with the trackers and therefore, with the participant’s real arm.

The virtual arm, made through the open-source software MakeHuman (http://www.makehumancommunity.org/), was composed by three rigid segments (upper arm, forearm, hand) connected by spherical joints at shoulder, elbow and wrist levels. The calibration consisted of three steps: data recording, joint centers estimation, association of virtual and real arm. In the first step, the participant was asked to perform slow movements using all the DOFs of both arms for 15s and then of the head for 10s. Based on these data, the method described in50 was used to estimate the following joint centers’ locations: left shoulder, neck, right shoulder, elbow, and wrist, shown in Fig. 1b as yellow spheres. Additionally, the center of the trunk, represented by a red sphere in Fig. 1b, was estimated as the orthogonal projection of the joint center of the neck onto the line connecting right and left shoulder.

Then, the virtual arm (right side) appeared locked in a reference posture with the shoulder placed so that it coincided with the real shoulder of the participant and the virtual arm dimensions resized according to the participant’s ones, computed as the distance between estimated joint centers. In the last step, the participant saw the virtual arm and an arm composed of sticks and spheres, representing their real arm. To complete the calibration, the participant had to overlap the arm of sticks and spheres with the virtual one in order to bind them (see6 for more details).

Range of motion determination

After the calibration, the headset was temporarily taken off and data were collected with the purpose of computing the range of motion of each DOF (shoulder flexion/extension (S-FE), shoulder abduction/adduction (S-AA), humeral rotation (H-R), elbow flexion/extension (E-FE), forearm pronation/supination (F-PS), wrist radioulnar deviation (W-RU), wrist flexion/extension (W-FE)) of the participant’s arm (see Supplementary Fig. 1). Participants were asked to slowly perform a few repetitions of an elementary movement for each joint with maximal amplitude in each direction. For each movement, the experimenter performed a demonstration that the participants were required to mimic with their arm. This demonstration and movement recording lasted about 10s for each movement and included at least four complete back-and-forth excursions. Then, the joint angular limits were estimated as the extreme reached values (i.e., the minimum and maximum angles reached during each joint repetition).

Task

Data were collected while the participant was engaged in a pick-and-place task involving picking a bottle from one platform (red disk visible at the base of the semi-transparent bottle) and released it into another one, as shown in Fig. 2 and in Video 1.

Illustration of the task. After the participant has picked a bottle from a previous platform (cf. bottle already in the hand), they will transport it toward the next platform (a,b), onto which they will release it (c). Please note that a semi-transparent bottle has been added on the target platform (a,b) to help the participant fitting their bottle with the target zone and that the bottle turned red (c) when within the target zone.

One pick-and-place movement was defined as the action from picking the bottle (target) on a platform to releasing on the next platform, or equivalently, from releasing it on a platform to picking it on the next platform. The task did not directly involve the opening and closing of the hand: the participant had to place the virtual hand in the target zone within 2 cm of the bottle center and match the virtual hand orientation with that of the bottle within an angular tolerance of 5°. When these two criteria were met, the bottle became red (see Fig. 2c), and after 0.6s of continuous red coloring (validation time), the bottle was automatically grasped or released. The participant had 5s to complete each pick-and-place movement: if not achieved within that time, the trial was considered failed and a new target appeared.

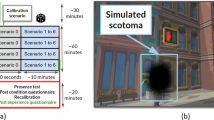

Return to Neutral Posture procedure

In most research focusing on a specific pattern of movement, it is a common procedure to have participants returning to a neutral posture between trials (or movements) in order to maintain recordings consistency and limit drifts or carry over effects between trials51,52,53. Instead of merely relying on participants compliance with instruction to return to a neutral posture, or upon the experimenter’s observation of it, we capitalized on our VR set-up to design and apply a controlled procedure called Return to Neutral Posture (RNP). This was particularly suited for our purpose, which was to collect data about natural arm movements issued from a comfortable posture, including shoulder and trunk. This procedure was implemented using the display of two sets of three cubes (see Fig. 3), each cube corresponding either to a shoulder position (left or right) or to the head position, projected 45 cm forward in front of the participant. The green cubes were fixed and represented the target posture the participant had to return to, while the position of the blue cubes was updated according to the actual participant shoulders and head positions. The RNP was achieved by overlapping the blue cubes with the green ones within a given tolerance for a duration of 0.6s. The specific tolerances used were determined empirically to be tight enough to ensure consistency in the given posture participants had to return to, while avoiding control difficulties inherent to unreasonably small tolerance. This was achieved with an angular tolerance of 5°; for the head and shoulders, and a spatial tolerance of 2 cm for the head and 3 cm for the shoulders. Video 1 shows a representative participant performing the pick-and-place task, with the RNP procedure between each pair of targets (i.e., between each pick-and-place movement).

Return to Neutral Posture (RNP) procedure. (a) After the participant completed a pick-and-place movement, the RNP procedure started and the cubes appeared. The green cubes represented the neutral posture the participant had to return to, while the blue cubes the actual participant shoulders and head positions. (b) By overlapping the blue cubes with the green cubes within a given tolerance, all cubes turned red to indicate that the participant had successfully achieved the RNP.

Protocol

The experimental protocol was composed of five phases: Familiarization, Initial Acquisition, RNP familiarization, Test RNP after pauses and Test RNP after target pairs (see Fig. 4).

Different target sets were generated as explained below for the different phases of the experiment, all being filtered according to the following criteria:

-

Exclude targets pointing downward, defined by an angle between the target’s axis and the vertical axis that would exceed 100°.

-

Exclude targets too close to the participant’s trunk, defined by a distance between the target’s center and the participant’s frontal plane that would not exceed a third of the participant’s arm length.

-

Exclude targets too close to the participant’s legs, defined by a distance between the target’s center and the horizontal plane passing through the participant’s shoulder that would exceed two-thirds of the participant’s arm length.

To generate the first target set, 7-DOF arm angular configurations were drawn at random within the participant ranges of motion following a multivariate uniform probability distribution. Then, forward kinematics was used to compute the target locations (i.e. positions and orientations), which were then filtered as indicated above (see6 for more details). This target set contained at least 300 targets, subsequently used both in the Familiarization phase and in the Initial Acquisition phase.

The first phase aimed at familiarizing the participant with the virtual environment and the task. For this reason, the time to complete a pick-and-place task was lengthened to 10s. When the participant felt comfortable with the task, after approximately 60-100 targets, the experimenter skipped the remaining targets and proceeded to the next phase.

The second phase, Initial Acquisition (IA), aimed at recording data used to sample more accurately the reachable workspace which is specific to each participant. This was achieved using an unsupervised self-organizing network (more specifically, a Growing Neural Gas (GNG) algorithm54), trained on all arm postures produced by each participant during the IA phase, in order to generate a new set of 200 targets that best represent those postures. This algorithm maps the space of the joint configurations executed by the participants during the IA phase by placing nodes (or neurons) and iteratively adjusting their positions based on the input data. By effectively learning the distribution of joint configurations, it identifies the neurons that best represent the arm configurations explored by the participant. These configurations are then transformed into targets through forward kinematics. This step was necessary to circumvent problems inherent to the fact that anatomical joints’ limits are interdependent making some arm configurations impossible, in particular when maximal excursion at multiple joints is involved simultaneously (see6 for more details). The resulting new set of 200 targets that best represent the reachable workspace of each participant was then used for all the next phases.

In the third phase, RNP familiarization, the participants freely choose their neutral posture to return to and got familiar with the RNP procedure, which was introduced every four targets in this familiarization phase. At any time, the participant could ask to reposition the cubes defining that neutral posture, as the goal was to ensure the most natural and comfortable posture. As for the Familiarization phase, when the participant felt comfortable with the neutral posture and the RNP procedure, the experimenter skipped the remaining targets and proceeded to the next phases (typically after 60-80 targets).

Two Test RNP phases were then conducted: one in which the RNP procedure occurred at the beginning of the phase and after each pause introduced every 50 pick-and-place movements (i.e., 3 pauses in a phase of 200 movements), and one in which the RNP procedure occurred after each single pick-and-place movement. This last phase, Test RNP after target pairs (RNP ATP), was designed to prevent any change or drift in baseline posture that might occur from movement to movement. The preceding phase, Test RNP after pauses (RNP AP), aimed at testing whether a RNP procedure introduced only every 50 movements was sufficient to maintain both performance and a reliable neutral posture. Comparisons between our dependent variables (i.e., success rate, movement time and shoulder spread volume, cf. Technical Validation section) obtained in both conditions will indicate whether this is the case. If performance and stable posture are maintained despite the RNP procedure being introduced only every 50 movements, future protocols using this pick-and-place task might use it as this would simplify and accelerate the procedure as compared to a RNP introduced after each single movement.

Data Records

The data recorded during the whole experiment, and the code used for data exploration (see following sections), are available on the open repository Zenodo49. The repository also included a Documentation folder with files useful to understand the structure of the dataset and the code provided.

The files are arranged by subject with each subject folder, denominated by the letter “s” and the subject number, containing the following files:

-

“subj_info.json”: information about the subject (body height and upper limb segment dimensions)

-

“range_of_motion_determination.json”: data acquired during the range of motion determination

-

“range_of_motion.csv”: ranges of motion computed from the data acquired during the range of motion determination

-

for each phase (Initial Acquisition, Test RNP after pause, Test RNP after target pairs):

-

“phase.json”: data acquired during the phase,

-

“targets_phase.json”: target set used in the phase,

-

“exclude_targets_phase.json”: lists of targets of the phase that should not be considered due to sensor “freeze” or “jump”,

-

“exclude_times_phase.json”: lists of time intervals of the phase containing sensors “freeze” or “jump”,

-

“exclude_indexs_phase.json”: lists of timestamp indexes of the phase containing sensors “freeze” or “jump”.

-

The files “exclude_targets_phase.json”, “exclude_times_phase.json”, and “exclude_indexs_phase.json” are generated by the code “excludes_generator.py”, which can be found in the folder “code_python” inside “DBAS22_CodeOnline”. This procedure was designed to remove measurement errors associated with motion capture, by applying two filters: one for “freezing” behaviour, defined as a portion lasting at least 0.5s where a sensor’s measurement has not changed value, and one for “jumping” behaviour, when a sensor’s measurement has jumped in position by at least 10 cm between two samples. This code generates therefore three lists (one of target numbers, one of time intervals and one of timestamps indexes) of materials that should not be considered when analyzing the data. On average, this filtering process led to the exclusion of 2.6% of targets per participant during each phase.

In the Documentation folder, the file “MainDataExplained” exhaustively describes all the variables recorded. All data is expressed in the world coordinate system if not specified otherwise in the name of the variable.

Two arms information, referred to as the virtual arm and the custom arm, are acquired during the experiment. Both arms are composed of four elements - shoulder, elbow, wrist and hand (end-effector) - for which 3D positions and orientations are recorded. The virtual arm is a 9-DOFs arm directly linked to the sensors’ information, which has been further corrected for errors in humeral rotation. Indeed, soft tissues around the biceps and the triceps are such that the upper arm sensor is not able to follow accurately the humeral rotation. To counter this, we applied the same method as designed in6 to correct errors in humeral rotation based on additional information from the forearm sensor. Then, the virtual arm was simplified, switching from 9-DOFs to 7-DOFs to obtain the custom arm which matches an ideal anatomical arm description. This was achieved by extracting, from the segment orientations of the 9-DOFs kinematic chain of the virtual arm, the seven joint angles that follow an ideal arm kinematic model, composed of three DOFs at shoulder level, one DOF at elbow level and three DOFs at wrist level (see Supplementary Fig. 1, and6 for further details).

Other data acquired during the experiment include time, position and orientation of the left shoulder, the trunk and the neck, as well as other useful information including the target (position, orientation, type and number) and eyes data (position of the pupils and direction of the gaze for right and left eye).

Technical Validation

As explained in the Protocol section, the IA phase was designed to generate data useful to sample more accurately the reachable workspace which is specific to each participant, and the two RNP phases were designed to prevent changes or drifts in initial posture that might occur from movement to movement. To assess those, three dependent variables were computed for each participant and phase of the experiment, and subjected to statistical treatment (Friedman tests, followed if significant by post-hoc Conover tests with Bonferroni correction; for more details, please refer to the file “statistical_analysis.R” in the Zenodo repository49): success rate (the percentage of pick-and-place movements validated in a phase), movement time (the median movement time associated with all validated movements of a phase), and shoulder spread volume (the volume of the ellipsoidal region containing 97% of the shoulder positions recorded while producing all validated movements of a phase, as a proxy of postural stability and body compensation within a phase2). Considering the high success rates obtained in all the phases (medians above 96%), movement times and shoulder spread volumes were calculated on successful pick-and-place movements only.

As illustrated in Fig. 5, success rates were significantly lower and movement times significantly longer in the IA phase than in the two following RNP phases (IA vs RNP AP vs RNP ATP; n = 20; median success rates of 96.3% vs 98.2% vs 99.4%; Friedman test chi.sq = 24.08, p < 0.001; IA vs RNP AP, p = 0.002; IA vs RNP ATP, p < 0.001; median movement times of 1.61s vs 1.30s vs 1.22s; Friedman test chi.sq = 32.5, p < 0.001; IA vs RNP AP, p < 0.001; IA vs RNP ATP, p < 0.001). This is consistent with the view that some targets, drawn randomly within the range of motion determined individually at each joint of a participant, were difficult to reach, and that the set of targets established on the basis of movements actually produced in the IA phase, and used in the RNP phases, provides a better sample of the reachable space of each participant. The difficulty to reach some targets of the IA phase is further corroborated by four times difference in shoulder spread volumes between this condition and the other RNP phases (IA vs RNP AP vs RNP ATP; n = 20; median shoulder spread volumes of 0.76 vs 0.17 vs 0.21; Friedman test chi.sq = 25.2, p < 0.001; IA vs RNP AP, p = 0.001; IA vs RNP ATP, p < 0.001).

When comparing between the two RNP phases, none of the dependent variables exhibited a significant difference. Pick-and-place movements were therefore performed with similarly high success rates (RNP AP vs RNP ATP; median success rates of 98.2% vs 99.4%; p = 0.997), reliable movement times (RNP AP vs RNP ATP; movement times of 1.30s vs 1.22s; p = 0.366) and led to similar shoulder spread volumes (RNP AP vs RNP ATP; median shoulder spread volumes of 0.17 vs 0.21; p = 1), whether the return to a neutral posture procedure was introduced every 50 pick-and-place movements, or between each pick-and-place movements. This lack of difference across RNP phases means that returning to a baseline posture only every 50 pick-and-place movements is sufficient to prevent postural changes or drifts, thereby allowing the release of postural constraints in future protocols using this task.

Explored workspace

To provide an overview of the workspace effectively covered by our dataset, we computed from all arm configurations outputted by the GNG algorithm the corresponding targets (represented as red arrows in Fig. 6) using forward kinematics with the limb dimensions of the avatar depicted in the figure. From the initial workspace, defined by the ranges of motion of the participants and represented by the targets selected within those ranges of motion for the Initial Acquisition phase, the workspace defined by the GNG algorithm has shrunk by 13.74% (i.e., averaged reduction in the range of motion at each angular joint). Although this might appear to contradict our goal of maximizing the workspace covered by participants, experimental results confirmed that this was the price to pay to ensure (medians above 98%) with minimal postural adjustments (medians below 0.22 dm3), thereby keeping the focus on natural arm movements.

When comparing pick-and-place tasks, existing datasets offer a limited selection of object poses with few orientations (e.g., the U-Limb dataset10 includes only 20 object poses). In our dataset, the target set included a wide range of positions and orientations, and each participant reached 200 different object poses, allowing for a more comprehensive exploration of the workspace reachable with natural arm movements, as illustrated in Supplementary Fig. 2.

Usage Notes

We provide here a brief overview of two methods that we have implemented to analyze and manipulate this dataset: a python code for basic data analyses such as those presented in Figs. 5 and 7, and a Unity project called DataPlayer, to playback and visualize previously recorded data, as well as to generate new synthetic data with various visual backgrounds and various objects.

Data collected from participant 8, phase RNP AP, targets 15, 16 and 17. Blue lines represent the target appearance. The red shaded areas represent the time window during which the position and orientation tolerances are met to validate the target (automatic validation after 0.6s of consecutive time within the tolerances). (a) Time series of joint angles (see Supplementary Fig. 1 for a detailed explanation of each angle). (b) Time series of the 3D distance between the hand and the target. (c) Time series of the 2D distance (x, y in an egocentric view) between the point of gaze and the target.

Data analysis

The file “data_analysis.py” in the folder “code_python” contains the code used to analyze the data and generate Fig. 5. It includes several useful functions for handling and analyzing the data, such as extracting, filtering the raw data, computing and plotting variables of interest. We also included some basic code to visualize the time series of the angles, of the 3D hand-target distance, and of the 2D gaze-target distance in egocentric views. All of those are displayed in Fig. 7, which was generated using this code.

DataPlayer

We implemented a Unity project, the DataPlayer, allowing to playback the data recorded during the experiment. When selecting a file from the dataset, the DataPlayer reproduces the participant’s arm movements produced during the pick-and-place task, together with gaze data, including the pupil axis and the point of gaze computed with the method described in55. Figure 8a shows the interface of the DataPlayer. At the bottom, a control panel allows users to adjust replay speed, trigger pauses, or rewind as needed. In the upper-right corner, some text displays essential information about the data, such as the time, the target number and if the tolerances for target validation are met (tgtRed). In the lower-left corner, a button allows the user to switch between two viewing modes: egocentric and free. In the egocentric view (Fig. 8, left image), the perspective matches that of the participant during the experiment, while in the free mode (Fig. 8, right image), the user can navigate around the scene, explore it from various angles and select a preferred view.

DataPlayer showing an egocentric view (left image) from participant 8 reaching target 23 of the phase RNP AP. DataPlayer showing the same data from a different viewpoint (right image), selected using the free mode. The two white lines represent the pupil axis, and the white sphere the point the participant is looking at.

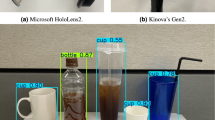

The DataPlayer, being a Unity project, offers extensive customization options, granting users the freedom to tailor every aspect of the virtual environment according to their preferences and requirements. Users have the flexibility to modify various elements, including the shape, color, and dimensions of the target object. Furthermore, they can manipulate the surrounding environment by adding or removing objects, and adjust other parameters as needed (e.g., lighting conditions). Customization proves particularly advantageous in applications where the environment must reflect specific contexts. For instance, the ability to modify objects, attributes, and scenarios provides a fertile ground for computer vision scientists aiming to develop and test robust 6D pose estimation algorithms from egocentric vision with gaze. The project’s malleability allows for simulating diverse real-world scenarios, transforming, for example, the environment into a kitchen setting, complete with culinary items, as shown in Fig. 9 and in Video 3. To facilitate this exploration and testing, we have integrated an option in the interface enabling the generation and recording of synthetic data based on the recorded data. This feature preserves the user’s movements, gaze data, and target object poses while allowing for modifications to all visual aspects of the environment. Consequently, researchers can leverage this capability to develop and evaluate computer vision algorithms for recognizing objects in complex environments, as the ground truth is known by design. This might be considered an intermediate step compared to object recognition in a completely uncontrolled environment of natural egocentric video, such as in the Grasping-in-the-Wild (GITW) dataset from56.

Videos

The following videos are also available on the open repository Zenodo49.

Video 1 :https://youtu.be/4ZRYN6ljeCQ

A participant performing the pick-and-place task with the Return to Neutral Posture (RNP) procedure between each pair of pick-and-place movements.

Video 2 :https://youtu.be/2lSEX_VLK4A

Tutorial on how to launch and use the DataPlayer to replay and visualize data in 3D.

Video 3 :https://youtu.be/RZhN5IR34_c

Examples of new video data generated with the DataPlayer by combining real data about arm and gaze with various fictitious visual backgrounds and objects.

Code availability

Data and codes are all available on the open repository Zenodo49. This includes the script “data_analysis.py” used for the technical validation and the data analysis described in the Usage Notes, and the DataPlayer which is provided in two versions: a user-friendly stand-alone app which does not require any software installation to replay data as illustrated in Fig. 8 and Video 2, and a Unity project (folder “code_unity”) which is customizable at wish, as illustrated in Fig. 9 and Video 3. In the Documentation folder, the file “DataPlayerGuide” contains a tutorial on how to run the project; the file “CodeExplanations” lists and explains all the code scripts related to data analysis; and the document “GuideInstall” provides a tutorial on how to install the environment and the correct packages to run the code.

References

Gloumakov, Y., Bimbo, J. & Dollar, A. M. Trajectory control-an effective strategy for controlling multi-DOF upper limb prosthetic devices. IEEE Transactions on Neural Systems and Rehabilitation Engineering 30, 420–430, https://ieeexplore.ieee.org/document/9714908/ (2022).

Mick, S. et al. Shoulder kinematics plus contextual target information enable control of multiple distal joints of a simulated prosthetic arm and hand. Journal of NeuroEngineering and Rehabilitation 18, 3, https://jneuroengrehab.biomedcentral.com/articles/10.1186/s12984-020-00793-0 (2021).

Popovic, M. & Popovic, D. Cloning biological synergies improves control of elbow neuroprostheses. IEEE Engineering in Medicine and Biology Magazine 20, 74–81, http://ieeexplore.ieee.org/document/897830/ (2001).

Kaliki, R. R., Davoodi, R. & Loeb, G. E. Evaluation of a noninvasive command scheme for upper-limb prostheses in a virtual reality reach and grasp task. IEEE Transactions on Biomedical Engineering 60, 792–802, http://ieeexplore.ieee.org/document/6138294/ (2013).

Merad, M. et al. Assessment of an automatic prosthetic elbow control strategy using residual limb motion for transhumeral amputated individuals with socket or osseointegrated prostheses. IEEE Transactions on Medical Robotics and Bionics 2, 38–49, https://ieeexplore.ieee.org/document/8972569/ (2020).

Segas, E. et al. Intuitive movement-based prosthesis control enables arm amputees to reach naturally in virtual reality. eLife 12, RP87317, https://elifesciences.org/articles/87317 (2023).

Montagnani, F., Controzzi, M. & Cipriani, C. Exploiting arm posture synergies in activities of daily living to control the wrist rotation in upper limb prostheses: A feasibility study. In 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 2462–2465, http://ieeexplore.ieee.org/document/7318892/ (IEEE, 2015-08).

Huang, Y., Bianchi, M., Liarokapis, M. & Sun, Y. Recent data sets on object manipulation: A survey. Big Data 4, 197–216, http://www.liebertpub.com/doi/10.1089/big.2016.0042 (2016).

Mandery, C., Terlemez, O., Do, M., Vahrenkamp, N. & Asfour, T. The KIT whole-body human motion database. In 2015 International Conference on Advanced Robotics (ICAR), 329–336, http://ieeexplore.ieee.org/document/7251476/ (IEEE, 2015-07).

Averta, G. et al. U-limb: A multi-modal, multi-center database on arm motion control in healthy and post-stroke conditions. GigaScience 10, giab043, https://academic.oup.com/gigascience/article/doi/10.1093/gigascience/giab043/6304920 (2021).

Rempe, D. et al. HuMoR: 3d human motion model for robust pose estimation. http://arxiv.org/abs/2105.04668 (2021-08-18).

Corona, E., Pumarola, A., Alenyà, G. & Moreno-Noguer, F. Context-aware human motion prediction. http://arxiv.org/abs/1904.03419 (2020-03-23).

Vatsal, V. & Hoffman, G. The wearable robotic forearm: Design and predictive control of a collaborative supernumerary robot. Robotics 10, 91, https://www.mdpi.com/2218-6581/10/3/91 (2021).

Schwarz, A. et al. Characterization of stroke-related upper limb motor impairments across various upper limb activities by use of kinematic core set measures. Journal of NeuroEngineering and Rehabilitation 19, 2, https://jneuroengrehab.biomedcentral.com/articles/10.1186/s12984-021-00979-0 (2022).

Kanzler, C. M. et al. A low-dimensional representation of arm movements and hand grip forces in post-stroke individuals. Scientific Reports 12, 7601, https://www.nature.com/articles/s41598-022-11806-4 (2022).

Kühn, J. et al. The synergy complement control approach for seamless limb-driven prostheses. Nature Machine Intelligence 6, 481–492, https://www.nature.com/articles/s42256-024-00825-7 (2024).

O’Reilly, D. & Delis, I. Dissecting muscle synergies in the task space. eLife 12, RP87651, https://elifesciences.org/articles/87651 (2024).

Sommerhalder, M. et al. Trajectory optimization framework for rehabilitation robots with multi-workspace objectives and constraints. IEEE Robotics and Automation Letters 8, 6819–6826, https://ieeexplore.ieee.org/document/10238755/ (2023).

Hu, T., Kuehn, J. & Haddadin, S. Identification of human shoulder-arm kinematic and muscular synergies during daily-life manipulation tasks. In 2018 7th IEEE International Conference on Biomedical Robotics and Biomechatronics (Biorob), 1011–1018, https://ieeexplore.ieee.org/document/8487190/ (IEEE, 2018-08).

Liu, Y. Gen6d: Generalizable model-free 6-DoF object pose estimation from RGB images. https://doi.org/10.48550/arXiv.2204.10776 (2023).

Nguyen, V., Du, Y., Xiao, Y., Ramamonjisoa, M. & Lepetit, V. PIZZA: A powerful image-only zero-shot zero-CAD approach to 6 DoF tracking. https://doi.org/10.48550/arXiv.2209.07589 (2022).

Labbé, Y., Carpentier, J., Aubry, M. & Sivic, J. CosyPose: Consistent multi-view multi-object 6d pose estimation. https://doi.org/10.48550/arXiv.2008.08465 (2020).

Labbé, Y. et al. MegaPose: 6d pose estimation of novel objects via render & compare. https://arxiv.org/abs/2212.06870 (2022).

Rogez, G., Supancic, J. S. & Ramanan, D. First-person pose recognition using egocentric workspaces. In 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 4325–4333, http://ieeexplore.ieee.org/document/7299061/ (IEEE, 2015-06).

Calli, B. et al. The YCB object and model set: Towards common benchmarks for manipulation research. In 2015 International Conference on Advanced Robotics (ICAR), 510–517, http://ieeexplore.ieee.org/document/7251504/ (IEEE)

Li, C. et al. Simultaneous multiple object detection and pose estimation using 3d model infusion with monocular vision. https://arxiv.org/abs/2211.11188 (2022).

Desmurget, M. et al. Postural and synergic control for three-dimensional movements of reaching and grasping. Journal of Neurophysiology 74, 905–910, https://www.physiology.org/doi/10.1152/jn.1995.74.2.905 (1995).

Desmurget, M. & Prablanc, C. Postural control of three-dimensional prehension movements. Journal of Neurophysiology 77, 452–464, https://www.physiology.org/doi/10.1152/jn.1997.77.1.452 (1997).

Todorov, E. & Jordan, M. I. Optimal feedback control as a theory of motor coordination. Nature Neuroscience 5, 1226–1235 https://www.nature.com/articles/nn963 (2002).

Scholz, J. P. & Schöner, G. The uncontrolled manifold concept: identifying control variables for a functional task. Experimental Brain Research 126, 289–306, http://link.springer.com/10.1007/s002210050738 (1999).

Latash, M. L., Scholz, J. P. & Schoner, G. Motor control strategies revealed in the structure of motor variability:. Exercise and Sport Sciences Reviews 30, 26–31, http://journals.lww.com/00003677-200201000-00006 (2002).

Cruse, H., Brüwer, M. & Dean, J. Control of three- and four-joint arm movement: Strategies for a manipulator with redundant degrees of freedom. Journal of Motor Behavior 25, 131–139, http://www.tandfonline.com/doi/abs/10.1080/00222895.1993.9942044 (1993).

Rossetti, Y., Meckler, C. & Prablanc, C. Is there an optimal arm posture? deterioration of finger localization precision and comfort sensation in extreme arm-joint postures. Experimental Brain Research 99 http://link.springer.com/10.1007/BF00241417 (1994-05).

Atkeson, C. & Hollerbach, J. Kinematic features of unrestrained vertical arm movements. The Journal of Neuroscience 5, 2318–2330, https://www.jneurosci.org/lookup/doi/10.1523/JNEUROSCI.05-09-02318.1985 (1985).

Flash, T. & Hogan, N. The coordination of arm movements: an experimentally confirmed mathematical model. The Journal of Neuroscience 5, 1688–1703, https://www.jneurosci.org/lookup/doi/10.1523/JNEUROSCI.05-07-01688.1985 (1985).

Viviani, P. & Flash, T. Minimum-jerk, two-thirds power law, and isochrony: converging approaches to movement planning. Journal of Experimental Psychology: Human Perception and Performance 21, 32–53, http://doi.apa.org/getdoi.cfm?doi=10.1037/0096-1523.21.1.32 (1995).

Hagiwara, T., Ganesh, G., Sugimoto, M., Inami, M. & Kitazaki, M. Individuals prioritize the reach straightness and hand jerk of a shared avatar over their own. iScience 23, 101732, https://linkinghub.elsevier.com/retrieve/pii/S2589004220309299 (2020).

Pelz, J., Hayhoe, M. & Loeber, R. The coordination of eye, head, and hand movements in a natural task. Experimental Brain Research 139, 266–277, http://link.springer.com/10.1007/s002210100745 (2001).

Vercher, J., Magenes, G., Prablanc, C. & Gauthier, G. Eye-head-hand coordination in pointing at visual targets: spatial and temporal analysis. Experimental Brain Research 99, http://link.springer.com/10.1007/BF00228987 (1994).

Henriques, D. Y. P., Medendorp, W. P., Gielen, C. C. A. M. & Crawford, J. D. Geometric computations underlying eye-hand coordination: orientations of the two eyes and the head. Experimental Brain Research 152, 70–78, http://link.springer.com/10.1007/s00221-003-1523-4 (2003).

Nann, M. et al. Restoring activities of daily living using an EEG/EOG-controlled semiautonomous and mobile whole-arm exoskeleton in chronic stroke. IEEE Systems Journal 15, 2314–2321, https://ieeexplore.ieee.org/document/9199380/ (2021).

Kim, S., Kim, C. & Park, J. Human-like arm motion generation for humanoid robots using motion capture database. In 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, 3486–3491, http://ieeexplore.ieee.org/document/4058941/ (IEEE, 2006-10).

De Momi, E., Kranendonk, L., Valenti, M., Enayati, N. & Ferrigno, G. A neural network-based approach for trajectory planning in robot-human handover tasks. Frontiers in Robotics and AI3 http://journal.frontiersin.org/Article/10.3389/frobt.2016.00034/abstract (2016-06-27).

Koskinopoulou, M. & Trahanias, P. A methodological framework for robotic reproduction of observed human actions: Formulation using latent space representation. In 2016 IEEE-RAS 16th International Conference on Humanoid Robots (Humanoids), 565–572, http://ieeexplore.ieee.org/document/7803331/ (IEEE, 2016-11).

Koppenborg, M., Nickel, P., Naber, B., Lungfiel, A. & Huelke, M. Effects of movement speed and predictability in human-robot collaboration. Human Factors and Ergonomics in Manufacturing & Service Industries 27, 197–209, https://onlinelibrary.wiley.com/doi/10.1002/hfm.20703 (2017).

Dragan, A. & Srinivasa, S. Familiarization to robot motion. In Proceedings of the 2014 ACM/IEEE international conference on Human-robot interaction, 366–373, https://doi.org/10.1145/2559636.2559674 (ACM, 2014-03-03).

Gulletta, G., Erlhagen, W. & Bicho, E. Human-like arm motion generation: A review. Robotics 9, 102, https://www.mdpi.com/2218-6581/9/4/102 (2020).

Oldfield, R. The assessment and analysis of handedness: The edinburgh inventory. Neuropsychologia 9, 97–113, https://doi.org/10.1016/0028-3932(71)90067-4 (1971).

Lento, B. et al. Data and code for 3d-ARM-gaze: a public dataset of 3d arm reaching movements with gaze information in virtual reality. Zenodo https://doi.org/10.5281/zenodo.10567366 (2024-01-25).

O’Brien, J., Bodenheimer, R., Brostow, G. & Hodgins, J. Automatic joint parameter estimation from magnetic motion capture data. Proceedings of Graphics Interface 2000 Montréal, In collab. with Fels, S. & Poulin, P. Artwork Size: 8 pages, 1.17 MB. ISSN: 0713-5424, http://graphicsinterface.org/proceedings/gi2000/gi2000-9/ (2000).

Montagnani, F., Controzzi, M. & Cipriani, C. Is it finger or wrist dexterity that is missing in current hand prostheses? IEEE Transactions on Neural Systems and Rehabilitation Engineering 23, 600–609, https://ieeexplore.ieee.org/document/7031898/ (2015).

Major, M. J., Stine, R. L., Heckathorne, C. W., Fatone, S. & Gard, S. A. Comparison of range-of-motion and variability in upper body movements between transradial prosthesis users and able-bodied controls when executing goal-oriented tasks. Journal of NeuroEngineering and Rehabilitation 11, 132, http://jneuroengrehab.biomedcentral.com/articles/10.1186/1743-0003-11-132 (2014).

Light, C. M., Chappell, P. H. & Kyberd, P. J. Establishing a standardized clinical assessment tool of pathologic and prosthetic hand function: Normative data, reliability, and validity. Archives of Physical Medicine and Rehabilitation 83, 776–783, https://linkinghub.elsevier.com/retrieve/pii/S0003999302095795 (2002).

Fritzke, B. A growing neural gas network learns topologies. Neural Information Processing Systems 7 (1995-03).

Bailakanavar, M., Liu, Y., Fish, J. & Zheng, Y. Automated modeling of random inclusion composites. Engineering with Computers 30, 609–625, http://link.springer.com/10.1007/s00366-012-0310-x (2014).

González-Díaz, I., Benois-Pineau, J., Domenger, J.-P., Cattaert, D. & De Rugy, A. Perceptually-guided deep neural networks for ego-action prediction: Object grasping. Pattern Recognition 88, 223–235, https://linkinghub.elsevier.com/retrieve/pii/S0031320318304011 (2019).

Acknowledgements

This work was supported by a PhD grant from the French Direction Générale de l’Armement (DGA) awarded to B.L., and the ANR-PRCE grant I-Wrist (ANR-23-CE19-0031-01) awarded to A.d.R., R.P. and J.B.-P.

Author information

Authors and Affiliations

Contributions

A.d.R., J.B.-P. and R.P. conceived the study and obtained funding for it. B.L. designed the protocol, performed data acquisition and data processing, organized all materials for the manuscript, and wrote the initial version of the manuscript. A.d.R., E.S. and V.L. participated in the design of the protocol. V.L. contributed to the organization of the materials for the manuscript, including code and data analysis. E.D. performed participants’ inclusion and data acquisition. F.D. contributed to the conception of the study and the writing of the manuscript. All authors reviewed and approved the final version of the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Lento, B., Segas, E., Leconte, V. et al. 3D-ARM-Gaze: a public dataset of 3D Arm Reaching Movements with Gaze information in virtual reality. Sci Data 11, 951 (2024). https://doi.org/10.1038/s41597-024-03765-4

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41597-024-03765-4