Abstract

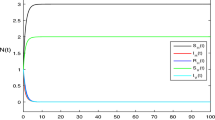

In this paper, we investigate a stochastic DS-I-A epidemic model with hybrid (Markovian) switching. We discuss the extinction and the persistence of the disease and give the corresponding threshold conditions. Furthermore, sufficient conditions for the positive recurrence of the solutions are established by means of stochastic Lyapunov functions. Finally, some examples and simulations are provided to illustrate our results.


Introduction

Human immunodeficiency virus (HIV) infection is characterized by three phases: primary infection, a clinically asymptomatic stage (chronic infection), and acquired immunodeficiency syndrome (AIDS) or drug therapy. Techniques developed for measuring HIV RNA levels allow researchers to build a picture of HIV infection patterns. HIV-1 RNA levels in plasma and serum become extremely high during the 1–2 weeks of acute primary infection, before there is a detectable immune response1,2. These levels are higher than at any other time during infection. Acute primary infection is followed by a chronic phase, during which HIV RNA levels drop by several orders of magnitude and remain ‘nearly constant’ for years3,4,5. Here ‘nearly constant’ includes fluctuations of less than an order of magnitude up or down for about 90% of the cohort and of less than a factor of 100 for the remainder4. Fluctuations may be caused by transient illnesses and vaccinations. Successful therapy causes the viral load to drop to a new level that is maintained until viral resistance develops6. Viral levels differ by many orders of magnitude between individuals. People with high viral loads in the chronic phase tend to progress rapidly to AIDS, whereas those with very low loads tend to be slow progressors or nonprogressors4,5,7,8. During late chronic infection, HIV-1 RNA levels increase slightly, at most tenfold, in many individuals3.

Mathematical modeling is useful for understanding the spread of HIV/AIDS, and various models have been developed to describe the spread of this disease according to its characteristics; see refs9,10,11,12,13. A simple homogeneous AIDS model is given by a system of ODEs in ref.13. The model divides the sexually active population into three classes: susceptibles, infectives and AIDS cases, whose sizes at time t are denoted by S(t), I(t) and A(t), respectively. The class S(t) of sexually mature susceptibles contains sexually mature people who have had no contact with the virus. It increases through maturation of individuals into the sexually mature age group and decreases through infection, which moves individuals to the next compartment, and through natural death. The class I(t) of sexually mature infectives contains sexually mature individuals who are infected with the virus but have not yet developed AIDS symptoms. It decreases through natural death and through progression to symptomatic AIDS after a certain stay in this class. The class A(t) of AIDS cases contains individuals who have developed fully symptomatic AIDS and exhibit specific clinical features; it decreases through natural death and AIDS-related death. The population is assumed to be uniform and homogeneously mixing. The total sexually interacting adult population is denoted by N(t) = S(t) + I(t). A recruitment-death demographic structure is assumed. Individuals enter the susceptible class at a constant rate \(\mu S^{0} > 0\). The natural death rate is assumed to be proportional to the population number in each class, with rate constant μ > 0. The model assumes a constant rate γ > 0 of development to AIDS.
In addition, removal from the AIDS class is assumed to be proportional to the population number in that class, with rate constant δ > 0, which is the sum of the natural mortality rate and the mortality rate due to illness. In most epidemiological models, a bilinear incidence rate is used. That incidence implies that the number of contacts between susceptible and infected individuals is proportional to the number of susceptible individuals. It is more realistic, however, for the rate of infection to depend on the transmission probability per contact and on the proportion of infected individuals in the population. Thus the model assumes a standard incidence of the form \(\frac{\beta \alpha S(t)\,I(t)}{N(t)}\), where β > 0 is the probability of being infected by a new sexual partner, α > 0 is the susceptibility of susceptible individuals, and βα > 0 is the average number of effective contacts of one infective individual per unit time:
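Under the assumptions just listed (recruitment at rate \(\mu S^{0}\), natural death rate μ, progression rate γ, removal rate δ from the AIDS class, and standard incidence), the homogeneous model can be written as follows. This is a sketch assembled from the verbal description, not a verbatim quotation of ref.13:

$$
\begin{aligned}
\frac{dS(t)}{dt} &= \mu\,(S^{0}-S(t))-\frac{\beta \alpha\, S(t)\,I(t)}{N(t)},\\
\frac{dI(t)}{dt} &= \frac{\beta \alpha\, S(t)\,I(t)}{N(t)}-(\mu +\gamma )\,I(t),\\
\frac{dA(t)}{dt} &= \gamma\, I(t)-\delta\, A(t).
\end{aligned}
$$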

where the parameters μ, \(S^{0}\), α, γ and δ belong to \({{\mathbb{R}}}_{+}\). Model (1.1) assumes a homogeneous susceptible population: there is a single group of susceptible individuals, and the mean number of contacts, the mean transmission probability and the mean duration of infection can be defined so that the reproductive number is always the product of these three means. However, genetic evidence now exists that individuals may exhibit differential susceptibility to infection. Host genetic factors play a major role in determining susceptibility to infectious diseases. Further studies are needed to determine hosts' differential susceptibility to various diseases as well as its implications for public health. In ref.13, James M. Hyman and Jia Li formulated compartmental differential susceptibility (DS) susceptible-infective-AIDS (SIA) models by dividing the susceptible population into multiple subgroups according to the susceptibility of the individuals in each group. They derived an explicit formula for the reproductive number of infection for each model, and they further proved that the infection-free equilibrium and the endemic equilibria of each model are globally asymptotically stable. In this paper, we consider a simple differential susceptibility (DS) model in which the infected population is homogeneous13:

in which \(N(t)={\sum }_{k=1}^{n}\,{S}_{k}(t)+I(t)\) and \({S}_{k}(t)\) \((k=1,2,\ldots ,n)\) denote the sizes of the n susceptible subgroups. The susceptibles are distributed into these n subgroups according to their inherent susceptibilities, so that individuals within a group have the same susceptibility while the susceptibilities of individuals from different groups are distinct. The input flow into group \(S_k\) is \(\mu {S}_{k}^{0}\) (k = 1, 2, …, n), \({\alpha }_{k}\) (k = 1, 2, …, n) is the susceptibility of susceptible individuals in subgroup \(S_k\), and \(\frac{\beta {\alpha }_{k}I(t)\,{S}_{k}(t)}{N(t)}\) is the standard incidence of subgroup \(S_k\). Since the dynamics of group A has no effect on the disease transmission dynamics, we only consider
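Written out under the assumptions above (input flow \(\mu S_k^0\), natural death rate μ, progression rate γ, and standard incidence \(\beta\alpha_k I S_k/N\)), a reduced system of this kind would read as follows. This is a sketch inferred from the description, with the AIDS class omitted:

$$
\begin{aligned}
\frac{d{S}_{k}(t)}{dt} &= \mu\,({S}_{k}^{0}-{S}_{k}(t))-\frac{\beta {\alpha }_{k}\,I(t)\,{S}_{k}(t)}{N(t)},\quad k=1,2,\ldots ,n,\\
\frac{dI(t)}{dt} &= \sum _{k=1}^{n}\frac{\beta {\alpha }_{k}\,I(t)\,{S}_{k}(t)}{N(t)}-(\mu +\gamma )\,I(t).
\end{aligned}
$$

Setting \(I = 0\) and the right-hand sides to zero gives \(S_k = S_k^0\), which agrees with the disease-free equilibrium \(E_0\) discussed next.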

Threshold conditions determine whether an infectious disease will spread in a susceptible population once it is introduced. According to ref.14, system (1.3) has the disease-free equilibrium \({E}_{0}({S}_{1}^{0},{S}_{2}^{0},\ldots ,{S}_{n}^{0},0)\).

The reproductive number obtained in ref.13 is

If \(R_0 < 1\), then \(E_0\) is locally asymptotically stable and the disease dies out. If \(R_0 > 1\), then \(E_0\) is unstable and the disease persists (see ref.13). The effective contact rate of an infected individual with subgroup \(S_k\) (k = 1, 2, …, n) is \({\alpha }_{k}\beta \). Hence at the initial time, when \({S}_{k}={S}_{k}^{0}\), the average effective contact rate of an infected individual over all subgroups is \(\frac{\beta {\sum }_{k=1}^{n}\,{\alpha }_{k}{S}_{k}^{0}}{{\sum }_{k=1}^{n}\,{S}_{k}^{0}}\), and \(\frac{1}{\mu +\gamma }\) is the average infectious period of an infected individual. So \(R_0\), the product of these two quantities, is the basic reproductive number.
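As a small numerical illustration of the product described above, the following sketch computes \(R_0\) as the average effective contact rate at \(E_0\) times the mean infectious period \(1/(\mu+\gamma)\). The function name and the parameter values are hypothetical and chosen only for illustration.

```python
def basic_reproduction_number(beta, alpha, s0, mu, gamma):
    """R0 = (average effective contact rate at E0) * (mean infectious period)."""
    if len(alpha) != len(s0):
        raise ValueError("alpha and s0 need one entry per susceptible subgroup")
    # Average effective contact rate: beta * sum_k alpha_k S_k^0 / sum_k S_k^0
    contact_rate = beta * sum(a * s for a, s in zip(alpha, s0)) / sum(s0)
    # Mean infectious period: 1 / (mu + gamma)
    return contact_rate / (mu + gamma)


# Hypothetical values for two susceptible subgroups (illustration only).
r0 = basic_reproduction_number(
    beta=0.5, alpha=[0.2, 0.8], s0=[100.0, 100.0], mu=0.1, gamma=0.15
)
print("R0 =", r0)
```

With equal subgroup sizes, the average susceptibility is simply the arithmetic mean of the \(\alpha_k\); unequal \(S_k^0\) weight the subgroups accordingly.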

It is well recognized that real life is full of randomness and stochasticity, so epidemic models are always affected by environmental noise (see refs15,16,17,18,19,20,21,22). As argued in refs23,24,25,26,27,28, stochastic models may be more suitable epidemic models in many situations. The technique of parameter perturbation was previously used in ref.29 to examine the effect of environmental stochasticity in a model of AIDS and condom use; it was found that the introduction of stochastic noise changes the basic reproduction number of the disease and can stabilize an otherwise unstable system. Thus in this paper, we first introduce white noise to model small perturbations in the environment. To establish the stochastic differential equation (SDE) model, we naturally write the equation in differential form

Here [t, t + dt) is a small time interval and d· denotes the small change of a quantity over it. For example, \(d{S}_{k}(t)={S}_{k}(t+dt)-{S}_{k}(t)\), 1 ≤ k ≤ n, and the change \(d{S}_{k}(t)\) is described by (1.5). Consider the effective contact rate constant \(\beta {\alpha }_{k}\), 1 ≤ k ≤ n, of an infected individual in the deterministic model. The total number of new infections in the small interval [t, t + dt) is

Now suppose that some stochastic environmental factors act simultaneously on each subgroup. In this case, \(\beta {\alpha }_{k}\), 1 ≤ k ≤ n, becomes a random variable \(\widetilde{\beta {\alpha }_{k}}\), 1 ≤ k ≤ n. More precisely

Here \(d{B}_{k}(t)={B}_{k}(t+dt)-{B}_{k}(t)\) (k = 1, 2, …, n) is the increment of a standard Brownian motion, \({B}_{k}(t)\) (k = 1, 2, …, n) are independent standard Brownian motions with \({B}_{k}(0)=0\), and \({\sigma }_{k}^{2} > 0\) \((k=1,2,\ldots ,n)\) denote the intensities of the white noise. Thus the perturbed effective contact rate \(\widetilde{\beta {\alpha }_{k}}\,dt\) of each subgroup \(S_k\), 1 ≤ k ≤ n, over [t, t + dt) is normally distributed with mean \(\beta {\alpha }_{k}\,dt\) and variance \({\sigma }_{k}^{2}\,dt\).

Therefore we replace \(\beta {\alpha }_{k}\,dt\) in equation (1.5) by \(\widetilde{\beta {\alpha }_{k}}\,dt=\beta {\alpha }_{k}\,dt+{\sigma }_{k}\,d{B}_{k}(t)\) to get

Note that \(\beta {\alpha }_{i}\,dt\) now denotes the mean of the stochastic number of \(S_i\) infected in the infinitesimally small time interval [t, t + dt). Similarly, the first equation of (1.3) becomes another SDE. That is, the deterministic infectious disease model (1.3) becomes the Itô SDE
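Carrying out the substitution \(\beta\alpha_k\,dt \to \beta\alpha_k\,dt + \sigma_k\,dB_k(t)\) term by term in the deterministic model would give a system of the following form. This is a sketch under the assumption that the noise enters only through the incidence terms; it is not a quotation of the authors' display:

$$
\begin{aligned}
d{S}_{k}(t) &= \left[\mu\,({S}_{k}^{0}-{S}_{k}(t))-\frac{\beta {\alpha }_{k}\,{S}_{k}(t)\,I(t)}{N(t)}\right]dt-\frac{{\sigma }_{k}\,{S}_{k}(t)\,I(t)}{N(t)}\,d{B}_{k}(t),\quad k=1,2,\ldots ,n,\\
dI(t) &= \left[\sum _{k=1}^{n}\frac{\beta {\alpha }_{k}\,{S}_{k}(t)\,I(t)}{N(t)}-(\mu +\gamma )\,I(t)\right]dt+\sum _{k=1}^{n}\frac{{\sigma }_{k}\,{S}_{k}(t)\,I(t)}{N(t)}\,d{B}_{k}(t).
\end{aligned}
$$

In this form each noise term leaving a susceptible subgroup reappears with the opposite sign in the equation for I, so the total population is perturbed only through the drift.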

Other parameters are the same as in system (1.3). Besides white noise, epidemic models may be disturbed by another type of environmental noise, namely colored noise, also called telegraph noise (see refs30 and 31), which makes population systems switch from one regime to another. Telegraph noise can be illustrated as a switching between two or more environmental regimes that differ in factors such as nutrition or rainfall32,33,34,35. For example, the growth rate of some fish in the dry season is very different from that in the rainy season. The regimes may also differ in climatic characteristics or socio-cultural factors, and the latter may cause the disease to spread faster or slower. Frequently, the switching among different environments is memoryless and the waiting time for the next switch is exponentially distributed36. Therefore the regime switching can be modeled by a continuous-time finite-state Markov chain \({(\xi (t))}_{t\ge 0}\) with values in a finite state space \( {\mathcal M} =\{1,2,\ldots ,L\}\). Because the HIV epidemic model may be influenced by different social cultures, we introduce telegraph noise to describe the spread of HIV between different socio-cultural regimes, which represents a large disturbance in the environment. This leads to the following stochastic DS-I-A model disturbed by both white and telegraph noise.

The switching between these L regimes is governed by a Markov chain on the state space \( {\mathcal M} =\{1,2,\ldots ,L\}\). The DS-I-A systems under regime switching can therefore be described by the following stochastic model (SDE):

where ξ(t) is a continuous-time Markov chain with values in the finite state space \( {\mathcal M} =\{1,2,\ldots ,L\}\), and the parameters \(\mu (l)\), \({S}_{k}^{0}(l)\), \({\sigma }_{k}(l)\), \(\beta (l)\), \({\alpha }_{k}(l)\), \(\gamma (l)\), \(k=1,2,\ldots ,n\), are all positive constants for each \(l\in {\mathcal M} \). This system operates as follows. If \(\xi (0)={l}_{1}\), the system obeys (1.7) with \(l={l}_{1}\) until time \({\tau }_{1}\), when the Markov chain jumps from \({l}_{1}\) to \({l}_{2}\); the system then obeys (1.7) with \(l={l}_{2}\) from \({\tau }_{1}\) until \({\tau }_{2}\), when the Markov chain jumps from \({l}_{2}\) to \({l}_{3}\). The system continues to switch as long as the Markov chain jumps. In other words, the SDE (1.6) can be regarded as the systems (1.7) switching from one to another according to the law of the Markov chain. Each system (1.7), \(l\in {\mathcal M} \), is hence called a subsystem of the SDE (1.6). We aim to investigate positive recurrence and extinction. Since system (1.6) is perturbed by both white and telegraph noise, the existence of positive recurrence of the solutions is an important issue. However, to the best of our knowledge, there has been no result on this question, and in this paper we attempt to fill the gap. The theory we use was developed by Zhu and Yin37. The key difficulty is how to construct a suitable Lyapunov function and a bounded domain. One of the main aims of this paper is therefore to establish sufficient conditions for the existence of positive recurrence of the solutions to system (1.6).
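The switching mechanism described above can be sketched numerically with an Euler–Maruyama scheme in which the regime is held for an exponentially distributed time and then changed according to the off-diagonal rates of the generator. The SDE form used in the code (noise entering only through the incidence terms, \((\beta\alpha_k\,dt + \sigma_k\,dB_k)\,S_k I/N\)) is an assumption inferred from the perturbation rule, and the function name, the parameter dictionaries and all numerical values are hypothetical. Jump times are rounded to the time grid, so this is an approximation rather than an exact simulation.

```python
import math
import random


def simulate_switching_dsia(s_init, i_init, params, generator, regime0, dt, steps, seed=0):
    """Euler-Maruyama path of an ASSUMED regime-switching DS-I-A SDE.

    params[l] holds the constants of regime l: mu, gamma, beta (floats) and
    s0, alpha, sigma (lists with one entry per susceptible subgroup).
    Returns (s_path, i_path, regime_path), each of length steps + 1.
    """
    rng = random.Random(seed)
    s, i, regime = list(s_init), float(i_init), regime0
    t = 0.0
    # Exponentially distributed waiting time in the current regime.
    next_jump = rng.expovariate(-generator[regime][regime])
    s_path, i_path, regime_path = [list(s)], [i], [regime]
    for _ in range(steps):
        p = params[regime]
        n_total = sum(s) + i
        d_i = -(p["mu"] + p["gamma"]) * i * dt
        new_s = []
        for k, s_k in enumerate(s):
            frac = s_k * i / n_total                 # S_k I / N
            d_b = rng.gauss(0.0, math.sqrt(dt))      # Brownian increment dB_k
            # Perturbed incidence: (beta alpha_k dt + sigma_k dB_k) S_k I / N
            infected = (p["beta"] * p["alpha"][k] * dt + p["sigma"][k] * d_b) * frac
            new_s.append(s_k + p["mu"] * (p["s0"][k] - s_k) * dt - infected)
            d_i += infected
        s, i = new_s, i + d_i
        t += dt
        if t >= next_jump:
            # Jump to regime j != l with probability proportional to gamma_lj.
            targets = [j for j in range(len(generator)) if j != regime]
            weights = [generator[regime][j] for j in targets]
            regime = rng.choices(targets, weights=weights)[0]
            next_jump = t + rng.expovariate(-generator[regime][regime])
        s_path.append(list(s))
        i_path.append(i)
        regime_path.append(regime)
    return s_path, i_path, regime_path


# Hypothetical two-regime, two-subgroup parameters (illustration only).
demo_params = [
    {"mu": 0.1, "gamma": 0.2, "beta": 0.9, "s0": [60.0, 40.0],
     "alpha": [0.3, 0.8], "sigma": [0.2, 0.3]},
    {"mu": 0.1, "gamma": 0.3, "beta": 0.5, "s0": [60.0, 40.0],
     "alpha": [0.2, 0.6], "sigma": [0.4, 0.5]},
]
demo_generator = [[-1.0, 1.0], [2.0, -2.0]]
s_path, i_path, regime_path = simulate_switching_dsia(
    [60.0, 40.0], 5.0, demo_params, demo_generator, regime0=0, dt=0.01, steps=1000, seed=42
)
```

Regimes are indexed from 0 in the code, whereas the text uses \(\{1,\ldots,L\}\). Euler–Maruyama does not preserve positivity in general, so a small step size is advisable.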

This paper is organized as follows. In Section 2, we present some preliminaries that will be used in the subsequent analysis. In Section 3, we show that there exists a unique global positive solution of system (1.8). In Section 4, we establish sufficient conditions for extinction of the disease. The condition for the disease to be persistent is given in Section 5. In Section 6, we show the existence of positive recurrence.

Preliminaries

Throughout this paper, unless otherwise specified, let \(({\rm{\Omega }}, {\mathcal F} ,{\{{ {\mathcal F} }_{t}\}}_{t\ge 0},P)\) be a complete probability space with a filtration \({\{{ {\mathcal F} }_{t}\}}_{t\ge 0}\) satisfying the usual conditions (i.e., it is right continuous and \({ {\mathcal F} }_{0}\) contains all P-null sets). Denote

We consider the general (n + 1)-dimensional stochastic differential equation

with initial value \(x({t}_{0})={x}_{0}\in {{\mathbb{R}}}^{n+1}\), where B(t) denotes an (n + 1)-dimensional standard Brownian motion defined on the above probability space.

Define the differential operator \( {\mathcal L} \) associated with Eq. (2.1) by

If \( {\mathcal L} \) acts on a function \(V\in {C}^{2,1}({{\mathbb{R}}}^{n+1}\times {{\mathbb{R}}}_{+};{{\mathbb{R}}}_{+})\), then

where \({V}_{t}=\frac{\partial V}{\partial t}\), \({V}_{x}=(\frac{\partial V}{\partial {x}_{1}},\ldots ,\frac{\partial V}{\partial {x}_{n+1}})\) and \({V}_{xx}={(\frac{{\partial }^{2}V}{\partial {x}_{i}\partial {x}_{j}})}_{(n+1)\times (n+1)}\). By Itô's formula, if x(t) is a solution of Eq. (2.1), then

In Eq. (2.1), we assume that f(0, t) = 0 and g(0, t) = 0 for all t ≥ t 0. So x(t) ≡ 0 is a solution of Eq. (2.1), called the trivial solution or equilibrium position.

By the definition of stochastic differential, the equation (2.1) is equivalent to the following stochastic integral equation

For any vector g = (g(1), …, g(L)), set \(\hat{g}={{\rm{\min }}}_{k\in {\mathcal M} }\,\{g(k)\}\) and \(\breve{g}={{\rm{\max }}}_{k\in {\mathcal M} }\,\{g(k)\}\). Suppose the generator \({\rm{\Gamma }}={({\gamma }_{ij})}_{L\times L}\) of the Markov chain is given by

where δ > 0 and, for i ≠ j, \({\gamma }_{ij}\ge 0\) is the transition rate from i to j, while \({\sum }_{j=1}^{L}\,{\gamma }_{ij}=0\). In this paper, we assume \({\gamma }_{ij} > 0\) for any i ≠ j. Assume further that the Markov chain ξ(t) is irreducible and has a unique stationary distribution \(\pi =({\pi }_{1},{\pi }_{2},\ldots ,{\pi }_{L})\), which can be determined by the equation

subject to

We assume that the Brownian motions and the Markov chain are independent.
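For a concrete generator, the stationary distribution can be approximated numerically. The sketch below assumes the standard characterization \(\pi\Gamma = 0\) with \(\sum_{l}\pi_l = 1\) and uses power iteration on the uniformized matrix \(P = I + \Gamma/\lambda\); this is one convenient technique among several, not a method taken from the paper. The function name and the example generator are hypothetical.

```python
def stationary_distribution(generator, tol=1e-12, max_iter=100_000):
    """Approximate pi with pi * Gamma = 0 and sum(pi) = 1 by power iteration.

    Assumes an irreducible generator with positive off-diagonal rates, as in
    the text, so that the exit rates -gamma_ii are strictly positive.
    """
    size = len(generator)
    # Uniformization: P = I + Gamma / lam is a stochastic matrix whenever
    # lam is at least as large as every exit rate; doubling keeps P aperiodic.
    lam = 2.0 * max(-generator[i][i] for i in range(size))
    p = [[(1.0 if i == j else 0.0) + generator[i][j] / lam for j in range(size)]
         for i in range(size)]
    pi = [1.0 / size] * size
    for _ in range(max_iter):
        new = [sum(pi[i] * p[i][j] for i in range(size)) for j in range(size)]
        if max(abs(a - b) for a, b in zip(new, pi)) < tol:
            total = sum(new)
            return [x / total for x in new]
        pi = new
    raise RuntimeError("power iteration did not converge")


# Hypothetical two-regime generator (illustration only).
demo_generator = [[-1.0, 1.0], [2.0, -2.0]]
demo_pi = stationary_distribution(demo_generator)
print(demo_pi)
```

For two regimes the result can be checked by hand: \(\pi = (\gamma_{21}, \gamma_{12})/(\gamma_{12}+\gamma_{21})\), so the chain spends more time in the regime it leaves more slowly.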

Let (X(t), ξ(t)) be the diffusion process described by the following equation:

where \(b(\cdot ,\cdot ):{{\mathbb{R}}}^{n}\times {\mathcal M} \to {{\mathbb{R}}}^{n},\sigma (\cdot ,\cdot ):{{\mathbb{R}}}^{n}\times {\mathcal M} \to {{\mathbb{R}}}^{n\times n}\), and \(D(x,l)=\sigma (x,l){\sigma }^{T}(x,l)=({d}_{ij}(x,l))\). For each \(l\in {\mathcal M} \), let V(·, l) be any twice continuously differentiable function; the operator \( {\mathcal L} \) is then defined by

Existence and Uniqueness of Positive Solution

In this section we first show that the solution of system (1.8) is positive and global. To guarantee a unique global (i.e., no explosion in finite time) solution for any initial value, the coefficients of an SDE are generally required to satisfy the linear growth condition and the local Lipschitz condition. However, the coefficients of system (1.8) do not satisfy the linear growth condition, as the term \(\frac{\beta {\alpha }_{k}{S}_{k}(t)\,I(t)}{N(t)}\) is nonlinear, so the solution of system (1.8) might explode in finite time. We therefore show that the solution of system (1.8) is positive and global by using the Lyapunov analysis method.

Theorem 3.1

There is a unique positive solution \(X(t)=({S}_{1}(t),{S}_{2}(t),\ldots ,{S}_{n}(t),I(t))\) of system (1.8) on t ≥ 0 for any initial value \(({S}_{1}(0),{S}_{2}(0),\ldots ,{S}_{n}(0),I(0))\in {{\mathbb{R}}}_{+}^{n+1}\), and the solution remains in \({{\mathbb{R}}}_{+}^{n+1}\) with probability 1; namely, \(({S}_{1}(t),{S}_{2}(t),\ldots ,{S}_{n}(t),I(t))\in {{\mathbb{R}}}_{+}^{n+1}\) for all t ≥ 0 almost surely.

Proof. Since the coefficients of the equation are locally Lipschitz continuous, for any given initial value \(({S}_{1}(0),{S}_{2}(0),\ldots ,{S}_{n}(0),I(0))\in {{\mathbb{R}}}_{+}^{n+1}\) there is a unique local solution \(({S}_{1}(t),{S}_{2}(t),\ldots ,{S}_{n}(t),I(t))\) on \(t\in [0,{\tau }_{e})\), where \({\tau }_{e}\) is the explosion time18. To show that the solution is global, we only need to verify that \({\tau }_{e}=\infty \) a.s. Let \({m}_{0} > 0\) be sufficiently large that every component of X(0) lies within the interval \([1/{m}_{0},{m}_{0}]\). For each integer \(m\ge {m}_{0}\), we define the stopping time

where we set \({\rm{\inf }}\,\emptyset =\infty \) (as usual, \(\emptyset \) denotes the empty set) throughout the paper. By definition, \({\tau }_{m}\) is increasing as m → ∞. Set \({\tau }_{\infty }=\mathop{\mathrm{lim}}\limits_{m\to \infty }\,{\tau }_{m}\); then \({\tau }_{\infty }\le {\tau }_{e}\) a.s. If we can prove that \({\tau }_{\infty }=\infty \) a.s., then \({\tau }_{e}=\infty \) and \(({S}_{1}(t),{S}_{2}(t),\ldots ,{S}_{n}(t),I(t))\in {{\mathbb{R}}}_{+}^{n+1}\) a.s. for all t ≥ 0. In other words, to complete the proof we only need to show that \({\tau }_{\infty }=\infty \) a.s. If this assertion were false, there would exist a pair of constants T > 0 and ε ∈ (0, 1) such that

Hence there is an integer m 1 ≥ m 0 such that

For t ≤ τ m , we can see, for each m,

Therefore

Let \(C\,:=\,{\rm{\max }}\,\{{\sum }_{k=1}^{n}\,{{\check{S}}}_{k}^{0},{\sum }_{k=1}^{n}\,{S}_{k}(0)+I(0)\}\). Define a \({C}^{2}\)-function \(V:{{\mathbb{R}}}_{+}^{n+1}\to {{\mathbb{R}}}_{+}\) by

The non-negativity of this function can be seen from \(u-1-\,\mathrm{log}\,u\ge 0\) for all \(u > 0\). Let \(m\ge {m}_{0}\) and T > 0 be arbitrary; then by Itô's formula one obtains

where M is a positive constant which is independent of S 1, S 2, …, S n , I and t. The remainder of the proof follows that in ref.38.

Remark 3.1

From Theorem 3.1, for any initial value \(({S}_{1}(0),{S}_{2}(0),\ldots ,{S}_{n}(0),I(0))\in {{\mathbb{R}}}_{+}^{n+1}\), system (1.8) almost surely has a unique global solution \(({S}_{1}(t),{S}_{2}(t),\ldots ,{S}_{n}(t),I(t))\in {{\mathbb{R}}}_{+}^{n+1}\). Hence

and

If \({\sum }_{k=1}^{n}\,{S}_{k}(0)+I(0) < {\sum }_{k=1}^{n}\,{{\check{S}}}_{k}^{0}\), then \({\sum }_{k=1}^{n}\,{S}_{k}(t)+I(t) < {\sum }_{k=1}^{n}\,{{\check{S}}}_{k}^{0}\) a.s. Thus the region

is a positively invariant set of system (1.8).

Extinction

Another main concern in epidemiology is how to regulate the disease dynamics so that the disease is eradicated in the long term. In this section, we give sufficient conditions for the extinction of the disease in the stochastic model (1.8).

Theorem 4.1

Suppose that one of the following conditions is satisfied:

(i) \({\bar{R}}_{0}^{\ast }:=\frac{{\sum }_{k=1}^{n}\,{\sum }_{l=1}^{L}\,{\pi }_{l}\frac{{\beta }^{2}(l){\alpha }_{k}^{2}(l)}{2{\sigma }_{k}^{2}(l)}}{{\sum }_{l=1}^{L}\,{\pi }_{l}(\mu (l)+\gamma (l))} < 1\). Then the disease I(t) will die out exponentially with probability one, i.e.,

$$\mathop{\mathrm{lim}\,{\rm{\sup }}}\limits_{t\to \infty }\,\frac{\mathrm{ln}\,I(t)}{t}\le \sum _{l=1}^{L}\,{\pi }_{l}(\mu (l)+\gamma (l))\,({\bar{R}}_{0}^{\ast }-1) < 0\quad a.s.$$

or

(ii) \({\sigma }_{k}^{2}(l) < \beta (l)\,{\alpha }_{k}(l)\) for all \(k=1,2,\ldots ,n\) and all \(l\in {\mathcal M} \), and \({\bar{R}}_{0}^{\ast }:=\frac{{\sum }_{k=1}^{n}\,{\sum }_{l=1}^{L}\,{\pi }_{l}\beta (l)\,{\alpha }_{k}(l)}{{\sum }_{l=1}^{L}\,{\pi }_{l}(\mu (l)+\gamma (l)+{\sum }_{k=1}^{n}\,\frac{{\sigma }_{k}^{2}(l)}{2})} < 1\). Then the disease I(t) will die out exponentially with probability one, i.e.,

Proof

Applying Itô's formula to ln I(t), one has

Let \({z}_{k}=\frac{{S}_{k}}{N}\), k = 1, 2, …, n, so that \(0 < {z}_{k}\le 1\). For \(l\in {\mathcal M} \) we obtain

Case 1: We can obtain:

where k = 1, 2, …, n.

By the ergodic property of ξ(t), we obtain:

Integrating (4.3) from 0 to t, we have

By the strong law of large numbers (ref.18), we obtain

Taking the superior limit on both sides of (4.4) and combining with (4.5), one arrives at

Case 2: When \(1 < \frac{\beta (l)\,{\alpha }_{k}(l)}{{\sigma }_{k}^{2}(l)}\), that is, \({\sigma }_{k}^{2}(l) < \beta (l)\,{\alpha }_{k}(l)\), we have \(f({z}_{k})\le f(1)\), and we obtain:

where \(k=1,2,\ldots ,n\) and \(l\in {\mathcal M} \).

By the ergodic property of ξ(t), we obtain:

Integrating (4.7) from 0 to t, we have

By the strong law of large numbers (ref.18), we obtain

Taking the superior limit on both sides of (4.4) and combining with (4.5), one arrives at

which implies that \({\mathrm{lim}}_{t\to \infty }\,I(t)=0\) a.s. Thus the disease I(t) will tend to zero exponentially with probability one.

By system (1.8) and (1.2), it is easy to see that when \({\mathrm{lim}}_{t\to \infty }\,I(t)=0\) a.s., then \({\mathrm{lim}}_{t\to \infty }\,A(t)=0\) a.s. This completes the proof.

Remark 4.1 Sufficient criteria for extinction of the HIV infection are established for the stochastic system. Condition (i) of Theorem 4.1 says that if the noise is strong, then the disease dies out. Condition (ii) of Theorem 4.1 says that if the noise is weak, then the disease also dies out under a specific condition.
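The two threshold quantities of Theorem 4.1 are straightforward to evaluate once the stationary distribution and the regime parameters are known. The following sketch transcribes the two formulas for \(\bar{R}_0^{\ast}\); the function names, the list-based data layout (`alpha[l][k]`, `sigma[l][k]`) and the numerical values are hypothetical.

```python
def threshold_strong_noise(pi, beta, alpha, sigma, mu, gamma):
    """Quantity in condition (i): sum_k sum_l pi_l beta(l)^2 alpha_k(l)^2 / (2 sigma_k(l)^2),
    divided by sum_l pi_l (mu(l) + gamma(l))."""
    regimes = range(len(pi))
    num = sum(
        pi[l] * (beta[l] * alpha[l][k]) ** 2 / (2.0 * sigma[l][k] ** 2)
        for l in regimes for k in range(len(alpha[l]))
    )
    den = sum(pi[l] * (mu[l] + gamma[l]) for l in regimes)
    return num / den


def threshold_weak_noise(pi, beta, alpha, sigma, mu, gamma):
    """Quantity in condition (ii), or None when the side condition
    sigma_k(l)^2 < beta(l) alpha_k(l) fails for some k and l."""
    regimes = range(len(pi))
    for l in regimes:
        for k in range(len(alpha[l])):
            if not sigma[l][k] ** 2 < beta[l] * alpha[l][k]:
                return None
    num = sum(pi[l] * beta[l] * alpha[l][k] for l in regimes for k in range(len(alpha[l])))
    den = sum(
        pi[l] * (mu[l] + gamma[l] + sum(s ** 2 for s in sigma[l]) / 2.0)
        for l in regimes
    )
    return num / den


# Hypothetical two-regime, two-subgroup data (illustration only).
pi = [2.0 / 3.0, 1.0 / 3.0]
beta = [0.4, 0.3]
alpha = [[0.3, 0.5], [0.2, 0.4]]
sigma = [[0.2, 0.3], [0.2, 0.3]]
mu = [0.2, 0.2]
gamma = [0.3, 0.4]
r_strong = threshold_strong_noise(pi, beta, alpha, sigma, mu, gamma)
r_weak = threshold_weak_noise(pi, beta, alpha, sigma, mu, gamma)
print("condition (i) quantity:", r_strong)
print("condition (ii) quantity:", r_weak)
```

Either quantity being below 1 (together with the side condition in case (ii)) is sufficient for extinction according to the theorem; neither is claimed to be necessary.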

Persistence

Definition 5.1 System (1.8) is said to be persistent in the mean if

We define a parameter

Theorem 5.1

Assume that \({R}_{0}^{s} > 1\), then for any initial value (S 1(0), S 2(0), …, S n (0), I(0), ξ(0)) ∈ Γ* the solution (S 1(t), S 2(t), …, S n (t), I(t), ξ(t)) of system (1.8) has the following property:

where k = 1, 2, …, n.

Proof.

where k = 1, 2, …, n,

Hence we define

with

in which k = 1, 2, …, n.

Using Itô's formula and the basic inequality \(\frac{a+b+c}{3}\ge \sqrt[3]{abc}\) for nonnegative a, b, c, one can write

By the definitions of \({c}_{k}\) and \({a}_{k}\), k = 1, 2, …, n, we obtain

in which k = 1, 2, …, n.

By the ergodic property of ξ(t), we obtain:

Integrating (5.3) from 0 to t and dividing by t, we get

Since \({\sum }_{k=1}^{n}\,{S}_{k}(t)+I(t)\le C\), we obtain

By the strong law of large numbers (ref.18), we obtain

Taking the superior limit on both sides of (5.4) and combining with (5.5), (5.6) and (5.7), one arrives at

Therefore, by the condition \({R}_{0}^{s} > 1\), we have assertion (5.2). This completes the proof of Theorem 5.1.

Remark 5.1 Theorem 5.1 tells us that if its condition is satisfied, then the disease will persist. The conditions for the persistence of the HIV infection in the stochastic system are sufficient but not necessary.

Positive Recurrence

In this section, we show the persistence of the disease in the population from another point of view. Precisely, we find a domain \(D\subset {{\rm{\Gamma }}}^{\ast }\) with respect to which the process \(({S}_{1}(t),{S}_{2}(t),\ldots ,{S}_{n}(t),I(t))\) is positive recurrent. In general, the process \({X}_{t}^{x}\) with \({X}_{0}=x\) is recurrent with respect to D if, for any \(x\notin D\), \({\mathbb{P}}({\tau }_{D} < +\infty )=1\), where \({\tau }_{D}\) is the hitting time of D for the process \({X}_{t}^{x}\), that is

The process \({X}_{t}^{x}\) is said to be positive recurrent with respect to D if \({\mathbb{E}}({\tau }_{D}) < +\infty \), for any \(x\notin D\).

Theorem 6.1

Let \(({S}_{1}(t),{S}_{2}(t),\ldots ,{S}_{n}(t),I(t),\xi (t))\) be the solution of system (1.8) with initial value \(({S}_{1}(0),{S}_{2}(0),\ldots ,{S}_{n}(0),I(0),\xi (0))\in {{\rm{\Gamma }}}^{\ast }\times {\mathcal M} \). Assume that \({R}_{0}^{s} > 1\) (with \({R}_{0}^{s}\) as defined in Section 5). Then \(({S}_{1}(t),{S}_{2}(t),\ldots ,{S}_{n}(t),I(t),\xi (t))\) is positive recurrent with respect to the domain \(D\times {\mathcal M} \), where

in which \({\varepsilon }_{1},{\varepsilon }_{2},{\varepsilon }_{3}\) are sufficiently small constants.

Proof. Since the coefficients of (1.8) are constants in each regime, it is not difficult to show that they satisfy (5.1), (5.2). For every initial value \(({S}_{1}(0),{S}_{2}(0),\ldots ,{S}_{n}(0),I(0),\xi (0))\in {{\rm{\Gamma }}}^{\ast }\times {\mathcal M} \), the solution of (1.8) is regular by Theorem 3.1.

Define a \({C}^{2}\)-function

in which \(U({S}_{1},{S}_{2},\ldots ,{S}_{n},I)\) is defined in Section 5. Let \({(w(1),w(2),\ldots ,w(L))}^{T}\) be the solution of the following Poisson system

where \({\bar{R}}_{0}={({\bar{R}}_{0}\mathrm{(1)},{\bar{R}}_{0}\mathrm{(2)},\ldots ,{\bar{R}}_{0}(L))}^{T}\) then

By c k , a k , k = 1, 2, …, n, we obtain

in which k = 1, 2, …, n. Then we get

in which \({R}_{0}^{s}\) is defined in (5.1). And the following condition for M > 0 is satisfied

It is easy to check that

In addition, \(\widehat{V}({S}_{1},{S}_{2},\ldots ,{S}_{n},I,l)\) is a continuous function on \({\bar{U}}_{k}\). Therefore \(\widehat{V}({S}_{1},{S}_{2},\ldots ,{S}_{n},I,l)\) has a minimum value point \(({\bar{S}}_{1},{\bar{S}}_{2},\ldots ,{\bar{S}}_{n},\bar{I},l)\) in the interior of \({{\rm{\Gamma }}}^{\ast }\times {\mathcal M} \). Then we define a nonnegative \({C}^{2}\)-function \(V:{{\rm{\Gamma }}}^{\ast }\times {\mathcal M} \to {\mathbb{R}}\) as follows

The differential operator \( {\mathcal L} \) acting on the function V leads to

Consider the bounded open subset

and \({\varepsilon }_{i} > 0\) (i = 1, 2, 3) are sufficiently small constants. In the set Γ*\D, we can choose \({\varepsilon }_{i}\) (i = 1, 2, 3) sufficiently small such that the following conditions hold

For convenience, we divide Γ*\D into the following n + 2 domains,

Clearly, \({D}^{c}={D}_{1}\cup {D}_{2}\cup {D}_{3}\cup \cdots \cup {D}_{n+2}\). Next we prove that \( {\mathcal L} V({S}_{1},{S}_{2},\ldots ,{S}_{n},I,l)\le -1\) on \({D}^{c}\times {\mathcal M} \), which is equivalent to showing it on the above n + 2 domains.

Case 1. If (S 1, S 2, …, S n , I) ∈ D k , (k = 1, 2, …, n), then

In view of (6.3), one has

Case 2. If (S 1, S 2, …, S n , I) ∈ D n+1, then

According to (6.4) one can see that

Combining with (6.2) and (6.6), one has for sufficiently small ε 1,

Case 3. If (S 1, S 2, …, S n , I) ∈ D n+2, then

In view of (6.8), one has

Obviously, from (6.9), (6.10), and (6.11) one can obtain that for a sufficiently small ε i (i = 1, 2, 3),

Now, let \(({S}_{1}(0),{S}_{2}(0),\ldots ,{S}_{n}(0),I(0))\in {D}^{c}\). By the generalized Itô formula established by Skorokhod39 (Lemma 3, p. 104) and (6.12), we obtain

Thus, by the positivity of V, one can deduce that

The proof is complete.

Remark 6.1 Theorem 6.1 tells us that if its condition is satisfied, then the disease in system (1.8) remains bounded, in analogy with the corresponding deterministic system. That is to say, the disease can neither spread to the whole susceptible population nor go extinct.

Conclusion

In this paper, we extended the classical DS-I-A epidemic model from a deterministic framework to a stochastic one by incorporating both white and colored environmental noise, and we studied the long-term behavior of the resulting stochastic model. We established conditions for extinction and for persistence of the disease, both of which are sufficient but not necessary. We also proved that the DS-I-A model (1.8) is positive recurrent. In Section 4 we discussed conditions for extinction of the disease, under which the disease can neither spread nor become endemic. In Sections 5 and 6 we discussed the condition for persistence of the disease and explored its long-term behavior when it persists. In that case the disease is bounded: it will be endemic, but it can neither spread to the whole susceptible population nor go extinct.

It is interesting to find that if the condition of Theorem 5.1 is satisfied, then every individual subsystem of (1.8) is stochastically permanent. Hence Theorem 5.1 tells us that if every individual subsystem is stochastically permanent, then as the result of Markovian switching, the overall behavior, i.e. SDE (1.8), remains stochastically permanent. On the other hand, if the condition of Theorem 4.1 is satisfied for every \(l\in {\mathcal M} \), then every individual subsystem of (1.8) is extinctive. Hence Theorem 4.1 tells us that if every individual subsystem is extinctive, then as the result of Markovian switching, the overall behavior of SDE (1.8) remains extinctive. However, Theorems 4.1 and 5.1 provide a more interesting result: if some individual subsystems of (1.8) are stochastically permanent while others are extinctive, then, again as the result of Markovian switching, the overall behavior of SDE (1.8) may be stochastically permanent or extinctive, depending on the stationary distribution \(({\pi }_{1},\ldots ,{\pi }_{L})\) of the Markov chain ξ(t).

Thus the results show that the stationary distribution \(({\pi }_{1},\ldots ,{\pi }_{L})\) of the Markov chain ξ(t) plays a very important role in determining extinction or persistence of the epidemic in the population. We derived explicit conditions for extinction or persistence of the epidemic:

(1) Suppose that one of the following conditions is satisfied:

(i) \({\bar{R}}_{0}^{\ast }:=\frac{{\sum }_{k=1}^{n}\,{\sum }_{l=1}^{L}\,{\pi }_{l}\frac{{\beta }^{2}(l)\,{\alpha }_{k}^{2}(l)}{2{\sigma }_{k}^{2}(l)}}{{\sum }_{l=1}^{L}\,{\pi }_{l}(\mu (l)+\gamma (l))} < 1\). Then the disease I(t) will die out exponentially with probability one, i.e.,

$$\mathop{\mathrm{lim}\,{\rm{\sup }}}\limits_{t\to \infty }\,\frac{\mathrm{ln}\,I(t)}{t}\le \sum _{l=1}^{L}{\pi }_{l}(\mu (l)+\gamma (l))\,({\bar{R}}_{0}^{\ast }-1) < 0\quad a.s.$$

or

(ii) \({\sigma }_{k}^{2}(l) < \beta (l)\,{\alpha }_{k}(l)\) for all \(k=1,2,\ldots ,n\) and all \(l\in {\mathcal M} \), and \({\bar{R}}_{0}^{\ast }:=\frac{{\sum }_{k=1}^{n}\,{\sum }_{l=1}^{L}\,{\pi }_{l}\beta (l)\,{\alpha }_{k}(l)}{{\sum }_{l=1}^{L}\,{\pi }_{l}(\mu (l)+\gamma (l)+{\sum }_{k=1}^{n}\,\frac{{\sigma }_{k}^{2}(l)}{2})} < 1\). Then the disease I(t) will die out exponentially with probability one, i.e.,

That is to say, the disease will die out: it can neither spread nor become endemic.

(2) Let \(({S}_{1}(t),{S}_{2}(t),\ldots ,{S}_{n}(t),I(t),\xi (t))\) be the solution of system (1.8) with initial value \(({S}_{1}(0),{S}_{2}(0),\ldots ,{S}_{n}(0),I(0),\xi (0))\in {{\rm{\Gamma }}}^{\ast }\times {\mathcal M} \). Assume that \({R}_{0}^{s} > 1\), where

then \(({S}_{1}(t),{S}_{2}(t),\ldots ,{S}_{n}(t),I(t),\xi (t))\) is persistent and is positive recurrent with respect to the domain \(D\times {\mathcal M} \), where

in which \({\varepsilon }_{1},{\varepsilon }_{2},{\varepsilon }_{3}\) are sufficiently small constants.

That is to say, the disease will persist, but it can neither spread to the whole susceptible population nor go extinct.

We have illustrated our theoretical results with computer simulations. This paper is only a first step in introducing regime switching into an epidemic model. In future investigations, we plan to introduce white and colored noise into more realistic epidemic models.

Some interesting topics deserve further consideration. On the one hand, one may propose more realistic but more complex models, for example by considering the effects of impulsive perturbations on system (1.8). On the other hand, it remains to be shown that the methods used in this paper can also be applied to other epidemic models. We leave these questions for future work.

References

Pratt, R. D., Shapiro, J. F., McKinney, N., Kwok, S. & Spector, S. A. Virologic characterization of primary HIV-1 infection in a health care worker following needlestick injury. J. Infect. Dis. 172, 851 (1995).

Quinn, T. C. Acute primary HIV infection. JAMA 278, 58 (1997).

Henrard, D. R., Phillips, J. F., Muenz, L. R., Blattner, W. A., Weisner, D., Eyster, M. E. & Goedert, J. J. Natural history of HIV-1 cell-free viremia. JAMA 274, 554 (1995).

O’Brien, T. R. et al. Serum HIV-1 RNA levels and time to development of AIDS in the multicenter hemophilia cohort study. JAMA 276, 105 (1996).

Wong, M. T. et al. Patterns of virus burden and T cell phenotype are established early and are correlated with the rate of disease progression in HIV type 1 infected persons. J. Infect. Dis. 173, 877 (1996).

Piatak, M. et al. High levels of HIV-1 in plasma during all stages of Infection determined by competitive PCR. Science 259, 1749 (1993).

Baltimore, D. Lessons from people with nonprogressive HIV infection. N. Engl. J. Med. 332, 259 (1995).

Cao, Y., Qin, L., Zhang, L., Safrit, J. & Ho, D. D. Virologic and immunologic characterization of long-term survivors of HIV type 1 infection. N. Engl. J. Med. 332, 201 (1995).

Anderson, R. M., Medley, G. F., May, R. M. & Johnson, A. M. A preliminary study of the transmission dynamics of the human immunodeficiency virus (HIV), the causative agent of AIDS. IMA J Math Appl Med Biol 3, 229–263 (1986).

Blythe, S. P. & Anderson, R. M. Variable infectiousness in HIV transmission models. IMA J Math Appl Med Biol 5, 181–200 (1988).

Isham, V. Mathematical modelling of the transmission dynamics of HIV infection and AIDS: a review. Mathematical & Computer Modelling 12, 1187–1187 (1989).

Ida, A., Oharu, S. & Oharu, Y. A mathematical approach to HIV infection dynamics. J. Comput Appl Math. 204, 172–186 (2007).

Hyman, J. & Li, J. An intuitive formulation for the reproductive number for the spread of diseases in heterogeneous populations. Math. Biosci 167, 65–86 (2000).

Castillo-Chavez, C., Huang, W. & Li, J. Competitive exclusion in gonorrhea models and other sexually-transmitted diseases. SIAM J. Appl. Math. 56, 494–508 (1996).

Allen, L. J. S. An Introduction to Stochastic Epidemic Models, In Mathematical Epidemiology, Springer, 81–130 (2008).

Gard, T. C. Introduction to Stochastic Differential Equations, Dekker, New York (1988).

Øksendal, B. Stochastic Differential Equations: An Introduction with Applications, Springer (2010).

Mao, X. Stochastic Differential Equations and Their Applications, Horwood, Chichester (1997).

Mao, X., Marion, G. & Renshaw, E. Environmental noise suppresses explosion in population dynamics, Stoch. Process. Appl 97, 95–110 (2002).

Durrett, R. Stochastic spatial models. SIAM Rev 41, 677–718 (1999).

Yang, Q., Jiang, D., Shi, N. & Ji, C. The ergodicity and extinction of stochastically perturbed SIR and SEIR epidemic models with saturated incidence. J. Math. Anal. Appl 388, 248–271 (2012).

Gray, A., Greenhalgh, D., Hu, L., Mao, X. & Pan, J. A stochastic differential equation SIS epidemic model. SIAM J. Appl. Math 71, 876–902 (2011).

Khasminskii, R. Z. & Klebaner, F. C. Long term behavior of solutions of the Lotka-Volterra system under small random perturbations. Ann. Appl. Prob 11, 952–963 (2001).

Xu, Y., Jin, X. & Zhang, H. Parallel logic gates in synthetic gene networks induced by non-Gaussian noise. Phys. Rev. E 88, 052721 (2013).

Li, Cui, J., Liu, M. & Liu, S. The evolutionary dynamics of stochastic epidemic model with nonlinear incidence rate. Bull. Math. Biol 77, 1705–1743 (2015).

Lahrouz, A. & Omari, L. Extinction and stationary distribution of a stochastic SIRS epidemic model with non-linear incidence. Statis. Prob. Lett 83, 960–968 (2013).

Yang, Q. & Mao, X. Extinction and recurrence of multi-group SEIR epidemic models with stochastic perturbations, Nonlinear Anal. RWA 14, 1434–1456 (2013).

Liu, M. & Wang, K. Dynamics of a two-prey one predator system in random environments. J. Nonlinear Sci 23, 751–775 (2013).

Dalal, N., Greenhalgh, D. & Mao, X. A stochastic model of AIDS and condom use. J. Math. Anal. Appl. 325, 36–53 (2007).

Luo, Q. & Mao, X. Stochastic population dynamics under regime switching. J. Math. Anal. Appl. 334, 69–84 (2007).

Takeuchi, Y., Du, N. H., Hieu, N. T. & Sato, K. Evolution of predator-prey systems described by a Lotka-Volterra equation under random environment. J. Math. Anal. Appl. 323, 938–957 (2006).

Du, N. H., Kon, R., Sato, K. & Takeuchi, Y. Dynamical behavior of Lotka-Volterra competition systems: Non-autonomous bistable case and the effect of telegraph noise. J. Comput. Appl. Math. 170, 399–422 (2004).

Slatkin, M. The dynamics of a population in a Markovian environment. Ecology 59, 249–256 (1978).

Zu, L., Jiang, D. & O’Regan, D. Conditions for persistence and ergodicity of a stochastic Lotka–Volterra predator–prey model with regime switching. Commun Nonlin Sci Numer Simul 29, 1–11 (2015).

Zhang, X., Jiang, D., Alsaedi, A. & Hayat, T. Stationary distribution of stochastic SIS epidemic model with vaccination under regime switching. Appl. Math. Lett. 59, 87–93 (2016).

Settati, A. & Lahrouz, A. Stationary distribution of stochastic population systems under regime switching. Appl. Math. Comput. 244, 235–243 (2014).

Zhu, C. & Yin, G. Asymptotic properties of hybrid diffusion systems. SIAM J. Control. Optim. 46, 1155–1179 (2007).

Liu, S., Xu, X., Jiang, D., Hayat, T. & Ahmad, B. Stationary distribution and extinction of the DS-I-A model disease with periodic parameter function and Markovian switching. Appl. Math. Comput. 311, 66–84 (2017).

Skorohod, A. V. Asymptotic Methods in the Theory of Stochastic Differential Equations. American Mathematical Society, Providence (1989).

Acknowledgements

The authors would like to thank the two referees for their helpful comments. The work was supported by the National Natural Science Foundation of China (No. 11571207, No. 11371085), the Shandong Provincial Natural Science Foundation of China (No. Zr2015AL003), and the Fundamental Research Funds for the Central Universities (No. 16CX02015A, No. 15CX08011A).

Author information

Contributions

Xiaojie Xu and Daqing Jiang developed the study concept and design. Songnan Liu carried out the analysis and drafted the manuscript. Tasawar Hayat and Bashir Ahmad corrected spelling mistakes and inaccurate expressions. All authors read and approved the final manuscript.

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, S., Jiang, D., Xu, X. et al. Dynamics of hybrid switching DS-I-A epidemic model. Sci Rep 7, 12332 (2017). https://doi.org/10.1038/s41598-017-11901-x
