Abstract

Conventional transistor electronics are reaching their limits in terms of scalability, power dissipation, and the underlying Boolean system architecture. To overcome this obstacle, neuromorphic analogue systems are currently under intense investigation. In particular, the use of memristive devices in VLSI analogue concepts provides a promising pathway to realize novel bio-inspired computing architectures, which are able to circumvent the foreseen difficulties of traditional electronics. Currently, a variety of materials and device structures are being studied along with novel computing schemes to make use of the attractive features of memristive devices for neuromorphic computing. However, a number of obstacles still have to be overcome to cast memristive devices into hardware systems. Most important is a physical implementation of memristive devices that can cope with the high complexity of neural networks. This includes the integration of analogue and electroforming-free memristive devices into crossbar structures with no additional electronic components, such as selector devices. Here, an unsupervised, bio-motivated, Hebbian-based learning platform for visual pattern recognition is presented. The heart of the system is a 16 × 16 crossbar array of selector-free and forming-free (non-filamentary) memristive devices that exhibit analogue I-V characteristics.

Introduction

A long-standing dream in machine learning is to create artificial neural networks (ANN) which match nature’s efficiency in performing cognitive tasks like pattern recognition or unsupervised learning1,2. Due to the impressive performance improvements of deep learning algorithms, digital computers are able to deal with complex cognitive tasks3,4. However, there is a drawback in terms of power dissipation and device overhead compared to the characteristic features of biological networks. The impressive performance of today’s terminal devices in cognitive tasks, such as speech recognition, is the result of skilful data sharing between the local, cost-effective device and the intelligent data processing in the “cloud”. The latter is a massively power-consuming computer (server), often located in another part of the world. Although this strategy seems ingenious at first glance, serious questions arise if local data processing (at the particular place of action) is desired, where energy supply and space are typically very limited. For example, local systems in future autonomously driven vehicles might be preferable in order to avoid external cyber attacks on millions of cars simultaneously. This data security argument also holds for biomedical applications. Out of respect for the patient, sensitive data should not be distributed widely but should be handled locally and with care. All this motivates a paradigm shift in ANN research and has initiated a new pathway towards analogue, in-memory computing architectures for so-called neuromorphic circuits, which are energy efficient and compact5,6.

Neuromorphic engineering uses analogue VLSI (Very Large Scale Integration) based on CMOS (Complementary Metal Oxide Semiconductor) technology5,6. This allows the creation of real-time computation schemes in a parallel and energy-efficient architecture. These systems can be expected to handle the highly complex neural network connections better than serial computational schemes6. However, the energy efficiency of neuromorphic systems is decisively determined by the realization of the interconnections between artificial neurons (synapses). The implementation of synaptic functionalities needs non-volatile devices, which emulate the analogue and plastic learning behaviour of synapses in an integrated circuit. In this context, memristive devices offer unique perspectives for neuromorphic circuits due to their low power consumption and the high integration density of the devices on a chip7,8,9. Moreover, memristive devices enable the emulation of synaptic functionality in a detailed and efficient manner8,9,10,11,12,13,14,15. So far, important local biological synaptic mechanisms have been realized, such as Hebb's learning rules10 including spike-timing dependent plasticity (STDP)8,16, long-term potentiation (LTP) and its counterpart long-term depression (LTD)17. Due to this unique behaviour of memristive devices, promising computing schemes, including implicit learning schemes18,19, auto-associative networks20,21, and perceptron networks for pattern recognition22,23, classification24,25,26,27, and unsupervised learning28,29,30, have already been presented.

However, a gap remains between these promising computing schemes and their hardware realization. In particular, the integration of the memristive devices with a silicon-based analogue VLSI technology is a great challenge. This applies both to CMOS manufacturing processes and to the electrical signals used in the data processing schemes. Furthermore, the electrical adaptation of memristive crossbar memory arrays in conjunction with a suitable neural computing scheme has to take the inherent limitations of the memristive devices (variability, reliability, and dynamic range) into account31,32. Although considerable progress has been made in this field in the last few years, a variety of challenges remain. These include the need for memristive devices with a high I-V non-linearity and asymmetry to avoid additional selector devices for each cell of the crossbar array31,32,33,34,35. Another obstacle is the lack of electroforming-free and analogue, crossbar-integrated memristive devices that would allow the advantages of memristors over conventional CMOS technology to be fully used.

In this investigation we demonstrate memristive crossbar arrays (16 × 16) that require no selector devices and do not need initial electroforming steps. In other words, such as-fabricated crossbar arrays are “plug and play” units. Furthermore, these filament-free devices feature truly analogue, synaptic-like resistive switching without special writing schemes. In these devices an ultra-thin memristive layer is incorporated between a tunnel barrier and a Schottky barrier, with the benefit that the tunnel barrier limits the current through the device. The resistance switching takes place at the insulator-metal interface (Schottky contact), where the ion motion under applied electric fields leads to a variation of the energy barrier of the Schottky contact36,37,38. These memristive devices are used as hardware synapses together with software neurons in a mixed-signal circuit which allows unsupervised Hebbian learning of visual patterns.

Results

Crossbar integration and device characteristics

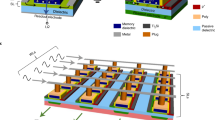

Figure 1(a) shows the layer sequence of the memristive cells used, together with their physical implementation in a 16 × 16 crossbar array consisting of 256 single cells. In the double barrier device structure an ultra-thin memristive layer (NbxOy) is sandwiched between an Al2O3 tunnel barrier and an Au layer. The NbxOy/Au interface can be described as a Schottky-like contact36. Figure 1(b) shows the similarities in |I|-V curves between a crossbar device (red) and an individual cell from a different wafer. In this measurement, the voltage has been ramped from 0 to 2.8 V (cf. Fig. 1(b)) to set the device from its initial high resistance state (HRS) to a low resistance state (LRS). Afterwards, the voltage has been ramped down to −1.4 V (cf. Fig. 1(b)) and back to 0 V to reset the device. The prominent features are a distinct |I|-V non-linearity and an asymmetry between positive and negative bias, which are due to the diode characteristic of the device. The uniformity of the device resistances within the crossbar array is shown in Fig. 1(c). Here the initial resistances of the 256 junctions of the crossbar array, measured at a read voltage of 0.9 V, are shown.

Double-barrier memristive device and crossbar integration: (a) Schematic cross-section of the Al/Al2O3/NbxOy/Au double-barrier memristive device. These devices have been implemented physically in a 16 × 16 crossbar array consisting of 256 single cells. (b) Comparison of I-V curves for a crossbar device and an individual device from different wafers (for the crossbar measurement, the other cells of the array were floated). To visualize the obtained change in resistance better, the absolute value of the current and a logarithmic scale were used. (c) Resistance map of one crossbar containing the initial resistances of 256 memristive cells measured at 0.9 V.

The underlying physical mechanism of the device behaviour has been described in detail in refs 36,37,38 and is based on the movement of oxygen ions within the NbxOy layer under the applied electric bias field. Under positive bias voltages, the electric field across the NbxOy layer ensures that negatively charged oxygen ions drift towards the Au interface. This results in a decrease of the Schottky barrier height as well as in an increase of electron injection through the tunneling barrier due to a decrease of the Au/NbxOy interfacial potential. This mechanism provides several advantages compared to single barrier devices: chemical diffusion barriers for the ions, a defined interfacial potential, and a homogeneous resistance change rather than the binary switching observed in filamentary devices. This decreases the device variability and improves the retention characteristics compared to single barrier devices. Furthermore, due to the Schottky-like interface, voltages below 1 V cannot affect the ions, allowing a non-destructive read-out of each cell and selector-free addressing in the crossbar structure, as we will discuss in more detail below.

Emulation of neural plasticity

Learning in biological networks is manifested at the cellular synaptic level, where the connectivity between neurons varies with respect to their level of activity39,40. This process is called synaptic plasticity and it induces a long-lasting increase or decrease of synaptic connections, so-called long-term potentiation (LTP) or long-term depression (LTD)41. For the implementation of LTP or LTD, two aspects are particularly important: first, the level of activity of the pre- and the post-synaptic neurons and second, the relative timing of their activities, known as spike-timing-dependent plasticity (STDP)16,42. A variety of concepts have been presented in the last couple of years which use memristive devices to emulate synaptic plasticity43. In the following, the concept applied here to emulate neural plasticity is described.

Figure 2(a) shows the gradual change of the device conductance of a single memristive cell under a voltage train with n voltage pulses Vp. The voltage train consists of 200 identical positive voltage pulses (potentiation pulses of ΔV = 3.3 V and Δt = 100 ms in red) followed by 200 identical negative voltage pulses (depression pulses of ΔV = −1.1 V and Δt = 300 ms in blue). To measure the device conductance, a read voltage of 0.9 V (well below the threshold voltage of the device) was applied and the current was measured after every potentiation/depression pulse. The obtained conductance values G(n) were normalized by the average initial conductance G0 for better illustration. We found a gradual change of the device conductance of up to 3300% and a saturation of the conductance which bounds G between G0 and a maximal conductance Gmax. To further quantify the conductance variation, the experimental data have been fitted by

where βp and βd are positive constants. The experimental characteristic is described best for βp = 3.4 s−1 and βd = 0.13 s−1 (cf. solid lines in Fig. 2(a)). The fit emphasizes in particular the |I|-V non-linearity and the self-saturation characteristics of the double-barrier memristive device.

Emulation of neural plasticity. (a) A sequence of 200 potentiation pulses of +3.3 V with a pulse length of 100 ms and 200 depression pulses of −1.1 V with a pulse length of 300 ms (upper graph). Black dots are experimental data measured at 0.9 V, while red and blue lines correspond to the data obtained using Eq. 1. (b) Illustration of one memristive device connecting a pre- and a post-neuron. For the pre-neuron, a stochastic coding scheme is used which encodes the input signal intensity S(t) either into a positive input activity υj = +1, or a negative activity υj = −1. As post-neuron, a leaky integrate-and-fire neuron is used. (c) The implemented Hebbian learning formalism: a potentiation pulse Vp is applied in case (I), where the activities of both the pre- and the post-neuron are positive. No voltage pulse is applied to the memristive device in cases (II) and (IV), since here only one of the two neurons (either the pre- or the post-neuron) is active. The dashed lines in (II) indicate that for the pre-neuron υj can be either +1 or −1. A depression pulse Vd is applied in case (III), where the pre-neuron activity is negative while the post-neuron is active.

As a first step to integrate double barrier memristive devices into a network structure, we discuss how a single memristive device is connected to a pre- and a post-neuron. This configuration is sketched in Fig. 2(b). For the pre-neuron, a stochastic coding scheme is used in which the probability of spike generation depends on the input signal intensity S(t)44,45. For this purpose, the input signal is normalized to the interval [−1, 1] and in each time step a random number r ∈ (0, 1) is generated to calculate the activity of the pre-neuron υj. The pre-neuron is active for |S(t)| > r(t). The generated pulse is positive for S(t) > 0 (υj = +1) and negative for S(t) < 0 (υj = −1). As post-neuron, a leaky integrate-and-fire neuron is used, in which a membrane capacitance C is connected in parallel to a conductance gL. They are driven by an input current I(t) according to

Here, u(t) is the membrane potential. The application of an input current causes an integration of u(t) up to the threshold potential θthr, at which the activity of the post-neuron υi is set from 0 to +1.
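The behaviour of such a post-neuron can be illustrated with a minimal sketch. It assumes the common textbook form C·du/dt = −gL·u(t) + I(t), forward-Euler integration, and a reset of u to zero after each spike. The equation form, the reset rule, the function name and all parameter values are illustrative assumptions, not the values used in the experiment.

```python
def simulate_lif(currents, dt=1e-3, c=1e-6, g_l=1e-4, theta_thr=0.5):
    """Integrate a sequence of input currents (in A) with forward Euler.

    Assumed model: C * du/dt = -g_L * u + I(t); u is reset to 0 after a spike.
    Returns (potentials, spikes), one entry per time step.
    """
    u = 0.0
    potentials, spikes = [], []
    for i_in in currents:
        u += dt * (-g_l * u + i_in) / c   # leaky integration of the input current
        if u >= theta_thr:                # threshold crossing: post-neuron fires
            spikes.append(1)
            u = 0.0                       # assumed reset after the spike
        else:
            spikes.append(0)
        potentials.append(u)
    return potentials, spikes


# A constant current whose steady-state potential I / g_L exceeds the
# threshold makes the neuron fire periodically; a weaker current does not.
_, strong = simulate_lif([1e-4] * 200)
_, weak = simulate_lif([1e-5] * 200)
```

With these assumed values the steady-state potential I/gL is 1 V for the stronger current and 0.1 V for the weaker one, so only the stronger input drives the neuron across the 0.5 V threshold.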

We used the Hebbian learning formalism to get a local learning condition which appropriately adjusts the device conductance in a network environment. Accordingly, the conductance G is changed whenever the pre- and the post-synaptic neurons are simultaneously active (cf. Fig. 2(b)):

Here, α denotes the local learning rate of the conductance update, which can be derived from Eq. 1 for the double barrier device by using t = nΔt. For potentiation, α is given by α = G(t−1) βp exp(−βp Δt), while in the case of depression α reads α = G(t−1) βd (1−exp(−βd Δt)). The difference between depression and potentiation is defined by the sign of the product υj∙υi. Thus, in total four different cases are possible, as illustrated in Fig. 2(c). In case (I), the pre-synaptic activity is positive, while the post-neuron is active as well. In this case, a potentiation pulse Vp is applied (cf. bottom panel in Fig. 2(c)). Case (II) corresponds to the condition where only the pre-synaptic neuron is active. In this case, no change in the device conductance is generated. Further, (III) defines the case when the pre-neuron’s activity is negative while the post-neuron is active. In this case, a depression pulse Vd is applied which decreases the device conductance (cf. bottom panel in Fig. 2(c)). If the post-synaptic neuron is active without pre-neuron activity, the device conductance is not affected (case (IV)).
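The four cases and the two learning-rate expressions can be written down as a short sketch. The constants are the fit values quoted in the text; the function names and the string labels are hypothetical, and the code only selects the pulse type and evaluates α as stated above. It does not model the device itself.

```python
import math

BETA_P = 3.4    # 1/s, potentiation constant quoted in the text
BETA_D = 0.13   # 1/s, depression constant quoted in the text


def select_pulse(v_pre, v_post):
    """Map pre-/post-neuron activities to the pulse of cases (I) to (IV).

    v_pre is +1, -1 or 0 (silent); v_post is 1 (active) or 0 (silent).
    """
    if v_post != 1:
        return None             # case (II): only the pre-neuron may be active
    if v_pre == 1:
        return "potentiate"     # case (I): both activities positive
    if v_pre == -1:
        return "depress"        # case (III): negative pre-activity
    return None                 # case (IV): post-neuron active, pre-neuron silent


def learning_rate(g_prev, kind, dt):
    """Evaluate the learning rate alpha from the expressions given in the text."""
    if kind == "potentiate":
        return g_prev * BETA_P * math.exp(-BETA_P * dt)
    if kind == "depress":
        return g_prev * BETA_D * (1.0 - math.exp(-BETA_D * dt))
    return 0.0


# Example: a potentiation pulse of 100 ms on a device with normalized G = 1.
alpha_p = learning_rate(1.0, select_pulse(+1, 1), dt=0.1)
```

Keeping the pulse selection separate from the learning-rate evaluation mirrors the split between the neuron logic (software) and the device response (hardware) described in the text.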

Equation 2 and the described learning conditions have been implemented in a combined software-hardware scheme. The activities of the neurons are calculated on a digital computer connected to a microcontroller. The microcontroller addresses the single memristive cells within the crossbar array, as described in detail below. We would like to emphasize that the digital computer is not necessary, and was only used in this proof-of-principle investigation to assess the usability of the memristive crossbar arrays.

Network structure

The network structure is illustrated in Fig. 3. The network consists of two neural layers connected in the feed-forward direction via an array of memristive devices. As input signals for the pre-neurons, pixel values of 2-dimensional gray-scale matrices are used, which have been transformed into a 1-dimensional vector prior to their application. The individual pixel values define the input intensity S(t) for the pre-neurons, which stochastically generate the neural activity patterns of the input layer according to the computation scheme explained above.
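The input stage described above can be sketched in a few lines. The sketch assumes that the pixel values have already been normalized to [−1, 1]; the function name is hypothetical, and Python's `random.random()` draws from [0, 1) rather than the open interval (0, 1), which is a minor deviation from the text.

```python
import random


def encode_pixels(image, rng=random):
    """Flatten a 2-D image (values in [-1, 1]) and draw pre-neuron activities.

    A pre-neuron is active if |S| > r for a fresh random number r; the sign of
    the activity follows the sign of S. Silent neurons are reported as 0.
    """
    activities = []
    for row in image:                  # row-major flattening to a 1-D vector
        for s in row:
            r = rng.random()           # uniform random number in [0, 1)
            if abs(s) > r:
                activities.append(1 if s > 0 else -1)
            else:
                activities.append(0)
    return activities


# Black-and-white pixels (S = +1 or -1) always produce a spike of the
# corresponding sign, while S = 0 never does.
print(encode_pixels([[1, -1], [0, 1]]))
```

For intermediate gray values the activity becomes probabilistic: a pixel with S = 0.5 is active in roughly half of the time steps.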

Schematic of the implemented neural network: The network consists of a stochastic coding scheme for the input data, leaky integrate-and-fire (LIF) output neurons, which are laterally coupled in an inhibitory winner-take-all (WTA) network, and memristive devices which are arranged in a crossbar structure. Receptive fields are a result of a Hebbian learning scheme.

Leaky integrate-and-fire neurons are used as post-neurons in the output layer. In addition to the neuron model described above, the output neurons are laterally coupled within an inhibitory winner-take-all network, including adaptive thresholds for the spiking, as proposed in ref. 44. The winner-take-all approach, in which the first spiking neuron resets the integration of all other neurons, is necessary to allow unsupervised learning within the network structure. The adjustable neuron firing threshold is crucial for unsupervised learning, because it ensures that all output neurons participate equally in the learning phase. This can be motivated by considering the process of homeostasis in biological systems44,45,46. Therefore, the firing threshold of a neuron is increased whenever the spike number (activity) of a neuron is above the desired activity, and vice versa. This can be achieved by using

for the threshold voltage adaptation. Here, γth, Aavg, and Atar are respectively the gain factor, the mean activity of an individual neuron, and the target activity (γth = 0.01 and Atar = 2 during an interval of 60 images).
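As an illustration of this homeostatic rule, the sketch below uses one plausible additive form, θ ← θ + γth·(Aavg − Atar). This specific form is an assumption chosen only because it reproduces the behaviour described in the text (the threshold rises when the activity is above the target and falls when it is below); the default values are the ones quoted above, and the function name is hypothetical.

```python
def adapt_threshold(theta, a_avg, gamma_th=0.01, a_tar=2.0):
    """Return the adapted firing threshold (assumed additive update rule)."""
    return theta + gamma_th * (a_avg - a_tar)


# A neuron that fired 5 times in the last interval becomes harder to excite,
# a neuron that never fired becomes easier to excite.
theta_busy = adapt_threshold(1.0, a_avg=5.0)
theta_idle = adapt_threshold(1.0, a_avg=0.0)
```

Applied once per evaluation interval, such a rule pushes every output neuron towards the same mean activity, which is what lets all neurons take part in learning.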

The Hebbian learning scheme of Eq. 3 is employed to increase or decrease the device conductance. This results in the following back-propagation procedure: if one of the post-neurons spikes, then a voltage pulse is applied to the respective memristive cells which connect the active pre- and post-neurons. In the case of υj = +1, a potentiation pulse is generated, while for υj = −1, a depression pulse is initiated (cf. the blue filled field in Fig. 3). Thus, every output neuron creates its own specific receptive field during learning (cf. sketch in Fig. 3). In the recognition phase, the neurons are able to spike in accordance with the previously learned pattern for varying input signals.
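One time step of this procedure, combined with the winner-take-all reset, might look as follows. This is a simplified sketch under several assumptions: the leak term is omitted, ties between neurons that cross the threshold in the same step are broken by the largest overshoot, and the pulses are returned as a list instead of being sent to hardware. All names are hypothetical.

```python
def wta_learning_step(potentials, currents, pre_activity, thresholds):
    """Integrate one step, pick a winner, and list the pulses to apply.

    potentials is updated in place. Returns (winner, pulses), where pulses is
    a list of (post_index, pre_index, kind) tuples for the winner's synapses.
    """
    for i, current in enumerate(currents):
        potentials[i] += current              # leak omitted (assumption)
    over = [i for i, u in enumerate(potentials) if u >= thresholds[i]]
    if not over:
        return None, []                       # nobody fired: no learning
    # Assumed tie-break: the neuron with the largest overshoot wins.
    winner = max(over, key=lambda i: potentials[i] - thresholds[i])
    for i in range(len(potentials)):
        potentials[i] = 0.0                   # the winner resets all integrations
    pulses = []
    for j, v in enumerate(pre_activity):
        if v == 1:
            pulses.append((winner, j, "potentiate"))
        elif v == -1:
            pulses.append((winner, j, "depress"))
    return winner, pulses


state = [0.0, 0.0]
winner, pulses = wta_learning_step(state, [0.2, 1.5], [1, -1, 0], [1.0, 1.0])
```

In the recognition phase the same step can be used with the returned pulses simply discarded, which corresponds to suppressing the conductance updates.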

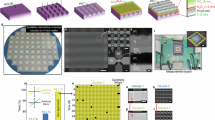

The neural network scheme has been implemented on a custom-made printed circuit board, as sketched in Fig. 4 and described in the Methods section. It consists of hardware synapses, i.e. memristive devices, and software neurons.

Technical realization: The crossbar array composed of memristive devices is connected through wire bonds to a custom PCB sample holder. Different sets of analogue switches allow a specific device to be addressed. During the measurement, a single cell from the crossbar array is measured while all other cells in the crossbar array are left floating.

Formation of receptive fields

To study the formation of receptive fields, the network has been trained with three training patterns, which are shown in Fig. 5(a). The parameters used for the learning algorithm are listed in Table 1. Each of the patterns is a 6 × 6 pixel image with two colour values (black and white). Therefore, the input layer contains 36 single neurons. Five output neurons were provided, using 180 memristive devices (5 × 36) out of the 256 devices in the crossbar array. To investigate the learning performance of the network, the patterns were applied to the network 22,000 times in total. Voltage pulses with different parameters were used for potentiation (Δt = 100 ms, ΔV = 3.6 V) and depression (Δt = 300 ms, ΔV = −1.1 V). All other parameters are summarized in Table 1. We would like to emphasize that the crossbar arrays are passive and do not require on-chip selector devices. This is possible because the strong I-V non-linearity (cf. Fig. 1(b)) prevents interference with neighbouring devices. This can be understood by considering the I-V curve shown in Fig. 1(b): an increase in pulse height from 2 to 2.8 V leads to an increase in device conductance by a factor of 10, while below 1 V the device conductance remains unaffected. This strong voltage dependence ensures that the electric field for ion drift is sufficiently low in neighbouring devices during the set and reset processes. Hence, the conductance of these neighbouring devices does not change. Moreover, in accordance with the non-filamentary switching mechanism, no initial forming procedure was necessary, which significantly facilitated the application of the crossbar arrays.
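Since 36 inputs do not fit into a single 16-cell line of the array, the 180 synapses have to be distributed over the 16 × 16 crossbar in some way. The text does not state which mapping was used; the sketch below shows one hypothetical choice, a simple row-major layout, purely to illustrate that 5 × 36 synapses fit into 256 cells without collisions.

```python
ARRAY_SIZE = 16          # 16 x 16 crossbar
N_PRE, N_POST = 36, 5    # 6 x 6 input pixels, five output neurons


def synapse_to_cell(post, pre):
    """Map synapse (post, pre) to a (row, column) cell; hypothetical layout."""
    if not (0 <= post < N_POST and 0 <= pre < N_PRE):
        raise ValueError("synapse index out of range")
    linear = post * N_PRE + pre            # 0 .. 179
    return divmod(linear, ARRAY_SIZE)      # row-major position in the array


cells = {synapse_to_cell(i, j) for i in range(N_POST) for j in range(N_PRE)}
print(len(cells))   # 180 distinct cells
```

With this layout the synapses occupy the first eleven rows completely and the first four cells of the twelfth row; any other one-to-one assignment would serve equally well.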

Formation of receptive fields: (a) Training patterns used. (b) Receptive fields obtained during unsupervised learning in the case of 5 output neurons. The pixels correspond to the resistance values (synaptic weights) of the memristive devices. The parameters used for the learning algorithm are listed in Table 1.

In Fig. 5(b) the development of the receptive fields during the learning phase is shown. For this purpose, six characteristic instances in time were selected, which illustrate the unsupervised learning mechanism. In the beginning, the conductance values of the memristive cells are randomly distributed (cf. row 1 in Fig. 5(b)). While the patterns “1” and “C” formed quite quickly in the receptive fields of post-neurons 3 and 5, the receptive fields of the other post-neurons were adapted by the network so that pattern “U” was learned as well. This adaptation can be seen in the receptive field of post-neuron 1, which learns pattern “U” (cf. first column in Fig. 5(b)). At first, a mixture of patterns “C” and “U” is represented in the receptive field of this neuron; this mixture then fades, and pattern “U” alone appears in the final configuration.

After the learning phase, the network is able to distinguish the learned patterns. The back propagation is suppressed during the recognition phase, i.e. no set and reset pulses are initiated. For the case shown in Fig. 5(b) this means: post-neurons 1, 2, and 4 are active for pattern “U”, post-neuron 3 is active for pattern “1”, while neuron 5 is active whenever pattern “C” is applied as the input.

Discussion

Crucial for machine learning schemes are their storage and discrimination capabilities, which allow them to be used in complex classification tasks. On the one hand, distinguishing similar patterns is important, since it enables an adequate response of the system in multi-disciplinary tasks. On the other hand, it is required that the system is robust against variations in input patterns, which ensures an accurate performance if the input data is noisy or incomplete. In fact, this is a rather general property of learning and the formation of memory in the brain47, which is why it has attracted intense research interest in the field of neuroscience over the last decades. Two cognitive functions have been identified as particularly important: pattern separation and pattern completion48,49. Pattern separation, whereby similar inputs are stored in non-overlapping and distinct representations, can be regarded as a mechanism which balances the network against pattern completion.

The function of pattern separation and pattern completion within the network presented here has been investigated and the obtained results are shown in Fig. 6. In Fig. 6(a), a conceptual illustration of pattern separation and pattern completion is given, which describes the transfer behaviour of information through the network: pattern completion increases the overlap of the representation of two patterns (labelled as A and B in Fig. 6(a)), while the process of pattern separation reduces the overlap. The transfer characteristic of the implemented network has been evaluated by using the two non-overlapping patterns shown in Fig. 6(b). The resulting transfer characteristic is shown in Fig. 6(c). Here, the recognition rates are plotted as functions of the overlap between the two input patterns (A and B). The recognition rates are defined as the spike intensities of the post-neurons during the recognition phase. For this purpose, only two output neurons are used and their spiking dynamics under a varying input are investigated. Furthermore, the response of the two outputs is digitized, so that either pattern A (blue curve in Fig. 6(c)) or pattern B (red curve in Fig. 6(c)) has been recognized if neuron 1 or neuron 2 is active, respectively. The input has not been recognized (green curve in Fig. 6(c)) if neither neuron 1 nor neuron 2 is active or if both are active together. In total, the input patterns are applied 14,000 times. We found a recognition rate of 100% if the number of replaced pixels is below 25%. However, when half of pattern A and half of pattern B are applied at the same time, a marked increase in the non-recognition rate is observed. This case might be interpreted to mean that neither pattern A nor pattern B has been recognized. In this case the system might regard the input as new, i.e. as a pattern which has not been learned during the training phase.
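The digitization of the two output neurons and the resulting rates can be expressed as a short sketch. The function names and the trial data are hypothetical; only the decision rule (A if neuron 1 alone is active, B if neuron 2 alone is active, otherwise not recognized) is taken from the text.

```python
def classify(n1_active, n2_active):
    """Digitize the response of the two output neurons."""
    if n1_active and not n2_active:
        return "A"
    if n2_active and not n1_active:
        return "B"
    return "unrecognized"        # neither neuron or both neurons active


def recognition_rates(trials):
    """Return the fraction of trials assigned to each class."""
    counts = {"A": 0, "B": 0, "unrecognized": 0}
    for n1_active, n2_active in trials:
        counts[classify(n1_active, n2_active)] += 1
    total = len(trials)
    if total == 0:
        return {label: 0.0 for label in counts}
    return {label: count / total for label, count in counts.items()}


# Four made-up trials: one A, one B, one with both neurons active, one silent.
rates = recognition_rates([(True, False), (False, True), (True, True), (False, False)])
print(rates)
```

Evaluating such rates for each degree of overlap between patterns A and B yields one point per curve of the kind of transfer characteristic described above.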

Input-output transfer characteristic of the network: (a) Conceptual representation of pattern separation and pattern completion as key cognitive functionalities which underlie the process of pattern recognition. (b) Patterns used to train the network. For these two non-overlapping patterns, a network with 36 input neurons and 2 output neurons is used. (c) Recognition rates of the network depending on the strength of the overlap of patterns A and B.

To better account for possible variability within the input patterns, the number of output neurons in this computing scheme can be increased. This point has been addressed in a couple of theoretical investigations44,46,50. It has been found that recognition rates up to 93.5% are possible with such a simple two-layered network. However, those networks need ≈235,000 memristive cells, which places strict requirements on the device variability44. In this respect, our double-barrier memristive device has a suitable variability, as we were able to show recently51.

In conclusion, an unsupervised learning scheme for pattern recognition has been implemented in a mixed-signal circuit consisting of analogue hardware synapses and digital software neurons. For this purpose, memristive double barrier devices are integrated into a crossbar array structure, which contains 256 single memristive cells. The strong I-V non-linearity and asymmetry of the individual cells have been used to implement associative Hebbian learning in a selector-free crossbar configuration. In particular, this enables the realization of a local learning scheme for the formation of receptive fields. The transfer characteristics of the implemented circuit have been used to analyse its capability for pattern separation and pattern completion. In this respect, the crucial factors are the storage and discrimination capabilities of the network, which are limited by the number of memristive cells in the crossbar array. The presented system is in principle able to cope with more complex tasks, with the drawback of a larger demand for memristive cells. Because the HRS and LRS resistances are large in comparison to the individual wiring resistance (≈100 Ω for an individual wire with the size 1,100 × 40 × 0.5 µm3) in the present crossbar, we expect that larger (m × n) arrays will function as well.

Methods

Sample preparation

Memristive tunneling junctions were fabricated on 4-inch Si wafers with 400 nm of SiO2 (thermally oxidized) using a standard optical lithography process. The devices were fabricated using the following procedure. First, the multilayer (including top and bottom electrodes) was deposited without breaking the vacuum using DC magnetron sputtering. The Al2O3 tunnel barrier was fabricated by depositing Al, which was afterwards partially oxidized in situ; the NbxOy layer was deposited by reactive sputtering in an O2/Ar atmosphere. Following the subsequent lift-off, the junction area was defined by wet etching of the Au top electrode (potassium iodide). The etched parts were then covered with thermally evaporated SiO to insulate the bottom electrode from the subsequently deposited Ti/Au wiring that contacts the top electrode.

Electrical characterization

To measure the resistance of every single memristive device, voltage pulses were applied with the measurement setup of Fig. 4. The voltage sweeps were applied to memristive cells within the crossbar array while the current was measured simultaneously using an HP4156A source meter.

Technical implementation

The control unit is a microcontroller (Arduino Mega 2560) managing the hardware-software interface. The microcontroller receives commands from the neurons and addresses the desired memristive devices out of the 16 × 16 crossbar array accordingly. For each row and column, the specific electrode is electrically connected using low-resistance analogue switches (DG212BDJ). When the desired memristive cell is addressed, an analogue switch connects the top electrode of the device to an on-board pulse generator and the bottom electrode to a current measurement unit (see Fig. 4). Depending on the demand of the learning scheme, a read pulse or a pulse to change the conductance is applied. For this purpose, the pulse generator applies pulses of variable duration and amplitude. An operational amplifier (LF356N) is used in transimpedance mode to measure the current with an Agilent 34411A digital multimeter.

Circuit layout

The electronic circuit was fabricated on a printed circuit board (PCB). The software EAGLE developed by CadSoft was employed for the circuit design. To electrically connect the memristive crossbar array to the electrical circuit, the crossbar arrays were wire bonded to custom sample holders.

References

Chouard, T. & Venema, L. Machine intelligence. Nature 521, 435–435 (2015).

Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 61, 85–117 (2015).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning. (MIT Press, 2016).

Silver, D. et al. Mastering the game of Go with deep neural networks and tree search. Nature 529, 484–489 (2016).

Liu, S.-C. et al. Analog VLSI: Circuits and Principles. (MIT Press, 2002). Available at: https://mitpress.mit.edu/books/analog-vlsi. (Accessed: 3rd August 2016).

Chicca, E., Stefanini, F., Bartolozzi, C. & Indiveri, G. Neuromorphic Electronic Circuits for Building Autonomous Cognitive Systems. Proc. IEEE 102, 1367–1388 (2014).

Indiveri, G., Linn, E. & Ambrogio, S. ReRAM-Based Neuromorphic Computing. in Resistive Switching (eds Ielmini, D. & Waser, R.) 715–736 (Wiley-VCH Verlag GmbH & Co. KGaA, 2016).

Jo, S. H. et al. Nanoscale Memristor Device as Synapse in Neuromorphic Systems. Nano Lett. 10, 1297–1301 (2010).

Jeong, D. S., Kim, I., Ziegler, M. & Kohlstedt, H. Towards artificial neurons and synapses: a materials point of view. RSC Adv. 3, 3169–3183 (2013).

Ziegler, M., Riggert, C., Hansen, M., Bartsch, T. & Kohlstedt, H. Memristive Hebbian Plasticity Model: Device Requirements for the Emulation of Hebbian Plasticity Based on Memristive Devices. IEEE Trans. Biomed. Circuits Syst. 9, 197–206 (2015).

Tetzlaff, R. (ed.) Memristors and Memristive Systems. (Springer).

Yu, S., Wu, Y., Jeyasingh, R., Kuzum, D. & Wong, H. S. P. An Electronic Synapse Device Based on Metal Oxide Resistive Switching Memory for Neuromorphic Computation. IEEE Trans. Electron Devices 58, 2729–2737 (2011).

Kim, S. et al. Experimental Demonstration of a Second-Order Memristor and Its Ability to Biorealistically Implement Synaptic Plasticity. Nano Lett. 15, 2203–2211 (2015).

Wang, Z. et al. Memristors with diffusive dynamics as synaptic emulators for neuromorphic computing. Nat. Mater. 16, 101–108 (2017).

Choi, S. et al. SiGe epitaxial memory for neuromorphic computing with reproducible high performance based on engineered dislocations. Nat. Mater. 17, 335–340 (2018).

Linares-Barranco, B. et al. On spike-timing-dependent-plasticity, memristive devices, and building a self-learning visual cortex. Neuromorphic Eng. 5, 26 (2011).

Ohno, T. et al. Short-term plasticity and long-term potentiation mimicked in single inorganic synapses. Nat. Mater. 10, 591–595 (2011).

Ziegler, M. et al. An Electronic Version of Pavlov’s Dog. Adv. Funct. Mater. 22, 2744–2749 (2012).

Bichler, O. et al. Pavlov’s Dog Associative Learning Demonstrated on Synaptic-Like Organic Transistors. Neural Comput. 25, 549–566 (2012).

Eryilmaz, S. B. et al. Brain-like associative learning using a nanoscale non-volatile phase change synaptic device array. Front. Neurosci. 8 (2014).

Hu, S. G. et al. Associative memory realized by a reconfigurable memristive Hopfield neural network. Nat. Commun. 6, 7522 (2015).

Park, S. et al. Electronic system with memristive synapses for pattern recognition. Sci. Rep. 5, 10123 (2015).

Sheridan, P. M. et al. Sparse coding with memristor networks. Nat. Nanotechnol. 12, 784–789 (2017).

Alibart, F., Zamanidoost, E. & Strukov, D. B. Pattern classification by memristive crossbar circuits using ex situ and in situ training. Nat. Commun. 4, 2072 (2013).

Prezioso, M. et al. Training and operation of an integrated neuromorphic network based on metal-oxide memristors. Nature 521, 61–64 (2015).

Yao, P. et al. Face classification using electronic synapses. Nat. Commun. 8, 15199 (2017).

Hu, M. et al. Memristor‐Based Analog Computation and Neural Network Classification with a Dot Product Engine. Adv. Mater. 30 (2018).

Serb, A. et al. Unsupervised learning in probabilistic neural networks with multi-state metal-oxide memristive synapses. Nat. Commun. 7, 12611 (2016).

Pedretti, G. et al. Memristive neural network for on-line learning and tracking with brain-inspired spike timing dependent plasticity. Sci. Rep. 7 (2017).

Wang, Z. et al. Fully memristive neural networks for pattern classification with unsupervised learning. Nat. Electron. 1, 137–145 (2018).

Yang, J. J., Strukov, D. B. & Stewart, D. R. Memristive devices for computing. Nat. Nanotechnol. 8, 13–24 (2013).

Burr, G. W. et al. Neuromorphic computing using non-volatile memory. Adv. Phys. X 2, 89–124 (2017).

Burr, G. W. et al. Access devices for 3D crosspoint memory. J. Vac. Sci. Technol. B 32, 040802 (2014).

Jo, S. H., Kumar, T., Narayanan, S., Lu, W. D. & Nazarian, H. 3D-stackable crossbar resistive memory based on Field Assisted Superlinear Threshold (FAST) selector. in 2014 IEEE International Electron Devices Meeting 6.7.1-6.7.4 https://doi.org/10.1109/IEDM.2014.7046999 (2014).

Midya, R. et al. Anatomy of Ag/Hafnia‐Based Selectors with 10^10 Nonlinearity. Adv. Mater. 29 (2017).

Hansen, M. et al. A double barrier memristive device. Sci. Rep. 5, 13753 (2015).

Dirkmann, S., Hansen, M., Ziegler, M., Kohlstedt, H. & Mussenbrock, T. The role of ion transport phenomena in memristive double barrier devices. Sci. Rep. 6, 35686 (2016).

Solan, E. et al. An enhanced lumped element electrical model of a double barrier memristive device. J. Phys. D: Appl. Phys. 50, 195102 (2017).

Kandel, E. & Schwartz, J. Principles of Neural Science, Fifth Edition. (McGraw Hill Professional, 2013).

Andersen, P. The Hippocampus Book. (Oxford University Press, USA, 2007).

Bliss, T. V. P. & Lømo, T. Long-lasting potentiation of synaptic transmission in the dentate area of the anaesthetized rabbit following stimulation of the perforant path. J. Physiol. 232, 331–356 (1973).

Markram, H., Gerstner, W. & Sjöström, P. J. Spike-timing dependent plasticity. (Frontiers, 2012).

Prezioso, M. et al. Spiking neuromorphic networks with metal-oxide memristors. in 2016 IEEE International Symposium on Circuits and Systems (ISCAS) 177–180, https://doi.org/10.1109/ISCAS.2016.7527199 (2016).

Querlioz, D., Bichler, O. & Gamrat, C. Simulation of a memristor-based spiking neural network immune to device variations. in The 2011 International Joint Conference on Neural Networks 1775–1781, https://doi.org/10.1109/IJCNN.2011.6033439 (2011).

Hansen, M., Zahari, F., Ziegler, M. & Kohlstedt, H. Double-Barrier Memristive Devices for Unsupervised Learning and Pattern Recognition. Front. Neurosci. 11 (2017).

Zahari, F., Hansen, M., Mussenbrock, T., Ziegler, M. & Kohlstedt, H. Pattern recognition with TiOx-based memristive devices. AIMS Mater. Sci. 2, 203–216, https://doi.org/10.3934/matersci.2015.3.203 (2015).

Kandel, E. R. In search of memory: the emergence of a new science of mind. (W.W. Norton & Co., 2006).

Bakker, A., Kirwan, C. B., Miller, M. & Stark, C. E. L. Pattern Separation in the Human Hippocampal CA3 and Dentate Gyrus. Science 319, 1640–1642 (2008).

Rolls, E. T. The mechanisms for pattern completion and pattern separation in the hippocampus. Front. Syst. Neurosci. 7 (2013).

Sheridan, P., Ma, W. & Lu, W. Pattern recognition with memristor networks. in 2014 IEEE International Symposium on Circuits and Systems (ISCAS) 1078–1081, https://doi.org/10.1109/ISCAS.2014.6865326 (2014).

Hansen, M., Zahari, F., Ziegler, M. & Kohlstedt, H. Double-barrier memristive devices for unsupervised learning and pattern recognition. Front. Neurosci. (2017).

Acknowledgements

Financial support by the Deutsche Forschungsgemeinschaft through FOR 2093 is gratefully acknowledged.

Author information

Contributions

M.H. and F.Z. designed the electrical circuit, performed the measurements, analyzed the experimental results and co-wrote the manuscript. M.H. prepared the memristive devices. M.Z. supported the measurements and data interpretation. F.Z. and M.Z. developed the computing model. The simulation results were discussed and interpreted by F.Z., M.H., M.Z., and H.K. H.K. and M.Z. conceived the idea and initiated and supervised the experimental research. F.Z., M.H., M.Z., and H.K. discussed the experimental results and contributed to the refinement of the manuscript.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hansen, M., Zahari, F., Kohlstedt, H. et al. Unsupervised Hebbian learning experimentally realized with analogue memristive crossbar arrays. Sci Rep 8, 8914 (2018). https://doi.org/10.1038/s41598-018-27033-9

DOI: https://doi.org/10.1038/s41598-018-27033-9
