Abstract

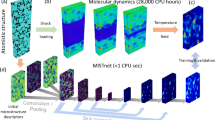

Finding the chemical composition and processing history from a microstructure morphology for heterogeneous materials is desired in many applications. While the simulation methods based on physical concepts such as the phase-field method can predict the spatio-temporal evolution of the materials’ microstructure, they are not efficient techniques for predicting processing and chemistry if a specific morphology is desired. In this study, we propose a framework based on a deep learning approach that enables us to predict the chemistry and processing history just by reading the morphological distribution of one element. As a case study, we used a dataset from spinodal decomposition simulation of Fe–Cr–Co alloy created by the phase-field method. The mixed dataset, which includes both images, i.e., the morphology of Fe distribution, and continuous data, i.e., the Fe minimum and maximum concentration in the microstructures, are used as input data, and the spinodal temperature and initial chemical composition are utilized as the output data to train the proposed deep neural network. The proposed convolutional layers were compared with pretrained EfficientNet convolutional layers as transfer learning in microstructure feature extraction. The results show that the trained shallow network is effective for chemistry prediction. However, accurate prediction of processing temperature requires more complex feature extraction from the morphology of the microstructure. We benchmarked the model predictive accuracy for real alloy systems with a Fe–Cr–Co transmission electron microscopy micrograph. The predicted chemistry and heat treatment temperature were in good agreement with the ground truth.

Similar content being viewed by others

Introduction

Heterogeneous materials are widely used in various industries such as aerospace, automotive, and construction. These materials’ properties greatly depend on their microstructure, which is a function of the chemical composition and operational process of materials production. To accelerate the novel materials design process, the construction of process-structure–property (PSP) linkages is necessary. Establishing PSP linkages with sole experiments is not practical as the process is costly and time-consuming. Therefore, computational methods are used to study the structure of materials and their properties. A basic assumption for computational modeling of materials is that they are periodic on the microscopic scale and can be approximated by representative elements (RVE)1. Finding the effects of process conditions and the chemical composition on the characteristics of the RVE, such as volume fraction, microstructure, grain size, and consequently, the materials’ properties, will lead to the development of PSP linkages. In the past two decades, the phase-field (PF) method has been increasingly used as a robust method for studying the spatio-temporal evolution of the materials’ microstructure and physical properties2. It has been widely used to simulate different evolutionary phenomena, including grain growth and coarsening3, solidification4, thin-film deposition5, dislocation dynamics6, vesicle formation in biological membranes7, and crack propagation8. PF models solve a system of partial differential equations (PDEs) for a set of continuous variables of the processes. However, solving high fidelity PF equations is inherently computationally expensive because it requires solving several coupled PDEs simultaneously9. Therefore, PSP construction, particularly for complex materials, only based on the PF method is inefficient. To address this challenge, machine learning (ML) methods have recently been proposed as an alternative for creating these linkages based on the limited experimental/simulation data or both10.

Artificial intelligence (AI), ML, and data science are beneficial in speeding up and simplifying the process of discovering new materials11. In recent years, using data science in various fields of materials science has increased significantly12,13,14,15,16,17. For instance, data science is applied to help density functional theory calculations to establish a relationship between atoms’ interaction with the properties of materials based on quantum mechanics18,19,20,21. AI is also utilized to establish PSP linkages in the context of materials mechanics. In this case, ML can be used to design new materials with desired properties or employed to optimize the production process of the existing materials for properties improvement. Through data science, researchers will be able to examine the complex and nonlinear behavior of a materials production process that directly affects the materials’ properties22. Many studies have focused on solving cause-effect design, i.e., finding the material properties from the microstructure or processing history. These studies have attempted to predict the structure of the materials from processing parameters or materials properties from microstructure and processing history10,12,23,24,25,26,27,28,29,30. A less addressed but essential problem is a goal-driven design that tries to find the processing history of the materials from their microstructures. In these cases, the optimal microstructure that provides the optimal properties is known, e.g., via physics-based models, and it is desirable to find the chemistry and processing routes that would lead to the desirable microstructure.

The use of microstructure images in ML modeling is challenging. The microstructure quantification has been reported as the central nucleus in PSP linkages construction24. Microstructure quantification is important from two perspectives. First, it can increase the accuracy of the developed data-driven model. Second, an in-depth understanding of the microstructures can improve the comprehension of the effects of process variables and chemical composition on the properties of materials24. In recent years, deep learning (DL) methods have been successfully used in other fields, such as computer vision. Their limited applications in materials science have also proven them as reliable and promising methods25. The main advantages of DL methods are their simplicity, flexibility, and applicability for all types of microstructures. Furthermore, DL has been broadly applied in material science to improve the targeted properties21,26,27,28,29,30,31,32,33. One form of DL models that has been extensively used for feature extraction in various applications such as image, video, voice, and natural language processing is Convolutional Neural Networks (CNN)34,35,36,37. In materials science, CNN has been used for various image-related problems. Cang et al. used CNN to achieve a 1000-fold dimension reduction from the microstructure space38. DeCost et al.39 applied CNN for microstructure segmentation. Xie and Grossman40 used CNN to quantify the crystal graphs to predict the material properties. Their developed framework was able to predict eight different material properties such as formation energy, bandgap, and shear moduli with high accuracy. CNN has also been employed to index the electron backscatter diffraction patterns and determine the crystalline materials’ crystal orientation41. The stiffness in two-phase composites has been predicted successfully by the deep learning approach, including convolutional and fully-connected layers42. In a comparative study, the CNN and the materials knowledge systems (MKS), proposed in the Kalidindi group based on the idea of using the n-point correlation method for microstructures quantification43,44,45, were used for microstructure quantification and then, the produced data were employed to predict the strain in the microstructural volume elements. The comparison showed that the extracted features by CNN could provide more accurate predictions46. Cecen et al.47 proposed CNN to find the salient features of a collection of 5900 microstructures. The results showed that the obtained features from CNN could predict the properties more accurately than the 2-point correlation, while the computation cost was also significantly reduced. Comparing DL approaches, including CNN, with the MKS method, single-agent, and multi-agent methods shows that DL always performs more accurately46,48,49. Zhao et al. utilized the electronic charge density (ECD) as a generic unified 3D descriptor for elasticity prediction. The results showed a better prediction power for bulk modulus than shear modulus50. CNN has also been applied for finding universal 3D voxel descriptors to predict the target properties of solid-state material51. The introduced descriptors outperformed the other descriptors in the prediction of Hartree energies for solid-state materials.

Training a deep CNN usually requires an extensive training dataset that is not always available in many applications. Therefore, a transfer learning method that uses a pretrained network can be applied for new applications. In transfer learning, all or a part of the pretrained networks such as VGG16, VGG1952, Xception53, ResNet54, and Inception55, which were trained by computer vision research community with lots of open source image datasets such as ImageNet, MS, CoCo, and Pascal, can be used for the desired application. In particular, in materials science which generally the image-based data are not greatly abundant, transfer learning could be beneficial. DeCost et al.56 adopted VGG16 to classify the microstructures based on their annealing conditions. Ling et al.12 applied VGG16 to extract the feature from scanning electron microscope (SEM) images and classify them. Lubbers et al.57 used the VGG 19 pretrained model to identify the physical meaningful descriptors in microstructures. Li et al.58 proposed a framework based on VGG19 for microstructure reconstruction and structure–property predictions. The pretrained VGG19 network was also utilized to reconstruct the 3D microstructures from 2D microstructures by Bostanabad59.

Review provided above shows that the majority of the ML-microstructure related works in the materials science community were primarily focused on using ML techniques for microstructure classification60,61,62, recognition63, microstructure reconstruction58,59, or as a feature-engineering-free framework to connect microstructure to the properties of the materials42,64,65. However, the process and chemistry prediction from a microstructure morphology image have received limited attention. This is a critical knowledge gap to address specifically for the problems in them the ideal microstructure or morphology with the specific chemistry associated with the morphology domains are known, but the chemistry and processing which would lead to that ideal morphology is unknown. The problem becomes much more challenging for multicomponent alloys with complex processing steps. Recently, Kautz et al.65 have used the CNN for microstructure classification and segmentation on Uranium alloyed with 10 wt% molybdenum (U-10Mo). They used the segmentation algorithm to calculate the area fraction of the lamellar transformation products of α-U + γ-UMo, and by feeding the total area fraction into the Johnson–Mehl–Avrami-Kolmogorov equation, they were able to predict the annealing parameters, i.e., time and temperature. However, Kautz’s et al.65 work for aging time prediction did not consider the morphology and particle distribution, and also, no chemistry was involved in the model. To address the knowledge gap, in this work we develop a mixed-data deep neural network that is capable to predict the chemistry and processing history of a micrograph. The model alloy used in this work is Fe–Cr–Co permanent magnets. These alloys experience spinodal decomposition at temperatures around 853 – 963 K. We use the PF method to create the training and test dataset for the DL network. CNN will quantify the produced microstructures by the PF method, then the salient features will be used by another deep neural network to predict the temperature and chemical composition.

Methods

Phase-field modeling

With the enormous increase in computational power and advances in numerical methods, the PF approach has become a powerful tool for quantitative modeling of microstructures' temporal and spatial evolution. Some applications of this method include modeling materials undergoing martensitic transformation66, crack propagation67, grain growth68, and materials microstructure prediction for optimization of their properties69.

The PF method eliminates the need for the system to track each moving boundary by having the interfaces to be of finite width where they gradually transform from one composition or phase to another2. This essentially causes the system to be modeled as a diffusivity problem, which can be solved by using the continuum nonlinear PDEs. There are two main PF PDEs for representing the evolution of various PF variables. One being the Allen–Cahn equation70 for solving non-conserved order parameters (e.g., phase regions and grains), and the other one being the Cahn–Hilliard equation71 for solving conserved order parameters (e.g., concentrations).

Since the diffusion of constituent elements controls the process of phase separation, we only need to track the conserved variables, i.e., Fe, Cr, and Co concentration, during isothermal spinodal phase decomposition. Thus, our model will be governed by Cahn–Hilliard equations. The PF model in this work is primarily adopted from72. For the spinodal decomposition of the Fe–Cr–Co ternary system, the Cahn–Hilliard equations are,

The microstructure evolution is primarily driven by the minimization of the total free energy Ftot of the system. The free energy functional, using N conserved variables ci at the location \(\vec{r}\) is described by:

In this model, N = 3 conserved variables are cFe, cCr, and cCo, and they denote the composition of Fe, Cr, and Co, respectively. fgr is the gradient energy density and is described by

where κi is the gradient energy coefficient. In this case, κ is considered a constant value. floc is the local Gibbs free energy density as a function of all concentrations, ci, and temperature, T. For this work, we will model the body-centered cubic phase of Fe–Cr–Co, where the Gibbs free energy of the system is described as72,

where fi0 is the Gibbs free energy of the pure element i and fE is the excess free energy defined by

where LFe,Cr, LFe,Co, and LCr,Co are interaction parameters. fmg is the magnetic energy contribution and can be expressed as

where β is the atomic magnetic moment, f(τ) is a function of τ ≡ T/TC. TC is the Curie temperature. Eel in Eq. (3) is the elastic strain energy added to the system and is expressed as

where \(\varepsilon_{ij}^{el} \left( {\vec{r},t} \right)\) is the elastic strain and \(C_{ijkl}\) are the elastic coefficients of the stiffness tensor. \(\varepsilon_{ij}^{0} \left( {\vec{r},t} \right)\) is the eigen-strain and is expressed by

where εCr and εCo are lattice mismatches between Cr with Fe and Co with Fe, respectively. \(c_{Cr}^{0}\) and \(c_{Co}^{0}\) are the initial concentrations of Cr and Co, respectively and δij is the Kronecker delta. The constrained strain, \(\varepsilon_{ij}^{c} \left( {\vec{r},t} \right)\), is solved using the finite element method.

Mij in Eq. (2) are Onsager coefficients and are scalar mobilities from the coupled system involving the concentrations. They can be determined by72,

The mobility Mi of each element i is determined by

where \(D_{i}^{0}\) is the self-diffusion coefficient and Qi is the diffusion activation energy.

The Fe–Cr–Co evolutionary PDEs were solved using the Multiphysics Object-Oriented Simulation Environment (MOOSE) framework73. MOOSE is an open-source, highly parallel, finite element package developed by Idaho National Laboratory in which we took advantage of their modular structure to build our PF simulations. Using MOOSE’s prebuilt series of weak form residuals of the Cahn–Hilliard equations, we solved the coupled Cahn–Hilliard equations with the input parameters from Table S1 in Supplementary Materials.

Training and test dataset

Since the compositions are subject to the constraint that they must sum to one, the dataset was produced based on the mixture design as a design of experiments method74. The Simplex-Lattice75 designs were adopted to provide the data for simulation. The simulation variables and their range of values are given in Table 1. The simulations were run on Boise State University R2 cluster computers76 using the MOOSE framework73.

After running the simulations, the microstructures were collected from the results showing the phase separation. The extracted microstructures for Fe, i.e., the morphology of Fe distribution, from the PF simulations, along with the minimum and maximum compositions of Fe in each microstructure, are utilized as the inputs to predict spinodal temperature, Cr, and Co compositions as processing history parameters. Indeed, the input data is a mixed dataset combined of microstructures, as image data, and Fe composition, as numerical or continuous data. Since these values constitute different data types, the machine learning model must be able to ingest the mixed data. In general, handling the mixed data is challenging because each data type may require separate preprocessing steps, including scaling, normalization, and feature engineering77.

Deep learning methodology

Deep learning (DL), as an artificial intelligence (AI) tool, is usually used for image and natural language processing as well as object and speech recognition based on human brain mimicking36,78. Indeed, DL is a deep neural network that can be applied for supervised, e.g., classification and regression tasks, and unsupervised, e.g., clustering, learning. In this work, since we have two different data types as input, two various networks are needed for data processing. The numerical data is fed into fully-connected layers while image features are extracted through the convolutional layers. For images involving a large number of pixel values, it is often not feasible to directly utilize all the pixel values for fully-connected layers because it can cause overfitting, increased complexity, and difficulty in model convergence. Hence, convolutional layers are applied to reduce the dimensionality of the image data by finding the image features61,79.

Fully-connected layers

Fully-connected layers are hidden layers consist of hidden neurons and activation function80. The number of hidden neurons is usually selected based on trial and error. The neural networks can predict complex nonlinear behaviors of systems through activation functions. Any nonlinear function that is differentiable can be used as an activation function. However, there are some activation functions such as rectified linear (ReLU), leaky rectified linear, hyperbolic tangent (Tanh), sigmoid, Swish, and softmax that have been successfully used in different applications in neural networks81. In particular, ReLU (f(x) = max (0, x)) and Swish (f(x) = x sigmoid(x)) activation functions have been recommended for hidden layers in deep neural networks82.

Convolutional neural networks

A convolutional neural network (CNN) is a deep network that is applied for image processing and computer vision tasks. For the first time, LeCun et al. proposed using CNN in image recognition83. CNN, like other deep neural networks, consists of input, output, and hidden layers. But the main difference lies in the use of hidden layers consisting of convolutional, pooling, and fully-connected layers that follow each other. Several convolutional and pooling layers can be designed in the CNN architectures.

Convolutional layers can extract the salient features of images without losing the information. At the same time, the dimensionality of the generated data gets reduced and then fed as input to the fully-connected layer. Two significant advantages of CNN are parameter sharing and sparsity of the connections. A schematic diagram for CNN is given in Fig. 1. The convolutional layer consists of filters that pass over the image and scanning the pixel values to make a feature map. The produced map proceeds through the activation function to add nonlinearity property. The pooling layer involves a pooling operation, e.g., maximum or average, which acts as a filter on the feature map. The pooling layer reduces the size of the feature map by pooling operation. Different combinations of convolutional and pooling layers are usually used in various CNN architectures. Finally, the fully-connected layers are added to train on image extracted features for a particular task such as classification or regression.

Similar to other neural networks, a cost function is used to train a CNN and update the weights and biases by backpropagation. There are many hyperparameters such as the number of filters, size of filters, regularization values, dropout values, optimizer parameters, initial weights, and biases that must be initialized before training. Training a CNN usually needs an extensive training dataset that is not always available for all applications. In this situation, transfer learning can be helpful in developing a CNN. In transfer learning, all or part of a pretrained network like VGG16, VGG1952, Xception53, ResNet54, and Inception55, which were trained by computer vision research community with lots of open source image datasets such as ImageNet, MS, CoCo, and Pascal, can be used for the desired application. The state-of-the-art pretrained network is EfficientNet which was proposed by Tan and Le84. This method is based on the idea that scaling up the CNN can increase its accuracy85. Since there was no complete understanding of the effect of network enlargement on the accuracy, Tan and Le proposed a systematic approach for scaling up the CNNs. There are different ways to scale up the CNNs by their depth85, width86, and resolution87. Tan and Le proposed to scale up all the depth, width, and resolution factors for the CNN with fixed scaling coefficients84. The results demonstrated that their proposed network, EfficientNet-B7, had better accuracy than the best-existing networks while uses 8.4 times fewer parameters and performs 6.1 times faster. In addition, they provided other EfficientNet-B0 to -B6, which can overcome the models with the corresponding scale such as ResNet-15285 and AmoebaNet-C88 in terms of accuracy with much fewer parameters. Due to the outstanding performance of EfficientNet, although it is trained based on the ImageNet dataset which is completely different from materials microstructures, it seems the EfficientNets convolutional layers have the potential to extract the features of images from other sources like materials microstructures.

Proposed model

The training and test datasets are produced using the PF method. In this work, two different algorithms, including CNN and transfer learning, were proposed to extract the salient features of the microstructure morphologies. We applied a proposed CNN (Fig. S1) or part of pretrained EfficienctNet B-6 and B-7 convolutional layers (Fig. 2) to find the features of the microstructures. The architecture of the proposed CNN was found by testing different combinations of convolutional layers and their parameters based on the best accuracy. In the transfer learning part, different layers of the pretrained convolutional layers were tested to find the best convolutional layers for feature extraction.

On the other hand, the minimum and maximum Fe composition in the microstructure, as numerical data, is fed into the fully-connected layers. The extracted features from microstructures and the output of the fully-connected layers are combined to feed other fully-connected layers to predict the processing temperature and initial Cr and Co compositions. Different hyperparameters such as network architecture, cost function, and optimizer are tested to find the model with the highest accuracy. The model specifications, compilations (here loss function, optimizer, and metrics), and cross-validation parameters are listed in Table 2.

Results and discussion

Phase-field modeling and dataset generation

Different microstructures are produced by PF modeling for different chemical compositions and temperatures. The chemical compositions and temperature were designed based on the design of experiment method. Since the chemical compositions are subject to the constraint that they must sum to one, the Simplex-Lattice design as a standard mixture design was adopted to produce the samples. In this regard, the compositions start from 0.05 and increase to 0.90 at 0.05 intervals, and the temperature rises from 853 to 963 K at 10 K increment, see Table 1. Therefore, 2053 different samples were simulated by the PF method, and the microstructures were constructed for different chemical compositions and temperatures. All the proposed operating conditions were simulated for the 100 h spinodal decomposition process. Figure 3 depicts three sample results of the PF simulation. The MOOSE-generated data can be presented in different color formats. In most transmission electron microscopy (TEM) images in literature, the Fe-rich and Cr-rich phases have been shown by bright and dark contrasts, respectively. We followed the same coloring for the extracted microstructures from the MOOSE. The Chigger python library in MOOSE has been used for microstructures extraction.

Since decomposition does not occur in all the proposed operating conditions and chemistries, the microstructures showing the 0.05 difference in Fe composition between Cr-rich and Fe-rich phases were considered spinodally decomposed results. Hence, 454 samples in which decomposition has taken place are used to create the database. 80% of 454 samples were used for training and 20% for testing. The training was validated by fivefold cross-validation. The Fe-based composition microstructure morphologies, as well as minimum and maximum of Fe compositions in the microstructure along with corresponding chemical compositions and temperatures, form the dataset. A sample workflow on the dataset construction is given in Fig. 4.

Convolutional layers for feature extraction

The overreaching goal of the convolutional layers is feature extraction from the images. First, we train a proposed CNN, which includes three convolutional layers, batch normalization, max pooling, and ReLU activation function. Filters in each convolutional layer encode the salient features of images. Once the input images are fed into the network, the filters in the convolutional layers are activated to produce the response maps as an output of the filters. Some response maps of each convolutional layer in the proposed CNN are given in Fig. 5. Then, as a comparison, the EfficientNet-B6 and EfficientNet-B7 convolutional layers were also applied to extract the salient features of produced microstructure by the PF method. The EfficientNet-B6 and EfficientNet-B7 have 43 and 66 million parameters which are less than other network parameters with similar accuracy. The trained weights and biases of the EfficientNet models on the ImageNet dataset for classification tasks are loaded for convolutional layers without top fully-connected layers. EfficientNet-B6 and EfficientNet-B7 have 668 and 815 layers, including 139 and 168 convolutional layers, respectively. The response maps for some layers are given in Fig. 6 and Fig. S2 for EfficientNetB7 and EfficientNetB6, respectively. They represent the locations of the encoded features by the filters on the input image.

The response maps for both trained CNN and pretrained EfficientNet show that the first layers capture the simple features like edges, colors, and orientations, while the deeper layers extract more complicated features that are less visually interpretable, see Fig. 6; similar observations are reported in other studies53,55,89. The filters from the first layers can extensively detect the edges; hence the microstructures are segmented by the borders of two different phases. By going into deeper layers, understanding the extracted information by the filters becomes more difficult and can only be analyzed by their effects on the accuracy of the final model. Since the pretrained EfficientNet has deeper layers, they can extract more complicated features from the microstructure morphologies. Indeed, we can use different layers for microstructure information extraction and test them to predict the processing history and find the most optimum network.

Temperature and chemical compositions prediction

The mixed dataset contains microstructure morphologies as image data and the minimum and maximum of Fe composition in the microstructures as numeric data. The most common reported experimental images in literature for the spinodally decomposed microstructures are greyscale TEM images. To enable the model to predict the chemistry and processing history of the experimental microstructures, we have used the greyscale images in the network training. The proposed CNN, as well as EfficientNet-B6 and EfficientNet-B7 pretrained networks, were used for microstructures’ feature extraction. Then, the extracted features are passed through the fully-connected layers with batch normalization, Swish activation function, and dropout. The numeric data was proceeded by fully-connected layers with the ReLU activation function. The output of both layers was combined with other fully-connected layers to predict temperature and chemical compositions through the linear activation in the last fully-connected layer. After testing different fully-connected layer sizes, the best architecture was selected based on prediction accuracy and stability, which is shown in Fig. S1, supplementary materials, for the proposed CNN and Fig. 7 for pretrained networks. The models were trained on XSEDE resources90.

As a starting point, the proposed CNN network with fully-connected layers was trained to predict the processing history parameters. After testing different CNN architectures, the presented network in Fig. S1, in Supplementary Materials, provided the best results that are given in Fig. S3. The results show that the proposed network can predict the chemical compositions reasonably well, but the temperature accuracy is poor. Temperature is a key parameter in the spinodal decomposition process and developing a model with higher accuracy is required. To increase the accuracy, we need to extract more subtle features from the morphologies. However, training a CNN with more layers requires numerous training data. A pretrained network can extract more valuable features from images and consequently can be helpful for accuracy improvement. Therefore, after fixing the architecture of fully-connected layers, different layers of EfficientNet-B6 and EfficientNet-B7 were tested to find the best layer for microstructures’ feature extraction. Herein, layers 96, 111, 142, 231, 304, 319, 362, 392, 496, 556, 631, 659, and 663 from EfficientNet-B6 and layers 25, 108, 212, 286, 346, 406, 464, 509, 613, 673, 806, and 810 from EfficientNet-B7 were selected to quantify the microstructures. The models were run, based on the given parameters in Table 2, for different layers. The model training was repeated five times. The average R Squares and mean square error (MSE) for cross-validation and test set are given in supplementary materials, Tables S2 and S3, for EfficientNet-B6 and EfficientNet-B7, respectively. Indeed, the models were validated by fivefold cross-validation during training, and the test set contains the data that the model never sees in the training process. According to the results, both trained models based on EfficientNet-B6 and EfficientNet-B7 can predict the Co composition very well and while the prediction of temperature and Cr composition is good, they are more challenging. Accordingly, the most accurate prediction belongs to the models that use up to layer 319 of the EfficientNet-B6 and layer 806 of EfficientNet-B7 for microstructures’ quantification.

In addition to cross-validation and test set accuracy, which can be used for overfitting identification, tracking the loss change in each epoch during the training process can also help in overfitting detection. Figure 8a depicts the loss change in each epoch for the developed model based on EfficientNet-B7, a corresponding plot for EfficientNet-B6 is available in supplementary materials (Fig. S4a). Figure 8a shows that both training and validation losses reduce smoothly with the epoch increase. The insignificant gap between the train and validation losses proves that the models’ parameters converge to the optimal values without overfitting. To better understand the application of the developed models, the models were tested by a sample from the test set; the microstructure belongs to the spinodal decomposition of 20% Fe, 40% Cr, and 40% Co at 913 K after 100 h. The model predictions for temperature and chemical compositions are given in Fig. 8b, for EfficientNet-B7, and Fig. S4b, for EfficientNet-B6. The comparison between the ground truth and prediction demonstrates that the models can predict the chemistry and processing history reasonably well. To quantify the models’ predictive accuracy on all test data points, we have used the parity plots in which the models’ predictions are compared with ground truth in an x–y coordinate system. For an ideal 100% accurate model all data points will overlap on a 45-degree line. The parity plots of the models, i.e., EfficientNet-B7 and EfficientNet-B6, for temperature, Cr composition, and Co composition along with their accuracy parameters are given in Fig. 8c and Fig. S4c. The results show that the models can predict the Co composition with the highest accuracy. It seems that temperature prediction is the most challenging variable for the models, but still, there is a good agreement between the models’ prediction and ground truth.

(a) Training and validation loss per each epoch, (b) prediction of temperature and chemical compositions for a random test dataset, and (c) the parity plots of temperature and chemical compositions for the testing dataset from the proposed model when first 806 layers of EfficientNetB7 are used for microstructures’ feature extraction (The size of the input images are 224 × 224 pixels).

The results include two important points. First, while the extracted features from the shallow trained CNN can predict the compositions well, we need deep CNN to precisely predict the temperature. For this reason, the deep pretrained EfficientNet networks were used, which could predict temperature with higher accuracy. This observation indicates that the compositions are more relevant to simple extracted features of the microstructure morphology, however, more complicated extracted features are required to estimate the temperature. The physical concepts of the problem can also explain this. A small change in compositions would alter the microstructure morphology much more dramatically than a small change in temperature. The differences among the microstructures with different compositions and the same processing temperature are easily recognizable. For example, with a slight change in chemistry the volume fraction of the decomposed phases would vary and this information, i.e., change in the number of white and black pixels, can easily get extracted from the very first layers of the network. However, there are subtle differences between the microstructure morphologies when we slightly change the processing temperature. Therefore, much more complex features are needed to distinguish the differences among the morphologies with small processing temperature variations. Extraction of these complex features requires deeper convolutional layers. In addition, with convolutional layers increasing, the receptive field size would improve. And that ensures no important information is left out from the microstructure when making predictions. Therefore, more information is extracted from the microstructures, and it would also increase the temperature prediction accuracy. On the other hand, training a deep CNN with limited training and test dataset is not practical. To overcome this challenge, transfer learning can be helpful, and some other studies have shown that pretrained networks are effective in feature extraction in materials science-related micrographs12,23,39,60,91,92,93.

Validation of the proposed model with the experimental data

The model accuracy against the test dataset, i.e., the data that the model has never seen in the training process, is good, but the test dataset is still from phase-field simulation. Since the ultimate goal of the developed framework is to facilitate the microstructure mediated materials design via predicting chemistry and processing history for experimental microstructures, it is valuable to test the model accuracy on the real microstructures. For this purpose, we have tested the model against an experimental TEM image for spinodal decomposition of Fe–Cr–Co with initial composition 46% Fe, 31% Cr, and 23% Co after 100 h heat treatment at 873 K from Okada et al.94. Since the Fe composition of the micrograph was not reported in Okada et al.’s paper, we selected the Fe composition by interpolating between the adjacent simulation points in our database. Figure 9 shows the predictions of the proposed network for an experimental TEM microstructure.

adopted from Okada et al.94. The original image was cropped to be in the desired size of 224 × 224 pixels.

Prediction of chemistry and processing temperature for an experimental TEM image

While Co composition and processing temperature prediction is very good, we see a 16% error in Cr composition prediction. We believe the error could stem from several factors. Firstly, the TEM micrograph that we used does not have the image quality of the training dataset. Secondly, the Fe composition associated with the micrograph was not reported in the original paper94, and we used a phase-field-informed Fe composition. Thirdly, the dimension of the experimental image was larger than the simulated data, and it was cropped to be at the same size as the required input microstructure size. Despite all these limitations, the proposed model based on the first 806 convolutional layers of EfficientNetB7 predicts the chemistry and processing temperature of an experimental TEM image reasonably well. And it demonstrates that the developed model in this work is suitable for finding the process history behind the experimental microstructures.

Beyond the specific model alloy that we used in this work, the developed model can also be generalized to other materials by considering the material production processes. The developed framework can be used for other ternary alloys that are produced by spinodal decomposition. The model performance in the process history and chemistry prediction should be considered for other spinodal decomposed alloys with less or more elements. The domain adaptation methods such as unsupervised domain adaptation95 can provide the ability to use the developed model for other spinodal decomposed alloys. In practice, the proposed model needs two experimental inputs, 1) a TEM micrograph that shows the morphology and, 2) X-ray fluorescence spectroscopy (XRF) that provides the corresponding compositions.

Conclusion

We introduced a framework based on a deep neural network to predict the chemistry and processing history from the materials’ microstructure morphologies. As a case study, we generated the training and test dataset from phase-field modeling of the spinodal decomposition process of Fe–Cr–Co alloy. We considered a mixed input dataset by combining the image data, the produced microstructure morphologies based on Fe composition, with numeric data, the minimum and maximum of Fe composition in the microstructure. The temperature and chemical compositions were predicted as processing history. We quantified the microstructures by a proposed CNN and different convolutional layers of EfficientNet-B6 and EfficientNet-B7 pretrained networks. Then, the produced features were combined with the output of a fully-connected layer for numeric data processing by other fully-connected layers to predict processing history. After testing different architectures, the best network was found based on the model’s accuracy. A detailed analysis of the model’s performance indicated that the model parameters were optimized based on training and validation loss reduction. The results show that while the simple extracted features from the microstructure morphology by the first convolutional layers are enough for the chemistry prediction, the temperature needs more complicated features that can be extracted by deeper layers. The model benchmark against an experimental TEM micrograph indicates the model’s well predictive accuracy for real alloy systems. We demonstrated that the pretrained convolutional layers of EfficientNet networks could be used to extract the meaningful features relevant to the compositions and temperature from the microstructure morphology. In general, the proposed models were able to predict the processing history based on the materials’ microstructure reasonably well.

Data availability

The raw/processed data and codes required to reproduce these findings are available at https://github.com/Amir1361/Materials_Design_by_ML_DL.

References

Rao, C. & Liu, Y. Three-dimensional convolutional neural network (3D-CNN) for heterogeneous material homogenization. Comput. Mater. Sci. 184, 109850. https://doi.org/10.1016/j.commatsci.2020.109850 (2020).

Chen, L.-Q. Phase-field models for microstructure evolution. Annu. Rev. Mater. Res. 32, 113–140. https://doi.org/10.1146/annurev.matsci.32.112001.132041 (2002).

Miyoshi, E. et al. Large-scale phase-field simulation of three-dimensional isotropic grain growth in polycrystalline thin films. Modell. Simul. Mater. Sci. Eng. 27, 054003. https://doi.org/10.1088/1361-651x/ab1e8b (2019).

Zhao, Y., Zhang, B., Hou, H., Chen, W. & Wang, M. Phase-field simulation for the evolution of solid/liquid interface front in directional solidification process. J. Mater. Sci. Technol. 35, 1044–1052. https://doi.org/10.1016/j.jmst.2018.12.009 (2019).

Stewart, J. A. & Dingreville, R. Microstructure morphology and concentration modulation of nanocomposite thin-films during simulated physical vapor deposition. Acta Mater. 188, 181–191. https://doi.org/10.1016/j.actamat.2020.02.011 (2020).

Beyerlein, I. J. & Hunter, A. Understanding dislocation mechanics at the mesoscale using phase field dislocation dynamics. Philos Trans A Math Phys Eng Sci 374, 20150166. https://doi.org/10.1098/rsta.2015.0166 (2016).

Elliott, C. M. & Stinner, B. A surface phase field model for two-phase biological membranes. SIAM J. Appl. Math. 70, 2904–2928 (2010).

Karma, A., Kessler, D. A. & Levine, H. Phase-field model of mode III dynamic fracture. Phys. Rev. Lett. 87, 045501 (2001).

Montes de Oca Zapiain, D., Stewart, J. A. & Dingreville, R. Accelerating phase-field-based microstructure evolution predictions via surrogate models trained by machine learning methods. npj Comput. Mater. 7, 3. https://doi.org/10.1038/s41524-020-00471-8 (2021).

Brough, D. B., Wheeler, D. & Kalidindi, S. R. Materials knowledge systems in python—A data science framework for accelerated development of hierarchical materials. Integr. Mater. Manuf. Innov. 6, 36–53. https://doi.org/10.1007/s40192-017-0089-0 (2017).

Meredig, B. Five high-impact research areas in machine learning for materials science. Chem. Mater. 31, 9579–9581. https://doi.org/10.1021/acs.chemmater.9b04078 (2019).

Ling, J. et al. Building data-driven models with microstructural images: Generalization and interpretability. Mater. Discov. 10, 19–28. https://doi.org/10.1016/j.md.2018.03.002 (2017).

Farizhandi, A. A. K., Zhao, H. & Lau, R. Modeling the change in particle size distribution in a gas-solid fluidized bed due to particle attrition using a hybrid artificial neural network-genetic algorithm approach. Chem. Eng. Sci. 155, 210–220 (2016).

Farizhandi, A. A. K., Zhao, H., Chen, T. & Lau, R. Evaluation of material properties using planetary ball milling for modeling the change of particle size distribution in a gas-solid fluidized bed using a hybrid artificial neural network-genetic algorithm approach. Chem. Eng. Sci. 215, 115469 (2020).

Farizhandi, A. A. K. et al. Evaluation of carrier size and surface morphology in carrier-based dry powder inhalation by surrogate modeling. Chem. Eng. Sci. 193, 144–155. https://doi.org/10.1016/j.ces.2018.09.007 (2019).

Farizhandi, K. & Abbas, A. Surrogate Modeling Applications in Chemical and Biomedical Processes Doctor of Philosophy thesis, Nanyang Technological University (2017).

Farizhandi, A. A. K., Alishiri, M. & Lau, R. Machine learning approach for carrier surface design in carrier-based dry powder inhalation. Comput. Chem. Eng. https://doi.org/10.1016/j.compchemeng.2021.107367 (2021).

Li, L. et al. Understanding machine-learned density functionals. Int. J. Quantum Chem. 116, 819–833. https://doi.org/10.1002/qua.25040 (2016).

Nagai, R., Akashi, R. & Sugino, O. Completing density functional theory by machine learning hidden messages from molecules. npj Comput. Mater. 6, 43. https://doi.org/10.1038/s41524-020-0310-0 (2020).

Snyder, J. C., Rupp, M., Hansen, K., Müller, K.-R. & Burke, K. Finding density functionals with machine learning. Phys. Rev. Lett. 108, 253002. https://doi.org/10.1103/PhysRevLett.108.253002 (2012).

Gubernatis, J. E. & Lookman, T. Machine learning in materials design and discovery: Examples from the present and suggestions for the future. Phys. Rev. Mater. 2, 120301. https://doi.org/10.1103/PhysRevMaterials.2.120301 (2018).

Liu, R. et al. A predictive machine learning approach for microstructure optimization and materials design. Sci. Rep. 5, 11551. https://doi.org/10.1038/srep11551 (2015).

Kautz, E. et al. An image-driven machine learning approach to kinetic modeling of a discontinuous precipitation reaction. Mater. Charact. https://doi.org/10.1016/j.matchar.2020.110379 (2020).

Bostanabad, R. et al. Computational microstructure characterization and reconstruction: Review of the state-of-the-art techniques. Prog. Mater Sci. 95, 1–41. https://doi.org/10.1016/j.pmatsci.2018.01.005 (2018).

Agrawal, A. & Choudhary, A. Deep materials informatics: Applications of deep learning in materials science. MRS Communications 9, 779–792. https://doi.org/10.1557/mrc.2019.73 (2019).

Jha, D. et al. Elemnet: Deep learning the chemistry of materials from only elemental composition. Sci. Rep. 8, 1–13 (2018).

Xue, D. et al. An informatics approach to transformation temperatures of NiTi-based shape memory alloys. Acta Mater. 125, 532–541 (2017).

Meredig, B. et al. Can machine learning identify the next high-temperature superconductor? Examining extrapolation performance for materials discovery. Mol. Syst. Des. Eng. 3, 819–825 (2018).

Meredig, B. et al. Combinatorial screening for new materials in unconstrained composition space with machine learning. Phys. Rev. B 89, 094104 (2014).

Teichert, G. H. & Garikipati, K. Machine learning materials physics: Surrogate optimization and multi-fidelity algorithms predict precipitate morphology in an alternative to phase field dynamics. Comput. Methods Appl. Mech. Eng. 344, 666–693 (2019).

Pilania, G., Wang, C., Jiang, X., Rajasekaran, S. & Ramprasad, R. Accelerating materials property predictions using machine learning. Sci. Rep. 3, 1–6 (2013).

Del Rosario, Z., Rupp, M., Kim, Y., Antono, E. & Ling, J. Assessing the frontier: Active learning, model accuracy, and multi-objective candidate discovery and optimization. J. Chem. Phys. 153, 24–112 (2020).

Jha, D. et al. Enhancing materials property prediction by leveraging computational and experimental data using deep transfer learning. Nat. Commun. 10, 1–12 (2019).

Chen, Y., Duffner, S., Stoian, A., Dufour, J.-Y. & Baskurt, A. Deep and low-level feature based attribute learning for person re-identification. Image Vis. Comput. 79, 25–34. https://doi.org/10.1016/j.imavis.2018.09.001 (2018).

Hinton, G. E. To recognize shapes, first learn to generate images. Prog. Brain Res. 165, 535–547 (2007).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Amodei, D. et al. in International Conference on Machine Learning 173–182.

Cang, R. et al. Microstructure representation and reconstruction of heterogeneous materials via deep belief network for computational material design. J. Mech. Des. 139, 66 (2017).

DeCost, B. L., Lei, B., Francis, T. & Holm, E. A. High throughput quantitative metallography for complex microstructures using deep learning: A case study in ultrahigh carbon steel. Microsc. Microanal. 25, 21–29 (2019).

Xie, T. & Grossman, J. C. Crystal graph convolutional neural networks for an accurate and interpretable prediction of material properties. Phys. Rev. Lett. 120, 145–301 (2018).

Ryan, K., Lengyel, J. & Shatruk, M. Crystal structure prediction via deep learning. J. Am. Chem. Soc. 140, 10158–10168. https://doi.org/10.1021/jacs.8b03913 (2018).

Yang, Z. et al. Deep learning approaches for mining structure-property linkages in high contrast composites from simulation datasets. Comput. Mater. Sci. 151, 278–287. https://doi.org/10.1016/j.commatsci.2018.05.014 (2018).

Landi, G., Niezgoda, S. R. & Kalidindi, S. R. Multi-scale modeling of elastic response of three-dimensional voxel-based microstructure datasets using novel DFT-based knowledge systems. Acta Mater. 58, 2716–2725 (2010).

Kalidindi, S. R., Niezgoda, S. R., Landi, G., Vachhani, S. & Fast, T. A novel framework for building materials knowledge systems. Comput. Mater. Contin. 17, 103–125 (2010).

Fast, T. & Kalidindi, S. R. Formulation and calibration of higher-order elastic localization relationships using the MKS approach. Acta Mater. 59, 4595–4605 (2011).

Yang, Z. et al. Establishing structure-property localization linkages for elastic deformation of three-dimensional high contrast composites using deep learning approaches. Acta Mater. 166, 335–345. https://doi.org/10.1016/j.actamat.2018.12.045 (2019).

Cecen, A., Dai, H., Yabansu, Y. C., Kalidindi, S. R. & Song, L. Material structure-property linkages using three-dimensional convolutional neural networks. Acta Mater. 146, 76–84. https://doi.org/10.1016/j.actamat.2017.11.053 (2018).

Liu, R., Yabansu, Y. C., Agrawal, A., Kalidindi, S. R. & Choudhary, A. N. Machine learning approaches for elastic localization linkages in high-contrast composite materials. Integr. Mater. Manuf. Innov. 4, 192–208. https://doi.org/10.1186/s40192-015-0042-z (2015).

Liu, R. et al. Context aware machine learning approaches for modeling elastic localization in three-dimensional composite microstructures. Integr. Mater. Manuf. Innov. 6, 160–171. https://doi.org/10.1007/s40192-017-0094-3 (2017).

Zhao, Y. et al. Predicting elastic properties of materials from electronic charge density using 3D deep convolutional neural networks. J. Phys. Chem. C 124, 17262–17273. https://doi.org/10.1021/acs.jpcc.0c02348 (2020).

Kajita, S., Ohba, N., Jinnouchi, R. & Asahi, R. A universal 3D voxel descriptor for solid-state material informatics with deep convolutional neural networks. Sci. Rep. 7, 16991. https://doi.org/10.1038/s41598-017-17299-w (2017).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014).

Chollet, F. in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 1251–1258.

He, K., Zhang, X., Ren, S. & Sun, J. in European Conference on Computer Vision 630–645 (Springer).

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J. & Wojna, Z. in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2818–2826.

DeCost, B. L., Francis, T. & Holm, E. A. Exploring the microstructure manifold: image texture representations applied to ultrahigh carbon steel microstructures. Acta Mater. 133, 30–40 (2017).

Lubbers, N., Lookman, T. & Barros, K. Inferring low-dimensional microstructure representations using convolutional neural networks. Phys. Rev. E 96, 052111 (2017).

Li, X. et al. A transfer learning approach for microstructure reconstruction and structure-property predictions. Sci. Rep. 8, 1–13 (2018).

Bostanabad, R. Reconstruction of 3D microstructures from 2D images via transfer learning. Comput. Aided Des. 128, 102–906 (2020).

Cohn, R. & Holm, E. Unsupervised machine learning via transfer learning and k-means clustering to classify materials image data. Integr. Mater. Manuf. Innov. https://doi.org/10.1007/s40192-021-00205-8 (2021).

Russakovsky, O. et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vision 115, 211–252 (2015).

Luo, Q., Holm, E. A. & Wang, C. A transfer learning approach for improved classification of carbon nanomaterials from TEM images. Nanoscale Adv. 3, 206–213. https://doi.org/10.1039/D0NA00634C (2021).

Chowdhury, A., Kautz, E., Yener, B. & Lewis, D. Image driven machine learning methods for microstructure recognition. Comput. Mater. Sci. 123, 176–187. https://doi.org/10.1016/j.commatsci.2016.05.034 (2016).

Ma, W. et al. Image-driven discriminative and generative machine learning algorithms for establishing microstructure–processing relationships. J. Appl. Phys. 128, 134901. https://doi.org/10.1063/5.0013720 (2020).

Kautz, E. et al. An image-driven machine learning approach to kinetic modeling of a discontinuous precipitation reaction. Mater. Charact. 166, 110379. https://doi.org/10.1016/j.matchar.2020.110379 (2020).

Moshkelgosha, E. & Mamivand, M. Concurrent modeling of martensitic transformation and crack growth in polycrystalline shape memory ceramics. Eng. Fract. Mech. 241, 107–403 (2021).

Landis, C. M. & Hughes, T. J. Phase-Field Modeling and Computation of Crack Propagation and Fracture (Texas Univ at Austin, 2014).

Mehrer, H. in Diffusion in solids: Fundamentals, methods, materials, diffusion-controlled processes 553-582 (Springer, 2007).

Furrer, D. U. Application of phase-field modeling to industrial materials and manufacturing processes. Curr. Opin. Solid State Mater. Sci. 15, 134–140 (2011).

Allen, S. M. & Cahn, J. W. A microscopic theory for antiphase boundary motion and its application to antiphase domain coarsening. Acta Metall. 27, 1085–1095. https://doi.org/10.1016/0001-6160(79)90196-2 (1979).

Cahn, J. W. & Hilliard, J. E. Free energy of a nonuniform system. I. Interfacial free energy. J. Chem. Phys. 28, 258–267. https://doi.org/10.1063/1.1744102 (1958).

Koyama, T. & Onodera, H. Phase-field simulation of phase decomposition in Fe−Cr−Co alloy under an external magnetic field. Met. Mater. Int. 10, 321–326 (2004).

Permann, C. J. et al. MOOSE: Enabling massively parallel multiphysics simulation. SoftwareX 11, 100430. https://doi.org/10.1016/j.softx.2020.100430 (2020).

Cornell, J. A. Experiments with Mixtures: Designs, Models, and the Analysis of Mixture Data, Vol. 403 (Wile, 2011).

Cornell, J. A. Experiments with mixtures: A review. Technometrics 15, 437–455. https://doi.org/10.1080/00401706.1973.10489071 (1973).

Department, B. S. s. R. C. (Boise State University Boise, ID, 2017).

Yuan, Z., Jiang, Y., Li, J. & Huang, H. Hybrid-DNNs: Hybrid deep neural networks for mixed inputs. arXiv preprint arXiv:2005.08419 (2020).

Wang, H. & Raj, B. On the origin of deep learning. arXiv preprint arXiv:1702.07800 (2017).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. Imagenet classification with deep convolutional neural networks. Commun. ACM 60, 84–90 (2017).

Nielsen, M. A. Neural Networks and Deep Learning, Vol. 25 (Determination press, 2015).

Nwankpa, C., Ijomah, W., Gachagan, A. & Marshall, S. Activation functions: Comparison of trends in practice and research for deep learning. arXiv preprint arXiv:1811.03378 (2018).

Szandała, T. in Bio-inspired Neurocomputing 203–224 (Springer, 2021).

Lecun, Y., Bottou, L., Bengio, Y. & Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 86, 2278–2324. https://doi.org/10.1109/5.726791 (1998).

Tan, M. & Le, Q. V. Efficientnet: Rethinking model scaling for convolutional neural networks. arXiv preprint arXiv:1905.11946 (2019).

He, K., Zhang, X., Ren, S. & Sun, J. in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 770–778.

Zagoruyko, S. & Komodakis, N. Wide residual networks. arXiv preprint arXiv:1605.07146 (2016).

Huang, Y. et al. in Advances in Neural Information Processing Systems 103–112.

Cubuk, E. D., Zoph, B., Mane, D., Vasudevan, V. & Le, Q. V. Autoaugment: Learning augmentation policies from data. arXiv preprint arXiv:1805.09501 (2018).

Chollet, F. Deep Learning with Python, Vol. 361 (Manning, 2018).

Towns, J. et al. XSEDE: Accelerating scientific discovery. Comput. Sci. Eng. 16, 62–74. https://doi.org/10.1109/MCSE.2014.80 (2014).

Yang, Z. et al. Microstructural materials design via deep adversarial learning methodology. J. Mech. Des. 140, 66. https://doi.org/10.1115/1.4041371 (2018).

Azimi, S. M., Britz, D., Engstler, M., Fritz, M. & Mücklich, F. Advanced steel microstructural classification by deep learning methods. Sci. Rep. 8, 2128. https://doi.org/10.1038/s41598-018-20037-5 (2018).

Kondo, R., Yamakawa, S., Masuoka, Y., Tajima, S. & Asahi, R. Microstructure recognition using convolutional neural networks for prediction of ionic conductivity in ceramics. Acta Mater. 141, 29–38. https://doi.org/10.1016/j.actamat.2017.09.004 (2017).

Okada, M., Thomas, G., Homma, M. & Kaneko, H. Microstructure and magnetic properties of Fe-Cr–Co alloys. IEEE Trans. Magn. 14, 245–252. https://doi.org/10.1109/TMAG.1978.1059752 (1978).

Miller, T. in Proceedings of the Conference. Association for Computational Linguistics. North American Chapter. Meeting. 414 (NIH Public Access).

Acknowledgements

The authors appreciate the support of Boise State University and Idaho NASA EPSCoR. This work was supported in part by the National Science Foundation grant DMR-2142935. We also would like to acknowledge the high-performance computing support of the R2 compute cluster (https://doi.org/10.18122/B2S41H) provided by Boise State University’s Research Computing Department. This work also used the Extreme Science and Engineering Discovery Environment (XSEDE), which is supported by the National Science Foundation grant number ACI-1548562.

Author information

Authors and Affiliations

Contributions

A.K.: Conceptualization, Methodology, Phase-field Simulation, Software, Deep Learning Coding, Data Analysis, Writing- Original draft preparation. O.B.: Phase-field Simulation, Software. M.M.: Conceptualization, Investigation, Data curation, Reviewing and Editing, Supervision, Funding Acquisition.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Farizhandi, A.A.K., Betancourt, O. & Mamivand, M. Deep learning approach for chemistry and processing history prediction from materials microstructure. Sci Rep 12, 4552 (2022). https://doi.org/10.1038/s41598-022-08484-7

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-022-08484-7

This article is cited by

-

A novel method based on deep learning algorithms for material deformation rate detection

Journal of Intelligent Manufacturing (2025)

-

Advances of machine learning in materials science: Ideas and techniques

Frontiers of Physics (2024)