Abstract

With the increasing development of coiled tubing drilling technology, the advantages of coiled tubing drilling technology are becoming more and more obvious. In the operation process of coiled tubing, Due to various different drilling parameters, manufacturing defects, and improper human handling, the coiled tubing can curl up and cause stuck drilling or shortened service life problems. Circulation pressure, wellhead pressure, and total weight have an important influence on the working period of coiled tubing. For production safety, this paper predicts circulation pressure, ROP, wellhead pressure, and finger weight using GAN–LSTM after studying drilling engineering theory and analyzing a large amount of downhole data. Experimental results show that GAN–LSTM can predict the parameters of circulation pressure, wellhead pressure ROP and total weight to a certain extent. After much training, the accuracy is about 90%, which is about 17% higher than that of the GAN and LSTM. It has a certain guiding significance for coiled tubing operation, increasing operational safety and drilling efficiency, thus reducing production costs.

Similar content being viewed by others

Introduction

With the rapid development of modern drilling technology, the advantages of coiled tubing drilling technology are becoming more and more obvious. Coiled tubing has the characteristics of high strength and toughness in physical structure, and it also has the advantages of high mobility, safety and environmental protection. Therefore, it is widely used in oil and gas field service industry such as drilling, completion and logging. As coiled tubing is relatively a kind of hose, problems such as curling and jamming may occur during operation, triggering the generation of physical defects of coiled tubing, thus reducing the service life of coiled tubing. In this paper, we predict the drilling parameters of continuous tubing by deep learning algorithm to increase the service life of coiled tubing, reduce the production cost and improve the oil productivity. There is a paucity of research combining machine learning techniques with coiled tubing drilling techniques. Therefore, the integration of deep learning algorithms and coiled tubing drilling technology is a highly exploratory and valuable process. In this process, deep learning algorithms for traditional drilling parameter prediction need to be applied to coiled tubing drilling parameter prediction methods.

Currently, deep learning algorithms are widely used in conventional drilling. For example, ANN, BP neural network model, CNN model and ACO have achieved excellent results in prediction and optimization of drilling parameters (Full abbreviations are detailed in Table 1). After reviewing relevant information. Shao-Hu Liu et al. developed a new theoretical model for the problem that coiled tubing is prone to low circumference fatigue failure during operation. With this theoretical model, it was found that the reel radius, OD, and internal pressure are important parameters affecting the fatigue life of coiled tubing1. Wanyi Jiang et al. determined the optimal ROP by combining an artificial neural network (ANN) and an ant colony algorithm (ACO). The validity of the optimal ROP is then tested by comparing the Bayesian regularized neural network with the ROP-modified Warren model2. Chengxi Li and Chris Cheng applied Savitzky-Golay (SG) smoothing filter to reduce the noise in the original data set. The IGA is then used to maximize the ROP by matching the optimal ANN input parameters and the best network structure3 (Full abbreviations are detailed in Table 1). Cao Jie et al. analyzed the feature values affecting ROP based on feature correlation and relative importance by applying a feature engineering approach. Thus, the manual input feature parameters based on physical correlation are reduced from 12 to 8, which substantially simplifies the network model4. Huang et al. improved the robustness of the model by integrating the particle swarm optimization algorithm and LSTM so that the model can adapt to the complex variation pattern of oil and gas production capacity (Full abbreviations are detailed in Table 1). And it was found that the performance of LSTM is much higher than that of ordinary neural networks in time series data5. Liu et al. proposed a learning model that integrates LSTM and an integrated empirical model and used a genetic algorithm to determine the hyperparameters of LSTM, which can greatly improve the accuracy of the model prediction. The results show that the method exhibits very good generalization performance in terms of accuracy in predicting well production6.

The current difficulty in using neural networks for coiled tubing research is in two areas. One is the data, the drilling data has a confidentiality agreement can't be easily used to study, and the amount of data is huge, complex and inaccurate. The second is the selection of neural network, because the downhole data is a set of sequence data, the connection between the data before and after is relatively large, only through the RNN model to achieve better prediction effect. Therefore, after referring to Koochali's study of using GAN to predict sequential data7 (Full abbreviations are detailed in Table 1). In this paper, we propose a GAN and LSTM fusion model for prediction of circulation pressure, wellhead pressure, ROP and total weight data, which solves the problems arising from RNN when predicting multiple parameters and data size is too large. The experimental results show that the accuracy of the prediction of the GAN–LSTM model is around 90%.

GAN and LSTM fusion

Generative adversarial network

The generative adversarial network consists of a generator model (G) and a discriminator (D). The role of the generative model is to capture the data distribution and generate new data. The role of the discriminant model is to determine whether the data is real data or data generated by the generative model. Its basic structure is shown in Fig. 1.

The training set data vector Z-p(z) is used as the input to the generative model, The new data G(z) is generated after the generator network G. The input of the discriminant model D is either a real data sample or the sample G(Z) generated by the generator network. The discriminator network model is trained to determine whether its input is from the sample of real data or from the sample generated by the generator model. Then, the generator model is trained by the already trained discriminator model to generate data that more closely matches the real data distribution to deceive the discriminator. The two models play each other and are trained alternately to reach an optimal equilibrium point. At this point the generative model is able to generate data that is closest to the real data, The discriminator model cannot distinguish whether the data comes from real data or generated data. The GAN model is trained with a loss function of the following form:

Long short-term memory

Long Short-Term Memory (LSTM), which belongs to Recurrent Neural Network, LSTM is a modified version of a recurrent neural network. The original RNN has a hidden layer with only one state H. RNNs are very sensitive to short-term inputs and relatively weak for long-term inputs. At this point, a state C is added to the RNN, so that the RNN keeps a long-term state, thus constituting a long-short time memory network. It is usually used to process data sets with time series. LSTM can better capture the long-term dependencies between data. LSTM remembers the values in an arbitrary time interval by introducing a memory unit. Simultaneous use of input gates, output gates, and forget gates to regulate the flow of information in and out of the memory cell. Effectively solves the problem of gradient disappearance or gradient explosion of recurrent neural networks when the data size is too large. The structure of the neural unit of the LSTM is shown in Fig. 2:

First is the forgetting threshold layer. This layer is used to determine what data is to be forgotten. The forgetting gate produces a value 0~1 through the output of the previous neuron and an input variable after a sigmoid operation. The part of information near 0 will be forgotten, Instead, it continues to pass on in the united state again. This determines how much information is missing from the previous state \(C_{t - 1}\).

The function of the input threshold is to update the status of the old unit, this layer executes the information added or forgotten by the previous layer. A new candidate memory unit is obtained through the tanh layer. Update the previous state to \(C_{t}\) under the action of the input layer.

Finally, the output threshold determines what value is output. A sigmoid layer to determine which outputs are needed, then pass a \(\tanh\) layer to get a value between \(- 1 \sim 1\). Multiply this value with the sigmoid value to determine the final output value.

GAN–LSTM converged network

When a coiled tubing jam occurs at the bottom of the well, it causes changes in parameters such as bottom-hole pressure, ROP, circulation pressure, and total weight. And then cause a change in the wellhead pressure and flow rate. Data for these parameters can be measured by sensors on the surface and downhole. These datasets are used to build deep-learning models to predict drilling parameters such as total weight and ROP8,9,10.

Drilling history data is a typical time series data, with the characteristics of large data volume and large correlation between before and after data. Therefore, a recurrent neural network model can be used to predict drilling parameters. The disadvantage of recurrent neural networks is that they are prone to the problems of gradient disappearance and gradient explosion, resulting in poor generalization of the model11,12,13. The properties of LSTM can compensate for the problems of recurrent neural networks in terms of a gradient. When the output of the LSTM is multiple variables, the accuracy of the model prediction is significantly lower than that of the model whose output is a single variable. That is, as the dimensionality of the output data increases, the prediction accuracy decreases. And the error rate of the model increases as the depth of the predicted parameters increases14,15,16. In order to solve the above two problems, the generative model of GAN can be used to optimize the LSTM. With the powerful generative model in GAN, the low-dimensional data output from LSTM is used as the input to the generative model of GAN. The ultimate goal is to predict multiple variables and also to avoid the problem that the model accuracy decreases when the dimensionality of the output data increases. The GAN network model consists of a generative network model and a discriminative network model. The GAN–LSTM fusion network needs to use the generative network model of GAN, so the GAN and LSTM need to be trained separately during the training. The model structure of GAN–LSTM is illustrated in Fig. 3.

-

Step 1 Divide the original feature variables.

A part of the variables is predicted by LSTM and the others are predicted by GAN. The LSTM part of the model was analyzed and experimentally attempted to predict total weight and ROP, and the GAN part predicted wellhead pressure and circulation pressure.

-

Step 2 Train the two models separately.

LSTM: Input: Well depth, circulation pressure, wellhead pressure, ROP, and total weight. Output: ROP and total weight.

GAN: Inputs: Well depth, circulation pressure, wellhead pressure, ROP, total weight. Output: Wellhead pressure and circulation pressure.

Parameter prediction for coiled tubing drilling

Data source

Based on the historical data of a single directional well in the west Sichuan area, the data from selected well sections were screened in six times, and these data were partitioned into a training set and a test set for model training by cross-validation. As shown in Table 2:

Data processing

Most of the downhole data is measured by sensors and therefore generates a lot of discrete, duplicate and missing data. These data can cause the overall error of the model to become larger and affect the generalization ability of the model, so some cleaning operations need to be performed on the data17,18,19. For example, removing discrete and duplicate data by clustering method, filling missing values by mean interpolation method, etc. As Fig. 4 shows the flowchart of data cleaning process.

The comparison of data samples before and after data cleaning is shown in Table 3.

The dataset after cleaning has 11 data features, among which 4 feature values have 90% overlap in the data. For example, the data of ROP and ROP_1 are almost the same, and after removing these 4 feature values, only 7 valid feature values are retained. The accuracy of the model is only about 50% when the data set with 7 feature values is trained. In the analysis of the data of flow and flow accumulation, it was found that. The data of the flow had 70% of 0 values and 30% of stacked duplicate values, as shown in Fig. 5. Most of the data for flow accumulation had duplicate values, as shown in Fig. 6. Therefore, after excluding the features of flow and flow accumulation. The accuracy of the model is improved by about 30%. The analysis of Eqs. 8, 9, 10, 11 and the data shows a strong correlation between the remaining five data features, which are Well depth, circulation pressure, wellhead pressure, ROP, and total weight.

In the formula: \(v_{pc}\): ROP, m/h; d: drilling pressure index (d = 0.5366 + 0.1993kd), unfactored quantity; kd: Rock drillability grade value; λ: rotational speed index (λ = 0.9250–0.0375kd), uncaused quantity; f: formation hydraulic index (f = 0.7011–0.05682kd), uncaused quantity; Ws: drilling pressure per unit bit diameter (specific drilling pressure), KN/mm; nr: rotational speed, r/min. HPe: nozzle equivalent specific water power, W/mm2. \(\Delta \rho_{d}\): drilling fluid density difference coefficient (0.97673kd–7.2703), unfactored quantity; \(\rho_{d}\): density of drilling fluid, g/cm3. \(P_{bs}\): specific water power of the drill bit, W/mm2. d1, d2, d3: are the drill nozzle diameters, mm, respectively.

In the formula: \(\Delta P_{pa}\): drill pipe external circulation pressure loss, MPa. kpa: coefficient of pressure loss in external circulation of drill pipe, unfactored quantity; LP: drill pipe length, m;

Q: flow rate, m3/h;

In the formula: \(P_{s}\): actual pumping pressure of the drilling pump, MPa; \(\Delta P_{b}\): pressure drop of the drill nozzle, MPa; \(\Delta P_{g}\): pressure loss of surface pipe sink, MPa; \(\Delta P_{cs}\): circulation system pressure loss, MPa.

In the construction of the data set, the well-depth data are used as index values in the well-depth. 200 well-depth data were used as sample X (depth matrix)20,21,22. For example, the data from 1 -200 m were used to predict data such as total weight and ROP for 201-400 m. Predicting parameters such as total weight and ROP, extends the life of coiled tubing and increases operational safety and drilling efficiency.

The data of various parameters in the well are prone to some large differences in values due to different physical quantities. It causes great difficulties for model building and affects the generalization ability of the model to a great extent. Therefore, this paper normalizes the data by the commonly used Min–Max Feature scaling method. This method can simply and brutally deflate the data to between [0,1]. The formula for this method is as follows.

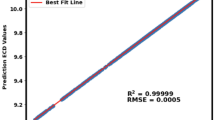

Prediction results of different models

In this paper, after data cleaning and data normalization, we obtain valid data for five relevant features such as circulating pressure, wellhead pressure, ROP, depth, and total weight. The GAN–LSTM model is used to train the dataset with these five features data. "Mape" is the training set loss rate and "Val_mape "is the test set loss rate. The specific network parameters of GAN–LSTM are shown in Table 4.

Figure 7 shows the process of error rate change when predicting the total weight for the network model of GAN–LSTM fusion and the LSTM network model. It can be seen from Fig. 7 that the difference in error rate between the two models is small in the initial stage, and the GAN–LSTM continues to decline and stabilize after 50 rounds. The LSTM network model error rate showed large fluctuations in the early stage and gradually stabilized after 130 rounds. The error rate of the GAN–LSTM network model gradually stabilizes at around 10% after training.

Figure 8 shows the fitted curves of the GAN–LSTM model to predict the total weight. The GAN–LSTM model fits relatively stable in most cases, and the average difference between the predicted and true values is around 100. The influence of some hyperparameters causes a relatively large fluctuation between 2400 and 2470 m. Figure 9 shows the fitted curves of the LSTM model to predict the total weight. The average difference between the predicted and true values of the LSTM model in the previous section is around 200. After 2400 m the average difference of the LSTM model predictions is around 400 due to the error rate only converging to about 20%.

Figure 10 shows the loss rate variation process of the GAN–LSTM network model and the LSTM network model in predicting the ROP. From Fig. 10, we can see that the error rate of the GAN–LSTM model starts to converge downward from about 90%, and the front converges relatively fast. The error rate of the GAN–LSTM model continued to decrease and stabilized at about 10% after 130 rounds. The error rate of the LSTM network model converges downward from about 70%, and there is a fluctuation of about 10% in the error rate in the first period. The error rate of the LSTM model gradually stabilized at about 27% after 140 rounds.

Figure 11 shows the fitted curve of the GAN–LSTM predicted ROP. The GAN–LSTM model is a relatively stable fit in most cases, and the average difference between the predicted and true values is around 1.5 m/h. The fluctuation between 2400 and 2470 m is around 3 m/h due to some hyperparameters. Figure 12 shows the fitted curves of the LSTM predicted ROP. The error rate of the LSTM model predicting ROP eventually converges only to about 27%, resulting in large fluctuations in most of the curves. The average difference between the predicted and true values is around 6 m/h.

Conclusion

-

1.

By combining the advantages of GAN and LSTM, a GAN–LSTM based coiled tubing drilling parameter prediction model is developed. The model consists of two parts, one part to predict wellhead pressure and circulation pressure by GAN, and one part to predict ROP and total weight by LSTM. The fusion of GAN and LSTM enhances the stability of LSTM in predicting multiple parameters, which effectively improves the generalization performance of the prediction model compared to the traditional ANN.

-

2.

By eliminating duplicate and discrete data from the original dataset and filling in the missing data. The size of the original dataset was reduced from 1 million to 700,000 items. And based on the cleaned original dataset, four duplicate feature parameters and two feature parameters with more data 0 values and duplicate values were removed, which reduced the dimensionality of the dataset from 11 to 5 dimensions. Training with the processed dataset reveals that the accuracy of GAN–LSTM model after data cleaning is improved by 10% compared with that before cleaning.

-

3.

A comparison of the training results of GAN, LSTM and GAN–LSTM models reveals that the average loss rate of the model with GAN–LSTM is at 10%, which is better than 25% for GAN and 28% for LSTM.

Data availability

Many thanks to the editors for their attention and recognition of our research work. We appreciate the SCI journal's request for data sharing but due to our lab's confidentiality agreement, we are unable to provide raw data. If the editors and reviewers have questions about specific data, we will do our best to provide more detailed explanations and clarifications. If anyone would like to obtain data from this study, please contact Dr. Bai at baikai@yangtzeu.edu.cn.

Abbreviations

- \(v_{pc}\) :

-

ROP (m/h)

- d:

-

Drilling pressure index (d = 0.5366 + 0.1993kd), unfactored quantity

- kd:

-

Rock drillability grade value

- λ:

-

Rotational speed index (λ = 0.9250–0.0375kd), uncaused quantity

- f:

-

Formation hydraulic index (f = 0.7011–0.05682kd), uncaused quantity

- Ws :

-

Drilling pressure per unit bit diameter (specific drilling pressure) (KN/mm)

- nr :

-

Rotational speed (r/min)

- HPe :

-

Nozzle equivalent specific water power (W/mm2)

- \(\Delta \rho_{d}\) :

-

Drilling fluid density difference coefficient (0.97673kd–7.2703), unfactored quantity

- \(\rho_{d}\) :

-

Density of drilling fluid (g/cm3)

- \(P_{bs}\) :

-

Specific water power of the drill bit (W/mm2)

- d1, d2, d3 :

-

Are the drill nozzle diameters, mm, respectively

- \(\Delta P_{pa}\) :

-

Drill pipe external circulation pressure loss (MPa)

- kpa :

-

Coefficient of pressure loss in external circulation of drill pipe, unfactored quantity

- LP :

-

Drill pipe length (m)

- Q:

-

Flow rate (m3/h)

- \(P_{s}\) :

-

Actual pumping pressure of the drilling pump (MPa)

- \(\Delta P_{b}\) :

-

Pressure drop of the drill nozzle (MPa)

- \(\Delta P_{g}\) :

-

Pressure loss of surface pipe sink (MPa)

- \(\Delta P_{cs}\) :

-

Circulation system pressure loss (MPa)

References

Liu, S., Zhou, H., Xiao, H. & Gan, Q. A new theoretical model of low cycle fatigue life for coiled tubing under coupling load. Eng. Fail. Anal. 124, 105365 (2021).

Jiang, W. & Samuel, R. Optimization of rate of penetration in a convoluted drilling framework using ant colony optimization. In IADC/SPE Drilling Conference and Exhibition. https://doi.org/10.2118/178847-MS (2016).

Li, C. & Cheng, C. Prediction and optimization of rate of penetration using a hybrid artificial intelligence method based on an improved genetic algorithm and artificial neural network. In Abu Dhabi International Petroleum Exhibition & Conference. https://doi.org/10.2118/203229-MS (2020).

Cao, J., et al. Feature investigation on the ROP machine learning model using real time drilling data. In Journal of Physics: Conference Series, Vol. 2024 (2021).

Huang, R. et al. Well performance prediction based on Long Short-Term Memory (LSTM) neural network. J. Pet. Sci. Eng. 208, 109686 (2022).

Liu, W., Liu, W. D. & Gu, J. Forecasting oil production using ensemble empirical model decomposition based Long Short-Term Memory neural network. J. Pet. Sci. Eng. 189, 107013 (2020).

Koochali, A., Schichtel, P., Dengel, A. & Ahmed, S. Probabilistic forecasting of sensory data with generative adversarial networks–forgan. IEEE Access. 7, 63868–63880 (2019).

Ishak, J. & Badr, E. A. A modified rule for estimating notch root strains in ball defects existing in coiled tubing. Eng. Fail. Anal. 134, 106026 (2022).

Sun, G., Wang, G., Liu, J., Yang, M. & Qiang, Z. Experimental study on the influence of bending and straightening cycles for non-destructive and destructive coiled tubing. Eng. Fail. Anal. 123, 105218 (2021).

Zhu, J. et al. A sequence-based method for dynamic reliability assessment of MPD systems. Process Saf. Environ. Prot. 146, 927–942 (2021).

Shi, J. et al. Risk-taking behavior of drilling workers: A study based on the structural equation model. Int. J. Ind. Ergon. 86, 103219 (2021).

Lu, C., Yang, W. & Lin, B. Experimental study on drill bit torque influenced by artificial specimen mechanical properties and drilling parameters. Arab. J. Geosci. 15, 459 (2022).

Viswanth, R. et al. Optimization of drilling parameters using improved play-back methodology. J. Pet. Sci. Eng. 206, 108991 (2021).

Wang, Z. et al. A new approach to predict dynamic mooring tension using LSTM neural network based on responses of floating structure. Ocean Eng. 249, 110905 (2022).

Sauki, A. et al. Development of a modified Bourgoyne and Young model for predicting drilling rate. J. Pet. Sci. Eng. 205, 108994 (2021).

Wang, X. et al. Prediction of the deformation of aluminum alloy drill pipes in thermal assembly based on a BP neural network. Appl. Sci. 12, 757 (2022).

de Moura, J., Xiao, Y., Yang, J. & Butt, S. D. An empirical model for the drilling performance prediction for roller-cone drill bits. J. Pet. Sci. Eng. 204, 108791 (2021).

Farahbod, F. Experimental investigation of thermo-physical properties of drilling fluid integrated with nanoparticles: Improvement of drilling operation performance. Powder Technol. 384, 125–131 (2021).

Li, M. et al. A new generative adversarial network based imbalanced fault diagnosis method. Measurement 194, 111045 (2022).

Syah, R. et al. Implementation of artificial intelligence and support vector machine learning to estimate the drilling fluid density in high-pressure high-temperature wells. Energy Rep. 7, 4106–4113 (2021).

Novara, R., Rafati, R. & Haddad, A. S. Amin rheological and filtration property evaluations of the nano-based muds for drilling applications in low temperature environments. Colloids Surf. A Physicochem. Eng. Aspects 622, 126632 (2021).

Wang, C. et al. ACGAN and BN based method for downhole incident diagnosis during the drilling process with small sample data size. Ocean Eng. 256, 111516 (2022).

Acknowledgements

This work was supported in part by the Open Foundation of Cooperative Innovation Center of Unconventional Oil and Gas, Yangtze University (Ministry of Education & Hubei Province) No. UOG2022-06, Open Fund of Xi’an Key Laboratory of Tight oil (Shale oil) XSTS-202101, Development of the Scientific Research Projects of the Hubei Provincial Department of Education (D20201304).

Author information

Authors and Affiliations

Contributions

All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, W., Bai, K., Zhan, C. et al. Parameter prediction of coiled tubing drilling based on GAN–LSTM. Sci Rep 13, 10875 (2023). https://doi.org/10.1038/s41598-023-37960-x

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-023-37960-x

This article is cited by

-

Rock image classification based on improved EfficientNet

Scientific Reports (2025)