Abstract

The current study provides numerical solutions of the language-based model through an artificial intelligence (AI) procedure based on the scale conjugate gradient neural network (SCJGNN). The mathematical learning language differential model is divided into three classes, named unknown, familiar, and mastered. A dataset is generated using the Adams scheme and is used to reduce the mean square error. The AI based SCJGNN procedure splits the data into testing (12%), validation (13%), and training (75%) sets. A log-sigmoid activation function, twelve neurons, SCJG optimization, and hidden and output layers are used in this stochastic computing work to solve the learning language model. The correctness of the AI based SCJGNN is shown by the overlap of the results and by the small calculated absolute errors, which are around \(10^{-6}\) to \(10^{-8}\) for each class of the model. Moreover, the regression value for each case of the model is one, which indicates a perfect fit. Additionally, the reliability of the AI based SCJGNN is confirmed using the histogram and function fitness.

Introduction

Differential equations are used to model a wide variety of applications. In disease systems, the susceptible, infected, and recovered (SIR) model is one of the mathematical forms used to describe the spread of different viruses1,2. Adding exposed, quarantine and treatment classes to the SIR model gives the SEIR, SIQR and SITR models3,4,5,6,7. Differential equations have also been used to model economic and environmental systems, food chain networks, ocean engineering and fluid dynamics8,9,10,11,12,13. Differential models are not limited to biological frameworks; they also have applications in the progression of language learning. Language learning differential models are used to describe how an individual eventually acquires a new language. These models take into account how numerous factors, including motivation, linguistic ability, and exposure to the language, may interact to affect language learning.

Language acquisition is a complex topic, and no single model can account for all the variables that influence it. The fundamental idea is to separate a learner's knowledge of a language into three categories: unknown, familiar, and mastered. These categories cover vocabulary, pronunciation, listening comprehension, and grammar14,15. The unknown class refers to features of the language that have been forgotten or have not yet been encountered. The familiar class refers to language features that are recognized but not fully mastered. The mastered class refers to features that have been fully learned and are available in a person's working memory. The mathematical learning language differential model based on the three classes, unknown, familiar, and mastered, is given as:

The proportions u, f and m of unknown, familiar and mastered language features must sum to one, since together they make up the whole language. \(\alpha\) and \(\beta\) are constants taken between 0 and 1. The parameter \(\alpha\) describes how rapidly an individual learns new information, while \(\beta\) describes how quickly the person loses or forgets information they have already learned.
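
Eq. (1) is not reproduced in this text. Purely as an illustration, one linear compartmental system consistent with the description above (learning moves material from unknown to familiar to mastered at rate \(\alpha\), forgetting moves it back at rate \(\beta\)) could be written as follows. This specific form is an assumption and is not necessarily the authors' Eq. (1).

\[
\begin{aligned}
u'(t) &= -\alpha\,u(t) + \beta\,f(t),\\
f'(t) &= \alpha\,u(t) - (\alpha+\beta)\,f(t) + \beta\,m(t),\\
m'(t) &= \alpha\,f(t) - \beta\,m(t).
\end{aligned}
\]

Adding the three right-hand sides gives \(u'(t) + f'(t) + m'(t) = 0\), so under this assumed form \(u + f + m\) stays equal to one whenever the initial proportions sum to one.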

Various language representations, e.g., GloVe, Word2Vec, and embeddings learned during neural network training, represent words as high-dimensional vectors. Dimensionality reduction schemes can be used to visualize these embeddings in two or three dimensions. Attention mechanisms allow the attention weights to be examined, which gives insight into the parts of the input the model focuses on when making predictions. Introducing adversarial examples is a significant step in assessing the robustness of a model. These are input samples that have been deliberately modified to mislead the system while the change remains imperceptible to a human reader. Evaluating a language model on such examples helps to identify potential vulnerabilities, limitations and biases. Ways of integrating adversarial testing into the assessment of a language model include the generation of adversarial text, transferability testing, evaluation metrics, fairness and bias examination, iterative testing, adversarial training, adversarial defense tactics, and human evaluation.

Artificial intelligence (AI) depends heavily on language models to enable robots to comprehend and produce human language16. These systems are a type of machine learning that uses statistical patterns to predict the probability of the next sequence of words in a text or sentence17. Language models can recognize and learn patterns in how people use language, such as context, syntax, and semantics. This enables them to carry out a variety of language processing tasks, e.g., text categorization, sentiment analysis, chatbot generation, translation, and summarization18,19. Moreover, language systems are a crucial part of neural translation models, which use deep learning to translate text between languages20,21,22,23,24,25,26,27.

The current investigation presents the solutions of the learning language differential model using the artificial intelligence (AI) procedure of the scale conjugate gradient neural network (SCJGNN). In recent years, stochastic computing solvers have been exploited in various applications, such as the susceptible, exposed, infected and recovered nonlinear model of worm propagation in wireless sensor networks28, the eye surgery differential system29,30, predictive networks for the delayed tumor-immune model31, the HIV infection system32,33, the delay differential model of avian influenza34, the Lane-Emden model35,36, the influenza-A epidemic system37, the smoking model38,39, the multi-delayed model of tumor oncolytic virotherapy40, the thermal explosion model41, and a biological differential system42. Some novel features of this research are:

- The solutions of the learning language differential model are presented successfully using the stochastic AI along with the SCJGNN solver.
- The competence of the Adams numerical scheme for generating the dataset is confirmed through the validation, training and testing process.
- The consistently small absolute error (AE) validates the suitability of the SCJGNN method for solving the learning language differential model.
- The reliable matching of results and the strong agreement with the standard measures confirm the precision of the AI along with the SCJGNN for solving the language model.

The remaining parts of the paper are organized as follows: the section "Designed stochastic AI along with the SCJGNN solver" describes the designed computing solver, the section "Numerical results of the language model" presents the results, and the conclusions are provided in the final section.

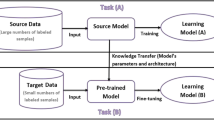

Designed stochastic AI along with the SCJGNN solver

This section presents the computational framework of the AI along with the SCJGNN in two steps. The SCJGNN features and the execution process are shown in Fig. 1, while the multi-layer network structure is depicted in Fig. 2. The AI along with the SCJGNN procedure for the language-based model is implemented using ‘nftool’ (built into Matlab) with n-fold cross validation, a log-sigmoid activation function, a maximum of 1000 epochs, a tolerance of \(10^{-7}\), a step size of 0.01, twelve neurons in the hidden layer, the SCJG algorithm, and input, hidden and output layers. The label statistics for training with input/target data are obtained through the basic outputs.

Constructing a neural network involves several major steps, e.g., defining the architecture, specifying the cost function, selecting the activation function, and training the model. Integrating scaling into this procedure means considering how to deal with the input features, regularization, and weight initialization. Some of the steps of the general structure for neural network design with an emphasis on scaling are:

- Problem description: The problem is defined as a regression task for the language learning model, specifying the nature of the data and the value ranges of the input features.
- Data preprocessing: Standardize or normalize the input features to a comparable scale. This helps the model to converge faster during training and improves its performance.
- Design of architecture: The number and types of layers are chosen, and normalization layers can be used to mitigate internal covariate shift, which is relevant to scaling.
- Weight initialization: Select an appropriate scheme to initialize the weights. The weights are selected with care.
- Activation functions: The activation function is also selected carefully. The log-sigmoid function is used in the hidden layer in this study, as shown in Fig. 3.
- Loss function: The mean squared error (MSE) is used as the loss function for regression.
- Optimization: The optimization is performed using the SCJG.
- Training: The model is trained on the training data, and its performance is monitored on the validation set.
- Evaluation: The model is evaluated on a separate test set to measure its generalization performance.
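
The architecture, activation and loss steps listed above can be illustrated with a minimal Python sketch. It is not the authors' Matlab implementation: the single scalar input, the linear output neuron, the random weight initialization and the placeholder target curve are all assumptions made for illustration, and no SCJG training step is included.

```python
import math
import random

def logsig(x):
    """Log-sigmoid activation: 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))

def init_network(n_hidden=12, seed=0):
    """Random weights for a 1-input, n_hidden-neuron, 1-output network (assumed layout)."""
    rng = random.Random(seed)
    return {
        "w1": [rng.uniform(-1, 1) for _ in range(n_hidden)],  # input -> hidden weights
        "b1": [rng.uniform(-1, 1) for _ in range(n_hidden)],  # hidden biases
        "w2": [rng.uniform(-1, 1) for _ in range(n_hidden)],  # hidden -> output weights
        "b2": 0.0,                                            # output bias
    }

def forward(net, t):
    """Log-sigmoid hidden layer followed by a linear output neuron (assumption)."""
    hidden = [logsig(w * t + b) for w, b in zip(net["w1"], net["b1"])]
    return sum(w * h for w, h in zip(net["w2"], hidden)) + net["b2"]

def mse(net, inputs, targets):
    """Mean squared error between network outputs and targets."""
    errors = [(forward(net, t) - y) ** 2 for t, y in zip(inputs, targets)]
    return sum(errors) / len(errors)

net = init_network()
inputs = [i / 10 for i in range(11)]            # sample points in [0, 1]
targets = [math.exp(-0.5 * t) for t in inputs]  # placeholder curve, not the paper's data
print("MSE of the untrained network:", mse(net, inputs, targets))
```

An optimizer such as SCJG would then adjust `w1`, `b1`, `w2` and `b2` to drive this MSE down.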

Table 1 presents the parameter settings of the SCJGNN used to solve the nonlinear language mathematical model. To assess the limitations and advantages of the SCJGNN numerical scheme, the following factors are considered: how accurately the method approximates the solution of the problem, whether it converges consistently and quickly, whether it is computationally efficient, particularly for large-scale problems, and how robustly it deals with different forms of input data.

The performance of the stochastic AI along with the SCJGNN solver is presented for the learning language differential model using twelve neurons. This choice is validated against underfitting and overfitting through training and validation, with the best performance reached at epochs 49, 58 and 55. Underfitting (premature convergence) occurs when too few neurons are used, while larger numbers of neurons give comparable accuracy at higher complexity (overfitting). The labeled data, based on training and input/target measures, are obtained through the basic outputs with a testing (12%), validation (13%), and training (75%) ratio for solving the nonlinear learning language differential model. The predicted values are obtained for inputs in [0, 1] through the computational stochastic AI along with the SCJGNN solver. The layer structure for solving the learning language differential model is shown in Fig. 3.
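
The 75% / 13% / 12% division of the data can be sketched in a few lines of Python. The random shuffling, the seed and the sample count of 100 are assumptions made for illustration; the paper does not state how the samples were assigned to each set.

```python
import random

def split_indices(n_samples, train=0.75, val=0.13, seed=0):
    """Shuffle sample indices and split them into train/validation/test lists.

    The test set receives whatever remains after the train and validation
    shares are taken (12% when train=0.75 and val=0.13).
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_train = round(train * n_samples)
    n_val = round(val * n_samples)
    return (indices[:n_train],
            indices[n_train:n_train + n_val],
            indices[n_train + n_val:])

train_idx, val_idx, test_idx = split_indices(100)
print(len(train_idx), len(val_idx), len(test_idx))
```

Each index lands in exactly one of the three lists, so no sample is shared between training, validation and testing.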

Numerical results of the language model

The current section performs the solutions of the learning language differential system by using the computing framework of the AI along with the SCJGNN solver. Three different cases of the model are presented as:

Case 1: Consider Eq. (1) with \(\alpha = 0.5\) and \(\beta = 0.1\).

Case 2: Consider Eq. (1) with \(\alpha = 0.5\) and \(\beta = 0.5\).

Case 3: Consider Eq. (1) with \(\alpha = 0.5\) and \(\beta = 0.9\).
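
To show how reference data for the three cases might be generated, the sketch below integrates an assumed form of the model on [0, 1] with step size 0.01. Several things here are assumptions rather than details taken from the paper: the right-hand side (a linear chain in which learning moves material forward at rate \(\alpha\) and forgetting moves it back at rate \(\beta\)), the initial state u(0) = 1, f(0) = 0, m(0) = 0, and the use of the classical fourth-order Runge-Kutta method in place of the Adams scheme named in the paper.

```python
def rhs(state, alpha, beta):
    """Assumed model: u' = -a*u + b*f, f' = a*u - (a+b)*f + b*m, m' = a*f - b*m."""
    u, f, m = state
    return (-alpha * u + beta * f,
            alpha * u - (alpha + beta) * f + beta * m,
            alpha * f - beta * m)

def rk4(state, alpha, beta, h, steps):
    """Classical fourth-order Runge-Kutta; returns the list of states visited."""
    trajectory = [state]
    for _ in range(steps):
        k1 = rhs(state, alpha, beta)
        k2 = rhs(tuple(s + 0.5 * h * k for s, k in zip(state, k1)), alpha, beta)
        k3 = rhs(tuple(s + 0.5 * h * k for s, k in zip(state, k2)), alpha, beta)
        k4 = rhs(tuple(s + h * k for s, k in zip(state, k3)), alpha, beta)
        state = tuple(s + h / 6.0 * (a + 2 * b + 2 * c + d)
                      for s, a, b, c, d in zip(state, k1, k2, k3, k4))
        trajectory.append(state)
    return trajectory

# (alpha, beta) pairs of the three cases in the text
cases = {1: (0.5, 0.1), 2: (0.5, 0.5), 3: (0.5, 0.9)}
results = {}
for case, (alpha, beta) in cases.items():
    # hypothetical initial state: everything unknown at t = 0
    results[case] = rk4((1.0, 0.0, 0.0), alpha, beta, h=0.01, steps=100)
    u, f, m = results[case][-1]
    print(f"Case {case}: u={u:.6f}, f={f:.6f}, m={m:.6f}, sum={u + f + m:.6f}")
```

The printed sum is a quick sanity check: for this assumed system the three proportions should add up to one at every step.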

Figures 4, 5, 6, 7 and 8 show the results of the AI procedure along with the SCJGNN for the learning language differential model. Figure 4 presents the MSE and state of transition (SoT) values obtained by applying the AI procedure along with the SCJGNN. The MSE performances for the training, validation and best curves are illustrated in Fig. 4a–c, while the SoT values are given in Fig. 4d–f. The best performances for the learning language differential model are reached at epochs 49, 58 and 55, with values of \(1.36973934 \times 10^{-13}\), \(5.44578 \times 10^{-12}\) and \(5.77556 \times 10^{-11}\). The gradients for the unknown, familiar, and mastered classes are measured as \(9.5716 \times 10^{-8}\), \(9.9898 \times 10^{-8}\) and \(9.5247 \times 10^{-8}\). These gradient curves indicate the convergence and accuracy of the AI along with the SCJGNN solver for the nonlinear learning language differential model. Figure 5 depicts the fitting curves for the learning language differential model based on the comparison of results. Figure 5d–f shows the error histograms (EHs) for the learning language differential model obtained through the stochastic AI along with the SCJGNN solver. These values are reported as \(7.73 \times 10^{-8}\) for the unknown class, \(5.63 \times 10^{-7}\) for the familiar class, and \(9.90 \times 10^{-7}\) for the mastered class. Figures 6, 7 and 8 present the correlation graphs for the differential form of the learning language model obtained with the stochastic computing AI along with the SCJGNN. These graphs show that the determination coefficient \(R^2\) is 1 for the unknown, familiar, and mastered categories. The curves for testing, validation, and training confirm the precision of the AI along with the SCJGNN for solving the differential form of the learning language model. The convergence measures for training, validation, testing, epochs, backpropagation and complexity are tabulated in Table 2. The complexity measures of the AI along with the SCJGNN for solving the differential form of the learning language model demonstrate the training performance of the network (epoch performance).
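
For readers who want to reproduce the regression check, the coefficient of determination can be computed from targets and network outputs as sketched below. This is the standard textbook definition, \(R^2 = 1 - SS_{res}/SS_{tot}\); the sample values are hypothetical and are not taken from the paper's figures.

```python
def r_squared(targets, outputs):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_t = sum(targets) / len(targets)
    ss_res = sum((t - o) ** 2 for t, o in zip(targets, outputs))  # residual sum of squares
    ss_tot = sum((t - mean_t) ** 2 for t in targets)              # total sum of squares
    return 1.0 - ss_res / ss_tot

# hypothetical reference values and outputs that differ by about 1e-7
targets = [0.9, 0.7, 0.5, 0.3]
outputs = [t + 1e-7 for t in targets]
print(r_squared(targets, outputs))
```

When the errors are several orders of magnitude smaller than the spread of the targets, as in this example, the value is indistinguishable from 1 at plotting precision.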

Figures 9 and 10 show the comparison of the outputs along with the values of the AE between the obtained and reference solutions for the learning language differential model. The calculated and reference solutions of the learning language differential model match closely. Figure 9 provides the AE measures for each class of the learning language differential model. The AE is a metric used to assess the accuracy of the language learning model. It is the absolute difference between the obtained and reference solutions at each point. Its value lies in its interpretability and simplicity. Unlike squared-error measures such as the MSE, the AE gives equal weight to each error and does not penalize large errors more heavily. In case 1, the AE values for the unknown class are reported as \(10^{-6}\) to \(10^{-7}\), whereas those for the familiar and mastered categories are \(10^{-5}\) to \(10^{-6}\). In case 2, the AE values are \(10^{-6}\) to \(10^{-7}\) for the unknown class, \(10^{-5}\) to \(10^{-7}\) for the familiar class and \(10^{-5}\) to \(10^{-6}\) for the mastered class of the model. In case 3, the AE values are reported as \(10^{-7}\) to \(10^{-9}\) for the unknown class, \(10^{-5}\) to \(10^{-7}\) for the familiar class and \(10^{-5}\) to \(10^{-8}\) for the mastered class of the mathematical model. These small AE values demonstrate the accuracy of the AI procedure along with the SCJGNN for the learning language differential model.
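
The AE comparison can be sketched as a pointwise calculation. The reference and approximate values below are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def absolute_errors(reference, approx):
    """Pointwise absolute error |reference - approx| at each sample point."""
    return [abs(r - a) for r, a in zip(reference, approx)]

# hypothetical reference and network values at three sample points
reference = [1.0, 0.5, 0.25]
approx = [0.75, 0.5, 0.5]
ae = absolute_errors(reference, approx)
print("AE per point:", ae)
print("largest AE:", max(ae))
```

Reporting the AE point by point, rather than a single averaged figure, is what allows ranges such as \(10^{-6}\) to \(10^{-7}\) to be quoted for each class.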

Conclusions

The aim of the current investigation is to present the solutions of the learning language differential model by applying artificial neural networks. Differential models play a role not only in modeling the spread of disease but also in modeling language learning. Hence, a language-based differential model is solved numerically through artificial intelligence along with the optimization of the scale conjugate gradient neural network. The mathematical dynamics of the learning language differential model are divided into three classes, called unknown, familiar, and mastered. The AI based SCJGNN procedure has been implemented using a data split of testing (12%), validation (13%), and training (75%). In the neural network used to solve the learning language model, a log-sigmoid transfer function, SCJG optimization, twelve neurons, and input, hidden and output layers are used in this stochastic computing framework. The correctness of the AI based SCJGNN has been observed through the overlap of the obtained and reference (Adams) results. The negligible absolute error is around \(10^{-7}\) to \(10^{-9}\) for the respective cases of the language model. In addition, the reliability of the AI based SCJGNN is observed through the function fitness, histogram, and correlation/regression plots of the language differential model.

In future, the designed AI based SCJGNN structure can be extended to the computational treatment of other mathematical models, fluid dynamics, and nonlinear models43,44,45,46,47,48,49,50,51,52.

Data availability

All data generated or analysed during this study are included in this article.

References

Cooper, I., Mondal, A. & Antonopoulos, C. G. A SIR model assumption for the spread of COVID-19 in different communities. Chaos Solitons Fract. 139, 110057 (2020).

Chen, Y. C., Lu, P. E., Chang, C. S. & Liu, T. H. A time-dependent SIR model for COVID-19 with undetectable infected persons. IEEE Trans. Netw. Sci. Eng. 7(4), 3279–3294 (2020).

Li, M. Y. & Muldowney, J. S. Global stability for the SEIR model in epidemiology. Math. Biosci. 125(2), 155–164 (1995).

Biswas, M. H. A., Paiva, L. T. & de Pinho, M. D. R. A SEIR model for control of infectious diseases with constraints. Math. Biosci. Eng. 11(4), 761–784 (2014).

Odagaki, T. Analysis of the outbreak of COVID-19 in Japan by SIQR model. Infect. Dis. Model. 5, 691–698 (2020).

Wu, L. I. & Feng, Z. Homoclinic bifurcation in an SIQR model for childhood diseases. J. Differ. Equ. 168(1), 150–167 (2000).

Umar, M. et al. Integrated neuro-swarm heuristic with interior-point for nonlinear SITR model for dynamics of novel COVID-19. Alex. Eng. J. 60(3), 2811–2824 (2021).

Charoenwong, C. et al. The effect of rolling stock characteristics on differential railway track settlement: An engineering-economic model. Transport. Geotech. 37, 100845 (2022).

Sabir, Z. Stochastic numerical investigations for nonlinear three-species food chain system. Int. J. Biomath. 15(04), 2250005 (2022).

Khayyer, A., Tsuruta, N., Shimizu, Y. & Gotoh, H. Multi-resolution MPS for incompressible fluid-elastic structure interactions in ocean engineering. Appl. Ocean Res. 82, 397–414 (2019).

Bilal, M., Younis, M., Ahmad, J. & Younas, U. Investigation of new solitons and other solutions to the modified nonlinear Schrödinger equation in ocean engineering. J. Ocean Eng. Sci. 4, 31 (2022).

Sabir, Z. et al. A computational analysis of two-phase Casson nanofluid passing a stretching sheet using chemical reactions and gyrotactic microorganisms. Math. Probl. Eng. 2019(4), 1–12 (2019).

Sajid, T., Tanveer, S., Munsab, M. & Sabir, Z. Impact of oxytactic microorganisms and variable species diffusivity on blood-gold Reiner–Philippoff nanofluid. Appl. Nanosci. 11(1), 321–333 (2021).

Shi, W., Chen, S., Zhang, C., Jia, R. & Yu, Z. Just fine-tune twice: Selective differential privacy for large language models. arXiv preprint arXiv:2204.07667 (2022).

Wei, W. Dynamic model of language propagation in English translation based on differential equations. Math. Probl. Eng. 4, 1–12 (2022).

Kung, T. H. et al. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLoS Digit. Health 2(2), e0000198 (2023).

Mosavi, A. et al. State of the art of machine learning models in energy systems, a systematic review. Energies 12(7), 1301 (2019).

Minaee, S. et al. Deep learning-based text classification: a comprehensive review. ACM Comput. Surv. (CSUR) 54(3), 1–40 (2021).

Safi, Z., Abd-Alrazaq, A., Khalifa, M. & Househ, M. Technical aspects of developing chatbots for medical applications: Scoping review. J. Med. Internet Res. 22(12), e19127 (2020).

Liu, C., Xie, L., Han, Y., Wei, D. & Yuan, X. AutoCaption: An approach to generate natural language description from visualization automatically. In 2020 IEEE Pacific Visualization Symposium (PacificVis). 191–195 (IEEE, 2020).

Sadaka, G. A Logos Masquerade: The Unity of Language and Woman’s Body in Anne Brontë’s the Tenant of Wildfell Hall. 1–11 (Brontë Studies, 2023).

Fekih-Romdhane, F. et al. Translation and validation of the mindful eating behaviour scale in the Arabic language. BMC Psychiatry 23(1), 120 (2023).

Arayssi, S. I., Bahous, R., Diab, R. & Nabhani, M. Language teachers’ perceptions of practitioner research. J. Appl. Res. Higher Educ. 12(5), 897–914 (2020).

Diab, N. M. Effect of language learning strategies and teacher versus peer feedback on reducing lexical errors of university learners. Int. J. Arabic-English Stud. 22(1), 101–124 (2022).

Oueini, A., Awada, G. & Kaissi, F. S. Effects of diglossia on classical Arabic: Language developments in bilingual learners. GEMA Online J. Lang. Stud. 20(2), 14 (2020).

Dickerson, L. K. et al. Language impairment in adults with end-stage liver disease: Application of natural language processing towards patient-generated health records. npj Digit. Med. 2(1), 106 (2019).

Jin, D., Jin, Z., Zhou, J. T. & Szolovits, P. Is BERT really robust? A strong baseline for natural language attack on text classification and entailment. In Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 34(05). 8018–8025 (2020).

Shoaib, M. et al. Neuro-computational intelligence for numerical treatment of multiple delays SEIR model of worms propagation in wireless sensor networks. Biomed. Signal Process. Control 84, 104797 (2023).

Umar, M., Amin, F., Wahab, H. A. & Baleanu, D. Unsupervised constrained neural network modeling of boundary value corneal model for eye surgery. Appl. Soft Comput. 85, 105826 (2019).

Wang, B. et al. Numerical computing to solve the nonlinear corneal system of eye surgery using the capability of Morlet wavelet artificial neural networks. Fractals 3, 2240147 (2022).

Anwar, N., Ahmad, I., Kiani, A. K., Shoaib, M. & Raja, M. A. Z. Intelligent solution predictive networks for non-linear tumor-immune delayed model. Comput. Methods Biomech. Biomed. Eng. 4, 1–28 (2023).

Umar, M. et al. A novel study of Morlet neural networks to solve the nonlinear HIV infection system of latently infected cells. Results Phys. 25, 104235 (2021).

Sabir, Z., Umar, M., Raja, M.A.Z. & Baleanu, D. Numerical solutions of a novel designed prevention class in the HIV nonlinear model. In CMES-Computer Modeling in Engineering & Sciences Vol. 129(1) (2021).

Anwar, N. et al. Design of intelligent Bayesian supervised predictive networks for nonlinear delay differential systems of avian influenza model. Eur. Phys. J. Plus 138(10), 911 (2023).

Sabir, Z., Raja, M. A. Z. & Baleanu, D. Fractional mayer neuro-swarm heuristic solver for multi-fractional order doubly singular model based on Lane–Emden equation. Fractals 29(05), 2140017 (2021).

Sabir, Z. & Alhazmi, S. E. A design of novel Gudermannian neural networks for the nonlinear multi-pantograph delay differential singular model. Phys. Scr. 98(10), 105233 (2023).

Anwar, N. et al. Intelligent computing networks for nonlinear influenza-A epidemic model. Int. J. Biomath. 16(04), 2250097 (2023).

Saeed, T., Sabir, Z., Alhodaly, M. S., Alsulami, H. H. & Sánchez, Y. G. An advanced heuristic approach for a nonlinear mathematical based medical smoking model. Results Phys. 32, 105137 (2022).

Sabir, Z., Raja, M. A. Z., Alnahdi, A. S., Jeelani, M. B. & Abdelkawy, M. A. Numerical investigations of the nonlinear smoke model using the Gudermannian neural networks. Math. Biosci. Eng 19(1), 351–370 (2022).

Anwar, N., Ahmad, I., Kiani, A. K., Shoaib, M. & Raja, M. A. Z. Novel intelligent Bayesian computing networks for predictive solutions of nonlinear multi-delayed tumor oncolytic virotherapy systems. Int. J. Biomath. 6, 2350070 (2023).

Sabir, Z. Neuron analysis through the swarming procedures for the singular two-point boundary value problems arising in the theory of thermal explosion. Eur. Phys. J. Plus 137(5), 638 (2022).

Mukdasai, K. et al. A numerical simulation of the fractional order Leptospirosis model using the supervise neural network. Alex. Eng. J. 61(12), 12431–12441 (2022).

Tian, M., El Khoury, R. & Alshater, M. M. The nonlinear and negative tail dependence and risk spillovers between foreign exchange and stock markets in emerging economies. J. Int. Financ. Markets Inst. Money 82, 101712 (2023).

Issa, J. S. A nonlinear absorber for the reflection of travelling waves in bars. Nonlinear Dyn. 108(4), 3279–3295 (2022).

Kassis, M.T., Tannir, D., Toukhtarian, R. & Khazaka, R. Moments-based sensitivity analysis of x-parameters with respect to linear and nonlinear circuit components. In 2019 IEEE 28th Conference on Electrical Performance of Electronic Packaging and Systems (EPEPS). 1–3 (IEEE, 2019).

Abi Younes, G. & El Khatib, N. Mathematical modeling of inflammatory processes of atherosclerosis. Math. Model. Nat. Phenomena 17, 5 (2022).

Abi Younes, G. & El Khatib, N. Mathematical modeling of atherogenesis: Atheroprotective role of HDL. J. Theor. Biol. 529, 110855 (2021).

Abi Younes, G., El Khatib, N. & Volpert, V. A free boundary mathematical model of atherosclerosis. Appl. Anal. 8, 1–23 (2023).

Ross, P. International journal of mathematical education in science and technology. Coll. Math. J. 34(4), 340 (2003).

Akl, J., Alladkani, F. & Akle, B. Ionic buoyancy engines: Finite element modeling and experimental validation. In Electroactive Polymer Actuators and Devices (EAPAD) XXI. Vol. 10966. 197–203. (SPIE, 2019).

El Khatib, N., Forcadel, N. & Zaydan, M. Homogenization of a microscopic pedestrians model on a convergent junction. Math. Model. Nat. Phenomena 17, 21 (2022).

Ghizzawi, F. & Tannir, D. Circuit-averaged modeling of non-ideal low-power DC–AC inverters. In 2020 IEEE Texas Power and Energy Conference (TPEC). 1–6 (IEEE, 2020).

Funding

The authors are thankful to United Arab Emirates University for the financial support through the UPAR grant 12S121.

Author information

Contributions

Z.S.: Methodology and writing paper. S.B.S.: Simulations. Q.A.-M.: Supervision and writing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sabir, Z., Ben Said, S. & Al-Mdallal, Q. An artificial neural network approach for the language learning model. Sci Rep 13, 22693 (2023). https://doi.org/10.1038/s41598-023-50219-9

DOI: https://doi.org/10.1038/s41598-023-50219-9
