Abstract

Implicit sentiment identification is a long-standing challenge in text mining because implicit sentiment text contains no explicit sentiment words. Recently, graph neural networks (GNNs) have made great progress in natural language processing (NLP) because of their powerful feature-capture ability, but current methods still have two problems. First, the graph structure constructed for implicit sentiment text is usually of a single type and does not comprehensively consider the information in the text, which makes the semantics harder to understand. Second, the initial static graph structure depends heavily on human labor and domain expertise, and the errors it introduces cannot be corrected. To solve these problems, we introduce a dynamic graph structure framework (SIF) based on the complementarity of semantic and structural information. Specifically, for the first problem, SIF integrates the semantic and structural information of the text and, based on specialized knowledge, constructs two graph structures: a structural information graph and a semantic information graph. The two graphs complement each other, provide rich semantic features for the downstream identification task, and help the model understand the contextual information underlying implicit sentiment. For the second problem, SIF dynamically learns from the initial static graph structure to eliminate noise in the graph, preventing the accumulation of noise that would degrade the downstream identification task. We compare SIF with mainstream NLP methods on three publicly available datasets, and SIF outperforms the baseline models on all of them. The accuracy on the Pun-of-the-day dataset, the SemEval-2021 task 7 dataset, and the Reddit dataset reaches 95.73%, 85.37%, and 65.36%, respectively. The experimental results show that the proposed method is well suited to implicit sentiment identification tasks.

Introduction

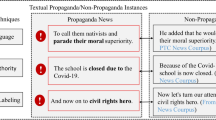

Sentiment analysis1 is a developing field of research in NLP that focuses on discovering or mining the polarity of the sentiment that people express in text towards a thing, product, or service2. Text sentiment analysis is usually divided into explicit and implicit sentiment analysis according to whether the text contains sentiment words3. Explicit sentiment analysis relies mainly on the explicit sentiment words in a sentence (e.g., like, dislike) to determine the sentiment polarity of the whole sentence. Implicit sentiment text, in contrast, carries no words with obvious sentiment tendencies; it conveys sentiment indirectly through objective statements, using factual, metaphorical, or ironic expressions4.

As shown in Table 1, Examples 1 and 2 are typical explicit and implicit sentiment sentences. In Example 1, the sentence directly expresses a positive sentiment towards the landscape by describing it as “beautiful”, an explicit sentiment word. In Example 2, the sentence contains no sentiment words and only implicitly expresses the writer's love for the landscape. In recent years, most sentiment analysis research has addressed the explicit task, using bag-of-words models, word embedding models, and machine learning and deep learning methods, with remarkable results. Implicit sentiment has received less attention and poses two difficulties. First, the lack of sentiment words makes the semantic features of the sentiment hard to recognize. Second, implicit sentiment is more closely tied to contextual information, which makes the semantics hard to understand. Statistics show that implicit sentiment sentences account for 25–30% of all sentiment sentences4, so research on implicit sentiment analysis is an important part of sentiment analysis as a whole.

In recent years, GNNs have achieved state-of-the-art performance in sentiment analysis tasks5,6 owing to their powerful ability to capture features from graph-structured data. GNNs let features interact by explicitly introducing relationships between words or terms (i.e., adding edges), and node representations are obtained by aggregating topological information7. Many NLP problems can be expressed in terms of graph structures8. For example, sentence structure information and semantic information in text sequences can be used to augment the original sequence data with knowledge. Therefore, to use GNNs effectively for implicit sentiment tasks, constructing graph inputs from text sequences becomes a crucial step. However, existing methods suffer from the following problems. (1) Inadequate consideration of information. In GNN-based implicit sentiment identification, graph structures are built so that features of words and terms can interact effectively, but previous graph structures built for text considered only the structural information or only the semantic information of the sentence. (2) Static text graph structure. Previous GNNs applied to implicit sentiment analysis build only static graph structures from external knowledge, which makes the task highly dependent on the quality of the original graph. If that quality is poor, the static graph cannot be corrected, which can severely harm implicit sentiment analysis.

Traditional text classification algorithms such as the Hierarchical Attention Network (HAN) have difficulty utilizing the structural information of implicit sentiment text, while previous GNNs do not consider the information in the text sufficiently and build static graphs that are hard to optimize further. To solve these problems, we introduce a dynamic graph learning framework (SIF) that combines the complementary semantic and structural information of text. SIF builds multiple graph structures from the semantic and structural information of the sentence, fuses them into a single comprehensive graph structure, and then improves performance by iteratively refining that graph through dynamic learning. Specifically, for the first problem, SIF uses a priori knowledge to build a structural information graph and a semantic information graph, which together provide a rich text graph structure for feature interaction and help identify the features of implicit sentiment text. For the second problem, SIF iterates on the text graph structure through dynamic learning. It learns a more robust dynamic graph structure from the text data and can infer information-rich hidden edges, which yields a more robust text graph topology for downstream tasks.

In summary, our main contributions to the implicit sentiment identification task are as follows:

1. We propose a novel implicit sentiment identification model, SIF, that integrates the complementary semantic and structural information of text. SIF improves model performance by letting two graph structures complement each other so that the information in the text is considered comprehensively.

2. We use dynamic graph structure learning to update the static graph structure. Dynamic iteration removes noisy edges from the initial text graph and yields a higher-quality, more robust graph structure.

3. Extensive experiments on three implicit sentiment benchmark datasets validate the effectiveness of our framework. The accuracy on the Pun-of-the-day dataset, the SemEval-2021 task 7 dataset, and the Reddit dataset reaches 95.73%, 85.37%, and 65.36%, respectively. Ablation experiments further show that the proposed dynamic graph complementation mechanism improves overall model performance.

Related works

Sentiment analysis

Sentiment analysis methods can be categorized into corpus-based methods and deep learning-based methods. Among corpus-based approaches to implicit sentiment, Jacobs et al.9 constructed SENTiVENT, an implicit sentiment corpus of English business news, conducted coarse-grained implicit sentiment analysis experiments on it, and found that existing explicit sentiment models could not be directly applied to implicit sentiment analysis.

However, the more mainstream approach is still based on deep learning. Among deep learning-based approaches, Fei et al.10 introduced the Three Hop Reasoning (THOR) CoT framework, which mimics a human-like reasoning process and offers a simple and effective way to detect implicit sentiment. Mao et al.11 proposed a novel gating mechanism for bridging multi-task learning towers, which can be applied to aspect-based sentiment analysis and sequential metaphor recognition tasks. In addition, Fei et al.12 proposed a latent emotion memory network (LEM) that can perform multi-label sentiment categorization. Xiao et al.13 proposed an aesthetically oriented multi-granularity fusion network for joint multimodal aspect-based sentiment analysis (JMASA).

The success of pre-trained language models also brings new ideas for implicit sentiment analysis. Mao et al.14 found large-scale pre-trained language models (PLMs) to be biased in sentiment analysis and emotion detection tasks. Cynthia Hee et al.15 used BERT and support vector machines to detect implicit sentiment in news events and achieved good results in classifying the implicit sentiment of 1735 news articles. Huang et al.16 used ERNIE2.0 to embed external knowledge and entity information into word vectors for implicit sentiment analysis.

The sentiment analysis methods mentioned above rarely utilize the structural information in the sentences, although they can effectively perform sentiment computation. In this study, SIF can effectively utilize the feature information in sentences by constructing structural and semantic information graphs.

Applications of graph neural networks

In recent years, GNNs have achieved great success in various tasks such as sentiment analysis. Xiao et al.17 proposed a novel deep learning model based on GNNs to overcome the noise and inefficient use of information caused by syntactic dependency trees. Xu et al.18 proposed a novel solution based on semantic latent graphs and SenticNet, which can utilize semantic and sentiment information simultaneously. Chen et al.19 proposed a model integrating GCN and a common attention mechanism for aspect-based sentiment analysis. The method can efficiently process aspect-based information and eliminate noise from irrelevant context words. Fei et al.20 proposed a tag-aware GCN approach for simultaneously modeling syntactic edges and tags for semantic role labeling. Shi et al.21 proposed a graph attention network to iteratively refine token representations, together with an adaptive multi-label classifier to dynamically predict multiple relationships between token pairs. The model can effectively perform structured sentiment analysis. In addition, many NLP models utilize GNNs as codecs to leverage information about the syntactic structure of text22,23,24,25,26.

In terms of implicit sentiment, Zuo et al.27 proposed a new context-specific heterogeneous graph convolutional network (CsHGCN) framework for implicit sentiment classification tasks. The model encodes local information of unstructured data and can effectively capture deep domain features. Further, Yang et al.28 proposed a novel implicit sentiment analysis model based on graph attention convolutional neural network. In this model the graph convolutional neural network is used to propagate semantic information and the attention mechanism is used to compute the contribution to the sentiment expression of words. However, the graph structures of these models are fixed and cannot be optimized, and the constructed graph structures may contain noisy edges. Recently, Zhao et al.29 proposed an iterative graph structure learning framework based on knowledge fusion, which provides rich multi-source information for implicit sentiment analysis through graph structures of different views, and searches for hidden graph structures through iterative learning. In our work, we comprehensively consider the structural and semantic information of the text to construct a suitable textual graph structure, then enhance the information representation ability of the graph structure by fusing the graph structure, and finally obtain a more robust graph structure through dynamic learning. The order of fusion and then dynamic learning can reduce the noise edges more effectively than the work of Zhao et al. and obtain a more favorable graph structure for the downstream task.

Materials and methods

Problem statement

When graph neural networks are used for implicit sentiment identification, the NLP problem is recast as a graph classification task. For a text dataset with T samples, each text sample is first tokenized into \(\mathscr {N}=\{\textrm{X}_{1},\textrm{X}_{2},\ldots ,\textrm{X}_{n}\}\), where \(\textrm{X}_{i}\) is a text token (i.e., a node). Pre-trained word vectors map the tokens into a low-dimensional vector space to obtain a word embedding matrix \(\mathrm {X\in \mathbb {R}^{n\times d}}\) (i.e., the node feature matrix), where n is the sentence length (i.e., the number of nodes) and d is the word vector dimension (i.e., the node feature dimension). Our goal is to learn a classification function \(\textbf{f}(\cdot )\) that predicts the label \(\widehat{\textbf{y}}_{i}\in \{0,1\}\) (non-implicit sentiment, implicit sentiment) for each text sample. The text graph can be represented as \(\textrm{G}=(\mathscr {V},\mathscr {E},\textrm{X})=(\textrm{A},\textrm{X})\), where \(\mathscr {V}\) is the set of nodes with \(n=\left| \mathscr {V}\right|\) and \(\mathscr {E}\) is the set of edges with \(m=\left| \mathscr {E}\right|\). The adjacency matrix \(\mathrm {A\in \mathbb {R}^{n\times n}}\) contains all the word-to-word relations.

Methodology

This section details our proposed SIF, a dynamic graph structure learning framework that integrates text structure information with semantic information. As shown in Fig. 1, SIF consists of three components: graph construction module, graph fusion module and dynamic graph structure learning module. In the graph construction module, we construct the initial static graph structure of text based on syntactic dependency tree and co-occurrence statistics, respectively, taking into account the semantic and structural information of the sentence. After that, in the graph fusion module, we enhance the information contained in the graph structures by combining the different graph structures built. Finally, iterative learning is performed in the dynamic graph structure learning module to obtain more robust graph structures. Each of these key components is demonstrated in the subsections that follow.

Graph construction

For most NLP tasks such as implicit sentiment analysis, the typical input is a text sequence rather than a graph structure. Constructing graph inputs from text sequences is therefore a necessary and nontrivial step in using GNNs effectively. In this section, we focus on two graph construction methods, which build graph structures \(\textrm{G}=(\mathscr {V},\mathscr {E})\) from the semantic information and the structural information of the text, respectively, as graph-structured inputs for implicit sentiment analysis. \(\mathscr {E}_{i,j}\) denotes the relationship between words \(\textrm{x}_{i}\) and \(\textrm{x}_{j}\), which is computed with different measures, i.e., dependency parse trees and co-occurrence relations. Each edge set \(\mathscr {E}\) corresponds to an adjacency matrix \(\textrm{A}\) that contains all word-to-word relations, so \(\mathscr {E}\) can be viewed as the set of nonzero entries of \(\textrm{A}\): an entry whose computed value is 0 is not retained as an edge.

Structural information

Sentence structure information (i.e., dependency parse trees) in text sequences can be used to augment raw sequence data. Dependency graphs are commonly employed to depict the interdependencies among distinct entities inside a particular sentence. Formally, the extracted dependencies can be converted into graph structures30,31. Next, we describe the dependency graph construction method for a parse tree extracted given an input text.

We denote a dependency as “\(\textrm{x}_{i}\) depends on \(\textrm{x}_{j}\) with relation \(\mathscr {E}_{i,j}\)”.

The formalized formula is as follows:

Here, \(DT(x_i,x_j)\) denotes the dependency relation \(\mathscr {E}_{i,j}\) between \(\textrm{x}_{i}\) and \(\textrm{x}_{j}\). The weight of the edge between two nodes depends on the value of \(DT(x_i,x_j)\).
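As a minimal sketch of this construction, the snippet below builds a symmetric adjacency matrix from a dependency parse. The `heads` list (the index of each token's head, with -1 for the root) is a hypothetical parser output format, and the unit edge weight merely stands in for \(DT(x_i,x_j)\); neither is specified by the text above.

```python
import numpy as np

def dependency_adjacency(heads, weight=1.0):
    """Build an undirected adjacency matrix from dependency head indices.

    heads[i] is the index of the token that token i depends on,
    or -1 if token i is the root (hypothetical parser output format).
    """
    n = len(heads)
    A = np.zeros((n, n), dtype=float)
    for i, h in enumerate(heads):
        if h >= 0:
            # one edge per dependency, stored in both directions
            A[i, h] = weight
            A[h, i] = weight
    return A

# Toy sentence with a hand-written parse; "chased" is the root.
tokens = ["The", "cat", "chased", "the", "mouse"]
heads = [1, 2, -1, 4, 2]
A_struct = dependency_adjacency(heads)
```

Token pairs with no dependency between them keep a zero entry, so they contribute no edge to the structural information graph.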

Semantic information

Similarly, semantic information in sequence data can be used to enhance the original sequence data. Co-occurrence graphs are designed to capture co-occurrence relationships between tokens in a sentence32,33. The purpose of co-occurrence analysis is to seek out similarities in meaning between word pairs, as well as to discover the underlying structure of the text representation34. Based on co-occurrence analysis, the model can derive low-dimensional dense vectors to implicitly represent syntactic or semantic features of the language. Co-occurrence relations allow obtaining semantic relations between words in a text.

Co-occurrence relationships are defined by co-occurrence matrices, which describe how words occur together. Co-occurrence frequency35 is a typical method for computing word co-occurrence matrices. The maximum likelihood estimate (MLE) of the probability of the pair \((x_{i},x_{j})\), conditional on the occurrence of word \(x_{i}\), can be expressed by the following formula36:

\[\mathrm {P_{MLE}(x_{j}|x_{i})}=\frac{\#(\mathrm {x_i,x_j})}{\#(x_{\textrm{i}})} \qquad (2)\]

Equation (2) represents the global relationship between two words \((x_{i},x_{j})\). In this formula, \(\#(x_{\textrm{i}})\) is the number of occurrences of word \(\textrm{x}_{i}\), and \(\#(\mathrm {x_i,x_j})\) is the number of times the two words appear together in a predefined window L of fixed size. Word co-occurrence analysis reveals which word pairs are close in meaning, so we let \(A_{\textrm{ij}}=\mathrm {P_{MLE}(x_{j}|x_{i})}\), where a larger value indicates a closer relationship.
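The co-occurrence weights can be illustrated with a short sketch. It assumes that a window of size L covers a token and the L-1 tokens that follow it, and that pairs are counted in both directions; the function name `cooccurrence_mle` and these counting conventions are illustrative assumptions, not details given in the text.

```python
import numpy as np
from collections import Counter

def cooccurrence_mle(tokens, window=2):
    """Return (vocab, A) with A[i, j] = #(x_i, x_j) / #(x_i)."""
    vocab = sorted(set(tokens))
    index = {word: k for k, word in enumerate(vocab)}
    unigram = Counter(tokens)
    pair = Counter()
    for i in range(len(tokens)):
        # pair token i with the tokens that follow it inside the window
        for j in range(i + 1, min(i + window, len(tokens))):
            a, b = tokens[i], tokens[j]
            if a != b:
                pair[(a, b)] += 1
                pair[(b, a)] += 1
    A = np.zeros((len(vocab), len(vocab)))
    for (a, b), count in pair.items():
        A[index[a], index[b]] = count / unigram[a]
    return vocab, A

vocab, A_sem = cooccurrence_mle(["the", "cat", "chased", "the", "mouse"], window=2)
```

Because the estimate is conditioned on \(x_{i}\), the resulting matrix is generally asymmetric. Under this simple counting scheme the ratio is a relative frequency and is not guaranteed to stay below 1 for larger windows.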

Graph fusion

Most static graph construction methods in the past consider only one specific type of relationship between nodes. Although the generated graphs can capture the structural or the semantic information of the text, they cannot make use of other kinds of graph relationships. To deal with this problem, we construct fusion graphs by combining multiple graphs, which enriches the information in the graph.

We wish to aggregate the advantages of multiple graph structures into a single fusion graph structure. The edges can be merged into one graph by pooling the adjacency tensor, \(pooling(\mathscr {A})=pooling(A_{1},A_{2},\ldots ,A_{r})\), where \(A_{i}\) denotes the adjacency matrix of the i-th graph structure obtained by the graph construction module. To coordinate the edges of the different graphs, we use the along-edge attention strategy, which is formalized in Eqs. (3) and (4).

Here, \(W_{\textrm{edge}}^{i}\) is an attention matrix along the edges with the same size as the adjacency matrix, and \(\odot\) is the element-wise product. Finally, the edges are merged into a single graph by pooling the adjacency tensor.
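One way to picture the fusion step is the sketch below, which weights each adjacency matrix element-wise by its attention matrix and then sum-pools the results. Sum pooling and the constant attention values are assumptions made for illustration; in the model the attention matrices would be learned parameters.

```python
import numpy as np

def fuse_graphs(adjs, edge_weights):
    """Sum-pool attention-weighted adjacency matrices into one graph."""
    if len(adjs) != len(edge_weights):
        raise ValueError("need one attention matrix per adjacency matrix")
    fused = np.zeros_like(np.asarray(adjs[0], dtype=float))
    for A, W in zip(adjs, edge_weights):
        fused += np.asarray(W, dtype=float) * np.asarray(A, dtype=float)  # element-wise
    return fused

# Toy structural and semantic graphs over three tokens (made-up values).
A_struct = np.array([[0.0, 1.0, 0.0],
                     [1.0, 0.0, 0.0],
                     [0.0, 0.0, 0.0]])
A_sem = np.array([[0.0, 0.5, 0.0],
                  [0.5, 0.0, 1.0],
                  [0.0, 1.0, 0.0]])
# Placeholder attention: equal weight on every edge of both graphs.
W_struct = np.full((3, 3), 0.5)
W_sem = np.full((3, 3), 0.5)
A_fused = fuse_graphs([A_struct, A_sem], [W_struct, W_sem])
```

In this toy case the fused graph keeps the edge shared by both inputs and also gains the edge that appears only in the semantic graph.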

Graph structure learning

Static graph construction incorporates some a priori knowledge but still has drawbacks. First, it requires significant labor and domain expertise to construct graph topologies that perform reasonably well. Second, manually constructed graph structures may be prone to errors. Third, errors made while constructing the graph structure may accumulate in later stages and degrade performance. Finally, the graph construction process is usually derived only from NLP domain knowledge, which may not be optimal for downstream prediction tasks.

To solve the above problems, we first construct the graph structure with the help of domain expertise and then learn the graph structure dynamically. Learning relies on a similarity measure over node embeddings. The similarity function uses an attention-based mechanism, with the formula shown below:

Here, \(\textbf{u}\) is a non-negative weight vector that highlights different dimensions of the node embeddings, and \(\odot\) denotes element-wise multiplication. \(\textrm{A}_{i,j}\) is learned dynamically by repeatedly updating the node embeddings \(\textbf{v}_i\) and \(\textbf{v}_j\).
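A minimal sketch of such a similarity function follows. It assumes the common attention-based form \(S_{i,j}=(\textbf{v}_i\odot \textbf{u})^{\top }\textbf{v}_j\); this is one plausible reading of the description above rather than a confirmed reproduction of the paper's formula, and the name `attention_similarity` is illustrative.

```python
import numpy as np

def attention_similarity(V, u):
    """Pairwise similarity S[i, j] = (v_i * u) . v_j for node embeddings V."""
    V = np.asarray(V, dtype=float)
    u = np.asarray(u, dtype=float)
    if np.any(u < 0):
        raise ValueError("u must be non-negative")
    # (V * u) rescales each embedding dimension; the matrix product
    # then takes all pairwise dot products at once.
    return (V * u) @ V.T

# Three nodes with 2-dimensional embeddings (made-up values).
V = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0]])
u = np.array([2.0, 1.0])  # emphasizes the first embedding dimension
S = attention_similarity(V, u)
```

Recomputing `S` from updated embeddings at each iteration is what lets the learned adjacency matrix change during training.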

The final graph structure is then optimized by jointly training the graph construction module with the subsequent graph representation learning module. To retain more information about the graph structure, we combine the static initial graph with the dynamically learned graph.

In Eq. 6, \(\textrm{L}^{(0)}=\textrm{D}^{(0)^{-1/2}}\textrm{A}^{(0)}\textrm{D}^{(0)^{-1/2}}\) denotes the normalized adjacency matrix of the initial static graph, where \(\textrm{A}^{(0)}\) and \(\textrm{D}^{(0)}\) are the adjacency and degree matrices of the initial static graph, respectively. \(\textrm{A}^{(t)}\) denotes the adjacency matrix at the t-th iteration: \(\textrm{A}^{(1)}\) is computed from the initial node feature matrix X, and \(\textrm{A}^{(t)}\) is computed from the updated node embeddings \(\textrm{Z}^{(t-1)}\). The dynamically learned graph combines \(\textrm{A}^{(t)}\) and \(\textrm{A}^{(1)}\) to retain the advantages of both, with the balance controlled by the hyperparameter \(\alpha\). The hyperparameter \(\beta\) then balances the dynamically learned graph against the static initial graph. Finally, the original word embedding matrix X is mapped to the hidden layer space Z using a GCN.
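The roles of \(\alpha\) and \(\beta\) can be sketched as two nested convex combinations. The exact form used here, \(\beta \textrm{L}^{(0)}+(1-\beta )\{\alpha \textrm{A}^{(t)}+(1-\alpha )\textrm{A}^{(1)}\}\), is an assumption that matches the description of the two hyperparameters, and the function names are illustrative.

```python
import numpy as np

def normalize_adj(A):
    """Symmetric normalization D^{-1/2} A D^{-1/2}; isolated nodes stay zero."""
    A = np.asarray(A, dtype=float)
    deg = A.sum(axis=1)
    d_inv_sqrt = np.zeros_like(deg)
    mask = deg > 0
    d_inv_sqrt[mask] = deg[mask] ** -0.5
    return d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]

def combine_graphs(A0, A1, At, alpha, beta):
    """Blend the static graph A0 with the learned graphs A1 and At."""
    L0 = normalize_adj(A0)
    learned = alpha * np.asarray(At, dtype=float) + (1 - alpha) * np.asarray(A1, dtype=float)
    return beta * L0 + (1 - beta) * learned

# Two-node toy example with made-up matrices.
A0 = np.array([[0.0, 1.0], [1.0, 0.0]])  # static initial graph
A1 = np.eye(2)                           # graph learned from X
At = np.zeros((2, 2))                    # graph learned at iteration t
A_final = combine_graphs(A0, A1, At, alpha=0.5, beta=0.5)
```

With `beta` near 1 the result stays close to the static prior, while with `beta` near 0 it is dominated by the learned graphs.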

In the GCN layer (Eq. 7), W denotes the weight matrix and ReLU is the activation function. Next, we obtain the predicted label \(\hat{y}\) by using a \(\sigma (\cdot )\) function (a fully connected MLP projector) as the output function, as shown in Eq. 8.
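The propagation and output steps can be sketched as follows. The sketch assumes a single GCN layer that multiplies the adjacency matrix, the node features, and the weight matrix before applying ReLU, a mean-pool readout over nodes, and a one-layer sigmoid output standing in for the MLP projector. The readout choice and the names `gcn_layer` and `predict` are illustrative assumptions not given in the text.

```python
import numpy as np

def gcn_layer(A, X, W):
    """One graph convolution: ReLU(A @ X @ W)."""
    return np.maximum(A @ X @ W, 0.0)

def predict(Z, w_out, b_out=0.0):
    """Graph-level probability from node embeddings Z."""
    g = Z.mean(axis=0)            # mean-pool readout (assumption)
    logit = g @ w_out + b_out     # single linear layer in place of the MLP
    return 1.0 / (1.0 + np.exp(-logit))

# Two-node toy graph with made-up features and weights.
A = np.eye(2)
X = np.array([[1.0, -1.0],
              [2.0, 0.0]])
W = np.eye(2)
Z = gcn_layer(A, X, W)
p = predict(Z, w_out=np.zeros(2))
```

Thresholding `p` at 0.5 would give the binary label (implicit versus non-implicit sentiment).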

Finally, we optimize the model by minimizing the difference between the true value \({y}_{n}\) and the predicted value \(\hat{y}_n\) with the cross-entropy loss function.
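For a binary label, the cross-entropy loss takes the standard form sketched below. The clipping constant `eps` and the averaging over samples are implementation choices assumed here for numerical stability and are not taken from the paper.

```python
import numpy as np

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean binary cross-entropy between labels and predicted probabilities."""
    y_true = np.asarray(y_true, dtype=float)
    # clip to avoid log(0) when a prediction is exactly 0 or 1
    y_pred = np.clip(np.asarray(y_pred, dtype=float), eps, 1.0 - eps)
    losses = y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred)
    return float(-np.mean(losses))

# Uninformative predictions on one positive and one negative sample.
loss = binary_cross_entropy([1, 0], [0.5, 0.5])
```

The loss shrinks towards zero as the predicted probabilities approach the true labels.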

Experiments

Datasets

Reddit37: The Reddit dataset contains 15,909 texts, of which 2,025 are implicit sentiment texts. Each sentence consists of two parts, Body and Punchline, so the dataset is used in three variants: the Body dataset, the Punchline dataset, and the complete-sentence (Full) dataset.

SemEval-2021 task 7 (SemEval)38: The average length of sentences in the SemEval dataset is 24.9 words, containing 6179 implicit sentiment texts and 3821 non-implicit sentiment texts.

Pun-of-the-day (Puns)39: The average length of sentences in the Puns dataset is 13.5 words and contains a total of 4827 texts, of which 2424 are implicit sentiment texts.

Details of all datasets are in Table 2.

Baselines and parameter settings

IDGL40: A model for learning graph embeddings and graph structures jointly and iteratively.

TextCNN41: TextCNN is a traditional text classification model that can use multiple convolutional kernels to capture linguistic features.

RCNN42: A recurrent convolutional neural network that uses a recurrent structure to gather contextual information.

DPCNN43: The DPCNN model uses the dilated convolution operation for text classification.

HAN44: HAN builds multiple attention mechanisms for feature extraction for text classification and sentiment analysis.

KIG29: KIG establishes a graph iterative learning framework that can integrate knowledge from multiple perspectives thereby improving implicit sentiment identification.

We ran all models on an NVIDIA GTX 950M GPU in a PyTorch environment based on Python 3.8. We used the pre-trained word vector model GloVe to obtain word embeddings with a word vector dimension of 300. The optimizer is Adam, learning_rate is 0.001, and dropout_rate is 0.5. We use learning rate decay and an early stopping mechanism to prevent overfitting. The parameter settings for all models are shown in Table 3.

Evaluation indicator

We use F1, Recall, and Accuracy as evaluation criteria, computed from the confusion matrix shown in Table 4.

The formulas for F1, Recall and Accuracy are shown below:
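Under the standard definitions, these metrics follow directly from the four confusion-matrix counts: true positives (tp), false positives (fp), false negatives (fn), and true negatives (tn). The sketch below uses those definitions. The function name and the example counts are made up for illustration and are not results from this paper.

```python
def classification_metrics(tp, fp, fn, tn):
    """Return accuracy, recall, and F1 from binary confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "recall": recall, "f1": f1}

# Made-up counts for illustration only.
scores = classification_metrics(tp=8, fp=2, fn=2, tn=8)
```

The guards against zero denominators return 0.0 when a class is never predicted or never present, which is a convention chosen here rather than part of the definitions.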

Main results

The results of all models on the Puns dataset are shown in Table 5, with bold indicating the best value. As Table 5 shows, our model SIF outperforms all other models. In particular, SIF outperforms HAN, the strongest baseline, by about 0.2% and 0.7% in Accuracy and F1, respectively. This demonstrates the advantage of SIF in implicit sentiment identification on the Puns dataset. Although HAN can effectively reflect the hierarchical structure of the text through its hierarchical attention network, its ability to understand the semantics of the text is weaker. For complex semantic relationships or context-dependent tasks in particular, HAN would need deeper semantic understanding. Our model uses multiple graph structures to represent the semantic and structural information of the sentence, which makes it better at identifying the sentiment semantic features of implicit sentiment text.

The results of all models on the SemEval dataset are shown in Table 6, with bold indicating the best value. The experimental results show that our model outperforms not only the traditional text classification models but also graph neural network models such as IDGL and KIG: SIF reaches 85.37% accuracy on the test set, while KIG reaches 84.26%. Although KIG also uses multi-graph fusion, it fuses after the graph learning module, which may generate more noise. Our method instead fuses after the graph construction module and then performs dynamic learning, which reduces noisy edges more effectively. The results on the SemEval dataset also show that our method can exploit the advantages of graph neural networks in implicit sentiment analysis and improves on previous graph neural network models by using the structural and semantic information of the text for graph construction and fusion, which enhances the model’s representational capability. Overall, the results demonstrate that our model identifies implicit sentiment text well and can effectively perform the sentiment identification task.

Since the Reddit dataset is split into Body, Punchline and Full, we compared our model with the baseline models on each of the three datasets. The results are in Table 7, with bold indicating the best value.

In Table 7, we can see that our model achieves optimal results on all three datasets compared to the baseline model. In addition, we find that the accuracy of most models is in increasing order of Body, Punchline and Full, which may be due to the fact that the Punchline portion of the paragraph sample contains more implicit sentiment semantic information than the Body portion, and the models focus more on learning this part of knowledge. In the Full dataset, there is complete textual information, and our model can extract more feature information to build a better graph structure through training, so that it can better perform the implicit sentiment identification task.

Ablation experiment

To further assess the extent to which each component of SIF affects performance, we performed ablation experiments. First, we separately examined the impact of the structural information graph and the semantic information graph on the whole model to understand the role of each graph structure in SIF. In addition, we separately investigated the impact of the static initial graph and the dynamically learned graph. The specific experimental results are as follows:

In Table 8, Semantic graph denotes the result when the model uses the semantic information graph alone, and Structure graph denotes the result when it uses the structural information graph alone. Full model SIF denotes the result of using the semantic and structural information graphs together. w/o Dynamic learning indicates that the dynamic learning process is turned off, so the model uses only the static fused initial graph. As shown in Table 8, among the single structural information graph, the single semantic information graph, and the fusion graph, the full model SIF achieves the best result, which shows that the complementary mechanism of structural and semantic information helps the model extract the feature information of the text comprehensively. SIF thus addresses, to some extent, the limitation that most earlier static graph construction methods consider only one specific relationship and cannot use other kinds of graph relationships. By combining multiple graphs into a fusion graph, SIF enriches the information in the graph, provides a rich text graph structure for feature interaction, and is more conducive to recognizing the features of implicit sentiment text. In addition, on the Puns and SemEval datasets the model performs better with only the semantic information graph than with only the structural information graph, suggesting that the model relies more on features from the semantic information graph on these two datasets. When the dynamic iterative learning component is turned off, performance drops significantly compared with the whole model, which supports the effectiveness of the proposed dynamic learning framework.

Our model not only retains the information of the static initial graph but also enhances the semantic understanding of the text by dynamically learning the graph structure. We also found that, with dynamic learning turned off, the fusion graph alone performs worse on some evaluation metrics than a single graph structure, possibly because a fusion graph without dynamic learning superimposes the noise of its component graphs.

Parameter analysis

In the Graph structure learning section, we use the hyperparameter \(\alpha\) to combine the adjacency matrix \(\textrm{A}^{(t)}\) obtained after t learning iterations with the initially computed adjacency matrix \(\textrm{A}^{(1)}\), and then use the hyperparameter \(\beta\) to balance the dynamically learned graph structure against the static initial graph structure, which improves the robustness of the graph structure. To investigate the role of \(\alpha\) and \(\beta\), we vary both over a range of values by grid search and conduct a sensitivity analysis of the model’s accuracy.

Fig. 2 shows that the model behaves similarly on the two datasets: as \(\beta\) increases, accuracy first rises and then falls, tracing a roughly bell-shaped curve. This suggests that giving the initial static graph a suitable share of the final graph improves the robustness of the graph structure. If that share is too large, however, the learned graph structure contributes too little, the quality of the final graph suffers, and model performance drops, so the dynamically learned graph is needed to improve the overall graph quality. The variation of accuracy with \(\alpha\) shows that accuracy decreases drastically when \(\alpha\) is too large, possibly because node embeddings lose some feature information after dynamic learning, which makes the graph structure less stable. Thus both the dynamic and the static initial graph structures contribute to the performance of the model.

Number of learning iterations

In the graph learning stage, the dynamic learning process is crucial. To reflect the effect of dynamic learning on SIF, Fig. 3 shows a line graph of model performance against the number of learning iterations.

From Fig. 3a, b, the model’s accuracy increases gradually as the number of learning iterations increases, then decreases once the number of iterations reaches a certain level, and finally converges. This might be because the model becomes unstable after a certain number of learning iterations, but eventually tends to converge as the number of iterations rises further. This demonstrates that SIF not only retains the benefit of the a priori knowledge encoded in the initial static graph construction, but also improves the performance of the model by dynamically learning the graph. The reasons are as follows. First, by dynamically learning the graph structure, SIF mitigates to some extent the problem that static graphs require substantial domain expertise. In addition, SIF addresses the problem that manually constructed graph structures are prone to errors by inferring more reasonable graph structures through dynamic learning. Finally, SIF improves the quality of the graph structure through the graph learning phase and obtains a more robust text graph topology, which is more favorable for the downstream identification task and improves the accuracy of the model. Taken together, these results indicate that the dynamic graph learning process has a significant impact on SIF.

Case study

In this section we conduct a case study to explore how the SIF model enhances the performance of implicit sentiment identification.

In Fig. 4, we construct a semantic information graph and a structural information graph for a piece of text. A graph structure constructed by considering only the semantic or only the structural information of the text takes into account only part of the information of the text. In contrast, our model uses both the semantic and the structural information of the sentence to construct different static initial graph structures that cover multiple aspects of the text. It also integrates the information of the different graph structures by means of graph fusion, and then removes redundant noise edges through dynamic learning to obtain more robust graph structures, which are more conducive to the identification task. In Fig. 4, we demonstrate our method in three steps. Comparing them, we find that our method builds different graph structures from the two kinds of information of the text, and that the static initial graph structure after the graph fusion stage is denser: it not only retains the edge between ’cat’ and ’chased’, which is present in both graph structures, but also complements the edge structures of the structural and semantic information graphs by adding the edge connecting ’chased’ and ’escaped’, which enhances the representation information of the graph structure. Finally, dynamic learning removes the edge between ’the’ and ’chased’, which has a smaller weight, to obtain a more robust text graph topology. This shows that SIF not only constructs static graph structures from different information of the text and fuses them, but also learns the static initial graph structures dynamically and iteratively, which contributes to the performance of the whole model.
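The three steps of the case study (construction, fusion, removal of low-weight edges) can be sketched on a toy example. This is only an illustration under stated assumptions: the fusion rule (edge union, keeping the larger weight for shared edges), the pruning threshold, every edge weight, and the assignment of the non-shared edges to a particular graph are all hypothetical, and the pruning step thresholds fixed weights rather than re-learning them as SIF does.

```python
def edge(u, v):
    """Order-independent key for an undirected edge."""
    return tuple(sorted((u, v)))


def fuse_graphs(structural, semantic):
    """Union of both edge sets; a shared edge keeps the larger weight.
    Assumed fusion rule, chosen only for illustration."""
    fused = dict(structural)
    for e, w in semantic.items():
        fused[e] = max(w, fused.get(e, 0.0))
    return fused


def prune(graph, threshold):
    """Drop edges whose weight falls below the threshold (a stand-in for
    the noise removal achieved by dynamic learning)."""
    return {e: w for e, w in graph.items() if w >= threshold}


# Hypothetical weights; which graph holds each non-shared edge is assumed.
structural = {edge("cat", "chased"): 0.9, edge("the", "chased"): 0.1}
semantic = {edge("cat", "chased"): 0.8, edge("chased", "escaped"): 0.6}

fused = fuse_graphs(structural, semantic)   # denser than either input graph
refined = prune(fused, threshold=0.3)       # low-weight edge is removed
```

With these illustrative numbers the fused graph has three edges, and pruning removes only the edge between ’the’ and ’chased’, mirroring the behaviour described for Fig. 4.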

Error analysis

To further evaluate the limitations of the SIF model, we selected 10 error samples for error analysis. We noticed that, when constructing the static initial graphs, although SIF captures the feature information of the text through the structural information graph and the semantic information graph, the two graph structures produce some differing edges in addition to the feature interaction edges on which they agree. For instance, in the sentence “January is the Monday of months”, there is an edge between “is” and “Monday” in the structural information graph, but not in the semantic information graph, and such edges, which exist in only one graph structure, may be noise edges. In the graph fusion module, fusing different graph structures not only complements the information of the different graph structures, but also increases the number of noise edges in the fused graph. If these noise edges cannot be effectively removed in the dynamic learning module, the performance of the downstream task will be affected. Future work therefore needs to reduce the generation of noise edges in the graph construction module, so that fewer noise edges are superimposed in the graph fusion module and dynamic learning can yield a graph structure that is better suited to the downstream task and improves its accuracy.
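The notion of an edge that exists in only one graph structure can be made concrete with set operations. The sketch below is illustrative only: apart from the “is”/“Monday” edge named above, all edges are hypothetical, and flagging an edge as structural-only or semantic-only marks it as a candidate noise edge, not as confirmed noise.

```python
def undirected(pairs):
    """Turn word pairs into a set of order-independent edges."""
    return {frozenset(p) for p in pairs}


def compare_edges(structural_pairs, semantic_pairs):
    """Split edges into those shared by both graphs and those found in only one."""
    s = undirected(structural_pairs)
    m = undirected(semantic_pairs)
    return {
        "shared": s & m,
        "structural_only": s - m,  # candidate noise edges
        "semantic_only": m - s,    # candidate noise edges
    }


# Sentence: "January is the Monday of months".
# Only the ("is", "Monday") edge comes from the text; the rest are hypothetical.
structural_pairs = [("January", "is"), ("is", "Monday"), ("Monday", "months")]
semantic_pairs = [("January", "is"), ("January", "Monday"), ("months", "Monday")]

report = compare_edges(structural_pairs, semantic_pairs)
```

Counting the edges in `structural_only` and `semantic_only` gives a rough upper bound on how many noise edges fusion could add for a given sentence.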

Conclusion and future work

In this paper, we propose a dynamic graph structure framework (SIF) based on the complementarity of semantic and structural information. SIF dynamically optimizes the static initial graph structure to prevent the accumulation of noise that would degrade the performance of the model. It also builds two kinds of graph structures, one for the semantic and one for the structural information of the text, whose information complements each other and improves the comprehension of implicit sentiment. The case study shows that the mechanism of complementary graph structures for structural and semantic information can comprehensively consider the knowledge of the text, and that the dynamic optimization of the initial static graph structure also enhances the performance of SIF.

However, several issues still need to be considered: constructing a graph structure that better expresses textual information, given the difficulty of extracting such information; exploiting the advantages of different graph structures for more effective fusion; and updating the graph structure using unsupervised learning. In addition, combining graph neural networks with pre-trained models is also a direction worthy of further research.

Data availability

The data “pun of the day” and “Reddit” that support the findings of this study are available from [Yang, D., Lavie, A., Dyer, C. & Hovy, E. Humor recognition and humor anchor extraction. In Proceedings of the 2015 conference on empirical methods in natural language processing, 2367–2376 (2015)] and [Weller, O. & Seppi, K. Humor detection: A transformer gets the last laugh. arXiv preprint arXiv:1909.00252 (2019)], respectively. The data “Semeval 2021 task 7” is available at: https://competitions.codalab.org/competitions/27446.

References

Namitha, S. et al. Sentiment analysis: Current state and future research perspectives. In 2023 7th International Conference on Intelligent Computing and Control Systems (ICICCS). 1115–1119 (IEEE, 2023).

Messaoudi, C., Guessoum, Z. & Ben Romdhane, L. Opinion mining in online social media: A survey. Soc. Netw. Anal. Min. 12, 25 (2022).

Zhao, J., Liu, K. & Xu, L. Sentiment Analysis: Mining Opinions, Sentiments, and Emotions (2016).

Liao, J., Wang, S. & Li, D. Identification of fact-implied implicit sentiment based on multi-level semantic fused representation. Knowl.-Based Syst. 165, 197–207 (2019).

Xiao, L. et al. Multi-head self-attention based gated graph convolutional networks for aspect-based sentiment classification. Multimed. Tools Appl. 1–20 (2022).

Yuan, P. et al. Dual-level attention based on a heterogeneous graph convolution network for aspect-based sentiment classification. Wirel. Commun. Mobile Comput. 2021, 1–13 (2021).

Lin, Y. et al. BertGCN: Transductive text classification by combining GCN and BERT. arXiv preprint arXiv:2105.05727 (2021).

Wu, L. et al. Graph neural networks for natural language processing: A survey. arXiv preprint arXiv:2106.06090 (2021).

Jacobs, G. & Hoste, V. Fine-grained implicit sentiment in financial news: Uncovering hidden bulls and bears. Electronics 10, 2554 (2021).

Fei, H. et al. Reasoning implicit sentiment with chain-of-thought prompting. arXiv preprint arXiv:2305.11255 (2023).

Mao, R. & Li, X. Bridging towers of multi-task learning with a gating mechanism for aspect-based sentiment analysis and sequential metaphor identification. Proc. AAAI Conf. Artif. Intell. 35, 13534–13542 (2021).

Fei, H., Zhang, Y., Ren, Y. & Ji, D. Latent emotion memory for multi-label emotion classification. Proc. AAAI Conf. Artif. Intell. 34, 7692–7699 (2020).

Xiao, L. et al. Atlantis: Aesthetic-oriented multiple granularities fusion network for joint multimodal aspect-based sentiment analysis. Inf. Fusion 102304 (2024).

Mao, R., Liu, Q., He, K., Li, W. & Cambria, E. The biases of pre-trained language models: An empirical study on prompt-based sentiment analysis and emotion detection. In IEEE Transactions on Affective Computing (2022).

Van Hee, C., De Clercq, O. & Hoste, V. Exploring implicit sentiment evoked by fine-grained news events. In Workshop on Computational Approaches to Subjectivity and Sentiment Analysis (WASSA), held in conjunction with EACL 2021. 138–148 (Association for Computational Linguistics, 2021).

Huang, S. et al. Implicit sentiment analysis method based on ernie2.0-bilstm-attention. Small Microcomput. Syst. 42, 5 (2021).

Xiao, L. et al. Exploring fine-grained syntactic information for aspect-based sentiment classification with dual graph neural networks. Neurocomputing 471, 48–59 (2022).

Xu, J. et al. Graph convolution over the semantic-syntactic hybrid graph enhanced by affective knowledge for aspect-level sentiment classification. In 2022 International Joint Conference on Neural Networks (IJCNN). 1–8 (IEEE, 2022).

Chen, Z., Xue, Y., Xiao, L., Chen, J. & Zhang, H. Aspect-based sentiment analysis using graph convolutional networks and co-attention mechanism. In Neural Information Processing: 28th International Conference, ICONIP 2021, Sanur, Bali, Indonesia, December 8–12, 2021, Proceedings, Part VI 28. 441–448 (Springer, 2021).

Fei, H., Wu, S., Ren, Y., Li, F. & Ji, D. Better combine them together! integrating syntactic constituency and dependency representations for semantic role labeling. Find. Assoc. Comput. Linguist. ACL-IJCNLP 2021, 549–559 (2021).

Shi, W., Li, F., Li, J., Fei, H. & Ji, D. Effective token graph modeling using a novel labeling strategy for structured sentiment analysis. arXiv preprint arXiv:2203.10796 (2022).

Fei, H. et al. Inheriting the wisdom of predecessors: A multiplex cascade framework for unified aspect-based sentiment analysis. In IJCAI. 4121–4128 (2022).

Fei, H., Wu, S., Ren, Y. & Zhang, M. Matching structure for dual learning. In International Conference on Machine Learning. 6373–6391 (PMLR, 2022).

Fei, H. et al. Lasuie: Unifying information extraction with latent adaptive structure-aware generative language model. Adv. Neural Inf. Process. Syst. 35, 15460–15475 (2022).

Fei, H., Ren, Y., Zhang, Y. & Ji, D. Nonautoregressive encoder–decoder neural framework for end-to-end aspect-based sentiment triplet extraction. IEEE Trans. Neural Netw. Learn. Syst. 34, 5544–5556 (2021).

Fei, H. et al. On the robustness of aspect-based sentiment analysis: Rethinking model, data, and training. ACM Trans. Inf. Syst. 41, 1–32 (2022).

Zuo, E., Zhao, H., Chen, B. & Chen, Q. Context-specific heterogeneous graph convolutional network for implicit sentiment analysis. IEEE Access 8, 37967–37975 (2020).

Yang, S., Xing, L., Li, Y. & Chang, Z. Implicit sentiment analysis based on graph attention neural network. Eng. Rep. 4, e12452 (2022).

Zhao, Y., Mamat, M., Aysa, A. & Ubul, K. Knowledge-fusion-based iterative graph structure learning framework for implicit sentiment identification. Sensors 23, 6257 (2023).

Xu, K. et al. Graph2seq: Graph to sequence learning with attention-based neural networks. arXiv preprint arXiv:1804.00823 (2018).

Song, L., Zhang, Y., Wang, Z. & Gildea, D. A graph-to-sequence model for AMR-to-text generation. arXiv preprint arXiv:1805.02473 (2018).

Zhang, M. & Qian, T. Convolution over hierarchical syntactic and lexical graphs for aspect level sentiment analysis. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP). 3540–3549 (2020).

Zhang, Y. et al. Every document owns its structure: Inductive text classification via graph neural networks. arXiv preprint arXiv:2004.13826 (2020).

Figueiredo, F. et al. Word co-occurrence features for text classification. Inf. Syst. 36, 843–858 (2011).

Zhang, Z. et al. Relational graph neural network with hierarchical attention for knowledge graph completion. Proc. AAAI Conf. Artif. Intell. 34, 9612–9619 (2020).

Dagan, I., Lee, L. & Pereira, F. C. Similarity-based models of word cooccurrence probabilities. Mach. Learn. 34, 43–69 (1999).

Weller, O. & Seppi, K. Humor detection: A transformer gets the last laugh. arXiv preprint arXiv:1909.00252 (2019).

Meaney, J., Wilson, S., Chiruzzo, L., Lopez, A. & Magdy, W. Semeval 2021 task 7: Hahackathon, detecting and rating humor and offense. In Proceedings of the 15th International Workshop on Semantic Evaluation (SemEval-2021). 105–119 (2021).

Yang, D., Lavie, A., Dyer, C. & Hovy, E. Humor recognition and humor anchor extraction. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing. 2367–2376 (2015).

Chen, Y., Wu, L. & Zaki, M. Iterative deep graph learning for graph neural networks: Better and robust node embeddings. Adv. Neural Inf. Process. Syst. 33, 19314–19326 (2020).

Kim, Y. Convolutional neural networks for sentence classification. arXiv preprint arXiv:1408.5882 (2014).

Lai, S., Xu, L., Liu, K. & Zhao, J. Recurrent convolutional neural networks for text classification. In Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 29 (2015).

Johnson, R. & Zhang, T. Deep pyramid convolutional neural networks for text categorization. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics. Vol. 1. Long Papers. 562–570 (2017).

Yang, Z. et al. Hierarchical attention networks for document classification. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. 1480–1489 (2016).

Acknowledgements

This work was supported by the Xinjiang Uygur Autonomous Region Office Linkage Project under Grant (No. 2022B03035), National Natural Science Foundation of China under Grant (Nos. 61862061, 62266044, 61563052, 61363064), 2018th Scientific Research Initiate Program of Doctors of Xinjiang University under Grant (No. 24470), Shaanxi Provincial Natural Science Foundation (No. 2020GY-093), and Shangluo City Science and Technology Program Fund Project (No. SK2019-83).

Author information

Contributions

Y.Z., A.A., M.M. and K.U. conceived and designed the study. Y.Z., A.A. and M.M. collected and preprocessed the data. All authors contributed to the manuscript. K.U. reviewed and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhao, Y., Mamat, M., Aysa, A. et al. A dynamic graph structural framework for implicit sentiment identification based on complementary semantic and structural information. Sci Rep 14, 16563 (2024). https://doi.org/10.1038/s41598-024-62269-8

DOI: https://doi.org/10.1038/s41598-024-62269-8
