Abstract

Urolithiasis is a leading urological disorder where accurate preoperative identification of stone types is critical for effective treatment. Deep learning has shown promise in classifying urolithiasis from CT images, yet faces challenges with model size and computational efficiency in real clinical settings. To address these challenges, we developed a non-invasive prediction approach for determining urinary stone types based on CT images. Through the refinement and improvement of the self-distillation architecture, coupled with the incorporation of feature fusion and the Coordinate Attention Module (CAM), we facilitated a more effective and thorough knowledge transfer. This method circumvents the extra computational expenses and performance reduction linked with model compression and removes the reliance on external teacher models, markedly enhancing the efficacy of lightweight models. achieved a classification accuracy of 74.96% on a proprietary dataset, outperforming current techniques. Furthermore, our method demonstrated superior performance and generalizability on two public datasets. This not only validates the effectiveness of our approach in classifying urinary stones but also showcases its potential in other medical image processing tasks. These results further reinforce the feasibility of our model for actual clinical deployment, potentially assisting healthcare professionals in devising more precise treatment plans and reducing patient discomfort.

Similar content being viewed by others

Introduction

Urolithiasis, frequently denoted as urinary stone disease, constitutes a predominant urological disorder1. The incidence of the disease fluctuates across different regions and populations, yet it demonstrates a general upward trend2, impacting between 1% and 13% of the worldwide population3. Moreover, the recurrence rate of urolithiasis within five to ten years can be as elevated as 50%, with the recurrence rate climbing to 75% over twenty years, suggesting that patients may confront a prolonged risk of recurrence4. Hence, the precise diagnosis, effective treatment, and accurate prognosis of urolithiasis are of substantial clinical significance.

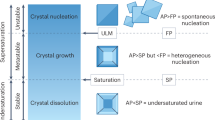

Urolithiasis encompasses a variety of types, each necessitating distinct treatment approaches. Stones are classified based on their chemical composition into calcium oxalate, calcium phosphate, magnesium ammonium phosphate, cystine, and uric acid stones. Among these, calcium oxalate stones are the most prevalent5. In clinical settings, the presence of mixed-type stones6, in addition to simple stones, significantly compounds the complexity of the classification task. Treatment for uric acid and cystine stones often involves the alkalinization of urine for drug-induced dissolution, thus circumventing the need for surgical intervention7. In contrast, calcium oxalate stones, known for their hardness, typically require percutaneous nephrolithotomy (PCNL) for effective treatment8. Conversely, magnesium ammonium phosphate stones, characterized as infectious, necessitate anti-infection therapy prior to extracorporeal shock wave lithotripsy (ESWL)9. An inappropriate choice of treatment can exacerbate patient discomfort and increase risk. Consequently, accurate pre-treatment identification of stone composition is crucial, offering significant guidance for the treatment process.

Currently, clinical practice lacks a reliable method for the preoperative determination of urinary stone composition. Traditional approaches like X-ray diffraction (XRD)10 and infrared spectroscopy (IR)11, while commonly employed for clinical analysis of stone composition, are time-intensive and necessitate in vitro examination. Modern medical imaging techniques, such as computed tomography (CT), offer clinicians non-invasive diagnostic options. However, discerning stone composition directly from CT image characteristics remains challenging. Attempts by El-Assmy and Coursey et al.12,13 to use CT numbers for prediction showed no significant differences in CT values among stones with varying compositions, indicating a difficulty in accurate prediction. Though current methods fall short for preoperative prediction, the diagnostic approach based on CT image offers a basis for in vivo preoperative diagnosis.

In recent times, deep learning has demonstrated significant efficacy in the medical domain, with its application extending to the diagnosis and treatment of urological disorders, such as urinary stones. Ui Seok Kim and colleagues14 developed a model for predicting stone composition using seven deep learning algorithms, analyzing images of urinary stones obtained via endoscopic surgery, and attained a maximum accuracy rate of 91%. Black et al.15 employed ResNet-101 for the automated classification and prediction of kidney stone images taken with digital cameras and clinical ureteroscopy (URS), achieving a cumulative weighted average recall rate of 85%. Estrade et al.16 utilized the ResNet-152-V2 network for the identification of endoscopic stones, differentiating between pure and mixed stone compositions, thereby offering a potential novel approach for the swift, non-destructive analysis of stone components. However, these investigations depend on ex vivo imaging, necessitating stone removal prior to analysis, thereby adding complexity to the procedure. Furthermore, although endoscopic surgery is less invasive, its intricacies may affect patients and it does not facilitate preoperative prediction of stone composition.

Furthermore, while the aforementioned methods have enhanced prediction accuracy, they frequently necessitate increased network depth or the addition of computationally expensive components. This escalation in computational demands poses challenges for practical implementation in clinical settings. However, simplifying the model often results in diminished performance. To address these constraints of existing techniques and better align with clinical application requirements, researchers have adopted knowledge distillation (KD), which involves transferring knowledge from a sophisticated teacher network to a more streamlined student network to boost the latter’s performance. Dian Qin et al.17 trained a lighter network by extracting insights from a well-established medical image segmentation network, enabling the streamlined network to enhance its segmentation abilities while preserving runtime efficiency. Nonetheless, KD depends on a “well-trained” pre-trained model or “teacher model.” In practical clinical scenarios for predicting urinary stone composition, such experienced “teachers” are often unavailable. Additionally, some deep learning networks might not fully exploit image features in stone image processing, which could impact the accuracy of stone CT image analysis.

In addressing the challenges and shortcomings of current methodologies, this paper undertakes the following research endeavors:

-

1.

Enhancement of the existing residual network model to more effectively extract CT imaging characteristics of urinary stones. This improvement aims to heighten the precision in predicting stone types and provide more accurate preoperative diagnostic guidance for physicians.

-

2.

Development of a self-distillation approach that operates independently of a high-powered teacher model. This is incorporated into the residual network and features novel enhancements to conventional self-distillation. These include the integration of weighted Kullback-Leibler (KL) divergence, cross-entropy, and Mean Squared Error (MSE) loss, and the application of distillation across all layer outputs of the residual network.

-

3.

Implementation of a feature fusion strategy. This involves merging the feature layers produced by two modules within the residual network, thereby yielding a more comprehensive and accurate feature representation. Such an approach enhances the model’s performance and robustness, while concurrently minimizing data dimensions and redundancy. This renders the model more efficient and adept at handling complex data tasks.

-

4.

Incorporation of the Coordinate Attention Module (CAM), which is integrated to augment the residual network’s capability in discerning critical regions in CT images of urinary system stones. By conducting spatial analysis and amalgamating context information, CAM enhances the model’s precision in identifying and determining stone types.

This research employs postoperative stone infrared spectroscopy findings as the benchmark to confirm model efficacy, enabling precise preoperative stone type forecasts to mitigate recurrence in stone patients. Moreover, evaluations conducted on two public datasets not only corroborated the model’s capacity for generalization and dependability but also highlighted its versatility in managing various medical imaging assignments. These results enhance the model’s utility in clinical settings, providing healthcare practitioners with enhanced support for making more accurate and tailored treatment decisions, potentially markedly bettering patient care outcomes and quality of life.

Methods

Knowledge distillation

Vanilla knowledge distillation

Vanilla Knowledge Distillation18 represents a foundational approach within the realm of model compression and performance enhancement. This technique’s central concept involves the transference of knowledge and learning outcomes from a sophisticated, high-capacity, large-scale model (often termed the “teacher model”) to a more streamlined, smaller-scale model (referred to as the “student model”) with the aim of boosting the latter’s performance and efficiency. This transfer of knowledge chiefly entails utilizing the probability distribution generated by the teacher model as a benchmark to facilitate the training of the student model. enabling the student to assimilate the teacher’s sophisticated understanding while preserving operational efficiency, thus leading to an enhancement in performance. Nonetheless, the success of this approach is contingent upon the availability of a potent teacher model, which might not be present in certain task-specific contexts. In situations where an ideal teacher model is absent, it becomes imperative to investigate alternative distillation approaches or to refine the teacher model to ensure effectiveness.

Self-distillation

Self-distillation distinguishes itself as a novel variant of knowledge distillation, notable for its independence from an external, large-scale teacher model. It leverages the model’s initial predictions as training targets to steer further learning, facilitating an enhancement in performance that can match or even exceed the baseline established at the outset of training.

Self-distillation can be applied through various methods, such as DKS19 and FRKSD20. In our research, we adopted the BYOT21 framework, wherein the most profound residual block serves as the teacher, guiding knowledge distillation towards the more superficial residual blocks that act as students. However, our study’s novelty diverges from conventional BYOT models in that every Resblock within our model not only fulfills its intrinsic training objectives but also contributes to guiding the training of antecedent Resblocks within the same training epoch.

In formulating the loss function, we thoroughly incorporated KL divergence, MSE loss, and cross-entropy loss. KL divergence facilitates the efficient and precise transfer of knowledge from the teacher network to each shallow classifier. Meanwhile, MSE fosters the convergence of output features from each block towards consistency during the training, ensuring alignment with the features of the deepest classifier. while introducing two hyperparameters to equilibrate the relative impact of these loss components. Furthermore, the incorporation of the temperature parameter T in the softmax function is pivotal, as it modulates the smoothness of the probability distribution for output classes. Adjusting the T value allows for the alteration of the output probability distribution’s profile: a higher T value leads to a more diffuse distribution, whereas a lower T value heightens the distinction among classes, thereby concentrating the probability distribution more on the class with the highest likelihood. The nuances of the temperature coefficient are exhaustively discussed in Sect. Influence of distillation temperature. Specifically, The loss function is defined as the accumulation of three individual losses:

-

1)

Cross-entropy loss (Loss1)

In this context, \(\:{q}^{i}\) denotes the output from the \(\:i\)th shallow classifier, and \(\:y\) symbolizes the target class label, \(\:\alpha\:\) denotes a hyperparameter that adjusts the contributions of different losses.

-

2)

Kullback-Leibler (KL) divergence loss (Loss2)

Here, \(\:{q}^{C}\) signifies the softmax layer’s output of the deepest classifier.

-

3)

Mean Squared Error (MSE) loss (Loss3)

Within this framework, \(\:{F}_{i}\) and \(\:{F}_{C}\:\)represent the features in the ith shallow classifier and the deepest classifier, respectively, while \(\:\lambda\:\) is a hyperparameter that adjusts the contributions of different losses.

Cumulatively, the neural network’s total loss function is articulated as:

Feature fusion

The base network architecture chosen for this research is ResNet-34, upon which enhancements have been made. As depicted in Fig. 1, the structure of ResNet-34 comprises four ResBlocks, each containing 3, 4, 6, and 3 residual units, labeled as F1, F2, F3, and F4, respectively. Additionally, it includes a global average pooling layer (GAP) and a fully connected layer (FC).

In this study, we implement a feature fusion strategy, depicted in Fig. 2, by amalgamating the final two feature layers of ResNet-34. This approach aims to amalgamate features from disparate network levels, thereby bolstering its capability to represent features. Generally, the more superficial layers provide a wealth of low-level detail, whereas the deeper layers are adept at encapsulating more abstract and semantically dense information. Specifically, we upscale the deepest feature map,\(\:\:{F}_{4}\), to align with the dimensionality of the shallower feature map, \(\:{F}_{3}\), a process denoted as \(\:U\left({F}_{4}\right)\) where \(\:U\) signifies the upsampling function. Following this, we merge the upsampled feature map with the shallower one via a concatenation operation, represented as \(\:C\), resulting in a combined feature map:

\(\:{F}_{fused}\) is capable of encompassing a broad spectrum of features, from granular details to overarching abstractions, enhancing the model’s ability to discern intricate patterns and nuanced concepts. Subsequently, we refine this integration using a convolution layer, \(\:{Conv}_{1\times\:1}\), to tailor the features for the final classification task, denoted as:

By integrating features across various levels, the network achieves a more detailed and enriched feature representation, thus improving its capacity to delineate complex patterns and abstract concepts. Furthermore, the layer fusion facilitates multi-scale feature representation, thereby elevating the model’s overall efficacy.

Coordinate attention module (CAM)

The CAM22 represents a sophisticated component in neural networks, designed to enable models to apprehend cross-channel information and bolster directional and positional cognizance. This attention mechanism not only augments the model’s capacity to pinpoint targets accurately but also enhances the precision of target identification. As depicted in Fig. 3, the CAM process meticulously analyzes the spatial aspects of the input feature map \(\:X\). It employs adaptive average pooling to separately gather contextual data along the vertical (\(\:x\)) and horizontal (\(\:y\)) axes, producing directional feature maps \(\:{X}_{h}\) and \(\:{X}_{w}\):

These informational elements are then integrated and modified through a sequence of an activation function, batch normalization (BN), and a \(\:1\times\:1\) convolution layer, culminating in:

where \(\:Y\) denotes the combined and modified feature map. \(\:Y\) is divided into \(\:{Y}_{x}\) and \(\:{Y}_{y}\) across the spatial dimensions, generating refined attention maps \(\:{A}_{x}\) and \(\:{A}_{y}\) via a \(\:1\times\:1\) convolution. The sigmoid function (\(\:\sigma\:\)) delicately adjusts the signal intensity to underscore the focal points of the model:

This phase not only fosters channel interaction within the feature map but also enables the creation of attention maps in two dimensions. By applying element-wise multiplication of these attention maps:

\(\:O\) emerges as the output feature map, attentively weighted to prioritize the most significant portions of the input features. Consequently, CAM significantly amplifies the model’s target localization accuracy and recognition precision. Furthermore, its adaptable and efficient architecture ensures outstanding performance even on devices with limited resources, markedly advancing feature representation capabilities through enhanced informational depiction.

Enhanced self-distillation ResNet-34 network

The enhanced self-distillation ResNet-34 network developed in this research, depicted in Fig. 4, incorporates four ResBlocks, a fully connected layer, a feature fusion module, the CAM, and self-distillation framework. Within this self-distillation framework, each ResBlock’s output serves as the “teacher” for the preceding ResBlock, designated as the “student.” The “teacher” persistently directs the “student” throughout the training to improve learning efficacy. The integration and balancing of three distinct loss functions culminate in a comprehensive loss function that governs the training.

To augment the model’s capacity for spatial information discernment, the CAM coordinate attention mechanism is ingeniously integrated following ResBlock1:

Following this, the feature fusion module amalgamates the feature maps produced by ResBlock3 and ResBlock4, thereby maximizing the exploitation of both deep and superficial features of the image.

The model’s final output is derived post-classification through a fully connected layer and the application of the Softmax function:

In this context, \(\:M\left(X\right)\) signifies the model’s ultimate classification outcome for the input \(\:X\). Through these structural advancements, coupled with self-distillation and feature fusion techniques, the refined ResNet-34 network model exhibits enhanced performance across a spectrum of image processing tasks.

Experiments

This research undertook a series of experimental investigations into the classification of urinary system stone CT images using deep learning, focusing particularly on an enhanced ResNet-34 model incorporating self-distillation technology. The primary aim was to augment the model’s precision in determining the composition of urinary stones, thereby furnishing physicians with more accurate preoperative diagnostic data. We harnessed feature fusion and CAM mechanisms to bolster the model’s capacity for processing intricate images and refined model training via self-distillation approaches. The experimental phase encompassed comparative studies, ablation experiments, and evaluations using public datasets, all designed to assess the model’s performance and its capacity for generalization. These tests also scrutinized the influence of feature fusion strategies and CAM on the model’s efficacy, as well as their contributions to enhancing model robustness and efficiency. This empirical validation confirmed the necessity and effectiveness of these enhancements. These explorations not only propelled advancements in medical image analysis technology but also underscored our method’s potential in clinical settings, opening new avenues for precise diagnosis and treatment of urinary stone.

Data collection

The data for this experiment were acquired from the Urology Department of Hebei University Affiliated Hospital. We compiled CT imaging data and corresponding infrared spectroscopy pathology reports of patients with urinary system stones from January 2018 to April 2021. This endeavor resulted in the collection of imaging data for 700 stone patients. To ensure data quality and accuracy, we applied stringent selection criteria, including: (1) Stones with a maximum diameter less than 2 mm; (2) CT images of stones that were unclear or contained artifacts; (3) Absence of an infrared spectroscopy pathology report corresponding to the CT image; (4) Mixed-type stones with a composition proportion less than 60%.

After a series of screening processes, we finally obtained CT images of 392 patients with urinary system stone components, totaling 5709 images. Further, we categorized these images into 4 categories based on the type of stones. The specific data classification is shown in Table 1.

Data preprocessing

The initial step in data preprocessing for this study entailed the conversion and anonymization of raw data sourced from the hospital. Specifically, we utilized RadiAnt DICOM Viewer software to process the original DICOM format CT images, converting them into BMP format to ease subsequent image processing and analysis. Additionally, to safeguard patient confidentiality, we anonymized all patient-related information and image details in the dataset.

Acknowledging that stones typically represent a minor portion of the total CT image, we engaged professional medical practitioners to meticulously crop the original CT data, focusing solely on the stone regions. LabelImg, a tool for precise annotation, was employed to accurately identify stone locations in each CT image within the dataset. Concurrently, stone types were labeled in accordance with corresponding medical reports, resulting in the creation of XML format annotation files. Leveraging these files, the cropped stone regions were resized to 32 × 32 pixels for training and validation purposes.

For the construction of training, validation, and test sets, the CT data was randomly segmented in a 3:1:1 ratio. To counter the issue of data imbalance present in the original dataset, we applied data augmentation techniques. These included horizontal and vertical flipping, as well as rotations (± 15°, ± 30°, ± 45°, ± 60°, ± 90°), thereby achieving a more balanced distribution across each class in the training set and somewhat alleviating the effects of data imbalance. The augmented dataset is detailed in Table 2.

Experiment setup

The experimental setup was established on the Ubuntu 18.04 operating system. Python 3.7 and Pytorch 1.10 were employed as the principal programming language and deep learning framework, respectively, operating within the NVIDIA CUDA 10.1 environment. For computational efficiency, two NVIDIA GeForce RTX 2080Ti GPUs were utilized for acceleration. The stone images processed in the experiment were in BMP format, uniformly sized at 64 × 64 pixels.

During the training phase of the classification network proposed in this research, AdamW was selected as the optimizer, with a batch size determined at 128. The initial learning rate was set at 0.01, accompanied by a weight decay coefficient of 0.0005. The total duration of training (epochs) was fixed at 200, with self-distillation starts from the 20th epoch. To execute self-distillation effectively, the distillation temperature was established at 4, and the PyTorch random seed was also set to 4, ensuring the replicability of the experiment. With these parameters, the enhanced stone classification network underwent thorough training.

Results

Evaluation metrics

In this research, a set of key metrics was employed to thoroughly assess the performance of the proposed model, encompassing Accuracy, Precision, Recall, Specificity, and F1 Score. These metrics collectively constitute a holistic evaluation framework for the performance of the classification model.

Accuracy: This metric is a primary indicator of a classification model’s overall efficacy, denoting the ratio of correctly predicted samples to the total sample count. Elevated accuracy signifies a more robust classification capability across all categories and is a widely utilized evaluation metric.

Precision: Precision represents the fraction of true positive samples within those deemed positive by the model. Higher precision indicates greater accuracy in predicting positive classes.

Recall: Recall measures the proportion of actual positive samples correctly identified as positive by the model. An increased recall rate implies the model’s enhanced ability to detect more true positive samples.

Specificity: Specificity quantifies the ratio of true negative samples accurately identified as negative by the model. Greater specificity indicates superior performance in excluding negative samples.

F1 Score: As the harmonic mean of Precision and Recall, the F1 Score is a crucial metric for gauging a model’s comprehensive performance, particularly in scenarios with class imbalances.

The formulae for these metrics are as follows:

Here, TP and TN denote the quantities of correctly classified positive and negative samples, respectively, while FP and FN indicate the counts of incorrectly classified samples. ‘P’ stands for Precision, and ‘R’ for Recall. Utilizing these evaluation metrics enables a comprehensive appraisal of the model’s performance across various dimensions, ensuring the objectivity and thoroughness of the evaluation outcomes.

Results from comparative experiments

To meticulously evaluate the efficacy of the algorithm presented in this study, a series of comparative experiments was conducted. These experiments entailed juxtaposing our method with a range of renowned classification networks, such as ResNet3423, ResNet5023, VGG1124, EfficientNetV125, EfficientNetV226, ShuffleNet27, ConvNext28, RegNet29, DenseNet12130, Vision Transformer31, and Swin Transformer32. Each of these networks underwent training, validation, and testing utilizing the dataset specifically compiled for this research.

Employing the evaluation metrics delineated in the preceding section, we conducted a comparative analysis, assessing the performance and the number of parameters of our proposed research algorithm against the other models. This extensive comparative scrutiny enabled a thorough understanding of our algorithm’s performance in the context of urinary stone classification tasks.

The Table 3 presented above reveals that the original ResNet-34 network attained an accuracy of 67.66%. With the implementation of the enhanced self-distillation and other methodologies proposed in this paper, our network model achieved a notable accuracy of 74.96% in classifying urinary stones, representing an increase of 7.3% compared to the original network. Moreover, the algorithm developed in this study also realized improvements in other critical performance indicators such as Precision, Recall, Specificity, and F1 score, registering respective enhancements of 6.29%, 13.47%, 4.67%, and 9.79% over the original ResNet-34.

To further explore the efficiency of our model, we compared the number of parameters across different models. Although our model has a slightly higher parameter count (21.7 M) compared to Resnet34 (21.3 M) by only 0.4 M, it significantly outperforms in all performance metrics. This demonstrates that through the self-distillation technique and other optimization methods proposed in this paper, we successfully enhanced the model’s performance with minimal additional parameter overhead. Moreover, compared to models with similar parameter counts such as Convnext (27.8 M) and Swin Transformer (27.5 M), and even much larger models like Vgg11 (128.8 M) and Vision Transformer (86.6 M), our model not only maintains a lower parameter count but also exhibits superior performance, proving our model design’s advantages in resource and computational efficiency.

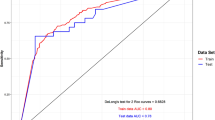

For a more vivid and comprehensive evaluation of performance, ROC (Receiver Operating Characteristic) curves for the classification network of the improved algorithm, as well as for other networks, were plotted. Additionally, the area under the ROC curve (AUC) for each class was calculated. The AUC value, a significant measure of a model’s classification capability, further corroborates the efficacy and superiority of the method introduced in this paper. The ROC curves derived from different networks and the AUC values for each type classification are illustrated in Fig. 5.

The experimental outcomes demonstrate that our model exhibits outstanding performance in class 1, 2, and 4, leading in AUC (Area Under Curve) metrics among all the models compared. It surpassed the second-highest performing model by margins of 0.9%, 1.9%, and 0.7%, respectively, in these categories. This disparity underscores the discriminative capacity of our model. Furthermore, in class 3, our model also showcased exemplary classification proficiency, achieving an AUC value of 94.6%. This result not only reflects the model’s high accuracy in sensitivity but also its exceptional ability in specificity discrimination. These findings robustly validate the effectiveness and dependability of our model in categorizing various types of urinary system stone diseases.

In addition to this, for a more holistic depiction of the performance of different networks in the classification task, we present the confusion matrices for these networks. The confusion matrices enable a visual representation of the accuracy and error rates for each network model across different categories, offering a crucial foundation for further model refinement and analytical evaluation.

The confusion matrices for the different networks are depicted in Fig. 6.

A thorough examination of the data from the confusion matrices reveals that our model exhibited a pronounced advantage in accurately identifying two primary categories: class 1 and class 3. The accuracy rates achieved for these categories were notably high, at 0.83 and 0.77, respectively, markedly outperforming the other models in the comparison group. In the classification of class 4 and class 2, our model closely approached the top-performing models, with a marginal difference of only 0.05. This demonstrates our model’s exceptional generalization capabilities and consistent performance across diverse categories. Regarding average accuracy, our model attained a score of 0.73, also surpassing the comparative group, indicative of its comprehensive effectiveness. These results further underscore the robust potential of our model in managing a wide array of stone types.

In conclusion, our model not only demonstrates high accuracy and robustness but also establishes an effective technical approach for the identification of various urinary system stone components. This achievement heralds promising prospects for future clinical implementations.

Influence of distillation temperature

The distillation temperature parameter T is instrumental in modulating the model’s training dynamics, as it affects the model’s output probability distribution and thereby alters its predictive behavior. A higher temperature setting induces a broader “openness” in the model’s predictions across various categories, meaning it assigns a certain probability to each category. Conversely, a lower temperature setting results in the model displaying greater “confidence” in its predictions, with more definitive outcomes.

In our experiment, the selection of the distillation temperature T was tailored to specific tasks and requirements. Generally, during training, a higher temperature is preferred to encourage exploratory learning, enabling the model to assimilate a broader range of information. In contrast, during the inference or testing phase, the temperature can be adjusted according to actual needs, aiming to strike a balance between the model’s conservatism and diversity in prediction.

To meticulously evaluate the impact of the distillation temperature T on the experimental outcomes, we conducted experiments at varying temperature levels. The results are detailed in Table 4.

The experimental data indicates that the model performs optimally when the distillation temperature T is set at 4, yielding an accuracy of 74.96%, precision of 71.72%, recall of 73%, specificity of 90.05%, and F1 score of 72.35%. Compared to other temperature settings, this configuration shows improvements in accuracy, precision, recall, specificity, and F1 score by 1.8%, 3.07%, 5.5%, 0.82%, and 4.73%, respectively. Hence, a distillation temperature of T = 4 is identified as the most effective setting in this study, highlighting the critical role and influence of the temperature parameter in the model’s training process.

Results of ablation experiments

To ascertain the efficacy of the four novel enhancements introduced in this paper for ResNet-34, a series of ablation experiments were undertaken. These enhancements encompass the adoption of a self-distillation strategy (A), refinement of the self-distillation strategy (B), application of a feature fusion strategy (C), and integration of CAM (D). To methodically evaluate the influence of these augmentations on the original network’s performance, we incrementally incorporated these strategies into ResNet-34 and conducted training and testing using the dataset from this study. The outcomes are presented in Table 5.

The results clearly indicate that the incorporation of each innovative strategy contributed positively to the model’s performance. Notably, when all enhancements (A + B + C + D) were integrated into ResNet-34, the model exhibited significant improvements in accuracy, precision, recall, specificity, and F1 score, attaining values of 74.96%, 71.72%, 73.00%, 90.05%, and 72.35%, respectively. These findings not only validate the effectiveness of each individual improvement but also underscore the cumulative impact of these enhancements in elevating the performance of the original network.

Comparison of different feature fusion methods

To evaluate the performance of various feature fusion methods within our architecture, we conducted comparative experiments on four methods, the results of which are presented in Table 6. These methods include:

-

1.

Concatenation: concatenation of feature layers.

-

2.

Addition: Element-wise addition of feature layers.

-

3.

Multiply: Element-wise multiplication of feature layers.

-

4.

Maximum: Element-wise maximum of feature layers.

The results indicate that the Concatenation method outperforms other methodologies across all evaluation metrics. This superiority is likely due to Concatenation’s ability to retain more comprehensive information, thereby enabling the network to learn more effectively from the fused features. In contrast, other methods such as Addition, Multiplication, and Maximum, while simplifying the information processing, may restrict the model’s ability to learn from complex feature patterns.

This comparative analysis confirms the particular importance of Concatenation due to its excellent performance, underscoring the significance of selecting appropriate feature fusion strategies in the design of deep learning models.

Results on public datasets

To substantiate the generalization capacity of our proposed model, we conducted tests on two public datasets: DermaMNIST33 (part of the MedMNIST series) and the ChestXRay2017 dataset34, focused on chest X-ray images.

DermaMNIST, a dermatoscopic image dataset, presents a 7-class classification challenge. It comprises 10,015 images in total, with 7,007 images in the training set, 1,003 in the validation set, and 2,005 in the test set. On the DermaMNIST dataset, our model outperformed other models, as indicated in Table 7. Specifically, it achieved an accuracy of 80.16%, surpassing the next best model, ResNet34, by 2.8% (77.36%). Our model led in precision with 71.63%, exceeding the second highest DenseNet121 by 9.56%. In recall, our model also topped the list with 60.90%, 10.13% higher than the next best, the Vision Transformer, at 51.40%. For specificity and F1 score, our model recorded 94.15% and 65.83%, respectively, the highest among all models compared.

ChestXRay2017, focused on binary classification of pneumonia, contains 5,857 images. We divided a part of the training set for validation, resulting in 4,187 images for training, 1,046 for validation, and 624 for testing. The results, as shown in Table 8, indicate superior performance of our model on this dataset as well. It achieved an accuracy of 92.63%, 1.6% higher than the second-ranked DenseNet121 at 91.03%. In precision and F1 score, our model led with 93.55% and 92.18%, respectively, surpassing the second-best DenseNet121 (91.25%) and EfficientNetV1 by 2.3 and 1.25% points, respectively, further confirming the model’s superiority in such tasks.

These experimental findings highlight the exceptional generalization ability of our proposed model in diverse tasks like skin lesion identification and pneumonia detection. The model demonstrated higher accuracy and reliability on two distinct public datasets compared to other contemporary models, particularly excelling in precision and recall. This emphasizes its potent capability in accurately identifying positive class samples. Such results reinforce the potential applicability of our model in clinical image analysis, paving the way for developing high-quality automated diagnostic tools.

Performance evaluation and discussion

Network performance evaluation

To validate the practical performance of our method in clinical applications, we randomly selected one sample each of various types of urinary system stones from clinical cases and applied the method proposed in this study to predict the types of these stone samples. The prediction results are shown in Table 9. The experimental results demonstrate that the method proposed in this paper successfully and accurately predicted the specific types of these four urinary system stones, with the model’s prediction confidence for each case illustrated in Fig. 7.

In these results, we noticed that the confidence levels for the third and fourth types of stones are relatively low. This phenomenon could be attributed to the smaller sample size of these two types of stones in the training dataset. Furthermore, the complex composition of mixed-type stones also increases the difficulty of prediction, thereby resulting in lower confidence levels for these two types of stones compared to the other two.

Through the validation of these experiments, the method proposed in this paper demonstrates good potential for clinical applications. It can accurately predict the type of stones before surgery, providing valuable reference information for clinical doctors in preoperative decision-making.

Discussion

As the application of deep learning technology expands in the realm of medical imaging processing, especially in the classification and prediction of urinary stone CT images, its potential has become increasingly evident. Nonetheless, high-performance models typically necessitate significant computational resources, posing a considerable challenge in resource-limited clinical environments. While traditional knowledge distillation offered a potential remedy, their dependence on powerful teacher models complicates their practical clinical application. Addressing this issue, this research introduces an enhanced multi-loss self-distillation approach, aimed at refining both the model’s efficiency and its effectiveness.

The principal contributions of this research encompass: initially, the enhanced multi-loss self-distillation method fosters model self-enhancement and performance improvement without necessitating an external teacher model, via a more proficient internal knowledge transfer mechanism. This method, through the incorporation of soft labels, not only augments the training process with richer information but also aids the model in discerning nuanced distinctions within stone classification tasks. Additionally, the implementation of the CAM markedly boosts the model’s capacity to identify critical regions within images, an essential aspect for precise stone classification. This is particularly beneficial in images where stones blend into the background, as the CAM can direct the model’s focus towards the most relevant areas, thus heightening classification accuracy. Furthermore, employing a feature fusion strategy amplifies the model’s ability to detect and amalgamate features across different layers, ensuring the effective identification of even the most subtle stone features within images. In comparison to existing technologies, our model exhibits superior performance in urinary stone identification tasks and significantly enhances the efficacy of lightweight networks, outperforming complex and cumbersome networks. The model’s lightweight design further aligns with its suitability for real-world clinical deployment. Ablation studies corroborate the effectiveness of each enhancement strategy and their collective impact on boosting model performance, substantially increasing the model’s accuracy, precision, recall, specificity, and F1 score, thus confirming the method’s effectiveness and reliability.

Moreover, testing on public datasets indicates that this method also possesses strong generalizability and robustness in additional medical image analysis tasks, such as skin lesion recognition and pneumonia detection. This suggests that, with suitable modifications, our approach could be applied to address other similar medical challenges, thereby augmenting the capabilities of lightweight models across various domains.

Despite these initial advances in enhancing model predictive capabilities, it is acknowledged that the optimization of model performance and parameter selection remains an area ripe for further exploration. For instance, the ideal selection of distillation temperature could be influenced by the task’s specific nature and dataset characteristics. Future investigations should delve into the dynamics between distillation temperature and other model hyperparameters to uncover more broadly applicable optimization techniques. Additionally, a limitation of this study is its reliance on data from a single medical center, future research will focus on expanding the sources of data, including information from a broader range of geographical areas and various hospitals, to further enrich and deepen the findings of this study. This includes investigating the relationship between the size and shape of calculi and the accuracy of predictions, as well as analyzing the potential connections between the physical characteristics of calculi and their predictability.

Conclusion

This study introduces a non-invasive method for preoperative prediction based on CT images of urinary stones, designed to forecast the type of urinary stones. Through compelling experimental outcomes, this method has shown marked enhancements for lightweight networks, even exceeding the performance of more complex and cumbersome networks. These results thoroughly affirm the efficacy of the proposed preoperative prediction system for urinary stones in categorizing stone types via preoperative CT images. This system not only aids physicians in devising suitable treatment strategies and subsequent follow-ups but also features non-invasiveness, potentially reducing patient discomfort. Additionally, owing to the network’s lightweight nature, the system facilitates straightforward implementation in real clinical environments, underscoring its substantial clinical utility. Lastly, additional evaluation of our model on two public datasets not only confirmed its generalizability and reliability but also showcased its extensive capability in managing various medical imaging tasks, thus strengthening the model’s suitability for clinical application.

Data availability

The urinary stone data that support the findings of this study are not publicly available due to patient privacy concerns.The dataset DermaMNIST are publicly available in the MedMNIST repository, https://github.com/MedMNIST/MedMNIST. The dataset ChestXRay2017 are publicly available at https://data.mendeley.com/datasets/rscbjbr9sj/3.

References

Qian, X. et al. Epidemiological Trends of Urolithiasis at the Global, Regional, and National Levels: A Population-Based Study. Int J Clin Pract. 6807203 (2022).

Borumandnia, N. et al. Longitudinal trend of urolithiasis incidence rates among world countries during past decades. BMC Urol. 23, 166 (2023).

De Coninck, V. et al. Advancements in stone classification: unveiling the beauty of urolithiasis. World J. Urol. 42, 46 (2024).

Shastri, S., Patel, J., Sambandam, K. K. & Lederer, E. D. Kidney stone pathophysiology, evaluation and management: Core Curriculum 2023. Am. J. Kidney Dis. 82, 617–634 (2023).

Siener, R. et al. Urinary stone composition in Germany: results from 45,783 stone analyses. World J. Urol. 40, 1813–1820 (2022).

Siener, R. et al. Mixed stones: urinary stone composition, frequency and distribution by gender and age. Urolithiasis. 52, 24 (2024).

Tzelves, L., Mourmouris, P. & Skolarikos, A. Outcomes of dissolution therapy and monitoring for stone disease: should we do better?[J]. Curr. Opin. Urol. 31(2) (2021).

Ullah, A. et al. Percutaneous nephrolithotomy: a minimal Invasive Surgical option for the treatment of Staghorn Renal Calculi[J]. Kmuj, 4(4): (2012).

Iqbal, M. W. et al. Contemporary Management of Struvite stones using combined endourological and medical treatment: predictors of unfavorable clinical Outcome[J]. J. Endourol. 150127063130004. (2013).

Sofińska-Chmiel, W. et al. Chemical studies of multicomponent kidney stones using the Modern Advanced Research methods. Molecules. 28, 6089 (2023).

Hermida, F. J. Analysis of human urinary stones and gallstones by Fourier Transform Infrared attenuated total reflectance Spectroscopy[J]. J. Appl. Spectrosc. 88 (1), 215–224 (2021).

El-Assmy, A. et al. Multidetector computed tomography: role in determination of urinary stones composition and disintegration with extracorporeal shock wave lithotripsy-an in vitro study[J/OL]. Urology. 77 (2), 286–290 (2011).

Coursey, C. A. et al. Dual-energy multidetector CT: how does it work, what can it tell us, and when can we use it in abdominopelvic imaging?[J]. Radiographics. 30 (4), 1037–1052 (2010).

Kim, U. S. et al. Prediction of the composition of urinary stones using deep learning[J]. Invest. Clin. Urol. 63 (4), 441 (2022).

Black, K. M., Law, H., Aldoukhi, A., Deng, J. & Ghani, K. R. Deep learning computer vision algorithm for detecting kidney stone composition. BJU Int. 125, 920–924 (2020).

Estrade, V. et al. Towards automatic recognition of pure and mixed stones using intra-operative endoscopic digital images. BJU Int. 129, 234–242 (2022).

Qin, D. et al. Efficient medical image Segmentation based on knowledge distillation. IEEE Trans. Med. Imaging. 40, 3820–3831 (2021).

Hinton, G., Vinyals, O. & Dean, J. Distilling the Knowledge in a Neural Network. Preprint at (2015). https://doi.org/10.48550/arXiv.1503.02531

Sun, D., Yao, A., Zhou, A. & Zhao, H. Deeply-supervised knowledge synergy. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 6997–7006 (2019).

Ji, M., Shin, S., Hwang, S., Park, G. & Moon, I. C. Refine myself by teaching myself: Feature refinement via self-knowledge distillation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Pp. 1066410673 (2021).

Zhang, L. et al. Be your own teacher: improve the performance of Convolutional Neural Networks via self distillation. arXiv( (2019).

Hou, Q., Zhou, D. & Feng, J. Coordinate Attention for Efficient Mobile Network Design, in 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA: IEEE, pp. 13708–13717 (2021).

He, K. et al. Deep residual learning for image recognition[C]//Proceedings of the IEEE conference on computer vision and pattern recognition: 770–778 (2016).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition[J]. arXiv preprint arXiv:1409.1556(2014).

Tan, M., Le, Q. & Efficientnet Rethinking model scaling for convolutional neural networks[C]//International conference on machine learning. PMLR: 6105–6114 (2019).

Tan, M. & Le, Q. Efficientnetv2: Smaller models and faster training[C]//International conference on machine learning. PMLR: 10096–10106 (2021).

Zhang, X. et al. Shufflenet: An extremely efficient convolutional neural network for mobile devices[C]//Proceedings of the IEEE conference on computer vision and pattern recognition:6848–6856 (2018).

Liu, Z. et al. A convnet for the 2020s[C]//Proceedings of the IEEE/CVF conference on computer vision and pattern recognition: 11976–11986 (2022).

Radosavovic, I. et al. Designing network design spaces[C]//Proceedings of the IEEE/CVF conference on computer vision and pattern recognition:10428–10436 (2020).

Huang, G. et al. Densely connected convolutional networks[C]//Proceedings of the IEEE conference on computer vision and pattern recognition.4700–4708 (2017).

Dosovitskiy, A. et al. An image is worth 16x16 words: transformers for image recognition at scale[J]. arXiv preprint arXiv: 2010.11929 (2020).

Liu, Z. et al. Swin transformer: Hierarchical vision transformer using shifted windows[C]//Proceedings of the IEEE/CVF international conference on computer vision. 10012–10022 (2021).

Jiancheng Yang, R. et al. MedMNIST v2-A large-scale lightweight benchmark for 2D and 3D biomedical image classification. Sci. Data (2023).

Kermany, D., Zhang, K. & Goldbaum, M. Labeled optical coherence tomography (OCT) and chest X-Ray images for classification. Mendeley Data, V2 (2018).

Acknowledgements

This work was supported by the General Project of Natural Science Foundation of Hebei Province of China (H2019201378), the Natural Science Foundation of Hebei Province (F2023201069), the Multi-disciplinary Interdisciplinary Research Fund of Hebei University (DXK201914), the Medical Science Foundation of Hebei University(2021 × 07).

Author information

Authors and Affiliations

Contributions

K.L.: Conceptualization, Formal analysis, Funding acquisition, Resources, Supervision, Writing – review & editing.X.Z.: Investigation,Methodology, Validation, Visualization, Writing original draft, Writing review & editing. H.Y.: Conceptualization,Investigation, Data curation, Methodology, Writing original draft, J.S.: Visualization, Writing review & editing. T.X.: Investigation,Writing review & editing. M.L.:Investigation,Writing review & editing. C.L.:Conceptualization,Data CollectionS.L.: Project administration, Supervision.Y.W.: Investigation,supervision, Writing review & editing. Z.C.: Conceptualization,Funding acquisition, Data CollectionK.Y.: Funding acquisition,Formal analysis.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Liu, K., Zhang, X., Yu, H. et al. Efficient urinary stone type prediction: a novel approach based on self-distillation. Sci Rep 14, 23718 (2024). https://doi.org/10.1038/s41598-024-73923-6

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-024-73923-6

Keywords

This article is cited by

-

Recognizing uric acid type of urinary stones by deep learning

Urolithiasis (2025)