Abstract

The identification of malaria infection from microscope images of blood smears is considered the ‘gold standard’. Diagnosis requires expert microscopists, who are scarce in the remote areas where malaria is endemic. It is therefore desirable to automate the repetitive task of detecting pathogens in blood samples received as microscope images. This study provides an easy-to-use and easy-to-deploy method for implementing malaria pathogen detection software, the Intelligent Suite. The Intelligent Suite features a graphical user interface (GUI), implemented using the ‘cvui’ library, that interacts with OpenVINO’s inference engine for model optimisation and deployment across several inference devices. The Intelligent Suite uses a custom YOLO-mp-3l model trained on the Darknet framework to detect malaria pathogens in thick smear microscope images. Moreover, the Intelligent Suite provides a user interface for selecting the inference device or mode, altering model parameters, and generating detection reports along with model performance metrics. The Intelligent Suite was executed on a CPU computer with model inference running on a plug-and-play Neural Compute Stick (NCS2), and its performance is reported.

Introduction

An ‘Intelligent Suite’ is developed as a software tool for automating malaria pathogen detection in microscope images of thick blood smears. The Intelligent Suite is composed of a graphical user interface (GUI), an inference engine, and a trained malaria detection model. The term “Intelligent Suite” in this study refers to a software tool that integrates the AI-based YOLO-mp-3l model1 for real-time malaria pathogen detection in microscopy images. The suite runs the YOLO-mp-3l model, optimized for high accuracy and performance, on the OpenVINO inference engine, enabling efficient processing across various hardware. It processes input images by splitting them into tiles, which are then analysed by the model to detect malaria pathogens almost instantaneously. The suite employs decision thresholds to keep high-confidence detections and reduce false positives. Its GUI, built with the ‘cvui’ library, allows users to capture images, adjust decision thresholds, and view results interactively. Additionally, the suite automatically generates detailed detection reports, summarizing pathogen locations and confidence scores to aid healthcare professionals in malaria diagnosis and treatment.

The backbone of the Intelligent Suite is the OpenVINO inference engine (https://docs.openvino.ai/latest/openvino_docs_OV_UG_OV_Runtime_User_Guide.html), which handles detection, model optimisation and deployment on the inference devices. The C++ demo script for object detection provided in the OpenVINO toolkit (https://docs.openvino.ai/latest/home.html) is adopted for the current application, with a GUI added as the main point of interaction with the Intelligent Suite. In addition, a tiling and merging function is implemented that automatically breaks high-resolution input images into tiles to reduce memory requirements during inference. The Intelligent Suite GUI is implemented using the cvui header library (https://dovyski.github.io/cvui/ and https://github.com/Dovyski/cvui/releases/tag/v2.7.0), which uses primitive OpenCV (https://opencv.org/) drawing functions to create simple user interfaces. The GUI lets the user select different modes of operation and settings for the model parameters. The evaluation mode ‘Eval’ can be selected to record, for each session, the detection results, the hardware and model settings applied during inference, and the model performance (execution time). Images can be processed in batch or individually using the ‘batch’ or ‘single’ mode, respectively. The GUI offers a choice between the inference modes ‘AUTO’ and ‘HETERO’ or a specific inference device (CPU, GPU, or MYRIAD). Model detection parameters such as the ‘confidence’ and ‘Overlap’ thresholds can be changed with the sliders provided. The Intelligent Suite can access a webcam for capturing images and can also perform inference on images available in the input folder. Input images and detection images are both displayed on the GUI and saved in the backup and result directories, respectively, for future reference. The GUI also features a vertical colour bar as a quick visual indicator that turns red for each positive outcome and green for each negative outcome.
The Intelligent Suite is therefore a complete tool which can be used for malaria pathogen detection and for model performance evaluation (generate several reports on selected inference settings and hardware). The existing detection model in the Intelligent Suite can be replaced by an updated model or with models trained for other applications.

This report discusses the methods used to develop the Intelligent Suite and compares the performance of different inference modes and APIs on a basic CPU computer and on Intel’s Neural Compute Stick 2 (NCS2; https://www.intel.com/content/www/us/en/developer/articles/tool/neural-compute-stick.html) using OpenVINO’s benchmark tools. The program execution speeds of OpenVINO’s C++ API and Python API are assessed. Similarly, the performance of the asynchronous and synchronous detection modes is benchmarked and presented for the model deployed on a plug-and-play NCS2 and on a compact industrial-grade computer (POC-515) from www.neousys-tech.com. The ‘Intelligent Suite’ software, compiled for Windows 10 OS, along with the detection model and corresponding user manual, can be downloaded at https://drive.google.com/file/d/1TZIr6BpGJOc1lhgnC8-wyl5awOrpsM-3/view?usp=sharing. Additionally, the source code of the Intelligent Suite can be downloaded at https://drive.google.com/file/d/1dagqSTVPmMh6K336k3wRPFDd_pvWgK_u/view?usp=sharing.

Background and motivation

A laptop carried by the health expert team serves several purposes in the field, especially in rural endemic areas. It can be used to log campaign data and records, save image data, run inference using plug-and-play inference devices such as the NCS2, save the results, communicate with the digital microscope to acquire data, and upload data to the cloud when an internet connection is available. Because the NCS2 consumes little power (1 Watt), it can run on the laptop’s internal battery for an extended time. Moreover, multiple NCS2s can be run on a Universal Serial Bus (USB) hub to share computation if required. This provides a low-cost alternative to high-end computers with GPUs in practical scenarios. In contrast, other edge devices that have GPUs, such as the NVIDIA Jetson series, require additional hardware resources such as monitors and power banks. It may not be practical to distribute a large number of expensive high-end laptops with GPUs for field use.

Intel’s OpenVINO toolkit (https://docs.openvino.ai/latest/home.html) provides model optimisation for supported Intel GPUs, CPUs, VPUs (NCS2) and edge devices, and offers integrated functionality for quick development and deployment. OpenVINO supports inference on Intel’s NCS2, a low-cost USB plug-and-play device that includes the Movidius Myriad X Vision Processing Unit (VPU). OpenVINO is designed for minimal external dependencies and comes with package managers to compile models (including custom models). The OpenVINO runtime provides C, C++ and Python APIs and supports Linux, Windows and macOS, which improves application portability and deployment flexibility. OpenVINO can provide inference locally using the OpenVINO runtime or remotely using a Model Server running on a separate server. Remote inference makes it possible to deploy lightweight clients that perform API calls, only when required, to the edge or cloud server hosting the model. However, depending on the application context, cloud hosting may not be feasible in rural endemic regions that have limited or no internet connectivity.

The YOLO-mp-3l model1 for malaria pathogen detection in thick blood smear microscope images is already highly accurate and is designed with a small architecture (93.99 mAP, 25.4 Mb, 24.477 BFLOPs) to favour running on edge computing devices. The YOLO-mp-3l model trained in Darknet format (‘.cfg’, ‘.weights’) was converted to TensorFlow format (‘.pb’) before creating the OpenVINO Intermediate Representation (IR) format (‘.xml’, ‘.bin’) used in the Intelligent Suite software. The Intelligent Suite uses the C++ implementation of the OpenVINO API, the OpenCV libraries and the cvui header. The Intelligent Suite has been kept simple and can be used as standalone malaria pathogen detection software or for research (model evaluation and reporting). We believe the methods used in the development of this tool can contribute to the educational and research community in related fields of application.

Related works

This section covers applications developed for the identification of malaria parasites on blood smear images. The goal is to identify practical tools or user interfaces that integrate detection models for identifying malaria parasites in microscopy images. Therefore, publications centred solely on the development of detection methods fall beyond the scope of this review.

An earlier study2 published an Android-based mobile phone app called “Malaria Screener” that captures and processes (in-phone) blood smear images obtained by viewing through a microscope lens. The app identifies malaria parasites of type ‘P. falciparum’ in both thick and thin blood smear images, maintains a result database, and uploads it to a web server. No recommendation on the specification of the phone or camera model is provided. Another study3 presented a deep-learning CNN-based malaria pathogen image classification model designed to run efficiently on a smartphone. The performance (software compatibility and processing speed) of the smartphone app was tested for different versions of the Android operating system through local processing on different mobile phone models. The model was deployed using TensorFlow Lite (https://www.tensorflow.org/). Alternatively, a web-based application was developed using Flask (https://flask.palletsprojects.com/en/2.3.x/), which allowed images to be uploaded to a server for processing on the web. However, both applications required the input to be cropped individual cell images (32x32 pixels each) from thin blood smear images, which were classified as infected or not infected. A further study4 published a software tool to classify the stages of the ‘P. falciparum’ malaria parasite in blood smears from different imaging methods: Light Microscopy (LM), Fluorescence Microscopy (FM), and Atomic Force Microscopy (AFM). The GUI tool was based on Tkinter (https://docs.python.org/3/library/tkinter.html) and features multiple tabs that step through viewing the input image, detecting cells, and finally classifying cells as healthy or as one of the three development stages of the parasite (ring, trophozoite, and schizont). The detection utilized traditional image segmentation and thresholding techniques from the OpenCV (https://opencv.org/) and Sklearn (https://scikit-learn.org/stable/) libraries, while the classification utilized a convolutional neural network (CNN) from the TensorFlow library.
Some user settings were available in the GUI to optimise the detection and classification process. The malaria stage classifier’s software and documentation can be found at https://github.com/KatharinaPreissinger/Malaria_stage_classifier and https://malaria-stage-classifier.readthedocs.io/en/latest/index.html, respectively. Many websites and GitHub pages feature tools or algorithms for malaria detection, but only a few of them were designed with practical implementation in mind, and others have no accompanying publication. Malaria hero, hosted at https://malariahero.org/ with the source code available at https://github.com/caticoa3/malaria_hero, is one such software application without a publication. The malaria hero webpage interface uses packages such as Dash and Flask, while inference is done using a CNN on the Amazon Web Services (AWS) cloud platform. To use the tool, an individual cell image is uploaded on the web page and the prediction is displayed as a classification outcome (infected or not infected cell).

The related works covered in this report mostly take cropped cell images of thin blood smears as input and use a trained classifier to predict whether the cell has a malaria infection. In this report we present the “Intelligent Suite”, a software tool that is easy to use (it does not require installation and can be launched by simply copying the files to the local computer or to any external drive where the user has file read/write permissions) and benefits from the power of the OpenVINO inference engine. OpenVINO is used for model optimisation, software deployment and inference on different devices, and the GUI is drawn with the cvui library, which is just a header file based on the OpenCV library; beyond these, the Intelligent Suite does not require extra dependencies or libraries for its operation. The Intelligent Suite uses a custom CNN model (YOLO-mp-3l) based on the YOLO framework to detect malaria pathogens (currently ‘P. falciparum’) in thick blood smear images and produces the results along with model performance reports for the device used during inference. Moreover, the image tiling and merging technique used in the Intelligent Suite allows the same model to predict parasites accurately and efficiently even on higher-resolution input images.

This study focuses on integrating the custom deep learning model YOLO-mp-3l into the OpenVINO framework and using the ‘cvui’ header file for the graphical user interface to develop a comprehensive tool for malaria pathogen detection, the ‘Intelligent Suite’. The open-access publication in ref. 1 reports on the pathogen detection performance of the YOLO-mp-3l model compared to standard YOLO models and custom models from other publications across different datasets. This study therefore does not benchmark the model’s detection performance. Instead, we assess the performance (speed and memory usage) of the current solution, specifically the OpenVINO integration of the YOLO-mp-3l model, on a CPU device and a neural compute stick. Additionally, the execution speeds of the OpenVINO inference engine implementations (Python versus C++ scripts) and inference modes (synchronous versus asynchronous) are compared.

Components of the Intelligent Suite

The Intelligent Suite consists mainly of a GUI built on the cvui library, the OpenVINO inference engine (which includes the OpenCV library), and the malaria pathogen detection model (YOLO-mp-3l) in OpenVINO format (.xml and .bin). Each component of the Intelligent Suite and its functionality is described below.

Graphical user interface

The GUI for Intelligent Suite is designed using the cvui header file hosted at https://dovyski.github.io/cvui/. Cvui is a simple user interface library built on top of OpenCV and uses only OpenCV drawing primitives to do all the rendering (no OpenGL or Qt required) which reduces the dependencies for the Intelligent Suite build. Moreover, the OpenVINO runtime comes with OpenCV packaged and we base our GUI on the same OpenCV by including cvui header file. Figure 1 shows the user interface of the Intelligent Suite for malaria pathogen detection.

Components of GUI

Interface controls

Figure 2 displays the control components of the Intelligent Suite user interface. The ‘Scale’ counter allows the user to re-scale the GUI window. The ‘Quit’ button is the recommended way to exit the Intelligent Suite GUI. The user is prompted to press the ‘stop processing’ button if the ‘Quit’ button is pressed while the current batch of input images is being processed. Alternatively, pressing the ‘Q’, ‘q’ or ‘esc’ key on the keyboard quits the program abruptly.

Inference settings

Figure 3 displays the component of the Intelligent Suite GUI for dynamic selection of the available devices or inference modes and of the inference parameters for the detection model. The ‘Devices’ window lists the modes for using a single device or a combination of the devices on the system that are capable of inference (default: CPU). When a neural compute stick is plugged in, it is displayed in the devices list as ‘MYRIAD’. In ‘Auto’ mode, OpenVINO automatically selects from the available devices for inference. The ‘Hetero’ mode tells OpenVINO to run inference on a list of available devices in the order defined in the program code; the current order in the Intelligent Suite is GPU, HDDL, MYRIAD and finally CPU as a fallback device. The ‘Settings’ window allows the user to set the detection parameters for the model (the intersection over union (IoU) threshold for non-maximum suppression (NMS) and the prediction confidence threshold for filtering final detections) using the provided sliders. The values can be set dynamically, and the ‘reset’ button restores the default values (0.5 for both thresholds).
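The fallback order used in ‘Hetero’ mode can be illustrated with a short Python sketch. This is not the Suite’s C++ code: the helper name `build_hetero_device` is hypothetical, and the list of available devices is passed in as an argument rather than queried from the runtime. The `HETERO:` prefix followed by a comma-separated priority list is the device-string form that OpenVINO accepts for heterogeneous execution.

```python
# Priority order stated in the text: GPU, HDDL, MYRIAD, then CPU as fallback.
HETERO_PRIORITY = ["GPU", "HDDL", "MYRIAD", "CPU"]


def build_hetero_device(available_devices):
    """Return a HETERO device string limited to devices present on the system.

    `available_devices` is a list of device names such as ["CPU", "MYRIAD"];
    in a real application it would be reported by the inference runtime.
    """
    present = [d for d in HETERO_PRIORITY if d in available_devices]
    if not present:
        raise ValueError("no supported inference device found")
    return "HETERO:" + ",".join(present)
```

For a laptop with an NCS2 plugged in, `build_hetero_device(["CPU", "MYRIAD"])` returns `"HETERO:MYRIAD,CPU"`, so the stick is tried first and the CPU handles whatever it cannot.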

Input sources

Figure 4 displays the Input sources components (webcam and image folder) and their controls provided in the Intelligent Suite GUI of Fig. 1.

The ‘capture only’ button captures the current image frame from the webcam stream and stores it in the ‘input_images’ folder. The ‘capture and detect’ button captures a frame from the webcam and immediately runs detection on the captured image. The user can dynamically switch the webcam ID (default: 0) to select a specific camera in the system. The ‘stream’ checkbox, if enabled, displays the webcam’s current stream/frame in the input display window. The ‘Mirror’ option allows the user to flip the webcam view (left-right) depending on whether the camera faces towards or away from the user.

The ‘detect on folder’ button processes all the images that are already in the ‘input_images’ folder, including images saved using ‘capture only’ and images from other sources (e.g., a digital microscope) copied to the folder. The list of images in the ‘input_images’ folder is generated and passed as input to the detection model. If the ‘batch’ mode is selected, the model is loaded and the full list of input images is processed without user intervention. If the ‘single’ mode is selected, the program waits for a user event (a right-click of the mouse) before processing the next image in the list. The ‘single’ mode is useful for viewing the detection result on an individual input image and for changing the model parameters if required. Either the ‘single’ or the ‘batch’ mode can be activated dynamically at any stage of processing. The ‘eval’ checkbox activates the evaluation mode, in which the detection results are stored in a separate file for each processing session (one pass of the input image list). A second file records the selected inference device, the model settings (IoU and confidence thresholds), the total number of inputs processed, the total numbers of positive and negative outcomes, and the FPS (images processed per second), which helps with model benchmarking and performance analysis. The ‘Stop processing’ button allows the user to interrupt batch processing in the middle of the operation. The user is prompted to press ‘Stop processing’ (to avoid errors or file corruption) if the ‘Quit’ button is pressed while the detection process is running. The ‘store input image’ checkbox, which is enabled by default, moves each input image (once it has been processed) from the ‘input_images’ folder to the ‘input_images_backup’ folder for storage. This clears the ‘input_images’ folder for a new batch of images.
Disabling the ‘store input image’ checkbox can be useful for repeatedly testing different model parameters on the same set of images, because the images are then not moved out of the ‘input_images’ folder.
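The folder handling described above can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the Suite’s implementation: the function names, the set of accepted file extensions, and the `detect` callable (which stands in for the detection model and returns a parasite count) are all hypothetical. Only the folder names ‘input_images’ and ‘input_images_backup’ and the move-after-processing behaviour come from the text.

```python
import shutil
from pathlib import Path

# Assumed set of accepted image formats (not specified in the text).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def list_input_images(input_dir):
    """Return the image files in the input folder, sorted by name."""
    return sorted(
        p for p in Path(input_dir).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


def process_folder(input_dir, backup_dir, detect, store_input_image=True):
    """Run `detect` on every image; optionally move processed inputs to backup.

    `detect` is any callable that takes a file path and returns a count.
    Returns a dict mapping image file name to the value returned by `detect`.
    """
    backup = Path(backup_dir)
    backup.mkdir(parents=True, exist_ok=True)
    results = {}
    for image_path in list_input_images(input_dir):
        results[image_path.name] = detect(image_path)
        if store_input_image:
            # Moving the file empties the input folder for the next batch.
            shutil.move(str(image_path), str(backup / image_path.name))
    return results
```

Calling `process_folder("input_images", "input_images_backup", detect, store_input_image=False)` leaves the inputs in place, which mirrors the repeated-testing use of the disabled checkbox.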

Display windows

Figure 5 shows the window components dedicated to displaying the current input and output images. The ‘Detections’ window displays the currently processed image with the detected bounding boxes and confidence scores. A mouse click on this window opens the currently displayed detection image in a separate window at its original size for better viewing. The ‘Input image’ window displays the current input image, which is either the image from the input folder that is being processed or the current frame from the webcam stream.

Information windows

Figure 6 shows the windows that provide useful information to the user, such as the current input and its number of detections, the total number of inputs processed with the overall detection count, and the status of the application. The ‘Current job’ window displays the image name and parasite count for the most recently processed image. The ‘Total job’ window displays the total number of images processed and the total parasite count since the Intelligent Suite was started. The ‘Info’ window displays the status of the Intelligent Suite processes as logs.

Outcome bar

Figure 7 shows a vertical coloured bar, the outcome bar. The outcome bar sits beside the ‘Detection’ window as a quick visual indication of the detection outcome. For each image processed by the software, the colour of the bar changes to red for a positive outcome (i.e., pathogen detected) and to green for a negative outcome (i.e., no detection).

Inference engine

OpenVINO’s inference engine (https://docs.openvino.ai/latest/openvino_docs_OV_UG_OV_Runtime_User_Guide.html) takes the Intermediate Representation (IR) file format for model deployment, which contains the trained model’s network topology in a ‘.xml’ file and the model weights and biases in a ‘.bin’ file. Conversion to IR format is supported only for some popular deep learning frameworks, such as Caffe, TensorFlow, MXNet, Kaldi, and ONNX, using the model optimiser tool available at https://docs.openvino.ai/latest/openvino_docs_MO_DG_Deep_Learning_Model_Optimizer_DevGuide.html. The model optimiser generates IR files targeted at the inference device using techniques such as quantization, freezing, and fusion. The Intelligent Suite is based on the C++ demo script of OpenVINO, which performs asynchronous detection on input images using trained YOLO models.

Detection model

The Intelligent Suite uses a deep learning model based on the YOLO framework5 for the detection of malaria pathogens in images. The detection model (YOLO-mp-3l, described in ref. 1) is trained on the public dataset ‘plasmodium-phonecamera.zip’ from https://air.ug/datasets/, which contains 1182 colour images (750x750 pixels) of thick blood smears treated with Field stain, captured using a smartphone camera attached to the microscope’s eyepiece. Of these, 948 images are positive for the P. falciparum parasite and 234 are negative images with artifacts due to impurities. Each image in the dataset has an annotation file with the coordinates of bounding boxes drawn around any visible P. falciparum parasite. YOLO-mp-3l is a custom-designed architecture based on YOLOv4 (ref. 5), optimized for speed, accuracy and memory for the malaria pathogen detection task. YOLO-mp-3l, which was trained at a model input resolution of 608x608 pixels, achieved a mean average precision (mAP) score of 93.99 (@IoU=0.5), outperforming the standard YOLOv4 model for the malaria pathogen detection task, as reported in ref. 1.

The YOLO-mp-3l model was selected for use in the Intelligent Suite due to its suitability for low-resource devices1. This model, based on the standard YOLOv4-tiny-3l architecture, incorporates several key modifications to make it more suitable for devices with limited computational resources. According to ref. 1, the YOLO-mp-3l model integrates three prediction layers, enabling it to detect objects at multiple scales. This customization allows the model to accurately identify malaria pathogens of varying sizes within microscope images, improving detection performance for the small objects typical of such images. The YOLO-mp-3l model retains a lightweight design, ensuring efficient operation on low-power devices, which is crucial for deployment in resource-limited settings where high-performance computing infrastructure is scarce. Its lightweight nature allows it to maintain real-time detection speeds without compromising accuracy. The original YOLO-mp-3l model trained in Darknet format was exported to OpenVINO format for use with the Intelligent Suite. The OpenVINO inference engine optimizes the model for better inference speed and reduced memory consumption, facilitating real-time malaria pathogen detection even on devices with limited hardware resources.

Method

The Intelligent Suite process flow

The Intelligent Suite reads images from a specific image folder. The block diagram of the malaria pathogen detection Intelligent Suite is shown in Fig. 2. The images can come from a digital microscope or from other sources such as a webcam and are stored in the input folder (Fig. 8). The webcam capture option is available in the Intelligent Suite, where the user can select or switch the ID when multiple webcams are present. The Intelligent Suite automatically reads the model input resolution and breaks the input image into smaller sub-images (tiles) if the image size is more than 1.5 times the model input size. Deployment of the pathogen detection model on the inference devices and prediction on the list of input images are done asynchronously using the OpenVINO runtime. The predictions on the input image tiles are further processed to merge overlapping detections and produce the final output. The Intelligent Suite not only stores the prediction images with the detection bounding boxes but also generates report files with extra information about the model parameters, inference device, execution speed, and number of detections.

The C++ demo script for object detection provided with OpenVINO toolkit (version 2021.4) was adopted for current application with a user interface added as the main point for interacting with the Intelligent Suite software. When the Intelligent Suite is launched all necessary input/output folders are created and a GUI window as displayed in Fig. 1 is presented for user interaction with the software. Different functionalities available in the GUI and its process flow diagram are presented in Fig. 9. Most of the features of the GUI menu were covered in the previous section on components of GUI. The perform detection block (Fig. 9) calls the OpenVINO inference engine API which loads the IR model (.xml, .bin) to the inference device using one or the combination (’Hetero’ mode) of several device specific plugins (MKLDNN, cLDNN, Myriad and FPGA).

Integration of detection model

Figure 10 displays the block diagram showing the integration of the YOLO-mp-3l malaria pathogen detection model in the Intelligent Suite with OpenVINO’s model optimiser and inference engine. The detection model YOLO-mp-3l used by the Intelligent Suite was trained on the Darknet framework (https://github.com/AlexeyAB/darknet) in previous work by the authors, and the complete description can be found in the publication1. Because the model optimiser does not accept the Darknet format directly, the original YOLO-mp-3l model trained on the Darknet framework was converted to TensorFlow format and finally to OpenVINO IR format. The model input resolution was changed from 608x608 pixels to 416x416 pixels for increased inference speed. The GitHub repository ‘TNTWEN’ at https://github.com/TNTWEN/OpenVINO-YOLOV4/tree/master allows Darknet’s ‘.weights’ model file format to be converted to TensorFlow’s frozen ‘.pb’ model format, but is limited to standard YOLO network architectures. We modified the scripts in TNTWEN’s GitHub repository to incorporate the custom design of the YOLO-mp-3l model architecture and its parameters, such as the model input size and number of classes. The difficult part of the conversion step for custom models was to re-define the ‘yolo_v4’ function of the ‘yolo_v4.py’ script in TNTWEN’s GitHub repository to incorporate the custom configuration of YOLO-mp-3l, i.e., move detection to early layers, add an ‘SPP’ block after the backbone, and use the ‘mish’ activation function on the backbone and ‘leaky’ afterwards. This modification is not required for standard YOLO models, and no conversion is needed for models already trained in formats supported by OpenVINO. Finally, OpenVINO’s model optimiser tool is used to generate the IR (.xml, .bin) format from the converted YOLO-mp-3l ‘.json’ and ‘.pb’ model files.

Image preprocessing and postprocessing

Running object detection models on high-resolution images can be limited by the memory available on the device. Therefore, large images are usually broken into smaller tiles for model input. Tiling serves two purposes: the input is smaller and requires less system memory for training and testing, and the object-to-image size ratio increases, which makes it easier for detection models to identify small objects such as malaria pathogens in microscope images. However, inference on tiled images requires merging the overlapping detections at tile boundaries to produce accurate results.

The YOLO-mp-3l model was trained with a multiscale factor of 1.5, making it robust for detecting malaria pathogens across varying input resolutions. This adaptability is crucial when dealing with microscope images that may differ in size and clarity. To ensure precise malaria detection, the Intelligent Suite preprocesses input images through several steps. When the resolution of the input image exceeds 1.5 times the model’s input size (416x416 pixels for the YOLO-mp-3l in the Intelligent Suite), which is automatically detected, the system splits the image into smaller tiles. This ensures that each segment matches the model’s input resolution, maintaining accuracy across images of different sizes. The YOLO-mp-3l model works directly on tiled image regions without further augmentation or modification. Each tile is processed individually in the device’s memory, and the detection results are combined for the final output. These steps ensure the model efficiently handles a variety of image resolutions and delivers accurate pathogen detection, making it well-suited for large-scale malaria screening tasks.
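The tiling decision can be made concrete with a small Python sketch. It is an illustration under assumptions, not the Suite’s code: the function name `tile_grid` is hypothetical, the 1.5 threshold is applied per axis, and the number of tiles along each axis is taken to be the rounded ratio of image size to model input size, which reproduces the 750x750 example given in the text.

```python
def tile_grid(image_w, image_h, net_w=416, net_h=416, threshold=1.5):
    """Return (columns, rows, tile_w, tile_h) for an input image.

    An axis is left undivided unless the image exceeds `threshold` times the
    model input size along it. Otherwise the number of tiles is the rounded
    ratio of image size to model input size (an assumption of this sketch).
    """
    def count(size, net):
        ratio = size / net
        if ratio <= threshold:
            return 1
        return int(ratio + 0.5)  # round half up

    cols = count(image_w, net_w)
    rows = count(image_h, net_h)
    return cols, rows, image_w // cols, image_h // rows
```

With the default 416x416 model input, `tile_grid(750, 750)` returns `(2, 2, 375, 375)`, i.e. four tiles of 375x375 pixels, while a 600x600 image (ratio about 1.44) is processed as a single tile.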

To develop the Intelligent Suite, we borrowed the tiling/merging concept of the DarkHelp API (https://github.com/stephanecharette/DarkHelp and https://www.ccoderun.ca/DarkHelp/api/index.html) and adapted it to our application. DarkHelp is a wrapper that makes it easier to use the Darknet framework within a C++ application. DarkHelp uses two key parameters in its tiling/merging approach: ‘tile_edge_factor’ and ‘tile_rect_factor’. The ‘tile_edge_factor’ controls how close to the edge of a tile an object must be to be considered for merging detection boxes. The smaller the value, the closer the object must be to the edge of the tile. The range is 0.01 to 0.5, with 0.25 as the default. Similarly, the ‘tile_rect_factor’ controls how closely the rectangles on two tiles need to line up before the predictions are combined. A value of 1.0 means a perfect match, which is ideal but does not happen in practice. The current range is 1.10 to 1.50, with a default of 1.20. The tiling/merging process is integrated into the Intelligent Suite program as follows:

- The YOLO-mp-3l model’s input resolution is set to 416x416 pixels.
- The input image is broken into smaller tiles if the input image size is greater than 1.5 times the model resolution (416x416 pixels). For example, if the input image size is 750x750 pixels, there will be four tiled images (375x375 pixels each) in memory.
- Asynchronous detection is applied to the individual tiles, and the tile-position offsets are applied to the detected boxes so that they are displayed correctly on the final merged image.
- If two boxes on the edges of neighbouring tiles satisfy the conditions set by both ‘tile_edge_factor’ (0.25) and ‘tile_rect_factor’ (1.20), they are merged into a single larger box (drawn as the union of the two), and the confidence score is set to the higher value among the merged boxes.
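The offset and merge steps listed above can be sketched in Python. This is a minimal illustration, not the DarkHelp or Intelligent Suite implementation: the `Box` type and both function names are hypothetical, and the sketch deliberately omits the ‘tile_edge_factor’ and ‘tile_rect_factor’ tests that decide which pairs of boxes qualify for merging. It shows only how a tile-local box is shifted into full-image coordinates and how two qualifying boxes are combined into their union with the higher confidence.

```python
from dataclasses import dataclass


@dataclass
class Box:
    x: int       # left edge in pixels
    y: int       # top edge in pixels
    w: int       # width in pixels
    h: int       # height in pixels
    conf: float  # confidence score in [0, 1]


def to_image_coords(box, col, row, tile_w, tile_h):
    """Shift a box detected inside tile (col, row) into full-image coordinates."""
    return Box(box.x + col * tile_w, box.y + row * tile_h, box.w, box.h, box.conf)


def merge_boxes(a, b):
    """Merge two boxes into their union, keeping the higher confidence."""
    left = min(a.x, b.x)
    top = min(a.y, b.y)
    right = max(a.x + a.w, b.x + b.w)
    bottom = max(a.y + a.h, b.y + b.h)
    return Box(left, top, right - left, bottom - top, max(a.conf, b.conf))
```

As a usage example, consider a parasite that straddles the vertical boundary at x = 375 of a 750x750 image split into 375x375 tiles. The left tile reports `Box(360, 100, 15, 30, 0.8)` and the right tile reports `Box(0, 102, 12, 28, 0.6)` in its own coordinates. After `to_image_coords(..., col=1, row=0, tile_w=375, tile_h=375)` the second box starts at x = 375, and `merge_boxes` yields `Box(360, 100, 27, 30, 0.8)`.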

Figure 11 shows the input image to the Intelligent Suite on the left and the final output detection image on the right. The final image also displays the horizontal and vertical lines as a reference for the tile boundaries created during image tiling process, and the final detection boxes including the merged detection at tile boundaries.

Result and report generation

The YOLO-mp-3l model used by the Intelligent Suite generates a bounding box with a probabilistic confidence score for each pathogen detected in the input image. A detection is considered valid if its confidence score exceeds the threshold set during model inference. Similarly, multiple detections are merged into a single detection if their bounding boxes overlap sufficiently (based on Intersection over Union, IoU). Detections can be filtered using a combination of confidence scores and overlap values, which are typically determined by the model’s performance (precision and recall) on a validation dataset. For the YOLO-mp-3l model, the authors reported that a threshold of 0.5 for both the confidence score and IoU produced the best match between the model’s pathogen detection counts and the human-annotated ground truth on validation set images (ref. 1). However, these thresholds often require adjustment when applied to new data whose image properties (such as resolution, object clarity, lighting, and object size) differ significantly from those of the training and validation datasets. The software includes a slider that allows users to select confidence and IoU thresholds within the range of 0.30 to 0.95.
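The effect of the two thresholds can be illustrated with a short sketch. It assumes greedy non-maximum suppression, a common way of collapsing overlapping detections; the text does not state which overlap-handling procedure the suite uses, and the function names are hypothetical.

```python
from typing import List, Tuple

Detection = Tuple[float, float, float, float, float]  # x1, y1, x2, y2, confidence


def iou(a: Detection, b: Detection) -> float:
    """Intersection over Union of two corner-format boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets: List[Detection],
                      conf_thr: float = 0.5, iou_thr: float = 0.5) -> List[Detection]:
    """Keep detections above conf_thr, then drop any that overlap a stronger kept box."""
    kept: List[Detection] = []
    confident = (d for d in dets if d[4] > conf_thr)
    for d in sorted(confident, key=lambda d: d[4], reverse=True):
        if all(iou(d, k) < iou_thr for k in kept):
            kept.append(d)
    return kept
```

Lowering `conf_thr` towards 0.30 admits weaker detections, while raising `iou_thr` towards 0.95 lets heavily overlapping boxes survive as separate detections. This is why both settings may need adjustment for images that differ from the training data.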

The Intelligent Suite saves output result files and detection images in the output directories as shown in Fig. 12. The results are stored in a dated folder for each day. A file ‘full_day_detection_result.csv’ records the detection results of all images processed throughout the day. The evaluation (‘eval’) mode allows the performance of the model to be benchmarked for different model parameters and hardware settings. If ‘eval’ mode is selected, the Intelligent Suite stores two extra time-stamped files (‘time_eval_session_performance_result.csv’ and ‘time_eval_session_detection_result.csv’) for each evaluation session.

The ‘time_eval_session_detection_results.csv’ and ‘full_day_detection_results.csv’ files summarize the detection results per image for an ‘eval’ session and for the whole day, respectively (Fig. 13).

The ‘time_eval_session_performance_result.csv’ file provides information on the total number of images processed, the total number of pathogens detected, the number of positive and negative outcome images, the list of available inference devices, the inference mode/device used, the average processing speed (FPS), and the model parameters (confidence and IoU thresholds) for each ‘eval’ session (Fig. 14).
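The per-day report described above can be sketched as an append-only CSV inside a dated folder. The file name comes from the text; the column names, the ISO date format of the folder, and the rule that an image is ‘positive’ when at least one pathogen is detected are assumptions made for illustration.

```python
import csv
from datetime import date
from pathlib import Path
from typing import List, Optional


def append_detection_row(output_root: str, image_name: str,
                         confidences: List[float], day: Optional[date] = None) -> Path:
    """Append one image's result to the day's CSV, creating folder and header if needed."""
    day = day or date.today()
    folder = Path(output_root) / day.isoformat()  # e.g. output/2024-01-02 (assumed naming)
    folder.mkdir(parents=True, exist_ok=True)
    csv_path = folder / "full_day_detection_result.csv"
    is_new = not csv_path.exists()
    count = len(confidences)
    with csv_path.open("a", newline="") as f:
        writer = csv.writer(f)
        if is_new:
            # Hypothetical column layout; the real report columns are shown in Fig. 13.
            writer.writerow(["image", "pathogen_count", "max_confidence", "outcome"])
        writer.writerow([
            image_name,
            count,
            f"{max(confidences):.2f}" if confidences else "",
            "positive" if count else "negative",
        ])
    return csv_path
```

Appending rather than rewriting means every image processed during the day ends up in the same file, which matches the role of the full-day report.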

Performance analysis

Because OpenVINO optimises models for Intel devices, a non-Intel platform was chosen for a fair comparison against Intel’s NCS2: the ‘POC-515’ (Neousys Technology, www.neousys-tech.com), an ultra-compact embedded computer with an AMD Ryzen quad-core CPU. The NCS2 was plugged into a USB3 port of the POC-515 for the assessment.

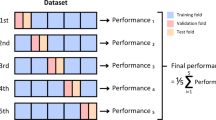

Performance assessment using OpenVINO’s benchmark tool

OpenVINO provides a benchmark tool (‘benchmark.exe’ for C++ and ‘benchmark_app.py’ for Python) to assess model performance on several inference devices. Model performance was assessed for two inference modes, synchronous (latency-oriented) and asynchronous (throughput-oriented), and the results are reported in Table 1. The YOLO-mp-3l model input size was set to 416⨯416 pixels, while the input image size was 750⨯750 pixels.

Performance assessment using OpenVINO’s demo object detection scripts

The Python ‘object_detection_demo.py’ script and the C++ ‘object_detection_demo.exe’ executable available in OpenVINO’s toolkit were used with the YOLO-mp-3l detection model deployed on the NCS2 and on the POC-515 CPU, and the performance is reported in Table 2. The YOLO-mp-3l model input size was kept at 416⨯416 pixels and the input image size was 750⨯750 pixels.

Performance assessment using Intelligent Suite software

The Intelligent Suite implements the image tiling and merging routine within the demo C++ object detection script. The detection model (YOLO-mp-3l) was set to an input resolution of 416⨯416 pixels, while the input image resolution was 750⨯750 pixels. The input image was automatically broken into four tiles (375⨯375 pixels each) as input to the detection model. The performance results for the Intelligent Suite deployed on the NCS2 and on the POC-515 industrial PC are reported in Table 3. Total time refers to the time taken for reading the image, loading the model, tiling/merging, performing inference, and saving detection images (operated in ‘single’ mode, i.e., processing one image at a time). Detection-only time refers to the time taken only for performing inference and saving detection images.
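The distinction between total time and detection-only time can be made concrete with a small timing helper. This is a sketch under stated assumptions: the stage names mirror the steps listed above, but the `StageTimer` class and `time_single_image` function are hypothetical and are not taken from the Intelligent Suite code.

```python
import time
from contextlib import contextmanager
from typing import Callable, Dict, Iterable, Tuple


class StageTimer:
    """Accumulates wall-clock seconds per named processing stage."""

    DETECTION_STAGES = ("inference", "save_image")

    def __init__(self) -> None:
        self.seconds: Dict[str, float] = {}

    @contextmanager
    def stage(self, name: str):
        start = time.perf_counter()
        try:
            yield
        finally:
            elapsed = time.perf_counter() - start
            self.seconds[name] = self.seconds.get(name, 0.0) + elapsed

    def total(self) -> float:
        """Time across all recorded stages (read, load, tile/merge, infer, save)."""
        return sum(self.seconds.values())

    def detection_only(self) -> float:
        """Time spent only on inference and saving detection images."""
        return sum(self.seconds.get(k, 0.0) for k in self.DETECTION_STAGES)


def time_single_image(stages: Iterable[Tuple[str, Callable[[], None]]]) -> Tuple[float, float]:
    """Run the given stages in order and return (total, detection_only) in seconds."""
    timer = StageTimer()
    for name, fn in stages:
        with timer.stage(name):
            fn()
    return timer.total(), timer.detection_only()
```

Passing callables for ‘read_image’, ‘load_model’, ‘tiling_merging’, ‘inference’, and ‘save_image’ returns both figures for one image, so the share of time spent on model loading can be read off directly.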

Software deployment

Running inference with the OpenVINO runtime is considered the most basic form of software deployment (https://docs.openvino.ai/latest/openvino_deployment_guide.html). A local distribution package was created using the OpenVINO deployment manager (https://docs.openvino.ai/latest/openvino_docs_install_guides_deployment_manager_tool.html). A Windows batch script acts as the entry point for running the application. The batch script initialises the environment for the OpenVINO runtime and launches the actual software (a binary executable). For Linux-based operating systems, a bash script can be written to do the same. The Intelligent Suite software is currently compiled and distributed for Windows OS, but the same script and code can be compiled and distributed for other operating systems (e.g., macOS) supported by OpenVINO’s deployment manager. OpenVINO also supports deployment on Linux distributions (Debian and RPM packages), including support for edge devices such as Raspberry Pi (Raspbian OS) and NVIDIA Jetson (ARM architecture). Because OpenVINO optimizes model performance for Intel hardware, the Intelligent Suite is expected to run faster on Intel-based processors. User feedback was considered during software development; for example, a coloured bar was added to flag the outcome as positive or negative. A button to browse for the input image folder was also suggested but was not implemented, because it required extra code and dependencies specific to operating systems and their software versions.

Originally conceptualized for use in remote areas with basic field laptops and the potential for inference on plug-and-play devices like Intel’s NCS2, the software is currently in prototype form. It may require further testing, validation, and feature enhancements in the future. The Intelligent Suite program folder can be copied to a computer or external drive and executed directly. The current version requires microscopy images to be copied into a specific input folder before processing. The suite displays a list of available inference devices on the user’s computer, including the Neural Compute Stick 2 (if plugged in), and offers processing modes (single or batch) for automated pathogen identification. Detection results are displayed on the user interface and are also saved on the computer as images and report files for future reference. This allows the user to adjust model inference settings (confidence and IoU threshold) for optimal results.

Discussion

The asynchronous operation mode provided better speed than the synchronous mode (Table 1). Therefore, running inference on the NCS2 in asynchronous mode provided the best result for our application. Moreover, the results in Table 2 were consistent with OpenVINO’s recommendation to use the C++ API for performance-critical cases (such as our application), because the Python API was slower, with lower FPS. The time taken by the full Intelligent Suite program, as reported in the performance assessment section (Table 3), was higher for the NCS2 than for the CPU (AMD, not Intel), because more of the program time was spent loading the model files onto the NCS2 than onto the CPU. However, once the model is loaded and the image is read, the NCS2 is 3.5 times quicker for inference. In practice, when the Intelligent Suite runs in batch detection mode the model is loaded only once, so only the inference time is incurred for each input image, which favours the NCS2 over CPU (AMD) inference on the POC-515. Because OpenVINO optimizes detection models for Intel devices, the Intelligent Suite can be expected to run more efficiently on a computer or laptop with an Intel CPU and/or GPU, and can be considered for automation and real-time applications.

Currently, image acquisition from a digital microscope is not integrated into the Intelligent Suite, but it would be desirable if a standard digital microscope camera provided device drivers and libraries for easy integration. For example, an Olympus microscope requires software installed on a local PC (strictly Windows OS) to capture and save images. Such restrictions can limit the deployment of a detection software tool to a specific operating system and hardware. The image dataset used for training detection models should be standardized to produce accurate and reliable results, especially for healthcare applications. Therefore, integration of a standard digital microscope camera is favoured over the use of a mobile phone camera for image acquisition, because a detection model trained on images acquired with a particular phone model may not produce reliable results on images from other phone models.
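The batch-mode argument made earlier in this discussion (the model is loaded once, then only inference time is paid per image) can be expressed as simple arithmetic. The sketch below is illustrative only: the function names are hypothetical, and the timings in the usage note are invented placeholders, not values from Table 3.

```python
import math
from typing import Optional


def per_image_seconds(load_s: float, infer_s: float, n_images: int) -> float:
    """Average time per image when the model is loaded once for a batch of n_images."""
    if n_images < 1:
        raise ValueError("n_images must be at least 1")
    return (load_s + n_images * infer_s) / n_images


def break_even_images(load_a: float, infer_a: float,
                      load_b: float, infer_b: float) -> Optional[int]:
    """Smallest batch size at which device A's batch time drops below device B's.

    Device A is assumed to load more slowly but infer faster than device B.
    Returns None if A's inference is not faster, so it can never catch up this way.
    """
    if infer_a >= infer_b:
        return None
    if load_a <= load_b:
        return 1
    # Solve load_a + n * infer_a < load_b + n * infer_b for the smallest integer n.
    return math.floor((load_a - load_b) / (infer_b - infer_a)) + 1
```

As a purely hypothetical example, a device that takes 4.0 s to load and 0.5 s per image overtakes one that takes 1.0 s to load and 1.75 s per image (3.5 times slower inference) from a batch of three images onwards, and its average cost per image falls towards the inference time as the batch grows.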

While the Intelligent Suite can be used as standalone malaria pathogen detection software, we believe the methods used in developing this tool can also benefit the educational and research community in related fields of application. Moreover, the detection model in the Intelligent Suite can be replaced with newer YOLO models (in an OpenVINO-supported format) trained on new datasets, for use as a standalone tool or for generating results and reports for model benchmarking purposes.

Contributions

In this study, the development of the Intelligent Suite led to significant findings, particularly in the performance benchmarking of OpenVINO, which revealed substantial differences in inference speed between the Python and C++ implementations. These insights could guide future research on real-time applications. Additionally, we benchmarked OpenVINO’s inference modes (synchronous and asynchronous) on Intel’s Neural Compute Stick 2 (NCS2) and on an industrial PC with an AMD processor. We also detailed the process of modifying external libraries to export the Darknet-based custom YOLO-mp-3l model to the OpenVINO format, offering insight into the complexity and applicability of this process for other researchers. The compiled version of the ‘Intelligent Suite’, along with its source code and the detection models used in this study, has been made publicly available (see the introduction section for the download link) as a contribution to academia and research:

- Release of Intelligent Suite Script: This allows users to modify the user interface, recompile the Intelligent Suite for different operating systems, and integrate various detection models for multiple use cases.

- Release of the Software Tool: The Intelligent Suite, compiled for Windows 10 OS, is available for users, providing quick access to the tool.

- Release of the Detection Model: The Intelligent Suite includes the YOLO-mp-3l model, which can be used for pathogen detection and model benchmarking.

Data availability

The data that support the findings for this study are available at https://air.ug/static/images/downloads/plasmodium-phonecamera.zip.

References

Koirala, A. et al. Deep learning for real-time malaria parasite detection and counting using yolo-mp. IEEE Access 10, 102157–102172. https://doi.org/10.1109/ACCESS.2022.3208270 (2022).

Yu, H. et al. Malaria screener: a smartphone application for automated malaria screening. BMC Infectious Diseases 20, 1–8. https://doi.org/10.1186/s12879-020-05453-1 (2020).

Fuhad, K. et al. Deep learning based automatic malaria parasite detection from blood smear and its smartphone based application. Diagnostics 10, 329. https://doi.org/10.3390/diagnostics10050329 (2020).

Katharina, P., István, K. & János, T. An automated neural network-based stage-specific malaria detection software using dimension reduction: The malaria microscopy classifier. MethodsX 10, 102189. https://doi.org/10.1016/j.mex.2023.102189 (2023).

Bochkovskiy, A., Wang, C.-Y. & Liao, H.-Y. M. Yolov4: Optimal speed and accuracy of object detection. arXiv preprint arXiv:2004.10934. https://doi.org/10.48550/arXiv.2004.10934 (2020).

Acknowledgements

This work is an outcome of a competitive category 1 Australia India Council Department of Foreign Affairs and Trade (AIC DFAT) 2022 grant. We extend our sincere gratitude to the AIC DFAT for their generous grant that has been instrumental in making this research project a reality. Their financial support and commitment to fostering research and diplomatic initiatives between Australia and India are greatly appreciated. This grant has enabled us to delve deeper into our research, pursue valuable insights, and contribute to the advancement of knowledge in the field of artificial intelligence and health. We are truly thankful for the trust and investment that AIC DFAT has placed in our work.

Author information

Contributions

M.J., A.K., G.C., S.B., and A.M., designed the Intelligent Suite, A.K. developed the prototype, P.K.S, S.M., and T.P., contributed as malaria experts in labelling and identification of malaria pathogens, A.M., A.H., and J.M. contributed as hardware experts. M.J., S.B., and G.C., contributed as machine learning experts. P.K.S., S.M., and T.K.P. provided the necessary expertise and facilities for the diagnosis of malaria pathogens. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Koirala, A., Jha, M., Chetty, G. et al. Development of intelligent suite for malaria pathogen detection in microscopy images. Sci Rep 14, 23821 (2024). https://doi.org/10.1038/s41598-024-75933-w

DOI: https://doi.org/10.1038/s41598-024-75933-w