Abstract

Imbalanced datasets, in which the minority class is underrepresented, pose significant challenges for node classification in graph learning. Traditional methods often address this issue by synthetically oversampling the minority class, which can complicate the training process. To address these challenges, we introduce a novel training paradigm for node classification on imbalanced graphs based on mixed entropy minimization (ME). Our proposed method, GraphME, offers a “free imbalance defense” against class imbalance, improving classification performance without requiring additional steps. ME pursues the same goal as cross-entropy, namely maximizing the model’s probability for the correct class, while also reducing the probabilities assigned to incorrect classes through a “guidance” term that keeps the two objectives in balance. We validate the effectiveness of our approach through experiments on multiple datasets, where GraphME consistently outperforms the traditional cross-entropy objective, demonstrating enhanced robustness. Moreover, our method can be seamlessly integrated with various adversarial training techniques, leading to substantial improvements in robustness. Notably, GraphME enhances classification accuracy without compromising efficiency, a significant improvement over existing methods. The GraphME code is available at: https://github.com/12chen20/GraphME.


Introduction

In recent years, the rapid advancement of Convolutional Neural Networks (CNNs) has markedly accelerated progress in image processing. However, conventional CNNs are restricted to data in Euclidean space, such as images, which lie on regular grids. In contrast, non-Euclidean data, such as social networks, traffic flows, and molecular structures, are ubiquitous in real-world applications. In machine learning, these non-Euclidean data types are collectively referred to as “graph data.” Graph data is a powerful way to represent complex relationships among entities, making it suitable for applications in traffic prediction1,2, network analysis3, and biochemistry4. Consequently, the modeling and application of graph data have attracted substantial research interest.

Graph Neural Networks (GNNs) have emerged as a preeminent technique for node classification. Spectral methods such as ChebNet5, Graph Convolutional Networks (GCN)3, and LegendreNet6 substantially reduce time and space complexity by parameterizing the convolutional kernel. Conversely, GraphSAGE7 operates in the spatial domain by sampling and aggregating neighbor features, which makes it practical to build GNNs for large-scale datasets. These GNNs have been widely applied to graph-structured data and have achieved significant success in the fields mentioned above.

Despite significant progress, existing research predominantly assumes balanced node categories in modeling. However, this assumption is often invalid in real-world applications, where imbalanced data distribution during training can detrimentally impact model performance. The model tends to favor the majority class, resulting in reduced classification accuracy for minority classes. This issue is particularly pronounced in domains such as fraud detection8, where fraudulent transactions are significantly rarer than normal transactions. The imbalanced data distribution biases algorithms towards the majority class, neglecting the minority classes’ characteristics. Similarly, in the BlogCatalog dataset (social network)9, class 8 constitutes 13.88%, whereas minority classes like class 38 account for only 0.02%. Thus, directly applying GNNs to numerous real-world imbalanced graph datasets may yield suboptimal results, including overfitting.

In machine learning, class imbalance in Euclidean data has been studied extensively. Solutions fall into three primary categories: data-level methods, algorithm-level methods, and hybrid methods. A common data-level strategy is resampling to balance the sample distribution across classes, either by oversampling minority class samples or by undersampling majority class samples, so that the model adequately attends to minority classes during training. However, this approach has drawbacks: oversampling10 can make the model more sensitive to noise, and undersampling11 can discard important sample features. Algorithm-level methods use cost-sensitive learning12 or modify the loss function13 to mitigate the impact of class imbalance. Hybrid methods14 combine both approaches. Nevertheless, effectively handling imbalanced graph-structured (non-Euclidean) data remains a significant challenge.

While the aforementioned methods address class imbalance in Euclidean data to some extent, applying them directly to graph-structured data often yields suboptimal results. We therefore re-examine the traditional cross-entropy (CE) loss function15 from a new perspective and propose a loss function better suited to class imbalance in graph data, Mixed Entropy (ME); we name the resulting framework GraphME. In node classification experiments, our approach further improves the prediction accuracy of the GraphSMOTE16 algorithm for imbalanced node classification.

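Here, we define the softmax cross-entropy \(CE(y, \hat{y})\) as follows:

\[ CE(y, \hat{y}) = -\frac{1}{N}\sum_{i=1}^{N}\sum_{k=1}^{K} y_k^{(i)} \log \hat{y}_k^{(i)} \tag{1} \]
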
where \(N\) is the number of samples, \(K\) is the number of classes, \(y^{(i)}\) is the true distribution, and \(\hat{y}^{(i)}\) is the softmax multinomial predicted distribution. Given the inherent limitations of CE in addressing class imbalance, we explored more robust loss functions. Consequently, we propose adding the predictive entropy of the training data as a regularization term to the primary training objective, cross-entropy. The predictive entropy \(R(\hat{y})\) is defined as follows:

\[ R(\hat{y}) = -\frac{1}{N}\sum_{i=1}^{N}\sum_{k=1}^{K} \hat{y}_k^{(i)} \log \hat{y}_k^{(i)} \tag{2} \]

Entropy minimization with \(R(\hat{y})\) has proven effective in semi-supervised learning, as it allows unlabeled data to be incorporated into standard supervised learning. On the other hand, CE loss may be insufficient to explicitly minimize the predicted probabilities of incorrect classes, particularly for minority classes. To increase the focus on minority classes, we add \(R(\hat{y})\) computed on the imbalanced labeled data as a complementary term to the cross-entropy loss. We refer to the combination of these two terms as Mixed Entropy (ME), a strategy that effectively addresses challenges associated with class imbalance. The implementation and theoretical framework of ME are discussed in detail in section “Methodology”.

In this study, we evaluate the effectiveness of the proposed method on three widely used imbalanced datasets: Cora, Citeseer, and BlogCatalog. Figure 1 shows t-SNE17 visualizations of models trained on Cora and Citeseer with the CE and ME loss functions. These initial experiments suggest that GraphME handles imbalanced data considerably better than existing methods. Figure 1a,b shows the t-SNE visualizations for Cora: the model trained with the ME loss function (Fig. 1b) exhibits more distinct clusters and less overlap between categories than the model trained with the CE loss function (Fig. 1a). This indicates that the ME loss function discriminates between classes more effectively, producing clearer clusters in the latent space. Similarly, Fig. 1c,d shows the t-SNE visualizations for Citeseer. Consistent with the Cora results, the model trained with the ME loss function (Fig. 1d) produces well-separated categories with minimal overlap. These results further support the efficacy of the ME loss function in constructing a discriminative latent space, surpassing the CE loss function.

In this study, we propose a novel training paradigm, Mixed Entropy Minimization (ME), designed to address significant challenges in node classification within graph learning, particularly data imbalance and the low representativeness of minority class samples. Our primary contributions are as follows:

1. Introduction of ME: This method offers a “free imbalance defense,” enhancing node classification accuracy without requiring additional procedures or complex oversampling techniques.

2. Versatility and compatibility: We demonstrate that ME integrates seamlessly with various known adversarial training techniques, further improving model robustness.

3. Breakthrough improvement: Unlike previous attempts, our approach significantly improves classification performance without compromising efficiency.

The remainder of this paper is organized as follows. Section “Methodology” provides a theoretical analysis of how the ME loss function addresses class imbalance and details the design principles of the GraphME framework. Section “Related work” reviews related work. Section “Experiments” compares GraphME with baseline models, trained with and without the ME loss function, through extensive experiments on publicly available imbalanced datasets. Finally, section “Conclusion” concludes the paper and outlines directions for future research.

Methodology

This section examines the design principles of the GraphME framework through mathematical analysis. We show how the ME strategy affects the gradients and validate its effectiveness on minority class samples through experiments. Specifically, we show that this strategy promotes the dominance of the correct class (including minority classes) in the softmax scores over all other “incorrect” classes. While research on entropy regularization18 provides some insight into gradient behavior during training, our study focuses on the distinct monotonicity of the gradient of \(R\left( \hat{y}\right)\). This finding helps explain the unstable convergence observed during training. We next present the main theoretical results.

Derivation of the ME loss function

Consider a multi-class classification problem with \(N\) samples and \(K\) classes. Let \(y^{(i)}\) represent the true distribution of the \(i\)-th sample, and \(\hat{y}^{(i)}\) denote the predicted distribution obtained through the softmax function. To address class imbalance, we propose the mixed entropy loss function.

Definition 2.1

(Mixed Entropy Loss Function) The mixed entropy loss function \(ME(y, \hat{y})\) combines the softmax cross-entropy \(CE(y, \hat{y})\) and the prediction entropy \(R(\hat{y})\), and is defined as follows:

\[ ME(y, \hat{y}) = CE(y, \hat{y}) + \lambda R(\hat{y}) \tag{3} \]

In this definition, \(\lambda \ge 0\) is a parameter that balances the effects of cross-entropy loss and prediction entropy. The mixed entropy loss function aims to effectively handle class imbalance by integrating information from both the true and predicted distributions, enhancing the model’s focus on minority classes.
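A minimal pure-Python sketch of the mixed entropy loss follows, assuming the additive form \(ME = CE + \lambda R\) with one-hot labels and both terms averaged over the \(N\) samples. The function names and the small `eps` guard are our own choices, not taken from the GraphME repository, and a training implementation would use a tensor library so that gradients flow through the loss. The default `lam=0.3` mirrors the value the paper reports as best.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one sample's list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mixed_entropy_loss(batch_logits, labels, lam=0.3, eps=1e-12):
    """Hypothetical ME loss sketch: mean CE plus lam times mean predictive entropy.

    batch_logits: list of N lists, each holding K raw scores.
    labels: list of N integer class indices (the one-hot y^(i)).
    Returns (me, ce, r) so the two components can be inspected separately.
    """
    n = len(batch_logits)
    ce_total = 0.0
    ent_total = 0.0
    for logits, g in zip(batch_logits, labels):
        probs = softmax(logits)
        # With a one-hot y^(i), the inner CE sum reduces to -log y_hat_g.
        ce_total += -math.log(probs[g] + eps)
        # Predictive entropy of this sample: -sum_k y_hat_k * log y_hat_k.
        ent_total += -sum(p * math.log(p + eps) for p in probs)
    ce = ce_total / n
    r = ent_total / n
    return ce + lam * r, ce, r
```

With `lam=0` the function reduces to plain cross-entropy, which makes it easy to compare the two objectives on the same predictions.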

In our experiments, \(\lambda = 0.3\) yielded the best results. Equation (3) shows that, by introducing \(R(\hat{y})\) as a regularization term, the mixed entropy loss function accounts for both the cross-entropy of labeled data and the prediction entropy of unlabeled data, which also makes it suitable for semi-supervised learning. The following proposition describes some properties of mixed entropy.

Proposition 2.1

For a sample indexed by \(i\), (1) when \(\hat{y}_g^{(i)} \ge e^{-1}\), the function \(-\hat{y}_g^{(i)} \log \hat{y}_g^{(i)}\) is decreasing with respect to \(\hat{y}_g^{(i)}\); (2) when \(\hat{y}_j^{(i)} < e^{-1}\), the function \(-\hat{y}_j^{(i)} \log \hat{y}_j^{(i)}\) is increasing with respect to \(\hat{y}_j^{(i)}\). Here \(g\) is the index of the true class, \(j \ne g\) is the index of an incorrect class, and \(e\) is the base of the natural logarithm.

Proof

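Consider the term \(-\hat{y}_j^{(i)} \log \hat{y}_j^{(i)}\) in the prediction entropy \(R(\hat{y})\). Its derivative with respect to \(\hat{y}_j^{(i)}\) is

\[ \frac{\partial \left(-\hat{y}_j^{(i)} \log \hat{y}_j^{(i)}\right)}{\partial \hat{y}_j^{(i)}} = -\left(\log \hat{y}_j^{(i)} + 1\right) \tag{4} \]

We analyze the behavior of Eq. (4) by considering its sign. For the true class \(g\),

\[ -\left(\log \hat{y}_g^{(i)} + 1\right) \le 0 \quad \text{when } \hat{y}_g^{(i)} \ge e^{-1} \tag{5} \]
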
Therefore, when \(\hat{y}_g^{(i)} \ge e^{-1}\), the function \(-\hat{y}_g^{(i)} \log \hat{y}_g^{(i)}\) is decreasing with respect to \(\hat{y}_g^{(i)}\). Specifically, as \(\hat{y}_g^{(i)}\) increases, the term \(-\hat{y}_g^{(i)} \log \hat{y}_g^{(i)}\) decreases, where \(g\) stands for the index of the true class. Conversely, for an incorrect class \(j \ne g\),

\[ -\left(\log \hat{y}_j^{(i)} + 1\right) > 0 \quad \text{when } \hat{y}_j^{(i)} < e^{-1} \tag{6} \]

In the case of Eq. (6), when \(\hat{y}_j^{(i)} < e^{-1}\), the function \(-\hat{y}_j^{(i)} \log \hat{y}_j^{(i)}\) is increasing with respect to \(\hat{y}_j^{(i)}\). Specifically, as \(\hat{y}_j^{(i)}\) decreases, the term \(-\hat{y}_j^{(i)} \log \hat{y}_j^{(i)}\) decreases, where \(j \ne g\) indicates the index of an incorrect class.

This indicates that \(-\hat{y}_j^{(i)} \log \hat{y}_j^{(i)}\) exhibits different monotonic behaviors over different ranges of \(\hat{y}_j^{(i)}\). Notably, for the predicted probability of the true class \(\hat{y}_g^{(i)}\), the loss term decreases as its value increases; for the predicted probability of incorrect classes \(\hat{y}_j^{(i)}\), the loss term decreases as its value decreases. This property helps optimize model performance for minority class predictions in imbalanced classification scenarios. \(\square\)
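The sign analysis can be checked numerically. The sketch below evaluates the derivative \(\frac{d}{dp}(-p\log p) = -(\log p + 1)\) at a few probabilities on both sides of \(e^{-1}\) and compares it with a central finite difference; the probe points are arbitrary choices for illustration.

```python
import math


def d_neg_plogp(p):
    """Derivative of f(p) = -p * log(p) with respect to p, i.e. -(log p + 1)."""
    return -(math.log(p) + 1.0)


def neg_plogp(p):
    return -p * math.log(p)


threshold = math.exp(-1)  # e^{-1} ~ 0.3679, where the derivative is zero

for p in [0.05, 0.2, 0.35, threshold, 0.5, 0.9]:
    slope = d_neg_plogp(p)
    if slope > 1e-12:
        trend = "increasing"   # region of Eq. (6): incorrect-class probabilities
    elif slope < -1e-12:
        trend = "decreasing"   # region of Eq. (5): confident true-class probability
    else:
        trend = "stationary"
    # Central finite difference as an independent check of the closed form.
    h = 1e-6
    fd = (neg_plogp(p + h) - neg_plogp(p - h)) / (2 * h)
    print(f"p={p:.4f}  analytic={slope:+.5f}  finite-diff={fd:+.5f}  {trend}")
```

Below \(e^{-1}\) the slope is positive, so pushing an incorrect-class probability down lowers the entropy term; above \(e^{-1}\) it is negative, so raising a confident true-class probability lowers it too.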

GraphME framework and notations overview

In this study, we propose an ME loss function to address the issue of imbalanced graph data. We first introduce the basic notation and definitions used for graphs. We consider an undirected graph \(G=\{V,E,X\}\), where \(V =\{v_1,v_2,\ldots ,v_n\}\) is a set of \(n\) nodes and \(E\) is a set of edges. The node feature matrix \(X\) is an \(n \times d\) matrix, where each row \(X[j, :]\) is the feature vector of node \(j\). The number of categories is denoted by \(m\). For a given category \(i\), the number of samples is denoted by \(|C_i|\). The adjacency matrix of \(G\) is denoted by \(A \in {\mathbb {R}}^{n \times n}\), and the degree matrix, a diagonal matrix holding the degree of each node, is denoted by \(D \in {\mathbb {R}}^{n \times n}\). Table 1 lists the major notations used in the paper.

We first define the imbalance ratio (IR) as follows:

\[ IR = \frac{\min_i |C_i|}{\max_i |C_i|} \]

where \(\min _i{\left| C_i\right| }\) represents the size of the smallest class, and \(\max _i{\left| C_i\right| }\) represents the size of the largest class, providing a measure of class disparity. Our aim is to develop a node classifier \(f\) that can effectively handle imbalanced graph data classification by integrating the ME loss function with the GraphSMOTE model. To achieve optimal performance, we use the GraphSMOTE model for feature extraction and the ME loss function for training an efficient node classifier \(f\). Specifically,

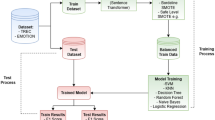
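The imbalance ratio, the size of the smallest class divided by the size of the largest, reduces to a one-line computation over class sizes. The sketch below assumes labels are given as a flat list of class indices; the toy label vector is illustrative and not drawn from any of the paper's datasets.

```python
from collections import Counter


def imbalance_ratio(labels):
    """IR = |smallest class| / |largest class|; 1.0 means perfectly balanced."""
    counts = Counter(labels)
    return min(counts.values()) / max(counts.values())


# Illustrative labels only: class 0 has 20 nodes, class 1 has 10, class 2 has 5.
toy_labels = [0] * 20 + [1] * 10 + [2] * 5
print(imbalance_ratio(toy_labels))  # 5 / 20 = 0.25
```

Lower values indicate more severe imbalance, which is why an imbalance ratio of 0.1 is a harder setting than 0.6.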

GraphME is a node classification framework that integrates the GraphSMOTE architecture with the ME loss function. By generating synthetic nodes for underrepresented classes and training with the ME loss function, GraphME improves performance on node classification tasks and addresses common class imbalance problems. The core idea is to strengthen GraphSMOTE’s representation learning by combining data augmentation with a customized loss function. Figure 2 illustrates the framework structure of the GraphME method.

Based on the GraphSMOTE architecture, we developed GraphME and conducted an in-depth analysis of the four primary modules of GraphSMOTE. Firstly, GraphSMOTE includes a feature extractor (encoder) driven by GraphSAGE7, which learns high-dimensional node representations by preserving node features and graph topology, as defined by the following equation:

This module plays a crucial role in generating artificial nodes for minority classes. Secondly, GraphSMOTE utilizes a synthetic node generator operating in latent space to create new minority nodes via interpolation:

The third component is the edge generator, responsible for predicting connections between synthetic nodes, thereby constructing a more balanced graph structure:

Ultimately, GraphSMOTE utilizes a GNN-based classifier to execute node classification on the augmented graph \(\widetilde{G}\), as expressed by:

Trained with the ME loss function, these modules collectively enable GraphME to achieve significant performance improvements in generating and classifying minority class nodes.
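To make the first three modules concrete, here is a toy pure-Python sketch of an encoder layer, latent-space SMOTE interpolation, and edge scoring for a synthetic node. It is not the GraphSMOTE or GraphME code: the mean aggregator, the bilinear sigmoid edge score, and the 0.5 threshold are our assumptions, and in the real model the matrices `W` and `S` are learned by gradient descent rather than fixed. The fourth module, the GNN classifier trained with the ME loss, is omitted.

```python
import math
import random


def sage_layer(X, adj, W):
    """GraphSAGE-style layer (assumed mean aggregator): ReLU(W @ [x_v || mean of neighbours])."""
    d = len(X[0])
    H = []
    for v, x_v in enumerate(X):
        neigh = adj.get(v, [])
        if neigh:
            agg = [sum(X[u][k] for u in neigh) / len(neigh) for k in range(d)]
        else:
            agg = [0.0] * d  # isolated node: no neighbour information
        concat = list(x_v) + agg
        z = [sum(w * x for w, x in zip(row, concat)) for row in W]
        H.append([max(0.0, val) for val in z])
    return H


def smote_interpolate(H, labels, v, rng):
    """Synthetic embedding (1 - delta) * h_v + delta * h_nn, nn = nearest same-class node."""
    candidates = [u for u in range(len(H)) if u != v and labels[u] == labels[v]]
    if not candidates:
        return list(H[v])  # no same-class neighbour: fall back to a copy
    nn = min(candidates, key=lambda u: math.dist(H[v], H[u]))
    delta = rng.random()  # delta ~ U(0, 1)
    return [(1 - delta) * a + delta * b for a, b in zip(H[v], H[nn])]


def edge_probability(h_v, h_u, S):
    """Assumed bilinear edge score sigmoid(h_v^T S h_u)."""
    s_hu = [sum(s * x for s, x in zip(row, h_u)) for row in S]
    score = sum(a * b for a, b in zip(h_v, s_hu))
    return 1.0 / (1.0 + math.exp(-score))


def link_synthetic(h_new, H, S, threshold=0.5):
    """Indices of existing nodes that the synthetic node is connected to."""
    return [u for u, h_u in enumerate(H) if edge_probability(h_new, h_u, S) > threshold]
```

In use, one would embed all nodes with `sage_layer`, call `smote_interpolate` for minority nodes until the classes are balanced, attach each synthetic node with `link_synthetic`, and pass the augmented graph to the classifier. Whether predicted edges are thresholded to binary values or kept as continuous weights is a design choice; this sketch thresholds.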

Related work

The problem of imbalanced learning in graphs can be broadly categorized into node-level and edge-level imbalance learning. Node-level imbalance refers to the uneven distribution of labeled nodes across different classes, whereas edge-level imbalance pertains to the unequal number of edges connecting nodes of different classes.

Node-level class imbalance learning

The primary challenge in node-level class imbalance learning is to ensure balanced information distribution across classes to facilitate unified model training. Dual-Regularized Graph Convolutional Networks (DRGCN)19 address this by introducing two regularization techniques: generating synthetic nodes via Generative Adversarial Networks (GAN)20 and minimizing latent distribution differences between unlabeled and labeled nodes, thereby achieving balanced representation learning. Conversely, Distance-wise Prototypical Graph Neural Network (DPGNN)21 employs prototype-driven training and distance metric learning to precisely capture the relative positions of nodes with respect to class prototypes, thus balancing training loss. The Topology-Aware Margin (TAM)22 method introduces connectivity and distribution-aware margins, comparing each node’s connection patterns with class average nodes and adaptively adjusting margins to mitigate class imbalance. Long-Tail Experts for Graphs (LTE4G)23 address the long-tail distribution phenomenon in class and node degree distributions by jointly tackling node classification problems using expert models and knowledge distillation techniques, thereby enhancing classification accuracy for both head and tail classes. The Graph Neural Network framework with Curriculum Learning (GNN-CL)24 employs adaptive graph oversampling to generate reliable interpolated nodes and edges. It integrates graph classification loss with metric learning loss, dynamically adjusting parameters during training to optimize the distribution of nodes in the feature space. This approach effectively addresses the issue of node label imbalance, thereby improving classification accuracy. The GNN-based Imbalanced Node Classification Model (GNN-INCM)25 enhances the representativeness and information retention of node embeddings through two synergistic modules: Embedding Clustering Optimization (ECO) and Graph Reconstruction Optimization (GRO). 
By incorporating a hard example strategy and knowledge distillation methods, this model significantly improves the representation and classification accuracy of minority-class nodes.

Edge-level class imbalance learning

Event-Aware Graph Attention Networks (EA-GAT)26 introduced a meta-learning-based model designed to encode fine-grained events using an event-aware graph attention mechanism. This model employs a gradient-based episodic learning approach to acquire transferable knowledge, effectively addressing the link prediction problem in event-driven social networks under few-shot scenarios. Node-wise Localized GNN (LGNN)27 processes graphs at both global and local levels. At the global level, all nodes rely on a foundational global GNN to encode overall graph patterns. At the local level, each node is adapted into a unique model based on the global model and its local context, thereby enabling few-shot link prediction across various parts of a single graph. Several studies have focused on detecting anomalous edges in dynamic graphs by tracking edge dynamics28,29,30. For instance, Miz et al.29 demonstrated that a Hopfield network model, combined with graph and temporal information, can accurately detect anomalous temporal spikes through a memory network. They also provided a distributed algorithm implementation suitable for large-scale, time-varying graphs. The Structural Graph Neural Network (StrGNN)30 proposed a node labeling function to identify the role of each node within the graph. This model employs a GNN with Gated Recurrent Units (GRUs)31 to capture changes in subgraph structures and temporal information, serving as indicators of anomalies. Wu et al.32 introduced a novel framework called GraphMixup, which enhances node classification performance by mixing features in the semantic relation space and capturing both local and global information in the graph structure using context-aware self-supervision. The framework specifically designs Edge Mixup to handle graph data, while the reinforcement-based mixing mechanism adaptively generates minority-class samples.

Experiments

In this section, we compare GraphME, which uses the ME loss function, against state-of-the-art graph neural networks through a series of experiments on publicly available graph datasets. All experiments were run on an NVIDIA Tesla T4 GPU with 16 GB of memory, an Intel Xeon CPU at 2.0 GHz, and 78 GB of RAM.

Datasets and performance measurement

Datasets

We use three classic datasets that are widely used in graph neural network research, all accessible through the PyTorch Geometric library33. Cora34 and Citeseer are two prominent citation networks with undirected graph structures, in which nodes represent documents and edges denote citation relationships. Node labels are used for multi-class classification, and node features are binary word vectors in which each element (0 or 1) indicates the absence or presence of a specific word in the document. Additionally, we use the BlogCatalog social network dataset9, in which nodes represent blog users and edges represent relationships between users. BlogCatalog is notably imbalanced: class 8 comprises 13.88% of the nodes, while class 38 comprises only 0.02%. These datasets serve as standard benchmarks for evaluating and comparing graph neural network algorithms.

To ensure fairness and comparability of research results, a uniform partitioning strategy was adopted for these datasets. Samples from each class were split into training, validation, and test sets in a 1:1:2 ratio. More detailed partitioning information can be found in Table 2.
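A per-class 1:1:2 split can be sketched as follows. The shuffling with a fixed seed and the rule that rounding remainders go to the test set are our assumptions; the paper’s exact procedure and the counts in Table 2 may differ.

```python
import random
from collections import defaultdict


def split_per_class(labels, ratios=(1, 1, 2), seed=0):
    """Split node indices class by class into train/val/test with the given ratios."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for node, y in enumerate(labels):
        by_class[y].append(node)
    train, val, test = [], [], []
    total = sum(ratios)
    for _, nodes in sorted(by_class.items()):
        rng.shuffle(nodes)
        n_train = len(nodes) * ratios[0] // total
        n_val = len(nodes) * ratios[1] // total
        train += nodes[:n_train]
        val += nodes[n_train:n_train + n_val]
        test += nodes[n_train + n_val:]  # remainder (about half) goes to test
    return train, val, test
```

Splitting inside each class preserves the class proportions in every subset, so the imbalance seen during training is also reflected in validation and test.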

Performance measurement

In the context of imbalanced datasets, the misclassification cost for minority classes is significantly higher than for majority classes. Therefore, we employ three primary evaluation metrics: classification accuracy (ACC), the area under the receiver operating characteristic curve (AUC–ROC) score, and the F-Score.

Classification accuracy (ACC) is the ratio of correctly classified samples to the total number of samples, calculated as follows:

\[ ACC = \frac{TP + TN}{TP + TN + FP + FN} \]

where True Positive (TP) refers to instances where the predicted and actual values match, with both being from the minority class. False Positive (FP) occurs when the predicted value is from the minority class, but the actual value belongs to the majority class. Conversely, False Negative (FN) is when the predicted value is from the majority class, while the actual value is from the minority class. True Negative (TN) describes instances where the predicted and actual values match, with both being from the majority class. However, under class imbalance ACC is dominated by the majority class and can therefore mask poor performance on minority classes.

To address this issue, we employ the AUC–ROC score, which evaluates the model’s overall discriminative ability across all decision thresholds. A value close to 1 indicates that the model ranks positive samples above negative samples almost perfectly. Additionally, the F-Score, the harmonic mean of precision and recall, measures how accurate (precision) and how complete (recall) the model’s positive predictions are. The F-Score is calculated as follows:

\[ F = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \]

where precision and recall are calculated as:

\[ \text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN} \]
These metrics collectively provide a comprehensive evaluation of model performance, particularly in handling imbalanced datasets, and more accurately reflect the model’s predictive capability across different classes.
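The metrics above can be sketched in plain Python. Here TP, FP, FN, and TN are computed one-vs-rest for a chosen positive class, the reported F1 is taken to be macro-averaged over classes, and AUC is shown for the binary case as the probability that a random positive outranks a random negative. These are our assumptions, since the text does not state how the multi-class scores are averaged; in practice, scikit-learn’s `f1_score(average="macro")` and `roc_auc_score(multi_class="ovr")` cover the same ground.

```python
def binary_counts(y_true, y_pred, positive):
    """TP, FP, FN, TN treating `positive` (e.g. a minority class) as the positive label."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    tn = len(y_true) - tp - fp - fn
    return tp, fp, fn, tn


def f_score(tp, fp, fn):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1, so minority classes count as much as majority ones."""
    scores = []
    for c in sorted(set(y_true)):
        tp, fp, fn, _ = binary_counts(y_true, y_pred, c)
        scores.append(f_score(tp, fp, fn))
    return sum(scores) / len(scores)


def binary_auc(scores_pos, scores_neg):
    """AUC as P(random positive scores above random negative); ties count 1/2."""
    wins = 0.0
    for sp in scores_pos:
        for sn in scores_neg:
            wins += 1.0 if sp > sn else 0.5 if sp == sn else 0.0
    return wins / (len(scores_pos) * len(scores_neg))
```

Because `macro_f1` averages per-class scores without weighting by class size, a model that ignores a rare class is penalized even when its overall accuracy looks high.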

Baseline model and hyperparameter settings

Baseline models

To demonstrate the superior performance of our proposed ME loss function in addressing imbalanced classification problems, we selected several advanced methods for comparative analysis. Through meticulously designed node classification experiments, we compared GraphME with GraphSMOTE and evaluated the performance of ME loss against CE loss across various methods, revealing the unique advantages and contributions of ME loss in handling imbalanced graph data.

1. Over-sampling: This classic method increases the number of minority class samples by duplicating existing ones or generating new ones, thereby balancing the dataset. In the embedding space, this is typically achieved by replicating the representations of minority nodes.

2. Re-weight35: This cost-sensitive approach assigns a specific loss weight to each class. Instead of altering the amount of data, it influences the learning process by adjusting sample weights.

3. Embed-SMOTE36: An extension of SMOTE that generates new minority class samples in the embedding space. Embed-SMOTE leverages proximity relationships in the embedding space to create more representative minority samples, enhancing the model’s ability to recognize minority classes.

4. GraphSMOTE16: A variant of SMOTE designed specifically for imbalanced graph data. It balances the node class distribution by generating new minority class nodes in the embedding space, preserving the structural characteristics of the graph while improving recognition of minority nodes. GraphSMOTE has several variants: \(\hbox {GraphSMOTE}_T\) (for edge prediction), \(\hbox {GraphSMOTE}_O\) (emphasizing both edge prediction and node classification), and \(\hbox {GraphSMOTE}_{\text {preT}}\) and \(\hbox {GraphSMOTE}_{\text {preO}}\), which add a pre-training phase to these two settings.

5. GraphME (Ours): Our proposed method introduces ME as a substitute loss function and combines it with GraphSMOTE. It applies the ME loss to imbalanced graph data, demonstrating its potential advantages in handling imbalanced classification problems.

These comparisons are used to evaluate the proposed ME loss function on graph node classification tasks with imbalanced data.
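The Re-weight baseline can take several forms. One common choice, assumed here and not necessarily the one used in the cited work35, weights each class \(c\) by \(N/(K\,n_c)\), where \(n_c\) is its sample count, and multiplies each sample’s cross-entropy term by its class weight. The function names below are hypothetical.

```python
import math
from collections import Counter


def inverse_frequency_weights(labels):
    """w_c = N / (K * n_c): rarer classes get larger weights; weights average to 1 over samples."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * n_c) for c, n_c in counts.items()}


def weighted_cross_entropy(probs, labels, weights, eps=1e-12):
    """Mean of w_{y_i} * (-log p_i[y_i]) over samples; probs are softmax outputs."""
    total = sum(weights[y] * -math.log(p[y] + eps) for p, y in zip(probs, labels))
    return total / len(labels)
```

Unlike oversampling, this leaves the data unchanged and only rescales each class’s contribution to the gradient.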

Hyperparameter settings

To ensure fairness and reproducibility and to achieve optimal results, all models were trained with the Adam optimizer. The imbalance ratio was set to 0.5 and the oversampling ratio to 2.0. We selected the best combination of hyperparameters from the following options:

- Learning rate: {0.01, 0.015, 0.001, 0.005}
- Weight decay: {0.0005, 0.0003}
- Dropout rate: {0.1, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8}
- Early stopping: 200 epochs for the Cora and BlogCatalog datasets, and 100 epochs for the Citeseer dataset.

For more detailed experimental parameter settings, please refer to Table 3.
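The search over these options can be sketched as an exhaustive grid of 4 × 2 × 7 = 56 configurations. The `evaluate` callback is hypothetical: it stands in for training a model with Adam under a given configuration and returning a validation score. Early stopping is dataset-specific rather than searched, so it is left out of the grid.

```python
from itertools import product

# Search space copied from the hyperparameter list above.
GRID = {
    "lr": [0.01, 0.015, 0.001, 0.005],
    "weight_decay": [0.0005, 0.0003],
    "dropout": [0.1, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8],
}


def configurations(grid):
    """Yield every combination of the grid as a dict."""
    keys = list(grid)
    for values in product(*(grid[k] for k in keys)):
        yield dict(zip(keys, values))


def grid_search(evaluate, grid=GRID):
    """Return (best_score, best_config); `evaluate(config)` is a hypothetical callback,
    higher is better, and ties keep the first configuration encountered."""
    best = None
    for cfg in configurations(grid):
        score = evaluate(cfg)
        if best is None or score > best[0]:
            best = (score, cfg)
    return best
```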

Experimental results

Performance comparison

Based on the analysis of the BlogCatalog dataset (Fig. 3), several key conclusions can be drawn. Under the ‘Origin’ setting, the use of the ME loss function, without any additional algorithms, resulted in a 5.6–7.3% improvement in the ACC and AUC–ROC metrics compared to the CE loss function. This improvement surpasses all ‘GraphSMOTE’ variants, with the ACC metric being approximately 1.7% higher than the best-performing method, ‘\(\hbox {GraphSMOTE}_{\text {preT}}\)’. For the sake of simplicity, the GraphSMOTE model employing ME loss is referred to as our GraphME model. Specifically, under ME loss, the ACC metric of the GraphME method was up to 6.3% higher than that of ‘\(\hbox {GraphSMOTE}_{\text {T}}\)’, further validating the efficacy of ME loss in enhancing the classification model’s discriminative ability. In terms of AUC–ROC, the ‘Origin’ method demonstrated a 7.30% improvement, while the ‘Re-weight’ method showed a 4.40% enhancement, both indicating significant gains. Similarly, in F1 score, the ME loss exhibited superior performance, with the ‘Origin’ method’s F1 score increasing by 5% and the ‘Embed-SMOTE’ method’s F1 score improving by 5.4%, significantly boosting the model’s performance.

To further substantiate the performance of ME loss on imbalanced classification problems, we conducted experiments on the Cora dataset comparing different methods under CE loss and ME loss. As illustrated in Fig. 4a,c, under the ‘Origin’ setting, ME loss improved the model’s ACC and F1 scores by approximately 10%, surpassing even all imbalance-handling methods trained with CE loss, by about 4% over the best of them. Our GraphME model and its four variants outperformed GraphSMOTE by 4–7% in ACC and F1 scores, further demonstrating the effectiveness of ME loss.

As shown in Fig. 4b, ME loss also led to a significant improvement in AUC–ROC. The ‘Origin’ method’s AUC–ROC increased by approximately 3%, with the highest AUC–ROC score for ‘\(\hbox {GraphME}_{\text {preO}}\)’ reaching 95.4%, a 2% improvement over ‘\(\hbox {GraphSMOTE}_{\text {preO}}\)’ with CE loss. Overall, ME loss enhances the model’s discriminative capability, making it more robust in handling imbalanced data.

Finally, Table 4 highlights the best results: across all compared methods, the ME loss function consistently outperformed the CE loss function, with notable improvements in both ACC and AUC–ROC. Specifically, for the ‘Origin’ method, ACC with ME loss was 18.8% higher than with CE loss, and the F1 score increased by 23.9%. This underscores the ability of the ME loss function to address imbalanced classification without any special handling.

Furthermore, in terms of AUC–ROC, our ‘\(\hbox {GraphME}_{\text {preT}}\)’ method achieved the highest performance, with an AUC–ROC of 89.9%, and it consistently yielded the best results among all evaluated methods. These findings suggest that the ME loss function can substantially improve the discriminative power and overall effectiveness of classification models on imbalanced datasets.

To make the experimental evaluation more rigorous, we also compared GraphME with three state-of-the-art models, GNN-INCM25, GraphMixup32, and GNN-CL24, on two well-established imbalanced datasets. Following the experimental settings in the respective publications and using the officially released code, we ran each baseline under its original configuration. As shown in Table 5, GraphME achieved the highest classification accuracy on both Cora and Citeseer, outperforming the other models by approximately 2.6–4.5% on Cora and by 0.2–9.1% on Citeseer. These findings underscore the strong learning and generalization capabilities of GraphME on imbalanced node classification tasks.

To check whether these gains are statistically significant, we tested the difference in accuracy between the CE and ME losses on three established datasets. As shown in Table 6, the resulting P values were \(\le\) 0.05. We therefore reject, at the 0.05 significance level, the null hypothesis that the two losses yield the same accuracy. Across the eight sample pairs drawn from the Cora, BlogCatalog, and Citeseer datasets, the ME loss improved average accuracy over the CE loss by 4.9%, 3.9%, and 10.5%, respectively. These results show that the improvements offered by GraphME are statistically significant and unlikely to be due to chance.
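This section does not name the test behind Table 6. As one illustration of a paired test over eight CE/ME accuracy pairs, the sketch below runs an exact sign-flip permutation test on the mean paired difference. This technique is substituted for illustration and is not necessarily the test the authors used. The accuracy values are also hypothetical placeholders, not the numbers in Table 6. With eight pairs there are 2^8 = 256 sign patterns, so the smallest attainable two-sided p-value is 2/256 ≈ 0.0078.

```python
import itertools


def paired_sign_flip_test(a, b):
    """Exact two-sided sign-flip test for paired samples.

    H0: the paired differences b[i] - a[i] are symmetric around zero, so every
    pattern of flipped signs is equally likely. The p-value is the fraction of
    the 2**n patterns whose |mean difference| is at least the observed one.
    """
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("a and b must be non-empty and of equal length")
    diffs = [y - x for x, y in zip(a, b)]
    n = len(diffs)
    observed = abs(sum(diffs) / n)
    extreme = 0
    for signs in itertools.product((1, -1), repeat=n):
        stat = abs(sum(s * d for s, d in zip(signs, diffs)) / n)
        if stat >= observed - 1e-12:  # small tolerance for floating-point round-off
            extreme += 1
    return sum(diffs) / n, extreme / 2 ** n


# Hypothetical accuracies for eight methods (illustrative only, NOT Table 6).
ce_acc = [0.60, 0.62, 0.65, 0.58, 0.70, 0.66, 0.64, 0.61]
me_acc = [0.65, 0.66, 0.69, 0.63, 0.72, 0.70, 0.69, 0.64]
mean_gain, p_value = paired_sign_flip_test(ce_acc, me_acc)
print(f"mean gain = {mean_gain:.3f}, p = {p_value:.4f}")  # prints: mean gain = 0.040, p = 0.0078
```

Exhaustive enumeration is practical only for small samples such as the eight pairs here. For larger samples, a paired t-test or a randomized permutation test would be the usual choice.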

Impact of imbalance ratio

This section evaluates how the imbalance ratio affects node classification performance and checks whether the effect holds across algorithms. Figure 5 shows the results of the two loss functions on the Citeseer dataset under imbalance ratios of 0.1, 0.2, 0.4, and 0.6. Fig. 5 shows that ME-Loss outperforms CE-Loss for most algorithms at every imbalance ratio. The largest AUC–ROC gap between ME-Loss and CE-Loss is 3.3% and occurs at a ratio of 0.2, for the ‘Reweight’ method. At a ratio of 0.1, the gap remains a notable 3.1% for the ‘Origin’ method. Even at a ratio of 0.6, the gap for the ‘Origin’ method is still 2.9%, and the gaps for the other methods exceed 2.4%.
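This section does not spell out how an imbalance ratio becomes a training split. A common convention, used in GraphSMOTE-style setups, keeps a fixed number of labeled nodes for each majority class and ratio × that number for each minority class. Under this convention, 0.1 is the most severe setting. The convention is an assumption here and is not confirmed for this paper. The hypothetical helper below sketches it in plain Python; `minority_classes`, `n_per_class`, and `seed` are illustrative parameters.

```python
import random
from collections import defaultdict


def imbalanced_train_split(labels, minority_classes, n_per_class=20, ratio=0.1, seed=0):
    """Sample training node ids: n_per_class nodes for each majority class and
    max(1, round(ratio * n_per_class)) nodes for each minority class."""
    if not 0 < ratio <= 1:
        raise ValueError("ratio must lie in (0, 1]")
    rng = random.Random(seed)  # seeded so the split is reproducible
    nodes_by_class = defaultdict(list)
    for node, y in enumerate(labels):
        nodes_by_class[y].append(node)
    train = []
    for y in sorted(nodes_by_class):
        k = n_per_class
        if y in minority_classes:
            k = max(1, round(ratio * n_per_class))  # shrink only the minority classes
        pool = nodes_by_class[y]
        if k > len(pool):
            raise ValueError(f"class {y} has {len(pool)} nodes, {k} requested")
        train.extend(rng.sample(pool, k))
    return sorted(train)


# Three classes of 50 nodes each; class 2 is treated as the minority class.
labels = [0] * 50 + [1] * 50 + [2] * 50
for r in (0.1, 0.2, 0.4, 0.6):
    idx = imbalanced_train_split(labels, {2}, n_per_class=20, ratio=r)
    print(r, [sum(labels[i] == c for i in idx) for c in range(3)])
```

Under this convention, the four ratios studied above give the minority class 2, 4, 8, and 12 labeled nodes against 20 for each majority class.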

These findings indicate that the ME loss is more robust than CE-Loss on imbalanced datasets. The largest gains appear at the more severe imbalance ratios of 0.1 and 0.2. Because ME-Loss mitigates data imbalance more effectively than CE-Loss, it improves classification performance. This insight is useful for further optimizing classification algorithms on real-world imbalanced data.

Efficiency comparison

To compare the efficiency of GraphME with the baseline models directly, we capped training at 2010 epochs. Table 7 reports the total runtime of each method in minutes. Table 7 and Fig. 4 together show that the ME loss improves classification accuracy without a significant increase in training time. In the Embed-SMOTE model, the ME loss is slightly faster than the traditional CE loss and reduces the runtime by 0.1 minutes. For the other models, its runtime is comparable to that of the CE loss. The ME loss therefore improves classification performance while keeping computational cost on par with existing methods, which makes it a practical choice for real-world applications.
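A small timing wrapper can reproduce this protocol: a fixed epoch budget with total runtime reported in minutes. The sketch below is a generic harness, not the authors' benchmarking code. `train_one_epoch` is a hypothetical callback that stands in for one epoch of whichever model and loss is being timed, and the default of 2010 epochs mirrors the cap stated above.

```python
import time
from typing import Callable


def total_runtime_minutes(train_one_epoch: Callable[[int], None], epochs: int = 2010) -> float:
    """Run exactly `epochs` training epochs and return the wall-clock time in minutes."""
    if epochs < 1:
        raise ValueError("epochs must be positive")
    start = time.perf_counter()  # monotonic, high-resolution clock
    for epoch in range(epochs):
        train_one_epoch(epoch)
    return (time.perf_counter() - start) / 60.0


# Usage with a dummy epoch function; a real run would wrap one optimizer step per epoch.
def dummy_epoch(epoch: int) -> None:
    sum(i * i for i in range(1000))


print(f"{total_runtime_minutes(dummy_epoch, epochs=50):.6f} min")
```

To compare CE with ME fairly, time both losses with the same model, data, and epoch budget. On a GPU, call `torch.cuda.synchronize()` at the end of the epoch callback so that asynchronous kernels are included in the measurement.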

Conclusion

The proposed Mixed Entropy Minimization (ME) method offers an innovative and efficient solution to class imbalance in graph-based node classification. Our GraphME model markedly improves node classification accuracy and model robustness without requiring additional complex steps. GraphME raises the model’s probability for the true class while neutralizing the probabilities of incorrect classes, which lets it mitigate class imbalance effectively. We conducted extensive node classification experiments on three real-world datasets: the Cora and Citeseer citation networks and the BlogCatalog social network. Performance was measured with three evaluation metrics (ACC, AUC–ROC, and F1). The results demonstrate that GraphME is more robust than the conventional cross-entropy objective. GraphME also integrates seamlessly with various adversarial training techniques, which further improves model performance and versatility.
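A small loss function can illustrate the mechanism summarized above: raise the true-class probability while flattening the incorrect-class probabilities. The sketch below is hypothetical. It pairs cross-entropy with a normalized complement-entropy term in the spirit of complement objective training. The weight `lam`, the normalization by log(K−1), and the function names are assumptions, not the authors' ME formula, which is in the official GraphME repository. The sketch works on the logits of a single node and uses only the standard library.

```python
import math


def _log_softmax(z):
    """Numerically stable log-softmax of a list of logits."""
    m = max(z)
    lse = m + math.log(sum(math.exp(v - m) for v in z))
    return [v - lse for v in z]


def mixed_entropy_style_loss(logits, target, lam=0.5):
    """Hypothetical CE + complement-entropy loss for ONE node (illustrative only).

    CE term: -log p_target, exactly as in standard cross-entropy.
    Complement term: entropy of the incorrect-class distribution renormalized to
    sum to 1, divided by its maximum log(K-1). Subtracting it rewards flat
    ("neutralized") incorrect-class probabilities.
    """
    k = len(logits)
    if not 0 <= target < k:
        raise ValueError("target out of range")
    ce = -_log_softmax(logits)[target]
    if k < 3:
        return ce  # a single wrong class has a trivial renormalized distribution
    wrong = [z for j, z in enumerate(logits) if j != target]
    log_q = _log_softmax(wrong)  # softmax over wrong logits equals p_j / (1 - p_target)
    complement_entropy = -sum(math.exp(lq) * lq for lq in log_q)
    return ce - lam * complement_entropy / math.log(k - 1)


# Same true-class probability, different spread over the wrong classes.
flat = mixed_entropy_style_loss([2.0, 0.0, 0.0], target=0)
peaked = mixed_entropy_style_loss([2.0, math.log(1.5), math.log(0.5)], target=0)
print(flat < peaked)  # prints True: flat incorrect-class probabilities give the lower loss
```

Both inputs assign the same probability to the true class, so they have identical cross-entropy. Only the complement term separates them, which is the "neutralizing" effect described in the text.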

GraphME’s strength lies in its streamlined and efficient design, which significantly improves classification performance without increasing model complexity. This “free imbalance defense” distinguishes GraphME from existing graph learning methods and provides a solid foundation for further research and applications. Although GraphME makes significant progress in addressing class imbalance in node classification, several future research directions warrant exploration. First, GraphME should be validated on more diverse and large-scale graph datasets to assess its generalizability and limitations more comprehensively. Second, the GraphME method could be extended to other graph learning tasks, such as link prediction and graph embedding, to evaluate its generality and adaptability.

Data availability

Data is available at: https://github.com/12chen20/GraphME/tree/main/data. All figures in this article were generated using Python software.

References

Fu, J., Zhou, W. & Chen, Z. Bayesian graph convolutional network for traffic prediction. Neurocomputing 582, 127507 (2024).

Wang, B. et al. Towards dynamic spatial-temporal graph learning: A decoupled perspective. In Proceedings of the AAAI Conference on Artificial Intelligence 38, 9089–9097 (2024).

Kipf, T. N. & Welling, M. Semi-supervised classification with graph convolutional networks. arXiv preprint arXiv:1609.02907 (2016).

Luo, H. et al. Prediction of drug-disease associations based on reinforcement symmetric metric learning and graph convolution network. Front. Pharmacol. 15, 1337764 (2024).

Defferrard, M., Bresson, X. & Vandergheynst, P. Convolutional neural networks on graphs with fast localized spectral filtering. Adv. Neural Inf. Process. Syst. 29 (2016).

Chen, J. & Xu, L. Improved modeling and generalization capabilities of graph neural networks with Legendre polynomials. IEEE Access (2023).

Hamilton, W., Ying, Z. & Leskovec, J. Inductive representation learning on large graphs. Adv. Neural Inf. Process. Syst. 30 (2017).

Lakhan, A., Mohammed, M. A., Ibrahim, D. A., Kadry, S. & Abdulkareem, K. H. ITS based on deep graph convolutional fraud detection network blockchain-enabled fog-cloud. IEEE Trans. Intell. Transport. Syst. (2022).

Tang, L. & Liu, H. Relational learning via latent social dimensions. In Proceedings of the 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 817–826 (2009).

Chawla, N. V., Bowyer, K. W., Hall, L. O. & Kegelmeyer, W. P. SMOTE: synthetic minority over-sampling technique. J. Artif. Intell. Res. 16, 321–357 (2002).

Lin, W.-C., Tsai, C.-F., Hu, Y.-H. & Jhang, J.-S. Clustering-based undersampling in class-imbalanced data. Inf. Sci. 409, 17–26 (2017).

Chung, Y.-A., Lin, H.-T. & Yang, S.-W. Cost-aware pre-training for multiclass cost-sensitive deep learning. arXiv preprint arXiv:1511.09337 (2015).

Xu, L. & Chen, J. GraphECC: Enhancing GraphSMOTE with enhanced complementary classifier. J. Intell. Fuzzy Syst., 1–13.

Shi, M., Tang, Y., Zhu, X., Wilson, D. & Liu, J. Multi-class imbalanced graph convolutional network learning. In Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence (IJCAI-20) (2020).

Kullback, S. & Leibler, R. A. On information and sufficiency. Ann. Math. Stat. 22, 79–86 (1951).

Zhao, T., Zhang, X. & Wang, S. GraphSMOTE: Imbalanced node classification on graphs with graph neural networks. In Proceedings of the 14th ACM International Conference on Web Search and Data Mining, 833–841 (2021).

Van der Maaten, L. & Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 9 (2008).

Grandvalet, Y. & Bengio, Y. Semi-supervised learning by entropy minimization. Adv. Neural Inf. Process. Syst. 17 (2004).

Shi, M., Tang, Y., Zhu, X., Wilson, D. & Liu, J. Multi-class imbalanced graph convolutional network learning. In Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence (IJCAI-20) (2020).

Goodfellow, I. et al. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 27 (2014).

Wang, Y., Aggarwal, C. & Derr, T. Distance-wise prototypical graph neural network in node imbalance classification. arXiv preprint arXiv:2110.12035 (2021).

Song, J., Park, J. & Yang, E. TAM: Topology-aware margin loss for class-imbalanced node classification. In International Conference on Machine Learning, 20369–20383 (PMLR, 2022).

Yun, S., Kim, K., Yoon, K. & Park, C. LTE4G: Long-tail experts for graph neural networks. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management, 2434–2443 (2022).

Li, X. et al. Graph neural network with curriculum learning for imbalanced node classification. Neurocomputing 574, 127229 (2024).

Huang, Z., Tang, Y. & Chen, Y. A graph neural network-based node classification model on class-imbalanced graph data. Knowl. Based Syst. 244, 108538 (2022).

Zhu, X. et al. Few-shot link prediction for event-based social networks via meta-learning. In International Conference on Database Systems for Advanced Applications, 31–41 (Springer, 2023).

Liu, Z., Fang, Y., Liu, C. & Hoi, S. C. Node-wise localization of graph neural networks. arXiv preprint arXiv:2110.14322 (2021).

Wang, H. & Qiao, C. A nodes’ evolution diversity inspired method to detect anomalies in dynamic social networks. IEEE Trans. Knowl. Data Eng. 32, 1868–1880 (2019).

Miz, V., Ricaud, B., Benzi, K. & Vandergheynst, P. Anomaly detection in the dynamics of web and social networks using associative memory. In The World Wide Web Conference, 1290–1299 (2019).

Cai, L. et al. Structural temporal graph neural networks for anomaly detection in dynamic graphs. In Proceedings of the 30th ACM international conference on Information & Knowledge Management, 3747–3756 (2021).

Chung, J., Gulcehre, C., Cho, K. & Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv preprint arXiv:1412.3555 (2014).

Wu, L. et al. GraphMixup: Improving class-imbalanced node classification by reinforcement mixup and self-supervised context prediction. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases, 519–535 (Springer, 2022).

Fey, M. & Lenssen, J. E. Fast graph representation learning with PyTorch Geometric. arXiv preprint arXiv:1903.02428 (2019).

Bojchevski, A. & Günnemann, S. Deep Gaussian embedding of graphs: Unsupervised inductive learning via ranking. arXiv preprint arXiv:1707.03815 (2017).

Yuan, B. & Ma, X. Sampling + reweighting: Boosting the performance of AdaBoost on imbalanced datasets. In The 2012 International Joint Conference on Neural Networks (IJCNN), 1–6 (IEEE, 2012).

Ando, S. & Huang, C. Y. Deep over-sampling framework for classifying imbalanced data. In Machine Learning and Knowledge Discovery in Databases: European Conference, ECML PKDD 2017, Skopje, Macedonia, September 18–22, 2017, Proceedings, Part I 10, 770–785 (Springer, 2017).

Acknowledgements

This work was supported by the National Social Science Foundation of China under Grant 20BTJ046.

Author information

Contributions

Conceptualization, L.X.; methodology, L.X. and Z.H.; software, J.C.; validation, J.C. and Z.H.; formal analysis, L.X.; investigation, J.C.; resources, L.X.; analysis of results, J.C. and Z.H.; writing (original draft preparation), L.X., J.C. and Z.H.; writing (review and editing), L.X.; visualization, J.C. and Z.H.; supervision, L.X.; project administration, L.X.; funding acquisition, L.X. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Xu, L., Zhu, H. & Chen, J. Imbalanced graph learning via mixed entropy minimization. Sci Rep 14, 24892 (2024). https://doi.org/10.1038/s41598-024-75999-6

DOI: https://doi.org/10.1038/s41598-024-75999-6