Abstract

Synthetic data is becoming a valuable tool for computational pathologists, aiding in tasks such as data augmentation and helping to address data scarcity and privacy concerns. However, its use requires careful planning and evaluation to avoid creating clinically irrelevant artifacts.

This manuscript introduces a comprehensive pipeline for generating and evaluating synthetic pathology data using a diffusion model. The pipeline features a multifaceted evaluation strategy with an integrated explainability procedure, addressing two key aspects of synthetic data use in the medical domain.

The evaluation of the generated data follows an ensemble-like approach. The first step assesses the similarity between real and synthetic data using established metrics. The second step evaluates the usability of the generated images in deep learning models, complemented by explainable AI methods. The final step verifies their histopathological realism through questionnaires answered by professional pathologists. We show that each of these evaluation steps is necessary, as each provides complementary information on the quality of the generated data.

The pipeline is demonstrated on 650 Whole Slide Images (WSIs) of five different tissues from the public GTEx dataset. An equal number of tiles is generated for each tissue, and their reliability is assessed with the proposed evaluation pipeline, yielding promising results.

In summary, the proposed workflow offers a comprehensive solution for generative AI in digital pathology, potentially aiding the community in their transition towards digitalization and data-driven modeling.


Introduction

In recent years, rapid advancements in scanning technologies have revolutionized traditional pathology, giving rise to the field of digital pathology1,2,3. The focus has shifted from traditional glass slides to whole slide images (WSIs): high-resolution digital representations of complete tissue sections. To create WSIs, tissue specimens are scanned with specialized slide scanners that capture fine microscopic detail, including cell structures, tissue patterns, and staining variations. The resulting WSIs serve as the digital counterparts of physical glass slides4. Pathologists can now review WSIs remotely, overcoming geographical constraints, and collaborate virtually to share insights and discuss challenging cases. Deep learning algorithms have demonstrated impressive capabilities in automating critical tasks such as cancer detection and grading5,6,7,8, as well as cell segmentation9,10,11. However, the success of these algorithms relies heavily on the availability and quality of large-scale annotated datasets12, which are difficult to obtain in the medical domain, where data scarcity and privacy concerns are prominent13. To address this issue, synthetic data generation has gained momentum: by producing data with statistical properties similar to those of real data, it offers a promising avenue for working with sensitive data such as medical images while mitigating privacy concerns14,15. We explore synthetic data as a standalone solution, simulating a scenario in which proprietary medical data are anonymized so that institutions can share and use them without compromising patient privacy.

At the heart of synthetic data generation lie generative models such as Generative Adversarial Networks (GANs)16. GANs have found widespread application in generating synthetic images across various medical domains17,18,19, including digital histopathology20,21,22,23. However, recent work has shown that diffusion models24 surpass GANs in generating both natural and medical images25,26. Diffusion models are a class of generative models inspired by non-equilibrium thermodynamics. They learn the data distribution in two stages, which then allows them to generate new data. First, a Markov chain of diffusion steps is defined in which noise is progressively added to the input. Then, the model learns to reverse this process, so that novel data can be generated starting from noise24. Applications of diffusion models in medical image generation include (but are not limited to) brain magnetic resonance images28,29, microscopic blood cell images30, positron emission tomography heart images31, and chest X-rays32. A comprehensive review on the subject has recently been published33.

The first application of diffusion models to histopathology image generation dates back to34, where the authors successfully employed denoising diffusion models to generate Hematoxylin and Eosin (H&E) tiles of three glioma subtypes. Their results demonstrated that diffusion models outperform GANs on this task according to common quantitative metrics for image generation quality. Following this success, the authors in35 also demonstrated the superiority of diffusion models over GANs in generating colon histopathology images containing six different cell nucleus types together with their corresponding masks. Additionally, the authors in36 used vision transformer-based diffusion models to generate colon histopathology images of higher quality than those generated by GANs.

Before generated data are used, it is crucial to evaluate them. However, how to evaluate generated data remains a topic of debate, with different studies adopting different approaches. The lack of a standardized pipeline for comprehensively assessing generated data is a major limitation. In this study, we tackle this issue by introducing a general framework for generating and, more importantly, thoroughly evaluating synthetic pathology images from multiple viewpoints. We illustrate how these multifaceted evaluations yield complementary insights that no single method can provide alone.

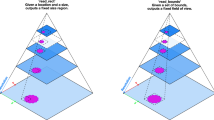

The proposed synthetic pathology data generation and evaluation pipeline, as demonstrated in Fig. 1, begins with the preprocessing stage, where tiles are extracted from WSIs.

Subsequently, a diffusion model is trained to generate data conditioned on different classes. After training, a sampling phase generates a specified number of synthetic images for each class. Lastly, the evaluation step encompasses three distinct sets of assessments. The first set involves quantitative metrics that measure the similarity between the generated and real images. For this quantitative evaluation, we employed well-established distribution-based metrics commonly used for synthetic images, including Inception Score (IS)37, Fréchet Inception Distance (FID)38, improved precision-recall39, and density-coverage40, as well as the IL-NIQE no-reference image quality assessment method41.

The second set evaluates the practical usability of the generated images in deep learning models. To complement this set, we investigated the convolutional filters learned by classifiers trained on real and on generated data, using the Concept Relevance Propagation (CRP) algorithm42, an explainable artificial intelligence approach.

Lastly, a qualitative assessment validates the biological realism of the generated data, a crucial aspect not covered by the previous assessments. This comprehensive approach ensures the reliability and usefulness of the generated dataset for our selected task. We applied the pipeline to 650 WSIs of five different tissues from the Genotype-Tissue Expression (GTEx) project43. We deliberately included multiple tissues, since most previous studies have focused on a single tissue type34,35,36; such a conservative approach cannot show whether similar success can be expected for other tissue types with morphologies of varying complexity.

To the best of our knowledge, this study represents a novel and reproducible approach to histopathology image generation and evaluation. What sets our work apart is the thorough evaluation of the generated data from diverse angles, encompassing different tissue types and utilizing a range of evaluation metrics. Through our emphasis on multifaceted evaluation, including the pioneering application of explainability methods, our objective is to offer a comprehensive understanding of the capabilities and limitations of the proposed generative model within the digital pathology domain.

The proposed pipeline. The graphical workflow of our proposed pipeline. (A) The preprocessing step: the tissue is first detected in a slide through a sequence of filters, and the slide is then tiled to extract batches of tiles. (B) The model training step: the extracted tiles are input into a denoising diffusion model, where noise is first added to the images. These perturbed images, together with the noise level embedding and the class embedding (in our case, the tissue type), are fed into a U-Net model, which predicts the noise added to the image. The added noise and the predicted noise are used to compute the loss that drives training. (C) The data generation step: pure noise, along with the desired class embedding, is fed into the trained model to predict the noise component, which is then scaled and subtracted from the current noisy image to obtain a less noisy image. This image serves as the new noisy input. After this process is repeated for t steps, the final image is generated. (D) The generated dataset then undergoes comprehensive evaluations in three distinct categories. The quantitative assessment uses metrics such as Inception Score (IS), Fréchet Inception Distance (FID), improved precision-recall (PRC, RCL), and density-coverage (DEN, COV) to gauge the similarity between the generated and real datasets, as well as IL-NIQE to assess image quality. The practical evaluation trains a ResNet classifier for tissue classification, comparing the usability of the generated data with that of the real dataset in terms of performance and explainability. Lastly, the biological realism of the generated data is evaluated through a series of questionnaires administered to expert pathologists.

Materials and methods

Dataset

Images were obtained from the GTEx study44. The GTEx dataset contains H&E-stained WSIs acquired with an Aperio slide scanner43.

Among the 53 available human tissues, we retained only samples from our tissues of interest, namely Brain, Kidney, Lung, Pancreas, and Uterus. This filtering yielded metadata for approximately 5000 samples. We then randomly selected 650 specimens (subjects) from the filtered metadata and obtained their corresponding WSIs, one slide per subject. Specimens whose WSI was not available for download were excluded from the final dataset (the list of downloaded WSIs is available in Supplementary Table S1).

Of the 648 downloaded WSIs, 589 were used as the training set for the deep learning models, while the remaining 59 were used exclusively for testing.

Preprocessing pipeline

WSIs have a very high spatial resolution: in our case, a base resolution of 0.494 μm per pixel at 20x magnification. At this resolution, a whole slide is far too large to be used directly as input to a neural network.

It is commonly recommended to divide a WSI into uniform-sized tiles which can then be utilized as input for deep learning models45,46. Therefore, a preprocessing stage was deemed necessary to adequately prepare the collected data for model training.

In our study, we employed the Histolab library47 to tile the slides; this library provides tools to generate tissue masks and to perform the tiling.

The WSIs do not necessarily contain only tissue information. Therefore, the creation of a tissue mask given a slide is a crucial step, as it ensures that the generated tiles primarily contain tissue-related information while minimizing extraneous background areas. We observed that the following ordered steps (depicted in Fig. 1A) define an adequate tissue mask:

1. RgbToGrayscale filter: converts a 3-channel RGB image into a single-channel grayscale image.
2. OtsuThreshold filter: given a grayscale image, returns a binary image in which foreground and background are separated by Otsu's threshold48.
3. BinaryDilation: performs a dilation on a binary image, i.e., each output pixel takes the maximum value among its neighboring pixels.
4. BinaryFillHoles filter: fills the holes (background regions fully enclosed by foreground) in a binary image.

We carefully selected the parameters of the tissue mask extractor. For the OtsuThreshold filter, we chose the operator.lt parameter, which applies a "less than" comparison between the image pixels and the estimated Otsu threshold, so that pixels darker than the threshold (tissue, as opposed to the bright background) form the foreground. For the BinaryDilation operation, we used a disk size of 5 and a single iteration. For the BinaryFillHoles operation, we used the default structuring element (i.e., an element with a square connectivity equal to one).
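The four filters and the parameters above map onto standard image-processing operations. The sketch below re-implements them with NumPy and SciPy rather than calling Histolab, so it only approximates Histolab's behavior: the luma weights used for grayscale conversion, the Otsu tie-breaking, and the helper names (`rgb_to_grayscale`, `otsu_threshold`, `disk`, `tissue_mask`) are our own assumptions.

```python
import numpy as np
from scipy import ndimage


def rgb_to_grayscale(rgb: np.ndarray) -> np.ndarray:
    """Step 1: (H, W, 3) uint8 RGB to uint8 grayscale (assumed ITU-R 601-2 luma weights)."""
    luma = rgb[..., :3].astype(np.float64) @ np.array([0.299, 0.587, 0.114])
    return np.clip(np.rint(luma), 0, 255).astype(np.uint8)


def otsu_threshold(gray: np.ndarray) -> int:
    """Step 2 helper: smallest intensity of the bright Otsu class, so `gray < t` is the dark class."""
    levels = np.arange(256)
    hist = np.bincount(gray.ravel(), minlength=256)
    w0 = np.cumsum(hist)            # pixel count of the dark class {0..k}
    w1 = w0[-1] - w0                # pixel count of the bright class {k+1..255}
    s0 = np.cumsum(hist * levels)   # intensity sum of the dark class
    s1 = s0[-1] - s0
    valid = (w0 > 0) & (w1 > 0)     # only splits with two non-empty classes
    between = np.zeros(256)
    m0 = s0[valid] / w0[valid]
    m1 = s1[valid] / w1[valid]
    between[valid] = w0[valid].astype(float) * w1[valid] * (m0 - m1) ** 2
    return int(np.argmax(between)) + 1


def disk(radius: int) -> np.ndarray:
    """Boolean disk-shaped structuring element of the given radius."""
    y, x = np.ogrid[-radius:radius + 1, -radius:radius + 1]
    return x ** 2 + y ** 2 <= radius ** 2


def tissue_mask(rgb: np.ndarray, disk_size: int = 5, iterations: int = 1) -> np.ndarray:
    gray = rgb_to_grayscale(rgb)                               # 1. RgbToGrayscale
    mask = gray < otsu_threshold(gray)                         # 2. Otsu with "less than": dark = tissue
    mask = ndimage.binary_dilation(mask, structure=disk(disk_size),
                                   iterations=iterations)      # 3. BinaryDilation, disk of size 5
    return ndimage.binary_fill_holes(mask)                     # 4. BinaryFillHoles, default cross element
```

SciPy's `binary_fill_holes` defaults to a cross-shaped (connectivity-one) element, which matches the default described above.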

In addition to the mask generation, other parameters are required in order to tile a WSI:

- Tile Size: the final size of the extracted tiles, in pixels.
- Level: the pyramid level of the slide from which tiles are extracted; the lower the level (minimum value 0), the higher the magnification.
- Pixel Overlap: the number of pixels by which adjacent tiles overlap.

We extracted non-overlapping 512 × 512 pixel tiles at 5x magnification (i.e., a resolution of 1.976 μm per pixel). These parameters were chosen in consultation with pathologists to suit the tissue classification task: they provide sufficient contextual information for tissue differentiation while avoiding excessive zoom that could render the tiles unidentifiable.
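The tile geometry follows from simple arithmetic: going from the 20x base level to 5x downsamples by a factor of 4, so each pixel covers 0.494 × 4 = 1.976 μm and a 512-pixel tile spans about 1.01 mm. A minimal sketch of this calculation and of a non-overlapping tile grid is shown below; the helper names and the example slide size are hypothetical, and the grid ignores the tissue mask that a real tiler would also check.

```python
def pixel_size_um(base_um_per_px: float, base_mag: float, target_mag: float) -> float:
    """Physical pixel size after downsampling from base_mag to target_mag."""
    return base_um_per_px * base_mag / target_mag


def tile_origins(width: int, height: int, tile: int, overlap: int = 0):
    """Top-left corners of a regular grid of full tiles; partial border tiles are dropped."""
    step = tile - overlap
    return [(x, y)
            for y in range(0, height - tile + 1, step)
            for x in range(0, width - tile + 1, step)]


px = pixel_size_um(0.494, base_mag=20, target_mag=5)
print(round(px, 3), round(512 * px, 1))      # 1.976 1011.7 (micrometers per pixel, per tile side)
print(len(tile_origins(2048, 1536, 512)))    # hypothetical 2048 x 1536 level: 4 x 3 = 12 tiles
```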

This resulted in 63,247 tiles divided into two sets: 56,990 tiles extracted from the 589 training WSIs were used to train the generative model and the classifier, while the remaining 6257 tiles, extracted from the 59 test WSIs, were used for testing. Nevertheless, even with a precisely defined mask, not all tiles capture informative attributes of the original slide. As illustrated in the left panel of Supplementary Figure S1, some tiles lack discernible morphological characteristics of the tissue and are therefore unlikely to contribute valuable information to the models. To filter out such tiles, we used a Complexity metric \(C\), inspired by49 and defined in Eq. (1):

where the pixel-wise gradient \(G\) in the horizontal (\(x\)) and vertical (\(y\)) directions is computed for each tile \(t\). The total sum of gradients is then multiplied by a term that depends on the width \(N\) and height \(M\) of the tile. A tile \(t\) with heterogeneous tissue exhibits high pixel gradient values and therefore a correspondingly high value of \(C_{t}\).

We applied a threshold of 0.4, chosen by visual inspection, to retain only tiles with sufficiently distinguishable features. This step removed 948 uninformative tiles, leaving a total of 62,299 tiles, ranging from 10,891 for Brain to 14,173 for Pancreas. Additional details are summarized in Supplementary Table S2.
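Since the exact form of Eq. (1) is not reproduced here, the sketch below only illustrates the idea under an explicit assumption: it scores a grayscale tile by the sum of absolute horizontal and vertical finite-difference gradients, normalized by the tile area \(N \cdot M\). The gradient operator and scaling of the original metric may differ, so the 0.4 threshold should not be assumed to carry over to this hypothetical `tile_complexity` function.

```python
import numpy as np


def tile_complexity(gray: np.ndarray) -> float:
    """Hypothetical complexity score: area-normalized sum of absolute x and y gradients."""
    g = gray.astype(np.float64)
    gy, gx = np.gradient(g)              # derivatives along rows (y) and columns (x)
    m, n = g.shape                       # tile height M and width N
    return float((np.abs(gx) + np.abs(gy)).sum() / (m * n))


def keep_informative(tiles, threshold: float):
    """Keep tiles whose (assumed) complexity reaches the threshold."""
    return [t for t in tiles if tile_complexity(t) >= threshold]
```

A uniform tile scores 0, while any texture raises the score, which is the behavior the filtering step relies on.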

Denoising diffusion probabilistic model

For our generative model, we used the Denoising Diffusion Probabilistic Model (DDPM) proposed in24. DDPM is a variant of diffusion models that learns a probabilistic model for denoising its input, which enables it to generate high-quality outputs. To condition generation on the five tissue types, we followed the classifier-free guidance approach outlined in25. In short, the pipeline works as follows.
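Classifier-free guidance can be summarized in two operations: during training, the class label is occasionally replaced by a null label so that the same network also learns an unconditional noise prediction; during sampling, the conditional and unconditional predictions are combined. The NumPy sketch below shows both. The null-label id, the drop probability `p_uncond`, and the guidance scale `cond_scale` are illustrative parameters, not values reported in this study, and the sketch does not reproduce the internals of the repository used.

```python
import numpy as np


def drop_labels(labels: np.ndarray, null_id: int, p_uncond: float,
                rng: np.random.Generator) -> np.ndarray:
    """Training: replace each class label with a hypothetical null id with probability p_uncond."""
    keep = rng.random(labels.shape) >= p_uncond
    return np.where(keep, labels, null_id)


def guided_noise(eps_cond: np.ndarray, eps_uncond: np.ndarray, cond_scale: float) -> np.ndarray:
    """Sampling: move from the unconditional prediction towards (and past) the conditional one."""
    return eps_uncond + cond_scale * (eps_cond - eps_uncond)
```

With `cond_scale = 1` the guided prediction equals the conditional one; values above 1 strengthen the class signal. This is equivalent to the common form \((1 + w)\) times the conditional prediction minus \(w\) times the unconditional one, with `cond_scale` \(= 1 + w\).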

First, Gaussian noise is added to the input images. The magnitude of this noise is set randomly by a scheduler, so that varying noise levels are applied to the input images. This exposes the model to a spectrum of noise levels, ranging from subtle distortions to pure isotropic Gaussian noise. The noisy image, along with an embedding vector representing the noise level and a trainable embedding vector representing the class (in our case the tissue type), is fed to a neural network based on U-Net50 that learns to predict the added noise. This prediction is compared with the noise actually added, which forms the basis for calculating the loss and guiding training. This process is illustrated in Fig. 1B.
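The training step can be written compactly because DDPM allows sampling a noisy image at any level \(t\) in closed form: \(x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1 - \bar\alpha_t}\,\epsilon\) with \(\epsilon \sim \mathcal{N}(0, I)\). The NumPy sketch below assumes the linear β schedule of the original DDPM paper (1e-4 to 0.02 over 1000 steps) and uses a placeholder `predict_noise` callable in place of the conditional U-Net; the schedule actually used in this study is not stated here.

```python
import numpy as np


def linear_alpha_bars(num_steps: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02):
    """Assumed linear beta schedule and its cumulative products alpha_bar_t."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return betas, np.cumprod(1.0 - betas)


def noisy_sample(x0: np.ndarray, t: int, alpha_bars: np.ndarray, rng: np.random.Generator):
    """Draw x_t ~ q(x_t | x_0) in closed form; also return the noise that was added."""
    eps = rng.standard_normal(x0.shape)
    a = alpha_bars[t]
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * eps, eps


def training_loss(predict_noise, x0, label, alpha_bars, rng) -> float:
    """One training example: random noise level, noisy image, MSE between true and predicted noise."""
    t = int(rng.integers(len(alpha_bars)))
    xt, eps = noisy_sample(x0, t, alpha_bars, rng)
    eps_hat = predict_noise(xt, t, label)        # stands in for the class-conditional U-Net
    return float(np.mean((eps_hat - eps) ** 2))
```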

Once training is complete, image generation begins. Starting from pure isotropic noise, the trained network predicts the noise component of its input. Subtracting this predicted noise (suitably scaled) from the current image yields a less noisy image, which becomes the network's next input. This iterative procedure is repeated for t steps and produces the final image, as illustrated in Fig. 1C.
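The iterative procedure can be sketched as standard DDPM ancestral sampling, in which each step computes \(x_{t-1} = \frac{1}{\sqrt{\alpha_t}}\big(x_t - \frac{\beta_t}{\sqrt{1 - \bar\alpha_t}}\hat\epsilon\big) + \sqrt{\beta_t}\,z\). This is a minimal NumPy sketch under that assumption: `predict_noise` is a placeholder for the trained conditional network, the number of steps is simply the length of `betas`, and we do not claim the repository samples in exactly this way (it may use a reduced-step scheme).

```python
import numpy as np


def ddpm_sample(predict_noise, shape, label, betas: np.ndarray,
                rng: np.random.Generator) -> np.ndarray:
    """Ancestral DDPM sampling from pure noise, conditioned on a class label."""
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal(shape)                    # start from isotropic Gaussian noise
    for t in reversed(range(len(betas))):
        eps_hat = predict_noise(x, t, label)
        # remove the scaled predicted noise from the current image
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        if t > 0:
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(shape)  # variance sigma_t^2 = beta_t
        else:
            x = mean                                  # last step is deterministic
    return x
```

As a sanity check, if `predict_noise` returns the exact noise for a dataset consisting of a single constant image, the final step recovers that image exactly.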

The model implementation is available online (https://github.com/lucidrains/denoising-diffusion-pytorch/tree/main). The images were resized to 256 × 256 pixels, as experiments with different sizes showed that this produced the most favorable outcomes. We trained with a batch size of 26 and a learning rate of 1e-5 for 215,000 steps. The number of steps for data generation (t) was set to 250. Every 1000 steps, we saved a model checkpoint and visually inspected sampled images; this monitoring was also used to select the learning rate empirically. Training continued until the training loss had converged and the images sampled during training were convincing, since we observed that image quality can keep improving even after the training loss has stabilized. Class conditioning was implemented with a trainable torch.nn.Embedding layer. Lastly, the generated images were enhanced with a sharpening filter with a factor of 3.

Evaluations

To comprehensively evaluate the quality of the generated images from various perspectives, we devised multiple sets of experiments, ranging from widely recognized metrics for assessing generated image quality to expert human evaluation. The following subsections describe each of these experiments.

Canonical quantitative evaluation metrics

We assessed the quality of the generated images using well-established metrics in the field of synthetic images, including Inception Score (IS)37, Fréchet Inception Distance (FID)38, improved precision-recall39, and density-coverage40 as well as IL-NIQE no-reference image quality assessment method41. Here, we will briefly explain each metric.

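The IS is calculated using Eq. (2):

\[ IS = \exp\left( E_{x \sim P_{g}} \left[ D_{KL}\left( p(y \mid x) \,\|\, p(y) \right) \right] \right) \qquad (2) \]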

where \(y\) is the set of classes (in our case tissues), \(x\) is an image, \(p(y \mid x)\) is the conditional class probability given the image, \(p(y)\) is the marginal class probability, \(D_{KL}\) denotes the Kullback-Leibler divergence, and \(E_{x \sim P_{g}}\) denotes the average over all generated images.

High IS values indicate that the generated images are diverse across classes while each individual image is confidently assigned to a class37. We used the torchmetrics implementation to calculate IS, splitting the images into 5 subsets and using the unbiased logits of the Inception_v3 network51 as features to compute the conditional and marginal probabilities.
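Given per-image class probabilities \(p(y \mid x)\), the split-based IS reduces to a few lines. The NumPy sketch below assumes the probabilities have already been obtained from a classifier; it does not run Inception_v3 and is not the torchmetrics implementation. Each split's score is the exponential of the mean KL divergence between \(p(y \mid x)\) and the split's marginal \(p(y)\), and the mean and standard deviation over splits are returned.

```python
import numpy as np


def inception_score(probs: np.ndarray, splits: int = 5, eps: float = 1e-12):
    """IS from an (n_images, n_classes) array of p(y|x); returns (mean, std) over splits."""
    scores = []
    for part in np.array_split(probs, splits):
        p_y = part.mean(axis=0, keepdims=True)                          # marginal p(y) in this split
        kl = (part * (np.log(part + eps) - np.log(p_y + eps))).sum(axis=1)  # KL(p(y|x) || p(y))
        scores.append(np.exp(kl.mean()))
    return float(np.mean(scores)), float(np.std(scores))
```

Two limiting cases show the range: confident and perfectly balanced predictions over \(K\) classes give IS \(= K\), while identical predictions for every image give IS \(= 1\).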

Fréchet Inception Distance (FID) quantifies the similarity between the distributions of generated and real images38. It passes the generated and real images through the original Inception_v3 network, extracts the outputs of a specified layer, and computes Eq. (3):

\[ FID = \lVert \mu_{r} - \mu_{g} \rVert_{2}^{2} + Tr\left( \Sigma_{r} + \Sigma_{g} - 2\left( \Sigma_{r} \Sigma_{g} \right)^{1/2} \right) \qquad (3) \]

Here, \(\mu_{r}\) and \(\Sigma_{r}\) are the estimated mean vector and covariance matrix of the real images, while \(\mu_{g}\) and \(\Sigma_{g}\) denote the same quantities for the generated images in the Inception_v3 feature space; \(Tr\) denotes the trace operator. To calculate FID, we used the torchmetrics implementation with the feature parameter set to 2048, which corresponds to the last pooling layer. Lower FID values indicate that the distributions of real and generated images are more similar38.
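The FID formula translates directly into code once features are available. The sketch below assumes `feats_real` and `feats_gen` are already-extracted (n_samples, n_features) arrays, for example 2048-dimensional pooled Inception_v3 activations; it is an illustration of the formula, not the torchmetrics implementation.

```python
import numpy as np
from scipy import linalg


def fid(feats_real: np.ndarray, feats_gen: np.ndarray) -> float:
    """Frechet distance between Gaussians fitted to two feature sets."""
    mu_r, mu_g = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    sigma_r = np.cov(feats_real, rowvar=False)
    sigma_g = np.cov(feats_gen, rowvar=False)
    covmean = np.real(linalg.sqrtm(sigma_r @ sigma_g))  # drop tiny imaginary parts from round-off
    diff = mu_r - mu_g
    return float(diff @ diff + np.trace(sigma_r + sigma_g - 2.0 * covmean))
```

Identical feature sets give an FID of (numerically) zero, and shifting every feature by a constant \(c\) in \(d\) dimensions adds exactly \(d \cdot c^{2}\), since only the mean term changes.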

Both FID and IS rely on the Inception_v3 network pretrained on ImageNet52, which may not be ideal for images whose distribution differs significantly from that of natural images53,54. To account for this, we additionally used methods that assess the quality of generated images by examining the similarity between the manifolds of the real and generated data. The authors in39 proposed improved precision and recall, which measure the overlap between the manifolds of generated and real data. Briefly, the data are projected into a feature space, and the manifolds of real and generated data are estimated by surrounding each data point with a hypersphere that reaches its k-th nearest neighbor39. Precision is the percentage of generated samples that fall within the real data manifold, while recall is the percentage of real samples that fall within the generated data manifold39. Higher values of both indicate greater overlap between real and generated data. However, precision and recall have been shown to be sensitive to outliers40. To overcome this problem, the authors in40 proposed the density and coverage metrics, which offer improved manifold estimation (for a more detailed explanation, please refer to40).

In this study, we calculated precision, recall, density, and coverage using the code provided by40, with the number of neighbors set to 10. To prevent potential bias arising from networks trained on specific datasets40, the real and generated data were projected into a 100-dimensional feature space by a randomly initialized ResNet-50 network55 whose last fully connected layer was modified to output a 100-dimensional vector (for comparability with previous studies, we also repeated the analyses using an ImageNet-pretrained network). Since an imbalance between the numbers of real and generated images can bias the estimation40, we randomly sampled from the real data the same number of images per tissue as were generated. To obtain reliable estimates, we repeated the entire process five times and report the mean and standard error of the mean.
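A compact NumPy version of the four manifold metrics, following the k-nearest-neighbor definitions of39 and40, is sketched below. It assumes the features have already been extracted (for example with the randomly initialized ResNet-50 described above) and builds full pairwise distance matrices, which is only practical for a few thousand samples; it is an illustration, not the reference code from40.

```python
import numpy as np


def pairwise_dist(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Euclidean distances between the rows of a and the rows of b."""
    sq = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.sqrt(np.maximum(sq, 0.0))


def kth_nn_radius(x: np.ndarray, k: int) -> np.ndarray:
    """Radius of each point's hypersphere: distance to its k-th nearest neighbor."""
    return np.sort(pairwise_dist(x, x), axis=1)[:, k]  # column 0 is the point itself


def prdc(real: np.ndarray, fake: np.ndarray, k: int = 10) -> dict:
    r_real = kth_nn_radius(real, k)
    r_fake = kth_nn_radius(fake, k)
    d = pairwise_dist(real, fake)                        # shape (n_real, n_fake)
    inside_real = d < r_real[:, None]                    # fake j lies in the ball of real i
    return {
        "precision": float(inside_real.any(axis=0).mean()),           # fakes on the real manifold
        "recall": float((d < r_fake[None, :]).any(axis=1).mean()),    # reals on the fake manifold
        "density": float(inside_real.sum(axis=0).mean() / k),         # how many real balls per fake
        "coverage": float((d.min(axis=1) < r_real).mean()),           # real balls containing a fake
    }
```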

IL-NIQE41 is a blind image quality assessment (BIQA) method that evaluates image quality without the need for a reference (non-distorted) image. The quality score is computed with a modified Bhattacharyya distance, which measures how far the statistics of the input image deviate from reference statistics learned from a set of high-quality natural images. Rather than being computed on the whole image at once, the score is computed on patches, and the patch scores are averaged into a single quality score for the image. To compute IL-NIQE, we used the PyTorch Toolbox for Image Quality Assessment Python package. We computed the IL-NIQE score for each image in both datasets and report the average score per dataset, as well as per class for both the synthetic and real datasets.

Deep learning-based practical assessments

While the aforementioned measures provide valuable insights into the quality of generated images and their similarity to real ones, they may not capture more complex morphological features (such as histopathological plausibility) or indicate whether the generated images can replace real images in machine learning studies. These aspects are a primary focus of the present study and require additional evaluation criteria. To address them, we designed a set of experiments using deep convolutional neural networks (CNNs)56.

We used the ResNet-50 architecture55 with randomly initialized weights to train a tissue classifier in two different scenarios. In the first scenario, we trained the network from scratch on the real tiles used to train the diffusion model. Following the results in57, we set aside 30% of the slides (rather than 30% of the tiles) for internal validation, leaving 39,294 tiles for training; this slide-level split reduces the risk of data leakage biasing the evaluation. To ensure that the network learns relevant morphological features associated with tissue types, we applied the following augmentations during training: spatial transformations (random rotation and random horizontal and vertical flipping); chromatic transformations (color jittering and random channel swapping) to mitigate the effects of staining variability58; and robustness-enhancing augmentations (Gaussian blurring and random erasing of parts of the image). Performance was evaluated on both the real tiles reserved for testing (see the Dataset section of Materials and Methods) and the generated tiles. Additionally, we retrained the network on a subset of the real tiles equal in size to the generated dataset, to compare the results and rule out performance differences due to dataset size.
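A slide-level split assigns every tile of a slide to the same side, so no slide contributes tiles to both training and validation. The sketch below illustrates this with NumPy; the function name and seed are hypothetical, and it does not stratify by tissue, since whether the original split did so is not stated.

```python
import numpy as np


def split_by_slide(slide_ids, val_fraction: float = 0.3, seed: int = 0):
    """Return (train_idx, val_idx) tile indices, holding out whole slides for validation."""
    slide_ids = np.asarray(slide_ids)
    slides = np.unique(slide_ids)
    rng = np.random.default_rng(seed)
    n_val = int(round(val_fraction * len(slides)))
    val_slides = rng.choice(slides, size=n_val, replace=False)
    is_val = np.isin(slide_ids, val_slides)          # every tile follows its slide
    return np.flatnonzero(~is_val), np.flatnonzero(is_val)
```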

The network was trained for 100 epochs with a batch size of 32 using the AdamW optimizer59 with an initial learning rate of 0.0002. The learning rate was decreased with a cosine annealing scheduler60 with T_max set to 100. The model with the highest validation accuracy was saved for subsequent steps. The model and training were implemented with PyTorch and PyTorch Lightning.
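With T_max equal to the number of epochs, cosine annealing decays the learning rate over exactly one half-cosine, reaching its minimum at the last epoch. The closed form below is the one documented for PyTorch's `CosineAnnealingLR`; the minimum learning rate `eta_min = 0` is PyTorch's default and an assumption here, since it is not reported above.

```python
import math


def cosine_lr(epoch: int, base_lr: float = 2e-4, t_max: int = 100, eta_min: float = 0.0) -> float:
    """Closed-form cosine annealing from base_lr (epoch 0) to eta_min (epoch t_max)."""
    return eta_min + (base_lr - eta_min) * (1 + math.cos(math.pi * epoch / t_max)) / 2


schedule = [cosine_lr(e) for e in range(101)]
print(schedule[0], round(schedule[50], 8))   # 0.0002 0.0001
```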

In the second scenario, using exactly the same training setting as in the first, we trained a network from scratch on the generated tiles. The only difference was that, since the generated images are individual tiles not associated with any slide, we reserved 30% of the tiles (rather than slides) for internal validation, leaving 10,500 tiles for training. The trained network was then evaluated on two distinct test sets. The first comprised a randomly selected 15% subset of the slides used to train the diffusion model (referred to as the internal test set). The second consisted of real slides never seen by the diffusion model (the same test set used to assess the network trained on real data).

We evaluated the models on the test sets using multi-class accuracy (ACC) and the Matthews correlation coefficient (MCC)61,62,63.
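For more than two classes, MCC is computed from the full confusion matrix: with \(c\) correct predictions out of \(s\) samples, true class counts \(t_k\), and predicted class counts \(p_k\), \(MCC = (c\,s - \sum_k p_k t_k) / \sqrt{(s^2 - \sum_k p_k^2)(s^2 - \sum_k t_k^2)}\). The NumPy sketch below implements this formula alongside accuracy; the function names are our own.

```python
import numpy as np


def confusion(y_true: np.ndarray, y_pred: np.ndarray, n_classes: int) -> np.ndarray:
    """Rows: true class, columns: predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm


def accuracy_and_mcc(y_true, y_pred, n_classes: int):
    cm = confusion(np.asarray(y_true), np.asarray(y_pred), n_classes)
    s = cm.sum()                 # number of samples
    c = np.trace(cm)             # correctly classified samples
    t = cm.sum(axis=1)           # true count per class
    p = cm.sum(axis=0)           # predicted count per class
    num = c * s - p @ t
    den = np.sqrt(float(s ** 2 - p @ p)) * np.sqrt(float(s ** 2 - t @ t))
    mcc = num / den if den > 0 else 0.0   # undefined when all predictions fall in one class
    return float(c / s), float(mcc)
```

Unlike accuracy, MCC is 0 for a classifier that always predicts the same tissue, even when that tissue is common.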

The explainability analysis

We employed Concept Relevance Propagation (CRP)42 to assess whether the ResNet-50 used similar concepts, represented by convolutional filters in CNNs, to classify images of the same class regardless of the dataset it was trained on. This analysis was crucial to verify that the classifier used consistent strategies to identify the tissue of a tile, regardless of the tile's origin (real or generated). The resulting insights informed our evaluation of both the classifier itself and the quality of the synthetic tiles generated by the diffusion model.

CRP combines local and global approaches to provide robust and comprehensive explanations of a model’s predictions. It is based on Layer-wise Relevance Propagation (LRP)64 but extended to disentangle explanations based on concepts learned by the network. CRP computes concept-conditional heatmaps to locate activation of concepts in the input space and provides dataset-level statistics to investigate how different classes or images activate specific channels in the model.

We extracted the important concepts for each class from the network trained on the generated dataset by randomly selecting one generated image per class and choosing convolutional layer 4, which in the ResNet-50 architecture is the last convolutional block before the output. This choice is justified because we aimed to identify higher-level concepts, which are typically encoded in deeper layers closer to the network output65,66. The same process was applied to the network trained on real data.

Afterwards we constrained the relevance propagation by conditioning it on both the class and the layer. This allowed us to determine the significance of each channel in the selected layer for accurately classifying the given tile as belonging to its corresponding class.

In our analysis, we extracted, for each model, a list of channels per class sorted by relevance. The most relevant concept per class was then used for feature visualization, in which we obtained the most representative samples for each concept from both the synthetic and real datasets, along with attribution heatmaps. Additionally, we determined the prevalent class associated with each of these concepts to evaluate the integrity and efficacy of the concept extraction procedure. This analysis also helped assess the generalizability of the findings by examining whether the same associations hold when the same model is applied to different datasets. The analysis was performed with the CRP Python package (https://github.com/rachtibat/zennit-crp).
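Once per-channel relevances are available, the ranking and prevalent-class steps are simple array operations. The sketch below assumes a precomputed (n_images, n_channels) relevance matrix for one layer of one model, for example obtained with CRP conditioned on each image's class; it does not call the zennit-crp API. The `topk_overlap` helper is a hypothetical way to compare the same model's top channels on real versus synthetic images (channel indices of two different networks are not comparable).

```python
import numpy as np


def top_channels(relevance: np.ndarray, labels: np.ndarray, cls: int, k: int = 5) -> np.ndarray:
    """Channels sorted by mean relevance over images of class `cls` (most relevant first)."""
    mean_rel = relevance[labels == cls].mean(axis=0)
    return np.argsort(mean_rel)[::-1][:k]


def dominant_class(relevance: np.ndarray, labels: np.ndarray, channel: int):
    """Class whose images give `channel` the highest mean relevance."""
    classes = np.unique(labels)
    means = [relevance[labels == c, channel].mean() for c in classes]
    return classes[int(np.argmax(means))]


def topk_overlap(a: np.ndarray, b: np.ndarray) -> float:
    """Hypothetical agreement measure: fraction of shared channels between two top-k lists."""
    return len(set(a.tolist()) & set(b.tolist())) / len(a)
```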

Qualitative evaluations

It is acknowledged that human expert evaluation remains the ultimate benchmark, particularly in the case of medical images. For this reason, we asked three professional pathologists (LC, EM, ET) to assist us in evaluating the quality of our generated images.

We designed two different questionnaires. The first half of the first questionnaire included 100 questions, in each of which pathologists were presented with three tiles from a single tissue and asked to identify the tissue. Half of the questions featured real tiles and the other half generated tiles. The tile type (real vs. generated) was not disclosed to the pathologists, the questions were randomized, and all tissues were equally represented. The aim was to assess the pathologists' ability to identify tissues from a limited number of tiles and to examine potential performance differences between real and generated data. The second half of the first questionnaire consisted of another 100 questions, in which pathologists had to determine whether a single given tile was real or generated. The number of questions was equal for each tissue and data type (real or generated), and, as in the first half, the questions were randomized across tissues and data types. All tiles were randomly selected but manually reviewed to ensure diversity. Examples of questions from the first questionnaire are shown in Supplementary Figure S2.
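The balanced, randomized design of each 100-question block can be sketched as follows: five tissues times two data types give ten cells, each filled with ten questions and then shuffled. This is a hypothetical illustration of the design only; tile selection and the manual diversity review are not modeled.

```python
import random

TISSUES = ["Brain", "Kidney", "Lung", "Pancreas", "Uterus"]


def balanced_questions(n_questions: int = 100, seed: int = 0):
    """Equal numbers of (tissue, source) questions, in shuffled order."""
    cells = [(tissue, source) for tissue in TISSUES for source in ("real", "generated")]
    per_cell, remainder = divmod(n_questions, len(cells))
    if remainder:
        raise ValueError("n_questions must be a multiple of the number of cells")
    questions = [cell for cell in cells for _ in range(per_cell)]
    random.Random(seed).shuffle(questions)     # randomize across tissues and data types
    return questions
```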

The follow-up questionnaire was sent one week after the responses to the first questionnaire were received. Pathologists were asked to rate, from 0 (not important) to 5 (very important), how much they had relied on specific image features to distinguish real from generated tiles. The aim of this phase was to determine whether the pathologists relied predominantly on visual attributes, such as blurriness, chromatic features, or the presence of artifacts, or whether they also found biological or morphological irregularities that helped them discriminate between real and generated images. Of the seven questions, four addressed visual attributes and three addressed biological attributes. The full list of questions is available in Supplementary Table S3.

Implementation

All data processing and model training code was implemented in Python (version 3.7.16 or higher). The specific packages used are listed in the corresponding Materials and Methods sections. The evaluation questionnaires were implemented with JavaScript and the Google Apps Script APIs.

Pre-processing, classifier training, and evaluations were performed on a single machine with two NVIDIA GTX 1080 Ti GPUs, an Intel i7-6700 CPU, and 32 GB of RAM. The diffusion model was trained on a cluster with two NVIDIA V100 GPUs with 32 GB of VRAM.

All code is available in the GitHub repository https://github.com/Mat-Po/diffusion_digital_pathology.

Results

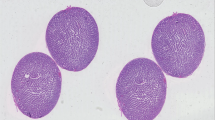

We used the set of 56,990 extracted real tiles as input for the diffusion model and trained it for 215,000 steps. After confirming that the loss had converged and that preliminary visual assessments of image quality were satisfactory, we generated 3001 synthetic images per tissue; this number was dictated by our available resources and time constraints. A pair of real and generated sample images for each tissue is shown in Fig. 2.

After our initial visual examinations, which confirmed a strong resemblance between the generated images and actual data, we moved forward with our evaluation pipeline.

Similarity metrics show that the generated data resemble real images

After generating the synthetic dataset, we conducted a quantitative assessment of its quality using various synthetic image evaluation metrics. These results are summarized in Table 1.
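For reference, the Inception Score discussed in the next paragraph follows the definition of Salimans et al.37: it is the exponential of the average KL divergence between each image's predicted class distribution p(y|x) and the marginal p(y). The sketch below computes it from precomputed softmax outputs. In the standard metric these come from an ImageNet-pretrained Inception network51; the choice of 10 splits is the common convention and an assumption here, not necessarily the setting used in this study.

```python
import numpy as np


def inception_score(probs, n_splits=10, eps=1e-12):
    """Inception Score from an (N, K) array of classifier softmax outputs p(y|x).

    IS = exp(E_x[KL(p(y|x) || p(y))]), where p(y) is the marginal over the images.
    Following common practice, it is computed on n_splits disjoint chunks, and the
    mean and standard deviation over chunks are returned.
    """
    probs = np.asarray(probs, dtype=float)
    scores = []
    for chunk in np.array_split(probs, n_splits):
        p_y = chunk.mean(axis=0, keepdims=True)  # marginal class distribution
        kl = np.sum(chunk * (np.log(chunk + eps) - np.log(p_y + eps)), axis=1)
        scores.append(np.exp(kl.mean()))
    return float(np.mean(scores)), float(np.std(scores))


# Confident predictions spread evenly over 5 classes give the maximum IS of 5;
# identical uniform predictions give the minimum IS of 1.
confident = np.tile(np.eye(5), (20, 1))
uniform = np.full((100, 5), 0.2)
```

Because the score lies between 1 and the number of classes K, it rewards images that are each classified confidently while collectively covering many classes.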

Overall, the results indicate that the generated images closely resemble the real images. Our generated images achieved a high Inception Score (2.9115 ± 0.0339; typical reported values lie between 2 and 3, and higher is better), surpassing previous attempts at generating synthetic digital pathology images34,35,36. However, this score is still lower than the score obtained on our real dataset (3.2892 ± 0.0149). Our FID is slightly higher than, but still comparable to, the values reported in other studies (FID values for synthetic pathology images typically range from 10 to 40, and lower is better)34,35,36,67. Note that these studies used a smaller field of view, which can result in a lower FID. For instance, in35, doubling the field of view (from ×20 to ×10 magnification, the only experiment equivalent to our field of view) more than doubled the FID, to 38.1, which exceeds our reported FID. Moreover, the results reported in36 show that changing the dataset substantially changes the achieved FID. Our precision and recall values approach the maximum of 1 and outperform previous work34,36. However, these studies calculated precision and recall using ImageNet-pretrained networks, and the choice of network can affect the results40. For a fairer comparison, we therefore also recalculated our values using an ImageNet-pretrained ResNet50. With this network, our precision still exceeds that of the other studies, while our recall remains comparable to most of them. Compared with34, however, our recall is substantially lower, although this is accompanied by a substantially higher precision. These comparisons are summarized in Supplementary Table S4.
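FID fits a Gaussian to the feature embeddings of each image set and measures the Fréchet distance between the two Gaussians38. The sketch below implements that formula, assuming the features have already been extracted (the standard metric uses 2048-dimensional Inception-v3 pool features); it is an illustration, not the implementation used in this study.

```python
import numpy as np
from scipy import linalg


def frechet_distance(feats_real, feats_gen):
    """Fréchet Inception Distance between two (N, D) feature matrices.

    FID = ||mu_r - mu_g||^2 + Tr(S_r + S_g - 2 (S_r S_g)^(1/2)),
    where mu and S are the mean and covariance of each feature set.
    """
    feats_real = np.asarray(feats_real, dtype=float)
    feats_gen = np.asarray(feats_gen, dtype=float)
    mu_r, mu_g = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    s_r = np.cov(feats_real, rowvar=False)
    s_g = np.cov(feats_gen, rowvar=False)
    covmean = np.real(linalg.sqrtm(s_r @ s_g))  # discard negligible imaginary parts
    diff = mu_r - mu_g
    return float(diff @ diff + np.trace(s_r + s_g - 2.0 * covmean))


rng = np.random.default_rng(0)
feats = rng.normal(size=(500, 4))                 # stand-in for network embeddings
same = frechet_distance(feats, feats)             # ~0 for identical feature sets
shifted = frechet_distance(feats, feats + 1.0)    # a mean shift of 1 in 4 dimensions adds 4
```

Because the estimate depends on both the number of samples and the feature extractor, FID values are strictly comparable only when these match, which is one reason why cross-study comparisons are delicate.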
To the best of our knowledge, no previous study has reported density and coverage; our values for these metrics are also close to the maximum of 1, indicating high similarity between generated and real images. Similarly, to our knowledge, IL-NIQE has not previously been used to evaluate the quality of generated pathology images (for reference, typical IL-NIQE values for real images range from about 30 to 60, and lower is better). The average score of the generated data (59.66) is higher than that of the real data (33.25), suggesting that, as anticipated, the generated data may appear less realistic than real data. Because, to our knowledge, no other studies report IL-NIQE scores when evaluating diffusion models, this level of realism cannot be benchmarked directly. More generally, comparisons between studies may not be entirely fair or feasible owing to differences in datasets, tile sizes, zoom levels, and tissues. Improving image quality is not the main focus of this paper; rather, we present these quantitative metrics to show that our generated images are of similar quality to those of previously published studies that used diffusion models.
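Precision and recall39 and density and coverage40 are all defined through k-nearest-neighbour balls in a feature space: precision asks how many generated samples fall inside the balls around real samples, recall asks the reverse, density counts how many real balls contain each generated sample, and coverage asks how many real balls contain at least one generated sample. The sketch below is a minimal NumPy/SciPy version under these definitions; the feature vectors are assumed to be precomputed by some embedding network (whose choice matters, as noted above), and k=5 is an assumption.

```python
import numpy as np
from scipy.spatial.distance import cdist


def knn_radii(feats, k):
    """Distance from each point to its k-th nearest neighbour in the same set."""
    d = cdist(feats, feats)
    return np.sort(d, axis=1)[:, k]  # column 0 is the point itself (distance 0)


def prdc(real, fake, k=5):
    """k-NN precision/recall (Kynkäänniemi et al.) and density/coverage (Naeem et al.)."""
    r_real = knn_radii(real, k)                 # (N,) radii of the real k-NN balls
    r_fake = knn_radii(fake, k)                 # (M,) radii of the fake k-NN balls
    d = cdist(real, fake)                       # (N, M) real-to-fake distances
    fake_in_real_ball = d <= r_real[:, None]    # fake_j lies in the ball of real_i
    real_in_fake_ball = d <= r_fake[None, :]    # real_i lies in the ball of fake_j
    return {
        "precision": float(fake_in_real_ball.any(axis=0).mean()),
        "recall": float(real_in_fake_ball.any(axis=1).mean()),
        "density": float(fake_in_real_ball.sum(axis=0).mean() / k),
        "coverage": float(fake_in_real_ball.any(axis=1).mean()),
    }


rng = np.random.default_rng(0)
real = rng.normal(size=(300, 8))
close = prdc(real, rng.normal(size=(300, 8)))  # same distribution: values expected near 1
far = prdc(real, real + 100.0)                  # disjoint supports: all four are 0
```

Unlike precision, density is not capped at 1, so a value far above 1 signals generated samples crowding into dense regions of the real data.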

The calculated metrics assess the overall quality of the generated dataset. For a more detailed analysis, we also calculated FID and IL-NIQE separately for each class; the results are presented in Table 2. While an overall increase in FID was expected when calculating it per class, the size of this increase varied across tissues. Specifically, brain and pancreas showed a markedly larger increase in FID than uterus and lung, whose FID values stayed close to the overall score. Interestingly, the class-wise IL-NIQE scores do not consistently correlate with the class-wise FID scores: the gap between the average IL-NIQE scores of real and synthetic data suggests that the generated lung, pancreas, and uterus images are of lower quality than the generated brain and kidney images. These results highlight the importance of examining the performance of a generative model at the class level with multiple methods, as this reveals how the quality of the generated data varies across tissue types.

Generated data exhibit performance comparable to real data in a deep learning-based task

Table 3 summarizes the test performance of the trained ResNet-50 for tissue classification under the different experimental settings. To provide a deeper understanding of the classification outcomes, the corresponding confusion matrices are shown in Supplementary Figure S3.
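The confusion matrices and accuracies referred to here can be computed with a few lines of NumPy. This sketch is an illustration rather than the authors' evaluation code, and the class index order is an assumption; scikit-learn's `confusion_matrix` performs the same counting.

```python
import numpy as np

# Class order is an assumption for illustration.
CLASSES = ["brain", "kidney", "lung", "pancreas", "uterus"]


def confusion_matrix(y_true, y_pred, n_classes=len(CLASSES)):
    """Counts with rows = true tissue and columns = predicted tissue."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def summarize(cm):
    """Overall accuracy and per-class recall (diagonal divided by row sums)."""
    with np.errstate(invalid="ignore", divide="ignore"):
        recall = np.diag(cm) / cm.sum(axis=1)  # NaN for classes with no samples
    return float(np.trace(cm) / cm.sum()), dict(zip(CLASSES, recall.tolist()))


# Toy predictions: one brain tile called kidney, one pancreas tile called lung.
cm = confusion_matrix([0, 0, 1, 2, 3, 4], [0, 1, 1, 2, 2, 4])
accuracy, per_class = summarize(cm)
```

Per-class recall is what makes tissue-specific drops, such as pancreas tiles being predicted as lung, visible even when the overall accuracy stays high.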

The results indicate no significant difference in the performance of the network trained on real data when tested on unseen real data versus generated data. This suggests that the generated images capture the diverse morphological patterns of the original tissues, since the network classifies them as consistently as real tiles. In contrast, the network trained on generated data performs worse on both seen and unseen real data. Earlier studies have also documented lower performance when training solely on generated rather than real data36. Several factors may contribute to this reduction. First, the network trained on generated data had fewer training samples than the one trained on real data. Indeed, when we retrained the network on the same number of real tiles as generated ones, we also observed a small decrease in accuracy on external real data (Table 3). Second, as the confusion matrices show, the decline is particularly pronounced for certain tissues, such as pancreas and lung. This suggests that these tissues have more intricate and complex characteristics, which may make it harder for the diffusion model to generate diverse and accurate tiles. Interestingly, this contrasts with some of our quantitative metrics, such as the class-wise FID, which would have predicted greater difficulties for tissues such as brain. The deep learning assessment did not confirm this expectation; instead, it correlates more closely with the IL-NIQE results, which predicted lower quality for lung, pancreas, and uterus images, underscoring the value of multifaceted evaluation.
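The text does not specify how the real tiles were subsampled for the size-matched experiment; a stratified random draw of the same number of tiles per tissue is one plausible approach and is sketched below. The function name and the toy label counts are hypothetical.

```python
import numpy as np


def balanced_subsample(labels, n_per_class, seed=0):
    """Indices of a random subset containing exactly n_per_class samples of every label.

    Intended to shrink a real training set to the size of a generated one
    (a hypothetical helper, not the authors' code).
    """
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    picked = []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        if len(idx) < n_per_class:
            raise ValueError(f"class {cls!r} has only {len(idx)} samples")
        picked.append(rng.choice(idx, size=n_per_class, replace=False))
    return np.sort(np.concatenate(picked))


labels = np.repeat(np.arange(5), [400, 380, 420, 390, 410])  # toy tissue labels
idx = balanced_subsample(labels, n_per_class=300)
```

In practice the draw should be made within the training split only, so that tiles from held-out slides cannot leak into training.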

Despite these drawbacks, the overall results are promising, as they demonstrate that the generated images exhibit similar patterns to those found in real tiles. Furthermore, by utilizing a small number of generated images, we can achieve performance levels comparable to those attained using real data.

Explainability-mediated analysis further reveals insights about the features learned from both datasets

We used CRP to identify the top channel, or concept, activated by each class in both ResNets (one trained on real data and the other on generated data), yielding five concepts per network in total, one per class. These channels are listed in Supplementary Table S5. Having determined the class-specific concepts, we visualized which input pixels activate them.
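Once per-sample channel relevances are available, selecting a class-specific concept reduces to an aggregation. The sketch assumes a precomputed relevance matrix (computing CRP relevances42 themselves is not shown) and picks the channel with the highest mean relevance per class; the paper's exact selection criterion may differ, and all names and toy values are hypothetical.

```python
import numpy as np


def top_channel_per_class(relevance, labels, class_names):
    """Channel with the highest mean relevance for each class.

    relevance: (n_samples, n_channels) relevance of each channel of one layer
    for each sample's class (assumed precomputed, e.g. with a CRP implementation).
    labels: (n_samples,) integer class index of each sample.
    """
    relevance = np.asarray(relevance, dtype=float)
    labels = np.asarray(labels)
    top = {}
    for c, name in enumerate(class_names):
        mask = labels == c
        if not mask.any():
            continue  # no samples for this class
        top[name] = int(np.argmax(relevance[mask].mean(axis=0)))
    return top


rng = np.random.default_rng(0)
rel = rng.uniform(0.0, 0.1, size=(8, 6))
labels = np.array([0, 0, 0, 0, 1, 1, 1, 1])
rel[labels == 0, 3] += 1.0  # toy data: channel 3 dominates class 0
rel[labels == 1, 5] += 1.0  # toy data: channel 5 dominates class 1
top = top_channel_per_class(rel, labels, ["kidney", "lung"])
```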

For instance, in Fig. 3A, channel 428, associated with the kidney concept in the ResNet trained on real data, is activated by kidney samples from both generated and real data. This is expected for real data, given how the channel was selected, but it is not guaranteed for generated data. Notably, the input pixels activating channel 428 belong to the same anatomical structure, the kidney glomerulus. In other words, channel 428 of the ResNet trained on real data is activated by glomeruli in both real and generated kidney samples.

Crucially, a similar pattern emerges when we examine the concept most activated by kidney samples in the network trained on generated data (Fig. 3B): in both real and generated data, the input pixels activating this channel correspond to the glomeruli of kidney images.

We also used the global approach of CRP to identify which classes most strongly activate each of the selected channels. The upper part of Supplementary Table S5 lists channels from the network trained on real images, and the lower part lists channels from the network trained on generated tiles. The classes activating each channel agree closely between the two datasets, suggesting that the networks behave similarly on tiles from either dataset. This is consistent with the classification performance metrics reported above.

These results also shed light on cases where the network makes mistakes, particularly the confusion between pancreas and lung. Channel 890 in the lower part of Supplementary Table S5 illustrates this: although pancreas is the top class for this channel, lung is a close second, with a normalized relevance of 0.95 or 0.94 depending on the dataset.
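The global view, ranking classes by how strongly they drive a given channel, can be sketched in the same spirit. Here each class's mean relevance for the channel is divided by the largest absolute class score, so the top class receives 1.0 and runners-up receive a fraction of it, as with the normalized relevances quoted above. This normalization, the precomputed relevance matrix, and the toy numbers are assumptions; the paper's exact scheme may differ.

```python
import numpy as np


def class_ranking_for_channel(relevance, labels, class_names, channel):
    """Rank classes by their mean relevance for one channel.

    Scores are divided by the largest absolute class score, so the top class
    gets 1.0; this normalization is an assumption for illustration.
    """
    relevance = np.asarray(relevance, dtype=float)
    labels = np.asarray(labels)
    means = np.array([relevance[labels == c, channel].mean()
                      for c in range(len(class_names))])
    normalized = means / np.abs(means).max()
    order = np.argsort(-normalized)
    return [(class_names[i], float(normalized[i])) for i in order]


# Toy relevances for one channel (column 0) over three classes, two samples each.
rel = np.array([[2.0], [2.0], [1.6], [1.6], [0.2], [0.2]])
labels = np.array([0, 0, 1, 1, 2, 2])
ranking = class_ranking_for_channel(rel, labels, ["pancreas", "lung", "brain"], channel=0)
```

A second-ranked class with a score close to 1.0 flags a channel that does not separate those two classes, which is the pattern that points to a likely source of confusion.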

To gain a better understanding, we selected the most relevant concept for each tissue and visualized the pixels activating it in example real and generated images, using both the network trained on real data (Supplementary Figure S4) and the network trained on generated data (Supplementary Figure S5). For brain, kidney, lung, and, to some extent, uterus, the most important concept appears to remain consistent between the networks trained on real and generated data, but this is not the case for pancreas. In the network trained on generated data, the most important concept for pancreas, while descriptive enough (as indicated by the classifier’s overall good performance), resembles the most important concepts for lung and uterus, which could explain the decrease in performance.

Concept relevance propagation visualization. Visualization of the input pixels that activate the indicated channels. Each panel examines one network, trained on either real or synthetic data, and one concept. Each row shows the relevance of the channel on either the synthetic dataset (gen.) or the real one (real). (A) ResNet trained on the real dataset. The input pixels that activate channel 428 in the last convolutional layer belong to the same tissue class and to consistent morphological elements in both datasets. (B) ResNet trained on the synthetic dataset. Here, too, the tiles that activate the channel belong to the same class in both datasets, and the activating input pixels belong to consistent morphological structures.

The generated data is biologically realistic in the eyes of experts

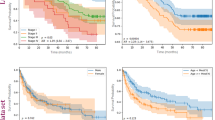

The pathologists’ questionnaire results are summarized in Table 4 and depicted in Fig. 4.

The results of the first task, tissue detection, show that pathologists are highly proficient at identifying the tissue type from three tiles at the provided zoom level. Importantly, there is no significant difference in performance between real and generated images, indicating that the generated data accurately capture the morphology of the original tissues. In discriminating real from generated tiles, the pathologists’ combined accuracy (56%) only slightly exceeds chance level, suggesting that the generated tiles have a biologically realistic appearance. When we examined the distribution of errors on generated tiles (generated tiles mistaken for real; Supplementary Figure S6), brain images had a slightly higher average number of mistakes, suggesting that they appeared more realistic to the pathologists, whereas kidney images had the lowest average number of mistakes, indicating that generated kidney images were easier to detect. Nevertheless, the overall differences across tissues were small and not significant. Notably, the results of the second questionnaire reveal that the pathologists relied primarily on visual attributes, such as chromatic features and blurriness, to decide whether a tile was real or generated, as they did not detect any noticeable biological irregularities in the generated images. Averaged across pathologists, the score given to visual attributes (3.83 ± 0.19) was significantly higher than that given to biological attributes (1.22 ± 0.01; two-tailed paired t-test, p = 0.0075). This finding highlights the potential value of the generated dataset for educational purposes: the generated images are biologically realistic to the point that even expert pathologists can barely distinguish them from real ones, apart from minor differences in visual image quality.
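Whether a pooled accuracy of 56% is distinguishable from chance depends on how many answers were pooled, which a one-sided exact binomial test makes explicit. The sketch below uses SciPy's `binomtest` for that comparison and `ttest_rel` for the paired comparison of attribute scores reported above. The number of pathologists is not given in this section, so all counts and ratings are hypothetical. Pooling answers across raters also treats them as independent, which is only an approximation.

```python
from scipy import stats


def accuracy_vs_chance(n_correct, n_total, chance=0.5):
    """Accuracy and one-sided exact binomial p-value for H1: accuracy > chance."""
    result = stats.binomtest(n_correct, n_total, p=chance, alternative="greater")
    return n_correct / n_total, result.pvalue


def paired_attribute_test(visual, biological):
    """Two-tailed paired t-test on per-pathologist mean ratings."""
    result = stats.ttest_rel(visual, biological)
    return result.statistic, result.pvalue


# Hypothetical totals: the same 56% accuracy from 100 vs. 1000 pooled answers.
acc_small, p_small = accuracy_vs_chance(56, 100)    # not significant (p is about 0.14)
acc_large, p_large = accuracy_vs_chance(560, 1000)  # clearly significant

# Hypothetical per-pathologist mean ratings on the 0-5 scale.
t_stat, p_paired = paired_attribute_test([4.0, 3.5, 4.2, 3.6], [1.2, 1.3, 1.1, 1.25])
```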

Pathologists’ questionnaire results. (A) Confusion matrices of the pathologists’ performance in the tissue detection task of the first questionnaire, using real (top row) and generated (bottom row) images. These matrices correspond to the first two rows of Table 4. (B) Confusion matrices of the pathologists’ performance in discriminating between real and generated images in the first questionnaire. These matrices correspond to the last row of Table 4. (C) Average ± standard error of the mean of the pathologists’ responses to the second questionnaire. The cyan bar shows the average score indicating the extent to which pathologists relied on visual attributes to differentiate real from generated images, while the gray bar indicates the extent to which they relied on biological attributes, as described in Materials and Methods.

Discussion

The field of digital pathology, like many other disciplines, could benefit greatly from the ability to generate realistic synthetic data. Such data could help address privacy concerns and enable AI-assisted diagnosis by providing high-quality data for model training. This is particularly promising in scenarios that require pixel- or tile-level annotations, which are time-consuming and error-prone when produced manually. In this article, we have therefore introduced a comprehensive pipeline for generating and assessing synthetic data in digital pathology. We used this pipeline to generate images with a wide field of view from five distinct tissues using a denoising diffusion model. Data quality was assessed through three distinct approaches: quantitative assessment with well-established metrics, evaluation of practical applicability through deep learning-based tissue classification and explainability, and qualitative evaluation through questionnaires administered to pathologists. Our findings show that these evaluations provide complementary insights into data quality and that relying on any single approach would be insufficient.

Given the current trend towards analyzing digital pathology images at the whole slide level45,68,69, it is desirable to generate synthetic data at that level as well. In our study, we focused on generating tiles with a wide field of view, both to examine the ability of diffusion models to generate images at this resolution and as an early step towards WSI generation. Future research could build on this by generating tiles at multiple fields of view and integrating them into complete whole slide images. Additionally, incorporating tile adjacency into the generative model could enable the generation of neighboring tiles and, ultimately, of WSIs. This has already been demonstrated with GANs70.

We explored the diffusion model’s capability to generate tiles from various tissues, each characterized by distinct morphological complexities. Our findings indicated that, although the overall performance was satisfactory, the model’s effectiveness varied across different tissues. An intriguing observation highlighting the importance of diverse evaluations emerged when examining each tissue individually.

In particular, the class-wise FID scores of brain and pancreas were similar to each other and both substantially higher than that of lung. However, classifier performance was notably lower for pancreas and lung, while brain was classified almost perfectly. This trend was more closely mirrored by the class-wise IL-NIQE results, which indicated lower quality for pancreas, lung, and uterus. Furthermore, the questionnaire showed that pathologists had the most difficulty distinguishing real from generated brain images, implying that the generated brain images are of the highest quality. Conversely, they most easily identified generated kidney images, even though these had relatively good FID and IL-NIQE scores.

Explainability analyses shed further light on these findings. They revealed that the histopathological features most relevant for detecting pancreas differ between the network trained on generated data and the one trained on real data. Interestingly, the features learned from generated data closely resemble the most relevant concepts for lung tissue. It remains to be determined whether these differences stem from limitations of the diffusion model’s classifier-free guidance in distinguishing between lung and pancreas tissues or from inherent complexities of the pancreas tissue itself.

One limitation of diffusion models is their longer inference time: generating a synthetic image takes longer than with alternative generative methods such as GANs. However, our primary focus in this paper is to underscore the importance of a multifaceted evaluation pipeline and the insights it offers. This evaluation framework is applicable to various generative models, not only diffusion models. We opted for diffusion models because of their consistent ability to generate realistic medical and natural images, a critical consideration given the sensitive nature of medical data. Future studies could assess multiple generative models with the proposed evaluation pipeline, enabling a comprehensive comparison of their strengths and weaknesses.
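The source of the longer inference time is visible in the ancestral sampling loop of the DDPM formulation24. A sample is produced by T sequential denoising steps, each requiring a full network evaluation, whereas a GAN generator needs a single forward pass. The sketch below implements that loop with the variance choice sigma_t^2 = beta_t and a hypothetical stand-in denoiser; the linear schedule with T = 1000 follows Ho et al.24, not necessarily the settings used in this study.

```python
import numpy as np


def ddpm_sample(denoiser, shape, betas, seed=0):
    """Ancestral DDPM sampling with sigma_t^2 = beta_t.

    denoiser(x_t, t) must return the predicted noise eps_theta(x_t, t);
    each of the T steps requires one full network evaluation.
    """
    rng = np.random.default_rng(seed)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal(shape)  # x_T ~ N(0, I)
    for t in range(len(betas) - 1, -1, -1):
        eps = denoiser(x, t)
        # Posterior mean: (x_t - beta_t / sqrt(1 - alpha_bar_t) * eps) / sqrt(alpha_t)
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        noise = rng.standard_normal(shape) if t > 0 else 0.0
        x = mean + np.sqrt(betas[t]) * noise
    return x


calls = []


def dummy_denoiser(x_t, t):
    """Hypothetical stand-in for a trained noise-prediction network."""
    calls.append(t)
    return np.zeros_like(x_t)


betas = np.linspace(1e-4, 0.02, 1000)  # linear schedule with T = 1000
sample = ddpm_sample(dummy_denoiser, (64, 64, 3), betas)
```

Faster samplers reduce the number of steps, but the sequential dependency between steps remains.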

Although tissue classification is not among the foremost priorities in digital pathology, we used it to assess the quality of the generated data and their viability as a replacement for real tiles in deep learning-based tasks, and to emphasize the significance of multifaceted evaluation. As anticipated, a model trained solely on generated data did not match the performance of a network trained on real data, yet the outcome remained promising and satisfactory. Generating data to facilitate network training becomes particularly valuable in scenarios that require labor-intensive pixel-level annotations. Recent studies have shown that generative models can produce pairs of tissue images and corresponding cell annotation masks for specific tissues35,71. This lays the foundation for extending our work, for example to the generation of data from various tissues accompanied by cell type annotations under normal and tissue-specific pathological conditions. The classifier-free guidance conditioning employed in the diffusion model, as demonstrated in34, enables the encoding of multiple biological features, paving the way for conditioning on more biologically meaningful factors.
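At each denoising step, classifier-free guidance combines a conditional and an unconditional noise prediction from the same network (Ho and Salimans). The sketch shows that combination; the guidance weight `w`, the `NULL_LABEL` convention for the unconditional pass, and the toy model are hypothetical and not taken from this study. Some implementations write the same rule as `eps_u + s * (eps_c - eps_u)`, which is equivalent with `s = 1 + w`.

```python
import numpy as np

NULL_LABEL = -1  # hypothetical "no condition" token used for the unconditional pass


def cfg_noise(eps_cond, eps_uncond, w):
    """Classifier-free guidance: eps = (1 + w) * eps_cond - w * eps_uncond.

    w = 0 gives the purely conditional prediction; larger w pushes samples
    further towards the conditioning label (e.g. a tissue type).
    """
    return (1.0 + w) * np.asarray(eps_cond) - w * np.asarray(eps_uncond)


def guided_eps(model, x_t, t, label, w):
    """Query a noise-prediction model with and without the label, then combine."""
    eps_c = model(x_t, t, label)
    eps_u = model(x_t, t, NULL_LABEL)
    return cfg_noise(eps_c, eps_u, w)


def toy_model(x_t, t, label):
    """Hypothetical stand-in: predicts 1 when conditioned, 0 otherwise."""
    return np.full_like(x_t, 0.0 if label == NULL_LABEL else 1.0)


eps = guided_eps(toy_model, np.zeros((2, 2)), t=10, label=3, w=2.0)  # every entry is 3.0
```

Conditioning on further biological factors would, in this scheme, only change what the label encodes; the guidance rule itself stays the same.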

Explainable artificial intelligence is a rapidly growing subfield of machine learning72. It emerged in response to the challenge of interpreting predictions made by black-box models, whose underlying mathematical functions are often highly complex and difficult to understand, even for AI experts. Deep neural networks, although highly effective on unstructured data such as images, fall into this category. To leverage the power of deep learning while addressing the need for interpretability, post-hoc explanation methods have been developed. In this study, we demonstrated how an explainability technique can deepen our understanding of data quality and help gauge whether a model trained on generated data learns features comparable to those learned from real data. This is especially significant given a recent study showing that generated histopathological images can provide explanations beyond what is attainable with real data73, and it is particularly important in the medical domain, where interpretable models remain in high demand.

Finally, the pathologists’ relatively low performance in distinguishing between real and generated images reinforced our confidence in the realism of the generated images, supporting their use for educational purposes. The feedback from the follow-up questionnaire was also revealing: when pathologists did identify generated tiles, this was often attributable to visual cues such as blurriness or chromatic characteristics. Given the very high resolution of histopathology images, it was to be expected that generated images would not consistently match this level of resolution. Future studies could address this limitation by integrating super-resolution networks, such as the one proposed in74, to enhance the quality of generated images.

Overall, although synthetic data in digital pathology may not yet be ready to fully replace real images for research purposes, our findings, like those of other studies, highlight their promising potential. Crucially, generated data must be examined from different angles to fully understand their strengths and weaknesses. Our results emphasize the importance of thoroughly evaluating generated datasets with multiple approaches, which should become common practice in the field so that synthetic datasets can be developed and used effectively.

Data availability

All the data used in this paper is publicly accessible from https://gtexportal.org/home/. The source code is publicly available on GitHub at: https://github.com/Mat-Po/diffusion_digital_pathology. ResNet and Diffusion model weights are publicly available on HuggingFace model hub at: https://huggingface.co/pozzi/diffusion_digital_path/tree/main.

References

Baxi, V., Edwards, R., Montalto, M. & Saha, S. Digital pathology and artificial intelligence in translational medicine and clinical practice. Mod. Pathol. 35, 23–32 (2022).

Caputo, A. et al. The slow-paced digital evolution of pathology: lights and shadows from a multifaceted board. Pathologica 115 (3), 127 (2023).

Makhlouf, Y., Salto-Tellez, M., James, J., O’Reilly, P. & Maxwell, P. General roadmap and core steps for the development of AI tools in digital pathology. Diagnostics. 12 (5), 1272 (2022).

Jahn, S. W., Plass, M. & Moinfar, F. Digital pathology: advantages, limitations and emerging perspectives. J. Clin. Med. 9 (11), 3697 (2020).

Bejnordi, B. E. et al. Diagnostic assessment of deep learning algorithms for detection of lymph node metastases in women with breast cancer. JAMA 318, 2199–2210 (2017).

Coudray, N. et al. Classification and mutation prediction from non–small cell lung cancer histopathology images using deep learning. Nat. Med. 24, 1559–1567 (2018).

Shaban, M. et al. Context-aware convolutional neural network for grading of colorectal cancer histology images. IEEE Trans. Med. Imaging. 39, 2395–2405 (2020).

Bychkov, D. et al. Deep learning based tissue analysis predicts outcome in colorectal cancer. Sci. Rep. 8, 3395 (2018).

Graham, S. et al. Hover-Net: simultaneous segmentation and classification of nuclei in multi-tissue histology images. Med. Image. Anal. 58, 101563 (2019).

Xu, K., Jahanifar, M., Graham, S. & Rajpoot, N. Accurate segmentation of nuclear instances using a double-stage neural network, in Medical Imaging 2023: Digital and Computational Pathology, (2023).

Makhlouf, Y. et al. True-T–Improving T-cell response quantification with holistic artificial intelligence based prediction in immunohistochemistry images. Comput. Struct. Biotechnol. J. 23, 174–185 (2024).

Cho, J., Lee, K., Shin, E., Choy, G. & Do, S. How much data is needed to train a medical image deep learning system to achieve necessary high accuracy? arXiv preprint arXiv:1511.06348, (2015).

Price, W. N. & Cohen, I. G. Privacy in the age of medical big data. Nat. Med. 25, 37–43 (2019).

Chen, R. J., Lu, M. Y., Chen, T. Y., Williamson, D. F. K. & Mahmood, F. Synthetic data in machine learning for medicine and healthcare. Nat. Biomedical Eng. 5, 493–497 (2021).

Savage, N. Synthetic data could be better than real data. Nature, (2023).

Goodfellow, I. et al. Generative adversarial networks. Commun. ACM. 63, 139–144 (2020).

Frangi, A. F., Tsaftaris, S. A. & Prince, J. L. Simulation and synthesis in medical imaging. IEEE Trans. Med. Imaging. 37, 673–679 (2018).

Zhou, T., Fu, H., Chen, G., Shen, J. & Shao, L. Hi-net: hybrid-fusion network for multi-modal MR image synthesis. IEEE Trans. Med. Imaging. 39, 2772–2781 (2020).

Tang, Y., Tang, Y., Zhu, Y., Xiao, J. & Summers, R. M. A disentangled generative model for disease decomposition in chest x-rays via normal image synthesis. Med. Image. Anal. 67, 101839 (2021).

Kapil, A. et al. Deep semi supervised generative learning for automated tumor proportion scoring on NSCLC tissue needle biopsies. Sci. Rep. 8, 17343 (2018).

Mahmood, F. et al. Deep adversarial training for multi-organ nuclei segmentation in histopathology images. IEEE Trans. Med. Imaging. 39, 3257–3267 (2019).

Levine, A. B. et al. Synthesis of diagnostic quality cancer pathology images by generative adversarial networks. J. Pathol. 252, 178–188 (2020).

Falahkheirkhah, K. et al. Deepfake histologic images for enhancing digital pathology. Lab. Invest. 103, 100006 (2023).

Ho, J., Jain, A. & Abbeel, P. Denoising diffusion probabilistic models. arXiv preprint arXiv:2006.11239 (2020).

Dhariwal, P. & Nichol, A. Diffusion models beat gans on image synthesis. Adv. Neural. Inf. Process. Syst. 34, 8780–8794 (2021).

Müller-Franzes, G. et al. A multimodal comparison of latent denoising diffusion probabilistic models and generative adversarial networks for medical image synthesis. Sci. Rep. 13, 12098 (2023).

Weng, L. What are diffusion models? lilianweng.github.io, July (2021).

Pinaya, W. H. L. et al. Brain imaging generation with latent diffusion models, in MICCAI Workshop on Deep Generative Models, (2022).

Dorjsembe, Z., Odonchimed, S. & Xiao, F. Three-dimensional medical image synthesis with denoising diffusion probabilistic models, in Medical Imaging with Deep Learning, (2022).

Waibel, D. J. E., Röell, E., Rieck, B., Giryes, R. & Marr, C. A diffusion model predicts 3D shapes from 2D microscopy images. arXiv preprint arXiv:2208.14125 (2022).

Kim, B. & Ye, J. C. Diffusion deformable model for 4D temporal medical image generation, in International Conference on Medical Image Computing and Computer-Assisted Intervention, (2022).

Chambon, P., Bluethgen, C., Langlotz, C. P. & Chaudhari, A. Adapting pretrained vision-language foundational models to medical imaging domains, arXiv preprint arXiv:2210.04133, (2022).

Kazerouni, A. et al. Diffusion models in medical imaging: a comprehensive survey. Med. Image. Anal., p. 102846, (2023).

Moghadam, P. A. et al. A morphology focused diffusion probabilistic model for synthesis of histopathology images, in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, (2023).

Shrivastava, A. & Fletcher, P. T. NASDM: Nuclei-Aware Semantic Histopathology Image Generation Using Diffusion Models, arXiv preprint arXiv:2303.11477, (2023).

Xu, X., Kapse, S., Gupta, R. & Prasanna, P. ViT-DAE: Transformer-driven Diffusion Autoencoder for Histopathology Image Analysis, arXiv preprint arXiv:2304.01053, (2023).

Salimans, T. et al. Improved techniques for training gans. Adv. Neural. Inf. Process. Syst., 29, (2016).

Heusel, M., Ramsauer, H., Unterthiner, T., Nessler, B. & Hochreiter, S. Gans trained by a two time-scale update rule converge to a local nash equilibrium. Adv. Neural. Inf. Process. Syst., 30, (2017).

Kynkäänniemi, T., Karras, T., Laine, S., Lehtinen, J. & Aila, T. Improved precision and recall metric for assessing generative models. Adv. Neural. Inf. Process. Syst., 32, (2019).

Naeem, M. F., Oh, S. J., Uh, Y., Choi, Y. & Yoo, J. Reliable fidelity and diversity metrics for generative models, in International Conference on Machine Learning, (2020).

Zhang, L., Zhang, L. & Bovik, A. C. A feature-enriched completely blind image quality evaluator. IEEE Trans. Image Process. 24 (8), 2579–2591 (2015).

Achtibat, R. et al. From “where” to “what”: towards human-understandable explanations through concept relevance propagation (2022).

Carithers, L. J. et al. A novel approach to high-quality postmortem tissue procurement: the GTEx project. Biopreserv. Biobank. 13, 311–319 (2015).

Carithers, L. J. & Moore, H. M. The genotype-tissue expression (GTEx) project. vol. 13, pp. 307–308 (Mary Ann Liebert, Inc., 2015).

Srinidhi, C. L., Ciga, O. & Martel, A. L. Deep neural network models for computational histopathology: a survey. Med. Image. Anal. 67, 101813 (2021).

Bizzego, A. et al. Evaluating reproducibility of AI algorithms in digital pathology with DAPPER. PLoS Comput. Biol. 15, e1006269 (2019).

Marcolini, A. et al. histolab: a Python library for reproducible digital pathology preprocessing with automated testing. SoftwareX 20, 101237 (2022).

Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 9, 62–66 (1979).

Redies, C., Amirshahi, S. A., Koch, M. & Denzler, J. PHOG-derived aesthetic measures applied to color photographs of artworks, natural scenes and objects. In Computer Vision – ECCV 2012. Workshops and Demonstrations, Florence, Italy, October 7–13, 2012, Proceedings, Part I (2012).

Ronneberger, O., Fischer, P. & Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015, 18th International Conference, Munich, Germany, October 5–9, 2015, Proceedings, Part III (2015).

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J. & Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2016).

Deng, J. et al. ImageNet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition (2009).

Barratt, S. & Sharma, R. A note on the inception score. arXiv preprint arXiv:1801.01973 (2018).

Kynkäänniemi, T., Karras, T., Aittala, M., Aila, T. & Lehtinen, J. The role of ImageNet classes in Fréchet inception distance. arXiv preprint arXiv:2203.06026 (2022).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2016).

LeCun, Y. & Bengio, Y. Convolutional networks for images, speech, and time series. In The Handbook of Brain Theory and Neural Networks 3361 (1995).

Bussola, N., Marcolini, A., Maggio, V., Jurman, G. & Furlanello, C. AI slipping on tiles: Data leakage in digital pathology. In Pattern Recognition. ICPR International Workshops and Challenges, Virtual Event, January 10–15, 2021, Proceedings, Part I (2021).

Madabhushi, A. & Lee, G. Image analysis and machine learning in digital pathology: challenges and opportunities. Med. Image Anal. 33, 170–175 (2016).

Loshchilov, I. & Hutter, F. Decoupled weight decay regularization. arXiv preprint arXiv:1711.05101 (2017).

Loshchilov, I. & Hutter, F. SGDR: Stochastic gradient descent with warm restarts. arXiv preprint arXiv:1608.03983 (2016).

Matthews, B. W. Comparison of the predicted and observed secondary structure of T4 phage lysozyme. Biochimica et Biophysica Acta (BBA) – Protein Structure 405, 442–451 (1975).

Baldi, P., Brunak, S., Chauvin, Y., Andersen, C. A. F. & Nielsen, H. Assessing the accuracy of prediction algorithms for classification: an overview. Bioinformatics 16, 412–424 (2000).

Jurman, G., Riccadonna, S. & Furlanello, C. A comparison of MCC and CEN error measures in multi-class prediction. PLoS One (2012).

Bach, S. et al. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLoS One 10, e0130140 (2015).

Zeiler, M. D. & Fergus, R. Visualizing and understanding convolutional networks. arXiv preprint arXiv:1311.2901 (2013).

Qin, Z., Yu, F., Liu, C. & Chen, X. How convolutional neural networks see the world — a survey of convolutional neural network visualization methods. Math. Found. Comput. 1, 149–180 (2018).

McAlpine, E., Michelow, P., Liebenberg, E. & Celik, T. Is it real or not? Toward artificial intelligence-based realistic synthetic cytology image generation to augment teaching and quality assurance in pathology. J. Am. Soc. Cytopathol. 11, 123–132 (2022).

Guan, Y. et al. Node-aligned graph convolutional network for whole-slide image representation and classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (2022).

Javed, S., Mahmood, A., Qaiser, T., Werghi, N. & Rajpoot, N. Unsupervised mutual transformer learning for multi-gigapixel whole slide image classification. arXiv preprint arXiv:2305.02032 (2023).

Deshpande, S., Minhas, F., Graham, S. & Rajpoot, N. SAFRON: stitching across the frontier network for generating colorectal cancer histology images. Med. Image Anal. 77, 102337 (2022).

Deshpande, S., Minhas, F. & Rajpoot, N. Synthesis of annotated colorectal cancer tissue images from gland layout. arXiv preprint arXiv:2305.05006 (2023).

Samek, W., Montavon, G., Lapuschkin, S., Anders, C. J. & Müller, K. R. Explaining deep neural networks and beyond: A review of methods and applications. Proc. IEEE 109, 247–278 (2021).

Dolezal, J. M. et al. Deep learning generates synthetic cancer histology for explainability and education. NPJ Precis. Oncol. 7, 49 (2023).

Dong, C., Loy, C. C., He, K. & Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 38, 295–307 (2015).

Funding

The study was partially funded under the National Plan for Complementary Investments to the NRRP, project “D34H—Digital Driven Diagnostics, prognostics and therapeutics for sustainable Health care” (project code: PNC0000001), Spoke 2: “Multilayer platform to support the generation of the Patients’ Digital Twin”, CUP: B53C22006170001, funded by the Italian Ministry of University and Research.

Author information

Contributions

The study was conceived by GJ, who, along with SN, supervised the project. GJ secured funding. MP conceived the initial design. MP, SN, MM, LC, and GJ contributed to the final design and proposal of the methodology. ER conducted preprocessing of the slides and generated the questionnaires. MP performed diffusion model training and generated synthetic data, as well as explainability analyses. SN carried out quantitative evaluations and deep learning-based assessments. LC, EM, and ET participated in the quality assessment of the data and answered the questionnaires. The manuscript was written by SN, MP, ER, and GJ, with input from MM, LC, EM, and ET.

Ethics declarations

Declaration of generative AI in scientific writing

During the preparation of this work the authors used ChatGPT3.5 for grammar and spelling checks, as well as rephrasing to enhance readability. After using this tool/service, the authors reviewed and edited the content as needed and take full responsibility for the content of the publication.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Pozzi, M., Noei, S., Robbi, E. et al. Generating and evaluating synthetic data in digital pathology through diffusion models. Sci Rep 14, 28435 (2024). https://doi.org/10.1038/s41598-024-79602-w
