Abstract

Breast cancer is one of the most prevalent cancers in Iran, with increasing incidence and mortality rates. Survival analysis is pivotal in setting appropriate care plans. To the best of our knowledge, this is the first study in Iran to take a multi-method approach, using a Deep Neural Network (DNN) and 11 conventional machine learning (ML) methods to predict the 5 year survival of women with breast cancer. The use of data from two centers, comprising a total of 2644 records, together with external validation, further distinguishes the study. Thirty-four features were selected based on a literature review and the variables common to both datasets. Feature selection was also performed using a p value criterion (< 0.05) and a survey of oncologists. A total of 108 models were trained. In external validation, the DNN model trained on the Shiraz dataset with all features achieved the highest accuracy (85.56%). However, although the DNN showed superior accuracy in external validation, it did not consistently achieve the highest performance across all evaluation metrics. Notably, models trained on the Shiraz dataset outperformed those trained on the Tehran dataset, possibly because the Shiraz dataset had fewer missing values.


Introduction

Breast cancer remains a leading cause of mortality and morbidity in women globally, accounting for approximately 24.5% of cancer diagnoses and 15.5% of cancer-related deaths among women1,2. In 2020, it was the most prevalent and the most lethal cancer among women in many countries3,4,5. Despite a steady rise in incidence, mortality rates have stagnated or declined in recent years, particularly in developed nations, potentially owing to advances in treatment and the widespread adoption of mammography screening programs6,7,8. This combination of increasing incidence with stable or decreasing mortality underscores the need for accurate prognostic models, particularly those capable of predicting 5 year survival.

Although breast cancer incidence is rising in Iran, it is increasing more slowly in underprivileged provinces, plausibly because of limited diagnostic infrastructure. Mortality rates in these regions also currently remain lower. However, recent data suggest that this trend may reverse, foreshadowing a future rise in mortality within these communities9,10. Furthermore, breast cancer in Iran is diagnosed approximately a decade earlier than in many developed nations11. The five-year and ten-year survival rates are estimated at 80% and 69%, respectively12. Regional differences in survival likely stem from disparities in early detection initiatives and in access to adequate healthcare facilities13,14. This complex landscape underscores the need for predictive models tailored to such socio-economic and infrastructural variations.

Accurate 5 year survival prediction remains a critical yet formidable challenge for oncologists15,16,17,18,19. It lies at the heart of personalized medicine, informing treatment decisions such as medication selection and dosage regimens20,21,22. Breast cancer prognosis depends on diverse factors, including patient demographics, tumor characteristics, biomarker profiles, and lifestyle habits7,23,24.

Machine learning (ML) and its subfield deep learning (DL), whose algorithms learn patterns from data in a manner loosely inspired by human reasoning25, have gained substantial traction in oncology, particularly for diagnosis and detection through image processing26,27,28 and for survival prediction29,30,31,32. These technologies offer compelling advantages and may aid healthcare professionals at various treatment stages33,34,35,36. They hold the promise of improving technical parameters (e.g., treatment quality and speed) while generating valuable clinical insights37,38. Accurate survival models can help physicians streamline decision-making and potentially reduce false positives and false negatives. For patients with lower predicted survival, such models could inform the consideration of less invasive treatments with fewer side effects39,40,41. To our knowledge, few studies have addressed both conventional machine learning and deep learning methods for predicting breast cancer survival from non-image data. Building on existing research (Table 1), this study aimed to develop and compare DL and ML models for predicting 5 year breast cancer survival.

Materials and methods

This section describes the characteristics of the datasets used and outlines the step-by-step procedure, from dataset preparation to model development and evaluation.

Data source and dataset characteristics

The dataset in this study comprised the data of 2644 patients from two centers. The Breast Diseases Research Center of Shiraz University of Medical Sciences supplied data on 1465 patients from 2003 to 2013, and the Cancer Research Center of Shahid Beheshti University of Medical Sciences provided data on 1179 patients from 2008 to 2018. The former and the latter datasets contained 151 and 66 variables, respectively.

Data preparation

After identifying the variables common to the two datasets and reviewing the relevant literature, 34 variables were selected, as outlined in Table 2. To complete the patient data, specific values were first gathered from the Health Information Systems at Tajrish Hospital in Tehran and Shahid Motahari Clinic in Shiraz. Second, 643 successful telephone calls were conducted to collect information on patients’ survival status and lifestyle. In parallel, the survival status of patients who did not respond was verified through the Iran Health Insurance System. Patients whose survival status could not be determined through any of these measures were excluded from the study. In total, data from 1875 patients were used: 741 with less than 5 years of survival and 1134 with 5 years of survival or more. Finally, the datasets were normalized and missing values were imputed using a K-Nearest Neighbors (KNN) imputer.
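The normalization and imputation step can be sketched with scikit-learn as below. This is a minimal sketch under stated assumptions: the normalization method and the number of neighbors are not reported, so `MinMaxScaler` and `n_neighbors=5` are assumptions, and `make_preprocessor` is a hypothetical helper name. Scaling before imputation keeps large-valued features from dominating the nan-aware Euclidean distance that `KNNImputer` uses to find neighbors.

```python
import numpy as np
from sklearn.impute import KNNImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler


def make_preprocessor(n_neighbors: int = 5) -> Pipeline:
    """Normalize features to [0, 1], then fill missing values by KNN imputation (assumed setup)."""
    return Pipeline([
        # MinMaxScaler ignores NaNs when fitting and leaves them in place when transforming
        ("scale", MinMaxScaler()),
        # Each NaN becomes the mean of that feature over the nearest rows,
        # found with a nan-aware Euclidean distance
        ("impute", KNNImputer(n_neighbors=n_neighbors)),
    ])


# Toy example: 4 patients, 2 features, one missing value in each feature
X = np.array([[1.0, 20.0], [2.0, np.nan], [3.0, 40.0], [np.nan, 30.0]])
X_clean = make_preprocessor(n_neighbors=2).fit_transform(X)
```

In practice such a pipeline would be fitted on the training split only and then applied to the test split and to the other center's data, so that no information leaks from the evaluation data.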

Overall survival was determined as the time interval between diagnosis and death. If this interval exceeded 5 years, or if the patient was alive and more than 5 years had elapsed since the breast cancer diagnosis, the label was assigned as 1; otherwise, it was labeled as 0.
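The labeling rule can be written as a small function. This is an illustrative sketch: the field names (`diagnosis`, `death`, `last_contact`) are hypothetical, and measuring 5 years as 5 × 365.25 days is an assumption. Following the rule exactly as stated, a patient who is alive but has fewer than 5 years of follow-up also receives label 0.

```python
from datetime import date
from typing import Optional

FIVE_YEARS_IN_DAYS = 5 * 365.25  # assumption: average year length including leap years


def five_year_label(diagnosis: date, death: Optional[date], last_contact: date) -> int:
    """Return 1 for 5 year survival and 0 otherwise, following the rule stated in the text.

    death        -- date of death, or None if the patient is known to be alive (hypothetical field)
    last_contact -- date the patient was last confirmed alive (hypothetical field)
    """
    if death is not None:
        # Deceased: the survival interval runs from diagnosis to death
        return int((death - diagnosis).days > FIVE_YEARS_IN_DAYS)
    # Alive: label 1 only if more than 5 years have elapsed since diagnosis;
    # under the stated rule, shorter follow-up also yields 0
    return int((last_contact - diagnosis).days > FIVE_YEARS_IN_DAYS)


print(five_year_label(date(2005, 3, 1), date(2008, 6, 1), date(2023, 1, 1)))  # died after ~3 years -> 0
```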

Model development

A DNN and 11 conventional machine learning models, namely LR, NB, KNN, DT, RF, Extra Trees, SVM, AdaBoost, GBoost, XGB, and MLP, were used in this study. Each algorithm is briefly described below:

DNN It is a type of artificial neural network with multiple hidden layers between the input and output layers. DNNs are designed to automatically learn and model complex patterns by passing data through layered architectures50.

LR A statistical algorithm commonly applied to binary classification problems. It extends linear regression to model the probability of a dichotomous outcome (e.g., occurrence vs. non-occurrence of an event) by mapping a linear combination of the features to a probability through the logistic (sigmoid) function51.
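In standard notation (the symbols are ours, not the paper's), logistic regression models the probability of 5 year survival for a feature vector \(\mathbf{x}\) as:

```latex
P(y = 1 \mid \mathbf{x}) = \sigma\left(\beta_0 + \boldsymbol{\beta}^{\top}\mathbf{x}\right)
  = \frac{1}{1 + e^{-\left(\beta_0 + \boldsymbol{\beta}^{\top}\mathbf{x}\right)}}
```

Here \(\beta_0\) and \(\boldsymbol{\beta}\) are learned coefficients, and a patient is typically classified as a survivor when this probability exceeds 0.5.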

NB A probabilistic classifier based on Bayes’ theorem, one of the fundamental principles of probability theory and mathematical statistics. The algorithm assumes that the features are conditionally independent of each other given the class52.
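Under this conditional-independence assumption, the Naive Bayes decision rule for a patient with features \(x_1, \dots, x_d\) can be written as follows (standard notation, not taken from the paper):

```latex
\hat{y} = \arg\max_{c \in \{0, 1\}} \; P(y = c) \prod_{j=1}^{d} P(x_j \mid y = c)
```

The product form is exactly where the independence assumption enters: the joint likelihood \(P(x_1, \dots, x_d \mid y = c)\) is replaced by the product of per-feature likelihoods.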

KNN A non-parametric classification algorithm that determines the class of a test sample based on the classes of its k nearest neighbors in the training data. The algorithm computes the distance between the test sample and all training samples to find these neighbors53.
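The neighbor search and majority vote described above can be sketched in plain Python. This is an illustrative from-scratch version using Euclidean distance; the study presumably used scikit-learn’s `KNeighborsClassifier`, and `knn_predict` is a hypothetical name. An odd `k` avoids ties in a binary vote.

```python
import math
from collections import Counter
from typing import Sequence


def knn_predict(train_X: Sequence[Sequence[float]], train_y: Sequence[int],
                x: Sequence[float], k: int = 3) -> int:
    """Predict the class of x by majority vote among its k nearest training samples."""
    # Euclidean distance from the test sample to every training sample
    dists = [(math.dist(row, x), label) for row, label in zip(train_X, train_y)]
    # Keep the k closest training samples
    nearest = sorted(dists, key=lambda d: d[0])[:k]
    # Majority vote among their labels
    return Counter(label for _, label in nearest).most_common(1)[0][0]


train_X = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
train_y = [0, 0, 0, 1, 1, 1]
print(knn_predict(train_X, train_y, [0.5, 0.5], k=3))  # -> 0
```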

DT A predictive model that uses a tree-like structure to make decisions based on sequential tests of the input data. Each internal node represents a decision rule and each branch represents an outcome of that rule; following the branches ultimately leads to a leaf that gives the prediction or classification54.

RF A machine learning algorithm that combines multiple decision trees to improve prediction accuracy and reduce overfitting. Each tree is trained on a random (bootstrap) subset of the data and casts a “vote” for the outcome, and the most common vote across all trees is selected as the final prediction55.

Extra Trees An ensemble learning method for classification and regression tasks that aggregates predictions from multiple decision trees. Compared with random forests, Extra Trees (extremely randomized trees) introduce more randomization into tree construction, notably by choosing split thresholds at random at each node, which helps reduce overfitting and can improve accuracy56.

SVM A supervised machine learning algorithm that finds the hyperplane separating the data classes with the maximum margin. SVM handles complex, non-linear data by using kernel functions that implicitly map the data into a higher-dimensional space, which makes it useful for accurate prediction57.
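For reference, the decision function of a kernel SVM can be written as below, with labels \(y_i \in \{-1, +1\}\). The radial basis function (RBF) kernel is shown only as a common example; the kernel used in the study is not stated in this section.

```latex
f(\mathbf{x}) = \operatorname{sign}\left(\sum_{i=1}^{n} \alpha_i y_i K(\mathbf{x}_i, \mathbf{x}) + b\right),
\qquad
K(\mathbf{x}_i, \mathbf{x}) = \exp\left(-\gamma \lVert \mathbf{x}_i - \mathbf{x} \rVert^2\right)
```

Only the support vectors have \(\alpha_i > 0\), and the kernel evaluates similarities in the higher-dimensional space without computing the mapping explicitly.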

AdaBoost An ensemble machine learning technique that improves the performance of weak classifiers by combining them sequentially into a stronger classifier, giving more weight at each step to the samples misclassified by the previous classifiers58.
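The sequential reweighting in AdaBoost can be summarized for the textbook discrete AdaBoost, with labels \(y_i \in \{-1, +1\}\), weak classifiers \(h_m\), and sample weights \(w_i\) that \(Z_m\) renormalizes to sum to 1 (standard notation, not the study’s):

```latex
\varepsilon_m = \sum_{i=1}^{n} w_i \,\mathbb{1}\left[h_m(\mathbf{x}_i) \neq y_i\right],
\qquad
\alpha_m = \frac{1}{2}\ln\frac{1 - \varepsilon_m}{\varepsilon_m},
\qquad
w_i \leftarrow \frac{w_i \exp\left(-\alpha_m y_i h_m(\mathbf{x}_i)\right)}{Z_m},
\qquad
H(\mathbf{x}) = \operatorname{sign}\left(\sum_{m=1}^{M} \alpha_m h_m(\mathbf{x})\right)
```

Misclassified samples gain weight, so the next weak classifier concentrates on them, and more accurate classifiers (smaller \(\varepsilon_m\)) receive larger votes \(\alpha_m\).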

GBoost A machine learning algorithm that iteratively builds a model by training a sequence of weak learners, typically decision trees, to correct the errors of previous ones59.
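In the general gradient boosting formulation (standard notation, not specific to this study), each new learner \(h_m\) is fitted to the pseudo-residuals \(r_{im}\), the negative gradient of the loss \(L\) at the current model, and is added with a learning rate \(\nu\):

```latex
r_{im} = -\left[\frac{\partial L\left(y_i, F(\mathbf{x}_i)\right)}{\partial F(\mathbf{x}_i)}\right]_{F = F_{m-1}},
\qquad
F_m(\mathbf{x}) = F_{m-1}(\mathbf{x}) + \nu \, h_m(\mathbf{x})
```

For the binary log-loss with \(p_i = 1/(1 + e^{-F_{m-1}(\mathbf{x}_i)})\) and labels \(y_i \in \{0, 1\}\), the pseudo-residual reduces to \(r_{im} = y_i - p_i\).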

XGBoost A highly efficient machine learning algorithm based on gradient boosting principles, known for its accuracy and speed in solving regression and classification problems. XGBoost also integrates several advanced features like regularization, handling missing values, and parallelization, making it suitable for large datasets60.

MLP It is a type of artificial neural network composed of several layers of neurons, each of which processes data through nonlinear activation functions. This architecture enables MLPs to learn complex patterns in the data, making them suitable for classification and regression61.
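The layer-wise computation shared by MLPs and DNNs can be written as follows in standard notation. The activation functions are not specified here, so \(\phi\) stands for any nonlinear activation such as ReLU, and the sigmoid output unit for the binary survival label is an assumption:

```latex
\mathbf{h}^{(0)} = \mathbf{x}, \qquad
\mathbf{h}^{(l)} = \phi\left(\mathbf{W}^{(l)} \mathbf{h}^{(l-1)} + \mathbf{b}^{(l)}\right), \quad l = 1, \dots, L, \qquad
\hat{p} = \sigma\left(\mathbf{w}^{\top} \mathbf{h}^{(L)} + b\right)
```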

The performance of these algorithms was assessed and compared in detail. Models were developed and evaluated in Python within the Jupyter Notebook environment, using the scikit-learn42,45,47, TensorFlow42, and AutoKeras libraries.
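A sketch of how the 11 conventional models might be instantiated with scikit-learn follows. The default hyperparameters are placeholders (the tuned values are in the supplementary information), `GaussianNB` is assumed as the Naive Bayes variant, `build_models` is a hypothetical helper, and XGB is taken from the separate `xgboost` package only when it is installed.

```python
from sklearn.ensemble import (AdaBoostClassifier, ExtraTreesClassifier,
                              GradientBoostingClassifier, RandomForestClassifier)
from sklearn.linear_model import LogisticRegression
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier


def build_models(random_state: int = 0) -> dict:
    """Return untuned instances of the conventional models, keyed by the paper's abbreviations."""
    models = {
        "LR": LogisticRegression(max_iter=1000),
        "NB": GaussianNB(),  # assumed variant for mostly numeric features
        "KNN": KNeighborsClassifier(),
        "DT": DecisionTreeClassifier(random_state=random_state),
        "RF": RandomForestClassifier(random_state=random_state),
        "Extra Trees": ExtraTreesClassifier(random_state=random_state),
        "SVM": SVC(probability=True, random_state=random_state),  # probabilities needed for AUC
        "AdaBoost": AdaBoostClassifier(random_state=random_state),
        "GBoost": GradientBoostingClassifier(random_state=random_state),
        "MLP": MLPClassifier(max_iter=1000, random_state=random_state),
    }
    try:
        from xgboost import XGBClassifier  # optional third-party dependency
        models["XGB"] = XGBClassifier(random_state=random_state)
    except ImportError:
        pass  # XGB is skipped when xgboost is not installed
    return models
```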

Feature selection was conducted using three distinct approaches62,63. First, models were built using all features, which had been chosen based on a review of relevant articles while taking the limitations of the datasets into account. Second, features were selected using a two-tailed p value criterion (< 0.05) in scikit-learn. Third, features were chosen through a survey: a questionnaire was designed and completed by five oncology specialists to identify the features essential for the analysis.
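The p value criterion can be sketched with scikit-learn’s `f_classif`. The statistical test actually used is not reported here, so the ANOVA F-test is an assumption; for two classes it is equivalent to a two-sided pooled-variance t-test (F = t²), which yields two-tailed p values. `select_by_p_value` is a hypothetical helper, and the data below are synthetic.

```python
import numpy as np
from sklearn.feature_selection import f_classif


def select_by_p_value(X: np.ndarray, y: np.ndarray, alpha: float = 0.05) -> np.ndarray:
    """Return the column indices of features whose univariate p value is below alpha."""
    # One-way ANOVA F-test of each feature against the class label;
    # with two classes this matches a two-sided pooled-variance t-test
    _, p_values = f_classif(X, y)
    return np.flatnonzero(p_values < alpha)


rng = np.random.default_rng(0)
y = np.repeat([0, 1], 100)                        # 100 non-survivors, 100 survivors
informative = y + rng.normal(0.0, 0.5, size=200)  # mean shifted by class
noise = rng.normal(0.0, 1.0, size=200)            # unrelated to class
X = np.column_stack([informative, noise])
selected = select_by_p_value(X, y)
```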

As previously stated, the study involved modeling with a deep neural network and 11 conventional machine learning models. To train the conventional machine learning models, each dataset was first divided into a train set and a test set64. The train set was used for model development, hyperparameter tuning, and initial training. It was partitioned into five folds (k = 5), and the modeling process was repeated five times: in each iteration, one fold served as the validation set and the remaining four were used for training, after which the model was validated on the held-out fold. Within each fold, candidate hyperparameter values were evaluated using the Grid Search method, which systematically explores a predefined range of hyperparameter values to identify the combination that maximizes a performance measure such as accuracy. Five accuracy values, one per fold, were thus obtained, and the highest accuracy identified the optimal model within each algorithm. As an example, Fig. 1 shows the training process of the DT algorithm on the Shiraz dataset using k-fold cross-validation, with the accuracy score in each fold.

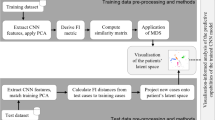
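The k-fold grid search described above can be sketched with scikit-learn. The data are synthetic, and the 80/20 train/test split and the DT grid are assumptions rather than the study’s settings. Note that `GridSearchCV` ranks each hyperparameter combination by its mean accuracy across the five folds and then refits the best combination on the whole train set, which is one common way to implement this procedure.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for one center's preprocessed data (34 features)
X, y = make_classification(n_samples=300, n_features=34, random_state=0)

# Hold out a test set; the 80/20 ratio is an assumption
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

# Hypothetical DT grid: 3 x 3 = 9 candidate combinations
param_grid = {"max_depth": [3, 5, None], "min_samples_leaf": [1, 5, 10]}

# 5-fold CV on the train set only: each candidate is trained on 4 folds
# and scored on the 5th, five times, then ranked by mean accuracy
search = GridSearchCV(DecisionTreeClassifier(random_state=0), param_grid,
                      cv=5, scoring="accuracy")
search.fit(X_train, y_train)

# refit=True (the default) retrains the best candidate on the whole train set
test_accuracy = search.score(X_test, y_test)
print(search.best_params_, round(test_accuracy, 3))
```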

To train the deep neural network, the dataset was likewise divided into a train set and a test set. Neural Architecture Search, a deep learning technique for automatically exploring candidate network architectures, was then applied, and hyperparameters were tuned following this exploration. The AutoKeras library was used to determine the optimal architecture and set the hyperparameters. Figure 2 illustrates the model development process for both the deep neural network and the conventional machine learning techniques. The hyperparameter search spaces and the best parameters of each trained model are provided as supplementary information.
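The idea of searching over network architectures can be illustrated with a lightweight stand-in. This sketch does not reproduce AutoKeras or its search algorithm: it swaps in scikit-learn’s `RandomizedSearchCV` over `MLPClassifier` configurations, and the search space, synthetic data, and 3-fold split are all assumptions chosen for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import RandomizedSearchCV
from sklearn.neural_network import MLPClassifier

# Synthetic stand-in for a preprocessed training set with 34 features
X, y = make_classification(n_samples=200, n_features=34, random_state=0)

# Hypothetical search space: depth and width of the hidden layers plus two training hyperparameters
search_space = {
    "hidden_layer_sizes": [(32,), (64,), (64, 32), (128, 64), (128, 64, 32)],
    "alpha": [1e-4, 1e-3, 1e-2],  # L2 regularization strength
    "learning_rate_init": [1e-3, 1e-2],
}

# Randomly sample 8 candidate networks and score each with 3-fold cross-validation
search = RandomizedSearchCV(
    MLPClassifier(max_iter=300, random_state=0),
    param_distributions=search_space,
    n_iter=8,
    cv=3,
    scoring="accuracy",
    random_state=0,
)
search.fit(X, y)
best_architecture = search.best_params_["hidden_layer_sizes"]
print("best hidden layers:", best_architecture)
```

A dedicated NAS tool explores a much richer space (layer types, normalization, preprocessing blocks) with smarter tuners; random search is used here only because it is simple and self-contained.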

Performance evaluation

At this stage, the trained models were evaluated on the test set to obtain a final estimate of their performance on previously unseen data. The evaluation comprised two distinct approaches, as illustrated in Fig. 1. First, each model was assessed on the held-out test subset of the same dataset used for training; this internal evaluation is referred to as cross-validation throughout this paper. Second, the evaluation was repeated on the dataset from the other center, constituting an external validation. Together, these two approaches characterize the models’ performance on both internal and external data65,66. Hyperparameter tuning was performed exclusively on the training dataset. The following metrics were used to evaluate the models.
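The two evaluation settings can be sketched as follows. The two centers here are synthetic stand-ins (`center_a` and `center_b` are hypothetical names), with a constant shift added to the second to mimic differences between hospitals; Extra Trees is used only as an example model, and the 80/20 split is an assumption.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import ExtraTreesClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic data split into two hypothetical centers; center B is shifted
# by a constant to mimic differences between hospitals
X, y = make_classification(n_samples=600, n_features=34, random_state=1)
X_center_a, y_center_a = X[:300], y[:300]
X_center_b, y_center_b = X[300:] + 0.3, y[300:]

# Internal evaluation: hold out part of center A
X_tr, X_te, y_tr, y_te = train_test_split(
    X_center_a, y_center_a, test_size=0.2, stratify=y_center_a, random_state=0)
model = ExtraTreesClassifier(random_state=0).fit(X_tr, y_tr)
internal_accuracy = accuracy_score(y_te, model.predict(X_te))

# External validation: score the same fitted model on center B, which it never saw
external_accuracy = accuracy_score(y_center_b, model.predict(X_center_b))
print(f"internal={internal_accuracy:.3f} external={external_accuracy:.3f}")
```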

1. Accuracy: the percentage of patients whose survival status is correctly predicted.

   \(\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \times 100\)

   True positives (TP) are patients who are alive and correctly predicted as alive, and true negatives (TN) are patients who have died and are correctly predicted as deceased. False positives (FP) are deceased patients predicted as alive, and false negatives (FN) are living patients predicted as deceased.

2. Specificity: the percentage of deceased patients (label 0) who are correctly predicted as deceased.

   \(\text{Specificity} = \frac{TN}{TN + FP} \times 100\)

3. Sensitivity: the percentage of living patients (label 1) who are correctly predicted as alive.

   \(\text{Sensitivity} = \frac{TP}{TP + FN} \times 100\)

4. Area under the curve (AUC): the area under the receiver operating characteristic (ROC) curve, which indicates how well the model distinguishes between class 0 and class 1 across all classification thresholds.
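The four metrics can be computed from predictions as sketched below in plain Python. `evaluate` is a hypothetical helper. AUC is computed through its rank interpretation, the probability that a randomly chosen survivor (label 1) receives a higher score than a randomly chosen non-survivor (label 0), with ties counted as one half; this should agree with `sklearn.metrics.roc_auc_score`.

```python
from typing import Dict, Sequence


def evaluate(y_true: Sequence[int], y_pred: Sequence[int],
             y_score: Sequence[float]) -> Dict[str, float]:
    """Accuracy, specificity, sensitivity (as percentages) and AUC; label 1 = alive at 5 years."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # alive, predicted alive
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # deceased, predicted deceased
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # deceased, predicted alive
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # alive, predicted deceased

    # AUC via its rank interpretation: share of (survivor, non-survivor) pairs
    # in which the survivor gets the higher score, counting ties as 1/2
    pos = [s for s, t in zip(y_score, y_true) if t == 1]
    neg = [s for s, t in zip(y_score, y_true) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)

    return {
        "accuracy": 100 * (tp + tn) / (tp + tn + fp + fn),
        "specificity": 100 * tn / (tn + fp),
        "sensitivity": 100 * tp / (tp + fn),
        "auc": wins / (len(pos) * len(neg)),
    }


metrics = evaluate([1, 1, 1, 0, 0], [1, 1, 0, 0, 1], [0.9, 0.8, 0.4, 0.3, 0.6])
# accuracy 60.0, specificity 50.0, sensitivity ~66.67, auc ~0.833
```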

Results

In this study, modeling was conducted with conventional machine learning algorithms and a Deep Neural Network using three distinct approaches. In the first approach, modeling used all available features extracted from related articles. In the second and third approaches, modeling was preceded by feature selection based on the p value and on the opinions of five oncology experts, respectively. Because each algorithm was trained with three datasets (Tehran, Shiraz, and a combination of the two) under each of the three approaches, nine models were created for each algorithm. All models except those trained on the combined dataset were evaluated through both cross-validation and external validation. Based on the recorded evaluation metrics, the highest average accuracy in cross-validation was 94.29%, attained by models trained on the Shiraz dataset with features selected by oncologists. The highest average accuracy in external validation was 76.42%, observed for models trained on the Shiraz dataset using all features. The maximum average AUC in cross-validation, 0.983, was associated with models trained on the Shiraz dataset with features selected based on the p value. In external validation, the maximum average AUC was 0.851, achieved by models trained on the Tehran dataset with features selected by oncologists. The following sections present the evaluation results in detail.
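The experimental design (12 algorithms × 3 training datasets × 3 feature sets) can be enumerated as a quick check of the model counts and of which models receive external validation. The labels are descriptive strings chosen for this sketch, and `evaluations` is a hypothetical helper.

```python
from itertools import product
from typing import List

ALGORITHMS = ["DNN", "LR", "NB", "KNN", "DT", "RF", "Extra Trees", "SVM",
              "AdaBoost", "GBoost", "XGB", "MLP"]
TRAINING_SETS = ["Shiraz", "Tehran", "Combined"]
FEATURE_SETS = ["All features", "p value", "Oncologists"]

# One model per (algorithm, training set, feature set) combination
experiments = list(product(ALGORITHMS, TRAINING_SETS, FEATURE_SETS))


def evaluations(training_set: str) -> List[str]:
    """Every model is internally validated; only single-center models also get external validation."""
    other_center = {"Shiraz": "Tehran", "Tehran": "Shiraz"}
    if training_set in other_center:
        return ["cross-validation", f"external validation on {other_center[training_set]}"]
    return ["cross-validation"]  # the combined dataset leaves no unseen center


print(len(experiments))  # -> 108
```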

Evaluation and performance comparison of trained models with all features

According to Table 3, the highest cross-validation accuracy on the test data was 95.43%, achieved by the GBoost and Extra Trees models trained on the Shiraz dataset. The highest accuracy in external validation was 85.56%, achieved by the DNN model, whose architecture is shown in Fig. 3. Among the conventional models, the highest external validation accuracy was 81.69%, obtained with the SVM model. Both of these models were trained on the Shiraz dataset and tested on the Tehran dataset. Figure 4 shows the learning curves of the models that recorded the highest cross-validation or external validation accuracy. The highest AUC values in cross-validation were attained by XGB and Extra Trees (0.994), and in external validation by Extra Trees (0.960). Figure 5 illustrates the ROC curves of the models that recorded the highest AUC in cross-validation and external validation.

Learning curves for the Extra Trees, SVM, GBoost, and DNN models trained on the Shiraz dataset with all features. For Extra Trees, the training score does not improve with more training data, whereas the cross-validation score does. For SVM and GBoost, the training scores decrease while the cross-validation scores increase as more training data are added, so that with the full training set the training and cross-validation scores become closer and more realistic. For the DNN, both the training and cross-validation scores increase with more training data.
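Learning curves like these can be produced with scikit-learn’s `learning_curve`. This sketch uses synthetic data and a GBoost model with an assumed 5-fold split and five training-set sizes; it is not the study’s plotting code.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import learning_curve

# Synthetic stand-in for a center's training data
X, y = make_classification(n_samples=400, n_features=34, random_state=0)

# Train on 20%, 40%, ..., 100% of the training portion of each fold; score with 5-fold CV
train_sizes, train_scores, cv_scores = learning_curve(
    GradientBoostingClassifier(n_estimators=50, random_state=0),
    X, y,
    train_sizes=np.linspace(0.2, 1.0, 5),
    cv=5,
    scoring="accuracy",
)

# Rows are training-set sizes, columns are folds; average over folds
for n, tr, va in zip(train_sizes, train_scores.mean(axis=1), cv_scores.mean(axis=1)):
    print(f"n={int(n):3d}  train={tr:.3f}  cv={va:.3f}")
```

A narrowing gap between the two averaged curves as `n` grows is what the captions above describe as the scores becoming closer and more realistic.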

Evaluation and performance comparison of trained models with selected features based on P value

In this part, feature selection was done based on two-tailed p value (< 0.05) before modeling. The selected features based on Shiraz, Tehran, and combined datasets are shown in Table 4.

According to Table 5, the highest cross-validation accuracy on the test data was 96.26%, achieved by the DT model trained on the Shiraz dataset. The highest external validation accuracy was 82.89%, obtained with the SVM model trained on the Shiraz dataset and tested on the Tehran dataset. Figure 6 shows the learning curves of the models that recorded the highest cross-validation or external validation accuracy. The highest AUC in cross-validation and external validation was obtained by Extra Trees (0.992) and MLP (0.944), respectively. Figure 7 illustrates the ROC curves of the models that recorded the highest AUC in cross-validation and external validation.

Learning curves for the DT and SVM models trained on the Shiraz dataset with features selected based on p values. For DT, both the training and cross-validation scores increase with more training data, despite fluctuations. For SVM, the training score decreases while the cross-validation score increases as more training data are added, and with the full training set the training and cross-validation scores become closer and more realistic.

Evaluation and performance comparison of trained models with features selected by oncologists

In this part, feature selection was carried out before modeling based on a questionnaire completed by five oncology specialists. The questionnaire asked them to score the importance and impact of each feature on the survival of breast cancer patients from 1 to 5 (1: unimportant, 5: very important). Features with an average score of 3 or higher were selected for modeling. Figure 8 illustrates the selected features along with their average scores. The selected features were identical for the Shiraz, Tehran, and combined datasets.
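The averaging-and-threshold rule can be sketched in a few lines. `select_by_survey` is a hypothetical helper, and the ratings below are illustrative values, not the study’s survey data.

```python
from statistics import mean
from typing import Dict, List


def select_by_survey(ratings: Dict[str, List[int]], threshold: float = 3.0) -> List[str]:
    """Keep features whose mean importance score (1 = unimportant, 5 = very important) is >= threshold."""
    return [feature for feature, scores in ratings.items() if mean(scores) >= threshold]


# Hypothetical ratings from five oncologists (illustrative, not the study's data)
ratings = {
    "Tumor size": [5, 5, 4, 5, 4],        # mean 4.6 -> kept
    "Age at diagnosis": [4, 3, 4, 5, 3],  # mean 3.8 -> kept
    "Marital status": [1, 2, 2, 1, 3],    # mean 1.8 -> dropped
}
print(select_by_survey(ratings))  # -> ['Tumor size', 'Age at diagnosis']
```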

According to Table 6, the highest cross-validation accuracy on the test data was 95.85%, achieved by the GBoost model trained on the Shiraz dataset. The highest external validation accuracy was 81.54%, achieved by the DNN model, and the highest among the conventional machine learning models was 77.82%, obtained with the LR model. Both the DNN and LR models were trained on the Shiraz dataset and tested on the Tehran dataset. Figure 9 shows the learning curves of the models that recorded the highest cross-validation or external validation accuracy. The highest AUC values in cross-validation and external validation were attained by RF (0.992) and LR (0.972), respectively. Figure 10 illustrates the ROC curves of the models that recorded the highest AUC in cross-validation and external validation.

Learning curves for the LR, GBoost, and DNN models trained on the Shiraz dataset with features selected by oncologists. For LR and DNN, both the training and cross-validation scores increase with more training data, despite fluctuations. For GBoost, the training score decreases while the cross-validation score increases as more training data are added, and with the full training set the training and cross-validation scores become closer and more realistic.

Discussion

In this study, the use of two datasets reduces the risk of bias associated with single-center studies, an issue often cited in similar research45. Moreover, each algorithm was trained three times on each training dataset: once with all features, once with features selected based on the p value, and once with features selected by oncologists. With 12 algorithms, three feature sets, and three training datasets (Shiraz, Tehran, and combined), this resulted in a total of 108 models. The performance of the conventional machine learning models and the DNN was then compared.

One of the strengths of this study is its comprehensive consideration of variables encompassing tumor characteristics, tumor markers, patient clinical information, patient characteristics, and lifestyle factors. An essential step before modeling is feature selection, which can be accomplished through various methods such as RF, 1NN, KNN, and Cox regression. Beyond these techniques, another approach to feature selection is consulting experts. Study42 emphasizes the importance of specialist input in refining the feature set: its dataset initially contained 113 features, and 89 of them were discarded after expert consultation. As previously mentioned, feature selection in our study was conducted not only based on the p value but also through a survey of oncologists. As a result of this expert input, 24 items were selected from the initial set of 32 features. Across the feature selection methods used in this study, the features that consistently emerged as significant include metastasis, recurrence, age at diagnosis, estrogen and progesterone receptors, tumor size, lymphovascular invasion, and the type of surgery performed. Tumor size, age at diagnosis, hormone receptors, and surgery have also been identified as important characteristics in many other studies, in line with the results of this investigation46,47,49.

The results reveal that the Extra Trees and GBoost models trained on all features achieved the highest cross-validation accuracy (95.43%), while the DNN model achieved the highest external validation accuracy (85.56%). This finding is consistent with study45, in which both cross-validation and external validation were conducted and the deep neural network outperformed the other models on all evaluation metrics. In contrast, among our models trained with all features, the XGB model achieved the highest AUC in cross-validation, whereas study45 found no significant performance difference between XGB and the other models.

For models trained on features selected based on the p value, the DT model achieved the highest cross-validation accuracy (96.26%). This contrasts with study43, which reported negligible performance differences between the DT, SVM, and RF models. In external validation, the SVM model’s accuracy of 82.89% supports the findings of study41, which also found SVM to perform strongly, second only to DNN. Among models trained with features selected based on the p value, the highest AUCs in cross-validation and external validation were achieved by Extra Trees and MLP, respectively, whereas in study40 the AUC of the MLP model did not differ much from that of the other models.

Regarding models trained on features selected by oncologists, the highest cross-validation accuracy was 95.85%, achieved by the GBoost model, and the highest external validation accuracy was 81.59%, achieved by the DNN. This result is consistent with study41, which highlighted the DNN’s superior performance in external validation. However, in study46, where feature selection was conducted with expert assistance, the RF model achieved the highest accuracy. The highest AUCs for models trained with oncologist-selected features were achieved by RF in cross-validation and LR in external validation, in contrast to study42, where XGB recorded the highest AUC in cross-validation.

The findings of the current study are in line with study41, which reported the DNN as the best model. Apart from DNN, other studies36,38,43,45 indicated that models based on neural networks, such as ANN and MLP, performed better. The contradictions with studies36,42,43,45 noted above could be attributed to their use of different datasets and feature selection approaches.

Study limitations and future considerations

This study had several limitations. First, patients’ genetic data were not accessible, which was a significant constraint; combining genetic data with the other available information could improve the performance of the models. Additionally, certain aspects of the patients’ medical history, such as blood pressure, blood sugar levels, and other cancers, were unavailable, which could notably affect the performance and accuracy of both the ML and DL models. Including the drugs used during treatment would also be a valuable addition, bringing the study results closer to real-world outcomes. Future investigations could use medical images in addition to other forms of data and train deep learning models such as CNNs. Moreover, developing and comparing metastasis and recurrence prediction models could provide a broader perspective in this field. Finally, incorporating publicly available datasets for external validation could enhance the generalizability of our findings.

Conclusion

To the best of our knowledge, this study is the first in Iran to introduce a survival prediction model using deep learning. The use of data from two centers, together with external validation, further distinguishes the study. Overall, the DNN model demonstrated the highest prediction accuracy in external validation, possibly because DNNs can capture non-linear relationships and interactions among features better than simpler models. However, the DNN did not consistently outperform the other models on the remaining performance metrics. Among the conventional models, SVM showed the highest prediction accuracy in external validation, possibly because its kernel functions map the data into higher dimensions, allowing it to capture complex relationships between features. Notably, evaluation metrics were generally higher for models trained on the Shiraz dataset. This discrepancy might be attributed to the Shiraz dataset having fewer missing values than the Tehran dataset, even though missing values were imputed using the KNN algorithm. As similar studies with positive outcomes accumulate, there is reason for optimism that deep learning algorithms, by uncovering hidden patterns in data, will help optimize medical decisions and improve disease prognosis.

Data availability

The data that support the findings of this study are not openly available due to the policies and regulations of the data centers that provided them. The raw data can be made available by the corresponding authors upon reasonable request. The Python source code is available at https://doi.org/10.5281/zenodo.12805879.

Abbreviations

- ANN: Artificial neural network
- BC: Breast cancer
- CNN: Convolutional neural network
- DL: Deep learning
- DNN: Deep neural network
- DT: Decision tree
- ER: Estrogen receptor
- GBM: Gradient boosting machine
- GBoost: Gradient boosting
- GRU: Gated recurrent unit
- HER2: Human epidermal growth factor receptor-2
- KNN: K-nearest neighbor
- LDA: Linear discriminant analysis
- LGBM: Light gradient boosting machine
- LR: Logistic regression
- LSTM: Long short-term memory
- LVI: Lymphovascular invasion
- ML: Machine learning
- MLP: Multilayer perceptron
- NB: Naive Bayes
- PNI: Perineural invasion
- PR: Progesterone receptor
- RF: Random forest
- RIPPER: Repeated incremental pruning to produce error reduction
- ROC: Receiver operating characteristic
- SVM: Support vector machine
- XGB: Extreme gradient boosting

References

Fitzmaurice, C. et al. Global, regional, and national cancer incidence, mortality, years of life lost, years lived with disability, and disability-adjusted life-years for 32 cancer groups, 1990 to 2015: A systematic analysis for the global burden of disease study. JAMA Oncol. 3(4), 524–548 (2017).

Łukasiewicz, S. et al. Breast cancer—Epidemiology, risk factors, classification, prognostic markers, and current treatment strategies—An updated review. Cancers 13(17), 4287 (2021).

Lei, S. et al. Global patterns of breast cancer incidence and mortality: A population-based cancer registry data analysis from 2000 to 2020. Cancer Commun. 41(11), 1183–1194 (2021).

Gorgzadeh, A. et al. Investigating the properties and cytotoxicity of cisplatin-loaded nano-polybutylcyanoacrylate on breast cancer cells. Asian Pac. J. Cancer Biol. 8(4), 345–350 (2023).

WHO. Breast cancer. Available from: https://www.who.int/news-room/fact-sheets/detail/breast-cancer (2020).

Ahmad, A. Breast cancer statistics: Recent trends. In Breast Cancer Metastasis and Drug Resistance: Challenges and Progress, 1–7 (2019).

CDC. Breast cancer statistics. Available from: https://www.cdc.gov/cancer/breast/statistics/index.htm (2023).

Taylor, C. et al. Breast cancer mortality in 500 000 women with early invasive breast cancer in England, 1993–2015: Population based observational cohort study. BMJ https://doi.org/10.1136/bmj-2022-074684 (2023).

Aryannejad, A. et al. National and subnational burden of female and male breast cancer and risk factors in Iran from 1990 to 2019: Results from the global burden of disease study 2019. Breast Cancer Res. 25(1), 47 (2023).

Rahimzadeh, S. et al. Geographical and socioeconomic inequalities in female breast cancer incidence and mortality in Iran: A Bayesian spatial analysis of registry data. PLoS ONE 16(3), e0248723 (2021).

Alizadeh, M. et al. Age at diagnosis of breast cancer in Iran: A systematic review and meta-analysis. Iran. J. Public Health 50(8), 1564 (2021).

Akbari, M. E. et al. Ten-year survival of breast cancer in Iran: A national study (retrospective cohort study). Breast Care (Basel) 18(1), 12–21 (2023).

Ginsburg, O. et al. Breast cancer early detection: A phased approach to implementation. Cancer 126(S10), 2379–2393 (2020).

Maajani, K. et al. The global and regional survival rate of women with breast cancer: A systematic review and meta-analysis. Clin. Breast Cancer 19(3), 165–177 (2019).

Denfeld, Q. E., Burger, D. & Lee, C. S. Survival analysis 101: An easy start guide to analysing time-to-event data. Eur. J. Cardiovasc. Nurs. 22(3), 332–337 (2023).

Ghaderzadeh, M. & Aria, M. Management of Covid-19 detection using artificial intelligence in 2020 pandemic. In Proceedings of the 5th International Conference on Medical and Health Informatics. Association for Computing Machinery: Kyoto, Japan, pp. 32–38 (2021).

Aria, M., Ghaderzadeh, M. & Asadi, F. X-ray equipped with artificial intelligence: Changing the COVID-19 diagnostic paradigm during the pandemic. BioMed. Res. Int. 2021, 9942873 (2021).

Rai, S., Mishra, P. & Ghoshal, U. C. Survival analysis: A primer for the clinician scientists. Indian J. Gastroenterol. 40(5), 541–549 (2021).

Indrayan, A. & Tripathi, C. B. Survival analysis: Where, why, what and how?. Indian Pediatr. 59(1), 74–79 (2022).

Wongvibulsin, S., Wu, K. C. & Zeger, S. L. Clinical risk prediction with random forests for survival, longitudinal, and multivariate (RF-SLAM) data analysis. BMC Med. Res. Methodol. 20(1), 1 (2019).

Fraisse, J. et al. Optimal biological dose: A systematic review in cancer phase I clinical trials. BMC Cancer 21, 1–10 (2021).

Lotfnezhad Afshar, H. et al. Prediction of breast cancer survival through knowledge discovery in databases. Glob. J. Health Sci. 7(4), 392–398 (2015).

Akgün, C. et al. Prognostic factors affecting survival in breast cancer patients age 40 or younger. J. Exp. Clin. Med. 39(4), 928–933 (2022).

Escala-Garcia, M. et al. Breast cancer risk factors and their effects on survival: A Mendelian randomisation study. BMC Med. 18(1), 327 (2020).

Arefinia, F. et al. Non-invasive fractional flow reserve estimation using deep learning on intermediate left anterior descending coronary artery lesion angiography images. Sci. Rep. 14(1), 1818 (2024).

Pacal, İ. Deep learning approaches for classification of breast cancer in ultrasound (US) images. J. Inst. Sci. Technol. 12(4), 1917–1927 (2022).

Işık, G. & Paçal, İ. Few-shot classification of ultrasound breast cancer images using meta-learning algorithms. Neural Comput. Appl. 36(20), 12047–12059 (2024).

Coşkun, D. et al. A comparative study of YOLO models and a transformer-based YOLOv5 model for mass detection in mammograms. Turk. J. Electr. Eng. Comput. Sci. 31(7), 1294–1313 (2023).

Shimizu, H. & Nakayama, K. I. Artificial intelligence in oncology. Cancer Sci. 111(5), 1452–1460 (2020).

Zarean Shahraki, S. et al. Time-related survival prediction in molecular subtypes of breast cancer using time-to-event deep-learning-based models. Front. Oncol. 13, 1147604 (2023).

Tomatis, S. et al. Late rectal bleeding after 3D-CRT for prostate cancer: Development of a neural-network-based predictive model. Phys. Med. Biol. 57(5), 1399 (2012).

Tran, K. A. et al. Deep learning in cancer diagnosis, prognosis and treatment selection. Genome Med. 13(1), 152 (2021).

Ghaderzadeh, M. et al. A fast and efficient CNN model for B-ALL diagnosis and its subtypes classification using peripheral blood smear images. Int. J. Intell. Syst. 37(8), 5113–5133 (2022).

Bayani, A. et al. Identifying predictors of varices grading in patients with cirrhosis using ensemble learning. Clin. Chem. Lab. Med. (CCLM) 60(12), 1938–1945 (2022).

Bayani, A. et al. Performance of machine learning techniques on prediction of esophageal varices grades among patients with cirrhosis. Clin. Chem. Lab. Med. (CCLM) 60(12), 1955–1962 (2022).

Ghaderzadeh, M. et al. Deep convolutional neural network-based computer-aided detection system for COVID-19 using multiple lung scans: design and implementation study. J. Med. Internet Res. 23(4), e27468 (2021).

Boldrini, L. et al. Deep learning: a review for the radiation oncologist. Front. Oncol. 9, 977 (2019).

Mihaylov, I., Nisheva, M. & Vassilev, D. Application of machine learning models for survival prognosis in breast cancer studies. Information 10(3), 93 (2019).

Arya, N. & Saha, S. Multi-modal advanced deep learning architectures for breast cancer survival prediction. Knowl.-Based Syst. 221, 106965 (2021).

Montazeri, M. et al. Machine learning models in breast cancer survival prediction. Technol. Health Care 24, 31–42 (2016).

Yang, P.-T. et al. Breast cancer recurrence prediction with ensemble methods and cost-sensitive learning. Open Med. 16(1), 754–768 (2021).

Nguyen, Q. T. N. et al. Machine learning approaches for predicting 5 year breast cancer survival: A multicenter study. Cancer Sci. 114(10), 4063–4072 (2023).

Othman, N. A., Abdel-Fattah, M. A. & Ali, A. T. A hybrid deep learning framework with decision-level fusion for breast cancer survival prediction. Big Data Cognit. Comput. 7(1), 50 (2023).

Lotfnezhad Afshar, H. et al. Prediction of breast cancer survival by machine learning methods: An application of multiple imputation. Iran. J. Public Health 50(3), 598–605 (2021).

Lou, S. J. et al. Breast cancer surgery 10 year survival prediction by machine learning: A large prospective cohort study. Biology (Basel) 11(1), 47 (2021).

Ganggayah, M. D. et al. Predicting factors for survival of breast cancer patients using machine learning techniques. BMC Med. Inform. Decis. Mak. 19(1), 48 (2019).

Kalafi, E. et al. Machine learning and deep learning approaches in breast cancer survival prediction using clinical data. Folia Biol. 65(5/6), 212–220 (2019).

Tapak, L. et al. Prediction of survival and metastasis in breast cancer patients using machine learning classifiers. Clin. Epidemiol. Glob. Health 7(3), 293–299 (2019).

Zhao, M. et al. Machine learning with k-means dimensional reduction for predicting survival outcomes in patients with breast cancer. Cancer Inform. 17, 1176935118810215 (2018).

Shrestha, A. & Mahmood, A. Review of deep learning algorithms and architectures. IEEE Access 7, 53040–53065 (2019).

Domínguez-Rodríguez, S. et al. Machine learning outperformed logistic regression classification even with limit sample size: A model to predict pediatric HIV mortality and clinical progression to AIDS. PLOS ONE 17(10), e0276116 (2022).

Chen, H. et al. Improved naive Bayes classification algorithm for traffic risk management. EURASIP J. Adv. Signal Process. 2021(1), 30 (2021).

Saadatfar, H. et al. A new k-nearest neighbors classifier for big data based on efficient data pruning. Mathematics 8, 286. https://doi.org/10.3390/math8020286 (2020).

Blockeel, H. et al. Decision trees: from efficient prediction to responsible AI. Front. Artif. Intell. 6, 1124553 (2023).

Hu, L. & Li, L. Using tree-based machine learning for health studies: Literature review and case series. Int. J. Environ. Res. Public Health 19(23), 16080. https://doi.org/10.3390/ijerph192316080 (2022).

Akinola, S., Leelakrishna, R. & Varadarajan, V. Enhancing cardiovascular disease prediction: A hybrid machine learning approach integrating oversampling and adaptive boosting techniques. AIMS Med. Sci. 11(2), 58–71 (2024).

Guido, R. et al. An overview on the advancements of support vector machine models in healthcare applications: A review. Information 15(4), 235. https://doi.org/10.3390/info15040235 (2024).

El Hamdaoui, H. et al. Improving heart disease prediction using random forest and adaboost algorithms. iJOE 17(11), 61 (2021).

Wassan, S. et al. Gradient boosting for health IoT federated learning. Sustainability 14, 16842. https://doi.org/10.3390/su142416842 (2022).

Li, W., Peng, Y. & Peng, K. Diabetes prediction model based on GA-XGBoost and stacking ensemble algorithm. PLOS ONE 19(9), e0311222 (2024).

Prasetyo, S. Y. & Izdihar, Z. N. Multi-layer perceptron approach for diabetes risk prediction using BRFSS data. In 2024 IEEE 10th International Conference on Smart Instrumentation, Measurement and Applications (ICSIMA) (2024).

Aria, M. et al. Acute lymphoblastic leukemia (ALL) image dataset. Kaggle (2021).

Aria, M. et al. COVID-19 Lung CT scans: A large dataset of lung CT scans for COVID-19 (SARS-CoV-2) detection. Kaggle. https://www.kaggle.com/mehradaria/covid19-lung-ct-scans (accessed 20 April 2021) (2021).

Aria, M., Hashemzadeh, M. & Farajzadeh, N. QDL-CMFD: A quality-independent and deep learning-based copy-move image forgery detection method. Neurocomputing 511, 213–236 (2022).

Farhad, A. et al. Artificial intelligence in estimating fractional flow reserve: A systematic literature review of techniques. BMC Cardiovasc. Disord. 23(1), 407 (2023).

Aria, M., Nourani, E. & Golzari Oskouei, A. ADA-COVID: Adversarial deep domain adaptation-based diagnosis of COVID-19 from lung CT scans using triplet embeddings. Comput. Intell. Neurosci. 2022(1), 2564022 (2022).

Funding

No funding was received for this study.

Author information

Contributions

S.Z.H., R.R., H.E. and M.A. were responsible for the conceptualization and design of the study. S.Z.H. and M.A. developed and evaluated the ML and DL models. M.K., M.A. and V.Z. prepared the datasets and facilitated data gathering. S.Z.H. gathered data from the EHR and the Iran Health Insurance System, conducted telephone interviews with patients, and performed data cleansing. R.R. and M.A. critically reviewed the initial draft, and all authors read and approved the final version of the manuscript.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Ethical approval

All experimental protocols were approved by the Institutional Review Board of Shahid Beheshti University of Medical Sciences, with the approval code IR.SBMU.RETECH.REC.1401.823, and informed consent was obtained from all subjects and/or their legal guardians. In addition, all methods were performed in accordance with the relevant guidelines and regulations.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Hamedi, S.Z., Emami, H., Khayamzadeh, M. et al. Application of machine learning in breast cancer survival prediction using a multimethod approach. Sci Rep 14, 30147 (2024). https://doi.org/10.1038/s41598-024-81734-y

DOI: https://doi.org/10.1038/s41598-024-81734-y
