Abstract

With rapid technological advancements, videos are captured, stored, and shared in multiple formats, increasing the need for summarization techniques that shorten viewing time. Key Frame Extraction (KFE) algorithms are crucial in video summarization, compression, and offline analysis. This study aims to develop an efficient KFE approach for generic videos. Existing methods include the Adaptive Key Frame Extraction Algorithm, which reduces redundancy while ensuring maximum content coverage; the Optimal Key Frame Extraction Algorithm, which uses a Genetic Algorithm (GA) to select key frames optimally; and the Rapid Key Frame Extraction Algorithm, which employs clustering to identify typical key frames. However, there remains a clear need for a more versatile KFE technique that addresses generic applications rather than specific use cases. Evolutionary algorithms offer a powerful means of achieving optimal KFE. The proposed method leverages an interactive GA with a well-designed Fitness Function and elitism-based survivor selection to enhance performance. The algorithm has been tested on diverse datasets, including VSUMM, SumMe, Mall, user-generated videos, surveillance footage from Amrita Vishwa Vidyapeetham University (Coimbatore, India), and web-sourced videos. The results demonstrate that the proposed KFE approach is consistent with the benchmark data and captures additional significant frames. Compared with Differential Evolution (DE) techniques and Deep Learning (DL) models from the literature, the proposed algorithm achieves superior efficiency, as verified through quantitative and qualitative evaluation metrics. Furthermore, the computational complexity of the GA is compared in detail with that of DE and DL-based approaches, highlighting their distinct efficiencies and performance characteristics.


Introduction

With the explosion of media content in the current information age, videos have become a predominant medium for content sharing. Given the unabated exchange of videos of various sizes on the Internet, reviewing the complete set of videos retrieved for a particular search is an onerous exercise. Viewers would be better served by an option to preview a concise video synopsis than by spending time watching an irrelevant or poorly composed video. Video summarization entails identifying the key frames (KF) of a video that signify its vital visual content. An incomplete, skewed summary that unfairly emphasizes only one aspect of the content results in an inadequate synopsis. An efficient selection of representative clips yields a well-balanced summary of the video.

Computer Vision (CV) aims to mimic the human visual system, enabling thorough analysis of visual content with minimal investment of time and effort. CV is applied in diverse domains, such as medical computer vision, machine vision, surveillance, unmanned vehicles, and missile guidance. Video analytics, the examination of video in real-time or offline mode, has addressed problems such as event detection, pattern recognition, surveillance, crowd management, and public safety. Analytics can be performed on the entire video or only on frames with perceptible changes. When detecting events of interest, restricting analysis to frames with prominent changes, instead of scanning the entire video, saves time and computing resources. The accuracy of such analysis therefore depends on how precisely the prominent KF are detected.

Extensive research has produced a variety of Key Frame Extraction (KFE) approaches, which are broadly shot-based, motion-based, or evolutionary. Shot-based KFE partitions a video into shots and identifies the KF within each shot. Motion-based KFE techniques pinpoint abrupt changes among video frames.

Evolutionary approaches solve problems using techniques inspired by nature. Evolutionary Algorithms (EAs) include evolutionary programming, evolution strategies, Genetic Algorithms (GAs), and Genetic Programming (GP). GAs, inspired by natural genetics and evolution, can search large spaces for optimal combinations of solutions. A GA imitates natural selection: the fittest individuals of one generation are chosen as parents, and their offspring form the next generation.

Motivation of the study

The necessity for efficient and automated video content summarization drives the motivation for developing a GA-based approach for KFE. The KFE plays a crucial role in reducing the volume of data that needs to be processed, stored, and transmitted while preserving vital information. By leveraging the capabilities of GA, this work can optimize the selection of KF through a well-defined Fitness Function (FF) that evaluates the significance of each frame.

Key motivations for this approach include:

- Optimization power: GAs are adept at finding optimal or near-optimal solutions within large search spaces, making them particularly suited to extensive video datasets.
- Adaptability: GAs adapt readily to different types of videos and extraction criteria; the flexibility of the FF allows customization across domains and use cases.
- Reducing redundancy: By selecting a diverse yet representative set of frames, a GA minimizes redundancy in the extracted summary, yielding a more concise and informative representation of the video content.
- Efficiency: GA-based KFE methods often outperform exhaustive search techniques in speed and performance, especially for complex or lengthy video sequences, as substantiated in Sect. 4.5.

This work performs KFE using a GA on unconstrained videos. The design of the fitness function and of the survivor selection scheme is the essence of the proposed work.

Objective of the work

The primary objective of developing a GA-based KFE is to achieve efficient, automated video content summarization by identifying and selecting the most representative frames. This approach aims to optimize the selection process, ensuring that important information is retained while minimizing redundancy and reducing data processing requirements.

Specific objectives include:

- Maximizing relevance: Ensure that the extracted KF represent the video's most informative and relevant moments, capturing the core narrative or action. The results in section "Experimental results" demonstrate, through comparison with ground-truth data, the efficacy of the proposed algorithm in identifying the most relevant information.
- Minimizing redundancy: Select diverse frames to avoid repetitive content, making the summary more concise and informative.
- Enhancing adaptability: Design the extraction process to be flexible across video types and domains, with the GA's FF tailored to different extraction criteria.
- Improving computational efficiency: Leverage the optimization power of GAs to reduce the computational load compared with exhaustive search methods, enabling faster and more scalable video summarization, as substantiated in Sect. 4.5.

The algorithm has been tested on various datasets to demonstrate its efficacy. The rest of this paper is organized as follows: section "Related works" reviews KFE techniques reported in the literature; section "Proposed algorithm for KFE" outlines the proposed framework; and section "Experimental results" presents validation results that attest to the efficacy of the proposed work.

Related works

Many investigators have developed algorithms for KFE; a few significant related works are discussed below. The authors1 proposed a video summarization method using Capsules Net. Capsules Net was trained to extract content and motion features, from which an inter-frame motion curve was generated. A cubic H-Bezier curve was fitted to each frame over a sliding window, and the G1 continuity error was computed to represent the change in regularity between the piecewise curves. Transition Effects Detection (TED), which indicates sudden changes in the video, was used to identify shots. Finally, a self-attention model used the position and content of each shot for KFE.

In2, KFE was performed in a summary space: Lipschitz functions were employed to map the video frames to a higher-dimensional summary space. K-means clustering was applied to the frames in this space, and the generated weight matrix was used to infer the anchor points, i.e., the most representative frame in each cluster. Because these representative frames still exhibited redundancy, the KF were extracted from this set: the Discrete Cosine Transform (DCT) was applied to the representative frames to generate a DCT matrix, from which Hamming distances were derived for KFE.

The authors in3 proposed a non-linear Sparse Dictionary Selection (SDS) method for KFE. The video frames were mapped to a higher-dimensional feature space using the Mercer kernel, converting the non-linear relationships between frames into linear ones. The standard kernel SDS, derived from Simultaneous Orthogonal Matching Pursuit (SOMP), was used to select frames correlated with the residuals. Next, frames orthogonal to the latter were selected to ensure that the current frame was dissimilar to the previously selected frame. A robust kernel SDS was used to identify candidate KF, and the best candidate KF was then selected based on its significance. This process was repeated over several iterations, with the reconstruction coefficient and the energy ratio of the residual updated at each iteration; the KF were obtained when this sequence terminated.

A KFE method for assembly processes was proposed in4. A semantic graph was constructed for each frame, in which objects were represented as 3-D ellipsoids and meaningful semantic segments were formed. The graph's vertices corresponded to segments, and its edges corresponded to the spatial relations between pairs of segments. Differences in eigenvalues and structural changes between the graphs of consecutive frames indicated the presence of KF.

The authors5 put forth KFE for odometry estimation. Drift errors accumulate over time during odometry estimation; these were reduced by identifying KF based on scan similarity at each step. The Euclidean distance was used to evaluate the error between the real and estimated poses. Errors in pose estimation increase as the process continues because of local transformations, and KFE based on scan similarity minimized the accumulated error at each step. Among the candidates exceeding the user-defined similarity threshold, the scan data with the lowest uncertainty were selected as KF.

The authors6 focused on generating annotated sentences for videos. The video was first partitioned into shots at intervals of 25 frames. The frame in each shot with lower visual features than the other frames was selected as a KF. A 215-D visual feature vector, comprising a 45-D color moment feature vector and a 170-D Hierarchical Wavelet Packet (HWVP) descriptor, together with SIFT and Color SIFT features, was used for sentence generation. Each sentence comprised elements such as objects, events, scenes, and adjectives. The Weighted Scoring Algorithm (WSA) was used to find the best frame elements; the relationships among these elements were then analyzed, and a sentence was constructed using the Correlation Graph Algorithm (CGA).

The authors7 performed image segmentation based on differential evolution. A histogram was used to obtain a preliminary threshold, and a Gaussian Mixture Model (GMM) was used to obtain multiple thresholds. The mean square error between the Gaussian function and the histogram was used as the fitness function, which expedites the determination of the best threshold for image segmentation. The authors8 proposed an evolutionary approach to image fusion. Input images were divided into blocks, and the more evident blocks were used to compose the final image; an EA was employed to dynamically find the best block size for this composition. The authors9 reviewed the application of various EAs to combinatorial optimization problems.

In10, KFE was carried out in three phases: extracting features from video frames, segmenting the video into distinct parts, and identifying the KF that best represent the content of each segment. Features such as the Scale-Invariant Feature Transform (SIFT), Global Image Structure Tensor (GIST), HSV histograms, and the Pyramid Histogram of Oriented Gradients (PHOG) were extracted from the video frames, and the K-means clustering algorithm was applied to group the frames into distinct segments based on these features. The KF were subsequently extracted using dynamic programming combined with a 0–1 integer linear programming algorithm, optimizing the selection for efficiency and accuracy.

In11, the authors used spatio-temporal subtitles for KFE. Initially, the mid-frames between the appearance and disappearance of subtitles were selected. An SSPA curve was then generated, and catastrophic points were detected using edge detection methods. The KF were finally extracted based on the steady points derived from these catastrophic points and on the presence of subtitles.

The work in12 focuses on identifying KF for object detection while minimizing False Negatives (FN). FN were determined by combining optical-flow-based motion detection with object detection in each frame, and a pre-trained YOLOv5 was then fine-tuned on the identified FN, yielding better KFE than the standard YOLOv5. In13, KF were identified based on abrupt shot transitions. Abrupt shots were detected by applying the Sobel, Canny, and Roberts edge detectors and extracting block-based local binary pattern features; histogram-based thresholding was then used to extract the abrupt shots. Finally, the Sobel gradient was applied, and frames with higher coefficients were selected as KF based on the Z-score of their magnitudes.

In14, KF were identified using a simple GA. Color-based distance metrics were used to detect frames with significant intensity changes, which were marked as shots; the FF was then applied to each frame within every shot, and over multiple generations, the frames with the highest fitness values were selected as KF. In15, the authors aimed to identify KF in dance videos; the frames were analyzed using directional gradient histograms and optical-flow directional features, with music features also integrated to assist KFE. The authors16 compared KFE using entropy, absolute difference, optical flow, and YOLO; their experimental results showed that YOLO outperformed the other methods.

Table 1 compares the methodologies discussed in the literature. A close examination of the published literature shows that KFE from videos remains an open problem. Accordingly, this work proposes a variant of the GA with an efficient FF and carefully crafted crossover, mutation, and survivor selection mechanisms for unconstrained videos.

The investigation reported in this paper focuses on a GA for KFE. Distinctive features of the proposed algorithm are noted below:

a. The user selects the initial population size.

b. The user can specify the percentage of frames to be extracted as KF from the video under consideration; a reference guideline for choosing this percentage is presented in this work.

c. The FF designed in this work applies to unconstrained videos rather than to a single specific use case.

d. The crossover point and mutation bits are selected automatically, without repetition, and elitism-based survivor selection ensures that the best-fitted chromosome enters the succeeding generation without being lost.

The performance of the proposed work is also compared with existing DE and DL approaches to demonstrate the efficacy of the proposed algorithm.

Proposed algorithm for KFE

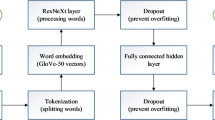

The proposed algorithm identifies the KF of a given video, which can serve diverse applications: the extracted KF can be compiled into a video synopsis or used for offline video analysis. The GA is applied to the input video, and after a series of generations, the frames encoded by the fittest chromosome are taken as the KF of the input video. A model diagram of the GA, with the approaches chosen for KFE, is shown in Fig. 1.

The following subsections elaborate on the adoption of each step of the GA in the proposed work.

Creation of initial population

The user dynamically provides the initial population size. Let n denote the population size; n binary chromosomes are generated, and the length of each chromosome equals the number of frames in the input video. If an input video V has K frames, $V = \{F_1, F_2, \ldots, F_K\}$ (Eq. 1), then each chromosome is represented by K bits.

The user also selects how many frames are extracted as KF. Let r denote the percentage of frames of V to be extracted as KF. For each chromosome, T distinct random positions are generated; the bits at these positions are set to 1 and all other bits to 0. Thus, each chromosome has as many bits as the video has frames, with r% of its bits set to 1 at the randomly generated positions and 0 elsewhere.

Here, T is r% of the number of frames in the input video, $T = \lfloor rK/100 \rfloor$ (Eq. 2). Similarly, n chromosomes are generated for a population of size n. Figure 2 shows chromosomes created for a sample video of 98 frames from the Mall dataset17 with r = 10; each chromosome is therefore 98 bits long, with 10% of its bits set to 1. The generated random numbers determine where the 1's appear in the chromosome, and the frames at the positions of the 1's are considered for further processing. Figure 3 shows the frames selected for a chromosome in Fig. 2.
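The initialization described above can be sketched in Python as follows. This is a minimal illustration, not the authors' C++/OpenCV implementation; the function names are hypothetical, and rounding T down is an assumption chosen because it reproduces T = 23 for the 237-frame example reported in the experiments.

```python
import random


def make_chromosome(num_frames, r_percent, rng):
    """Return a K-bit chromosome with T = floor(r% of K) ones at random positions."""
    t = int(num_frames * r_percent // 100)          # T (Eq. 2), rounded down (assumed)
    ones = set(rng.sample(range(num_frames), t))    # T distinct random frame indices
    return [1 if i in ones else 0 for i in range(num_frames)]


def initial_population(n, num_frames, r_percent, seed=None):
    """Create n binary chromosomes for a video with num_frames frames."""
    rng = random.Random(seed)
    return [make_chromosome(num_frames, r_percent, rng) for _ in range(n)]


def selected_frames(chromosome):
    """Indices of the frames encoded by the 1 bits (the candidate KF)."""
    return [i for i, bit in enumerate(chromosome) if bit == 1]
```

For example, `initial_population(1000, 237, 10)` yields 1000 chromosomes of 237 bits, each with 23 ones.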

Guideline for selecting ‘r’

The value of r is the percentage of frames the user decides to extract as KF from an input video. The guideline for selecting r is based on shots: shot boundaries, where drastic changes in intensity occur, usually correspond to KF. For an input video V with K frames {F1, F2, …, FK}, each of size u × v, the intensity differences between consecutive frames are obtained as in Eq. (3): $D_i(x, y) = |F_{i+1}(x, y) - F_i(x, y)|$, for $i = 1, \ldots, K-1$.

The average value of the difference frames is calculated, and a threshold (H) is obtained based on the averages, as shown in Eqs. (4) to (6).

The reference for dynamically choosing the percentage of KF (r) for a given input video is the number of shots (S) in that video. Shots are identified using the threshold H, as shown in Eq. (7).

A shot boundary differs from the preceding frame by at least H. Once the number of shots S has been identified for the input video, the user may treat S as a guideline for the number of KF to extract. If the chosen value of r, expressed as the corresponding number of KF, is less than or equal to S, prominent KF will be extracted from the video; if it exceeds S, the extraction may yield repeated KF with little difference among them.

Thus,

If r ≤ S: prominent KF are extracted from the given video.

If r > S: the additional KF show no noticeable difference.
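The shot-count guideline can be sketched as follows, assuming each frame is given as a flat list of grayscale intensities and that Eq. (3) uses absolute differences. Because Eqs. (4) to (6) are not reproduced here, the threshold H = mean + k·std of the per-pair average differences is an assumed stand-in (k is a hypothetical parameter), and comparing T = ⌊rK/100⌋ with S is one reading of the r ≤ S rule.

```python
from statistics import mean, pstdev


def mean_abs_diff(frame_a, frame_b):
    """Average of |F_{i+1} - F_i| over the u x v pixels of two equally sized frames."""
    return sum(abs(a - b) for a, b in zip(frame_a, frame_b)) / len(frame_a)


def shot_threshold(diffs, k=1.0):
    """ASSUMED form of Eqs. (4)-(6): H = mean + k * std of the per-pair averages."""
    return mean(diffs) + k * pstdev(diffs)


def count_shots(frames, k=1.0):
    """Number of consecutive-frame transitions whose average difference reaches H."""
    if len(frames) < 2:
        return 0
    diffs = [mean_abs_diff(frames[i], frames[i + 1]) for i in range(len(frames) - 1)]
    h = shot_threshold(diffs, k)
    return sum(1 for d in diffs if d >= h)


def prominent_kf_expected(num_frames, r_percent, shots):
    """Guideline: extraction stays 'prominent' while T = floor(r% of K) <= S."""
    return int(num_frames * r_percent // 100) <= shots
```

With the Mall example (K = 98, S = 23), r = 10 gives T = 9, which stays within the guideline, whereas r = 30 gives T = 29, which exceeds it.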

Figure 4 shows the flow diagram for calculating the threshold (H). Figure 5 shows the flow diagram for identifying the shots (S), which serve as a guideline for selecting the number of KF to extract from a video.

The shots extracted for a 98-frame video from the Mall dataset are shown in Fig. 6; this video contains 23 shots (S = 23). If the number of KF the user decides to extract from this video (set through r%) is less than 23, prominent KF are ensured; otherwise, more near-identical KF may be generated.

Parent selection

The two fittest (Top-2) of the n chromosomes are selected as parents. For a video with K frames, with T being r% of K as in Eq. (2) and each frame of size u × v, the fitness of each chromosome, whose selected frames are (f1, f2, …, fT), is calculated using the algorithm FF (P).
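Parent selection reduces to ranking the population by fitness and taking the first two chromosomes. Since FF (P) is not reproduced in this section, the sketch below uses a hypothetical stand-in fitness (the summed mean absolute difference between consecutively selected frames, with frames as flat intensity lists) purely to make the ranking runnable; any deterministic FF could be passed in its place.

```python
def stand_in_fitness(chromosome, frames):
    """HYPOTHETICAL stand-in for FF(P): rewards diverse selections by summing the
    mean absolute difference between consecutively selected frames."""
    idx = [i for i, bit in enumerate(chromosome) if bit]
    total = 0.0
    for a, b in zip(idx, idx[1:]):
        fa, fb = frames[a], frames[b]
        total += sum(abs(x - y) for x, y in zip(fa, fb)) / len(fa)
    return total


def rank_population(population, frames, fitness=stand_in_fitness):
    """Sort chromosomes by fitness, highest first; the first two are Parent1 and Parent2."""
    return sorted(population, key=lambda c: fitness(c, frames), reverse=True)
```

The ranked list plays the role of a fitness-sorted array: `ranked[0]` and `ranked[1]` are the parents.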

Cross-over and mutation

Cross-over

Cross-over is performed on Parent1 and Parent2, the Top-2 highest fitness-valued chromosomes selected as parents. The cross-over point is selected randomly for each generation without user intervention. Cross-over takes place in two steps:

- Step 1: Each chromosome consists of 0's and 1's, and T (r% of K) is the number of 1's generated in each chromosome during initial population creation. A crossover point Ω is generated randomly, and single-point crossover is applied at Ω. Applying Step 1 to Parent1 and Parent2 yields two chromosomes, SP1 and SP2; an illustration is shown in Fig. 7.
- Step 2: Every chromosome must contain exactly T 1's, because the frames at the positions of the 1's are the ones considered for fitness calculation. After Step 1, however, the number of 1's in SP1 and SP2 may differ from T, because the contents of Parent1 and Parent2 have been swapped. To restore exactly T 1's in SP1 and SP2, the last few bits are adjusted:
  - If the number of 1's is less than T, the last few 0 bits are changed to 1 until the number of 1's reaches T.
  - If the number of 1's is greater than T, the last few 1 bits are changed to 0 until the number of 1's reaches T.

In Fig. 7, Parent1 and Parent2 each have T = 4 1's, but SP1 has only three 1's and SP2 has five, so Step 2 must be applied; Fig. 8 illustrates Step 2. CR1 and CR2, the chromosomes resulting from crossover of Parent1 and Parent2, replace the third and fourth positions of the sorted array SFitz, whose first two positions are already occupied by Parent1 and Parent2. Mutation is subsequently performed on the rest of the population.
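The two crossover steps can be sketched as follows. The helper names are hypothetical, Ω is drawn from 1 to K − 1 so that both parents contribute bits (an assumption), and the repair scans from the end of the chromosome, which is how the "last few bits" rule is read here.

```python
import random


def single_point_crossover(p1, p2, omega):
    """Step 1: exchange the parts of the two parents after crossover point omega."""
    return p1[:omega] + p2[omega:], p2[:omega] + p1[omega:]


def repair(chromosome, t):
    """Step 2: change the last few bits until the chromosome has exactly t ones."""
    c = list(chromosome)
    ones = sum(c)
    i = len(c) - 1
    while ones != t and i >= 0:
        if ones < t and c[i] == 0:      # too few 1's: turn trailing 0's into 1's
            c[i] = 1
            ones += 1
        elif ones > t and c[i] == 1:    # too many 1's: turn trailing 1's into 0's
            c[i] = 0
            ones -= 1
        i -= 1
    return c


def crossover(parent1, parent2, rng=random):
    """Random single-point crossover followed by repair; returns (CR1, CR2)."""
    t = sum(parent1)
    omega = rng.randrange(1, len(parent1))      # chosen without user intervention
    sp1, sp2 = single_point_crossover(parent1, parent2, omega)
    return repair(sp1, t), repair(sp2, t)
```

For instance, crossing `[1,1,0,0,1,0,1,0]` and `[0,0,1,1,0,1,0,1]` at Ω = 3 gives SP1 with five 1's and SP2 with three, mirroring the Fig. 7 situation; the repair then restores four 1's in each.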

Mutation

Bit-flip mutation flips two random bits of a chromosome: one 0 bit is flipped to 1 and one 1 bit is flipped to 0, so the chromosome still contains T 1's after mutation. Bit-flip mutation is applied to the entire population except Parent1, Parent2, CR1, and CR2. Figure 9 illustrates the mutation.
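A sketch of this T-preserving bit-flip mutation follows, assuming chromosomes are Python lists of 0/1 and that the population is already sorted with Parent1, Parent2, CR1, and CR2 in its first four positions, mirroring the SFitz ordering described for crossover. The function names are hypothetical.

```python
import random


def bit_flip_mutation(chromosome, rng=random):
    """Flip one randomly chosen 1 to 0 and one randomly chosen 0 to 1,
    so the number of 1's (T) is unchanged."""
    c = list(chromosome)
    ones = [i for i, b in enumerate(c) if b == 1]
    zeros = [i for i, b in enumerate(c) if b == 0]
    if not ones or not zeros:          # all 0's or all 1's: nothing to swap
        return c
    c[rng.choice(ones)] = 0            # chosen from the original 1 positions
    c[rng.choice(zeros)] = 1           # chosen from the original 0 positions
    return c


def mutate_rest(sorted_population, rng=random):
    """Keep positions 0-3 (Parent1, Parent2, CR1, CR2) and mutate all others."""
    head = sorted_population[:4]
    return head + [bit_flip_mutation(c, rng) for c in sorted_population[4:]]
```

Choosing the 0 position from the original zeros guarantees that exactly two distinct bits change.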

Survivor selection

Survivor selection is based on elitism. The two fittest chromosomes, Parent1 and Parent2, are always retained in the population for the next generation, together with the recombined chromosomes (CR1, CR2) and the remaining mutated chromosomes.
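Elitist survivor selection amounts to assembling the next generation in a fixed order, as in the hypothetical helper below. Because Parent1 is copied unchanged, the best fitness cannot decrease from one generation to the next when the FF is deterministic.

```python
def next_generation(sorted_population, cr1, cr2, mutated_rest):
    """Elitist survivor selection (sketch): the two fittest chromosomes survive
    unchanged, followed by the two children and the mutated remainder."""
    parent1, parent2 = sorted_population[0], sorted_population[1]
    new_population = [parent1, parent2, cr1, cr2] + list(mutated_rest)
    if len(new_population) != len(sorted_population):
        raise ValueError("the next generation must keep the population size n")
    return new_population
```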

Termination condition

The algorithm terminates when the maximum number of generations, MaxGen, is reached. After MaxGen generations, the frames of the fittest chromosome are taken as the KF. The overall procedure of the proposed algorithm is represented as the algorithm Proposed_GA.
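Putting the steps together, the loop below is a compact, self-contained sketch of a GA of this kind that stops after MaxGen generations. It is not the authors' Proposed_GA listing: the function name, the stand-in fitness (used because FF (P) is not reproduced here), and the floor rounding of T are assumptions. It also returns the best fitness of each generation, the kind of curve used to monitor convergence.

```python
import random


def stand_in_fitness(chromosome, frames):
    """HYPOTHETICAL stand-in for FF(P): summed mean absolute difference
    between consecutively selected frames (frames are flat intensity lists)."""
    idx = [i for i, bit in enumerate(chromosome) if bit]
    return sum(
        sum(abs(x - y) for x, y in zip(frames[a], frames[b])) / len(frames[a])
        for a, b in zip(idx, idx[1:])
    )


def proposed_ga_sketch(frames, n, r_percent, max_gen, fitness=stand_in_fitness, seed=None):
    """Return (indices of the selected KF, best fitness per generation)."""
    k = len(frames)
    t = int(k * r_percent // 100)                  # T = floor(r% of K), assumed rounding
    if n < 4 or k < 2 or t < 1:
        raise ValueError("need n >= 4 chromosomes, K >= 2 frames and T >= 1")
    rng = random.Random(seed)

    def random_chromosome():
        ones = set(rng.sample(range(k), t))
        return [1 if i in ones else 0 for i in range(k)]

    def repair(c):                                 # adjust the last few bits to get T ones
        c, ones = list(c), sum(c)
        for i in range(k - 1, -1, -1):
            if ones == t:
                break
            if ones < t and c[i] == 0:
                c[i], ones = 1, ones + 1
            elif ones > t and c[i] == 1:
                c[i], ones = 0, ones - 1
        return c

    def mutate(c):                                 # flip one 1 -> 0 and one 0 -> 1
        c = list(c)
        ones = [i for i, b in enumerate(c) if b]
        zeros = [i for i, b in enumerate(c) if not b]
        if ones and zeros:
            c[rng.choice(ones)] = 0
            c[rng.choice(zeros)] = 1
        return c

    def score(c):
        return fitness(c, frames)

    population = [random_chromosome() for _ in range(n)]
    history = []
    for _ in range(max_gen):                       # terminate after MaxGen generations
        ranked = sorted(population, key=score, reverse=True)
        p1, p2 = ranked[0], ranked[1]              # Top-2 fittest become parents
        history.append(score(p1))
        omega = rng.randrange(1, k)                # random crossover point
        cr1 = repair(p1[:omega] + p2[omega:])
        cr2 = repair(p2[:omega] + p1[omega:])
        # elitism: parents and children first, mutated remainder after
        population = [p1, p2, cr1, cr2] + [mutate(c) for c in ranked[4:]]
    best = max(population, key=score)
    return [i for i, b in enumerate(best) if b], history
```

A call such as `proposed_ga_sketch(frames, n=1000, r_percent=10, max_gen=100)` mirrors the P = 1000, MaxGen = 100 setting used in the experiments, although a pure-Python fitness will be slow on full-resolution frames.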

These resulting KF are converted into a video of shorter length than the input video, on which further analysis can be performed. The video derived from the KF can be referred to as a summarized video.

Experimental results

The proposed KFE algorithm is implemented in C++ using OpenCV. It was assessed on videos from the Mall dataset18,19, videos from surveillance cameras on our campus, user-generated videos, the VSUMM and SumMe datasets, and videos from the Internet. In total, the algorithm was assessed on over 275 videos of varied resolutions and lengths, ranging from 98 to 8044 frames. The initial population size, provided dynamically by the user, was evaluated with more than 1000 chromosomes, so that the fittest chromosome after the generations was chosen from a large pool rather than from only a few. MaxGen, the maximum number of generations, was varied from 10 to 110. Convergence was observed to be better when the algorithm was run for more than 90 generations; hence, the proposed algorithm was run for 100 generations for each selected video.

A video from the campus surveillance camera at Amrita Vishwa Vidyapeetham University, Coimbatore, Tamil Nadu, India, consists of 237 frames, each with a resolution of 960 × 576, captured at 25 frames per second; the parameters selected for its analysis and their values are given in Table 2.

The population size was set to 1000; therefore, 1000 chromosomes of 237 bits each were generated for the video. The value of r was chosen as 10%, so 10% of 237 (i.e., T = 23) random numbers were generated for each chromosome; the corresponding bits were set to 1 and the others to 0. Thus, 1000 chromosomes, each consisting of 237 bits with 23 bits set to 1 and the rest to 0, were generated; one of them is shown in Fig. 10. The frames corresponding to the 1's in the chromosome of Fig. 10 are depicted in Fig. 11.

Frames corresponding to the chromosome in Fig. 10.

The fitness of each chromosome was evaluated using FF (P), and the two fittest chromosomes were selected as parents. Crossover was applied to the fittest chromosomes, and bit-flip mutation was applied to the rest. Survivor selection followed the principle of elitism: the two fittest chromosomes of the current generation, the children recombined from them, and the mutated chromosomes of the current generation formed the population for the next generation. This process was repeated for 100 generations, after which the frames of the fittest chromosome were taken as the KF, using Proposed_GA (P, MaxGen) with P = 1000 and MaxGen = 100. Figure 12 shows the KF generated for the video.

The KF for the video corresponding to Fig. 10.

For a user-generated video of 435 frames, each with a resolution of 640 × 480, captured at 29 frames/s, the selected parameters and their values are given in Table 3. Figure 13 shows the KF generated after 100 generations for the video.

Figure 14 shows the KF generated after 100 generations for the video of Fig. 6, which has 98 frames, each with a resolution of 640 × 480, captured at 10 frames/s. The chosen parameters and their values are given in Table 4.

For an Internet video of a marching band containing 296 frames with a resolution of 960 × 576, captured at 25 frames/s, the selected parameters are presented in Table 5. The KF generated after 100 generations for this video are illustrated in Fig. 15.

An archery video from the Internet has 166 frames, each with a resolution of 320 × 240, captured at 25 frames/s. The selected parameters and their values are given in Table 6. Figure 16 shows the KF generated after 100 generations for the video.

Results and discussion of the proposed algorithm on VSUMM

The proposed GA-based KFE algorithm was evaluated on VSUMM20, and the results were compared with the benchmark. Figure 17 shows the KF extracted by the proposed algorithm for a video from VSUMM, in which each frame has a resolution of 320 × 238 and is captured at 30 frames/s. The parameters and values selected for the proposed algorithm are given in Table 7. Figure 18 shows the benchmark VSUMM summary frames for the video.

For another VSUMM video, in which each frame has a resolution of 352 × 288 and is captured at 25 frames/s, Fig. 19 shows the KF extracted by the proposed algorithm and Fig. 20 shows the benchmark VSUMM summary frames; the parameters and values chosen for the proposed algorithm are given in Table 8.

Comparing Figs. 17–20 shows that the proposed algorithm extracts the KF specified in the VSUMM benchmark summaries. The proposed algorithm also extracted additional significant KF beyond those in the benchmark data.

Results and discussion of the proposed algorithm in comparison with a meta-heuristic approach (MHA)

To study the efficacy of KFE from a given video, the proposed algorithm was compared with an MHA21,22, with DE selected as the model. Experiments with the DE-based approach were conducted on VSUMM and SumMe using classical settings for F (differential weight) and Cr (crossover probability). The initial population of DE was generated in the same way as for the proposed GA, as discussed in section "Creation of initial population". Mutation followed the DE/rand/1 scheme: for each candidate chromosome $X_{i,G}$, a mutated vector $V_{i,G+1}$ was generated as in Eq. (15): $V_{i,G+1} = X_{r_1,G} + F\,(X_{r_2,G} - X_{r_3,G})$

where $r_1$, $r_2$, and $r_3$ were randomly selected from the interval [0, n − 1] and G denotes the generation. Uniform crossover was performed between the mutated vector $V_{i,G+1}$ and the candidate vector $X_{i,G}$, generating the trial vector $U_{i,G+1}$, as shown in Fig. 21.

The differential weight F is a crucial parameter that controls the amplification of the difference between two population vectors during mutation. Its primary role is to balance exploration (searching new areas of the solution space) and exploitation (refining current solutions). Values of F from 0.4 to 0.9 were tested. An F value of 0.8 led to slower convergence than smaller values, but it also promoted diversity within the population, allowing the algorithm to explore a broader range of candidate solutions while balancing exploration and exploitation. Unlike F = 0.9, which resulted in overly large steps, F = 0.8 enabled the algorithm to exploit promising areas of the search space more effectively without destabilizing the search process.

The crossover probability (Cr) was initially tested at 0.1 and gradually increased to 0.9. Since a high Cr typically accelerates convergence, a value of 0.9 was deemed appropriate for extensive exploration while leaving room for fine-tuned exploitation. Fitness was calculated using FF (P), and the fitter of each candidate and its trial vector was passed on to the next generation. Table 9 shows the strategies adopted for implementing DE.
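For reference, one generation of a DE/rand/1 baseline on these chromosomes can be sketched as below. The text does not state how real-valued DE vectors are mapped back to binary chromosomes, so setting the T largest components of the trial vector to 1 is an assumption; drawing r1, r2, r3 distinct from each other and from i, and forcing one component from V (index j_rand), are standard DE conventions rather than details given in the text. F = 0.8 and Cr = 0.9 follow the values discussed above.

```python
import random


def de_rand_1_trial(population, i, f, cr, t, rng):
    """Build one DE/rand/1 trial vector for target i and binarize it (ASSUMED mapping)."""
    n, k = len(population), len(population[0])
    r1, r2, r3 = rng.sample([j for j in range(n) if j != i], 3)    # distinct, != i
    x1, x2, x3 = population[r1], population[r2], population[r3]
    v = [a + f * (b - c) for a, b, c in zip(x1, x2, x3)]             # Eq. (15)
    j_rand = rng.randrange(k)                                        # at least one gene from v
    u = [v[j] if (rng.random() < cr or j == j_rand) else population[i][j] for j in range(k)]
    # ASSUMPTION: keep exactly T ones by setting the T largest components of u to 1
    top = set(sorted(range(k), key=lambda j: u[j], reverse=True)[:t])
    return [1 if j in top else 0 for j in range(k)]


def de_generation(population, fitness, f=0.8, cr=0.9, rng=random):
    """One DE generation with greedy one-to-one selection (trial wins ties)."""
    t = sum(population[0])
    new_population = []
    for i, x in enumerate(population):
        u = de_rand_1_trial(population, i, f, cr, t, rng)
        new_population.append(u if fitness(u) >= fitness(x) else x)
    return new_population
```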

Figure 22 shows the KF extracted by the proposed algorithm for a video from VSUMM, whereas Fig. 23 shows the KF extracted by DE. Each frame has a resolution of 320 × 240, captured at 29 frames/s. Figure 24 shows the benchmark VSUMM summary frames for the video.

For the KFE in Fig. 22, the parameters and corresponding values selected for the proposed algorithm are given in Table 10.

Figure 25 shows the KF extracted by the proposed algorithm for another video from VSUMM, whereas Fig. 26 shows the KF extracted by DE. Each frame has a resolution of 320 × 240, captured at 29 frames/s. Figure 27 shows the benchmark VSUMM summary frames for the video.

For the KFE in Fig. 25, the parameters and corresponding values selected for the proposed algorithm are given in Table 11.

The results show that, even after fine-tuning its parameters, DE could not outperform the proposed GA. This is attributed primarily to the FF and the crossover and mutation operations, which enable the proposed algorithm to avoid becoming trapped in local optima. As a result, the algorithm produced KF closer to the ground truth, along with a few additional meaningful frames.

Results and discussion of the proposed algorithm on SumMe

The proposed GA-based KFE algorithm was also tested on the SumMe dataset23, and the results were compared with the benchmark data. Figure 28 shows the KFE by the proposed algorithm for a culinary video from the SumMe. Each video frame has a resolution of 320 × 240, captured at a rate of 15 frames/s. Figure 29 shows the benchmark summarized KF for the culinary video in the SumMe. For the KFE, the parameters and corresponding values chosen for the proposed algorithm are given in Table 12.

Figure 30 shows the KFE by the proposed algorithm for another culinary video from the SumMe. Each video frame has a resolution of 320 × 240, captured at a rate of 20 frames/s. Figure 31 shows the benchmark summarized frames for this video. For the KFE, the parameters and corresponding values selected for the proposed algorithm are given in Table 13.

Comparing Fig. 28 with Fig. 29 and Fig. 30 with Fig. 31 shows that the proposed algorithm extracts the KF specified in the SumMe benchmark summarization. The proposed algorithm has also extracted more meaningful KF than those specified by the benchmark data.

Evaluation

Figure 32 shows the FF values and the corresponding graph for 100 generations of our campus surveillance video corresponding to Fig. 11. The steady increase in fitness over successive generations provides evidence that FF (P) and the elitism-based survivor selection identify the KF for a given video.

Quantitative evaluation

Precision, Recall, and F1-score were calculated by applying the proposed algorithm to 50 VSUMM and 25 SumMe videos. Table 14 compares DE with the proposed algorithm based on the average Precision, Recall, and F1-score values calculated for VSUMM and SumMe. Table 15 compares the F1-scores of the proposed method with those of DL models on the SumMe dataset. The metrics in Tables 14 and 15 show that the proposed algorithm achieved better Precision, Recall, and F1-scores than DE, and better F1-scores than the DL models.
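The Precision, Recall, and F1-score computations can be sketched as follows. This is a minimal illustration, not the evaluation code behind Tables 14 and 15: the rule for deciding when an extracted frame matches a benchmark frame is not given in this section, so the sketch assumes a hypothetical one-to-one match whenever two frame indices differ by at most `tolerance` frames (benchmark protocols may instead compare frames by visual similarity). The function names and the example frame indices are illustrative.

```python
def match_keyframes(predicted, ground_truth, tolerance=0):
    """Count one-to-one matches between predicted and ground-truth frame indices.

    Assumed criterion: a predicted frame matches the first unused ground-truth
    frame whose index differs by at most `tolerance` frames.
    """
    gt = sorted(ground_truth)
    used = [False] * len(gt)
    matches = 0
    for p in sorted(predicted):
        for j, g in enumerate(gt):
            if not used[j] and abs(p - g) <= tolerance:
                used[j] = True
                matches += 1
                break
    return matches


def precision_recall_f1(predicted, ground_truth, tolerance=0):
    """Precision = matches / |predicted|, Recall = matches / |ground truth|,
    F1 = harmonic mean of Precision and Recall."""
    matches = match_keyframes(predicted, ground_truth, tolerance)
    precision = matches / len(predicted) if predicted else 0.0
    recall = matches / len(ground_truth) if ground_truth else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


# Hypothetical example: four extracted key frames, three benchmark key frames.
p, r, f1 = precision_recall_f1([10, 50, 90, 130], [12, 48, 200], tolerance=5)
print(f"P={p:.3f} R={r:.3f} F1={f1:.3f}")  # P=0.500 R=0.667 F1=0.571
```

Extra extracted frames lower Precision, while missed benchmark frames lower Recall; F1 balances the two.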

The results in Table 14 indicate that, despite extensive fine-tuning of its parameters, DE could not match the performance of the proposed GA. This can be attributed primarily to the FF and the crossover and mutation operations, which enabled the proposed algorithm to avoid entrapment in local optima.

The proposed GA-based method incorporates an efficient fitness function, and its F1-scores exceed those of existing approaches in the literature (Table 15). The proposed approach explores a vast solution space through mechanisms such as mutation and crossover, enabling the algorithm to avoid local optima and discover unconventional solutions that gradient-based methods may miss35. Furthermore, the continuous evolution of the population, coupled with elitism-based survivor selection, ensures that the most promising solutions are retained and refined.

Subjective evaluation

In addition to the quantitative evaluations, the effectiveness of the proposed algorithm was assessed through a subjective evaluation with 200 subjects (faculty members and students of Amrita University). The subjects were shown the videos and the KF extracted by the proposed algorithm36. The evaluation used the absolute category rating scale, ranging from 1 (Bad) to 5 (Excellent), as shown in Table 16. The scores provided by the subjects reflected the relevance of the KF for the videos presented to them.

The Mean Opinion Score (MOS) was calculated using Eq. (16) from the ratings provided by the subjects:

$$\mathrm{MOS} = \frac{1}{N}\sum_{n=1}^{N} R_n \qquad (16)$$

where R_n is the rating given by the n-th of the N subjects. The MOS values obtained from the subjects' responses are summarized in Table 17.
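The MOS in Eq. (16) is an arithmetic mean over the N ratings. The short sketch below computes it and rejects ratings outside the 1 (Bad) to 5 (Excellent) range of Table 16; the function name and the example ratings are hypothetical.

```python
def mean_opinion_score(ratings):
    """MOS = (1/N) * sum(R_n) over N ratings on the 1 (Bad) to 5 (Excellent) scale."""
    if not ratings:
        raise ValueError("at least one rating is required")
    for r in ratings:
        if not 1 <= r <= 5:
            raise ValueError(f"rating {r} is outside the 1-5 scale")
    return sum(ratings) / len(ratings)


# Hypothetical ratings from five subjects for one video's key frames.
print(mean_opinion_score([5, 4, 4, 3, 5]))  # 4.2
```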

The results in Table 17 show that most subjects found the KF relevant and discernible, supporting the suitability of the proposed algorithm37. The strategy used in each step of the proposed work is summarized in Table 18.

A total of 275 videos were tested (Table 19), and efficacy was assessed by plotting fitness against generations and through quantitative and subjective evaluations38. The proposed algorithm was applied to the VSUMM and SumMe datasets, and the match between the resulting KF and the benchmark frames demonstrates the efficacy of the proposed work.

Comparison of computational complexity: a detailed analysis

The computational complexity of the proposed GA is compared with that of other approaches, namely DL and DE.

Computational complexity of GA

The overall computational complexity of a GA is governed by three key factors: the number of generations (g), the population size (n), and the complexity of the FF (f). Hence, the complexity of a GA can generally be represented as O(g · n · f). However, the complexities of crossover, mutation, and survivor selection must also be included.

a. FF complexity: The proposed GA uses an FF to identify the fittest chromosomes, which are selected as parents for the next generation. This ensures that the best solutions are carried forward, promoting better offspring and improving the algorithm's ability to reach an optimal solution39. The FF first calculates the mean and then the variance of each frame, with a complexity of O(n_i) for the i-th frame. For the T frames of a chromosome, this gives O(n_1) + O(n_2) + … + O(n_T) = O(N). The sum of differences between the variances of consecutive frames is then calculated with a complexity of O(L) for L variances. Since L is usually less than N, the complexity up to this point is O(N). Finally, sorting all the calculated values with Quick sort requires O(N log N), which dominates, so the FF complexity is O(N log N).
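To make the cost analysis in item a concrete, the sketch below follows only the steps described there: a mean and a variance per selected frame, then a sum over the differences between consecutive variances. It is an illustrative assumption, not the paper's FF (P), which is defined earlier in the article. Taking absolute differences is also an assumption; without it, the sum would telescope to the last variance minus the first. Frames are assumed to be flattened lists of grayscale intensities, and bit j of a chromosome marks frame j as a key frame.

```python
def frame_variance(pixels):
    """Mean first, then variance: one pass each, O(n_i) for a frame with n_i pixels."""
    mean = sum(pixels) / len(pixels)
    return sum((x - mean) ** 2 for x in pixels) / len(pixels)


def fitness(chromosome, frames):
    """Hypothetical FF sketch: sum of absolute differences between the variances
    of consecutive selected frames (bit j = 1 selects frame j)."""
    variances = [frame_variance(frames[j]) for j, bit in enumerate(chromosome) if bit]
    # L - 1 differences for L selected variances: O(L).
    return sum(abs(b - a) for a, b in zip(variances, variances[1:]))


# Three tiny hypothetical 4-pixel frames with variances 0, 1, and 4.
frames = [[0, 0, 0, 0], [0, 2, 0, 2], [0, 4, 0, 4]]
print(fitness([1, 1, 1], frames))  # 4.0
```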

b. Crossover and mutation complexity: In the proposed work, crossover is executed in two steps: first, a single-point crossover is performed, and then the last few bits are modified to preserve the value of T. The single-point crossover has a complexity of O(K), where K is the length of each chromosome. Modifying the last few bits to preserve T has a complexity of O(h), where h is the number of bits modified. The total complexity is O(K) + O(h); since h is less than K, the overall complexity of crossover in the proposed work is O(K).
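The two-step crossover in item b can be sketched as a single-point crossover followed by a repair that restores exactly T selected frames. The repair rule here (flipping bits while scanning backwards from the last position) is a hypothetical reading of "modifying the last few bits", and the function names and example chromosomes are illustrative. The repair changes only the h bits it flips, although in the worst case its scan visits all K positions.

```python
import random


def single_point_crossover(parent_a, parent_b, rng=random):
    """Swap the tails of two equal-length bit lists after a random cut point: O(K).
    Assumes K >= 2 so that a cut strictly inside the chromosome exists."""
    k = len(parent_a)
    point = rng.randint(1, k - 1)
    return parent_a[:point] + parent_b[point:], parent_b[:point] + parent_a[point:]


def repair_to_t(child, t):
    """Hypothetical repair: walk back from the last bit, flipping bits until
    exactly t bits are 1 (i.e., exactly t key frames are selected)."""
    if not 0 <= t <= len(child):
        raise ValueError("t must lie between 0 and the chromosome length")
    child = list(child)
    ones = sum(child)
    j = len(child) - 1
    while ones != t:
        if ones > t and child[j] == 1:
            child[j], ones = 0, ones - 1
        elif ones < t and child[j] == 0:
            child[j], ones = 1, ones + 1
        j -= 1
    return child


a = [1, 0, 1, 0, 1, 0, 0, 0]  # T = 3 selected frames
b = [0, 0, 0, 1, 1, 1, 0, 0]  # T = 3 selected frames
c1, c2 = single_point_crossover(a, b, random.Random(42))
print(repair_to_t(c1, 3), repair_to_t(c2, 3))
```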

Bit-flip mutation is applied to all chromosomes except the four fittest. This selective approach preserves the best solutions while introducing variability among the remaining chromosomes, fostering a diverse population that can explore new solutions effectively40. For one chromosome, mutating h bits costs O(h); repeating this for the remaining N − 4 chromosomes gives O(h(N − 4)), which is O(N) when h is a small constant.

Since the four fittest chromosomes do not undergo mutation and only the top two chromosomes are subjected to crossover, the overall complexity of the combined crossover and mutation processes is O(K) + O(N) = O(K + N), which reduces to O(N) when K is at most of the order of N. Despite the inclusion of both operations, the dominant factor therefore remains the total number of elements involved, giving an overall complexity of O(N).
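Bit-flip mutation with elitism, as described above, can be sketched as follows. The number of flipped bits h, the use of `random.sample` to pick distinct positions, and the function name are assumptions. Flipping bits can change how many frames are selected; the section does not say how mutation keeps T fixed, so a repair step or a swap-based flip (one 1 and one 0) would be needed if T must be preserved.

```python
import random


def mutate_population(population, fitnesses, h=1, n_elite=4, rng=random):
    """Bit-flip mutation on every chromosome except the n_elite fittest.

    Each non-elite chromosome gets h distinct random bits flipped: O(h) per
    chromosome, O(h * (N - n_elite)) for a population of N chromosomes.
    The elites are ranked here only to keep the sketch self-contained; in a
    full GA loop they are already known from survivor selection.
    """
    ranked = sorted(range(len(population)), key=lambda i: fitnesses[i], reverse=True)
    elite = set(ranked[:n_elite])
    mutated = []
    for i, chromosome in enumerate(population):
        child = list(chromosome)  # copy so the input population is untouched
        if i not in elite:
            for pos in rng.sample(range(len(child)), h):
                child[pos] = 1 - child[pos]
        mutated.append(child)
    return mutated
```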

The computational complexity of crossover and mutation is:

- Step 1. Crossover: O(K)

- Step 2. Mutation: O(N)

- Step 3. Crossover and mutation: O(N)

c. Elitism and survivor selection complexity: The elitism-based survivor selection mechanism, which sorts the individuals and selects the top ones based on their fitness, adds to the complexity of the algorithm. This process ensures that the fittest solutions are preserved in the next generation, enhancing the overall quality of the population41. However, the sorting step it requires contributes computational overhead and therefore affects the overall complexity of the algorithm.

Quick sort orders the chromosomes according to their FF values so that only the fittest individuals are selected for the next generation. Quick sort has an average-case complexity of O(N log N) (its worst case is O(N²)). Combined with elitism, this sorting step preserves the best solutions and promotes a more robust evolutionary process.
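A minimal sketch of elitist survivor selection using Quick sort is shown below. This out-of-place variant (three partitions around a middle pivot) is chosen for readability rather than speed; the function names are hypothetical, and fitness values are assumed to be plain comparable numbers.

```python
def quicksort_desc(items, key):
    """Quick sort in descending order of key(item): average O(N log N), worst O(N^2)."""
    if len(items) <= 1:
        return list(items)
    pivot = key(items[len(items) // 2])
    higher = [x for x in items if key(x) > pivot]
    equal = [x for x in items if key(x) == pivot]
    lower = [x for x in items if key(x) < pivot]
    return quicksort_desc(higher, key) + equal + quicksort_desc(lower, key)


def select_survivors(population, fitnesses, size):
    """Elitist survivor selection: keep the `size` chromosomes with the highest fitness."""
    ranked = quicksort_desc(list(zip(population, fitnesses)), key=lambda pf: pf[1])
    return [chromosome for chromosome, _ in ranked[:size]]


print(select_survivors(["a", "b", "c", "d"], [0.2, 0.9, 0.5, 0.1], 2))  # ['b', 'c']
```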

The computational complexity of survivor selection is:

- Quick sort: O(N log N)

d. Overall complexity of the proposed work:

The overall complexity of the proposed work is represented as O(g · n · f), where:

- g represents the number of generations,

- n denotes the population size, and

- f encompasses the complexities associated with fitness evaluation, crossover, mutation, and survivor selection.

Therefore, O(g · n · f) = O(g · n · (N log N + N + N log N)).

Since N log N + N + N log N = 2N log N + N, and the linear term N is dominated by N log N, this simplifies to O(g · n · N log N), where N is the number of frames in the video. This expression captures the factors that contribute most to the computational demands of the algorithm.

Computational complexity of DL

DL models, particularly those used for video analysis (e.g., Convolutional Neural Networks (CNN) or Recurrent Neural Networks (RNN)), typically have significantly higher computational complexity42.

For instance, training a DL model has a complexity of O(d · p · N), where:

- d is the number of layers in the neural network,

- p is the number of parameters in the model (which can be in the millions for complex networks), and

- N is the number of video frames.

For large-scale models, this complexity can far exceed that of the proposed GA, which matters most when computational resources are limited. Furthermore, the inference cost of DL models is considerable, making them less suited to real-time or resource-constrained applications.

Computational complexity of DE

The overall computational complexity of DE is likewise governed by three key factors: the number of generations (g), the population size (p), and the complexity of the FF (f). Hence, the complexity of DE can generally be represented as O(g · p · f). However, the complexities of crossover, mutation, and survivor selection must also be included.

FF complexity: Since the FF described in item a of section "Computational complexity of GA" is also used in DE for testing, the overall computational complexity of the FF is O(N log N).

Crossover and mutation complexity: The crossover used in DE is uniform (binomial). It generates a trial vector by mixing the components of a mutant (donor) vector and a target (candidate) vector, so its computational complexity depends on the number of components, D, in each vector. For each of the D components, the algorithm performs a simple comparison against the crossover probability to decide whether to take the value from the target vector or the donor vector43. Each comparison takes constant time, O(1), so the uniform crossover costs O(D) per vector, which is linear in the vector dimension. For a population of size N, the total complexity of applying uniform crossover to the entire population is O(ND).
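The uniform (binomial) crossover described above can be sketched as follows, using the CP value of 0.9 reported for the DE experiments as the default. The forced index `j_rand`, which guarantees that at least one component comes from the mutant, is the standard choice in classic DE/bin but is an assumption here, because the text does not say whether it was used. The function name is illustrative.

```python
import random


def binomial_crossover(target, mutant, cr=0.9, rng=random):
    """Uniform (binomial) DE crossover: component j comes from the mutant if
    rand < cr or j == j_rand, otherwise from the target. O(D) for D components."""
    if len(target) != len(mutant):
        raise ValueError("target and mutant must have the same length")
    j_rand = rng.randrange(len(target))  # guarantees at least one mutant component
    return [m if (rng.random() < cr or j == j_rand) else t
            for j, (t, m) in enumerate(zip(target, mutant))]
```

Each component costs one random draw and one comparison, which is where the O(D) per-vector bound comes from.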

Mutation follows the DE/rand/1 scheme, which generates a mutant vector by adding a scaled difference of two randomly chosen population vectors to a third vector. Randomly selecting the three vectors takes O(1). Computing the difference between two vectors takes O(D), where D is the number of components in each vector44; multiplying the difference by the scaling factor F takes O(D); and adding the scaled difference to the base vector also takes O(D). A single mutant vector therefore costs O(D). Since mutation is performed for each individual in a population of size N, the overall complexity of DE/rand/1 mutation is O(ND), which is linear in both the population size N and the problem dimensionality D.
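A sketch of DE/rand/1 mutation with F = 0.8, the value selected in the parameter study, is given below. Rejection sampling draws the three distinct indices in expected constant time, matching the O(1) selection cost stated above. The sketch operates on real-valued vectors; how DE vectors are decoded into key-frame selections for KFE is not described in this section, and the function name is illustrative.

```python
import random


def de_rand_1(population, i, f=0.8, rng=random):
    """DE/rand/1 mutant for individual i: v = x_r1 + F * (x_r2 - x_r3),
    with r1, r2, r3 distinct and different from i. O(D) per mutant."""
    n = len(population)
    if n < 4:
        raise ValueError("DE/rand/1 needs at least four individuals")
    chosen = []
    while len(chosen) < 3:  # rejection sampling: expected O(1) draws
        r = rng.randrange(n)
        if r != i and r not in chosen:
            chosen.append(r)
    x1, x2, x3 = (population[r] for r in chosen)
    return [a + f * (b - c) for a, b, c in zip(x1, x2, x3)]
```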

The computational complexity of crossover and mutation is:

- Step 1. Crossover: O(ND)

- Step 2. Mutation: O(ND)

- Step 3. Crossover and mutation: O(ND)

Elitism and survivor selection complexity: Since the same elitism-based survivor selection described in item c of section "Computational complexity of GA" is also used in DE for testing, the overall computational complexity of survivor selection is O(N log N).

Overall complexity of DE:

The overall complexity of DE is represented as O(g · p · f), where:

- g represents the number of generations,

- p denotes the population size, and

- f encompasses the complexities associated with fitness evaluation, crossover, mutation, and survivor selection.

Therefore, O(g · p · f) = O(g · p · (N log N + ND + N log N)).

This simplifies to O(g · p · (N log N + ND)), where N is the number of frames in the video and D is the dimensionality of the problem, that is, the number of components (or variables) in each solution vector45.

The computational complexity of DE is similar to that of GA: fitness evaluation dominates the complexity, and DE's mutation and recombination add a small overhead46.

Comparison of computational complexities

Table 20 compares the computational complexity of the proposed work with those of DE and DL47.

The complexity analysis shows that DL has a higher computational complexity than GA. DE incurs a slightly higher overhead than GA due to its recombination and mutation processes, but in practical large-scale problems where fitness evaluations are costly, the difference in overall complexity between GA and DE is generally insignificant48. Nevertheless, in the experiments reported here, the GA outperformed DE.

Conclusion and future work

This investigation focused on the applicability of interactive Genetic Algorithms (GA) to Key Frame Extraction (KFE) from input videos. The study's initial results were confirmed by verification against standard benchmark video datasets. The fitness function, crossover, mutation, and elitism-based survivor selection proved instrumental in extracting distinct KF from any given video. The proposed algorithm was evaluated on a diverse set of test videos: surveillance camera footage from Amrita Vishwa Vidyapeetham University (Coimbatore, Tamil Nadu, India), user-generated videos, the VSUMM and SumMe datasets, and videos from the Internet. The curve of fitness versus generations increased for all the videos of assorted sizes, formats, and types, supporting the validity of the algorithm. To evaluate its efficacy, the proposed algorithm was compared with Differential Evolution (DE) and with Deep Learning (DL) models, and it outperformed both on the reported evaluation metrics. The resultant KF can be combined into a new video for post-analysis and summarization.

The algorithm may be further improved by exploring different crossover and mutation operators, with the aim of achieving faster convergence.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author upon reasonable request.

References

Huang, C. & Wang, H. Novel key-frames selection framework for comprehensive video summarization. IEEE Trans. Circuits Syst. Video Technol. (2019).

Li, X., Zhao, B. & Lu, X. Key frame extraction in the summary space. IEEE Trans. Cybern. 48(6), 1923–1934 (2017).

Ma, M., Mei, S., Wan, S., Wang, Z. & Feng, D. Video summarization via nonlinear sparse dictionary selection. IEEE Access 7, 11763–11774 (2019).

Piperagkas, G. S., Mariolis, I., Ioannidis, D. & Tzovaras, D. Key frame extraction with semantic graphs in assembly processes. IEEE Robot. Autom. Lett. 2(3), 1264–1271 (2017).

Yoo, W. S. & Lee, B. H. Dynamic key-frame selection technique for enhanced odometry estimation based on laser scan similarity comparison. Electron. Lett. 53(13), 852–854 (2017).

Qian, X., Liu, X., Ma, X., Lu, D. & Xu, C. What is happening in the video? Annotate video by sentence. IEEE Trans. Circuits Syst. Video Technol. 26(9), 1746–1757 (2016).

SandhyaSree, V. & Thangavelu, S. Performance analysis of differential evolution algorithm variants in solving image segmentation. In International Conference on Computational Vision and Bio Inspired Computing, pp. 329–337 (Springer, 2019).

Haritha, K. C. & Thangavelu, S. Multi focus region-based image fusion using differential evolution algorithm variants. In Computational Vision and Bio Inspired Computing, pp. 579–592 (Springer, Cham, 2018).

Radhakrishnan, A. & Jeyakumar, G. Evolutionary algorithm for solving combinatorial optimization review. Innov. Comput. Sci. Eng., pp. 539–545 (2021).

Ma, L., Wang, W., Yaozong Zhang, Yu., Shi, Z. H. & Hong, H. Multi-features combinatorial optimization for keyframe extraction. Electron. Res. Arch. 31(10), 5976–5995 (2023).

Zhang, Y. et al. Key frame extraction method for lecture videos based on spatiotemporal subtitles. Multimedia Tools Appl. 83(2), 5437–5450 (2024).

Sinulingga, H. R. & Kong, S. G. Key-frame extraction for reducing human effort in object detection training for video surveillance. Electronics 12(13), 2956 (2023).

Nandini, H. M., Chethan, H. K. & Rashmi, B. S. Shot-based keyframe extraction using edge-LBP approach. J. King Saud Univ.-Comput. Inf. Sci. 34(7), 4537–4545 (2022).

Liu, L., Wang, X. & Zhou, S. Keyframes extraction algorithm based on GA. In 2011 Fourth International Joint Conference on Computational Sciences and Optimization, pp. 808–811 (IEEE, 2011).

Yao, P. Key frame extraction method of music and dance video based on multicore learning feature fusion. Sci. Program. 1, 9735392 (2022).

Bharathi, S., Senthilarasi, M. & Hari, K. Key frame extraction based on real-time person availability using YOLO. J. Wirel. Mobile Netw. Ubiquit. Comput. Depend. Appl. 14(2), 31–40 (2023).

Loy, C. C., Gong, S. & Xiang, T. From semi-supervised to transfer counting of crowds. In Proceedings of the IEEE International Conference on Computer Vision, pp. 2256–2263 (2013).

Loy, C. C., Chen, K., Gong, S. & Xiang, T. Crowd counting and profiling: methodology and evaluation. In Modeling, Simulation and Visual Analysis of Crowds, pp. 347–382 (Springer, 2013).

Loy, C. C., Chen, K., Gong, S. & Xiang, T. Feature mining for localized crowd counting. In British Machine Vision Conference (BMVC), 1(2) (2012).

De Avila, S. E., Lopes, A. P., da Luz Jr., A. & de Albuquerque Araújo, A. VSUMM: a mechanism designed to produce static video summaries and a novel evaluation method. Pattern Recognit. Lett. 32(1), 56–68 (2011).

Storn, R. & Price, K. Differential evolution: a simple and efficient heuristic for global optimization over continuous spaces. J. Global Optim. 11, 341–359 (1997).

Abraham, K. T., Ashwin, M., Sundar, D., Ashoor, T. & Jeyakumar, G. An evolutionary computing approach for solving key frame extraction problem in video analytics. In 2017 International Conference on Communication and Signal Processing (ICCSP), pp. 1615–1619 (IEEE, 2017).

Gygli, M., Grabner, H., Riemenschneider, H. & Van Gool, L. Creating summaries from user videos. In European Conference on Computer Vision, pp. 505–520 (2014).

Zhang, K., Chao, W. L., Sha, F. & Grauman, K. Video summarization with long short-term memory. In European Conference on Computer Vision, pp. 766–782 (2016).

Mahasseni, B., Lam, M. & Todorovic, S. Unsupervised video summarization with adversarial LSTM networks. In IEEE Conference on Computer Vision and Pattern Recognition, pp. 2982–2991 (2017).

Zhou, K., Qiao, Y. & Xiang, T. Deep reinforcement learning for unsupervised video summarization with diversity representativeness reward. In AAAI Conference on Artificial Intelligence, pp. 7582–7589 (2018).

Rochan, M., Ye, L. & Wang, Y. Video summarization using fully convolutional sequence networks. In European Conference on Computer Vision, pp. 358–374 (2018).

Ji, Z., Xiong, K., Pang, Y. & Li, X. Video summarization with attention-based encoder-decoder networks. IEEE Trans. Circuits Syst. Video Technol., p. 11 (2019).

Elfeki, M. & Borji, A. Video summarization via actionness ranking. In IEEE Winter Conference on Applications of Computer Vision, pp. 754–763 (2019).

Yuan, L., Tay, F. E., Li, P., Zhou, L. & Feng, J. CycleSum: cycle-consistent adversarial LSTM networks for unsupervised video summarization. In AAAI Conference on Artificial Intelligence, vol. 33, pp. 9143–9150 (2019).

Jung, Y., Cho, D., Kim, D., Woo, S. & Kweon, I. S. Discriminative feature learning for unsupervised video summarization. In AAAI Conference on Artificial Intelligence, vol. 33, pp. 8537–8544 (2019).

Jappie, Z., Torpey, D. & Celik, T. SummaryNet: a multi-stage deep learning model for automatic video summarization. arXiv preprint arXiv:2002.09424 (2020).

Zhu, W., Lu, J., Li, J. & Zhou, J. Dsnet: A flexible detect-to-summarize network for video summarization. IEEE Trans. Image Process. 30, 948–962 (2020).

Shi, Y., Xi, J., Hu, D., Cai, Z. & Xu, K. RayMVSNet++: Learning ray-based 1D implicit fields for accurate multi-view stereo. IEEE Trans. Pattern Anal. Mach. Intell. 45(11), 13666–13682. https://doi.org/10.1109/TPAMI.2023.3296163 (2023).

Song, W. et al. TalkingStyle: Personalized speech-driven 3D facial animation with style preservation. IEEE Trans. Vis. Comput. Graphics https://doi.org/10.1109/TVCG.2024.3409568 (2024).

Li, J., Zhang, C., Liu, Z., Hong, R. & Hu, H. Optimal Volumetric video streaming with hybrid saliency-based tiling. IEEE Trans. Multimedia 25, 2939–2953. https://doi.org/10.1109/TMM.2022.3153208 (2023).

Li, J., Han, L., Zhang, C., Li, Q. & Liu, Z. Spherical convolution empowered viewport prediction in 360 video multicast with limited FoV feedback. ACM Trans. Multimedia Comput. Commun. Appl. 19, 15. https://doi.org/10.1145/3511603 (2023).

Liu, Z., Jiang, G., Jia, W., Wang, T. & Wu, Y. Critical Density for K-coverage under border effects in camera sensor networks with irregular obstacles existence. IEEE Internet Things J. 11(4), 6426–6437. https://doi.org/10.1109/JIOT.2023.3311466 (2024).

He, S., Luo, H., Jiang, W., Jiang, X. & Ding, H. VGSG: Vision-guided semantic-group network for text-based person search. IEEE Trans. Image Process. 33, 163–176. https://doi.org/10.1109/TIP.2023.3337653 (2024).

Hu, C., Zhao, C., Shao, H., Deng, J. & Wang, Y. TMFF: Trustworthy multi-focus fusion framework for multi-label sewer defect classification in sewer inspection videos. IEEE Trans. Circuits Syst. Video Technol. https://doi.org/10.1109/TCSVT.2024.3433415 (2024).

Liu, C., Xie, K., Wu, T., Ma, C. & Ma, T. Distributed neural tensor completion for network monitoring data recovery. Inf. Sci. 662, 120259. https://doi.org/10.1016/j.ins.2024.120259 (2024).

Guo, T., Yuan, H., Hamzaoui, R., Wang, X. & Wang, L. Dependence-based coarse-to-fine approach for reducing distortion accumulation in G-PCC attribute compression. IEEE Trans. Ind. Inform. https://doi.org/10.1109/TII.2024.3403262 (2024).

He, S. et al. Region generation and assessment network for occluded person re-identification. IEEE Trans. Inf. Forensics Secur. 19, 120–132. https://doi.org/10.1109/TIFS.2023.3318956 (2024).

Yu, S. et al. Radar target complex high-resolution range profile modulation by external time coding metasurface. IEEE Trans. Microw. Theory Tech. 5, 55. https://doi.org/10.1109/TMTT.2024.3385421 (2024).

Cheng, D., Chen, L., Lv, C., Guo, L. & Kou, Q. Light-Guided and cross-fusion U-net for anti-illumination image super-resolution. IEEE Trans. Circuits Syst. Video Technol. 32(12), 8436–8449. https://doi.org/10.1109/TCSVT.2022.3194169 (2022).

Li, T., Braud, T., Li, Y. & Hui, P. Lifecycle-aware online video caching. IEEE Trans. Mobile Comput. 20(8), 2624–2636. https://doi.org/10.1109/TMC.2020.2984364 (2021).

Xing, J., Yuan, H., Hamzaoui, R., Liu, H. & Hou, J. GQE-Net: A graph-based quality enhancement network for point cloud color attribute. IEEE Trans. Image Process. 32, 6303–6317. https://doi.org/10.1109/TIP.2023.3330086 (2023).

Author information


Contributions

Manjusha Rajan and Latha Parameswaran: Conceptualization, Methodology, Software, Validation, Formal analysis, Investigation, Resources, Data Curation, Writing (Original Draft), Writing (Review & Editing), and Visualization.


Ethics declarations

Competing interests

The authors declare no competing interests.

Consent to participate/publish

Informed consent was obtained from all participants in the university surveillance videos (Figs. 11 and 12) and from the author herself in Fig. 13. All other figures in the article correspond to the Mall, SumMe, and VSUMM datasets and to videos from the Internet, which are publicly available.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Rajan, M., Parameswaran, L. Key frame extraction algorithm for surveillance videos using an evolutionary approach. Sci Rep 15, 536 (2025). https://doi.org/10.1038/s41598-024-84324-0


DOI: https://doi.org/10.1038/s41598-024-84324-0