Abstract

Artificial neural networks (ANNs) are applied in numerous fields, including image and speech recognition, natural language processing, and autonomous vehicles. Intrusion detection, the subject of this paper, also relies heavily on them, and many intrusion detection models have been constructed using ANNs. Although ANNs are a relatively mature basis for such models, some challenges remain. The most notorious are bloated models caused by the large number of parameters and the lack of interpretability. This paper presents an intrusion detection model based on Convolutional Kolmogorov-Arnold Networks (CKANs), which is designed to overcome these difficulties and to be both interpretable and accurate. Kolmogorov-Arnold Networks (KANs) are derived from the Kolmogorov-Arnold representation theorem, and CKANs add a convolutional computation mechanism on top of KANs. The proposed model incorporates attention mechanisms into the computational logic of the CKAN. The CICIoT2023 and CICIoMT2024 datasets were used for model training and validation. The experimental results indicate that the CKAN-based intrusion detection model has attractive application prospects: compared with other methods, it achieves higher accuracy with significantly fewer parameters. However, it is not superior in terms of memory usage, execution speed, or energy consumption.


Introduction

In modern society, internet technology has become an integral part of almost every aspect of daily life. With access to the Internet, people can video chat with loved ones and friends thousands of miles away, shop online, and find information at their fingertips. One high-profile application area is the Internet of Things (IoT), where almost all electronic devices can be integrated through information technology [1]. IoT applications range from home and industrial automation [2,3] to smart cities [4] and connected cars [5]. IoT devices can be used to collect data, monitor and control physical assets, and make decisions [6]. IoT has the potential to revolutionize many industries and have a significant impact on the global economy [7]. However, while these technologies bring convenience and better experiences, they also bring corresponding pitfalls. The sheer number of people using these technologies has led some unscrupulous individuals to pursue profit in defiance of legal and ethical constraints. Recently, Internet security incidents have become more frequent [8], and the trend is expected to continue. Consequently, individuals, corporations, and even nations will face unpredictable challenges, and it is extremely important to build an effective protection system.

The current mainstream approach to building protection mechanisms is to design intrusion detection systems (IDSs) around deep learning algorithms. One study proposes an intrusion detection system for IoT networks based on convolutional neural networks (CNNs) [9]; compared with other models, it exhibits good performance. Attention mechanisms are a popular means of model optimization. By adding attention mechanisms on top of a CNN, the authors of [10] optimized their model to process detection samples faster without sacrificing accuracy. Other authors have combined the properties of spiking neural networks and CNNs for intrusion detection [11]. Their experimental results show that the model uses far fewer computational resources and much less energy than the other models while maintaining the same level of detection accuracy. Recurrent neural networks have also been used to develop detection models [12], with experimental results that are better than those of existing methods. Many similar intrusion detection models have been built on ANNs [13,14,15]. Despite the popularity of ANNs for building intrusion detection models, they undeniably suffer from deficiencies in interpretability, and they usually need a large number of processing layers to achieve sufficient accuracy.

KANs were proposed with the Kolmogorov-Arnold representation theorem as their inspiration [16]. For fitting data and solving partial differential equations (PDEs), a much smaller KAN can provide similar or better accuracy than a much larger multi-layer perceptron (MLP). KANs were designed with interpretability in mind: they can be visualized intuitively and are easy for humans to interact with [16]. These qualities motivated the choice of KANs for this paper. Convolutional computation is also a much-needed capability for detection models because of its powerful feature processing. Together, these advantages motivate the CKAN-based intrusion detection model proposed in this paper. The main contributions of the framework designed in this paper are as follows:

- A novel data preprocessing procedure is proposed, in which sample balancing and data normalization are performed more rationally. A method for organizing features is proposed that augments significant features at the data level.

- An intrusion detection model is developed on the basis of the Kolmogorov-Arnold representation theorem for the first time, which enhances both interpretability and accuracy. The model is designed and implemented with CKANs, and attention mechanisms are employed to enhance its performance.

- The models are evaluated on the CICIoT2023 and CICIoMT2024 datasets using various accuracy metrics. The experimental results show that the proposed model performs better than the other models. The computational resource requirements and energy consumption of the model are also evaluated, and the causes of the observed results are analyzed.

The rest of this paper is organized as follows. Sect. 2 describes related work. Sect. 3 describes the proposed solution. Sect. 4 discusses the results of the adopted models. Sect. 5 summarizes the experimental results and suggests directions for future research.

Related work

Research and development of learning models based on the KAN framework are in full swing. Researchers expect this new learning framework to replace classical MLPs and improve performance, and a number of studies have demonstrated that it is feasible and advanced.

Several studies have optimized the KAN framework by combining it with other techniques. BSRBF-KAN is a KAN that combines B-splines and radial basis functions (RBFs) to fit input vectors during training [17]. The authors found that BSRBF-KAN was stable across 5 training sessions, with competitive average accuracy. Deep operator KAN (DeepOKAN) is a new variant of neural operators that uses KAN architectures instead of CNNs [18]. Compared with MLP-based deep operator networks (DeepONets), DeepOKANs achieve comparable accuracy with fewer parameters. The fractional KAN (fKAN) is a novel architecture that combines the distinctive features of KANs with a trainable adaptation of a fractional-orthogonal Jacobi function [19]. Rational functions have also been investigated as a new basis function: the authors of [20] proposed two approaches, based on Padé approximation and on rational Jacobi functions as trainable basis functions, establishing the rational KAN (rKAN). According to [21], smooth, structurally informed KANs can reach equivalence to MLPs in specific classes of functions by incorporating smoothness. The wavelet Kolmogorov-Arnold network (Wav-KAN) is a novel neural network architecture that brings wavelets into the KAN framework to improve performance and interpretability [22]. In addition to enhancing accuracy, the authors report that Wav-KAN trains faster and is more robust because it can adapt to the structure of the data.

Several studies have made improvements based on graph computing. The Fourier KAN graph collaborative filtering (Fourier KAN-GCF) model is a simple and efficient graph-based recommendation model [23]. It is based on a novel Fourier KAN that replaces the MLP in graph convolution networks (GCNs) during feature transformation. With a Fourier KAN as part of feature transformation during message passing, graph collaborative filtering (GCF) yields better representations and is more straightforward to train. Graph Kolmogorov-Arnold Networks (GKANs) are a novel neural network architecture that extends the theory of the recently proposed KAN to graph-structured data [24]. As opposed to classic GCNs, which are based on static convolutional architectures, GKANs employ learnable spline-based functions between layers, transforming the way data is processed across graph layers. GKAN was evaluated experimentally on a semi-supervised graph learning problem using a real-world dataset (Cora), and the architecture generally provided better performance. KANs and MLPs have also been compared on graph learning tasks [25]. The authors conducted comprehensive experiments on node labeling, graph analysis, and graph regression. Based on the experimental results, KANs are comparable to MLPs in classification tasks, but they are clearly superior in graph regression tasks.

Another research topic is time-series data analysis. KANs, inspired by the Kolmogorov-Arnold representation theorem, use spline-parametrized univariate functions instead of linear weights, which enables them to learn activation patterns dynamically. One study demonstrates that KANs provide more accurate results with fewer learnable parameters than conventional MLPs in satellite traffic forecasting [26], and it also examines the impact of KAN-specific parameters on performance. In an exploration of KANs for time series prediction, two methods were proposed: temporal KAN (T-KAN) and multivariate temporal KAN (MT-KAN) [27]. Through symbolic regression, T-KAN can explain the nonlinear relationship between predictions and previous time steps, and its ability to detect concept drift within time series makes it highly interpretable in dynamically changing environments. MT-KAN, on the other hand, improves prediction performance by discovering and exploiting complex relationships among the variables of multivariate time series. Experiments validate both approaches, which significantly outperform traditional methods in time series forecasting tasks. Temporal KANs (TKANs) are a neural network architecture inspired by KANs and long short-term memory (LSTM) [28]. In TKANs, recurring KAN layers (RKANs) are embedded in memory management, combining the strengths of both networks. This design makes it possible to forecast multiple time series with improved accuracy and efficiency.

In addition to these applications, several other directions are being investigated. KAN was integrated with several pre-trained CNN schemes for remote sensing (RS) image classification for the first time, achieving a high level of accuracy on the EuroSAT dataset [29]. KAN has also been used to predict the pressure and flow rate of flexible electrohydrodynamic pumps [30]. The authors evaluated the KAN model against random forest (RF) and MLP models using a dataset of flexible electrohydrodynamic pump parameters. In the experiments, KAN proved exceptionally accurate and interpretable, making it an appropriate alternative for predictive modeling of electrohydrodynamic pumping. In [31], the authors propose solving different forms of PDEs with KAN rather than MLP, an approach known as Kolmogorov-Arnold-Informed Neural Networks (KINNs). They compare MLP with KAN on several numerical PDE examples; for a number of PDEs in computational solid mechanics, KINN exceeds MLP in accuracy and convergence speed. Given the enthusiasm of researchers and KAN's excellent performance in different applications, more research results can be expected in the near future.

In the field of intrusion detection, there are also many excellent traditional deep learning models. A hybrid learning approach identifies malicious traffic with a lightweight, two-stage scheme [32], which protects the domain name system (DNS) well. A high-performance machine learning-based monitoring system for detecting malicious uniform resource locators (URLs) is presented in [33]; it uses a two-layer detection system and is reported to be superior in both binary and multi-class classification. A novel method for designing a smart IDS using software-defined networking (SDN) and deep learning is proposed in [34]. This approach considers the SDN framework a promising option that enables reconfiguration of static network infrastructure and separates the control plane from the data plane in smart consumer electronics networks. A method for detecting and classifying network activity in an IoT system using predictive machine learning is proposed in [35]. Five supervised learning models were evaluated to analyze their impact on the detection and classification of network activities in IoT systems, and the experimental results indicate that the model is highly accurate in detecting anomalies. A new method for detecting intrusions in IoT by stacking ensembles of deep learning models is proposed in [36]. The model is evaluated on three open-source datasets, covering binary and multi-class classification, and its results are compared with those of other standard machine learning methods. In experimental studies, it demonstrated a high level of accuracy and a low false positive rate (FPR). These deep learning models based on traditional architectures have played a profound role in advancing the field. Table 1 summarizes these relevant research efforts.

Proposed scheme

This section describes the main elements of this study, including the data pre-processing process, the model construction process, and the internal mechanisms specific to the proposed model. A diagram depicting the overall framework of this study is shown in Fig. 1.

Data pre-processing

The datasets used in this study are CICIoT2023 [37] and CICIoMT2024 [38]. Even state-of-the-art software and hardware cannot guarantee that collected data is completely correct, so the datasets are first cleaned to remove invalid data: records with null values, infinite values, or negative values in fields that should be positive are removed. After this step, all remaining data records are valid. The data samples are then balanced. The number of samples often differs greatly between data types in a collected dataset, so, to avoid unfairness toward data types with few samples, the sample set is quantitatively balanced. In this paper, each type of data is clustered using the K-Means algorithm, and the sample point at the center of each cluster is used as the representative sample for that cluster. A total of 10,000 representative samples were collected in this manner for each type of data.
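The balancing step can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: it assumes that the number of clusters `k` equals the number of representatives wanted per data type, uses a plain Lloyd iteration in place of whichever K-Means implementation was actually used, and takes the real sample nearest to each centroid as the "sample point at the center of the cluster". The function name and parameters are hypothetical.

```python
import numpy as np

def kmeans_representatives(X, k, n_iter=50, seed=0):
    """Cluster X with plain Lloyd's K-Means and return the indices of the
    k samples that lie nearest to the final centroids."""
    rng = np.random.default_rng(seed)
    # Initialise the centroids with k distinct samples.
    centroids = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    for _ in range(n_iter):
        # Squared distance of every sample to every centroid: shape (n, k).
        d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        new_centroids = centroids.copy()
        for j in range(k):
            members = X[labels == j]
            if len(members):  # keep the old centroid if a cluster empties
                new_centroids[j] = members.mean(axis=0)
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    # For each centroid, pick the real sample closest to it.
    return d2.argmin(axis=0)
```

Calling `kmeans_representatives(X_class, k)` once per data type would give the row indices of the samples to keep for that type. The full distance matrix makes this sketch practical only for small inputs.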

Data normalization is then performed on the selected samples. Because the feature values are unevenly distributed, they must be processed further before normalization. Figure 2 shows the result of analyzing one field with Isolation Forest (iForest) [39]. The blue area on the far left represents the normal data points identified by the analysis, which occupy only a small part of the range of values; the entire range is expanded dramatically by a small number of values. In the anomaly area, the background color indicates the density of outliers within that region. In the iForest analysis, the proportion of outliers was set to 0.1. As the figure shows, the vast majority of the sample points are concentrated in a very small area, whereas a small percentage are spread over a large area. Normalizing these samples directly on a proportional basis would squeeze the values of most sample points together and make them harder to distinguish. Without the widely varying values, the original small range becomes the global range, so normalization brings out the differences between features as much as possible under the existing conditions. Based on this analysis, each outlier value is set to the nearest inlier value and then normalized. The following equation illustrates the normalization operation:

\[ X^{\prime} = \frac{X - X_{\min}}{X_{\max} - X_{\min}} \]

where \(X\) denotes the original value, \(X^{\prime}\) the normalized value, \(X_{\min}\) the minimum value of the feature column, and \(X_{\max}\) the maximum value of the feature column.
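The effect of replacing outliers before normalization can be seen in a small pure-Python sketch. It assumes that an inlier mask is already available (for example from an Isolation Forest) and simplifies "nearest inlier value" to clamping into the inlier range, so an outlier lying inside that range is left unchanged. The function names and the sample column are hypothetical.

```python
def clip_to_inliers(values, is_inlier):
    """Move each value outside the inlier range to the nearest inlier bound."""
    inliers = [v for v, ok in zip(values, is_inlier) if ok]
    lo, hi = min(inliers), max(inliers)
    return [min(max(v, lo), hi) for v in values]

def min_max_normalize(values):
    """Scale values linearly to [0, 1]; a constant column maps to 0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

column = [1.0, 2.0, 3.0, 4.0, 1000.0]    # one extreme value stretches the range
mask = [True, True, True, True, False]   # assumed output of an outlier detector

direct = min_max_normalize(column)
clipped = min_max_normalize(clip_to_inliers(column, mask))
```

Normalized directly, the four ordinary values all fall below 0.01. After clipping, they are spread evenly over the whole interval from 0 to 1, which is the gain in distinguishability described above.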

Next, feature selection is performed with a particle swarm algorithm. Candidate feature sets are scored with a regression XGBoost model fitted to the classes to which the samples belong, and the swarm evolves in the direction that minimizes the root mean square error. The population size is 30 and the number of iterations is 100. The feature set with the highest evaluation score is selected. So that currently available deep learning models can use the processed dataset, the selected features are binary encoded and organized as a 2D matrix. The selected features are also evaluated for information gain, which can be calculated using the following formula:

\[ IG(S, A) = H(S) - \sum_{t} \frac{|S_t|}{|S|} H(S_t) \]

where \(IG(S, A)\) denotes the information gain of dataset \(S\) with respect to feature \(A\), \(t\) indexes the subsets partitioned according to feature \(A\), \(|S_t|\) is the number of samples in the subset \(S_t\), and \(|S|\) is the total number of samples in the original dataset \(S\). \(H(S_t)\) is the information entropy of the subset \(S_t\), which is defined in Eq. 3.

\[ H(X) = -\sum_{i} P(x_i) \log_b P(x_i) \]

where \(b\) is a constant, \(x_i\) is a sample point in a finite sample set, and \(P(x_i)\) is its probability. The few features with the highest information gain appear twice in the final feature representation, which makes key information in the feature set more prominent. The final representation therefore contains 36 features. Each feature is assembled into a \(4 \times 4\) matrix, the 36 feature matrices are arranged in a \(6 \times 6\) grid, and the resulting sample is a \(24 \times 24\) matrix.
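The information gain computation can be made concrete with a short pure-Python sketch. It assumes discrete feature values (a continuous feature would first have to be binned) and uses base 2 for the constant \(b\); both choices are assumptions of this sketch, not details taken from the paper, and the function names are hypothetical.

```python
import math
from collections import Counter

def entropy(labels, base=2):
    """Information entropy of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log(c / n, base) for c in Counter(labels).values())

def information_gain(feature_values, labels, base=2):
    """Entropy of the labels minus the weighted entropy of the subsets
    obtained by partitioning the samples on the feature value."""
    n = len(labels)
    subsets = {}
    for value, label in zip(feature_values, labels):
        subsets.setdefault(value, []).append(label)
    conditional = sum(len(s) / n * entropy(s, base) for s in subsets.values())
    return entropy(labels, base) - conditional
```

A feature that separates the classes perfectly recovers the full label entropy, while a feature that is independent of the labels has a gain of zero.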

Kolmogorov–Arnold Networks

Vladimir Arnold and Andrey Kolmogorov showed that a multivariate continuous function on a bounded domain can be written as a finite composition of continuous functions of a single variable and the binary operation of addition. Specifically, this can be expressed in the following equation:

\[ f(x_1, \dots, x_n) = \sum_{q=1}^{2n+1} \Phi_q\left( \sum_{p=1}^{n} \phi_{q,p}(x_p) \right) \]

where \(\phi_{q,p}: [0,1] \to \mathbb{R}\) and \(\Phi_q: \mathbb{R} \to \mathbb{R}\). Each layer of the KAN is composed of these learnable one-dimensional functions:

\[ \Phi = \{\phi_{q,p}\}, \quad p = 1, 2, \dots, n_{in}, \quad q = 1, 2, \dots, n_{out} \]

Each function \(\phi_{q,p}\) is rendered as a B-spline. B-spline functions are piecewise polynomial functions that are continuous and have continuous derivatives up to a finite order over the whole curve. The B-spline curve is a generalization of the Bézier curve that uses knot vectors and basis functions to allow finer control over the shape of the curve. A B-spline is a spline function created by a linear combination of basis splines, which effectively improves the network's ability to represent complex data. \(n_{in}\) is the number of input features of a layer and \(n_{out}\) is the number of its output features, which reflects the dimensional transformation.

Using an integer array, a KAN’s shape can be represented as follows:

\[ [n_0, n_1, \dots, n_L] \]

where \(n_i\) is the number of nodes in layer \(i\) of the computation graph. The \(i\)th neuron in the \(l\)th layer is denoted by \((l, i)\), and its activation value by \(x_{l,i}\). There are \(n_l n_{l+1}\) activation functions between layers \(l\) and \(l+1\). The activation function connecting \((l, i)\) and \((l+1, j)\) is as follows:

\[ \phi_{l,j,i}, \quad l = 0, \dots, L-1, \quad i = 1, \dots, n_l, \quad j = 1, \dots, n_{l+1} \]

KANs have an overall structure like that of MLPs, which stack layers. However, rather than relying on simple linear transformations and nonlinear activations, they make use of complex functional mappings.

The computational logic of a KAN with \(L\) layers is shown in Eq. 6. Each layer transforms its input \(x_l\) into the next layer's input \(x_{l+1}\) as follows:

\[ x_{l+1,j} = \sum_{i=1}^{n_l} \phi_{l,j,i}(x_{l,i}), \qquad KAN(x) = (\Phi_{L-1} \circ \Phi_{L-2} \circ \cdots \circ \Phi_0)(x) \]

The activation function \(\phi(x)\) is the weighted sum of the basis function \(b(x)\) and the spline function:

\[ \phi(x) = \omega_1 b(x) + \omega_2\, spline(x) \]

where \(\omega_1\) and \(\omega_2\) are the weight parameters of the corresponding parts. Here, set:

\[ b(x) = silu(x) = \frac{x}{1 + e^{-x}} \]

The \(spline(x)\) is a linear combination of B-splines \(B_i(x)\) with trainable coefficients \(c_i\). The learnable spline functions are:

\[ spline(x) = \sum_{i} c_i B_i(x) \]

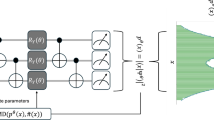
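A single learnable activation of this kind can be sketched in pure Python. The sketch assumes the common KAN parameterization in which \(b(x)\) is the SiLU function and the spline part is a cubic B-spline on a uniform knot grid over \([-1, 1]\), extended by \(k\) intervals on each side; the grid, the degree, and all function and parameter names are assumptions chosen for illustration. The basis functions are evaluated with the Cox-de Boor recursion.

```python
import math

def bspline_basis(i, k, knots, x):
    """Value at x of the i-th B-spline basis function of degree k (Cox-de Boor)."""
    if k == 0:
        return 1.0 if knots[i] <= x < knots[i + 1] else 0.0
    left = right = 0.0
    if knots[i + k] != knots[i]:
        left = ((x - knots[i]) / (knots[i + k] - knots[i])
                * bspline_basis(i, k - 1, knots, x))
    if knots[i + k + 1] != knots[i + 1]:
        right = ((knots[i + k + 1] - x) / (knots[i + k + 1] - knots[i + 1])
                 * bspline_basis(i + 1, k - 1, knots, x))
    return left + right

def make_knots(grid_size, k, lo=-1.0, hi=1.0):
    """Uniform knots on [lo, hi], extended by k intervals on each side."""
    h = (hi - lo) / grid_size
    return [lo + (j - k) * h for j in range(grid_size + 2 * k + 1)]

def silu(x):
    return x / (1.0 + math.exp(-x))

def phi(x, coeffs, knots, k, w_base=1.0, w_spline=1.0):
    """phi(x) = w_base * silu(x) + w_spline * sum_i coeffs[i] * B_i(x)."""
    spline = sum(c * bspline_basis(i, k, knots, x) for i, c in enumerate(coeffs))
    return w_base * silu(x) + w_spline * spline
```

With a grid of 5 intervals and degree 3 there are 5 + 3 = 8 basis functions, so `coeffs` needs 8 entries. Training would adjust `coeffs`, `w_base`, and `w_spline`; the sketch only evaluates the function.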

Attention based Convolutional Kolmogorov–Arnold Networks

The Convolutional Kolmogorov-Arnold Network (CKAN) is similar to the CNN: it integrates the advantages of KAN with the computational mechanisms of CNN. In comparison with other architectures, the CKAN has the advantage of requiring a relatively small number of parameters [40]. The kernel of a KAN convolution differs significantly from that of a CNN convolution. CNN kernels consist of weights, whereas the kernels of convolutional KANs consist of nonlinear functions constructed from B-splines. Each element of a convolution kernel has the same form as Eq. 10. Let \(K \in \mathbb{R}^{N \times M}\) be the KAN convolutional kernel. The KAN convolution can be defined as follows:

Suppose there is the following input matrix for which KAN convolution calculation needs to be performed.

If the kernel of the KAN convolution is 3*3:

The result is shown below:
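Independently of the example above, a KAN convolution can be sketched in Python. The sketch assumes the usual formulation in which output position \((i, j)\) is \(\sum_{k}\sum_{l} \phi_{kl}(a_{i+k,\,j+l})\), with \(a\) the input entries and \(\phi_{kl}\) the univariate function at kernel position \((k, l)\), no padding, and a stride of 1. The kernel functions here are plain Python callables standing in for trained B-spline activations; they and the input values are hypothetical and are not the matrices of the paper's example.

```python
def kan_conv2d(image, kernel):
    """KAN convolution without padding and with stride 1.

    image  : list of rows of numbers
    kernel : N x M list of callables; kernel[k][l] is the univariate
             function applied to the pixel under kernel position (k, l)
    """
    n, m = len(kernel), len(kernel[0])
    rows, cols = len(image), len(image[0])
    out = []
    for i in range(rows - n + 1):
        out_row = []
        for j in range(cols - m + 1):
            # Apply each kernel function to its pixel, then add the results.
            out_row.append(sum(kernel[k][l](image[i + k][j + l])
                               for k in range(n) for l in range(m)))
        out.append(out_row)
    return out

image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[lambda x: x,     lambda x: 2 * x],
          [lambda x: x * x, lambda x: 0.0]]
result = kan_conv2d(image, kernel)
```

For the top-left window the result is 1 + 2·2 + 4² + 0 = 21. Unlike a CNN kernel, nothing is multiplied by a fixed weight: each kernel position transforms its input with its own function.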

The overall structure of the proposed model is shown in Fig. 3. The core innovation of the KAN framework is to place learnable activation functions on the edges, whereas traditional frameworks place fixed activation functions on the nodes. This approach enables the model to learn more complex functional relationships between data. The weight parameters are replaced by parametric spline functions, which enhances the model's expressive potential and allows it to capture more detailed and complex information during training. Compared to traditional deep learning techniques, the KAN framework is also more interpretable. KAN is a structured, easy-to-understand system that facilitates human-computer interaction. Consequently, scientists can gain a solid understanding of the inner workings of the model, and even participate directly in its optimization and discovery. Scientists can guide models so that the laws of mathematics and physics can be discovered or verified, which facilitates collaboration between scientists and artificial intelligence.

The execution logic of the attention mechanism is shown in Algorithm 1.

Algorithm 1: Attention mechanism |
|---|
Input: Tensor |
Output: Tensor with added attention mechanism |
1 max_pool \(\leftarrow\) use maximum pooling to obtain global features for each channel |
2 avg_pool \(\leftarrow\) use average pooling to obtain global features for each channel |
3 # Define MLP, where channel_in and channel_out of Conv2d are equal. |
4 mlp \(\leftarrow\) Sequential (Conv2d (channel_in, channel_out, 1, bias = False), |
5 ReLU (), |
6 Conv2d (channel_in, channel_out, 1, bias = False)) |
7 conv \(\leftarrow\) Conv2d (2, 1, kernel_size = 3, padding = 1, bias = False) |
8 max_out \(\leftarrow\) mlp (max_pool (input)) |
9 avg_out \(\leftarrow\) mlp (avg_pool (input)) |
10 channel_out \(\leftarrow\) Sigmoid (max_out + avg_out) |
11 out1 \(\leftarrow\) channel_out * input |
12 max_out \(\leftarrow\) get the maximum value along the channel dimension |
13 avg_out \(\leftarrow\) get the average value along the channel dimension |
14 spatial_out \(\leftarrow\) Sigmoid (conv (cat ([max_out, avg_out], dim = 1))) |
15 out \(\leftarrow\) spatial_out * out1 |
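The steps of Algorithm 1 can be traced in a small NumPy sketch. It makes several assumptions that the algorithm does not state: it handles a single sample of shape (C, H, W) without a batch dimension, it takes the channel-wise maximum and average in steps 12 and 13 from `out1`, and it writes the two bias-free 1×1 convolutions as matrix products, which is equivalent when they act on pooled per-channel vectors. The weights are passed in explicitly, and all names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def conv3x3_same(x, w):
    """3x3 cross-correlation with zero padding 1 and no bias.
    x: (C_in, H, W), w: (C_in, 3, 3) -> (H, W)."""
    _, h, wd = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((h, wd))
    for dy in range(3):
        for dx in range(3):
            window = padded[:, dy:dy + h, dx:dx + wd]
            out += (window * w[:, dy, dx, None, None]).sum(axis=0)
    return out

def attention(x, w1, w2, w_sp):
    """x: (C, H, W); w1, w2: (C, C) weights of the two 1x1 convolutions;
    w_sp: (2, 3, 3) weights of the spatial convolution."""
    # Steps 1-2: global max and average pooling per channel.
    max_pool = x.max(axis=(1, 2))
    avg_pool = x.mean(axis=(1, 2))
    # Steps 4-6: shared MLP (1x1 conv, ReLU, 1x1 conv) as matrix products.
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)
    # Steps 8-11: channel attention rescales every channel.
    channel_out = sigmoid(mlp(max_pool) + mlp(avg_pool))
    out1 = channel_out[:, None, None] * x
    # Steps 12-14: spatial attention from channel-wise max and mean maps.
    max_out = out1.max(axis=0)
    avg_out = out1.mean(axis=0)
    spatial_out = sigmoid(conv3x3_same(np.stack([max_out, avg_out]), w_sp))
    # Step 15: rescale every spatial position.
    return spatial_out[None, :, :] * out1
```

The output has the same shape as the input, so the block can be placed between layers without changing the surrounding dimensions.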

The ReLU function is defined as follows:

\[ ReLU(x) = \max(0, x) \]

And the sigmoid function:

\[ Sigmoid(x) = \frac{1}{1 + e^{-x}} \]

Algorithm 2 below demonstrates the computational logic of the loss function used to train the proposed model.

Algorithm 2: Loss function for the proposed model |
|---|
Input: Number of classifications \(\to\) n_class; Sample prediction results \(\to\) predict; Sample label \(\to\) target |
Output: Loss value between prediction and real label |
1 correct \(\leftarrow\) get the prediction result’s probability of correct classification |
2 predict \(\leftarrow\) probability value of the current prediction classification |
3 ids \(\leftarrow\) rank the probability of the correct classification |
4 \(\alpha \leftarrow predict - correct\) |
5 \(\beta \leftarrow 1 - correct\) |
6 loss \(\leftarrow mean(n\_class * \alpha + (ids + 1) * \beta)\) |
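One possible reading of Algorithm 2 is sketched below in pure Python. Several points are assumptions of this sketch: the predictions are taken to be per-class probabilities, "predict" in step 2 is taken to be the largest predicted probability, and the rank in step 3 is taken to be the number of classes whose probability exceeds that of the correct class, so it is 0 when the correct class ranks first. The function name is hypothetical, and the sketch only evaluates the loss; it says nothing about how gradients are obtained.

```python
def ranking_loss(probs, targets, n_class):
    """probs: list of per-sample probability lists;
    targets: list of true class indices."""
    total = 0.0
    for p, t in zip(probs, targets):
        correct = p[t]                                 # step 1
        predict = max(p)                               # step 2
        ids = sum(1 for q in p if q > correct)         # step 3
        alpha = predict - correct                      # step 4
        beta = 1.0 - correct                           # step 5
        total += n_class * alpha + (ids + 1) * beta    # step 6, per sample
    return total / len(probs)                          # step 6, mean
```

Under this reading, a confident correct prediction gives a loss of zero, and the penalty grows both with the probability gap to the predicted class and with how far down the ranking the correct class falls.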

Training a model uses the following strategy to adjust the learning rate:

\(\zeta_1\) is the starting learning rate used for the first epoch of model training. \(I_{\max}\) is the total number of epochs for which the model is trained, and \(i\) is the index of the current training epoch.

Performance evaluation and discussion

This section reports experiments with the proposed model. The experimental environment and evaluation metrics are presented, and the experimental results are compared with those of classical deep learning models in wide use today and discussed.

Experimental environment

For model validation, the experimental environment is as follows:

- Operating system: Linux-5.15.120+-x86_64-with-glibc2.31.

- CPU: Intel(R) Xeon(R) @ 2.20 GHz, 4 Core(s), 42.5 W.

- RAM: 32 GB, 11.76 W.

The GPU(s) used for model training:

- NVIDIA Tesla P100 (16 GB).

Evaluation metrics

A number of criteria were used for evaluating each model during the experiments, and the major evaluation criteria were as follows:

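(1) The accuracy of detection, which is an indication of a model’s basic capability. Evaluation indicators used in this study include:

\[ Accuracy = \frac{TP + TN}{TP + TN + FP + FN} \]

\[ Precision = \frac{TP}{TP + FP} \]

\[ Recall = \frac{TP}{TP + FN} \]

\[ F1\text{-}score = \frac{2 \times Precision \times Recall}{Precision + Recall} \]

\[ FPR = \frac{FP}{FP + TN} \]

TP stands for true positive. TN stands for true negative. FP and FN stand for false positives and false negatives, respectively.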

(2) The complexity of a model is measured by three factors: the number of parameters included in it, the amount of computation required for each sample to be analyzed, and the amount of memory occupied by the model when training is complete.

(3) Execution speed, measured in terms of sample processing per second.

(4) Memory allocation of the model during sample processing.

(5) Energy consumption is determined by averaging the power consumption for 10,000 samples.
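For the accuracy indicators in criterion (1), the per-class values can be derived from a multi-class confusion matrix by treating one class as positive and all others as negative. The pure-Python sketch below assumes that `confusion[i][j]` counts samples of true class `i` predicted as class `j`, and it returns 0 when a denominator is zero; both conventions and the function name are assumptions of this sketch.

```python
def per_class_metrics(confusion, cls):
    """One-vs-rest metrics for class `cls` from a square confusion matrix."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    tp = confusion[cls][cls]
    fn = sum(confusion[cls]) - tp                        # missed positives
    fp = sum(confusion[i][cls] for i in range(n)) - tp   # false alarms
    tn = total - tp - fn - fp

    def div(a, b):
        return a / b if b else 0.0

    precision = div(tp, tp + fp)
    recall = div(tp, tp + fn)
    return {
        "accuracy": div(tp + tn, total),
        "precision": precision,
        "recall": recall,
        "f1": div(2 * precision * recall, precision + recall),
        "fpr": div(fp, fp + tn),
    }
```

For example, with the two-class matrix `[[8, 2], [1, 9]]` and class 0 as positive, there are 8 true positives, 2 false negatives, 1 false positive, and 9 true negatives.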

Experimental results and discussion

Different data types were encoded in the experimental session to facilitate the presentation of experimental results. The correspondence between specific data types and their encodings is shown in Table 2.

Table 3 shows the overall classification accuracy performance of all the models used in this study. Tables 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14 and 15 present detailed experimental results for each model, based on the classification of the data. Figures 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14 and 15 illustrate the confusion matrices corresponding to these models.

The comparative models in the experiments include nine commonly used classical models and two state-of-the-art models, Spikformer [41] and SpikingGCN [42]. The experimental results show that the model proposed in this paper outperforms the other models in overall classification accuracy, which indicates a strong capability for analyzing data features. More detailed metrics include recall, precision, F1-score, and false positive rate. Recall is the proportion of samples predicted to be positive out of all samples that are actually positive; it assesses the model’s ability to find the positive samples. Precision is the proportion of samples that are actually positive out of all samples predicted to be positive; it assesses the quality of the model’s positive predictions. The F1-score is the harmonic mean of the two and is used to analyze them jointly. The false positive rate is the number of false positive samples divided by the total number of actual negative samples (false positives plus true negatives); it can be used to assess the model’s reliability and validity. On these metrics, the proposed model is slightly worse than other models for some classes but remains dominant overall. The results indicate that the model proposed in the paper is superior overall on the accuracy metrics.

The performance metrics exhibited by these models during execution are presented in Table 16. These include the number of parameters in the model, the number of floating-point operations to compute a single sample, and the size of the model when training is complete. In addition, the number and total amount of memory allocations required by the model during model validation were also counted. Data related to memory is derived from the tracemalloc Python library. These values are calculated from the system memory snapshot when the model processes a single sample. Energy consumption and sample processing speed were also quantified.
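A measurement of this kind can be sketched with the standard `tracemalloc` module. The helper below is a hypothetical illustration, not the measurement script used in the experiments: it traces one call, takes a snapshot while the result is still alive, and sums the number and size of the memory blocks recorded in that snapshot. Blocks already freed when the snapshot is taken are not counted, and only allocations made through Python's memory allocator are visible to `tracemalloc`.

```python
import tracemalloc

def measure_allocations(fn, *args):
    """Run fn(*args) under tracemalloc and return
    (result, number_of_blocks, total_bytes, peak_bytes)."""
    tracemalloc.start()
    try:
        result = fn(*args)                      # keep the result alive
        snapshot = tracemalloc.take_snapshot()
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    stats = snapshot.statistics("lineno")
    n_blocks = sum(s.count for s in stats)
    total_bytes = sum(s.size for s in stats)
    return result, n_blocks, total_bytes, peak

def fake_inference(n):
    """Stand-in for processing one sample: allocates n float objects."""
    return [float(i) for i in range(n)]

result, n_blocks, total_bytes, peak = measure_allocations(fake_inference, 10000)
```

In an actual measurement, `fake_inference` would be replaced by a call that runs the model on a single sample.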

It can be seen from the experimental results that the proposed CKAN model can achieve classification accuracy superior to the other models. This is done while using only a much smaller number of parameters than the others. The size of the resulting model from the final training is also smaller than most models. However, in terms of memory allocation, both the number of allocations and the allocated memory space are greater than the other models. This also led to its poor performance in two subsequent metrics, power consumption and sample processing speed.

The proposed model is significantly smaller than the other models in both network depth and number of parameters. Processing samples, however, requires more memory than in other models. It follows that the memory available in the runtime environment plays a very significant role in the execution of the model: an efficient memory allocation strategy enhances execution efficiency, whereas an inefficient one becomes an execution bottleneck. The experimental results also show that very little of the memory used by the model is reused during inference, so the model must perform memory allocation and memory write operations more frequently. Consequently, the model detects samples at a slower rate than other models. While other models have a larger number of parameters, they can usually manage their parameters in memory more conveniently. This is mainly because KAN’s connection weights are computed from B-spline functions rather than being simple linear weights, which is more computationally complex. Currently, the proposed model is only suitable for scenarios with sufficient computational resources, where it can demonstrate its advantages in detection. In resource-constrained settings, it is slow and less efficient. Furthermore, because of its energy consumption, the model is not suitable for situations where energy resources are limited.

Although the model statistics show that the KAN framework requires far fewer parameters and floating-point computations, its performance is poor in terms of memory usage: it performs memory operations more frequently and requires more memory space. Therefore, improving the execution efficiency of deep learning models based on the KAN framework must start with memory and computational strategies. The way calculations are performed needs to be optimized to maximize computational efficiency and reduce memory allocation requirements. Another idea is to design hardware architectures that are better suited to composite computing. Current hardware designs favor traditional deep learning frameworks, which highlights the disadvantages of the KAN framework. If the KAN framework makes significant progress in execution efficiency, it could become one of the most desirable deep learning frameworks.
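One generic way to reduce allocation requirements is to write intermediate results into a preallocated buffer instead of creating new arrays at each step. The sketch below illustrates that idea with NumPy's `out=` argument. It is an assumption-laden illustration rather than an optimization proposed or evaluated in this paper: the function names, the `tanh` activation, and the array shapes are hypothetical.

```python
import numpy as np

def score_fresh(batches, w):
    # Each np.tanh(b * w) allocates two temporaries per batch:
    # one for the product and one for the tanh result.
    return [float(np.tanh(b * w).sum()) for b in batches]

def score_reused(batches, w, scratch):
    # Same arithmetic, but both steps write into one caller-provided
    # buffer, so no new array is allocated inside the loop.
    scores = []
    for b in batches:
        np.multiply(b, w, out=scratch)
        np.tanh(scratch, out=scratch)
        scores.append(float(scratch.sum()))
    return scores

# Illustrative data only.
rng = np.random.default_rng(0)
batches = [rng.standard_normal((64, 16)) for _ in range(4)]
w = rng.standard_normal(16)
scratch = np.empty((64, 16))   # allocated once, reused for every batch
fresh = score_fresh(batches, w)
reused = score_reused(batches, w, scratch)
```

Both functions return the same scores; the second simply trades a fixed scratch buffer for the repeated allocations of the first.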

Conclusions and future work

In the experiments, the proposed model was compared with nine currently popular classical models and two state-of-the-art models using a comprehensive set of indicators. Overall, the CKAN model leads all the other models in classification accuracy. In terms of computational efficiency and energy consumption, however, it has limitations. The current model is better suited to scenarios with sufficient computational resources and may not perform as well when computational resources are limited.

Compared with traditional deep learning models, deep learning models based on the KAN architecture replace the original connection weights with a form fitted by a finite number of spline functions. This substitution significantly increases the computational effort, because the trainable parameters change from linear objects to non-linear objects. On the one hand, this markedly increases the model’s training duration. On the other hand, the model’s sample processing speed during validation is lower than that of the other models. The results also show that the model requires frequent memory manipulation during inference. The advantage is that the model fits the data more precisely, leading to improved accuracy.
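The substitution can be written out explicitly. The block below follows the edge-function form given in the original KAN paper (Liu et al., listed in the references); whether the proposed model uses exactly this parameterization is an assumption here. The symbols $w$, $w_b$, $w_s$, $c_i$, $G$ and $k$ are introduced only for this illustration.

```latex
\begin{align}
  % Conventional connection: a single trainable scalar weight
  y &= w\,x \\
  % KAN connection: a trainable univariate function on each edge
  \phi(x) &= w_b\, b(x) + w_s \sum_{i=1}^{G+k} c_i\, B_i(x),
  \qquad b(x) = \frac{x}{1 + e^{-x}}
\end{align}
```

Here $B_i$ are B-spline basis functions of degree $k$ defined on a grid of $G$ intervals, and the coefficients $c_i$ are trainable. Evaluating $\phi$ therefore involves $G + k$ basis evaluations per connection instead of one multiplication.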

Future work will examine the spline-function fitting calculations in the model in greater depth. At present, this part is the bottleneck in the model’s computational efficiency. If this computational mechanism can be made more efficient, the execution efficiency of models based on the KAN architecture will improve significantly, and KAN-based deep learning models can be expected to be adopted in more and wider fields.

Data availability

The datasets used in this paper are publicly available at https://www.unb.ca/cic/datasets/iotdataset-2023.html and https://www.unb.ca/cic/datasets/iomt-dataset-2024.html.

References

Chataut, R., Phoummalayvane, A. & Akl, R. Unleashing the power of IoT: A comprehensive review of IoT applications and future prospects in healthcare, agriculture, smart homes, smart cities, and industry 4.0. Sensors, 23(16), p.7194. (2023).

Huda, N. U., Ahmed, I., Adnan, M., Ali, M. & Naeem, F. Experts and intelligent systems for smart homes’ Transformation to Sustainable Smart Cities: A comprehensive review. Expert Systems with Applications, 238, p.122380. (2024).

Kamm, S., Veekati, S. S., Müller, T., Jazdi, N. & Weyrich, M. A survey on machine learning based analysis of heterogeneous data in industrial automation. Computers in Industry, 149, p.103930. (2023).

Hui, C. X., Dan, G., Alamri, S. & Toghraie, D. Greening smart cities: An investigation of the integration of urban natural resources and smart city technologies for promoting environmental sustainability. Sustainable Cities and Society, 99, p.104985. (2023).

Choi, D. D. & Lowry, P. B. Balancing the commitment to the common good and the protection of personal privacy: Consumer adoption of sustainable, smart connected cars. Information & management, 61(1), p.103876. (2024).

Mary, D. S., Dhas, L. J. S., Deepa, A. R., Chaurasia, M. A. & Sheela, C. J. J. Network intrusion detection: An optimized deep learning approach using big data analytics. Expert Systems with Applications, 251, p.123919. (2024).

Mazhar, T. et al. Analysis of challenges and solutions of IoT in smart grids using AI and machine learning techniques: A review. Electronics, 12(1), p.242. (2023).

Shandler, R. & Gomez, M. A. The hidden threat of cyber-attacks–undermining public confidence in government. J. Inform. Technol. Politics. 20 (4), 359–374 (2023).

El-Ghamry, A., Darwish, A. & Hassanien, A. E. An optimized CNN-based intrusion detection system for reducing risks in smart farming. Internet of Things, 22, p.100709. (2023).

Wang, Z. & Ghaleb, F. A. An attention-based convolutional neural network for intrusion detection model. IEEE Access. 11, 43116–43127 (2023).

Wang, Z., Ghaleb, F. A., Zainal, A., Siraj, M. M. & Lu, X. An efficient intrusion detection model based on convolutional spiking neural network. Scientific Reports, 14(1), p.7054. (2024).

Kasongo, S. M. A deep learning technique for intrusion detection system using a recurrent neural networks based framework. Comput. Commun. 199, 113–125 (2023).

Kumar, G. S. C., Kumar, R. K., Kumar, K. P. V., Sai, N. R. & Brahmaiah, M. Deep residual convolutional neural network: an efficient technique for intrusion detection system. Expert Systems with Applications, 238, p.121912. (2024).

Bakhsh, S. A. et al. Enhancing IoT network security through deep learning-powered Intrusion Detection System. Internet of Things, 24, p.100936. (2023).

Hnamte, V., Najar, A. A., Nhung-Nguyen, H., Hussain, J. & Sugali, M. N. DDoS attack detection and mitigation using deep neural network in SDN environment. Computers & Security, 138, p.103661. (2024).

Liu, Z. et al. Kan: Kolmogorov-arnold networks. Preprint at https://doi.org/10.48550/arXiv.2404.19756 (2024).

Ta, H. T. BSRBF-KAN: a combination of B-splines and Radial Basic functions in Kolmogorov-Arnold Networks. Preprint at https://doi.org/10.48550/arXiv.2406.11173 (2024).

Abueidda, D. W., Pantidis, P. & Mobasher, M. E. Deepokan: deep operator network based on Kolmogorov Arnold networks for mechanics problems. Preprint at https://doi.org/10.48550/arXiv.2405.19143 (2024).

Aghaei, A. A. fKAN: Fractional Kolmogorov-Arnold Networks with trainable Jacobi basis functions. Preprint at https://doi.org/10.48550/arXiv.2406.07456 (2024).

Aghaei, A. A. rKAN: Rational Kolmogorov-Arnold Networks. Preprint at https://doi.org/10.48550/arXiv.2406.14495 (2024).

Samadi, M. E., Müller, Y. & Schuppert, A. Smooth Kolmogorov Arnold networks enabling structural knowledge representation. Preprint at https://doi.org/10.48550/arXiv.2405.11318 (2024).

Bozorgasl, Z. & Chen, H. Wav-kan: Wavelet Kolmogorov-Arnold networks. Preprint at https://doi.org/10.48550/arXiv.2405.12832 (2024).

Xu, J. et al. FourierKAN-GCF: Fourier Kolmogorov-Arnold Network–An effective and efficient feature Transformation for Graph Collaborative Filtering. Preprint at https://doi.org/10.48550/arXiv.2406.01034 (2024).

Kiamari, M., Kiamari, M. & Krishnamachari, B. GKAN: Graph Kolmogorov-Arnold Networks. Preprint at https://doi.org/10.48550/arXiv.2406.06470 (2024).

Bresson, R. et al. KAGNNs: Kolmogorov-Arnold Networks meet Graph Learning. Preprint at https://doi.org/10.48550/arXiv.2406.18380 (2024).

Vaca-Rubio, C. J., Blanco, L., Pereira, R. & Caus, M. Kolmogorov-Arnold networks (kans) for time series analysis. Preprint at https://doi.org/10.48550/arXiv.2405.08790 (2024).

Xu, K., Chen, L. & Wang, S. Kolmogorov-Arnold Networks for Time Series: Bridging Predictive Power and Interpretability. Preprint at https://doi.org/10.48550/arXiv.2406.02496 (2024).

Genet, R. & Inzirillo, H. Tkan: temporal kolmogorov-arnold networks. Preprint at https://doi.org/10.48550/arXiv.2405.07344 (2024).

Cheon, M. Kolmogorov-Arnold Network for Satellite Image classification in Remote Sensing. Preprint at https://doi.org/10.48550/arXiv.2406.00600 (2024).

Peng, Y. et al. Predictive modeling of flexible EHD pumps using Kolmogorov-Arnold Networks. Biomimetic Intelligence and Robotics, 4(4), p. 100184. (2024).

Wang, Y. et al. Kolmogorov Arnold Informed neural network: A physics-informed deep learning framework for solving PDEs based on Kolmogorov Arnold Networks. Comput. Methods Appl. Mech. Engrg. 433, p. 117518. (2024).

Abu Al-Haija, Q., Alohaly, M. & Odeh, A. A lightweight double-stage scheme to identify malicious DNS over HTTPS traffic using a hybrid learning approach. Sensors, 23(7), p.3489. (2023).

Abu Al-Haija, Q. & Al-Fayoumi, M. An intelligent identification and classification system for malicious uniform resource locators (URLs). Neural Comput. Appl. 35 (23), 16995–17011 (2023).

Javeed, D. et al. An intelligent intrusion detection system for smart consumer electronics network. IEEE Trans. Consum. Electron. 69 (4), 906–913 (2023).

Alsulami, A. A., Abu Al-Haija, Q., Tayeb, A. & Alqahtani, A. An intrusion detection and classification system for IoT traffic with improved data engineering. Applied Sciences, 12(23), p.12336. (2022).

Lazzarini, R., Tianfield, H. & Charissis, V. A stacking ensemble of deep learning models for IoT intrusion detection. Knowl. Based Syst. 279, 110941 (2023).

Neto, E. C. P. et al. CICIoT2023: A real-time dataset and benchmark for large-scale attacks in IoT environment. Sensors, 23(13), p.5941. (2023).

Dadkhah, S. et al. CICIoMT2024: Attack vectors in healthcare devices-A multi-protocol dataset for assessing IoMT device security. (2024).

Liu, F. T., Ting, K. M. & Zhou, Z. H. Isolation forest. In 2008 Eighth IEEE International Conference on Data Mining (pp. 413–422). IEEE. (2008).

Bodner, A. D., Tepsich, A. S., Spolski, J. N. & Pourteau, S. Convolutional Kolmogorov-Arnold Networks. Preprint at https://doi.org/10.48550/arXiv.2406.13155 (2024).

Zhou, Z. et al. Spikformer: when spiking neural network meets transformer. Preprint at http://arxiv.org/abs/2209.15425 (2022).

Zhu, Z. et al. Spiking graph convolutional networks. Preprint at http://arxiv.org/abs/2205.02767 (2022).

Funding

This work was supported by the Talents Subsidized Project (R23165) of Tongling University under Grant 2024tlxyrc020 and by the research project (K24061) of Tongling University under Grant 2024tlxyxdz280.

Author information

Contributions

Zhen Wang conducted most of the experiments and drafted the manuscript. Anazida Zainal, Maheyzah Md Siraj and Fuad A. Ghaleb organized discussions and provided many suggestions for this work. Xue Hao assisted with the experiments and collected and compiled the results. Shaoyong Han provided various experimental environments and computational resources, and offered insightful ideas during the research process. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, Z., Zainal, A., Siraj, M.M. et al. An intrusion detection model based on Convolutional Kolmogorov-Arnold Networks. Sci Rep 15, 1917 (2025). https://doi.org/10.1038/s41598-024-85083-8

DOI: https://doi.org/10.1038/s41598-024-85083-8
