Abstract

This work aims to explore the application of artistic creativity using deep learning (DL) and artificial intelligence (AI) in the evaluation system of innovation and entrepreneurship. Moreover, this work intends to propose a new perspective for talent cultivation in animation majors in higher education institutions. Addressing the strong subjectivity and lack of quantitative indicators in traditional artistic creativity evaluation methods, this work constructs an artistic creativity innovation evaluation model based on the Backpropagation Neural Network (BPNN) integrated with the Style-Based Generative Adversarial Network (StyleGAN) algorithm. After preprocessing the artistic creativity image data, the data are input into the BPNN and StyleGAN fusion model. The model training uses the Adam optimizer, with 120 iterations set, and incorporates Dropout layers during training to enhance the model’s generalization ability. The performance of the model is evaluated, revealing that it outperforms existing technologies in indicators such as loss function value, fitting effect, accuracy, precision, recall, and F1 score, with an accuracy of up to 96.30%. Additionally, this work explores the potential application of this model in talent cultivation in animation majors in higher education institutions, providing new teaching tools and methods to enhance students’ innovation ability and practical skills. Therefore, this work offers a new technical means for artistic creativity evaluation and provides important references and guidance for innovation in higher education art education models and talent cultivation in the animation industry.

Introduction

In today’s era of globalization and rapid advancements in information technology, artistic creativity is not only an important form of aesthetic expression but also a key driver of social progress and economic vitality1,2. However, existing evaluation systems for artistic creativity often have significant shortcomings and fail to meet the urgent demand for assessing artistic creativity in innovation and entrepreneurship3. Traditional evaluation methods largely rely on subjective judgment and qualitative analysis, lacking objectivity and practical assessment tools. As a result, the evaluation of artistic creativity often varies from person to person and fails to accurately reflect its innovativeness and market potential. Moreover, with the deep integration of art with technology, business, and other fields, the role of artistic creativity in innovation and entrepreneurship has become increasingly prominent. This intensifies the demand for technologies and evaluation systems that can comprehensively and objectively assess artistic creativity.

In order to address this issue, deep learning (DL), as a branch of artificial intelligence (AI), shows great potential when applied to the evaluation of artistic creativity. DL algorithms can learn from a large number of artworks and extract key features, enabling automatic identification and analysis of artistic works4,5. By training deep neural networks, they can achieve high recognition accuracy and intelligence, thereby helping to construct a more objective and comprehensive evaluation system for artistic creativity6,7,8. By combining DL with other AI technologies such as machine learning and natural language processing, intelligent evaluation models can be built to assist in assessing key factors such as innovation and market potential of artworks. This can provide technical support and solutions for building innovative evaluation systems and enhancing the level of talent cultivation in animation education9,10.

This work aims to address the problems and challenges in the fields of artistic creativity, innovation and entrepreneurship evaluation, and talent cultivation in animation education. First, it focuses on constructing an innovation and entrepreneurship evaluation system suitable for the field of artistic creativity. By integrating DL, this work aims to learn from a vast array of artistic works and extract key features to achieve automatic identification and analysis of artistic works. Additionally, this work establishes intelligent evaluation models to assist in assessing crucial factors such as innovation and market potential of artistic works, aiming for a more comprehensive and accurate evaluation of artistic creativity. Simultaneously, this work conducts an in-depth analysis of existing talent cultivation models in animation education and proposes improvement measures. Finally, this work explores the prospects of AI technology in animation education to foster students’ innovation and practical abilities, injecting new vitality into the development of the animation industry.

Literature review

Overview of the current status of artistic creativity evaluation

In the field of artistic creativity evaluation, many scholars have conducted related research. For example, Acar and Runco studied assessment methods for divergent thinking, proposed new measurement tools, and expanded creativity theory. They emphasized the role of data-driven approaches in creativity research and provided a more theoretically supported direction for future creativity assessment11. Kozhevnikov et al. emphasized the role of visual ability and cognitive style in artistic creativity but noted that existing research primarily relied on subjective judgments, lacking objectivity and quantifiable metrics. This highlighted significant limitations in traditional creativity assessment methods, which struggled to meet the demand for objective evaluation in innovation and entrepreneurship fields12. Cruz et al., through a systematic review, identified a correlation between emotional states and creativity, but their study also depended on subjective assessments, further underscoring the limitations of traditional approaches in quantifying creativity13. Díaz-Portugal et al. investigated emotion-driven decision-making in cultural and creative entrepreneurs during opportunity evaluation, finding limited impact of emotions on opportunity assessment. This provided new insights into the psychological mechanisms of creative decision-making but also revealed that traditional assessment methods were inadequate for handling complex emotional and creative factors14. Collectively, these studies demonstrate that traditional creativity assessment methods suffer from strong subjectivity and a lack of quantitative indicators.

The data-driven method can provide a more objective and comprehensive evaluation to better meet the needs of artistic creativity evaluation in the field of innovation and entrepreneurship. For example, Plucker reviewed the latest developments in creativity assessment, and explored current challenges and future directions. Their study pointed out that creativity measurement tools had been increasingly refined and highlighted the need to integrate multidimensional approaches to enhance measurement accuracy. It also emphasized the importance of creativity assessment in education and social applications15. Hitsuwari et al. performed aesthetic evaluations of poetry co-created by humans and AI, and discovered no significant difference in aesthetic quality between human-created works and AI-generated ones. This offered new insights into the application of AI in artistic creation16. However, their study primarily focused on the creative outcomes of AI-human collaboration and did not explore how to enhance the quantitative level of artistic creativity through an evaluation system. Sung et al. developed and validated a DL-based computerized creativity assessment tool that enabled automatic scoring. The method combines text analysis with generative models to improve the objectivity and efficiency of the assessment, drive the intelligent development of creativity measurement and provide new ideas and technological support for future research17. However, they did not explore how to translate these findings into practical evaluation tools.

Overview of the current status of innovation and entrepreneurship evaluation

In the field of innovation and entrepreneurship evaluation, researchers primarily focus on market potential, business models, and innovation within entrepreneurial projects. For example, Koch et al. studied innovation in the creative industries, linked the founder’s creativity with commercial orientation and explored its impact on innovation outcomes18. Ávila et al. revealed the importance of emotions in art entrepreneurship practices by examining the emotional experiences of entrepreneurs, and offered new perspectives for entrepreneurship education19. These studies emphasized the importance of artistic creativity in the business realm but often overlooked its unique role in innovation and entrepreneurship.

Furthermore, Marins et al. and Purnomo introduced an aesthetic perspective from cultural and artistic entrepreneurship. However, these studies mainly focused on the cultural creative industries and lacked exploration of the role of artistic creativity in broader innovation and entrepreneurship fields20,21. This gap highlights the necessity of developing a more inclusive evaluation system to fully recognize the multifaceted role of artistic creativity in driving innovation and entrepreneurship.

Purg et al. explored the mutual influence between art and entrepreneurship through case studies and theoretical analysis, and proposed new ideas to promote the development of the creative industries22. They noted that the combination of art and entrepreneurship could not only stimulate new business models but also bring profound social and cultural impacts. However, their study focused more on the theoretical connections between art and entrepreneurship and did not clarify how to combine artistic creativity with commercial value through an artistic evaluation system. Muriel-Nogales et al. focused on the innovative practices and policies of social enterprises and third-sector organizations in the cultural and artistic fields. They provided a wealth of case studies and experiences, and revealed the potential of the cultural and artistic sector in social transformation23. These studies offered valuable insights into the role of artistic creativity in social and cultural innovation, but there was still a lack of in-depth exploration regarding the construction of specific evaluation metrics and methods.

The current application of DL and AI in artistic creativity

In recent years, the application of DL and AI technologies in the evaluation of artistic creativity has gradually gained attention. Khalid et al. used DL algorithms to achieve automatic recognition and monitoring of agricultural pests. This demonstrated AI’s powerful capabilities in image recognition and feature extraction, and offered technical insights for evaluating artistic creativity24. Ma et al. reviewed the DL methods in microbial image analysis, and highlighted their advantages in image classification and feature recognition, further proving the potential of DL in processing complex image data25. Chen et al. improved the quality and diagnostic accuracy of medical images through DL algorithms. Their findings showed that these technologies could effectively enhance image details and improve analysis, which provided a reference for image processing and feature extraction in artworks26. Additionally, Fu et al. used DL technology to achieve 3D super-resolution imaging in cell biology, and demonstrated the application prospects of AI in image generation and optimization27. Gendy et al. reviewed lightweight image super-resolution methods, and pointed out the potential of DL technologies in enhancing image details and visual representation, providing richer technical tools for artistic creativity evaluation28. These studies indicate that DL and AI technologies can offer objective quantitative indicators and efficient technical support for evaluating artistic creativity.

Despite this, the application of DL and AI technologies in evaluating artistic creativity remains in an exploratory phase. Existing studies focus mostly on technology development and validation, lacking in-depth integration with the actual needs of the art field. For example, how to combine AI technologies with the aesthetic features of artworks, how to use quantitative indicators to comprehensively assess the innovativeness and market potential of artistic creativity, and how to apply these technologies in art education and talent development are still weak points in current research. Recent studies have begun to fill this gap. For example, Jin et al. used the Generative Adversarial Network (GAN) to generate pencil sketches and combined learning analytics to provide personalized art education experiences for students29. Their research demonstrates the potential of AI in art education but lacks a systematic framework for quantifying artistic creativity. Similarly, Tsita et al. designed a virtual reality environment to enhance users’ understanding of contemporary art, but the focus of the research was on user experience, with limited applicability to creativity evaluation30.

In the field of animation education, existing research mainly focuses on curriculum design, teaching methods, and practical aspects. For example, Liu et al. studied a digital learning system based on multiplayer online animation games, and found that it effectively enhanced students’ interest in learning and hands-on skills31. Wang and Tao proposed an animation media art teaching design based on big data integration technology, and the results showed that this method effectively improved students’ learning outcomes and satisfaction32. However, most of these studies remain at the theoretical level, lacking close alignment with industry needs. In particular, in terms of artistic creativity evaluation, they have not fully explored how to enhance students’ innovative abilities and practical skills through an evaluation system. Furthermore, although Abdubannobovna and Orifakhon discussed recent advancements in art education technology, these studies lack a clear connection to the specific needs of the animation industry33. This gap highlights the need for animation education models to be more closely aligned with industry demands and integrate creativity evaluation systems to enhance students’ innovative abilities and practical skills.

Research gaps and innovations

The literature of various scholars is further summarized, as shown in Table 1.

In summary, while existing research has made some progress in the areas of art creativity evaluation, innovation and entrepreneurship evaluation, and the application of DL and AI technologies in art education, there are still some shortcomings. For example, current research still relies too heavily on subjective factors and lacks the ability to transform artistic creation evaluation systems into practical assessment tools. The application of DL and AI technologies in the field of art still requires further exploration. The animation education model tends to be too theoretical and lacks a strong connection with industry demands. Additionally, existing studies on art creativity evaluation have not fully addressed how to apply evaluation systems in art education, particularly in the context of talent development in animation programs. Therefore, this work aims to fill these research gaps by constructing a DL and AI-based evaluation model. This model combines the advantages of BPNN and StyleGAN methods. Specifically, BPNN can establish a mapping relationship between the features of artistic works and the evaluation results, while StyleGAN can generate high-quality artistic creative images, providing rich visual feature support for the evaluation model. This integration allows for a more comprehensive consideration of the structural and visual style features of artistic works. It enables precise assessment of artistic creativity and effectively addresses the subjectivity and lack of quantitative metrics in traditional evaluation methods.

Research model

The art creativity evaluation model constructed addresses the issues of subjectivity and lack of quantitative indicators in traditional evaluation methods by integrating the Backpropagation Neural Network (BPNN) with the Style-Based Generative Adversarial Network (StyleGAN). The model leverages the feature mapping ability of BPNN and the artistic style generation advantages of StyleGAN to accurately assess the innovativeness and market potential of artworks. It also enhances the model’s generalization ability through data preprocessing, the Adam optimizer, and Dropout layers. This model not only provides an objective and quantitative approach for evaluating art creativity but also offers technical support for talent development in animation majors at universities, laying the foundation for subsequent experimental design and result analysis.

Analysis of innovation and entrepreneurship evaluation from the perspective of artistic creativity

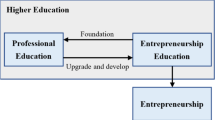

In the field of artistic creativity, innovation and entrepreneurship evaluation is a complex and multidimensional process. As a significant driver of innovation and entrepreneurship, the construction of an evaluation system for artistic creativity is crucial for identifying and nurturing creative projects with market potential and cultural value34,35,36. An effective evaluation system can assist stakeholders such as investors, curators, educators, and policymakers in better understanding the commercial and technical feasibility of artistic creativity and its potential contributions to society and culture37. Artworks typically possess various characteristics, such as style, color, and composition. These features can be viewed as different dimensions for classification. Considering the evaluation of artworks as a classification problem allows for deeper insights into their innovative impact and market potential. Figure 1 illustrates the principles and applications for constructing an evaluation system for artistic creativity.

As illustrated in Figure 1, the construction of this evaluation system is based on four main principles: comprehensiveness, objectivity, dynamism, and operability. Comprehensiveness is reflected in the system’s ability to assess artworks along multiple dimensions, such as artistic style, color, and composition. Objectivity is achieved by introducing DL algorithms to reduce subjective interference, quantifying the innovativeness and market potential of artistic creativity in a data-driven manner. Dynamism emphasizes the system’s ability to adapt to the ever-changing cultural, technological, and social environments in the field of artistic creativity. Operability ensures that the system can be efficiently applied in various scenarios, such as investment decision-making, art education, and policy formulation. This evaluation system provides objective decision support for investors, curators, educators, and policymakers. It also helps art creators and creative professionals better understand market demands and develop marketing strategies, and provides new teaching tools and methods for talent cultivation in university animation programs.

Such an evaluation system can effectively promote the transformation and application of artistic creativity in innovation and entrepreneurship, thus driving the overall development of the artistic creativity industry. Therefore, AI algorithms such as DL are introduced to build an evaluation model for artistic creativity and innovation. This helps researchers identify and quantify the features of different artworks, subsequently classifying them to gain a deeper understanding of their innovation and market potential. Furthermore, the work analyzes the advantages and roles of this model in cultivating animation professionals in higher education.

Analysis of the construction of an innovative evaluation model for artistic creativity using BPNN integrated with StyleGAN algorithm

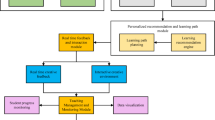

In order to achieve an automatic evaluation of artistic creativity, this work proposes an evaluation model that integrates the BPNN38,39,40 and the StyleGAN algorithm41,42,43,44. The BPNN can establish a mapping relationship between the artistic creativity features obtained through training and the evaluation results, enabling the prediction of evaluations for new artworks. StyleGAN is a type of GAN45 specialized in capturing and generating stylistic features in artworks. Additionally, StyleGAN can generate high-quality images of artworks, providing rich visual features for the evaluation model and enhancing its flexibility. The design of this model aims to automatically extract features from artworks using DL technology and conduct innovative evaluations. Figure 2 depicts the framework of this model.

Figure 2 illustrates that in this model, during the data preparation phase, the first step involves collecting relevant data of artistic creative works, including artist information, artwork attributes, and artwork images. These data serve as the foundation for model training and evaluation. Subsequently, the data undergo preprocessing, which includes operations such as data cleaning, noise removal, and standardization, to ensure data quality and consistency.

At the BPNN algorithm layer, structured data of artistic works, such as artist information and artwork attributes, are taken as input. Then, a multi-layer neural network structure is constructed, comprising input, hidden, and output layers. A multi-layer perceptron serves as the foundational structure of BPNN, and the weights and thresholds of each layer in the network are adjusted using the gradient descent method. The adjustments to the weights of the output and hidden layers are denoted as \(\Delta {o_{qj}}\) and \(\Delta {w_{ji}}\), respectively, while adjustments to the thresholds of the output and hidden layers are denoted as \(\Delta {\gamma _q}\) and \(\Delta {h_j}\). Each adjustment is proportional to the negative gradient of the error with respect to the corresponding parameter and can be represented as Eq. (1):

In Eq. (1), the negative sign indicates gradient descent, E represents the computed error, η denotes the learning rate, and \(\partial\) represents the partial derivative operation with respect to a given variable. For instance, \(\frac{{\partial E}}{{\partial {o_{qj}}}}\) is the partial derivative of the error E (the numerator) with respect to the output layer weight \({o_{qj}}\) (the denominator).
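The display form of Eq. (1) is not preserved in this text. A form consistent with the symbol definitions given here (negative sign, learning rate η, error E, and the four adjustment quantities) would be the following; it is a reconstruction under those definitions, not a copy of the original typeset equation.

```latex
\[
\Delta o_{qj} = -\eta \frac{\partial E}{\partial o_{qj}}, \qquad
\Delta w_{ji} = -\eta \frac{\partial E}{\partial w_{ji}}, \qquad
\Delta \gamma_q = -\eta \frac{\partial E}{\partial \gamma_q}, \qquad
\Delta h_j = -\eta \frac{\partial E}{\partial h_j}
\]
```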

Therefore, the adjustment of the output layer weights, denoted as \(\Delta {o_{qj}}\), can be expressed as Eq. (2):

\(ne{t_q}\) refers to the input of the q-th node in the output layer, and \({y_q}\) refers to the output of the q-th node in the output layer. The adjustment of the hidden layer weights, denoted as \(\Delta {w_{ji}}\), is expressed as Eq. (3):

\({z_j}\) refers to the output of the j-th node in the hidden layer, and each fraction of the form ∂(numerator)/∂(denominator) represents the partial derivative of the numerator with respect to the denominator. The adjustment of the output layer threshold, denoted as \(\Delta {\gamma _q}\), is expressed as Eq. (4):

The adjustment of the hidden layer threshold, denoted as \(\Delta {h_j}\), is represented by Eq. (5):

Furthermore, the relations in Eqs. (6) to (10) hold:

\({x_i}\) refers to the input of the i-th node in the input layer, \(i=1,2, \cdots ,n\); \(T_{q}^{p}\) denotes the expected output of the p-th sample, and \(y_{q}^{p}\) represents the actual output of the p-th sample. Thus, the adjustment of the output layer weights, denoted as \(\Delta {o_{qj}}\), can be obtained by Eq. (11):

The adjustment quantity of the hidden layer weights, denoted as \(\Delta {w_{ji}}\), is given by Eq. (12):

The adjustment quantity of the output layer threshold, denoted as \(\Delta {\gamma _q}\), is represented by Eq. (13):

The adjustment quantity of the hidden layer threshold, denoted as \(\Delta {h_j}\), is given by Eq. (14):

Finally, the weights and thresholds for the (N + 1)-th input sample are given by Eqs. (15) to (18):
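The update procedure described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: it assumes a single hidden layer, sigmoid activations, a squared-error function \(E = \frac{1}{2}\sum_q (T_q - y_q)^2\), and thresholds that enter each node as additive terms. None of these choices is confirmed by the text, and the layer sizes and the function name `train_step` are hypothetical.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def train_step(x, T, w, h, o, gamma, eta=0.5):
    """One gradient-descent update for one sample.

    x: inputs x_i, T: expected outputs T_q,
    w/h: hidden-layer weights w_ji and thresholds h_j,
    o/gamma: output-layer weights o_qj and thresholds gamma_q.
    Returns the updated parameters and the error E before the update.
    """
    z = sigmoid(w @ x + h)           # hidden outputs z_j
    y = sigmoid(o @ z + gamma)       # network outputs y_q
    E = 0.5 * np.sum((T - y) ** 2)   # assumed squared-error function

    # Negative error gradients with respect to each node's net input
    delta_out = (T - y) * y * (1.0 - y)
    delta_hid = (o.T @ delta_out) * z * (1.0 - z)

    # Each adjustment is -eta * dE/d(parameter)
    o_new = o + eta * np.outer(delta_out, z)
    gamma_new = gamma + eta * delta_out
    w_new = w + eta * np.outer(delta_hid, x)
    h_new = h + eta * delta_hid
    return w_new, h_new, o_new, gamma_new, E

# Toy run: 4 inputs, 5 hidden nodes, 3 outputs (sizes are illustrative only)
rng = np.random.default_rng(0)
w = rng.normal(scale=0.5, size=(5, 4))
h = np.zeros(5)
o = rng.normal(scale=0.5, size=(3, 5))
gamma = np.zeros(3)
x = rng.random(4)
T = np.array([0.0, 1.0, 0.0])

errors = []
for _ in range(200):
    w, h, o, gamma, E = train_step(x, T, w, h, o, gamma)
    errors.append(E)
```

Repeating the step on the same sample should drive the recorded error down, which is the behavior the gradient-descent rule is meant to produce.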

Unlike the BPNN model, the StyleGAN algorithm layer is based on the GAN and is used to generate artistic creative images. During the construction phase of the StyleGAN model, this work first uses randomly generated latent vectors as input, and then generates high-quality images of artistic creative works through the generative network. Assuming \(h \in \left\{ {1,2, \ldots ,H} \right\}\) represents a predefined class and H denotes the number of categories, the typical cross-entropy loss function \({L_{R1}}\) used during the training process is represented by Eq. (19):

M represents the number of artistic creative images. \(R\left( {h|{x_i}} \right)\) denotes the probability that the artistic creative image \({x_i}\) is predicted as the label h, and \(q\left( h \right)\) represents the label distribution of artistic creative images, as shown in Eq. (20):

\({y_i}\) denotes the real label of artistic creative image \({x_i}\). After pre-training, the artistic creative image recognition network R is embedded into the synthesis network S. Subsequently, the artistic creative image recognition network R is trained using both synthetic and real images of artistic creative works. By integrating models, it is possible to comprehensively consider both the structural and visual characteristics of artworks, achieving precise classification of their creativity and enabling more comprehensive and accurate evaluations. This provides a new technological approach for evaluating artistic creativity, while also offering a reference for innovation in higher education art programs and the cultivation of talent in the animation industry. Figure 3 illustrates the pseudocode of this model.
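The cross-entropy loss \({L_{R1}}\) and the label distribution \(q\left( h \right)\) described above can be illustrated with a short NumPy sketch. It assumes that \(q\left( h \right)\) is a one-hot distribution that equals 1 when h matches the real label \({y_i}\) and 0 otherwise, and that the loss is averaged over the M images; the exact normalization in Eq. (19) is not recoverable from the text, so both points are assumptions. Class indices are 0-based in the code, and the function name is hypothetical.

```python
import numpy as np

def cross_entropy(probs, labels):
    """Mean cross-entropy over M images.

    probs:  array of shape (M, H); row i holds R(h | x_i) for every class h.
    labels: integer array of shape (M,) with the real label y_i (0-based).
    """
    M, H = probs.shape
    q = np.eye(H)[labels]                        # one-hot label distribution q(h)
    return -np.sum(q * np.log(probs + 1e-12)) / M  # small constant avoids log(0)

# Two images, two classes
probs = np.array([[0.5, 0.5],
                  [0.25, 0.75]])
labels = np.array([0, 1])
loss = cross_entropy(probs, labels)
```

Only the predicted probability of the true class contributes to each image's term, so the loss falls as the recognition network R assigns more probability to the correct label.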

The core feature of this model lies in its ability to comprehensively consider both the structural and visual style characteristics of artworks, allowing for precise evaluation of artistic creativity. The BPNN establishes a mapping relationship between the features of artworks and evaluation results through its multi-layer neural network structure. The StyleGAN focuses on generating high-quality artistic creative images and providing rich visual feature support for the model. This integration not only enhances the model’s ability to assess the innovation and market potential of artworks but also strengthens its generalization capability across different artistic styles and creation types. The intended use of the model is to provide an objective, quantitative, and efficient technical approach for evaluating artistic creativity, and address the shortcomings of traditional evaluation methods that are overly subjective and lack quantitative indicators. This innovative evaluation model aims to offer new technical support and theoretical references for art education, cultural and creative industries, and the innovation and entrepreneurship sectors. It intends to promote the scientific assessment of artistic creativity and the innovation of talent development models.

Experimental design and performance evaluation

Datasets collection

This work uses the Netflix Animation Library (https://www.netflix.com/jp/browse/genre/3063) as the data source. The dataset is a high-quality collection of animated works widely used in animation research and development. It contains over 500 animated works, spanning from early 20th-century classics to early 21st-century CGI animations. The diversity of the dataset is reflected in several aspects. First, it includes a rich variety of animation styles, such as 2D, 3D, and stop-motion animation; then, it covers a wide range of thematic categories, including sci-fi, fantasy, and adventure; additionally, the dataset also contains extensive metadata, such as information on directors, screenwriters, production companies, release years, and audience ratings. These metadata provide multidimensional feature support for training DL models. The representativeness of the dataset lies in its inclusion of animated works from different periods, types, and styles, effectively reflecting the diversity and development trends in the animation field. It provides a solid foundation for training and validating artistic creativity evaluation models.

In the data preprocessing stage, the image data are first standardized. This is achieved by adjusting the image size to a uniform resolution (such as 512 × 512 pixels) and normalizing the pixel values to the range of [0, 1] to eliminate scale differences between images. Metadata are encoded and normalized: categorical information, such as directors and production companies, is converted into one-hot encoding, and numerical information, such as release year and audience ratings, is standardized. Additionally, to enhance the model’s generalization capability, data augmentation techniques, such as random cropping, rotation, and color adjustment, are applied during training to generate more diverse training samples. Finally, the dataset is divided into a training set (80%) and a test set (20%). Through these preprocessing steps, the Netflix Animation Library not only supports the development of the artistic creativity evaluation model but also offers valuable data support for animation education and industry applications. Subsequently, these data are input into the fused BPNN and StyleGAN model for training. The BPNN learns the mapping relationship between the features of the artworks and evaluation results from the structured data. The StyleGAN generates high-quality artistic creative images, providing rich visual features for the evaluation model. Through this fusion, the model can comprehensively consider both the structural and visual characteristics of the artworks, enabling precise classification and evaluation of artistic creativity.
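The preprocessing steps described above can be sketched as follows. This is an illustrative outline only: the helper names are hypothetical, pixel values are assumed to be 8-bit, "standardizing" is assumed to mean z-scoring, and the split is assumed to be a random shuffle. Image resizing and augmentation are omitted because they would require an image library that the text does not name.

```python
import numpy as np

def normalize_pixels(img_uint8):
    """Scale 8-bit pixel values to the range [0, 1]."""
    return img_uint8.astype(np.float32) / 255.0

def one_hot(values):
    """One-hot encode a list of categorical values (e.g. director names)."""
    categories = sorted(set(values))
    index = {c: k for k, c in enumerate(categories)}
    encoded = np.zeros((len(values), len(categories)), dtype=np.float32)
    for row, v in enumerate(values):
        encoded[row, index[v]] = 1.0
    return encoded, categories

def standardize(column):
    """Z-score a numerical column (e.g. release year or audience rating)."""
    column = np.asarray(column, dtype=np.float64)
    std = column.std()
    return (column - column.mean()) / (std if std > 0 else 1.0)

def split_indices(n, test_frac=0.2, seed=0):
    """Shuffle sample indices and split them into training and test sets."""
    perm = np.random.default_rng(seed).permutation(n)
    n_test = int(round(n * test_frac))
    return perm[n_test:], perm[:n_test]

# Toy usage with made-up values
image = np.random.default_rng(1).integers(0, 256, size=(512, 512, 3), dtype=np.uint8)
pixels = normalize_pixels(image)
studios, studio_names = one_hot(["A", "B", "A", "C"])
years = standardize([1995, 2001, 2010, 2020])
train_idx, test_idx = split_indices(500)
```

With 500 samples the split yields 400 training and 100 test indices, matching the 80%/20% division stated in the text.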

Experimental environment

In order to validate the constructed algorithm, development mainly takes place on a Windows computer. Table 2 provides specific environment configuration information.

Parameters setting

The specific hyperparameter settings are as follows. The Adam optimizer is used to minimize the loss function. The number of epochs is set to 120, with an initial learning rate (LR) of 0.001. A decay strategy is employed in which the learning rate is reduced by 50% when the loss stops improving. During training, a Dropout layer with a dropout rate of 0.5 is added between the input and output layers. The batch size is set to 64.
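The settings above, together with the learning-rate decay rule, can be written down as a small framework-independent sketch. The text does not state how many epochs without improvement trigger a decay, so the `patience` parameter below is a hypothetical addition, as are the class and dictionary names.

```python
# Hyperparameters as stated in the text
HPARAMS = {
    "optimizer": "adam",
    "epochs": 120,
    "learning_rate": 0.001,
    "lr_decay_factor": 0.5,   # LR is reduced by 50% on a plateau
    "dropout_rate": 0.5,
    "batch_size": 64,
}

class PlateauDecay:
    """Halve the learning rate when the loss has not improved for `patience` epochs.

    `patience` is an assumed parameter; the text only says the LR is reduced
    when the loss stops improving.
    """

    def __init__(self, lr, factor=0.5, patience=5):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.best = float("inf")
        self.wait = 0

    def step(self, loss):
        if loss < self.best:
            self.best = loss      # improvement: remember it and reset the counter
            self.wait = 0
        else:
            self.wait += 1
            if self.wait >= self.patience:
                self.lr *= self.factor
                self.wait = 0
        return self.lr

# Toy usage: the loss stalls at 0.9, so the LR is halved once
scheduler = PlateauDecay(HPARAMS["learning_rate"], HPARAMS["lr_decay_factor"], patience=2)
for epoch_loss in [1.0, 0.9, 0.9, 0.9]:
    current_lr = scheduler.step(epoch_loss)
```

Deep learning frameworks ship equivalent schedulers; the hand-written version is used here only to keep the sketch self-contained.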

Performance evaluation

In order to analyze the performance of the model proposed, it is compared with GAN46, StyleGAN, BPNN47, and the model algorithm proposed by Jin et al. in the related field. The evaluation is conducted based on indicators such as loss function value, fitting effect, accuracy, precision, recall, and F1 score. The loss function value reflects the error convergence of the model during the training process. A lower loss value indicates that the model fits the training data better. The fitting performance is measured by comparing the consistency in trends between the model’s predicted values and the actual values. A good fit means the model can accurately capture the patterns in evaluating the innovativeness of artworks. Accuracy represents the proportion of correctly predicted values, reflecting the overall accuracy of the evaluation. Precision focuses on the proportion of actual innovative works among those predicted as innovative by the model, measuring the model’s conservatism. Recall reflects the proportion of innovative works that the model is able to identify, demonstrating the model’s sensitivity. The F1 score is the harmonic mean of precision and recall, providing a comprehensive measure of the model’s performance balance. Through the combined evaluation of these metrics, the proposed model demonstrates superior performance in artistic creativity evaluation, and offers reliable technical support for innovation and entrepreneurship assessments.
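The four classification metrics defined above can be computed from the counts of true and false positives and negatives. The sketch below simplifies the task to a binary one (1 = innovative, 0 = not innovative), which is an assumption made for illustration; the text does not say how many classes the model predicts or how the metrics are averaged across classes.

```python
def binary_metrics(y_true, y_pred):
    """Return accuracy, precision, recall, and F1 for binary labels (1 = innovative)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0   # correct among predicted innovative
    recall = tp / (tp + fn) if (tp + fn) else 0.0      # found among actually innovative
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)            # harmonic mean of the two
    return accuracy, precision, recall, f1

# Toy labels: 3 true positives, 3 true negatives, 1 false positive, 1 false negative
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
accuracy, precision, recall, f1 = binary_metrics(y_true, y_pred)
```

In this toy case all four metrics equal 0.75, since precision and recall are both 3/4 and their harmonic mean is therefore the same value.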

Figure 4 displays the comparison of the loss function values of each model algorithm.

Fig. 4 shows that the proposed BPNN-StyleGAN fusion model achieves the lowest loss among the compared algorithms. Specifically, its loss stabilizes at around 0.38 after 30 iterations, whereas the final loss values of the other algorithms all exceed 0.62. Therefore, in evaluating artistic creativity and innovation, the proposed model demonstrates superior convergence performance, enabling intelligent innovation evaluation of artistic creative works.

Figure 5 presents an analysis of the fitting effect of the proposed algorithm compared with other models and the actual value.

Figure 5 shows that the proposed algorithm closely follows the trend of the real values and predicts the normalized peak and trough values more accurately. By contrast, the predictions of the other models deviate significantly from the real values at the peaks and troughs.

Figures 6, 7, 8 and 9 further analyze the predictive accuracy of innovation evaluation for each algorithm in terms of accuracy, precision, recall, and F1 score.

As shown in Figs. 6, 7, 8 and 9, the evaluation accuracy of each algorithm first increases and then stabilizes as the number of iterations grows. The accuracy of the proposed model reaches 96.30%, at least 3.75% higher than that of GAN, StyleGAN, BPNN, and the model proposed by Jin et al. The algorithms rank as follows: the proposed model > the algorithm proposed by Jin et al. > StyleGAN > GAN > BPNN. In addition, the precision, recall, and F1 score of the proposed model reach 92.82%, 91.06%, and 85.88%, respectively, an improvement of at least about 4% over the other algorithms. Therefore, the proposed artistic creativity evaluation model based on BPNN fused with StyleGAN achieves better prediction accuracy for innovation and entrepreneurship evaluation.

To validate the model’s generalization ability, experiments are conducted on multiple datasets: the original Netflix Animation Library dataset and two additional datasets. The first is the Annecy International Animation Film Festival (AIAFF) dataset (https://www.annecy.org/). The festival is one of the most significant animation film festivals in the world, and its official website provides information on some of the award-winning and participating works. The second is the Sundance Film Festival (SFF) Animation section dataset (https://www.sundance.org/festival), which covers a wide variety of animated works and offers abundant material for data collection. Table 3 presents the results.

Table 3 shows that the model performs best on the Netflix Animation Library dataset, achieving an accuracy of 96.30%, a precision of 92.82%, a recall of 91.06%, and an F1 score of 85.88%. This indicates that the model can efficiently identify and assess the innovativeness of artworks in this dataset. However, when applied to the AIAFF dataset, the model’s performance slightly declines, with accuracy at 94.85%, precision at 91.50%, recall at 89.70%, and an F1 score of 85.20%. This result reflects that while the model still maintains a high level of accuracy and reliability in handling animation works from different regions and styles, it is somewhat influenced by cultural diversity and stylistic differences. Further, on the SFF dataset, the model’s accuracy drops to 93.20%, precision is 89.30%, recall is 88.50%, and the F1 score is 83.90%. This suggests that while the model still performs well on animation artworks from various cultural backgrounds, its generalization ability faces challenges when confronted with a broader range of artistic forms and cultural differences. Overall, the model’s performance across these three datasets demonstrates its effectiveness in evaluating artistic creativity and some degree of generalization ability. However, it also highlights the need for further optimization in cross-cultural scenarios to improve its applicability and accuracy across a wider spectrum of animation art.
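The size of the cross-dataset decline can be read off directly from the reported figures. The short sketch below only restates the values quoted above and computes the drop, in percentage points, relative to the Netflix Animation Library dataset; the helper name `drop_from_baseline` is illustrative.

```python
# Metric values (in %) as reported in the text for Table 3.
RESULTS = {
    "Netflix Animation Library": {"accuracy": 96.30, "precision": 92.82, "recall": 91.06, "f1": 85.88},
    "AIAFF": {"accuracy": 94.85, "precision": 91.50, "recall": 89.70, "f1": 85.20},
    "SFF": {"accuracy": 93.20, "precision": 89.30, "recall": 88.50, "f1": 83.90},
}


def drop_from_baseline(results, baseline):
    """Percentage-point drop of every metric relative to the baseline dataset."""
    base = results[baseline]
    return {
        name: {metric: round(base[metric] - value, 2) for metric, value in metrics.items()}
        for name, metrics in results.items()
        if name != baseline
    }


drops = drop_from_baseline(RESULTS, "Netflix Animation Library")
```

On these figures, accuracy falls by 1.45 percentage points on AIAFF and by 3.10 percentage points on SFF.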

To more intuitively demonstrate the evaluation process and results of the proposed assessment system for specific animated works, three stylistically distinct animated films, Spirited Away, Toy Story, and Big Fish & Begonia, are selected for case analysis, as shown in Table 4. These works have a broad influence in the animation field and effectively showcase the system’s evaluation capabilities.

In Table 4, through the evaluation of the three animated works mentioned above, the system can provide detailed and targeted scores and feedback on the works from three dimensions: innovation, unique style, and market potential. Taking Spirited Away as an example, the system gives it a score of 95 for innovation, and recognizes its high level of innovation in narrative structure and visual style. In particular, its innovative aspects, such as its unique spiritual worldview and delicate use of color, add unique artistic value to the work. Meanwhile, the system points out that there is still room for further optimization in market promotion, such as strengthening international market expansion and brand development to better realize its commercial value.

Discussion

Based on the research findings above, the proposed model is compared with GAN, StyleGAN, BPNN, and the model proposed by Jin et al. The results indicate that the proposed model achieves the lowest loss values and the fastest convergence, and that it predicts the normalized peak and trough values more accurately. This is consistent with the views of Zhou et al.48 and Çiçek et al.49. Further analysis of its predictive performance reveals that the proposed model’s accuracy is significantly better than that of the other models, reaching 96.30%. Therefore, the proposed artistic innovation evaluation model based on BPNN fused with StyleGAN predicts innovation and entrepreneurship evaluations more accurately. This is consistent with the views of Qi et al.50. This model is expected to become an important tool for innovation evaluation in the field of artistic creativity. It can provide intelligent and accurate evaluation services for artistic creators, while also providing strong support for the development of the creative industry.

In art education environments, traditional methods for assessing artistic creativity primarily rely on teachers’ subjective judgments, lacking objective quantitative indicators. For example, teachers typically evaluate students’ creativity by observing their creative processes, the completeness of their works, and interactions with students. However, this approach has notable limitations: assessment results are highly subjective, making cross-class or cross-school comparisons difficult, and it struggles to accurately reflect the innovativeness and market potential of students’ works. Additionally, traditional methods often lack in-depth analysis of multi-dimensional characteristics of student works, such as stylistic uniqueness, innovative technical applications, and the depth of cultural connotations. These limitations not only affect the quality of art education but also restrict the comprehensive development of students’ creativity. To address these challenges, recent studies have explored applying AI and deep learning technologies to artistic creativity assessment. For instance, Sung et al. developed a deep learning-based computerized creativity assessment tool capable of automated scoring, enhancing objectivity and efficiency. However, most of these studies focus on technology development and validation, lacking close integration with the practical needs of art education. In art education, an assessment system must not only provide objective scores but also offer specific feedback and improvement suggestions for students and teachers to foster the development of students’ creativity.

The proposed potential application of the artistic creativity assessment system within educational environments is rooted in a profound understanding of the limitations of traditional assessment methods and innovative improvements to existing technologies. By leveraging deep learning and AI, the system can automatically extract key features of artistic works and evaluate them across multiple dimensions, including innovativeness, unique style, and market potential. Compared with traditional methods, the system not only provides more objective and quantifiable assessment results but also offers real-time feedback and targeted improvement suggestions for students and teachers. For example, in the “Animation Creative Concepts” course, students’ submitted creative sketches can be quickly scored for innovativeness through the system. Meanwhile, the system analyzes the stylistic characteristics and market potential of the works and provides specific improvement recommendations. This real-time feedback mechanism helps students better understand the strengths and weaknesses of their works, enabling them to make targeted improvements and enhance their creativity and practical capabilities.

From the perspective of the system’s applicability to future creative works, a dynamic data update mechanism can be established to periodically collect the latest artworks and creative cases, ensuring the system remains timely and adaptable. Additionally, by incorporating cross-domain data fusion, such as including data from painting, sculpture, architectural design, and other fields in the model’s training, the system’s generalization ability can be enhanced. Moreover, through modular design, the model can flexibly adapt to different types and styles of artworks, and improve its ability to evaluate future creative works. These measures will ensure the system can better support the evaluation of creative works that transcend existing values and norms.

Although the model proposed demonstrates excellent technical performance, its interpretability remains a key issue that needs to be addressed. To help users better understand and trust the model’s evaluation results, this work has conducted a deep analysis of the model’s decision-making process. Specifically, through feature importance analysis and attention mechanism visualization, it highlights the specific features the model focuses on when evaluating artworks. For example, feature importance analysis reveals the extent to which the model relies on characteristics such as color contrast, composition complexity, and style uniqueness. All of these features play a critical role in the model’s final evaluation. Additionally, attention mechanism visualization allows for a direct and intuitive display of the areas the model focuses on when processing images of artworks. For instance, in an animated work, the model may focus more on the details of the character’s expression, the color layers in the background, or the dynamic effects of the overall scene. These analyses not only provide users with an intuitive understanding of the model’s decision logic but also offer insights for further optimization of the model. This makes it more persuasive and practical in the highly subjective field of artistic creativity evaluation.
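The text does not name the specific feature importance method used. As one common option, permutation importance measures how much the prediction error grows when a single feature is shuffled across samples. The sketch below is a minimal illustration under that assumption: `toy_score` is a hypothetical stand-in for the trained model, the feature names are taken from the examples in the text, and the feature values are invented for demonstration.

```python
import random


def permutation_importance(score_fn, rows, targets, feature, seed=0):
    """Increase in mean absolute error after shuffling one feature column."""
    def mae(data):
        return sum(abs(score_fn(r) - t) for r, t in zip(data, targets)) / len(targets)

    baseline = mae(rows)
    shuffled = [r[feature] for r in rows]
    random.Random(seed).shuffle(shuffled)
    # Copy each row with only the chosen feature replaced by a shuffled value.
    permuted = [{**r, feature: v} for r, v in zip(rows, shuffled)]
    return mae(permuted) - baseline


def toy_score(row):
    """Hypothetical scorer that deliberately ignores composition_complexity."""
    return 0.6 * row["style_uniqueness"] + 0.4 * row["color_contrast"]


# Invented feature values for four artworks.
rows = [
    {"color_contrast": 0.2, "composition_complexity": 0.9, "style_uniqueness": 0.1},
    {"color_contrast": 0.8, "composition_complexity": 0.3, "style_uniqueness": 0.7},
    {"color_contrast": 0.5, "composition_complexity": 0.6, "style_uniqueness": 0.4},
    {"color_contrast": 0.9, "composition_complexity": 0.1, "style_uniqueness": 0.95},
]
targets = [toy_score(r) for r in rows]
importances = {f: permutation_importance(toy_score, rows, targets, f) for f in rows[0]}
```

A feature the scorer never reads, here `composition_complexity`, receives an importance of zero, while features the scorer depends on receive non-negative values.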

To better showcase the system’s strengths and limitations, this work reviews previous research on creativity and art evaluation systems, particularly the work by Jin et al. Comparative analysis shows that the proposed system holds advantages in technological innovation and application effectiveness. However, it also has certain limitations, such as its dependence on the dataset and the constraints in defining innovation. Additionally, an analysis of the system’s applicability reveals that it is not limited to animation programs but can be extended to other art and design fields, such as graphic design and illustration. Specific teaching application suggestions are proposed for different programs based on their unique characteristics. Moreover, based on the features and limitations mentioned above, directions for future improvement are outlined, such as incorporating more advanced DL algorithms to enhance the model’s ability to understand complex creative works.

Finally, to ensure that the system built can be applied to concrete educational cases and provide related effect evaluations, this work plans to develop a series of teaching cases based on the model, and cover both foundational and advanced course content. For example, in a course like “Animation Creative Concepts,” students can submit creative sketches through the system, and the system will provide innovation evaluations and improvement suggestions. In an “Animation Project Practice” course, students can use the system to conduct a comprehensive evaluation of their full animation works, optimizing their market potential. Additionally, through actual teaching applications, feedback from students and teachers will be collected to assess the system’s teaching effectiveness. Representative student works will be selected to showcase the improvement process and effects before and after using the system. Through comparative analysis, the system’s impact on enhancing the innovation and market potential of student works will be visually demonstrated, thereby strengthening the argument of the research work. However, how the system provides feedback to learners, and whether this feedback positively influences their understanding of creativity and innovation, remains an issue for further exploration. Additionally, since the system has not yet been applied in an educational environment, the discussion of its educational effectiveness is currently in the speculative stage. Future research will need to validate the system’s practical value in educational settings through specific teaching cases and comparative experiments, such as analyzing changes in student learning outcomes after using the system, and their increased interest in the integration of AI with art.

In today’s highly competitive business environment, assessing artistic creativity is crucial for identifying and nurturing creative projects with market potential. Traditional evaluation methods often rely on subjective judgment and lack quantitative indicators, making it difficult to accurately reflect the innovation and commercial value of artistic works. The artistic creativity evaluation model based on the integration of BPNN and StyleGAN proposed provides new technological means and theoretical support for evaluating artistic creativity in the business context. The model automatically extracts features of artistic works using DL techniques and combines high-quality visual features generated by StyleGAN. This enables a comprehensive and objective evaluation of artworks from multiple dimensions, such as innovation, unique style, and market potential. Its high accuracy (96.30%) and excellent predictive performance indicate that the model can effectively identify creative works with commercial potential, providing scientific decision-making support for investors, art curators, educators, and policymakers. Additionally, the model’s dynamic and operable nature allows it to adapt to the constantly changing cultural, technological, and market environments, and provide strong technical support for the application and promotion of artistic creativity in the business field. By integrating artistic creativity with commercial value, this work advances the development of artistic creativity assessment systems. Moreover, it offers new ideas and methods for the innovation and sustainable development of the creative industry.

The artistic creativity evaluation system platform developed has not yet been open-sourced. The main reason is that the system is still in the optimization phase, and some features need further improvement to ensure its stability and accuracy in different scenarios. Additionally, because some of the technologies involved may be subject to patent applications, it is not yet appropriate to release the system as open source. To further promote the application of the proposed system in education and other fields, this work plans to develop an online demonstration platform to visually showcase the system’s functions and effectiveness. This platform will reference existing open creativity scoring systems (Open Creativity Scoring: https://openscoring.du.edu/) and provide transparent evaluation mechanisms along with real-world application examples to enhance users’ understanding of and trust in the system. The platform will allow users to upload artwork and receive real-time innovation evaluation results. It can showcase how the model focuses on specific features of the artwork (such as color, composition, and style) and visualize the decision-making process.

The AuDrA system quantifies the innovativeness and artistic value of creative paintings by analyzing visual features such as color, composition, and brushstrokes, combined with machine learning algorithms, thus providing objective evaluation indicators for painting creativity51. Compared with AuDrA, this work’s system not only achieves technological breakthroughs but also uses deep learning and AI to offer more precise and objective quantitative indicators for multi-dimensional assessment of artistic creativity, significantly outperforming traditional methods in accuracy and efficiency. While the AuDrA system primarily focuses on evaluating creative paintings, the system in this work covers a broader range of art forms, such as animation and graphic design, demonstrating wider applicability.

Additionally, the platform will offer practical application cases and demonstrate the system’s effectiveness in educational settings, such as the improvement process of student artworks and teaching feedback from educators. By incorporating user feedback mechanisms, this work aims to continuously optimize the system’s performance and user experience. The development of this demonstration platform will help showcase the system’s practical application value and provide an innovative tool for art education and the creative industry, promoting the widespread adoption of art creativity evaluation technologies. The goal is to protect intellectual property while also promoting academic exchange and technological sharing through this approach.

Conclusion

Research contribution

This work successfully develops an artistic creativity evaluation system based on DL and AI, and empirical analysis verifies the system’s significant advantages in intelligently assessing creative artistic works. The proposed evaluation model, which integrates BPNN and StyleGAN, achieves an accuracy of 96.30% in evaluating artistic works, significantly improving the precision and efficiency of the assessment. This model not only provides intelligent and objective evaluation services for artists but also offers strong support for the development of the creative industry. Additionally, this work deeply explores the application value of the model in the training of animation professionals in higher education and proposes an innovative method of embedding it as a teaching tool into the curriculum. By providing students with real-time feedback on innovation and improvement suggestions, the model effectively enhances students’ creativity and practical skills, and offers new technological tools and teaching methods for the field of art education. Overall, this work advances the development of artistic creativity evaluation systems on a technical level. Moreover, it provides important theoretical and practical references for cultivating innovative artistic talent that meets future market demands in the education sector.

Future works and research limitations

Despite the positive outcomes of this work, there are still some limitations and areas for future improvement. First, the generalization ability of the model needs further validation across a broader range of artistic works and cultural backgrounds. Second, the interpretability of the model needs enhancement to enable users to better understand the logic behind the evaluation results. Additionally, in terms of the cultivation of talent in animation majors at universities, innovative educational models need to be closely aligned with industry demands. Future educational practices should consider industry trends and technological developments more comprehensively to foster animation professionals who are better suited to meet future market demands.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author Hao Xie on reasonable request via e-mail xiehao@suse.edu.cn.

References

Gohoungodji, P. & Amara, N. Art of innovating in the arts: definitions, determinants, and mode of innovation in creative industries, a systematic review. Rev. Managerial Sci. 17 (8), 2685–2725 (2023).

Repenning, A. & Oechslen, A. Creative digipreneurs: artistic entrepreneurial practices in platform-mediated space. Digit. Geogr. Soc. 4, 100058 (2023).

Lewandowska, K. & Kulczycki, E. Academic research evaluation in artistic disciplines: the case of Poland. Assess. Evaluation High. Educ. 47 (2), 284–296 (2022).

Shukla, S. Creative computing and harnessing the power of generative artificial intelligence. J. Environ. Sci. Technol. 2 (1), 556–579 (2023).

Bijalwan, V., Semwal, V. B. & Gupta, V. Wearable sensor-based pattern mining for human activity recognition: deep learning approach. Industrial Robot: Int. J. Rob. Res. Application. 49 (1), 21–33 (2022).

Xu, J., Pan, S., Sun, P. Z., Park, S. H. & Guo, K. Human-factors-in-driving-loop: driver identification and verification via a deep learning approach using psychological behavioral data. IEEE Trans. Intell. Transp. Syst. 24 (3), 3383–3394 (2022).

Chen, D., Lu, Y., Li, Z. & Young, S. Performance evaluation of deep transfer learning on multi-class identification of common weed species in cotton production systems. Comput. Electron. Agric. 198, 107091 (2022).

Allugunti, V. R. A machine learning model for skin disease classification using Convolution neural network. Int. J. Comput. Program. Database Manage. 3 (1), 141–147 (2022).

Balamurugan, D., Aravinth, S. S., Reddy, P. C. S., Rupani, A. & Manikandan, A. Multiview objects recognition using deep learning-based wrap-CNN with voting scheme. Neural Process. Lett. 54 (3), 1495–1521 (2022).

Nawaz, M. et al. An efficient deep learning approach to automatic glaucoma detection using optic disc and optic cup localization. Sensors 22 (2), 434 (2022).

Acar, S. & Runco, M. A. Divergent thinking: new methods, recent research, and extended theory. Psychol. Aesth. Creat. Arts 13 (2), 153–158 (2019).

Kozhevnikov, M., Ho, S. & Koh, E. The role of visual abilities and cognitive style in artistic and scientific creativity of Singaporean secondary school students. J. Creative Behav. 56 (2), 164–181 (2022).

Cruz, T. N. D., Camelo, E. V., Nardi, A. E. & Cheniaux, E. Creativity in bipolar disorder: a systematic review. Trends Psychiatry Psychother. 44, e20210196 (2022).

Díaz-Portugal, C., Delgado-García, J. B. & Blanco-Mazagatos, V. Do cultural and creative entrepreneurs make affectively driven decisions? Not when they evaluate their opportunities. Creativity Innov. Manage. 32 (1), 22–40 (2023).

Plucker, J. A. The patient is thriving! Current issues, recent advances, and future directions in creativity assessment. Creativity Res. J. 35 (3), 291–303 (2023).

Hitsuwari, J., Ueda, Y., Yun, W. & Nomura, M. Does human–AI collaboration lead to more creative art? Aesthetic evaluation of human-made and AI-generated Haiku poetry. Comput. Hum. Behav. 139, 107502 (2023).

Sung, Y. T., Cheng, H. H., Tseng, H. C., Chang, K. E. & Lin, S. Y. Construction and validation of a computerized creativity assessment tool with automated scoring based on deep-learning techniques. Psychol. Aesth. Creat. Arts 18 (4), 493 (2024).

Koch, F., Hoellen, M., Konrad, E. D. & Kock, A. Innovation in the creative industries: linking the founder’s creative and business orientation to innovation outcomes. Creativity Innov. Manage. 32 (2), 281–297 (2023).

Ávila, A. L. D., Davel, E. & Elias, S. R. Emotion in entrepreneurship education: passion in artistic entrepreneurship practice. Entrepreneurship Educ. Pedagogy. 6 (3), 502–533 (2023).

Marins, S. R., Davel, E. P. & Parsley, S. Aesthetic embeddedness: towards an aesthetic understanding of cultural and artistic entrepreneurship. Entrepreneurship Reg. Dev. 35 (9–10), 695–714 (2023).

Purnomo, B. R. Artistic orientation in creative industries: conceptualization and scale development. J. Small Bus. Entrepreneurship. 35 (6), 828–870 (2023).

Purg, P., Cacciatore, S. & Gerbec, J. Č. Establishing ecosystems for disruptive innovation by cross-fertilizing entrepreneurship and the arts. Creative Industries J. 16 (2), 115–145 (2023).

Muriel-Nogales, R., Andersen, L. L. & Ferreira, S. Guest editorial: unlocking the transformative potential of culture and the arts: innovative practices and policies from social enterprises and third-sector organisations. Social Enterp. J. 20 (2), 113–122 (2024).

Khalid, S., Oqaibi, H. M., Aqib, M. & Hafeez, Y. Small pests detection in field crops using deep learning object detection. Sustainability 15 (8), 6815 (2023).

Ma, P. et al. A state-of-the-art survey of object detection techniques in microorganism image analysis: from classical methods to deep learning approaches. Artif. Intell. Rev. 56 (2), 1627–1698 (2023).

Chen, Z. et al. Deep learning for image enhancement and correction in magnetic resonance imaging—state-of-the-art and challenges. J. Digit. Imaging. 36 (1), 204–230 (2023).

Fu, S. et al. Field-dependent deep learning enables high-throughput whole-cell 3D super-resolution imaging. Nat. Methods. 20 (3), 459–468 (2023).

Gendy, G., He, G. & Sabor, N. Lightweight image super-resolution based on deep learning: State-of-the-art and future directions. Inform. Fusion. 94, 284–310 (2023).

Jin, Y. et al. GAN-based pencil drawing learning system for art education on large-scale image datasets with learning analytics. Interact. Learn. Environ. 31 (5), 2544–2561 (2023).

Tsita, C. et al. A virtual reality museum to reinforce the interpretation of contemporary art and increase the educational value of user experience. Heritage 6 (5), 4134–4172 (2023).

Liu, L., Li, M. & Ji, S. A research on digital learning system based on multiplayer online animation game. Libr. Hi Tech. 41 (5), 1524–1544 (2023).

Wang, R. & Tao, Y. Animation media art teaching design based on big data fusion technology. Int. J. Adv. Comput. Sci. Appl. 15 (2), 731 (2024).

Abdubannobovna, M. R. & Orifakhon, E. The emergence of art teaching technology: revolutionizing art education. Galaxy Int. Interdisciplinary Res. J. 12 (1), 287–290 (2024).

Peñarroya-Farell, M., Miralles, F. & Vaziri, M. Open and sustainable business model innovation: an intention-based perspective from the Spanish cultural firms. J. Open. Innovation: Technol. Market Complex. 9 (2), 100036 (2023).

Walzer, D. Towards an understanding of creativity in independent music production. Creative Industries J. 16 (1), 42–55 (2023).

Altinay, L., Kromidha, E., Nurmagambetova, A., Alrawadieh, Z. & Madanoglu, G. K. A social cognition perspective on entrepreneurial personality traits and intentions to start a business: does creativity matter? Manag. Decis. 60 (6), 1606–1625 (2022).

Wohl, H. Innovation and creativity in creative industries. Sociol. Compass. 16 (2), e12956 (2022).

Chiang, T. A., Che, Z. H., Huang, Y. L. & Tsai, C. Y. Using an ontology-based neural network and DEA to discover deficiencies of hotel services. Int. J. Semantic Web Inform. Syst. (IJSWIS). 18 (1), 1–19 (2022).

Almiani, M., Abughazleh, A., Jararweh, Y. & Razaque, A. Resilient back propagation neural network security model for containerized cloud computing. Simul. Model. Pract. Theory. 118, 102544 (2022).

Kheradpisheh, S. R., Mirsadeghi, M. & Masquelier, T. BS4NN: binarized spiking neural networks with temporal coding and learning. Neural Process. Lett. 54 (2), 1255–1273 (2022).

Hassanpour, A. et al. E2F-Net: Eyes-to-face inpainting via StyleGAN latent space. Pattern Recogn. 152, 110442 (2024).

Alkishri, W., Widyarto, S. & Yousif, J. H. Evaluating the effectiveness of a GAN fingerprint removal approach in fooling deepfake face detection. J. Internet Serv. Inform. Secur. (JISIS). 14 (1), 85–103 (2024).

He, L. et al. Research on high-resolution face image inpainting method based on StyleGAN. Electronics 11 (10), 1620 (2022).

Yang, C., Zhang, Y., Bai, Q., Shen, Y. & Dai, B. Revisiting the evaluation of image synthesis with GANs. Adv. Neural. Inf. Process. Syst. 36, 9518–9542 (2023).

Zhang, Y. et al. High-precision detection for sandalwood trees via improved YOLOv5s and StyleGAN. Agriculture 14 (3), 452 (2024).

Yeleussinov, A., Amirgaliyev, Y. & Cherikbayeva, L. Improving OCR accuracy for Kazakh handwriting recognition using GAN models. Appl. Sci. 13 (9), 5677 (2023).

Oyebode, O. O., Oladimeji, O. A., Adelekun, A. & Akomolafe, T. A. Performance evaluation of selected classification algorithms for Iris recognition system. Eur. J. Comput. Sci. Inform. Technol. 11 (2), 1–12 (2023).

Zhou, Q., Situ, Z., Teng, S. & Chen, G. Comparative effectiveness of data augmentation using traditional approaches versus StyleGANs in automated sewer defect detection. J. Water Resour. Plan. Manag. 149 (9), 04023045 (2023).

Çiçek, S., Koç, M. & Korukcu, B. Urban map generation in artist’s style using generative adversarial networks (GAN). Archit. Plann. J. (APJ). 28 (3), 9 (2023).

Qi, W., Deng, H. & Li, T. Multistage guidance on the diffusion model inspired by human artists’ creative thinking. Front. Inform. Technol. Electron. Eng. 25 (1), 170–178 (2024).

Patterson, J. D., Barbot, B., Lloyd-Cox, J. & Beaty, R. E. AuDrA: an automated drawing assessment platform for evaluating creativity. Behav. Res. Methods. 56 (4), 3619–3636 (2024).

Author information

Contributions

Hao Xie: conceptualization, methodology, software, validation, formal analysis, investigation, resources, data curation, writing (original draft preparation). Zijian Zhao: writing (review and editing), visualization, supervision, project administration, funding acquisition.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics statement

The studies involving human participants were reviewed and approved by the Ethics Committee of the College of Fine Arts, Sichuan University of Science and Engineering (Approval Number: 2022.5623000). The participants provided their written informed consent to participate in this study. All methods were performed in accordance with relevant guidelines and regulations.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Xie, H., Zhao, Z. The analysis of entrepreneurship evaluation system for talent cultivation in artistic creativity and animation under artificial intelligence. Sci Rep 15, 16958 (2025). https://doi.org/10.1038/s41598-025-01437-w

DOI: https://doi.org/10.1038/s41598-025-01437-w