Abstract

The brain’s ability to switch between functional states while maintaining both flexibility and stability is shaped by its structural connectivity. Understanding the relationship between brain structure and neural dynamics is a central challenge in neuroscience. Prior studies link neural dynamics to local noisy activity and mesoscale coupling mechanisms, but causal links at the whole-brain scale remain elusive. This study investigates how the balance between integration and segregation in brain networks influences their dynamical properties, focusing on multistability (switching between stable states) and metastability (transient stability over time). We analyzed a spectrum of network models, from highly segregated to highly integrated, using structural metrics such as modularity, efficiency, and small-worldness. By simulating neural activity with a neural mass model and analyzing Functional Connectivity Dynamics (FCD), we found that segregated networks sustain dynamic synchronization patterns, while small-world networks, which balance local clustering and global efficiency, exhibit the richest dynamical behavior. Networks with intermediate small-worldness (\(\omega\)) values showed peak dynamical richness, measured by the variance of the FCD and by metastability. Using Mutual Information (MI), we quantified the structure-dynamics relationship and found that modularity is the strongest predictor of network dynamics, as modular architectures support transitions between dynamical states. These findings underscore the importance of balancing local specialization, global integration, and network modularity, which together foster the dynamic complexity necessary for cognitive functions. Our study advances the understanding of how structural features shape neural dynamics.


Introduction

The relationship between brain structure and dynamics remains an open question in neuroscience. While it is hypothesized that structural connectivity shapes neural dynamics1,2,3,4, the precise nature of this interaction is still unclear. Unraveling how dynamic brain activity emerges from a relatively fixed structure is crucial for understanding brain function in health and disease. Hence, simulating brain dynamics on artificial networks and analyzing the resulting activity is an important tool for unraveling this complex relationship.

Brain connectivity, or the connectome4, refers to the physical links between brain regions and is most accurately represented by the Structural Connectivity (SC) matrix, derived from imaging techniques5. The SC matrix indicates whether two regions are connected by axonal fibers4. On the other hand, brain activity measurements using techniques like EEG6 and fMRI7 reveal dynamic activity within each region. Pairwise correlations of these signals uncover sub-networks of stable, synchronized activity, forming what is known as Functional Connectivity (FC).

Interestingly, relationships between sub-networks observed in SC and in FC have been found8,9,10. However, while SC is a static representation, FC varies over time. This variation is interpreted as transitions between multiple states, defining a dynamical regime known as Functional Connectivity Dynamics (FCD)11,12,13. Hence, clarifying how the static brain structure supports the dynamical activity captured by the FCD is key to understanding brain function in health and disease.

Previous research on the causal relationship between structure and function shows that, at the local scale (cortical areas), the observed global dynamics may emerge from the noisy activity of neurons and synapses, as well as from their chaotic nature14,15,16,17,18. At the mesoscale (coupling between areas), global coupling15,19,20 and internode delay19,21 could be the mechanisms that drive the system towards different dynamical states. Nevertheless, while some studies have established a link between a given SC and its associated FCD10,21,22, a causal relationship between the topological properties of SC (namely integration and segregation) and the dynamical characteristics of the FCD (such as multistability or metastability) has not been systematically studied at the global (whole-brain) scale.

In this study, we investigated how SC integration and segregation relate to FCD multistability and metastability. Using a Wilson-Cowan neural mass model on different network topologies, including a binarized version of the human connectome, we examined how the broad spectrum of structural segregation and integration, characterized by the Small-world index \(\omega\)23, shapes network dynamics. Using mutual information, we quantified the relationship between network structure and dynamics.

Results

Set of structural connectivities

We built a set of adjacency matrices that span the integration-segregation axis. This set comprises five types of networks. Four are produced by generating algorithms: Modular networks, Hierarchical networks, Watts-Strogatz small-world networks, and Barabási-Albert scale-free networks. The fifth is a binarized human connectome following the Schaefer-200 parcellation. Each network-generating algorithm can be tuned to obtain a different degree of integration or segregation, and the human connectome was likewise perturbed to obtain more integrated or more segregated versions (Fig. 1 and also see Methods).

Small-world index \(\omega\) describes a network’s integration/segregation

Several metrics were calculated to characterize the degree of integration or segregation of a network. Briefly, a segregated network has many distinct communities or a lattice-like structure. An integrated network, by contrast, resembles a single large community in which all paths are short, as in a random network.

Segregation metrics (clustering coefficient, modularity, and path length) and an integration metric (global efficiency) were calculated24 using the Brain Connectivity Toolbox for Python, bctpy (https://pypi.org/project/bctpy/).
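The sketch below shows how these four quantities can be obtained for a binary, undirected, connected graph. To keep the example self-contained, it uses NetworkX instead of bctpy; that substitution is an assumption. Modularity is computed on the partition returned by NetworkX's greedy (Clauset-Newman-Moore) heuristic, which need not match the community-detection routine used in the study. The helper name `structural_metrics` is hypothetical.

```python
import networkx as nx
from networkx.algorithms import community as nx_comm


def structural_metrics(G: nx.Graph) -> dict:
    """Segregation/integration metrics for a connected, binary, undirected graph."""
    # Local segregation: average fraction of closed triangles around each node.
    clustering = nx.average_clustering(G)
    # Global segregation: modularity Q of a greedy (Clauset-Newman-Moore) partition.
    communities = nx_comm.greedy_modularity_communities(G)
    modularity = nx_comm.modularity(G, communities)
    # Characteristic path length; NetworkX raises an error if G is disconnected.
    path_length = nx.average_shortest_path_length(G)
    # Integration: mean inverse shortest-path length over all node pairs.
    efficiency = nx.global_efficiency(G)
    return {"CC": clustering, "Q": modularity, "L": path_length, "E": efficiency}


# Example: a 240-node ring lattice with 18 neighbours per node (no rewiring).
lattice = nx.watts_strogatz_graph(240, 18, 0.0, seed=1)
print(structural_metrics(lattice))
```

For an unrewired ring lattice with K = 18 neighbours, the clustering coefficient equals 3(K − 2)/(4(K − 1)) ≈ 0.706. This gives a quick sanity check on the output.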

In addition, Telesford’s Small-World metric25, ω, was calculated, and the networks span a wide range of this metric (Fig. 2 A). We sought to evaluate whether ω could serve as a descriptor of a network's degree of integration/segregation.

For this, we plotted each metric against ω (Fig. 2 B–E). We observed that the clustering coefficient decreases as ω increases, indicating a transition from locally clustered to more random structures (Fig. 2 B). Modularity, a measure of global segregation, also decreases with increasing ω (Fig. 2 C). Path length, which is inverse to network integration, shows an inverse relationship with ω (Fig. 2 D). Finally, global efficiency, which measures network integration, increases with ω (Fig. 2 E).

In summary, ω correlates inversely with clustering coefficient, modularity, and path length25,26, while global efficiency is directly correlated with ω27, showing a direct link between ω and the integration-segregation properties of the networks.
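The five categories used in Fig. 2 A can be expressed as a simple lookup. The sketch below assumes the thresholds listed in the Fig. 2 caption. It reads the small-world band as −0.25 < ω ≤ 0.25, which is the range marked in Fig. 6. The function name `classify_omega` is hypothetical.

```python
def classify_omega(omega: float) -> str:
    """Map Telesford's small-world index to the five categories of Fig. 2 A."""
    if omega <= -0.75:
        return "lattice"
    if omega <= -0.25:
        return "soft lattice"
    if omega <= 0.25:
        return "small world"
    if omega <= 0.75:
        return "soft random"
    return "random"


for w in (-0.9, -0.5, 0.1, 0.5, 0.9):
    print(w, classify_omega(w))
```

Upper bounds are inclusive (≤), matching the caption. A network with ω exactly −0.75 therefore counts as a lattice.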

Network Types and Structural Properties: Structural connectivity, graph, and degree distribution are shown for highly segregated to highly integrated networks. Watts-Strogatz networks (A–C) transition from highly ordered (segregated) to more randomized (integrated) structures as the rewiring probability p increases. Modular networks (D–F) show increasing inter-module connections, enhancing integration. Hierarchical networks (G–I) are constructed by iteratively adding inter-module connections in an ordered fashion to create a hierarchical structure. Power-law networks (J–L), generated with a modified Barabási-Albert algorithm, display variations in clustering through triangle formation. Finally, human connectome networks (M–O) are modified to increase either segregation or integration using a custom algorithm. Axis labels for each plot are shown in panel O.

Integration and Segregation Metrics for different network topologies. (A) Network classification based on the Small-world index (\(\omega\)) into lattice, soft lattice, small world, soft random, and random categories. (B–D) Relationship of \(\omega\) with clustering coefficient, modularity, and path length, respectively. (E) Relationship of \(\omega\) with global efficiency. Lattice: \(\omega \le -0.75\); soft lattice: \(-0.75<\omega \le -0.25\); small world: \(-0.25<\omega \le 0.25\); soft random: \(0.25<\omega \le 0.75\); random: \(0.75<\omega\).

FCD is a proxy for a network’s dynamical richness

To study how network topology determines dynamics, we implemented a Wilson-Cowan neural mass model28 at each node. The model includes a homeostatic plasticity mechanism that allows a broader exploration of the parameter space, because the nodes maintain their oscillatory behavior over a wide range of external inputs. The dynamics of all networks were characterized at coupling strengths g logarithmically spaced between 0.01 and 2.5. After filtering and down-sampling, a Hilbert transform was used to obtain the phase and envelope of the simulated signals in the 5–15 Hz band (Fig. 3 A). Time-resolved Functional Connectivity (FC) matrices were calculated as the pairwise correlation between envelopes29, using a sliding-window approach (window size = 2000 points, equivalent to 4 s; overlap = 75%; Fig. 3 A, bottom). Finally, the FCD matrix was calculated as the Euclidean distance between vectorized FCs (Fig. 3 B).
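The processing chain above can be sketched as follows. Several details are assumptions not stated in this section:

- The signals are already down-sampled to 500 Hz, as implied by 2000 points = 4 s.
- The band-pass is a 4th-order zero-phase Butterworth filter.
- FC is the Pearson correlation of the envelopes.
- Each FC is vectorized through its upper triangle.

The function name `fcd_matrix` is hypothetical.

```python
import numpy as np
from scipy.signal import butter, hilbert, sosfiltfilt
from scipy.spatial.distance import pdist, squareform


def fcd_matrix(x, fs=500.0, band=(5.0, 15.0), win=2000, overlap=0.75):
    """x: simulated activity, shape (n_nodes, n_samples), already down-sampled to fs.

    Returns the FCD matrix and the list of windowed FC matrices.
    """
    # Zero-phase band-pass filter (4th-order Butterworth is an assumption).
    sos = butter(4, band, btype="bandpass", fs=fs, output="sos")
    filtered = sosfiltfilt(sos, x, axis=1)
    # Analytic signal: np.abs gives the envelope; np.angle would give the phase.
    envelope = np.abs(hilbert(filtered, axis=1))

    step = int(round(win * (1.0 - overlap)))  # 75 % overlap -> 500-sample step
    iu = np.triu_indices(x.shape[0], k=1)     # unique node pairs
    fcs, vectors = [], []
    for start in range(0, envelope.shape[1] - win + 1, step):
        fc = np.corrcoef(envelope[:, start:start + win])  # Pearson envelope correlation
        fcs.append(fc)
        vectors.append(fc[iu])
    # FCD: Euclidean distance between every pair of vectorised FC matrices.
    fcd = squareform(pdist(np.asarray(vectors), metric="euclidean"))
    return fcd, fcs
```

With 75% overlap, consecutive windows start 500 samples (1 s) apart. A recording of T samples therefore yields floor((T − 2000)/500) + 1 FC matrices.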

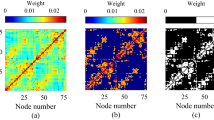

The FCD matrix shown in Fig. 3 B represents how different (or similar) the FCs visited by the network are. Therefore, the FCD is a representation of the network dynamics11. When the coupling between nodes is \(g\le 0.04\), the FCD appears almost uniformly green (Fig. 4, top). This means that the difference between FCs is nonzero and roughly constant, so the network keeps moving through different (un)synchronized states that are never revisited. In contrast, when \(g\ge 1.0\), the FCD matrix appears blue: the difference between FCs is essentially zero, and the synchronization pattern remains in a single static configuration throughout the simulation. Intermediate values of g produce yellow and red patches in the FCD. These patches reflect richer dynamics, in which FCs with both larger and smaller differences are observed (Fig. 4, top).

To quantify the observed synchronization patterns, we used the variance of the off-diagonal values of the FCD, Var(FCD). Var(FCD) is minimal at small or large g, indicating either uniformly dissimilar or fixed correlation patterns. It is maximal when the FCD matrix has a patchy structure (Fig. 4, bottom).
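A minimal sketch of Var(FCD) is shown below. Because the FCD is symmetric with a zero diagonal, the off-diagonal values can be taken from the upper triangle, which counts each pair of windows once. Whether time-overlapping windows were additionally excluded is not stated, so that refinement is omitted. The function name `var_fcd` is hypothetical.

```python
import numpy as np


def var_fcd(fcd: np.ndarray) -> float:
    """Variance of the off-diagonal FCD entries (each window pair counted once)."""
    iu = np.triu_indices_from(fcd, k=1)
    return float(np.var(fcd[iu]))


toy = np.array([[0.0, 1.0, 3.0],
                [1.0, 0.0, 5.0],
                [3.0, 5.0, 0.0]])
print(var_fcd(toy))  # off-diagonal values 1, 3, 5 -> variance 8/3
```

A uniformly colored FCD, whether all green or all blue, gives a variance of zero. This matches the low Var(FCD) at both ends of the coupling range.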

Network Topology drives Network Dynamics. The FCD patterns (top) of segregated (left), intermediate (middle), and integrated (right) networks are shown, with the coupling value indicated at the top. Overall, intermediate networks are more dynamic, as their FC distances are higher (red patches). In contrast, integrated networks exhibit less dynamic behavior (green to blue patches). The bottom plots show the variance of the FCD: the x-axis represents coupling strength and the y-axis the mean Var(FCD) over 10 network realizations. Notice that Watts-Strogatz networks yield the highest Var(FCD) values at intermediate or high levels of integration.

Network topology drives network dynamics

To characterize the dynamical repertoire of each network type along the integration/segregation continuum, we calculated Var(FCD) for each global coupling value. The results were averaged over 10 random seeds that governed the heterogeneity of each network. Figure 4 depicts the FCD matrices for three characteristic networks of each type at different values of g. At the bottom, the line plots summarize the average Var(FCD) over the explored coupling range. All networks showed maximal dynamical richness at intermediate values of global coupling, as previously established in simulation studies16,20. In addition, networks classified as intermediate between segregation and integration show rich dynamics over a wider range of coupling values. Moreover, Modular and Hierarchical networks tend to show greater FC variability.

Network topology imposes dynamical richness

To further explore the dynamical variations due to SC, we analyzed two other parameters that characterize the network's dynamics: Synchrony and Metastability (\(\chi\)). Synchrony measures the phase synchrony of all the signals in the network, ranging from no synchronization (0) to full synchronization (1). Metastability (\(\chi\)), on the other hand, measures the variability of global synchrony over time30. Both measures are plotted along with Var(FCD) for all networks studied, across the whole range of global coupling, in Fig. 5. In all cases, shallow (sometimes staggered) synchronization curves correspond closely to higher Metastability and Var(FCD). In contrast, steep synchronization curves (typical of random networks) coincide with very small values of the measures associated with dynamical richness. Metastability and Var(FCD) mostly coincide for all networks, with some differences. In connectome-based networks, for example, the two measures peak at clearly different coupling values.
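The section does not spell out the formulas, so the sketch below assumes the widely used definitions:

- Synchrony is the time average of the Kuramoto order parameter R(t), computed from the Hilbert phases.
- Metastability χ is the standard deviation of R(t) over time, consistent with "the variability in time of the global synchrony".

The function name `synchrony_and_metastability` is hypothetical.

```python
import numpy as np


def synchrony_and_metastability(phases: np.ndarray) -> tuple:
    """phases: instantaneous phases in radians, shape (n_nodes, n_samples)."""
    # Kuramoto order parameter R(t) in [0, 1]: coherence of all node phases at time t.
    R = np.abs(np.exp(1j * phases).mean(axis=0))
    # Synchrony: time-averaged R; metastability (chi): standard deviation of R over time.
    return float(R.mean()), float(R.std())
```

The phases can be taken as `np.angle` of the analytic signal from the band-pass filtering step. Perfectly locked phases give synchrony 1 and χ = 0. A network that alternates between coherent and incoherent epochs keeps R(t) fluctuating, which raises χ.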

Network topology modulates Dynamical parameters. Network behavior is shown for three dynamical metrics (rows) and the different network types. Colors represent different integration-segregation values, ranging from latticed to random (\(p=1.00\)). All values correspond to mean values over ten realizations.

Linking structural features to network dynamics

To establish a relationship between structure and dynamics, we reduced the data shown in Fig. 5 to a single value per network. For phase synchrony, this value is the slope of a fitted sigmoid; for Metastability and Var(FCD), it is the area under the curve. This approach summarized the dynamics of any network across the studied range of global coupling (g). Figure 6 illustrates the relationship between structural parameters and dynamical measures.
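The fitting procedure is described in Methods, which fall outside this excerpt. The sketch below therefore rests on several assumptions:

- A four-parameter logistic is fitted to synchrony as a function of log10(g), and its steepness k is taken as the "slope".
- The area under the curve is integrated over log10(g), which weights the log-spaced coupling values equally.
- The 40-point grid in the example is illustrative.

All function names are hypothetical.

```python
import numpy as np
from scipy.optimize import curve_fit


def logistic(x, lo, hi, k, x0):
    """Four-parameter sigmoid; k controls the steepness of the transition."""
    return lo + (hi - lo) / (1.0 + np.exp(-k * (x - x0)))


def synchrony_slope(g, sync):
    """Fit the logistic to synchrony vs log10(g) and return the steepness k."""
    x, y = np.log10(np.asarray(g, float)), np.asarray(sync, float)
    p0 = [y.min(), y.max(), 1.0, np.median(x)]
    params, _ = curve_fit(logistic, x, y, p0=p0, maxfev=10000)
    return float(params[2])


def area_under_curve(g, values):
    """Trapezoidal area under a curve sampled at log-spaced g, integrated over log10(g)."""
    x, y = np.log10(np.asarray(g, float)), np.asarray(values, float)
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))


g = np.logspace(-2, np.log10(2.5), 40)  # 0.01 to 2.5, log-spaced
sync = logistic(np.log10(g), 0.05, 0.95, 4.0, -0.8)  # synthetic curve
print(synchrony_slope(g, sync), area_under_curve(g, sync))
```

A sharper transition to synchrony gives a larger k. Integrating over log10(g) rather than g is a design choice: integrating over g directly would let the sparsely sampled high-coupling end dominate the area.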

Relationship Between Structural Features and Network Dynamics. Phase synchrony slope and the area under the curve for Metastability (\(\chi\)) and Var(FCD) are plotted against clustering coefficient, global efficiency, \(\omega\), and modularity for all networks tested. Symbol shapes and colors denote the different types of networks, as shown in the legend. Vertical lines in the \(\omega\) plot denote the range considered small-world (\(-\)0.25; 0.25). Networks with small-world characteristics (\(\omega\) close to zero) exhibit the highest dynamical richness, with Var(FCD) peaking at intermediate global coupling values. The plots highlight that structural features such as clustering coefficient, global efficiency, and modularity are key predictors of the dynamic behavior of networks, with small-world networks showing the optimal balance for dynamic flexibility.

For all networks, the slope of the fitted sigmoid for phase synchrony (see Methods) increased as the network transitioned from segregation to integration. Notice that as the slope increases, i.e., as the transition to synchrony becomes sharper, metastability decreases.

Both segregation measures tested, Clustering Coefficient (CC) (Fig. 6, middle left) and Modularity (Q) (Fig. 6, middle right), follow a monotonic exponential relationship with \(\chi\). However, CC showed distinct behavior in Barabási-Albert (scale-free) networks compared with other network types. At higher CC values, modular and hierarchical networks displayed trends that differed from those of Watts-Strogatz and connectome-based networks. Similarly, the integration metric (Global Efficiency, \(\eta\)) and the small-worldness index (\(\omega\)) (Fig. 6, middle) showed different patterns for modular and hierarchical networks relative to Watts-Strogatz and connectome networks. This similarity between modular and hierarchical networks is unsurprising, as hierarchical networks are inherently modular.

On the other hand, Var(FCD) exhibited a broad inverted “U”-shaped relationship with structural features (Fig. 6, bottom), peaking when the small-worldness index (\(\omega\)) was between 0 and 0.5. This indicates an optimal balance between integration and segregation. Var(FCD) declined sharply when integration increased (higher Global Efficiency) or segregation decreased (lower Modularity or Clustering Coefficient), emphasizing the need for segregated sub-networks to sustain dynamic synchronization patterns. Modularity (Q), a global segregation measure, captured the increasing trend in Var(FCD) better than the local Clustering Coefficient (CC), which showed deviations, particularly for Barabási-Albert networks. Notably, Watts-Strogatz and connectome networks showed a marked drop in Var(FCD) at high segregation levels. Modular and hierarchical networks, by contrast, maintained high values and did not exhibit the inverted “U” trend.

Modularity is a predictor for network dynamics

Finally, to link structural parameters with dynamical properties, we calculated the Mutual Information (MI) between each structural parameter and either \(\chi\) or Var(FCD) (Table 1 and Fig. 7). MI quantifies the relationship between two variables, X and Y, by measuring the amount of information about Y that can be gained by observing X31. This method provided a model-agnostic framework to identify the structural parameter that best predicts the associated dynamical properties.
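The MI estimator is not specified in this section. As an illustration only, the sketch below uses the simplest plug-in estimate from an equal-width 2-D histogram, with an arbitrary choice of 8 bins. Here x would hold one structural metric and y one dynamical summary (e.g., the area under the curve of χ), with one entry per network. The function name is hypothetical.

```python
import numpy as np


def mutual_information(x, y, bins=8):
    """Plug-in histogram estimate of I(X;Y) in bits (equal-width bins)."""
    joint, _, _ = np.histogram2d(x, y, bins=bins)
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)  # marginal of X, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)  # marginal of Y, shape (1, bins)
    nz = pxy > 0                         # skip empty cells (0 * log 0 = 0)
    return float(np.sum(pxy[nz] * np.log2(pxy[nz] / (px @ py)[nz])))


rng = np.random.default_rng(0)
q = rng.uniform(size=2000)                                  # stand-in structural metric
print(mutual_information(q, np.exp(3 * q)))                 # deterministic dependence: high MI
print(mutual_information(q, rng.uniform(size=2000)))        # independent: close to 0
```

Because MI captures any statistical dependence, not only linear or monotonic ones, it fits the "model-agnostic" framing. Plug-in estimates are biased upward for small samples, so comparisons should use the same number of bins and samples for every metric.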

Metastability (\(\chi\)) consistently shows higher MI values than Var(FCD), indicating that it is more strongly and predictably influenced by structural features. Var(FCD), on the other hand, is most effectively predicted by Efficiency and Modularity, highlighting their critical role in dynamical richness.

Among the structural metrics, Modularity (Q) exhibits the highest MI values for both \(\chi\) (t-test p<0.001, \(|D|>0.8\) for all pairs) and Var(FCD) (t-test p<0.001, \(|D|>0.8\) vs. CC and \(\omega\); \(D=0.74\) vs. \(\eta\)). This suggests that modularity mediates transitions across dynamical states by balancing localized specialization and global integration. Additionally, \(\omega\) shares relatively less MI with Var(FCD) than with \(\chi\). This indicates that while small-worldness is significant for metastable states, it plays a less prominent role in the variability of dynamic functional connectivity. It also suggests that modularity provides a more direct representation of the large-scale structural organization required to sustain rich and complex network dynamics.
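The statistics reported above can be reproduced in outline as follows. This section does not say which samples entered each t-test (for example, MI values over repeated estimates), so the inputs here are hypothetical. D is assumed to be Cohen's d with a pooled standard deviation; by Cohen's usual convention, |d| > 0.8 counts as a large effect. Function names are hypothetical.

```python
import numpy as np
from scipy import stats


def cohens_d(a, b):
    """Cohen's d with a pooled standard deviation."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    na, nb = a.size, b.size
    pooled_var = ((na - 1) * a.var(ddof=1) + (nb - 1) * b.var(ddof=1)) / (na + nb - 2)
    return float((a.mean() - b.mean()) / np.sqrt(pooled_var))


def compare_mi(mi_a, mi_b):
    """Two-sample Student t-test plus effect size for two sets of MI values."""
    t, p = stats.ttest_ind(mi_a, mi_b)
    return float(t), float(p), cohens_d(mi_a, mi_b)


# Hypothetical MI samples for two structural metrics.
print(compare_mi([1, 2, 3, 4, 5], [3, 4, 5, 6, 7]))
```

Reporting the effect size alongside p matters here. With many samples, even small MI differences can reach p < 0.001, whereas d indicates whether the difference is practically large.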

Overall, global measures like Modularity (Q) and Efficiency (\(\eta\)) emerge as the most informative metrics for understanding network dynamics, particularly for the more complex patterns captured by Var(FCD).

Mutual Information links network structure and dynamics. Bars show the mutual information that each structural metric shares with the dynamical ones, for all types of networks. Modularity (Q) is the structural parameter that shares the most information with both dynamical parameters. Error bars represent SEM.

Discussion

In this study, we explored how network structure influences brain dynamics, focusing on metrics that quantify the integration-segregation balance, particularly modularity and small-worldness. We found that networks with an \(\omega\) index close to zero or slightly positive (indicating small-world characteristics) exhibited the most dynamic behavior, as quantified by the variance of the FCD matrix. The networks that deviated from this tendency had high global efficiency, i.e., they were highly integrated. Additionally, the ability to show multiple dynamic states was characterized by metastability \(\chi\)30. Our experiments showed that highly segregated networks are more prone to transition between different states than integrated ones.

Previous research has extensively investigated the relationship between network structure and dynamics across different scales. At the local scale, random neural fluctuations and chaotic activity have been linked to phenomena such as dynamical wandering, where the brain transitions between various stable states over time14,15,16,18,32. At the mesoscale, mechanisms such as coupling between cortical areas, sub-networks, and delays between nodes have been proposed to drive state transitions by modifying the brain’s intrinsic, likely chaotic, oscillatory regime19,20,21. These studies collectively highlight the influence of structural connectivity on neural dynamics.

Building on these findings, our study focuses on the global scale, showing that the presence of modules within a network, along with their interconnectivity, plays a crucial role in modulating global dynamics. By using mutual information to quantify the relationship between structural and dynamical parameters, we identified modularity as a key structural feature. It helps explain, in a model-agnostic manner, how network structure shapes dynamical richness.

The relationship between structure and dynamics becomes evident when considering the level of modularity and interconnectivity in a network. At one extreme, networks composed of entirely disconnected modules exhibit flat dynamics, as limited communication between components leads to each module operating independently, with average distances between components too large to enable meaningful interaction. Conversely, enhancing communication between modules, for instance by reducing the mean path length, increases dynamical richness, enabling more complex interactions across the network. However, when interconnectivity becomes excessive, or modularity is absent as in random networks, the system transitions into a fully integrated state where all components synchronize, once again resulting in flat, homogeneous dynamics.

In addition to influencing dynamic richness, the presence of modules strongly correlates with high metastability. Networks with high modularity, such as modular or hierarchical networks, exhibit increased metastability, as the presence of distinct yet interacting modules creates conditions conducive to metastable regimes. This observation aligns with prior research demonstrating that modular organization promotes metastable dynamics33. Together, these findings underscore the critical role of modular structure in shaping both the richness and stability of network dynamics.

Our study also highlights the critical role of small-worldness in supporting the brain’s dynamical richness, that is, its capacity to exhibit a wide range of dynamic states. The human connectome combines diverse topological characteristics, including small-world, modular, hierarchical, and power-law properties34,35,36,37. Among these, small-worldness stands out as particularly crucial because it balances integration (efficient global communication) and segregation (specialized local processing)38. Small-world networks, with their hallmark features of short path lengths and high clustering, uniquely facilitate both integration and segregation. Short path lengths enable rapid communication between distant brain regions, ensuring efficient global coordination, while high clustering supports local specialization within modules34,39. This dual capability overcomes the limitations of two other architectures. Purely modular networks excel in segregation but lack efficient integration40. Power-law networks promote integration through highly connected hubs but lack strong local clustering. By combining these strengths, small-world networks provide the structural foundation for the brain’s dynamic flexibility and resilience. Reinforcing this idea, Watts-Strogatz networks most closely follow the behavior of connectome networks, especially the drop in Var(FCD) and metastability when the networks are too segregated.

Our analysis using Mutual Information (MI) shows that modularity is a stronger predictor of network dynamics than other structural metrics, such as small-worldness (\(\omega\)). While \(\omega\) is effective in representing the integration-segregation balance at a local level, modularity provides a clearer representation of the overall network architecture. Modularity describes the organization of distinct yet interconnected modules, which are closely tied to large-scale structural features that influence dynamic interactions. This characteristic makes modularity better suited to explain complex dynamic behaviors, including Metastability (\(\chi\)) and the variability of Functional Connectivity Dynamics (Var(FCD)). In contrast, the small-worldness index (\(\omega\)), derived in part from the clustering coefficient (CC), emphasizes immediate node-level connections. Consequently, any limitations in MI observed for CC also affect \(\omega\), given their inherent connection. These findings suggest that modularity plays a key role in determining the dynamic properties of brain networks, offering insights beyond those of simpler metrics such as the clustering coefficient or global efficiency. The analysis illustrates how modular organization contributes to the complexity and adaptability of brain dynamics.

However, while these findings are promising, they should be interpreted with caution. One key limitation of our study is the exclusion of weighted networks, which restricts the generalization of our conclusions. Prior research has shown that variations in coupling strength between brain regions significantly influence overall dynamics, particularly in individuals with Alzheimer’s disease41, major depressive disorder (MDD)42, cognitive decline43, or as part of the aging process44. Future studies should investigate the role of coupling strength in shaping neural dynamics, particularly at the macroscale level.

Additionally, while the variance of functional connectivity dynamics (Var(FCD)) provides valuable insights into how brain region connectivity evolves over time, it may not fully capture the complexity of brain dynamics45. A more comprehensive understanding of dynamical richness requires investigating higher-order interactions (HOIs). HOIs capture simultaneous relationships among multiple brain regions, moving beyond traditional pairwise correlations. This approach provides a more accurate depiction of the system’s dynamics and offers a broader perspective on its complexity46. Although the exact methodologies for studying HOIs are still under development47,48, several emerging approaches have shown promise in characterizing phenomena such as aging45, psychiatric disorders48, and topological properties of brain networks47. Using artificially generated networks, as in the present work, future studies may reveal which topological properties are more likely to sustain HOIs similar to those observed in empirical brain recordings.

Lastly, given the study’s focus on binarized networks and pairwise correlations, future research could extend these findings to weighted networks, where variations in connection strength may clarify how modularity influences dynamic behavior. Furthermore, HOI analysis could shed light on the complex mechanisms underlying brain dynamics by capturing dependencies beyond pairwise relationships, enriching our understanding of network function.

In conclusion, our findings suggest that highly modular networks are particularly adept at transitioning between distinct dynamical states, underscoring their crucial role in system segregation. In addition, the degree of interconnectivity between modules significantly shapes how networks navigate shifts between low and high activity levels. Small-world networks exhibited the most dynamic behavior, as evidenced by the variance of the Functional Connectivity Dynamics (FCD) matrix. Finally, the network modularity index may be used as a proxy for estimating network dynamics.

Methods

Networks

Networks of 240 nodes (200 for the human connectome) were created such that each network had a density of 0.075. This corresponds to an average degree of about 18 connections per node in the 240-node networks and about 15 in the 200-node networks. All networks are binary and undirected, that is, their adjacency matrices are symmetric.
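The link between density and average degree is simple arithmetic. For an undirected graph with n nodes, the density is the number of edges divided by n(n − 1)/2, so the mean degree equals density × (n − 1). The helper names below are hypothetical.

```python
def mean_degree(n_nodes: int, density: float) -> float:
    """Mean degree of an undirected graph: density * (n - 1)."""
    return density * (n_nodes - 1)


def n_edges(n_nodes: int, density: float) -> int:
    """Number of undirected edges implied by a given density."""
    return round(density * n_nodes * (n_nodes - 1) / 2)


print(mean_degree(240, 0.075))  # about 17.9, i.e. roughly 18 neighbours per node
print(mean_degree(200, 0.075))  # about 14.9, i.e. roughly 15 neighbours per node
print(n_edges(240, 0.075))      # 2151 edges in a 240-node network
```

Conversely, a ring lattice in which every node has exactly 18 neighbours has density 18/239 ≈ 0.0753, which is consistent with the stated value.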

Watts-Strogatz networks

These networks were generated using the Watts-Strogatz algorithm26. The algorithm starts with 240 nodes arranged in a circular lattice and each node is connected to its 18 nearest neighbors. Then, with probability \(p_r\), each connection is rewired to connect a randomly selected node within the network. The reconnection probability was varied from \(p_r=0\) (highly segregated lattice network) to \(p_r=0.5\) (highly integrated random network).
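The rewiring sweep can be sketched with NetworkX's `watts_strogatz_graph`. The study does not say which implementation it used, so this choice is an assumption. The helper `watts_strogatz_set` and the six-point grid of p_r values are hypothetical.

```python
import networkx as nx
import numpy as np


def watts_strogatz_set(p_values, n=240, k=18, seed=0):
    """One Watts-Strogatz graph per rewiring probability (hypothetical helper)."""
    return {p: nx.watts_strogatz_graph(n, k, p, seed=seed) for p in p_values}


graphs = watts_strogatz_set(np.linspace(0.0, 0.5, 6))
for p, G in graphs.items():
    print(f"p_r={p:.1f}  edges={G.number_of_edges()}  CC={nx.average_clustering(G):.3f}")
```

Rewiring moves edges but never adds or deletes them. Every graph in the sweep therefore keeps n·k/2 = 2160 edges, and only its clustering and path structure change.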

Modular networks

This type of network consists of a set of internally connected, topologically independent subnetworks (modules), which are linked to each other through a small number of inter-module connections. The 240 nodes were arranged in 8 modules of 30 nodes each, and nodes within each module were connected randomly with a density of 0.6. Then, with probability \(p_{inter}\), intra-module connections were replaced by inter-module connections, such that the degree of the initial modular network remained constant. The inter-module probability was varied from \(p_{inter}=5\times 10^{-4}\) (highly segregated) to \(p_{inter}=0.07\) (highly integrated).
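A minimal NumPy sketch of this construction follows. We read "the degree ... remains constant" as preserving the total number of edges, and hence the mean degree; individual node degrees may change. That reading is an assumption. The function `modular_network` is hypothetical.

```python
import numpy as np


def modular_network(n_modules=8, size=30, p_in=0.6, p_inter=0.01, seed=0):
    """Binary modular graph; intra-module edges move between modules with prob p_inter."""
    rng = np.random.default_rng(seed)
    n = n_modules * size
    labels = np.repeat(np.arange(n_modules), size)
    same = labels[:, None] == labels[None, :]
    # Random intra-module wiring with density p_in (upper triangle, then symmetrise).
    upper = np.triu(rng.random((n, n)) < p_in, k=1) & same
    A = upper | upper.T
    # Replace each intra-module edge, with probability p_inter, by a new inter-module edge.
    rows, cols = np.nonzero(np.triu(A, k=1))
    for i, j in zip(rows, cols):
        if rng.random() < p_inter:
            while True:
                u, v = rng.integers(n, size=2)
                if labels[u] != labels[v] and not A[u, v]:
                    break
            A[i, j] = A[j, i] = False
            A[u, v] = A[v, u] = True
    return A.astype(int)


A = modular_network(p_inter=0.05)
print(A.sum() // 2, "edges")
```

With 8 modules of 30 nodes at density 0.6, the expected edge count is about 8 × 0.6 × 435 ≈ 2088. This corresponds to an overall density of roughly 0.073, close to the 0.075 target.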

Hierarchical networks

These networks were initially set up in a similar manner to the Modular networks. The 240 nodes were divided into 12 modules of variable size (between 16 and 24 nodes), and nodes within each module were randomly connected with density 0.9. The hierarchical structure was then implemented by iteratively connecting module pairs, with a probability that decreased as the hierarchy level increased. Finally, a number of random connections were added (replacing existing ones to preserve the degree, with probability ranging from 0 to 0.5) to gradually increase network integration.

Scale-free networks

These networks were generated using the Holme and Kim algorithm49, as implemented in the NetworkX Python package50. This algorithm generates graphs with a power-law degree distribution and a controllable (approximate) average clustering coefficient. It begins with a small number of nodes (typically three) and grows the network by adding one node at a time. Each new node is connected to existing nodes with a preference for those with higher degrees (the rich-get-richer principle). Additionally, after a node is chosen based on its degree, the algorithm may also connect the new node to one of that node's neighbors, closing a triangle (triad formation) to increase clustering. By progressively increasing or decreasing the average clustering coefficient in this way, we obtained more segregated or more integrated networks, respectively.
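In NetworkX the Holme-Kim generator is `powerlaw_cluster_graph(n, m, p, seed)`. Here m is the number of edges each new node brings and p is the probability of a triad-formation step. The values m = 9 (mean degree of about 18) and the grid of p are assumptions chosen to approach the stated density. The helper name `holme_kim_set` is hypothetical.

```python
import networkx as nx


def holme_kim_set(triad_probs, n=240, m=9, seed=0):
    """Holme-Kim graphs via NetworkX; a larger triad probability gives more clustering."""
    return {p: nx.powerlaw_cluster_graph(n, m, p, seed=seed) for p in triad_probs}


for p, G in holme_kim_set([0.0, 0.5, 0.9]).items():
    print(f"p={p}  edges={G.number_of_edges()}  CC={nx.average_clustering(G):.3f}")
```

With p = 0 the generator reduces to preferential attachment only (Barabási-Albert-like, low clustering). Raising p closes more triangles and yields more segregated networks.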

Human connectome networks

The ENIGMA Toolbox51 was used to obtain a Human Connectome Project (HCP) connectome parcellated according to the Schaefer-200 parcellation. The weighted connectome was binarized using a threshold that retained 7.5% of the connections.

To integrate the human connectome network, we used the randmio_und_connected function of the Brain Connectivity Toolbox (BCT) for Python (https://pypi.org/project/bctpy/). After setting an initial number of rewiring iterations, we applied the function iteratively, increasing the number of rewirings each time to move the network toward a randomized, more integrated state. The process stopped once a desired integration level or a set number of iterations was reached. Because the rewiring swaps edges rather than adding or removing them, the network becomes more integrated while its degree distribution is preserved.
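The study used bctpy's `randmio_und_connected`. As a self-contained stand-in, the sketch below uses NetworkX's `connected_double_edge_swap`. This is a swapped-in technique, not the authors' code, although it likewise performs degree-preserving edge swaps that keep the graph connected. The helper `integration_series` and the swap schedule are hypothetical, and a ring lattice replaces the connectome only to keep the example runnable.

```python
import networkx as nx


def integration_series(G, swap_schedule, seed=0):
    """Return (n_swaps, global efficiency, graph) for progressively rewired copies of G."""
    series = []
    for nswap in swap_schedule:
        H = G.copy()
        if nswap > 0:
            # Degree-preserving double-edge swaps that keep H connected.
            nx.connected_double_edge_swap(H, nswap=nswap, seed=seed)
        series.append((nswap, nx.global_efficiency(H), H))
    return series


base = nx.watts_strogatz_graph(240, 18, 0.0, seed=1)  # placeholder for the connectome
for nswap, eff, _ in integration_series(base, [0, 50, 200]):
    print(nswap, round(eff, 3))
```

Each step starts from a fresh copy of the original graph, so the number of swaps directly controls how far the network is pushed toward a random, more integrated configuration.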

To segregate the human connectome network, we developed an algorithm based on node modularity. It starts by computing an agreement matrix, derives a consensus partition for the network, and then calculates the nodal participation coefficient and z-scored modularity for each node. The core operation iterates over nodes, prioritizing those with high modularity scores. For these nodes, it identifies and deletes certain intra-module connections (those within the same community) and establishes new inter-module connections (those outside its community). This rewiring aims to change up to three connections per iteration, preserving the graph’s structure while emphasizing nodes with the highest modularity values.

Structural metrics

Integration and segregation in the generated networks were quantified with several metrics. Global efficiency estimates network integration and is the average of the inverse shortest path lengths between all node pairs52. The clustering coefficient is a local measure of segregation. For each node, it is the fraction of pairs of its neighbors that are themselves connected, that is, the fraction of possible triangles around the node that actually exist53. Modularity measures segregation at a more global scale: once modules have been detected, it quantifies the balance between intra-module and inter-module links54. Finally, the small-world index \(\omega\) measures the integration/segregation balance by comparing the global efficiency and clustering coefficient of each network with those of its lattice and random equivalents26.
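The four structural metrics can be computed with NetworkX as sketched below. Modularity is computed on communities from `greedy_modularity_communities`, the Clauset-Newman-Moore method of ref. 54. NetworkX's `nx.omega` follows the path-length form of \(\omega\) (random-graph path length over observed path length, minus observed clustering over lattice clustering), so it may differ from an efficiency-based variant. The small `niter`/`nrand` values are assumptions chosen only for speed.

```python
import networkx as nx

def structural_metrics(G, seed=0, niter=1, nrand=2):
    """Integration/segregation metrics for a connected, undirected graph."""
    communities = nx.community.greedy_modularity_communities(G)   # Clauset-Newman-Moore
    return {
        "global_efficiency": nx.global_efficiency(G),
        "clustering": nx.average_clustering(G),
        "modularity": nx.community.modularity(G, communities),
        # small-world omega (path-length form); small niter/nrand keep this fast
        "omega": nx.omega(G, niter=niter, nrand=nrand, seed=seed),
    }

G = nx.powerlaw_cluster_graph(60, 3, 0.5, seed=0)
metrics = structural_metrics(G)
print(metrics)
```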

Dynamical model

Neural Mass Model: Wilson-Cowan with Plasticity

The neural dynamics of each constituent node in the obtained SC networks were simulated using an oscillatory neural mass model, the Wilson-Cowan model55, with the incorporation of an inhibitory synaptic plasticity (ISP) mechanism28. The activity in neural populations is governed by the equations28

where \(E_k\) and \(I_k\) are the average firing rates of the excitatory and inhibitory populations in the \(k^{th}\) brain region (node in the network), respectively. \(\tau _e\) and \(\tau _i\) are the excitatory and inhibitory time constants, \(c_{ab}\) is the local connection strength from population a to population b, and P is the excitatory input constant. \(r_e\) and \(r_i\) are parameters that account for the refractory period of firing neurons. Long-range connections \(W_{jk} \in \lbrace 0,1 \rbrace\) from region j to region k are multiplied by a global coupling constant G. The additive noise term is drawn from a normal (Gaussian) distribution with zero mean and standard deviation \(D=0.002\). Indices k and j run across the total number of nodes n. The nonlinear response function S is a sigmoid function given by:

where \(\mu\) and \(\sigma\) are the location and slope parameters of the sigmoid, respectively.

The ISP mechanism is modeled as a change in the local inhibitory-to-excitatory connection \(c_{ie}\). This change depends on the activity of both the local excitatory and inhibitory populations, according to

where \(\tau _{isp}\) is the learning rate and \(\rho\) is the target excitatory activity level. The initial value is \(c_{ie}^k(0)=3.75\).

Parameters used are: \(c_{ee}=3.5\), \(c_{ei}=2.5\), \(P=0.4\), \(\tau _e=0.01\), \(\tau _i=0.02\), \(\mu =1\), \(\sigma =0.25\), \(\rho =0.125\), \(\tau _{isp}=2\), \(r_e = r_i = 0.5\).
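The model equations are not reproduced in this version of the text, so the right-hand side below is an **assumed form**, sketched from the definitions above and our reading of ref. 28. It uses Wilson-Cowan rate equations with a refractory factor \((1 - r E)\), a logistic \(S(x) = 1/(1+e^{-(x-\mu)/\sigma})\), and a Vogels-type plasticity rule \(\tau_{isp}\, \dot c_{ie} = I_k (E_k - \rho)\). Noise is left to the integrator. The parameter values are those listed in the text. The function names, the random connectivity and the initial state are hypothetical, and the form should be checked against ref. 28 before reuse.

```python
import numpy as np

# Parameter values from the text; the equations below are an assumed form.
PARAMS = dict(c_ee=3.5, c_ei=2.5, tau_e=0.01, tau_i=0.02, mu=1.0, sigma=0.25,
              rho=0.125, tau_isp=2.0, r_e=0.5, r_i=0.5)

def S(x, mu=PARAMS["mu"], sigma=PARAMS["sigma"]):
    """Assumed logistic response with location mu and slope sigma."""
    return 1.0 / (1.0 + np.exp(-(x - mu) / sigma))

def wc_isp_rhs(E, I, c_ie, W, G, P, p=PARAMS):
    """Deterministic derivatives (dE/dt, dI/dt, dc_ie/dt) for all n nodes.

    W[j, k] = 1 if region j projects to region k, so node k receives sum_j W[j, k] * E[j].
    """
    long_range = G * (W.T @ E)
    dE = (-E + (1 - p["r_e"] * E) * S(p["c_ee"] * E - c_ie * I + P + long_range)) / p["tau_e"]
    dI = (-I + (1 - p["r_i"] * I) * S(p["c_ei"] * E)) / p["tau_i"]
    dc_ie = I * (E - p["rho"]) / p["tau_isp"]   # inhibition grows when E exceeds rho
    return dE, dI, dc_ie

rng = np.random.default_rng(0)
n = 10
W = np.triu(rng.random((n, n)) < 0.3, 1).astype(float)
W = W + W.T                                     # symmetric binary connectivity
P = rng.uniform(0.3, 0.5, n)                    # heterogeneous drive, as in the protocol
E, I, c_ie = np.full(n, 0.1), np.full(n, 0.1), np.full(n, 3.75)
dE, dI, dc = wc_isp_rhs(E, I, c_ie, W, G=0.5, P=P)
```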

Simulation

For each network in the obtained sets, neuronal dynamics were simulated using the Wilson-Cowan oscillatory neural mass model with plasticity at every node. The global coupling strength G was varied over logarithmically spaced values in the range \(\lbrace 0,\dots,2.512\rbrace\).

The Euler-Maruyama method for stochastic differential equations was used, with an integration time step of \(dt = 0.0001\) s. Each simulation lasted \(t = 102\) s, including a noiseless transient of \(t_{trans} = 50\) s to allow the ISP to stabilize.
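A generic Euler-Maruyama integrator with a noiseless transient is sketched below. The function name and signature are hypothetical. `D` may be a scalar or an array with the state's shape. An array lets the noise act only on the E and I components and not on a plasticity variable packed into the same state vector.

```python
import numpy as np

def euler_maruyama(drift, x0, dt, t_total, t_trans, D, seed=0):
    """Integrate dx = drift(x) dt + D dW with the Euler-Maruyama scheme.

    Noise is switched off for the first t_trans seconds (noiseless transient),
    and only the post-transient samples are returned.
    """
    rng = np.random.default_rng(seed)
    x = np.array(x0, dtype=float)
    n_steps = int(round(t_total / dt))
    n_trans = int(round(t_trans / dt))
    out = np.empty((n_steps - n_trans,) + x.shape)
    for step in range(n_steps):
        x = x + dt * drift(x)                   # deterministic Euler step
        if step >= n_trans:
            x = x + D * np.sqrt(dt) * rng.standard_normal(x.shape)  # Wiener increment
            out[step - n_trans] = x
    return out

# Sanity check on dx/dt = -x without noise: x(1) should be close to exp(-1)
traj = euler_maruyama(lambda x: -x, [1.0], dt=1e-4, t_total=1.0, t_trans=0.0, D=0.0)
print(traj[-1, 0], np.exp(-1))
```

With `dt=1e-4`, `t_total=102`, `t_trans=50` and `D=0.002`, the call follows the protocol described above. The drift would wrap the model's right-hand side.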

To introduce heterogeneity among the oscillatory signals of different nodes, the excitatory input constant P was assigned randomly to each node within the range \(0.3-0.5\). The simulation parameters were adjusted so that the signals exhibited oscillatory behavior. This simulation protocol was repeated 10 times (10 random seeds).

Analysis

Signal preprocessing

Every simulated time series was subsampled to 0.5 kHz and filtered with a band-pass filter (4th-order Bessel, \(f_{low}=5Hz\); \(f_{high}=15Hz\)). The Hilbert transform was then applied to obtain the instantaneous phase and envelope of the filtered oscillation. The first and last seconds of each simulation were discarded to remove edge artifacts that the Hilbert transform may generate. The resulting signals were used for the dynamical analysis.
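The preprocessing chain can be sketched with SciPy as follows. Three choices here are assumptions: plain subsampling by slicing (no anti-aliasing), zero-phase filtering with `sosfiltfilt`, and second-order-section output from `bessel`. The 10 kHz input rate follows from \(dt = 0.0001\) s.

```python
import numpy as np
from scipy.signal import bessel, sosfiltfilt, hilbert

def preprocess(x, fs_in=10_000, fs_out=500, band=(5.0, 15.0), trim_s=1.0):
    """Subsample, band-pass (4th-order Bessel) and extract Hilbert phase/envelope.

    x has shape (time, nodes). Zero-phase filtering is an assumption.
    """
    step = fs_in // fs_out
    x = np.asarray(x, dtype=float)[::step]              # plain subsampling to fs_out
    sos = bessel(4, band, btype="bandpass", fs=fs_out, output="sos")
    filtered = sosfiltfilt(sos, x, axis=0)
    analytic = hilbert(filtered, axis=0)
    trim = int(trim_s * fs_out)                         # drop edge artifacts at both ends
    analytic = analytic[trim:analytic.shape[0] - trim]
    return np.angle(analytic), np.abs(analytic)

t = np.arange(60_000) / 10_000                          # 6 s at 10 kHz
phase, env = preprocess(np.sin(2 * np.pi * 10 * t)[:, None])
print(env.shape, env.mean())
```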

Dynamical metrics

Functional connectivity (FC)

The statistical dependency between neural signals was measured through a Functional Connectivity (FC) matrix56. Each element of this matrix is the pairwise envelope correlation between nodes j and k over a time window of duration W (\(W_{time} = 4 s\), overlap = 75 %). The window length of 2000 samples (4 s at 0.5 kHz) was chosen as a compromise between two needs: resolving changes on the scale of seconds, and properly capturing the slow components (around 0.6 Hz) present in the envelope signal.
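The sliding-window FC can be sketched with Pearson correlation (`np.corrcoef`) of the envelopes in each window. At 0.5 kHz, a 2000-sample window with 75 % overlap advances by 500 samples (1 s). The function name is hypothetical, and random envelopes are used only for illustration.

```python
import numpy as np

def sliding_window_fc(env, win=2000, overlap=0.75):
    """Envelope correlation matrices over overlapping windows.

    env: (time, nodes) envelope sampled at 0.5 kHz, so win=2000 samples = 4 s.
    Returns an array of shape (windows, nodes, nodes).
    """
    step = int(round(win * (1 - overlap)))              # 500 samples = 1 s
    starts = range(0, env.shape[0] - win + 1, step)
    return np.stack([np.corrcoef(env[s:s + win].T) for s in starts])

rng = np.random.default_rng(0)
fc = sliding_window_fc(rng.random((10_000, 6)))
print(fc.shape)
```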

Functional connectivity dynamics (FCD)

The Functional Connectivity Dynamics (FCD) matrix, which captures the dynamical repertoire of the system, was computed as the Euclidean distance between the vectorized lower triangles of \(FC_t\) and \(FC_{t+\tau }\).
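Given a stack of FC matrices (one per window), the FCD matrix can be sketched as pairwise Euclidean distances between their strictly lower triangles. The function name is hypothetical.

```python
import numpy as np
from scipy.spatial.distance import pdist, squareform

def fcd_matrix(fc_stack):
    """FCD[t1, t2] = Euclidean distance between vectorized lower triangles of FC_t1 and FC_t2."""
    n = fc_stack.shape[1]
    rows, cols = np.tril_indices(n, k=-1)               # strictly lower triangle
    vecs = fc_stack[:, rows, cols]                      # (windows, n*(n-1)/2)
    return squareform(pdist(vecs, metric="euclidean"))

rng = np.random.default_rng(0)
M = rng.random((5, 4, 4))
fc = (M + M.transpose(0, 2, 1)) / 2                     # symmetric stand-in FC stack
F = fcd_matrix(fc)
```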

Synchrony

To assess the overall synchrony of the network, Kuramoto's order parameter was calculated as \(R(t) = \left| \frac{1}{n}\sum _{k=1}^{n} e^{i\phi _k(t)} \right|\),

where i is the imaginary unit, \(\phi _k(t)\) is the instantaneous phase of the k-th node, and n is the number of nodes.
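The order parameter translates directly to NumPy for a phase array of shape (time, nodes). The function name is hypothetical.

```python
import numpy as np

def kuramoto_order(phases):
    """R(t) = |mean over nodes of exp(i * phi_k(t))| for phases of shape (time, nodes)."""
    return np.abs(np.exp(1j * phases).mean(axis=1))

in_phase = np.tile(np.linspace(0, 6, 50)[:, None], (1, 4))      # all nodes share one phase
anti_phase = np.column_stack([np.zeros(10), np.full(10, np.pi)])  # two nodes half a cycle apart
```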

Metastability

Metastability30 was assessed as the temporal variance of R(t), \(\operatorname{Var}(R) = \frac{1}{\tau }\sum _{t=1}^{\tau }\left( R(t) - \langle R \rangle _T \right)^2\),

where \(\tau\) is the number of samples in the signal R and \(\langle R \rangle _T\) is the time average of the global synchrony.
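Metastability then reduces to the population variance (normalized by \(\tau\)) of R(t). This normalization is an assumption; the \(1/(\tau - 1)\) version differs only slightly for long signals. The toy input alternates between full synchrony (R = 1) and anti-phase (R = 0), which gives a variance of 0.25.

```python
import numpy as np

def metastability(phases):
    """Variance over time (1/tau normalization) of the Kuramoto order parameter R(t)."""
    R = np.abs(np.exp(1j * phases).mean(axis=1))
    return np.mean((R - R.mean()) ** 2)

# First half: two nodes in phase (R = 1); second half: anti-phase (R = 0)
phases = np.vstack([np.zeros((50, 2)),
                    np.column_stack([np.zeros(50), np.full(50, np.pi)])])
print(metastability(phases))
```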

Multistability

Multistability refers to the coexistence of multiple stable states in a system30. Accordingly, we used the variance of the FCD values, VarFCD, as a proxy for quantifying multistability16.
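VarFCD can be sketched as the variance of the FCD entries above the main diagonal, so that each window pair is counted once and the trivial zero diagonal is excluded. This choice is an assumption; some studies also discard pairs of overlapping windows.

```python
import numpy as np

def var_fcd(fcd):
    """Variance of the FCD values above the main diagonal (each window pair once)."""
    iu = np.triu_indices(fcd.shape[0], k=1)
    return float(np.var(fcd[iu]))

F = np.array([[0.0, 1.0, 3.0],
              [1.0, 0.0, 5.0],
              [3.0, 5.0, 0.0]])
print(var_fcd(F))   # variance of {1, 3, 5}
```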

Metrics summarization

Phase synchrony

To summarize each network's phase-synchronization curve as a single value, a sigmoid function was fitted to the network's mean phase synchrony as a function of G (averaged over 10 seeds). The fitted sigmoid has slope k and 50% point \(x_0\).

The slope of each fitted function was then plotted against each structural metric.
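The fit can be sketched with `scipy.optimize.curve_fit`. The sigmoid form is an assumption: a logistic saturating at 1, with slope `k` and 50 % point `x0`, fitted on the linear G axis. The helper names and the synthetic curve are hypothetical.

```python
import numpy as np
from scipy.optimize import curve_fit

def logistic(g, k, x0):
    """Assumed sigmoid with slope k and 50 % point x0, saturating at 1."""
    return 1.0 / (1.0 + np.exp(-k * (g - x0)))

def fit_sync_curve(g, r_mean):
    """Fit the seed-averaged synchrony curve; returns (k, x0)."""
    p0 = (1.0, g[np.argmin(np.abs(r_mean - 0.5))])     # start x0 near the 50 % crossing
    (k, x0), _ = curve_fit(logistic, g, r_mean, p0=p0, maxfev=10_000)
    return k, x0

g = np.linspace(0, 2.5, 40)
k, x0 = fit_sync_curve(g, logistic(g, 5.0, 0.8))       # recover known parameters
print(k, x0)
```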

Multi and metastability

To describe these metrics as single values, the area under the curve over the coupling range, \(\mathrm{AUC} = \int f(g)\,dg\), was calculated using Simpson's rule with SciPy's simpson function.

Here f(g) is either multistability or metastability, averaged over the 10 seeds.
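A sketch of the AUC step with `scipy.integrate.simpson`, which accepts non-uniformly spaced sample points and therefore also handles a log-spaced G grid. The function name and the quadratic test curve are hypothetical. Simpson's rule is exact for a quadratic on an even number of intervals, so the check below returns 1/3.

```python
import numpy as np
from scipy.integrate import simpson

def auc_over_coupling(g, f_seeds):
    """Average f(g) over seeds (shape: seeds x len(g)) and integrate over g with Simpson's rule."""
    f_mean = np.mean(np.atleast_2d(f_seeds), axis=0)
    return float(simpson(f_mean, x=g))

g = np.linspace(0, 1, 11)
auc = auc_over_coupling(g, np.tile(g ** 2, (10, 1)))   # 10 identical "seeds"
print(auc)
```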

Mutual information

The relationship between structural and dynamical parameters was evaluated using Mutual Information, \(I(X;Y) = \sum _{x,y} p(x,y)\, \log \frac{p(x,y)}{p(x)\,p(y)}\),

where \(p(x, y)\) is the joint probability distribution, and \(p(x)\) and \(p(y)\) are the marginal distributions of \(X\) and \(Y\), respectively.
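The definition can be checked on discrete joint probability tables with a plug-in computation in nats. This only illustrates the formula; the estimator used for continuous data is described next.

```python
import numpy as np

def mutual_information(p_xy):
    """I(X;Y) in nats from a joint probability table p_xy[x, y] whose entries sum to 1."""
    p_xy = np.asarray(p_xy, dtype=float)
    p_x = p_xy.sum(axis=1, keepdims=True)              # marginal of X (column vector)
    p_y = p_xy.sum(axis=0, keepdims=True)              # marginal of Y (row vector)
    nz = p_xy > 0                                      # 0 * log 0 is taken as 0
    return float(np.sum(p_xy[nz] * np.log(p_xy[nz] / (p_x @ p_y)[nz])))

independent = np.outer([0.5, 0.5], [0.3, 0.7])         # I = 0
coupled = np.array([[0.5, 0.0], [0.0, 0.5]])           # I = log 2
```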

For each structural parameter across the entire network set, Mutual Information with respect to multi- or metastability was computed using the scikit-learn function mutual_info_regression (https://scikit-learn.org/).
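A sketch of the bootstrapped estimate with scikit-learn's `mutual_info_regression`, which uses a k-nearest-neighbour estimator and returns MI in nats. Resampling networks with replacement is our reading of the bootstrap. The function name, the per-resample `random_state`, and the synthetic data are hypothetical. Duplicated samples from resampling can bias nearest-neighbour estimates upward.

```python
import numpy as np
from sklearn.feature_selection import mutual_info_regression

def bootstrap_mi(structural, dynamical, n_boot=2000, seed=0):
    """Bootstrap distribution of MI between one structural metric and one dynamical summary."""
    rng = np.random.default_rng(seed)
    x = np.asarray(structural, dtype=float).reshape(-1, 1)
    y = np.asarray(dynamical, dtype=float)
    n = len(y)
    mi = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, n, n)                    # resample networks with replacement
        mi[b] = mutual_info_regression(x[idx], y[idx], random_state=b)[0]
    return mi

rng = np.random.default_rng(1)
x = rng.random(43)                                      # e.g. one metric over 43 networks
mi_dep = bootstrap_mi(x, x ** 2 + 0.01 * rng.standard_normal(43), n_boot=30)
mi_ind = bootstrap_mi(x, rng.random(43), n_boot=30)
```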

To ensure reliable estimates, Mutual Information was resampled31 using bootstrapping (N=43, B=2000), and the standard error of the mean (SEM) was derived from the 95% confidence interval. The normality of the bootstrap distributions was then assessed with the Kolmogorov-Smirnov test against a Gaussian distribution. Because the variances differed significantly (F-test, df1=df2=1999, p<0.001), mean differences were evaluated with Welch's t-test for unpaired samples. Effect sizes were computed using Cohen's d57.
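The comparison between two bootstrap distributions can be sketched with `scipy.stats`. The sketch runs a KS test on z-scored samples against a standard normal, then a two-sided F-test on the variance ratio, then `ttest_ind(..., equal_var=False)` for Welch's test, and finally Cohen's d with a pooled standard deviation. Estimating the mean and SD from the data makes the plain KS test conservative. The pooled-SD form of d is one of several variants discussed in ref. 57. The function name and synthetic samples are hypothetical.

```python
import numpy as np
from scipy import stats

def compare_bootstrap_distributions(a, b):
    """Normality (KS), equal variances (F), equal means (Welch t), effect size (Cohen's d)."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    # KS against a standard normal after z-scoring each sample
    ks_a = stats.kstest((a - a.mean()) / a.std(ddof=1), "norm").pvalue
    ks_b = stats.kstest((b - b.mean()) / b.std(ddof=1), "norm").pvalue
    # two-sided F-test on the variance ratio
    f = a.var(ddof=1) / b.var(ddof=1)
    df1, df2 = a.size - 1, b.size - 1
    p_f = 2 * min(stats.f.cdf(f, df1, df2), stats.f.sf(f, df1, df2))
    # Welch's t-test for unpaired samples with unequal variances
    welch = stats.ttest_ind(a, b, equal_var=False)
    # Cohen's d with pooled standard deviation
    pooled = np.sqrt((df1 * a.var(ddof=1) + df2 * b.var(ddof=1)) / (df1 + df2))
    d = (a.mean() - b.mean()) / pooled
    return dict(ks_p=(ks_a, ks_b), f=f, p_f=p_f, t=welch.statistic, p_t=welch.pvalue, d=d)

rng = np.random.default_rng(0)
res = compare_bootstrap_distributions(rng.normal(1, 1, 2000), rng.normal(0, 2, 2000))
```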

Data availability

The structural networks employed in this study along with sample code for simulation and basic analysis can be found at https://github.com/vandal-uv/Integration-Segregation.

References

Bullmore, E. & Sporns, O. The economy of brain network organization. Nat. Rev. Neurosci. 13, 336–349 (2012).

Kaiser, M. A tutorial in connectome analysis: Topological and spatial features of brain networks. Neuroimage 57, 892–907 (2011).

Lynn, C. W. & Bassett, D. S. The physics of brain network structure, function and control. Nat. Rev. Phys. 1, 318–332 (2019).

Sporns, O., Tononi, G. & Kötter, R. The human connectome: A structural description of the human brain. PLoS Comput. Biol. 1, e42 (2005).

Fornito, A., Zalesky, A. & Breakspear, M. The connectomics of brain disorders. Nat. Rev. Neurosci. 16, 159 (2015).

da Silva, F. L. EEG and MEG: Relevance to neuroscience. Neuron 80, 1112–1128 (2013).

Van Den Heuvel, M. P. & Pol, H. E. H. Exploring the brain network: A review on resting-state fMRI functional connectivity. Eur. Neuropsychopharmacol. 20, 519–534 (2010).

Diez, I. et al. A novel brain partition highlights the modular skeleton shared by structure and function. Sci. Rep. 5, 10532 (2015).

Honey, C. J., Kötter, R., Breakspear, M. & Sporns, O. Network structure of cerebral cortex shapes functional connectivity on multiple time scales. Proc. Natl. Acad. Sci. 104, 10240–10245 (2007).

Honey, C. et al. Predicting human resting-state functional connectivity from structural connectivity. Proc. Natl. Acad. Sci. 106, 2035–2040 (2009).

Hansen, E. C., Battaglia, D., Spiegler, A., Deco, G. & Jirsa, V. K. Functional connectivity dynamics: Modeling the switching behavior of the resting state. Neuroimage 105, 525–535 (2015).

Preti, M. G., Bolton, T. A. & Van De Ville, D. The dynamic functional connectome: State-of-the-art and perspectives. Neuroimage 160, 41–54 (2017).

Cabral, J., Kringelbach, M. L. & Deco, G. Functional connectivity dynamically evolves on multiple time-scales over a static structural connectome: Models and mechanisms. Neuroimage 160, 84–96. https://doi.org/10.1016/j.neuroimage.2017.03.045 (2017).

Berglund, N. & Gentz, B. Stochastic dynamic bifurcations and excitability. Stochastic Methods in Neuroscience 64–93 (2010).

Heitmann, S. & Breakspear, M. Putting the “dynamic’’ back into dynamic functional connectivity. Netw. Neurosci. 2, 150–174 (2018).

Orio, P. et al. Chaos versus noise as drivers of multistability in neural networks. Chaos: Interdiscip. J. Nonlinear Sci. 28, 106321 (2018).

Piccinini, J. et al. Noise-driven multistability vs deterministic chaos in phenomenological semi-empirical models of whole-brain activity. Chaos 31, 023127. https://doi.org/10.1063/5.0025543 (2021).

Xu, K., Maidana, J. P., Castro, S. & Orio, P. Synchronization transition in neuronal networks composed of chaotic or non-chaotic oscillators. Sci. Rep. 8, 8370 (2018).

Deco, G., Jirsa, V., McIntosh, A. R., Sporns, O. & Kötter, R. Key role of coupling, delay, and noise in resting brain fluctuations. Proc. Natl. Acad. Sci. 106, 10302–10307 (2009).

Deco, G. & Jirsa, V. K. Ongoing cortical activity at rest: Criticality, multistability, and ghost attractors. J. Neurosci. 32, 3366–3375 (2012).

Cabral, J. et al. Exploring mechanisms of spontaneous functional connectivity in MEG: How delayed network interactions lead to structured amplitude envelopes of band-pass filtered oscillations. Neuroimage 90, 423–435 (2014).

Batista-García-Ramó, K. & Fernández-Verdecia, C. I. What we know about the brain structure-function relationship. Behav. Sci. 8, 39 (2018).

Humphries, M. D. & Gurney, K. Network ‘small-world-ness’: A quantitative method for determining canonical network equivalence. PLoS ONE 3, e0002051 (2008).

Rubinov, M. & Sporns, O. Complex network measures of brain connectivity: Uses and interpretations. Neuroimage 52, 1059–1069 (2010).

Telesford, Q. K., Joyce, K. E., Hayasaka, S., Burdette, J. H. & Laurienti, P. J. The ubiquity of small-world networks. Brain Connect. 1, 367–375 (2011).

Watts, D. J. & Strogatz, S. H. Collective dynamics of ‘small-world’ networks. Nature 393, 440 (1998).

Achard, S. & Bullmore, E. Efficiency and cost of economical brain functional networks. PLoS Comput. Biol. 3, e17. https://doi.org/10.1371/journal.pcbi.0030017 (2007).

Abeysuriya, R. G. et al. A biophysical model of dynamic balancing of excitation and inhibition in fast oscillatory large-scale networks. PLoS Comput. Biol. 14, e1006007. https://doi.org/10.1371/journal.pcbi.1006007 (2018).

Hipp, J. F., Hawellek, D. J., Corbetta, M., Siegel, M. & Engel, A. K. Large-scale cortical correlation structure of spontaneous oscillatory activity. Nat. Neurosci. 15, 884–890 (2012).

Kelso, J. S. Multistability and metastability: Understanding dynamic coordination in the brain. Philos. Trans. R. Soc. B: Biol. Sci. 367, 906–918 (2012).

Timme, N. M. & Lapish, C. A tutorial for information theory in neuroscience. eNeuro 5 (2018).

Piccinini, J. et al. Noise-driven multistability vs deterministic chaos in phenomenological semi-empirical models of whole-brain activity. Chaos: An Interdisciplinary Journal of Nonlinear Science 31 (2021).

Hizanidis, J., Kouvaris, N. E., Zamora-López, G., Díaz-Guilera, A. & Antonopoulos, C. G. Chimera-like states in modular neural networks. Sci. Rep. 6, 19845 (2016).

Bassett, D. S. & Bullmore, E. T. Small-world brain networks revisited. Neuroscientist 23, 499–516 (2017).

Sporns, O. & Betzel, R. F. Modular brain networks. Annu. Rev. Psychol. 67, 613–640 (2016).

Meunier, D., Lambiotte, R., Fornito, A., Ersche, K. & Bullmore, E. T. Hierarchical modularity in human brain functional networks. Front. Neuroinform. 3, 571 (2009).

Tomasi, D. G., Shokri-Kojori, E. & Volkow, N. D. Brain network dynamics adhere to a power law. Front. Neurosci. 11, 72 (2017).

Wang, R. et al. Segregation, integration, and balance of large-scale resting brain networks configure different cognitive abilities. Proc. Natl. Acad. Sci. 118, e2022288118 (2021).

Bassett, D. S. & Bullmore, E. Small-world brain networks. Neuroscientist 12, 512–523 (2006).

Cohen, J. R. & D’Esposito, M. The segregation and integration of distinct brain networks and their relationship to cognition. J. Neurosci. 36, 12083–12094 (2016).

Schumacher, J. et al. Dynamic functional connectivity changes in dementia with Lewy bodies and Alzheimer's disease. NeuroImage Clin. 22, 101812 (2019).

Javaheripour, N. et al. Altered brain dynamic in major depressive disorder: State and trait features. Transl. Psychiatry 13, 261 (2023).

Wei, Y.-C. et al. Functional connectivity dynamics altered of the resting brain in subjective cognitive decline. Front. Aging Neurosci. 14, 817137 (2022).

Jauny, G. et al. Linking structural and functional changes during aging using multilayer brain network analysis. Commun. Biol. 7, 239 (2024).

Gatica, M. et al. High-order interdependencies in the aging brain. Brain Connect. 11, 734–744 (2021).

Battiston, F. et al. The physics of higher-order interactions in complex systems. Nat. Phys. 17, 1093–1098 (2021).

Santoro, A., Battiston, F., Lucas, M., Petri, G. & Amico, E. Higher-order connectomics of human brain function reveals local topological signatures of task decoding, individual identification, and behavior. Nat. Commun. 15, 10244 (2024).

Herzog, R. et al. Genuine high-order interactions in brain networks and neurodegeneration. Neurobiol. Dis. 175, 105918 (2022).

Holme, P. & Kim, B. J. Growing scale-free networks with tunable clustering. Phys. Rev. E 65, 026107. https://doi.org/10.1103/PhysRevE.65.026107 (2002).

Hagberg, A. A., Schult, D. A. & Swart, P. J. Exploring network structure, dynamics, and function using NetworkX. In Varoquaux, G., Vaught, T. & Millman, J. (eds.) Proceedings of the 7th Python in Science Conference, 11–15 (Pasadena, CA, USA, 2008).

Larivière, S. et al. The ENIGMA Toolbox: Multiscale neural contextualization of multisite neuroimaging datasets. Nat. Methods 18, 698–700. https://doi.org/10.1038/s41592-021-01186-4 (2021).

Latora, V. & Marchiori, M. Efficient behavior of small-world networks. Phys. Rev. Lett. 87, 198701 (2001).

Onnela, J.-P., Saramäki, J., Kertész, J. & Kaski, K. Intensity and coherence of motifs in weighted complex networks. Phys. Rev. E 71, 065103 (2005).

Clauset, A., Newman, M. E. & Moore, C. Finding community structure in very large networks. Phys. Rev. E 70, 066111 (2004).

Wilson, H. R. & Cowan, J. D. Excitatory and inhibitory interactions in localized populations of model neurons. Biophys. J. 12, 1–24 (1972).

Messé, A., Hütt, M.-T., König, P. & Hilgetag, C. C. A closer look at the apparent correlation of structural and functional connectivity in excitable neural networks. Sci. Rep. 5, 7870 (2015).

Lakens, D. Calculating and reporting effect sizes to facilitate cumulative science: a practical primer for t-tests and anovas. Front. Psychol. 4, 863 (2013).

Acknowledgements

J.PE. is the recipient of a PhD grant FIB-UV from UV. S.O.V. is the recipient of a Ph.D. fellowship grant from ANID 21241572 (Chile). C.C. is supported by BrainLat/NIH and GBHI funding. P.O. is funded by the Advanced Center for Electrical and Electronic Engineering (AFB240002 ANID, Chile) and Fondecyt Regular Grant 1241469 (ANID, Chile).

Author information

Contributions

General Idea: JPE and PO. Code and model creation: JPE, SOV, CCO, PO. Simulations: JPE, PO. Analysis and plotting: JPE. Writing, proofreading, and manuscript approval: JPE, SOV, CCO, JPM, PO.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Palma-Espinosa, J., Orellana-Villota, S., Coronel-Oliveros, C. et al. The balance between integration and segregation drives network dynamics maximizing multistability and metastability. Sci Rep 15, 18811 (2025). https://doi.org/10.1038/s41598-025-01612-z
