Abstract

This study aims to address the issues of accuracy and efficiency in sculpture image classification. Due to the diversity and complexity of sculpture images, traditional image processing algorithms perform poorly in capturing the sculptures’ intricate shapes and structural features, resulting in suboptimal classification and recognition performance. To overcome this challenge, this study proposes an innovative image classification method that combines the ResNet50 model from the Deep Convolutional Neural Network (DCNN) with the K-means++ clustering algorithm. ResNet50 is chosen for its powerful feature extraction capabilities and outstanding performance in image classification tasks. At the same time, K-means++ is selected for its optimized initial centroid selection strategy, which enhances the stability and reliability of clustering. After the final convolutional layer of ResNet50, a self-attention module is added. This module learns and generates an attention map, which guides the model on which areas of the image to focus on in subsequent processing. ResNet50 includes residual blocks, each containing multiple convolutional layers and a skip connection, enabling the network to learn differences between inputs and outputs rather than directly learning outputs, thus improving performance. Initially, ResNet50 extracts feature vectors from original images, which are inputted into the K-means + + algorithm for clustering. K-means + + automatically partitions these feature vectors into different categories, achieving unsupervised image classification. The CMU-MINE architectural sculpture dataset is utilized in the experimental section, with ViT-Base, EfficientNet-B4, and ConvNeXt-Tiny as benchmarks to evaluate the proposed ResNet50 + K-means + + image classification approach. The final model achieves a loss value of 0.155 and a recall of 98.9%, significantly outperforming the other three models. In conclusion, performing feature point matching during three-dimensional reconstruction is crucial. This study employs a combined image classification method using the ResNet50 and K-means + + algorithm, optimizing the accuracy issues of traditional classification methods and achieving promising classification results.

Similar content being viewed by others

Introduction

In the digital age, sculpture art creation has increasingly incorporated advanced technological methods, providing artists with richer and more diverse creative possibilities. However, despite the numerous conveniences brought by digital sculpture creation, several challenges persist in practical applications. Among these, the classification and recognition of sculpture images is a key issue. Artists often need to reference a large number of sculpture images for inspiration and creativity. Yet, due to the diversity and complexity of sculpture images, efficiently classifying and recognizing these images has become an urgent problem that needs to be addressed.

Research background and motivations

With the continuous advancement of technology, machine learning (ML) and artificial intelligence (AI) are increasingly widely used across various fields. Especially in art and design, their potential is gradually being explored and showcased. Sculpture creation is a crucial part of traditional art and its creation involves deep understanding and meticulous handling of form, material, color, and more. However, traditional methods heavily rely on artists’ experience and intuition, which limits the efficient processing and classification of large image datasets, thus restricting their application and development in the creative process1,2,3. Digital sculpture creation remarkably enhances the efficiency and precision of creation by utilizing computer technology to assist in sculptural works’ design, simulation, optimization, and manufacturing. It also provides artists with more creative possibilities and freedom. However, despite the advantages of digital sculpture creation, several challenges remain in practical applications. One key issue is the classification and recognition of sculpture images. During the creative process, artists should reference numerous sculpture images to gain inspiration and ideas. Due to the diversity and complexity of sculpture images, traditional image classification methods often struggle to accurately distinguish between different sculpture types and styles, making it difficult for artists to find inspiration and references.

Deep learning (DL) technology has demonstrated significant potential in image processing and computer vision. As a vital art branch, sculpture involves a complex creation process that heavily relies on the artist’s intuition and experience. However, in the digital age, how to leverage technological tools to assist in sculpture creation, enhancing both efficiency and quality, has become an urgent issue. Traditional image processing algorithms often struggle to effectively capture sculptures’ complex forms and structural features, resulting in poor classification and recognition performance. In recent years, the Deep Convolutional Neural Network (DCNN) has achieved marked breakthroughs in image classification4,5,6. By mimicking the neural connections in the human brain, DCNN constructs hierarchical neural network models capable of automatically learning and extracting feature information from images, thereby achieving accurate image classification7,8. The emergence of DCNN has opened new possibilities and avenues for sculpture creation. Through DCNN, efficient processing and analysis of large volumes of sculpture images can be conducted, extracting crucial features like form, material, and color, providing data support and inspiration for sculpture creation.

In sculpture image processing, various technologies have emerged, such as genetic algorithms for optimizing sculpture design parameters, specialized threshold segmentation algorithms for extracting sculpture contours, and 3D exploration techniques for constructing three-dimensional (3D) models of sculptures. However, these technologies primarily focus on specific aspects of sculpture and lack comprehensive solutions. While genetic algorithms can optimize designs to a certain extent, they struggle with capturing complex image features. Threshold segmentation algorithms have limited effectiveness in extracting contours of intricate sculptures, and 3D exploration techniques are primarily concerned with 3D modeling rather than image classification. However, relying solely on DCNN for image classification still has limitations. For one thing, DCNN requires substantial annotated data for training, which is often challenging to obtain in high quality for sculpture creation. For another, DCNN focuses only on overall image features in the classification process, neglecting similarities and differences between images, potentially resulting in less accurate classification results9,10,11. As unsupervised learning methods, clustering algorithms categorize data into diverse clusters based on similarities without requiring labeled data. Widely applied in image processing and data mining, clustering algorithms group images with similar features, aiding better understanding and analysis of image data. Moreover, clustering algorithms can swiftly identify sculpture images with similar forms, materials, and colors in sculpture creation, offering artists more creative inspiration and references12.

Although the SIFT algorithm finds widespread application in 3D reconstruction and feature point matching domains, this study focuses on two-dimensional (2D) image classification, particularly for sculptural artworks. The decision to exclude 3D information stems from the research objective to evaluate DL methods’ capacity for handling complex visual patterns rather than exploring 3D data applications. Consequently, while SIFT offers robust feature extraction capabilities, it remains excluded from the primary classification pipeline in this investigation. Understanding its operational principles proves essential for comprehending how different feature extraction techniques influence final model performance. Against this backdrop, this study proposes a sculpture image classification method that combines DCNN and clustering algorithms. It aims to provide a new classification approach for digital creation in sculpture art, promoting innovation in the age of AI. The specific objectives of this study are as follows. First, the self-attention module is introduced to enhance the attention mechanism of sculpture image feature extraction in ResNet50, thus improving the accuracy of feature point matching; Second, the K-means++ algorithm is utilized to cluster the feature vectors extracted by ResNet50, achieving unsupervised image classification and reducing the cost of manual labeling. DCNN is used for feature extraction and initial classification of many sculpture images. Then, the clustering algorithm refines and optimizes these classifications, resulting in more accurate and reliable image classification outcomes. The methodological innovation of this study lies in its proposed ResNet50-K-means++ integration, which simultaneously harnesses DCNN’s powerful feature extraction capacity while optimizing feature representation through clustering algorithms. This combined approach demonstrates enhanced effectiveness in processing complex textural and shape information characteristic of sculptural imagery.

Research objectives

This study explores an image classification method that combines DCNN with clustering algorithms. This method achieves accurate classification and recognition of sculpture images by leveraging DL algorithms’ powerful feature extraction and learning capabilities and integrating clustering algorithms for unsupervised classification of feature vectors. This offers artists more creative inspiration and references, thus promoting innovation and development in sculpture creation. This study innovatively designs a self-attention module and integrates it into the final convolutional layer of ResNet50. The core contribution of this module lies in its ability to dynamically adjust the weights of different regions of the image, enabling the model to focus on features that are crucial to classification decisions. Section "Research background and motivations" begins with a description of the study’s background, objectives, and necessity. Section "Literature review" summarizes the current state of research in image classification. Section "Research methodology" expounds on the application of image classification in current sculpture creation and proposes an image classification algorithm that combines DCNN and clustering algorithms to improve the accuracy of feature point matching in sculpture images. Section "Experimental design and performance evaluation" details the experimental design to validate the proposed image classification algorithm’s feasibility in practical applications. Section "Conclusion" concludes with a summary of the research contributions, limitations, and future research directions.

Literature review

Image classification, a foundational task in computer vision, has made significant progress. However, sculpture image classification, as a specialized area within image classification, presents unique challenges. Sculptures encompass rich 2D image information and complex features such as shapes, structures, and materials in 3D space. Therefore, sculpture image classification requires more refined feature extraction and classification methods compared to general image classification tasks.

Traditional image processing methods for sculpture image classification primarily involve two steps: feature extraction and classifier design. In terms of feature extraction, commonly used methods encompass edge detection, texture analysis, and shape recognition, which can extract distinguishing features from images. Regarding classifier design, frequently used ML algorithms include support vector machines, decision trees, and random forests, which can classify images based on the extracted features. Image classification is a core task in computer vision. In recent years, image classification has made significant progress due to the development of DL, especially the convolutional neural network (CNN). In sculpture image classification, various CNN-based algorithms have been proposed. Classic models such as AlexNet, VGGNet, and ResNet have performed well in sculpture image classification tasks. These models learn more complex and deep feature representations by stacking multiple convolutional, pooling, and fully connected (FC) layers. Some researchers have also proposed specific models for sculpture image classification, such as three-dimensional convolutional neural networks (3D-CNN) and attention mechanism-based CNN models, to enhance classification performance13. AlexNet, a milestone in DL, achieved breakthrough results in the 2012 ImageNet challenge. The network architecture includes 8 layers, comprising 5 convolutional layers and 3 FC layers14. AlexNet’s success demonstrates the immense potential of CNNs in image classification tasks, laying the foundation for subsequent research.

Table 1 summarizes the research advancements in the field of sculpture image classification. Under the impetus of DL, both image classification and 3D reconstruction technologies have made considerable progress. By integrating the DCNN with clustering algorithms, further improvements in image classification can be achieved, providing more intelligent technical support for sculpture creation. Nawaz et al.6 proposed the MAGE framework to unify image representation learning and image synthesis tasks. The approach was based on the representation learning method using semantic masks of images, achieving image generation and representation learning within a unified framework. Sujithra et al.15 introduced a self-supervised learning-based image classification technique, which pre-trained using unlabeled image data to learn universal feature representations of images. Fine-tuning on limited labeled data then enabled efficient image classification. In sculpture image classification, Zhao et al.16 noted that ResNet50 addressed the gradient vanishing problem in deep networks by introducing residual blocks. It achieved excellent performance on the ImageNet dataset and became a benchmark model for many computer vision tasks. Xu et al.17 pointed out that the Inception v3 model enhanced the model’s expressive power by using convolution kernels of different sizes in parallel within each convolutional layer to capture multi-scale information. Meanwhile, Inception v3 demonstrated outstanding performance on the ImageNet dataset, especially when computational resources are limited. Taheri et al.18 extracted handcrafted features including color and texture from semantic pyramids of deep neural networks (DNNs), fused feature maps across different DNN layers. Their methodology additionally considered feature vector interpretability.

Current image classification techniques primarily concentrate on image categorization and recognition. While existing research has made some progress in sculpture image classification, several shortcomings still exist. Most studies focus on extracting 2D image features and lack sufficient attention to the 3D spatial characteristics of sculptures. Furthermore, existing 3D reconstruction techniques mainly rely on multi-view or depth images, making direct application to sculpture image classification challenging. This study aims to address these gaps by integrating DCNN and clustering algorithms. The goal is to learn and extract 3D structural features from a large dataset of sculpture images, enabling more accurate image classification and recognition. This approach seeks to fill the current research gaps in sculpture image analysis.

Research methodology

Image classification and 3D reconstruction techniques applied in sculpture creation

Sculpture art has always held a significant position throughout history, from classical sculptures in ancient Greece to modern abstract art. Sculptors continuously explore new materials and techniques to express their creative ideas. With the advancement of technology, computer vision techniques, especially image classification and 3D reconstruction, have brought new possibilities to sculpture creation19,20. Table 2 illustrates examples of the application of image classification and 3D reconstruction techniques in sculpture creation.

Using image classification technology can categorize abundance sculptures by their artistic styles. A database encompassing various styles can be created by training a CNN on sculptures of different styles. When artists input their sculptures, the system can automatically analyze the stylistic features and recommend similar works for reference. Selecting the appropriate materials is crucial in sculpture creation. Image classification technology can identify and categorize different materials. Using pre-trained models like ResNet or DenseNet, various sculpture materials (such as marble, bronze, wood, and clay) can be classified, helping artists quickly identify and choose the best materials.

High-precision digital archiving of existing sculptures can be achieved through 3D reconstruction technology. Based on DL methods such as PointNet++ or Neural Radiance Fields (NeRFs), 2D images can be converted into high-precision 3D models, allowing for comprehensive display and preservation of sculpture works21,22. Multi-view image capture devices can obtain images of sculptures from multiple angles. DL models process these images for 3D reconstruction, generating high-precision 3D models.

Feature point matching method for 3D reconstruction of sculptures

3D reconstruction involves converting information from 2D images or image sequences into a 3D model using certain technical methods. In the 3D reconstruction of sculptures, multiple-view images are typically used as input data. The process includes steps such as feature point matching, camera calibration, and 3D point cloud generation, ultimately leading to the creation of a 3D model of the sculpture. Feature point matching is one of the critical steps in this process. It establishes correspondences between various views, providing the foundational data for subsequent 3D reconstruction23,24,25.

Feature point extraction and matching are fundamental to 3D reconstruction. An effective feature-matching method should be highly robust and accurate, ensuring consistency across different viewpoints, scales, and lighting conditions. Constructing a scale space is crucial for detecting feature points at various scales. The Euclidean distance measures the distance between two points in an n-dimensional space. In feature point matching, the Euclidean distance between two feature point descriptors can be calculated, with smaller distances indicating greater similarity between the feature points26,27,28. This method is well-suited for Scale Invariant Feature Transformation (SIFT) feature points, as their descriptors usually have high dimensionality and rich information.

SIFT generates the scale space through Gaussian blurring and the Difference of Gaussians (DoG). Gaussian blurring involves applying Gaussian blur to the image at different scales, resulting in images at various levels of blurriness:

L represents the blurred image; G is the Gaussian kernel function; \(\sigma\) refers to the scale parameter; σ denotes the original image.

The purpose of the DoG is to compute the DoG images at adjacent scales:

k refers to the scale factor.

Figure 1 presents the SIFT implementation framework.

Key components of the SIFT algorithm are as follows29,30,31. (1) The scale-space construction: It includes creating a series of images at different scales by applying Gaussian blur and downsampling operations to the original image. (2) Extremum detection: It is performed to identify key points by finding local extrema in the scale-space images, which typically represent prominent features in the image. (3) Keypoint localization: For each detected extremum, keypoint localization further refines its precise location and scale by analyzing the gradient directions in the surrounding area. (4) Orientation assignment: This is done by assigning a primary orientation to each key point to ensure rotation invariance, achieved by calculating a histogram of gradient directions in the key point’s surrounding area. (5) Feature description: It involves constructing feature descriptors by computing the gradient magnitudes and directions in the local region around each key point.

Image classification based on DCNN and clustering algorithms

CNN is a type of DL model that has achieved remarkable success in computer vision in recent years. CNN automatically extracts multi-level features from images through a hierarchical structure, significantly improving image classification accuracy. A typical CNN structure includes convolutional, pooling, FC, and output layers32,33,34.

The convolutional layer is the core component of CNN, extracting local features from images. The convolution operation slides convolutional kernels over the image to generate feature maps. The parameters of the convolutional kernels are updated during training through the backpropagation algorithm. The equation for the convolutional layer can be expressed as:

W represents the convolution kernel; x denotes the input image; b is the bias; f refers to the activation function.

The pooling layer is adopted to downsample the feature maps, reducing computational load and model complexity while enhancing the translational invariance of features. A commonly used pooling method is max-pooling:

x represents the pixel values within the pooling window.

The FC layer maps high-level features to the classification space, achieving the final image classification. This layer uses a weight matrix to map the input features to the output classes. The output layer typically uses the Softmax function to convert the FC layer’s output into a probability distribution for multi-class classification:

z represents the output of the FC layer; K denotes the number of classes.

Classic CNN architectures include LeNet-5, AlexNet, VGGNet, GoogLeNet, ResNet, and others. ResNet introduces residual blocks, enabling the network to learn the differences between input and output rather than directly learning the output. This approach effectively mitigates the issues of vanishing or exploding gradients during deep network training35,36,37. ResNet50, a prominent model in the ResNet series, has gained wide recognition and application in DL due to its exceptional performance.

This study innovatively designs a self-attention module and integrates it into the final convolutional layer of ResNet50. The self-attention module learns and generates an attention map to visually indicate key areas in the image. The module receives the feature map of the final convolutional layer of ResNet50 as input, and generates an attention map of the same size as the input through convolution, activation, and other transformations. Each element value in the figure represents the importance weight of the corresponding region, with larger values indicating that the region is more critical for classification. In the subsequent processing, the module weights the original feature map based on the attention map, dynamically adjusts the region weights, and focuses the model on areas with rich sculptural shapes and structural features, ignoring background or noise. ResNet50 is constructed by stacking multiple residual blocks, each containing several convolutional layers and a skip connection. ResNet50 extracts low-level details (such as edges and textures) and high-level semantic features through residual blocks. The self-attention module weights and fuses multi-level feature maps, which not only enhances the model’s perception of sculpture texture details, but also improves its understanding of the overall structure, thereby improving classification accuracy. This structure allows the network to learn the differences between input and output, rather than focusing solely on learning the output directly. This approach significantly enhances the model’s performance38,39,40. Figure 2 depicts the structure of a residual network module.

Each layer of ResNet50 is based on a 3 × 3 convolutional kernel with a stride of 1 and uses the Rectified Linear Unit (ReLU) as the activation function. At the beginning of the model is a 7 × 7 convolutional kernel with a stride of 2, designed to reduce the resolution of the input images. At the end of the model are global average pooling and FC layers, which are used for final classification.

ResNet50 has demonstrated outstanding performance in computer vision tasks and is extensively used in areas such as image recognition, object detection, and image segmentation. Due to its exceptional performance and versatility, ResNet50 has become a foundational model in the DL field, serving as the starting point for many researchers and developers in building more complex models41,42,43.

This study employs a pre-trained ResNet50 model to extract feature vectors from sculpture images. After the final convolutional layer of ResNet50, a self-attention module is added. This module learns and generates an attention map, guiding the model on which areas of the image to focus on during subsequent processing. Specifically, the original image is input into the ResNet50 network, and the output from the final FC layer (before the classification layer) is extracted as the feature vector. This feature vector contains rich image information and reflects the image’s feature representation at multiple scales. Introducing the self-attention module allows the model to focus on key regions of the image and dynamically adjust the weights of these regions during processing.

The architecture of ResNet50 is as follows. (1) The input layer accepts sculpture images as input. (2) The first convolutional layer employs a 7 × 7 convolutional kernel with a stride of 2 to reduce the resolution of the input image. (3) The residual block layer encompasses multiple stacked residual blocks, each containing 3 × 3 convolutional layers and skip connections for feature fusion. (4) The FC layer maps the high-level features to the classification space, achieving the final image classification.

After obtaining the feature vectors, the K-means++ algorithm is used for clustering. K-means++ is an improved version of the K-means algorithm that enhances clustering effectiveness by optimizing the selection of initial centroids. Specifically, in the initial phase, the K-means++ algorithm randomly selects one sample point as the first centroid. For each remaining sample point, it selects the next centroid based on the distance to the already chosen centroids. This ensures that the initial centroids are more evenly distributed in the space, thereby improving the stability and accuracy of the clustering process.

K-means++ is an improved version of the K-means clustering algorithm that enhances the stability and reliability of clustering by optimizing the selection of initial centroids. Compared to the traditional K-means algorithm, K-means + + more effectively avoids local optima, thus improving clustering accuracy. Specifically, it first randomly selects a data point as the first centroid. Then, for each remaining data point, it calculates the square of the shortest distance to the already selected centroids and chooses the next centroid based on this squared distance44,45,46. K-means + + ensures that initial centroids are more evenly distributed in the data space, thereby enhancing the effectiveness of clustering47.

First, feature vectors are extracted from original images using ResNet50. These feature vectors are clustered based on the K-means + + algorithm. By leveraging ResNet50’s powerful feature extraction capabilities, this approach transforms raw images into informative feature representations, thereby enhancing clustering accuracy. The K-means + + algorithm automatically assigns these feature vectors to different clusters, enabling unsupervised image classification.

During the training of ResNet50, the K-means++ algorithm can cluster unlabeled image data first, generating corresponding pseudo-labels. Subsequently, these pseudo-labeled image data can be used for training ResNet50. This method helps mitigate the dependency of ResNet50 on a large amount of labeled data to some extent, thereby improving the model’s generalization ability.

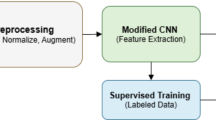

The application process of the ResNet50 and K-means++ fusion model is as follows. First, the sculpture image is input into the ResNet50 network, and the output from the final FC layer (just before the classification layer) is extracted as the feature vector. Second, a self-attention module is added after the final convolutional layer of ResNet50. This module learns and generates an attention map, guiding the model to focus on specific image areas during subsequent processing. After obtaining the feature vector, the K-means + + algorithm is applied for clustering. The clustering begins by randomly selecting a data point as the first centroid. Then, for each remaining data point, the square of its shortest distance to the selected centroids is calculated, and the next centroid is chosen based on this distance. This process is repeated until all centroids are selected. Finally, each data point is assigned to the nearest cluster based on its distance from the centroids. The hierarchical structure of the ResNet50 and K-means + + fusion model is illustrated in Fig. 3. Beyond employing ResNet50 and K-means + + algorithms, this study incorporates a self-attention mechanism to strengthen the model’s capacity for capturing long-range dependencies. This mechanism operates by reweighting elements in input feature maps to emphasize classification-relevant information. Within the attention-based encoder-decoder framework, the encoder transforms input sequences into fixed-length context vectors, while the decoder generates new output sequences based on these vectors and preceding outputs. Distinct from conventional encoder-decoder architectures, the attention mechanism enables dynamic examination of all input sequence elements during output generation, with variable focus allocation according to computed attention weights.

Experimental design and performance evaluation

Datasets collection

This study selects the CMU-MINE architectural sculpture dataset as its research subject, derived from the widely available CMU-Oxford Sculpture architectural sculpture dataset. The dataset comprises 143,000 sculpture images spanning 2197 classes from 242 artists, with each image having a resolution of 640 × 640 pixels. Here, 145 classes with a significant amount of image data are chosen.

For comparison, the Microsoft Global Building Footprints (MGBF) dataset is used alongside the CMU-MINE dataset to evaluate the effectiveness of the proposed method. The MGBF dataset comprises 4235 building footprint images characterized by high-resolution (0.5 m/pixel) and complex silhouette features that exhibit visual similarities to sculptural textures. It utilizes high-resolution satellite imagery from 2014 to 2023 sourced from Maxar, Airbus, and IGN France. This dataset covers extensive global regions including South America, Africa, parts of Europe, Asia, the US, and Australia, encompassing 1.24 billion detected buildings with estimated heights of 174 million buildings. The main files are in GeoJSON format, storing polygonal corner coordinates of building outlines, and are available for download as ZIP files. Regarding dataset selection, the MGBF dataset is specifically constructed for building footprint classification. This study demonstrates its applicability to sculptural classification tasks, as both domains involve identifying and categorizing specific objects with distinctive visual patterns.

The dataset is partitioned into training, validation, and test sets in a ratio of 7:2:1.

Experimental environment

The computer configuration used for the experiment includes a 256 GigaByte (GB) hard drive, 16GB of random access memory, and an Intel Core i7-7700 K processor with an 8 Mega Byte (MB) cache and a maximum frequency of 4.50 GHz. The graphics processing unit (GPU) used is the GeForce GTX 1080 with 8GB of dedicated memory. The experiments are conducted on the Ubuntu 16.04 operating system, using the DL framework PyTorch 0.4.0.

Parameters setting

The ResNet50 network is configured with a batch size of 32. The initial learning rate is set to 0.001 and decayed by a factor of 0.1 every 10 epochs. The model employs the Adam optimizer with parameters beta1 = 0.9, beta2 = 0.999, and epsilon = 1e-8. The training process consists of 100 epochs.

Performance evaluation

Experimental results of image feature point matching

Figures 4 and 5 illustrate the original images and the feature point-matching experiment’s results. It can be observed that the objects of interest in the images (such as buildings and sculptures) vary in position, size, and orientation between the two images. This necessitates algorithms that accurately detect feature points across diverse scales and rotation angles. The SIFT algorithm successfully detects numerous feature points in both images and establishes correspondences between these points through matching algorithms. These matched feature points are visualized in the figures with lines connecting them, clearly demonstrating their corresponding relationships.

Training results and application analysis of ResNet50+ K-means++

In selecting clustering methods, this study considers several commonly used clustering algorithms, including K-means, K-means++, and DBSCAN. These algorithms have advantages and disadvantages, making them suitable for different data distributions and feature spaces. The K-means, K-means++, and DBSCAN algorithms cluster the feature vectors extracted by ResNet50. The results show that on the CIFAR-10 dataset, the clustering accuracy for K-means, K-means++, and DBSCAN reaches 89.7%, 91.5%, and 87.2%, respectively.

In unsupervised learning, the absence of ground truth labels necessitates an alternative approach. To address this, K-means ++ is initially applied to cluster the data, after which pseudo-labels are assigned to each cluster. While these pseudo-labels are not true labels, they can serve as a basis for the model’s output and evaluation. After generating the pseudo-labels, these labels train a supervised classification model, ResNet50. The trained model is then employed to predict the cluster labels for the test data.

In the context of the supervised model, recall is defined as the number of true positives (TP) and false negatives (FN). In the unsupervised setting, the clustering results and pseudo-labels estimate TP and FN. Specifically, samples correctly clustered are considered TP, while misclassified samples are considered FN.

This study adopts ViT-Base, EfficientNet-B4, and ConvNeXt-Tiny as benchmarks to evaluate the image classification performance of the proposed ResNet50 + K-means + + approach. The compared models, ViT-Base, EfficientNet-B4, and ConvNeXt-Tiny, have 8, 10, and 19 layers, respectively. Figure 6; Table 3 display the training results of different models on the validation and test sets. It indicates that the proposed model achieves a final loss value of 0.155 and a recall of 98.9%, remarkably exceeding the other three models. An experiment using vanilla ResNet50 is conducted to validate the baseline performance of ResNet50 in image classification tasks. While vanilla ResNet50 can extract certain features, its performance does not meet expectations when applied to the more complex sculpture image classification task. Specifically, when handling sculptures with similar forms, materials, and colors, the vanilla ResNet50 struggles with confusion, decreasing classification accuracy. This result further underscores the necessity of the ResNet50 + K-means + + approach proposed in this study.

Figure 7 shows the proposed method’s application results on a dataset containing a mixture of three types of images. It reveals that employing the ResNet50 + K-means++ image classification method achieves superior clustering of feature vectors representing mixed images of sculptures and buildings, demonstrating ideal classification effectiveness.

Experiments are conducted on the MGBF dataset to validate the performance of the proposed method. Figure 8 presents the results. The performance comparison of different models and the inference speed comparison of the machine are shown in Table 3. It suggests that the proposed ResNet50 + K-means + + model performs better than other comparative models on a subset of the MGBF dataset. The proposed model achieves an accuracy of 96.3%, a recall of 95.8%, and an F1 score of 96.0%. Although K-means + + is primarily used for feature analysis or preprocessing in this experiment, it likely contributes to better feature extraction and representation of building images, thus enhancing classification performance. Moreover, the powerful feature extraction capability of ResNet50 is also a key factor in achieving high accuracy.

Table 4 presents the ablation experiment results, quantifying the individual contributions of each component. The self-attention module and K-means + + algorithm yield performance improvements of 3.2% and 4.7%, respectively, confirming their essential roles in the model architecture. The complete model achieves 92.3% accuracy, exceeding the linear sum of individual contributions (92.1%) by 0.2%, indicating synergistic effects between components.

Discussion

This study proposes a method that combines DCNNs, specifically ResNet50, with the K-means + + clustering algorithm, incorporating a self-attention module after the final convolutional layer of ResNet50. The goal is to enhance the accuracy of feature point matching in sculpture images. The proposed method first employs ResNet50 to extract feature vectors from original images, followed by K-means++ clustering, enabling unsupervised image classification. Sculpture image classification can be analyzed from multiple dimensions, including color, texture, line features (curves or linear), and environmental background. Unsupervised models may classify images using a combination of these dimensions. Texture features can be captured through local variations in the image, color features can be represented by the distribution of pixel intensities, and line features can be identified by edge direction and length. In unsupervised learning, the importance of features is not directly guided by labels but is instead learned automatically by the algorithm. For instance, DL models such as autoencoders can autonomously learn effective data representations, which may encompass the various dimensional features mentioned above. The ResNet50 model extracts multidimensional features, including color, texture, and shape, through its deep convolutional network. The K-means++ clustering algorithm then performs classification based on these features.

Nguyen et al.48 demonstrated that VGG19 exhibited excellent performance on large-scale datasets like ImageNet. However, its deeper network structure led to high computational costs and overfitting issues when handling specific domain images. Li et al.49 pointed out that although AlexNet performed well on some tasks, its feature extraction capability and classification accuracy were limited when processing sculpture images. Based on these findings, the combined approach of ResNet50 + K-means + + outperforms other models in classification accuracy and recall. This is primarily attributed to ResNet50’s residual block design, which enables efficient learning of complex image features, even in deep networks. However, while this method performs well on specific datasets, its cross-domain adaptability still requires further validation. Given that different image domains exhibit distinct characteristics and distribution patterns, the proposed method may need appropriate modifications and optimizations to ensure robust performance across various datasets.

Conclusion

Research contribution

This study leverages the powerful feature extraction capabilities of DCNNs, the efficient classification ability of clustering algorithms, and the integration of the self-attention mechanism. This combination successfully improves the feature point matching and classification accuracy of sculpture images, offering new insights and methods for future research in sculpture creation and image classification. The proposed method effectively addresses the multi-classification problem by combining DCNN and clustering algorithms. ResNet50 is used to extract high-level features from the images, while K-means + + is employed to cluster the feature vectors, thereby improving classification accuracy.

Future works and research limitations

While this study has achieved notable advancements in sculpture image classification, several aspects remain open for further improvement and exploration. While the proposed method reduces computational requirements compared to state-of-the-art models, further optimization remains necessary to enhance efficiency when processing large-scale datasets. It would be beneficial to explore the integration of other advanced DL models and clustering algorithms, such as Transformer models and DBSCAN, thus enhancing classification performance and efficiency. Additionally, incorporating multimodal data by integrating sculpture image data with other information like textual descriptions and 3D models could potentially improve the accuracy and robustness of image classification and feature point matching.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author Xuhui Wang on reasonable request via e-mail wxhtx131421@163.com.

References

Mehdiyev, N. et al. Deep learning-based clustering of processes and their visual exploration: an industry 4.0 use case for small, medium‐sized enterprises. Expert Syst. 41 (2), e13139 (2024).

Kaur, T. & Gandhi, T. K. Deep convolutional neural networks with transfer learning for automated brain image classification. Mach. Vis. Appl. 31 (3), 20 (2020).

Wang, H. et al. An enhanced intelligent diagnosis method based on multi-sensor image fusion via improved deep learning network. IEEE Trans. Instrum. Meas. 69 (6), 2648–2657 (2019).

Chen, C. et al. This looks like that: deep learning for interpretable image recognition. Adv Neural Inf. Process. Syst, 32, pp.100-115, (2019).

Bansal, M. et al. Transfer learning for image classification using VGG19: Caltech-101 image data set. J Ambient Intell. Humaniz. Comput, 1–12 (2023).

Nawaz, M. et al. Skin cancer detection from dermoscopic images using deep learning and fuzzy k-means clustering. Microsc Res. Tech. 85 (1), 339–351 (2022).

Wu, F. et al. Detection and counting of banana bunches by integrating deep learning and classic image-processing algorithms. Comput. Electron. Agric. 209, 107827 (2023).

Khan, A. R. et al. Brain tumor segmentation using K-means clustering and deep learning with synthetic data augmentation for classification. Microsc Res. Tech. 84 (7), 1389–1399 (2021).

Bhatt, C. et al. The state of the Art of deep learning models in medical science and their challenges. Multimed Syst. 27 (4), 599–613 (2021).

Suganyadevi, S., Seethalakshmi, V. & Balasamy, K. A review on deep learning in medical image analysis. Int. J. Multimed Inf. Retr. 11 (1), 19–38 (2022).

Picon, A. et al. Deep convolutional neural networks for mobile capture device-based crop disease classification in the wild. Comput. Electron. Agric. 161, 280–290 (2019).

Yang, Y. et al. Two-level attentions and grouping attention convolutional network for fine-grained image classification, Appl. Sci. 9(9), 1939 (2019).

Xie, J., Liu, R. & Luttrell, J. Deep learning based analysis of histopathological images of breast cancer. Front. Genet. 10, 80 (2019).

Pitchai, R. et al. Brain tumor segmentation using deep learning and fuzzy K-means clustering for magnetic resonance images. Neural Process. Lett. 53 (4), 2519–2532 (2021).

Sujithra, B. S. & Albert Jerome, S. Adaptive cluster-based superpixel segmentation and BMWMMBO-based DCNN classification for glaucoma detection. Signal. Image Video Process. 18 (1), 465–474 (2024).

Zhao, X. et al. A review of convolutional neural networks in computer vision. Artif. Intell. Rev. 57 (4), 99 (2024).

Xu, C. et al. A deep image classification model based on prior feature knowledge embedding and application in medical diagnosis. Sci. Res. 14 (1), 13244 (2024).

Taheri, F., Rahbar, K. & Beheshtifard., Z. Content-based image retrieval using handcraft feature fusion in semantic pyramid. Int. J. Multimed Inf. R. 12 (2), 21 (2023).

Wei, X. et al. Railway track fastener defect detection based on image processing and deep learning techniques: A comparative study. Eng. Appl. Artif. Intell. 80, 66–81 (2019).

Luo, Q. et al. Temporal and Spatial deep learning network for infrared thermal defect detection. NDT E Int. 108, 102164 (2019).

Lu, W. et al. Soybean yield preharvest prediction based on bean pods and leaves image recognition using deep learning neural network combined with GRNN. Front. Plant. Sci. 12, 791256 (2022).

Jia, S. et al. A survey: deep learning for hyperspectral image classification with few labeled samples. Neurocomputing 448, 179–204 (2021).

Khamparia, A. et al. Seasonal crops disease prediction and classification using deep convolutional encoder network. Circ. Syst. Signal. Process. 39, 818–836 (2020).

Ma, A. et al. SceneNet: remote sensing scene classification deep learning network using multi-objective neural evolution architecture search. ISPRS J. Photogramm Remote Sens. 172, 171–188 (2021).

Krentzel, D., Shorte, S. L. & Zimmer, C. Deep learning in image-based phenotypic drug discovery. Trends Cell. Biol. 33 (7), 538–554 (2023).

Ganaie, M. A. et al. Ensemble deep learning: A review. Eng. Appl. Artif. Intell. 115, 105151 (2022).

Saleem, M. H., Potgieter, J. & Arif, K. M. Automation in agriculture by machine and deep learning techniques: A review of recent developments. Precis Agric. 22 (6), 2053–2091 (2021).

Li, Y., Wu, J. & Wu, Q. Classification of breast cancer histology images using multi-size and discriminative patches based on deep learning. IEEE Access. 7, 21400–21408 (2019).

Junior, F. E. F. & Yen, G. G. Particle swarm optimization of deep neural networks architectures for image classification. Swarm Evol. Comput. 49, 62–74 (2019).

Dong, S., Wang, P. & Abbas, K. A survey on deep learning and its applications. Comput. Sci. Rev. 40, 100379 (2021).

Hameed, Z. et al. Breast cancer histopathology image classification using an ensemble of deep learning models. Sensors 20 (16), 4373 (2020).

Kwon, D. et al. A survey of deep learning-based network anomaly detection. Clust Comput. 22, 949–961 (2019).

Haridasan, A., Thomas, J. & Raj, E. D. Deep learning system for paddy plant disease detection and classification. Environ. Monit. Assess. 195 (1), 120 (2023).

Hassanzadeh, T., Essam, D. & Sarker, R. EvoDCNN: an evolutionary deep convolutional neural network for image classification. Neurocomputing 488, 271–283 (2022).

Shajin, F. H. et al. Efficient framework for brain tumour classification using hierarchical deep learning neural network classifier. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 11 (3), 750–757 (2023).

Samad, M. D., Abrar, S. & Diawara, N. Missing value Estimation using clustering and deep learning within multiple imputation framework. Knowl. -Based Syst. 249, 108968 (2022).

Rajendran, A., Balakrishnan, N. & Ajay, P. Deep embedded median clustering for routing misbehaviour and attacks detection in ad-hoc networks. Ad Hoc Netw. 126, 102757 (2022).

Puzyrev, V. & Elders, C. Unsupervised seismic facies classification using deep convolutional autoencoder. Geophysics, 87(4), IM125–IM132 (2022).

Borowiec, M. L. et al. Deep learning as a tool for ecology and evolution. Methods Ecol. Evol. 13 (8), 1640–1660 (2022).

Upadhyay, S. K. & Kumar, A. A novel approach for rice plant diseases classification with deep convolutional neural network. Int. J. Inf. Technol. 14 (1), 185–199 (2022).

Yan, W. et al. Deep learning in neuroimaging: promises and challenges. IEEE Signal. Process. Mag. 39 (2), 87–98 (2022).

Chen, Z. et al. Learnable graph convolutional network and feature fusion for multi-view learning. Inf. Fusion. 95, 109–119 (2023).

Atwany, M. Z., Sahyoun, A. H. & Yaqub, M. Deep learning techniques for diabetic retinopathy classification: A survey. IEEE Access. 10, 28642–28655 (2022).

Vankdothu, R. & Hameed, M. A. Brain tumor MRI images identification and classification based on the recurrent convolutional neural network. Meas. Sens. 24, 100412 (2022).

Vasavi, P., Punitha, A. & Rao, T. V. N. Crop leaf disease detection and classification using machine learning and deep learning algorithms by visual symptoms: A review, Int. J. Electr. Comput. Eng. 12(2), 2079 (2022).

Abd Algani, Y. M. et al. Leaf disease identification and classification using optimized deep learning. Meas. Sens. 25, 100643 (2023).

Albalawi, U., Manimurugan, S. & Varatharajan, R. Classification of breast cancer mammogram images using Convolution neural network. Concurr Comput. Pract. Exp. 34 (13), e5803 (2022).

Nguyen, T. H., Nguyen, T. N. & Ngo, B. V. A VGG-19 model with transfer learning and image segmentation for classification of tomato leaf disease. AgriEng 4 (4), 871–887 (2022).

Li, J. et al. DARC: deep adaptive regularized clustering for histopathological image classification. Med. Image Anal. 80, 102521 (2022).

Funding

This research received no external funding.

Author information

Authors and Affiliations

Contributions

Xuhui Wang: Conceptualization, methodology, software, validation, formal analysis, investigation, resources, data curation, writing—original draft preparation, writing—review and editing, visualization, supervision, project administration, funding acquisition.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval

This article does not contain any studies with human participants or animals performed by any of the authors. All methods were performed in accordance with relevant guidelines and regulations.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, X. The analysis of sculpture image classification in utilization of 3D reconstruction under K-means++. Sci Rep 15, 18127 (2025). https://doi.org/10.1038/s41598-025-01949-5

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-01949-5