Abstract

Weed detection and classification using computer vision and deep learning techniques have emerged as crucial tools for precision agriculture, offering automated solutions for sustainable farming practices. This study presents a comprehensive approach to weed identification across multiple growth stages, addressing the challenges of detecting and classifying diverse weed species throughout their developmental cycles. We introduce two extensive datasets: the Alpha Weed Dataset (AWD) with 203,567 images and the Beta Weed Dataset (BWD) with 120,341 images, collectively documenting 16 prevalent weed species across 11 growth stages. The datasets were preprocessed using both traditional computer vision techniques and the advanced SAM-2 model, ensuring high-quality annotations with segmentation masks and precise bounding boxes. Our research evaluates several state-of-the-art object detection architectures, including DINO Transformer (with ResNet-101 and Swin backbones), Detection Transformer (DETR), EfficientNet B4, YOLO v8, and RetinaNet. Additionally, we propose a novel WeedSwin Transformer architecture specifically designed to address the unique challenges of weed detection, such as complex morphological variations and overlapping vegetation patterns. Through rigorous experimentation, WeedSwin demonstrated superior performance, achieving 0.993 ± 0.004 mAP and 0.985 mAR while maintaining practical processing speeds of 218.27 FPS, outperforming existing architectures across various metrics. The comprehensive evaluation across different growth stages reveals the robustness of our approach, particularly in detecting challenging “driver weeds” that significantly impact agricultural productivity. By providing accurate, automated weed identification capabilities, this research establishes a foundation for more efficient and environmentally sustainable weed management practices. 
The demonstrated success of the WeedSwin architecture, combined with our extensive temporal datasets, represents a significant advancement in agricultural computer vision, supporting the evolution of precision farming techniques while promoting reduced herbicide usage and improved crop management efficiency.


Introduction

Agriculture has served as the cornerstone of human civilization throughout history, playing a fundamental role in the sustenance and advancement of our species. In the modern era, successful agricultural practices extend far beyond traditional farming methods, increasingly relying on sophisticated precision agriculture techniques, particularly Site-Specific Farm Management (SSFM)1. This advanced approach requires accurate and timely identification, spatial mapping, and quantitative assessment of both crops and weeds within agricultural landscapes2. The challenge of weed management has become increasingly critical in modern agriculture, particularly in the diverse agricultural regions of the United States3. The country’s unique combination of climate variations and fertile soil conditions, while ideal for crop cultivation, simultaneously creates optimal conditions for the proliferation of numerous weed species. These unwanted plants pose a significant threat to crop yields by competing for essential resources, including water, nutrients, and sunlight4. The impact of weed invasion extends beyond immediate crop competition, affecting agricultural productivity, economic stability, and ecosystem balance.

Traditional weed control methodologies, primarily dependent on broad-spectrum herbicides or manual removal techniques, have shown increasing limitations5,6. These conventional approaches raise environmental concerns due to chemical runoff, face challenges with herbicide-resistant weed populations, and often prove economically inefficient due to high labor costs and resource utilization. Furthermore, these methods can potentially harm beneficial organisms and impact long-term soil health7. The emergence of advanced technologies in computer vision and deep learning has opened new avenues for addressing these agricultural challenges. Modern object detection and classification techniques, when applied to weed identification, offer promising solutions for real-time, automated weed management systems8. However, significant research gaps persist in this domain, particularly in addressing the temporal dynamics of weed development. Existing studies predominantly rely on limited datasets or images captured at specific growth stages, failing to represent the dynamic nature of weed development throughout their lifecycle9,10. This limitation is particularly concerning as understanding and accurately identifying weed growth stages is crucial for several reasons. First, the effectiveness of herbicide applications varies significantly depending on the weed’s growth stage, with early growth stages typically being more susceptible to control measures11. Second, different growth stages present distinct morphological features that affect detection accuracy, making it essential for automated systems to adapt to these variations. Third, the competitive impact of weeds on crops varies throughout their growth cycle, with certain stages being more detrimental to crop yield than others. This temporal aspect of weed-crop competition necessitates precise timing of control measures, which can only be achieved through accurate growth stage identification12.

Beyond the temporal challenges, another significant limitation is that many available datasets focus on a narrow range of weed species, not accurately reflecting the diverse weed populations encountered in real agricultural settings. This lack of species diversity in training data presents a substantial barrier to developing robust, widely applicable weed detection systems. Our research directly addresses these limitations through a comprehensive study focusing on 16 prevalent weed species found in Midwestern cropping systems of the USA, documenting their development from the seedling stage through 11 weeks of growth. The study involved cultivation and systematic labeling of weed specimens in a controlled greenhouse environment, ensuring accurate tracking and documentation throughout the growth cycle. Our key research contributions are:

1. Development of two unique datasets comprising 203,567 images and 120,341 images, capturing comprehensive growth cycles of 16 of the most common and troublesome weed species in Midwestern USA cropping systems.
2. Meticulous labeling of datasets, categorized by species and growth stage (week-wise), providing a comprehensive resource for weed identification research.
3. Implementation of advanced detection architectures, including DINO13 Transformer with ResNet14 and Swin15 transformer backbones, Detection Transformer (DETR)16, EfficientNet B417, YOLO v818, and RetinaNet19.
4. Creation of a novel WeedSwin Transformer architecture, optimized for object detection and classification, based on the transformer framework.
5. Comprehensive comparison of model performance, providing evidence-based recommendations for real-world agricultural applications.

These models were selected for their proven capabilities in object detection. The DINO Transformer, implemented with both ResNet14 and Swin15 backbones, offers high accuracy in object detection13. DETR introduces a transformer-based, end-to-end approach that is particularly effective at handling complex scenes and relationships between objects16. EfficientNet-B417 uses a compound scaling method that jointly balances network depth, width, and input resolution, delivering strong feature extraction while maintaining computational efficiency. YOLO v818 provides a single-stage, real-time detection baseline. RetinaNet19 contributes its focal loss function, which specifically addresses the class imbalance common in detection tasks. Our WeedSwin transformer architecture, built upon the Swin15 transformer backbone, is designed specifically for weed detection. Beyond contributing to the growing field of AI-assisted agriculture, this research aims to provide practical, implementable solutions for farmers and agricultural professionals. By developing more accurate and efficient weed detection systems, we pave the way for precision agriculture techniques that can reduce herbicide use, lower production costs, and minimize environmental impact while improving overall agricultural sustainability.

The remainder of this paper is organized as follows. Section ‘Literature review’ reviews relevant literature and recent advancements in weed detection using deep learning. Section ‘Methods’ details our data collection methodology and preprocessing pipeline, including the greenhouse setup, image acquisition protocols, and data curation processes, and presents our methodological framework, experimental design, and implementation strategies. Section ‘Models and algorithms’ describes the architectural components and implementation details of all models, including our novel WeedSwin architecture. Section ‘Experimental results’ analyzes the experimental results, including comparative performance metrics and model evaluations across different growth stages. Section ‘Ablation study’ systematically evaluates the contribution of different architectural components to WeedSwin’s performance through controlled experiments with varied configurations. Section ‘Discussion’ outlines the broader implications of our findings for precision agriculture, addressing both the technical achievements and practical applications for USA farming communities. Finally, Section ‘Conclusions’ summarizes evidence-based recommendations for implementing these detection systems in real-world agricultural settings and outlines promising directions for future research.

Literature review

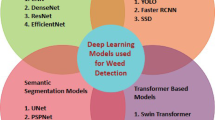

In the realm of precision agriculture, the accurate detection and classification of weeds are pivotal for optimizing herbicide usage and promoting sustainable farming practices. Recent advancements in deep learning and computer vision have significantly enhanced the capabilities of weed detection systems, transitioning from traditional methods to sophisticated, data-driven approaches. This review explores the evolution of these technologies, organized into key thematic areas.

Architectural innovations and dataset development

Recent advances in deep learning architectures have transformed weed detection capabilities in precision agriculture20. Hussain et al.21 demonstrated EfficientNet’s superior performance in detecting common lambsquarters (Chenopodium album L.), achieving 92–97% accuracy and introducing the Phase-Height-Angle (PHA) format, which improved detection accuracy 1.35-fold over conventional depth imaging. Peteinatos et al.22 further validated CNN effectiveness through comprehensive evaluation of VGG16, ResNet-50, and Xception architectures across twelve plant species, achieving >97% accuracy with ResNet-50 and Xception on 93,130 images. Li and Zhang23 advanced algorithmic efficiency through DC-YOLO, incorporating Dual Coordinate Attention and Content-Aware ReAssembly of Features to achieve 95.7% mAP@0.5 while maintaining computational efficiency with only 5.223 million parameters. Ishak Pacal24 introduced a modified MaxViT model with SE blocks and GRN-based MLP for maize leaf disease detection, achieving 99.24% accuracy and outperforming 64+ deep learning models. Ismail and Ishak25 demonstrated Vision Transformers’ effectiveness for grape disease classification, with Swinv2-Base achieving 100% accuracy across multiple datasets. Ishak & Gültekin26 compared Vision Transformers to CNNs for corn leaf disease detection, with MaxViT models reaching 100% accuracy on the CD&S dataset and 99.83% on PlantVillage. Alongside these architectural innovations, significant progress has been made in dataset development. Genze et al.27 introduced the Moving Fields Weed Dataset (MFWD), encompassing 94,321 images of 28 weed species with semantic and instance segmentation masks. Olsen et al.28 addressed real-world variability through DeepWeeds, containing 17,509 images across eight species and locations, achieving 95.7% classification accuracy with ResNet-50. Dyrmann et al.29 explored weed classification across 22 species, achieving 86.2% accuracy with 10,413 diverse images. 
Sapkota et al.30 investigated synthetic data generation, demonstrating comparable performance between synthetic and real images when training Mask R-CNN models, although GAN-generated images proved less effective.

Multi-modal integration, platform adaptation, and computational optimization

Recent segmentation advances include SAM-based field delineation for enhancing machine learning crop classification31, and the Segment Anything Model has shown significant potential for smart farming applications32. Multi-modal approaches have become crucial for complex detection scenarios. Xu et al.33 developed a three-channel architecture processing RGB and depth information, achieving 89.3% detection precision. Lottes et al.34 incorporated sequential information in fully convolutional networks, attaining >94% crop recall and 91% weed recall using RGB+NIR imagery. Wang et al.9 advanced environmental adaptation through an encoder-decoder network achieving 88.91% mean intersection over union (MIoU) and 96.12% object-wise accuracy with NIR integration.

Platform integration has expanded application possibilities, with Islam et al.35 achieving 96% accuracy in UAV-based Random Forest classification. Beeharry and Bassoo36 demonstrated 99.8% accuracy with AlexNet CNN in distinguishing between soil, soybean (Glycine max L.), and weed types using 15,336 segmented images. Farooq et al.37 achieved 97% accuracy in pixel-wise vegetation detection using hyperspectral data, demonstrating CNN superiority over traditional histogram of oriented gradients methods. Jeon et al.38 addressed varying illumination through adaptive image processing, achieving 95.1% identification accuracy for crop plants. Computational efficiency remains crucial for practical deployment. Arun et al.39 developed a reduced U-Net architecture maintaining 95% accuracy while reducing parameters by 27%. Ukaegbu et al.40 demonstrated feasibility on UAV-mounted Raspberry Pi systems, though battery life and computational power constrained operations.

Weed detection and classification approaches

Recent research in weed detection and classification has demonstrated significant advancements in both methodology and practical application. Nenavath and Chaubey41 achieved notable success using Region-Based Convolutional Neural Networks for weed detection in sesame (Sesamum indicum L.) crops, attaining 96.84% detection accuracy and 97.79% classification accuracy across different weed species. This approach particularly emphasized the importance of species-specific identification for targeted control measures. Hasan et al.42 addressed a crucial gap in weed detection datasets by developing an instance-level labeled dataset for corn fields, evaluating multiple deep learning models including YOLOv7 and YOLOv8. Their research demonstrated that YOLOv7 achieved the highest mAP of 88.50%, with data augmentation further improving results to 89.93%. Contrasting with the deep learning approach, Moldvai et al.43 explored traditional feature-based computer vision methods, achieving a 94.56% recall rate using significantly smaller datasets. Their work demonstrated that shape features, distance transformation features, color histograms, and texture features could provide comparable results to deep learning approaches while requiring only a fraction of the training data. This finding is particularly relevant for applications with limited data availability.

Almalky and Ahmed11 advanced the field by focusing on growth stage classification, utilizing drone-collected imagery and comparing various deep learning models. Their results showed that YOLOv5-small achieved real-time detection with 0.794 recall, while RetinaNet with a ResNet-101-FPN backbone demonstrated high precision (87.457% average precision) in growth stage classification. Teimouri et al.12 developed a comprehensive approach for growth stage estimation across 18 weed species, achieving 70% accuracy in leaf counting and 96% accuracy within a two-leaf margin of error, demonstrating the feasibility of automated growth stage assessment. In specialized applications, Costello et al.44 focused on ragweed parthenium (Parthenium hysterophorus L.) detection, combining RGB and hyperspectral imagery with a YOLOv4 CNN. Their method achieved 95% detection accuracy and 86% classification accuracy for flowering stages, while hyperspectral analysis with an XGBoost classifier reached 99% accuracy in growth stage classification. Subeesh et al.45 evaluated various deep learning architectures for weed identification in bell pepper (Capsicum annuum L.) fields, with InceptionV3 demonstrating superior performance (97.7% accuracy) at optimal hyperparameter settings, establishing a foundation for integration with automated herbicide application systems.

Tables 1 and 2 highlight advancements in weed detection using deep learning, focusing on architectural innovations, dataset diversity, and practical challenges. Despite these advances in deep learning and computer vision for weed identification and classification, several major limitations persist in current research. A particularly significant shortcoming is that present studies tend to focus on a narrow range of weed species and growth stages, failing to reflect the full variety of weeds encountered in real-world farming contexts. This limitation is evident in recent studies11,12,22,44, whose datasets were restricted to a single species or a limited set of species and growth stages. Key constraints in existing research include inadequate dataset size and variety9,10, imbalanced class distributions46, and excessive computing requirements47. These challenges were particularly apparent in the study by Teimouri et al.12, where researchers encountered difficulties in managing overlapping leaves and class imbalance. Implementation challenges further complicate the practical application of these technologies: high hardware costs for sophisticated imaging equipment21 and substantial computational resource requirements34 create barriers to widespread adoption. Additionally, environmental variability significantly affects system performance in field conditions9. These collective limitations underscore the urgent need for more comprehensive datasets and for efficient, robust architectures for weed growth stage detection that can overcome these practical constraints.

The critical need for accurate weed growth stage detection and classification stems from several key factors. The effectiveness of herbicide applications is highly dependent on weed growth stages, with early intervention typically yielding better results and requiring lower chemical concentrations. Herbicide labels, which serve as the legal document governing application specifications, also state limits on weed growth stages or heights. Different growth stages present varying levels of competition with crops for resources, making timely identification crucial for optimal yield protection. Moreover, the morphological changes throughout weed development cycles affect detection accuracy, necessitating robust systems capable of adapting to these variations. Additionally, the economic implications of precise growth stage-based interventions are significant, potentially reducing herbicide usage by up to 90% compared to conventional blanket spraying methods. These factors collectively emphasize the urgent need for comprehensive solutions that can accurately detect and classify weed growth stages in real-world agricultural settings.

The extensive body of research reviewed above reveals several persistent limitations in current weed detection approaches. While transformer-based architectures like DINO and Swin have demonstrated impressive general object detection capabilities, they do not address the unique challenges of agricultural environments: the dramatic scale variations between seedling and mature weeds, the complex morphological similarities between weed species, and the dense vegetation patterns typical of field conditions. Existing models tend to excel at either processing speed (YOLO variants) or detection accuracy (DINO implementations) but rarely achieve both simultaneously. Additionally, as Tables 1 and 2 illustrate, most current architectures were designed for general object detection rather than optimized for the complex temporal dynamics of weed growth stages. WeedSwin addresses these limitations through novel progressive attention heads that adapt to weed scale variations, a specialized Channel Mapper that preserves the morphological details critical for species differentiation, and an optimized encoder-decoder architecture designed to capture the complex contextual relationships in agricultural scenes. Unlike prior approaches that apply general-purpose detection frameworks to weed identification, WeedSwin is designed from the ground up to balance the competing requirements of computational efficiency and detection accuracy across the entire weed growth cycle.

Our research addresses these challenges by developing two extensive datasets, comprising 203,567 and 120,341 images, that document 16 prevalent weed species in US agriculture. These datasets capture the entire growth cycle of these species across 11 weeks, providing a high-resolution temporal perspective on weed development. The weed seeds used in this study were provided by the weed control research lab at Southern Illinois University Carbondale. The datasets feature precise annotations of both species and growth stages, providing a valuable foundation for advancing weed classification and detection research. A key highlight of our study is the implementation of advanced detection architectures, including DINO13 Transformer with ResNet14 and Swin15 Transformer backbones, Detection Transformer (DETR)16, EfficientNet B417, YOLO v818, and RetinaNet19. Furthermore, we introduce a novel WeedSwin Transformer architecture, with all models undergoing comprehensive evaluation to enable robust performance comparisons across diverse scenarios. This study distinguishes itself through large-scale, diverse datasets that reflect real-world agricultural challenges, rigorous model evaluation, and practical recommendations for farmers. By providing evidence-based insights and implementable solutions for precision weed management, our approach bridges the gap between cutting-edge research and practical applications, advancing sustainable agriculture and fostering the adoption of precision technologies in modern farming operations.

Methods

Study area and experimental setup

This research was conducted during spring and summer 2024 in the greenhouse of the SIU Horticulture Research Center (\(37^{\circ}\)42′35.8″ N, \(89^{\circ}\)15′45.0″ W), which provided controlled conditions for weed seedling cultivation. The greenhouse used 1000 W high-pressure sodium (HPS) grow lighting to keep the temperature at 30–32 \(^{\circ}\)C. We used 32 square pots (10.7 cm \(\times\) 10.7 cm \(\times\) 9 cm) filled with Pro-Mix® BX potting soil, with two replicate pots for each of the 16 species. Plants were watered as needed and fertilized every three days with an all-purpose 20-20-20 nutrient solution.

Data collection

In this research, we monitored and labeled weed growth stages weekly to capture the temporal dynamics of plant development. Imaging began at week 1, which corresponded to stage 11 (“first true leaf unfolded”) of the BBCH scale48, a widely recognized standard for describing phenological development in weeds, and continued weekly through week 11, ending at BBCH stage 60 (“first flower open”). Each plant image was annotated with both its species and its corresponding week (e.g., AMATU_week_1, SORVU_week_5), providing a direct mapping between the week of observation and the phenological stage on the BBCH scale. This week-wise labeling approach ensured consistent temporal resolution across all species and growth cycles. After initial automated labeling, each image was reviewed and corrected as needed using LabelImg software to improve annotation quality. While we referenced the BBCH scale for stage definitions, annotation consistency was ensured primarily through meticulous manual review and correction rather than through a formal multi-annotator protocol or expert consensus process. This methodology provides a transparent and systematic framework for growth stage annotation, facilitating robust temporal analysis and enabling direct comparison with standard phenological references.
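The week-wise label convention (species code, then `_week_`, then the week number) can be parsed mechanically, which helps when grouping images by species or by stage. The sketch below assumes that species codes are five uppercase letters, as are all the codes that appear in this paper, and that weeks run from 1 to 11. `StageLabel`, `parse_label`, `bbch_stage`, and `BBCH_ANCHORS` are hypothetical names, and only the two endpoint stages stated above (week 1 at BBCH 11, week 11 at BBCH 60) are mapped to BBCH codes.

```python
import re
from typing import NamedTuple, Optional

# Assumption: species codes are five uppercase letters (e.g. AMATU, SORVU)
# and weeks run from 1 to 11, as described in the text.
_LABEL_RE = re.compile(r"([A-Z]{5})_week_(\d{1,2})")

# Only the endpoints stated in the text; intermediate weeks are not mapped.
BBCH_ANCHORS = {1: 11, 11: 60}


class StageLabel(NamedTuple):
    species: str
    week: int


def parse_label(label: str) -> StageLabel:
    """Split a label such as 'AMATU_week_1' into its species code and week."""
    match = _LABEL_RE.fullmatch(label)
    if match is None:
        raise ValueError(f"unrecognized label: {label!r}")
    week = int(match.group(2))
    if not 1 <= week <= 11:
        raise ValueError(f"week out of range 1-11: {week}")
    return StageLabel(match.group(1), week)


def bbch_stage(label: str) -> Optional[int]:
    """BBCH code if the label's week is a documented anchor, otherwise None."""
    return BBCH_ANCHORS.get(parse_label(label).week)
```

For example, `parse_label("SORVU_week_5")` returns `StageLabel(species='SORVU', week=5)`, and `bbch_stage("AMATU_week_1")` returns `11`.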

To document these growth stages, short 4K video clips (resolution: 3840 \(\times\) 2160 pixels, aspect ratio: 16:9) were recorded covering a full \(360^{\circ }\) view around the weeds during each imaging session. The videos were captured using an iPhone 15 Pro Max positioned 1.5 feet (approximately 0.46 m) above the plants. Individual frames were then extracted from these clips to serve as the raw data. This method enabled high-quality image acquisition and efficient, consistent data collection throughout the study period.

Among the sixteen weed species included in the study, SORHA did not emerge during the first two weeks. To facilitate detailed analysis, two datasets were developed: the Alpha Weed Dataset (AWD) and the Beta Weed Dataset (BWD), encompassing a total of 174 classes (16 species \(\times\) 11 weeks = 176 species-week combinations, minus the two weeks in which SORHA had not yet emerged). Initially, a total of 2,494,476 frames were compiled across both datasets. After a rigorous quality assessment to eliminate substandard images, 203,567 images were retained for AWD and 120,341 images for BWD. The two datasets were created to evaluate model efficiency, accuracy, and performance on datasets of differing sizes. We used AWD in our previous research52. BWD was generated by selecting only the even-numbered images from AWD, resulting in a smaller dataset, and all incorrect labels we found in AWD were corrected in BWD. BWD therefore serves two purposes: it provides a cleaner dataset with corrected labels, and it allows model performance to be compared between a compact and a large dataset. Table 3 provides a comprehensive summary of the two datasets, detailing the weed species codes, scientific and common names, family, and the number of frames captured for each species on a weekly basis.
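Two pieces of bookkeeping in this paragraph can be made explicit: the class count (every species-week pair except the two weeks in which SORHA had not emerged) and the even-numbered subsampling used to derive BWD from AWD. This is an illustrative sketch only. The paper does not describe its file-naming scheme, so `select_even_numbered` assumes the frame number is the run of digits just before the file extension; the function names are hypothetical, and the `SPxx` codes in the usage example are placeholders for the ten species codes not named in this section. The label corrections applied to BWD are not modeled.

```python
import re
from itertools import product

# Assumption: the frame number is the digit run immediately before the extension,
# e.g. "AMATU_week_1_000124.jpg" -> 124. The real naming scheme is not given.
_FRAME_NO = re.compile(r"(\d+)(?=\.[^.]+$)")


def build_class_labels(species, n_weeks=11, missing=None):
    """All '<CODE>_week_<n>' labels, skipping (species, week) pairs in `missing`."""
    missing = missing or {}
    return [
        f"{code}_week_{week}"
        for code, week in product(species, range(1, n_weeks + 1))
        if week not in missing.get(code, set())
    ]


def select_even_numbered(filenames):
    """Keep files whose trailing frame number is even (the stated BWD subsampling rule)."""
    kept = []
    for name in filenames:
        match = _FRAME_NO.search(name)
        if match and int(match.group(1)) % 2 == 0:
            kept.append(name)
    return kept


if __name__ == "__main__":
    named = ["AMAPA", "AMATU", "SETPU", "SIDSP", "SORHA", "SORVU"]
    placeholders = [f"SP{i:02d}" for i in range(10)]  # hypothetical codes
    labels = build_class_labels(named + placeholders, missing={"SORHA": {1, 2}})
    print(len(labels))  # 16 * 11 - 2 = 174
    print(select_even_numbered(["a_000123.jpg", "a_000124.jpg", "b_7.png"]))
```

Keeping the missing weeks as an explicit per-species set makes the 174-class total fall out of the data rather than being hard-coded.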

Figure 1 presents representative images of four weed species at distinct growth stages. For AMAPA, images from week one (a) and week eleven (b) are displayed. Similarly, SIDSP is depicted at week one (c) and week eleven (d). AMATU is shown during its first (e) and eleventh (f) weeks of growth. Lastly, SETPU is illustrated in its initial (g) and final (h) weeks of the study. It is noteworthy that while certain species produced flowers during their final growth stages, others did not, which reflects variations in natural growth processes and photoperiod sensitivities.

Growth stage examples of four representative weed species used in this study. (a, b) AMAPA at week 1 and week 11, respectively; (c, d) SIDSP at week 1 and week 11; (e, f) AMATU at week 1 and week 11; (g, h) SETPU at week 1 and week 11. These images illustrate the morphological changes across the 11-week lifecycle, highlighting the variation in plant structure, size, and complexity that the models must detect and classify accurately.

Data pre-processing and augmentation

The data preprocessing and augmentation phase forms the foundation of this research, ensuring the quality, consistency, and usability of the dataset for weed detection and classification. This stage involves a series of carefully designed steps to transform raw images into a structured, annotated dataset suitable for training advanced machine learning models.

We used two different preprocessing methods for our two datasets. For AWD, we used traditional computer vision techniques. The pipeline begins with image normalization, a fundamental step that standardizes the input data: each image is scaled to the range 0–1 by dividing all pixel values by 255.0. Following normalization, the images are converted from the standard RGB (Red, Green, Blue) color space to the HSV (Hue, Saturation, Value) color space using matplotlib’s rgb_to_hsv function. This conversion is important for plant detection because HSV separates color (hue) from brightness (value), so green vegetation can be isolated with a hue range that is less sensitive to lighting variation than thresholds on raw RGB values.
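The normalization and color-space conversion described above amount to two array operations. A minimal sketch, assuming 8-bit RGB input already loaded as a NumPy array (image loading is omitted, and `normalize_to_hsv` is a hypothetical helper name):

```python
import numpy as np
from matplotlib.colors import rgb_to_hsv


def normalize_to_hsv(rgb_uint8):
    """Scale 8-bit RGB values to [0, 1] by dividing by 255.0, then convert to HSV.

    Input shape (..., 3); the output has the same shape, with H, S and V each in
    [0, 1], so hue is expressed as a fraction of a full turn (120 degrees -> 1/3).
    """
    rgb = np.asarray(rgb_uint8, dtype=np.float64) / 255.0
    return rgb_to_hsv(rgb)


if __name__ == "__main__":
    pixel = np.array([[[0, 255, 0]]], dtype=np.uint8)  # pure green
    print(normalize_to_hsv(pixel)[0, 0])  # hue 1/3, saturation 1, value 1
```

Because hue comes back as a fraction of 360°, hue thresholds are naturally written as fractions such as 25/360 and 160/360; pure green (hue 1/3, i.e. 120°) falls well inside that range.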

The next step in the pipeline is green area detection. Calibrated thresholds on the HSV channels are used to create a mask that highlights potential plant regions: hue values from 25/360 to 160/360 (i.e., 25°–160°), a minimum saturation of 0.20, and a minimum value of 0.20. Morphological operations53 are then applied to refine the green mask and improve the continuity of detected plant areas. Specifically, we applied morphological closing with a disk-shaped structuring element of radius 3 pixels (via skimage.morphology.disk and skimage.morphology.binary_closing) to close small gaps in the vegetation areas. The refined green areas then undergo connected component analysis using skimage.measure.label, which identifies and labels distinct regions within the image. We used skimage.measure.regionprops to extract properties of these labeled regions, particularly their bounding box coordinates and areas. The largest connected component (by area) was selected as the primary plant region, filtering out smaller noise segments. This step is critical for differentiating individual plants or plant clusters, enabling more precise analysis and annotation. Our traditional computer vision pipeline identified plants through color-based thresholding, generating distinctive orange masks for clear visualization (Fig. 2a–c).
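The thresholding, closing, connected-component, and largest-region steps can be chained in a few lines with the scikit-image functions named above. This is a sketch rather than the exact AWD pipeline. It takes an HSV array with channels in [0, 1] (as produced by matplotlib's `rgb_to_hsv`), treats the thresholds as inclusive bounds (the text does not say), and returns an inclusive `(xmin, ymin, xmax, ymax)` box. `largest_green_bbox` is a hypothetical name.

```python
import numpy as np
from skimage.measure import label, regionprops
from skimage.morphology import binary_closing, disk


def largest_green_bbox(hsv, h_range=(25 / 360, 160 / 360), s_min=0.20, v_min=0.20, radius=3):
    """Box around the largest green region of an HSV image, or None if none is found.

    Threshold values follow the text; inclusive comparisons are an assumption.
    """
    h, s, v = hsv[..., 0], hsv[..., 1], hsv[..., 2]
    mask = (h >= h_range[0]) & (h <= h_range[1]) & (s >= s_min) & (v >= v_min)
    mask = binary_closing(mask, disk(radius))  # close small gaps in vegetation
    regions = regionprops(label(mask))         # connected components and their properties
    if not regions:
        return None
    largest = max(regions, key=lambda r: r.area)  # drop smaller noise segments
    # regionprops bbox is (min_row, min_col, max_row, max_col) with exclusive maxima.
    min_row, min_col, max_row, max_col = largest.bbox
    return int(min_col), int(min_row), int(max_col) - 1, int(max_row) - 1


if __name__ == "__main__":
    hsv = np.zeros((100, 100, 3))
    hsv[...] = [15 / 360, 0.4, 0.35]           # soil-like background (hue below 25 deg)
    hsv[20:60, 30:70] = [1 / 3, 0.75, 0.6]     # plant
    hsv[5:7, 5:7] = [1 / 3, 0.75, 0.6]         # small green speck
    print(largest_green_bbox(hsv))
```

Selecting the region by area, as the text describes, means small green specks elsewhere in the frame are discarded rather than merged into the plant's box.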

For BWD, we employed Meta AI’s Segment Anything Model 2 (SAM-2)54,55 for preprocessing. Released in 2024 as the successor to the original SAM foundation model, SAM-2 extends segmentation to both images and videos through a unified architecture whose memory attention blocks, memory encoder, and memory bank maintain temporal coherence and object identity across frames. In our application, SAM-2 serves as a high-precision segmentation tool: it first detects potential objects within an image, then generates a binary mask separating each object from the background, from which a precise bounding box is generated around each detected object. SAM-2 is reported to be more accurate and approximately 6\(\times\) faster than the original SAM on image segmentation56, processes approximately 44 frames per second, and supports interactive refinement through prompt types including points, boxes, and masks. We used SAM-2 for BWD to obtain more accurate labels than those produced by the traditional pipeline used for AWD, as SAM-2’s robust zero-shot generalization proved well suited to our diverse weed specimens57. Figure 2 compares the two approaches for plant detection and segmentation across developmental stages. SAM-2 produced highly precise binary masks capturing intricate plant morphologies (Fig. 2d–g). While both methods generated usable bounding boxes, SAM-2 delineated complex plant architectures more accurately, particularly for mature specimens with multiple leaves. This dual-method comparison supports the robustness of our plant detection pipeline across growth stages.
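Whichever method produces the mask, the bounding box is derived the same way: the tightest rectangle around the foreground pixels. The sketch below assumes the SAM-2 masks have already been generated as boolean arrays (the model call itself is not shown) and that one box per image is wanted, so it keeps the mask with the most foreground pixels. That selection rule and the helper names `mask_to_bbox` and `largest_mask_bbox` are assumptions, not the authors' documented procedure.

```python
import numpy as np


def mask_to_bbox(mask):
    """Tight inclusive (xmin, ymin, xmax, ymax) box around True pixels; None if empty."""
    mask = np.asarray(mask, dtype=bool)
    rows = np.flatnonzero(mask.any(axis=1))  # row indices containing foreground
    cols = np.flatnonzero(mask.any(axis=0))  # column indices containing foreground
    if rows.size == 0:
        return None
    return int(cols[0]), int(rows[0]), int(cols[-1]), int(rows[-1])


def largest_mask_bbox(masks):
    """Box around the mask with the most foreground pixels (hypothetical selection rule)."""
    masks = [np.asarray(m, dtype=bool) for m in masks]
    if not masks:
        return None
    best = max(masks, key=lambda m: int(m.sum()))
    return mask_to_bbox(best)
```

Working from the mask rather than from a separate detector keeps the box consistent with the segmentation shown in the figure overlays.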

Comparison of plant detection approaches using traditional computer vision and SAM-2 techniques. Left panel: Computer vision-based detection showing (a) original seedling images, (b) color-based masking (orange overlay) for plant segmentation, and (c) resulting bounding box detection (green). Right panel: SAM-2 segmentation pipeline demonstrating (d) original plant images at different growth stages, (e) binary mask generation with precise plant-soil separation, (f) mask refinement with bounding box constraints, and (g) final detection results overlaid on original images.

Data labelling

The labeling process was designed to create comprehensive annotations that capture all relevant information about the detected plants. For each labeled green area, bounding box coordinates were extracted, defining the spatial extent of the plant within the image. These coordinates were determined by the minimum and maximum x and y values of the detected region. Detailed Pascal VOC XML annotations were generated for each processed image, including folder name, filename, dimensions, source database, and precise object bounding boxes with species names. The image processing pipeline was implemented using Python libraries such as Pillow, NumPy, scikit-image, and ElementTree, with the resulting XML files stored in a structured ‘labels’ directory. The rigorous data preprocessing and labeling methodology yielded a dataset of high quality, characterized by precise annotations and consistent formatting.
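Below is a minimal sketch of writing one Pascal VOC annotation with the standard-library `xml.etree.ElementTree`. The function name, the example file name, and the object label `AMBEL_week7` are illustrative assumptions. That label stands in for the species-code-plus-week convention, whose exact string format is not specified here.

```python
import xml.etree.ElementTree as ET


def voc_annotation(folder, filename, width, height, depth, database, objects):
    """Build a Pascal VOC-style annotation tree.

    `objects` is a list of (name, (xmin, ymin, xmax, ymax)) tuples in pixels.
    """
    root = ET.Element("annotation")
    ET.SubElement(root, "folder").text = folder
    ET.SubElement(root, "filename").text = filename
    source = ET.SubElement(root, "source")
    ET.SubElement(source, "database").text = database
    size = ET.SubElement(root, "size")
    for tag, value in (("width", width), ("height", height), ("depth", depth)):
        ET.SubElement(size, tag).text = str(value)
    for name, (xmin, ymin, xmax, ymax) in objects:
        obj = ET.SubElement(root, "object")
        ET.SubElement(obj, "name").text = name  # species/growth-stage label
        box = ET.SubElement(obj, "bndbox")
        for tag, value in (("xmin", xmin), ("ymin", ymin), ("xmax", xmax), ("ymax", ymax)):
            ET.SubElement(box, tag).text = str(value)
    return ET.ElementTree(root)
```

A file would then be written with something like `tree.write("labels/img_0001.xml", encoding="utf-8")`.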

To further enhance accuracy, a thorough quality control process was implemented. Each image was meticulously reviewed using LabelImg software following the initial automated labeling. The labeling convention incorporated both the species code and the week number, providing a comprehensive identifier for each plant’s growth stage and taxonomy. This detailed labeling strategy significantly enhanced the dataset’s utility for tracking plant development over time and for species-specific analysis. Figure 3 illustrates this process, presenting a side-by-side comparison of an original image and its corresponding labeled version, referred to as the ground truth.

Following the annotation of the dataset, the data was divided into training, validation, and test sets. For the AWD, 184,719 images (80%) were used for training the object detection models, while 23,090 images (10%) were used for validation during training. The remaining 23,090 images (10%) were held out for testing the performance of the trained model. For the BWD, 96,272 images (80%) were used for training, with 12,034 images (10%) allocated for validation during training. The remaining 12,035 images (10%) were held out for testing the model’s performance.
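The BWD split sizes are consistent with floor-rounding the 80% and 10% shares and assigning the remainder to the test set. The sketch below assumes a seeded random shuffle; the actual shuffling procedure and seed are not stated in the text. With these assumptions it reproduces the BWD counts of 96,272 / 12,034 / 12,035.

```python
import random


def split_80_10_10(items, seed=0):
    """Shuffle and split into train/val/test with floor-rounded 80/10/10 sizes."""
    items = list(items)
    random.Random(seed).shuffle(items)  # seeded for reproducibility (assumed)
    n_train = int(0.8 * len(items))
    n_val = int(0.1 * len(items))
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]      # remainder goes to the test set
    return train, val, test
```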

Models and algorithms

In this study, two experiments were conducted using the two datasets. In the first experiment, involving the AWD, two deep learning models were employed for weed detection and classification: RetinaNet19 with a ResNeXt-10158 backbone and Detection Transformer (DETR)16 with a ResNet-5014 backbone. In the second experiment, using the BWD, several models were utilized, including RetinaNet19 with a ResNeXt-10158 backbone, Detection Transformer (DETR)16 with a ResNet-5014 backbone, DINO13 Transformer with a Swin15 Transformer backbone, DINO13 Transformer with a ResNet-10114 backbone, EfficientNet B417 with a ResNet-5014 backbone, YOLO v818, and our custom architectural model named WeedSwin. These models were tasked with classifying weed species and identifying their respective growth stages (in weeks), while simultaneously localizing them within the images through bounding box predictions.

We trained all models, including baselines, for 12 epochs with batch size 16 on identical hardware to ensure fair comparisons. The models were configured and trained using PyTorch and MMDetection on a Linux system with an NVIDIA A100 GPU (80GB memory) and an Intel Xeon Gold 6338 CPU (2.00GHz). All models were initialized with weights pre-trained on the COCO dataset, and validation was performed after each epoch to monitor convergence. To ensure optimal performance and fairness, we performed light hyperparameter tuning for each model, focusing primarily on learning rate, weight decay, and confidence/NMS thresholds. For all models, the initial learning rate was selected from {1e−3, 1e−4, 5e−5} based on early validation loss behavior, while weight decay was chosen from {1e−4, 5e−5}. The confidence thresholds for inference were tuned per model in the range [0.05, 0.3] to balance false positives against detection robustness. Anchor configurations (e.g., aspect ratios and scales) and optimizer types (e.g., SGD vs AdamW) were also evaluated during early experiments for the baseline models. Final choices were determined by grid search on validation-set performance for each dataset.
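The tuning procedure amounts to an exhaustive search over the stated candidate grid. In this sketch `validate` is a hypothetical callable standing in for an actual MMDetection run. It trains briefly with the given settings and returns a score where higher is better, for example validation mAP or negated validation loss.

```python
from itertools import product

# Candidate values quoted in the text.
LEARNING_RATES = (1e-3, 1e-4, 5e-5)
WEIGHT_DECAYS = (1e-4, 5e-5)


def grid_search(validate, learning_rates=LEARNING_RATES, weight_decays=WEIGHT_DECAYS):
    """Return (best_score, lr, wd) maximising the hypothetical `validate(lr, wd)`."""
    best = None
    for lr, wd in product(learning_rates, weight_decays):
        score = validate(lr, wd)
        if best is None or score > best[0]:
            best = (score, lr, wd)
    return best
```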

RetinaNet with ResNeXt-101

In this study, we implemented the RetinaNet architecture19 with a ResNeXt-101 backbone59 for weed detection. RetinaNet, a single-stage object detector, addresses class imbalance through Focal Loss while maintaining high detection accuracy60. Our implementation utilizes a ResNeXt-101 (32\(\times\)4d) backbone, which enhances the model’s feature extraction capabilities through its cardinality-based approach.

The ResNeXt-101 backbone employs a split-transform-merge strategy, where the input is divided into 32 parallel paths (\(C=32\), cardinality), each with a bottleneck width of 4 channels (\(\text {base}\_\text {width}=4\)). This architecture can be formally expressed as61:

\[ \textbf{y} = \textbf{x} + \sum _{i=1}^{C} \textrm{T}_i(\textbf{x}) \]

where \(C = 32\) represents the cardinality, and \(\textrm{T}_i\) denotes the transformation function for the i-th path. Each transformation follows a bottleneck design with \(1 \times 1\), \(3 \times 3\), and \(1 \times 1\) convolutions.
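To make the 32×4d notation concrete, the sketch below counts the weights in one bottleneck block. It assumes the torchvision-style width rule `width = (planes * base_width // 64) * groups` and ignores bias and batch-norm parameters. In the first stage (`planes = 64`), the 32 paths are 4 channels wide. Together they form a 128-channel grouped 3×3 convolution with 32× fewer weights than a dense convolution of the same width.

```python
def resnext_bottleneck_weights(in_channels, planes, groups=32, base_width=4, expansion=4):
    """Weight counts (no bias or batch norm) of one ResNeXt bottleneck block."""
    width = (planes * base_width // 64) * groups  # total width of the C grouped paths
    return {
        "width": width,
        "conv1x1_reduce": in_channels * width,
        # Grouped 3x3: each of the `groups` paths maps width/groups -> width/groups channels.
        "conv3x3_grouped": (width // groups) * width * 3 * 3,
        "conv1x1_expand": width * planes * expansion,
    }
```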

The Feature Pyramid Network (FPN) neck connects to this backbone, generating multi-scale feature maps \(\{P_2, P_3, P_4, P_5, P_6\}\), each with 256 channels. For a feature level l, the FPN output can be described as:

\[ P_l = \textrm{C}\big (\textrm{L}(X_l) + \textrm{U}(P_{l+1})\big ) \]

where \(X_l\) is the backbone feature map at level l, U represents upsampling, \(\textrm{L}\) is a \(1 \times 1\) convolution lateral connection, and \(\textrm{C}\) is a \(3 \times 3\) convolution for smoothing.

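The RetinaHead subnet processes these features using Focal Loss, defined as61:

\[ \textrm{FL}(p_t) = -\alpha _t (1 - p_t)^{\gamma } \log (p_t) \]

where \(p_t\) is the predicted probability of the ground-truth class, \(\gamma = 2.0\) is the focusing parameter, and \(\alpha = 0.25\) is the class-balancing weight (\(\alpha _t = \alpha\) for positive samples and \(1-\alpha\) for negatives).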
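Below is a scalar sketch of the focal loss with the stated \(\alpha = 0.25\) and \(\gamma = 2.0\), shown next to plain cross-entropy. The function names are illustrative. The actual implementation applies this per anchor and class on tensors.

```python
import math


def focal_loss(p, y, alpha=0.25, gamma=2.0):
    """Binary focal loss for one anchor/class pair.

    p: predicted probability of the positive class; y: 1 for positive, 0 for negative.
    """
    p_t = p if y == 1 else 1.0 - p              # probability of the true label
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def cross_entropy(p, y):
    p_t = p if y == 1 else 1.0 - p
    return -math.log(p_t)
```

Consider an easy negative, where the predicted positive probability is 0.1. Its focal loss is \(1/(0.75 \times 0.01) \approx 133\) times smaller than its cross-entropy, which is how the loss suppresses the many easy background anchors.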

The model predicts across 174 weed classes using dense anchors at multiple scales (\(\text {strides} = [8, 16, 32, 64, 128]\)) and aspect ratios ([0.5, 1.0, 2.0]).
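Here is a sketch of the dense anchor layout. It assumes RetinaNet's usual octave settings (base scale 4 and 3 scales per octave), which the text does not state. Under that assumption each location carries 3 ratios × 3 scales = 9 anchors, and for a hypothetical 640×640 input the five levels together hold 76,725 anchors.

```python
import math

STRIDES = (8, 16, 32, 64, 128)
RATIOS = (0.5, 1.0, 2.0)


def anchor_shapes(stride, ratios=RATIOS, octave_base_scale=4, scales_per_octave=3):
    """(width, height) of each anchor centred at one location of a level.

    Ratio is height/width; the anchor area is preserved across ratios.
    octave_base_scale and scales_per_octave are assumed defaults.
    """
    shapes = []
    for ratio in ratios:
        for i in range(scales_per_octave):
            size = stride * octave_base_scale * 2 ** (i / scales_per_octave)
            shapes.append((size / math.sqrt(ratio), size * math.sqrt(ratio)))
    return shapes


def total_anchors(height, width, strides=STRIDES, anchors_per_location=9):
    """Number of anchors over all pyramid levels for one input image."""
    locations = sum(math.ceil(height / s) * math.ceil(width / s) for s in strides)
    return locations * anchors_per_location
```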

During training, we employed the AdamW optimizer with an initial learning rate of \(1\text {e-}4\) and weight decay of \(1\text {e-}4\). The learning rate follows a multi-step schedule with a gamma of 0.1. The model was initialized with weights pre-trained on the COCO dataset and trained for 12 epochs, with validation performed after each epoch. To maintain stable training, we froze the first stage of the backbone (\(\text {frozen}\_\text {stages}=1\)) while keeping the affine parameters of the batch normalization layers trainable (\(\text {requires}\_\text {grad}= \text {True}\)). The inference process employs a confidence threshold of 0.05 and Non-Maximum Suppression (NMS) with an IoU threshold of 0.5, limiting the output to a maximum of 100 detections per image. This configuration balances detection accuracy with computational efficiency while maintaining robust performance across weed species.
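The settings above might map onto an MMDetection 3.x override config roughly as follows. This is a hypothetical fragment, meant to be merged onto a base RetinaNet config through `_base_`, which is omitted here. The step milestones `[8, 11]` and the anchor octave settings are assumptions not stated in the text.

```python
# Hypothetical MMDetection 3.x override fragment (merge onto a base RetinaNet config).
model = dict(
    backbone=dict(
        type="ResNeXt",
        depth=101,
        groups=32,        # cardinality C
        base_width=4,     # width of each path
        num_stages=4,
        out_indices=(0, 1, 2, 3),
        frozen_stages=1,  # first stage frozen
        norm_cfg=dict(type="BN", requires_grad=True),
        style="pytorch",
    ),
    bbox_head=dict(
        num_classes=174,
        anchor_generator=dict(
            type="AnchorGenerator",
            octave_base_scale=4,   # assumed default, not stated in the text
            scales_per_octave=3,   # assumed default, not stated in the text
            ratios=[0.5, 1.0, 2.0],
            strides=[8, 16, 32, 64, 128],
        ),
        loss_cls=dict(type="FocalLoss", use_sigmoid=True, gamma=2.0, alpha=0.25, loss_weight=1.0),
    ),
    test_cfg=dict(score_thr=0.05, nms=dict(type="nms", iou_threshold=0.5), max_per_img=100),
)

optim_wrapper = dict(type="OptimWrapper", optimizer=dict(type="AdamW", lr=1e-4, weight_decay=1e-4))

param_scheduler = [
    # Milestones are an assumption; the text states only the decay factor of 0.1.
    dict(type="MultiStepLR", by_epoch=True, begin=0, end=12, milestones=[8, 11], gamma=0.1),
]

train_dataloader = dict(batch_size=16)
train_cfg = dict(type="EpochBasedTrainLoop", max_epochs=12, val_interval=1)
```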

Detection transformer with ResNet-50

The Detection Transformer (DETR)16 represents a paradigm shift in object detection by eliminating the need for hand-crafted components like non-maximum suppression and anchor generation62. Our implementation employs a ResNet-50 backbone63 coupled with a transformer encoder-decoder architecture for weed detection, processing a fixed set of \(N=100\) object queries in parallel.

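The architecture begins with a ResNet-50 backbone that extracts hierarchical features through its four-stage design. The backbone output \(\textbf{X} \in \textrm{R}^{C \times H \times W}\) (where \(C=2048\)) is processed through a channel mapper that reduces dimensionality to \(d=256\) channels. The matching cost between ground truth \(y_i\) and prediction \(\hat{y}_{\sigma (i)}\) can be expressed as64:

\[ \textrm{L}_{match}(y_i, \hat{y}_{\sigma (i)}) = -\mathbf{1}_{\{c_i \neq \varnothing \}}\, \hat{p}_{\sigma (i)}(c_i) + \mathbf{1}_{\{c_i \neq \varnothing \}}\, \textrm{L}_{box}(b_i, \hat{b}_{\sigma (i)}) \]

where \(c_i\) represents the target class label, \(b_i\) is the ground truth box, \(\hat{p}_{\sigma (i)}(c_i)\) and \(\hat{b}_{\sigma (i)}\) are the predicted probability of class \(c_i\) and the predicted box, and \(\sigma\) is the optimal assignment. The transformer encoder-decoder architecture processes the features through self-attention mechanisms. For a given query Q, key K, and value V, the multi-head attention is computed as \(\text {MultiHead}(Q, K, V) = \text {Concat}(\text {head}_1,\ldots ,\text {head}_h)W^O\), where each attention head is calculated as \(\text {head}_i = \text {Attention}(QW^Q_i, KW^K_i, VW^V_i)\) with \(W^Q_i\), \(W^K_i\), \(W^V_i\), and \(W^O\) being learnable parameter matrices that project the inputs into different representation subspaces. The final loss function combines classification and box regression terms64:

\[ \textrm{L}_{Hungarian}(y, \hat{y}) = \sum _{i=1}^{N} \left[ -\log \hat{p}_{\sigma (i)}(c_i) + \mathbf{1}_{\{c_i \neq \varnothing \}}\, \textrm{L}_{box}(b_i, \hat{b}_{\sigma (i)}) \right] \]

where \(\textrm{L}_{box}\) combines the L1 loss and the generalized IoU loss \(\textrm{L}_{giou} = 1 - \textrm{GIoU}\):

\[ \textrm{L}_{box}(b_i, \hat{b}_{\sigma (i)}) = \lambda _{giou}\, \textrm{L}_{giou}(b_i, \hat{b}_{\sigma (i)}) + \lambda _{box} \lVert b_i - \hat{b}_{\sigma (i)} \rVert _1 \]

For training, we use a bipartite matching loss that optimally assigns predictions to ground-truth objects using the Hungarian algorithm. The total loss is a combination of classification and box regression terms:

\[ \textrm{L} = \lambda _{cls} \textrm{L}_{cls} + \lambda _{box} \textrm{L}_{L1} + \lambda _{giou} \textrm{L}_{giou} \]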

where \(\lambda _{cls}=1\), \(\lambda _{box}=5\), and \(\lambda _{giou}=2\) are loss weights. The classification loss \(\textrm{L}_{cls}\) uses cross-entropy with balanced weighting (background weight = 0.1), while box regression employs L1 and GIoU losses. Training proceeds with the AdamW optimizer (learning rate = \(10^{-4}\), weight decay = \(10^{-4}\)) for 12 epochs. We implement a multi-step learning rate schedule with a milestone at epoch 334 and a decay factor of 0.1; since this milestone lies beyond the 12 training epochs, the decay is never triggered and the learning rate remains \(10^{-4}\) throughout. During inference, the model directly outputs a set of 100 predictions with confidence scores, requiring no post-processing beyond score thresholding. This end-to-end approach is well suited to the complex spatial relationships and varying scales characteristic of weed detection, while maintaining computational efficiency through parallel prediction generation.
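The matching step can be illustrated on a handful of boxes with the stated weights \(\lambda _{cls}=1\), \(\lambda _{box}=5\), \(\lambda _{giou}=2\). The sketch makes several simplifying assumptions. Boxes are normalized (x1, y1, x2, y2) tuples. Each pair's cost is the weighted sum of negative class probability, L1 distance, and \(1 - \textrm{GIoU}\). The optimal assignment is found by brute force over permutations rather than with the Hungarian algorithm, which is only feasible for tiny inputs. In practice, a Hungarian solver such as SciPy's `scipy.optimize.linear_sum_assignment` is used instead.

```python
from itertools import permutations

LAMBDA_CLS, LAMBDA_BOX, LAMBDA_GIOU = 1.0, 5.0, 2.0


def giou(a, b):
    """Generalized IoU of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    # Area of the smallest box enclosing both inputs.
    enclose = (max(a[2], b[2]) - min(a[0], b[0])) * (max(a[3], b[3]) - min(a[1], b[1]))
    return inter / union - (enclose - union) / enclose


def match_cost(gt_box, gt_class, pred_box, pred_probs):
    """Weighted class + L1 + GIoU cost of pairing one GT with one prediction."""
    l1 = sum(abs(g - p) for g, p in zip(gt_box, pred_box))
    return (-LAMBDA_CLS * pred_probs[gt_class]
            + LAMBDA_BOX * l1
            + LAMBDA_GIOU * (1.0 - giou(gt_box, pred_box)))


def optimal_assignment(gts, preds):
    """Exhaustive minimum-cost matching; returns the prediction index for each GT.

    gts: list of (box, class_id); preds: list of (box, class_probabilities).
    """
    best_cost, best_perm = float("inf"), None
    for perm in permutations(range(len(preds)), len(gts)):
        cost = sum(match_cost(gb, gc, *preds[j]) for (gb, gc), j in zip(gts, perm))
        if cost < best_cost:
            best_cost, best_perm = cost, perm
    return list(best_perm)
```

Unmatched predictions (here, any left over after each ground truth receives one) are trained toward the "no object" class.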

The DETR16 training algorithm optimizes the model parameters, denoted by \(\theta\). The parameters are first initialized, and training then runs for 12 epochs, iterating over mini-batches of the training dataset in each epoch. For each mini-batch, the model makes predictions \(\hat{y}\), and the classification and regression losses are computed. These losses are summed to obtain the total loss, which is backpropagated to compute the gradients. The model parameters are then updated using the AdamW optimizer. At regular intervals, defined by val_interval, the model’s performance is evaluated on the validation dataset, and a checkpoint is saved if performance improves.
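The loop described above can be sketched on a toy scalar problem. This is an illustration only: the parameter is a single float, and `grad_fn` and `val_fn` are hypothetical stand-ins for backpropagation and validation mAP. The AdamW update follows the decoupled-weight-decay form, in which decay is applied directly to the weights before the Adam step, as PyTorch's `torch.optim.AdamW` does.

```python
import math


def adamw_step(theta, grad, m, v, t, lr, wd, beta1=0.9, beta2=0.999, eps=1e-8):
    """One AdamW update for a scalar parameter (decoupled weight decay)."""
    theta -= lr * wd * theta                   # weight decay applied directly to the weight
    m = beta1 * m + (1 - beta1) * grad         # first-moment estimate
    v = beta2 * v + (1 - beta2) * grad * grad  # second-moment estimate
    m_hat = m / (1 - beta1 ** t)               # bias correction
    v_hat = v / (1 - beta2 ** t)
    theta -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


def train(theta, batches, grad_fn, val_fn, epochs=12, val_interval=1, lr=1e-4, wd=1e-4):
    """Mini-batch training with periodic validation and best-checkpoint saving."""
    m = v = 0.0
    step = 0
    best_score, checkpoint = -math.inf, theta
    for epoch in range(1, epochs + 1):
        for batch in batches:
            step += 1
            grad = grad_fn(theta, batch)
            theta, m, v = adamw_step(theta, grad, m, v, step, lr, wd)
        if epoch % val_interval == 0:
            score = val_fn(theta)
            if score > best_score:             # save only on improvement
                best_score, checkpoint = score, theta
    return checkpoint, best_score
```

For example, `train(0.0, range(10), lambda th, b: 2 * (th - 3.0), lambda th: -(th - 3.0) ** 2, lr=0.1, wd=0.0)` moves the parameter toward the minimum at 3.0 within 120 steps. The toy uses a learning rate of 0.1 for fast convergence, whereas the models here use \(10^{-4}\).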

DINO transformer with Swin and ResNet-101

In this work, we employ DINO13, an extension of the DETR16 architecture, which enhances object detection performance by incorporating advanced query selection and a denoising training strategy. For our weed detection task, we utilize DINO13 with two different backbone architectures: the Swin15 Transformer and ResNet-10114. Each of these backbones brings unique advantages to the challenge of processing high-resolution weed imagery. The Swin Transformer15, with its shifted window mechanism and hierarchical feature extraction, excels at capturing both detailed and contextual weed information. On the other hand, ResNet-10114’s deep residual learning framework is particularly effective at identifying intricate weed patterns, providing strong feature extraction capabilities.

The DINO13 architecture processes weed images through multiple stages. The initial stage involves extracting multi-scale features using either the Swin Transformer15 or ResNet-10114 backbone. The Swin Transformer15, with its innovative design of shifted windows, is particularly well-suited for capturing both fine-grained weed characteristics and broader contextual information, which is critical for distinguishing weeds in complex agricultural environments. Alternatively, ResNet-10114 provides a powerful residual learning mechanism, allowing it to learn and extract intricate features of the weed species effectively, particularly when dealing with the visual complexity of weed patterns. Once the multi-scale features are extracted, they are enhanced with positional embeddings, which help the model maintain spatial awareness of the objects in the image. This spatial awareness is crucial for accurately localizing weeds, particularly in complex agricultural scenes where overlapping vegetation is common. The enhanced features are then passed through several encoder layers, which are responsible for further refining the feature representation. A unique aspect of DINO13 is its sophisticated query selection mechanism, which dynamically adapts to the complexity of the scene. This query selection allows the architecture to efficiently process images containing varying weed densities and distributions, adapting its attention to areas of interest.
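DINO's query selection can be sketched as picking the top-scoring encoder tokens and using their predicted boxes as initial anchors. This is a simplified illustration of DINO's mixed query selection, in which only the positional part (anchor boxes) comes from the encoder while content queries stay learnable. The per-token score (for example, the maximum class probability) and the function name are assumptions.

```python
import heapq


def select_anchor_queries(token_scores, token_boxes, num_queries):
    """Pick initial anchor boxes from the top-scoring encoder tokens.

    token_scores[i] is a per-token confidence and token_boxes[i] the box
    predicted from that token. Only positions are returned: in mixed query
    selection the content queries remain learnable embeddings.
    """
    top = heapq.nlargest(num_queries, range(len(token_scores)),
                         key=token_scores.__getitem__)
    return [token_boxes[i] for i in top]
```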

The decoder portion of the DINO13 architecture uses a denoising training strategy with both content queries and base anchors. This denoising mechanism is beneficial for weed detection, as it enhances the model’s ability to distinguish between similar-looking weed species and handles cases of overlapping vegetation. During training, the model applies contrastive denoising (CDN) in the matching module: noised copies of the ground-truth boxes are used as positive samples (small perturbations, trained to reconstruct the original box) and negative samples (larger perturbations, trained to predict “no object”). This refines the model’s discriminative capabilities, improving its ability to distinguish weed classes and reducing false positives. Finally, the classification and box-regression heads produce the refined predictions for weed instances; these heads are configured for our 174-class weed detection task and incorporate class-specific features learned during training. The result is a robust model that provides accurate and interpretable detection results, which are essential for agricultural applications where reliability and precision matter.
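Contrastive denoising can be sketched as generating two noised copies of each ground-truth box. The positive copy has small noise and should be reconstructed to the original box. The negative copy has larger noise and should be classified as "no object". This is a simplified illustration under stated assumptions. The noise bounds `lambda1 = 0.4` and `lambda2 = 0.8` are hypothetical values. Center shifts are measured in half-widths and half-heights, and size changes in full widths and heights.

```python
import random


def cdn_queries(gt_box, lambda1=0.4, lambda2=0.8, rng=None):
    """Return one positive and one negative noised copy of a (cx, cy, w, h) box.

    Positive noise magnitudes lie between 0 and lambda1; negative ones between
    lambda1 and lambda2, so negatives are always farther from the ground truth.
    """
    rng = rng or random.Random()
    cx, cy, w, h = gt_box

    def signed(lo, hi):
        # Random magnitude between lo and hi with a random sign.
        return rng.choice((-1.0, 1.0)) * rng.uniform(lo, hi)

    def noised(lo, hi):
        return (cx + signed(lo, hi) * w / 2,
                cy + signed(lo, hi) * h / 2,
                w * (1 + signed(lo, hi)),
                h * (1 + signed(lo, hi)))

    positive = noised(0.0, lambda1)      # trained to reconstruct gt_box
    negative = noised(lambda1, lambda2)  # trained to predict "no object"
    return positive, negative
```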

The overall architecture starts with input weed images, which are processed to extract multi-scale features. These features are fed into an encoder consisting of multiple layers, enhanced by positional embeddings. The encoder output is then refined through a query selection mechanism, leading to the decoder layers. The decoder is responsible for further processing using both content queries and base anchors, facilitating accurate localization and classification of weeds. The matching module, equipped with contrastive denoising training, distinguishes between positive and negative instances, enhancing the model’s ability to identify subtle inter-species differences. The output layer provides the final classification and detection results for each weed instance.

WeedSwin - proposed model

While existing architectures like Swin Transformer15 and DINO13 demonstrate strong performance in general object detection, they face significant limitations in agricultural weed detection. Standard Swin Transformer’s fixed window partitioning struggles with the highly variable scale characteristics of weeds across growth stages, while DINO’s query selection mechanism fails to capture the subtle morphological differences between similar plant species. To address these domain-specific challenges, we propose WeedSwin, an enhanced Swin-based architecture optimized for weed detection and classification tasks. WeedSwin introduces four key architectural components specifically designed for agricultural applications: (1) an Enhanced Backbone with Progressive Attention Heads (6\(\rightarrow\)12\(\rightarrow\)24\(\rightarrow\)48) that adapt to the large scale variations between seedling and mature weeds, providing the multi-scale feature representation needed to detect plants at different growth stages; (2) a Specialized Feature Enhancement Neck utilizing a Channel Mapper with ReLU activation that preserves fine-grained morphological details essential for differentiating visually similar weed species; (3) a Modified Encoder with 8 layers (versus 6 in standard implementations) and 16 attention heads, enabling more comprehensive feature extraction from complex agricultural scenes; and (4) an Enhanced Decoder with 8 layers and optimized cross-attention mechanisms that better capture contextual relationships in densely vegetated environments. Together, these modifications improve the model’s capacity to detect and classify weeds in diverse agricultural environments.

We employed the backbone with optimized parameters, defined as \(E_b = \text {SwinT}(d_{\text {model}}=192, d_{\text {heads}}=[6,12,24,48], w_{\text {size}}=12)\), where \(d_{\text {model}}\) represents the initial embedding dimension and \(d_{\text {heads}}\) represents the progressive scaling of attention heads across the four backbone stages. For feature enhancement, we introduced a Channel Mapper with enhanced feature processing, expressed as \(F_{\text {out}} = \sigma (\text {BN}(W \cdot F_{\text {in}} + b))\), where \(F_{\text {out}}\) is the output feature map, \(F_{\text {in}}\) is the input feature map, W represents learnable weights, BN denotes Batch Normalization, and \(\sigma\) is the ReLU activation function.
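The Channel Mapper expression \(F_{\text {out}} = \sigma (\text {BN}(W \cdot F_{\text {in}} + b))\) can be sketched for a single spatial position. Applying the same projection at every position is equivalent to a 1×1 convolution. This is a dependency-free illustration that assumes inference-mode batch normalization with running statistics. The function and argument names are hypothetical.

```python
import math


def channel_mapper(f_in, weight, bias, bn_mean, bn_var, bn_gamma, bn_beta, eps=1e-5):
    """F_out = ReLU(BN(W . F_in + b)) for one spatial position.

    f_in has C_in values; weight is a C_out x C_in matrix; BN uses running
    statistics (inference mode).
    """
    out = []
    for k, row in enumerate(weight):
        z = sum(w * x for w, x in zip(row, f_in)) + bias[k]                            # linear projection
        z = bn_gamma[k] * (z - bn_mean[k]) / math.sqrt(bn_var[k] + eps) + bn_beta[k]  # batch norm
        out.append(max(0.0, z))                                                        # ReLU
    return out
```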

Our architecture deepens both the encoder and decoder to 8 layers (\(L=8\)) with enhanced multi-head attention, implementing 16 attention heads with embedding dimension \(d=384\), resulting in \(d_{\text {head}}=384/16=24\) per attention head. The multi-head attention mechanism is defined as:

\[ \text {MultiHead}(Q, K, V) = \text {Concat}(\text {head}_1, \ldots , \text {head}_{16})\, W^O \]

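where each head is computed as: \(\text {head}_i = \text {Attention}(QW_i^Q, KW_i^K, VW_i^V)\). The enhanced decoder implements an 8-layer structure with an improved cross-attention mechanism, defined as:

\[ \text {CrossAttn}(q, k, v) = \text {softmax}\left (\frac{q k^{\top }}{\sqrt{d_k}}\right ) v \]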

where q represents queries, k represents keys, and v represents values, with \(d_k=384\). The enhanced feature extraction is achieved through our modified Channel Mapper:

\[ F_{\text {out}} = \sigma (\text {BN}(W \cdot F_{\text {in}} + b)) \]

We balanced the model’s capacity through the relationship \(d_{\text {head}} = d / n_{\text {heads}} = 384/16 = 24\), ensuring efficient attention computation while maintaining representational power. The architecture is particularly effective for weed detection because of its multi-scale feature processing, with progressive attention heads (6\(\rightarrow\)12\(\rightarrow\)24\(\rightarrow\)48) enabling effective processing of weeds at various scales. The increased embedding dimension and depth provide richer feature representation, with a capacity gain of \(\text {Capacity}_{\text {gain}} = (384/256) \times (8/6) = 2.0\). The deeper architecture facilitates better information propagation with a receptive field of \(\text {Receptive}_{\text {field}} = \text {base}_{\text {size}} \times 2^{(L-1)}\), where \(L=8\) provides broader context capture than the original \(L=6\) (a factor of \(2^{7}/2^{5} = 4\)).
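Multi-head scaled dot-product attention can be sketched in plain Python. The model dimension is split into heads (384/16 = 24 channels each in WeedSwin), each head attends independently, and the head outputs are concatenated. This is an illustration under simplifying assumptions. The learned projections \(W_i^Q, W_i^K, W_i^V\) and \(W^O\) are taken as identity. Each head is scaled by its own dimension, the standard Transformer choice, and `d_k` can be changed through the `attention` argument.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v, d_k):
    """softmax(q k^T / sqrt(d_k)) v for lists of row vectors."""
    out = []
    for qi in q:
        weights = softmax([sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k])
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


def multi_head(q, k, v, num_heads):
    """Split channels into heads, attend per head, concatenate (identity projections)."""
    d_model = len(q[0])
    assert d_model % num_heads == 0
    d_head = d_model // num_heads
    heads = []
    for h in range(num_heads):
        sl = slice(h * d_head, (h + 1) * d_head)
        heads.append(attention([r[sl] for r in q], [r[sl] for r in k], [r[sl] for r in v], d_head))
    # Concatenate head outputs along the feature axis for each query.
    return [sum((head[i] for head in heads), []) for i in range(len(q))]
```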

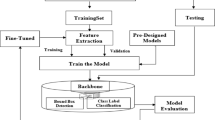

Figure 4 shows the proposed WeedSwin architecture, a novel transformer-based framework for weed detection and classification. The architecture consists of three main components: an encoder, a decoder, and a Feature Pyramid Network (FPN) for detection. The encoder pathway employs a hierarchical Swin Transformer structure with four progressive stages, where the attention heads increase from 6 to 48. Each stage operates at different spatial resolutions (from H/4\(\times\)W/4 to H/32\(\times\)W/32) with a consistent embedding dimension of 384 and window size of 12. The stages contain varying numbers of Transformer blocks (\(\times\)2, \(\times\)2, \(\times\)6, \(\times\)2 respectively). A Channel Mapper module with fully connected layers and ReLU activation bridges the encoder and decoder, enhancing feature transformation. The decoder comprises eight blocks (D1-D3 shown) with cross-attention mechanisms and skip connections from corresponding encoder stages, progressively recovering spatial details. The detection head utilizes a Feature Pyramid Network structure with dual branches: a Classification Branch predicting weed class and growth stages, and a Bounding Box Regression Branch estimating object coordinates. These branches process the feature map through parallel pathways, combining their outputs via multiplication and FC layers to generate the final feature vector.

While our model introduces additional parameters, we maintained efficiency through an optimized distribution of attention heads, balanced embedding dimensions, and lightweight feature enhancement in the neck. The theoretical computational complexity remains \(O(N \times d_{\text {model}} + N^2 \times d_{\text {head}})\), where N is the sequence length, which keeps the model practical for real-world agricultural applications.

Algorithm 1 presents our WeedSwin training process, an enhanced Swin architecture optimized for weed detection. Our key modifications include an enhanced encoder and decoder, each featuring 8 layers and 16 attention heads with 0.2 dropout for better feature transformation. During training, it processes images through progressive feature extraction ([6, 12, 24, 48] heads), enhanced feature mapping, and our modified attention mechanisms. The model optimizes a combined loss function incorporating classification, bounding box, and GIoU losses. The training process includes regular validation checks, model checkpointing, and learning rate scheduling, concluding with Non-Maximum Suppression for final predictions.
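The final Non-Maximum Suppression step can be sketched as greedy suppression. The highest-scoring box is kept, remaining boxes overlapping it above the IoU threshold are dropped, and the process repeats. The defaults mirror the values quoted earlier for RetinaNet (score 0.05, IoU 0.5, at most 100 detections). This sketch is class-agnostic, so in a multi-class detector it would be applied per class, and the thresholds actually used for WeedSwin may differ.

```python
def iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5, score_threshold=0.05, max_detections=100):
    """Greedy NMS returning indices of kept boxes in descending score order."""
    order = sorted((i for i, s in enumerate(scores) if s >= score_threshold),
                   key=lambda i: scores[i], reverse=True)
    keep = []
    while order and len(keep) < max_detections:
        best = order.pop(0)
        keep.append(best)
        # Drop remaining boxes that overlap the kept box too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep
```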

Evaluation metrics

To evaluate our weed detection model’s performance comprehensively, we employ multiple metrics: Average Precision (AP), Average Recall (AR), Mean Average Precision (mAP), and Frames Per Second (FPS). These metrics collectively assess both accuracy and computational efficiency.

The fundamental components of our evaluation are Precision (P) and Recall (R), defined as \(P = \frac{TP}{TP + FP}\) and \(R = \frac{TP}{TP + FN}\), where a TP (true positive) is a detected bounding box that correctly identifies a weed species and has an IoU above a specified threshold (e.g., 0.50) with the ground truth bounding box. A FP (false positive) is a detection that either does not sufficiently overlap with any ground truth box or incorrectly identifies the weed species. A FN (false negative) occurs when a ground truth weed instance is not detected by the model.
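The TP/FP/FN definitions can be made concrete for a single image. In this sketch, detections are processed in descending score order, and each one is matched to the best-overlapping unmatched ground truth of the same class with IoU at or above the threshold. Each ground truth can be matched at most once, so duplicate detections count as false positives. Matching rules in specific evaluation toolkits differ in details such as crowd regions and tie-breaking, so this is an illustrative version only.

```python
def iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(detections, ground_truths, iou_threshold=0.5):
    """detections: (score, class_id, box); ground_truths: (class_id, box)."""
    matched = set()
    tp = fp = 0
    for _, cls, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_j, best_iou = None, iou_threshold
        for j, (gt_cls, gt_box) in enumerate(ground_truths):
            if j in matched or gt_cls != cls:
                continue
            overlap = iou(box, gt_box)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j is None:
            fp += 1  # wrong class, too little overlap, or duplicate
        else:
            tp += 1
            matched.add(best_j)
    fn = len(ground_truths) - len(matched)  # ground truths never detected
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return tp, fp, fn, precision, recall
```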

Average Precision (AP) provides a comprehensive view of detection performance by integrating precision over recall. It summarizes the precision-recall curve65 into a single value, capturing the model’s ability to make accurate detections across different confidence thresholds. The AP is calculated as:

\[ AP = \int _{0}^{1} P(R)\, dR \]

where P(R) represents the precision value at each recall level. This integration over the entire recall range [0,1] ensures that both high precision and high recall are rewarded, providing a balanced measure of detection quality.
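In practice the integral is approximated from discrete precision/recall points. The sketch below uses all-point interpolation as in PASCAL VOC (2010 and later). Precision is first replaced by its running maximum from the right, so the curve is non-increasing, and the rectangles under it are then summed. COCO-style evaluation instead samples 101 recall points, so reported values can differ slightly.

```python
def average_precision(recalls, precisions):
    """Area under the interpolated precision-recall curve.

    `recalls` must be non-decreasing (detections sorted by confidence).
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    for i in range(len(p) - 2, -1, -1):  # precision envelope, right to left
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))
```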

Average Recall (AR) quantifies the model’s detection coverage across various IoU thresholds. This metric is particularly important for assessing the model’s ability to detect weeds under different overlap criteria, making it valuable for understanding detection robustness. AR is computed as:

\[ AR = \frac{1}{N} \sum _{i=1}^{N} R_{\text {max}}(IoU_i) \]

where N is the number of IoU thresholds considered, and \(R_{\text {max}}(IoU_i)\) represents the maximum recall achieved at each IoU threshold. This averaging across multiple IoU thresholds provides insight into the model’s detection stability under varying overlap requirements.
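A minimal sketch of the AR average follows. It assumes the ten COCO thresholds 0.50, 0.55, ..., 0.95, consistent with the 0.5:0.95 range used in this evaluation. `max_recall_at` is a hypothetical callable returning \(R_{\text {max}}\) at a given IoU threshold.

```python
COCO_IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]  # 0.50 ... 0.95


def average_recall(max_recall_at, thresholds=COCO_IOU_THRESHOLDS):
    """Mean of the maximum recall reached at each IoU threshold."""
    return sum(max_recall_at(t) for t in thresholds) / len(thresholds)
```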

Mean Average Precision (mAP) evaluates the model’s performance across all weed classes, providing a comprehensive metric for multi-class detection scenarios. This metric is particularly crucial in weed detection as it accounts for the varying difficulties in detecting different weed species and growth stages. The mAP is calculated as66:

\[ mAP = \frac{1}{C} \sum _{c=1}^{C} AP_c \]

where C represents the total number of weed classes, and \(AP_c\) is the Average Precision for each class. This averaging across classes ensures that the model’s performance is evaluated fairly across all weed types, regardless of their representation in the dataset. We also measure computational efficiency using Frames Per Second (FPS):

\[ FPS = \frac{N_{\text {frames}}}{T_{\text {processing}}} \]

where \(N_{\text {frames}}\) is the number of processed frames and \(T_{\text {processing}}\) is the total processing time in seconds. FPS is crucial for assessing real-time detection capability, which matters for practical field applications where rapid weed identification is essential. The accuracy metrics are evaluated across IoU thresholds from 0.5 to 0.95 (0.5:0.95), giving a comprehensive assessment of detection and classification accuracy alongside the operational efficiency captured by FPS.
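A minimal timing sketch for the FPS formula is shown below. `infer` is a hypothetical per-frame inference callable. A few warm-up frames are excluded from timing, which is a common practice and an assumption here. When timing GPU inference, the device would also need to be synchronized (for example with `torch.cuda.synchronize()`) before reading the clock.

```python
import time


def measure_fps(infer, frames, warmup=10):
    """FPS = N_frames / T_processing, excluding warm-up runs from the timing."""
    for frame in frames[:warmup]:
        infer(frame)  # warm-up: caches, lazy initialization
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed
```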

Experimental result

In this research, we implemented several state-of-the-art algorithms, including DINO13 with ResNet14 and Swin15 backbones, DETR16, EfficientNet B417, YOLO v818, RetinaNet19, and WeedSwin (our custom architecture). DETR16 and RetinaNet19 were the only models applied to our AWD dataset, while all the algorithms were evaluated on the BWD dataset. The evaluation covers both training and test sets, with a detailed analysis across 16 weed species. We report the evaluation metrics at different IoU thresholds and detection limits, including mAP and mAR, and we compare the inference speed (FPS) of the models to provide a comprehensive view of their performance.

Object detection result on AWD dataset

Table 4 presents a comparative analysis of DETR16 and RetinaNet19 models evaluated on the AWD dataset52. The performance metrics include mAP and mAR for both training and test sets, along with inference speed measured in FPS. RetinaNet19 demonstrates superior performance across all evaluation metrics. In terms of accuracy, RetinaNet achieves higher mAP scores of 0.907 and 0.904 on training and test sets respectively, compared to DETR’s 0.854 and 0.840. This pattern continues in the recall metrics, where RetinaNet approaches near-perfect scores with mAR values of 0.997 for training and 0.989 for testing, while DETR achieves 0.941 and 0.936 respectively. Most notably, RetinaNet exhibits substantially faster inference speed at 347.22 FPS, which is more than five times faster than DETR’s 65.43 FPS.

Table 5 presents a detailed species-wise performance analysis, showing averaged results across 11 weeks for 16 weed species52. RetinaNet19 exhibits more consistent performance across species, with notable achievements for AMATU (mAP 0.832) and AMAPA (mAP 0.877), though showing reduced effectiveness with ECHCG (mAP 0.566). DETR16 displays varying performance levels, performing strongly with AMBEL (mAP 0.817) and SIDSP (mAP 0.771), but struggling with CHEAL (mAP 0.503) and SORHA (mAP 0.527). RetinaNet maintains higher recall scores across species, while both models show expected performance decreases as IoU thresholds increase from 0.5 to 0.75.

Figure 5 demonstrates the detection performance of DETR16 and RetinaNet19 models across three distinct weed species in the AWD dataset52. The figure presents a comparative analysis through original images, ground truth annotations, and model predictions. In the first row, both models detected CHEAL at week 7, albeit with low confidence scores and imperfect bounding boxes. These imperfections can be attributed to labeling inconsistencies in the AWD dataset, which prompted the creation of our more accurately labeled BWD dataset. The second row shows AMBEL at week 7, where both models exhibited strong detection performance, with RetinaNet19 achieving a higher confidence score (99.3%) than DETR (89.6%). Similarly, the third row, featuring DIGSA at week 5, demonstrates excellent detection by both models, with confidence scores exceeding 90%. Across all three species, RetinaNet consistently produced higher confidence scores, reaching 99.9% for DIGSA.

Comparison of object detection results for CHEAL, AMBEL, and DIGSA using DETR16 and RetinaNet19 models for AWD dataset. Row 1 displays predictions for CHEAL, Row 2 displays predictions for AMBEL, and Row 3 displays predictions for DIGSA, with ground truth and model confidence scores indicated for each detection.

Object detection result on BWD dataset

Table 6 presents a comprehensive performance comparison of various state-of-the-art object detection models evaluated on the BWD dataset. The comparison encompasses seven different model configurations, each characterized by their specific backbone architectures, and evaluated using mAP, mAR on both training and test sets, along with their inference speed (FPS), computational complexity (FLOPs), and model size (Parameters).

The results demonstrate that while DINO13 with a Swin15 backbone achieves marginally higher mAP scores (0.993 training, 0.994 test), our proposed WeedSwin architecture exhibits comparable accuracy (0.992 training, 0.993 test) and superior recall (0.985 training and test mAR) while offering significant advantages in processing speed. To assess the statistical robustness of WeedSwin, we conducted three independent runs with identical configurations, yielding a mean mAP of 0.993 with a standard deviation of 0.0015. The corresponding 95% confidence interval is 0.993 ± 0.004 (0.989–0.997). This narrow interval indicates that WeedSwin’s performance is consistent across initializations rather than attributable to chance or a favorable initialization, and the minimal variability between runs supports the architecture’s stability in maintaining high detection accuracy across diverse weed species and growth stages. WeedSwin achieves 218.27 FPS, approximately 43% faster than DINO-Swin’s 152.62 FPS. Additionally, WeedSwin requires fewer computational resources (0.114T FLOPs vs 0.241T for DINO-Swin) and has a substantially smaller parameter count (40.476M vs 209M).
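The reported interval is consistent with a Student-t interval over three runs, assuming a t-based interval (the method is not stated): \(4.303 \times 0.0015/\sqrt{3} \approx 0.0037\), which rounds to ± 0.004. The sketch below computes this interval. Because the per-run scores are not given in the text, the test uses three illustrative values chosen only to reproduce the reported mean and standard deviation.

```python
import math
import statistics

# Two-sided 95% Student-t critical values, keyed by degrees of freedom.
T_CRIT_95 = {2: 4.303, 3: 3.182, 4: 2.776}


def mean_ci95(values):
    """Mean and half-width of a 95% t-based confidence interval."""
    n = len(values)
    mean = statistics.mean(values)
    sem = statistics.stdev(values) / math.sqrt(n)  # sample std / sqrt(n)
    return mean, T_CRIT_95[n - 1] * sem
```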

When examining the broader landscape of models, RetinaNet19 with a ResNeXt-10158 backbone and EfficientNet B417 with a ResNet-5014 backbone achieve higher FPS (504.36 and 490.65, respectively) but lower accuracy (0.982 and 0.989 test mAP, respectively) and recall (0.947 and 0.967 test mAR, respectively). YOLO v818 with a CSPNet67 backbone performs strongly, with 0.990 test mAP and 0.971 test mAR at 422.48 FPS and modest computational requirements (100.15G FLOPs and 36.12M parameters). DETR16 with a ResNet-5014 backbone shows competitive accuracy (0.984 test mAP) and high recall (0.985 test mAR) but operates at a significantly lower speed of 80.65 FPS. DINO13 with a ResNet-10114 backbone presents balanced performance (0.992 test mAP, 0.979 test mAR, 120.67 FPS) but falls short of WeedSwin’s speed and recall.

WeedSwin’s performance is particularly noteworthy because it balances accuracy, recall, and computational efficiency. The marginal difference in mAP (0.001 lower than DINO-Swin) is negligible in practical applications, while its superior mAR, substantially reduced computational requirements, and higher processing speed represent significant advantages for real-time weed detection systems.

Table 7 presents an evaluation of seven state-of-the-art object detection architectures on the BWD dataset. Each model is evaluated on 16 weed species using four metrics: mAP, mAP at a 50% IoU threshold (mAP_50), mAP at a 75% IoU threshold (mAP_75) and Average Recall (AvgRec). The proposed WeedSwin model performs strongly across the board, consistently achieving high scores on all metrics. For most species, it achieves perfect or near-perfect mAP_50 scores (up to 1.000) and notably high mAP_75 values. This indicates strong detection capability even at the stricter IoU threshold. The performance is particularly impressive for challenging species like AMBEL. For AMBEL, WeedSwin achieves 0.994 mAP, 1.000 mAP_50, 1.000 mAP_75 and 1.000 AvgRec, surpassing all other models.

Looking at the individual models, RetinaNet [19] with a ResNeXt-101 [58] backbone shows a strong baseline, with mAP values above 0.900 for most species. Its best result is for SIDSP, at 0.969 mAP. EfficientNet-B4 [17] performs slightly better than RetinaNet in several cases, especially for AMBEL (0.981 mAP) and SIDSP (0.989 mAP). This suggests that its architecture may be better suited to certain weed morphologies. DETR [16] performs more modestly than the other models but still maintains respectable scores. It struggles most with species like AMATU, where it achieves 0.899 mAP, well below WeedSwin's 0.975. Even so, DETR keeps Average Recall above 0.989 for most species. This means it detects most weed instances even though its mAP is lower.
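To illustrate why mAP_75 is stricter than mAP_50, the sketch below computes the intersection-over-union (IoU) of one hypothetical ground-truth box and one hypothetical prediction. A match counts as a true positive only if its IoU meets the threshold. The sketch also lists the IoU thresholds averaged by COCO-style mAP. That the paper's headline mAP follows the COCO 0.50:0.95 convention is an assumption; the text does not define it.

```python
def iou(box_a, box_b):
    """Intersection-over-union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical boxes in pixels, for illustration only.
gt = (0.0, 0.0, 100.0, 100.0)
pred = (0.0, 0.0, 100.0, 70.0)  # prediction misses the bottom 30% of the plant

overlap = iou(gt, pred)
print(f"IoU = {overlap:.2f}")
for thr in (0.50, 0.75):
    print(f"true positive at IoU >= {thr:.2f}: {overlap >= thr}")

# COCO-style mAP averages AP over IoU thresholds 0.50, 0.55, ..., 0.95 (assumed convention).
coco_thresholds = [round(0.50 + 0.05 * i, 2) for i in range(10)]
print(coco_thresholds)
```

Here the IoU is 0.70, so the same prediction counts as correct for mAP_50 but as a miss for mAP_75. This is why high mAP_75 scores point to tighter bounding boxes.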

DINO [13] with a ResNet-50 [14] backbone improves consistently over DETR on all metrics, making it a strong competitor to RetinaNet [19] and EfficientNet-B4 [17]. It performs particularly well for SIDSP and AMBEL, reaching mAP scores of 0.990 for both. In contrast, DINO with a Swin [15] backbone performs substantially worse than its ResNet-50 counterpart, with mAP values of roughly 0.7-0.8 for most species. This large gap suggests that the combination of the DINO architecture with a Swin Transformer backbone may not be optimally configured for this weed detection task. YOLO v8 [18] with a CSPNet [67] backbone performs strongly, with mAP scores comparable to DINO with ResNet-50 and often higher than EfficientNet-B4. For SIDSP, it reaches 0.991 mAP, showing that a real-time detector does not have to sacrifice accuracy. Its consistent performance across all species, with most mAP scores above 0.950, highlights the robustness of its architecture for weed detection.

The most challenging species across all models appear to be SORHA and SORVU. Even the best-performing WeedSwin model achieves relatively lower scores for them (0.932 and 0.959 mAP, respectively). This consistent pattern suggests inherent difficulties in detecting these species. Both belong to the same genus (Sorghum) and are closely related, so they share similar morphological characteristics and growth patterns. The performance gap is particularly pronounced for DINO with a Swin backbone. It achieves only 0.710 and 0.722 mAP for these species, respectively, with a notably low AvgRec of 0.894 for SORVU. In terms of Average Recall, most models perform exceptionally well (above 0.990 for many species), indicating strong detection capabilities. However, WeedSwin stands out for its higher precision at stricter IoU thresholds, as shown by its consistently higher mAP_75 scores. This suggests better localization accuracy and tighter bounding boxes. Such precision is crucial for targeted treatment in practical agricultural applications. YOLO v8 also shows strong recall while maintaining competitive precision, which makes it a potentially balanced option for real-world deployment.

Table 8 presents the performance analysis of six economically significant weed species that are particularly problematic in US agriculture: AMAPA (Palmer amaranth), AMBEL (common ragweed), DIGSA (large crabgrass), SETFA (giant foxtail), CHEAL (common lambsquarters) and AMATU (waterhemp). These species, selected from the 16 weed species in our dataset, are commonly referred to as "driver weeds" because they play a critical role in shaping agricultural management decisions across the United States. They were selected for detailed analysis based on three key factors: their extensive geographic distribution throughout US farming regions, their documented resistance to multiple herbicides and their substantial negative impact on crop yields. These characteristics make them particularly challenging for farmers and agricultural managers and call for precise detection and management strategies.

Comparison of object detection results for ABUTH and ERICA using the DINO-ResNet-101, EfficientNet B4, DINO-Swin and WeedSwin models on the BWD dataset. Row 1 shows the original image, the ground truth and each model's predicted bounding boxes with confidence scores for ABUTH at Week 6. Row 2 shows the same for ERICA at Week 4.

Analysis of detection performance across growth stages (Weeks 1-11) reveals distinct patterns among the architectures. RetinaNet is highly accurate, achieving perfect detection (mAP = 1.000) in mature growth stages (Weeks 6-11) for most species. However, its early-stage performance varies, particularly for SETFA and CHEAL (mAP = 0.854 and 0.829, respectively, in Weeks 1-2). For AMATU, RetinaNet maintains consistently high performance (mAP > 0.95) even in early stages. This suggests that RetinaNet recognizes AMATU reliably before it matures. Our proposed WeedSwin architecture is more consistent across all growth stages and species. Its mAP stays consistently above 0.95 from Week 6 onward. Its performance is also stable during early growth stages (Weeks 1-3), where other models typically struggle. This stability is particularly evident for challenging species like DIGSA, SETFA and AMATU, for which competing models show considerable performance variations.

The YOLO v8 architecture performs strongly and consistently across all species and growth stages. For AMAPA and AMBEL, its mAP values are comparable to WeedSwin's (ranging from 0.947 to 0.998), with particularly strong performance in later growth stages. For DIGSA and SETFA, YOLO v8 maintains high accuracy (mAP consistently above 0.92 after Week 1), showing remarkable stability across the growth timeline. For CHEAL, its performance (mAP from 0.921 to 0.973) is slightly below WeedSwin's. Even so, it outperforms several other architectures, particularly in early growth stages. YOLO v8 also performs well on AMATU, achieving mAP values between 0.945 and 0.984 throughout the growth cycle of this challenging species.

In contrast, DETR's performance varies considerably across species. It struggles with early growth stages (Weeks 1-5) for most species, with mAP values frequently below 0.5. However, it achieves remarkably high accuracy for AMATU throughout all growth stages (mAP > 0.99), suggesting species-specific detection capabilities. The DINO-Swin (DinoXSwin) and DINO-ResNet-50 (DinoXResNet50) architectures show comparable performance patterns, with DINO-Swin keeping a slight edge in most scenarios. Both models perform worse on AMATU than on the other species, DINO-ResNet-50 especially (mAP as low as 0.782 in Week 5).

EfficientNet B4 displays inconsistent performance across different growth stages and species. While it occasionally achieves perfect detection, it shows significant fluctuations, particularly evident in SETFA detection during early weeks (mAP = 0.739 in Week 1) and DIGSA in later stages (mAP = 0.909 in Week 11). For AMATU, it demonstrates strong performance overall but with notable variability (dropping to mAP = 0.909 in Week 4).

RetinaNet achieves the highest peak accuracy, but WeedSwin and YOLO v8 are more consistent across growth stages and species. This consistency makes them particularly suitable for practical agricultural applications. WeedSwin keeps a slight edge in overall performance, especially for challenging species like AMATU. YOLO v8 offers competitive accuracy with the added benefit of high throughput. Stable performance regardless of plant maturity or species is a significant advantage for real-world weed management systems, which must detect weeds reliably throughout the growing season.
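"Consistency across growth stages" can be quantified with simple dispersion statistics over a model's per-week mAP for one species. The sketch below uses the minimum, the range and the population standard deviation, which is one possible choice. It is fed hypothetical toy series that are explicitly not values from Table 8. The function name `stage_stability` is introduced here for illustration.

```python
import statistics


def stage_stability(map_by_week: dict[int, float]) -> dict[str, float]:
    """Summarize how much per-week mAP varies across the growth cycle.

    A lower range and standard deviation mean more consistent detection
    regardless of plant maturity.
    """
    values = [map_by_week[week] for week in sorted(map_by_week)]
    return {
        "min": min(values),
        "range": max(values) - min(values),
        "stdev": statistics.pstdev(values),
    }


# Hypothetical per-week mAP series for one species (NOT values from Table 8).
steady_model = {1: 0.95, 2: 0.96, 3: 0.96, 4: 0.97, 5: 0.97}
fluctuating_model = {1: 0.74, 2: 0.90, 3: 1.00, 4: 0.91, 5: 1.00}

for name, series in [("steady", steady_model), ("fluctuating", fluctuating_model)]:
    stats = stage_stability(series)
    print(name, {k: round(v, 3) for k, v in stats.items()})
```

In the toy series, the fluctuating model reaches a higher peak (1.00) but has a far larger range (0.26 vs. 0.02). This mirrors the distinction drawn between peak accuracy and stability.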

Ablation study

To better understand the contribution of different architectural components and training strategies to the performance of our WeedSwin model, we conducted a comprehensive ablation study. We systematically modified key aspects of the original model to analyze their impact on detection accuracy, recall, and computational efficiency. Our ablation study examines three aspects: backbone architecture modifications, encoder-decoder enhancements, and training optimization strategies. Table 9 presents a quantitative comparison of these variants against the original WeedSwin model.

Lightweight backbone

In this experiment, we explored whether simplifying the backbone architecture improves computational efficiency while maintaining competitive performance. We reduced the stage depths of the model from [2, 2, 18, 4] to [2, 2, 9, 2], halving the number of blocks in the third and fourth stages. We also reduced the attention window size from 12 to 7 (measured in patch tokens) and decreased the drop path rate from 0.2 to 0.1. This configuration reduced FLOPs by about 25% (0.085T compared to the original 0.114T), with only a minimal reduction in parameters (40.12M vs. 40.48M). Detection performance decreased moderately (mAP: 0.969 vs. 0.993; mAR: 0.958 vs. 0.985). Even so, the model retained roughly 97% of the original mAP and mAR (97.6% and 97.3%, respectively) with significantly lower computational requirements. This makes it suitable for resource-constrained deployment scenarios.
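The backbone change can be summarized as a configuration diff. The sketch below records the baseline and lightweight settings in a small dataclass and recomputes the relative FLOPs reduction and retained accuracy from the figures quoted above. The class and field names (`SwinBackboneConfig`, `depths`, `window_size`, `drop_path_rate`) are hypothetical and do not reflect the authors' actual configuration keys.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SwinBackboneConfig:
    """Hypothetical container for the backbone settings varied in the ablation."""
    depths: tuple[int, int, int, int]  # number of Swin blocks per stage
    window_size: int                    # attention window, in patch tokens
    drop_path_rate: float               # stochastic-depth rate


BASELINE = SwinBackboneConfig(depths=(2, 2, 18, 4), window_size=12, drop_path_rate=0.2)
LIGHTWEIGHT = replace(BASELINE, depths=(2, 2, 9, 2), window_size=7, drop_path_rate=0.1)

# Reported compute and accuracy for the two variants.
flops_reduction = 1 - 0.085 / 0.114
map_retained = 0.969 / 0.993
mar_retained = 0.958 / 0.985

print(sum(BASELINE.depths), "->", sum(LIGHTWEIGHT.depths), "Swin blocks")
print(f"FLOPs reduction: {flops_reduction:.1%}")
print(f"mAP retained: {map_retained:.1%}, mAR retained: {mar_retained:.1%}")
```

The total block count drops from 26 to 15. The reported FLOPs fall by about 25.4%, while about 97.6% of mAP and 97.3% of mAR are retained.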

Enhanced encoder-decoder

Our second variant aimed to improve feature representation by enhancing the encoder-decoder architecture. We increased the number of feature levels from 4 to 5 so that the model could better capture the multi-scale features needed to detect objects of varying sizes. We also deepened the encoder and decoder from 4 to 6 layers each. To partially offset the added computation, we reduced the feedforward channel dimension from 2048 to 1024. This configuration achieved the highest detection recall among all variants (mAR: 0.996) while matching the original model's mAP (0.993). However, these improvements came at a significant computational cost. FLOPs more than doubled (0.250T), the parameter count rose substantially (269M), and inference speed dropped (80.60 FPS vs. 218.27 FPS). This variant shows what architectural enhancements can achieve when computational efficiency is not a primary concern.
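A rough sketch of why an extra feature level is expensive is to count the flattened feature-map positions (tokens) that the encoder must process. The example assumes that the fifth level is a stride-4 map added to stride-8/16/32/64 levels, as in common DINO 5-scale setups. It also assumes a hypothetical 800 × 1333 input. Neither detail is stated in the text.

```python
import math


def encoder_tokens(height: int, width: int, strides: tuple[int, ...]) -> int:
    """Total number of flattened feature-map positions across all levels."""
    return sum(math.ceil(height / s) * math.ceil(width / s) for s in strides)


H, W = 800, 1333  # hypothetical input resolution

four_levels = encoder_tokens(H, W, (8, 16, 32, 64))     # assumed 4-level strides
five_levels = encoder_tokens(H, W, (4, 8, 16, 32, 64))  # assumed extra stride-4 level

print(four_levels, five_levels, f"{five_levels / four_levels:.1f}x")
```

Under these assumptions, the encoder processes about four times as many tokens (89,023 vs. 22,223). The reported FLOPs rise by a smaller factor (0.250T vs. 0.114T), presumably because the backbone and decoder costs do not grow with the encoder token count in the same way.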

Optimized training

The third variant examined alternative training strategies without modifying the model architecture. We increased the base learning rate from 0.0001 to 0.0002 and replaced the stepped learning rate schedule with a cosine annealing schedule for a smoother decay. We also reduced the weight decay from 0.05 to 0.03. In the denoising training configuration, we lowered both the label noise scale (0.3 vs. 0.5) and the box noise scale (0.6 vs. 1.0). These changes were intended to improve convergence and reduce overfitting. However, detection accuracy decreased slightly compared to the original model (mAP: 0.982 vs. 0.993; mAR: 0.980 vs. 0.985). Despite the unchanged architecture, this variant showed computational characteristics similar to those of the Enhanced Encoder-Decoder variant. These hyperparameters affect only training, not the inference-time network. The similarity therefore more likely reflects implementation details of the evaluated configuration than the training optimizations themselves. This experiment highlights how sensitive transformer-based detectors are to training hyperparameters and why careful tuning matters for optimal performance.
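The difference between the two learning rate schedules can be seen by evaluating them per epoch. The sketch compares a stepped schedule at the original base rate (0.0001) with cosine annealing at the variant's base rate (0.0002). The 12-epoch length, the step milestones (epochs 8 and 11), the decay factor of 0.1 and the zero minimum rate are all hypothetical, since the text does not specify them.

```python
import math


def step_lr(epoch: int, base_lr: float, milestones=(8, 11), gamma: float = 0.1) -> float:
    """Stepped schedule: multiply by `gamma` once for every milestone epoch reached."""
    drops = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** drops


def cosine_lr(epoch: int, base_lr: float, total_epochs: int = 12, min_lr: float = 0.0) -> float:
    """Cosine annealing from `base_lr` at epoch 0 down to `min_lr` at `total_epochs`."""
    progress = min(epoch, total_epochs) / total_epochs
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))


# Original (stepped, 1e-4) vs. optimized variant (cosine, 2e-4), hypothetical 12 epochs.
for epoch in range(13):
    print(epoch, f"{step_lr(epoch, 1e-4):.2e}", f"{cosine_lr(epoch, 2e-4):.2e}")
```

The cosine schedule decays the rate gradually rather than in abrupt drops. Because it starts from twice the base rate, however, it keeps the rate higher for most of training than the stepped schedule does.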

Discussion

This study presents a comprehensive evaluation of state-of-the-art object detection algorithms for agricultural weed detection using two extensive datasets, the Alpha Weed Dataset (AWD) and the Beta Weed Dataset (BWD). The analysis covers 323,908 images spanning sixteen weed species and eleven growth stages, offering detailed insights into the performance characteristics of various detection architectures. Among the evaluated models, our proposed WeedSwin architecture consistently performs at or near the top. WeedSwin is built on a modified Swin Transformer-based encoder-decoder framework with additional specialized layers. It achieves a strong balance between detection accuracy, recall and inference speed. This addresses the critical requirements of precision agriculture, where both accuracy and real-time processing are essential. These findings underscore the importance of robust and efficient weed detection models in applications where precise identification and rapid response can significantly affect crop management outcomes. The results validate the effectiveness of our proposed approach. They also clarify the relative strengths and limitations of current object detection methods in agricultural contexts.