Abstract

The primary goal of this research is to apply bagging-based regression techniques to forecast the solubility of raloxifene and the density of carbon dioxide (CO₂). Bagging regression models were utilized, namely Bagging Bayesian Ridge Regression (BAG-BRR), Bagging Linear Regression (BAG-LR), and Bagging Polynomial Regression (BAG-PR). The hyperparameters of these models were tuned using the Tree-Based Parzen Estimators algorithm to achieve optimal performance. The results demonstrate the efficacy of the bagging regression models in predicting both the CO2 density and the solubility of raloxifene. For the CO2 density prediction, BAG-BRR achieved a coefficient of determination (CoD/R2) of 0.83728, an RMSE of 6.0525E+01, and an AARD% of 1.16098E+01. BAG-LR attained a CoD of 0.85705, an RMSE of 5.8358E+01, and an AARD% of 1.11066E+01. BAG-PR exhibited superior performance with a CoD of 0.98559, an RMSE of 2.5934E+01, and an AARD% of 4.68598E+00. Similarly, for the solubility of raloxifene prediction, BAG-BRR achieved a CoD of 0.90615, an RMSE of 6.5797E−01, and an AARD% of 1.36868E+01. BAG-LR attained a CoD of 0.90002, an RMSE of 6.8669E−01, and an AARD% of 1.54778E+01. BAG-PR demonstrated outstanding performance with a CoD of 0.98565, an RMSE of 2.8158E−01, and an AARD% of 6.28460E+00. The findings highlight the potential of bagging regression models, particularly BAG-PR, for reliable and accurate predictions of CO2 density and the solubility of raloxifene.

Similar content being viewed by others

Introduction

Machine Learning (ML) has been recognized as a new framework for developing algorithms that enable computers to extract patterns from data and estimate the desired variables autonomously. The field covers a variety of methodologies and strategies, such as regression, classification, clustering, and deep learning, among other techniques1,2,3. The popularity of machine learning has increased significantly due to its capacity to extract significant patterns and insights from intricate datasets, thereby enabling progress in diverse domains4. Despite the growing adoption of machine learning in various scientific fields, its application to modeling solid state solubility and density in supercritical CO2 systems, particularly for pharmaceutical compounds like raloxifene, has been limited. Considering the green processing using supercritical solvents, it is of great importance to further develop this process via computational approaches5,6.

The present study employed bagging regression models, specifically Bagging Bayesian Ridge Regression (BAG-BRR), Bagging Linear Regression (BAG-LR), and Bagging Polynomial Regression (BAG-PR). For improving the models’ accuracy, the Tree-Based Parzen Estimators (TPE) algorithm was employed for hyperparameter tuning. The proposed method, TPE, is capable of dynamically exploring the hyperparameter space by adjusting the balance between exploration and exploitation. This allows for efficient and effective optimization of hyperparameters.

Bagging is an ensemble learning method that uses bootstrapping to generate multiple subsets of the raw dataset for analysis. This method is used to strengthen the precision and generality of machine learning models by reducing the variance and overfitting. The process involves randomly selecting points from the raw data with replacement to generate new subsets of data. The generated subsets are then applied to train multiple models, which are combined to make a final prediction. Bagging has been shown to be effective in a variety of applications, including classification, regression, and clustering. The approach involves training individual models using each subset, and subsequently aggregating the predictions of all models to obtain the final prediction. Bagging is a technique that aids in reducing overfitting, enhancing stability, and improving prediction accuracy by decreasing variance. Ensemble modeling is a widely used technique in which multiple base models, such as decision trees, are combined to create a more robust and accurate model.

The selection of bagging models, i.e., Bagging Bayesian Ridge Regression (BAG-BRR), Bagging Linear Regression (BAG-LR), and Bagging Polynomial Regression (BAG-PR) was driven by their ability to address the complexities inherent in correlation of the solubility of raloxifene7,8. Bagging, as an ensemble technique, reduces variance and mitigates overfitting by combining predictions from multiple models trained on bootstrapped subsets of the data. This is particularly advantageous for our dataset, which, although limited in size, exhibits nonlinear relationships between the input variables (temperature and pressure) and the target properties. Bayesian Ridge Regression incorporates regularization to handle potential multicollinearity and provides probabilistic predictions, while Linear Regression serves as a baseline for comparison. Polynomial Regression captures nonlinear patterns by introducing higher-degree terms, making it well-suited for modeling the intricate behavior of solubility and density under varying conditions. By leveraging these models within a bagging framework, we aim to enhance predictive accuracy and robustness, ensuring reliable performance across the dataset.

The Tree-Based Parzen Estimators (TPE) algorithm is a popular approach for optimizing hyperparameters in machine learning models. The methodology employed involves the utilization of both tree-based structures and Bayesian optimization to effectively navigate the hyperparameter search space. The TPE method adjusts the trade-off between exploration and exploitation through the use of an objective function model and a process of iteratively sampling favorable areas within the hyperparameter space. The algorithm in question has gained significant popularity for the purpose of optimizing the performance of models and enhancing the generalization abilities of machine learning models.

Data of drug solubility and solvent density

The dataset provided in this study contains values for temperature (T), pressure (P), solubility of raloxifene (y), and CO2 density. The dataset is sourced from9, and listed in Table 1. Figures 1 and 2 are scatter plots for the solubility of raloxifene and density for solvent at supercritical state. The x-axis indicates temperature (K), the y-axis represents pressure (bar), and the color of the markers represents the respective property (solubility or CO2 density). The dataset has been also used by Aldawsari et al.7 who developed ML models for correlation of raloxifene solubility in supercritical CO2.

Methodology

Tree-based Parzen estimators (TPE)

This algorithm is a robust optimization technique that is specifically designed to effectively explore the intricate and multi-dimensional hyperparameter space. Its intelligence and efficacy make it a valuable tool for optimization purposes. The paper proposes the use of kernel density estimation and a sequential model-based approach in TPE to effectively explore the search space for optimal hyperparameter configurations. The technique of Tree-structured Parzen Estimator (TPE) is a valuable tool for hyperparameter tuning in various academic and practical applications. It achieves this by dynamically adjusting the exploration and exploitation trade-off, thereby focusing on promising regions10,11.

The TPE algorithm follows a systematic workflow that intelligently explores the hyperparameter space12:

-

(a)

Initialization: TPE begins by specifying an initial prior probability distribution over the hyperparameters. This distribution represents the initial beliefs about the hyperparameter values before any observations are made.

-

(b)

Candidate Proposal: In each iteration, TPE generates a set of candidate hyperparameter configurations based on the current probability distribution. These proposals strike a careful balance between exploring unexplored regions and exploiting promising areas, seeking the optimal configuration.

-

(c)

Evaluation and Split: Each proposed configuration is evaluated using an appropriate evaluation metric on a validation set. The obtained performance measures are then used to divide the prior distribution into two distinct parts: one representing “good” configurations and the other representing “bad” configurations.

-

(d)

Probability Distribution Update: TPE updates the probability distributions for both the “good” and “bad” configurations based on the evaluation results. This update involves leveraging kernel density estimation, adjusting the densities of the hyperparameters to reflect the observed performance.

-

(e)

Iterative Refinement: Steps b to d are repeated for a predefined number of iterations or until a convergence criterion is satisfied. Throughout the iterations, TPE dynamically adjusts the exploration and exploitation trade-off, honing in on the most promising regions of the hyperparameter space.

For the initial prior probability distributions, the TPE algorithm utilized uniform distributions tailored to each model’s hyperparameters. In the Bagging Bayesian Ridge Regression (BAG-BRR) model, the regularization parameter λ was assigned a uniform distribution ranging from 0.001 to 10.0, while the hyperparameters a0 and b0 for the gamma prior were each uniformly distributed between 0.1 and 10.0. For the Bagging Linear Regression (BAG-LR) model, no hyperparameters required tuning, as it is a base model without additional parameters. In the Bagging Polynomial Regression (BAG-PR) model, the polynomial degree was given a uniform distribution over the integers4,5,13, and the interaction_only parameter followed a categorical distribution with options {True, False}. As for the search space defined for the hyperparameters tuned by TPE, it was designed to encompass plausible values to optimize model performance. For BAG-BRR, the search space included λ ∈ [0.001, 10.0], a0 ∈ [0.1, 10.0], and b0 ∈ [0.1, 10.0]. For BAG-PR, it covered the polynomial degree ∈ {1, 2, 3, 4, 5} and interaction_only ∈ {True, False}. The TPE algorithm iteratively sampled from these spaces, refining its focus to efficiently identify optimal hyperparameter configurations.

Bagging ensemble method for regression

Bagging Regression, also referred to as Bootstrap Aggregating, is an ensemble technique utilized to increase fitting precision and minimize variance by combining multiple regression models in its architecture. The methodology employed involves the creation of various subsets of the original dataset through bootstrapping. Subsequently, a regression model is fitted to each of these subsets. The ultimate forecast is acquired by consolidating the prognostications from singular models, commonly by means of averaging14,15.

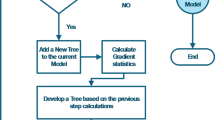

As shown in Fig. 3, the bagging process commences by creating numerous bootstrap samples from the primary training dataset. Bootstrap sampling is a statistical technique that entails the random selection of observations from a given dataset with replacement. This process generates subsets of the same size as the original dataset. The utilization of this resampling technique facilitates the generation of varied subsets, thereby enabling the training of each regression model on marginally distinct data.

In this study, a regression model is fitted independently for each bootstrap sample. Various regression algorithms can be utilized, including but not limited to linear regression, decision trees, and support vector regression. Bagging is a method that utilizes the training of multiple models using independent data sets. This approach leverages the diversity of the models to capture different patterns and relationships that are present in the dataset16.

Polynomial regression (PR)

Polynomial regression has been extensively applied in scientific data regression tasks due to its low computational cost, high interpretability, and well-known gradient17,18. Polynomial regression models the association between a dependent (y) and an independent variable (x) by fitting an nth-degree polynomial function to the data. The objective of polynomial regression is to estimate the conditional mean of y, expressed as E(y|x), which captures the nonlinear relationship.

Polynomial regression can be viewed as a linear statistical estimation problem despite the fact that it is utilized to fit a nonlinear model to the data. This is due to the fact that the inferred parameters can be expressed as a linear combination of the regression function E(y|x). Since multiple linear regression includes polynomial regression, the two are mutually exclusive18.

The equation for n-th order polynomial regression, with one independent variable, can be expressed in a general form as follows17:

here \(\widehat{y}\) represents the expected response variable, and × represents the independent variable.

By estimating the coefficients \({\upbeta }_{0},{\upbeta }_{1},\dots ,{\upbeta }_{n}\) through techniques such as ordinary least squares, the polynomial regression model can capture the nonlinear relationship between the independent variable × and the expected value of the dependent variable y.

Bayesian ridge regression (BRR)

BRR offers a powerful solution for regression analysis by combining the principles of ridge regression with Bayesian statistics. In this section, we provide a comprehensive overview of the Bayesian Ridge Regression model and detailing its mathematics for fitting the dataset used in this study7,19. BRR posits that the output y is expressed as a weighted sum of the input features X, accompanied by a noise component \(\upepsilon\). The mathematical representation of the model is given by20:

In this context, y represents an n-dimensional vector that corresponds to the response7.

To finalize the Bayesian formulation, several assumptions are made for both the regression weights \(\upbeta\) and the precision term \({\alpha }\)7. In BRR, the coefficients are modeled using independent normal distributions, while the precision parameter follows a gamma distribution21:

here \(\uplambda\) denotes the regularization term that governs how strongly the coefficients are pulled toward zero, while \({a}_{0}\) and \({b}_{0}\) serve as hyperparameters that define the shape and scale of the gamma distribution used as the prior.

Linear regression (LR)

The LR model is designed to identify a linear relationship between a response and one or more predictor variables, also known as independent variables. In this approach, the assumption of normality pertains to22:

here y stands for the outputs that need to be calculated, and x denotes the independent variable of the modeling. The LR is employed to minimize the sum of squared errors, aiming to decrease the model’s overall error23,24.

here yk denotes the output of the k-th instance25. The mean of \({y}_{k}\) over \(n\) points is represented by \({\overline{y}}_{k}\), and the predicted value for the k-th data is \(\widehat{{y}_{k}}\)26.

Performance metrics

To examine the predictive accuracy of the bagging models for the prediction of CO2 density and the solubility of raloxifene, several performance metrics were employed. These metrics offer numerical indicators of the model’s accuracy and precision.

-

1.

Coefficient of Determination (CoD/R2 Score): The R2 score, quantifies the extent to which the input variables can predict the variability in the target variable. It assesses the proportion of the target variable’s variance that can be explained by the provided input variables27:

$${R}^{2}=1-\frac{{\sum }_{i=1}^{n}{\left({y}_{i}-\widehat{{y}_{i}}\right)}^{2}}{{\sum }_{i=1}^{n}{\left({y}_{i}-\overline{y }\right)}^{2}}$$where \(({y}_{i})\) stands for the observed values, \((\widehat{{y}_{i}})\) denotes the predicted values, \((\overline{y })\) represents the mean of the observed values, and n the quantity of data points.

-

2.

Root mean squared error (RMSE): The RMSE is a widely employed metric for assessing the average magnitude of prediction discrepancies. It is computed as28:

$$RMSE = \sqrt {\frac{1}{n}\mathop \sum \limits_{i = 1}^{n} \left( {y_{i} - \widehat{{y_{i} }}} \right)^{2} }$$where \(({y}_{i})\) stands for the observed values, \((\widehat{{y}_{i}})\) shows the predicted values, and \(n\) indicates the total number of data points.

-

3.

Average Absolute Relative Difference (AARD%): The average absolute relative difference, represented as a percentage, quantifies the mean relative disparity between the projected and actual values. It is determined as an average of the relative differences between the observed and predicted values29.

$$AARD\% = \frac{1}{n}\mathop \sum \limits_{i = 1}^{n} \left| {\frac{{y_{i} - \widehat{{y_{i} }}}}{{y_{i} }}} \right| \times 100$$where \(({y}_{i})\) is the observed values, \((\widehat{{y}_{i}})\) stands for the predicted values, and \(n\) denotes the total quantity of data points.

Results and discussion

The implementation was carried out in Python, leveraging Scikit-learn to build bagging regression models—specifically Bagging Bayesian Ridge, Linear, and Polynomial Regression—for predicting raloxifene solubility and CO2 density. Hyperparameter optimization was carried out via the Tree-Based Parzen Estimators (TPE) algorithm from Hyperopt (likely the intended “bejoor”), while Matplotlib facilitated data visualization through scatter plots and NumPy handled numerical computations. While 20% is kept for testing, 80% of data points serve for training and validation. Table 2 summarizes the performance analysis and comparisons for the regression models in estimation of CO2 density from the dataset.

The BAG-PR model obtained an R2 of 0.98559 for fitting the data, confirming a strong correlation between the actual and estimated solvent density. Additionally, it recorded the lowest RMSE of 25.934, indicating a minimal average discrepancy between the predicted and observed values. The AARD% value of 4.68598E+00 suggests that, on average, the model predictions deviated by approximately 4.69% from the observed values30. Table 3 presents the performance analysis for the regression models in calculating the solubility of raloxifene.

Among the models, the BAG-PR model recorded the greatest R2 value of 0.98565, demonstrating good correlation between the estimated and observed solubility data. It also obtained the minimum RMSE value of 2.8158E−01, indicating a small average difference between the predicted and observed values. The AARD% value of 6.28460E+00 suggests that, on average, the model predictions deviated by approximately 6.28% from the observed solubility values.

Overall, the Bagging Polynomial Regression (BAG-PR) model revealed greater precision among the other models in predicting both CO2 density and raloxifene solubility, reporting the highest R2 and the lowest RMSE values. These results indicate the robustness of the BAG-PR model for accurately predicting the properties of interest in this study. Figures 4 and 5 are comparison of actual and predicted values and show the high performance of BAG-PR for both outputs. The representation of density and solubility as a two-variable function is shown in three dimensions in Figs. 6 and 7.

Figures 8 and 9 demonstrate the direct correlation between density and pressure, as well as the inverse correlation between density and temperature. Figures 10 and 11 demonstrate the correlation between the increase in solubility and the increase of both input parameters. Also, Figs. 12 and 13 represent the contour of solvent density and drug solubility, respectively. The variations in the density and solubility are in agreement with the previous study7.

Table 4 compares the performance of the proposed Bagging Polynomial Regression (BAG-PR) model for predicting raloxifene solubility in supercritical CO2 against existing methods from previous studies, evaluated using the Average Absolute Relative Difference (AARD%). The proposed method achieved an AARD% of 6.258, outperforming the stacked machine learning model from Najmi et al.31 with an AARD% of 8.62 and the machine learning approach by Bahrami et al.32 with an AARD% of 13.89. This comparison underscores the superior accuracy of the proposed BAG-PR model in this context.

Conclusion

We evaluated the solubility of raloxifene and CO2 density in a dataset consisting of temperature and pressure as the inputs. Our goal was to develop accurate predictive models for these properties using the Bagging Bayesian Ridge Regression (BAG-BRR), Bagging Linear Regression (BAG-LR), and Bagging Polynomial Regression (BAG-PR) algorithms, while employing the Tree-Based Parzen Estimators (TPE) algorithm for hyper-parameter tuning.

The results of our analysis demonstrate the effectiveness of the developed models in predicting solvent density and the solubility of medicine. For the prediction of CO2 density, all three models performed well, with R2 scores ranging from 0.83728 to 0.98559. Among them, the BAG-PR model achieved the highest R2 of 0.98559, showing excellent agreement. BAG-LR also indicated promising performance with an R2 of 0.85705. The root mean square error (RMSE) values ranged from 2.5934E+01 to 6.0525E+01, indicating relatively small deviations between the estimated and actual solvent density. Additionally, the average absolute relative difference (AARD%) ranged from 4.68598E+00% to 1.16098E+01%, further confirming the accuracy of the models.

Similarly, for the solubility of raloxifene, the BAG-BRR, BAG-LR, and BAG-PR models displayed commendable predictive performance. The R2 ranged from 0.90002 to 0.98565, representing a strong correlation. The BAG-PR model achieved the highest R2 score of 0.98565, demonstrating its superior ability to capture the underlying patterns in the data. The RMSE values ranged from 2.8158E−01 to 6.8669E−01, indicating small errors in the predicted solubility values. The AARD% ranged from 6.28460E+00% to 1.54778E+01%, reflecting the overall accuracy of the models in predicting the solubility of raloxifene.

Data availability

The datasets used and analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- AARD%:

-

Average Absolute Relative Difference percentage

- BAG-BRR:

-

Bagging Bayesian Ridge Regression

- BAG-LR:

-

Bagging Linear Regression

- BAG-PR:

-

Bagging Polynomial Regression

- CoD/R2 :

-

Coefficient of determination

- ML:

-

Machine learning

- RMSE:

-

Root mean squared error

- TPE:

-

Tree-Based Parzen Estimators

References

de Araújo, L. J. P., Özcan, E., Atkin, J. A. D., Baumers, M. & Drake, J. H. Machine learning-based algorithm selection for irregular three-dimensional packing in additive manufacturing. Expert Syst. Appl. 287, 127661. https://doi.org/10.1016/j.eswa.2025.127661 (2025).

Liang, Y. et al. Typical applications and perspectives of machine learning for advanced precision machining: A comprehensive review. Expert Syst. Appl. 283, 127770. https://doi.org/10.1016/j.eswa.2025.127770 (2025).

Wan, S., Wan, F. & Dai, X.-J. Machine learning approaches for cardiovascular disease prediction: A review. Arch. Cardiovasc. Dis. https://doi.org/10.1016/j.acvd.2025.04.055 (2025).

Alpaydin, E. Introduction to Machine Learning (MIT Press, 2020).

Alzhrani, R. M., Almalki, A. H., Alaqel, S. I. & Alshehri, S. Novel numerical simulation of drug solubility in supercritical CO2 using machine learning technique: Lenalidomide case study. Arab. J. Chem. 15, 104180. https://doi.org/10.1016/j.arabjc.2022.104180 (2022).

Li, M., Jiang, W., Zhao, S., Huang, K. & Liu, D. Employment of artificial intelligence approach for optimizing the solubility of drug in the supercritical CO2 system. Case Stud. Thermal Eng. 57, 104326. https://doi.org/10.1016/j.csite.2024.104326 (2024).

Aldawsari, M. F., Mahdi, W. A. & Alamoudi, J. A. Data-driven models and comparison for correlation of pharmaceutical solubility in supercritical solvent based on pressure and temperature as inputs. Case Stud. Thermal Eng. 49, 103236. https://doi.org/10.1016/j.csite.2023.103236 (2023).

Zhang, X. Employment of a machine learning-based modeling and simulation to perceive the connections between material properties and quality attributes in pharmaceuticals. Chin. J. Phys. https://doi.org/10.1016/j.cjph.2025.05.027 (2025).

Notej, B., Bagheri, H., Alsaikhan, F. & Hashemipour, H. Increasing solubility of phenytoin and raloxifene drugs: Application of supercritical CO2 technology. J. Mol. Liquids 373, 121246 (2023).

Bergstra, J., Bardenet, R., Bengio, Y. & Kégl, B. Algorithms for hyper-parameter optimization. In Advances in Neural Information Processing Systems 24 (2011).

Dong, H., He, D. & Wang, F. SMOTE-XGBoost using Tree Parzen Estimator optimization for copper flotation method classification. Powder Technol. 375, 174–181 (2020).

Yu, T. & Zhu, H. Hyper-parameter optimization: A review of algorithms and applications. arXiv preprint arXiv:2003.05689 (2020).

The Merck Index: An Encyclopedia of Chemicals, Drugs, and Biologicals, 14th ed. Edited by Maryadele J. O'Neil (Editor), Patricia E. Heckelman (Senior Associate Editor), Cherie B. Koch (Associate Editor), and Kristin J. Roman (Assistant Editor). Merck and Co., Inc.: Whitehouse Station, NJ. 2006. 2564 pp. ISBN 0-911910-00-X. J. Am. Chem. Soc. 129, 2197–2197, https://doi.org/10.1021/ja069838y (2007).

Breiman, L. Bagging predictors. Mach. Learn. 24, 123–140 (1996).

Breiman, L. Using iterated bagging to debias regressions. Mach. Learn. 45, 261–277 (2001).

Kotsiantis, S. B., Kanellopoulos, D. & Zaharakis, I. D. In Artificial Intelligence Applications and Innovations: 3rd IFIP Conference on Artificial Intelligence Applications and Innovations (AIAI) 2006, June 7–9, 2006, Athens, Greece 3. 53–60 (Springer).

Ostertagová, E. Modelling using polynomial regression. Procedia Eng. 48, 500–506 (2012).

Heiberger, R. M., Neuwirth, E., Heiberger, R. M. & Neuwirth, E. Polynomial regression. R Through Excel: A Spreadsheet Interface for Statistics, Data Analysis, and Graphics, 269–284 (2009).

Bishop, C. M. & Nasrabadi, N. M. Pattern Recognition and Machine Learning Vol. 4 (Springer, 2006).

Mostafa, S. M., Eladimy, S. A., Hamad, S. & Amano, H. CBRL and CBRC: Novel algorithms for improving missing value imputation accuracy based on Bayesian ridge regression. Symmetry 12, 1594 (2020).

Polson, N. G. & Scott, J. G. Shrink globally, act locally: Sparse Bayesian regularization and prediction. Bayesian Stat. 9, 105 (2010).

Abu-Mostafa, Y. S. Learning from data: a short course (2012).

Pombeiro, H., Santos, R., Carreira, P., Silva, C. & Sousa, J. M. Comparative assessment of low-complexity models to predict electricity consumption in an institutional building: Linear regression vs. fuzzy modeling vs. neural networks. Energy Build. 146, 141–151 (2017).

Kim, M. K., Kim, Y.-S. & Srebric, J. Predictions of electricity consumption in a campus building using occupant rates and weather elements with sensitivity analysis: Artificial neural network vs. linear regression. Sustain. Cities Soc. 62, 102385 (2020).

Faris Alotaibi, H. et al. Pharmaceutical nanonization by green supercritical processing: Investigation of Exemestane anti-estrogenic medicine solubility using machine learning. J. Mol. Liq. 392, 123353. https://doi.org/10.1016/j.molliq.2023.123353 (2023).

Song, H., Shao, H., Zhang, Y. & Wang, X. Advancing nanomedicine production via green method: Modeling and simulation of pharmaceutical solubility at different temperatures and pressures. J. Mol. Liq. 411, 125806. https://doi.org/10.1016/j.molliq.2024.125806 (2024).

Botchkarev, A. Evaluating performance of regression machine learning models using multiple error metrics in azure machine learning studio. Available at SSRN 3177507 (2018).

Willmott, C. J. & Matsuura, K. Advantages of the mean absolute error (MAE) over the root mean square error (RMSE) in assessing average model performance. Clim. Res. 30, 79–82 (2005).

Lee, B. K., Lessler, J. & Stuart, E. A. Improving propensity score weighting using machine learning. Stat. Med. 29, 337–346 (2010).

Ghazwani, M., Yasmin Begum, M., Naglah, A. M., Alkahtani, H. M. & Almehizia, A. A. Development of advanced model for understanding the behavior of drug solubility in green solvents: Machine learning modeling for small-molecule API solubility prediction. J. Mol. Liq. 386, 122446. https://doi.org/10.1016/j.molliq.2023.122446 (2023).

Najmi, M. et al. Estimating the dissolution of anticancer drugs in supercritical carbon dioxide with a stacked machine learning model. Pharmaceutics 14, 1632 (2022).

Bahrami, Z., Bashipour, F. & Baghban, A. Application of machine learning approach to estimate the solubility of some solid drugs in supercritical CO2. Sci. Rep. 15, 5192. https://doi.org/10.1038/s41598-025-89858-5 (2025).

Acknowledgements

This work was supported by the Key Science and Technology Project of Henan Province, China (Grant No. 222102310510, 232102231045, 232102310022), the Cultivation Fund of Huanghuai University for National Scientific Research Project (XKPY-2022015), Teaching Reform Projects of Huanghuai University (2024XJGLX43), the Natural Science Foundation of Henan Province, China (Grant No. 232300421270) and 2023 Annual Research-Oriented Teaching Reform Project of Henan Province Undergraduate Universities (Jiao Gao [2023] No. 388).

Author information

Authors and Affiliations

Contributions

Shuhui Wu: Writing, Conceptualization, Investigation, Resources, Methodology. Ting Zhang: Software, Conceptualization, Investigation, Validation. Yunxia Tao: Writing, Validation, Methodology, Resources. Lina Fu: Writing, Conceptualization, Validation, Resources. Ying Chen: Writing, Validation, Investigation, Resources, Software. Weidong Qiang: Writing, Conceptualization, Investigation, Visualization. Enzhong Li: Writing, Conceptualization, Investigation, Methodology.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wu, S., Zhang, T., Tao, Y. et al. Intelligence modeling of nanomedicine manufacture by supercritical processing in estimation of solubility of drug in supercritical CO2. Sci Rep 15, 23193 (2025). https://doi.org/10.1038/s41598-025-05428-9

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-05428-9

Keywords

This article is cited by

-

Predictive analysis of solubility data with pressure and temperature in assessing nanomedicine preparation via supercritical carbon dioxide

Scientific Reports (2025)

-

Raloxifene solubility in supercritical CO2 and correlation of drug solubility via hybrid machine learning and gradient based optimization

Scientific Reports (2025)

-

Intelligence modeling of solubility of raloxifene and density of solvent for green supercritical processing of medicines for enhanced solubility

Scientific Reports (2025)