Abstract

Nearly a third of TB cases go undetected annually. WHO recommends computer-aided detection (CAD) to enhance TB screening, with studies showing comparable performance to local radiologists. Using 774 chest X-rays from the South African National TB Prevalence Survey, we compared 12 CAD software with 11 radiologists from Nigeria, India, the UK, and the US, against a composite microbiological reference standard. Sensitivity, specificity and Cohen’s kappa were calculated. Receiver-operating characteristic curves were developed for CAD and Euclidean distance assessed radiologists’ alignment with the best-performing software. Binomial regression tested the impact of radiologists’ characteristics on accuracy. Radiologist performance varied. On the restricted read, British radiologists had the highest sensitivity (78.7% [73.2–83.5%]) and Indian radiologists the lowest (67.1% [61.0–72.8%]). Specificity ranged from 75.8% (71.8–79.4%, Nigeria) to 84.3% (80.9–87.3%, the US). Radiologist performance was significantly impacted by HIV, prior TB, and age. The top CAD outperformed all except Indian radiologists when matching specificity. CAD with Conformité Européenne generally matched or surpassed radiologists. British radiologists’ sensitivity was closest to the top CAD, while American radiologists were closest in specificity and overall. Experience, TB reads, and country had no significant impact on accuracy. CAD performed well against radiologists globally, highlighting potential to enhance access to care.

Introduction

An estimated 1.25 million people worldwide died from tuberculosis (TB) in 2023, with TB reclaiming its title as the deadliest infectious disease after the COVID-19 pandemic1. However, one in four of the over 10 million TB cases in 2023 were not diagnosed and reported, fueling high mortality rates. Innovations to facilitate the screening and diagnosis of these ‘missed millions’ are urgently needed to achieve the global goal of ending TB2.

The World Health Organization (WHO) previously estimated that two thirds of the global population do not have access to basic radiology services3. Chest X-ray (CXR) is a WHO-recommended screening tool for TB; however, it has several shortcomings, including modest reproducibility and high inter- and intra-reader variability, in addition to the lack of trained human readers in high TB burden settings4,5,6,7. High-income countries without a substantial TB burden generally have better access to radiologists, due both to locally-trained radiologists and migration of those from low- and middle-income countries8. The resulting shortage in many high-burden settings constrains access to TB care. This is exacerbated by geographic inequality in human reader distribution, as many radiologists and much high-quality radiological equipment are concentrated in tertiary institutions in urban centers, resulting in unequal access to care9. Furthermore, there are disparities in the quality of radiological interpretation across settings due to differential training practices and professional development opportunities9. Altogether, this contributes to high TB incidence and inadequate detection in high-burden countries.

Computer-aided detection (CAD) software utilizes artificial intelligence (AI) to overcome some of CXR’s shortcomings. The CAD market has expanded rapidly in recent years, with 12 certified and market-ready products currently available for TB10. CAD analyzes CXR to provide an output, between 0 and 1 or 0-100, that indicates the likelihood of TB. By doing so, CAD can be used in place of human readers, in settings where they are not available, as the output can be utilized for TB screening and triage decisions. CAD can also supplement the existing radiological workforce, providing AI-powered reading support to reduce workloads and provide quality assurance to limit inter- and intra-reader variability11,12. Recognizing the performance of CAD in several studies13,14,15, and its potential impact for TB screening programs, in March 2021 the WHO recommended the use of CAD for TB screening and triage in individuals 15 years and above, without the requirement of human readers16. This was the first time any AI product was recommended by WHO globally. Recent evaluations suggest that CAD interpretation generally achieves a high-performance standard and can triage for TB effectively, resulting in substantial numbers of diagnostic tests saved17,18,19. However, like human readers, there is evidence to suggest some variability in CAD performance in some sub-populations, particularly in individuals with prior TB history and in older populations17.

Numerous comparisons have evaluated CAD against human reader accuracy and have demonstrated comparable, if not better, performance by AI against both field and expert radiologists in high-burden countries. In Dhaka, Bangladesh, five evaluated CAD solutions were universally found to outperform Bangladeshi board-certified radiologists for TB screening13. In Viet Nam, six of twelve CAD products demonstrated comparable performance to an expert reader with over 30 years of experience18. Meanwhile, three CAD systems demonstrated similar accuracy to expert radiologists with at least 10 years of experience in pre-migration health assessment19. However, literature has generally focused on assessing a relatively limited number of CAD products against radiologists from within a specific context. Although important for situating CAD within TB programs, benchmarking AI against a more comprehensive array of radiologists, with different professional backgrounds and from countries with varied TB-burdens, is important given the high variation in radiological expertise globally, especially due to differential levels of experience and exposure to TB. This would provide a complementary global perspective on the current standard of CAD technology compared to radiologists. Furthermore, since CAD is an opaque tool with its ability to generalize and perform well across different populations depending upon the diversity of training data used, it is important to compare both CAD and human readers’ accuracy when interpreting data outside their training context. We therefore compared the performance of 12 commercially-available CAD products against 11 radiologists spanning four countries – India, Nigeria, the United Kingdom (UK) and the United States (US), using data from the most recent national prevalence survey in South Africa, a high HIV/TB setting.

Results

This analysis leveraged the CAD readings previously published in a complementary publication using the same evaluation dataset10,17. For the complementary publication, we contacted 13 CAD manufacturers with commercially available products for TB (according to ai4hlth.org) between January 2021 and December 2023, and 10 of the 13 contacted CAD manufacturers consented to participate17. These readings were utilized in this complementary analysis. Evaluated CAD products in this paper therefore include: CAD4TB [version 7] (Delft Imaging Systems, the Netherlands), ChestEye [version 2.4] (Oxipit.ai, Lithuania), Genki [version 20.12] (DeepTek, India), InferRead [version 1] (Infervision, China), JF CXR-2 [version 2] (JF Healthcare, China), Lunit INSIGHT CXR (Lunit) [version 4.9] (Lunit, South Korea), Nexus CXR [version 1.0] (Nexus, South Africa), qXR [version 3] (Qure.ai, India), RADIFY [version 3.5.0c] (Envisionit, South Africa), TiSepX-TB [version 1.0.0.0] (MedicalIP, South Korea), XrayAME [version 1] (Epcon, Belgium) and Xvision [version 2.2.211] (Mindfully Technologies SRL, Romania). This resulted in a total of 12 products in this evaluation.

Of 774 participants, 396 (51%) were males and participants had a median age of 48.3 (± 18). Over half (n = 405, 52%) had TB symptoms, mostly cough (n = 258, 33%). Of 258 cases, most (n = 189, 73%) were positive on both liquid culture and Xpert (Table 1). Cases were significantly younger than controls, were more likely to have had prior TB infection and to currently be receiving or have previously received TB treatment; however, cases were less likely to report symptoms or to smoke than controls. Over a quarter of cases were people living with HIV (n = 65), compared to only 15% of controls (n = 77). All CAD assigned significantly lower abnormality scores to controls than to cases with p < 0.01.

British, Nigerian, American, and Indian radiologists classified 323 (42%), 324 (42%), 258 (33%), and 259 (33%) of the 774 CXR as TB-suggestive, respectively. Of these, 203, 199, 177, and 173 were bacteriologically positive, corresponding to 79%, 77%, 69%, and 67% of the 258 cases, respectively. Radiologists from all countries classified significantly more CXR as suggestive of TB in cases compared to controls.
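
As a worked check, the counts above are enough to recover each country group's sensitivity and specificity against the reference standard, given 258 cases among 774 participants. The sketch below is illustrative only: the function names are hypothetical, and the Wilson score interval is an assumption, since this section does not state which confidence interval method was used, so the interval bounds may differ slightly from those reported.

```python
import math

N_TOTAL = 774   # participants in the evaluation sample
N_CASES = 258   # bacteriologically positive participants


def wilson_ci(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (assumed CI method)."""
    p = successes / n
    denom = 1 + z ** 2 / n
    centre = (p + z ** 2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return centre - half, centre + half


def accuracy_from_counts(flagged, true_pos, n_cases=N_CASES, n_total=N_TOTAL):
    """Derive sensitivity and specificity from the number of CXR flagged as
    TB-suggestive and the number of those that were true cases."""
    n_controls = n_total - n_cases
    false_pos = flagged - true_pos        # flagged CXR that were controls
    true_neg = n_controls - false_pos     # controls correctly not flagged
    return {
        "sensitivity": true_pos / n_cases,
        "sensitivity_ci": wilson_ci(true_pos, n_cases),
        "specificity": true_neg / n_controls,
        "specificity_ci": wilson_ci(true_neg, n_controls),
    }


# (flagged as TB-suggestive, of which bacteriologically positive), from the text
READS = {"UK": (323, 203), "Nigeria": (324, 199), "US": (258, 177), "India": (259, 173)}
RESULTS = {country: accuracy_from_counts(*counts) for country, counts in READS.items()}

if __name__ == "__main__":
    for country, r in RESULTS.items():
        print(f"{country}: sensitivity {100 * r['sensitivity']:.1f}%, "
              f"specificity {100 * r['specificity']:.1f}%")
```

The point estimates derived this way agree with the restricted-reading values reported in the text, for example 78.7% sensitivity and 76.7% specificity for British radiologists.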

Radiologists’ sensitivity and specificity against the MRS

In the restricted reading, radiologists from the UK had the highest overall sensitivity at 78.7% (95% CI: 73.2–83.5%), with specificity of 76.7% (95% CI: 72.9–80.3%), followed by Nigerian, American, and Indian radiologists (Table 2). Although the latter had significantly lower overall sensitivity than the British radiologists, there was some overlap in individual-level performance as UK Radiologist 4 demonstrated a notably lower sensitivity (56.5% [34.5–76.8%]) than all other study radiologists. In contrast, American Radiologist 2 had the highest sensitivity across all study radiologists (94.1% [71.3–99.9%]), however they only significantly outperformed Nigerian Radiologist 2 in terms of sensitivity.

While British radiologists had the highest overall sensitivity, their specificity (76.7% [72.9–80.3%]) was lower than American and Indian radiologists, but higher than those from Nigeria. The individuals with the lowest specificity were UK Radiologist 3 (67.2% [54.6–78.2%]) and Nigerian Radiologist 1 (67.9% [62.0-73.4%]). Both had specificity significantly lower than Nigerian Radiologist 2, American Radiologist 1, and Indian Radiologist 3, with the latter also performing significantly worse than the other Indian radiologists. However, both UK Radiologist 3 and Nigerian Radiologist 1 demonstrated comparably high sensitivity compared to their peers. Overall, American radiologists had the highest specificity at 84.3% (95% CI: 80.9–87.3%), with sensitivity of 68.6% (95% CI: 62.6–74.2%), followed by Indian, British and Nigerian radiologists. American Radiologist 2 demonstrated the highest specificity at 84.8% (68.1–94.9%), although this was not significantly better than others. The same radiologist also demonstrated the highest sensitivity. While Nigerian radiologists were the least specific (75.8% [71.8–79.4%]), they had the second highest sensitivity after radiologists from the UK.

In the inclusive reading, American radiologists had the highest sensitivity (92.3% [95% CI: 88.3–95.2%]), followed by British, Nigerian, and Indian radiologists, with significant differences between the highest and lowest (Table 2). However, American radiologists also had significantly lower specificity than any other group. American Radiologist 2 had the highest sensitivity point estimate of all radiologists, achieving 100%, but with moderate specificity (66.7% [48.2–82.0%]). Indian radiologists had the highest specificity (73.6% [95% CI: 69.6–77.4%]) overall, significantly outperforming radiologists from all other countries. Individual specificity ranged from 73.0% (67.4–78.1%) to 77.3% (66.2–86.2%) across Indian readers, with the top-performing significantly more specific than Radiologist 1 from the US, UK, and Nigeria. While the sensitivity of Indian radiologists was lowest, this was only significant when compared to American radiologists. Meanwhile, British radiologists simultaneously achieved the second highest sensitivity and specificity, with 87.2% (82.5–91.0%) and 63.4% (59.1–67.5%), respectively.

Only American Radiologist 2 surpassed the WHO’s Target Product Profile (TPP) of 90% sensitivity and 70% specificity on the restricted reading. None of the country-level estimates met the TPP. Sensitivity and specificity varied widely across countries even though all readers received the same reading instructions. In both inclusive and restricted readings, British and Nigerian readers were especially sensitive but less specific, whereas the reverse was observed for Indian radiologists, who generally had high specificity. Although American radiologists were highly sensitive in the inclusive reading, in the restricted reading their performance diverged, as Radiologist 1 demonstrated higher specificity but missed TB cases, while Radiologist 2 delivered high sensitivity and specificity simultaneously.

Level of agreement between country groups

On the restricted reading, agreement ranged from weak to moderate between radiologists’ groups. Agreement was strongest between American and Indian radiologists, with a kappa of 0.74 (95% CI: 0.68–0.79) (Table 3). Meanwhile, British radiologists had a low to moderate level of agreement with radiologists from all other countries20. Agreement was weak between Nigerian and Indian radiologists (kappa = 0.60 [95% CI: 0.55–0.66]), while Nigerian and American radiologists (kappa = 0.59 [95% CI: 0.53–0.65]) had the weakest level of agreement overall. Agreement was even lower on the inclusive reading. The highest alignment was between Indian and British radiologists (0.69 [95% CI: 0.64–0.74]), who had moderate agreement, while the lowest was between American radiologists and both Nigerian and Indian radiologists, with weak agreement of 0.55 (95% CI: 0.50–0.61) and 0.55 (95% CI: 0.50–0.60), respectively.
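
For readers less familiar with the statistic, Cohen’s kappa compares the observed agreement between two sets of binary reads with the agreement expected by chance from each group’s marginal positive rate. The following is a minimal sketch, assuming reads are coded as 1 (TB-suggestive) and 0 (not suggestive); the function name and the toy data are hypothetical and are not study data.

```python
def cohens_kappa(reads_a, reads_b):
    """Cohen's kappa for two equal-length sequences of binary (0/1) reads."""
    if len(reads_a) != len(reads_b) or not reads_a:
        raise ValueError("reads must be non-empty and of equal length")
    n = len(reads_a)
    # Observed agreement: share of CXR on which both groups gave the same call
    observed = sum(a == b for a, b in zip(reads_a, reads_b)) / n
    # Chance agreement from each group's marginal rate of positive calls
    rate_a = sum(reads_a) / n
    rate_b = sum(reads_b) / n
    expected = rate_a * rate_b + (1 - rate_a) * (1 - rate_b)
    if expected == 1:
        return float("nan")  # kappa is undefined when both groups are constant
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Hypothetical reads of the same four CXR by two reader groups
    group_a = [1, 1, 0, 0]
    group_b = [1, 0, 0, 0]
    print(cohens_kappa(group_a, group_b))
```

Because kappa discounts chance agreement, two groups can agree on most CXR and still obtain only a moderate kappa.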

Impact of radiologists’ characteristics on performance

We analyzed the impact of various individual-level factors on how accurately radiologists interpreted CXR to detect TB. The country where a radiologist works accounted for very little of the variation in accuracy (variance of 0.019), meaning that country of practice did not significantly affect how well radiologists read the CXR. Although the intercept was statistically significant, indicating that the overall chance of correctly identifying TB on a CXR was better than guessing, this result might be influenced by the small number of radiologists studied (11 radiologists from 4 countries). Furthermore, the negative interaction term suggested that more experience may not necessarily result in higher reader accuracy, but this was not statistically significant. Including country of practice as a fixed effect also identified no significant impact of reader characteristics on interpretation accuracy (Annex 1).

Radiologists sub-group analysis

Radiologist performance varied across subgroups defined by HIV status, prior TB history, and age (Fig. 1). Results are presented here for the restricted analysis; however, similar trends are also observed for the inclusive analysis (Annex 2).

Sensitivity and specificity were generally higher in HIV-negative individuals than in those who were HIV-positive, with significant differences in sensitivity observed for US radiologists (a reduction of 23.5%) and in specificity for Indian and Nigerian radiologists, with reductions of 17.2% and 17.3%, respectively. UK radiologists demonstrated the highest sensitivity (83.3% [95% CI: 76.6–88.4%]) in HIV-negative people, with moderate specificity of 79.9% (75.3–83.8%). UK radiologists also had the second highest sensitivity in people living with HIV (PLHIV) (73.8% [62.0–83.0%]) and were only outperformed by Nigerian radiologists, who demonstrated sensitivity of 76.9% (65.4–85.5%) but the lowest specificity of any country in PLHIV (68.8% [57.8–78.1%]) (Annex 2). In the HIV-negative population, Indian radiologists demonstrated the highest specificity (87.3% [83.3–90.4%]) and lowest sensitivity (74.0% [66.4–80.4%]), whereas American radiologists demonstrated the highest specificity in PLHIV (80.5% [70.3–87.8%]) at the expense of sensitivity (53.8% [41.9–65.4%]).

All groups demonstrated higher sensitivity but significantly lower specificity in individuals with prior TB compared to those without. The greatest difference was observed in British radiologists, who were 44.4% less specific in those with a history of TB. However, despite having the lowest specificity, UK radiologists demonstrated the highest sensitivity in those with prior TB at 84.6% (75.8–90.6%). In contrast, in people with prior TB, US radiologists had the lowest sensitivity (74.7% [64.9–82.5%]), but highest specificity (60.4% [50.9–69.2%]). Indian and Nigerian radiologists demonstrated a better balance of sensitivity and specificity. In those who had never had TB, UK radiologists again had the highest sensitivity at 75.4% (68.4–81.4%), simultaneously obtaining high specificity (85.9% [82.1–88.9%]), although this was surpassed by both American and Indian radiologists. Specificity in this group was highest for Indian radiologists at 91.2% (88.1–93.6%) and lowest for Nigerian radiologists at 84.4% (80.6–87.6%).

Age also influenced radiologist performance. Sensitivity was highest in the youngest group (15 to < 35 years) and declined with age. While radiologists demonstrated similar sensitivities in the young and middle age groups (35 to < 55 years), with overlapping confidence intervals, radiologists from India, the UK and the US were significantly less sensitive in the older age group (> 55 years) than in the young group. Indian and Nigerian radiologists were also significantly less sensitive in the older than the middle age group. The largest absolute reduction in sensitivity between young and old was observed for Indian radiologists at 24.3%. Trends in specificity were more complex. Specificity also decreased with age but was lowest in the middle-aged group for all countries, recovering marginally in the older age group. For Indian and British radiologists, specificity was significantly lower in the middle age group compared to the younger, with the greatest reduction observed for British radiologists (19.7%). There were no significant differences in specificity between middle and older age groups.

In the youngest group, UK radiologists had the highest sensitivity at 85.7% (76.7–91.6%), with moderate-ranking specificity of 88.9% (82.5–93.1%), while Indian radiologists had the lowest sensitivity at 75.0% (64.8–83.0%) and highest specificity (92.6% [86.9–95.9%]). In the middle-aged group, Nigerian radiologists showed the highest sensitivity (83.5% [75.1–89.4%]) and lowest specificity, while US radiologists demonstrated the lowest sensitivity and highest specificity (80.8% [73.7–86.4%]). Among the oldest group, UK radiologists had the highest sensitivity at 66.2% (54.6–76.1%), with moderate specificity of 74.5% (68.5–79.6%). Indian and American radiologists both demonstrated very low sensitivity in the older population at 50.7% (39.3–62.0%) and 54.9% (43.4–66.0%), respectively, but both had specificity surpassing 80%.

Radiologists compared to CAD overall performance

In general, CAD solutions and radiologists demonstrate a considerable overlap in performance (Fig. 2). However, the CAD with the highest AUC, Lunit (0.902 [95% CI: 0.879–0.926]), outperformed all radiologists on both inclusive and restricted readings. Nexus (0.897 [95% CI: 0.872–0.922]), the second highest performing CAD, outperformed radiologists on the restricted reading and performed similarly on the inclusive reading. For both restricted and inclusive readings, the performance of most other CAD software overlapped with that of radiologists, including qXR, JF CXR-2, ChestEye, Xvision, CAD4TB, Genki, InferRead DR Chest, and TiSepX-TB. There was no significant difference in performance between most CAD software, with three exceptions: TiSepX-TB, which had non-overlapping confidence intervals with the top two performers (Lunit and Nexus); XrayAME, which performed significantly worse than all but TiSepX-TB and RADIFY; and RADIFY, which performed significantly worse than all other CAD. The software with the lowest AUCs (RADIFY and XrayAME) performed worse than all radiologists on both readings.
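
The AUC values above can be read as the probability that a randomly chosen case receives a higher CAD abnormality score than a randomly chosen control, with ties counted as half. A minimal sketch of that rank-based definition follows; the function name and the example scores are hypothetical, and the source does not state which software or estimator was used to compute AUC or its confidence intervals.

```python
def roc_auc(case_scores, control_scores):
    """Area under the ROC curve via pairwise comparison of case and control
    scores (equivalent to the Mann-Whitney U statistic divided by n1 * n0)."""
    if not case_scores or not control_scores:
        raise ValueError("need at least one case and one control score")
    wins = 0.0
    for case in case_scores:
        for control in control_scores:
            if case > control:
                wins += 1.0      # case ranked above control
            elif case == control:
                wins += 0.5      # ties count as half
    return wins / (len(case_scores) * len(control_scores))


if __name__ == "__main__":
    # Hypothetical abnormality scores on a 0-1 scale
    cases = [0.9, 0.8, 0.4]
    controls = [0.5, 0.3, 0.2]
    print(round(roc_auc(cases, controls), 3))
```

The pairwise loop is quadratic in sample size, which is acceptable for a sample of a few hundred CXR; a rank-based implementation would be preferable for larger datasets.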

Our prior, complementary publication provides a detailed interpretation of CAD software performance alone in terms of ROC-AUC and other performance metrics17.

Matching CAD with radiologists’ sensitivity and specificity when detecting TB against MRS

In both restricted and inclusive readings, CE-marked CAD solutions (Class I, IIa, and IIb) performed as well as or better than radiologists across all four countries (Table 4). The CE mark signifies compliance with EU standards for safety, health, and environmental protection. For CAD products included in Table 4, Class I is the least stringent classification, while Class IIb is the most stringent. However, there were some exceptions: CAD4TB had significantly lower specificity when matching Indian radiologists’ sensitivity on the inclusive reading; InferRead had significantly lower sensitivity when matching American radiologists’ specificity on the restricted reading; and ChestEye had significantly lower specificity when matching American radiologists’ sensitivity on the restricted reading.

Lunit significantly outperformed all radiologists, except for Indian radiologists when matching specificity in the inclusive reading. Several CAD without CE-mark also performed well compared to radiologists. Nexus significantly outperformed all radiologists, except Indian radiologists when matching specificity in the restricted reading. Furthermore, Genki and JF CXR-2 performed on par with radiologists from all countries in the restricted reading, while JF CXR-2 significantly improved upon radiologists from Nigeria and the US in the inclusive reading. Genki also significantly improved upon the sensitivity of American radiologists when matching specificity in the inclusive reading. RADIFY and XrayAME performed significantly worse than radiologists in all analyses, except for Indian radiologists when matching sensitivity in the restricted reading. Further details are available in Annexes 3 and 4.
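
The matching procedure can be illustrated as follows: the CAD threshold is set so that CAD reaches at least the radiologists’ sensitivity, and CAD specificity at that threshold is then compared with the radiologists’ specificity. The sketch below is an assumption about how such matching can be done, not the study’s actual code; the function name and scores are hypothetical, and a CXR is treated as TB-suggestive when its score is at or above the threshold.

```python
def specificity_at_matched_sensitivity(case_scores, control_scores, target_sensitivity):
    """Return (threshold, sensitivity, specificity) for the highest CAD
    threshold whose sensitivity is at least the target sensitivity."""
    if not 0 <= target_sensitivity <= 1:
        raise ValueError("target_sensitivity must be between 0 and 1")
    # Lowering the threshold can only raise sensitivity, so scan from the top
    for threshold in sorted(set(case_scores), reverse=True):
        sensitivity = sum(s >= threshold for s in case_scores) / len(case_scores)
        if sensitivity >= target_sensitivity:
            specificity = sum(s < threshold for s in control_scores) / len(control_scores)
            return threshold, sensitivity, specificity
    raise ValueError("no case scores supplied")


if __name__ == "__main__":
    # Hypothetical CAD scores and a hypothetical radiologist sensitivity of 75%
    cases = [0.9, 0.8, 0.6, 0.3]
    controls = [0.7, 0.5, 0.2, 0.1]
    print(specificity_at_matched_sensitivity(cases, controls, 0.75))
```

Matching on specificity works the same way with the roles of cases and controls reversed.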

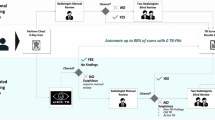

Performance of radiologists compared to the best-performing CAD software

We compared radiologists’ performance against the best performing CAD solution. Figure 3 shows the ROC curve of the CAD software with the highest AUC with the points of each group of radiologists indicated. Radiologists’ sensitivity and specificity fall below the level of the highest-performing CAD software on both the restricted and inclusive readings. For analysis of radiologists’ groups against different CAD software see Annex 5.

We then compared radiologists’ performance against the best CAD software when matching sensitivity and specificity (Annex 5). On the restricted reading, American radiologists achieved specificity closest to the best CAD at 84.3% (95% CI: 80.9–87.3%) compared to 88.4% (95% CI: 85.3–91.0%), with overlapping confidence intervals. The sensitivity of British radiologists (78.7% [95% CI: 73.2–83.5%]) was closest to, and overlapped with, that of CAD (87.1% [95% CI: 80.4–92.2%]). For the inclusive reading, when sensitivity was matched, Indian radiologists compared most favorably to the best CAD software, with specificities of 73.6% (95% CI: 69.6–77.4%) and 83.5% (95% CI: 80.0–86.6%), respectively. American radiologists were closest to the best CAD software in terms of sensitivity, with 92.3% (88.3–95.2%) compared to 96.4% (91.9–98.8%).

Given the heterogeneity in radiologists’ performance, we calculated the Euclidean distance from each country group’s and each individual radiologist’s operating point (sensitivity and specificity) to the best-performing CAD software’s ROC curve to assess how closely performance aligned (Table 5)21,22. The shortest possible distance was calculated from each point to the ROC curve, with a smaller distance indicating a more similar performance to the software. In both restricted and inclusive readings, American radiologists performed most comparably to the best CAD (i.e. the shortest distance), followed by those from India, the UK and Nigeria in the restricted reading and by British, Indian, and Nigerian radiologists in the inclusive reading.
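
One way to compute such a distance is to treat the empirical ROC curve as a piecewise-linear path in (1 − specificity, sensitivity) space and take the minimum distance from the reader’s point to any segment. The sketch below makes that assumption; the function names and the example curves are hypothetical, and the study’s exact computation may differ.

```python
import math


def _point_to_segment(px, py, ax, ay, bx, by):
    """Shortest distance from point P to the line segment AB."""
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0:                      # A and B coincide
        return math.hypot(px - ax, py - ay)
    # Project P onto AB and clamp the projection to the segment
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def distance_to_roc(sensitivity, specificity, roc_points):
    """Shortest Euclidean distance from a reader's operating point to a
    piecewise-linear ROC curve given as (false positive rate, sensitivity) pairs."""
    if len(roc_points) < 2:
        raise ValueError("need at least two ROC points")
    px, py = 1 - specificity, sensitivity
    pts = sorted(roc_points)
    return min(_point_to_segment(px, py, *a, *b) for a, b in zip(pts, pts[1:]))


if __name__ == "__main__":
    # Hypothetical ROC curve of a perfect classifier and a hypothetical reader
    perfect_roc = [(0.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
    print(round(distance_to_roc(0.8, 0.9, perfect_roc), 3))
```

A distance of zero means the reader’s operating point lies on the CAD curve. The distance alone does not indicate whether a point lies above or below the curve.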

Overall, we found no significant differences in Euclidean distances between the radiologists (in general p > 0.05) (Annex 6), with a few exceptions. In the restricted reading, UK Radiologist 1 showed the closest individual alignment with the best CAD, although American radiologists were most closely aligned overall (Table 5). This alignment was only significantly greater than that of UK Radiologist 2 and American Radiologist 2. Notably, American Radiologist 2 had the greatest Euclidean distance from the best CAD’s curve, making them the least aligned reader on the restricted reading, despite being the only one to meet the WHO TPP. This discrepancy was significant only compared to UK Radiologist 1 (p = 0.015, Annex 6). For the inclusive reading, UK Radiologist 1 also aligned closest with CAD (p = 0.033, Table 5), but alignment was significantly closer only when compared to Nigerian Radiologist 2, who was the least aligned reader (p = 0.018, Table 5). Nigerian Radiologist 2 was also significantly further from the ROC curve than UK Radiologist 4, indicating less alignment with the best-performing CAD software.

Agreement between radiologists from each country and the best-performing CAD

We calculated the agreement between the country groups and the highest performing CAD software, setting the threshold of CAD at the midpoint of 0.5. In the restricted reading, there was moderate to strong agreement between this CAD and each group of radiologists with kappa ranging from 0.65 (0.60–0.70) for Nigerian radiologists to 0.72 (0.66–0.76) for Indian radiologists (Annex 7)20. In the inclusive reading there was weaker agreement, except for Indian radiologists, where the kappa coefficient was 0.7, indicating moderate to strong agreement.

Discussion

Our paper is the first to compare a comprehensive array of 12 CAD solutions against a diverse group of radiologists, including those from high-income and high TB burden countries. With new CAD products and versions emerging rapidly, benchmarking these tools against human readers remains critical for evidence-based deployment in TB screening algorithms worldwide.

We found that many CAD products performed as well as, or better than, radiologists. This was noteworthy considering the global diversity represented in the study. All CE-marked CAD achieved comparable performance to radiologists from the four study countries. Additionally, Nexus, Genki and JF CXR-2 performed well compared to human readers despite lacking CE-marks. Notably, Lunit and Nexus outperformed many radiologists from both high-income and high TB burden countries, emphasizing CAD’s potential to assist in TB screening in settings where trained human readers are scarce, either to guide the readings of non-specialized clinicians or as a second read as a means of quality control.

Our findings align with previous studies that concluded CAD performs on par with highly experienced radiologists from high-burden countries, and update this evidence to suggest that new versions may surpass the accuracy of expert radiologists17,18,19. Codlin et al. (2021) found CAD performed similarly to, but did not surpass, a Vietnamese radiologist with over 30 years of experience, although qXR, CAD4TB and Genki came close18. An earlier version of the top-performing CAD in our evaluation, Lunit, showed less promise, highlighting the pace of technological advancement18. Another evaluation identified that CAD4TB surpassed Bangladeshi radiologists after a single version update23. A concerted effort to ensure CAD evaluations keep pace with software updates is essential, with global coordination necessary to ensure the required data availability. Furthermore, our study adopted the unique approach of using high-performing CAD as a baseline for comparing human readers, an analysis of increasing relevance as CAD continues to outperform human readers.

Unlike previous studies that focused on comparing CAD to a limited number of radiologists from high TB burden countries, with a few comparisons also against remote-reading European radiologists, we included a broader range of readers from both low TB burden and high-income countries, offering a more global perspective. Although regression analysis demonstrated that country of practice did not significantly impact accuracy, comparing CAD with readers from countries with different epidemiological contexts helps to contextualize CAD’s performance. Our results indicated different approaches to reading across countries. Despite not practicing in high-burden countries, British radiologists had the highest sensitivity but lowest specificity, while American radiologists were most specific when reading for TB. An American radiologist was the only human reader to meet the TPP, and overall, American radiologists were closest in performance to the top-performing CAD. Altogether, this analysis underscores the importance of benchmarking CAD against diverse readers, within and beyond high TB burden countries, to provide global insight on AI performance.

Our study demonstrates a significant influence of HIV status, age, and prior TB history on radiologist performance across all study countries. In individuals with a history of TB, sensitivity was higher, but specificity was significantly lower for all radiologist groups, likely reflecting the difficulty in distinguishing post-infection scarring from active disease, which could result in over-referral. The impact of age on reader performance was nuanced, potentially due to increased radiographic complexity in older adults, who often present with multiple comorbidities and diverse clinical histories that can obscure or mimic disease on chest radiographs. Performance was generally poorer in PLHIV with significant differences observed for radiologists from Nigeria, India, and the US. This is consistent with prior literature showing atypical imaging presentations of TB in PLHIV and may be further influenced by the fact that none of the readers in this study were from countries with the highest HIV incidence24,25. Interestingly, similar limitations have been observed with CAD systems13,18. In our complementary analysis on the same dataset, most of the 12 CAD software evaluated also demonstrated significantly worse performance in PLHIV, individuals with prior TB, and older adults, but not in the middle-aged group17. The parallel biases seen in both human and CAD performance suggest that certain intrinsic challenges in chest X-ray interpretation may be difficult to overcome, regardless of whether the reader is human or artificial. This may point to a need for adjunctive clinical or demographic data. Our estimates of human reader performance may be conservative, as real-world interpretation is often informed by demographic and clinical information. For instance, knowledge that a person has HIV may ensure the radiologist is alert to potential for atypical disease manifestations. Commercial CAD systems do not currently leverage multi-modal approaches to incorporate such data into predictions, although doing so may assist in overcoming performance limitations in some sub-populations.

In addition to geographic diversity, the 11 readers varied in experience and TB reading volumes, but these factors did not significantly affect accuracy. However, we noted a non-significant negative relationship between experience length and annual X-ray volume, suggesting either reduced accuracy with experience or faster reads by more experienced readers, potentially impacting accuracy. Due to our small sample size, more complex models were not feasible, indicating a need for future research with larger sample sizes to assess how individual reader characteristics affect accuracy.

Our findings have substantial real-world implications for TB control programs, demonstrating that CAD can decentralize access to expert-level CXR interpretation, addressing the critical shortage of qualified radiologists in high burden regions. Early users highlight CAD’s feasibility in low resource settings, particularly due to its rapid result delivery (available in under 1 min), capacity to integrate with existing data systems, and offline processing capability26,27. This immediacy enables same day screening and triage decisions, eliminating the waiting period for radiologist interpretation that can lead to patient loss to follow-up. Implementation of CAD technology could contribute to a reduction in diagnostic delay, a critical concern given the long current TB diagnostic delays in high-burden regions28,29. The feasibility of CAD implementation to reduce inequalities in access to care is further supported through its integration with advanced ultra-portable X-ray screening technology that can travel in backpacks or carry cases to remote settings, overcoming traditional barriers to accessing X-ray imaging. However, the cost of purchasing CAD software can reduce accessibility in some settings, as price points are high and pricing structures are complex and lack transparency, particularly when purchased directly from suppliers. A concerted effort is necessary to enhance the affordability of CAD software to ensure accessibility in all contexts.

Our study has several limitations. First, it included a limited number of radiologists (11 in total, with 2 to 4 per country), which may limit the generalizability and statistical power of our findings. Moreover, each chest X-ray was read by only one radiologist per country, preventing assessment of inter-observer variability within countries and potentially affecting the robustness of country-level comparisons. These limitations were primarily due to logistical constraints, and future research with larger samples and multiple readers per image is needed to better understand both individual and country-level differences in chest X-ray interpretation. Despite these limitations, our conclusions are consistent with other studies, spanning multiple regions, that show CAD’s comparable or superior performance to radiologists. Second, we did not conduct sub-analyses stratified by years of experience or X-ray reading volumes because the regression results suggested no significant impact on reader variability. Finally, the potential for CAD to supplement radiologists by providing a second read or reducing workloads was beyond this study’s scope, although CAD shows promise in these areas12.

In conclusion, CAD’s performance is generally on par with, or better than, that of radiologists from diverse countries, underscoring its potential to expand access to TB care globally.

Methods

Sampling strategy

We compared the performance of CAD and radiologists using a case-control sample of 774 CXR from participants in the South African National TB Prevalence Survey30, conducted between August 2017 and July 2019. During the prevalence survey, 35,191 individuals were screened using a parallel screening algorithm consisting of the WHO-recommended four-symptom screen and CXR. Screen-positive individuals were requested to provide two sputum samples for testing using GeneXpert MTB/RIF Ultra (Cepheid, USA) and MGIT liquid culture (Bactec MGIT 960, Becton Dickinson, USA). Results from the prevalence survey are reported in full by Moyo et al.30.

A total of 4,917 prevalence survey participants with complete records, including CXR and diagnostic test (Xpert Ultra and liquid culture) results, made up the sampling frame. Cases were defined according to a composite microbiological reference standard (MRS) as bacteriologically positive (Bac+) individuals who had a positive result on Xpert, liquid culture, or both. Controls were those without bacteriologically confirmed TB, defined by negative results on both Xpert and liquid culture. Assuming a sensitivity of 90% and specificity of 70%, in line with the WHO’s Target Product Profile (TPP), and a precision level of ± 5%, a minimum sample size of 138 cases is required based on the WHO-TDR CAD Calibration Toolkit31,32. We decided to include all 258 Bac+ cases in the sampling frame to strengthen the statistical power of this analysis and doubled this number to arrive at 516 controls, which were then randomly selected from the sampling frame using a random number generator, yielding a total sample of 774 CXR.
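The sample size arithmetic above can be illustrated with a short sketch. It assumes the standard normal-approximation formula for estimating a single proportion at a 95% confidence level; the calculation implemented in the WHO-TDR toolkit is not described here, so the function name `min_sample_size` and the confidence level are illustrative assumptions rather than the toolkit's method. Under these assumptions the formula gives about 138.3 cases for a sensitivity of 90% and a precision of ± 5%, which is in line with the figure of 138 quoted above.

```python
from statistics import NormalDist


def min_sample_size(expected_proportion, half_width, confidence=0.95):
    """Normal-approximation sample size for estimating one proportion.

    Assumption: n = z^2 * p * (1 - p) / d^2, a textbook formula that may
    differ from the calculation used in the WHO-TDR toolkit.
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return z ** 2 * expected_proportion * (1 - expected_proportion) / half_width ** 2


# Cases needed to estimate a sensitivity of 90% within +/- 5 percentage points
n_cases_raw = min_sample_size(0.90, 0.05)

# Sample composition reported in the text: all 258 Bac+ cases, twice as many controls
n_cases = 258
n_controls = 2 * n_cases
total = n_cases + n_controls

print(round(n_cases_raw, 1), n_controls, total)  # 138.3 516 774
```

Including all 258 available cases therefore gives nearly twice the minimum number of cases under this approximation.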

CAD reading

We included all CAD software whose developers were contacted for participation in a previous study between January 2021 and December 2023; these included the manufacturers of all commercially available CAD products for TB identified through ai4hlth.org10,17. All CAD readings used the same secure process, whereby CAD software was installed on Stop TB Partnership’s server, which hosted the 774 anonymized CXR from the South African National Prevalence Survey. CAD software then read the dataset to produce a numeric abnormality score for each image, and these scores were compiled and used in the analysis. Four CXR, used to test the process and check product compatibility, were excluded from the sampling frame. This ensured that developers had no prior access to CXR evaluation data, and no CAD training was performed prior to reading. All readings were conducted independently and blinded to demographic and clinical information. Where graphics processing capacity was required, the same process was conducted on a Google Cloud Server. There was no other involvement of CAD developers in the study.

Radiologist reading

In parallel, a global tender process was launched through the United Nations Office for Project Services (UNOPS), which hosts the Stop TB Partnership, to engage tele-radiology companies from a variety of countries. Tender applications were evaluated for their competency in delivering timely tele-radiology services on an ad-hoc basis, such as for the purposes of this study. Qualifying tele-radiology companies were then contacted for participation in this study, with four companies consenting to provide reads. Participating tele-radiology companies were provided with the same reading instructions and with remote access to the study dataset, and were responsible for selecting and managing study radiologists.

Tele-radiology companies engaged radiologists certified in, and practicing in, four different countries (India, Nigeria, the UK and the US) for participation in the study. These countries include both high- and low-TB-burden settings. In 2023, India had the largest number of TB cases globally and Nigeria the largest number of cases in Africa; in comparison, the US and UK both have low TB incidence1. Multiple radiologists per country were selected by the tele-radiology companies to read study CXR: four readers from the UK, three from India and two each from Nigeria and the US. Radiologists were engaged by the tele-radiology companies on the basis of their availability to read the study CXR. The authors were not involved in the radiologist selection process and did not impose any requirements regarding radiologists’ experience level, and no additional pre-study qualification test was required. Overall, radiologists had experience ranging from 7 to 25 years and read between 50 and 10,000 CXR per year for TB (Annex 8).

Tele-radiology companies allocated study CXR for reading according to radiologists’ workload. Each CXR was read by one radiologist from each country. Radiologists were provided with the same reading instructions and limited access to the study dataset, stored on Stop TB Partnership’s server, and asked to classify images as either (1) normal, no findings; (2) abnormal, consistent with TB; or (3) abnormal, not consistent with TB, as well as to identify radiological abnormalities present on the CXR. Like CAD, radiologists read independently and were blind to demographic and clinical information.

Analysis

To assess the performance of radiologists, we constructed two binary human reading classifications, in line with methodology from a previous study comparing CAD and radiologists13. The binary classifications were:

1. A restricted reading, considering only abnormalities consistent with TB as TB presumptive.

2. An inclusive reading, considering all abnormalities as TB presumptive.

Leveraging these two reading categories, we calculated the performance in terms of sensitivity and specificity of radiologists individually and in country groups against the composite MRS. This analysis was also repeated with disaggregation by HIV status, TB history, and age group. Inter-reader agreement for both the restricted and inclusive readings was assessed using Cohen’s kappa coefficient.
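As a minimal sketch of the calculations described above, the following example maps the three reading categories to the two binary classifications, then computes sensitivity and specificity against a reference standard and Cohen’s kappa between two readers. The data are invented toy values and the function names are illustrative; none of this is the study’s own code.

```python
# Reading categories from the radiologist instructions
NORMAL, ABNORMAL_TB, ABNORMAL_NOT_TB = 1, 2, 3


def to_binary(reading, protocol):
    """Return True if the reading counts as TB presumptive under the protocol."""
    if protocol == "restricted":
        return reading == ABNORMAL_TB
    if protocol == "inclusive":
        return reading in (ABNORMAL_TB, ABNORMAL_NOT_TB)
    raise ValueError(f"unknown protocol: {protocol}")


def sensitivity_specificity(calls, reference):
    """Sensitivity and specificity of binary calls against the reference standard."""
    tp = sum(c and r for c, r in zip(calls, reference))
    fn = sum((not c) and r for c, r in zip(calls, reference))
    tn = sum((not c) and (not r) for c, r in zip(calls, reference))
    fp = sum(c and (not r) for c, r in zip(calls, reference))
    return tp / (tp + fn), tn / (tn + fp)


def cohens_kappa(calls_a, calls_b):
    """Cohen's kappa for two sets of binary calls on the same images."""
    n = len(calls_a)
    observed = sum(a == b for a, b in zip(calls_a, calls_b)) / n
    rate_a, rate_b = sum(calls_a) / n, sum(calls_b) / n
    expected = rate_a * rate_b + (1 - rate_a) * (1 - rate_b)
    return (observed - expected) / (1 - expected)


# Toy data (invented): 4 reference-positive and 6 reference-negative images
reference = [True] * 4 + [False] * 6
reader_a = [2, 2, 2, 3, 1, 1, 1, 3, 2, 1]
reader_b = [2, 2, 3, 1, 1, 1, 3, 3, 1, 1]

restricted_a = [to_binary(r, "restricted") for r in reader_a]
inclusive_a = [to_binary(r, "inclusive") for r in reader_a]
restricted_b = [to_binary(r, "restricted") for r in reader_b]

sens_restricted, spec_restricted = sensitivity_specificity(restricted_a, reference)
sens_inclusive, spec_inclusive = sensitivity_specificity(inclusive_a, reference)
kappa_restricted = cohens_kappa(restricted_a, restricted_b)
```

In this toy example the inclusive reading raises sensitivity (from 0.75 to 1.0) at the cost of specificity (from about 0.83 to about 0.67), which is the trade-off the two classifications are designed to expose.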

We compared radiologists with CAD by plotting radiologists’ sensitivity and specificity on the Receiver Operating Characteristic (ROC) curve. For CAD products, the area under the ROC curve (AUC) was subsequently calculated. AUC ranges between 0 and 1, with a higher AUC indicating better overall performance by CAD. We then evaluated the difference in sensitivity and specificity between CAD and radiologists from each country by matching each CAD product’s sensitivity to that of the radiologists and comparing the corresponding specificity. We then performed the same in reverse by matching CAD specificity to that of the radiologists and comparing sensitivity. Statistical difference was determined using the McNemar test for paired proportions. This analysis was conducted for both inclusive and restricted readings.
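The matching procedure can be sketched as follows, using invented toy data. The example computes an AUC from abnormality scores, selects the CAD threshold whose specificity is at least that of a radiologist, and compares sensitivity on the TB cases with a McNemar test. Two choices here are assumptions: the rule for picking the matching threshold (the lowest threshold that reaches the target specificity) and the exact binomial form of the McNemar test, since the text does not state which variants were used.

```python
from math import comb


def auc(scores, reference):
    """AUC as the share of (case, control) pairs ranked correctly; ties count half."""
    cases = [s for s, r in zip(scores, reference) if r]
    controls = [s for s, r in zip(scores, reference) if not r]
    wins = sum((c > k) + 0.5 * (c == k) for c in cases for k in controls)
    return wins / (len(cases) * len(controls))


def threshold_for_specificity(scores, reference, target):
    """Lowest threshold (score >= threshold is CAD-positive) with specificity >= target."""
    controls = [s for s, r in zip(scores, reference) if not r]
    for t in sorted(set(scores)) + [float("inf")]:
        if sum(s < t for s in controls) / len(controls) >= target:
            return t


def mcnemar_exact(calls_a, calls_b):
    """Two-sided exact McNemar p-value from the discordant pairs of paired calls."""
    b = sum(x and not y for x, y in zip(calls_a, calls_b))
    c = sum(y and not x for x, y in zip(calls_a, calls_b))
    n = b + c
    tail = sum(comb(n, k) for k in range(min(b, c) + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Toy data (invented): 4 reference-positive and 6 reference-negative images
reference = [True] * 4 + [False] * 6
cad_scores = [0.95, 0.80, 0.60, 0.30, 0.70, 0.40, 0.20, 0.10, 0.05, 0.02]
radiologist = [True, True, True, False, False, False, False, False, True, False]

cad_auc = auc(cad_scores, reference)

# Match CAD specificity to the radiologist's, then compare sensitivity on cases only
controls_read = [c for c, r in zip(radiologist, reference) if not r]
rad_specificity = sum(not c for c in controls_read) / len(controls_read)
threshold = threshold_for_specificity(cad_scores, reference, rad_specificity)
cad_calls = [s >= threshold for s in cad_scores]

cad_on_cases = [c for c, r in zip(cad_calls, reference) if r]
rad_on_cases = [c for c, r in zip(radiologist, reference) if r]
p_value = mcnemar_exact(cad_on_cases, rad_on_cases)
```

The reverse comparison (matching sensitivity and comparing specificity) follows the same pattern, with the threshold chosen on the cases and the McNemar test applied to the controls.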

Subsequently, we performed the reverse analysis, comparing radiologists’ performance in terms of sensitivity and specificity against the CAD software with the highest AUC. We then evaluated how closely radiologists’ performance aligned with this CAD solution by plotting each individual radiologist’s and each country group’s sensitivity and specificity on the ROC curve of the CAD software and calculating the Euclidean distance between the radiologist’s point and the closest point on the ROC curve. Furthermore, to assess how closely radiologists agreed with the best-performing CAD software, Cohen’s kappa was calculated between each country group and CAD.
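A minimal sketch of the distance calculation is given below, again on invented toy data. It assumes that the ROC curve is the piecewise-linear path through the empirical operating points and that the "closest point" may lie anywhere along that path; the study may instead have used only the observed operating points, which would give slightly different distances. Function names are illustrative.

```python
import math


def roc_points(scores, reference):
    """Empirical ROC operating points as (1 - specificity, sensitivity) pairs."""
    n_pos = sum(reference)
    n_neg = len(reference) - n_pos
    points = [(0.0, 0.0)]
    for t in sorted(set(scores), reverse=True):
        tp = sum(s >= t and r for s, r in zip(scores, reference))
        fp = sum(s >= t and not r for s, r in zip(scores, reference))
        points.append((fp / n_neg, tp / n_pos))
    return points


def distance_to_segment(p, a, b):
    """Euclidean distance from point p to the line segment from a to b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment and clamp the projection to its end points
    t = ((px - ax) * dx + (py - ay) * dy) / (dx * dx + dy * dy)
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def distance_to_roc(sensitivity, specificity, points):
    """Shortest distance from a reader's operating point to the ROC path."""
    p = (1 - specificity, sensitivity)
    return min(distance_to_segment(p, a, b) for a, b in zip(points, points[1:]))


# Toy data (invented): 4 reference-positive and 6 reference-negative images
reference = [True] * 4 + [False] * 6
cad_scores = [0.95, 0.80, 0.60, 0.30, 0.70, 0.40, 0.20, 0.10, 0.05, 0.02]
curve = roc_points(cad_scores, reference)

on_curve = distance_to_roc(0.75, 5 / 6, curve)  # reader who lies on the curve
below_curve = distance_to_roc(0.5, 0.5, curve)  # reader who lies below the curve
```

A distance near zero indicates a reader whose sensitivity and specificity coincide with an operating point of the CAD software, while larger distances indicate performance further from the curve.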

To evaluate the influence of individual factors such as country of practice and clinical experience on radiologists’ performance, we employed binomial linear mixed-effects models for both inclusive and restricted reading protocols. The outcome variables comprised the number of correctly identified TB cases and the total number of X-rays interpreted. Covariates included the annual volume of TB chest X-rays reviewed and years of professional experience. An interaction term between TB X-rays read per year and experience was incorporated to assess their combined effect on accuracy. For the inclusive reading analysis, country of practice was modeled as both a fixed and random effect to capture its overall and country-specific impact on diagnostic accuracy. In contrast, for the restricted reading analysis, country was included solely as a fixed effect due to limited variability in the outcome data.
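One way to write down the model described above is sketched here. The logit link, the symbols, and the normal distribution of the random effect are assumptions made for illustration; the text specifies only a binomial mixed-effects model with the listed covariates. For radiologist $i$ practicing in country $c(i)$, let $y_i$ be the number of correctly identified cases, $n_i$ the number of X-rays interpreted, $V_i$ the annual volume of TB chest X-rays read, and $E_i$ the years of experience.

```latex
\begin{align}
  y_i &\sim \mathrm{Binomial}(n_i,\, p_i), \\
  \operatorname{logit}(p_i) &= \beta_0 + \beta_1 V_i + \beta_2 E_i
      + \beta_3 V_i E_i + \gamma_{c(i)} + u_{c(i)}, \\
  u_{c} &\sim \mathcal{N}(0,\, \sigma_u^2).
\end{align}
```

Here $\gamma_{c(i)}$ denotes the fixed effect of country and $u_{c(i)}$ a country-level random intercept. Under this reading, the restricted reading model corresponds to dropping $u_{c(i)}$, so that country enters only through $\gamma_{c(i)}$.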

All calculations were conducted using R, v3.6.0 (R Foundation for Statistical Computing, Vienna, Austria).

Data availability

Anonymized data are available upon reasonable request to the corresponding author. Chest X-ray images will not be provided as these are withheld by the corresponding author’s organization to reserve their use for product evaluations.

References

World Health Organization. Global Tuberculosis Report. (2024). https://www.who.int/publications/i/item/9789240101531

Stop TB Partnership. Global Plan to End TB 2023–2030. (2022). https://www.stoptb.org/what-we-do/advocate-endtb/global-plan-end-tb/global-plan-end-tb-2023-2030

World Health Organization. Essential Diagnostic Imaging. (2012). https://web.archive.org/web/20121023154427/http://www.who.int/eht/en/DiagnosticImaging.pdf.

Graham, S. et al. Chest radiography abnormalities associated with tuberculosis: Reproducibility and yield of active cases. Int. J. Tuberc Lung Dis. 6, 137–142 (2002).

Pinto, L. M. et al. Scoring systems using chest radiographic features for the diagnosis of pulmonary tuberculosis in adults: A systematic review. Eur. Respir J. 42, 480–494. https://doi.org/10.1183/09031936.00107412 (2013).

van Cleeff, M. R. A. et al. A comprehensive study of the efficiency of the routine pulmonary tuberculosis diagnostic process in Nairobi. Int. J. Tuberc Lung Dis. 7, 186–189 (2003).

van Cleeff, M., Kivihya-Ndugga, L., Meme, H., Odhiambo, J. & Klatser, P. The role and performance of chest X-ray for the diagnosis of tuberculosis: A cost-effectiveness analysis in Nairobi, Kenya. BMC Infect. Dis. 5, 111 (2005).

Sahu, B. & Madani, G. Imaging inequality: Exploring the differences in radiology between high- and low-income countries. Clin. Radiol. 79, 399–403 (2024).

Pomykala, K., Desai, I., Jardon, M., Naik, P. & Pool, K. L. Imaging of tuberculosis in resource-limited settings. Curr. Radiol. Rep. 7, 23 (2019).

Stop TB Partnership. AI Products for Tuberculosis Healthcare. AI4HLTH. https://www.ai4hlth.org

Creswell, J. et al. Early user perspectives on using computer-aided detection software for interpreting chest X-ray images to enhance access and quality of care for persons with tuberculosis. BMC Global Public Health. 1, 30 (2023).

Dvijotham, K. et al. Enhancing the reliability and accuracy of AI-enabled diagnosis via complementarity-driven deferral to clinicians (CoDoC). https://doi.org/10.21203/rs.3.rs-2231672/v1 (2022).

Qin, Z. Z. et al. Tuberculosis detection from chest x-rays for triaging in a high tuberculosis-burden setting: An evaluation of five artificial intelligence algorithms. Lancet Digit. Health. 3, e543–e554 (2021).

Kik, S. V. et al. Diagnostic accuracy of chest X-ray interpretation for tuberculosis by three artificial intelligence-based software in a screening use-case: An individual patient meta-analysis of global data. Preprint at https://doi.org/10.1101/2022.01.24.22269730 (2022).

Tavaziva, G. et al. Chest X-ray analysis with deep learning-based software as a triage test for pulmonary tuberculosis: An individual patient data meta-analysis of diagnostic accuracy. Clin. Infect. Dis. 74, 1390–1400 (2021).

World Health Organization. Consolidated guidelines on tuberculosis: Module 2: Screening: Systematic screening for tuberculosis disease. (2021). https://www.who.int/publications-detail-redirect/9789240022676

Qin, Z. Z. et al. Computer-aided detection of tuberculosis from chest radiographs in a tuberculosis prevalence survey in South Africa: External validation and modelled impacts of commercially available artificial intelligence software. Lancet Digit. Health. https://doi.org/10.1016/S2589-7500(24)00118-3 (2024).

Codlin, A. J. et al. Independent evaluation of 12 artificial intelligence solutions for the detection of tuberculosis. Sci. Rep. 11, 23895. https://doi.org/10.1038/s41598-021-03265-0 (2021).

Gelaw, S. M. et al. Diagnostic accuracy of three computer-aided detection systems for detecting pulmonary tuberculosis on chest radiography when used for screening: Analysis of an international, multicenter migrants screening study. PLOS Glob Public. Health. 3, e0000402 (2023).

McHugh, M. L. Interrater reliability: The kappa statistic. Biochem. Med. (Zagreb). 22, 276–282 (2012).

Luenberger, D. G. & Ye, Y. Linear and Nonlinear Programming vol. 116 (Springer US, 2008).

Sharma, N. Importance of distance metrics in machine learning modelling. Medium (2022). https://towardsdatascience.com/importance-of-distance-metrics-in-machine-learning-modelling-e51395ffe60d

Qin, Z. Z. et al. Comparing different versions of computer-aided detection products when reading chest X-rays for tuberculosis. PLOS Digit. Health. 1, e0000067 (2022).

Padyana, M. et al. HIV-Tuberculosis: A study of chest X-ray patterns in relation to CD4 count. N. Am. J. Med. Sci. 4, 221–225 (2012).

GBD 2021 HIV Collaborators. Global, regional, and national burden of HIV/AIDS, 1990–2021, and forecasts to 2050, for 204 countries and territories: The Global Burden of Disease Study 2021. Lancet HIV. 11, e807–e822 (2024).

Barrett, R. et al. User perspectives on the use of X-rays and computer-aided detection for TB. Int. J. Tuberc. Lung Dis. 26, 1083–1085 (2022).

Qin, Z. Z. et al. Early user experience and lessons learned using ultra-portable digital X-ray with computer-aided detection (DXR-CAD) products: A qualitative study from the perspective of healthcare providers. PLoS ONE. 18, e0277843 (2023).

Wako, W. G. et al. Determinants of health system diagnostic delay of pulmonary tuberculosis in Gurage and Siltie zones, South Ethiopia: A cross-sectional study. BMJ Open. 11, e047986 (2021).

Jiang, Y. et al. Duration and determinants of delayed diagnosis with tuberculosis in Shenzhen, China: A cross-sectional study. Risk Manag. Healthc Policy. 15, 1473–1481 (2022).

Moyo, S. et al. Prevalence of bacteriologically confirmed pulmonary tuberculosis in South Africa, 2017–19: A multistage, cluster-based, cross-sectional survey. Lancet Glob. Health. 22, 1172–1180. https://doi.org/10.1016/S2214-109X(22)00269-1 (2022).

World Health Organization. High priority target product profiles for new tuberculosis diagnostics: Report of a consensus meeting. (2014). https://www.who.int/publications/i/item/WHO-HTM-TB-2014.18

World Health Organization. Determining the local calibration of computer-assisted detection (CAD) thresholds and other parameters. (2021). https://tdr.who.int/activities/calibrating-computer-aided-detection-for-tb

Funding

The funders of the study (Government of Canada) were not involved in its design, data collection, analysis, interpretation, or reporting.

Author information

Contributions

The study was conceived by ZZQ, CD, MVdW and TM. Data collection was led by ZZQ, TM, SMo, FI, PM, LM, NM, KZ, SMa, and MVdW. Data cleaning and verification were done by ZZQ, TM, SMo, and FI, and data analysis and interpretation by ZZQ, TM, MVdW, JC, CD, RB, LK, and SZ. ZZQ and RB wrote the first draft of the manuscript. ZZQ, RB, SZ, JC, CD, TM, FI, SMo, YT, SS, and MVdW revised the manuscript. All authors had full access to all the data in the study and had final responsibility for the decision to submit for publication. All authors contributed to and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

Anonymized CXR data was obtained via a data-sharing request to the South African Medical Research Council (SAMRC) that was approved by the National Department of Health, South Africa. The CXR data provided had undergone automatic anonymization and hashing, ensuring no manual data handling, and was securely stored on Stop TB Partnership’s server using Secure File Transfer Protocol (SFTP). All data on the server was only accessible to co-investigators. Ethical approval was obtained from the SAMRC (EC002-3/2020) and Heidelberg (S-488/2021). During the prevalence survey, informed consent was obtained from all participants, with additional assent and parental consent for those aged 15-18 years. The study was carried out in accordance with the relevant guidelines and regulations.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Qin, Z.Z., Van der Walt, M., Moyo, S. et al. Comparing the accuracy of computer-aided detection (CAD) software and radiologists from multiple countries for tuberculosis detection in chest X-Rays. Sci Rep 15, 22540 (2025). https://doi.org/10.1038/s41598-025-06164-w

DOI: https://doi.org/10.1038/s41598-025-06164-w