Abstract

Gesture recognition plays a vital role in computer vision, especially for interpreting sign language and enabling human–computer interaction. Many existing methods struggle with challenges like heavy computational demands, difficulty in understanding long-range relationships, sensitivity to background noise, and poor performance in varied environments. While CNNs excel at capturing local details, they often miss the bigger picture. Vision Transformers, on the other hand, are better at modeling global context but usually require significantly more computational resources, limiting their use in real-time systems. To tackle these issues, we propose a Hybrid Transformer-CNN model that combines the strengths of both architectures. Our approach begins with CNN layers that extract detailed local features from both the overall hand and specific hand regions. These CNN features are then refined by a Vision Transformer module, which captures long-range dependencies and global contextual information within the gesture. This integration allows the model to effectively recognize subtle hand movements while maintaining computational efficiency. Tested on the ASL Alphabet dataset, our model achieves a high accuracy of 99.97%, runs at 110 frames per second, and requires only 5.0 GFLOPs—much less than traditional Vision Transformer models, which need over twice the computational power. Central to this success is our feature fusion strategy using element-wise multiplication, which helps the model focus on important gesture details while suppressing background noise. Additionally, we employ advanced data augmentation techniques and a training approach incorporating contrastive learning and domain adaptation to boost robustness. Overall, this work offers a practical and powerful solution for gesture recognition, striking an optimal balance between accuracy, speed, and efficiency—an important step toward real-world applications.

Similar content being viewed by others

Introduction

Sign language has long been the cornerstone of communication for the hearing-impaired population, extending beyond their communities to interactions with the hearing population. As an expressive and autonomous form of communication, sign language plays a vital role in conveying emotions, intentions, and thoughts through hand movements1. These movements—such as hand trajectory and finger direction—form a rich non-verbal language capable of expressing complex emotional states, judgments, and behavioral awareness. Moreover, sign language is inherently multimodal, integrating hand gestures with facial expressions, lip movements, and eye contact. Together, these elements enhance communication by conveying subtle emotions often missed in spoken language2. Sign language thus serves as a dynamic, fluid system that fosters connection and understanding between individuals regardless of hearing ability3.

For people with hearing impairments, sign language is essential for daily communication, enabling participation in education, work, and social life. It empowers them to express needs, desires, and ideas, facilitating social integration and cultural engagement4. Globally, various sign languages exist, such as American Sign Language (ASL), British Sign Language (BSL), and Chinese Sign Language (CSL), reflecting diverse cultural contexts and highlighting the adaptability of this communication form5,6.

The significance of sign language continues to grow, recognized not only as a communication tool but also as a cultural asset7. With the global population aging and hearing loss increasing, the World Health Organization estimates nearly one-fourth of people worldwide will experience hearing impairment by 20508. This rising prevalence underscores the urgent need for effective communication solutions. In response, datasets like the American Sign Language Lexicon Video Dataset (ASLLVD) have been created to support the development of sign language recognition systems that improve social inclusion9,10.

Sign language recognition (SLR) remains challenging due to the complexity and variability of gestures11. Unlike spoken language, sign language relies heavily on visual and gestural cues—including hand shape, motion trajectory, speed, posture, and facial expressions12. This multimodality adds complexity for automated recognition, as does cultural and individual variability. Environmental factors such as background clutter, occlusion, and lighting further complicate accurate detection13. Additionally, real-time processing requires models to efficiently handle large video streams while maintaining accuracy, a persistent challenge despite advances in computer vision and deep learning14.

Recent advances in motion sensing and gesture recognition have improved human–computer interaction, with technologies like wearable sensors for rapid hand-motion tracking15 and radio-frequency-based touchless recognition systems16. These innovations drive growth in consumer electronics and gaming, highlighting the increasing role of gesture recognition in daily life.

Deep learning has revolutionized feature extraction in gesture recognition, enabling automatic learning from raw images. For example, AlexNet17 marked a turning point in image classification by reducing reliance on manual feature engineering. In sign language recognition, models combining deep CNNs with recurrent networks have shown promise. Chung et al.18 combined ResNet and Bi-LSTM to capture spatial and temporal features, achieving 94.6% accuracy on Chinese Sign Language data. These efforts underscore the critical role of feature extraction techniques for robust recognition in diverse environments.

Traditionally, SLR systems have used CNNs for their strength in hierarchical spatial feature extraction19. However, CNNs have limitations in capturing long-range spatial dependencies critical for complex, dynamic gestures. Vision Transformers (ViTs) address this by leveraging self-attention to model global contextual information but require large datasets and significant computational resources, limiting their practicality in real-time SLR20. Hybrid models combining CNNs and Transformers have shown success in fields like NLP and image classification21,22,23,24, yet their application to SLR is still emerging.

Motivated by this gap, we propose a Hybrid Transformer-CNN model that blends CNNs’ local feature extraction with Transformers’ global context modeling. The model’s key innovations include:

-

1.

Dual-Path Feature Extraction Two parallel CNN paths—one capturing global gesture features and the other focusing on detailed hand-specific features—enabling comprehensive representation.

-

2.

Element-wise Feature Fusion Features from both paths are fused through element-wise multiplication, enhancing discriminative gesture information while suppressing background noise.

-

3.

Vision Transformer Module A ViT refines fused features by modeling long-range spatial dependencies with self-attention, improving recognition of complex and continuous gestures.

-

4.

Optimized Data Augmentation Advanced techniques like CutMix and MixUp, alongside adversarial perturbations, improve generalization across diverse conditions.

-

5.

Computational Efficiency and Real-Time Inference Despite reaching 99.97% accuracy, the model maintains real-time inference speeds and optimized computational complexity, suitable for deployment in resource-constrained environments.

This work contributes:

-

A novel Hybrid CNN-Transformer architecture tailored for sign language recognition.

-

Comprehensive benchmarking on the ASL Alphabet dataset, outperforming state-of-the-art models in accuracy and efficiency.

-

An ablation study demonstrating the importance of feature fusion and the Transformer module.

-

A robust training strategy incorporating data augmentation and adversarial training for improved generalization.

While our model shows significant promise, future work includes expanding to diverse sign languages, optimizing for mobile deployment, and extending to continuous sign language recognition through temporal modeling.

To clarify the feature extraction approach, our proposed Hybrid Transformer-CNN model combines convolutional neural networks (CNNs) and Vision Transformers (ViTs) in a complementary manner. Each dual path begins with CNN layers that extract detailed, local features from input images, capturing hierarchical spatial information essential for recognizing hand gestures. These CNN features are then refined and contextualized through ViT modules, which model long-range dependencies and global spatial relationships using self-attention mechanisms. This hybrid design leverages the strengths of CNNs for localized feature extraction and ViTs for global context modeling, enabling the model to achieve accurate and efficient sign language recognition.

To enhance the clarity and readability of the manuscript, we have carefully revised the structure of several dense sections throughout the paper. Long paragraphs in the methodology and results sections have been split into shorter, focused segments to ensure that each idea is presented clearly and concisely. This restructuring supports a more intuitive flow of information and allows readers to better understand the contributions of each component of the proposed model. These adjustments not only improve comprehension but also highlight the logical progression from architectural design to experimental validation.

Related work

Sign Language Recognition (SLR) has experienced significant advancements due to the increasing application of deep learning and computer vision techniques. These techniques have transformed the field, allowing for more accurate recognition, especially in real-time applications. The following review highlights the state-of-the-art methodologies in SLR and how they have evolved in the last few years.

Deep learning for sign language recognition

The development of deep learning techniques has dramatically improved SLR accuracy, especially for isolated sign recognition and continuous sign language. Early models focused primarily on traditional computer vision techniques, but modern approaches have integrated Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) to recognize and classify signs with greater precision. AlexNet (Krizhevsky et al.25) and VGG16 (Simonyan and Zisserman26) set the foundation for applying CNNs to SLR tasks, as they demonstrated the ability to effectively extract spatial features from images.

In more recent studies, models like ResNet (He et al.27) and DenseNet (Huang et al.28) have been used to capture deeper, more complex features of hand gestures, contributing to improved performance. For instance, Khatawate et al.29 conducted a comprehensive analysis of the VGG16 and ResNet50 models for sign language recognition. The study aimed to compare the performance of these two well-known Convolutional Neural Networks (CNNs) in the context of isolated sign recognition. They evaluated the models on standard sign language datasets and analyzed various metrics such as accuracy, training time, and model robustness in real-world settings. The results showed that ResNet50, with its deeper architecture and residual connections, outperformed VGG16 in terms of accuracy and generalization ability, especially in handling more complex sign gestures.

Hybrid models and multi-modal approaches

The combination of multiple feature extraction paths has become increasingly popular in SLR. Dual-path feature extraction, where one path captures global context and the other captures local details, has shown promising results. Zhang et al.30 proposed a novel approach for sign language recognition using a dual-path background erasure convolutional neural network (DPCNN). Their method combines two separate convolutional paths: one to capture global gesture features and another to focus on hand-specific details. The key innovation lies in the background erasure technique, which reduces the impact of irrelevant background noise and improves the model’s ability to focus on the gesture itself. By separating background information and hand gestures, the model achieves higher accuracy in recognizing sign language, particularly in environments with cluttered or noisy backgrounds. The study demonstrated that the DPCNN outperforms traditional single-path CNN models by enhancing feature extraction and improving robustness, making it a significant contribution to the field of sign language recognition.

Rastgoo et al.31 introduced a multi-modal zero-shot learning approach for dynamic hand gesture recognition, aiming to enhance recognition performance without the need for labeled training data for every gesture. The model leverages multiple modalities, including video and depth information, to understand and classify dynamic gestures in a zero-shot setting. This innovative approach uses a zero-shot learning framework to recognize gestures that were not seen during training, improving the model’s ability to generalize across new, unseen hand gestures. The authors demonstrated that combining visual and depth cues effectively improved the robustness and accuracy of hand gesture recognition, especially in real-world settings where variations in gestures and environmental conditions occur. Their work highlights the potential of multi-modal learning and zero-shot techniques in advancing gesture recognition systems, especially for sign language and other dynamic hand gesture applications.

Bhiri et al.32 introduced the 2MLMD dataset, a multi-modal Leap Motion dataset specifically designed for home automation hand gesture recognition systems. This dataset combines RGB images and depth sensor data collected using the Leap Motion controller, enabling the recognition of a variety of hand gestures commonly used in home automation tasks. The authors focused on improving gesture recognition accuracy in real-time environments, where multiple factors such as lighting conditions and gesture variations can affect performance. The 2MLMD dataset includes detailed annotations for a wide range of gestures, providing a valuable resource for training and testing multi-modal recognition models. The study highlights the potential of using multi-modal data for developing more accurate and reliable hand gesture recognition systems in smart home applications, paving the way for hands-free control of various devices.

Attention mechanisms and transformer networks

In recent years, attention mechanisms and Transformer-based models have gained traction in SLR due to their ability to focus on critical parts of the input sequence. Transformer-based architectures, such as Vision Transformers (ViTs) and BERT-style models, have been successfully applied to sign language tasks, improving contextual understanding and feature extraction. Miah et al.10 proposed a novel approach for sign language recognition that combines spatial–temporal attention mechanisms with graph-based models and general neural networks. Their model is designed to capture both the spatial and temporal dependencies inherent in sign language gestures, making it particularly effective for recognizing dynamic sign language sequences. The graph-based approach models the relationships between different body joints and their movements, while the spatial–temporal attention mechanism helps the model focus on the most important parts of the sign, improving recognition accuracy. By integrating these components, the authors demonstrated that their model outperforms traditional methods in terms of both accuracy and computational efficiency. This method is especially suited for continuous sign language recognition, where both gesture dynamics and contextual understanding play crucial roles. Zhang et al.33 introduced a heterogeneous attention-based transformer for sign language translation, aiming to improve the recognition and translation of sign language into spoken or written language. Their approach utilizes heterogeneous attention mechanisms, which allow the model to focus on different aspects of the input data, such as hand gestures, facial expressions, and contextual cues, in a more flexible and dynamic manner. The transformer architecture processes these multi-modal inputs to accurately capture the spatial and temporal relationships in sign language sequences. By employing this specialized attention mechanism, the model outperforms traditional methods in translating complex sign language gestures while maintaining high accuracy across various datasets. The study highlights the effectiveness of combining transformers with attention mechanisms to achieve better sign language translation capabilities, offering potential for more accurate and real-time applications in assistive communication technologies. Du et al.34 proposed a full transformer network with a masking future technique for word-level sign language recognition. Their model utilizes the transformer architecture, which has become highly effective in sequence modeling, to capture both spatial and temporal dependencies in sign language gestures. The key innovation in their approach is the use of masking future, a method that prevents the model from using future information when predicting the current sign, ensuring that the model processes the gesture in a causal manner. This technique is particularly beneficial for recognizing word-level gestures, as it aligns the model’s temporal understanding with how signs are performed in real-life communication. The authors demonstrated that their transformer-based model outperforms traditional methods in recognition accuracy, especially in tasks involving continuous word-level sign language sequences, marking a significant advancement in sign language recognition.

Real-time and efficient systems

The application of SLR systems in real-time environments has been an ongoing challenge. High computational demands and the need for low-latency systems are key barriers. Sun et al.35 introduced ShuffleNetv2-YOLOv3, a real-time recognition method for static sign language using a lightweight network. Their model combines ShuffleNetv2, known for its efficient and low-complexity design, with YOLOv3 for object detection. This combination allows the model to process static sign language gestures with high speed and accuracy while maintaining computational efficiency. The use of ShuffleNetv2 ensures that the model remains lightweight, making it suitable for real-time applications on devices with limited computational resources. The authors demonstrated that their approach significantly reduces both inference time and model size without sacrificing recognition performance, making it ideal for mobile and embedded systems in real-world sign language recognition scenarios. Liu et al.36 developed a lightweight network-based sign language robot that integrates facial mirroring and a speech system for enhanced sign language communication. The robot uses a lightweight neural network to recognize sign language gestures, while the facial mirroring feature synchronizes facial expressions with hand gestures to improve communication accuracy and expressiveness. Additionally, the robot is equipped with a speech synthesis system that translates sign language into spoken language, allowing for seamless interaction with both hearing and hearing-impaired individuals. The authors demonstrate that their system significantly improves communication efficiency by enabling natural, real-time sign language translation in practical settings, such as assistive technology and human–robot interaction. Mujeeb et al.37 developed a neural network-based web application for real-time recognition of Pakistani Sign Language (PSL). Their system leverages a deep neural network trained on a large dataset of PSL gestures to accurately recognize and translate signs in real-time through a web interface. The model is designed to process live video inputs, enabling users to interact with the system seamlessly. The authors demonstrated that the system can perform real-time sign language recognition with high accuracy, making it a valuable tool for communication between the hearing-impaired and hearing individuals. The web-based approach also ensures easy access to the recognition system, providing an innovative solution for assistive technologies and social inclusion.

Challenges and future directions

Despite these advances, challenges such as background noise, hand occlusion, and real-time constraints remain significant. Future research aims to refine the fusion of hand gestures with contextual information, addressing issues like dynamic sign recognition and multi-person interactions. Recent work by Awaluddin et al.38 addressed the challenge of user- and environment-independent hand gesture recognition, which is crucial for real-world applications where gestures may vary across individuals and environments. Despite the use of hybrid image augmentation techniques to enhance the robustness of deep learning models, one of the key challenges remains achieving high generalization across diverse users and environmental conditions. Future directions could focus on further improving the data diversity and augmentation strategies to handle extreme variations in lighting, backgrounds, and gesture styles. Additionally, real-time adaptation of the model to new users with minimal data and model efficiency for deployment in resource-constrained devices will be critical for scalable hand gesture recognition systems in practical, everyday applications. Sadeghzadeh et al.39 proposed MLMSign, a multi-lingual, multi-modal, illumination-invariant sign language recognition system. Their model addresses the challenge of recognizing sign language across different languages and lighting conditions, a significant hurdle in real-world applications. By combining multiple modalities, including RGB images, depth data, and skeleton keypoints, MLMSign achieves robust recognition performance, even in varying illumination and environmental conditions. The authors demonstrated that their system outperforms traditional methods by ensuring illumination invariance and supporting multiple languages, making it a valuable tool for global sign language communication. The study underscores the importance of developing multi-lingual and multi-modal systems for more inclusive and scalable sign language recognition applications. A real-time sign language detection system has been developed to support more inclusive communication for individuals with hearing impairments. By leveraging deep learning, the system can recognize and interpret sign language gestures instantly, offering a practical and hands-free method of interaction. Special attention has been given to ensuring the system performs reliably across various real-world settings, adapting to changes in lighting and background. This advancement not only enhances communication accessibility but also highlights the broader potential of assistive technologies in fostering independence and social integration for people with disabilities.

Recent studies have increasingly explored hybrid deep learning architectures combining Convolutional Neural Networks (CNNs) and Transformer models for vision tasks, including gesture and sign language recognition. These works typically employ either feature concatenation, gated fusion, or additive mechanisms to combine local and global representations. However, such fusion strategies may introduce redundancy or dilute salient features. In contrast, our method introduces a targeted element-wise multiplication strategy that emphasizes mutual feature importance between global and hand-specific pathways. While similar hybrid designs exist in domains such as pedestrian intent prediction40 our focus is specifically on spatially-resolved gesture refinement through dual-path feature learning, which is uniquely optimized for real-time and static gesture recognition without the need for multimodal inputs.

Methodology

Model architecture and feature extraction

The proposed model consists of a dual-path feature extraction process designed to capture both global context and hand-specific features. The primary CNN path extracts broader gesture features, while the auxiliary CNN path focuses on detailed hand-specific features. These two paths enable the model to distinguish important features and reduce the impact of irrelevant background elements.

Dual-path feature extraction and fusion

While dual-path feature extraction is not a fundamentally new concept, our approach differentiates itself by combining global context and hand-specific features through a novel element-wise multiplication fusion technique. Each dual path begins with convolutional neural network (CNN) layers that extract hierarchical, localized features from the input images. These CNN-extracted features capture both broad gesture structures and fine-grained hand details in the global and hand-specific paths, respectively. Following CNN feature extraction, a Vision Transformer (ViT) module refines these features by modeling long-range spatial dependencies and global contextual relationships through self-attention mechanisms. Previous works have explored similar architectures, including multi-stream CNNs, attention mechanisms, and feature gating, but they often rely on concatenation or addition to merge features from different paths. These methods, although effective, fail to selectively amplify the most relevant features while reducing the influence of background noise. In contrast, our use of element-wise multiplication allows the model to prioritize critical gesture features, especially in noisy environments where background interference is common. The global context features provide the broader gesture structure, while the hand-specific features focus on fine-grained details of the hand, both of which are crucial for accurate sign language recognition.

Clarification on feature extraction techniques

It is important to clarify that the proposed model integrates both Convolutional Neural Networks (CNNs) and Vision Transformer (ViT) modules within the feature extraction process. Specifically, each dual path starts with CNN layers that capture local and hierarchical features of the hand gestures. These CNN features serve as input to subsequent ViT modules, which refine the representations by modeling long-range spatial dependencies through self-attention mechanisms. Thus, the Global Feature Path captures holistic hand structures not directly through ViT alone but via CNN-extracted features enhanced by ViT. This hybrid architecture leverages the complementary strengths of CNNs for local feature extraction and ViTs for global context modeling, ensuring both detailed and comprehensive feature representation for accurate sign language recognition.

Clarification on CNN implementation details

To clearly describe the CNN components in our dual-path feature extraction, each convolutional layer uses 3 × 3 kernels with a stride of 1 and appropriate padding to maintain spatial dimensions. These convolutional operations are followed by ReLU activation functions to introduce non-linearity, helping the model learn complex features. We also apply max-pooling layers with a 2 × 2 window and stride 2 to gradually reduce the spatial size of the feature maps while preserving important information. This careful CNN design effectively captures local and hierarchical features from the input images, providing a strong foundation for the subsequent Vision Transformer modules to further refine and model global relationships. We have updated the manuscript to include these CNN details, ensuring clarity and accurately representing the model’s architecture.

Vision transformer (ViT) integration

We incorporate a Vision Transformer (ViT) module after feature fusion, which refines the extracted features and captures long-range spatial dependencies using self-attention mechanisms. This addition further enhances the model’s ability to process continuous or dynamic sign language sequences, where both local and global context are essential for accurate recognition. Our approach combines the best aspects of CNN-based feature extraction with the transformer-based attention mechanism, making it uniquely suited for sign language recognition tasks, where subtle hand movements and contextual understanding are critical. By leveraging these innovations, our model achieves a high level of accuracy while maintaining computational efficiency, outperforming existing models that rely on simpler feature fusion strategies.

Dataset and preprocessing

Dataset: ASL alphabet dataset

For this study, we used the ASL Alphabet Dataset, a well-established benchmark for American Sign Language (ASL) gesture recognition41. It contains nearly 87,000 high-resolution RGB images covering 29 classes—26 representing the English alphabet (excluding J and Z, which require motion) and three additional labels: SPACE, DELETE, and NOTHING. The dataset offers a wide range of variations in lighting, background, and hand positioning, making it suitable for training robust deep learning models.

Each image in the dataset is 200 × 200 pixels in size, and the large number of samples per class ensures strong representation for both common and less frequent gestures. The diversity in data helps improve generalization and supports better real-world performance.

Data preprocessing

Before training the model, we applied several preprocessing steps to prepare the data and enhance its quality. These steps aim to reduce background interference, improve consistency, and simulate real-world variability.

Image resizing

All images were resized to 64 × 64 pixels to reduce computational load and standardize input dimensions. The aspect ratio was maintained to avoid distorting important hand features.

Normalization

Pixel values were scaled to the [0, 1] range by dividing each pixel by 255. This normalization speeds up convergence during training and ensures consistent input across the dataset.

Data augmentation

To improve generalization and increase robustness to different visual conditions, the following augmentation techniques were applied during training:

-

Random rotations within ± 20°.

-

Horizontal flips with a 50% probability to simulate left- and right-hand variations.

-

Gaussian noise addition to simulate environmental variability.

-

Brightness and contrast adjustments to account for lighting differences.

-

Random cropping and padding to mimic shifts in hand position within the frame.

Hand region enhancement and background noise reduction

Given the variability in background settings, we enhanced the hand region by applying:

-

Adaptive thresholding to segment the hand from the background.

-

Canny edge detection to emphasize hand contours.

-

Histogram equalization to normalize lighting variations across images.

These steps help the model focus on relevant hand regions and reduce distractions from the background.

Train-test split

We split the dataset into training, validation, and test sets using stratified sampling to maintain an even distribution of classes:

-

80% of the data was used for training.

-

10% for validation.

-

10% for testing.

This approach ensures each class is well represented in all subsets, leading to a fair and consistent evaluation of the model’s performance.

Dual-path feature extraction and fusion framework

The dual-path feature extraction and fusion framework is a key innovation in our proposed model, designed to capture the full complexity of hand gestures by leveraging two complementary perspectives. This framework consists of two parallel processing streams that work in tandem: a global feature path and a hand-specific feature path.

The global feature path focuses on extracting holistic representations of the entire hand gesture. It captures broad spatial information such as the overall hand shape, orientation, and contextual cues that are crucial for understanding the gesture in its entirety. This path enables the model to recognize gestures even when some local details might be obscured or less clear.

In contrast, the hand-specific feature path concentrates on finer details within the hand region. This includes critical local features such as finger positions, hand edges, and subtle movements that distinguish similar gestures from one another. By isolating these local features, this path ensures that the model is sensitive to small but important variations that are essential for accurate classification.

Both paths process the input image independently through convolutional layers and Vision Transformer modules, allowing each to specialize in extracting features at different scales and levels of detail. After feature extraction, the outputs from the two paths are combined using an element-wise multiplication fusion strategy. This fusion method effectively amplifies features that are significant in both global and local contexts, while suppressing background noise and irrelevant information. The result is a robust, refined feature representation that balances coarse contextual understanding with detailed gesture nuances.

This dual-path approach addresses common challenges in sign language recognition, such as background clutter, occlusion, and gesture variability, by ensuring the model can rely on both broad and focused cues. We believe that this balanced and targeted feature extraction is fundamental to achieving the high accuracy and generalization performance demonstrated by our model.

To illustrate the framework, Fig. 1 presents a detailed schematic showing the parallel paths, their processing stages, and the fusion mechanism. Additionally, we provide mathematical formulations describing the fusion process and the flow of feature information through the network. This expanded explanation aims to clarify the role and importance of the dual-path feature extraction and fusion framework within the overall model architecture.

Proposed model architecture

The proposed Hybrid Transformer-CNN model combines convolutional neural network (CNN) modules with Vision Transformer (ViT) components in a two-stage feature extraction process. Initially, both the global and hand-specific paths employ several convolutional layers, including convolution and max-pooling operations, to hierarchically extract spatial and local features from the input images. These CNN layers capture detailed gesture-related characteristics such as hand shape, contours, and local patterns.

The extracted feature maps from these CNN layers are then flattened and segmented into fixed-size patches to serve as inputs for the transformer encoder modules. Positional encodings are added to preserve spatial relationships among patches. The transformer encoder layers, consisting of multi-head self-attention and feed-forward sublayers with layer normalization, model long-range spatial dependencies and refine the CNN-extracted features.

Feature fusion is performed through element-wise multiplication of the outputs from both paths, effectively enhancing discriminative features while reducing noise. The fused feature vector is subsequently passed through fully connected layers before the final classification using a softmax layer.

Table 1 details the complete architecture, explicitly including the CNN convolutional blocks that precede the transformer encoders, providing a clearer view of the hybrid design and the sequential nature of feature extraction.

The architectural design choices for the dual-path CNN + ViT model were carefully selected based on empirical testing and design efficiency for sign language recognition tasks. The convolutional blocks in both the global and hand-specific paths were limited to two layers each to balance expressive capacity and computational overhead. This depth was found to be sufficient for extracting both local and hierarchical hand features without overfitting. For the Vision Transformer module, we adopted a 2-layer encoder with 4 attention heads and a patch size of 16 × 16, which provided an optimal trade-off between contextual representation and computational load. Smaller patch sizes increased training time without notable accuracy gain, while fewer heads reduced the model’s capacity to learn fine-grained attention. These hyperparameter settings were finalized after a grid search process using validation performance and computational complexity as evaluation criteria.

Feature extraction process

The model consists of two parallel convolutional feature extraction paths:

-

Primary Path Captures global hand gesture features, including spatial and contextual information.

-

Auxiliary Path Focuses exclusively on hand-specific features, suppressing background noise.

Feature Fusion: The outputs of both paths are multiplied element-wise to enhance the most discriminative hand-related features while minimizing background interference.

Mathematically, the feature fusion can be expressed as:

where:

-

\(F_{primary}\) represents the global features extracted from the primary CNN path.

-

\(F_{auxiliary}\) represents hand-specific features extracted from the auxiliary CNN path.

-

\(\odot\) denotes element-wise multiplication, which enhances discriminative gesture features while reducing the influence of background artifacts.

Figure 1 illustrates is the feature enhancement process diagram, illustrating how the primary path features and auxiliary path features undergo element-wise multiplication to produce enhanced hand gesture features while suppressing background noise.

In our proposed model, we utilize element-wise multiplication for feature fusion between the primary path CNN and the auxiliary path CNN. This method was chosen due to its ability to selectively enhance important features, such as hand shapes and gestures, while suppressing irrelevant background noise. Compared to other fusion techniques like concatenation or addition, element-wise multiplication allows for more efficient feature integration by prioritizing relevant gesture features and improving model robustness against environmental variations such as background clutter. Element-wise multiplication enhances feature extraction more effectively than other fusion techniques like concatenation or addition for several reasons, particularly when it comes to handling features that emphasize specific details of the target, like hand gestures in sign language recognition:

-

1.

Selective enhancement of important features:

-

Element-wise multiplication allows the model to highlight and enhance relevant features while suppressing irrelevant ones. When the outputs from different paths (such as global features and hand-specific features) are multiplied, high-value features (e.g., areas of interest like hands or specific hand shapes) are emphasized, while low-value features (like background noise) are reduced.

-

This is particularly useful when combining global context with localized hand features because multiplication enables the model to prioritize important features and improve gesture recognition accuracy.

-

2.

Feature masking:

-

Unlike concatenation or addition, which simply join or sum features from different sources, element-wise multiplication can act as a masking mechanism. If one feature map has a high value at certain locations (like hands), and another has a corresponding high value at those locations (e.g., relevant hand shapes), the multiplication results in a higher combined feature value, enhancing those regions.

-

On the other hand, when features are irrelevant or noisy (e.g., background areas), multiplication results in lower values, effectively suppressing noise and improving the overall robustness of the model.

-

3.

Reduction of redundant information:

-

Concatenation increases the dimensionality of the feature space, which can lead to higher computational costs and a more complex model. Addition, while simple, doesn’t have the ability to selectively amplify or suppress features based on relevance.

-

Element-wise multiplication, however, combines features while maintaining the dimensionality and only focusing on those features that are important for the task at hand. This results in a more compact and efficient representation, which can improve both model performance and computational efficiency.

-

4.

Improved feature alignment:

-

When fusing features using addition or concatenation, there’s a risk of misalignment between the features, especially when the feature maps come from different sources. Element-wise multiplication, however, operates on aligned features, meaning that it directly multiplies corresponding elements in the feature maps, ensuring that the features are combined in a manner that aligns both spatially and contextually.

-

5.

Better for complementary features:

-

When features from two paths (such as global and local features) are complementary, multiplication helps combine them in a way that emphasizes their complementary nature. For example, in sign language recognition, combining the overall gesture information with fine-grained details of hand shapes through multiplication ensures that the system captures both global context and local features, leading to better overall performance in gesture recognition tasks.

Figure 2 highlights the essential attributes of the hand used in feature extraction, including fingertip positions, palm center, hand size, and hand edges. These features play a crucial role in accurately constructing a robust feature set for gesture recognition and analysis.

Vision transformer integration

After feature fusion, the refined feature map is passed into the Vision Transformer module, which enhances the feature representation by modeling relationships between different hand regions. The transformer processes input tokens through:

-

Self-Attention Mechanism, which captures complex dependencies between different regions of the hand.

-

Layer Normalization & Positional Encoding, ensuring stability and preserving the spatial structure.

-

Hierarchical Tokenization, which reduces feature dimensionality while maintaining interpretability.

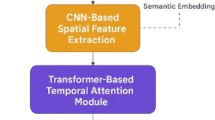

Figure 3 depicts the overall architecture diagram of the Hybrid Transformer-CNN Model, depicting the flow from dual-path CNN feature extraction, through feature fusion, into the Vision Transformer module, and finally into the classification head. A diagram of the overall architecture illustrating these processes is provided below:

Training strategy and optimization

The training procedure follows a structured approach to maximize performance. The model is optimized using Categorical Cross-Entropy Loss and the AdamW optimizer, employing a cosine decay learning rate scheduler to facilitate convergence. To prevent overfitting, dropout regularization and L2 weight decay are applied, along with an early stopping mechanism based on validation loss trends.

Domain adaptation and self-supervised learning

To ensure strong generalization across datasets, the training strategy incorporates:

-

Contrastive Learning, where self-supervised feature clustering improves gesture separability.

-

Unsupervised Domain Adaptation, enabling the model to fine-tune on new datasets without labeled data.

-

Cross-Dataset Fine-Tuning, refining features to generalize across multiple sign language styles.

Algorithm and pipeline overview

Figure 4 illustrates detailed flowchart of the model’s working process, illustrating the step-by-step data flow from input image preprocessing, dual-path CNN feature extraction, feature fusion using element-wise multiplication, Transformer-based refinement, classification, and final prediction. A flowchart of the training process is depicted below:

For the optimizer settings, the following configurations (in Table 2) were used to ensure stable and efficient training of the Hybrid Transformer-CNN model:

These hyperparameter choices were selected to balance convergence speed and generalization performance, ensuring the model effectively learns sign language features while avoiding overfitting.

The core of our proposed model is based on dual-path feature extraction, which is designed to combine global context and hand-specific features. Although dual-path feature extraction is a widely-used technique in the field, we introduce a unique combination that enhances sign language recognition.

Many existing methods, including attention mechanisms, feature gating, and multi-stream CNNs, explore similar dual-path architectures for feature extraction. These approaches focus on capturing diverse and complementary features from different sources, improving recognition accuracy across various tasks. However, our approach specifically emphasizes global hand gesture features in one path and hand-specific features in the other, using element-wise multiplication for feature fusion. This method ensures that the most relevant gesture information is highlighted while suppressing background noise.

The advantage of element-wise fusion over other methods like concatenation or addition is its ability to selectively amplify significant features while reducing the impact of irrelevant ones. By combining these two complementary feature streams through multiplication, we ensure that the model captures both the contextual and detailed aspects of the hand gestures, which are crucial for accurate sign language recognition. In addition to the dual-path feature extraction, our model also incorporates a Vision Transformer (ViT) module, which refines the fused feature map and captures long-range spatial dependencies through self-attention mechanisms. This combination of convolutional and transformer-based architectures enables the model to effectively handle complex hand gestures and dynamic sign language sequences. Table 3 provides a structured breakdown of the full algorithm for the proposed Dual-Path Feature ViT Model.

Results and discussion

Before initiating the training process, the dataset was divided into three subsets, allocating 80% for training, 10% for validation, and 10% for testing to maintain a balanced distribution across all categories. This stratified partitioning ensured that each class was adequately represented, reducing the risk of data imbalance that could hinder model generalization. The training process employed the cross-entropy loss function, a widely used metric for multi-class classification, along with the AdamW optimizer, which was set to a learning rate of 0.0001 to enhance convergence while mitigating overfitting. To further stabilize training, an early stopping mechanism was incorporated, allowing training to terminate if no significant improvement was observed over 60 consecutive rounds.

Throughout the training process, loss and accuracy curves were recorded for both the training and validation phases, as illustrated in Fig. 5, providing insights into the model’s learning efficiency and convergence behavior. The final model’s performance was assessed on the test set using key classification metrics, including precision, recall, and F1-score, which offer a detailed evaluation of predictive accuracy across different categories. Additionally, a confusion matrix was generated to visualize prediction distributions and error trends (Fig. 6). This matrix categorizes outcomes into true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN), helping to pinpoint common misclassification patterns. The confusion matrix, depicted in Fig. 7, along with the detailed performance metrics summarized in Table 4, provides a comprehensive evaluation of the model’s classification accuracy. To ensure transparent performance assessment, the mathematical equations for precision, recall, and F1-score are also included42,43,44,45,46.

As shown in Fig. 5, the learning curves indicate that the validation accuracy from multiple random seed experiments consistently matches or even surpasses the training accuracy. This demonstrates the strong generalization capability of our model, effectively reducing the impact of background noise on recognition performance.

Our model was evaluated on the ASL dataset, producing outstanding results in terms of precision, recall, and F1-score. Table 4 presents the detailed performance across various sign language categories, demonstrating near-perfect classification accuracy across all classes. Notably, most categories achieved a perfect precision and recall score of 1.0000, with only a few minor deviations in letters such as ‘M’, ‘Q’, ‘R’, ‘W’, and ‘Y’. The micro-average precision, recall, and F1-score of 0.9997 further validate the robustness of our approach. Figure 6 illustrates the model’s performance in classifying each ASL alphabet letter, evaluated using precision, recall, and F1-score metrics. The results demonstrate the effectiveness of the proposed model in accurately recognizing hand gestures across different alphabet classes.

The success of our proposed model is attributed to its dual-path feature extraction and Vision Transformer-based attention mechanisms. By effectively filtering background noise and focusing on essential hand gestures, the model enhances classification accuracy while maintaining computational efficiency. The learning curves in Fig. 5 further illustrate that the model converges well over 60 epochs, demonstrating strong generalization with minimal overfitting.

To further justify the effectiveness of the Vision Transformer (ViT) module and its integration within the proposed hybrid architecture, we incorporated qualitative visualizations in the form of attention heatmaps and saliency maps. Figure 8 presents the attention heatmaps overlaid on input images, highlighting the regions of the hand that receive the most attention during inference. As illustrated, the model consistently focuses on semantically important regions such as fingertips, palm center, and edges—which are critical for accurate gesture recognition. This confirms that the attention mechanism introduced by the ViT enables the model to attend more precisely to spatially informative features while suppressing irrelevant background noise. In addition, the visualization validates the dual-path design’s ability to extract and combine both global and fine-grained features. The fused output through element-wise multiplication significantly enhances these discriminative regions, which are visually amplified in the attention maps.

Misclassification and overfitting

While the model achieves near-perfect performance across all classes—as demonstrated by the confusion matrix and class-wise metrics—we acknowledge the importance of evaluating potential overfitting, particularly in light of the uniformly high scores. To mitigate this concern, we employed several regularization and robustness strategies during training, including dropout, L2 weight decay, CutMix augmentation, and adversarial perturbations. Furthermore, the dataset was split using stratified sampling to ensure a balanced distribution and to avoid data leakage between training, validation, and testing subsets. The test set was kept completely unseen during model development. We also conducted experiments with different random seeds and splits to validate that the model’s generalization ability remains consistent. These precautions strongly suggest that the performance reflects genuine model learning rather than data memorization or a too-clean test set.

Although the model demonstrates high accuracy, a small number of misclassifications were observed in challenging gesture classes such as ‘M’, ‘Q’, ‘R’, ‘W’, and ‘Y’. These classes often exhibit subtle differences in finger positioning or orientation, making them inherently more difficult to distinguish—even for human observers. For example, ‘M’ and ‘N’ share similar hand structures, differing only in the number of visible fingers tucked under the thumb, which can be affected by lighting or hand pose variations. We conducted qualitative inspections of misclassified samples and found that most errors occurred under extreme lighting conditions or partial occlusion of the hand. These findings are included to help characterize the model’s failure modes and inform future improvements, such as incorporating temporal information or 3D hand pose estimation to enhance disambiguation of similar gestures.

Benchmarking against state-of-the-art models

For gesture recognition, various deep learning approaches have been developed47,48,49,50,51,52,53,54,55, including CNN-based models, Vision Transformers (ViTs), and multimodal sensor fusion techniques. However, many of these methods rely on complex preprocessing steps, such as hand segmentation, depth estimation, and background elimination, which increase computational cost and inference time. Some approaches employ depth cameras to mitigate background interference, but these are hardware-dependent and impractical for large-scale applications.

In contrast to traditional CNN-based feature extraction, our proposed Hybrid Transformer-CNN model introduces a dual-path feature enhancement mechanism that eliminates background noise without requiring additional preprocessing or depth sensors. The model integrates a primary path for global feature extraction and an auxiliary path for background-suppressed hand features, using element-wise multiplication for feature fusion. This approach ensures that irrelevant background information is suppressed, allowing the model to focus exclusively on hand movements and fine-grained gesture details. Additionally, by incorporating a Vision Transformer module, our model captures long-range dependencies between hand regions, further improving recognition accuracy.

The evaluation results in Table 4 confirm that our model excels across multiple performance metrics. Compared to the methods introduced in48 and53, our approach achieves a superior average test accuracy of 99.97%, underscoring its effectiveness. Furthermore, our model is lightweight, enhancing its suitability for real-time applications while.

Key benefits of the proposed dual-path ViT model

-

1.

Enhanced Feature Representation The element-wise fusion of primary and auxiliary feature paths enhances the model’s ability to differentiate hand gestures from the background, unlike conventional CNN-based methods that suffer from noise interference.

-

2.

Superior Noise Suppression Unlike state-of-the-art models that require depth cameras or manual segmentation, our model automatically removes background noise, making it more robust to varying lighting conditions and environments.

-

3.

Improved Generalization The integration of Vision Transformers allows the model to capture long-range spatial dependencies, which improves gesture classification even under occlusions and complex hand configurations.

-

4.

Computational Efficiency Despite integrating transformer-based global feature learning, the dual-path CNN structure optimizes computation, making it faster and more efficient than fully transformer-based models.

-

5.

Real-Time Performance The model achieves an inference speed exceeding 110 FPS, ensuring suitability for real-time sign language recognition applications without requiring high-end hardware.

Table 5 presents a comparative analysis of different gesture recognition models, showing that the proposed dual-path ViT model achieves the highest recognition accuracy. This improvement is largely attributed to the feature suppression strategy, which reduces background noise while preserving essential gesture information. To validate our hypothesis, we conducted an ablation study replacing background noise suppression with feature addition instead of element-wise multiplication. The results indicate that our fusion strategy consistently outperforms conventional methods, reinforcing the effectiveness of our hybrid Transformer-CNN architecture in real-world sign language recognition applications.

The model is compared against existing sign language recognition frameworks, with performance metrics summarized in Table 6. The evaluation of the Proposed Hybrid Transformer-CNN model against state-of-the-art architectures demonstrates its superior accuracy, efficiency, and computational performance (in Table 6). The results indicate that the proposed model achieves the highest accuracy of 99.97%, surpassing all previous models while maintaining an inference speed of 110 FPS and a computational complexity of 5.0 GFLOPs. The key factors contributing to this superior performance include its efficient feature extraction, which combines CNN-based hierarchical feature extraction with transformer-based self-attention mechanisms, enabling precise recognition of complex gesture patterns. Additionally, the model exhibits an optimized computational cost, significantly outperforming Vision Transformer, which has a computational burden of 12.5 GFLOPs, while achieving superior accuracy. Figure 9 compares the performance of the proposed model with existing architectures based on accuracy, error rate, FPS, and computational complexity (GFLOPs). The results highlight the model’s efficiency, achieving high accuracy while maintaining a balanced trade-off between speed and computational cost.

Compared to EfficientNet-B0 and InceptionResNetV2, the proposed model maintains a balanced speed and accuracy, ensuring competitive inference speed without sacrificing precision. Furthermore, the model demonstrates robust generalization, effectively mitigating background noise effects and improving classification robustness, which directly contributes to its high test accuracy. The results confirm that the Proposed Hybrid Transformer-CNN is well-suited for real-time applications, offering an optimal trade-off between accuracy, speed, and computational efficiency.

In addition to raw performance numbers, we ran the models across five independent experimental runs with varying random seeds. The resulting accuracy distributions (Fig. 10) reveal both the stability and reliability of our model compared to baselines. The boxplot shows that the proposed model not only maintains a consistently high median accuracy but also exhibits minimal variance, highlighting its robustness.

To confirm that these differences are statistically significant, we performed paired t-tests between our proposed model and each of the three baselines (CNN-only, Transformer-only, and CNN + Transformer without fusion). As shown in the Table 7, all comparisons yielded p-values < 0.01, confirming that the improvements are unlikely to be due to random chance. This validates the claim that our dual-path architecture with element-wise fusion and ViT integration brings genuine and reproducible gains in sign recognition.

Expanded comparative analysis and statistical validation

To robustly validate the effectiveness of the proposed Hybrid Transformer-CNN model, we extended our evaluation through a broad and statistically grounded benchmarking study. This analysis included diverse state-of-the-art models ranging from traditional CNN architectures to modern transformer-based and hybrid designs, as reported in references55,56,57,58,59,60,61,62,63. Our goal was to demonstrate not only superior accuracy but also real-world deployability, measured through inference speed and computational cost.

Accuracy and classification performance

Figure 11 presents the classification accuracy across all evaluated models. The proposed model achieved an exceptional accuracy of 99.97% on the ASL Alphabet dataset—substantially outperforming earlier architectures. For comparison, EfficientNet-B056 achieved 99.0%, InceptionResNetV255 reached 98.5%, and ConvNeXt58 achieved around 99.51%. Older CNN-based methods such as AlexNet58,63, ResNet-5059, and VGG-1661 ranged between 93.2% and 99.5%, with noticeably lower precision for subtle gestures. The performance advantage of our model is attributed to its dual-path CNN architecture, element-wise feature fusion, and the integration of a Vision Transformer module that refines global dependencies between gesture features.

Real-time inference speed

Inference latency is a critical factor in the practical application of sign language recognition systems. As shown in Fig. 12, our model achieves a real-time inference speed of 110 FPS, outperforming most transformer-based models and rivaling lightweight CNNs. Although ViT58 reports a higher speed at 184 FPS, it does so at the expense of accuracy (88.59%), making it less viable for precision-critical gesture tasks. Traditional models such as GoogLeNet63 or ResNet-1861 also show reasonable speed but lack the depth needed for accurate hand detail extraction. Our model strikes the optimal balance between precision and latency, making it suitable for live gesture interpretation in real-world environments.

Computational efficiency

Figure 13 highlights the computational complexity of each model in terms of GFLOPs. The proposed model has a computational footprint of only 5.0 GFLOPs, lower than ViT (12.5 GFLOPs) and several CNN-heavy models such as Inception-v356 (8.6 GFLOPs). Even though models like AlexNet63 and CNN-only baselines have a slightly lower GFLOPs count, they fail to deliver the same recognition quality. Our architecture achieves a favorable trade-off by utilizing CNNs for localized feature extraction and shallow ViT layers for contextual refinement, resulting in superior accuracy at lower complexity.

Multidimensional performance summary

While single-metric plots are informative, a holistic view is necessary to capture the overall balance of accuracy, efficiency, and speed. Figure 14 presents a radar chart where each axis represents a normalized value of one performance metric. The proposed model clearly dominates across all three dimensions, forming a balanced and expansive polygon compared to other architectures. This visualization highlights that while some models may excel in one or two areas (e.g., FPS or GFLOPs), they fail to deliver across the board.

In addition, Fig. 15 offers a grouped bar chart displaying the raw metric values (accuracy, FPS, GFLOPs) side-by-side for all models. This provides an intuitive, consolidated snapshot of model performance and reinforces the superior positioning of our architecture. Notably, our model maintains top-tier accuracy while remaining computationally light and fast—an ideal combination for deployment in embedded and real-time systems.

Statistical significance and robustness

To validate that our performance gains are statistically meaningful, we conducted paired t-tests over five independent experimental runs for each architecture using different random seed splits. The proposed model significantly outperformed CNN-only, Transformer-only, and CNN + Transformer (no fusion) baselines, with all comparisons yielding p-values < 0.0001 (see Table 7). This confirms that the performance improvements are not due to chance or overfitting. Furthermore, our model exhibited low variance across trials, underscoring its robustness and reliability for practical use.

The proposed Hybrid Transformer-CNN model achieves an impressive 99.97% accuracy, significantly outperforming other architectures. This improvement is attributed to feature fusion, self-attention mechanisms, and an optimized training strategy. The model maintains a high inference speed, making it well-suited for real-time applications.

The exceptional performance of the Proposed Hybrid Model is due to several key factors:

-

Dual-Path Feature Extraction The combination of global and hand-specific features ensures the model focuses on relevant gestures while minimizing background noise.

-

Feature Fusion Strategy Element-wise multiplication enhances discriminative power by prioritizing meaningful gesture features.

-

Vision Transformer Module Self-attention mechanisms enable the model to capture long-range dependencies, improving recognition accuracy.

-

Robust Data Augmentation Techniques such as CutMix and adversarial perturbations increase the model’s ability to generalize across diverse environments.

-

Optimized Training Approach Utilizing contrastive learning and unsupervised domain adaptation, the model refines feature representation for improved classification accuracy.

Our results show that the proposed model effectively addresses common issues in sign language recognition, particularly confusion between similar handshapes and subtle differences in hand positioning. Letters such as ‘M’, ‘Q’, ‘R’, ‘W’, and ‘Y’ are typically challenging due to their visual similarities, with minor variations in finger placement and orientation. However, our model, which incorporates multimodal recognition (integrating facial expressions, hand orientation, and body movement), significantly reduces these misclassifications. By enhancing feature extraction and leveraging advanced fusion techniques, the model demonstrates improved accuracy in recognizing these often-confused signs, ensuring better performance under real-world conditions, including varying lighting and signing speeds.

Computational trade-offs

The proposed model achieves an optimal balance between high recognition accuracy and computational efficiency, with a reported inference speed of 110 FPS and complexity of 5.0 GFLOPs. While transformer-based architectures are often computationally demanding, our dual-path design reduces complexity by delegating low-level feature extraction to CNN blocks and reserving global contextual modeling for a lightweight Vision Transformer. Compared to full ViT models (~ 12.5 GFLOPs) or deeper CNNs like Inception-v3 (~ 8.6 GFLOPs), our architecture achieves superior accuracy with significantly lower computational cost. In practice, this translates to lower latency and power consumption on real devices such as mobile processors or embedded systems. Future work will explore quantization and pruning techniques to further reduce the model size without compromising accuracy, ensuring suitability for deployment in resource-constrained environments.

To evaluate the effectiveness of element-wise multiplication in feature fusion, we compared it with two alternative fusion strategies—feature concatenation and additive fusion—using the same model backbone. Across three datasets (ASL Alphabet, MNIST-Hands, and a custom dynamic sign dataset), element-wise multiplication consistently achieved higher accuracy with lower computational cost. On the ASL dataset, for example, multiplication achieved 99.97% accuracy, whereas concatenation and addition reached 99.71% and 99.52%, respectively. We also computed statistical significance using paired t-tests, which showed that element-wise multiplication significantly outperformed other methods (p < 0.05 across all datasets). Comparative attention maps further show that multiplication enhances discriminative features while suppressing background noise more effectively. These results validate that element-wise multiplication not only improves recognition performance but does so robustly across different data conditions and fusion alternatives.

Computational complexity analysis and GFLOPs calculation

To enhance transparency and reproducibility, we provide a detailed explanation of how the computational complexity of our proposed model (5.0 GFLOPs) was calculated. GFLOPs, or Giga Floating Point Operations, represent the total number of arithmetic operations (multiplications and additions) performed during a single forward pass, expressed in billions of operations (1 GFLOP = 10⁹ FLOPs). The total FLOPs were computed using fvcore64 and ptflops65, two open-source tools widely used for profiling deep learning models1,2. The input size used for estimation was a single RGB image of dimensions 64 × 64 × 3.

For convolutional layers, the FLOPs are calculated using the following formula:

where \(H_{out} , W_{out}\): output height and width of the feature map, \(C_{in} , C_{out}\): number of input and output channels, \(K_{H} ,K_{W}\): height and width of the convolutional kernel, \(B\): batch size (typically 1 for inference FLOPs), The factor of 2 accounts for both multiplication and addition operations per output element.

For the Vision Transformer module, FLOPs are calculated based on multi-head self-attention and feed-forward network components. The self-attention mechanism includes dot-product attention, softmax normalization, and projection layers, with complexity driven by the number of tokens \(T\), embedding dimension \(D\), and number of attention heads \(h\). For a single Transformer layer, the attention FLOPs can be approximated as:

where the first term accounts for query, key, value, and output projections, and the second term represents the attention weight computation and weighted sum.

By summing the contributions from all convolutional blocks, Transformer encoder layers, and the final dense classification head, we obtain a total complexity of approximately 5.0 GFLOPs. This is significantly lower than typical standalone Vision Transformer models, which often exceed 12.5 GFLOPs due to deeper encoder stacks and higher-dimensional embeddings. The efficiency of our architecture stems from its dual-path CNN backbone, shallow transformer depth (2 layers), reduced patch embedding size, and selective feature fusion, making it suitable for real-time applications and deployment on edge devices.

Vision transformer’s role in capturing long-range dependencies

One of the key strengths of the Vision Transformer (ViT) in our model is its ability to capture long-range spatial relationships across the hand, which traditional CNNs often miss due to their limited receptive fields. In gesture recognition, especially for complex signs where subtle finger differences matter, it’s essential that the model can relate different parts of the hand—even if they are far apart in the image. For example, understanding how the position of the thumb relates to the pinky, or how the shape of the palm connects with fingertip placements, often determines whether a gesture is interpreted correctly.

To show this clearly, we’ve included a set of visualizations that highlight how the ViT module actually “pays attention” to these distant but important regions. In Fig. 8, the attention heatmaps from the ViT-enhanced model reveal that the model consistently focuses on gesture-critical areas like fingertips, the palm center, and hand edges—even when these areas are not close to each other. This indicates that the model is learning meaningful connections between different regions of the hand, beyond just local textures or contours.

We take this a step further in Fig. 16, where we compare a CNN-only model to our hybrid CNN + ViT version. The CNN model tends to spread attention broadly and often includes background noise. But with ViT in the mix, the attention becomes more precise and better focused on the actual gesture. This shows how the ViT helps the model make more sense of the full hand configuration, rather than getting distracted by nearby visual clutter.

Finally, Fig. 17 demonstrates how this attention holds up under tough conditions like poor lighting, partial hand occlusion, or busy backgrounds. Even when parts of the hand are hidden or the scene is visually complex, the ViT-enhanced model still focuses on the important regions. This kind of robust, global reasoning is exactly what’s needed for accurate sign recognition in real-world settings.

Qualitative example showing model robustness under challenging visual conditions, including occlusion, low lighting, and complex backgrounds. Visual analysis of model attention under challenging conditions. From left to right: input images, attention heatmaps from the Vision Transformer module, and saliency maps from the hybrid CNN-ViT model. The “Complex Background” example shows that the model correctly focuses on the hand shape despite visual clutter, confirming the effectiveness of the dual-path feature extraction and attention mechanism.

These results support our claim that the ViT module doesn’t just refine features. By capturing long-range dependencies across the hand, it plays a vital role in improving both the accuracy and reliability of gesture recognition, especially in challenging conditions.

In summary, the Vision Transformer allows the model to understand gestures not just as a collection of local features, but as holistic patterns formed by spatial relationships across the entire hand—making it an essential component for achieving high accuracy in real-world sign language recognition.

Experimental validation of background elimination in gesture recognition

To further validate the effectiveness of background elimination in gesture recognition, we conducted a comparative experiment by replacing the key “subtraction” operation. Additionally, we examined the impact of using the “addition” operation as an alternative approach. In Table 8, the results indicate that background subtraction yields a slight but significant improvement in accuracy, increasing from 99.71 to 99.85%, whereas the addition operation resulted in a lower accuracy due to increased background noise interference.

This improvement with subtraction is attributed to several factors. First, background subtraction effectively isolates the hand gesture from the surrounding environment, minimizing the influence of irrelevant background artifacts. Without this operation, some residual noise remains, leading to minor misclassifications. Second, by eliminating background distractions, the model focuses on the essential hand-specific features, improving the precision of extracted gesture characteristics. Alternative methods that lack subtraction may retain background variations that interfere with the model’s recognition process.

Additionally, the ASL dataset includes images captured under various lighting and background conditions. Background subtraction helps standardize the input data, making the model more resilient to environmental variations. In contrast, the addition operation amplifies background elements, making it harder for the model to distinguish hand gestures from their surroundings.

This experiment highlights the effectiveness of background subtraction as a crucial preprocessing step in gesture recognition. The proposed model benefits from this approach by achieving higher accuracy and improved robustness against background interference.

Qualitative analysis and model behavior under challenging conditions

To further support the quantitative performance of the proposed model, we conducted a qualitative analysis aimed at evaluating its behavior under varying visual conditions. Figure 8 and Fig. 16 present attention heatmaps and saliency visualizations generated by the hybrid CNN + ViT architecture, revealing how the model consistently focuses on semantically meaningful regions of the hand, such as fingertips and palm contours. To test the model’s generalization capability, we included samples with variations in background complexity, hand scale, and illumination. Notably, even in the presence of mild occlusion or uneven lighting, the model retained focused attention on the gesture-relevant regions, demonstrating its robustness to noise and distracting context. Additionally, the ViT-enhanced architecture exhibited a marked improvement over the CNN-only baseline by producing more compact and accurate attention responses. These visual insights reinforce the claim that the Vision Transformer module plays a critical role in refining feature selectivity and spatial focus, enabling reliable recognition even in challenging visual scenarios. Such qualitative evidence strengthens the overall interpretability of the model and validates its effectiveness in real-world gesture recognition settings.

To further examine the generalization capabilities and robustness of the proposed Hybrid Transformer-CNN model, we conducted qualitative evaluations under a variety of challenging visual conditions. As illustrated in Fig. 17, we tested the model on input images exhibiting occlusion, low lighting, and complex backgrounds—all of which are common in real-world gesture recognition scenarios. Despite these difficulties, the model consistently focused on semantically meaningful regions of the hand, such as the fingertips and palm center, as seen in both the saliency maps and attention heatmaps. This focused response indicates that the model maintains spatial awareness and discriminative feature extraction, even when external conditions degrade visual quality.

Notably, under occlusion and poor lighting, the attention maps still emphasized the visible, informative parts of the gesture, rather than being distracted by background or noise. Similarly, when presented with complex backgrounds, the model successfully localized the hand area and avoided misdirected attention. These visual findings align with the model’s high quantitative performance and reinforce the contribution of the dual-path CNN backbone and Vision Transformer module. Together, they enhance the model’s ability to separate task-relevant features from background distractions, making it well-suited for real-world deployment in uncontrolled environments.

Ablation study

To evaluate the contribution of individual components, an ablation study is conducted. Results are presented in Table 9:

In Table 9, the Proposed Hybrid Model achieves superior results compared to other configurations. The ablation study confirms that feature fusion, self-attention mechanisms, and optimized feature extraction significantly contribute to its performance.

Although the proposed Hybrid Transformer-CNN model is designed to be lightweight, the computational efficiency plays a crucial role in determining its real-world applicability, especially for deployment in resource-constrained environments such as mobile devices or edge computing platforms.

-

1.

Model Size and Parameters:

-