Abstract

Sensor calibration is a crucial step to ensure the proper functioning of advanced driver assistance systems (ADAS), and vehicle coordinate system reconstruction is an indispensable part of this process. However, the currently mainstream vehicle alignment platforms are characterized by high costs, complex structures, and immobility, making them unsuitable for the rapid upgrade and transformation pace of modern automotive manufacturers. To address these limitations, this paper proposes a low-cost, flexible solution based on extracting key feature points from the car body-in-white. First, the paper introduces the reconstruction principles based on rigid body transformation. Then, the method for selecting the necessary feature points and its general applicability are discussed. Third, the paper describes how to mitigate the influence of vehicle body color by choosing appropriate light sources, and it employs suitable algorithms to complete the feature point extraction process. Finally, validation experiments were designed to verify the accuracy of the proposed method, demonstrating that it achieves better reconstruction precision compared to the vehicle alignment platforms.

Similar content being viewed by others

Introduction

In recent years, autonomous driving technology has experienced rapid development, with advanced driver assistance systems (ADAS) playing a pivotal role in this field. ADAS leverages a variety of sensors installed on vehicles to perceive the surrounding environment during driving and assist the driver in making appropriate decisions1. To achieve higher levels of autonomous driving, the sophistication and complexity of ADAS continue to increase2. Consequently, the number and types of sensors required are also growing, posing greater challenges to the system’s safety and accuracy3. To ensure the proper functioning of all vehicle systems, original equipment manufacturers (OEMs) conduct rigorous end-of-line (EOL) calibrations prior to vehicle delivery, among which the calibration of ADAS is an indispensable component4.

Sensors are a critical component of ADAS, serving as the primary means through which the system perceives external environmental information5. The installation position of these sensors significantly affects the reliability of ADAS functions; therefore, precise calibration of sensor placement is essential during the ADAS calibration process6. Modern ADAS systems generally rely on multi-sensor fusion to operate effectively7which necessitates the alignment of data from different sensors within a unified coordinate system8,9. As such, sensor installation must be referenced to the vehicle’s coordinate system. However, due to variations introduced during the installation process, sensor positions may deviate from their intended locations, making pre-delivery calibration essential. This process, known as multi-sensor calibration, ensures that all sensors are correctly aligned. Once the vehicle leaves the installation station, the vehicle coordinate system can no longer be accurately determined, hence it must be reconstructed during the multi-sensor calibration process.

According to ISO 8855:201110, the vehicle coordinate system is defined as follows: the x-axis points forward in the horizontal direction and is parallel to the vehicle’s longitudinal symmetry plane; the y-axis points to the left, perpendicular to the longitudinal symmetry plane; and the z-axis points upward, perpendicular to the horizontal plane. The origin of the coordinate system can be selected as needed, with commonly used reference points including the vehicle’s overall center of gravity, the center of gravity of the sprung mass, the midpoint of the wheelbase at the height of the center of gravity, and the center of the front axle.

During end-of-line (EOL) calibration11the ADAS calibration time for each vehicle is strictly constrained in order to maintain the production efficiency of the vehicle assembly line. Therefore, the reconstruction of the vehicle coordinate system must be performed efficiently, typically within several tens of seconds. Equipment used in post-sale service calibration generally requires extensive setup and alignment time, rendering it unsuitable for this application scenario.

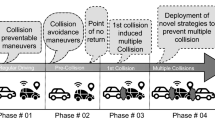

Currently, major automobile manufacturers around the world typically utilize alignment platforms in EOL ADAS calibration scenarios12,13. The basic principle is to align the vehicle coordinate system with the platform coordinate system, thereby achieving coordinate system reconstruction. Taking the inward-expanding alignment platform as an example, its structure and coordinate axis directions are illustrated in (Fig. 1). The coordinate axes in the figure serve only to indicate direction; their orientation is consistent with that of the vehicle coordinate system, while the origin is arbitrarily chosen for illustrative purposes.

The procedure begins by driving the vehicle onto the alignment platform, positioning the front wheels into V-shaped grooves on the platform to achieve alignment along the y-axis. Once in place, push rods within the grooves adjust the inner sides of the front wheels to straighten the vehicle’s body, thereby aligning it along the x-axis. The z-axis alignment is implicitly ensured by assuming standard tire pressure, resulting in the vehicle chassis being parallel to the platform and the z-axis perpendicular to the horizontal plane. Once the vehicle is properly positioned, the coordinate systems are considered coincident, completing the reconstruction. The entire process—from the vehicle entering the platform to being properly aligned—takes only a few seconds, which meets the stringent time requirements of EOL calibration.

Current vehicle coordinate system reconstruction methods in ADAS end-of-line calibration predominantly rely on mechanical alignment platforms. For example, a well-known domestic manufacturer in China provides a platform with a longitudinal positioning accuracy of ± 3 mm, lateral accuracy of ± 2 mm, and yaw angle deviation within ± 0.5°. Despite their high precision, such systems exhibit several limitations: their performance is highly sensitive to installation quality and long-term structural degradation, their complex mechanical design leads to high manufacturing and maintenance costs—often amounting to tens of thousands of dollars—and they lack flexibility, being permanently installed and difficult to relocate or reconfigure during production line upgrades. These limitations pose significant challenges in the context of modern automotive production, which demands adaptable, cost-effective, and easily maintainable solutions due to rapid model iteration and frequent line adjustments.

However, a review of existing literature reveals a notable research gap: there is limited exploration of flexible, vision-based methods for coordinate system reconstruction that can match or exceed the accuracy of mechanical platforms while offering lower cost and greater deployment flexibility.

To address this gap, this paper proposes an innovative coordinate system reconstruction method based on machine vision and deep learning. The proposed system comprises only a camera, a computer, and several light sources, resulting in minimal hardware complexity and low deployment cost. Unlike traditional rigid platforms, this solution is non-contact, thus avoiding wear caused by physical interaction with the vehicle and significantly reducing maintenance overhead. The system is highly portable and easily reconfigurable, allowing for rapid adaptation to production line modifications without the need for costly and labor-intensive reinstallation. Moreover, the total hardware cost is reduced to just a few thousand dollars. Experimental results demonstrate that the proposed method not only reduces cost and enhances flexibility but also achieves higher reconstruction accuracy compared to existing mechanical alignment platforms. This approach offers a practical and scalable alternative for modern vehicle manufacturing environments.

This paper first analyzes and constructs the principles of vehicle coordinate system reconstruction. Based on these principles, a detailed implementation scheme is designed. Finally, a verification plan is developed to test the feasibility of the method and to evaluate its reconstruction accuracy.

Materials and methods

Describing the vehicle coordinate system using the camera coordinate system

In this study, we adopt a known coordinate system and solve for its relationship with the vehicle coordinate system. This known coordinate system is then used to describe the vehicle coordinate system, a process commonly referred to as coordinate transformation.

When reconstructing the coordinate system using a vehicle alignment platform, the platform coordinate system serves as the known coordinate system, which is perfectly aligned with the vehicle coordinate system. Thus, the vehicle coordinate system can be described by the platform coordinate system. The establishment of the platform coordinate system relies on precise calibration during deployment, which requires a rigorous installation process and a complex calibration procedure to achieve high accuracy.In this research, we replace the known platform coordinate system with the camera coordinate system, aiming to solve the transformation relationship between the camera coordinate system and the vehicle coordinate system, allowing the vehicle coordinate system to be described through the camera coordinate system.

The determination of the camera coordinate system can be achieved through camera calibration. Camera calibration, a fundamental technique in machine vision, is a well-established process. One of the most widely used methods is Zhang’s calibration method14which is simple and offers high precision. Compared to the complex setup and calibration of the platform coordinate system, the camera coordinate system can be established with a straightforward calibration procedure, significantly reducing labor and time costs.

The relationship between the camera coordinate system and the vehicle coordinate system

The transformation between the camera coordinate system and the vehicle coordinate system belongs to the category of rigid body transformation15. Rigid body transformation, as a special type of coordinate transformation, involves objects that are considered to undergo no deformation. The body-in-white (BIW)16 refers to the vehicle structure that has undergone stamping, welding, and assembly during the manufacturing process but has not yet been painted or had mechanical and electrical components installed. It forms the main structure of the vehicle. During engineering analysis, the BIW of a vehicle that has not yet left the production line can often be regarded as a rigid body, neglecting any minor deformations that may have occurred during the manufacturing process. Therefore, in this study, the BIW is taken as the object of the transformation, and by solving the rigid body transformation between the two coordinate systems, the vehicle coordinate system can be determined.

In practical computations, the rigid body transformation is typically described using a set of 3D points in space. Once the positions of these points in both coordinate systems are known, the transformation matrix between the camera coordinate system and the vehicle coordinate system can be computed using rigid body transformation. The rigid body transformation can be expressed by Eq. (1):

In this context, P represents the 3D coordinates of the point set before the rigid body transformation, Q represents the 3D coordinates of the point set after the transformation, and T is the transformation matrix. The transformation matrix T can be expressed by Eq. (2):

Here, R is a 3 × 3 rotation matrix representing the rotation of the coordinate system around the x, y, and z axes. t is a 3 × 1 translation vector representing the displacement of the coordinate system along the x, y, and z axes. 0 is a 3 × 1 zero vector, and 1 is a scalar used to maintain the consistency of homogeneous coordinates. This matrix represents the transformation relationship between the coordinate systems before and after the rigid body transformation. After the original coordinate system undergoes a rotation R around the x, y, and z axes and a translation t along the x, y, and z axes, the new coordinate system can be obtained. The rotation part R of the transformation matrix has three degrees of freedom, corresponding to the rotation angles around the x, y, and z axes. The translation part t also has three degrees of freedom, representing the displacement along the x, y, and z axes, resulting in a total of six degrees of freedom. To determine these degrees of freedom, at least three non-collinear points are required to solve the nonlinear system17.In this context, Q represents the set of points in the camera coordinate system, which are known once the camera coordinate system is established. To solve for the transformation matrix T, the corresponding set of points P in the vehicle coordinate system must also be known, and P must consist of at least three non-collinear points. Therefore, in this study, three distinct feature points on the BIW were selected to form the P point set. Feature points, as the name suggests, are points with clear and distinguishable characteristics. This advantage allows their positions in the camera coordinate system to be determined through image processing and a series of calculations18.

The specific dimensions and position of the BIW within the vehicle coordinate system are often proprietary information of automotive manufacturers. However, obtaining the positions of several feature points on the BIW, without involving the overall dimensions or enabling the reverse-engineering of those dimensions, can be permissible through the signing of a collaboration agreement. This study is based on a cooperative research and development project with an automotive manufacturer, which allows access to the target feature point set P in the vehicle coordinate system. Therefore, P is considered known, enabling the computation of the transformation matrix T. In summary, by obtaining the coordinates of three pairs of non-collinear points in both the camera and vehicle coordinate systems, the transformation matrix T can be calculated.

Overall methodology design

The point set P in the vehicle coordinate system is directly provided by the automotive manufacturer, while the 3D coordinates of the feature point set Q in the camera coordinate system need to be obtained using Eq. (3):

Here, \(\:\left({X}_{c},{Y}_{c},{Z}_{c}\right)\) represents the 3D coordinates of the feature point in the camera coordinate system, and \(\:\left(u,v\right)\) represents the 2D coordinates of the feature point in the pixel coordinate system. \(\:{f}_{x},{f}_{y}\) are the focal lengths of the camera in the X and Y directions, respectively, and \(\:{u}_{0},{v}_{0}\) represent the coordinates of the camera sensor’s center in the pixel coordinate system. The parameters \(\:{f}_{x},{f}_{y},{u}_{0},{v}_{0}\) are the intrinsic parameters of the camera, which can be obtained through camera calibration19. Therefore, before obtaining the 3D coordinates, the 2D coordinates of the feature points must be solved, and these 2D coordinates can be acquired through image processing. After organizing these steps, the overall principle of this study is illustrated in (Fig. 2).

Based on Fig. 2, the following specific workflow is designed for this study.

The following section will introduce the specific implementation methods according to the workflow illustrated in (Fig. 3).The system architecture is illustrated in (Fig. 4).The layout of the detection scenario is shown in (Fig. 5).

Selection of feature points

The BIW is large, with numerous feature points spanning from the front to the rear of the vehicle. Image feature points are distinctive points in an image with unique properties that allow them to be differentiated from other points within the same image. These points provide a unique identifier across different images. Common feature points include corner points, edge points, and center points of circles.Selecting the appropriate feature points is one of the challenges. First, the number of feature points required must be determined. Based on the principles of rigid body transformation discussed in Sect. 2.2, at least three feature points are needed to calculate the transformation matrix. Secondly, when considering the acquisition of the 3D coordinates of feature points through visual technology, the camera’s field of view is limited. At a certain working distance, only a specific area can be captured. To optimize costs and efficiency, using a single camera to capture all feature points in one shot is ideal. Therefore, the selected feature points should be relatively close to each other, allowing the camera to capture them all in a single frame.

In order to accurately obtain the 2D coordinates of the feature points, the selected points must also take into account the ease of extraction and accuracy. Considering these factors, this study selects the region of the BIW around the fuel tank cap at the rear of the vehicle. The feature points include two corner points near the wheel arch and the center point of the fuel tank cap, as shown in (Fig. 6). For simplicity, these feature points are collectively referred to as Feature Point 1, Feature Point 2, and Feature Point 3, respectively, as marked in the figure.

The selection of these feature points heavily relies on the specific features of the vehicle itself. Therefore, the primary concern is whether these feature points still exist if the vehicle model changes, meaning the structure of the vehicle body is modified. Will the selection method need to change? If there are not enough feature points to support this method when the vehicle model changes, then the method would lack general applicability, greatly reducing its practical value.

In terms of the method’s general applicability, this paper provides the following explanation. First, the method is designed specifically for small vehicles. The majority of vehicles on the market are small vehicles, and most automotive manufacturers primarily produce small vehicles. The characteristics of large vehicles differ significantly from small vehicles, and the equipment used for small vehicles is typically not interchangeable with that for large vehicles. Therefore, large vehicles are not considered within the scope of this study. In China, small vehicles are generally categorized into two types: sedans and SUVs, which together account for over 98% of the market. Thus, the reconstruction method proposed in this paper is focused on these two types of vehicles, which also meets the production needs of most automotive manufacturers.

After collecting a large number of samples, we found common features in the body-in-white (BIW) around the fuel tank cap. Observing 100 different SUV models from various brands, we discovered that the BIW around the fuel tank cap in SUVs has a highly similar shape, consistently presenting four common feature points, as shown in (Fig. 7): one at the center of the fuel tank cap, two corner points, and one at the highest point of the wheel arch. Similarly, after examining 100 different sedan models from various brands, we observed that the BIW around the fuel tank cap in sedans also shares similar common features, as shown in (Fig. 8). Unlike SUVs, sedans generally exhibit only three common feature points in this area: one at the center of the fuel tank cap, one corner point, and one at the highest point of the wheel arch.

Returning to the original question, when the vehicle model changes, the BIW in the area around the fuel tank cap still exhibits common features, and the selection method does not need to be altered. Even if the vehicle model differs, a sufficient number of feature points can still be found in the same region to support this method. Therefore, the method of reconstructing the vehicle coordinate system through feature point extraction is indeed generalizable. Most vehicle models can find similar common features in the area around the fuel tank cap, meeting the requirement for extracting feature points necessary for this method.

Image acquisition

According to the actual requirements of the automotive manufacturer, in order to avoid additional site expansion costs, measurements showed that when the vehicle enters the inspection station, there is approximately 700–800 mm of space on either side of the vehicle. This limits the maximum working distance of the camera to 700 mm. To capture all the selected feature points while allowing for some margin of movement, the target area to be captured is approximately 550 × 500 mm. Therefore, when selecting the camera, its field of view at a 700 mm distance must be larger than the target area. After selecting a suitable camera, it is mounted 700 mm away from the vehicle’s surface, with the camera’s field of view aligned roughly to the center of the target area. The resulting image capture effect is shown in (Fig. 9).

The detection accuracy and stability of machine vision are significantly affected by natural light20. The ADAS calibration station in the automotive manufacturing plant has strict requirements, one of which is that the natural light intensity at the station remains constant. Under these constant conditions, light colors such as white, which reflect light well, create a sharp contrast between the black gaps at the edges and the light-colored body, producing a relatively clear image of the target area, as shown in (Fig. 9a). However, for dark colors such as black, which have poor light reflection properties, the contrast between the black edge gaps and the dark-colored body is blurred, making the target area features less distinct, as shown in (Fig. 9b).

In various vision systems, such issues typically require additional lighting from auxiliary light sources, and this study is no exception. Currently, there are almost no reference examples of lighting schemes that cover large areas of the vehicle’s body for similar problems. Therefore, designing an auxiliary lighting solution that perfectly resolves the challenges encountered in this study, while balancing cost and efficiency, is one of the key difficulties of this research.

To address this challenge, after testing, this study ultimately selected two adjustable red bar lights as auxiliary lighting sources. The selection of this light source was based on the following factors: first, the two bar lights can cover all the required features for this method, making it the most cost-effective solution for large-area illumination. Using a surface light source for this region would significantly increase costs. Second, red light was chosen because, under red light, the dark-colored vehicle’s body-in-white surface appears red, while the gaps remain black, providing a clear contrast. In image processing, this results in a significant grayscale difference, making it easier to distinguish the edge areas. If white or other light-colored light sources were used, even though the reflection from the body-in-white surface would be enhanced, the surface would still appear in its original dark color, and the contrast with the gaps would remain unclear, with minimal grayscale difference between the gaps and the surface. Similarly, other dark-colored light sources would also fail to produce adequate feature extraction. After multiple tests, red light was found to have the best illumination effect on dark-colored vehicle bodies, effectively highlighting the body-in-white edges.To evaluate the applicability of red light illumination for vehicle bodies of different colors, this study selected several commonly seen vehicle body colors on the market—primarily dark colors—and conducted feature extraction experiments. The results, as shown in (Table 1), indicate that red light illumination meets the lighting requirements for feature extraction in the majority of vehicle body colors available commercially.

Lastly, the power of the light source, i.e., the lighting intensity, also needed to be considered. Insufficient power would result in unsatisfactory illumination, leaving the original issue of blurry edges unresolved. On the other hand, excessive power would cause the effect shown in (Fig. 10). The left side of Fig. 10 shows the feature area containing the corner point in the original image. Excessive lighting intensity illuminates the interior of the gaps, which, due to the angle between the camera and the shooting area, makes the interior of the gaps clearly visible. This can result in edge misidentification, as shown on the right side of (Fig. 10). The green lines represent the actual edges to be extracted, while excessive lighting power causes the appearance of the non-target red edges, which can easily interfere with the accurate extraction of the correct edges. Therefore, the power of the light source should not be too high.

Considering all these factors, this study selected two adjustable red bar lights as auxiliary light sources. Their adjustable intensity can meet the standard required for accurate detection.

To determine the appropriate illumination power, this study was conducted in a closed indoor environment with constant natural lighting. An adjustable light source was used to illuminate the feature region at varying intensities. A lux meter was employed to measure the illumination intensity in the target area, and the proposed feature extraction algorithm was then applied to extract the coordinates of feature points. The results are presented in (Table 2). As shown in the table, when the ambient natural light intensity on the vehicle surface is below 132 lx, the algorithm fails to identify the feature points. Within the range of 144 to 248 lx, correct feature point coordinates can be successfully extracted. However, further increases in illumination intensity result in either incorrect coordinate detection or extraction failure. These findings are consistent with the preceding analysis.

After capturing the images, distortion correction is required due to lens distortion in the camera21. This correction needs to account for the camera’s distortion parameters, which are typically obtained through camera calibration. In this study, Zhang’s calibration method was used to complete the camera calibration process. Since distortion correction has a wide range of well-established and reliable methods, this paper will not elaborate further on this process.

Region of interest (ROI) extraction

After obtaining the image, it is necessary to extract the coordinates of the feature points. The total area of the captured image is quite large, but the region containing the feature points only occupies a small portion of the entire image. Most of the image consists of irrelevant background and areas unrelated to the feature points. The small region where the feature points are located is the Region of Interest (ROI) for this study. To reduce the complexity of feature point extraction and avoid unnecessary calculations, this study first applied the YOLOv8 algorithm22,23 to extract the ROI. It is worth mentioning that, as discussed in Sect. 2.5, the lighting must fully cover the feature points, which refers specifically to the need to cover the ROI rather than the entire body-in-white area.

To ensure that the dataset used for training the model is sufficiently diverse and representative, a systematic data augmentation approach was employed. The dataset construction for this study proceeded as follows: First, after setting up the camera and light source as described in Sect. 2.5, 200 images of vehicle bodies in various colors were captured from different distances. Next, to further enrich the dataset, various data augmentation techniques were applied to the original images. The specific methods include:

-

1.

Rotation: The original images were rotated in small increments both clockwise and counterclockwise. Each rotation was by 5 degrees, for a total rotation of 10 degrees. This rotation operation simulates the possible changes in front or rear vehicle body height caused by variations in tire pressure. After rotation, the dataset was increased by 800 images.

-

2.

Brightness Adjustment: The brightness of the original images was quantitatively increased or decreased to simulate changes in light intensity on the vehicle surface. The natural light at the test site was relatively constant, with the surface of the body-in-white being illuminated by a consistent auxiliary light source. A lux meter measured the light intensity in the region of interest (ROI) at approximately 180 lx, while the non-ROI regions had light intensity between 50 and 80 lx. When the test site changes, variations in natural light intensity may occur. The ROI’s light intensity can be maintained by adjusting the auxiliary light source, but the brightness of other areas may change. To simulate this scenario, the brightness of the original images was increased and decreased by 10% increments, up to 30%. After this brightness adjustment, 1200 images were added to the dataset.

-

3.

Noise Addition: Random Gaussian noise with a standard deviation between 10 and 30 was added to the original images to simulate potential noise interference. This operation added 200 images to the dataset.

-

4.

Cropping: Random cropping of 5–20% of the edges of the original images was performed to simulate scenarios where the shooting distance decreases or the edges are obstructed. This operation added 200 images to the dataset.

Using the above data augmentation methods, the initial 200 images were expanded to 2600 images, all with a resolution of 2592 × 1944 in BMP format. Subsequently, the images were annotated using LabelImg v1.8.5 software, labeling three classes: “point1,” “point2,” and “point3,” which correspond to the regions where the three feature points are located. Finally, the images were split into training, validation, and test sets in a standard 6:2:2 ratio, with 1560 images used as the training set, 780 images as the validation set, and 780 images as the test set.

The experimental setup for training the YOLOv8 model in this study was as follows: the operating system was Windows 11, the CPU was an AMD Ryzen 7 6800 H, and the GPU was an NVIDIA GeForce RTX 3060. The version of PyTorch used was 2.0.1, with CUDA version 12.0, and Python version 3.9.18. The metrics used to evaluate the detection performance of the YOLOv8 model were Precision (P), Recall (R), and Mean Average Precision at IOU = 0.5 (mAP@0.5).

In this context, TP refers to the number of ROIs correctly identified by the model, FP refers to the number of incorrectly identified ROIs, and FN refers to the number of ROIs the model failed to identify. APi represents the detection accuracy for the i-th class of ROIs, and C is the total number of feature region categories. After 300 training epochs, tThe model achieved a detection precision of 97.3%, a recall of 92.9%, and a mean average precision (mAP) of 93.7%. The actual detection performance is shown in (Fig. 11), demonstrating that the model can accurately identify ROIs on vehicles of different colors. Given the excellent detection performance, this study did not perform additional optimizations on the original model, aside from modifying a few necessary training parameters.

Corner point extraction based on template matching

Feature point extraction relies on feature extraction algorithms, and the accuracy of vehicle coordinate system reconstruction depends on the precision of feature point coordinate extraction. In this study, a template matching algorithm24 and an ellipse fitting algorithm25 were used for feature extraction. The selected feature points can be divided into two categories: edge corner points and ellipse centers. For the edge corner points, a corner point extraction algorithm based on template matching was employed.

The key to corner point extraction is edge detection. With proper auxiliary lighting, edge extraction is relatively straightforward. The challenge in this study lies in ensuring the uniqueness of the corner points.

During the on-vehicle testing of the proposed method, we observed a non-uniqueness in the selected feature corner points. As illustrated in (Fig. 10), the green contours indicate the boundaries of the regions of interest (ROIs) containing the feature points. A corner point is typically defined as the intersection of two straight edges. Ideally, such a point should result from the intersection of two well-defined straight lines. However, as shown in the red circled area in (Fig. 10), the supposed corner region exhibits a certain degree of curvature rather than a distinct angular intersection.

Due to this geometric ambiguity, even when using the same corner detection algorithm, the extracted corner coordinates vary slightly between runs—typically by several pixels. This inconsistency introduces considerable repeatability errors. To evaluate the impact of this localization error on the final reconstruction accuracy, we manually introduced a perturbation of up to 2 pixels in the corner coordinates (in practice, the deviation does not exceed 2 pixels). The resulting outputs were then compared with the standard result, in which the corner coordinates were fixed to a reference position (x, y). The results are summarized in (Table 3).

It can be observed that even a single corner point deviation can lead to a translation error of up to 1.56 mm and a rotation error of up to 0.35°, significantly affecting the accuracy of the calculation. Therefore, ensuring the uniqueness of corner point definition—i.e., guaranteeing consistent localization across detections—is essential for reliable reconstruction.

To address the uniqueness issue, this study used a template matching algorithm based on shape for feature point extraction. The idea is to create a template region, as shown in the green contour in (Fig. 12), and define a point at the top of this region as the corner point. The center coordinates of the template region are then obtained, and the relationship between the corner point and the center point is established. Since the center point is uniquely determined, the corner point can also be uniquely determined based on this relative relationship. As the template region is part of the rigid body of the BIW, it will not deform. When a new image is captured and the ROI is extracted, there will be a region in the image that matches the template region. This region will differ from the template only by translation and scaling. After applying affine transformation, the template region and the target region will align perfectly, and the feature point in the test region can be uniquely determined.

In practice, the first step is to obtain the contour of the small feature region, i.e., the green contour in (Fig. 10), and apply a series of smoothing operations to use it as the template image. When capturing a new image where the feature points need to be extracted, the contour of the new image is first extracted. The extracted contour then undergoes a series of filtering, connection, and smoothing operations, leaving only closed and continuous shapes. These shapes are then numbered and sorted, followed by template matching with the contour of the template image. The contour with the highest matching score is selected as the target contour, as shown in (Fig. 13). Finally, an affine transformation is performed to align the edges of the template contour with the edges of the target contour. Once aligned, the corner point coordinates can be uniquely determined.

After performing several hundred iterations using the proposed method, we found that the calculated corner coordinates and the final calibration results remained identical across runs, with no pixel-level deviations observed. This consistency indicates that the method can reliably achieve unique corner point localization. Its robustness and accuracy are sufficient to meet the requirements of the final calibration task.

It should be noted, however, that since custom-defined corner points were used in this process, the same definition method must be employed when obtaining the corresponding precise feature coordinates from the OEM (Original Equipment Manufacturer) to ensure consistency.

Fuel tank cap center extraction based on ellipse fitting

The extraction of the fuel tank cap center is closely related to the shape of the cap. Common shapes include circular, elliptical, and rectangular caps. This paper uses an elliptical fuel tank cap as an example to illustrate the extraction algorithm. First, the small region around the fuel tank cap is captured, and the contour is directly extracted using the Canny algorithm26. Although the lighting has been made as even as possible, irrelevant contours and discontinuous contours may still appear. At this point, small, non-continuous contours can be removed by setting conditions such as length and roundness, and nearby contours can be connected.

At this stage, most irrelevant contours will have been removed, leaving only continuous, more distinct contours. A second length filter is then applied to remove shorter contours, which are typically caused by strong reflections or shadows. These contours are usually much shorter compared to the actual fuel tank cap contour. Finally, the remaining contours are numbered, and each set of contours is subjected to ellipse fitting. The fitted ellipses are filtered based on predefined conditions of area and curvature. Sometimes, even after a second round of length filtering, irrelevant contours may remain. The ellipses fitted to these contours often exhibit significant deformation, allowing for further filtering at this stage. The final ellipse that meets the conditions is the target ellipse, and its center coordinates are the desired feature point coordinates for this study. This process is illustrated in (Fig. 14).

The area around the fuel tank cap typically has a large amount of blank space with no significant interfering contours. Therefore, under proper lighting, the contour extraction of the fuel tank cap is relatively easy. This study also tested various other shapes, and with simple modifications to the current algorithm, the extraction process can be easily completed.

This study primarily focuses on SUV models. As mentioned earlier, SUVs have four common feature points. In this paper, three of these were selected, while the highest point of the wheel arch was not included. However, for sedans, the highest point of the wheel arch is one of the essential feature points. The method for determining this point is currently quite simple: it only requires proper lighting of the wheel arch, allowing for the extraction of a section of the contour at the highest point, from which the coordinates of the highest point can be calculated. The method is well-documented in widely applied cases, such as those involving wheel arch height measurements. Therefore, this paper will not go into further detail on this method.

Acquisition of 3D coordinates of feature points

The 3D coordinates of the feature points can be calculated using Eq. (3), which can be rewritten in a more intuitive form as Eq. (7), where the parameter definitions are the same as in Eq. (3). \(\:\left({X}_{c},{Y}_{c},{Z}_{c}\right)\) represents the 3D coordinates of the feature point in the camera coordinate system, which are the values to be solved in this section. It is clear that both Xc and Yc are related to Zc, where Zc represents the depth value at that point. The accuracy of the 3D coordinate calculation is directly related to the precision of Zc.

.

This method requires precision at the millimeter level, meaning the depth accuracy needs to reach at least 0.1 mm. Currently, cameras capable of achieving this level of precision are primarily structured light cameras27,28. Most common structured light cameras use a line laser solution, which takes tens of seconds to scan the image, making it unsuitable for the rapid detection required in this study. After various tests, this study ultimately selected a dynamic fringe structured light solution with an area array. Compared to the line laser approach, this solution has the advantage of capturing an entire surface without scanning, reducing the time from image capture to completion to just 2–3 s.

Results

Accuracy testing using a calibration board

Using the method described earlier, the transformation matrix can be calculated. For a more intuitive observation of the results, this study decomposed the transformation matrix into a rotation matrix and a translation matrix, and the rotation matrix was further converted into Euler angles29. To verify the accuracy of this method, a calibration board was used to simulate the vehicle body in the experiment. Specifically, the calibration board coordinate system was used to simulate the vehicle coordinate system, and the feature points on the calibration board were used to simulate the feature points of the vehicle. A standard calibration board is shown in (Fig. 15).

After setting up the calibration board, since the exact size of each point and the center-to-center distance between the circles on the board are known, any selected circle center can be used as the coordinate origin. This allows the calculation of several other accurate circle center coordinates in the calibration board coordinate system. These known coordinates in the calibration board system represent the feature point coordinates provided by the automotive manufacturer in the vehicle coordinate system. A simple program can then be used to extract the center coordinates of these circles in the camera coordinate system, thereby satisfying the conditions for solving the transformation matrix.

After converting the transformation matrix into a translation matrix T and a rotation matrix R, each point in the camera coordinate system can be transformed into its corresponding point on the calibration board through translation T and rotation R. The simplest way to verify the transformation matrix is to physically determine the spatial relationship between two corresponding points in the two coordinate systems and compare these actual values with the calculated values. However, the challenge with this method is that while each feature point on the calibration board can be easily identified, the feature points in the camera coordinate system are located on the imaging plane with the camera’s optical center as the origin, making it impossible to accurately determine them by physical means. This is one of the challenges in the validation process of this study.

To address this issue, this study opted to use another coordinate system as a substitute for the camera coordinate system. The center of the bottom-right circle (point 4) on the calibration board was chosen to establish Calibration Board Coordinate System B, while the coordinate system constructed with point 1 as the origin is referred to as Calibration Board Coordinate System (A) The coordinate reconstruction algorithm was then used to obtain the transformation matrices from the camera coordinate system to both Calibration Board Coordinate Systems A and (B) This provides the translation and rotation relationships from the origin of the camera coordinate system to the origins of both Calibration Board Coordinate Systems A and B. At this point, the transformation relationship between Coordinate Systems A and B can also be calculated. This transformation relationship can be measured physically, and by comparing the calculated results with the actual measurements, the accuracy of the algorithm can be assessed. Since the amount of rotation is difficult to measure, only the translation component was verified initially.

The calibration board used in this study has circles with a diameter of 30 mm, and the distance between the centers of two adjacent circles is 60 mm. Using points 1, 2, and 3 as feature points for calculation, the center of point 1 is set as the coordinate origin (0,0,0), with the coordinate axes oriented as shown in (Fig. 12). The coordinates of point 2 are (60, 0, 0), and the coordinates of point 3 are (0, 60, 0). Before starting the experiment, a laser level was used to adjust the camera plane to be as parallel as possible to the calibration board plane. The results obtained after calculation using the aforementioned method are shown in (Table 4).

As shown in Table 4, the transformation matrix calculated using this method has a translation error of 1.41 mm in the X direction and 1.22 mm in the Y direction. In the ideal case, the translation values in the Z direction between the two coordinate systems should be equal. However, due to factors such as the wall not being perfectly level and the camera and calibration board not being perfectly parallel, the final translation in the Z direction between the two coordinate systems showed a discrepancy of 0.12 mm.

To test the repeatability of this method, two random coordinate systems were selected on the calibration board, and the same testing method was applied. Ten sets of data were obtained, as shown in (Table 5). In this table, ∆x and ∆y represent the differences between the actual and calculated translation values in the X and Y directions.

Through ten experiments, the average error in reflecting the X-axis translation using this method was found to be 1.40 mm, and the average error in reflecting the Y-axis translation was 1.21 mm. Based on these results, it can be preliminarily concluded that this method can achieve coordinate system reconstruction with a translation accuracy within 2 mm.

Compared to real vehicles, the calibration board allows any feature point to be selected as the coordinate origin, easily constructing multiple known calibration board coordinate systems. The center coordinates of circular feature points are easy to extract and exhibit clear uniqueness, meaning that the resulting coordinate values are less affected by the precision of the feature extraction algorithm. However, performing this verification experiment directly using the vehicle coordinate system is not feasible. The vehicle coordinate system can currently only be confirmed using existing solutions, such as alignment platforms, which not only introduce inherent errors from these methods but also involve prohibitively high costs, making them impractical for experimental verification. Therefore, this study initially used a calibration board instead of a vehicle to explore the preliminary feasibility of the method.

However, there are some limitations to this experiment. For example, the Y-axis translation of the calibration board is upward, perpendicular to the horizontal ground. For a real vehicle, movement along this axis is uncommon. The axes of the vehicle coordinate system are aligned with those of the alignment platform coordinate system shown in (Fig. 1), and it is clear that vehicles generally move along the X and Y axes. When the camera is positioned behind the vehicle for detection, vehicle movement along the Y-axis is actually along the depth direction relative to the camera, altering the distance between the camera and the vehicle body. The translation error in this direction was not captured by the above experiment. Additionally, a key point is that when this method is applied to a vehicle, the accuracy of feature point extraction will be greatly affected by the feature extraction algorithm, so the actual error needs to be tested and verified on a real vehicle.

Real vehicle validation experiment – rotation verification

Using the verification scheme similar to that in Sect. 3.1 for real vehicle validation is not practical. Therefore, designing a more appropriate scheme for real vehicle validation is essential. The scheme not only needs to calculate the translation accuracy in the camera’s depth direction but also reflect the error in rotation to a certain extent, which is one of the key challenges of this study. Ultimately, this study designed a validation experiment based on relative changes.

In this experiment, the vehicle was simulated to undergo quantifiable translation and rotation. Using the coordinate reconstruction method, the reconstructed displacement or rotation values before and after the transformation were calculated, and the difference was computed. The final error was obtained by subtracting the quantifiable change from the calculated difference. Since achieving precise rotation or translation of the vehicle is highly challenging, this study rotated and translated the camera instead, achieving the same effect.

This experiment is divided into two parts: rotation verification and translation verification. The specific procedure for the rotation verification part is as follows:

-

1.

Preparation: A test vehicle of any kind is required. In this study, a well-known Chinese SUV brand was used. The test vehicle was parked on a flat surface, and the camera was fixed on an electric turntable, which was mounted on a horizontal gimbal on the camera stand. The camera was positioned 800 mm away from the target body-in-white area. Using the real-time camera feed displayed by the camera control software, the camera’s horizontal and vertical positions were adjusted to ensure that the area of interest was centered in the frame. A level was used to adjust the stand and gimbal to make the camera as level as possible. After adjustments, the turntable was fine-tuned so that its rotation scale aligned with the 0-degree mark.

-

2.

Lighting Setup: The auxiliary light sources were installed on the stand, positioned on both sides of the detection area. Their positions were adjusted to ensure that the light illuminated the detection surface as evenly as possible. The power was adjusted to prevent the image from being too dark or overexposed. The experimental setup mimicked an automotive manufacturer’s inspection area, with the only light source being constant LED lights from the ceiling, avoiding interference from sunlight or other natural light sources.

-

3.

Experiment Execution: After beginning the experiment, RGB images and corresponding depth images were captured. The electric turntable was then rotated 2.5 degrees around the Z-axis, and another round of images was captured. This step was repeated multiple times to obtain RGB and depth images as the camera rotated ± 12.5 degrees around the Z-axis. In reality, the vehicle would only rotate around its Z-axis; thus, the experiment allowed the camera to rotate around the Z-axis of the vehicle coordinate system (perpendicular to the ground).

-

4.

Image Processing: The captured images were processed using a program to calculate the 3D coordinates of the target feature points in the camera coordinate system. These coordinates were then compared with the known corresponding feature point coordinates in the vehicle coordinate system to obtain the rotation matrix, followed by the calculation of Euler angles. In this experiment, calculations were performed every time the vehicle was rotated by 2.5 degrees, and the difference between the actual computed rotation angle and the standard value (2.5 degrees) was used to determine the rotation error. This experiment was repeated three times, and the results are shown in (Table 6).

As shown in Table 6, each 2.5-degree rotation results in an average error of less than 0.12 degrees. Although the root mean square error (RMSE) obtained for each group is relatively larger than the mean error, the overall values are close, indicating that the error distribution under this method is relatively uniform. In actual experiments, it was found that the errors produced with each 2.5-degree rotation can be both positive and negative. Due to the mutual correction of these errors, the total error within a 25-degree rotation is maintained within 0.3 degrees. However, due to the limitations of the camera’s field of view, larger rotation angles cannot be tested.

When this method is implemented at the inspection station in the future, a series of auxiliary lines should be set up at the station. When the vehicle enters the station, it should be positioned as parallel to the auxiliary lines as possible to prevent significant rotation of the vehicle body. In this case, the 25-degree rotation range is sufficient to reflect the detection error of the vehicle’s rotation by this method. During the coordinate reconstruction on the vehicle alignment platform, a yaw angle error of ± 0.5 degrees may occur. In contrast, this method can control the error within 0.3 degrees, thus improving the reconstruction accuracy concerning the rotation amount.

Real vehicle validation experiment – translation verification

The specific procedure for the translation experiment is as follows:

-

1.

Setup: Remove the electric turntable used for the rotation test and install a high-precision electric guide rail. Set the camera according to the conditions specified in Sect. 3.2.1(1). Then, use a laser level to adjust the camera’s position, ensuring that the X and Y axes of the camera are as parallel as possible to the X and Z axes of the vehicle. The translation direction mentioned in the following conditions refers to the camera coordinate system.

-

2.

X-axis Translation: Move the camera back and forth along the X-axis by 50 mm using the guide rail, capturing images and calculating the translation value every 5 mm. The difference between the actual computed translation value along the X-axis and the standard value (5 mm) will provide the translation error. This experiment is repeated three times, and the results are shown in (Table 7).

-

3.

Z-axis Translation: Rotate the guide rail 90 degrees and reinstall it. After installation, move the camera back and forth along the Z-axis by 50 mm using the guide rail, capturing images and calculating the translation value every 5 mm. The difference between the actual computed translation value along the Y-axis and the standard value (5 mm) will provide the translation error. This experiment is also repeated three times, and the results are shown in (Table 8).

As shown in Table 7, every 5 mm displacement along the X direction results in an average error of less than 0.6 mm. However, there is still a compensatory relationship between positive and negative errors. To minimize the randomness introduced by corrections, this study repeated the experiment 10 times and selected the set with the maximum error. After moving 100 mm, the X-axis displacement changed from 2684.438 mm to 2782.537 mm, resulting in an error of 1.901 mm. Other sets even exhibited errors lower than the average error. Overall, this reconstruction method can keep the error in the X-axis translation within 2 mm, while the alignment error of the vehicle alignment platform along the vehicle’s X-axis is ± 3 mm. Therefore, the method’s accuracy in reflecting the X-axis translation is constrained by the vehicle alignment platform.

As shown in Table 8, every 5 mm displacement along the Y direction results in an average error of less than 0.3 mm. Similarly, to minimize the randomness introduced by corrections, this study selected the set with the maximum error from the ten data sets. After moving 100 mm, the Y-axis displacement changed from 1484.414 mm to 1583.377 mm, resulting in an error of 1.037 mm. Overall, this reconstruction method can keep the error in the Y-axis translation within 1.1 mm, while the alignment error of the vehicle alignment platform along the vehicle’s Y-axis is ± 2 mm. Therefore, the method’s accuracy in reflecting the Y-axis translation is constrained by the vehicle alignment platform.

From the two tables, it can be observed that the RMSE computed for each group is slightly larger than the mean error; however, the difference is minimal. This indicates that the proposed method exhibits stable measurement errors in terms of translation. Furthermore, the RMSE is consistently better than the alignment error of the vehicle on the centering platform, further demonstrating the superiority of the accuracy of the method proposed in this study.

The total displacement of 100 mm is determined by the camera’s field of view; beyond this distance, the camera will not be able to capture the entire target body-in-white area. To prevent excessive vehicle displacement during actual testing, front wheel limiters should be set up at the inspection station. When the vehicle enters, the front wheels should ideally touch the front limiter, ensuring that the vehicle’s displacement during each capture does not exceed 100 mm. Thus, the results of this displacement experiment can reflect the accuracy of the method designed in this study.

Adaptability evaluation across different vehicle types

To evaluate the adaptability of the proposed method across different vehicle types, ten vehicle models were randomly selected for testing. The testing procedures followed those described in Sect. 3.2.1 and 3.2.2. Due to project confidentiality requirements, specific vehicle models are not disclosed; only general vehicle type information is provided. The test results are summarized in (Table 9), where both translation and rotation errors represent the average values calculated from twenty sets of data.

The experimental results indicate that all randomly selected vehicles possessed a sufficient number of common feature points, enabling successful computation using the proposed method. Translation and rotation error measurements across these vehicles revealed that, regardless of vehicle type, the translation error along the X-axis was consistently within 1 mm, the translation error along the Y-axis within 0.5 mm, and the rotation error within 0.21 degrees. Additionally, it was observed that the method yielded slightly lower accuracy when applied to passenger cars compared to SUVs. This may be attributed to the more dispersed distribution of common feature points on passenger cars, which weakens the relative spatial relationships among the points and leads to greater potential errors in feature point localization.

Conclusions and discussion

The reconstruction of the vehicle coordinate system is an essential part of the ADAS offline detection process; however, the current reconstruction methods have several deficiencies. This paper proposes a vehicle coordinate system reconstruction method based on machine vision. The method is introduced from aspects such as implementation principles, hardware and software selection, implementation methods, and technical challenges, leading to the following conclusions:

-

1.

This study proposes a lightweight and flexible vehicle coordinate system reconstruction method based on the principle of rigid body transformation. Compared to the currently mainstream vehicle alignment platforms, this method offers advantages such as lower cost and ease of disassembly, making it more aligned with the application needs of automotive manufacturers.

-

2.

An investigation of existing mainstream SUV and sedan models concluded that both types of small vehicles share similar common feature points in their rear side areas. The use of these common feature points is a crucial basis for the generalizability of this reconstruction method.

-

3.

This research developed a lighting scheme suitable for large-area illumination of vehicles of various colors, which addresses the challenges of feature extraction for dark-colored vehicles. By using the YOLOv8 object detection model, extraction of the vehicle body feature areas was achieved. A corner point extraction algorithm based on template matching was employed to resolve the non-uniqueness issue of corner points, successfully completing the extraction of target feature points.

-

4.

This paper designed two validation experiments for the proposed method. The calibration board experiment first validated the initial accuracy and feasibility of the method, followed by an experiment based on relative changes that provided further accuracy validation on real vehicles. The results demonstrated that the method achieves a rotation error of less than 0.3 degrees, a translation error along the X-axis controlled within 2 mm, and a translation error along the Y-axis controlled within 1.1 mm, showing improvements over vehicle alignment platforms.

Based on these conclusions, this study asserts that the proposed method has advantages such as low cost, good flexibility, and higher accuracy, which can address the deficiencies of current mainstream vehicle alignment platforms and align better with the upgrading pace of automotive manufacturers, thereby offering good practical application prospects. However, this study still faces some issues. First, the method currently only uses the minimum requirement of three pairs of feature points, which may reduce the overall robustness of the method and result in lower accuracy. Theoretically, more feature points could enhance the reliability of the method further, but finding a concentration of common features within a certain area is challenging. In the future, this study will attempt to identify areas of the body-in-white with more common feature points or utilize “virtual feature points,” such as using the midpoints of lines connecting the extracted corner points with the center of the fuel tank cap as feature points. This approach could significantly increase the number of common feature points within a single area, although this would require automotive manufacturers to provide more corresponding coordinates as needed.

Second, the implementation and validation of this research rely on a certain depth of cooperation with automotive manufacturers, requiring them to be willing to provide some body coordinates. This may pose difficulties for researchers interested in replicating the method. Finally, the validation scheme used in this study is currently only suitable for preliminary verification at the laboratory stage, and further communication and research between the two parties are needed to complete an enterprise-recognized validation scheme.

Data availability

The datasets used and analysed during the current study available from the corresponding author on reasonable request.

References

Neumann, T. Analysis of advanced Driver-Assistance systems for safe and comfortable driving of motor vehicles. Sensors 24, 6223 (2024).

Diewald, A. et al. Radar target simulation for vehicle-in-the-loop testing. Vehicles 3, 257–271 (2021).

Jumaa, B. A., Abdulhassan, A. M. & Abdulhassan, A. M. Advanced driver assistance system (ADAS): A review of systems and technologies. International J. Adv. Res. Comput. Engineering Technology (IJARCET). 8, 231–234 (2019).

Solmaz, S. & Holzinger, F. In 2019 IEEE International Conference on Connected Vehicles and Expo (ICCVE) 1–8 (IEEE).

Jia, X., Hu, Z. & Guan, H. In 2011 9th World Congress on Intelligent Control and Automation. 1224–1230 (IEEE).

Wu, D., Zhuang, Z., Xiang, C., Zou, W. & Li, X. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops.

Ou, J., Huang, P., Zhou, J., Zhao, Y. & Lin, L. Automatic extrinsic calibration of 3D lidar and multi-cameras based on graph optimization. Sensors 22, 2221 (2022).

Ikram, M. Z. & Ahmad, A. In 2019 IEEE Radar Conference (RadarConf). 1–5.

Siegert, J., Schlegel, T., Groß, E. & Bauernhansl, T. Standardized coordinate system for factory and production planning. Procedia Manuf. 9, 127–134 (2017).

Standardization, I. O. f. Road vehicles — Vehicle dynamics and road-holding ability — Vocabulary. (2011).

Margies, L. & Mueller, R. In International ATZ Conference. 123–138 (Springer).

Fori Automation, L. Automotive End of Line Systems with Fori China. https://foriauto.wordpress.com/category/end-of-line-systems/ (2024).

Dynomerk ADAS Calibration Systems. https://www.dynomerk.com/products/adas-calibration-systems/ (2024).

Zhang, Z. Proceedings of the Seventh IEEE International Conference on Computer Vision. 666–673 (IEEE).

Eggert, D. W., Lorusso, A. & Fisher, R. B. Estimating 3-D rigid body transformations: a comparison of four major algorithms. Mach. Vis. Appl. 9, 272–290 (1997).

Kugathasan, P. & McMahon, C. Multiple viewpoint models for automotive body-in-white design. Int. J. Prod. Res. 39, 1689–1705 (2001).

Challis, J. H. A procedure for determining rigid body transformation parameters. J. Biomech. 28, 733–737. https://doi.org/10.1016/0021-9290(94)00116-L (1995).

Jing, J., Gao, T., Zhang, W., Gao, Y. & Sun, C. Image feature information extraction for interest point detection: A comprehensive review. IEEE Trans. Pattern Anal. Mach. Intell. 45, 4694–4712 (2022).

Zhang, Y. J. 3-D Computer Vision: Principles, Algorithms and Applications. 37–65 (Springer, 2023).

Batchelor, B., Waltz, F., Batchelor, B. & Waltz, F. Machine vision for industrial applications. Intell. Mach. Vis. Tech. Implementat. Applic.. 1–29 (2001).

Wang, A., Qiu, T. & Shao, L. A simple method of radial distortion correction with centre of distortion Estimation. J. Math. Imaging Vis. 35, 165–172 (2009).

Wang, G. et al. UAV-YOLOv8: A small-object-detection model based on improved YOLOv8 for UAV aerial photography scenarios. Sensors 23, 7190 (2023).

Safaldin, M., Zaghden, N. & Mejdoub, M. An improved YOLOv8 to detect moving objects. IEEE Access (2024).

Korman, S., Reichman, D., Tsur, G. & Avidan, S. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2331–2338.

Kanatani, K., Sugaya, Y. & Kanazawa, Y. Ellipse Fitting for Computer Vision: Implementation and Applications (Springer Nature, 2022).

Rong, W., Li, Z., Zhang, W. & Sun, L. In IEEE International Conference on Mechatronics and Automation. 577–582 (IEEE, 2014).

Zanuttigh, P. et al. Time-of-flight and structured light depth cameras. Technol. Applic. 978 (2016).

Chen, X., Xi, J., Jin, Y. & Sun, J. Accurate calibration for a camera–projector measurement system based on structured light projection. Opt. Lasers Eng. 47, 310–319 (2009).

Weisstein, E. W. Euler angles. https://mathworld.wolfram.com/ (2009).

Author information

Authors and Affiliations

Contributions

Conceptualization, D.Z. and G.Y.; methodology, D.Z.; software, G.Y.; validation, G.Y. and J.J.; formal analysis, D.Z.; investigation, G.Y.; resources, D.Z.; data curation, D.Z.; writing—original draft preparation, G.Y.; writing—review and editing, D.Z.; visualization, Z.J.; supervision, J.J.; project administration, G.Y.; funding acquisition, L.J. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ding, Z., Gong, Y., Lin, J. et al. A vehicle coordinate system reconstruction method for end of line calibration applications. Sci Rep 15, 20806 (2025). https://doi.org/10.1038/s41598-025-07269-y

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-07269-y