Abstract

Sperm morphology assessment is recognised as a critical, yet variable, test of male fertility. This variability is due in part to the lack of standardised training for morphologists. This study used a bespoke ‘Sperm Morphology Assessment Standardisation Training Tool’ to train novice morphologists using machine learning principles, and consisted of two experiments. Experiment 1 assessed the accuracy of novice morphologists (n = 22) across 2-category (normal; abnormal), 5-category (normal; head defect; midpiece defect; tail defect; cytoplasmic droplet), 8-category (normal; cytoplasmic droplet; midpiece defect; loose heads and abnormal tails; pyriform head; knobbed acrosomes; vacuoles and teratoids; swollen acrosomes) and 25-category (normal; all defects defined individually) classification systems, with untrained users achieving 81.0 ± 2.5%, 68 ± 3.59%, 64 ± 3.5% and 53 ± 3.69%, respectively. A second cohort (n = 16) exposed to a visual aid and video achieved significantly higher first-test accuracy (94.9 ± 0.66%, 92.9 ± 0.81%, 90 ± 0.91% and 82.7 ± 1.05%; p < 0.001). Experiment 2 evaluated repeated training over four weeks, which significantly improved accuracy (82 ± 1.05% to 90 ± 1.38%, p < 0.001) and diagnostic speed (7.0 ± 0.4 s to 4.9 ± 0.3 s per image, p < 0.001). Final accuracy reached 98 ± 0.43%, 97 ± 0.58%, 96 ± 0.81% and 90 ± 1.38% for the 2-, 5-, 8- and 25-category systems, respectively. Accuracy and variation differed significantly between classification systems. This tool effectively standardised sperm morphology assessment. Given its accessibility and adaptability across classification systems, future research could explore its application in other species, including in human andrology.


Introduction

Sperm morphology is one of the three key foundational semen quality assessments in veterinary and human medicine, along with ejaculate concentration and sperm motility. Unlike concentration and motility, which can be assessed objectively with technologies such as computer-assisted semen analysis (CASA) systems, morphology is primarily assessed subjectively by laboratory technicians. Without robust standardisation protocols, subjective tests are prone to bias and human error, leading to inaccurate and highly variable results. The importance of standardising sperm morphology assessment has been the subject of several studies1–3. These studies universally acknowledge that standardisation protocols, along with quality control (QC), quality assurance (QA) and proficiency testing (PT), are critical in andrology laboratories to ensure reliable results. Yet most efforts to date have focused exclusively on developing standardised methodologies for semen sample preparation, neglecting the standardisation of training and re-training protocols for morphologists4.

Standardisation training has long been supported in industry as an important factor in ensuring reliable results, yet there is no widely accepted method to train or standardise morphologists5,6. Studies on laboratory adherence to the WHO human sperm morphology classification standards have agreed that one of the largest contributors to variation in results is the inherent subjectivity of the test combined with the lack of a traceable standard7. Training tools have the potential to address this issue, as they can introduce traceability into training programs and be applied consistently across users. Current external quality control programs, such as the German QuaDeGA and the UK NEQAS programs, provide limited samples to participating laboratories and are often run infrequently because of their expense and limited availability8,9. If a morphologist fails the QC assessment by producing results outside the acceptable range of variation for that program, the recommended re-training requires a more senior morphologist to perform side-by-side assessment of sperm10. This method relies heavily on the senior morphologist also being within acceptable ranges of variation, and is time-consuming for both the trainee and the trainer. Software that allows users to train independently and as often as needed would remove this potential source of bias and expense. Because sperm morphology is a subjective test, the training tool required both a robust dataset of validated, classified sperm images and a method of training novices that could be considered objective in nature.

Validating the classification of subjective data is an area that has been explored in detail in machine learning. Supervised learning is a key method used to train machine learning models, in which the model ‘learns’ to classify images from labelled datasets (Saravanan and Sujatha11). If the model is given incorrectly labelled data, its potential accuracy is compromised, as it can only learn from the data it is provided. This methodology can be effectively adapted for training humans: trainees must be provided with high-quality data during training in order to achieve accuracies comparable to those of experts. In machine learning, such high-quality reference labels are referred to as “ground truth”, and they are essential for producing accurate models in fields such as medical imaging, which rely on subjective expert classification of images. Ground truth is established by consensus diagnosis from multiple experts for each image (Lebovitz, Levina and Lifshitz-Assaf12). By applying a similar strategy of expert consensus to the image datasets used for human training, it is possible to ensure that individuals are trained to a higher standard than would be achieved using data derived from a single expert. The application of this methodology to the development of the training tool used in the present study is described in more detail in Seymour et al. (2025).
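The supervised-learning idea, applied to a human trainee, can be sketched as a simple loop: present each image, record the trainee's label, and immediately compare it with the expert-consensus label. The Python below is a minimal sketch under stated assumptions; `Trial`, `training_session` and the `classify`/`feedback` callables are hypothetical names for illustration, not the training tool's actual interface.

```python
import random
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Trial:
    """One presentation of one sperm image (hypothetical record type)."""
    image_id: str
    chosen: str  # label picked by the trainee
    truth: str   # expert-consensus ("ground truth") label

    @property
    def correct(self) -> bool:
        return self.chosen == self.truth


def training_session(
    ground_truth: Dict[str, str],
    classify: Callable[[str], str],
    feedback: Callable[[Trial], None] = lambda trial: None,
    seed: Optional[int] = None,
) -> List[Trial]:
    """Show every image once, in random order, with immediate feedback.

    `classify` stands in for the trainee and `feedback` for the on-screen
    right/wrong message; both are hypothetical hooks, not the tool's API.
    """
    rng = random.Random(seed)
    order = list(ground_truth)
    rng.shuffle(order)  # same images each session, new presentation order
    trials = []
    for image_id in order:
        trial = Trial(image_id, classify(image_id), ground_truth[image_id])
        feedback(trial)  # trainee learns the consensus label straight away
        trials.append(trial)
    return trials


def accuracy(trials: List[Trial]) -> float:
    """Percentage of images labelled in agreement with the consensus label."""
    return 100.0 * sum(t.correct for t in trials) / len(trials)


# Usage: a (hypothetical) trainee who labels every image "normal"
truth = {"s1": "normal", "s2": "knobbed acrosome", "s3": "normal", "s4": "coiled tail"}
shown = []
trials = training_session(truth, classify=lambda image_id: "normal", feedback=shown.append, seed=1)
print(accuracy(trials))  # 50.0
```

Keeping the feedback per image and immediate mirrors the per-sample error signal that supervised learning depends on; a real tool would additionally need a user interface and persistent storage of results, which this sketch leaves out.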

A variety of classification systems are used to identify sperm morphological abnormalities, but at the simplest level, morphologists must be able to distinguish normal from abnormal sperm. In the sheep industry, for example, this ‘normal/abnormal’ test is the primary method of morphology assessment13 and can influence which animals are used in large-scale breeding programs. It can be performed on a basic compound microscope fitted with phase contrast optics and is quicker to apply than more complicated classification systems. Despite its simplicity, this classification system can still be subject to bias: our previous research showed that expert morphologists agreed on a normal/abnormal classification for only 73% of the sheep sperm images shown14. The cattle industry uses a more complex classification system, with cattle veterinarians in Australia adhering to an 8-category system15. Another classification method uses 5 categories, grouping sperm abnormalities by their location on the sperm, i.e. head, midpiece or tail16. Other classification systems that have been used categorise abnormalities by their origin (primary, secondary, tertiary), by whether their effects on fertility can be compensated for by increasing the insemination dose (compensable or non-compensable), and by their effect on fertility (major or minor) (Koziol and Armstrong17). While a more complicated, and hence more informative, classification system seems appealing, our research has shown that more complicated classification systems make it more difficult to identify the abnormality correctly14.
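One reason coarser systems tend to produce higher accuracy is that a confusion between two labels that collapse into the same coarse class is no longer counted as an error. The sketch below illustrates this with a hypothetical, partial mapping from fine-grained labels into the 5-category (location-based) and 2-category (normal/abnormal) systems; the label names and the mapping are illustrative assumptions, not the study's actual category definitions.

```python
# Hypothetical, partial mapping from fine-grained labels to the
# 5-category location-based system (illustrative only).
FINE_TO_FIVE = {
    "normal": "normal",
    "knobbed acrosome": "head defect",
    "pyriform head": "head defect",
    "swollen acrosome": "head defect",
    "bent midpiece": "midpiece defect",
    "coiled tail": "tail defect",
    "proximal droplet": "cytoplasmic droplet",
}


def to_five(label: str) -> str:
    return FINE_TO_FIVE[label]


def to_two(label: str) -> str:
    return "normal" if label == "normal" else "abnormal"


def accuracy(pairs, collapse=lambda label: label) -> float:
    """% agreement after mapping both consensus and user labels to a coarser system."""
    hits = sum(collapse(truth) == collapse(answer) for truth, answer in pairs)
    return 100.0 * hits / len(pairs)


# (consensus label, user label) pairs for four hypothetical images
pairs = [
    ("knobbed acrosome", "swollen acrosome"),  # wrong head defect chosen
    ("coiled tail", "bent midpiece"),          # wrong region, but still abnormal
    ("normal", "normal"),
    ("proximal droplet", "normal"),            # abnormality missed entirely
]
print(accuracy(pairs))           # 25.0 (fine-grained)
print(accuracy(pairs, to_five))  # 50.0 (5-category)
print(accuracy(pairs, to_two))   # 75.0 (2-category)
```

The same set of answers scores 25%, 50% or 75% depending only on how finely the categories are resolved, which is consistent with the direction of the differences between classification systems reported in this study.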

The aim of the present study was to validate a previously developed14 training tool for training and testing morphologists using the machine learning principles of supervised learning and expert consensus labels (“ground truth”). The tool was designed to be adaptable to multiple classification systems, species and microscope optic types.

It was hypothesised that users would have a high level of variation in initial test accuracy and that variability, accuracy and speed would improve with training. Different classification systems were hypothesised to impact results, with the more complex systems (i.e. 25-categories) having lower overall accuracy and higher variability than less complex classification systems (i.e. the 2-category ‘normal/abnormal’ system).

Results

Without training, users demonstrated high variation and lower accuracy of morphological classification of ram sperm

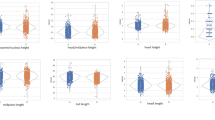

Experiment 1 explored untrained user accuracy and found a very high degree of variation amongst users (CV = 0.28), with accuracy scores ranging from 19 to 77% (Fig. 1). Accuracy also differed significantly between classification systems (81 ± 2.55%, 68 ± 3.59%, 64 ± 3.5% and 53 ± 3.69% for the 2-, 5-, 8- and 25-category systems, respectively; p < 0.001; Fig. 1). On average, users spent 9.5 ± 0.8 s labelling each image, but there was no significant trend indicating that labelling duration affected accuracy in Experiment 1.

Boxplot of accuracy results from Experiment 1 (n = 22). Users with no prior experience in sperm morphology assessment attempted the 100-sperm test without access to the video or visual aid. Each user classified the same 100 ram sperm (shown in random order). Results are shown per classification system (25, 8, 5 and 2 morphological categories). * indicates results that were significantly different from those of the other classification systems.
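The summary statistics used throughout the Results (mean ± SEM, and the coefficient of variation, CV = SD/mean) can be computed from per-user accuracy scores as sketched below. This is a minimal sketch: it assumes the sample standard deviation (n - 1 denominator), which the study does not specify, and the example scores are made up for illustration.

```python
import math
import statistics


def summarise(scores):
    """Mean, SEM and coefficient of variation of per-user accuracy scores.

    Assumes the sample standard deviation (n - 1 denominator); the study
    does not state which estimator it used.
    """
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)
    return {
        "mean": mean,
        "sem": sd / math.sqrt(len(scores)),  # standard error of the mean
        "cv": sd / mean,                     # coefficient of variation
    }


# Hypothetical accuracies (%) of four users on one 100-sperm test
print(summarise([60, 70, 80, 90]))
```

Because CV is scale-free, it allows variation to be compared between classification systems whose mean accuracies differ, which a raw standard deviation would not.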

Training increased accuracy and reduced variation, with the greatest improvement seen after the 1st intensive day of training

Accuracy increased across the testing period (tests 1–14), from a mean of 82 ± 1.05% for test 1 to 90 ± 1.38% for test 14 (p < 0.001). Variation also decreased significantly across the testing period, with the largest difference recorded between day 1 (tests 1–4) and days 2–5 (tests 5–14) (p < 0.001, Fig. 2). Accuracy appeared to plateau after day 1 of testing, with small, non-significant fluctuations over the following weeks (Fig. 3). While all users improved over the study, they exhibited different levels of variation, with coefficients of variation ranging from 0.027 to 0.137 (Fig. 4).

Dunn's test pairwise comparison heatmap showing significant differences in the variation of accuracy across all tests over the 4-week study period. Results were taken from users classifying 100 sperm each (n = 1600) per test, and the average results per test were compared. There was no significant difference in variation amongst results within day 1 (tests 1–4, red) or within days 2–5 (tests 5–14, red). Variation differed significantly between day 1 and days 2–5 (tests 5–14, blue).
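The heatmaps in this section report Dunn's test pairwise comparisons. Dunn's test is a rank-based post hoc test usually run after a Kruskal-Wallis test; the sketch below implements the standard Dunn z statistic with a tie correction using only the Python standard library. It is illustrative only: the study does not state which p-value adjustment (if any) was applied, so none is applied here, and the example scores are hypothetical.

```python
import itertools
import math
from statistics import NormalDist


def rank_with_ties(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
        i = j + 1
    return ranks


def dunn_test(groups):
    """Unadjusted pairwise Dunn's test.

    groups: dict of group name -> list of observations.
    Returns {(a, b): (z, two_sided_p)} for every pair of groups.
    """
    names = list(groups)
    pooled = [x for name in names for x in groups[name]]
    ranks = rank_with_ties(pooled)
    n_total = len(pooled)

    # Mean rank of each group within the pooled ranking
    mean_rank, start = {}, 0
    for name in names:
        n = len(groups[name])
        mean_rank[name] = sum(ranks[start:start + n]) / n
        start += n

    # Tie correction: sum of (t^3 - t) over runs of tied values
    tie_counts = {}
    for x in pooled:
        tie_counts[x] = tie_counts.get(x, 0) + 1
    tie_term = sum(t ** 3 - t for t in tie_counts.values()) / (12 * (n_total - 1))

    results = {}
    for a, b in itertools.combinations(names, 2):
        variance = (n_total * (n_total + 1) / 12 - tie_term) * (
            1 / len(groups[a]) + 1 / len(groups[b])
        )
        z = (mean_rank[a] - mean_rank[b]) / math.sqrt(variance)
        p = 2 * (1 - NormalDist().cdf(abs(z)))
        results[(a, b)] = (z, p)
    return results


# Hypothetical per-user accuracies (%) for two blocks of tests
scores = {"day 1": [70, 72, 75, 78], "days 2-5": [88, 90, 91, 93]}
for pair, (z, p) in dunn_test(scores).items():
    print(pair, round(z, 2), round(p, 4))
```

In practice a maintained implementation, such as `posthoc_dunn` in the scikit-posthocs package, which also offers multiple-comparison adjustments, would normally be preferred over hand-rolled code.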

Accuracy increased as duration spent labelling per image increased, but users became quicker as the experiment progressed

The time each user took to classify an image was recorded, and it declined significantly over the course of Experiment 2 (tests 1–14; p < 0.001). The average time spent labelling per image was 7.0 ± 0.1 s for test 1 and 4.9 ± 0.3 s for test 14 (Fig. 5). Within each test type, the duration spent classifying during tests 1–4 (day 1) differed significantly from all subsequent tests (Fig. 6) and was inversely correlated with the accuracy results (Fig. 7).

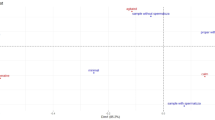

Dunn's test pairwise comparison heatmap showing significant differences in the duration (mm:ss.0) spent classifying images per test using the 25-category classification system. Results were taken from users classifying 100 sperm each (n = 1600) per test, and the average duration spent identifying each category was compared. There was no significant difference (red) between the durations for the first 4 tests (day 1). Durations during day 1 differed significantly from those of all other tests (tests 5–14, days 2–5).

Mean (± SEM) accuracy of users (N = 16) classifying 100 ram sperm per test using 25 morphological categories, compared with the mean (± SEM) time spent labelling each image (n = 1600) (mm:ss.0), for the first test of each testing day across the 4-week study period (Days 1–2 are the two intensive training days in week 1; Days 3–5 are the follow-up tests in weeks 2–4).
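Whether labelling time and accuracy move together can be quantified with a rank correlation. The sketch below computes Spearman's rho between per-test mean duration and per-test mean accuracy; choosing Spearman rather than Pearson is an assumption, since the study does not name its correlation method, and the values in the example are hypothetical. It returns the coefficient only and leaves significance testing out.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    ordered = sorted(values)
    n = len(ordered)
    ranks = []
    for v in values:
        first = ordered.index(v)               # 0-based first position
        last = n - 1 - ordered[::-1].index(v)  # 0-based last position
        ranks.append((first + last) / 2 + 1)
    return ranks


def spearman(x, y):
    """Spearman's rho, computed as the Pearson correlation of average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


# Hypothetical per-test means: seconds per image and % accuracy
durations = [7.0, 6.1, 5.2, 5.0, 4.9]
accuracies = [82, 86, 89, 90, 90]
print(round(spearman(durations, accuracies), 3))  # negative: faster as accuracy rises
```

A rank-based coefficient only assumes a monotonic relationship, which suits the plateau-shaped curves described here better than an assumption of linearity would.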

The fewer the categories included in the morphology classification system the higher the accuracy and the lower the variation of the results

Accuracy differed significantly between classification systems (p < 0.05). On the final test, the 2-category system had a mean accuracy of 98 ± 0.43%, and the 5-, 8- and 25-category systems had mean accuracies of 97 ± 0.58%, 96 ± 0.81% and 90 ± 1.38%, respectively (Fig. 8). For test 1, the accuracies of all classification systems were significantly different from each other (p < 0.05). The 2- and 5-category systems did not differ significantly for tests 2–14, the 5- and 8-category systems did not differ for tests 2 and 4–14, and the 2- and 8-category systems did not differ for tests 6–14 (at a significance level of p < 0.05). For all tests, accuracy with the 25-category system was significantly lower than with the 2-, 5- and 8-category systems (p < 0.05). The variation in accuracy differed significantly between all classification systems (p < 0.001). Regardless of the classification system used, users achieved an accuracy of at least 90% on the final test (test 14). As shown earlier for the 25-category system, user accuracy with the 8-, 5- and 2-category systems similarly plateaued after the first day of intensive training. The most dramatic improvement was seen for the 25-category system, with an average increase in accuracy of 7%. The 2-, 5- and 8-category systems yielded average increases in accuracy of 3.1%, 4.3% and 5.7%, respectively (p < 0.05, Fig. 9).

Mean accuracy of users (N = 16) in Experiment 2 following morphological classification of 100 ram sperm on 14 occasions (i.e. n = 224 accuracy scores and N = 22,400 sperm classified) per classification system (25, 8, 5 and 2 morphological categories). Box plots sharing a superscript are not significantly different (p < 0.05).

The identification of head abnormalities was less accurate

Within the 8- and 5-category systems, head abnormalities were the most difficult for users to identify, and among head abnormalities, acrosome defects accounted for the largest proportion of incorrect labels. The level of variation for each label differed significantly across the experiment, indicating improvement throughout the training period (p < 0.05). In both the 8-category (Fig. 10) and 5-category (Fig. 11) systems, the morphology categories exhibited significantly different (p < 0.05) degrees of accuracy variation, suggesting that some abnormalities were easier to label than others. For the 8-category system, pyriform heads were omitted from the analysis because the low number of examples in the test set made statistical analysis unreliable. Among the remaining seven categories, significant differences were observed between all categories except between category 1 (normal) and category 2 (proximal cytoplasmic droplets), and between category 4 (loose/multiple heads and abnormal tails) and category 8 (swollen acrosomes) (Fig. 12). For the 5-category system, midpiece abnormalities differed significantly from all other categories (Fig. 13). Between the first and last tests, the 5-category system showed a significant improvement in user accuracy for normal sperm and sperm with a head abnormality (p < 0.05), and the 8-category system showed a significant improvement for normal sperm and for swollen or missing acrosomes.

Comparison of mean (± SEM) user accuracy when classifying 100 ram sperm images per test using 8 morphological categories (‘Normal’, ‘Proximal cytoplasmic droplets’, ‘Midpiece abnormalities’, ‘Loose/multiple heads and abnormal tails’, ‘Knobbed acrosomes’, ‘Vacuoles and teratoids’ and ‘Swollen acrosomes’). Results are shown for the first test of each of the 5 testing days. The category ‘Pyriform heads’ was omitted from the dataset because it occurred too rarely in the test set to allow statistical analysis.

Dunn's test pairwise comparison heatmap showing significant differences in the variation of accuracy across the 8 morphological categories over the 4-week study period. Results were taken from users classifying 100 sperm each (n = 1600) per test, and the average accuracy in identifying each category was compared. There was no significant difference (red) between ‘Midpiece abnormalities’ and ‘Vacuoles and teratoids’, or between ‘Swollen acrosomes’ and ‘Loose/multiple heads and abnormal tails’. The accuracies of all other morphological categories differed significantly from each other (blue).

Dunn's test pairwise comparison heatmap showing significant differences in the variation of accuracy across the 5 morphological categories over the 4-week study period. Results were taken from users classifying 100 sperm each (n = 1600) per test, and the average accuracy in identifying each category was compared. There was no significant difference (red) between ‘Normal’ and ‘Tail’. ‘Midpiece’ differed significantly from all other categories (blue).
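Per-category results such as those summarised in Figs. 10 and 11 start from the rate at which images of each consensus category are labelled correctly. The sketch below computes per-category accuracy and each category's share of all errors from hypothetical (consensus label, user label) pairs; the `min_count` cut-off mimics excluding rare categories such as pyriform heads, and its value is an assumption rather than the threshold used in the study.

```python
from collections import Counter


def per_category_accuracy(pairs, min_count=1):
    """% of images in each consensus category that users labelled correctly.

    Categories with fewer than `min_count` examples are dropped, analogous
    to excluding rare categories from analysis (the cut-off is an assumption).
    """
    totals, hits = Counter(), Counter()
    for truth, answer in pairs:
        totals[truth] += 1
        hits[truth] += truth == answer  # True counts as 1
    return {
        cat: 100.0 * hits[cat] / n
        for cat, n in totals.items()
        if n >= min_count
    }


def error_share(pairs):
    """Fraction of all incorrect labels whose consensus label is each category."""
    errors = Counter(truth for truth, answer in pairs if truth != answer)
    n_errors = sum(errors.values())
    if n_errors == 0:
        return {}
    return {cat: count / n_errors for cat, count in errors.items()}


# Hypothetical (consensus label, user label) pairs in a 5-category system
pairs = [
    ("normal", "normal"),
    ("normal", "normal"),
    ("head defect", "normal"),
    ("head defect", "head defect"),
    ("head defect", "tail defect"),
    ("midpiece defect", "midpiece defect"),
]
print(per_category_accuracy(pairs))
print(error_share(pairs))
```

Unlike overall accuracy, this breakdown shows which categories drive the errors, which is the kind of specific feedback the Discussion notes external QC programs cannot provide.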

Discussion

The training tool used in the current study proved to be an effective method of training novices in sperm morphology assessment. On the first test of the study, accuracy results were highly variable, and this variation decreased as the amount of training increased, supporting the first hypothesis that training would reduce variation. Accuracy also improved following training, with the greatest improvement seen between tests 1–4 and tests 5–14, which suggests that one day of intensive training is sufficient for users to reach a highly reliable and repeatable accuracy score. Labelling duration decreased significantly during the study and inversely mirrored the accuracy results; between the first and last tests, the time spent labelling fell from 6.7 s to 4.7 s. As with accuracy, a plateau was observed after the first day of intensive training, indicating that users reached both peak accuracy and maximum efficiency within three hours of training. This result supports the second hypothesis that the time spent labelling would decrease following training. The classification system (25, 8, 5 or 2 categories) significantly affected the results, with accuracy improving as the classification system became simpler. The 25-category system had an accuracy of 90% on the final test, and the 8-, 5- and 2-category systems had average final-test accuracies of 96%, 97% and 98%, respectively. This supports the final hypothesis that more complex classification systems yield lower average accuracy amongst users. Following training, the difference in accuracy between the 2-, 5- and 8-category systems was mitigated, with no statistically significant difference between these systems from test 6 onwards. Conversely, accuracy with the 25-category system remained significantly lower than with the other systems for the entire study.
Regardless, the training tool improved user accuracy and reduced variation for all four classification systems. Users reached a high level of accuracy more quickly than expected, and the results indicate that one day of intensive training (3 h) is sufficient to reach an acceptably high accuracy. These results show that providing users with a high-quality dataset labelled by expert consensus, and applying the ‘supervised learning’ methodology from machine learning, enabled users to classify sperm morphology to a high level of accuracy. This training tool will allow morphologists to be trained and standardised quickly and independently to produce reliable and accurate results. Within the 4-week study period, retraining once per week was sufficient to maintain this high level of accuracy.

Use of the training tool significantly improved accuracy and reduced variation amongst trainee morphologists. This result demonstrates that a consistent and robust standardised training tool should be able to improve agreement between morphologists. Previous research into the standardisation of sperm morphology assessment has agreed that a traceable standardisation training method that assesses and trains users on a sperm-by-sperm basis would contribute substantially to reducing variation amongst morphologists and across laboratories7,10. Current external QA programs, the only standardisation method used across laboratories, have yielded inconsistent results and focus primarily on adherence to the assessment protocol rather than on training8. In comparison, the training tool was developed specifically to improve training through supervised learning and the establishment of ground truth, resulting in increased accuracy for every user, even with the complex 25-category classification system. Because external QA programs lack structured training, they provide insufficient support to meaningfully improve accuracy compared with the training tool developed for this study. In addition, industry use of internal QA programs is limited, with reportedly only 25% of specialised human reproductive biology laboratories employing any form of QA9. A training tool such as the one developed in this study offers the industry a means to standardise practices internally and, if widely adopted, could facilitate standardisation across laboratories. This study used unstained ram sperm imaged with DIC optics, which differs from human clinical standards that rely on stained smears18,19.
Future work aiming to extend the training tool to other species or clinical applications should explicitly evaluate the impact of different sample preparation and imaging techniques on classification accuracy. Further research should also determine the optimal training and standardisation schedule for morphologists to reach and maintain a high level of accuracy. Improvements in variation and accuracy tended to plateau after the first week of intensive training, with most of the improvement occurring on the first day of training. The results also indicated little loss in recall between weeks after the second week of the experiment, suggesting that a less rigorous standardisation schedule could also be effective. Follow-up studies, perhaps 6–12 months after training, would help determine the durability of the learning outcomes. It would also be of interest to investigate how the training tool affects the variability of expert morphologists.

Despite training and re-training throughout the experimental period, variation differed between users, and not all users improved to the same degree. This suggests that an inherent aptitude may be required to achieve low levels of variation, even with rigorous and repeated standardisation training. Users also differed individually in the time spent classifying. Across the user population, duration and accuracy appeared to become inversely correlated as users became more competent and consequently more efficient at classifying; however, this relationship was not significant. Although duration declined significantly across the study period, users who spent more time classifying did not achieve significantly higher accuracy. This suggests that time spent classifying mainly reflects individual variation. It should be noted that all users in this study were undergraduate students sourced from a single institution. This homogeneity limits the generalisability of the study, and future research should explore the aptitude of users from a variety of educational and professional backgrounds to determine whether the results are consistent across diverse users. Existing literature supports innate individual variation in accuracy. In medical diagnostic imaging, training of expert radiologists has proven insufficient to reduce the variability of results20,21. These studies proposed that while training is essential to maximise a classifier’s accuracy, some individuals may be ill-suited to the task of morphological assessment and may never reach the same level of competency as an individual who is inherently skilled. For the reproductive industry, this implies that to minimise variation among morphologists, a prerequisite assessment of natural aptitude may be useful for identifying and filtering out candidates who are less suited to the role.
Regardless of whether such a measure is adopted, standardised training remains essential for accurate morphological assessment, particularly when complex classification systems are used.

The training tool was effective at improving the accuracy of morphological classification across systems of varying complexity. Within classification systems, users' ability to classify certain categories varied. For example, in the 8-category system, users significantly improved at correctly identifying swollen or missing acrosomes between the first and last tests. External QC training programs in human reproduction evaluate morphologists on their overall accuracy at a population level. However, if an individual falls outside the recommended thresholds for variation, these systems cannot provide specific feedback on which aspects of their assessment were incorrect5. Additionally, a morphologist’s accuracy is typically assessed only with the 2-category normal/abnormal system, rather than the recommended standard 5-category location-based system19. This can lead to misidentification of important but difficult-to-classify categories, such as head abnormalities22. Early studies into sperm morphology training explored using multiple categories to train users, but found that in 43% of cases users reversed their classification on their second attempt23, a trend not seen in our study using the training tool. We propose that this difference is primarily due to the training method: the training tool requires active interaction from each individual user, whereas the training in that earlier study23 was conducted in a classroom setting. This comparison suggests that applying the machine learning methodology of supervised learning, in which individuals are independently assessed on a sperm-by-sperm basis and receive immediate feedback, is an effective technique for improving human training.
Because this study indicates that changing the classification system significantly affects user accuracy, using different classification methods for training and for actual assessment would prevent any meaningful assessment of competency. A limitation of this study is the lack of direct comparison with more traditional training methods; future comparative studies would provide clearer insight into how training methods affect accuracy and better contextualise the effectiveness of the training tool within industry.

Conclusion

The Sperm Morphology Assessment Standardisation Training Tool proved to be an effective method of standardisation training for novice sperm morphologists. All users exhibited a significant increase in accuracy, a reduction in variation and a reduction in classification time across the 4-week study period. The classification system used (25, 8, 5 or 2 morphological categories) significantly affected accuracy, with more complex systems resulting in lower accuracy. Despite this, users still achieved a high level of competency with the 25-category system, averaging 90% accuracy on the final test, compared with 98% for the simple 2-category system. The training tool’s innovative approach, enabling users to train consistently and independently with real-time feedback, distinguishes it from current industry practices. Its capacity for highly controlled testing, together with simultaneous use by multiple users within a single laboratory, makes it an excellent resource for internal quality assurance. Additionally, because it is available at any time for both testing and training, users have the flexibility to re-standardise themselves as often as necessary.

Further investigation into the optimal training schedule required to standardise a novice morphologist, and into how long training effects are retained before re-standardisation becomes necessary, would be valuable. The demonstrated effectiveness of the training tool highlights the feasibility of achieving high levels of standardisation in sperm morphology assessment, underscoring the need for updated gold standards in quality assurance, control and proficiency testing within the field.

Methods

To investigate whether novice users could become competent at sperm morphology assessment, a training tool needed to be developed. The training tool is formally titled the ‘Sperm Morphology Assessment Standardisation Training Tool’; for brevity, it is referred to here as the ‘training tool’. The training tool used in this study was developed in a previous project, as described in Seymour et al. (2025). That study, which involved the use of animal samples, was conducted and reported in accordance with the ARRIVE guidelines (PLOS Biol 8(6): e1000412, 2010). All animal procedures were carried out in accordance with procedures approved by the University of Sydney animal ethics committee (project number 2019/1678). The present study used data collected from an internal laboratory training tool used to teach new research assistants. All participants were members of the laboratory at the time of their involvement in the study. The University of Sydney Human Research Ethics Committee was consulted and advised that formal ethics approval was not required, given the low-risk nature of the project and its internal educational context. Nonetheless, all participants provided informed consent for the use of their de-identified data in research and publication, and all research was performed in accordance with the National Statement on Ethical Conduct in Human Research (Australian Government, National Health and Medical Research Council, 2025).

Experimental design

This study consisted of two experiments. Experiment 1 examined the accuracy of novice morphologists without prior training, while Experiment 2 assessed whether repeated interactive training could improve user accuracy. All students included in this study volunteered to participate. Students with previous experience in sperm morphology assessment, advanced semen assessment or any form of morphological cell assessment were excluded. Participating students had an interest in assisted reproduction for livestock but no prior exposure to the industry.

Experiment 1 was run with undergraduate students (n = 22) with no prior experience in sperm morphology assessment. These novice users were each asked to assess the same 100 sperm in the training tool’s ‘Assess a semen sample’ test, with images of individual sperm shown in random order. A second group of undergraduate students (n = 16) was shown supplementary materials, including a visual aid outlining the morphological categories with images and written descriptions, as well as an introductory video, before undertaking the test. These materials are described in more detail elsewhere14. The results were compared to assess whether there was a significant difference between untrained users and users who had access to the supplementary material.

Experiment 2 provided further training to the ‘trained’ users (n = 16) over a 4-week experimental period. In the first week, users were intensively trained in sperm morphology assessment, with regular tests to monitor progress. Two intensive training days were held in week 1, during which users underwent a test–train–test cycle. Users first attempted to assess 100 sperm each (a ‘100 sperm test’). The same 100 sperm were used throughout the testing period and were shown in a random order to each user on every attempt. Users then completed a 1 h training period in the interactive training mode. In this mode, they attempted to classify sperm and received immediate feedback on which of their chosen categories were correct or incorrect. At the end of the 1 h training period, users re-attempted the 100 sperm test. After a 15 min break, they completed another 1 h of training, followed by another 100 sperm test. They then repeated this process once more, amounting to 3 h of training and four 100 sperm tests per day. Over the two intensive days of week 1, users therefore completed 6 h of training and eight 100 sperm tests. In weeks 2–4, users returned once a week on the same day and completed one test–train–test cycle, amounting to 1 h of training and two 100 sperm tests per week.
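The hours and test counts above can be checked with a short tally. The Python sketch below models each session as one baseline test followed by a number of (1 h training, then test) blocks. This is a simplification of the protocol as written, and the `Session` and `SCHEDULE` names are hypothetical. Summed over all four weeks, this tally gives 9 h of training and fourteen 100 sperm tests per user.

```python
"""Tally training hours and 100-sperm tests across the Experiment 2 schedule.

Assumption: each session is modelled as one baseline test followed by
a number of (1 h training -> test) blocks, as described in the text.
"""
from dataclasses import dataclass


@dataclass
class Session:
    train_blocks: int  # number of 1 h training blocks in the session

    @property
    def hours(self) -> int:
        return self.train_blocks  # each training block lasts 1 h

    @property
    def tests(self) -> int:
        # one baseline test, plus one test after every training block
        return 1 + self.train_blocks


# Week 1: two intensive days, each with three train->test blocks.
# Weeks 2-4: one test-train-test cycle per week.
SCHEDULE = {
    1: [Session(3), Session(3)],
    2: [Session(1)],
    3: [Session(1)],
    4: [Session(1)],
}


def weekly_totals(schedule):
    """Return {week: (training hours, number of 100-sperm tests)}."""
    return {
        week: (sum(s.hours for s in sessions), sum(s.tests for s in sessions))
        for week, sessions in schedule.items()
    }


if __name__ == "__main__":
    totals = weekly_totals(SCHEDULE)
    for week, (hours, tests) in totals.items():
        print(f"week {week}: {hours} h training, {tests} tests")
    print("overall:", sum(h for h, _ in totals.values()), "h,",
          sum(t for _, t in totals.values()), "tests")
```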

Sperm image generation

To populate the training tool with images of sperm, ejaculates from 72 ram sires were imaged using a 40× differential interference contrast (DIC) objective lens on an Olympus BX53 microscope. As per livestock industry standards, samples were fixed prior to imaging but were neither stained nor smeared18. Individual sperm were then cropped from the resulting field-of-view (FOV) images so that the training tool user would see only a single sperm at a time.

All sperm were then classified by three expert morphologists using the 30-category classification system described in the following section (Sperm morphological categories). Consensus scores were generated to determine whether the expert morphologists agreed on the classification of each individual sperm. Only sperm whose classifications achieved 100% consensus amongst the experts were used in the training tool.
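The text does not define how consensus scores were calculated. The Python sketch below assumes a simple definition: the fraction of experts whose label set matches the most common label set. Under this assumption, the 100% consensus rule keeps only images on which all three experts gave identical labels. The data layout and function names are hypothetical.

```python
"""Sketch of a 100%-consensus filter over expert labels.

Assumed definition: consensus score = fraction of experts giving the most
common label set. Hypothetical layout: image ID -> one label set per expert.
"""
from collections import Counter


def consensus_score(expert_labels):
    """Fraction of experts who gave the most common label set."""
    counts = Counter(frozenset(labels) for labels in expert_labels)
    top_count = counts.most_common(1)[0][1]
    return top_count / len(expert_labels)


def filter_full_consensus(images):
    """Keep only images whose label sets are identical across all experts."""
    return {
        image_id: set(expert_labels[0])
        for image_id, expert_labels in images.items()
        if consensus_score(expert_labels) == 1.0
    }


if __name__ == "__main__":
    # Illustrative labels only (names from the 30-category list), not study data.
    images = {
        "img_001": [{"Normal"}, {"Normal"}, {"Normal"}],
        "img_002": [{"Knobbed acrosome"}, {"Knobbed acrosome"}, {"Swollen acrosome"}],
        "img_003": [{"Dag defect", "Proximal cytoplasmic droplet"}] * 3,
    }
    for image_id, labels in images.items():
        print(image_id, round(consensus_score(labels), 2))
    print(sorted(filter_full_consensus(images)))
```

Label sets are compared as frozensets, so the order in which an expert selected multiple labels does not matter.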

Sperm morphological categories

All images used in the training tool were classified with an extensive 30-category system comprising the following labels:

1. Normal
2. Abaxial tail
3. Bent midpiece
4. Segmental aplasia
5. Slightly pyriform head
6. Narrow head
7. Detached head
8. Multiple heads
9. Pyriform heads
10. Microcephalic head
11. Macrocephalic head
12. Rolled head
13. Swollen acrosome
14. Knobbed acrosome
15. Diadem defect
16. Nuclear vacuole
17. Teratoid
18. Missing acrosome
19. Distal midpiece reflex
20. Broken neck
21. Multiple midpieces
22. Pseudodroplet
23. Dag defect
24. Corkscrew defect
25. Distal cytoplasmic droplet
26. Proximal cytoplasmic droplet
27. Stumped tail
28. Multiple tails
29. Reflex tail
30. Coiled tail

For the purposes of this study, users were asked to classify sperm using 25 of the 30 categories listed above. Five categories (abaxial tail, bent midpiece, segmental aplasia, slightly pyriform head and narrow head) were considered minor abnormalities that would still allow the sperm to be functionally defined as ‘normal’. Because the most important aspect of the training was the ability to identify normal sperm, these labels were combined into the ‘normal’ category for the experiment. For an image to be scored as ‘correct’, the user had to select every applicable category for that image.
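The Python sketch below illustrates the merging and scoring rules described above. Category numbers follow the 30-category list. The text only says that every applicable category had to be selected. The sketch assumes an exact set match, so extra incorrect labels are also penalised; whether the training tool penalised extra labels is not stated. Function names are hypothetical.

```python
"""Collapse 30-category labels to the 25 training categories and score.

Category numbers follow the 30-category list above. Exact-match scoring
(no missing and no extra labels) is an assumption: the text only states
that every applicable category had to be selected.
"""

NORMAL = 1
# Minor abnormalities 2-6 are functionally treated as 'normal'.
MINOR_TO_NORMAL = {2, 3, 4, 5, 6}
TRAINING_CATEGORIES = {NORMAL} | set(range(7, 31))  # the 25 categories


def to_training_categories(labels):
    """Map a set of 30-category numbers onto the 25 training categories."""
    return {NORMAL if c in MINOR_TO_NORMAL else c for c in labels}


def is_correct(user_labels, expert_labels):
    """True only if the user's selection matches the expert label set."""
    return to_training_categories(user_labels) == to_training_categories(expert_labels)


def accuracy(responses):
    """Percentage of (user_labels, expert_labels) pairs scored correct."""
    correct = sum(is_correct(user, expert) for user, expert in responses)
    return 100 * correct / len(responses)


if __name__ == "__main__":
    responses = [
        ({1}, {2}),         # abaxial tail counted as normal -> correct
        ({14}, {14, 26}),   # missed the proximal droplet -> incorrect
        ({23}, {23}),       # dag defect -> correct
    ]
    print(f"{accuracy(responses):.1f}%")
```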

At the conclusion of the study, user data were re-analysed to determine whether users would have assigned correct labels under alternative classification systems. To do this, the 25 morphological categories used in training were allocated to the categories of each alternative system. The allocations are listed below, with numbers referring to the 30-category list above:

- 2-category system13
  (1) Normal (Category 1)
  (2) Abnormal (Categories 7–30)
- 5-category system16
  (1) Normal (Category 1)
  (2) Head (Categories 7–18)
  (3) Midpiece (Categories 19–24)
  (4) Tail (Categories 27–30)
  (5) Cytoplasmic droplet/residue (Categories 25–26)
- 8-category system15
  (1) Normal (Category 1)
  (2) Proximal cytoplasmic droplet (Category 26)
  (3) Midpiece abnormalities (Categories 19–24)
  (4) Loose/multiple heads and abnormal tails (Categories 7–8, 25, 27–30)
  (5) Pyriform heads (Category 9)
  (6) Knobbed acrosomes (Category 14)
  (7) Vacuoles and teratoids (Categories 10–12, 15–17)
  (8) Swollen/missing acrosomes (Categories 13 and 18)
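The allocations above can be written as lookup tables, and each 25-category answer can then be re-scored under a coarser system. The Python sketch below does this. It assumes an answer counts as correct under a system when the set of system categories implied by the user's labels equals the set implied by the experts' labels. This rule, and all names in the code, are illustrative assumptions rather than the authors' re-analysis code. The sketch also checks that each system assigns every one of the 25 training categories exactly once.

```python
"""Re-score 25-category answers under coarser classification systems.

Assumed rule: an answer is correct under a system if the set of system
categories implied by the user's labels equals the set implied by the
experts' labels. Category numbers follow the 30-category list above.
"""


def span(a, b):
    """Inclusive range of category numbers."""
    return set(range(a, b + 1))


SYSTEMS = {
    "2-category": {"Normal": {1}, "Abnormal": span(7, 30)},
    "5-category": {
        "Normal": {1},
        "Head": span(7, 18),
        "Midpiece": span(19, 24),
        "Tail": span(27, 30),
        "Cytoplasmic droplet/residue": {25, 26},
    },
    "8-category": {
        "Normal": {1},
        "Proximal cytoplasmic droplet": {26},
        "Midpiece abnormalities": span(19, 24),
        "Loose/multiple heads and abnormal tails": {7, 8, 25} | span(27, 30),
        "Pyriform heads": {9},
        "Knobbed acrosomes": {14},
        "Vacuoles and teratoids": span(10, 12) | span(15, 17),
        "Swollen/missing acrosomes": {13, 18},
    },
}

TRAINING_CATEGORIES = {1} | span(7, 30)  # the 25 categories used in training


def lookup(system):
    """Invert a system definition into {category number: system category}."""
    table = {}
    for name, members in SYSTEMS[system].items():
        for c in members:
            if c in table:
                raise ValueError(f"category {c} assigned twice in {system}")
            table[c] = name
    if set(table) != TRAINING_CATEGORIES:
        raise ValueError(f"{system} does not cover all 25 categories exactly")
    return table


def rescore(user_labels, expert_labels, system):
    """Compare answers after collapsing both label sets into `system`."""
    table = lookup(system)
    return {table[c] for c in user_labels} == {table[c] for c in expert_labels}


if __name__ == "__main__":
    # A knobbed acrosome (14) mislabelled as a swollen acrosome (13):
    for system in SYSTEMS:
        print(system, rescore({13}, {14}, system))
```

In the usage example, the mislabel is counted as correct under the 2- and 5-category systems, because both labels fall into one coarse category. Under the 8-category system it remains an error. This illustrates why accuracy tends to be higher in coarser systems.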

Statistical analysis

All statistical analyses were performed in R using RStudio (version 2023.06.1+524). The Kruskal–Wallis test was used to assess whether the degree of variation differed significantly amongst tests, classification systems and individual morphological categories. Dunn’s post hoc test was used for pairwise comparisons, and the resulting significance levels were visualised as a heatmap. A Wilcoxon test was used to determine whether accuracy scores differed significantly between tests, and between the untrained and trained groups in Experiment 1. A p-value of less than 0.05 was considered statistically significant, and results are reported as mean ± standard error of the mean (SEM).
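The analyses themselves were run in R. For readers unfamiliar with rank-based tests, the pure-Python sketch below shows what three of the quantities named above compute. It is illustrative only and is not the authors' code. It covers mean ± SEM, the tie-corrected Kruskal–Wallis H statistic and Dunn's pairwise z statistic, but omits p-values and the Wilcoxon tests. For real analyses, an established implementation such as R's `kruskal.test` or Python's `scipy.stats.kruskal` should be preferred.

```python
"""Pure-Python sketch of mean ± SEM, the Kruskal–Wallis H statistic and
Dunn's pairwise z statistic (joint average ranks, tie-corrected).
Illustrative only; p-values are omitted.
"""
import math
import statistics


def mean_sem(values):
    """Mean and standard error of the mean (sample SD divided by sqrt(n))."""
    return statistics.mean(values), statistics.stdev(values) / math.sqrt(len(values))


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # extend j across a run of tied values
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def _rank_groups(groups):
    """Rank all observations jointly; return per-group ranks, N and tie term."""
    pooled = [v for g in groups for v in g]
    ranks = average_ranks(pooled)
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    per_group, start = [], 0
    for g in groups:
        per_group.append(ranks[start:start + len(g)])
        start += len(g)
    return per_group, len(pooled), tie_term


def kruskal_h(*groups):
    """Tie-corrected Kruskal–Wallis H (compare to chi-square, df = k - 1)."""
    per_group, n, tie_term = _rank_groups(groups)
    h = 12 / (n * (n + 1)) * sum(sum(r) ** 2 / len(r) for r in per_group) - 3 * (n + 1)
    return h / (1 - tie_term / (n ** 3 - n))


def dunn_z(groups, i, j):
    """Dunn's z for the mean-rank difference between groups i and j."""
    per_group, n, tie_term = _rank_groups(groups)
    diff = sum(per_group[i]) / len(per_group[i]) - sum(per_group[j]) / len(per_group[j])
    var = (n * (n + 1) / 12 - tie_term / (12 * (n - 1))) * (
        1 / len(per_group[i]) + 1 / len(per_group[j])
    )
    return diff / math.sqrt(var)
```

For example, `kruskal_h([1, 2, 3], [4, 5, 6])` gives 27/7 (about 3.857), because the two groups share no ranks. The tie correction only changes H when observations are tied, which is common with percentage accuracy scores.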

Data availability

The image dataset and training tool are not publicly available. All data generated in this study is available in the supplementary materials.

References

Björndahl, L. et al. Standards in semen examination: Publishing reproducible and reliable data based on high-quality methodology. Hum. Reprod. 37(11), 2497–2502 (2022).

Mallidis, C. et al. Ten years’ experience with an external quality control program for semen analysis. Fertil. Steril. 98(3), 611-616.e614 (2012).

Matson, P. L. Andrology: External quality assessment for semen analysis and sperm antibody detection: Results of a pilot scheme. Hum. Reprod. 10(3), 620–625 (1995).

Keel, B. A. Quality control, quality assurance, and proficiency testing in the andrology laboratory. Arch. Androl. 48(6), 417–431 (2002).

Barratt, C. L. R., Björndahl, L., Menkveld, R. & Mortimer, D. ESHRE special interest group for andrology basic semen analysis course: A continued focus on accuracy, quality, efficiency and clinical relevance. Hum. Reprod. 26(12), 3207–3212 (2011).

Carrell, D. T. & De Jonge, C. J. The troubling state of the semen analysis. Andrology 4(5), 761–762 (2016).

Tomlinson, M. J. Uncertainty of measurement and clinical value of semen analysis: Has standardisation through professional guidelines helped or hindered progress?. Andrology 4(5), 763–770 (2016).

Ahadi, M. et al. Evaluation of the standardization in semen analysis performance according to the WHO protocols among laboratories in Tehran, Iran. Iran J. Pathol. 14(2), 142–147 (2019).

Riddell, D., Pacey, A. & Whittington, K. Lack of compliance by UK andrology laboratories with World Health Organization recommendations for sperm morphology assessment. Hum. Reprod. 20(12), 3441–3445 (2005).

Agarwal, A. et al. Sperm morphology assessment in the era of intracytoplasmic sperm injection: Reliable results require focus on standardization, quality control, and training. World J. Mens Health 40(3), 347–360 (2022).

Saravanan, R. & Sujatha, P. A state of art techniques on machine learning algorithms: A perspective of supervised learning approaches in data classification. In 2018 Second International Conference on Intelligent Computing and Control Systems (ICICCS) (2018).

Lebovitz, S., Levina, N. & Lifshitz-Assaf, H. Is AI ground truth really true? The dangers of training and evaluating AI tools based on experts’ know-what. MIS Quarter. 45, 1501–1526 (2021).

Evans, G. & Maxwell, W. M. C. Salamon’s artificial insemination of sheep and goats (Butterworths, 1987).

Seymour, K. R., Rickard, J. P., Pool, K. R., Pini, T. & de Graaf, S. P. Development of a sperm morphology assessment standardization training tool. Biol. Methods Protoc. 10(1) (2025). https://doi.org/10.1093/biomethods/bpaf029

Perry, V. E. A. The role of sperm morphology standards in the laboratory assessment of bull fertility in Australia. Front. Vet. Sci. 8, 672058 (2021).

Menkveld, R. Sperm morphology assessment using strict (Tygerberg) criteria. In Spermatogenesis: Methods and Protocols (ed. Carrell, D. T.) (Humana Press, 2013).

Koziol, J. H. & Armstrong, C. L. Manual for breeding soundness examination of bulls: Chapter 8 evaluation of semen quality 2nd edn. (Society of Theriogenology, 2018).

Beggs, D. S., McGowan, M. R., Irons, P. C., Perry, V. E. A. & Sullivan, T. BullCheck® veterinary bull breeding soundness evaluation, 4th edn. (Australian Cattle Veterinarians, 2024).

WHO. WHO laboratory manual for the examination and processing of human semen (WHO Press, 2021).

Birchall, D. Spatial ability in radiologists: A necessary prerequisite?. Br. J. Radiol. 88(1049), 20140511 (2015).

Sunday, M. A., Donnelly, E. & Gauthier, I. Individual differences in perceptual abilities in medical imaging: The Vanderbilt chest radiograph test. Cogn. Res. Princ. Implic. 2(1), 36 (2017).

Ombelet, W. et al. Multicenter study on reproducibility of sperm morphology assessments. Arch. Androl. 41(2), 103–114 (1998).

Davis, R. O., Gravance, C. G. & Overstreet, J. W. A standardized test for visual analysis of human sperm morphology. Fertil. Steril. 63(5), 1058–1063 (1995).

Acknowledgements

The author would like to thank the Christian Rowe Thornett Stipend Scholarship and the members of the Animal Reproduction Group at the University of Sydney for their ongoing support. The authors also thank M Seymour and J Samm for their valuable comments and contributions to the design of the training tool.

Author information


Contributions

KS designed and conducted the experiments, analysed the data, and drafted the manuscript. JR contributed to project funding and provided critical review and revisions of the manuscript. KP and TP provided experimental data and manuscript review and revision. SdG conceptualised the study, secured funding, supervised the project, generated experimental data, and provided critical manuscript review and revision.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Seymour, K.R., Rickard, J.P., Pool, K.R. et al. Use of a sperm morphology assessment standardisation training tool improves the accuracy of novice sperm morphologists. Sci Rep 15, 21963 (2025). https://doi.org/10.1038/s41598-025-07515-3


DOI: https://doi.org/10.1038/s41598-025-07515-3