Abstract

Cloud storage services are widely used due to their convenience and flexibility. However, the presence of a large amount of duplicate data in the cloud imposes a significant storage burden and increases the risk of privacy breaches. Random Message Locked Encryption (R-MLE) is an effective tool for secure deduplication of cloud data. However, since it is based on bilinear mapping, the comparison of fingerprint tags during deduplication results in substantial computational overhead. To address this issue, we propose a secure deduplication method based on an Autoencoder model. The summary tags generated by the model are used to reduce the number of fingerprint tag comparisons, thereby improving deduplication efficiency. Building on this, this paper further introduces a secure deduplication method based on a Convolutional Autoencoder (CAE) model, which utilizes convolution and pooling operations to reduce the number of parameters in the Convolutional Autoencoder model, thereby decreasing computational and storage overhead. Additionally, it effectively mitigates the problem of overfitting. Experiments conducted on the source code dataset indicate that the proposed approach yields superior deduplication efficiency, reduced model storage requirements, and a more uniform distribution.

Similar content being viewed by others

Introduction

With the implementation of various national strategies such as the “New Infrastructure”, “Internet Plus” and big data, as well as the rapid development of information technologies including 5G, cloud computing, the Internet of Things (IoT), big data and artificial intelligence, a large number of users’ privacy data have been generated1,2. Localized storage incurs high costs for equipment and maintenance. To reduce these, more users are opting for cloud storage. However, duplicate data in the cloud wastes resources and increases computing and communication costs3,4,5. Therefore, the secure deduplication of cloud data has become a hot topic for scholars6,7,8.

At present, the message-locked encryption deduplication method9,10 is one of the important methods to solve the secure deduplication of cloud data. It theoretically explains the feasibility and security of the deduplication of encrypted data. Scholars have built a complete data-secure deduplication framework around this method. However, there is always a problem with the message-locked encryption method: to achieve semantic security, the deterministic encryption method cannot be used, while the random encryption method uses bilinear mapping, which results in a huge computational cost in fingerprint tag comparison. This seriously hinders the practical application of random message-locked encryption.

In recent years, machine learning methods have made breakthroughs in many fields such as image recognition, natural language processing and feature learning and analysis11,12,13. Based on this, Qi et al.14 proposed a cloud data secure deduplication method based on the Autoencoder model. This scheme generates summary tags of ciphertext data through the Autoencoder model and uses the similarity of summary tags to filter out fingerprint tags that do not need to be compared, thus reducing the calculation overhead caused by the calculation of fingerprint tags and improving the deduplication efficiency. The proposed scheme has the following issues: the fully connected autoencoder has a large number of parameters, leading to high training computational costs and prolonged summary tag generation time; excessive parameters may result in local optima or model overfitting; and the high computational complexity leads to excessive storage consumption. To address the problems associated with the naive autoencoder model, this paper further proposes a deduplication method based on the CAE model. The convolution and pooling operations can reduce the number of model parameters, improve the training time of the model and the generation speed of summary tags, and reduce the storage space occupied by the model.

The main contributions of this paper are as follows:

-

In this paper, the convolutional Autoencoder model is introduced into the R-MLE-based secure deduplication scheme, and the similarity of the summary labels generated by the model is used to reduce the number of fingerprint tag comparisonss in the R-MLE deduplication scheme.

-

This paper designs a multi-layer CAE network, which reduces the parameters of the model by using convolution and pooling operations, thereby realizing efficient cloud data deduplication and occupying less storage space.

-

Experiments on the similarity and distribution analysis of summary tags show that the CAE model proposed in this paper exhibits higher similarity and a more uniform distribution.

The first part of this paper introduces the research background of secure deduplication. The second part introduces the related research work in data secure deduplication. The third part briefly introduces the random message-locked encryption deduplication method and the Autoencoder model-based secure deduplication method, points out the problems existing in naive Autoencoder, and describes in detail the secure deduplication scheme based on the CAE model proposed in this paper. The fourth part is experimental analysis. The fifth part is the summary of the research work of this paper.

Related work

The two primary concerns of cloud storage platforms are security and efficiency15. IDC’s research report16 pointed out that almost 80% of the surveyed companies indicated that they were exploring the use of efficient and secure deduplication technology to eliminate redundant data, so as to improve storage efficiency and reduce storage costs17,18.

Bellare et al.19 proposed the framework of Message-Locked-Encryption (MLE) and proved that convergence encryption meets tag consistency security with the help of MLE, which can resist data tampering attacks. Subsequently, more scholars proposed a data deduplication solution based on the framework of MLE20,21,22,23,24, Qi et al.25 proposed a secure deduplication system for encrypted data (AC-Dedup) based on MLE, which achieves secure and efficient dynamic access control while ensuring the effectiveness of duplicate data deletion. However, the key of a single MLE algorithm is generated by plaintext data, which makes it vulnerable to enemy attacks. Jiang et al.26 proposed a random message-locked encryption (R-MLE) deduplication method to achieve semantic security. During the execution of R-MLE, blind signature technology is introduced to encrypt packet data and generate keys, preventing the server from accessing the data information. However, as these schemes rely on bilinear mapping for label comparison, the high computational cost may impact performance, especially in resource-intensive and time-sensitive scenarios, reducing practicality27.

To solve the problem that the random message-locked encryption method in the traditional deduplication scheme has a high computational cost and low deduplication efficiency, a deduplication scheme based on the Autoencoder model is proposed in the literature14. Autoencoder (AE)28is an important unsupervised learning method, primarily composed of an encoder and a decoder, which learns and reconstructs the input data through encoding and decoding. It compresses information using specific algorithms to extract key features, achieving optimization based on the data structure rather than simple information reduction29. The Autoencoder model can effectively reduce the dimension of input data and ensure a better feature extraction effect. In literature14, it is proposed that the Autoencoder model is used to generate summary tags, and the comparison times of fingerprint tags are reduced through summary tags, thus reducing the calculation times of bilinear mapping and the calculation cost, and effectively solving the problem of high computational cost of random message locked encryption in traditional duplicate schemes.

However, the naive Autoencoder model has more parameters, longer training time and larger space. To reduce the storage burden and computing overhead of CSP, this paper further proposes an efficient deduplication method based on the CAE model. Convolutional Neural Network (CNN) is a typical feedforward neural network, which was first proposed by Lecun and has been widely applied by many scholars30,31. At present, scholars have proposed several classical convolutional neural network structures, such as LeNet-532, AlexNet33, VGG34 etc., which have been applied in image classification and recognition, data deduplication, etc. In this paper, the convolutional and pooling operations of CNN are integrated with the autoencoder, and an efficient deduplication method based on the CAE model is proposed. By integrating CNN’s convolution and pooling operations to replace fully connected layers, this method reduces parameters, accelerates training, decreases storage consumption, effectively prevents overfitting, and enhances deduplication efficiency.

Scheme design

In traditional autoencoder models, assuming the number of labels in the label library is \(|T| = n\), the algorithm’s time complexity is \(O(n)\). The performance bottleneck lies in the compareTag method, which requires bilinear mapping calculations. Each computation takes a significant amount of time, ultimately leading to a drastic increase in computational time when \(n\) is large. Literature14 proposed an autoencoder-based approach to generate summary tags and improve the efficiency of data security deduplication using these summary tags. In this approach, the time complexity of fingerprint tag comparison is \(O(m)\), and the overall time complexity is \(O(n + log n + m\)), where m represents the number of candidate tags, which is much smaller than n. Since the compareTag operation is dominant, the algorithm’s performance primarily depends on the size of \(m\). Therefore, in practical applications, the complexity can be approximated as \(O(m)\), offering significantly higher efficiency compared to traditional autoencoder models. However, the autoencoder model is a fully connected network. This paper proposes an efficient deduplication scheme based on the Convolutional Autoencoder (CAE) model: by replacing the fully connected layers with convolutional and pooling operations, it significantly reduces the number of parameters, accelerates training speed, and decreases storage consumption. This approach not only effectively prevents overfitting but also improves deduplication efficiency.

Secure deduplication scheme based on CAE model

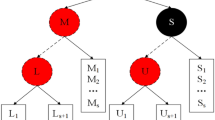

A convolutional neural network (CNN) mainly includes an input layer, convolutional layer, pooling layer and output layer, among which convolution is the core of CNN. CNN model needs to go through convolution, pooling and other steps to achieve the goal of dimension reduction. Based on this, this paper integrates CNN and autoencoder and designs a secure deduplication method based on the CAE model. The experimental environment used for training the model is: Windows 11 operating system, CPU i7 8750h 3.9GHz, memory 16G, and video card GTX1070. In addition, the same data set as literature14 is used, and the specific model is shown in Fig. 1.

In this paper, the multi-layer convolutional neural network is used to replace the naive Autoencoder model, and the operation of convolution and pooling of the model is increased, which greatly reduces the parameters of model training and improves the training efficiency. First of all, the input of the model is the 4096-dimensional data, and then the data enters the CAE with the convolution layer and pooling layer for data feature extraction. In this step, we choose a 4*4 matrix as the convolution kernel, and the step size is set as 2. To avoid overfitting problems in the training process of the model, The gradient descent method is used to update the gradient of the weight and bias values of the model until the model can be trained to meet the requirements. Through the operation of convolution and pooling, the naive Autoencoder is improved and optimized, which reduces the number of parameters and the amount of calculation, and optimizes the training efficiency of the model.

Model similarity analysis

In this paper, the input dimension of the model is also set to 4096. To intuitively present the experimental results, the summary tags are transformed into two-dimensional vectors through simple linear transformations, enabling their visualization in a plane coordinate system. Three different datasets are selected for similarity testing, with one set of original data, several similar data points, and some dissimilar data points. Euclidean distance calculations lead to the conclusion that the distance between similar data and the original data is smaller than that between dissimilar data, verifying the model’s effectiveness in similarity discrimination. This paper evaluates the performance of the Locality-Sensitive Hashing (LSH) algorithm in preserving similarity among abstract tags. The experimental results are shown in Fig. 2, where panels (a), (b), and (c) illustrate the similarity distribution based on LSH, and panels (d), (e), and (f) depict the similarity distribution of the proposed CAE model. The comparative results show that the CAE model performs better in preserving the semantic similarity of the summary tags, allowing for more accurate differentiation between similar and dissimilar samples.

Summary tag distribution analysis of the model

In this paper, CAE models of different dimensions were selected for training, summary tags generated by the model were converted into two-dimensional vectors for plane representation, and the model distribution was compared with that in the literature14. Figure 3 shows the distribution of summary tags of the model in reference14.

Summary tag distribution of the Autoencoder model in ref.14.

As shown in the figure above, literature14 uses different input data and trains multi-dimensional Autoencoder models, leading to local optima and overfitting in the distribution of the generated summary tags. This means that different data produce similar summary tags, which reduces both the deduplication efficiency and accuracy. To more intuitively verify the advantages of the CAE model proposed in this paper, we conduct experiments using the same dataset, as shown in Fig. 4 below.

To sum up, this paper compares the distribution of Autoencoder models with the literature14. Through experimental comparison, it can be seen that the summary tags generated by the CAE model proposed in this paper are more evenly distributed than those generated by the naive Autoencoder model.

To better verify the advantages of the model in this paper, this paper analyzes the distribution of summary tags in 3D coordinates. In this paper, literature14 and a well-trained model in the method of this paper are selected for experimental comparative analysis. Each model uses three groups of different data as input, and the specific distribution is shown in Fig. 5.

This paper’s method and literature14 model summary tag 3D distribution diagram.

From the perspective of the 3D distribution of the output summary tags of the model, the model distribution of the proposed method is more uniform. This shows that with different input data, the model in the literature14 has the problem of overfitting. With different input data, the output expectation is the same. However, the model proposed in this paper is evenly distributed, and its effect is better than that of the literature14.

In order to verify the degree of fitting between the model in this paper and the model proposed in the literature14, this paper selects the same training set and test set for experiments. Two dimensions of Mean Square Error (MSE), and Mean Absolute Error (MAE) were used to test the model, so as to evaluate the degree of data fitting between the output and input of the model.

Mean square error (MSE) is calculated by the following formula:

Among them, the \(Observed_t\) indicates the input data value of the model, the \(Predicted_t\) indicates the output data value of the model, and \(N\) indicates the size of the test set. MSE refers to the expected value of the square difference between the estimated value of the parameter and the real value of the parameter. The smaller the MSE value is, the better the accuracy of the prediction model in describing the experimental data is.

The mean absolute error (MAE) is calculated by the following formula:

Among them, the \(Observed_t\) indicates the input data value of the model, the \(Predicted_t\) indicates the output data value of the model, and \(N\) indicates the size of the test set. MAE is the average of the absolute values of the deviations from the arithmetic mean of all individual observations. MAE can avoid the problem of errors cancelling each other, so it can accurately reflect the size of the actual prediction error.

To verify that the model proposed in this paper is better, experiments are compared with literature14, as shown in the following Table 1.

Theoretical analysis

If encryption algorithms, specifically the compareTag method, are not considered, the data security deduplication method based on random message lock encryption simply searches for duplicate data through a basic loop. To improve the search efficiency, the only option is to reduce the number of iterations. This is the rationale behind the introduction of summary tags in this paper, which leverage the similarity of the summary tags to filter out a large number of fingerprint tags that do not require comparison. Therefore, the summary tags are central to the proposed method. In addition to possessing similarity, they must also ensure a certain level of security, as they cannot be encrypted; otherwise, rapid similarity calculations would be hindered. To ensure both the effectiveness and security of the method, the summary tags must satisfy the following four properties.

Properties that the summary tags should satisfy

Let the data be denoted as \(m\), and the summary tag generated from the data be denoted as \(\tau (m)\). The summary tag should satisfy the following properties:

-

\(\textcircled {1}\) The original data \(m\) cannot be restored from \(\tau (m)\).

-

\(\textcircled {2}\) If \(\tau (m_1) = \tau (m_2)\), it does not imply that \(m_1 = m_2\).

-

\(\textcircled {3}\) Even if \(m_1 = m_2\), if different models are used to generate \(\tau _1(m_1)\) and \(\tau _2(m_2)\), it should still hold that \(\tau _1(m_1) \ne \tau _2(m_2)\).

-

\(\textcircled {4}\) If \(m_1\) and \(m_2\) are similar, then \(\tau (m_1)\) and \(\tau (m_2)\) should also be similar, and the similarity can be represented by Euclidean distance.

The first three properties relate to the security of the summary tags, while the last property concerns their similarity.

Analysis of how the CAE model satisfies the above properties

(1) Properties \(\textcircled {1}\) and \(\textcircled {2}\):

The CAE model maps the high-dimensional input \(\textbf{x} \in {R}^n\) to a low-dimensional representation \(\textbf{y} = f(\textbf{x}) \in {R}^m\), where \(m \ll n\). Ignoring the activation function, this process can be represented as a linear transformation:

Since \(M\) is rank-deficient and non-invertible, there does not exist an \(f^{-1}\) that can recover \(\textbf{x}\) from \(\textbf{y}\), thus satisfying the non-invertibility property (Property \(\textcircled {1}\)). Consequently, multiple \(\textbf{x}\) values may map to the same \(\textbf{y}\), naturally satisfying Property \(\textcircled {2}\). Considering the inclusion of an activation function \(g\), the model becomes \(\textbf{z} = g(M\textbf{x})\). Although common activation functions (such as ReLU or sigmoid) are monotonic and possess an inverse \(g^{-1}\), the overall mapping \(g(f(\textbf{x}))\) remains non-invertible due to the non-invertibility of \(f\). Therefore, the model still satisfies Properties \(\textcircled {1}\) and \(\textcircled {2}\). To further enhance the security of the summary tags, an irreversible activation function–such as a non-monotonic periodic function like \(\sin\)–can be introduced. The specific approach is to use a monotonic activation function during model training to ensure convergence, while employing a non-monotonic activation function when generating summary tags to enhance their security. This ensures that even similar summary tags do not indicate that the associated data is similar. The experimental section of this paper provides relevant verification of the properties of non-monotonic activation functions.

(2) Properties \(\textcircled {3}\):

To ensure that the same data generates different summary tags for different users, multiple independently trained autoencoder models must be used. Let user 1 and user 2 train models \(f_1(\textbf{x}) = M_1\textbf{x}\) and \(f_2(\textbf{x}) = M_2\textbf{x}\), respectively. Due to differences in training data and initialization, it follows that \(M_1 \ne M_2\), resulting in the following for the same input \(\textbf{x}\):

This diversity prevents adversaries from deducing the original data from the summary tags, thereby enhancing semantic security. Property \(\textcircled {3}\) is a critical attribute for ensuring the semantic security of the summary tags, and it can only be achieved by applying multiple autoencoder models. This is also the key reason why Locality-Sensitive Hashing (LSH) is not used to generate summary tags in this paper. The experimental section of Reference14 provides verification that summary tags generated from the same data may differ when using different models.

(3) Properties \(\textcircled {4}\):

Let two input data points \(\textbf{x}_1\) and \(\textbf{x}_2\) be similar, i.e., \(\Vert \textbf{x}_1 - \textbf{x}_2\Vert\) is small. If the mapping function \(f\) is continuous, then the output satisfies:

In the CAE architecture, the convolutional layers, pooling layers, and common activation functions (such as ReLU) are all continuous functions, so the model naturally satisfies the property of similarity preservation. However, to satisfy Property \(\textcircled {3}\) while maintaining Property \(\textcircled {4}\), it is necessary to ensure that different models \(M_1\) and \(M_2\) are structurally similar. To achieve this, this paper proposes a key strategy: using the same initialization parameters for independent training. Since the training sets are independent and identically distributed (i.i.d.), if the initial matrices M’ are the same, the final trained \(M_1\) and \(M_2\) will maintain a high degree of structural similarity, thereby ensuring that similar inputs correspond to similar outputs:

In summary, under reasonable structural design and training mechanisms, the CAE model systematically satisfies the four key properties required for summary tags: Properties \(\textcircled {1}\) and \(\textcircled {2}\) arise from the compression process of mapping high-dimensional input to low-dimensional representations combined with nonlinear activation functions; Property \(\textcircled {3}\) is achieved by introducing multiple independently trained models, thus preventing direct correlations between tags; Property \(\textcircled {4}\) relies on the mathematical properties of the model’s continuous mapping and establishes structural consistency across different models through a unified parameter initialization strategy. Therefore, constructing multiple CAE models, independently trained based on the same initial parameters, is not only the core prerequisite for ensuring that summary tags possess both security and effectiveness, but also forms the theoretical foundation of the secure deduplication method proposed in this paper.

Experimental analyse

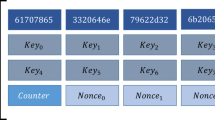

This article uses version 3.0 of the Linux kernel source code to construct the dataset for the experiment: the source code files are partitioned (using a CDC-based variable chunking algorithm), and the blocks are stored in a MySQL database. Our exposed data set consists of two tables: the \(c\_chunk\) table stores all the blocks, a total of 430,165 data blocks; The relationship between data blocks and files is stored in the \(c\_file\) table.

In this paper, we compare the previous schemes from the aspects of tag generation time, model training time and model occupied space. The experimental results show that the secure deduplication method designed in this paper has lower calculation costs, higher deduplication efficiency, and more uniform model distribution.

Comparison of model training time

To verify the performance advantages of the CAE model proposed in this paper in terms of training time, this section compares it with the traditional autoencoder model proposed in literature14. The models with the best training performance from both approaches were selected for comparison. The training data size was set to range from 10,000 to 100,000 data blocks, with a step size of 10,000. To improve the stability and credibility of the results, each set of experiments was repeated four times with different random seeds. The standard deviation of the training time in each case was less than 0.05, and the average value was taken as the comparison metric. The experimental results are shown in Fig. 6, which illustrate the differences in training efficiency between the two models for different data scales.

As can be seen from the figure above, the Autoencoder model generated in this paper has more advantages in terms of training time. This is because the CAE model designed in this paper adopts the convolution kernel of 4*4 matrix and sets the step size of 2, the multi-layer convolution and pooling operation is used to achieve the purpose of efficient dimensionality reduction. Therefore, the training time of the convolutional Autoencoder model designed in this paper is much less than that of the literature14.

Summary tag generation time comparison

This section compares the model’s summary generation time with that in literature14. The input data size was set to range from 10,000 to 100,000 data blocks, with a step size of 10,000. To improve the stability and credibility of the results, each set of experiments was repeated four times with different random seeds. The standard deviation of the summary generation time in each case was less than 0.05, and the average value was taken as the comparison metric. The experimental results are shown in Fig. 7, which illustrates the comparison of the summary tag generation time between the two models for different data scales.

As can be seen from the figure above, the scheme summary tag generation in this paper is more efficient and takes less time. In this paper, the parameters of the model are greatly reduced by the convolution operation, and the features of the data are extracted by the pooling operation. Then the dimension of the convolution Autoencoder model is reduced to achieve a small computational cost of summary tag generation.

Memory overhead comparison

This paper takes Autoencoder models of different dimensions as examples to compare and analyze the storage space occupied by the scheme in this paper and the model in literature14, as shown in the figure below.

In this paper, a better model trained in literature14 is used to compare with our scheme. Table 2 shows the storage cost occupied by different models in literature14.

This paper selects 5 models with different dimensions that are well-trained by the two schemes for training. Table 2 shows the storage cost occupied by different models in this paper. It can be seen from the above table that the CAE model trained in this paper saves 99.99% of the storage space occupied by the model in the literature14. The scale of the reduction is ten thousand times larger.

In summary, compared with the literature14 from multiple dimensions, such as model training time, summary tag generation time and model occupied space, the experimental results show that the scheme proposed in this paper has all aspects of advantages, such as higher deduplication efficiency and less storage space occupied.

Conclusion

Cloud storage services are widely used by users because of their convenience and flexibility. However, a large amount of duplicate data exists in the cloud, reducing the storage efficiency of the cloud platform. Meanwhile, the open network environment also poses huge security risks such as data leaks and breaches. Due to its semantic security, random message-locked encryption is applied to secure the deduplication of cloud data, but this method has a large number of bilinear mapping calculations, leading to low efficiency of deduplication. In order to solve this problem, we proposed a secure deduplication method based on the Autoencoder model in literature14, which uses the similarity of summary tags generated by the model to reduce the number of comparisons of fingerprint tags. And improve the efficiency of deduplication. However, simple Autoencoder models have a large number of parameters and are fully connected networks, which leads to problems such as a long training time, a long time for generating summary labels, and a large model size. Therefore, this paper further proposes a secure deduplication method based on CAE, which uses convolution and pooling operations to reduce the calculation amount of Autoencoder model parameters and improve the efficiency of deduplication. The experimental results indicate that the proposed method generates tags with a more uniform distribution on the source code dataset and exhibits superior deduplication efficiency. In future research work, we will deeply integrate artificial intelligence technology with cloud data secure deduplication, so as to realize cloud data security and efficient deduplication.

Data availability

The authors confirm that all the data supporting the findings of this study are available on request from the corresponding author.

References

China Academy of Information and Communications Technology. Analysis report of third-party data center operators in china. CAICT Research Reports (2022). Available at: https://www.caict.ac.cn/kxyj/qwfb/ztbg/202204/P020220408530633654580.pdf, Accessed: 2022-12-20.

Sun, P. et al. A survey of iot privacy security: Architecture, technology, challenges, and trends. IEEE Internet Things J. 11 (21), 34567-34591. (2024).

Reinsel, D., Rydning, J. & Gantz, J. F. Worldwide global datasphere forecast, 2021–2025: The world keeps creating more data – now, what do we do with it all? IDC White Paper 1–20 (2021).

Hua, Z. et al. Blockchain-assisted secure deduplication for large-scale cloud storage service. IEEE Trans. Serv. Comput. 17 (3), 821-835. (2024).

Cheng, S. et al. Pfdup: Practical fuzzy deduplication for encrypted multimedia data. J. Ind. Inf. Integr. 40, 100613 (2024).

Jin, C. et al. A blockchain-based auditable deduplication scheme for multi-cloud storage. Peer Peer Netw. Appl. 17, 1–14 (2024).

Wang, Z. et al. Lightweight secure deduplication based on data popularity. IEEE Systems Journal 17 (4), 5531-5542. (2023).

Song, M. et al. Enabling transparent deduplication and auditing for encrypted data in cloud. IEEE Trans Dependable Secure Comput. (2023).

Zhang, Q. et al. Blockchain-based privacy-preserving deduplication and integrity auditing in cloud storage. In IEEE Trans. Comput. (IEEE, 2025).

Wu, X. et al. A randomized encryption deduplication method against frequency attack. Journal of Information Security and Applications 83, 103774 (2024).

Jiménez Delgado, E., Roldán Morales, A. L. & Calvo Aray, Y. Implementation of machine learning algorithms to classify university academic success. In 2022 17th Iberian Conference on Information Systems and Technologies (CISTI), 1–4, https://doi.org/10.23919/CISTI54924.2022.9820593 (IEEE, 2022).

AI-Hmouz, R. et al. Logic-oriented autoencoders and granular logic autoencoders: Developing interpretable data representation. IEEE Trans. Fuzzy Syst. 30, 869–877 (2022).

El-Fiqi, H. et al. Weighted gate layer autoencoders. IEEE Trans. Cybern. 52, 7242–7253. https://doi.org/10.1109/TCYB.2021.3049583 (2022).

Qi, H. et al. Secure deduplication method based on autoencoder. In 2020 IEEE Intl Conf on Parallel & Distributed Processing with Applications, Big Data & Cloud Computing, Sustainable Computing & Communications, Social Computing & Networking (2020).

Prajapati, P. A review on secure data deduplication: Cloud storage security issue. J. King Saud. Univ. Comput. Inf. Sci. 34, 3996–4007 (2022).

DuBois, L., Amaldas, M. & Sheppard, E. Key considerations as deduplication evolves into primary storage. White Paper 223310 (2011).

Gao, Y. et al. Similarity-based secure deduplication for iiot cloud management system. IEEE Trans. Dependable Secure Comput. 21 (4), 2242-2256. (2023).

Vikas, C., Sateesh, P. & Rajkumar, B. dualdup: A secure and reliable cloud storage framework to deduplicate the encrypted data and key. Journal of Information Security and Applications 69, 103265. https://doi.org/10.1016/j.jisa.2022.103265 (2022).

Bellare, M., Keelveedhi, S. & Ristenpart, T. Message-locked encryption and secure deduplication. In Johansson, T. & Nguyen, P. Q. (eds.) Advances in Cryptology – EUROCRYPT 2013: 32nd Annual International Conference on the Theory and Applications of Cryptographic Techniques, 296–312 (Springer Berlin Heidelberg, 2013).

Wu, S. Q., Jia, C. F. & Wang, D. Up-mle: Efficient and practical updatable block-level message-locked encryption scheme based on update properties. In IFIP International Conference on ICT Systems Security and Privacy Protection 648, 251–269 (2022).

P, Z. G., H, C. P. & W, L. Y. Blockchain-based secure and verifiable deduplication scheme for cloud assisted internet of things. IEEE Internet Things J. 11, 13995–14006, https://doi.org/10.1109/JIOT.2024.1234567 (2024).

Zhang, Y. et al. Secure password-protected encryption key for deduplicated cloud storage systems. IEEE Trans. Dependable Secure Comput. 19, 2789–2806 (2022).

Liu, X. Q. et al. Message-locked searchable encryption: A new versatile tool for secure cloud storage. IEEE Trans. Serv. Comput. 15, 1664–1676 (2022).

Zhao, Y. J. & Chow, S. M. Updatable block-level message-locked encryption. IEEE Trans. Dependable Secure Comput. 18, 1620–1631 (2021).

Y, Q. et al. Secure data deduplication with dynamic access control for mobile cloud storage. IEEE Trans. Mob. Comput. 23, 2566–2582, https://doi.org/10.1109/TMC.2024.1234567 (2024).

Jiang, T. et al. Towards efficient fully randomized message-locked encryption. In Liu, J. & Steinfeld, R. (eds.) Information Security and Privacy, ACISP 2016, Lecture Notes in Computer Science, vol. 9722, https://doi.org/10.1007/978-3-319-40253-6_22 (Springer, Cham, 2016).

Wang, H., Chen, K., Qin, B., Lai, X. & Wen, Y. A new construction on randomized message-locked encryption in the standard model via uces. Sci. China Inf. Sci. 60, 52101 (2017).

Zhang, Z. X. & Yang, B. Hypergraph regularized deep autoencoder for unsupervised unmixing hyperspectral images. Journal of Donghua University (English Edition) 40, 8–17 (2023).

Nagar, S. & Kumar, A. Orthogonal features based eeg signals denoising using fractional and compressed one-dimensional cnn autoencoder. IEEE Trans. Neural Syst. Rehabil. Eng. 30, 2474–2485 (2022).

Lecun, Y. Generalization and network design strategies. In Connectionism in Perspective (Elsevier, 1989).

Li, Z. et al. Arpcnn: Auxiliary review-based personalized attentional cnn for trustworthy recommendation. IEEE Trans. Industr. Inform. 19, 1018–1029. https://doi.org/10.1109/TII.2022.3169552 (2023).

Guo, X. et al. Network pruning for remote sensing images classification based on interpretable cnns. IEEE Trans. Geosci. Remote Sens. 60 (2022).

Lecun, Y. & Bottou, L. Gradient-based learning applied to document recognition. Proceedings of the IEEE 86, 2278–2324 (1998).

Rasheed, A. et al. Handwritten urdu characters and digits recognition using transfer learning and augmentation with alexnet. IEEE Access 10, 102629–102645. https://doi.org/10.1109/ACCESS.2022.3208959 (2022).

Acknowledgements

This study is supported by 2025 Jilin Province Science and Technology Development Plan Project (20250102226JC).

Author information

Authors and Affiliations

Contributions

CBW conceived the framework and designed the algorithm. HQ designed the model. BC conducted the experimental simulations. GYZ performed the algorithm analysis and data analysis. HQ and GYZ drafted the manuscript. CBW and BC reviewed and revised the manuscript. All authors reviewed and approved the final version.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, C., Zhang, G., Qi, H. et al. A CAE model-based secure deduplication method. Sci Rep 15, 24605 (2025). https://doi.org/10.1038/s41598-025-09788-0

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-09788-0

This article is cited by

-

GWOCS: an adaptive hybrid framework for duplicate data removal in cloud systems

International Journal of Information Technology (2025)