Abstract

As oil and gas exploration advances, the growing complexity of geological conditions demands higher-quality quadrilateral meshes for spectral element method-based seismic simulations. For complex geological models, existing quadrilateral meshing algorithms struggle to generate high-quality meshes that meet the spectral element method’s requirements, often producing initial meshes with topological errors or concave elements, which compromise simulation accuracy. To address this, we propose a swarm intelligence-based secondary optimisation method, employing particle swarm optimisation (PSO), wolf pack algorithm (WPA), and firefly algorithm (FA) to iteratively refine distorted nodes. Results demonstrate that all three algorithms eliminate initial mesh defects, with WPA achieving the highest mesh quality, PSO exhibiting the fastest convergence, and FA performing least effectively. The optimised meshes meet the high-quality standards of the spectral element method, significantly improving simulation stability and computational efficiency, and laying a foundation for the further application of the spectral element method in seismic exploration.


Introduction

Seismic survey technology is widely used in the exploration of oil and gas as well as mineral resources. In oil and gas exploration, seismic surveys can help geologists to identify possible oil or gas reservoirs in the subsurface, thereby reducing the risks and costs of drilling. In mineral resources exploration, seismic survey technology can also be used to locate the distribution of coal, metal ores and other important resources. In actual seismic exploration, forward modelling simulations are required due to the complexity of the geological environment.

The mainstream forward modelling algorithms in seismic exploration include the finite difference method, the finite element method, discontinuous finite element methods and the spectral element method. The finite difference method discretises the continuous partial differential equations directly. It suits regular grids and is computationally efficient, but it can incur errors when dealing with complex boundaries1. It was the earliest of these methods to be widely applied to seismic wave forward modelling2. The finite element method offers high flexibility and versatility across problems with different geometries and boundary conditions3. Although it traditionally requires a complex implementation and substantial computational resources, modern commercial software packages have greatly improved its accessibility, and it is now widely adopted in engineering practice. Discontinuous finite element methods are well suited to handling higher-order derivatives and discontinuities, but they greatly increase computational cost and storage requirements4.

The spectral element method is an efficient method for simulating seismic waves5 that also offers high accuracy and fast convergence. It was initially applied in fluid mechanics6. A team led by Professor Komatitsch in France carried out theoretical research on the spectral element method for numerical simulation and wrote one-, two-, three- and four-dimensional computational programs that were successfully applied to practical problems7. Since then, the spectral element method has been developed towards more complex simulation media, faster numerical simulation and higher accuracy. To ensure simulation accuracy and efficiency, the spectral element method places more stringent requirements on the quality of the quadrilateral mesh.

Research on mesh generation began in the 1950s. Early on, William J. Gordon, Charles A. Hall and others proposed generating all-quadrilateral meshes by parameter space mapping8. In a curvilinear coordinate system, the mesh conforms more closely to the geometric boundary, which reduces mesh distortion caused by boundary irregularities. However, to map the geometry well onto the parameter space, it must first be decomposed manually, a tedious process that demands considerable effort from operators with specialised knowledge. To solve this problem, Blacker and Stephenson proposed the Paving algorithm in 1991, which generates quadrilateral meshes automatically9. Compared with the early parameter space mapping method of Gordon and Hall, it is far more automated and greatly simplifies the meshing workflow, but it may yield lower-quality meshes around complex boundaries. All of the above methods generate quadrilateral meshes directly, which is simple and intuitive, but they require substantial computational resources, are difficult to parallelise efficiently, and produce poor-quality quadrilateral meshes for large-scale complex problems.

An indirect approach can therefore be considered: a triangular mesh is constructed first, and its triangles are then merged and reorganised into quadrilaterals. In 1989, S. H. Lo proposed generating all-quadrilateral or mixed triangle-quadrilateral meshes by splitting or merging elements, starting from a triangulation of the region10. Johnston later combined element splitting with edge flipping to improve mesh quality and reduce the number of triangles left in the mesh11. Owen proposed the Q-Morph algorithm, which converts the triangle mesh of a region into quadrilaterals layer by layer from the outer boundary to the inner boundary12. Provided that the number of initial boundary segments is even, Q-Morph is guaranteed to generate an all-quadrilateral mesh, although a small number of poor-quality elements are introduced. In 2012, Remacle et al. proposed a Frontal-Delaunay quadrilateral mesh generation algorithm based on the \(L^\infty\) norm, which computes distances in the infinity norm during mesh generation so as to construct triangular meshes that are better suited to being merged into quadrilaterals13.

The above methods can effectively generate quadrilateral meshes and are widely used across industries. For regular models, such as engineering components or medical models, traditional quadrilateral meshing algorithms can produce high-quality quadrilateral meshes. For complex and irregular geological models, however, the meshes they produce may not meet the requirements of practical production computations. Because these algorithms are already relatively complex, improving the algorithms themselves is difficult; instead, a secondary optimisation can be applied to the quadrilateral mesh produced by the initial meshing. Secondary optimisation is divided into geometric optimisation and topological optimisation. A typical geometric optimisation algorithm is Laplacian smoothing, which leaves the mesh topology unchanged and moves each mesh node to the centroid of the polygon formed by its neighbouring nodes to improve mesh quality. However, it does not effectively repair concave quadrilaterals14. Chen Ligang et al. used mesh refinement, node insertion and node deletion to optimise quadrilateral mesh topology, significantly reducing the number of distorted nodes15.
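As a point of reference for the Laplacian smoothing mentioned above, the following is a minimal Python sketch of the common vertex-average variant, in which each interior node moves to the mean of its neighbours' coordinates (the area centroid of the neighbour polygon is a closely related alternative). The function name `laplacian_smooth` and its list-based inputs are illustrative assumptions, not part of the method proposed here.

```python
def laplacian_smooth(coords, neighbours, is_boundary, iterations=1):
    """Vertex-average Laplacian smoothing.

    coords: list of (x, y); neighbours[i]: indices of nodes joined to node i by an edge;
    is_boundary[i]: True if node i must stay fixed.
    """
    pts = [tuple(p) for p in coords]
    for _ in range(iterations):
        new_pts = list(pts)
        for i, nbrs in enumerate(neighbours):
            if is_boundary[i] or not nbrs:
                continue  # boundary (or isolated) nodes are not moved
            new_pts[i] = (
                sum(pts[j][0] for j in nbrs) / len(nbrs),
                sum(pts[j][1] for j in nbrs) / len(nbrs),
            )
        pts = new_pts  # every node in this sweep used the previous positions
    return pts
```

Because the update only averages positions, it contains no term that detects or penalises a concave element, which is consistent with the limitation noted above.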

Common approaches to the secondary optimisation of quadrilateral meshes include gradient descent and swarm intelligence algorithms. Both adjust node positions iteratively so that the mesh objective function converges towards a minimum, improving overall mesh quality. Gradient descent, however, relies heavily on local gradient information and is prone to becoming trapped in local minima. Swarm intelligence algorithms, with their multi-point parallel search and global exploration mechanisms, are considerably better at escaping local optima, which gives them an advantage over traditional optimisation methods. Some of them may still converge prematurely or become trapped in local optima when parameters are poorly configured or the problem is highly complex, but many improved swarm intelligence algorithms perform particularly well in global optimisation tasks16.

To improve the quality of quadrilateral meshes generated by traditional quadrilateral meshing algorithms and create meshes suitable for numerical simulation using the spectral element method, we propose a secondary optimisation method based on the traditional indirect meshing approach.

The contributions of this paper are as follows:

A secondary optimisation method for quadrilateral meshes based on swarm intelligence optimisation algorithms is proposed. An effective loss function is introduced to target the defects that make meshes generated by traditional meshing algorithms unsuitable for numerical simulation with the spectral element method, and a concrete framework for applying swarm intelligence optimisation algorithms to the secondary optimisation of quadrilateral meshes is set out.

Three typical swarm intelligence optimisation algorithms, the Particle Swarm Optimisation (PSO) algorithm, the Wolf Pack Algorithm (WPA) and the Firefly Algorithm (FA), are compared when applied to the secondary optimisation of quadrilateral meshes, and their performance indicators are analysed. Experiments show that all three algorithms can effectively perform the secondary optimisation and bring the meshes to a quality usable for numerical simulation with the spectral element method. WPA shows the strongest optimisation ability, trading a slight disadvantage in convergence speed for a greater improvement in mesh quality. PSO has a slight advantage in convergence speed, but the quality of its optimised meshes is lower than that of WPA. FA meets the basic needs of secondary optimisation but is inferior to the other two algorithms in both convergence speed and the quality of the optimised meshes.

Related work

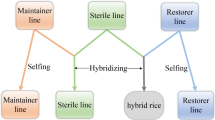

There are direct and indirect methods for generating quadrilateral meshes. The indirect method is widely used because it builds on simpler triangular mesh generation algorithms whose output has well-defined mathematical properties, so robust meshes can be constructed quickly. The indirect method first generates an initial triangular mesh and then treats it as a weighted graph in which each triangle is a graph vertex and adjacent triangles are joined by edges. The algorithm seeks a minimum-cost perfect matching in this graph and, based on the resulting matching edges, merges triangles of the triangular mesh to form a quadrilateral mesh. Because the indirect method handles intricate geometric structures well, reduces computational demands and improves meshing efficiency, it is well suited to geological models, which are often complex. However, complex geological models frequently contain features such as overthrust faults, lenticular bodies and pinch-outs, which can lead to poorly shaped quadrilateral elements. These elements require further optimisation before they can be used in numerical simulations and computations.

Frontal-Delaunay quadrilateral mesh generation algorithm based on the \(L^\infty\) norm

The input geological model often consists only of a boundary polygon, without internal control points, while the generated mesh model must be densified based on the model’s physical parameters. Consequently, the task of triangulation involves not only creating a triangulation of the input polygon but also densifying it appropriately. By applying the Frontal-Delaunay quadrilateral mesh generation algorithm based on the \(L^\infty\) norm, the model can be transformed into a denser triangular mesh, which is better suited for reorganization into quadrilaterals. This approach provides a solid foundation for the subsequent merging process to form the quadrilateral mesh.

First, each boundary of the model is discretized into an odd number of control points based on the mesh densification scale, ensuring an even number of triangles in the triangular mesh. These control points serve as vertices for the triangles, forming an initial triangular mesh using the Delaunay meshing algorithm. However, the triangular elements in the initial mesh often do not meet the densification scale requirements, making them unsuitable for merging into a quadrilateral mesh.
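The link between boundary discretisation and an even triangle count follows from Euler's formula. As a short derivation, assume the model is a triangulated planar region with \(B\) boundary vertices (and hence \(B\) boundary edges), \(I\) interior vertices, \(H\) holes, \(E\) edges and \(T\) triangles; these symbols are introduced here for illustration. Counting triangle sides (interior edges twice, boundary edges once) and applying Euler's formula for a region with \(H\) holes gives

\[
3T = 2E - B, \qquad (B + I) - E + T = 1 - H \quad\Longrightarrow\quad T = B + 2I - 2 + 2H .
\]

Because \(2I - 2 + 2H\) is even, \(T\) is even exactly when \(B\) is even, and inserting interior points during densification does not change the parity. One reading of the odd-control-point rule that is consistent with this is that each boundary curve is counted with both of its endpoints, so an odd number of control points yields an even number of segments and therefore an even \(B\).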

To densify the initial triangular mesh, the dimensionless \(L^\infty\) mesh size \({h_i}\) of each triangle \({t_i}\) is calculated, and triangles with \({h_i} < {h_{\max }} = 4/3\) are regarded as size-compliant. The formula for calculating \({h_i}\) is:

where u represents a point in the parameter plane, \({S^\prime }\) is the parametric mapping of surface S, and \(\theta \left( u \right)\) is the direction of the cross field at point u. \({R_\infty }\left( {T,\theta } \right)\) is the distance, measured in the \({L^\infty }\) norm, between the center of the circumscribed circle of triangle T and any of its three vertices. If the three vertices are denoted \({X_a}\left( {{x_a},{y_a}} \right)\), \({X_b}\left( {{x_b},{y_b}} \right)\) and \({X_c}\left( {{x_c},{y_c}} \right)\), then:
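The \(L^\infty\) measure behind \({R_\infty }\left( {T,\theta } \right)\) can be illustrated in a few lines. This is a minimal Python sketch, assuming the cross-field angle θ is given in radians and that the center of the \(L^\infty\) circumscribed circle has already been found; the function names `linf_distance` and `linf_radius` are hypothetical, and the full expression for \({h_i}\) is not reproduced.

```python
import math

def linf_distance(p, q, theta):
    """L-infinity distance between points p and q, measured in a frame
    whose axes are aligned with the cross field at angle theta (radians)."""
    dx, dy = q[0] - p[0], q[1] - p[1]
    c, s = math.cos(theta), math.sin(theta)
    # Components of the offset along the two cross-field directions.
    u = c * dx + s * dy
    v = -s * dx + c * dy
    return max(abs(u), abs(v))

def linf_radius(triangle, center, theta):
    """Largest L-infinity distance from a given center to the triangle's vertices.
    For a true L-infinity circumcenter the three distances coincide."""
    return max(linf_distance(center, vtx, theta) for vtx in triangle)
```

The unit "circle" of this distance is a square aligned with the cross field, which is why triangles sized with it tend to pair up into near-square quadrilaterals.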

where \(\delta \left( X \right)\) is the mesh size field specified on S, and the metric tensor M needs to be calculated in advance. It is calculated as follows:

After the mesh size \({h_i}\) has been calculated for every triangle, the triangle with the largest \({h_i}\) is selected among the triangles that are adjacent to the model boundary or to triangles that already meet the size requirement. New points are inserted at suitable locations inside it, splitting it into smaller triangles that better satisfy the size requirement and are more suitable for merging into quadrilaterals.
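The frontal selection rule can be sketched as follows. This is a hypothetical Python helper, assuming the size values \({h_i}\) have already been computed and that triangle adjacency and boundary contact are stored as plain lists; it only picks the next triangle to split and does not place the new point, which follows Remacle et al.

```python
def next_triangle_to_refine(h, adjacent, touches_boundary, h_max=4.0 / 3.0):
    """Pick the next triangle to split in a frontal densification pass.

    h[i]: dimensionless size of triangle i; adjacent[i]: indices of triangles sharing
    an edge with i; touches_boundary[i]: True if triangle i lies on the model boundary.
    Returns the index of the largest-h non-compliant triangle on the front, or None.
    """
    compliant = [value < h_max for value in h]
    best, best_h = None, float("-inf")
    for i, value in enumerate(h):
        if compliant[i]:
            continue
        # The front: triangles on the boundary or next to an already compliant triangle.
        on_front = touches_boundary[i] or any(compliant[j] for j in adjacent[i])
        if on_front and value > best_h:
            best, best_h = i, value
    return best
```

Restricting the choice to the front is what makes the refinement advance from the boundary inwards rather than splitting the globally largest triangle first.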

The placement of each inserted point follows the paper by Remacle et al.13. The process of densifying the triangular mesh is shown in Fig. 1.

Blossoms-Quad: a minimum-cost perfect-matching algorithm

Once a triangulated model that meets the size requirements has been generated, the Blossoms-Quad algorithm can be used to merge paired triangles into a quadrilateral mesh. Blossoms-Quad is based on the Blossom algorithm from graph theory, which computes a minimum-cost perfect matching in a graph in polynomial time. The triangulated mesh is converted into a weighted graph in which each vertex represents a triangle and each edge connects two adjacent triangles. Figure 2 illustrates how the triangular mesh is reorganised into a quadrilateral mesh along the matching edges found by the minimum-cost perfect matching algorithm. The cost of each edge is computed using Eq. (4):

where \(\overrightarrow{{e_{ij}}}\) is a unit vector tangent to the mesh plane, and \(\overrightarrow{{t_1}}\) and \(\overrightarrow{{t_2}}\) are two orthogonal unit vectors at the midpoint. Using the matching computed by Blossoms-Quad, each matched pair of triangles is merged across its shared edge, producing the quadrilateral mesh.
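The matching step can be illustrated on a tiny mesh. The sketch below builds the triangle adjacency (dual) graph and finds a minimum-cost perfect matching by exhaustive search over bitmasks. This search is exponential in the number of triangles and is only a stand-in for the polynomial-time Blossom algorithm; `merge_cost` is a hypothetical cost (the worst deviation of the merged quadrilateral's angles from 90°), not Eq. (4).

```python
import math
from functools import lru_cache

def dual_graph(triangles):
    """Pairs of triangles that share an edge: (t1, t2, shared_edge)."""
    first_owner, pairs = {}, []
    for t, tri in enumerate(triangles):
        for k in range(3):
            edge = frozenset((tri[k], tri[(k + 1) % 3]))
            if edge in first_owner:
                pairs.append((first_owner[edge], t, edge))
            else:
                first_owner[edge] = t
    return pairs

def merge_cost(tri_a, tri_b, edge, coords):
    """Hypothetical cost: worst deviation (degrees) from 90 degrees among the
    interior angles of the quad obtained by removing the shared edge."""
    e0, e1 = sorted(edge)
    apex_a = next(v for v in tri_a if v not in edge)
    apex_b = next(v for v in tri_b if v not in edge)
    quad = (e0, apex_a, e1, apex_b)  # the shared edge becomes the diagonal e0-e1
    worst = 0.0
    for k in range(4):
        p, q, r = coords[quad[k - 1]], coords[quad[k]], coords[quad[(k + 1) % 4]]
        u = (p[0] - q[0], p[1] - q[1])
        v = (r[0] - q[0], r[1] - q[1])
        # Unsigned angle: adequate for convex quads, under-reports a reflex angle.
        angle = math.degrees(math.atan2(abs(u[0] * v[1] - u[1] * v[0]),
                                        u[0] * v[0] + u[1] * v[1]))
        worst = max(worst, abs(angle - 90.0))
    return worst

def min_cost_perfect_matching(n, weighted_pairs):
    """Exhaustive minimum-cost perfect matching for small graphs.
    Returns (cost, matched pairs) or None when no perfect matching exists."""
    adj = {i: [] for i in range(n)}
    for a, b, w in weighted_pairs:
        adj[a].append((b, w))
        adj[b].append((a, w))

    @lru_cache(maxsize=None)
    def solve(mask):
        if mask == (1 << n) - 1:
            return 0.0, ()
        i = next(k for k in range(n) if not mask >> k & 1)  # lowest unmatched vertex
        best = None
        for j, w in adj[i]:
            if mask >> j & 1:
                continue
            sub = solve(mask | 1 << i | 1 << j)
            if sub is not None and (best is None or w + sub[0] < best[0]):
                best = (w + sub[0], ((i, j),) + sub[1])
        return best

    result = solve(0)
    return None if result is None else (result[0], list(result[1]))
```

A production implementation would replace the exhaustive search with an O(n³) blossom-based solver; the dual-graph construction and the per-pair cost stay the same.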

Methods

Swarm intelligence-based quadrilateral mesh secondary optimisation algorithm flow

For simple and regular models, the quadrilateral mesh generated using the Frontal-Delaunay quadrilateral meshing algorithm based on the \(L^\infty\) norm, combined with the Blossoms-Quad algorithm, can often directly satisfy the requirements of numerical simulation algorithms. However, when dealing with complex geological models, the resulting quadrilateral meshes may still contain a small number of concave quadrilaterals, degenerate quadrilaterals, and topology errors. Consequently, the quality of the mesh often falls short of the standards necessary for finite element analysis of the geological model, necessitating further optimisation.

When the quadrilateral generation algorithm above is applied to complex geological models, the resulting mesh may still have defects. However, every mesh element is guaranteed to have exactly four vertices, so the existing quadrilateral mesh can be optimised simply by adjusting the positions of its nodes. Repositioning selected nodes improves the overall mesh quality, allowing the optimised quadrilateral mesh to meet the requirements of spectral element method calculations.

In order to optimise the quadrilateral mesh, after the quadrilateral mesh generated by the meshing algorithm is imported, the data needs to be stored in an internal data structure for subsequent optimisation. The internal data structure is mainly composed of a mesh node list and a quadrilateral list. The information in the mesh node list includes the mesh node index, coordinates, the index of the quadrilateral to which the node belongs, and whether the node is on the model boundary. The information in the quadrilateral list includes the index of the quadrilateral and the indices of the four nodes that form the quadrilateral.
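A minimal Python sketch of this internal data structure, using dataclasses. The class and field names (`MeshNode`, `Quad`, `build_mesh`) are hypothetical, and the counter-clockwise vertex order is an assumption; the fields mirror the information listed above.

```python
from dataclasses import dataclass, field

@dataclass
class MeshNode:
    index: int
    x: float
    y: float
    quads: list[int] = field(default_factory=list)  # quadrilaterals that use this node
    on_boundary: bool = False                         # boundary nodes are never moved

@dataclass
class Quad:
    index: int
    nodes: tuple[int, int, int, int]  # node indices in cyclic order (assumed counter-clockwise)

def build_mesh(coords, quad_nodes, boundary):
    """Fill the node list and the quadrilateral list from raw arrays."""
    nodes = [MeshNode(i, x, y, on_boundary=i in boundary) for i, (x, y) in enumerate(coords)]
    quads = []
    for q, vids in enumerate(quad_nodes):
        quads.append(Quad(q, tuple(vids)))
        for v in vids:
            nodes[v].quads.append(q)  # back-reference from node to quadrilateral
    return nodes, quads
```

Storing the node-to-quadrilateral back-references makes it cheap to collect the quadrilaterals and edges around a node when its loss is evaluated.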

Next, all nodes in the mesh node list except the boundary nodes are traversed, and the distorted nodes are added to the list of nodes to be optimised. Five types of distorted node are considered: (a) nodes that cause topology errors; (b) nodes that cause concave quadrilaterals; (c) nodes that cause quadrilaterals to degenerate into triangles; (d) nodes that cause excessively large or small interior angles; and (e) nodes that cause excessively large aspect ratios. These five types are illustrated in Fig. 3. Topology errors are the most serious, followed by concave quadrilaterals. In a topology error, edges of the quadrilateral intersect one another; in a concave quadrilateral, one interior angle exceeds \(180^{\circ }\), which makes optimisation difficult. The more serious the distortion, the higher the priority of the node. The nodes in the list are used by the subsequent optimisation algorithms, and the five types are optimised in descending order of priority from (a) to (e).
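The five distortion types can be detected with elementary geometry. The sketch below is one possible implementation, assuming every quadrilateral's vertices are stored counter-clockwise; the angle limits of 30° and 150° are hypothetical placeholders, while the aspect-ratio limit of 4 comes from the loss criteria in this section. It returns only the most severe type, matching the (a)-to-(e) priority order.

```python
import math

def _turn(o, a, b):
    """z-component of (a - o) x (b - o); positive for a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def _segments_cross(p1, p2, p3, p4):
    """True if segments p1p2 and p3p4 properly intersect."""
    return (_turn(p3, p4, p1) * _turn(p3, p4, p2) < 0 and
            _turn(p1, p2, p3) * _turn(p1, p2, p4) < 0)

def classify_quad(pts, min_angle=30.0, max_angle=150.0, max_ratio=4.0, rel_tol=1e-9):
    """Most severe distortion type ('a'..'e') of a quad whose four vertices are
    listed counter-clockwise, or None if the quad passes every check."""
    lengths = [math.dist(pts[k], pts[(k + 1) % 4]) for k in range(4)]
    # (a) topology error: a pair of opposite edges intersect (bow-tie shape)
    if (_segments_cross(pts[0], pts[1], pts[2], pts[3]) or
            _segments_cross(pts[1], pts[2], pts[3], pts[0])):
        return "a"
    turns = [_turn(pts[k - 1], pts[k], pts[(k + 1) % 4]) for k in range(4)]
    tol = rel_tol * max(lengths) ** 2
    # (b) concave: a clockwise turn at some vertex of a counter-clockwise quad
    if any(t < -tol for t in turns):
        return "b"
    # (c) degenerate: a straight or zero-length corner, i.e. a triangle-like quad
    if any(abs(t) <= tol for t in turns):
        return "c"
    # (d) interior angles outside [min_angle, max_angle]
    for k in range(4):
        p, q, r = pts[k - 1], pts[k], pts[(k + 1) % 4]
        u = (p[0] - q[0], p[1] - q[1])
        v = (r[0] - q[0], r[1] - q[1])
        angle = math.degrees(math.atan2(abs(u[0] * v[1] - u[1] * v[0]),
                                        u[0] * v[0] + u[1] * v[1]))
        if not min_angle <= angle <= max_angle:
            return "d"
    # (e) aspect ratio: longest edge / shortest edge above max_ratio
    if max(lengths) / min(lengths) > max_ratio:
        return "e"
    return None
```

A node would then inherit the most severe type among the quadrilaterals that share it, which gives the priority used when sorting the list of nodes to be optimised.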

To optimise erroneous nodes, a method for calculating their loss value must first be established. In this study, the loss value is a quantitative measure of mesh quality during the secondary optimisation of quadrilateral meshes with swarm intelligence algorithms. It serves as the objective function of the optimisation: the higher the loss value, the poorer the mesh quality, so minimising the loss value improves the quality of the mesh elements. The loss criteria used in this paper are as follows. If a quadrilateral has a topology error, meaning that two of its four edges intersect, its loss value is TopologyErrorLoss. If the quadrilateral is concave, its loss value is ConcaveErrorLoss. If the quadrilateral has excessively large or small interior angles, its loss value is AngleErrorLoss. If the ratio of its longest side to its shortest side exceeds 4, its loss value is LenErrorLoss. Nodes in these four situations must be optimised, because neglecting them would severely compromise the accuracy of spectral element forward modelling. Fixed maximum loss values are therefore assigned to the four conditions in descending order, from TopologyErrorLoss down to LenErrorLoss, so that the optimiser handles more severe geometric deformations first. In all other cases, the loss value of an individual quadrilateral is determined by Eq. (5).

where \(los{s_{quad}}\) is the loss value of a single quadrilateral, \(angl{e_i}\) is its i-th interior angle, \(le{n_i}\) is the length of its i-th edge taken in cyclic order, and \(\frac{{le{n_{i + 2}}}}{{le{n_i}}}\) is the ratio of two opposite sides of the quadrilateral.
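The loss criteria can be sketched in Python as follows. The penalty magnitudes are hypothetical (the text only requires them to be large and in descending order), and because Eq. (5) is not reproduced here, the smooth branch is an assumed stand-in built from the same ingredients: the interior angles and the opposite-side ratios \(le{n_{i + 2}}/le{n_i}\), with indices taken modulo 4.

```python
# Hypothetical penalty values; only their large size and descending order matter.
TOPOLOGY_ERROR_LOSS = 1e6
CONCAVE_ERROR_LOSS = 1e5
ANGLE_ERROR_LOSS = 1e4
LEN_ERROR_LOSS = 1e3

PENALTIES = {
    "topology": TOPOLOGY_ERROR_LOSS,
    "concave": CONCAVE_ERROR_LOSS,
    "angle": ANGLE_ERROR_LOSS,
    "length": LEN_ERROR_LOSS,
}

def quad_loss(condition, angles, lengths):
    """Loss of one quadrilateral.

    condition: a key of PENALTIES, or None for a quad with no hard error.
    angles: four interior angles in degrees; lengths: four edge lengths in cyclic order.
    The smooth branch is an assumed stand-in for Eq. (5), not the paper's formula.
    """
    if condition is not None:
        return PENALTIES[condition]
    angle_term = sum(((a - 90.0) / 90.0) ** 2 for a in angles)
    ratio_term = sum((lengths[(i + 2) % 4] / lengths[i] - 1.0) ** 2 for i in range(4))
    return angle_term + ratio_term
```

Keeping the smooth branch far below every penalty ensures that removing a hard error always lowers the loss more than any amount of fine-tuning of an already valid quadrilateral.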

The loss value of a node is the sum of the loss values of all quadrilaterals that have the node as a vertex, plus the variance of the lengths of all edges that have the node as an endpoint:

where \(los{s_{node}}\) is the loss value of the node, n is the number of quads with the node as a vertex, \(los{s_{qua{d_i}}}\) is the loss value of the i-th quadrilateral, m is the number of edges with the node as an endpoint, and \(le{n_j}\) and \(le{n_k}\) are the lengths of the j-th and k-th edges.
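A possible reading of the node loss in Python, assuming the "variance" is the population variance of the incident edge lengths. The second helper shows the identity \(\mathrm{Var} = \frac{1}{2m^2}\sum_j\sum_k (le{n_j} - le{n_k})^2\), which may explain why the definition refers to pairs of edges j and k; the exact normalisation in Eq. (6) is not reproduced here, and both function names are hypothetical.

```python
from statistics import pvariance

def node_loss(incident_quad_losses, incident_edge_lengths):
    """Sum of the losses of the quads that share the node plus the population
    variance of the lengths of the edges that end at the node."""
    spread = pvariance(incident_edge_lengths) if incident_edge_lengths else 0.0
    return sum(incident_quad_losses) + spread

def pairwise_variance(lengths):
    """The same population variance written as a double sum over edges j and k."""
    m = len(lengths)
    return sum((lj - lk) ** 2 for lj in lengths for lk in lengths) / (2 * m * m)
```

The variance term pulls a node towards positions where its incident edges have similar lengths, even when every surrounding quadrilateral is already valid.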

Before a node is optimised, its solution space must be determined and the loss value at its initial position calculated with the loss function of the optimisation objective. In Fig. 4, the region enclosed by the dotted line is the solution space of the mesh node drawn as a hollow circle. When the positions of mesh nodes are optimised, a node must not be moved outside its solution space; otherwise, new errors would be introduced.
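A sketch of the solution-space constraint, assuming the solution space is the polygon formed by the outer ring of the quadrilaterals around the node (the dotted region in Fig. 4 appears to be of this kind). A candidate position is accepted only if it lies inside that polygon, and the bounding box gives a sampling range for initialising search nodes. All names are hypothetical. Being inside the ring is a necessary rather than sufficient condition when the ring is non-convex, so the loss function still has to catch any remaining concave elements.

```python
def point_in_polygon(pt, polygon):
    """Even-odd ray-casting test; points exactly on an edge may go either way."""
    x, y = pt
    inside = False
    n = len(polygon)
    for k in range(n):
        (x1, y1), (x2, y2) = polygon[k], polygon[(k + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal line through pt
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def solution_space_box(polygon):
    """Axis-aligned bounds (x_min, x_max, y_min, y_max) of the solution space."""
    xs = [p[0] for p in polygon]
    ys = [p[1] for p in polygon]
    return min(xs), max(xs), min(ys), max(ys)

def accept_move(candidate, current, polygon):
    """Keep a candidate position only if it stays inside the solution space."""
    return candidate if point_in_polygon(candidate, polygon) else current
```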

The overall process of secondary optimisation of the quadrilateral mesh is shown in Fig. 5.

In this secondary optimisation algorithm for quadrilateral meshes, swarm intelligence algorithms are well suited to optimising the positions of the mesh nodes, and they optimise most models well. Even when, for a complex model, the optimisation occasionally fails to converge fully within the specified maximum number of iterations, only a single-digit number of nodes remain unoptimised. After the optimisation step size and the maximum number of iterations are adjusted according to the mesh density around these remaining nodes, all erroneous nodes are successfully optimised. Swarm intelligence optimisation algorithms are bio-inspired heuristics that mainly simulate the collective behaviour of insects, herds, bird flocks and other animal groups. Such groups search for food cooperatively: each member continually adjusts its search direction by learning from its own experience and from that of the other members. The distinguishing feature of swarm intelligence optimisation algorithms is that the population searches collaboratively, using its collective intelligence to find the optimal solution in the solution space.
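The overall flow can be summarised as a small driver loop. This is a hedged sketch of one plausible reading of Fig. 5, with hypothetical callbacks: `severity` ranks a node by its distortion type (0 for type a through 4 for type e, or None when the node is fine), and `optimise_node` runs one of the swarm optimisers on that node. Nodes left over after `max_passes` correspond to the few unoptimised points mentioned above, which would then be rerun with a tuned step size and iteration limit.

```python
def secondary_optimisation(node_ids, severity, optimise_node, max_passes=10):
    """Optimise distorted interior nodes, most severe first, until none remain.

    severity(node) -> rank 0..4 for distortion types (a)..(e), or None if the node is fine.
    optimise_node(node) -> moves that node in place (e.g. with FA, WPA or PSO).
    Returns the nodes that are still distorted after max_passes passes.
    """
    for _ in range(max_passes):
        pending = [v for v in node_ids if severity(v) is not None]
        if not pending:
            return []
        # Severity is re-evaluated every pass because moving a node changes its neighbours.
        for v in sorted(pending, key=severity):
            optimise_node(v)
    return [v for v in node_ids if severity(v) is not None]
```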

With the loss function and the overall optimisation process of the proposed secondary optimisation algorithm in place, three swarm intelligence algorithms are selected to optimise the erroneous nodes: the firefly algorithm, which simulates insects; the wolf pack algorithm, which simulates predatory beasts; and the particle swarm optimisation algorithm, which simulates bird flocks.

Firefly algorithm

The Firefly Algorithm (FA) is a metaheuristic proposed by the British scholar Yang in 200817. It optimises by simulating the idealised mutual attraction between fireflies in nature. Owing to its simple operation, small number of tunable parameters and good performance, it has attracted wide attention from researchers since it was proposed and has been applied in computer science, the solution of complex equations, and structural and engineering optimisation. Optimising a quadrilateral mesh node can be regarded as searching for the position with the smallest loss value for that node. The candidate positions are treated as fireflies: a set of search nodes is randomly initialised in the solution space of the node currently being optimised, and their positions are then refined. The parameters set at the start of the algorithm are the number of search nodes n, the maximum number of searches \({k_{\max }}\), the light attenuation coefficient \(\gamma\), the random step factor \(\alpha\) and the attraction constant \(\beta\). The search nodes move according to the following rules:

If the loss value corresponding to the position of each search node i is \(los{s_i}\), then the brightness of each search node \({l_i}\) is determined by Eq. (7).

For each pair of search nodes i and j, if search node j is brighter than search node i, search node i is attracted towards search node j. The distance and direction of this move depend on the attractiveness \({\beta _{ij}}\) of search node j to search node i, calculated by Eq. (8):

The coordinates of search node i in the solution space at time \(t+1\), after it moves towards search node j, are given by Eq. (9):

where \(\alpha\) is the random step factor and rand is a random number in [0,1]. After all search nodes have been moved and updated in turn, if the maximum number of searches has been reached, the current node to be optimised is moved to the position of the brightest search node; otherwise, the next round of search begins.
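Because Eqs. (7)-(9) are not reproduced here, the sketch below uses the standard firefly forms from Yang's formulation as assumptions: brightness \(l_i = -los{s_i}\), attractiveness \(\beta_0 e^{-\gamma r_{ij}^2}\) and a random step \(\alpha(\mathrm{rand} - 0.5)\) scaled by the span of the solution space. The solution space is simplified to an axis-aligned box, and, unlike the textbook version, the brightest firefly is left in place so the best position found is never lost.

```python
import math
import random

def firefly_optimise(loss, bounds, n=15, k_max=30, gamma=1.0, alpha=0.2, beta0=1.0, seed=None):
    """Firefly search for one mesh node inside a box (x_min, x_max, y_min, y_max).
    Returns (best position, its loss)."""
    rng = random.Random(seed)
    x_min, x_max, y_min, y_max = bounds
    span = max(x_max - x_min, y_max - y_min)

    def clip(x, y):
        return min(max(x, x_min), x_max), min(max(y, y_min), y_max)

    swarm = [(rng.uniform(x_min, x_max), rng.uniform(y_min, y_max)) for _ in range(n)]
    light = [-loss(p) for p in swarm]  # lower loss means a brighter firefly
    for _ in range(k_max):
        for i in range(n):
            for j in range(n):
                if light[j] <= light[i]:
                    continue  # i only moves towards strictly brighter fireflies
                (xi, yi), (xj, yj) = swarm[i], swarm[j]
                beta = beta0 * math.exp(-gamma * ((xi - xj) ** 2 + (yi - yj) ** 2))
                swarm[i] = clip(xi + beta * (xj - xi) + alpha * span * (rng.random() - 0.5),
                                yi + beta * (yj - yi) + alpha * span * (rng.random() - 0.5))
                light[i] = -loss(swarm[i])
    best = max(range(n), key=light.__getitem__)
    return swarm[best], -light[best]
```

For example, `firefly_optimise(lambda p: (p[0] - 0.3) ** 2 + (p[1] - 0.7) ** 2, (0.0, 1.0, 0.0, 1.0), seed=1)` searches the unit square for a toy loss whose minimum is at (0.3, 0.7); in the mesh setting, `loss` would be the node loss evaluated with the node moved to the candidate position.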

Wolf pack algorithm

The Wolf Pack Algorithm (WPA) is a swarm intelligence optimisation algorithm inspired by the predatory behaviour of wolves, proposed by Wu Husheng et al. in 2013. It divides the pack into three types of artificial wolves with different responsibilities, namely the head wolf, scout wolves and attack wolves, and solves various types of optimisation problems by simulating the social structure and predation strategy of a wolf pack18.

In quadrilateral mesh secondary optimisation, optimising a node's position can be regarded as the hunting process of the wolf pack, with the optimal position playing the role of the prey. The overall strategy for applying the wolf pack algorithm to quadrilateral mesh secondary optimisation is as follows:

The parameters to be initialised are the number of search nodes n, the maximum number of searches \({k_{\max }}\), the scout wolf proportion factor \(\alpha\), the maximum number of wandering steps \({T_{\max }}\), the distance determination factor w, the step size factor S, the update scale factor \(\beta\), the maximum wandering depth \({h_{\max }}\) and the minimum wandering depth \({h_{\min }}\). Each search node is randomly initialised in the solution space of the node to be optimised, and its loss value \(los{s_i}\) is calculated. The search nodes are then divided into three types of artificial wolf according to their loss values: the search node with the lowest loss value becomes the head wolf node; the \({\mathrm{{S}}_{\mathrm{{num}}}}\) search nodes with the next-lowest loss values become scout wolf nodes, where \({\mathrm{{S}}_{\mathrm{{num}}}}\) is a random integer in \(\left[ {\frac{n}{{\alpha + 1}},\frac{n}{\alpha }} \right]\); and the remaining search nodes become attack wolf nodes.

After all search nodes have been assigned their roles, the scout wolf nodes first carry out the wandering behaviour. If the loss value \(los{s_i}\) at the current position of scout wolf node i is less than \(los{s_{head}}\), then \(los{s_{head}}\) is set to \(los{s_i}\), the scout becomes the head wolf node, and the summoning behaviour starts. Otherwise, scout wolf node i takes one trial step of size \({S_a}\) in each of h directions; after each step, the loss value at the new position is calculated and recorded, and the scout returns to its original position. The coordinates of scout wolf node i in the solution space after a step in the p-th direction (p = 1, 2, ..., h) are given by Eq. (10):

Here h is a random integer in [\({h_{\min }}\), \({h_{\max }}\)]. After all h directions have been tried, the scout steps forward in the direction with the lowest loss value. This behaviour is repeated for all scout wolf nodes until a scout finds \(los{s_i}<los{s_{head}}\) or the number of wandering steps T reaches the maximum \({T_{\max }}\), after which the pack moves on to the summoning behaviour.

During the summoning behaviour, all attack wolf nodes rapidly approach the head wolf node with a relatively large step \({S_b}\); the position of attack wolf node i in the solution space after the \((k+1)\)-th move is given by Eq. (11):

where \(\left( {x_h^k,y_h^k} \right)\) is the position of the head wolf node in the solution space at the k-th move. If, while moving, attack wolf node i finds \(los{s_i}<los{s_{head}}\), it becomes the head wolf node and the summoning behaviour restarts; otherwise, attack wolf node i keeps moving until its distance to the head wolf node is less than \({d_{near}}\). Once all attack wolves are within \({d_{near}}\) of the head wolf, the pack switches to the siege behaviour. With \({x_{\max }}\) and \({x_{\min }}\) denoting the upper and lower limits of the solution space along the x-axis, and \({y_{\max }}\) and \({y_{\min }}\) those along the y-axis, \({d_{near}}\) is calculated by Eq. (12):

In the siege behaviour, all search nodes except the head wolf node move towards the head wolf node, simulating the pack encircling its prey. The position of search node i after the \((k+1)\)-th move is calculated by Eq. (13):

where \(\lambda\) is a random number uniformly distributed in [-1,1] and \({\mathrm{{S}}_\mathrm{{c}}}\) is the step size used in the siege behaviour. The three step sizes are related by Eq. (14):

In the siege behaviour, a search node's position is updated only if the step lowers its loss value; otherwise, the node stays where it is.

The end of the siege behaviour completes one round of the pack's search. The R search nodes with the highest loss values are then removed, R new search nodes are randomly generated in the solution space, and the pack is re-divided into roles; this maintains the diversity of the search nodes. Here R is a random integer in \(\left[ {\frac{n}{{2 \times \beta }},\frac{n}{\beta }} \right]\). If the maximum number of searches has been reached, the current node to be optimised is moved to the position of the head wolf node; otherwise, the next round of optimisation is performed.
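The wolf pack procedure can be condensed into a single Python function. Because Eqs. (10)-(14) are not reproduced here, the move rules use the common WPA forms as assumptions: \(S_a = S_b/2 = 2S_c\), with \(S_a\) equal to the solution-space span divided by the step factor S; \(d_{near} = ((x_{\max}-x_{\min}) + (y_{\max}-y_{\min}))/(2w)\); h wandering directions spread evenly around a circle; and a siege move of \(\lambda S_c |G - x|\) per coordinate, where G is the head wolf position. The solution space is simplified to a box, the summoning step is capped at the remaining distance so attack wolves do not overshoot, and later attackers simply follow whichever wolf is currently the head. The default parameter values are illustrative only.

```python
import math
import random

def wolf_pack_optimise(loss, bounds, n=20, k_max=20, alpha=4, t_max=10, w=10.0,
                       s=20.0, beta=6, h_min=2, h_max=6, seed=None):
    """Wolf pack search for one mesh node inside a box (x_min, x_max, y_min, y_max)."""
    rng = random.Random(seed)
    x_min, x_max, y_min, y_max = bounds
    rx, ry = x_max - x_min, y_max - y_min
    step_a = max(rx, ry) / s                  # wandering step S_a
    step_b, step_c = 2 * step_a, step_a / 2   # summoning step S_b and siege step S_c
    d_near = (rx + ry) / (2 * w)

    def clip(x, y):
        return min(max(x, x_min), x_max), min(max(y, y_min), y_max)

    def rand_int(lo, hi):  # random integer in [lo, hi], bounds rounded inwards
        lo, hi = math.ceil(lo), math.floor(hi)
        return rng.randint(lo, max(lo, hi))

    def random_wolf():
        return rng.uniform(x_min, x_max), rng.uniform(y_min, y_max)

    wolves = [random_wolf() for _ in range(n)]
    losses = [loss(p) for p in wolves]
    for _ in range(k_max):
        order = sorted(range(n), key=losses.__getitem__)
        head = order[0]
        s_num = rand_int(n / (alpha + 1), n / alpha)
        scouts, attackers = order[1:1 + s_num], order[1 + s_num:]

        # Wandering: each scout probes h directions and steps to the best probe.
        found = False
        for _ in range(t_max):
            for i in scouts:
                h = rng.randint(h_min, h_max)
                x, y = wolves[i]
                probes = [clip(x + step_a * math.cos(2 * math.pi * p / h),
                               y + step_a * math.sin(2 * math.pi * p / h))
                          for p in range(1, h + 1)]
                losses[i], wolves[i] = min((loss(q), q) for q in probes)
                if losses[i] < losses[head]:
                    head, found = i, True
                    break
            if found:
                break

        # Summoning: attack wolves run towards the head wolf with the large step.
        for i in attackers:
            for _ in range(100):  # safety cap on the number of moves
                (gx, gy), (x, y) = wolves[head], wolves[i]
                dist = math.hypot(gx - x, gy - y)
                if i == head or dist <= d_near:
                    break
                step = min(step_b, dist)  # never overshoot the head wolf
                wolves[i] = clip(x + step * (gx - x) / dist, y + step * (gy - y) / dist)
                losses[i] = loss(wolves[i])
                if losses[i] < losses[head]:
                    head = i  # this attacker becomes the new head wolf

        # Siege: a random step whose size grows with the distance to the head wolf,
        # kept only if it lowers that wolf's loss; the head itself stays put.
        gx, gy = wolves[head]
        for i in range(n):
            if i == head:
                continue
            x, y = wolves[i]
            cand = clip(x + rng.uniform(-1, 1) * step_c * abs(gx - x),
                        y + rng.uniform(-1, 1) * step_c * abs(gy - y))
            cand_loss = loss(cand)
            if cand_loss < losses[i]:
                wolves[i], losses[i] = cand, cand_loss

        # Renewal: replace the R worst wolves with random ones to keep diversity.
        r = rand_int(n / (2 * beta), n / beta)
        for i in sorted(range(n), key=losses.__getitem__)[n - r:]:
            wolves[i] = random_wolf()
            losses[i] = loss(wolves[i])

    best = min(range(n), key=losses.__getitem__)
    return wolves[best], losses[best]
```

Since the head wolf never moves and only the worst wolves are renewed, the best loss in the pack can only decrease from round to round.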

Particle swarm optimisation algorithm

The particle swarm optimisation algorithm is a classic swarm intelligence optimisation technique that simulates information sharing and collaboration in bird flocks to find better solutions19. When optimising the positions of nodes in a quadrilateral mesh, a node position can be treated as a particle position, and the search for a better node position becomes the search by a particle swarm for a better solution in two-dimensional space. To use the particle swarm optimisation algorithm for the secondary optimisation of mesh nodes, the algorithm parameters are first initialised: the number of search nodes nop, the maximum number of searches moi, the inertia factor \(\omega\), and the learning factors \({c_1}\) and \({c_2}\). When a particular node i is optimised, all search nodes are first assigned random initial positions and velocities within the solution space of node i, and the global and local optimal positions are set to the position of the search node with the smallest current loss value.

In each round of PSO optimisation, the velocity of each search node i in the population is updated stochastically according to the learning factors, so that the node is drawn toward the local optimal position and the global optimal position with different weights; its position is then updated using the new velocity. The loss value of search node i at its new position is computed, and if it is smaller, the local optimal position is replaced by the current position. After all search nodes have been updated, if the loss value of the local optimal position in this round is smaller than that of the global optimal position, the global optimal position is replaced by the local optimal position. The velocity and position updates for search node i are given by Eqs. (15) and (16), respectively.
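Equations (15) and (16) are not reproduced in this section. For reference, the widely used inertia-weight form of the PSO update, written in this section's notation, is shown below as an assumed standard form; the authors' exact equations may differ in detail (for example, in whether a separate random number is drawn for each term). Here \(v_{i,x}^{t}\), \(v_{i,y}^{t}\) and \((x_i^t, y_i^t)\) denote the velocity components and position of search node i at iteration t.

```latex
\begin{aligned}
v_{i,x}^{t+1} &= \omega\, v_{i,x}^{t} + c_1\,\mathrm{rand}\,\bigl(p_{best}^{x} - x_i^{t}\bigr) + c_2\,\mathrm{rand}\,\bigl(g_{best}^{x} - x_i^{t}\bigr),\\
v_{i,y}^{t+1} &= \omega\, v_{i,y}^{t} + c_1\,\mathrm{rand}\,\bigl(p_{best}^{y} - y_i^{t}\bigr) + c_2\,\mathrm{rand}\,\bigl(g_{best}^{y} - y_i^{t}\bigr),\\
x_i^{t+1} &= x_i^{t} + v_{i,x}^{t+1}, \qquad y_i^{t+1} = y_i^{t} + v_{i,y}^{t+1}.
\end{aligned}
```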

In Eqs. (15) and (16), rand is a random number in [0, 1], \(p_{best}^x\) and \(p_{best}^\mathrm{{y}}\) are the horizontal and vertical coordinates of the local optimal position, and \(g_{best}^x\) and \(g_{best}^\mathrm{{y}}\) are the horizontal and vertical coordinates of the global optimal position. If the maximum number of searches has been reached after a round of optimisation, the node being optimised is moved to the global optimal position; otherwise, the next round of optimisation is performed.
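The per-node PSO loop described above can be sketched in Python as follows. It is a minimal sketch, not the authors' code: the rectangular solution space `bounds`, the clamping of positions to it, the initial velocity range (±10% of the box size), the default values of `omega`, `c1` and `c2`, and the function name `pso_optimise_node` are assumptions; only nop = 50 and moi = 200 follow the experimental setup. Unlike the description above, each search node's local optimum is initialised to its own starting position, which is the usual PSO convention.

```python
import random


def pso_optimise_node(loss_fn, bounds, nop=50, moi=200, omega=0.7,
                      c1=1.5, c2=1.5, seed=None):
    """Optimise one mesh node position with PSO (sketch).

    loss_fn maps an (x, y) position to a loss value; bounds is an assumed
    rectangular solution space (xmin, xmax, ymin, ymax).
    Returns (best_position, best_loss).
    """
    rng = random.Random(seed)
    xmin, xmax, ymin, ymax = bounds
    limits = ((xmin, xmax), (ymin, ymax))
    # Random initial positions inside the box; small random initial velocities
    pos = [[rng.uniform(lo, hi) for lo, hi in limits] for _ in range(nop)]
    vel = [[rng.uniform(-(hi - lo), hi - lo) * 0.1 for lo, hi in limits]
           for _ in range(nop)]
    pbest = [p[:] for p in pos]                     # local optimal positions
    pbest_loss = [loss_fn(p) for p in pos]
    g = min(range(nop), key=pbest_loss.__getitem__)
    gbest, gbest_loss = pbest[g][:], pbest_loss[g]  # global optimal position

    for _ in range(moi):
        for i in range(nop):
            for d, (lo, hi) in enumerate(limits):
                vel[i][d] = (omega * vel[i][d]
                             + c1 * rng.random() * (pbest[i][d] - pos[i][d])
                             + c2 * rng.random() * (gbest[d] - pos[i][d]))
                # Move and keep the search node inside the solution space
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            loss = loss_fn(pos[i])
            if loss < pbest_loss[i]:
                pbest[i], pbest_loss[i] = pos[i][:], loss
        # After the whole swarm has moved, update the global optimum
        g = min(range(nop), key=pbest_loss.__getitem__)
        if pbest_loss[g] < gbest_loss:
            gbest, gbest_loss = pbest[g][:], pbest_loss[g]

    # The node being optimised is then moved to gbest
    return tuple(gbest), gbest_loss
```

With a toy quadratic loss whose minimum lies at (1, 2), `pso_optimise_node(lambda p: (p[0] - 1) ** 2 + (p[1] - 2) ** 2, (0, 3, 0, 3), seed=1)` returns a position very close to (1, 2).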

Experiments

Experimental model

To investigate the effectiveness of swarm intelligence algorithms in mesh quality optimisation for geological models, we select two representative cases for experimental validation:

- bgpModel: a complex model with dense, continuous undulations and numerous fractures, which rigorously tests the algorithm under strongly heterogeneous conditions;
- nanpuModel: a simpler model with abundant fractures but relatively regular block geometries, which serves as a comparative benchmark for evaluating algorithm performance at different levels of complexity.

The P-wave velocity distributions of these models are illustrated in Figs. 6 and 7, respectively.

Details of bgpModel and nanpuModel are given in Tables 1 and 2.

Experimental setup

First, each geological model is meshed with the Frontal-Delaunay and Blossom-Quad algorithms to generate a quadrilateral mesh. During meshing, the edge length of the quadrilateral elements must satisfy the dispersion condition. Equation (17) gives the edge length of the quadrilateral mesh elements.

where \({d_i}\) denotes the edge length of the mesh elements in geological block i, \(V_s^i\) is the P-wave velocity of block i, and \({f_0}\) is the dominant frequency of the simulation, set to 55 Hz in the experiments.

In the discretisation of geological models, quadrilateral elements with abnormal interior angles (too small or too large) significantly degrade the stability of spectral element simulations. Based on numerical experiments, we define angles \(\theta < 20^\circ\) as too small and \(\theta > 160^\circ\) as too large. For mesh optimisation, we define loss values for four categories of critical geometric distortion, as listed in Table 3. The parameters of the optimisation algorithms are listed in Table 4.

- All algorithms run 200 iterations to ensure thorough exploration of the search space, with a uniform population size of 50 for cross-algorithm comparability.
- The extreme loss values follow the “prioritise severe distortion” principle: their differences in magnitude ensure that the optimiser identifies the distortion level correctly.

Note: The 9-digit loss values are designed to: (1) substantially exceed normal optimisation targets, (2) clearly distinguish distortion levels through orders of magnitude, and (3) prevent interference from regular optimisation objectives in severe distortion detection.
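The angle thresholds and a tiered loss in the spirit of Table 3 can be sketched in Python as follows. This is a minimal sketch under explicit assumptions: Table 3 is not reproduced in this section, so the four categories used here (concave, degenerate, too large, too small), their 9-digit penalty values, the 179° tolerance for a quadrilateral collapsing into a triangle, and the angle-based regular term are all hypothetical; only the 20° and 160° thresholds come from the text. Vertices are assumed to be listed in order around a simple (non-self-intersecting) quadrilateral.

```python
import math

MIN_ANGLE, MAX_ANGLE = 20.0, 160.0  # thresholds from the text (degrees)
DEGENERATE_ANGLE = 179.0            # hypothetical: quad collapsed to a triangle
# Hypothetical 9-digit penalties standing in for Table 3, most severe first
PENALTY = {"concave": 900_000_000, "degenerate": 700_000_000,
           "too_large": 500_000_000, "too_small": 300_000_000}


def interior_angles(quad):
    """Interior angles (degrees) of a simple quadrilateral given as four (x, y)
    vertices in order; a reflex angle (> 180) marks a concave element."""
    area2 = sum(quad[k][0] * quad[(k + 1) % 4][1] - quad[(k + 1) % 4][0] * quad[k][1]
                for k in range(4))
    orient = 1.0 if area2 >= 0 else -1.0  # +1 for counter-clockwise ordering
    angles = []
    for k in range(4):
        (px, py), (cx, cy), (nx, ny) = quad[k - 1], quad[k], quad[(k + 1) % 4]
        ax, ay, bx, by = px - cx, py - cy, nx - cx, ny - cy
        unsigned = math.degrees(math.atan2(abs(ax * by - ay * bx), ax * bx + ay * by))
        turn = (cx - px) * (ny - cy) - (cy - py) * (nx - cx)  # turn direction at k
        angles.append(360.0 - unsigned if turn * orient < 0 else unsigned)
    return angles


def classify(quad):
    """Return the most severe distortion category (or None) and the angles."""
    angles = interior_angles(quad)
    if any(a > 180.0 for a in angles):
        return "concave", angles
    if any(a >= DEGENERATE_ANGLE for a in angles):
        return "degenerate", angles
    if any(a > MAX_ANGLE for a in angles):
        return "too_large", angles
    if any(a < MIN_ANGLE for a in angles):
        return "too_small", angles
    return None, angles


def quad_loss(quad):
    label, angles = classify(quad)
    if label is not None:
        return PENALTY[label]
    # Regular objective for valid elements: keep interior angles close to 90 degrees
    return sum((a - 90.0) ** 2 for a in angles)


def node_loss(incident_quads):
    """Loss of a node: sum over the quadrilaterals that share it."""
    return sum(quad_loss(q) for q in incident_quads)
```

For an element whose angles all lie in [20°, 160°], the regular term is at most 4 × 70² = 19,600, so any distorted element outweighs it by several orders of magnitude, which mirrors the “prioritise severe distortion” principle.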

Experimental results and analysis

Tables 5 and 6 list the distribution of distorted nodes in the unoptimised meshes of bgpModel and nanpuModel.

Figure 8 compares the defective regions of the bgpModel mesh before and after optimisation. Area A contains excessively small angles; area B contains quadrilaterals that have degenerated into triangles; area C contains topology errors and concave quadrilaterals; and area D contains quadrilaterals with excessively large aspect ratios. Because nanpuModel is structurally simpler than bgpModel, its initial mesh contains only three types of defective element, as shown in Fig. 9: area A contains quadrilaterals that have degenerated into triangles; area B contains excessively small angles; and area C contains quadrilaterals with excessively large opposite-side ratios. As the figures show, the unoptimised initial meshes of both models contain many defects that would prevent the spectral element method from performing the numerical simulation correctly. After optimisation, all of these elements become valid quadrilaterals.

The experiments were run on a machine with 32 GB of memory and an Intel Core i5-13600KF CPU (base clock 3.50 GHz), with the processor working in single-threaded mode. Each algorithm optimised bgpModel ten times, and the average times per optimisation are as follows. PSO converges fastest, taking 324.06 s on average, followed by WPA at 376.05 s. FA takes the longest, 518.69 s on average, because of its fully interconnected structure: during optimisation each search node interacts with every other search node, which raises the computational cost and can cause oscillations that slow convergence.
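The source of FA's extra cost can be seen in a sketch of one sweep of the textbook firefly update (Yang18): every search node is compared with every other node, so a sweep involves N(N − 1) pairwise comparisons, versus N individual updates per PSO iteration. The sketch is illustrative, not the authors' implementation; the parameters `beta0`, `gamma` and `alpha` and the function name `firefly_sweep` are assumptions.

```python
import math
import random


def firefly_sweep(pos, loss_fn, beta0=1.0, gamma=1.0, alpha=0.05, rng=None):
    """One sweep of the standard firefly update on 2D positions (in place).

    Each node i is compared with every other node j and moves toward j whenever
    j is brighter (has a lower loss). Returns the number of pairwise comparisons.
    """
    rng = rng or random.Random()
    n = len(pos)
    losses = [loss_fn(p) for p in pos]
    comparisons = 0
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            comparisons += 1
            if losses[j] < losses[i]:
                (xi, yi), (xj, yj) = pos[i], pos[j]
                # Attractiveness decays with squared distance
                beta = beta0 * math.exp(-gamma * ((xi - xj) ** 2 + (yi - yj) ** 2))
                pos[i] = (xi + beta * (xj - xi) + alpha * (rng.random() - 0.5),
                          yi + beta * (yj - yi) + alpha * (rng.random() - 0.5))
                losses[i] = loss_fn(pos[i])  # every move costs a new evaluation
    return comparisons
```

With the population size of 50 used in the experiments, one sweep performs 2,450 comparisons, and every move also triggers a fresh loss evaluation.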

The preceding comparison of the optimisation results for the two models demonstrates that swarm intelligence algorithms are effective for mesh optimisation. To analyse the performance differences among the three algorithms further, we focus on the more complex bgpModel, whose initial mesh contains more diverse and more numerous defects, and quantitatively evaluate the quality of its optimised meshes. Because the meshes contain a very large number of quadrilaterals, and the defect-free part of each mesh is already of sufficient quality and is left unchanged by the secondary optimisation, we compare only the quality metrics of the elements whose nodes were moved during optimisation.

The distribution of interior angles is an important comparison metric. Quadrilaterals closer to squares are preferred, so the more interior angles lie close to \(90^{\circ }\), the more suitable the mesh is for the spectral element method. Figure 10 shows the angle distribution of the optimised quadrilaterals in the geological model mesh. After optimisation by all three algorithms, none of these quadrilaterals has an angle greater than \(160^{\circ }\) or less than \(20^{\circ }\). The difference between the maximum and minimum angles is similar for the three algorithms. The angles after PSO and FA optimisation are very similar, whereas WPA performs slightly better, producing noticeably more angles close to \(90^{\circ }\) than the other two algorithms.

A large aspect ratio (the ratio of the longest to the shortest side of a quadrilateral) can also cause numerical instability and long computation times in spectral element simulations. As shown in Table 7, all three algorithms optimise the aspect ratio well, bringing it to within 4.0. WPA has a slight advantage in the 1.0 to 2.0 range, although it is not significantly better than PSO. The two are almost identical in the 2.0 to 3.0 range, and the PSO-optimised mesh has more elements in the 3.0 to 4.0 range. The FA-optimised mesh has markedly fewer quadrilaterals in the 1.0 to 2.0 range than the other two, with more of them concentrated in the 2.0 to 3.0 range. Overall, however, the aspect ratios of all three optimised meshes are within 4.0 and differ little, so all three meet the requirements of spectral element simulation.
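The aspect-ratio measure defined above and the binning used in Table 7 can be sketched as follows. The bin boundaries (each bin closed on the left, with 4.0 included in the last bin) and the helper names are assumptions, since the text does not state how boundary values were assigned.

```python
import math
from collections import Counter


def aspect_ratio(quad):
    """Longest side divided by shortest side of a quadrilateral."""
    sides = [math.dist(quad[k], quad[(k + 1) % 4]) for k in range(4)]
    shortest = min(sides)
    return math.inf if shortest == 0 else max(sides) / shortest


def bin_aspect_ratios(quads, bin_edges=(1.0, 2.0, 3.0, 4.0)):
    """Count elements per aspect-ratio range, e.g. '1.0-2.0'; values above
    the last edge go to an overflow bin."""
    counts = Counter()
    last = bin_edges[-1]
    for q in quads:
        r = aspect_ratio(q)
        for lo, hi in zip(bin_edges, bin_edges[1:]):
            if lo <= r < hi or (hi == last and r == hi):
                counts[f"{lo:.1f}-{hi:.1f}"] += 1
                break
        else:
            counts[f">{last:.1f}"] += 1
    return counts
```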

The Jacobian ratio is another standard measure of quadrilateral element quality. It compares the determinant of the Jacobian matrix at each corner with its ideal value to quantify element distortion: an undistorted quadrilateral attains the ideal value, and the Jacobian ratio measures the deviation from it. The closer the Jacobian ratio is to 1, the better the element quality. Figure 11 shows the distribution of Jacobian ratios of the optimised quadrilaterals for the three algorithms.

Under the Jacobian ratio criterion, the quality of the three optimised meshes also differs slightly. After WPA optimisation, 340 elements lie in the range 0.7 to 1.0, the largest number of high-quality elements among the three algorithms, which indicates that WPA has the strongest optimisation ability and yields the largest proportion of high-quality elements. PSO has 320 elements in this range, slightly fewer than WPA, but still shows strong optimisation ability. FA has only 261 elements in this range, markedly fewer than the other two, indicating weaker optimisation ability. In the medium-quality range (0.4 to 0.7), FA has the most elements (890), slightly more than WPA and PSO, indicating that its optimised elements are mainly of medium quality. FA also produces the most low-quality elements (384) in the 0.1 to 0.4 range, further suggesting that it tends to settle on node positions that merely satisfy the constraints rather than finding optimal ones. PSO produces 356 elements in this low-quality range, slightly fewer than FA, while WPA produces only 307, indicating that WPA is the least likely to generate low-quality elements. None of the three algorithms produced extremely low-quality elements with a Jacobian ratio between 0 and 0.1.
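A corner-based Jacobian ratio can be sketched as follows. The text does not give its exact formula, so this sketch assumes one common definition: the determinant of the bilinear map's Jacobian is evaluated at each of the four corners, where it is proportional to the cross product of the two edges meeting there, and the ratio is the smallest corner value divided by the largest. Under this definition a rectangle or parallelogram scores 1 and a concave element scores below 0. The quality bands follow the ranges used in the analysis of Fig. 11; the handling of boundary values and the helper names are assumptions.

```python
import math


def corner_jacobians(quad):
    """Jacobian determinant (up to a constant factor) of the bilinear map at
    each corner: cross product of the two edges leaving that corner."""
    dets = []
    for k in range(4):
        cx, cy = quad[k]
        nx, ny = quad[(k + 1) % 4]
        px, py = quad[k - 1]
        dets.append((nx - cx) * (py - cy) - (ny - cy) * (px - cx))
    return dets


def jacobian_ratio(quad):
    """Min/max corner Jacobian; 1 for parallelograms, < 0 for concave quads."""
    dets = corner_jacobians(quad)
    if sum(dets) < 0:  # clockwise ordering: flip sign so valid corners are positive
        dets = [-d for d in dets]
    largest = max(dets)
    return min(dets) / largest if largest > 0 else -math.inf


def quality_band(ratio):
    """Map a Jacobian ratio to the quality ranges used in the text."""
    if ratio >= 0.7:
        return "high (0.7-1.0)"
    if ratio >= 0.4:
        return "medium (0.4-0.7)"
    if ratio >= 0.1:
        return "low (0.1-0.4)"
    if ratio >= 0.0:
        return "extremely low (0-0.1)"
    return "invalid (< 0)"
```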

Figure 12 shows the 2D spectral element forward modelling results obtained with the WPA-optimised bgpModel mesh. The simulation used a surface shot at x = 4900 m, a trace interval of 10 m, a maximum offset of 5000 m, and a recording length of 4000 ms. Figure 13 shows the corresponding results for the WPA-optimised nanpuModel mesh, with the shot at x = 2600 m and the same acquisition parameters (10 m trace interval, 5000 m maximum offset, 4000 ms recording length). With the WPA-optimised quadrilateral meshes, 2D spectral element forward modelling of both geological models now yields high-quality results. Notably, even the weaker FA algorithm turns previously unusable meshes into meshes that support spectral element simulation, a significant improvement in numerical feasibility.

In summary, for the secondary optimisation of quadrilateral meshes generated from geological models, WPA delivers the best overall mesh quality, followed by PSO, while FA is comparatively weak. WPA is the best choice for mesh optimisation, and PSO also optimises well; the mesh quality after FA optimisation is mediocre and falls short of the other two. In terms of efficiency, FA converges most slowly because of its fully interconnected nature. WPA converges slightly more slowly than PSO, but this modest extra runtime buys a noticeable improvement in mesh quality. Although the three swarm intelligence algorithms differ in performance, the optimised geological model meshes all meet the requirements of spectral element numerical simulation, which shows that swarm intelligence algorithms are effective for the secondary optimisation of quadrilateral meshes of geological models.

Discussion

Why WPA works better: a theoretical comparison

We believe that WPA outperforms PSO and FA in mesh optimisation for two theoretical reasons, relating to its search mechanism and its convergence characteristics.

In terms of search mechanisms, PSO relies on the individual and global optima to guide the search, which makes it prone to becoming trapped in local optima; “premature convergence” occurs frequently, especially in highly complex meshes. FA, based on the brightness-driven attraction of fireflies, depends heavily on random step sizes. This gives it strong exploration capability, but it lacks stable convergence in the fine-tuning phase, which lowers its computational efficiency. In addition, because FA is fully connected, even distant nodes influence the search, making convergence to the global optimum more difficult.

In contrast, WPA uses a hierarchical decision-making mechanism, forming a multi-level search strategy through a division of labour among the lead wolf, scout wolves, and fierce wolves: the lead wolf tracks the global optimum, scout wolves explore widely, and fierce wolves perform fine local searches. This collaboration is more robust than PSO's single gbest guidance, allowing WPA to combine global exploration with local refinement.

Regarding convergence, WPA's adaptive step-size adjustment lets it take larger steps early in the search to strengthen global exploration and gradually smaller steps later to improve local precision. We consider this dynamic balance more effective than PSO's inertia weight adjustment and FA's fixed attraction coefficient.
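The coarse-to-fine behaviour of large early steps and small late steps can be illustrated with a simple step-size schedule. The geometric decay below, the function name `step_size`, and the start and end values are assumptions for illustration only; the text does not specify how WPA's step size is adapted.

```python
def step_size(t, T, s_max, s_min):
    """Hypothetical geometric decay from s_max at iteration 0 to s_min at iteration T."""
    if T <= 0:
        return s_min
    frac = min(max(t / T, 0.0), 1.0)  # clamp progress to [0, 1]
    return s_max * (s_min / s_max) ** frac
```

With s_max = 1, s_min = 0.01 and T = 200, the step is 1 at the start, about 0.1 halfway through, and 0.01 at the end, shrinking by a constant factor per iteration.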

Computational cost and scalability

In terms of computational cost, because the objective function depends only on the mesh quality in the neighbourhood of the node being optimised, the algorithm's cost is decoupled from the size of the global mesh. Optimising a single node position typically involves position perturbation, fitness evaluation, behaviour selection, and position update. Although each behavioural step may explore several directions and evaluate the fitness function repeatedly, possibly involving several formulas and perturbation mechanisms, these operations rely only on the node's local neighbourhood. Moreover, operations such as position updates can usually be computed in a single step from explicit formulas, with a constant number of operations and a constant amount of data that do not depend on the global problem size (e.g., the total number of mesh nodes). In asymptotic terms, the cost of one objective evaluation and position update for a single erroneous node can therefore be treated as \(\mathcal {O}(1)\), so the total complexity depends only on the number of nodes to be optimised k, the population size N, and the number of iterations T, i.e., \(\mathcal {O}(T \cdot N \cdot k)\). This cost comes from evaluating the objective function and updating the positions of all individuals in every iteration; the evaluation phase, which repeatedly computes local mesh quality metrics, dominates. Both T and N are user-controlled parameters, and because optimisation is restricted to local regions around faulty elements, k is typically much smaller than the total number of mesh nodes.

As a result, the algorithm remains computationally efficient on large-scale meshes; the optimisation times reported above for the complex bgpModel are within an acceptable range. For fixed T and N, the computational cost is independent of the overall mesh size and complexity and depends only on the number of erroneous nodes, growing linearly as that number increases. The algorithm therefore scales well.
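Why a single evaluation costs \(\mathcal{O}(1)\) can be illustrated with a sketch: once a node-to-element incidence map is built, evaluating a trial position touches only the quadrilaterals that share the node, however large the mesh is. The helper names, the incidence map and the `quad_loss` argument are hypothetical, and the evaluation count assumes one loss evaluation per search node per iteration.

```python
from collections import defaultdict


def build_incidence(quads):
    """Map each node index to the indices of the quadrilaterals that use it."""
    incident = defaultdict(list)
    for qi, quad in enumerate(quads):
        for v in quad:
            incident[v].append(qi)
    return incident


def local_loss(node, trial_xy, coords, quads, incident, quad_loss):
    """Loss of placing `node` at `trial_xy`, summed over its incident quads only."""
    total = 0.0
    for qi in incident[node]:
        pts = [trial_xy if v == node else coords[v] for v in quads[qi]]
        total += quad_loss(pts)
    return total


def evaluation_count(T, N, k):
    """Total local-loss evaluations for k nodes, population N, T iterations."""
    return T * N * k
```

With T = 200 and N = 50 as in the experiments, each optimised node costs 10,000 local evaluations, so the total grows linearly with k.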

Limitations

Although swarm intelligence algorithms perform well in the secondary optimisation of quadrilateral meshes, several limitations deserve discussion. First, the method is constrained by the quality of the initial mesh. Spectral element forward modelling imposes strict limits on element edge lengths to satisfy numerical conditions such as the dispersion condition of Eq. (17), and our secondary optimisation cannot actively refine or adaptively restructure the mesh. The initial meshing algorithm must therefore provide region-adaptive multiscale refinement. This dependence both restricts the applicability of the secondary optimisation and places specific requirements on the initial mesh generation algorithm.

Second, although the method remains computationally efficient on large-scale meshes, as analysed above, the gradient-free nature of swarm intelligence algorithms inherently limits how quickly they converge. These algorithms often need many iterations to reach stable solutions, so even when optimisation is confined to local regions, achieving satisfactory quality improvements may still take considerable time. The initial meshing of geological models with primary meshing algorithms is also time-consuming. By contrast, deep learning-based mesh generation can be far more efficient: once a model is trained, generating a new mesh requires only inference.

Future work

While the proposed method has shown promising results in improving quadrilateral mesh quality through swarm intelligence optimisation, there remain several avenues worth exploring to further enhance its applicability and performance.

Although this work focuses on the quality optimisation of two-dimensional quadrilateral meshes, the underlying framework extends naturally to higher dimensions. With appropriate modifications to the optimisation strategies and procedures, it could be adapted to improve the quality of three-dimensional meshes, including hexahedral and hybrid meshes. This extension is a key direction for our future work, particularly for optimising 3D meshes in more complex geometric domains and engineering applications, where it has significant research and practical value.

Another promising direction is to combine the strengths of multiple swarm intelligence algorithms. For example, Houssein et al.20 developed a hybrid BES-GO algorithm that combines the global exploration of the Bald Eagle Search algorithm with the local exploitation of the Growth optimiser, achieving clear gains in both convergence rate and solution accuracy. This work inspired us: swarm intelligence algorithms are numerous, our results confirm that they are effective for secondary mesh optimisation, and different algorithms have different strengths, so future research could combine the dominant strategies of several algorithms to achieve better optimisation results.

To address the dependency on initial mesh quality discussed earlier in the limitation section, one promising direction for future research is the integration of mesh refinement strategies—particularly quadtree-based adaptive mesh refinement—into the initial mesh generation phase. Quadtree refinement offers a natural framework for generating locally refined, multiscale quadrilateral meshes that can better capture the complexity of geological structures while providing more suitable initial conditions for secondary optimisation. By combining this technique with swarm intelligence algorithms, it is conceivable to develop a hybrid framework that leverages adaptive refinement to construct high-resolution meshes in critical regions, and then employs optimisation to further improve element quality without altering mesh resolution.

Finally, another promising direction is to integrate swarm intelligence optimisation with deep learning methods to overcome limitations in generalization and convergence efficiency. For instance, graph neural networks (GNNs) or transformer architectures could be used to encode mesh structures and predict optimal node movement directions or regions with high improvement potential, providing informed initialization or guidance for the swarm. Alternatively, reinforcement learning frameworks could be adopted to train node movement policies that optimise long-term rewards, thereby reducing inefficient searches and improving convergence. Such learning-augmented frameworks not only enhance generalizability but also leverage historical optimisation data to uncover implicit patterns, offering a new paradigm for large-scale, high-complexity mesh quality control. Notably, Pan et al. (2023) formulated mesh generation as a Markov Decision Process (MDP) and employed a soft actor-critic (SAC) algorithm to build an automated quadrilateral meshing system without human intervention21. Their work highlights the potential of reinforcement learning in mesh optimisation and generation, particularly in sequential decision modeling and generalization. This provides both theoretical foundation and technical inspiration for future efforts to integrate deep reinforcement learning into swarm-based optimisation—such as designing hybrid frameworks that use learned policies to guide swarm behavior or dynamically adapt search strategies during optimisation.

Conclusion

The spectral element method offers high computational accuracy and effectively captures surface and subsurface interface undulations, making it particularly suitable for forward modeling of complex geological structures. However, its application has been limited by the poor quality of quadrilateral meshes generated by traditional algorithms for complex models.

This study presents a novel solution through swarm intelligence-based secondary optimisation of quadrilateral meshes. We developed a specific algorithm for applying swarm intelligence algorithms to optimise meshes generated by traditional indirect methods, along with an effective loss function for this process. Through comprehensive testing of three swarm intelligence algorithms (particle swarm, wolf pack, and firefly), we found that all approaches successfully addressed various mesh quality issues, including topology errors, concave quadrilaterals, degenerate quadrilaterals, excessively large and small interior angles, and problematic aspect ratios.

While all three algorithms produced meshes meeting spectral element method requirements, their performance differed notably. The particle swarm algorithm converged faster but yielded slightly lower-quality meshes than the wolf pack approach. The firefly algorithm showed mediocre performance: it converged most slowly because of its fully connected nature and produced mostly medium- and low-quality meshes, with fewer high-quality elements than the other algorithms. Although this reflects its nature as a simpler, basic swarm intelligence method, it confirms that even simple swarm intelligence algorithms can handle fundamental mesh optimisation.

This work provides a practical secondary optimisation framework that significantly advances spectral element simulations for geological meshes. It addresses key mesh quality challenges and contributes to the broader applicability and promotion of the spectral element method in complex geological modeling.

Data availability

The models used in this paper can be downloaded at https://github.com/ohouyang/bgp-model.

Change history

21 November 2025

The original online version of this Article was revised: The Funding section in the original version of this Article was omitted. It now reads: “This work was supported by the National Key Research and Development Program of China (Grant No. 2023YFB3905004).”

References

Ling-he, H. et al. Numerical modelling and propagation law of seismic wave field in Loess Plateau of Ordos Basin (in Chinese). Oil Geophys. Prospect. 59, 504–513. https://doi.org/10.13810/j.cnki.issn.1000-7210.2024.03.013 (2024).

Duo-lin, Z. & Chao-ying, B. Review on the seismic wavefield forward modelling (in Chinese). Prog. Geophys. 26, 1588–1599 (2011).

Wei, H., Li, S. & Wang, W. Current status and prospects of numerical simulation algorithms in geodynamics (in Chinese). J. Jilin Univ. (Earth Sci. Ed.) 1–29. https://doi.org/10.13278/j.cnki.jjuese.20240106.

Shu-hai, Z., Cong-hai, W., Yong, L., Shuai-bin, H. & Jun-long, Z. A brief review on the numerical studies of the fundamental problems for the shock associated noise of the supersonic jets (in Chinese). J. Exp. Fluid Mech. 38, 1–27. https://doi.org/10.11729/syltlx20230075 (2024).

Opršal, I. & Zahradník, J. Elastic finite-difference method for irregular grids. Geophysics 64, 240–250 (1999).

Patera, A. T. A spectral element method for fluid dynamics: Laminar flow in a channel expansion. J. Comput. Phys. 54, 468–488 (1984).

Lee, S.-J., Komatitsch, D., Huang, B.-S. & Tromp, J. Effects of topography on seismic-wave propagation: An example from northern Taiwan. Bull. Seismol. Soc. Am. 99, 314–325 (2009).

Gordon, W. J. & Hall, C. A. Construction of curvilinear co-ordinate systems and applications to mesh generation. Int. J. Numer. Methods Eng. 7, 461–477 (1973).

Blacker, T. D. & Stephenson, M. B. Paving: A new approach to automated quadrilateral mesh generation. Int. J. Numer. Methods Eng. 32, 811–847 (1991).

Lo, S. Generating quadrilateral elements on plane and over curved surfaces. Comput. Struct. 31, 421–426 (1989).

Johnston, B. P., Sullivan, J. M. Jr. & Kwasnik, A. Automatic conversion of triangular finite element meshes to quadrilateral elements. Int. J. Numer. Methods Eng. 31, 67–84 (1991).

Owen, S. J., Staten, M. L., Canann, S. A. & Saigal, S. Advancing front quadrilateral meshing using triangle transformations. In IMR. 409–428 (1998).

Remacle, J.-F. et al. A frontal Delaunay quad mesh generator using the \(l^\infty\) norm. Int. J. Numer. Methods Eng. 94, 494–512 (2013).

Field, D. A. Laplacian smoothing and Delaunay triangulations. Commun. Appl. Numer. Methods 4, 709–712 (1988).

Li-gang, C. Topological improvement for quadrilateral finite element meshes (in Chinese). J. Comput. Aided Des. Comput. Graph. 19, 78 (2007).

Xu, M., Cao, L., Lu, D., Hu, Z. & Yue, Y. Application of swarm intelligence optimization algorithms in image processing: A comprehensive review of analysis, synthesis, and optimization. Biomimetics 8, 235 (2023).

Yang, X.-S. Nature-Inspired Metaheuristic Algorithms (Luniver Press, 2010).

Hu-sheng, W., Feng-ming, Z. & Lu-shan, W. New swarm intelligence algorithm—Wolf pack algorithm (in Chinese). Syst. Eng. Electron. 35, 2430–2438 (2013).

Kennedy, J. & Eberhart, R. Particle swarm optimization. In Proceedings of ICNN’95-International Conference on Neural Networks. Vol. 4. 1942–1948 (IEEE, 1995).

Houssein, E. H., Hossam Abdel Gafar, M., Fawzy, N. & Sayed, A. Y. Recent metaheuristic algorithms for solving some civil engineering optimization problems. Sci. Rep. 15, 7929 (2025).

Pan, J., Huang, J., Cheng, G. & Zeng, Y. Reinforcement learning for automatic quadrilateral mesh generation: A soft actor-critic approach. Neural Netw. 157, 288–304 (2023).

Funding

This work was supported by the National Key Research and Development Program of China (Grant No. 2023YFB3905004).

Author information


Contributions

FZ proposed the original study idea and designed the method; JYT designed and performed the experiments; PPL and KYL provided the models used in the experiments and performed the spectral element numerical simulations to verify the results; YGH analysed the results; SHY wrote the original draft; FD reviewed and edited the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhang, F., Tian, J., LV, P. et al. Quadrilateral mesh optimisation method based on swarm intelligence optimisation. Sci Rep 15, 25391 (2025). https://doi.org/10.1038/s41598-025-11071-1


DOI: https://doi.org/10.1038/s41598-025-11071-1