Abstract

Entity resolution technology is the process of distinguishing whether data from different knowledge bases refer to the same entity in the real world. Existing research takes entity pairs as input and makes judgments based on the characteristics of entity pairs. However, there is insufficient utilization of contextual semantics, as existing methods fail to effectively model the token-attribute associations within data sources and cross-attribute semantic hierarchical relationships, which weakens the discriminative power of key attributes. What’ more, they exhibit failure in handling polysemous ambiguities, as conventional graph neural network adopts rigid node representations that cannot dynamically adjust word meanings according to attribute-specific contexts. To address this issue, this paper proposes the Contextual Semantics Graph Attention Network (CSGAT), which extracts contextual information at token and attribute levels to generate semantically fused embeddings. The advantages of CSGAT are: 1) Leveraging the Transformer self-attention mechanism to extract feature vectors of words, model sequence relationships, and calculate the degree of relevance with other words; 2) Employing the attention mechanism on contextual information at the attribute level to extract semantic embeddings to enrich attribute embeddings, forming more discriminative attribute embeddings; 3) Utilizing the graph attention network to generate residual vectors for final entity resolution decisions. Experimental on Amazon-Google and BeerAdvo-RateBeer datasets show that, as compared with the competing methods, CSGAT can achieve significant improved performance on F1-score with fine Precision and Recall. Code is available at https://github.com/xhtech2024/csgat.

Similar content being viewed by others

Introduction

Entity resolution technology focuses on determining whether data records from different knowledge bases refer to the same real-world entity1. This process effectively bridges unstructured data tables with structured databases. Knowledge bases such as Freebase, DBpedia, and Wikidata store entities alongside detailed information2, including names, dates, and locations, among others. Entity resolution is a critical step in the integration and processing of these data-rich knowledge bases3. As knowledge bases exhibit distinct and customized data attributes, they allow for tailored feature extraction that facilitates the construction of more comprehensive product databases, forming the foundation for data mining and analysis. Due to the abundance of condensed contextual information inherent in knowledge bases, which aligns semantically with entities, entity resolution remains an active area of research. Nevertheless, disambiguating similar textual data remains a challenge.

Table 1 highlights two entity pairs and the challenges encountered when distinguishing them. For instance, while e1 and e2 feature similar terms across attributes, they represent different products manufactured by separate breweries. Traditional entity resolution methods4, such as models based on recurrent neural network (RNN)5, often misclassify such cases due to their inability to assign sufficient weight to critical attributes like Brew Factory Name and Factory Name. This results in flawed resolution outcome as these models treat all textual content equally, failing to account for the varying importance of specific information. In contrast, the entity pair e3 and e4 demonstrate the importance of analyzing attribute-level differences. Although both entities share the attribute text Sixpoint Brewery and have similar product names (Global Warmer and Sixpoint Global Warmer), their style attributes differ. Thus, the entities e3 and e4 are correctly identified as distinct products. These examples highlight the necessity of dynamic weighting mechanisms that prioritize richer, more informative attributes during the resolution process.

Core challenges in entity resolution tasks lies in effectively leveraging contextual semantic information and assign appropriate weights to different tokens and attribute nodes for precise entity distinction6. Although deep neural networks have made progress in this direction by transforming entities into semantic distributions through embedding techniques, these methods still suffer from significant limitations: First, they fail to adequately account for the dynamic variance in the importance of words and attributes. Second, they are unable to resolve ambiguity caused by polysemous words7. For instance, the inadequate coverage of pre-trained embeddings means that commercial and personalized vocabulary such as “Wahaha” and “Robust” (excluded from training corpora like Wikipedia) lacks effective representation. Consequently, the same token may represent different contextual semantic information across domains or entities—“Wahaha” embodies distinct semantics in sentiment representation versus product representation. Current deep learning-based entity resolution methods typically provide single, context-agnostic embedding vectors for words requiring imputation, severely limiting the model’s ability to capture nuanced semantic details and critical distinctions.

The research motivation of this paper is precisely to address these critical limitations—inadequate weight assignment and lack of contextual semantic modeling. We propose the Contextual Semantics Graph Attention Network (CSGAT) model. Our approach extends the graph neural network to better capture contextual semantic relationships and assign appropriate weights to different nodes. The model employs a hierarchical heterogeneous graph to separately represent attributes and tokens, facilitating the learning of relationships between tokens and attributes as well as those among attributes themselves. This enables the assignment of different weights to the same token under varying contexts. Furthermore, to handle the challenge of polysemy, Transformer is used to extract contextual semantics. Existing text vector representation methods employ pre-trained models such as GloVe, which integrates global co-occurrence relationships through textual co-occurrence frequency and linear substructures, but struggles to capture fine-tuned representations. To address this, token positional encoding is introduced to construct contextual semantics, resolving polysemy issues and enabling the acquisition of distinctive vector representations across diverse contextual semantic environments. Thus enhance the representation of word order and structure, complementing semantic embeddings with richer contextual information8,9. We use GloVe for text initialization, and for texts lacking completed vector representations, instead of traditional random representation methods, we employ the BERT model to capture positional encodings and generate vector embeddings, thereby establishing more comprehensive embedding representation capabilities.

The proposed CSGAT model combines the self-attention mechanism10 of the Transformer with graph attention mechanism to address these challenges. The Transformer captures inherent word semantics and contextual dependencies, while the graph attention mechanism clusters tokens and attributes based on their contributions to the overall entity representation. This integrated approach generates richer entity embeddings, improving entity resolution performance. The main contributions of this paper are summarized as follows:

-

(1)

A hierarchical heterogeneous graph decouples tokens and attributes into separate layers, explicitly modeling Token-Attribute composition links and Attribute-Attribute semantic dependencies. This overcomes homogeneous graph limitations, improving contextual token and semantic attribute embeddings.

-

(2)

CSGAT integrates Transformer self-attention and graph attention within a hierarchical heterogeneous graph, dynamically modeling token-attribute associations and cross-attribute semantics to resolve polysemy and enhance discriminative power.

-

(3)

Extensive experiments on various datasets demonstrate the superior performance of the proposed method. Comparisons of accuracy, recall, and F1 scores against existing deep learning and machine learning models validate that the contextual information captured by the CSGAT model provides richer semantic representations, leading to enhanced entity resolution.

Empirical validation across Amazon-Google and BeerAdvo-RateBeer datasets shows CSGAT outperforms baselines in F1, precision, and recall, attributed to its contextual semantic representations enhancing entity resolution performance.

Related work

Entity resolution

Entity resolution, as a pivotal preprocessing task for data integration, has been continuously studied and remains a topic of broad interest to this day11. Research in this field has progressed through three primary stages. The first stage involves methods based on manual labor and rules. When the outcomes necessitate interpretability, as well as the explicit definition and maintenance, rule-based strategies can adequately fulfill these requirements4. For highly structured data, predefined rules are formulated based on the attributes, relationships, and contexts of entities, such as entity name alignment via character matching and relationship alignment grounded in structural similarity12. Qin et al.13 proposed a generalized synthesis program that formed attribute matching segments including conjunctions, disjunctions, and negations, enabling the establishment of arbitrary attribute combinations. This, in turn, facilitates the synthesis of matching rules from both positive and negative entity pairs. The precision of rules has a direct influence on the outcome of entity resolution. When confronted with complex entity relationships, rule-based methods often struggle to handle them effectively. Crowdsourcing-based methods14 leverage efficient crowdsourcing platforms and quality management mechanisms to manually assist in identifying issues with intricate patterns and ambiguous semantic associations. The second stage revolves around machine learning-based approaches, which entail training classifiers based on entity features15. This includes various algorithms such as the decision tree, support vector machine, Bayesian network and ensemble learning. Konda et al.16 introduced the entity resolution system ‘Magellan’, with a comprehensive framework for blocking and matching and a scripting environment supporting interactive modifications. To tackle the challenge of large-scale matching data, a preprocessing step of blocking is typically employed to quickly eliminate obviously mismatched entity pairs. Wu et al.17 proposed a joint learning model of blocking and matching, allowing the blocker and matcher to iteratively update pseudo-labels to share knowledge, thereby broadening the supervision signals for the blocker. Wang et al.18 evaluated the performance of seven existing large language models in the blocking phase.

The methods from the aforementioned two stages suffer from two significant drawbacks. Firstly, they are highly sensitive to errors made by domain experts, as issues with training sample labels, feature engineering, and the selection of similarity functions can lead to severe performance degradation19. Secondly, the cost of portability is high, as each dataset utilizing traditional entity resolution systems requires the customization of labels, features, and other processes. The third stage involves deep learning-based approaches20, with a common strategy being to transform the entity resolution problem into a binary classification task, exemplified by systems such as DeepER4, DeepMatcher21, and Ditto22. DeepER4 treats both word embeddings and similarity functions as trainable parameters within the deep learning framework. It utilizes pre-trained GloVe to obtain initial word embeddings and then employs LSTM to generate entity embeddings. During backpropagation, the initial word embeddings are fine-tuned. To address multi-intent entity resolution, approaches beyond contextual semantic integration include treating multi-intent as a multi-label classification problem. Genossar et al.11 proposed the FlexER model, which incorporates intent representations for tuple pairs and constructs a multi-way graph as input to graph convolutional network (GCN). Through learning entity intents from relationships between various intents, FlexER improves performance on multi-scale problems. Low et al.23 leveraged Transformer-based text rearrangement and token aggregators to build the enhanced Transformer model AttendEM, achieving better performance. Ding et al.24 proposed SETEM, an ensemble model with strong generalization capabilities, addressing the issues faced by existing pre-trained language models when dealing with small datasets, unseen entities, and scenarios with imbalanced positive and negative samples. Dou et al.25 pointed out that the one-hot labels relied upon by deep learning models lead to sharp supervisory information, which reduced the model’s generalization ability and performance in new datasets. To address this problem, they proposed a regularization approach based on bias-variance trade-off, which constrained training during iterative updates.

Semi-structured data shares similarities with the triplet representation of knowledge graphs. However, due to its non-Euclidean data structure, Transformer-based language models cannot be directly applied. Researchers have therefore focused on utilizing graph structures for entity resolution studies. For instance, GCN21 and graph attention network (GAT)26 leverage deep learning for graph representation learning. GCN operates in the spatial domain, utilizing the Laplacian matrix to perform convolution operations on local graph information. When embedding nodes, GCN not only consider the features of the node itself but also integrate the feature information of adjacent nodes, resulting in richer node representation. Compared to GCN, GAT introduces an attention mechanism during the aggregation of adjacent nodes. This attention mechanism weighs the importance of different adjacent nodes and assigns varying attention coefficients, determining their varying influences on the central node. Consequently, the node representations incorporate structural information. Zhu et al.27 proposed the Relation-Aware Graph Attention Model (RAGA) for global entity resolution, which achieves the interaction between entity information and relation information. By employing a self-attention mechanism, RAGA transfers entity information to relation information and vice versa, utilizing a fine-grained matching matrix across the entire graph to accomplish one-to-one entity resolution. Both GCN and GAT, designed for graph-structured nodes, allow node embeddings to converge stably while maintaining continuous information transmission. In the context of entity resolution tasks, it is observed that only tokens possess raw semantic information, whereas nodes often lack rich semantic content. To address this, RAGA leverages both nodes and attributes to complete entity matching, enabling the controlled propagation of information across different types of nodes, resulting in more discriminative entity embeddings. The aforementioned methods that utilize pre-trained language models to convert words into word embeddings have a significant limitation: the semantic loss of untrained vocabulary. This issue is addressed by extracting contextual semantic information to provide word embeddings.

To address the limitations of pre-trained language models in the context of entity resolution, it is essential to examine how the semantic loss of untrained vocabulary impacts performance. Pre-trained language models, such as BERT, are typically trained on large general-purpose corpora, which neglect many domain-specific or personalized vocabularies commonly seen in practical applications. These out-of-vocabulary (OOV) tokens cannot be adequately mapped to their correct semantic representations and are often replaced by randomly initialized embeddings, leading to two significant issues in entity resolution tasks.

This semantic loss reduces the model’s ability to differentiate between similar but distinct entities effectively. For example, domain-specific terms or abbreviations that characterize different entities may not have corresponding context-aware embeddings, resulting in diminished discriminative power. Secondly, the use of randomly initialized embeddings introduces noise into the model, as they fail to capture the syntactic and semantic coherence encoded in the original text. Consequently, these embeddings lack the ability to reflect relationships between attributes or tokens, which are vital for accurate resolution.

Existing methods attempt to mitigate this issue by extracting contextual semantic information to enhance embedding. However, the inability to preserve even a partial semantic structure for untrained terms limits the overall performance. For instance, transforming such terms into embeddings without leveraging contextual clues from their co-occurring tokens or attributes undermines the model’s generalizability and robustness in unseen data scenarios. This creates a critical performance gap, particularly in datasets containing niche vocabularies or domain-specific terminology with unique semantic properties.

Addressing this bottleneck requires approaches that not only integrate contextual semantic extraction mechanisms but also adaptively fuses domain-specific information. By learning dynamic representations anchored in both local (token-level) and global (attribute-level) contexts, models can reconstruct more meaningful embeddings for untrained vocabulary. These embeddings should leverage both positional and structural information to establish semantic coherence across entities. This paper proposes a solution to this issue by designing a graph-based model that mitigates the semantic loss for untrained tokens, enhancing entity resolution performance in scenarios rich with personalized vocabularies. The proposed method ensures that even when encountering untrained tokens, the model’s ability to resolve entities is not compromised.

Contextual semantic extraction

In the long-term research of natural language processing, the encoder-decoder-based RNN architecture has been the cornerstone. The most popular translation model today employs the Seq2Seq27 architecture, which consists of two RNNs, one for encoding and the other for decoding. Google Translate adopted this architecture in 2016. Tu et al.4 combined Long Short-Term Memory (LSTM) units with bidirectional recurrent neural networks to transform tuples into embedding representations, effectively extracting similarities between tuples. However, as the length of the source sentence sequence increases, RNN can only adjust a single vector, failing to output all necessary information. This limitation is attributed to the context vector28, which acts as a bottleneck. Additionally, due to the sequential nature of RNN, parallel computing becomes challenging.

To overcome the aforementioned problems, Vaswani et al.29 proposed the Transformer, a technique leveraging attention mechanisms. Intuitively, it can be described as representing a word as a weighted combination of its contextual words. The model abandons the RNN structure and constructs an attention-based model. While retaining the encoder-decoder structure, it replaces RNN with multi-head attention layers. BERT10, which combines the Transformer with unsupervised training, was the first to achieve state-of-the-art performance in NLP tasks. It is a general-purpose language model that can be pre-trained on large-scale text and fine-tuned in a supervised manner for tasks such as entity resolution. Miao et al.30 introduced the Rotom architecture based on BERT, which incorporates a novel data augmentation operator “InvDA”, to generate naturally diverse augmented sequences from the original sequences. Through Rotom learning, an optimized filtering and weighting model is employed to better select and combine enhanced samples.

Previous studies have applied pre-trained word embedding vectors to the task of entity resolution. DeepER4 incorporates a contextual semantic extraction module during the training phase, which, through backpropagation, can better fit the contextual semantics. DeepMatcher extends DeepER by proposing a new entity resolution framework, on which the MPM and HierMatcher models are researched. MPM and HierMatcher achieving better experimental results on some datasets, thereby demonstrating the effectiveness of the Transformer architecture30 in entity resolution tasks. Ditto is based on a pre-trained Transformer language model and also provides modules for optimization based on domain-specific knowledge. Such entity resolution methods are all based on sequential text features, accomplishing attribute embedding representations and similarity calculations according to different word sequences. These models rely on pre-trained models for their entire datasets, but in the real world, there often exist many personalized vocabularies with clearer distinguishability that cannot be converted into embeddings through pre-trained models. Commonly used methods compensate for this by using randomly generated embeddings, which lose their original semantics and fail to help with entity resolution tasks. This is the research motivation of this paper.

Framework of contextual semantics graph attention network

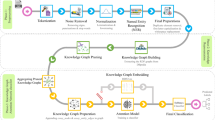

In the realm of entity resolution problems, an entity typically represents a unique item in the real world, such as a person, a product, or a business. Entities are denoted by \(e\), and their corresponding attributes and attribute values are represented by \(key\) and \(value\), respectively. When data is missing or incomplete, the term \(NAN\) is used to fill in the gaps. In entity resolution, two knowledge bases serve as collections of data entities, denoted as \(D\) and \(D^{\prime }\), with the objective of outputting an entity matching matrix \(M \subseteq D \times D^{\prime }\), where the elements of the matrix \(M_{i,j} = \left\{ {\left( {e_{i} ,e_{j} } \right)|e_{i} \in D,e_{j} \in D^{\prime } } \right\}\) indicate the result of whether the entities \(e_{i}\) and \(e_{j}\) match. Conventionally, a query entity \(e_{w}\) is extracted from \(D\) and compared against all entities in \(D^{\prime }\). Existing methods20 take \(n\) entity pairs \(\left\{ {\left( {e_{i} ,e_{j} } \right)|1 \le a \le n} \right\}\) as input and process these pairs independently. This chapter introduces the Contextual Semantic Graph Attention (CSGAT) framework, followed by a description of the training process. The specific model framework is illustrated in Fig. 1.

CSGAT Workflow Diagram: Starting with the generation of initial embeddings using GloVe, followed by hierarchical graph partitioning. BERT is then employed to extract contextual semantics for fine-tuning, leading to the generation of weighted embeddings and difference matrices. Ultimately, entity resolution is achieved through a single-layer CNN and HighwayNet.

Implementation details of the CSGAT model

The proposed method involves five key steps: Hierarchical Heterogeneous Graph Construction, Contextual Semantic Embedding, Token Comparison, Attribute Aggregation, and Matching Prediction.

Hierarchical Heterogeneous Graph Construction: A hierarchical graph is built with distinct layers for token and attribute nodes. Inspired by the Transformer, this step captures contextual dependencies across tokens in different orders, creating a foundation for semantic embedding.

Contextual Semantic Embedding: Leveraging the BERT model, this step extracts positional and contextual token information to refine token representations and initialize embeddings for unembedded tokens.

Token Comparison: Interdependency patterns are utilized to compare tokens across entity pairs. A difference matrix is computed between original and weighted embeddings, representing the matching features.

Attribute Aggregation: A single-layer neural network compresses the difference matrix into a vector, simplifying attribute differences for further computation.

Matching Prediction: HighwayNet computes the cross-entropy loss to produce the final matching distribution.

Hierarchical heterogeneous graph construction

Traditional graph structures do not differentiate between token nodes and attribute nodes. To independently process token nodes and attribute nodes, it is necessary to construct a hierarchical heterogeneous graph with separate token and attribute layers. This involves first building the hierarchical heterogeneous graph, denoted as \(G = (V,R)\), where \(V\) represents the set of nodes, and \(R\) represents the set of edges (relationships between nodes). The structure of this hierarchical heterogeneous graph is illustrated in Fig. 2.

Hierarchical Heterogeneous Graph Structure: It comprises a token layer and an attribute layer. The token layer encompasses all tokens, while the attribute layer holds all attributes. Based on the semantic information and positional information of various tokens, the integrated attribute information enriched with contextual details is derived.

The hierarchical heterogeneous graph constructs two distinct layers: a token layer and an attribute layer. Within these layers, there are token nodes \(t_{i}\) and attribute nodes \(a_{i}\), each forming a separate layer. Token nodes utilize word embeddings as their token embeddings, while attribute nodes are represented by embeddings of their \(\left\langle {key,value} \right\rangle\) pairs, where the \(key\) is the attribute name and the \(value\) is summarized and extracted from the attribute’s content. The relationship types in Rare of two kinds.: (1) Token-Attribute relationships, indicating which tokens constitute an attribute; and (2) Attribute-Attribute relationships, showing which tokens are shared between attributes. Inspired by the Transformer model, different positions within the text carry distinct semantics. For words in different positions within an attribute’s value, the word order within the attribute node is used to represent positional information. The advantage of the hierarchical heterogeneous graph lies in its distinction between the token layer and the attribute layer and enable a gradual embedding process from tokens to attributes. As seen in Fig. 2, token nodes are located at the outermost layer but carry the initial semantic information. Moving upwards, each attribute node connects to multiple token nodes, reflecting the hierarchical relationship where entities are decomposed into attributes and tokens. Compared to entity-pair dependency models31 more commonly used in entity resolution, the hierarchical heterogeneous graph serves as a global dependency model that captures richer semantic contextual information across multiple layers to construct entity embeddings.

Figure 3 demonstrates how to construct a hierarchical heterogeneous graph. A pair of candidate entities is taken as input to the entity resolution model. For each input entity, tokenization is applied to the attributes to generate a set of token collections. The entity is represented as a set of \(\left[ {\left\langle {key,[word]} \right\rangle } \right]\) pairs. Here, each entity \(key\) serves as an attribute node \(v^{a}\), and each distinct word is treated as a token node \(v^{t}\). Connections between token nodes and attribute nodes are established according to the relationship \(R\). The entity, composed of tokens and attributes, is represented as \(\left[ {\left\langle {v^{a} ,[v^{t} ]} \right\rangle } \right]\) where \(v^{t} \in R^{1 \times F}\) represents the context-embedded word embeddings, and \(F\) is the dimensionality of the embeddings. The attribute embedding is computed as \(v^{a} = \sum {h^{t} } (wvt)\)\(v^{a} = \sum {h^{t} } (wv^{t} )\), where, \(h^{t}\) represents the token node weights obtained through self-attention, and \(w \in R^{F \times F}\) is a learnable parameter. Finally, the entity embedding is generated by concatenating the embeddings of the internal attribute nodes, denoted as \(v^{e} = [v_{1}^{a} , \ldots ,v_{n}^{a} ]\).

Construction of Hierarchical Heterogeneous Graph: For an entity’s knowledge base, token embeddings are formed from the words within attribute values, attribute embeddings are extracted based on the attributes themselves, and entity embeddings are then generated through the integration of attribute embeddings and token embeddings.

Contextual semantic embedding

By incorporating contextual semantic information into the initial word embedding vectors, the expanded word embedding vectors are utilized as the input data format, upon which a deep neural network performs the entity resolution task. Currently, there are still limitations in relying solely on word embedding vectors to complete the entity resolution problem, and applying the strategy of contextual semantic embedding serves as a solution to address these limitations.

The limitations of word embedding vector representation

The distributed representation of words in vector space can assist learning algorithms in adapting to natural language processing tasks through better leveraging similarities. Since each word’s dimensions represent a weight distribution that can vary independently and support optimization through neural network training, remarkable achievements have been made in statistical language modeling with the earliest methods of word representation in 198632. Current research has made progress on this issue, with Word2Vec33, GloVe34, and FastText35 attempting to represent word semantics in context by transforming the representation of a word into a high-dimensional vector distribution, thereby helping similar word vectors maintain their similarities in the semantic space. GloVe34 introduces a least squares global log-bilinear regression model that encodes information through the contribution probabilities of word pairs. It is trained on a corpus containing 84 billion tokens, encoding a vast amount of general information, such as the similarity between “ICDE” and “International Conference on Data Engineering”. This pre-training has a positive impact, requiring fewer training samples on general datasets like products, articles, and restaurants. For words that are not part of the training, GloVe uses “UNK” to fill in all untrained vocabulary and leverages the learned embeddings of “UNK” for subsequent training. However, this approach renders all filled-in words indistinguishable, significantly impacting the accuracy of entity alignment. FastText35, on the other hand, utilizes n-grams to count word frequencies, capturing local order information of the words to be filled in as byte sequence features of length \(n\). This approach contradicts the reality that words with lower frequencies often carry more important semantics, resulting in filled-in words being able to generate different embedding vectors but failing to adequately represent the semantic information of the words. DeepER4 generates embedding representations using the co-occurrence distribution between the words to be filled and the known words. The embedding vector of a word to be filled is generated by calculating the average of the Top-K co-occurrence distributions of the existing word embedding vectors. However, the averaging strategy emphasizes the influence of common words, leading to the generated embedding vectors tending to be like each other.

Optimization methods for word embedding vector representation

To address the issue of incorporating contextual semantics, RNN23 combines the initial word embeddings with contextual semantic information, enabling the same word to have different word embedding representations that integrate relevant information under different semantics. However, contextual information cannot be extracted at the token level since it is the sequential order of attribute words that provides useful contextual information, and changing the word order has a significant impact on the generation of word embeddings. This makes data heterogeneity a major factor affecting the model. In DeepMatcher20, an additional common word alignment module is used to reduce the impact of word sequence variability on the generation of word embeddings.

To address the issues of missing word embeddings and lack of semantic context, contextual information provides crucial assistance. The main solutions are as follows: (1) For word embeddings that need to be filled, generate initial word embeddings using contextual semantic information, and for existing word embeddings, adjust the original word embeddings using contextual semantic information; (2) There exists a co-occurrence relationship in word frequency between entity attributes, and attribute embeddings can provide corresponding contextual information based on the sequence order of attributes. Following this strategy, techniques such as sequential word position embedding and attribute attention fusion are applied to achieve this.

Firstly, after constructing the hierarchical heterogeneous graph, the connections between neighbor nodes are regarded as contextual information. Similar to GNN, attribute nodes and token nodes are interconnected, with attribute nodes taking adjacent token nodes as contextual nodes, and token nodes acquiring semantic information from adjacent attribute nodes. Meanwhile, token nodes of the same entity can connect to different attributes and to attribute nodes of different entities. Secondly, when the same word appears in different semantic contexts, different attentions are adopted to extract the corresponding contextual semantic information. A hierarchical heterogeneous graph is set up, with the token set denoted as \(B^{t} = (b_{1}^{t} , \ldots ,b_{{n_{t} }}^{t} )^{{\text{T}}}\), where \(B^{t} \in R^{{n_{t} \times F}}\), and \(n^{t}\) is the number of tokens in the set. The initial word embeddings \(b_{i}^{t} \in B^{t}\) are obtained from general training, such as BERT, and cannot distinguish different contextual semantic information. Additionally, we perform representation learning for word semantics based on contextual embeddings10, using the contextual embeddings of words to fine-tune the initial word embeddings to generate semantic word embeddings \(\widehat{{B^{t} }}\). Therefore, the same word embeds different contextual semantic information in different semantic contexts.

Meanwhile, due to the three-level features based on entities, attributes, and tokens, richer contextual information can be obtained. Some studies propose using RNN or Transformer for entity resolution tasks, which only extract lexical context information at the sentence level. Extracting context from both types of features can yield rich semantics, capturing attribute semantic information through context at the attribute level and capturing sentence semantic information through context at the token level. The two-level contextual semantic embedding methods are presented in Algorithm 1, and the following introduces the two-level semantic extraction and embedding.

Token-level Contextual Semantic Embedding: The order of tokens captures the finest-grained semantic information, and different sentence orders indicate different meanings represented by the sentences. The self-attention mechanism is utilized to extract the local positional information of words within the sentence, modeling the sequential relationships between words and calculating the relevance of the current word to other words. The pre-trained language model BERT10 is employed to extract the contextual semantic embeddings for all tokens. Positional encoding is employed to construct sequential representations of text that capture local positional information, enabling the model to compute the relative distances between words based on their positions. In the BERT model, each sublayer incorporates residual connections and layer normalization, where the input is directly connected to the output. This design preserves the positional encoding unchanged as it propagates to deeper layers, effectively mitigating the gradient vanishing problem.

Attribute-level Contextual Semantic Embedding: The semantic significance of attributes lies in distinguishing between different attributes, assigning higher weights to attributes with important semantics, and simultaneously feeding the calculated weights back to the adjacent token embeddings. The process of attribute-level contextual embedding is illustrated in Algorithm 1.

Ultimately, different weights are assigned to different attributes adjacent to the same entity, and the same token adjacent to different entities will also be assigned different weights. A graph attention network (GAT) is utilized to extract contextual information at the attribute level. The set of attribute-level nodes is represented as \(B^{a} = (b_{1}^{a} , \ldots b_{{n_{a} }}^{a} )^{{\text{T}}}\), where \(n_{a}\) denotes the number of attributes in the graph. For each attribute node \(b_{i}^{a} \in B^{a}\), the set of adjacent token nodes is denoted as \(B_{{a_{i} }}^{t} \subseteq B^{t}\). The attribute semantic embedding \(B_{{a_{i} }}^{t}\) is calculated using the adjacent token nodes \(b_{i}^{a}\), specifically through the graph attention network operation, as follows:

where \(W^{t} \in R^{F \times F}\) and \(r^{t} \in R^{F}\) are trainable parameters. Since different attribute nodes may share the same keys, the uniqueness of attribute nodes is represented as \(\overline{{B^{a} }} = (\overline{{b_{1}^{a} ,}} \ldots ,\overline{{b_{j}^{a} ,}} \ldots \overline{{b_{{n_{k} }}^{a} }} )^{{\text{T}}}\), where \(n_{k}\) denotes the number of unique attributes. The unique attribute embeddings are obtained by summing the attribute embeddings with the same key: \(\overline{{b_{j}^{a} }} = \sum {b_{i}^{a} }\). By relating to the contextual semantics, the unique embeddings of attributes at the attribute level are represented as \(Q^{a} = (\overline{{b_{1}^{a} }} , \ldots ,\overline{{b_{{n_{k} }}^{a} }} )^{{\text{T}}} \in R^{{n_{k} \times F}}\).

Entity Embedding: After the calculation of all token embeddings and unique attribute embeddings is completed, the entity embedding is calculated as:

The process of generating contextual embeddings is illustrated in Algorithm 2.

\(\mu\) represents the computation that maps the embeddings at the attribute level to the corresponding token nodes. The contextual embeddings are trained through bi-directional gradient propagation: the first direction is from tokens to entities, and the second direction is the contextual propagation from entities to tokens, which differs from the traditional training process of graph neural networks. Initially, pre-trained word embedding vectors are obtained using a language model, and these vectors are then fine-tuned during the training process. As a result, contextual information from tokens can be applied at the entity level, along with the embedding information from the word embedding training. This allows for the corresponding adjustment of tokens based on different contextual environments, which can help make features during entity resolution more distinguishable.

Token comparison

The token comparison layer adopts an embedding pair representation instead of a single embedding representation, utilizing an interdependency pattern to generate entity features through comparison. For entities \(e_{1}\) and \(e_{2}\), a comparative encoding is generated by comparing all tokens of \(e_{1}\) against \(e_{2}\), and the same process is applied to \(e_{2}\) to generate its comparative encoding. The original embeddings are denoted as \(E_{N}\).

After the embeddings are generated for the entity pairs \(e_{1} = b_{1}^{{t_{1} }} , \ldots ,b_{{n_{t} }}^{{t_{n} }}\) and \(e_{2} = b_{1}^{{t_{2} }} , \ldots ,b_{{n_{k} }}^{{t_{n} }}\), the weight relationship between the two is calculated through the graph attention mutual weights between the two entity pairs. By leveraging a graph attention mechanism to generate weight encodings for entity pairs and employing an adaptive weighting strategy to produce adaptive encodings that preserve their intrinsic attribute information, the system enhances differentiation through the Hadamard product of the differences between weight encodings and adaptive encodings. This approach reduces fusion for critical attributes to amplify distinctions while increasing fusion for common textual features to minimize variability, thereby refining the fine-grained differences in textual representations. The specific calculation is as follows:

\(f(X) = W_{2}^{{\text{T}}} X + b\) is a linear function, where \(W_{2}\) and \(b\) are parameters, and \(\odot\) represents the Hadamard product between two matrices. The weight relationship is the same in bidirectional representation. By multiplying the embedding representation with the mutual weight, a new weighted token is obtained,

Then, all the weighted tokens are concatenated:

By calculating the difference between the weighted token \(Z_{N}\) and the original token \(E_{N}\), we generate a difference matrix \(M^{{(e_{i} \to e_{j} )}}\) for the entity pair. This difference matrix contains the Euclidean distances between the token weights and the initial tokens. The weighted tokens incorporate the feature mapping of the matched entities. The similarity between the two entities is compared based on the magnitude of the difference matrix, where a smaller difference element indicates similarity between the two entities, and vice versa.

Attribute aggregation

In this layer, a single-layer deep neural network is utilized to transform the difference matrix \(M^{{(e_{i} \to e_{j} )}}\) generated in the previous step into a signature vector \(s^{{(e_{i} \to e_{j} )}}\) with a fixed size to provide processable feature representations for subsequent matching prediction.

In the transformation process, \(CNN( \cdot )\) integrates convolutional operations, Max pooling operations, dropout operations, and activation functions in series to form a composite function. Within the convolutional operations, \(\alpha\) filter scales and \(\beta\) filters are set, with each filter window size configured as \(l \times h\)(where \(h\) represents the height of the filter). By utilizing various window sizes, multi-scale matching features can be extracted. The Max pooling operation selects the maximum value of the matching features within the corresponding filter scale, corresponding to the feature region with the most information content. Here, \(S^{{(e_{i} \to e_{j} )}} \in R^{1 \times \alpha \beta }\). The final signature vector is generated by concatenating the signature vectors independently produced for the two directions, \(e_{i} \to e_{j}\) and \(e_{j} \to e_{i}\).

This module transforms high-dimensional discrepancy matrices into low-dimensional dense vectors through multi-scale feature extraction and spatial compression, effectively preserving critical matching characteristics while substantially reducing computational complexity for subsequent processing.

Matching prediction

This stage maps the feature vector to matching probabilities through a deep neural network, with the core architecture being a HighwayNet incorporating residual connections. In this layer, the generated signature vector \(S\) is used as input, and passed through two layers of activation functions in HighwayNet to produce the final entity resolution result. The activation function employed is the ReLu function, which saves the workload of backpropagation for gradient error calculation. Additionally, through the sparsity of the network with 0 outputs, it mitigates the issue of overfitting. The loss function is set as the cross-entropy loss function, ultimately aiming to minimize the loss function.

In the loss function, \(L(p,q)\) represents the calculation of the cross-entropy loss between \(p\) and \(q\). Here, \(h(W,V)\) denotes the matching distribution output by the matching prediction layer, while y represents the matching label.

The process of overall CSGAT is illustrated in Algorithm 3.

Experimental setup

Dataset and evaluation metrics

To validate the performance of the CSGAT model in the entity resolution task, a comparison is made with the performance of state-of-the-art methods. Experimental validation is conducted on Amazon-Google dataset and BeerAdvo-RateBeer dataset. The Amazon-Google dataset contains information on electronic products, listing three attributes: (name, text content), (manufacturer, text content), and (price, numerical content). The name attribute represents the description of the product information and plays a crucial role in the entity resolution task. Meanwhile, there exist numerous text contents with different expressions but similar meanings within entity pairs. The manufacturer attribute refers to the source of production, and its attribute values may be abbreviated or incomplete. In this dataset, 1167 entity pairs are labeled positively, and there can be multiple matching attributes between entity pairs, with a maximum of 5 matching records in the dataset. The BeerAdvo-RateBeer dataset is sourced from two beer websites, BeerAdvo and RateBeer, and primarily includes data on their beer products. It lists four attributes in a semi-structured list format: (beer name, text type), (brewery, text content), (style, text content), and (ABV, numerical content). Among these, 68 entity pairs are labeled positively. Specific information is presented in Table 2.

In the experiment, the labeled entity pair dataset is divided into three subsets: training, validation, and test data, with a ratio of 3:1:1. Compared to the Amazon-Google dataset, this dataset has a smaller information scale and less noise. In evaluating the model’s performance, conventional metrics such as Precision (P), Recall (R), and F1 score were used.

Training setup

The server used for the experiment is equipped with an NVIDIA Tesla V100 (32 GB) GPU, CUDA version 12.2, and PyTorch version 2.2.0. During the training of the CSGAT model, the dimension of \(\vartheta^{1}\) is defined as \(\left| n \right| \times 300\), where \(\left| n \right|\) represents the number of nodes in the corresponding graph structure. Additionally, the dimension of \(\vartheta^{2}\) was set to \(300 \times 200\). The sliding window size for text was fixed at 20. All weight matrices were initialized using Xavier initialization with a gain of 1. In the feature aggregation layer’s convolutional neural network, the filter sizes are set to \([1,2,3][1,2,3]\), and the number of convolution kernels for each filter size is set to 150. In the matching prediction layer, the HighwayNet network has a fixed number of 4000 hidden units.

During the training optimization process, the Adam optimizer is employed, which supports the computation of adaptive learning rates for each parameter. The learning rate parameter, which controls the step size, was set to \(10^{ - 3}\), and the dropout filtering probability is set to 0.5. During backpropagation, the error gradient threshold is set to 5, and clipped gradients are used to update the weights. For the Amazon-Google dataset, the batch size is set to 32, while other hyperparameters are left at their default values. During training, the maximum number of training epochs is set to 100, but an additional termination condition is imposed: if the loss does not continuously decrease for 10 consecutive epochs before reaching 100 epochs, the training will be terminated.

Comparison models

There are four comparison methods, including three deep neural network learning models: RNN, Hybrid method36, and TLAM-ER, and the fourth method is a machine learning-based entity resolution model, Magellan15. RNN is used to process data that is inherently sequential in nature, especially when the input sequences are not of fixed length. The Hybrid method is an attribute alignment approach that combines word sequence awareness and sequence alignment. TLAM-ER is based on GraphER26 but instead of using GCN networks to obtain node embeddings, it employs GAT network to accomplish neighboring node embedding fusion. Its performance is obtained by averaging the results from 10 experiments. PBAL-EM (Probability-Based Active Learning with Expectation–Maximization) introduces a semi-supervised framework that integrates probabilistic active learning with the Expectation–Maximization (EM) algorithm37. Unlike conventional methods requiring extensive labeled data, PBAL-EM iteratively selects the most informative unlabeled samples for annotation using uncertainty sampling and leverages EM to refine model parameters by jointly optimizing labeled and pseudo-labeled data. This approach significantly reduces labeling costs while maintaining robustness in scenarios with sparse or imbalanced training data. Magellan constructs a comprehensive entity resolution framework with good scalability and interactivity.

To validate the effectiveness of the CSGAT model, we compare it with several baseline models, including both traditional machine learning methods and the latest deep learning models. The baseline models are as follows:

-

1.

RNN: A traditional recurrent neural network model that processes sequential data for entity resolution.

-

2.

Hybrid method: A combination of word sequence awareness and sequence alignment for attribute matching.

-

3.

TLAM-ER: A graph-based entity resolution model that uses Graph Attention Networks (GAT) for node embedding fusion.

-

4.

PBAL-EM: PBAL-EM is a machine learning-based methodology designed to enhance model interpretability and performance.

Discussion and analysis

Performance comparison experiments

The performance comparison experiment involves comparing the CSGAT model with other models in the entity resolution task. The CSGAT model is run 10 times, and the average of the experimental results is taken. The final model performance is determined by the sum and difference of the average and standard deviation of the experimental results. The performance of other models is based on the performance reported in their respective literature. Table 3 presents a comparison of the model performances.

-

(1)

As can be seen from the experimental results, on the Amazon-Google dataset, RNN achieves moderate precision (59.33%) but suffers from relatively low recall (48.12%), resulting in an F1-score of 52.77%, which reflects mediocre overall performance. The low recall indicates that the RNN model fails to identify many true matches, likely due to its inability to fully capture the contextual nuances of complex entity pairs in this dataset. Magellan achieves the highest precision (67.7%) among all models on this dataset, meaning it is effective at avoiding false positives. However, its recall is significantly lower (38.5%), leading to a poor F1-score of 49.1%. TLAM-ER demonstrates well-rounded performance on the Amazon-Google dataset, showing a balanced precision (61.71%) and recall (64.29%) that lead to an F1-score of 62.97%. This suggests that TLAM-ER effectively captures semantic relations and patterns in the data through its Transformer-based architecture. PBAL-EM achieves an F1-score of 42.40% on the Amazon-Google dataset, the lowest among all the models. Unfortunately, due to the lack of detailed precision and recall metrics, a deeper analysis of its performance breakdown is not possible. CSGAT achieves the best performance overall on the Amazon-Google dataset, with an F1-score of 65.88%, significantly outperforming other models. With precision at 63.36% and recall at 68.61%, CSGAT strikes the best balance between successfully identifying true matches and limiting false positives. On the Amazon-Google dataset, the challenges of textual noise and attribute variability demand models capable of capturing complex semantic patterns. CSGAT stands out as the best-performing model, leveraging advanced graph-based attention mechanisms to achieve the highest F1-score (65.88%) with a good balance of precision and recall. This positions CSGAT as a robust and reliable solution for entity matching tasks in challenging, heterogeneous datasets like Amazon-Google.

-

(2)

On the BeerAdvo-RateBeer dataset, The RNN model demonstrates moderately good performance, achieving an F1-score of 71.34%, with relatively balanced precision (74.82%) and recall (70.00%). The Hybrid model achieves results similar to RNN, with an F1-score of 71.08%. Its precision (73.44%) is slightly lower than that of RNN, while recall remains the same (70.00%). Magellan delivers the highest recall (92.9%) among all models on this dataset but compromises on precision (68.4%), resulting in an F1-score of 78.8%. TLAM-ER outperforms RNN, Hybrid, and Magellan with an F1-score of 79.03%, achieving a well-balanced precision (78.24%) and recall (79.84%). PBAL-EM achieves the highest F1-score (86.70%) reported for this dataset, outperforming all other models in terms of overall effectiveness. CSGAT performs exceptionally well on the BeerAdvo-RateBeer dataset, achieving the second-highest F1-score (82.73%) of all models and outperforming several others in both precision (80.66%) and recall (84.91%). PBAL-EM shows the best F1-score (86.70%), but the lack of full metrics limits deeper interpretability. CSGAT provides a close second with an F1-score of 82.73%, while also excelling in achieving a strong balance between precision (80.66%) and recall (84.91%) with low variance, making it a robust model. For practical use, CSGAT is a highly reliable option due to its predictable and consistently strong performance, whereas PBAL-EM may excel in specific, tailored scenarios but requires further transparency in its metrics.

-

(3)

Across both the Amazon-Google and BeerAdvo-RateBeer datasets, CSGAT consistently demonstrates superior performance compared to other models, highlighting its effectiveness in handling diverse data environments.

Ablation experiment

To validate the importance of attribute context and token context information, as well as the impact of different sizes of BERT on model performance, this paper conducted multiple sets of controlled experiments on the Amazon-Google and BeerAdvo-RateBeer datasets. The experiments first compare the performance of the model when using DistilBERT, RoBERTa, and RoBERTa-Large, and the results are shown in Fig. 4.

Based on Fig. 4, it can be observed that increasing the size of BERT can improve model performance to a certain extent. This is likely because larger BERT models can more accurately capture contextual information. To achieve optimal performance, this paper chooses to conduct subsequent ablation experiments based on RoBERTa-Large. For the ablation experiments on contextual information, the following experimental settings are adopted in CSGAT: (1) CSGATnon-Attribute, which removes attribute context; (2) CSGATnon-Context, which does not use any context. The experimental results are shown in Table 4.

It can be seen that in Amazon-Google, the performance of CSGATnon-Attribute drops by 5.21%, and CSGATnon-Context drops by 10.92%. While in BeerAdvo-RateBeer, the performance decline of the two variant models of CSGAT is more significant, with drops of 9.82% and 17.31% respectively. This is because the BeerAdvo-RateBeer dataset has a smaller size, and the model can only achieve better performance by fully utilizing contextual information.

Removing attribute-level contextual information (CSGATnon-Attribute) results in a significant drop in performance on both datasets. On the Amazon-Google dataset, the F1-score decreases by 5.21%, while on the BeerAdvo-RateBeer dataset, it decreases by 9.82%. This indicates that attribute-level context plays a crucial role in entity resolution, particularly in distinguishing between entities with similar attributes.

Removing all contextual information (CSGATnon-Context) leads to an even more significant performance drop. On the Amazon-Google dataset, the F1-score decreases by 10.92%, while on the BeerAdvo-RateBeer dataset, it decreases by 17.31%. This highlights the importance of token-level contextual semantics in capturing fine-grained relationships between tokens and attributes.

The performance drop is more pronounced on the BeerAdvo-RateBeer dataset, which has a smaller size and less noise. This suggests that contextual information is particularly critical in scenarios where the dataset is smaller and requires more precise semantic discrimination.

Discussion

Comparative analysis with traditional models

CSGAT addresses the limitation of homogeneous node processing in traditional GCN/GAT models through its hierarchical heterogeneous graph structure. Conventional methods treat tokens, attributes, and entities as homogeneous nodes during aggregation, leading to dilution of crucial information. CSGAT utilizes pre-trained models to obtain initial embeddings for tokens and attributes. By leveraging BERT to extract contextual semantic information for tokens, the initial embeddings are fine-tuned to obtain richer semantic representations. By distinguishing between the semantic information at the token and attribute levels, CSGAT completes the entity resolution task at a fine-grained level, overcoming the limitation of traditional deep learning models that cannot distinguish the semantics of different types of nodes. This architecture enables the model to dynamically allocate weights, significantly enhancing its fine-grained discriminative capability.

Computational complexity analysis

The computational complexity of CSGAT primarily stems from its hierarchical graph attention mechanism, involving dual-layer interactions and dynamic weight computation, as well as the generation of difference matrices. This requires calculating interactive differences across all token pairs for each entity pair. To enhance discriminative accuracy, the model employs full token-level interactive comparison. Although this approach captures subtle semantic differences by preserving comprehensive token-level interaction information, it inevitably introduces a massive number of matching combinations.

During the token embedding, token comparison, and attribute aggregation stages, computational complexity grows quadratically with the number of tokens. When attribute descriptions are lengthy, Token Embedding and Token Comparison become the primary bottlenecks. In entity alignment tasks, if the number of candidate pairs is substantial, the Token Comparison stage struggles to scale efficiently. To mitigate computational complexity during long-text preprocessing and candidate pair screening, attribute text can be truncated or summarized to limit the number of tokens, while pre-filtering operations can reduce the number of candidate pairs.

Conclusion and future work

By incorporating contextual embeddings, this paper enriches the semantic information of entities, improving the precision and recall rates of the model. However, the recall rate on simpler datasets still has room for improvement. In particular, the preprocessing step of entity resolution, such as blocking, can be beneficial. By screening entities through simple comparisons, the workload of matching can be reduced while potentially increasing the probability of incorrect matches. Future research will explore incorporating the blocking preprocessing step into the model. Currently, deep learning has not been applied to the blocking task, which represents a promising area for further investigation.

The experimental results demonstrate that CSGAT achieves state-of-the-art performance on both datasets, outperforming traditional machine learning models and deep learning models. Compared to the latest Transformer-based models, CSGAT shows competitive performance, particularly on the BeerAdvo-RateBeer dataset, where it achieves the highest F1-score. This indicates that CSGAT’s ability to leverage contextual semantics and graph attention mechanisms provides a significant advantage in entity resolution tasks.

Data availability

The authors confirm that the data and code supporting the findings of this study are available within the article. Code is available at https://github.com/xhtech2024/csgat. The authors confirm that the materials supporting the findings of this study are available within the article

References

Saputra, F. The role of human resources, hardware, and databases in mass media companies. Int. J. Adv. Multidiscip. 1(1), 47–55 (2022).

Li Manni, G. et al. The OpenMolcas Web: A community-driven approach to advancing computational chemistry. J. Chem. Theory Comput. 19(20), 6933–6991 (2023).

Suchanek, F. M., Alam, M., Bonald, T. et al. Yago 4.5: A large and clean knowledge base with a rich taxonomy. In Proceedings of the 47th International ACM SIGIR Conference on Research and Development in Information Retrieval 131–140 (2024).

Tu, J., Fan, J., Tang, N. et al. Domain adaptation for deep entity resolution. In Proceedings of the 2022 International Conference on Management of Data 443–457 (2022).

Li, B., Miao, Y., Wang, Y. et al. Improving the efficiency and effectiveness for BERT-based entity resolution. In Proceedings of the AAAI Conference on Artificial Intelligence Vol. 35, 13226–13233 (2021).

Fu, C., Han, X., He, J. et al. Hierarchical matching network for heterogeneous entity resolution. In Proceedings of the Twenty-Ninth International Conference on International Joint Conferences on Artificial Intelligence 3665–3671 (2021).

Li, Y. et al. Deep entity matching: Challenges and opportunities. J. Data Inf. Qual. JDIQ 13(1), 1–17 (2021).

Peters, M., Neumann, M., Iyyer, M. et al. Deep contextualized word representations.

Wu, W., Li, Z., Gu, Y. et al. Draganything: Motion control for anything using entity representation. In European Conference on Computer Vision. Cham: Springer Nature Switzerland, 2024 331–348.

Simonini, G. et al. Entity resolution on-demand. Proc. VLDB Endow. 15(7), 1506–1518 (2022).

Genossar, B., Shraga, R. & Gal, A. FlexER: Flexible entity resolution for multiple intents. Proc. ACM Manag. Data 1(1), 1–27 (2023).

Barlaug, N. & Gulla, J. A. Neural networks for entity matching: A survey. ACM Trans. Knowl. Discov. Data TKDD 15(3), 1–37 (2021).

Qin, X., Chai, C., Tang, N. et al. Synthesizing privacy preserving entity resolution datasets. In 2022 IEEE 38th International Conference on Data Engineering (ICDE) 2359–2371 (IEEE, 2022).

Tu, J. et al. Dader: Hands-off entity resolution with domain adaptation. Proc. VLDB Endow. 15(12), 3666–3669 (2022).

Ezzaim, A. et al. AI-based learning style detection in adaptive learning systems: A systematic literature review. J. Comput. Educ. https://doi.org/10.1007/s40692-024-00328-9 (2024).

Konda, P. et al. Magellan: Toward building entity matching management systems. Proc. VLDB Endow. 9(12), 1197–1208 (2016).

Wu, S. et al. Blocker and matcher can mutually benefit: A co-learning framework for low-resource entity resolution. Proc. VLDB Endow. 17(3), 2292–2304 (2023).

Wang, R. & Zhang, Y. Pre-trained language models for entity blocking: a reproducibility study. In Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, 2024 Vol. 1, 8720–8730.

Guo, Y., Chen, L., Zhou, Z. et al. CampER: An effective framework for privacy-aware deep entity resolution. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining 626–637 (2023).

Zeakis, A. et al. An in-depth analysis of pre-trained embeddings for entity resolution. VLDB J. 34(1), 1–27 (2025).

Nananukul, N., Sisaengsuwanchai, K. & Kejriwal, M. Cost-efficient prompt engineering for unsupervised entity resolution in the product matching domain. Discov. Artif. Intell. 4(1), 56 (2024).

Neuhof, F. et al. Open benchmark for filtering techniques in entity resolution. VLDB J. 33(5), 1671–1696 (2024).

Low, J., Fung, B. & Xiong, P. Better entity matching with transformers through ensembles. Knowl. Based Syst. 293, 1–11 (2024).

Ding, H. et al. SETEM: Self-ensemble training with pre-trained language models for entity matching. Knowl. Based Syst. 293, 111708–111718 (2024).

Dou, W., Shen, D., Zhou, X. et al. Soft target-enhanced matching framework for deep entity matching. In Proceedings of the Thirty-Seventh AAAI Conference on Artificial Intelligence and Thirty-Fifth Conference on Innovative Applications of Artificial Intelligence and Thirteenth Symposium on Educational Advances in Artificial Intelligence, 2023 Vol. 475, 4259–4266.

Wang, X., He, X., Cao, Y. et al. KGAT: Knowledge graph attention network for recommendation. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 2019 950–958.

Zhu, R., Ma, M. & Wang, P. RAGA: Relation-aware graph attention networks for global entity alignment. In Proceedings of the Advances in Knowledge Discovery and Data Mining: 25th Pacific-Asia Conference, 2021 501–513.

Bienvenu, M., Cima, G. & Gutiérrez-Basulto. V. LACE: A logical approach to collective entity resolution. In Proceedings of the 41st ACM SIGMOD-SIGACT-SIGAI Symposium on Principles of Database Systems 379–391 (2022).

Ashish, V., Noam, S., Niki, P. et al. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Red Hook, 2017 6000–6010.

Miao, Z., Li, Y. & Wang, X. Rotom: A meta-learned data augmentation framework for entity matching, data cleaning, text classification, and beyond. In Proceedings of the 2021 International Conference on Management of Data, 2021 1303–1316.

Gazzarri, L. & Herschel, M. Progressive entity resolution over incremental data. In EDBT 80–91 (2023).

Marchant, N. G., Rubinstein, B. I. P. & Steorts, R. C. Bayesian graphical entity resolution using exchangeable random partition priors. J. Surv. Stat. Methodol. 11(3), 569–596 (2023).

Rumelhart, D., Hinton, G. & Williams, R. Learning representations by back-propagating errors. Neurocomputing: Foundations of Research 696–699 (1988).

Mikolov, T., Sutskever, I., Chen, K. et al. Distributed representations of words and phrases and their compositionality. In Proceedings of the 26th International Conference on Neural Information Processing Systems, 2013 Vol. 2, 3111–3119.

Pennington, J., Socher, R., Manning, C. GloVe: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), 2014 1532–1543.

Nie, T. et al. Fine-grained tasks for crowdsourced entity resolution. Appl. Sci. 15(1), 4 (2024).

Han, Y. & Li, C. Entity matching by pool-based active learning. Electronics 13(3), 559 (2024).

Paganelli, M., Buono, F., Guerra, F. et al. Automated machine learning for entity matching tasks. In Proceedings of the 24th International Conference on Extending Database Technology (EDBT), 2021 325–330.

Joulin, A., Grave, E., Bojanowski, P. et al. Bag of tricks for efficient text classification. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics, Valencia, Spain, 2017 Vol. 2, 427–431.

Author information

Authors and Affiliations

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by S.F., H.S. The first draft of the manuscript was written by X.L., J.Y. All authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Li, X., Fan, S., Yao, J. et al. Contextual semantics graph attention network model for entity resolution. Sci Rep 15, 27093 (2025). https://doi.org/10.1038/s41598-025-11932-9

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-11932-9