Abstract

In the pathological diagnosis of colorectal cancer, precise segmentation of glandular and cellular contours is the foundation for accurate clinical diagnosis. However, this task is challenging because of nuclear staining heterogeneity, variation in nuclear size, boundary overlap, and nuclear clustering. With the continued advancement of deep learning, particularly encoder-decoder architectures, and the emergence of various high-performance functional modules, collaborative multi-module fusion has become an effective way to improve segmentation performance. To this end, this study proposes RPAU-Net++, which integrates a ResNet-50 encoder (R), the Joint Pyramid Fusion Module (JPFM, P), and the Convolutional Block Attention Module (CBAM, A) into the UNet++ framework, forming a multi-module-enhanced segmentation architecture. Specifically, ResNet-50 mitigates gradient vanishing and degradation in deep network training through residual skip connections, improving convergence stability and the depth of feature representation. JPFM progressively fuses cross-layer features through a multi-scale feature pyramid, strengthening the encoding of complex tissue structures and fine boundary information. CBAM adaptively weights features in both the spatial and channel dimensions, focusing on target regions while suppressing irrelevant background noise, which improves feature discriminability. Comparative experiments on the GlaS and CoNIC colorectal cancer pathology datasets, as well as the more challenging PanNuke dataset, show that RPAU-Net++ significantly outperforms mainstream models on key segmentation metrics such as IoU and Dice, providing a more accurate solution for colorectal cancer pathological image segmentation.


Introduction

Cancer remains one of the most severe threats to human health. According to the 2022 global cancer report released by the World Health Organization’s International Agency for Research on Cancer (IARC) in February 2024, colorectal cancer has emerged as one of the top three most prevalent cancer types worldwide, ranking third in terms of new cases and second in cancer-related deaths1. These statistics underscore the urgency of colorectal cancer prevention and control, where early diagnosis is critical for improving patient prognosis. The five-year survival rate exceeds 90% for patients diagnosed and treated at an early stage, yet it plummets to less than 14% if cancer is detected only after metastasis2. Therefore, developing efficient and accurate pathological image segmentation techniques for colorectal cancer holds significant clinical value in enhancing diagnostic precision.

Histopathological examination remains the gold standard for cancer diagnosis, and the widespread adoption of whole-slide imaging (WSI) has created opportunities for computer vision (CV) techniques in this domain3. CV-based approaches not only segment tumor from non-tumor tissue but also enable instance-level analysis of fine-grained structures such as cells and glands, providing quantitative support for pathological assessment4. However, colorectal cancer pathology images, like most histopathological images, pose challenges distinct from natural image segmentation, including: (1) morphological complexity: glandular structures exhibit multi-scale continuity, ranging from micron-level nuclei to macroscopic glandular tubules; (2) boundary ambiguity: hematoxylin and eosin (H&E) staining yields low contrast, and cells frequently overlap; and (3) regional heterogeneity: features are unevenly distributed between tumor and stromal regions, with poorly defined gradient transitions.

Current image analysis algorithms typically use Convolutional Neural Networks (CNNs) as feature extractors for image regions. U-Net5 was a landmark, demonstrating the effectiveness of encoder-decoder architectures with skip connections for medical image segmentation. In recent years, Transformer-based models such as TransUNet6 and Swin-Unet7 have strengthened contextual modeling, yet they have notable limitations. The global self-attention of Transformers has a computational cost that grows quadratically with the number of tokens, which conflicts with the high resolution of pathological images. Fixed-window attention designs tend to disrupt the morphological continuity of glandular structures and struggle with densely distributed cell clusters. Their multi-scale fusion strategies often rely on sparse sampling, which mismatches the dense distribution of glands and leads to the loss of fine-grained structures.

UNet++8, as an improved variant of U-Net, introduces densely connected convolutional layers between the encoder and decoder, significantly enhancing feature fusion efficacy and segmentation accuracy. However, it still faces challenges in precisely segmenting complex histopathological structures and high-density features due to their inherent intricacy.

To address these challenges, we propose RPAU-Net++, an enhanced UNet++ model with multi-module optimization. Its core design principles are: adopting a ResNet-50 encoder9 with residual structures to mitigate gradient degradation and ensure efficient feature extraction from complex pathological patterns; introducing the Joint Pyramid Fusion Module (JPFM) to capture multi-scale glandular structures precisely through dense dilated convolutions and feature alignment strategies; and integrating the Convolutional Block Attention Module (CBAM) to suppress background interference and enhance target-region features through combined channel and spatial attention. The contributions of this work are summarized as follows:

- Hierarchical Modular Synergy Architecture: We propose a progressive optimization pathway of “robust feature extraction, fine-scale fusion, precise region focusing” by systematically integrating ResNet-50 residual learning, JPFM dense multi-scale fusion, and CBAM dual-perspective attention, yielding a dedicated segmentation model for colorectal cancer pathology images.

- Precise Multi-Scale Feature Alignment: To address the multi-scale continuity of glandular structures, JPFM builds a feature pyramid from dense dilated convolutions (d=1,2,4,8), avoiding the loss of overlapping fine primitives caused by the sparse sampling of traditional ASPP. This enables full-scale structural capture from nuclei to glandular tubules.

- Lightweight Attention Mechanism: To handle boundary ambiguity and background interference in pathological images, CBAM uses channel attention to select critical feature channels and spatial attention to focus on target regions. This dual-perspective design suppresses irrelevant background at a computational cost far lower than that of Transformer global attention, making it better suited to real-time processing of high-resolution pathological images.

- Comprehensive Multi-Dataset Validation: Extensive experiments on two public colorectal cancer datasets (GlaS and CoNIC), with further validation on the PanNuke dataset, which covers more complex histological scenarios and multi-organ pathology images, demonstrate the model's robust recognition of intricate pathological structures. The results show consistent performance improvements over state-of-the-art methods.

Related work

As precision demands in medical image segmentation have risen, traditional segmentation methods, such as intensity-, boundary-, or region-based approaches, have proven inadequate for the complex morphological variations and feature heterogeneity of pathological images. These limitations have driven researchers toward deep learning, which has become the dominant paradigm in medical image segmentation owing to its superior feature extraction and detail preservation10. Among deep learning methods, U-Net and its variants laid the foundation for precise segmentation through their encoder-decoder architecture and skip connections. Current research focuses on four directions: architectural optimization, attention mechanisms, multi-scale feature fusion, and Transformer integration.

In the realm of U-Net architectural improvements, researchers have enhanced performance by strengthening feature extraction and fusion. Lu et al.11 developed WBC-Net, which integrates convolutional blocks with residual blocks and uses multi-scale skip paths to aggregate features, offering a way to extract features from complex pathological structures. Going beyond block integration, Kiran et al.12 proposed DenseRes-UNet, which introduces a new connectivity pattern to reduce the semantic gap between the encoder and decoder. This design is particularly effective for segmenting clustered nuclei, closely matching the requirements of dense cell cluster segmentation in colorectal cancer. Focusing on multi-scale information flow, Huang et al.13 proposed UNet 3+, whose full-scale skip connections improve segmentation accuracy and provide a reference for multi-scale feature integration. Complementing these architectural refinements with systematic framework design, Isensee et al.14 introduced the nnU-Net framework, which systematically unifies data preprocessing and training strategies and performs exceptionally well across cross-modality medical image segmentation tasks.

Attention mechanisms have become a transformative approach to boundary ambiguity and background interference. Building on foundational work, Ahmed et al.15 developed the Dual Attention U-Net, which combines a ResNet encoder with attention gates to amplify target-region weights while suppressing background noise, performing strongly in cancerous region segmentation. Extending this paradigm, Li et al.16 proposed Residual-Attention UNet++, whose attention scheme substantially improved nuclear segmentation by dynamically suppressing irrelevant backgrounds and enhancing foreground features, confirming the efficacy of attention mechanisms for fine structure and boundary detection. Later work refined this approach further: DCSSGA-UNet17 improved segmentation accuracy by integrating a DenseNet201 encoder with concurrent channel-spatial attention modules, while MAGRes-UNet18 optimized feature extraction through a multi-attention gating mechanism.

Multi-scale feature fusion is critical for handling the continuous scale variation of glandular structures. The seminal DeepLabV3+19 architecture, building on Spatial Pyramid Pooling (SPP), uses the Atrous Spatial Pyramid Pooling (ASPP) module, an Inception-style structure of parallel atrous convolutions with different dilation rates, to markedly improve multi-scale context capture. In parallel, Yao et al.20 proposed PointNu-Net, whose Joint Pyramid Fusion Module combines dense dilated convolutions with feature alignment strategies to achieve superior multi-scale integration, capturing continuous structures from nuclei to glandular tubules. This work provides the direct methodological reference for our application to colorectal cancer histopathology. By contrast, the attribute-guided spatial pyramid pooling with multi-edge output fusion of Lan et al.21, while effective for low-contrast cellular images, relies on a sparse dilation design that struggles with densely overlapping primitives, which underscores the need for the dense multi-scale fusion strategy used in our work.

Although fusing Transformers with U-Net architectures has expanded contextual modeling, significant bottlenecks remain in pathological image segmentation. Pioneering work by Chen et al.6 (TransUNet) and Cao et al.7 (Swin-Unet) demonstrated improved global feature capture with Transformer encoders; however, the computational complexity of their multi-head attention mechanisms fundamentally conflicts with the high resolution of pathological images. Among subsequent optimizations, BRAU-Net++, proposed by Lan et al.22, is innovative but still does not overcome the disruption of glandular morphological continuity caused by fixed-window attention, and its performance on dense cell cluster segmentation and complex boundary delineation remains limited. These persistent limitations motivate our decision to avoid pure Transformer architectures in favor of a lightweight convolution-plus-attention hybrid strategy.

Recent specialized architectural innovations have yielded diverse solutions. Agnes et al.23 significantly improved pulmonary nodule segmentation by integrating Haar wavelet transforms into U-Net++ architectures. Zhu et al.24 optimized 3D brain tumor segmentation with novel sparse dynamic modules. Zhang et al.25 proposed MRC-TransUNet, which replaces traditional skip connections with lightweight MR-ViT modules and reciprocal positional attention (RPA) mechanisms, outperforming existing methods on breast, brain, and lung medical image segmentation tasks. Hussain et al.26 proposed EFFResNet-ViT, which merges the strengths of CNNs and ViTs to improve both performance and model interpretability. Alam et al.27 developed an elbow fracture diagnosis system combining YOLOv8 with a multi-model strategy that achieved an accuracy of 99%, setting a new benchmark in specialized medical image analysis.

While existing studies have advanced medical image segmentation through residual structures, attention mechanisms, and multi-scale fusion strategies, they still face significant challenges with the specific demands of colorectal cancer pathological images: single-module approaches cannot simultaneously resolve multi-scale structures, boundary ambiguity, and background interference; sparse sampling strategies mismatch the dense distribution of glandular tissues, causing fine structures to be lost; and the high computational complexity of Transformer architectures limits their real-time clinical application.

The proposed RPAU-Net++ addresses these challenges through a hierarchical modular co-design: The ResNet-50 encoder with residual structures mitigates gradient degradation while ensuring efficient feature extraction of complex pathological patterns; the JPFM module with dense dilated convolutions and feature alignment strategies precisely captures multi-scale glandular structures; and the CBAM module suppresses background interference while enhancing target region features through dual channel-spatial attention. This combination avoids both the computational redundancy and structural disruption of Transformers while overcoming traditional U-Net limitations in multi-scale adaptability and target focus, ultimately achieving high-precision segmentation of complex colorectal histopathological images.

Compared with composite approaches such as Residual-Attention UNet++, our hierarchical synergy design is more than simple module stacking. First, in interaction logic, base features extracted by ResNet-50 undergo JPFM-based multi-scale alignment and fusion before CBAM enhances key regions, forming a progressive extraction-fusion-focus workflow, unlike the parallel feature processing of existing methods. Second, in adaptation to pathological images, JPFM's dense dilated convolutions (d=1,2,4,8) are tailored to the density distribution of glands, while CBAM's dual-perspective attention dynamically suppresses background interference induced by H&E staining, a level of customization absent from generic composite methods.

Methodology

This section describes the core architecture of the proposed RPAU-Net++ model and the integration of its three key components: the ResNet-50 encoder, JPFM, and CBAM. Their combination is designed to improve the model's performance in colorectal cancer pathological image segmentation, particularly in handling multi-scale features and focusing on critical regions. The ResNet-50 backbone provides robust feature extraction, JPFM enables effective multi-scale feature fusion, and CBAM refines feature representations through its dual attention mechanism, focusing on diagnostically relevant areas. Together, these components form a framework tailored to the challenges of colorectal cancer histopathological image analysis.

RPAU-Net++ architecture

The proposed RPAU-Net++ model is built on the UNet++ framework and is designed to address the complex characteristics of colorectal cancer pathological images. We systematically integrate mainstream modules into the UNet++ architecture, using ResNet-50 to resolve fundamental feature degradation, JPFM to establish cross-scale dependencies, and CBAM to achieve precise localization. This creates a progressive processing pipeline of feature extraction, multi-scale fusion, and region focusing.

As illustrated in Figure 1, which shows the core RPAU-Net++ architecture, the model uses ResNet-50 as the encoder and integrates JPFM and CBAM into the decoder path to enhance global and local feature capture. The input image first undergoes multi-level feature extraction in the ResNet-50 encoder. Following each residual block, max pooling progressively reduces the spatial dimensions while the channel depth increases. This five-stage process generates the hierarchical feature maps \(X^{0,0}, X^{1,0}, X^{2,0}, X^{3,0}, X^{4,0}\). JPFM then applies multi-scale convolutions to these feature maps to construct a feature pyramid. The processed features are upsampled to a common spatial resolution by bilinear interpolation for alignment and then combined by element-wise summation or concatenation to produce the enhanced representations \(X^{1,0*}, X^{2,0*}, X^{3,0*}, X^{4,0*}\). During decoding, the model progressively restores spatial resolution through upsampling, and each upsampling step incorporates skip connections to the corresponding encoder features to preserve original image details. Before each skip connection, CBAM reweights the features through dual channel-spatial attention, emphasizing important features and suppressing irrelevant ones, which improves segmentation quality. The final decoder output is the segmentation result.
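As a rough guide to the resolutions involved, the minimal sketch below lists the shape of each encoder stage for a hypothetical 512×512 input. It assumes the stage widths and output strides of the standard torchvision ResNet-50 (64, 256, 512, 1024, and 2048 channels at strides 2 to 32); the exact configuration of RPAU-Net++ is not stated here. In torchvision's implementation, downsampling after the stem is done by stride-2 convolutions, so the sketch only tracks the resulting shapes.

```python
# Hypothetical sketch: encoder feature-map shapes, assuming torchvision's
# standard ResNet-50 stage widths and output strides.
RESNET50_STAGES = [
    ("X^{0,0}", 64, 2),     # stem: 7x7 convolution with stride 2
    ("X^{1,0}", 256, 4),    # 3x3 max pooling + layer1
    ("X^{2,0}", 512, 8),    # layer2
    ("X^{3,0}", 1024, 16),  # layer3
    ("X^{4,0}", 2048, 32),  # layer4
]


def encoder_shapes(height, width):
    """Return (node, channels, h, w) for each encoder stage of an H x W input."""
    return [(node, c, height // s, width // s) for node, c, s in RESNET50_STAGES]


for row in encoder_shapes(512, 512):
    print(row)
```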

To describe the feature fusion in the skip connections precisely, Equations (1)-(4) define the computation of node \(X^{0,j}\) (j=1,2,3,4) in the first skip pathway. Here, \(X^{i,j}\) denotes the feature map output by the j-th convolutional block at the i-th encoder level, \(X^{1,0*}\) denotes the feature enhanced by multi-scale processing in the JPFM module, and \(X^{0,j}\) corresponds to the output of the j-th node in the skip pathway. \(H(\cdot )\) denotes a convolution followed by ReLU activation, while \(AG(\cdot )\) and \(T(\cdot )\) denote the attention mechanism and the upsampling operation, respectively. \(AG(\cdot )\) is implemented by CBAM, which weights features through sequential channel and spatial attention; \(T(\cdot )\) is bilinear-interpolation upsampling that restores feature maps to the target resolution; and \([\cdot ]\) denotes channel-wise concatenation. Figure 2 illustrates the first skip pathway of the RPAU-Net++ model.

\[ X^{0,1} = H\left(\left[AG(X^{0,0}),\ T(X^{1,0*})\right]\right) \qquad (1) \]
\[ X^{0,2} = H\left(\left[AG(X^{0,0}),\ AG(X^{0,1}),\ T(X^{1,1})\right]\right) \qquad (2) \]
\[ X^{0,3} = H\left(\left[AG(X^{0,0}),\ AG(X^{0,1}),\ AG(X^{0,2}),\ T(X^{1,2})\right]\right) \qquad (3) \]
\[ X^{0,4} = H\left(\left[AG(X^{0,0}),\ AG(X^{0,1}),\ AG(X^{0,2}),\ AG(X^{0,3}),\ T(X^{1,3})\right]\right) \qquad (4) \]

Taking Equation (1) as an example, it defines the first-level feature fusion in the skip pathway. The shallow encoder feature \(X^{0,0}\) is first processed by CBAM, \(AG(X^{0,0})\), to enhance responses in key regions, while the deep feature \(X^{1,0*}\) (multi-scale enhanced by the JPFM module) is upsampled 2\(\times\) by bilinear interpolation, \(T(X^{1,0*})\). The two results are concatenated along the channel dimension to form \([AG(X^{0,0}), T(X^{1,0*})]\), which then passes through \(H(\cdot )\) (convolution followed by ReLU) to generate the fused feature \(X^{0,1}\).

Equations (2)-(4) extend this fusion progressively, incorporating more features at each step for multi-scale information integration. Specifically, the n-th node (n=2,3,4) aggregates the attention-weighted outputs of all preceding nodes at the same level, \(\{AG(X^{0,k})\}_{k=0}^{n-1}\), together with the upsampled feature from the deeper level, \(T(X^{1,n-1})\). This dense aggregation ensures that features at all scales contribute to the final prediction.
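The wiring of Equations (1)-(4) can be sketched in NumPy as below. The sketch is illustrative only: `AG` is an identity placeholder for CBAM, `H` is reduced to a random 1×1 convolution plus ReLU, and nearest-neighbour upsampling stands in for the bilinear interpolation used by the model. All function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def AG(x):
    # Placeholder for CBAM: identity, so only the pathway wiring is shown.
    return x


def T(x):
    # 2x upsampling of a (C, H, W) map; nearest-neighbour stands in for bilinear.
    return x.repeat(2, axis=1).repeat(2, axis=2)


def H(x, out_channels):
    # Convolution + ReLU, reduced here to a random 1x1 convolution.
    w = rng.standard_normal((out_channels, x.shape[0])) * 0.1
    return np.maximum(np.einsum("oc,chw->ohw", w, x), 0.0)


def first_skip_pathway(x00, x1_nodes, out_channels=32):
    """Compute X^{0,1}..X^{0,4} following Eqs. (1)-(4).

    x1_nodes[j] holds X^{1,j}; x1_nodes[0] plays the role of the JPFM-enhanced X^{1,0*}.
    """
    level0 = [x00]
    for n in range(1, 5):
        # Attention-weighted outputs of all preceding level-0 nodes + upsampled X^{1,n-1}.
        inputs = [AG(x) for x in level0] + [T(x1_nodes[n - 1])]
        level0.append(H(np.concatenate(inputs, axis=0), out_channels))
    return level0[1:]
```

Replacing `AG` with the CBAM sketch and `T` with bilinear interpolation would bring this closer to the described model without changing the wiring.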

Residual network structure

As CNNs grow deeper, gradients propagated back through many stacked layers tend to vanish or explode, and training can degrade. ResNet addresses this by introducing residual blocks that alleviate gradient decay during backpropagation. Each residual block uses a skip connection with an identity mapping, so the input signal is transmitted directly to the block output; this prevents information loss in deep networks and improves the stability and efficiency of training. A residual network is a sequence of stacked residual units, each of which can be expressed as follows:

\[ y_{i} = x_{i} + F(x_{i}, W_{i}), \qquad x_{i+1} = L(y_{i}) \qquad (5) \]

In the i-th residual unit, \(x_{i}\) is the input, \(x_{i+1}\) is the output, \(F(\cdot )\) is the residual function with parameters \(W_{i}\), and \(L(\cdot )\) is the activation function. The input \(x_{i}\) is transformed by the residual function F, and the result is added to the original input \(x_{i}\) to give \(y_{i}\), which then passes through \(L(\cdot )\) to produce \(x_{i+1}\). The network therefore learns the residual between input and output rather than the full input-to-output mapping, which simplifies the learning task and improves training stability.
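The residual unit translates directly into code. A minimal NumPy sketch, assuming L is ReLU (as in ResNet) and using a hypothetical single linear layer in place of the real convolutional residual branch F:

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def residual_unit(x, residual_fn, weights):
    """One residual unit: y_i = x_i + F(x_i, W_i), then x_{i+1} = L(y_i) with L = ReLU."""
    y = x + residual_fn(x, weights)   # identity shortcut plus residual branch
    return relu(y)


def linear_branch(x, w):
    # Toy residual function F: one linear map followed by ReLU (illustrative only).
    return relu(w @ x)


x = np.array([1.0, -2.0, 3.0])
# With zero weights, F contributes nothing and the unit reduces to L(x_i).
print(residual_unit(x, linear_branch, np.zeros((3, 3))))  # [1. 0. 3.]
```

The zero-weight case shows why residual units are easy to optimize: an identity-like mapping is obtained simply by driving the branch weights toward zero.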

Figure 3 compares a traditional convolutional block with residual blocks. Figure 3(a) shows a typical VGG block: two convolutional layers, each followed by Batch Normalization (to improve training stability and speed) and a ReLU activation. As network depth increases, stacks of VGG blocks are prone to gradient vanishing or explosion, which makes training difficult. Figure 3(b) shows the improved block with a residual connection; the added skip connection mitigates gradient-related issues during backpropagation and enables more stable training. To reduce computational cost, ResNet-50 uses a bottleneck residual module, shown in Figure 3(c), which replaces the two \(3\times 3\) convolutions with a \(1\times 1+3\times 3+1\times 1\) sequence: the first \(1\times 1\) convolution reduces the channel dimension and the last restores it.
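The saving from the bottleneck design can be checked with simple arithmetic. The sketch below counts convolution weights (biases omitted) for two plain 3×3 layers on 256 channels versus a 1×1, 3×3, 1×1 bottleneck that reduces to 64 channels, the widths of ResNet-50's first stage; the channel widths are chosen for illustration.

```python
def conv_weights(k, c_in, c_out):
    """Number of weights in a k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


# Two stacked 3x3 convolutions operating directly on 256 channels.
plain = 2 * conv_weights(3, 256, 256)

# Bottleneck: 1x1 reduces 256 -> 64, 3x3 works at 64, 1x1 restores 64 -> 256.
bottleneck = (conv_weights(1, 256, 64)
              + conv_weights(3, 64, 64)
              + conv_weights(1, 64, 256))

print(plain, bottleneck)  # 1179648 69632
```

The bottleneck uses roughly 6% of the weights of the plain pair while keeping the same 256-channel input and output, which is what makes a 50-layer network affordable.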

By integrating the ResNet-50 residual mechanism, RPAU-Net++ learns features of the input data more effectively and avoids the gradient vanishing and explosion commonly associated with increased network depth. ResNet-50's residual design, robust generalization, and bottleneck architecture give it clear advantages for analyzing complex histopathological images, and combined with UNet++ it supports stronger multi-scale feature fusion, improving overall model performance.

Joint Pyramid Fusion Module

In image segmentation, pooling layers reduce feature-map dimensionality but inevitably discard spatial information about small targets. This is particularly problematic in colorectal cancer pathological images, where the location and morphology of critical structures such as micron-level nuclei and glandular buds are easily degraded by pooling. The DeepLab series addressed this limitation with dilated convolutions, which insert gaps (set by the dilation rate) between kernel elements to enlarge the receptive field without reducing resolution. A single \(3\times 3\) kernel with dilation rate d covers a \((2d+1)\times (2d+1)\) region; the receptive fields shown in Figure 4, \(3\times 3\) for d=1 (standard convolution), \(7\times 7\) for d=2, and \(15\times 15\) for d=4, correspond to \(3\times 3\) dilated convolutions stacked in sequence. By adjusting the dilation rate, the model can capture multi-scale contextual information while remaining computationally efficient.
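The receptive-field arithmetic above can be reproduced with two small helpers (hypothetical names): one for the extent of a single dilated kernel and one for stride-1 dilated convolutions stacked in sequence.

```python
def dilated_kernel_extent(k, d):
    """Spatial extent covered by a single k x k kernel with dilation rate d."""
    return d * (k - 1) + 1


def stacked_receptive_field(k, dilations):
    """Receptive field of stride-1 k x k dilated convolutions applied in sequence."""
    rf = 1
    for d in dilations:
        rf += d * (k - 1)  # each layer widens the field by d * (k - 1) pixels
    return rf


print([dilated_kernel_extent(3, d) for d in (1, 2, 4, 8)])            # [3, 5, 9, 17]
print([stacked_receptive_field(3, (1, 2, 4)[:n]) for n in (1, 2, 3)])  # [3, 7, 15]
```

Because JPFM's branches run in parallel rather than in sequence, each branch covers the single-kernel extent: 3, 5, 9, and 17 pixels for d = 1, 2, 4, 8.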

However, the traditional ASPP module has limitations when processing the dense, multi-scale, continuous structures of colorectal cancer pathological images. To address this, we adopt the Joint Pyramid Fusion Module (JPFM)20, an ASPP-derived multi-scale fusion module whose architecture is illustrated in Figure 5. Its core design is expressed by Equation (6):

\[ \text{JPFM}(X) = \text{Concat}\left(\left\{ \sigma\left(\text{BN}\left(\text{Conv}_{3\times 3}^{d}(X)\right)\right) \right\}_{d \in \{1,2,4,8\}}\right) \qquad (6) \]

where \(X \in \mathbb {R}^{C\times H\times W}\) represents the input feature map (with C channels and \(H\times W\) resolution), \(\text {Conv}_{3\times 3}^d\) denotes a \(3\times 3\) dilated convolution with dilation rate d, BN represents batch normalization, \(\sigma\) is the ReLU activation function, and Concat indicates channel-wise concatenation (each branch outputs K channels, resulting in an output dimension of 4K).

Compared with traditional ASPP, JPFM's structural optimization has two aspects. First, it eliminates the global average pooling branch. ASPP captures global context through global average pooling followed by a \(1\times 1\) convolution, but this operation compresses the spatial dimensions of the feature map and loses the precise location of critical structures such as micron-level nuclei and glandular buds. JPFM retains only the four dilated convolutional branches and preserves the input resolution through padding, so local details are not degraded. Second, JPFM uses dense dilation rates. ASPP's dilation rates are sparse and widely spaced (e.g., 6, 12, and 18 in DeepLabV3+), whereas JPFM's rates double at each step (1, 2, 4, 8), providing continuous scale coverage that matches the correlated multi-scale structures of glandular tissue. This design better handles the overlapping and entangled features of densely packed primitives in complex pathological scenarios.
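Equation (6) can be sketched in NumPy as four parallel 3×3 dilated convolutions, each with zero padding equal to its dilation rate so the H×W resolution is preserved, followed by normalization, ReLU, and channel concatenation. This is a simplified, hypothetical implementation: batch normalization is replaced by per-channel normalization over spatial positions (as for a batch of one, without learned scale and shift), and the feature alignment steps of the full module are omitted.

```python
import numpy as np


def dilated_conv3x3(x, w, d):
    """3x3 dilated convolution, stride 1, zero padding d (keeps H x W).

    x: (C, H, W) input; w: (K, C, 3, 3) weights; returns (K, H, W).
    """
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (d, d), (d, d)))
    out = np.zeros((w.shape[0], h, wd))
    for i in range(3):
        for j in range(3):
            patch = xp[:, i * d:i * d + h, j * d:j * d + wd]  # kernel tap offset by d
            out += np.einsum("kc,chw->khw", w[:, :, i, j], patch)
    return out


def normalize(x, eps=1e-5):
    # Stand-in for BN: per-channel statistics over spatial positions only.
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def jpfm(x, weights, dilations=(1, 2, 4, 8)):
    """Eq. (6): concatenate ReLU(Norm(Conv3x3^d(X))) over the four branches."""
    branches = [np.maximum(normalize(dilated_conv3x3(x, w, d)), 0.0)
                for w, d in zip(weights, dilations)]
    return np.concatenate(branches, axis=0)  # (4K, H, W)
```

With K output channels per branch, the result has 4K channels at the input resolution, matching the dimensions stated for Equation (6).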

To validate this design quantitatively, we conducted ablation experiments on our datasets, evaluating both the improvement in fine-structure preservation from removing the average pooling branch and the performance difference between dense and sparse dilation rates in multi-scale feature fusion. The results demonstrate the superiority of JPFM for colorectal cancer pathological image segmentation.

Convolutional block attention modules

Attention mechanisms have been widely adopted in multimodal tasks, yet traditional approaches in convolutional networks primarily focus on channel-domain analysis. The CBAM innovatively introduces a dual-dimensional attention mechanism combining both channel and spatial attention. Employing a sequential architecture that processes channel attention followed by spatial attention, CBAM simultaneously captures channel-wise dependencies and identifies spatially critical regions, as illustrated in Figure 6.

The first component is the channel attention mechanism, which applies global average pooling and global max pooling to the input feature map F to obtain \(T_{\text {avg}}^c\) and \(T_{\text {max}}^c\), respectively, each reshaped to \(1\times 1\times \text {c}\). Both descriptors pass through a shared multi-layer perceptron (MLP) that first reduces the channel dimension and then restores it to c. The two outputs are summed and passed through a sigmoid function to produce the channel attention weights \(M_c\), which are multiplied with the original feature map to give the channel-refined features, where \(\otimes\) denotes element-wise multiplication with broadcasting:

\[ M_c = \sigma\left(\text{MLP}(T_{\text{avg}}^c) + \text{MLP}(T_{\text{max}}^c)\right), \qquad F' = M_c \otimes F \]

The second component is the spatial attention mechanism. At each spatial position of its input feature map (the output of the channel attention stage, denoted F′), it applies average pooling and max pooling along the channel axis, yielding \(T_{\text {avg}}^s\) and \(T_{\text {max}}^s\). These two maps are concatenated into a \(2\times \text {H}\times \text {W}\) tensor, which a 2D convolution layer reduces to a single channel. A sigmoid function converts the result into spatial attention weights \(M_s\), which are multiplied with the feature map:

\[ M_s = \sigma\left(\text{Conv}\left([T_{\text{avg}}^s, T_{\text{max}}^s]\right)\right), \qquad F'' = M_s \otimes F' \]

The channel attention mechanism models inter-channel semantic relationships through global pooling and shared MLPs, enabling dynamic weight allocation for discriminative feature channels such as gland contours and nuclear textures. The spatial attention mechanism, employing channel-wise pooling and convolutional operations, selectively enhances spatial responses in critical regions including tumor infiltration zones and gland boundaries. These components operate through a cascaded “channel-filtering-to-spatial-enhancement” cooperative mechanism, precisely coupling fine-grained pathological features with multi-scale contextual relationships.

The hierarchical decoding pathway of RPAU-Net++ is inherently compatible with CBAM's dual-perspective attention. In shallow decoding stages, channel attention preferentially activates morphology-relevant feature channels (e.g., edges and textures) through dynamic channel weighting, refining fine structural features. In deep decoding stages, spatial attention reinforces the spatial saliency of pathological structures to localize multi-scale pathological entities while suppressing stromal background interference. This correspondence between channel-spatial attention and shallow-deep decoding enables stage-adaptive feature enhancement throughout decoding, supporting high-precision colorectal tissue segmentation.

To analyze the synergy between the two attention dimensions, our ablation study introduces two variants: Channel Attention (CA), with the spatial branch removed, and Spatial Attention (SA), with the channel branch removed. Keeping the rest of the network architecture identical, we compare each variant against the complete CBAM to isolate the functional contribution of each attention dimension.
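A minimal NumPy sketch of CBAM as described above: channel attention with a shared two-layer MLP (reduction ratio r), followed by spatial attention with a single-output convolution over the concatenated average- and max-pooled maps. Setting `use_spatial=False` gives the CA variant and `use_channel=False` the SA variant of the ablation. Weight shapes, kernel size, and function names are illustrative assumptions.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(f, w1, w2):
    """F' = M_c * F with M_c = sigmoid(MLP(T_avg^c) + MLP(T_max^c)).

    f: (C, H, W); w1: (C // r, C) and w2: (C, C // r) form the shared MLP.
    """
    def shared_mlp(t):
        return w2 @ np.maximum(w1 @ t, 0.0)  # reduce, ReLU, restore to C

    t_avg = f.mean(axis=(1, 2))              # (C,) global average pooling
    t_max = f.max(axis=(1, 2))               # (C,) global max pooling
    m_c = sigmoid(shared_mlp(t_avg) + shared_mlp(t_max))
    return f * m_c[:, None, None]


def spatial_attention(f, kernel):
    """F'' = M_s * F with M_s = sigmoid(Conv([T_avg^s, T_max^s])).

    kernel: (2, k, k) weights of a single-output convolution, k odd.
    """
    pooled = np.stack([f.mean(axis=0), f.max(axis=0)])  # (2, H, W), pooled over channels
    k = kernel.shape[-1]
    p = k // 2
    padded = np.pad(pooled, ((0, 0), (p, p), (p, p)))
    h, w = pooled.shape[1:]
    logits = np.zeros((h, w))
    for i in range(k):
        for j in range(k):
            logits += np.einsum("c,chw->hw", kernel[:, i, j], padded[:, i:i + h, j:j + w])
    return f * sigmoid(logits)[None, :, :]


def cbam(f, w1, w2, kernel, use_channel=True, use_spatial=True):
    """Sequential channel-then-spatial attention; the flags give the CA/SA variants."""
    if use_channel:
        f = channel_attention(f, w1, w2)
    if use_spatial:
        f = spatial_attention(f, kernel)
    return f
```

With all weights at zero, each stage multiplies the features by sigmoid(0) = 0.5, which is a quick sanity check that the two stages are applied in sequence.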

Deep supervision

Deep supervision establishes a multi-stage error backpropagation mechanism by attaching auxiliary losses to multiple intermediate network layers. It serves two objectives: mitigating gradient vanishing in deep networks to accelerate convergence, and guiding the model to learn hierarchical features at multiple semantic levels for improved segmentation accuracy. Adapting the DSN framework of Lee et al.28, we implement this strategy in the hierarchical decoder of RPAU-Net++ by taking outputs from multiple nodes along the decoding path (\(X^{0,1}, X^{0,2}, X^{0,3}, X^{0,4}\)); each node corresponds to a distinct decoding stage and independently produces a segmentation result on which a loss is computed.

For each output node, we employ a combined loss function integrating binary cross-entropy (BCE) and the Dice coefficient to jointly constrain pixel-wise classification errors and region overlap:

where \(Y_b\) and \(\hat{Y_b}\) denote the flattened prediction probabilities and ground-truth labels of the b-th image, respectively, and N denotes the batch size. The BCE term penalizes pixel-level classification errors, while the Dice term emphasizes foreground region overlap; together they optimize the segmentation quality of pathological structures.
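The combined objective and its application at the four output nodes \(X^{0,1}\) to \(X^{0,4}\) can be sketched in plain Python as follows. The text does not fix the weighting, so the 0.5 weight on BCE (mirroring the 1/2 factor on the cross-entropy term in the original UNet++ loss), the Dice smoothing constant, the equal node weights and all function names are assumptions. Inputs are flattened per-image probability and label lists.

```python
import math


def bce(pred, target, eps=1e-7):
    """Mean pixel-wise binary cross-entropy for one flattened image."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return -total / len(pred)


def soft_dice(pred, target, smooth=1.0):
    """Soft Dice coefficient between predicted probabilities and binary labels."""
    inter = sum(p * t for p, t in zip(pred, target))
    return (2.0 * inter + smooth) / (sum(pred) + sum(target) + smooth)


def bce_dice_loss(batch_pred, batch_target, bce_weight=0.5):
    """Batch average (over N images) of bce_weight * BCE + (1 - Dice)."""
    n = len(batch_pred)
    return sum(bce_weight * bce(p, t) + (1.0 - soft_dice(p, t))
               for p, t in zip(batch_pred, batch_target)) / n


def deep_supervision_loss(node_preds, batch_target, node_weights=None):
    """node_preds: one batch of predictions per output node (X^{0,1} ... X^{0,4})."""
    k = len(node_preds)
    weights = node_weights or [1.0 / k] * k  # equal weighting is an assumption
    return sum(w * bce_dice_loss(p, batch_target) for w, p in zip(weights, node_preds))


# Toy batch: N = 2 flattened 4-pixel images (values are illustrative only).
target = [[1, 1, 0, 0], [0, 1, 1, 0]]
good = [[0.9, 0.8, 0.1, 0.2], [0.1, 0.9, 0.7, 0.1]]
poor = [[0.4, 0.5, 0.6, 0.5], [0.6, 0.4, 0.5, 0.5]]
loss = deep_supervision_loss([poor, good, good, good], target)
```

A poorly trained shallow node raises the total loss even when the deeper nodes are accurate, which is how the auxiliary losses push gradient signal into the early decoding stages.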

To go beyond the conventional role of deep supervision as a purely auxiliary training signal, we construct a feature feedback mechanism that connects intermediate outputs to the final output: each intermediate node output is processed by convolution and upsampling and then concatenated with the final output of the backbone network. This design injects fine-grained edge features, local structural features, regional contextual features, and global semantic features directly into the final prediction, improving the hierarchical completeness of the segmentation results through cross-stage feature interaction. Figure 7 illustrates the workflow of this enhanced deep supervision framework.

Model pruning

Model pruning reduces computational complexity and storage overhead while maintaining performance by removing redundant branches, connections, or hierarchical structures from a neural network, which makes it suitable for resource-constrained scenarios such as edge computing. RPAU-Net++ uses a hierarchical decoding architecture in which paths of different depths recover multi-scale features. Because shallow decoding paths can capture local features through skip connections without relying on the global context supplied by deeper paths, path-level pruning is feasible: deep decoding paths that are not needed for the current task can be removed, and only the core branches are retained for inference.

The optimization rationale has two aspects: (1) removing deep paths directly reduces computational load and memory usage, lowering model complexity; (2) because shallow paths can operate independently, inference is no longer bottlenecked by dependencies on deep paths, which improves computational efficiency for real-time segmentation. Figure 8 shows the structural differences between pruning strategies, where \(L_i\) denotes the pruned model that retains only the i-th and shallower decoding paths for output prediction. For instance, \(L_1\) retains only the shallowest path (predictions from node \(X^{0,1}\)) as the most aggressive pruning scheme, while \(L_4\) preserves all decoding paths and fuses the outputs of the multi-scale branches. The figure illustrates how pruning controls the structural complexity of the model.
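Path-level pruning can be expressed by indexing the nested nodes. The sketch below uses the \(X^{i,j}\) convention of the original UNet++ paper, where \(i\) is the down-sampling level and \(j\) the position along the nested skip pathway, and assumes that the pruned model \(L_d\) keeps exactly the nodes with \(i + j \le d\) and predicts from \(X^{0,d}\). Whether RPAU-Net++, with its ResNet-50 encoder, prunes exactly this node set is an assumption, and the function names are hypothetical.

```python
def kept_nodes(level, depth=4):
    """Nodes X^{i,j} retained by the pruned model L_level in a depth-4 nested U-Net.

    i: down-sampling level (0 = full resolution); j: index along the nested skip path.
    """
    if not 1 <= level <= depth:
        raise ValueError("level must be between 1 and depth")
    return [(i, j) for i in range(depth + 1) for j in range(depth + 1 - i) if i + j <= level]


def output_node(level):
    """The prediction of L_level is read from the top-row node X^{0,level}."""
    return (0, level)


for level in range(1, 5):
    nodes = kept_nodes(level)
    print(f"L{level}: {len(nodes)} nodes, output X^{{0,{level}}}")
```

Under this convention the node count grows as \((d+1)(d+2)/2\), so \(L_1\) evaluates only 3 of the 15 nodes that \(L_4\) uses.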

Experiments and results analysis

Datasets

To comprehensively evaluate the generalization capability of the model, our experiments cover three distinct scenarios: gland segmentation (GlaS), the Colon Nuclei Identification and Counting Challenge (CoNIC), and cross-organ nuclei segmentation (PanNuke). The selected datasets are described as follows:

The GlaS29 dataset comprises 165 H&E-stained colon tissue sections (\(256\times 256\) pixels) from 16 patients, containing both benign and malignant glands. The images are stored in BMP format with binary mask annotations. We followed the official split of 132 training and 33 testing images to evaluate the model's capability for single-organ, gland-level segmentation, specifically its ability to characterize colon gland morphology.

The CoNIC30 dataset contains 4,981 H&E-stained colon tissue images (\(256\times 256\) pixels) with instance segmentation maps, nuclear classification tables, and counting tables. For our single-organ, nuclei-level segmentation task, we extracted the instance segmentation maps to validate the model's ability to recognize colon nuclei, using 3,984 images for training and 997 for testing.

The PanNuke31 dataset includes 7,904 image patches (\(256\times 256\) pixels) covering 19 tissue types, including colon, with nuclei annotated into 5 categories (e.g., neoplastic, inflammatory) and provided with instance segmentation masks. We selected the colon-containing images and randomly sampled images from the other 18 tissue types to construct a cross-organ dataset of 2,770 images, primarily to examine how well the model generalizes when colon nuclei appear alongside interference from heterogeneous tissues. This dataset was split into 2,216 images for training and 554 for testing.

Parameter settings

The proposed model was built, trained, and tested with the PyTorch deep learning framework; the hardware and software environments are listed in Table 1. During training, the binary cross-entropy loss was used to compute the model loss, and the Adam optimizer was used to update the network parameters. The batch size was set to 8 and training ran for 200 epochs with the CosineAnnealingLR learning-rate scheduler. The initial learning rate was \(1\times 10^{-3}\), the minimum learning rate was \(1\times 10^{-5}\), and the momentum (Adam's first-moment coefficient \(\beta_1\)) was 0.9. After training, the model with the lowest loss was saved for segmentation testing.
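In PyTorch, these settings would correspond to something like `torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=200, eta_min=1e-5)` stepped once per epoch; the per-epoch stepping and `T_max=200` are assumptions, since the text gives only the epoch count and the learning-rate bounds. The closed-form cosine schedule that this scheduler follows can be checked with the standard library:

```python
import math


def cosine_annealing_lr(epoch, t_max=200, lr_max=1e-3, lr_min=1e-5):
    """Learning rate at a given epoch under cosine annealing (no warm restarts)."""
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * epoch / t_max))


for epoch in (0, 50, 100, 150, 200):
    print(epoch, f"{cosine_annealing_lr(epoch):.3e}")
```

Halfway through training (epoch 100) the rate sits at about \(5.05\times 10^{-4}\), the midpoint of the two bounds, and it reaches the minimum exactly at the final epoch.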

Evaluation metrics

This study employs metrics widely adopted for medical image segmentation: IoU, the Dice coefficient, Precision (Pre.), and Recall (Rec.). IoU quantifies the overlap between the predicted segmentation and the ground-truth region, serving as an indicator of segmentation accuracy. The Dice coefficient measures the similarity between the predicted and ground-truth labels. Precision evaluates the proportion of correctly predicted positive samples among all predicted positives, reflecting the model's predictive accuracy. Recall assesses the proportion of actual positive samples that the model correctly identifies, indicating its ability to capture positive instances. The corresponding formulas are defined as follows:

\[ \mathrm{IoU} = \frac{TP}{TP + FP + FN}, \qquad \mathrm{Dice} = \frac{2TP}{2TP + FP + FN}, \qquad \mathrm{Pre.} = \frac{TP}{TP + FP}, \qquad \mathrm{Rec.} = \frac{TP}{TP + FN} \]

where TP denotes true positives, TN true negatives, FP false positives, and FN false negatives.
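For binary masks, the four metrics reduce to pixel counts: IoU = TP/(TP+FP+FN), Dice = 2TP/(2TP+FP+FN), Precision = TP/(TP+FP) and Recall = TP/(TP+FN). A minimal sketch on flattened 0/1 masks follows; returning 1.0 when a denominator is zero (e.g., both masks empty) is an assumed convention, and the text does not state whether scores are averaged per image or pooled over the test set.

```python
def confusion_counts(pred, target):
    """Pixel-level TP, FP, FN, TN for two flattened binary masks."""
    tp = sum(1 for p, t in zip(pred, target) if p and t)
    fp = sum(1 for p, t in zip(pred, target) if p and not t)
    fn = sum(1 for p, t in zip(pred, target) if t and not p)
    tn = len(pred) - tp - fp - fn
    return tp, fp, fn, tn


def ratio(num, den):
    return num / den if den else 1.0  # assumed convention for empty masks


def segmentation_metrics(pred, target):
    tp, fp, fn, _ = confusion_counts(pred, target)
    return {
        "IoU": ratio(tp, tp + fp + fn),
        "Dice": ratio(2 * tp, 2 * tp + fp + fn),
        "Pre.": ratio(tp, tp + fp),
        "Rec.": ratio(tp, tp + fn),
    }


pred = [1, 1, 0, 0, 1]
target = [1, 0, 0, 1, 1]
print(segmentation_metrics(pred, target))
```

Because Dice = 2·IoU/(1 + IoU) holds for any single mask, the two metrics rank predictions identically per image; they can only diverge once scores are averaged over many images.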

Comparison with classical algorithms

To validate the performance advantages and generalization capability of RPAU-Net++, experiments were conducted on all three datasets, forming a gradient of scenarios from single-organ structure segmentation to cross-organ generalization on homologous structures. Five representative models were selected as comparative benchmarks: the conventional U-shaped network U-Net5 and UNet++8, the baseline on which our model builds, to measure gains over classical architectures; Residual-Attention UNet++16 and DenseRes-UNet12, which employ multi-module fusion strategies, to contrast different approaches to modular co-design; and nnUNet14, a state-of-the-art mainstream model, to assess competitiveness against cutting-edge methods.

Experimental results on GlaS dataset

RPAU-Net++ shows superior overall performance on the GlaS dataset compared with the baseline models. As detailed in Table 2, it improves IoU by 4.23% and 2.99% and Dice by 3.50% and 1.37% over U-Net and UNet++, respectively. This advantage stems mainly from two architectural features. First, the dense dilation rates of JPFM cover the continuous scale spectrum from nuclei to glandular tubules to tumor regions, addressing the discontinuous segmentation artifacts caused by the discrete scale coverage of traditional U-Net architectures. Second, CBAM channel attention preferentially activates edge- and texture-relevant feature channels, which substantially reduces gland-stroma misclassification and raises Precision to 88.17%.

Comparison with models that use similar strategies highlights the importance of module synergy. Although Residual-Attention UNet++ and DenseRes-UNet enhance feature flow through residual and dense connections, respectively, both show insufficient localization precision: the former has a higher false-positive rate due to inadequate scale coverage, while the latter tends toward over-segmentation due to unconstrained channel redundancy. In contrast, RPAU-Net++ strikes a better balance between precision and recall (Recall 85.67%), aided by CBAM spatial attention that focuses on tumor nests, which is clinically valuable for avoiding over-diagnosis.

Notably, although nnUNet performs well in general segmentation tasks owing to its self-configuring architecture, it is less able to model the correlations between fine structures and global regions that are specific to colorectal glands. RPAU-Net++ achieves higher IoU (+0.44%) and Precision (+1.02%) than nnUNet through the refined continuous-scale characterization of JPFM, indicating a better fit to pathological images, which demand both local detail preservation and global context integration.

Experimental results on CoNIC dataset

On the CoNIC dataset, which features dense cells and multi-scale overlap, RPAU-Net++ again outperforms the compared models (Table 3). It improves IoU by 5.56% and 4.99% over U-Net and UNet++, respectively, owing to the dense dilation rates of JPFM, which provide continuous scale coverage from nuclei to small glandular structures and thereby reduce boundary ambiguity among densely packed cells. Compared with DenseRes-UNet, RPAU-Net++ achieves a balanced 87.23% Recall and 82.36% Precision, as CBAM spatial attention enhances cellular contours while reducing false positives in dense backgrounds. Against nnUNet, RPAU-Net++ shows small but consistent advantages across all metrics and is particularly effective at segmenting tumor cell clusters within glandular lumina, where the combined operation of JPFM and CBAM improves discrimination of densely aggregated cells, supporting its practical utility in complex scenarios.

Experimental results on PanNuke dataset

On the more complex PanNuke dataset, all models show some performance degradation relative to the single-organ datasets because of cross-organ morphological variation; detailed results are presented in Table 4. Nevertheless, RPAU-Net++ achieves the best performance, with an IoU of 74.25% and a Dice of 83.25%, demonstrating its generalization capability under complex interference. By combining the dense multi-scale modeling of JPFM with the attention-based feature selection of CBAM, RPAU-Net++ outperforms nnUNet by 0.85% in IoU and 0.90% in Dice in scenarios where colorectal nuclei are confused with heterogeneous tissues, while keeping Precision and Recall balanced. This suppresses cross-organ misclassification and highlights the model's adaptability to pathological scenarios with mixed tissue types.

To further assess the stability of RPAU-Net++, we plotted box plots of the Dice scores obtained by each model on the three datasets. The scores come from multiple runs under identical conditions with different random data shuffles, as shown in Figure 9 (a)-(c).

RPAU-Net++ has higher medians than the baseline models, indicating consistently superior performance across most runs. Its scores are also more tightly concentrated, with smaller fluctuations, reflecting greater stability. The multi-module fusion strategy thus enables RPAU-Net++ to improve average segmentation accuracy while also increasing stability, supporting its robustness and generalization capability for medical image segmentation tasks.

Figure 9. Comparative analysis of segmentation performance and pruning efficiency of RPAU-Net++. (a)-(c) Dice coefficient distributions of different models on the GlaS, CoNIC, and PanNuke datasets from repeated experiments; (d) relationship between inference time, Dice score, and parameter count under the \(L_1\) to \(L_4\) pruning strategies for RPAU-Net++ on the CoNIC dataset.

Results of model pruning

Figure 9 (d) compares pruning levels \(L_1\) to \(L_4\) (0.5M to 19M parameters) on the CoNIC dataset. As the model becomes lighter, end-to-end processing time falls from 42 ms to 12 ms (a 71.4% reduction), while the Dice coefficient declines from 84.12% to 72.03%, illustrating the expected trade-off in which lightweighting yields efficiency gains at the cost of a moderate loss in accuracy.

To assess the potential for edge deployment, we measured the end-to-end processing time of each pruning level on a high-performance GPU (RTX 4090), covering the complete pipeline of preprocessing, model inference, and postprocessing for mask generation. The results show substantial efficiency improvements from model lightweighting. While this study focuses primarily on academic performance validation, future work will prioritize practical edge deployment through optimized quantization strategies, integration of the TensorRT acceleration framework, and adaptation to mainstream edge hardware platforms. These developments aim to enable CAD applications on compact computing devices and mobile platforms, ultimately enhancing clinical applicability.

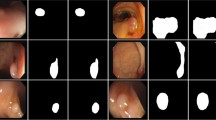

Visualization analysis

Figures 10 to 12 compare the segmentation results of the various models on the GlaS, CoNIC, and PanNuke datasets. The three sets of visual comparisons illustrate the dual advantages of RPAU-Net++ in structural preservation and detail segmentation. The difference maps, in which red marks oversegmentation and green marks undersegmentation, allow a visual assessment of segmentation quality.

In gland contour segmentation (GlaS), the prediction masks of RPAU-Net++ align closely with the ground truth, with minimal red and green artifacts confined to subtle edges. The comparative models U-Net and UNet++ show more pronounced errors: large green zones in gland folds (undersegmentation) and red areas where adjacent glands adhere (oversegmentation), whereas RPAU-Net++ shows only negligible deviations at extreme fold boundaries.

For the dense cell clusters in CoNIC, RPAU-Net++ better distinguishes individual cells from cell groups and produces substantially less noise in boundary detection and cell separation than the baseline models. Residual-Attention UNet++ (Dice = 79.74%), for instance, shows densely distributed red and green noise, whereas the multi-module fusion of RPAU-Net++ effectively reduces misjudgments caused by cell adhesion.

On PanNuke, with its extreme variation in nuclear size and frequent overlap, RPAU-Net++ (Dice = 84.01%) is less prone to missing small nuclei and to oversegmentation caused by fragmentation. Compared with nnUNet (Dice = 82.75%), which shows conspicuous green zones in small nuclear regions, RPAU-Net++ detects nuclei almost completely.

Even in extreme cases, such as severely folded glands (GlaS), ultra-dense cell clusters (CoNIC), or completely overlapping nuclei (PanNuke), RPAU-Net++ makes significantly fewer errors than the comparative models. Undersegmentation stems primarily from insufficient extraction of fine-structure features: the U-Net-series models show more than twice the undersegmented area of RPAU-Net++ because of their limited capacity to represent gland folds and nuclear edges. Oversegmentation results from deficiencies in contextual modeling, as evidenced by DenseRes-UNet producing approximately 1.8 times more red zones from misclassified adjacent structures.

In summary, the multi-module fusion strategy of RPAU-Net++ delivers superior structural precision, detail completeness, and robustness to interference. Its segmentation results align more closely with the ground-truth annotations and show markedly fewer oversegmentation and undersegmentation artifacts, validating its adaptability to complex medical imaging scenarios.

Model evaluation and ablation studies

Model efficiency and performance evaluation

As shown in Table 5, RPAU-Net++ achieves a Dice coefficient of 84.12% on the CoNIC dataset while also surpassing the strong baseline nnUNet in computational efficiency: it reduces parameter count (18.9M vs. 19.2M) and FLOPs (59.2G vs. 68.4G) by 1.6% and 13.4%, respectively, and increases inference speed (28 FPS vs. 21 FPS) by 33.3%. This advantage stems from the joint optimization of JPFM and CBAM: the dense dilation-rate design of JPFM avoids the redundant computation typical of conventional multi-scale modules, while the dual attention mechanism of CBAM filters out irrelevant features. Together, these components improve feature utilization efficiency, allowing the model to achieve better segmentation at lower computational cost in dense cell scenarios, which supports the rationale of an efficient architecture for real-time clinical diagnosis.
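The relative changes quoted above follow directly from the Table 5 values. Recomputing them from the rounded table entries gives about 1.56%, 13.45% and 33.33%, which match the reported 1.6%, 13.4% and 33.3% to within the rounding of the table entries. The helper below is only a worked check of that arithmetic; its name is illustrative.

```python
def relative_change(new, old):
    """Percentage change of `new` relative to `old` (negative = reduction)."""
    return (new - old) / old * 100.0


# (RPAU-Net++, nnUNet) pairs as reported in Table 5 on CoNIC.
table5 = {
    "parameters (M)": (18.9, 19.2),
    "FLOPs (G)": (59.2, 68.4),
    "throughput (FPS)": (28, 21),
}
changes = {name: relative_change(ours, ref) for name, (ours, ref) in table5.items()}
for name, pct in changes.items():
    print(f"{name}: {pct:+.2f}%")
```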

Ablation studies

To systematically validate the effectiveness and synergistic value of the ResNet-50 encoder, JPFM multi-scale fusion, and CBAM dual-attention module, we designed eight comparative configurations on the GlaS and CoNIC datasets: using basic UNet++ (without any of the three modules) as the baseline, we sequentially tested individual incorporation of ResNet-50, JPFM, and CBAM, their pairwise combinations, and full three-module integration.
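The eight configurations are exactly the subsets of the three modules (2³ = 8), from the bare UNet++ baseline to the full model. The enumeration below is only a bookkeeping sketch; the module labels come from the text, while the ordering and the printed labels are assumptions.

```python
from itertools import combinations

MODULES = ("ResNet-50", "JPFM", "CBAM")

# Every subset of the three modules: 1 baseline, 3 single, 3 pairwise, 1 full model.
configs = [combo for k in range(len(MODULES) + 1) for combo in combinations(MODULES, k)]

for combo in configs:
    label = "UNet++ baseline" if not combo else "UNet++ + " + " + ".join(combo)
    print(label)
```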

As shown in Table 6, model performance exhibited progressive improvement with incremental module integration. Individual incorporation of ResNet-50, JPFM, or CBAM yielded varying degrees of enhancement in IoU and Dice metrics. Pairwise combinations further improved performance through functional complementarity. The full integration of all three modules achieved optimal performance across all metrics on both datasets: 76.34% IoU and 83.20% Dice on GlaS; 74.89% IoU and 84.12% Dice on CoNIC, significantly outperforming single or pairwise configurations.

These results confirm the independent value of each module (ResNet-50 provides stronger low-level feature representation, JPFM enhances continuous perception of multi-scale pathological structures, and CBAM precisely filters effective features) and, more importantly, demonstrate their synergistic benefits. The cascade of encoder, fusion module, and attention mechanism optimizes the entire workflow of feature extraction, scale fusion, and feature selection, supporting the rationale behind the module fusion strategy.

A further ablation study validates two core improvements of JPFM: (1) the effect of removing the global average pooling branch on the preservation of pathological detail, and (2) the benefit of dense dilation rates for multi-scale fusion. A progressive control experiment was designed: JPFM-A versus the original ASPP isolates the benefit of removing the pooling branch; JPFM-B tests the effect of dense dilation rates; and JPFM-C and JPFM-D provide cross-comparisons to verify the combined advantage of dense dilation rates plus pooling removal. This design isolates the individual contributions of structural simplification and parameter optimization, quantitatively supporting the claim that JPFM improves accuracy by maintaining resolution and providing continuous scale perception.

The results on the CoNIC dataset (Table 7), with all other modules and experimental conditions held constant, support the JPFM design: performance improves progressively as the dilation rates move from sparse intervals (1,6,12,18) to dense intervals (1,2,4,8) and the pooling branch is removed. The dense dilation design (JPFM-B/D vs. JPFM-A) yields an IoU gain of approximately 1.4% by better capturing the continuous scale features of colorectal cancer images, which span nuclei, glandular structures, and tumor regions. Removing the pooling branch (JPFM-D vs. JPFM-C) further improves IoU by 0.84% and Recall by 0.53%, indicating that global pooling interferes with the localization of micro-structures. The optimal configuration, JPFM-D, achieves 74.89% IoU and 87.23% Recall, in line with the dual requirements of pathological images for multi-scale continuity and detail preservation.
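One way to see why (1,2,4,8) covers scales more continuously than (1,6,12,18) is the effective kernel extent of a dilated convolution, k + (k − 1)(r − 1) for kernel size k and rate r. The sketch assumes every branch is a 3×3 convolution (in DeepLab-style ASPP the rate-1 branch is usually 1×1) and ignores the receptive field contributed by earlier layers, so it compares single-branch extents only; the function names are illustrative.

```python
def effective_extent(rate, kernel_size=3):
    """Spatial extent (in pixels) spanned by one dilated kernel."""
    return kernel_size + (kernel_size - 1) * (rate - 1)


def extents_and_gaps(rates):
    """Per-branch extents and the jump between consecutive branches."""
    extents = [effective_extent(r) for r in rates]
    gaps = [b - a for a, b in zip(extents, extents[1:])]
    return extents, gaps


for name, rates in (("sparse (ASPP)", (1, 6, 12, 18)), ("dense (JPFM)", (1, 2, 4, 8))):
    extents, gaps = extents_and_gaps(rates)
    print(f"{name}: extents={extents}, gaps between branches={gaps}")
```

The dense set never jumps more than 8 pixels between neighbouring branches, whereas the sparse set jumps from 3 to 13 pixels at its first step; the price is a smaller maximum extent (17 vs. 37 pixels). This comparison is illustrative and is not a result reported in the paper.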

To validate the synergistic value of CBAM's dual-dimensional attention, comparative experiments were conducted against a baseline model without CBAM, evaluating channel attention only (CA), spatial attention only (SA), and the complete CBAM. As shown in Table 8, CA alone improves Precision by 1.27% while leaving Recall nearly unchanged, showing its strength in feature filtering but weakness in spatial localization. SA alone increases Recall by 1.63% but reduces Precision by 0.95%, indicating that it is effective at spatial focusing but may introduce noise. The complete CBAM combines both strengths: it keeps Recall comparable to SA while improving Precision by 1.18% over SA, ultimately yielding 1.30% higher IoU and 0.35% higher Dice than the baseline. These results confirm that the two attention mechanisms are complementary: channel attention suppresses redundant features to enhance precision, spatial attention focuses on pathological regions to ensure completeness, and together they improve overall segmentation performance.

Conclusion and future work

In the context of medical artificial intelligence research, this study addresses the specific challenges of colorectal cancer pathological image segmentation by proposing the RPAU-Net++ model with systematic innovations. The main contributions can be summarized as follows:

At the architectural level, the combined design of the ResNet-50 encoder, JPFM, and CBAM establishes a progressive optimization framework of feature extraction, scale fusion, and region focusing. The residual structure of ResNet-50 effectively mitigates deep network degradation and keeps training stable as model depth increases. The dense dilation rates (1,2,4,8) of JPFM improve the Dice metric by 4.54% compared with conventional ASPP, enhancing continuous scale modeling of nuclei, glandular structures, and tumor regions. The CBAM dual-attention mechanism dynamically balances segmentation precision and completeness, providing core support for accurate segmentation in complex pathological scenarios.

Comprehensive validation on three challenging datasets (GlaS, CoNIC, and PanNuke) demonstrates the model's capabilities: an 83.20% Dice coefficient on GlaS (a 2.7% improvement over nnUNet), 74.89% IoU at 28 FPS on CoNIC, and an 83.25% Dice coefficient in the cross-organ PanNuke setting, confirming the model's generalization ability. Notably, the proposed hierarchical pruning strategy (\(L_1\) to \(L_4\)) compresses the model from 19M to 0.5M parameters while reducing end-to-end processing time by 71.4%, laying a foundation for mobile deployment. Ablation studies further verify that the fully configured RPAU-Net++ achieves a 4.37% higher Dice coefficient than the baseline UNet++, highlighting the synergistic value of the modules.

Future research will focus on clinical applicability: 1) extending multimodal compatibility to develop universal architectures for CT and MRI imaging; 2) advancing 3D segmentation to improve volumetric analysis of pathological structures; and 3) integrating the TensorRT acceleration framework to achieve real-time inference under 10 ms for clinical implementation. The current study has made significant progress in model lightweighting and multi-scale feature fusion, laying a solid foundation for subsequent work. With these advancements, RPAU-Net++ is poised to better serve precision medicine and to help move AI-assisted pathological diagnosis from laboratory research to clinical practice.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Qi, J., Li, M., Wang, L. & et al. National and subnational trends in cancer burden in China, 2005–20: an analysis of national mortality surveillance data. Lancet Public Heal. 8, 943–955 (2023).

Chan, J. K. The wonderful colors of the hematoxylin-eosin stain in diagnostic surgical pathology. Int. J. Surg. Pathol. 22, 12–32 (2014).

Ester, O., Hörst, F., Seibold, C. & et al. Valuing vicinity: Memory attention framework for context-based semantic segmentation in histopathology. Comput. Med. Imaging Graph. 107, 102238 (2023).

Campanella, G., Hanna, M. G., Geneslaw, L. & et al. Clinical-grade computational pathology using weakly supervised deep learning on whole slide images. Nat. Med. 25, 1301–1309 (2019).

Ronneberger, O., Fischer, P. & Brox, T. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention, 234–241 (2015).

Chen, J., Lu, Y., Yu, Q. & et al. Transunet: Transformers make strong encoders for medical image segmentation. ArXiv preprint arXiv:2102.04306 (2021).

Cao, H., Wang, Y., Chen, J. & et al. Swin-unet: Unet-like pure transformer for medical image segmentation. In Computer Vision – ECCV 2022 Workshops, 205–218 (Springer Nature Switzerland, Cham, 2023).

Zhou, Z., Siddiquee, M. M. R., Tajbakhsh, N. & et al. U-net++: A nested u-net architecture for medical image segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support, 3–11 (Springer, Cham, 2018).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 770–778 (2016).

Khaniabadi, S. M., Ibrahim, H., Huqqani, I. A. & et al. Comparative review on traditional and deep learning methods for medical image segmentation. In 2023 IEEE 14th Control and System Graduate Research Colloquium (ICSGRC), 45–50 (2023).

Lu, Y., Qin, X., Fan, H. & et al. Wbc-net: A white blood cell segmentation network based on u-net and resnet. Appl. Soft Comput. 101, 107006 (2021).

Kiran, I., Raza, B., Ijaz, A. & et al. Denseres-u-net: Segmentation of overlapped/clustered nuclei from multi-organ histopathology images. Comput. Biol. Med. 143, 105267 (2022).

Huang, H., Lin, L., Tong, R. & et al. Unet 3+: A full-scale connected unet for medical image segmentation. In ICASSP 2020 - 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 1055–1059, https://doi.org/10.1109/ICASSP40776.2020.9053405 (Barcelona, Spain, 2020).

Isensee, F., Jaeger, P. F., Kohl, S. A. A. & et al. nnu-net: a self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 18, 203–211, https://doi.org/10.1038/s41592-020-01008-z (2021).

Ahmad, I., Xia, Y., Cui, H. & et al. Dan-nucnet: A dual attention-based framework for nuclei segmentation in cancer histology images under wild clinical conditions. Expert Syst. Appl. 213, 118945 (2023).

Li, Z., Zhang, H., Li, Z. & et al. Residual-attention u-net++: A nested residual-attention u-net for medical image segmentation. Appl. Sci. 12, 107006 (2022).

Hussain, T., Shouno, H., Mohammed, M. A. & et al. Dcssga-unet: Biomedical image segmentation with densenet channel spatial and semantic guidance attention. Knowl.-Based Syst. 314, 113233, https://doi.org/10.1016/j.knosys.2025.113233 (2025).

Hussain, T. & Shouno, H. Magres-unet: Improved medical image segmentation through a deep learning paradigm of multi-attention gated residual u-net. IEEE Access 12, 40290–40310. https://doi.org/10.1109/ACCESS.2024.3374108 (2024).

Yang, Z., Peng, X., Yin, Z. & Yang, Z. Deeplab_v3_plus-net for image semantic segmentation with channel compression. In 2020 IEEE 20th International Conference on Communication Technology (ICCT), 1320–1324, https://doi.org/10.1109/ICCT50939.2020.9295748 (Nanning, China, 2020).

Yao, K. & et al. Pointnu-net: Key point-assisted convolutional neural network for simultaneous multi-tissue histology nuclei segmentation and classification. IEEE Trans. Emerg. Top. Comput. Intell. 8, 802–813 (2021).

Lan, K., Cheng, J., Jiang, J. & et al. Modified u-net++ with atrous spatial pyramid pooling for blood cell image segmentation. Math Biosci Eng. 20, 1420–1433 (2023).

Lan, L. & et al. Brau-net++: U-shaped hybrid cnn-transformer network for medical image segmentation. ArXiv preprint arXiv:2401.00722 (2024).

Agnes, S. A., Solomon, A. A. & Karthick, K. Wavelet u-net++ for accurate lung nodule segmentation in ct scans: Improving early detection and diagnosis of lung cancer. Biomed. Signal Process. Control 87, 105509. https://doi.org/10.1016/j.bspc.2024.105509 (2024).

Zhu, Z., Sun, M., Qi, G. & et al. Sparse dynamic volume transunet with multi-level edge fusion for brain tumor segmentation. Comput. Biol. Med. 108284 (2024).

Zhang, Z., Wu, H., Zhao, H. & et al. A novel deep learning model for medical image segmentation with convolutional neural network and transformer. Interdiscip. Sci.: Comput. Life Sci. 15, 663–677 (2023).

Hussain, T., Shouno, H., Hussain, A. & et al. Effresnet-vit: A fusion-based convolutional and vision transformer model for explainable medical image classification. IEEE Access 13, 54040–54068, https://doi.org/10.1109/ACCESS.2025.3554184 (2025).

Alam, T., Yeh, W. C., Hsu, F. R. & et al. An integrated approach using yolov8 and resnet, seresnet & vision transformer (vit) algorithms based on roi fracture prediction in x-ray images of the elbow. Curr. Med. Imaging 20, e15734056309890, https://doi.org/10.2174/0115734056309890240912054616 (2024).

Lee, C. Y., Xie, S., Gallagher, P. & et al. Deeply-supervised nets. In Proceedings of the Artificial Intelligence and Statistics, 562–570 (San Diego, CA, USA, 2015).

Sirinukunwattana, K., Pluim, J. P. W., Chen, H. & et al. Gland segmentation in colon histology images: The glas challenge contest. Med. Image Anal. 35, 489–502 (2017).

Graham, S., Jahanifar, M., Vu, Q. D. & et al. Conic: Colon nuclei identification and counting challenge 2022. ArXiv preprint arXiv:2111.14485 (2021).

Gamper, J., Koohbanani, N. A., Benes, K. & et al. Pannuke dataset extension, insights and baselines. arXiv preprint arXiv:2003.10778 (2020).

Acknowledgements

This work was supported in part by Shijiazhuang Introducing High-level Talents’ Startup Funding Project (248790067A), the Startup Foundation for PhD of Hebei GEO University (No. BQ201322), Natural Science Foundation of Hebei Province (H2024403001), and Science Research Project Funding from Hebei Provincial Department of Education (BJK2024099).

Author information


Contributions

Qi Liu and Zhenfeng Zhao conceived the study, designed the experimental framework, and drafted the manuscript. Yingbo Wu participated in the experimental design, assisted in data analysis, and reviewed and revised the manuscript. Siqi Wu contributed to the revision of the manuscript and supervised the overall direction of the research. Yutong He reviewed the experimental results and provided critical suggestions and feedback. Haibin Wang participated in the experimental design and provided technical support for the research. Shenwen Wang interpreted the experimental results and provided feedback and suggestions for optimizing the manuscript. All authors reviewed the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Liu, Q., Zhao, Z., Wu, Y. et al. Multi-module UNet++ for colon cancer histopathological image segmentation. Sci Rep 15, 28895 (2025). https://doi.org/10.1038/s41598-025-13636-6
