Abstract

Sleep apnea, a prevalent respiratory disorder, poses significant health risks, including cardiovascular complications and behavioral issues, if left untreated. Traditional diagnostic methods like polysomnography, although effective, are often expensive and inconvenient. SleepNet addresses these issues by introducing a new multimodal approach tailored for precise sleep apnea detection. At its core, the framework utilizes a fusion of one-dimensional convolutional neural networks (1D-CNN) and bidirectional gated recurrent units (Bi-GRU) to analyze single-lead electrocardiogram (ECG) recordings, yielding an accuracy of 95.08%. When the model is enriched with additional physiological signals—namely nasal airflow and abdominal respiratory effort—the performance further rises modestly to 95.19%. This multimodal strategy surpasses the performance of existing unimodal approaches, yielding enhanced sensitivity and specificity rates of 96.12% and 93.45%, respectively. When compared to previous studies, SleepNet represents a substantial leap forward in diagnostic efficacy, showcasing the transformative potential of integrating multiple data streams for sleep apnea detection. The results highlight the promise of deep learning methodologies in advancing this domain and lay a robust foundation for subsequent research.

Similar content being viewed by others

Introduction

Sleep apnea (SA) is a widespread and potentially serious disorder characterized by repeated interruptions in breathing during sleep, each pause lasting longer than ten seconds. These interruptions disrupt the normal sleep cycle and lead to significant drops in oxygen levels reaching vital organs such as the brain. As a result, individuals often experience fragmented rest, daytime drowsiness, and face a higher risk of developing cardiovascular and metabolic complications. Unfortunately, SA is often overlooked because conventional diagnostic methods have notable limitations. There are three primary forms of SA: obstructive sleep apnea (OSA), central sleep apnea (CSA), and mixed sleep apnea. OSA, the most common variant, arises when the upper airway becomes physically blocked. Unlike simple snoring—which is usually harmless and linked to factors like nasal congestion or poor sleep habits—OSA causes more severe breathing interruptions. Clinicians gauge OSA severity using the Apnea-Hypopnea Index (AHI), a measure that reflects how many apnea and hypopnea events occur per hour of sleep. To confirm a diagnosis of sleep apnea, polysomnography (PSG) remains the gold standard. This comprehensive test monitors brain waves (EEG), cardiac rhythms (ECG), airflow through the nose, and the respiratory effort of both the chest and abdomen.

However, its high cost, intricate setup, and limited accessibility make PSG impractical for widespread use, particularly for home-based applications. These challenges underscore the urgent demand for innovative diagnostic tools that are cost-effective, portable, and capable of delivering high accuracy without the logistical challenges associated with PSG. Recent developments in wearable devices and contactless diagnostic tools have enabled new methodologies for identifying sleep apnea. Leveraging machine learning and deep learning algorithms proves especially promising, as these approaches can enhance the precision of SA detection while also making it more scalable and accessible. By efficiently processing large, diverse physiological datasets, these advanced technologies offer a promising avenue for detecting SA in a more accessible and cost-effective manner.

In this work, we present SleepNet, a sophisticated deep learning architecture crafted to advance the identification of OSA. SleepNet fuses multiple data streams—ECG tracings, measurements of abdominal breathing effort, and nasal airflow—to create a rich, multimodal input. The core of the framework merges 1D-CNN with BiGRU, enabling an in-depth interpretation of these varied signals. By moving beyond single-source analyses, SleepNet not only boosts diagnostic accuracy but also paves the way for practical, non-clinical deployment, marking a significant leap forward in OSA detection.

Building on prior research, this study highlights SleepNet’s innovative contributions and situates it within the broader context of OSA detection. Prior research, including the works of Gutta et al.1 and Shen et al.2, has highlighted the utility of ECG signals in detecting OSA by analyzing heart rate variability and arrhythmic patterns linked to apnea events. SleepNet advances this approach by leveraging ECG signals alongside additional physiological inputs for a more robust analysis. Deep learning models particularly DNNs and CNNs have dramatically improved both feature extraction and classification in this field. SleepNet takes these strengths a step further by integrating BiGRUs, a type of recurrent architecture well suited for capturing temporal dependencies. This addition allows the system to recognize intricate sequential patterns and identify even the most complex apnea events. Moreover, similar to wearable systems explored by Surrel et al.3, which emphasize portability and home-based diagnostics, SleepNet is designed for practical, accessible use beyond clinical environments. This adaptability enhances its potential for widespread adoption, making it a transformative tool for improving OSA diagnosis and management.

However, SleepNet introduces several key innovations that set it apart from existing approaches. While most previous models focus on single-modal data, such as ECG signals, SleepNet uniquely integrates ECG with abdominal respiratory effort and nasal airflow data. This multimodal approach offers a holistic perspective on the physiological changes linked to OSA, thereby enhancing detection accuracy. Additionally, SleepNet’s hybrid model design combines 1D-CNN for spatial feature extraction with BiGRU for temporal pattern recognition, unlike other models such as the Multi-Scale Dilation Attention CNN used by Shen et al.2. By blending these layers, the network becomes better at identifying the subtle, complex sequences characteristic of apnea events. We also assembled two custom datasets for SleepNet: Dataset A consists solely of 60-second snippets of ECG recordings, while Dataset B pairs those ECG segments with simultaneous measurements of abdominal breathing effort and nasal airflow. These datasets not only provide a broader spectrum of apnea-related variations but also set new standards for dataset quality in the field. Finally, SleepNet’s multimodal integration and hybrid architecture lead to enhanced accuracy and resilience, outperforming existing models, including Shen et al.’s MSDA-1DCNN2, in both accuracy and robustness. Overall, SleepNet builds upon the strengths of existing research while addressing their limitations, positioning itself as a groundbreaking solution for accurate, efficient, and accessible OSA detection.

The prevalence of sleep disturbances is escalating globally4, with approximately 70 million5 individuals in the United States alone affected by various sleep-related conditions. Notably, the incidence of Sleep Apnea (SA) syndrome exhibits a concerning trend, with current estimates indicating that about 5% of females and 14% of males in the United States are afflicted6. This increasing prevalence is evident across diverse global populations, with an estimated one billion individuals affected by Obstructive Sleep Apnea (OSA) worldwide7. The impact of sleep disturbances goes beyond mere discomfort, as they are associated with elevated risks of morbidity and mortality8. Despite its chronic and potentially severe consequences, timely diagnosis and intervention can successfully manage and treat OSA. The current advancements and research in SA detection are summarized in Table 1, which presents a detailed review of various methodologies employed for OSA detection, focusing on their respective datasets and contributions to improving diagnostic accuracy. The table compiles numerous studies that explore the use of single-lead ECG signals for detecting OSA, providing valuable insights into their potential for enhancing understanding and precision in this domain. Each study, conducted by different researchers across various years, employed unique methodologies to tackle the challenges of OSA detection. For example, in 2017, Gutta et al.1 utilized Vector Valued Gaussian Processes, achieving an accuracy of 82.33%. Similarly, González et al. in the same year implemented binary classification with specific features, yielding an accuracy of 84.76%. In 2018, Li et al.9 combined Deep Neural Networks with Hidden Markov Models, also achieving an accuracy of 84.76%. Surrel et al.3 in the same year employed Support Vector Machines in a wearable system, achieving an accuracy of 88.20%. Furthermore, Papini et al.10 in 2018 focused on 10-fold cross-validation, achieving an accuracy of 88.30%. In 2019, Wang et al.11 utilized Deep Residual Networks, achieving an accuracy of 83.03%, while S. A. Singh et al used a pre-trained AlexNet, resulting in an accuracy of 86.22%. Moreover, in 2021, Feng et al.12 introduced a novel approach combining auto-encoders and cost-sensitive classification, achieving an accuracy of 85.10%. Finally, Shen et al.2 in 2021 employed a multi-scale dilation attention CNN with weighted-loss classification, achieving the highest accuracy of 89.40%. These studies collectively showcase a variety of methodologies and accuracy rates in OSA detection using single lead ECG signals, underscoring the ongoing endeavors to advance diagnostic capabilities in this domain.

Essential for the prevention and management of cardiovascular, behavioral, and other health issues related to sleep apnea is the creation and use of accurate, affordable, and portable diagnostic tools. PSG continues to be the definitive method for diagnosing SA, utilizing measures like the AI, HI, and the AHI to assess the condition’s severity clinically. Even with its remarkable precision, PSG is a demanding and costly procedure, necessitating thorough observation of signals through multiple electrodes and wires for about 10 hours. This complexity emphasizes the necessity for easier and more affordable methods for detecting obstructive sleep apnea (OSA). In reply, researchers have explored single-lead signal techniques like pulse oximetry17, photoplethysmography (PPG), electrocardiogram (ECG)18,19, tracheal body sound20, and electroencephalogram19,21, as alternative approaches for identifying sleep disorders. These methods demonstrate potential for simplifying diagnosis, enhancing accessibility and reducing inconvenience for patients. OSA is marked by repeated disruptions in standard breathing rhythms while sleeping. By directly assessing respiratory effort via sensors that track chest and abdominal movements, clinicians can detect tangible signs of apnea events, providing a more accurate evaluation of breathing irregularities than indirect measures such as ECG signals. Data on respiratory effort is essential for accurately assessing the AHI, which gauges the severity of sleep apnea by monitoring the frequency of apnea and hypopnea events per hour of the sleep cycle.

The development of ML and DL models for OSA detection offers the potential for cost-effective alternatives to PSG. These models could facilitate convenient and effortless detection of OSA in home settings, enabling individuals to seek specialist consultations if apnea is detected. However, challenges persist. For instance, some models, like the approach presented by22, achieve moderate accuracy levels of 82%, underscoring the need for further advancements before practical deployment. Innovative strategies, such as the reversed-pruning method by23 and network optimizations by24, highlight ongoing efforts to improve the efficiency and effectiveness of SA detection models. Despite the availability of numerous datasets, there remains a surprising gap in research on multimodal methodologies for sleep apnea detection. This study primarily focuses on OSA, a condition where the normal airway passage is obstructed, leading to irregular breathing during sleep. OSA typically occurs when the muscles supporting the throat’s soft tissues, including the tongue, relax excessively, causing periodic airway blockages. This study’s main contributions are stated below:

-

Preparation of two datasets: Dataset A comprises ECG signals for a 60-second time frame per annotation, while Dataset B includes ECG signals combined with abdominal and nasal respiratory effort signals.

-

Proposal of an advanced approach to integrate ECG, abdominal respiratory effort, and nasal airflow signals, providing deeper insights into the intricate patterns of OSA.

-

Development of a novel multimodal deep learning model, SleepNet, which leverages features from 1D-CNN and BiGRU for effective OSA detection.

-

Demonstration of superior accuracy in OSA detection compared to recent models, showcasing SleepNet’s capability in diverse real-world scenarios.

Related works

Sleep apnea represents a widespread sleep disturbance marked by frequent interruptions in breathing that can give rise to serious health complications, including cardiovascular disease, metabolic imbalances, and cognitive impairment. The most common variant OSA happens when the muscles in the throat relax excessively during sleep and block the airway. Detecting this condition promptly and accurately is crucial for effective treatment and for preventing further health risks. PSG remains the gold standard for diagnosis, as it monitors multiple physiological signals such as electroencephalogram, electrocardiogram, pulse oximetry, and airflow to guarantee accurate detection. Nevertheless, PSG demands specific tools and skilled staff, restricting its practicality for monitoring at home. This has generated interest in creating alternative diagnostic methods that are accurate and feasible for application in non-clinical environments. Due to the limitations of traditional diagnostic techniques, there has been considerable demand for novel, non-invasive, and user-friendly technologies that can identify sleep apnea in home settings. Wearable technology and remote tracking systems have surfaced as encouraging options, providing ongoing, unobtrusive observation of physiological signals. These technologies aim to deliver accurate evaluations of sleep apnea, enhancing patient comfort and ease of use, thus serving as a practical choice for home testing. Among the physiological indicators examined for sleep apnea detection, ECG and respiratory data are especially important. ECG signals are simple to obtain and offer valuable information about heart function, making them perfect for wearable technology. Studies show that combining ECG data with additional signals like respiratory effort and oxygen saturation greatly improves detection accuracy by offering a comprehensive perspective on physiological changes linked to sleep apnea. The rise of deep learning has transformed medical diagnostics, such as sleep apnea detection, with methods like CNNs and RNNs showcasing remarkable skill in recognizing intricate patterns in physiological data. These models autonomously derive significant features from unprocessed data, enhancing diagnostic accuracy while reducing the necessity for manual feature development. SleepNet, an innovative model, utilizes multimodal data—ECG and breathing signals—to enhance sleep apnea identification. It utilizes CNNs for extracting spatial features and BiGRUs to examine temporal patterns, thereby capturing both spatial and temporal dependencies for a thorough comprehension of the physiological changes associated with sleep apnea. Through the combination of multimodal inputs and sophisticated deep learning methods, SleepNet provides a strong and user-friendly approach to improving diagnostic precision. This research assesses the efficacy of SleepNet in identifying sleep apnea, contrasting its results with current techniques. It highlights the benefits of a multimodal strategy and the transformative influence of deep learning in enhancing diagnostic accuracy in sleep medicine. Through the use of various physiological signals and advanced deep learning techniques, SleepNet seeks to establish a new benchmark for detecting and managing sleep apnea. Future studies will concentrate on confirming the model’s effectiveness among diverse patient groups and clinical environments to guarantee its relevance and dependability in practical situations.

Accurate diagnosis of sleep apnea is essential for properly handling its related complications. Important methods for detecting sleep apnea include various strategies, ranging from surveys to sophisticated imaging and signal processing techniques. Questionnaires25 offer an economical method to assess individuals for sleep-related problems, while medical image analysis26 has become a powerful instrument in identifying sleep apnea, especially in more serious instances. This method also provides distinct perspectives on the anatomical alterations that happen during apnea events. The recognized gold standard for observing sleep apnea is PSG27, which includes a variety of physiological signals like EEG, ECG, pulse oximetry, arterial blood oxygen levels, airflow, and nasal flow assessments. Although PSG offers unmatched diagnostic precision, it is inappropriate for non-clinical settings, like home monitoring. This constraint highlights the increasing need for wearable and non-contact sleep technologies that allow for discreet monitoring without the need for direct oversight by healthcare providers28. Among the physiological signals investigated for sleep apnea identification, ECG is prominent because of its practicality in wearable monitoring devices. Numerous single-lead OSA detection investigations have utilized ECG and pulse oximetry data to identify essential features and recognize patterns suggestive of OSA occurrences. For instance14, employed a Discriminative HMM with ECG signals to identify OSA, but this approach only yielded binary results and failed to evaluate the severity of OSA events. In a different research14, integrated single-lead ECG data with DNN and HMM models, enhancing performance through the addition of SVM, ANN and decision fusion techniques20. These developments emphasize the possibility of utilizing single-lead ECG signals for identifying sleep apnea in wearable devices, opening the door for more affordable and accessible diagnostic methods. Nonetheless, additional studies are needed to enhance these techniques and confirm their dependability in various patient groups and environments. Nonetheless, this approach faced limitations in differentiating between various disorders. Chen et al.29 utilize a CNN-BiGRU based model for spatiotemporal learning on two datasets to achieve a good prediction score of SA prediction. While it has been established that sleep apnea can be accurately predicted and diagnosed using cardiac activity through ECGs, it is also essential to reiterate that it is a respiratory condition and studying airflow and respiration—based movements can help improve the accuracy of pre—diction. Avcı and Akbaş30 utilize chest, abdominal, and nasal signals in an ensemble method to classify sleep apnea in minute-based occurrences. Using nasal airflow signals31, propose an automated SVM-based algorithm that can alert users through an early-warning system. Thommandram et al.32 focus on features that are clinically recognizable and use k-nearest neighbours to classify sleep apnea. The effective identification and management of sleep apnea, particularly its most common form, OSA is a critical aspect of healthcare due to the condition’s potential to cause significant health com- plications. The current landscape of SA detection methods is diverse, ranging from cost-effective questionnaires to sophisticated medical image analysis and PSG - the latter being the clinical gold standard. PSG, which includes an array of biological signals like ECG, EEG, and oximetry, is highly effective but not suitable for non-clinical, everyday environments, spurring the need for more accessible monitoring technologies. Recent advancements in SA detection focus significantly on wearable and non-contact technologies that offer unobtrusive monitoring. In this context, ECG emerges as a particularly promising signal for use in wearable devices. Studies leveraging single- lead ECG, pulse oximetry data, and respiratory effort have been effective in identifying OSA, employing advanced computational models like Hidden Markov Models (HMM), DNN, and CNN. These models analyze various signal features, striving to predict and assess OSA incidents with varying degrees of success. This diverse array of research efforts underscores a significant commitment to improving OSA detection, with a focus on developing models that are not only accurate but also practical for everyday use. Current trends in research, as highlighted in the two tables, demonstrate the evolving nature of OSA detection, from leveraging advanced signal processing techniques to employing deep learning models. These studies contributes uniquely to the broader goal of creating more effective and user-friendly diagnostic tools. While a number of studies such as13,29,33,34 achieve more than 90% accuracy in detecting apnea episodes using ECG signals, some researchers investigate other forms of signal inputs. McClure et al.35 for instance, attempts to explore the usage of chest and abdominal movements to analyze breathing patterns. However, given the complexity of both the disorder and its diagnosis, it is imperative to dissect the various types of available signals and how a multimodal approach can further improve detection accuracy. This section dives into a detailed analysis of the database used to create the two datasets for the study, the machine learning algorithms explored, and the specifics of the proposed model, SleepNet. The training procedure is also briefly discussed. The model architecture is described with an overview of the diagnosis process given in Fig. 1. Figure 1 outlines the process of diagnosing obstructive sleep apnea (OSA) using the SleepNet model. The figure highlights the integration of diverse physiological inputs such as ECG and respiratory signals, which together enhance the model’s predictive accuracy. The depiction underscores the importance of a multimodal strategy in achieving precise diagnoses, paving the way for timely and effective therapeutic interventions for OSA.

Methodology

Overview of the SleepNet model

The SleepNet model presents an innovative method for detecting sleep apnea by utilizing various physiological signals to improve diagnostic precision. At the heart of its design is a hybrid framework that merges a 1D-CNN with a Bi-GRU. This combination allows the model to obtain spatial features via convolutional layers and assess temporal dependencies with the Bi-GRU element. Through the analysis of signals like ECG, abdominal respiratory effort, and nasal airflow, SleepNet delivers a thorough evaluation of the physiological patterns related to sleep apnea. SleepNet, as a comprehensive deep learning framework, reduces the necessity for manual feature engineering, which allows it to be versatile in practical applications. The model’s flexibility and scalability enable it to manage various datasets and extra signal modalities, improving its usefulness in clinical and home monitoring settings. Preliminary assessments indicate that SleepNet surpasses current techniques in accuracy, sensitivity, and specificity, providing a valuable resource for the early and dependable detection of sleep apnea. SleepNet connects traditional clinical diagnostics with wearable solutions by enabling non-invasive and affordable monitoring, creating a path for effective and accessible management of sleep apnea.

-

Hybrid architecture: SleepNet employs a hybrid architecture that synergizes CNNs with BiGRUs to enhance sleep apnea detection. The CNN component plays a crucial role in extracting spatial patterns from input biological signals, such as pulse rate fluctuations and airflow changes. These spatial features provide critical insights into the structural patterns within the data. The BiGRU component complements this by capturing temporal dependencies, processing sequential data bidirectionally to account for patterns occurring over time. This dual capability allows SleepNet to identify intricate patterns indicative of sleep apnea episodes with exceptional accuracy, significantly bolstering its diagnostic precision and reliability.

-

Integration of multi-modal data: A standout feature of SleepNet is its ability to integrate multiple types of physiological data, including ECG, SpO2 (oxygen saturation), and airflow signals. While many existing models rely on single-modal data, the incorporation of multiple physiological signals in SleepNet provides a comprehensive view of the patient’s condition. This multi-dimensional approach significantly enhances the model’s accuracy and robustness in detecting sleep apnea, making it more effective at identifying various types of apnea events.

-

Improved accuracy and generalization: SleepNet has been rigorously tested on a wide range of datasets, and its performance metrics, such as accuracy, specificity, and sensitivity, demonstrate its superior ability to identify sleep apnea. The model effectively addresses common challenges encountered in previous approaches, such as overfitting and difficulties with generalization. As a result, SleepNet is well-suited for deployment in diverse clinical settings, where it can offer consistent and reliable performance across varied patient populations.

-

Advanced regularization and learning strategies: To maintain high performance and prevent overfitting, SleepNet incorporates advanced regularization methods and ensemble learning techniques. These approaches guarantee that the model performs effectively across various datasets and testing conditions, ensuring its consistency and resilience. By applying these methods, SleepNet minimizes the risk of model degradation, ensuring its ability to adapt to new data without compromising on accuracy.

-

Computational efficiency: Another critical feature of SleepNet is its computational efficiency. The SleepNet model has been optimized for deployment in both research and clinical environments, enabling it to process data in real-time for rapid and accurate diagnoses. This real-time capability is crucial in clinical settings, where healthcare providers must make quick, informed decisions to manage patients effectively. Moreover, the model’s efficiency allows it to function well even in environments with limited computational resources, ensuring that high performance and accuracy are maintained without the need for expensive infrastructure.

In conclusion, SleepNet presents a cutting-edge solution for sleep apnea detection, leveraging its hybrid architecture, multi-modal data integration, and advanced deep learning techniques. Its capacity to process and analyze diverse physiological signals in real-time positions it as a highly valuable tool in sleep medicine. With its potential to improve diagnostic accuracy and enhance patient care, SleepNet holds great promise for advancing the field of sleep apnea detection and management.

Dataset

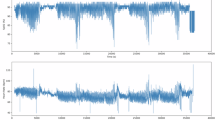

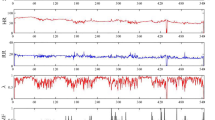

The ECG component of our study is derived exclusively from the PhysioNet ECG Sleep Apnea database36,37. This repository comprises seventy overnight recordings captured under standardized polysomnography (PSG) conditions, each spanning approximately seven to ten hours. Every recording includes a single-lead electrocardiogram sampled at 100 Hz with 16-bit resolution (nominally 200 A/D units per millivolt). The raw ECG waveforms are stored as binary .dat files (e.g., rnn.dat), with accompanying .hea header files that specify channel names, sampling frequency, gain, and file durations36. For each heartbeat, machine-generated R-peak annotations are available as binary .qrs files; however, these automatic detections can include false positives or misses, necessitating refinement via Pan–Tompkins or wavelet-based R-peak detectors to ensure accurate R–R interval time series for HRV feature analysis when needed38. The learning (training) set comprises thirty-five recordings (IDs a01–a20, b01–b05, c01–c10), divided into three cohorts: Group A includes twenty subjects with moderate-to-severe OSA, Group B includes five subjects with borderline or mild OSA, and Group C includes ten healthy controls36. Each ECG recording is partitioned into non-overlapping 60 s epochs, each containing 6,000 samples (60 s \(\times\) 100 Hz). Minute-by-minute apnea annotations are provided in binary .apn files for the learning-set recordings, with a “1” indicating an apnea event in that 60 s interval and a “0” indicating no apnea39. To prevent data leakage—especially since two recordings (c05 and c06) originate from the same individual with only an 80 s offset36—we strictly enforce subject-level partitioning: all epochs from a01–a20, b01–b05, and c01–c10 compose the training set, while all epochs from x01–x35 (the thirty-five test recordings) remain entirely unseen during training. ECG preprocessing entails a zero-phase band-pass filter between 0.05 Hz and 40 Hz to eliminate baseline drift and high-frequency noise while preserving P–QRS–T morphology. After filtering, the ECG signals are normalized to zero mean and unit variance to standardize amplitude ranges across subjects. For each 60 s epoch, the refined R–R interval time series are extracted for optional HRV-based feature construction, but SleepNet’s architecture ultimately operates on preprocessed raw ECG waveforms in an end-to-end fashion, preserving temporal and morphological information crucial for apnea detection.

A subset of eight learning-set recordings (IDs a01–a04, b01, c01–c03) additionally provides synchronized respiratory channels for multimodal analysis36. Specifically, Resp C (chest respiratory effort) and Resp A are measured via inductance plethysmography belts placed around the thorax and abdomen, respectively, capturing the expansion and contraction associated with each breathing cycle. Additionally, Resp N (oronasal airflow) is recorded using a nasal thermistor that detects pressure changes caused by inhalation and exhalation. All respiratory signals are sampled at 100 Hz and stored either in combined binary rnnr.dat files or as separate .dat files, each accompanied by a .hea header specifying channel names, sampling rate, and gain36. Respiratory preprocessing follows a zero-phase band-pass filter between 0.1 Hz and 15 Hz to isolate the adult respiratory frequency band (approximately 0.2–0.5 Hz) and to remove motion artifacts or high-frequency noise. After filtering, each Resp C, Resp A, and Resp N channel is normalized to zero mean and unit variance, ensuring that amplitude differences across subjects or sensor types do not bias subsequent feature extraction or model training38. In Dataset B (multimodal), each 60 s epoch is represented by a \(6{,}000\times 4\) matrix comprising ECG, Resp C, Resp A, and Resp N, aligned to the same binary apnea label from the .apn file. Because only eight subjects provide respiratory data, we employ LOSO-CV for evaluation: in each fold, one subject’s entire set of epochs (all four channels) is held out for testing, while the remaining seven subjects’ epochs form the training set. This LOSO strategy ensures that no individual’s data appear in both training and test partitions, yielding an unbiased estimate of SleepNet’s ability to generalize to unseen subjects.

Factors influencing SpO2 readings accuracy in sleep apnea detection

The accuracy of SpO2 readings in sleep apnea detection is influenced by various physiological factors that play a critical role in interpreting oxygen saturation levels during different sleep stages.

Physiological conditions

The reliability of SpO2 readings for detecting sleep apnea can be affected by several physiological factors. Oxygen saturation levels tend to vary naturally during sleep, showing distinct patterns between wakefulness, Rapid-Eye-Movement (REM) sleep, and non-REM sleep phases. For those with problem of sleep apnea, these variations can be more significant due to periodic breathing interruptions and altered respiratory patterns. Additional factors like obesity, respiratory ailments, and cardiovascular problems can further influence oxygen saturation levels, complicating the analysis of SpO2 data when diagnosing sleep apnea.

Standardization of PSG procedures

It is crucial to standardize Polysomnography (PSG) methods to guarantee that sleep-related information is gathered, analyzed, and interpreted uniformly and dependably in various environments. This standardization is essential for correctly identifying and handling sleep disorders like obstructive sleep apnea. By following established protocols and guidelines, such as those from the American Academy of Sleep Medicine (AASM), researchers and clinicians can reduce variability in the data, resulting in more dependable findings. Reliable PSG protocols assist in guaranteeing that the data remains comparable among various sleep centers and research studies, enhancing the reproducibility of results and the overall quality of sleep disorder diagnosis and treatment. Standardization helps improve the precision of automated diagnostic instruments, like deep learning models, by confirming they are trained and evaluated on high-quality, consistent data.

Strategies for standardization

Standardizing PSG procedures involves establishing uniform protocols for electrode placement, signal acquisition, data processing, and sleep stage scoring. This consistency ensures that sleep study data are comparable across various healthcare settings. Training sleep technologists and clinicians in standardized PSG protocols, including adherence to recognized scoring criteria like the AASM guidelines, helps maintain consistency in data collection and interpretation.

Quality control measures

Implementing quality control measures in PSG procedures is critical for ensuring the accuracy and reliability of sleep apnea diagnoses. Regular calibration of equipment is essential to maintain the precision of signal acquisition, while following established guidelines for sleep staging and event scoring ensures that data is interpreted consistently. Conducting inter-scorer reliability assessments, where multiple scorers independently assess the same data, helps to identify and minimize discrepancies in scoring, further ensuring the consistency of results. These quality control measures not only enhance the accuracy of sleep apnea diagnoses but also improve the overall reliability of PSG procedures, making it easier to monitor treatment effectiveness and make informed clinical decisions. By reducing variability in PSG results, healthcare providers can confidently rely on the data for more accurate and timely diagnosis, leading to better patient care.

Evaluation metrics for model performance in image classification and object detection

Establishing clear evaluation procedures necessitates identifying benchmark datasets, defining criteria for model validation and testing, and conducting thorough performance evaluations using techniques like cross-validation. Evaluation metrics are essential for measuring the effectiveness of models in image classification and object detection tasks.

Common metrics include:

Accuracy

Accuracy measures the fraction of correctly classified instances out of all predictions made. Although it serves as a general indicator of performance, it may be misleading when classes are imbalanced. Formally,

F1 score

The F1 Score represents the harmonic mean of precision and recall, providing a single metric that balances both false positives and false negatives. This is particularly useful when dealing with skewed class distributions. Specifically,

Recall

Recall (also known as sensitivity) quantifies a model’s capability to identify all relevant positive cases—i.e., how many true positives it captures compared to the total actual positives. This metric is critical when failing to detect a positive case carries a high penalty (for example, missing a sleep apnea event). It is calculated as

Precision

Precision measures the accuracy of positive predictions by determining the proportion of correctly predicted positives among all predicted positives. High precision is crucial when false alarms (false positives) are costly. Formally,

Data preparation and pre-processing

The authors began by extracting all annotated ECG signals that were labelled being apneic or not. They conducted a thorough examination of several ECG characteristics, including the intervals between successive ECG pulses (R - R intervals), the amplitudes of each pulse, the variation in pulse amplitudes, and the overall energy of the ECG pulses. Among these parameters, the R - R intervals were identified as particularly indicative in discerning apnea episodes. Two datasets were prepared for training and evaluation:

-

Dataset A: Contains ECG signals annotated for apneic and non-apneic events, with each segment lasting 60 seconds. This dataset serves as a baseline for unimodal analysis.

-

Dataset B: Expands upon Dataset A by incorporating additional respiratory signals (Resp A and Resp N). This multimodal dataset allows for a more comprehensive analysis of sleep apnea episodes.

Each annotation was provided for the subsequent one minute of the ECG signal, totaling 6000 ECG signals (calculated as 60 seconds multiplied by 100 signals per second) for each annotation. Then, these packets of ECG signals were mapped with corresponding annotation to compile a dataset with several rows containing 6000 features each, which was named as Dataset A. Furthermore, the authors expanded their analysis by incorporating additional respiratory data, specifically the Resp A and Resp N signals, available for a subset of eight subjects. Initially, all signals were normalized to a common scale. Given the susceptibility of physiological signals to various types of noise and artifacts, the authors applied a combination of band-pass filtering and adaptive noise cancellation techniques. This led to the creation of Dataset B, which integrates ECG, Resp A, and Resp N measurements against the apnea annotations. To rigorously evaluate the model, the resultant datasets were divided into training, testing, and validation sets, following a 70:9:21 ratio. The exact distribution of data across various classes in each subset for Dataset A is elaborately depicted and discussed in Table 2. This strategic partitioning of data was essential for assessing the model’s performance across multiple parameters. This overview highlights the diverse range of methodologies employed over the years to detect OSA, showcasing the evolution of machine learning and deep learning techniques in this field. In 2015, Song et al.14 used a Discriminative HMM and achieved an impressive accuracy of 97.10%, while Chen et al.40 also in 2015, employed a SVM, achieving slightly better accuracy at 97.41%. Moving to 2017, M. Cheng et al.41 utilized Long Short-Term Memory (LSTM) networks for OSA detection, reporting an accuracy of 97.80%, demonstrating the growing sophistication in model selection. In 2019, there was a significant leap in model complexity and performance. R. Stretch used a combination of machine learning algorithms, including Logistic Regression, Artificial Neural Networks, SVM, K-Nearest Neighbors, and Random Forest, but with a comparatively lower accuracy of 80.50%. On the other hand, X. Liang’s42 work that year combined CNN with unfolded bidirectional LSTM, which achieved an outstanding accuracy of 99.80%, underscoring the effectiveness of combining CNNs with RNNs for temporal and spatial feature extraction. Similarly, Wang et al.15 utilized Deep Residual Networks to achieve an accuracy of 94.40%, while T. Wang introduced a Modified LeNet-5 model for sleep apnea detection, achieving an accuracy of 97.10%. Further advancements were seen in 2020, with Bozkurt et al.43 developing an ensemble classifier that combined various machine learning algorithms like Decision Trees, K-Nearest Neighbors, and SVMs for SA detection, resulting in an accuracy of 85.12%. Finally, McClure et al.35 employed a Multi-scale Deep Neural Network combined with a 1D-CNN to classify and detect OSA, CSA, coughing, sighing, and yawning using wearable sensors, achieving a commendable accuracy of 87.0%. These various approaches demonstrate the steady progress in the field, with deep learning models, particularly CNNs, LSTMs, and their hybrid combinations, becoming more dominant in achieving higher detection accuracies.

Comparative benchmarking with prior studies

In order to contextualize SleepNet’s performance, we compare it against several notable studies that have reported apnea detection results using the Apnea-ECG benchmark. Table 2 summarizes these prior methods by listing the authors and publication year, the primary model or algorithm they employed, the dataset configuration, the input signal modality, and the detection accuracy achieved. In the remarks column, we also highlight each approach’s main strengths or weaknesses. Although every study listed here relies on PhysioNet’s Apnea-ECG data for evaluation, they differ in their choice of data-splitting strategies—some use the standard train/test division, while others apply cross-validation (either with or without subject-level partitioning).

In the literature as shown in Table 2, single-lead ECG approaches exhibit a broad spectrum of reported accuracies, largely influenced by evaluation protocols and model complexities. For instance, studies employing subject-overlapping cross-validation tend to report very high performance: Song et al. achieved 97.10% accuracy using a hidden Markov model combined with an SVM; Chen et al. likewise reported 97.41% with an SVM alone; Cheng et al. observed 97.80% accuracy when using an LSTM network; Liang et al. attained 99.80% by integrating a convolutional neural network with a bidirectional LSTM; Wang et al. reported 94.40% using a ResNet architecture; and Wang et al. achieved 97.10% with a modified LeNet-5. However, because these methods allow recordings from the same individual to appear in both training and test folds, they often overestimate real-world performance. By contrast, studies that strictly enforce subject-independent partitioning present more moderate yet realistic results: Stretch et al. reported 80.50% accuracy using a classical machine-learning ensemble; Bozkurt et al. achieved 85.12% by combining decision trees, k-nearest neighbors, and SVMs; and McClure et al. obtained 87.00% accuracy with a multi-scale deep neural network and one-dimensional CNN applied to wearable signals. These mid-80% figures better reflect a model’s capacity to generalize to previously unseen subjects. In this context, SleepNet’s ECG-only configuration demonstrates competitive performance, placing it firmly among the leading single-lead approaches under a subject-independent evaluation. By incorporating chest and abdominal respiratory effort signals alongside oxygen saturation in addition to ECG, the multimodal variant achieves even higher detection efficacy. This enhancement underscores the clinical value of adding direct respiratory and \(\hbox {SpO}_2\) measurements—features that complement ECG’s indirect markers of apnea—and highlights SleepNet’s advantage over models that rely exclusively on ECG.

Experimental setup

Our models including both the pre-trained versions and our proposed architecture—were implemented in Python with TensorFlow. Initially, we carried out training on a personal laptop featuring an AMD Ryzen 5 3500U CPU, an NVIDIA 940M GPU (compute capability 5.0), and 16 GB of RAM, using a limited number of epochs. To achieve more extensive training, we then switched to Kaggle’s environment, taking advantage of GPUs with compute capability 6.0 and 16 GB of VRAM. In total, each model was trained for 120 epochs to maximize accuracy during training, validation, and testing. The Table 3 displays the distribution of data across two datasets, Dataset A and Dataset B, within a machine learning framework. These datasets are categorized into three groups: Normal, Severely Affected (SA), and Total. Additionally, the data is divided into three parts: For the training subset, there are 11,907 samples in total, with 7,353 labeled as Normal and 4554 as SA. This shows that while a large portion of the training data focuses on normal cases, a significant number of severely affected cases are included to ensure the model can recognize critical conditions. The validation set, used to refine the model’s parameters and mitigate overfitting, consists of 1531 samples. Of these, 941 are Normal and 590 are SA, maintaining a distribution ratio similar to that of the training data. This consistent distribution ensures that the validation outcomes accurately reflect the training process. Lastly, the testing subset, which assesses the model’s performance on newly unseen data, consists of 3572 samples. This includes 2241 Normal and 1358 SA cases. The distribution in the testing data aligns with that of the training and validation subsets, ensuring a balanced evaluation metric.

Algorithms

This research evaluates various recurrent neural network architectures—namely LSTM, GRU, and BiGRU to handle time-series inputs like ECG recordings. In addition, we investigate convolutional neural network approaches, including pretrained models such as VGG16 and AlexNet, as well as custom 1D-CNNs. A brief overview of each model follows. When choosing between LSTM and GRU-based designs, several considerations arise: the complexity of the task, the nature of the dataset, computational cost, memory requirements, and the trade-off between accuracy and training time. LSTMs excel at modeling long-term dependencies in sequential data thanks to their memory cells and gating mechanisms, which help avoid the vanishing gradient issue. This feature allows them to retain and retrieve critical information over extended sequences, making LSTMs a popular choice for applications like speech recognition, time-series forecasting, and NLP. Conversely, GRU networks boast computational efficiency and fewer parameters compared to LSTMs, rendering them faster to train and deploy in scenarios where memory constraints or speed are critical factors. Researchers assess these considerations based on their specific task requirements and dataset characteristics to determine whether LSTM or GRU networks offer the most optimal performance and efficiency for their deep learning models.

Parameter sharing within neural networks involves employing identical or similar parameters across multiple layers to foster feature reuse and reduce the total number of trainable parameters. This practice facilitates learning hierarchical representations of data and capturing common patterns across different parts of the network. In CNNs, parameter sharing occurs in convolutional layers, where identical filter weights are applied to various spatial locations in the input data. This approach enables the network to efficiently learn spatial hierarchies of features and generalize effectively to new data. Similarly, in RNNs like LSTMs and GRUs, parameter sharing is achieved through recurrent connections that propagate information across time steps, enabling the network to maintain memory and capture temporal dependencies in sequential data. By embracing parameter sharing, neural networks can acquire more robust representations, enhance performance across diverse tasks, mitigate the risk of overfitting, and improve computational efficiency.

In the realm of training neural networks for detecting sleep apnea, researchers typically adhere to a meticulous procedure aimed at enhancing model performance and ensuring its applicability across various scenarios. This rigorous process encompasses several key stages: data preprocessing, model selection, hyperparameter optimization, delineation of training-validation-testing sets, and thorough performance assessment. Data preparation is the foundational step, encompassing operations like normalization, feature extraction, and the treatment of missing values to ensure the dataset remains consistent and reliable. Next, choosing an appropriate network architecture—whether an LSTM or a CNN—depends on the specific requirements of the problem. Tuning hyperparameters, such as learning rate, batch size, and regularization methods, is crucial for improving generalization and preventing overfitting. Splitting the data into training, validation, and test sets allows for an unbiased evaluation of model performance on unseen samples, offering a clearer picture of how it will behave in practical scenarios. Evaluating the model with metrics like accuracy, sensitivity, and specificity provides a comprehensive view of its strengths: accuracy reflects overall correctness, sensitivity measures the ability to correctly identify apnea events (true positives), and specificity quantifies how well the model avoids false alarms (true negatives). By rigorously following this workflow preprocessing data, selecting and tuning models, and assessing performance on separate datasets—researchers can iteratively refine their neural networks and overcome training challenges, ultimately yielding a more dependable and effective sleep apnea detector.

Recurrent neural networks

RNNs are a class of artificial neural networks specifically designed to handle sequences of data. Unlike traditional feedforward networks, RNNs build connections between nodes that form a directed graph, allowing information to be carried forward over time. This built-in “memory” makes them ideally suited for applications where past inputs influence future outputs—such as analyzing time-series signals, processing natural language, or recognizing spoken words. Because RNNs retain information from previous steps, they can capture temporal dependencies that ordinary neural networks cannot. This distinctive characteristic allows RNNs to retain context and utilize it to affect the output at every time step. Fundamentally, the output of an RNN at a specific moment relies on both the present input and the outputs from previous time steps, enabling the model to successfully and efficiently capture and employ long-term dependencies in the data.

-

Long short-term memory: LSTM, commonly applied to graphical data like ECG, is a sophisticated version of RNN that allows data to endure over time. Its feedback connections enable it to process sequences of data in their entirety. At the heart of LSTM is a ’cell state,’ a memory component that retains its state over time. In an LSTM model, the modification of information in the cell state is controlled through gates, which regulate the flow of information in and out of the cell. This process is facilitated by a combination of pointwise multiplication and a sigmoid layer in the neural network.

-

Gated recurrent unit: Developed as an optimization to the standard RNN archi- tecture, GRUs41,46 address the problem of long-term dependency, a challenge where RNNs struggle to carry information across many time steps. The improvement in GRUs is realized through gating mechanisms that control information flow inside the unit. GRUs feature two gates: the update gate, which determines how much the unit’s state is refreshed with new data, and the reset gate, which decides the amount of previous information to discard. GRUs are often considered a more streamlined and efficient option compared to LSTMs, as they have fewer parameters. This reduction in parameters can result in quicker training periods and decreased computational complexity.

-

Bidirectional gated recurrent unit: Bidirectional Gated Recurrent Units (BiGRUs) represent an enhanced version of traditional Gated Recurrent Units (GRUs) aimed at better modeling of sequential data in deep learning applications. Unlike standard GRUs, BiGRUs use a bidirectional structure, allowing the network to obtain contextual insights from both preceding and succeeding sections of a sequence. This is accomplished by employing two distinct GRU units: one that handles the sequence from start to finish (forward GRU), and the other that manages the sequence in reverse (backward GRU). The forward GRU collects information regarding the past context, whereas the backward GRU captures insights about the future context. At every time step, the results from both GRUs are merged, offering a more comprehensive representation of the sequence by integrating context from both sides. This two-way processing is especially beneficial in tasks where comprehending both previous and upcoming states is essential for precise predictions, like in time-series analysis, natural language processing, and speech recognition.

Convolutional neural networks

-

VGG16 The writers utilized the VGG16 model, a renowned 16-layer CNN that has proven highly effective in image classification applications. The structure comprises several convolutional layers succeeded by three Fully Connected layers. The initial two FC layers have 4096 channels each, and their main function is to combine features obtained from the convolutional layers. The third FC layer is designed for 1000-way classification, satisfying the needs of the ImageNet Large Scale Visual Recognition Challenge (ILSVRC). A softmax layer is included at the end of the network to generate the final classification probabilities. Nonetheless, the network’s depth and the high quantity of nodes in the fully connected layers lead to a significant model size, causing it to surpass 500MB. Even with its substantial size, the VGG16 architecture continues to be a favored option for tasks needing deep feature extraction, owing to its straightforward yet effective design.

-

AlexNet To build a leaner, faster model, the researchers chose AlexNet—an eight-layer CNN known for balancing depth with computational speed. It features five convolutional blocks, each paired with max-pooling, followed by three fully connected layers that use ReLU activations. A key element is the dropout applied before the first and second fully connected layers, which helps curb overfitting and boosts generalization to new data. Although AlexNet is less deep than architectures like VGG16, it still performs remarkably well on image classification tasks. This mix of efficiency and strong accuracy makes AlexNet ideal for situations where computing resources are tight but high performance is still needed.

Combination of CNN and RNN

Encouraged by the promising outcomes achieved individu- ally with recurrent neural networks and the convolutional neural network architectures the authors combined the strengths of the two into hybrid models.

-

Hybrid AlexNet This model combines the spatial feature extraction strengths of AlexNet, a CNN, with the temporal processing capabilities of LSTM networks. It operates in two distinct phases. Initially, AlexNet extracts crucial spatial features from the input data through its convolutional layers, which are particularly effective at detecting and isolating important patterns and structures. These extracted features, which represent the spatial characteristics of the data, are then passed on to the LSTM network. The LSTM component of the model processes the sequential nature of the data, capturing long-term dependencies and temporal relationships. By leveraging both AlexNet and LSTM, this hybrid model effectively addresses both spatial and temporal aspects of the data, enhancing the model’s overall ability to analyze complex, dynamic patterns. Known for its effectiveness in handling sequential and time-series data, LSTM analyzes these features over time, capturing crucial temporal dependencies that are indicative of apneic events. This fusion of AlexNet and LSTM leverages their respective strengths - AlexNet’s ability to recognize detailed features and LSTM’s skill in temporal analysis. Similarly, a composite model combining AlexNet with Gated Recurrent Units (Hybrid AlexNet v2) was de- veloped, capitalizing on GRUs’ efficiency in sequential data processing.

-

Hybrid CNN After the success of the hybrid AlexNet models, the researchers extended their exploration into combining other neural network architectures to further enhance the analysis of complex datasets like those found in apnea detection. This development resulted in the creation of two additional hybrid models, each designed to leverage the complementary strengths of CNNs and RNNs. The first model, 1D-CNN + LSTM (Hybrid CNN v1), takes advantage of 1D-CNNs for extracting relevant features from time-series data. The 1D-CNN layers process the data linearly, identifying temporal patterns and key features. Following this, the LSTM network captures long-term dependencies, making it particularly effective for detecting the intricate, sequential patterns indicative of apneic events. The LSTM’s ability to retain information over time allows it to recognize and respond to complex temporal changes within the data. The second model, 1D-CNN + GRU (Hybrid CNN v2), also utilizes 1D-CNNs for initial feature extraction but incorporates GRUs in place of LSTMs. GRUs, which feature a more streamlined gating mechanism compared to LSTMs, strike a balance between computational efficiency and the ability to capture sequential dependencies in the data. While not as complex as LSTMs, GRUs are still highly effective for sequential data processing and require less computational power, making this hybrid model a suitable choice for tasks where efficiency is a priority without sacrificing the ability to model temporal relationships. Both models combine the strengths of CNNs for feature extraction with advanced recurrent networks for temporal analysis, making them well-suited for detecting sleep apnea from time-series data.

Proposed model: SleepNet

By placing a BiGRU layer immediately after the 1D-CNN stages, SleepNet gains a powerful edge in interpreting temporal features for sleep apnea identification. The bidirectional nature of BiGRU means the network analyzes each heartbeat sequence in both forward and reverse directions, allowing it to leverage information from before and after any given moment. In ECG analysis, this is especially important: knowing what precedes and follows a specific heartbeat can be critical when trying to pinpoint subtle apneic episodes. The 1D-CNN front end excels at spotting local ECG characteristics—such as QRS complexes, P waves, and T waves—regardless of where they appear in the signal, thanks to its translation-invariant filters. Unlike traditional pipelines that rely heavily on handcrafted features, these convolutional layers can learn clinically relevant patterns directly from the raw ECG waveform with minimal preprocessing. Research in biomedical signal processing consistently shows that 1D-CNNs outperform conventional feature-engineering approaches in extracting meaningful signal traits. Building on this, the BiGRU layer processes the extracted features bidirectionally, giving the model simultaneous access to both past and future context. This dual perspective is a major advantage when trying to detect sleep apnea, since an apneic event’s significance often depends on the sequence of heartbeats surrounding it. Empirical evidence in ECG classification tasks confirms that BiGRU-based architectures achieve better accuracy by leveraging contextual information from both time directions. Although previous studies have experimented with pairing CNNs and BiGRUs for ECG classification, SleepNet distinguishes itself by adopting a carefully streamlined and hyperparameter-tuned design. With additional layers and optimized settings, it is better equipped for realistic deployment and demonstrates higher accuracy in identifying apnea events than earlier models.

SleepNet’s architecture is carefully designed to balance thorough feature extraction with efficient classification. It begins with convolutional layers that progressively learn from the raw ECG input. The first convolutional stage uses 64 filters of size 3 to capture basic waveform characteristics, while a second convolution with 128 filters then builds on those low-level patterns to uncover more detailed structures. Interspersed max-pooling steps reduce the spatial size of these feature maps, which not only helps guard against overfitting but also keeps the computational cost in check. Once the convolutional hierarchy has distilled essential ECG features, BiGRU layers take over, processing the data in both forward and backward directions. The initial BiGRU layer draws in temporal relationships from past and future heartbeats, and a dropout layer follows to randomly deactivate some units during training, which further reduces overfitting. A second BiGRU layer deepens the network’s understanding of sequence-level dependencies, ensuring that no critical temporal pattern is overlooked. After this, the resulting maps are flattened into a single vector so they can be handled by fully connected (dense) layers. These dense layers, activated by ReLU, introduce nonlinearity and refine the extracted features for the final classification step. Dropout is applied again to improve generalization. In the end, a single-node output layer with a sigmoid activation produces a probability score, making the model suitable for distinguishing between apnea and non-apnea segments. To evaluate SleepNet’s performance, metrics such as accuracy, sensitivity, and specificity are used. When comparing it against traditional, single-signal methods, SleepNet consistently outperforms them, demonstrating higher accuracy and a better balance of true-positive and true-negative rates. By combining ECG data with additional physiological inputs, SleepNet leverages complementary information streams to deliver a more reliable diagnosis of sleep apnea. The performance gains evident in these multimodal results underscore the value of integrating multiple signals, showing that SleepNet is a promising tool for real-world clinical and non-clinical applications (Table 4).

Algorithm 1 details SleepNet’s workflow, which sequentially combines convolutional and recurrent modules to classify OSA. The process begins with the model receiving the training dataset and ultimately producing OSA predictions. The initial stage comprises two back-to-back blocks of one-dimensional convolutional layers, each using a kernel size of three to scan for local features within the ECG signal. Directly after each Conv1D block, a MaxPooling1D layer with a pool size of two downsamples the feature maps—this both trims unnecessary spatial dimensions and preserves critical signal characteristics, thereby improving computational efficiency. Once these convolutional and pooling layers have distilled fundamental spatial patterns, the network transitions to temporal analysis using two successive BiGRU layers. By processing sequences in forward and reverse directions, each BiGRU unit captures contextual information from both past and future time points—an invaluable capability when dealing with ECG data, where neighboring heartbeats can carry significant diagnostic clues. To discourage overfitting, a dropout layer with a rate of 0.2 follows each BiGRU block. During training, this dropout step randomly deactivates 20 percent of the hidden units, encouraging the network to learn more robust features that generalize well to unseen data.

After uncovering temporal dependencies, SleepNet flattens the BiGRU output into a single vector, preparing it for the final classification stage. This vector is fed into two fully connected (dense) layers, each followed by another dropout at the same 0.2 rate. These dense layers introduce nonlinearity and further refine the learned features. In the final step, a dense output node with a sigmoid activation generates the model’s OSA probability score, allowing straightforward binary decision-making (apnea vs. non-apnea). By integrating convolutional blocks for spatial feature extraction with bidirectional recurrent modules for sequence modeling, SleepNet effectively captures both the local waveform details and the broader temporal patterns necessary to detect sleep apnea events. However, this layered, sequential approach does carry computational costs—especially when handling large datasets—since each signal must pass through multiple Conv1D, BiGRU, and dense transformations. While dropout aids in reducing overfitting, careful tuning remains essential to prevent the network from becoming too tailored to the training set. Looking ahead, SleepNet’s modular design offers several avenues for enhancement. Researchers might experiment with additional forms of regularization (e.g., weight decay or batch normalization), test alternative model architectures (such as transformer-based blocks), or incorporate other physiological signals—like chest respiratory effort or oxygen saturation—to further enrich the input data. Such extensions could boost generalizability and resilience in clinical settings. Moreover, exploring hybrid approaches that combine SleepNet with simpler, lightweight classifiers might help strike a better balance between performance and deployment efficiency in real-world healthcare environments.

Strengths of the proposed approach

-

Improved diagnostic precision: One of the standout features of SleepNet is its ability to incorporate various physiological signals, such as ECG, nasal airflow, and abdominal respiratory effort. This integration has significantly boosted the model’s diagnostic accuracy, allowing it to achieve an impressive accuracy rate of 95.19%. This surpasses many existing methods that rely on a single signal and underscores the model’s potential to provide timely and accurate detection of sleep apnea—a condition that can lead to severe health risks if left untreated.

-

Integration of multimodal data: SleepNet distinguishes itself by utilizing data from multiple physiological sources, capitalizing on the unique strengths of each signal. This holistic approach provides a more detailed analysis of sleep patterns and respiratory behavior, enabling better differentiation among the obstructive, central, and complex types of sleep apnea. This holistic approach provides a more precise and detailed understanding of sleep apnea events, resulting in improved diagnostic outcomes.

-

Strong performance indicators: In addition to high accuracy, SleepNet demonstrated excellent sensitivity and specificity rates, key performance indicators for medical diagnostic tools. These metrics emphasize the model’s reliability in correctly identifying sleep apnea cases while minimizing the risk of false positives. This fosters patient confidence and supports effective treatment planning by ensuring that patients are correctly diagnosed and subsequently treated in a timely manner.

-

Adaptability for individualized care: SleepNet’s architecture is flexible, making it adaptable for individual patient profiles. This feature is particularly important in personalized medicine, where predictions can be further refined to align with the unique physiological traits and sleep habits of each patient. By incorporating personalized data in future iterations, the model has the potential to enhance its diagnostic precision, offering tailored solutions for each patient’s condition.

Limitations of the proposed approach

-

Reliance on data quality: While SleepNet excels in performance, its success heavily depends on the quality of the input signals. Factors such as noise, motion artifacts, and improper sensor placement can degrade the quality of the data, leading to compromised results. This emphasizes the importance of robust preprocessing and validation mechanisms to ensure reliable inputs, and the need for consistent data quality assurance to maintain model performance.

-

Limited applicability across diverse populations: The datasets used for training and validation may not fully capture the wide range of variability typically encountered in real-world clinical settings. These datasets are often limited in scope, potentially lacking sufficient diversity in patient demographics, medical histories, lifestyles, or comorbid conditions. As a result, the model’s performance could be less reliable when applied to populations that differ from those represented in the training data. For instance, the model may struggle to generalize to patients with uncommon medical conditions or those from underrepresented demographic groups. This limitation could hinder the model’s effectiveness in broader clinical applications, reducing its ability to accurately diagnose and predict sleep apnea in diverse patient populations. To address this issue, it is critical to expand the diversity of training datasets, incorporating data from various demographic groups and individuals with a wide range of medical histories and comorbidities. Additionally, employing techniques like transfer learning, domain adaptation, or multi-center studies could improve the model’s generalizability and its applicability in real-world clinical settings.

-

Implementation challenges: The complexity of processing and integrating multiple types of physiological data adds significant challenges to SleepNet’s development and deployment. The need to process large volumes of data from different sources requires advanced computational resources and technical expertise, which may limit its deployment in resource-constrained environments. These challenges must be addressed to ensure that the model can be practically implemented in diverse clinical and home settings.

-

Lack of transparency: Like many deep learning models, SleepNet operates as a “black box,” meaning its decision-making process is not easily interpretable. This lack of transparency can be a significant barrier for clinicians who require clear explanations for how predictions are made, especially in high-stakes healthcare environments where understanding the reasoning behind a diagnosis is crucial. In clinical settings, the ability to trust and verify the outcomes of a model is essential for its adoption, as healthcare professionals need to ensure that the model’s predictions align with clinical knowledge and patient-specific factors. Without interpretability, clinicians may be reluctant to fully rely on the model, as they cannot easily assess whether the decision-making process aligns with their own expertise or if it may be influenced by irrelevant patterns in the data. To address this challenge, it will be important to develop methodologies that enhance the model’s explainability, such as attention mechanisms, feature importance techniques, or visualization techniques that highlight the regions of input data influencing the model’s predictions. These tools could help clinicians better understand the rationale behind the model’s predictions, increasing their confidence in using the model for decision support in diagnosing and treating sleep apnea. Additionally, efforts to integrate model explanations into clinical workflows will facilitate more transparent collaboration between AI models and healthcare providers, ultimately driving the wider adoption of AI-based diagnostic tools.

-

Ongoing validation requirements: SleepNet’s continued success will depend on continuous validation and regular retraining. As new data becomes available and patient demographics evolve, the model must be regularly updated to maintain its relevance and accuracy. This ongoing validation will be critical to ensuring that SleepNet remains effective in real-world clinical scenarios and adapts to the changing landscape of patient data.

In conclusion, SleepNet represents a significant advancement in sleep apnea detection through its innovative use of multimodal data integration. The model’s strengths, such as improved diagnostic precision, strong performance indicators, and adaptability for individualized care, position it as a powerful tool in the realm of sleep medicine. However, challenges such as reliance on data quality, limited applicability across diverse populations, and a lack of interpretability must be addressed for the model to reach its full potential. Overcoming these limitations will be crucial for SleepNet’s widespread adoption and its effectiveness in clinical settings, ultimately this contributes to improved patient outcomes in the detection and management of sleep apnea.

Training procedure

In order to make the data from the two sources suitable for multimodal model training, the raw signals are processed. Both ECG and respiratory signals are subject to normalization. This process normalizes the signal amplitudes to a standard scale, typically between 0 and 1 or − 1 and 1. Normalization is essential because it ensures that neither signal disproportionately influences the model due to differences in their amplitude ranges. Real-world signals often contain noise. For ECG signals, this might include electrical noise, muscle artifacts, or base-line wander. Similarly, respiratory signals may have artifacts related to movement or sensor displacement. Common filtering techniques include band-pass filters to retain frequencies of interest while eliminating noise outside this band. For ECG, filters target the typical ECG frequency range (0.05–100 Hz). For respiratory signals, the frequency range of interest might differ, and filters are chosen accordingly. Besides filtering, other techniques like Independent Component Analysis (ICA) and Wavelet Transform are explored to remove specific types of artifacts. The continuous signals are segmented into shorter, fixed-length windows. This step is crucial for analyzing the signals in smaller, more manageable segments and for feeding them into the neural network. After segmentation, it’s vital to align the windows of the ECG and respiratory signals in time. This ensures that the model learns from corresponding segments of each signal, maintaining the temporal relationship between the cardiac and respiratory activities. The preprocessed and segmented signals are arranged into a dataset format suitable for training the neural network. This involves combining the corresponding segments of ECG and respiratory signals and associating them with labels (indicative of sleep apnea events or normal breathing). This approach utilizes the raw signal data, preserving the original temporal dynamics of the physiological signals.

Results