Abstract

Segmenting echocardiographic images is a crucial step in assessing heart function, as clinical indicators can be obtained by precisely delineating the left ventricle. The success of subsequent heart analyses depends entirely on the precision of this segmentation. However, echocardiography is characterized by ambiguity and heavy background noise interference, making accurate segmentation more challenging. Present methods lack efficiency and are prone to mistakenly segmenting some background noise areas, such as the left ventricular area, due to noise disturbance. To address these issues, we introduce P-Mamba, which integrates the Mixture of Experts (MoE) concept for efficient pediatric echocardiographic left ventricular segmentation. Specifically, we utilize the recently proposed ViM layers from the vision mamba to enhance our model’s computational and memory efficiency while modeling global dependencies. In the DWT-based (Discrete Wavelet Transform) Perona-Malik Diffusion (PMD) Block, we introduce a block that suppresses noise while preserving the left ventricle’s local shape cues. Consequently, our proposed P-Mamba innovatively combines the PMD’s noise suppression and local feature extraction capabilities with Mamba’s efficient design for global dependency modeling. We conducted segmentation experiments on two pediatric ultrasound datasets and a general ultrasound dataset, namely Echonet-dynamic, and achieved state-of-the-art (SOTA) results. Specifically, on the Pediatric PSAX (8959 images) and Pediatric A4C datasets (6425 images), we achieved Dice scores of 0.922 and 0.906, respectively; on the EchoNet-Dynamic dataset (19882 images), we achieved a Dice score of 0.931. Leveraging the strengths of the P-Mamba block, our model demonstrates superior accuracy and efficiency compared to established models, including vision transformers with quadratic and linear computational complexity.

Similar content being viewed by others

Introduction

Congenital heart diseases (CHD) pose significant health risks, necessitating precise diagnostic tools like the Echocardiogram for early detection and treatment in children1. Among these indices, the Left Ventricular Ejection Fraction (LVEF) is the most commonly used and vital metric for assessing systolic function2. The LVEF is predominantly calculated using the biplane Simpson’s standard protocol method in clinical settings3. This technique involves the manual delineation of the left ventricular endocardium by physicians in specific frames of the apical two-chamber (A2C) and apical four-chamber (A4C) echocardiographic views, a process essential for the identification of the end-systolic volume (LVESV) and end-diastolic volume (LVEDV).

Machine learning and artificial intelligence have significantly improved the reliability and precision of segmenting the left ventricle (LV) in adult echocardiography, as shown by multiple research projects. However, machine learning is more difficult in youngsters due to diverse anatomical anomalies, heart rate, stature, and cooperative capacity. Various factors influence the spatial and temporal resolutions, eventually influencing echocardiographic imaging quality4. For example, the size and shape of the heart in children fluctuate considerably due to the growth and developmental stages. This variability, along with disorders such as CHD, makes pediatric heart anatomy more complicated than that of adults, limiting the application of adult-based machine learning models. Furthermore, pediatric echocardiograms frequently exhibit lower resolution and increased speckle noise, especially when obtained using portable ultrasound instruments in non-specialized settings, affecting both the spatial and temporal resolutions of the images5. As a result, there is concern about how well machine learning models built on adult datasets can be applied in pediatric echocardiography owing to the more significant variabilities.

State Space Models (SSMs) have recently extracted broad interest from researchers. SSMs effectively model dynamic systems using linear equations, leading to reduced computational complexity in processing sequential data. They enhance data processing speed and model scalability by integrating input-dependent mechanisms with hardware-aware architectures. In contrast to conventional Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) models, SSMs optimize computational resource usage while effectively capturing long-range dependencies, making them ideal for implementation on edge devices6. As the first proposed basic model built by SSMs, Mamba7 has achieved superior performance compared to transformers in long-range dependency modeling, even with linear complexity. Vision Mamba8 was later proposed to apply Mamba to the vision domain and achieves superb efficiency-accuracy balance compared with DeiT. U-Mamba9 was the first Mamba-based medical image segmentation method and boasted its efficient design. Based on the above works, we apply the Mamba structure to our model to guarantee model efficiency.

On the other hand, echocardiography often encounters challenges, including significant speckle noise, limiting its imaging technique. As shown in Fig. 1, current approaches easily mistake some background noise areas for the target area. Recent studies10 have explored denoising techniques to improve ultrasound image quality, focusing on reducing noise and enhancing visualization. To suppress background noise while maintaining target structural features, we draw inspiration from Perona–Malik Diffusion (PMD)11, initially in de-noising tasks to achieve this goal. Therefore, we tailor P-Mamba for more efficient and accurate pediatric echocardiographic LV segmentation. This model can eliminate noise while preserving the local target boundary details for the best performance. At the same time, our model demonstrates superior efficiency.

Our main contributions are as follows:

-

We propose P-Mamba, which innovatively combines the PMD’s noise suppression and local feature extraction ability with the Vision Mamba’s efficient design for global dependency modeling and integrates the Mixture of Experts (MoE)12 concept to set new performance benchmarks on noisy pediatric echocardiogram datasets.

-

Benefiting from PMD, our model excels by suppressing noise while preserving and enhancing target edges in ultrasound images, as shown in Fig. 1.

-

Extensive experiments demonstrate that our P-Mamba achieves superior segmentation accuracy and efficiency to other methods, including specialized ultrasound segmentation models13,14, vision transformers with quadratic15 and linear16,17 computational complexity. In addition, Fig. 2 visually compares the efficiency among different models.

Related work

To date, deep learning (DL) development has promoted automatic medical image segmentation, and several well-known deep learning frameworks have provided innovative ideas for echocardiography segmentation with outstanding performance.

General deep learning segmentation methods

Deep learning has revolutionized image segmentation in recent years, a crucial task in computer vision. Among the pioneering methods, U-Net18 stands out for its effective encoder-decoder architecture, which captures context through its contracting path and refines localization through its expansive path. U-Net’s simplicity and efficiency have made it a foundational medical image segmentation model.

Pyramid Vision Transformer (PVT)15 and TransUNet19 both draw significant inspiration from transformer architecture. PVT captures long-range dependencies through self-attention mechanisms and hierarchical feature representations, making it highly effective for dense prediction tasks. Similarly, TransUNet integrates transformer modules into the traditional U-Net structure, leveraging the transformer’s attention mechanisms to enhance feature encoding and significantly improve segmentation accuracy, particularly in complex scenarios. Both models demonstrate the transformative impact of incorporating transformer principles into segmentation tasks.

UniFormer20, SpectFormer21, and Segformer22 represent some of the most contemporary segmentation models. Segformer achieves state-of-the-art performance in multiple segmentation benchmarks with lower computing costs by integrating hierarchical transformers with effective feature fusion approaches. This simplifies the transformer architecture while maintaining its key benefits. UniFormer merges convolutional networks with transformers to balance local feature extraction and global context understanding, efficiently capturing multi-scale features to enhance segmentation performance. SpectFormer delves deeper into the transformer domain by incorporating spectral analysis, emphasizing frequency domain information to complement traditional spatial representations. These models exemplify the cutting-edge advancements in segmentation technology.

Medical image segmentation methods

Recently, innovative deep-learning methods have become increasingly vital in medical analysis and represent the forefront of leveraging complex neural networks for precise medical image segmentation. For instance, CMU-Net13 and NHBS-Net14 are specialized ultrasound segmentation models. CMU-Net uses hybrid convolution and multi-scale attention gates to improve feature extraction and context information. NHBS-Net has advanced attention and fusion modules, making clinical ultrasound applications more accurate and error-free.

U-Mamba9 was the first general-purpose biomedical image segmentation method which integrates the global context understanding from Mamba. It designed a hybrid CNN-SMM block and employed the encoder-decoder framework within a U-shaped structure. Recently, there have been limited Mamba-based architectures that have shown promise in the echocardiographic segmentation task. MambaEviScrib23 introduces Mamba blocks alongside evidence-guided consistency to improve CNN robustness in a weakly supervised learning approach. However, such methods do not explicitly address feature-space denoising, which is a general problem in ultrasound images.

In the context of biomedical image segmentation, nnU-Net24 has emerged as a self-configuring solution that automatically adapts to a variety of datasets without requiring manual modifications. This model streamlines the segmentation pipeline, from preprocessing to postprocessing, by using a set of heuristic rules and dataset-specific configurations. 3D nnU-Net25, an extension of nnU-Net, utilizes 3D convolutions to handle volumetric data, making it particularly effective in processing echocardiographic images, where maintaining temporal consistency during cardiac cycles is essential. Additionally, a post-processing approach is introduced to guarantee temporal consistency in segmentation results26, which employs a 2D+time cardiac shape autoencoder that corrects inconsistencies across frames by learning a physiologically interpretable embedding of the cardiac shape. This technique ensures that the segmented sequences maintain both anatomical accuracy and temporal coherence, resulting in more reliable segmentation throughout the entire cardiac cycle.

However, when applying the present segmentation methods to segment the left ventricle in echocardiograms, whether pediatric or adult, the challenge exists since the irregular shape of the left ventricles still cannot be well segmented since these methods pay insufficient attention to high-frequency boundary details, which can be seen in Fig. 1. Also, present methods lack efficiency, hindering their wider application, as shown in Fig. 2. Another concern of the studies above is the limitations of their reliance on proprietary datasets. The EchoNet-Peds dataset, developed by Stanford University, indicates the first publicly available pediatric echocardiography dataset27, featuring 4467 echocardiograms from 1,958 patients, including a 43\(\%\) female demographic and ages ranging from newborns to 18 years. This comprehensive dataset yielded 7643 video clips and 17,600 labeled images, primarily from A4C and Parasternal Short-Axis (PSAX) view clips. As a result, the video clips were strategically allocated, with 6114 (80\(\%\)) for training, 765 (10\(\%\)) for testing, as well as 764 (10\(\%\)) for validation.

Methodology

We illustrate the overall architecture of P-Mamba in Fig. 3. The P-Mamba network, designed for automatically segmenting the left ventricle in echocardiograms, leverages the Mixture of Experts framework. The DWT-based PMD block suppresses background noise interference while preserving target edges to capture more local features. The ViM block8, sometimes also mentioned as the Bidirectional Mamba block, is designed to guarantee the high efficiency of our model while encapsulating global dependencies. A gating mechanism inspired by the MoE structure dynamically selects appropriate pathways for feature processing, enhancing efficiency and segmentation accuracy.

The P-Mamba encoder, incorporating multiple P-Mamba blocks, extracts hierarchical features at descending resolutions of 1/4, 1/8, 1/16, and 1/32 relative to the original input size while progressively increasing the channels at each level. There are mainly three reasons that Perona-Malik Diffusion is applied to the latent feature space rather than the input images. Firstly, denoising at the input level may irreversibly remove fine details essential for downstream tasks. Secondly, embedding PMD within the network can gradually reduce noise in stages based on learnt representations. Lastly, feature maps in deeper layers provide better semantic information, which preserves the boundary information better when the PMD filters out the noise. In the following sections, we will introduce the detailed structure of P-Mamba.

DWT-based PMD block

Discrete Wavelet Transform (DWT) is a mathematical transform used to compress the feature map or signal and represent the frequency components information in the time (or space) domains. It breaks the input into several wavelets localized in both time (or space) and frequency, thus enabling multi-resolution analysis. Therefore, DWT is beneficial when local and global features need to be captured, like in applications of image compression, denoising, feature extraction in deep learning, etc28.

It has been noticed that the PMD is widely used in image denoising due to its ability to suppress noise disturbance while preserving boundary details. In particular, PMD models have shown that they can effectively enhance edge-aware denoising performance in feature-specific regions29. Motivated by this, we integrate a PMD-based module into the latent space to leverage its boundary-preserving characteristics for more robust heart ultrasound image segmentation.

Considering that echocardiograms contain heavy background noise that may interfere with segmentation accuracy, we propose the DWT-based PMD Block to act on feature maps so that the background noise can be filtered. At the same time, some target boundary cues can be preserved.

Given an input feature map u, its PMD equation can be expressed as:

where \(g(|\nabla u|) = \frac{1}{1 + \left( \frac{|\nabla u|}{k} \right) ^2}\) is the diffusion coefficient; t is the diffusion step and can be regarded as the layer of the feature map; k is a positive constant to control the degree of diffusion and is set to 1 by default in our experiments. Notably, (1) is an anisotropic diffusion equation. In the flat or smooth regions where the gradient magnitude is small (\(|\nabla u|\rightarrow 0\)), the diffusion coefficient g is large, meaning that the diffusion is strong and (1) acts as Gaussian smoothing to remove the noise interference. Somewhere near the target’s boundary, the gradient magnitude is large (\(|\nabla u|\rightarrow 1\)), so the coefficient g is near zero, meaning the diffusion is weak, and the boundary details can be preserved. Equation (1) can also be rewritten to the following form:

where \(\frac{\partial u}{\partial x}\) and \(\frac{\partial u}{\partial y}\) represent the gradients of the feature map in horizontal and vertical directions. On the other hand, the Discrete Wavelet Transform (DWT) of an input feature map can be expressed as:

where \(u_{LL}\) is the low-frequency part of the feature map, while \(u_{LH}\), \(u_{HL}\) and \(u_{HH}\) are the high-frequency parts in horizontal, vertical and diagonal directions of the feature map which mainly contain the boundary details. By approximating the derivative terms \(\frac{\partial u}{\partial x}\) with \(u_{LH}\) and \(\frac{\partial u}{\partial y}\) with \(u_{HL}\) and setting the diffusion step size \(\delta t\) to one, we can transform (2) to the discrete format:

After enhancing the feature map by PMD, we feed the diffusion output into a basic ResNet block. Piling multiple DWT-based PMD blocks in all layers of the encoder branch, our P-Mamba can suppress background noise disturbance while preserving the target boundary features.

Vision Mamba block

Mamba7 is a novel deep sequence model architecture addressing the computational inefficiency of traditional Transformers on long sequences. It is based on selective state space models (SSMs), which improve upon previous SSMs by allowing the model parameters to be input functions.

Consider a structured SSM mapping one-dimensional sequence \(x(t) \in \mathbb {R}^L\) to \(y(t) \in \mathbb {R}^L\) through a hidden state \(h(t) \in \mathbb {R}^N\). With the evolution parameter \(\varvec{A}\in \mathbb {R}^{N \times N}\) and the projection parameters \(\varvec{B}\in \mathbb {R}^{N \times 1}\), \(\varvec{C}\in \mathbb {R}^{1 \times N}\), such a model is formulated using linear ordinary differential equations

Moreover, to enhance GPU utilization and efficiently materialize the state h within the memory hierarchy, hardware-aware state expansion is enabled by selective scan. By incorporating kernel fusion and recomputation with parallel scan, the fused selective scan layer can effectively decrease the quantity of memory I/O operations, leading to a significant acceleration compared to conventional implementations.

We mainly adopt the ViM block to improve our model’s computing and memory efficiency. The ViM block adds a bidirectional sequence modeling (SSM) approach to better model global context to allow the model to recognise relationships across patch levels of an image. After splitting the input image into smaller patches, each was linearly projected into a vector space. These patches are treated as sequence data in the ViM block, where the bidirectional SSM efficiently models long-range dependencies and compresses the visual information. The position embedding before the P-Mamba Block adds a crucial spatial context to each patch, ensuring the model captures positional information for accurate visual recognition. This innovative approach enables the ViM block to be both computationally efficient and memory-efficient while maintaining high performance. Most importantly, it helps capture global dependencies complementary to the local shape cues extracted by our DWT-based PMD Block.

Before processing into the P-Mamba block, a 2-D input \(\in \mathbb {R}^{H \times W\times C}\) is transformed into flattened patches \(P_N\) with dimensions \(M \times (N^2 \cdot C)\). Here (H, W) is the size of the original input, C stands for the number of channels, and N and M denote the size and total count of segmented patches, respectively. Then, the \(P_N\) is linearly projected to vectors of dimension D, and the position embedding \(E_{\text {pos}} \in \mathbb {R}^{M \times D}\) is added. This process can be described as follows:

where \(x^M\) is the \(M^{th}\) patch of \(P_N\), \(W \in \mathbb {R}^{(N^2 \cdot C) \times D}\) is the learnable projection matrix. The token sequence from the patch embedding layer, \(X_{pe}\), is processed by the layer ViM to obtain the output \(X_{vim}\), which is expressed by (7).

Subsequently, the outputs from the DWT-based PMD module and the ViM module are iteratively combined using the gating mechanism across several layers within the P-Mamba block. The final output is then reshaped and permuted to serve as the input for the next stage, where the process is repeated to obtain the next stage’s output.

Mixture of experts

We use a lightweight mixture-of-experts design to integrate the PMD and ViM modules, and the mathematical formula is shown below:

where w is between 0 and 1, and it is a gating weight based on global average pooled attributes. This approach enables the network to dynamically assess the significance of structure preservation (PMD) and semantic representation (ViM).

Decoder

The decoder consists of multiple interpolation layers interspersed with Conv2d operations, progressively upsampling the encoded features to generate high-resolution segmentation maps. Specifically, the decoder processes the output feature map from the different encoder stages through several Conv2d layers, followed by interpolation operations that align the feature maps to a common resolution. These feature maps are then concatenated and passed through a series of Conv2d, batch normalization, and ReLU layers to produce the final segmentation map.

Experiment

Datasets

Pediatric dataset

The dataset, EchoNet-Peds, comprises echocardiographic evaluations from patients at Lucile Packard Children’s Hospital Stanford from 2014 to 2021, authorized by the Stanford University Institutional Review Board. The dataset contains 4467 echocardiograms collected from 1958 patients, 43\(\%\) female, aged between 0 and 18 years (mean ± SD: 10 ± 5.4 years). The patients were classified into two groups based on their echocardiographic results: those with structurally normal hearts and average ejection fraction (EF) and those with structurally normal hearts but systolic dysfunction (including dilated cardiomyopathy, chemotherapy-induced systolic dysfunction) without congenital heart disease27.

After additional processing, the dataset was employed to obtain apical four-chamber (A4C) and parasternal short-axis (PSAX) video clips, totaling 7643 video clips and 17600 annotated pictures. The video clips were partitioned into training (80\(\%\), n=6114), testing (10\(\%\), n=765), and validation (10\(\%\), n=764) sets for machine learning purposes. In addition, 86\(\%\) of the trials had an ejection fraction (EF) equal to or greater than 55\(\%\).

EchoNet-dynamic dataset

To ensure the model’s effectiveness on general echocardiogram datasets, not just pediatric ones, we also conducted experiments on the EchoNet-Dynamic dataset30. EchoNet-Dynamic, obtained from Stanford University Hospital, is the largest publicly available dataset of apical four-chamber (A4C) cardiac echocardiograms. It includes 10030 echocardiogram clips and 9989 video samples that remained after data cleansing. Each video was examined solely for the end-diastolic and end-systolic frames. As a result, 96 images were excluded due to poor-quality ground truth, leaving 14846 out of 19882 images for the training set. The remaining images were divided into validation (2563 images) and testing (2473 images) sets. End-diastolic and end-systolic frames from the same individuals were grouped for the experiments.

Implementation details

The computational setup consists of a single Tesla V100-32GB GPU, a 12-core CPU, and 61GB of RAM. The system operates on an Ubuntu 18 environment with CUDA 11.0 and Pytorch 1.13 software.

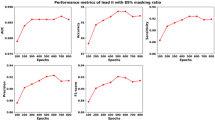

The network was trained for 50 epochs, beginning with an initial learning rate 1e-4, a weight decay of 0.01. Specifically, the input resolution is fixed at 256 x 256. Batch sizes of 24 were selected for training to obtain a compromise between computational efficiency and model accuracy. The model’s performance was assessed every five epochs, and early halting with a patience parameter of 10 was implemented to prevent overfitting. The network architecture was organized with layers configured in depth [2, 2, 2, 2]. During inference, all methods are benchmarked using the same test resolution and batch size to maintain consistency in runtime and memory usage measurements.

It is worth mentioning that we trained nnU-Net from scratch on our echocardiographic dataset, utilizing its auto-configuration pipeline for patch size, architecture, and data normalization.

Results and discussion

Table 1 offers a quantitative comparison of our P-Mamba with various state-of-the-art methods on the pediatric LV 2D segmentation task and includes experiments on the general ultrasound dataset EchoNet-Dynamic. The comparison includes CNN-based methods like U-Net18 with FCN and PSPNet backbones, as well as ViT-based approaches such as PVT15, Flatten Transformer16, and MaxVit17. Moreover, we also compared Uniformer20 and SpectFormer21, both newly introduced segmentation models from the last year, showcasing advanced capabilities in image segmentation. U-Mamba9 represents a straightforward plan Mamba model characterized by a U-shaped structure designed specifically for effective segmentation tasks. TransUnet19 and Segformer22 are recognized as classic segmentation models, and they are widely used in the field due to their robust performance. Additionally, CMUNet13 and NHBS14 are specialized models tailored for ultrasound image segmentation, addressing the unique challenges of medical imaging.

As a result, Table 1 demonstrates the superiority of our P-Mamba model. It achieves the highest average Dice Similarity Coefficient (DSC) on the PSAX and A4C pediatric datasets, with values of 0.922 and 0.906, respectively. Furthermore, our model also excels on the EchoNet-Dynamic dataset, achieving a DSC of 0.931. These results highlight the effectiveness and robustness of our approach across different datasets, outperforming the listed state-of-the-art methods.

This comparative study proved that our approach is effective in heart ultrasound images. US images tend to present a high noise level, complicating the segmentation task. The Perona-Malik Diffusion (PMD) module presented in this paper overcomes this limitation by enabling more accurate segmentation of images, as it is capable of noise reduction while still highlighting the important boundaries of the image. Additionally, we adopted ViM, a novel module that is not a conventional transformer module, it enhances the computational and memory efficiency of our model by retaining global information. More interestingly, we combine the PMD and ViM modules in a Mixture of Experts (MoE) configuration with a Neural Network. The topology also has a gating mechanism that enables it, to some extent, to follow the principles of a Mixture of Experts. This technique allows for the identification of the most suitable feature processing pathways to be selected during the processing of the features. In addition to improving the efficiency of feature processing, this approach effectively elevates the segmentation accuracy, even in the most difficult segmentation context, like echocardiogram images.

Ablation studies

We first ablate the DWT-based PMD Block in Table 2 across various configurations: ’Ours w/o PMD’ means removing the DWT-based PMD part; ’Ours w/ Sobel’ means replacing the DWT-based PMD part with a Sobel operator, which is only for edge preservation. Our DWT-based PMD block achieves the best performance on those three datasets. The DWT-based PMD part encompasses the Sobel operator due to its noise suppression and edge preservation capability, while the Sobel operator cannot finely preserve edges during noise removal.

In addition, Table 3 studies the effect of the Vision Mamba block. We replaced the ViM block with ViT structures of quadratic (PVT) and linear complexity (Flatten ViT, MaxViT). The results, summarized across the PSAX, A4C, and EchoNet-Dynamic datasets, indicate that Vision Mamba consistently achieves a higher Dice coefficient. Our findings indicate that Vision Mamba can be more accurate than other models with linear or quadratic complexity.

To further validate the effectiveness of the mixture of expert’s gating network convergence, we compared it with the results of simply adding the outputs of the DWT-based PMD Block and the ViM module. Table 4 shows that the gating structure improves the Dice coefficient across three datasets, demonstrating that the gating structure implemented with a linear layer significantly improves precision.

Model efficiency comparison

Table 5 presents the model efficiency comparison results of P-Mamba with various state-of-the-art methods, considering parameters, inference speed (ms), GPU memory usage (GB), and GFLOPs. Our P-Mamba significantly outperforms other methods across all metrics. Specifically, P-Mamba has the lowest parameter count (52.77M), fastest inference speed (9.18 ms), least GPU memory usage (4.95 GB), and lowest GFLOPs (24.66). The attention-free design of our Mamba block greatly enhances model efficiency, even compared to models with linear complexity. Additionally, our DWT-based PMD block does not add excessive parameters compared to the pure mamba net like U-Mamba, ensuring high efficiency.

Qualitative comparison

The qualitative results in Fig. 4 illustrate the performance of various models on pediatric PSAX, pediatric A4C, and EchoNet-Dynamic image segmentation tasks. Classic segmentation models like Segformer and TransUnet display poor segmentation results, indicating their unsuitability for echocardiogram data modalities. Due to noise interference, PVT, Flatten Transformer, MaxVit, and U-Mamba struggle to delineate heart structures correctly. Specialized ultrasound segmentation models such as NHBS and CMUNet also fall short in accurately segmenting the A4C views. In contrast, our P-Mamba is the least affected by noise interference and enjoys the best segmentation effect, thanks to our PMD design.

We visualize the learned features of TransUnet, P-Mamba without the DWT-based PMD Block, and P-Mamba to better understand the impact of the proposed method. The feature map outputs of encoder stages 1 and 2 are shown in Fig. 5, with nine feature map channels randomly sampled for each method. With more distinct feature contours, local information is better preserved in P-Mamba than in other methods. Additionally, we visualize the feature map output of the final decoder stage in Fig. 5 to support our analysis. We observe that P-Mamba features are more concentrated and exhibit stronger discriminative power.

Limitation and future work

One limitation of this study is that our experiments were focused exclusively on segmenting the left ventricle in echocardiographic images without exploring its application to other cardiac structures, such as myocardial segmentation31. Expanding the evaluation of additional anatomical regions, particularly the myocardium, would provide a more comprehensive understanding of the method’s performance across various heart components. Furthermore, automated Left Ventricular Ejection Fraction (LVEF) measurement based on this segmentation model can be included to enable heart functional assessment, since it is a critical metric in clinical cardiac evaluation

Additionally, the applicability of our approach to other ultrasound imaging modalities and tasks has yet to be investigated. Future research could investigate the generalization of our method to other types of ultrasound images, such as those used in abdominal or musculoskeletal imaging, to evaluate its robustness and versatility.

As large-scale models advance, an interesting direction for future research would be to investigate whether our method adheres to the scaling laws observed in deep learning32, particularly concerning model size, data quantity, and computational requirements. Exploring these aspects could provide valuable insights into the scalability and potential of our approach in more complex or larger datasets.

Conclusion

We present P-Mamba, a model tailored for efficient left ventricular segmentation in pediatric echocardiography, overcoming the background noise interference challenge. Specifically, the DWT-based Perona-Malik Diffusion Block is a mathematically explainable module focusing on local feature extraction. It can gradually suppress background noise disturbance while preserving the boundary details of the left ventricle. The vision Mamba block, on the other hand, can explore global dependencies. We integrate the two parts by borrowing the Mixture of Experts (MOE) ideas, and our P-Mamba successfully achieves an exceptional balance between segmentation accuracy and efficiency.

Data availability

All data used in this study were obtained from publicly available databases: https://stanfordaimi.azurewebsites.net/datasets/834e1cd1-92f7-4268-9daa-d359198b310a and https://stanfordaimi.azurewebsites.net/datasets/a84b6be6-0d33-41f9-8996-86e5df53b005

Code availability

The code for P-Mamba is available at https://github.com/yezizi1022/P-Mamba and can be cited using the DOI: https://zenodo.org/records/14899330.

References

Van Der Linde, D. et al. Birth prevalence of congenital heart disease worldwide: A systematic review and meta-analysis. J. Am. Coll. Cardiol. 58, 2241–2247. https://doi.org/10.1016/j.jacc.2011.08.025 (2011).

Silva, J. F., Silva, J. M., Guerra, A., Matos, S. & Costa, C. Ejection fraction classification in transthoracic echocardiography using a deep learning approach. In Proceedings of the 2018 IEEE 31st International Symposium on Computer-Based Medical Systems (CBMS). 123–128. https://doi.org/10.1109/CBMS.2018.00029 (Karlstad, 2018).

Lang, R. M. et al. Recommendations for cardiac chamber quantification by echocardiography in adults: An update from the American Society of Echocardiography and the European Association of Cardiovascular Imaging. Eur. Heart J. Cardiovasc. Imaging 16, 233–271. https://doi.org/10.1093/ehjci/jev014 (2015).

Power, A. et al. Echocardiographic image quality deteriorates with age in children and young adults with Duchenne muscular dystrophy. Front. Cardiovasc. Med. 4. https://doi.org/10.3389/fcvm.2017.00082 (2017).

Hu, Y. et al. Fully automatic pediatric echocardiography segmentation using deep convolutional networks based on BISENET. IEEE Trans. Med. Imaging 40, 3446–3458. https://doi.org/10.1109/TMI.2019.2933681 (2019).

Zhang, H. et al. A survey on visual mamba. Appl. Sci. 14, 5683. https://doi.org/10.3390/app14135683 (2024).

Gu, A. & Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. Preprint https://doi.org/10.48550/arXiv.2312.00752 (2024).

Zhu, L. et al. Vision mamba: Efficient visual representation learning with bidirectional state space model. Preprint https://doi.org/10.48550/arXiv.2401.09417 (2024).

Ma, J., Li, F. & Wang, B. U-Mamba: Enhancing long-range dependency for biomedical image segmentation. Preprint https://doi.org/10.48550/arXiv.2401.04722 (2024).

Stevens, T. S. W. et al. Dehazing ultrasound using diffusion models. IEEE Trans. Med. Imaging 43, 3546–3558. https://doi.org/10.1109/TMI.2024.3363460 (2024).

Perona, P. & Malik, J. Scale-space and edge detection using anisotropic diffusion. IEEE Trans. Pattern Anal. Mach. Intell. 12, 629–639. https://doi.org/10.1109/34.56205 (1990).

Masoudnia, S. & Ebrahimpour, R. Mixture of experts: A literature survey. Artif. Intell. Rev. 42, 275–293. https://doi.org/10.1007/s10462-012-9338-y (2014).

Tang, F., Wang, L., Ning, C., Xian, M. & Ding, J. CMU-Net: A strong convmixer-based medical ultrasound image segmentation network. In Proceedings of the 2023 IEEE 20th International Symposium on Biomedical Imaging (ISBI). 1–5. https://doi.org/10.1109/ISBI53787.2023.10230609 (Cartagena, 2023).

Liu, R. et al. Nhbs-net: A feature fusion attention network for ultrasound neonatal hip bone segmentation. IEEE Trans. Med. Imaging 40, 3446–3458. https://doi.org/10.1109/TMI.2021.3087857 (2021).

Wang, W. et al. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV). 568–578. https://doi.org/10.1109/ICCV48922.2021.00061 (2021).

Han, D., Pan, X., Han, Y., Song, S. & Huang, G. Flatten transformer: Vision transformer using focused linear attention. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV). 5961–5971. https://doi.org/10.1109/ICCV51070.2023.00548 (2023).

Tu, Z. et al. Maxvit: Multi-axis vision transformer. In European Conference on Computer Vision (ECCV), 459–479, https://doi.org/10.1007/978-3-031-20053-3-27 (Springer, Tel Aviv, Israel, 2022).

Gupta, D. Image segmentation Keras: Implementation of SEGNET, FCN, UNET, PSPNET and other models in Keras. Preprint https://doi.org/10.48550/arXiv.2307.13215 (2024).

Chen, J.et al. Transunet: Transformers make strong encoders for medical image segmentation. Preprint https://doi.org/10.48550/arXiv.2102.04306 (2024).

Li, K. et al. Uniformer: Unifying convolution and self-attention for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 45, 12581–12600. https://doi.org/10.1109/TPAMI.2023.3282631 (2023).

Patro, B. N., Namboodiri, V. P. & Agneeswaran, V. S. Spectformer: Frequency and attention is what you need in a vision transformer. Preprint https://doi.org/10.48550/arXiv.2304.06446 (2024).

Xie, E. et al. Segformer: Simple and efficient design for semantic segmentation with transformers. Preprint https://doi.org/10.48550/arXiv.2105.15203 (2024).

Han, X. et al. Mambaeviscrib: Mamba and evidence-guided consistency enhance cnn robustness for scribble-based weakly supervised ultrasound image segmentation. Preprint https://doi.org/10.48550/arXiv.2409.19370 (2024).

Isensee, F., Jaeger, P. F., Kohl, S. A. A., Petersen, J. & Maier-Hein, K. H. nnu-net: A self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 17, 204–212. https://doi.org/10.1038/s41592-020-01008-z (2020).

Ling, H. J. et al. Extraction of volumetric indices from echocardiography: Which deep learning solution for clinical use? Preprint https://arxiv.org/abs/2305.01997 (2023).

Painchaud, N., Duchateau, N., Bernard, O. & Jodoin, P.-M. Echocardiography segmentation with enforced temporal consistency. IEEE Trans. Med. Imaging 41, 2867–2878. https://doi.org/10.1109/TMI.2022.3173669 (2022).

Reddy, C. D., Lopez, L., Ouyang, D., Zou, J. Y. & He, B. Video-based deep learning for automated assessment of left ventricular ejection fraction in pediatric patients. J. Am. Soc. Echocardiogr. 36, 482–489. https://doi.org/10.1016/j.echo.2023.01.015 (2023).

Heil, C. E. & Walnut, D. F. Continuous and discrete wavelet transforms. SIAM Rev. 31, 628–666. https://doi.org/10.1137/1031129 (1989).

Guo, Z., Sun, J., Zhang, D. & Wu, B. Adaptive Perona-Malik model based on the variable exponent for image denoising. IEEE Trans. Image Process. 21, 958–967. https://doi.org/10.1109/TIP.2011.2169272 (2011).

Ouyang, D. et al. Echonet-dynamic: A large new cardiac motion video data resource for medical machine learning. In NeurIPS ML4H Workshop (2019).

Al-antari, M. A. et al. Deep learning myocardial infarction segmentation framework from cardiac magnetic resonance images. Biomed. Signal Process. Control 89, 105710. https://doi.org/10.1016/j.bspc.2023.105710 (2024).

Bahri, Y., Dyer, E., Kaplan, J. & Sharma, U. Explaining neural scaling laws. Proc. Natl. Acad. Sci. 121, e2311878121. https://doi.org/10.1073/pnas.2311878121 (2024).

Author information

Authors and Affiliations

Contributions

Zi Ye conceived the experiment(s) and contributed to the conceptualization, methodology, and writing the original draft. Tianxiang Chen was responsible for data curation and software. Fangyijie Wang contributed to visualization and investigation. Hanwei Zhang worked on validation. Lijun Zhang was involved in reviewing and editing the manuscript. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ye, Z., Chen, T., Wang, F. et al. Marrying Perona Malik diffusion with Mamba for efficient pediatric echocardiographic left ventricular segmentation. Sci Rep 15, 32152 (2025). https://doi.org/10.1038/s41598-025-16797-6

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-16797-6