Abstract

Cross-view geo-localization aims to match images of the same location captured from different perspectives, such as drone and satellite views. This task is inherently challenging due to significant visual discrepancies caused by viewpoint variations. Existing approaches often rely on global descriptors or limited directional cues, failing to effectively integrate diverse spatial information and global-local interactions. To address these limitations, we propose the Global-Local Quadrant Interaction Network (GLQINet), which enhances feature representation through two key components: the Quadrant Insight Module (QIM) and the Integrated Global-Local Attention Module (IGLAM). QIM partitions feature maps into directional quadrants, refining multi-scale spatial representations while preserving intra-class consistency. Meanwhile, IGLAM bridges global and local features by aggregating high-association feature stripes, reinforcing semantic coherence and spatial correlations. Extensive experiments on the University-1652 and SUES-200 benchmarks demonstrate that GLQINet significantly improves geo-localization accuracy, achieving state-of-the-art performance and effectively mitigating cross-view discrepancies.


Introduction

Cross-view object geo-localization1,2,3,4,5 aims to determine the precise geographic location of an object in a reference image using its coordinates from a query image. This task is crucial in remote sensing applications, including autonomous navigation6, 3D scene reconstruction7, and precision delivery8,9. As a complementary technique to the Global Positioning System (GPS), image-based geo-localization enhances localization accuracy and provides a robust alternative in GPS-denied environments.

Despite its significance, cross-view geo-localization remains a challenging problem due to inherent visual discrepancies across images captured from different platforms, such as drones and satellites. Unlike traditional geo-localization methods10,11,12, which operate on images from similar viewpoints, cross-view geo-localization must overcome extreme viewpoint differences, scale variations, and partial occlusions between ground-level and aerial perspectives. The key to success lies in extracting salient scene features that effectively bridge the domain gap, enabling robust cross-view matching and accurate localization.

Fig. 1. An illustration of the motivation behind our work. Our proposed GLQINet generates diverse patterns to encourage the network to learn informative feature representations by focusing on discriminative aspects of the input. In addition, the model employs an attention-based mechanism in an interactive manner to effectively learn both global and local features, enabling a comprehensive understanding of the geographic context across different views. The satellite imagery shown in this figure is derived from the University-1652 dataset13, and the dataset can be accessed at: https://github.com/layumi/University1652-Baseline.

In the early stages of cross-view geo-localization, traditional image processing techniques, such as gradient-based methods14 and hand-crafted features15, were commonly employed to obtain matched image pairs. With the rapid development of deep learning, the field has made significant progress. A notable method13 uses pre-trained convolutional neural networks (CNNs) as the backbone to extract image descriptors, followed by a classifier that groups matched drone and satellite images of the same location within the feature space. Owing to their strong global modeling capabilities, Transformers have gained considerable attention in visual tasks16,17, making Transformer-based architectures a preferred choice18,19,20 for geo-localization. Building on these developments, several methods21,22,23 explicitly exploit contextual information to enhance feature representation. Nevertheless, the key to cross-view geo-localization lies in identifying the information shared between images and thoroughly understanding both global and local context. This is difficult for CNNs, as many CNN architectures emphasize small, discriminative features rather than broader contextual cues.

On the other hand, attention mechanisms16,24,25, which focus on the essential components of a feature map while suppressing irrelevant ones, are increasingly used to help networks learn critical cues from images and exploit contextual information. Following the success of self-attention in natural language processing, the Vision Transformer, a self-attention-based architecture for visual tasks, has been adopted in several cross-view geo-localization studies18,19,20,26 with impressive results. However, these approaches typically extract fine-grained information only from the final feature map, often overlooking low-level cues such as texture and edge details, which are crucial for a comprehensive understanding of the images.

To address the limitations of the previously mentioned methods in cross-view geo-localization, we propose a Global-Local Quadrant Interaction Network (GLQINet), as shown in Fig. 1. GLQINet focuses on extracting fine-grained information from feature maps oriented in different directions, thereby enhancing the accuracy of cross-view geo-localization. Unlike existing approaches that rely on CNN or Transformer backbones to extract global features or local patterns in a uniform manner, GLQINet explicitly captures directional diversity in local features, which is essential for handling large viewpoint variations and complex scene structures. Prior methods often overlook such directional cues and perform global-local fusion in a loosely coupled or one-sided manner, limiting their ability to represent fine-grained spatial context effectively.

To overcome these limitations, GLQINet introduces two key innovations. First, the Quadrant Insight Module (QIM) segments feature maps into stripes along four directions: horizontal, vertical, diagonal, and anti-diagonal. This division, coupled with varying receptive fields, allows the model to fuse information across different stripes, facilitating a better understanding of the correspondence between aerial and ground images. Second, the Integrated Global-Local Attention Module (IGLAM) unifies global and local features through a novel Merged Attention mechanism, enabling adaptive integration of coarse and fine information. Unlike conventional cross-attention or self-attention modules, IGLAM provides more efficient and expressive global-local interaction. Together, these two modules enable GLQINet to generate compact yet discriminative feature representations that are resilient to viewpoint changes. Such directional feature modeling and interactive global-local fusion have not been jointly explored in prior CNN- or Transformer-based geo-localization methods, which distinguishes our approach from existing work. Finally, drawing on previous work on dataset sampling27, we construct training batches that provide meaningful statistics to guide training and improve convergence. The primary contributions of this paper are summarized as follows:

- We propose the Quadrant Insight Module (QIM), which is designed to capture multi-scale spatial features and preserve intra-class consistency, adaptively emphasizing discriminative regions within the features and enhancing the overall representation of the object.
- We design the Integrated Global-Local Attention Module (IGLAM), which bridges global and local information by aggregating high-association feature stripes, enhancing both broad contextual information and specific details while suppressing irrelevant background elements.
- Through extensive experiments on the public University-1652 and SUES-200 datasets, we demonstrate that GLQINet outperforms competing cross-view geo-localization methods.

The remainder of this paper is organized as follows. “Related work” reviews related work on cross-view geo-localization, with an emphasis on part-based and Transformer-based approaches. “Proposed method” describes the proposed GLQINet framework in detail, including the overall architecture, the Quadrant Insight Module (QIM), the Integrated Global-Local Attention Module (IGLAM), and the loss formulation. “Experiment and analysis” presents the experimental settings and results, including dataset descriptions, evaluation metrics, implementation details, comparisons with state-of-the-art methods, ablation studies, and visual analysis. “Limitations” discusses the limitations of the proposed method and potential directions for improvement. Finally, “Conclusion” concludes the paper and outlines future research directions.

Related work

Cross-view geo-localization

Cross-view geo-localization has become a significant research focus due to its broad applications in areas such as urban planning and autonomous navigation. This section reviews the evolution of datasets and techniques for geo-localization across different viewpoints. Early studies28,29 organized datasets into image pairs to address ground-to-aerial localization. Key datasets such as CVUSA30 and CVACT31 use ground-view panoramic images as queries and satellite images as references. The VIGOR dataset32 offers a more practical testbed, bridging research and real-world applications. The University-1652 dataset13 contains drone, satellite, and ground-camera images of university buildings. The CVOGL dataset33 addresses “Ground \(\rightarrow\) Satellite” and “Drone \(\rightarrow\) Satellite” localization, while the SUES-200 dataset34 provides aerial imagery captured at various altitudes, offering richer context for drone-based geo-localization.

One of the key challenges in this task is extracting viewpoint-invariant features. Early methods transformed ground images to bird’s-eye view (BEV)15. With the rise of deep learning, CNNs became popular for feature extraction35, improving cross-view localization. LONN31 incorporated orientation information for better accuracy, while polar transforms36 helped mitigate viewpoint differences but introduced distortions. Recent work, such as LPN21, uses feature partition strategies to improve model performance, while FSRA18 and TransGeo19 adopt Transformer-based approaches, reducing the need for geometric preprocessing and offering improved results. While significant progress has been made, issues such as background clutter, occlusion, and misalignment of buildings remain.

Part-based feature methods

Part-based representations divide image features into smaller regions to capture fine-grained details and emphasize salient regions, which is particularly beneficial in retrieval tasks such as vehicle and person re-identification37,38,39. PCB40 and MGN41 enhance recognition accuracy by aggregating global and local features, allowing for more robust feature discrimination. AlignedReID42 and DMLI43 improve localization by aligning local features without additional supervision, effectively reducing misalignment issues. Meanwhile, MSBA44 integrates self-attention mechanisms to optimize feature extraction efficiency, while FSRA18 and SGM26 achieve pixel-level feature division, enhancing retrieval performance by capturing finer spatial relationships. These methods have proven effective in handling complex scenarios, particularly when addressing variations in pose, background clutter, and viewpoint shifts. In cross-view geo-localization, the integration of global context and local feature alignment plays a crucial role in bridging perspective differences, ensuring robust and reliable matching. Inspired by these advancements, we introduce a novel quadrant-based strategy that refines spatial representations and strengthens multi-scale interactions, significantly improving feature compactness and geo-localization accuracy.

Transformer-based methods

The Transformer model24 has revolutionized natural language processing (NLP) and computer vision by capturing long-range dependencies through self-attention. In geo-localization, attention mechanisms have been integrated into feature extraction pipelines, substantially improving performance in aerial-view tasks18,19. Notably, TransGeo19 and EgoTR20 leverage Transformer-based attention to encode global dependencies, mitigating cross-view visual ambiguities. However, Transformer models typically require large training sets and are computationally intensive, and the limited scale of existing cross-view geo-localization datasets13,30,31 makes them difficult to train effectively. To address this, recent research has explored structured feature decomposition and global-local attention mechanisms to better capture view-invariant representations for geo-localization. Beyond geo-localization, Transformer-based approaches have been extended to pose-guided generation45 and customizable virtual dressing46, and advances in progressive conditional diffusion models47,48 and long-term video generation49 highlight the growing role of attention mechanisms in complex, dynamic tasks beyond static image matching. Inspired by these advancements, our work integrates direction-aware quadrant partitioning and multi-scale global-local interaction to enhance feature compactness and improve cross-view matching accuracy.

Proposed method

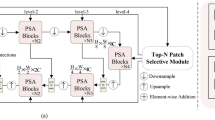

In this section, we present the proposed GLQINet, whose overall architecture is illustrated in Fig. 2. GLQINet consists of two main components. The first is the Quadrant Insight Module (QIM), which divides the feature maps that the backbone network produces for the drone and satellite views into equal stripes of contextual information. The second is the Integrated Global-Local Attention Module (IGLAM), which feeds the feature vectors generated by QIM into the Merged Attention block, adaptively enhancing discriminative regions and semantic representations while retaining global cues. Finally, after a global average pooling operation, the feature vectors output by IGLAM are passed to separate classifier blocks that share the same structure. This yields multiple feature representations, each of which contributes to the training loss, so that minimizing the loss encourages the network to make fuller use of semantic information.

Fig. 2. The proposed network’s architecture comprises a dual-stream feature extraction backbone, the Quadrant Insight Module (QIM), and the Integrated Global-Local Attention Module (IGLAM). QIM leverages fine-grained details to generate four-directional local representations of the feature map. IGLAM integrates global and local embeddings, attending simultaneously to different perspectives within various feature spaces and incorporating additional key features into comprehensive final representations.

Overall architecture

Consider a geo-localization dataset in which the input image and its corresponding label are denoted \(x_j\) and \(y_j\), respectively. Here, j indicates the platform from which \(x_j\) is obtained, with \(j \in \{1, 2\}\): \(x_1\) is a satellite-view image and \(x_2\) is a drone-view image. The label \(y_j\) lies in the range [1, R], where R is the total number of categories. GLQINet has two branches, a drone-view branch and a satellite-view branch. Following13, the two branches share weights, since both aerial views exhibit similar patterns. For feature extraction, we use the pre-trained ConvNeXt50 as the backbone. ConvNeXt is a CNN-based architecture built from standard convolutional modules that achieves performance comparable to Vision Transformer networks16,17 while keeping a simpler design and offering a good balance between processing speed and accuracy.

Quadrant insight module (QIM)

Cross-view geo-localization requires robust feature representations to mitigate variations in perspective, scale, and structural alignment. Existing methods often struggle with feature fragmentation when handling aerial and ground-level images, making it difficult to establish meaningful correspondences. To address this challenge, we introduce the Quadrant Insight Module (QIM), which decomposes feature maps into directional components, capturing multi-scale spatial relationships across different quadrants. This structured partitioning enhances the model’s ability to distinguish fine-grained details, improving feature compactness and intra-class semantic consistency.

As illustrated in the middle part of Fig. 2, QIM operates on the global feature map output by the ConvNeXt backbone \(\mathcal {F}_{backbone}\). Given an input image \(x_j\), the extracted feature representation \(f_{global} \in \mathbb {R}^{H\times W\times C}\), where H and W denote the height and width and C is the number of channels, is defined as:
\[
f_{global} = \mathcal{F}_{backbone}(x_j).
\]

Horizontal average pooling (HAP) layer: the HAP layer averages feature values along horizontal stripes, capturing spatial dependencies across rows. The output feature map is denoted as \(h_i \in \mathbb {R}^{\frac{H}{t} \times W \times C}\), where t represents the number of partitioned stripes. For instance, when \(t=n\), the first horizontal feature is computed as:
\[
h_1 = \frac{1}{n}\sum_{i=1}^{n} f_{1i},
\]

where \(f_{1i}\) refers to the feature vector located at the first row and the \(i\)-th column of the global feature map \(f_{\text {global}}\). This operation effectively models horizontal structures such as roads and skylines, which are common in ground-view images. By compressing information along rows, HAP strengthens the model’s sensitivity to lateral spatial patterns critical for cross-view alignment.

Vertical average pooling (VAP) layer: the VAP layer aggregates feature responses across vertical stripes, facilitating structural alignment between aerial and ground viewpoints. The feature map \(v_i \in \mathbb {R}^{H \times \frac{W}{t} \times C}\) is transposed to \(v_i^T \in \mathbb {R}^{\frac{H}{t} \times W \times C}\) to maintain dimensional consistency with \(h_i\). When \(t=n\), an example computation is:
\[
v_2 = \frac{1}{n}\sum_{i=1}^{n} f_{i2},
\]

where \(f_{i2}\) denotes the feature vector at the \(i\)-th row and the second column of \(f_{\text {global}}\). VAP emphasizes columnar structures like buildings and trees, which often appear vertically aligned in both views. This pooling guides the network to better capture structural correspondences along the height dimension.

Diagonal average pooling (DAP) layer: the DAP layer enhances feature continuity along diagonal axes, extracting oblique structural patterns. When \(t=n\), the first diagonal feature is computed as:
\[
d_1 = \frac{1}{n}\sum_{k=1}^{n} f_{p_k q_k},
\]

where \(f_{p_k q_k}\) denotes the feature at diagonal position \((p_k, q_k)\), where \(p_k = q_k = k\), i.e., along the top-left to bottom-right diagonal of \(f_{global}\). Diagonal pooling captures features that span across both height and width simultaneously, such as rooftops or road intersections viewed at an angle. This enables the model to reason about spatial dependencies beyond pure vertical or horizontal constraints.

Anti-diagonal average pooling (AAP) layer: similarly, the AAP layer captures information across anti-diagonal directions, reinforcing spatial consistency. Given \(t=n\), the first anti-diagonal feature is:
\[
a_1 = \frac{1}{n}\sum_{k=1}^{n} f_{r_k s_k},
\]

where \(f_{r_k s_k}\) denotes the feature at anti-diagonal position \((r_k, s_k)\), where \(r_k = k\) and \(s_k = n-k+ 1\), i.e., along the top-right to bottom-left anti-diagonal of \(f_{global}\). AAP effectively encodes reverse-diagonal relationships, which are often indicative of symmetrical or reflective structures in scenes. Integrating anti-diagonal context improves the network’s ability to generalize to diverse and complex cross-view scenarios.

These directional pooling operations enhance spatial feature encoding by leveraging multiple receptive fields, allowing the model to effectively integrate multi-scale patterns. The aggregated outputs from QIM are formulated as:
\[
f_{part} \in \{h_i, v_i, d_i, a_i \mid i = 1, \dots, t\}.
\]

By capturing multi-directional interactions, QIM enables more compact and informative feature representations, bridging the gap between aerial and ground views. The extracted quadrant-based descriptors are subsequently refined within the Integrated Global-Local Attention Module (IGLAM), further enhancing geo-localization robustness.
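The four directional pooling operations can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a square \(n \times n \times C\) map with \(t = n\) (an 8 × 8 × 768 map is what ConvNeXt-Tiny's overall stride of 32 yields for a 256 × 256 input, consistent with n = 8 in the implementation details). The text defines only the first diagonal and anti-diagonal stripe, so the wrap-around offsets used for the remaining stripes are a hypothetical choice, and the name `quadrant_pool` is illustrative. Indices are 0-based, so `h[0]` corresponds to \(h_1\) and `v[1]` to \(v_2\).

```python
import numpy as np


def quadrant_pool(f_global: np.ndarray):
    """Directional average pooling of an (n, n, C) map with t = n stripes per direction.

    Returns four (n, C) arrays: horizontal, vertical, diagonal, anti-diagonal.
    Stripe 0 of the diagonal outputs is the main diagonal / anti-diagonal from the
    text; the wrap-around offsets used for stripes 1..n-1 are a hypothetical choice.
    """
    n, w, _ = f_global.shape
    assert n == w, "this sketch assumes a square feature map"
    rows = np.arange(n)
    h = f_global.mean(axis=1)   # h[r]: mean over the columns of row r
    v = f_global.mean(axis=0)   # v[c]: mean over the rows of column c
    # Diagonal stripe i visits positions (k, (k + i) mod n); i = 0 is p_k = q_k = k.
    d = np.stack([f_global[rows, (rows + i) % n].mean(axis=0) for i in range(n)])
    # Anti-diagonal stripe i visits (k, (n - 1 - k - i) mod n); i = 0 is the anti-diagonal.
    a = np.stack([f_global[rows, (n - 1 - rows - i) % n].mean(axis=0) for i in range(n)])
    return h, v, d, a


rng = np.random.default_rng(0)
f = rng.standard_normal((8, 8, 768))        # assumed 8 x 8 x 768 backbone output
h, v, d, a = quadrant_pool(f)
print(h.shape, v.shape, d.shape, a.shape)   # (8, 768) (8, 768) (8, 768) (8, 768)
```

Because each pooled stripe is already a C-dimensional vector, the vertical outputs need no explicit transpose in this sketch; the transpose described for \(v_i\) only matters when stripes are kept as un-pooled sub-maps.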

Integrated global-local attention module (IGLAM)

Cross-view geo-localization requires effectively integrating global and local visual cues to robustly match corresponding features across aerial and ground perspectives. However, existing attention mechanisms either focus on global feature extraction while neglecting local spatial structures, or rely on local descriptors without incorporating high-level semantic understanding. To bridge this gap, we introduce the Integrated Global-Local Attention Module (IGLAM), which facilitates efficient cross-scale feature interaction, capturing both high-level contextual information and fine-grained local details. Compared with existing interaction modules51,52, IGLAM achieves superior computational efficiency while preserving discriminative feature representations; Fig. 3 contrasts the structures of these modules.

Fig. 3. Comparison of our Integrated Global-Local Attention Module (IGLAM) with existing interaction mechanisms. (a) Self-attention concatenates local and global features before passing them through a self-attention block. (b) Cross-attention fuses features via a cross-attention layer. (c) Co-attention applies a cross-attention layer followed by a self-attention block. (d) Our merged attention first concatenates global and local features, then processes them through a single cross-attention block, enabling effective cross-view interaction.

As shown in the upper right corner of Fig. 2, IGLAM first concatenates the global feature representation \(f_{global}\) with the local features extracted from the different directional stripes (horizontal, vertical, diagonal, and anti-diagonal) obtained from the Quadrant Insight Module (QIM), as defined in Eq. (7). The concatenation operation can be expressed as:
\[
f_{cat} = \mathrm{Concat}(f_{global}, f_{part}),
\]

where \(f_{part}\) represents one of the local feature representations (\(h_i, v_i, d_i, a_i\)).

Next, we process \(f_{cat}\) through a single Cross-Attention block. Specifically, as shown in the dashed rectangular box at the right end of Fig. 2, three linear layers map the concatenated feature into the query, key, and value representations:
\[
Q = f_{cat}W^Q, \quad K = f_{cat}W^K, \quad V = f_{cat}W^V,
\]

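where \(W^Q\), \(W^K\), and \(W^V\) are learnable transformation matrices, and Q, K, V are the projected feature embeddings used in the attention computation. The attention operation is then formulated as:
\[
f_{local} = \mathrm{Softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V,
\]
where \(d_k\) denotes the dimension of the key vectors.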

This mechanism ensures that local feature representations retrieve relevant contextual information from the global embeddings, reinforcing discriminative features across different views.

After the attention mechanism is applied, the output \(f_{local}\) is further processed using a Global Average Pooling (GAP) operation:
\[
g_j = \mathrm{GAP}(f_{local}),
\]

where \(g_j\) represents the final feature vector for the input image \(x_j\). This operation condenses spatial features into a compact descriptor while preserving global semantics.
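A single-head NumPy sketch of the Merged Attention step helps make the data flow concrete: the flattened global map and the stripe descriptors are concatenated into one token sequence, projected to Q, K, and V, combined with scaled dot-product attention, and reduced by global average pooling. Several details are assumptions not stated in the text: one attention head, no bias terms or output projection, tokens stored as rows, the usual \(1/\sqrt{d_k}\) scaling, and random weights standing in for learned ones. The name `merged_attention` is hypothetical.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row maximum for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def merged_attention(f_global, f_part, w_q, w_k, w_v):
    """f_global: (N_g, C) tokens of the flattened H x W map; f_part: (N_p, C) stripe vectors."""
    f_cat = np.concatenate([f_global, f_part], axis=0)   # (N_g + N_p, C)
    q, k, v = f_cat @ w_q, f_cat @ w_k, f_cat @ w_v       # linear projections to Q, K, V
    d_k = k.shape[-1]
    attn = softmax(q @ k.T / np.sqrt(d_k), axis=-1)       # (N, N) attention weights
    f_local = attn @ v                                    # (N, d_v) attended features
    g = f_local.mean(axis=0)                              # global average pooling over tokens
    return g, attn


rng = np.random.default_rng(0)
C, d = 768, 64
f_global = rng.standard_normal((64, C))   # 8 x 8 map flattened into 64 tokens
f_part = rng.standard_normal((8, C))      # e.g. the 8 horizontal stripe vectors from QIM
w_q, w_k, w_v = (rng.standard_normal((C, d)) / np.sqrt(C) for _ in range(3))
g, attn = merged_attention(f_global, f_part, w_q, w_k, w_v)
print(g.shape, attn.shape)                # (64,) (72, 72)
```

Because all tokens attend to one another inside a single block, stripe tokens can draw on global context and global tokens on stripe detail, which illustrates the bidirectional interaction attributed to Merged Attention; the exact token layout used in GLQINet may differ.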

To demonstrate the efficiency of IGLAM, we compare it against three widely used interaction modules in Table 3. As shown in Fig. 3, (a) self-attention treats local and global features as a single entity, which can lead to redundant computation; (b) cross-attention fuses them in one direction only, limiting bidirectional interaction; and (c) co-attention fuses them sequentially, increasing computational overhead. In contrast, our Merged Attention (d) enables direct bidirectional interaction within a single block, reducing computational complexity while preserving robust feature fusion. Our evaluation shows that IGLAM not only improves Rank-k performance across multiple benchmarks but also improves computational efficiency, a balance that makes it well suited to large-scale cross-view geo-localization.

Loss function

Individual feature vectors are generated after feature extraction and subsequently fed into the classifier block. This block comprises a linear layer, followed by a batch normalization layer, a ReLU activation layer and a dropout layer. During the training phase, each feature vector \(g_j\) is processed through a classifier block and then a linear layer, transforming it into a category vector that is used to calculate the cross-entropy loss. In the testing phase, only the classifier block is utilized to process \(g_j\), resulting in a \(16 \times 512\) feature representation.
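An inference-mode sketch of the classifier block (linear layer, batch normalization, ReLU, dropout) and the extra training-time linear layer is shown below. The sizes are assumptions: a 768-dimensional input (ConvNeXt-Tiny's final channel width), a 512-dimensional bottleneck chosen to match the 16 × 512 test-time output mentioned above, and 701 classes (the number of University-1652 training buildings); random parameters stand in for trained ones. At test time batch normalization uses stored running statistics and dropout is the identity, so dropout does not appear in the code. Pushing all 16 vectors through one shared block is a simplification, since the text describes separate blocks with the same structure.

```python
import numpy as np


def classifier_block_eval(x, w, b, gamma, beta, running_mean, running_var, eps=1e-5):
    """Test-time Linear -> BatchNorm -> ReLU; dropout is the identity at inference."""
    z = x @ w + b                                                        # linear layer
    z = gamma * (z - running_mean) / np.sqrt(running_var + eps) + beta   # BN with running stats
    return np.maximum(z, 0.0)                                            # ReLU


rng = np.random.default_rng(0)
in_dim, emb_dim, num_classes = 768, 512, 701
x = rng.standard_normal((16, in_dim))     # 16 pooled feature vectors
params = dict(
    w=rng.standard_normal((in_dim, emb_dim)) * 0.02,
    b=np.zeros(emb_dim),
    gamma=np.ones(emb_dim),
    beta=np.zeros(emb_dim),
    running_mean=np.zeros(emb_dim),
    running_var=np.ones(emb_dim),
)
emb = classifier_block_eval(x, **params)  # (16, 512) retrieval descriptors
w_cls = rng.standard_normal((emb_dim, num_classes)) * 0.02
logits = emb @ w_cls                      # training-only linear layer -> class scores
print(emb.shape, logits.shape)            # (16, 512) (16, 701)
```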

The cross-entropy loss used to optimize the network’s parameters is defined as follows:
\[
L_{\text{CE}} = -\sum_{s} p(x_{s}) \log q(x_{s}),
\]

where \(p(x_{s})\) is the ground-truth probability and \(q(x_{s})\) is the estimated probability. If \(x_{s}\) corresponds to the target class (label value 1), then \(p(x_{s}) = 1\); otherwise, \(p(x_{s}) = 0\).
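With a one-hot ground truth p, the cross-entropy above reduces to the negative log of the predicted probability of the true class. A NumPy sketch follows, assuming q is obtained by a softmax over the class scores (the text does not name the normalization) and using a numerically stable log-softmax; `cross_entropy` is an illustrative name.

```python
import numpy as np


def cross_entropy(logits: np.ndarray, label: int) -> float:
    """L_CE = -sum_s p(x_s) log q(x_s), with one-hot p and q = softmax(logits)."""
    z = logits - logits.max()                # shift for numerical stability
    log_q = z - np.log(np.exp(z).sum())      # log-softmax
    p = np.zeros_like(logits)
    p[label] = 1.0                           # ground truth: 1 for the target class, else 0
    return float(-np.sum(p * log_q))


logits = np.array([2.0, 0.5, -1.0])
loss = cross_entropy(logits, 0)              # equals -log(softmax(logits)[0])
uniform_loss = cross_entropy(np.zeros(3), 1) # equals log(3) for uniform scores
```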

To minimize the distance between feature vectors of the same category across different views, we adopt the triplet loss with the conventional Euclidean distance, as in previous works18,53,54. The triplet loss is formulated as:
\[
L_{\text{triplet}} = \max\left(\|F_a - F_b\|_2 - \|F_a - F_c\|_2 + M,\ 0\right),
\]

where \(||\cdot ||_2\) denotes the \(L_2\)-norm, \(F_a\) is the feature vector of the anchor image a (which can be either a satellite-view or a drone-view image), \(F_b\) is the feature vector of an image from the same category as a, and \(F_c\) is the feature vector of an image from a different category. M is the triplet margin, which we set to 0.3.
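A sketch of the triplet loss for a single (anchor, positive, negative) triple is given below, assuming the usual hinge form \(\max(\|F_a - F_b\|_2 - \|F_a - F_c\|_2 + M, 0)\) with the margin M = 0.3 stated above. How triples are mined within a batch is not specified in the text and is left out; `triplet_loss` is an illustrative name.

```python
import numpy as np


def triplet_loss(f_a, f_b, f_c, margin: float = 0.3) -> float:
    d_pos = np.linalg.norm(f_a - f_b)   # Euclidean distance anchor -> same-category image
    d_neg = np.linalg.norm(f_a - f_c)   # Euclidean distance anchor -> different-category image
    return float(max(d_pos - d_neg + margin, 0.0))


anchor = np.array([0.0, 0.0])
positive = np.array([0.1, 0.0])
easy_negative = np.array([1.0, 0.0])    # farther than positive + margin -> zero loss
hard_negative = np.array([0.2, 0.0])    # inside the margin -> positive loss
print(triplet_loss(anchor, positive, easy_negative))   # 0.0
print(triplet_loss(anchor, positive, hard_negative))
```

The margin means a negative only stops contributing once it is at least M farther from the anchor than the positive, which is what pushes same-location drone and satellite features together across views.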

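The total loss integrates both cross-entropy and triplet losses across multiple feature representations, computed as:
\[
L_{\text{total}} = \sum_{j=1}^{2}\sum_{k}\left(L_{\text{CE}}^{jk} + L_{\text{triplet}}^{jk}\right),
\]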

where k indexes the feature representations, and \(L_{\text {CE}}^{jk}\) and \(L_{\text {triplet}}^{jk}\) denote the cross-entropy and triplet losses for the k-th feature of \(x_j\), respectively.

In summary, we employ cross-entropy loss for classification and triplet loss to enhance feature consistency across different domains.

To further clarify the integration of modules and the training flow, we present the pseudocode of GLQINet in Algorithm 1.

Experiment and analysis

To evaluate the proposed GLQINet, we compare it with multiple state-of-the-art cross-view geo-localization approaches on two large-scale datasets, University-1652 and SUES-200.

Datasets

University-1652

The University-1652 dataset serves as a benchmark for drone-based geo-localization, featuring multi-view and multi-source imagery that includes ground-view, drone-view, and satellite-view images of 1652 buildings across 72 universities. The dataset is divided into a training set comprising images of 701 buildings at 33 universities and a testing set comprising images of the remaining 951 buildings at the other 39 universities. Notably, the training set contains an average of 71.64 images per location, whereas existing datasets typically include only two images per location.

SUES-200

SUES-200 is a cross-view matching dataset distinguished by its varied sources, multiple scenes and panoramic perspectives, which consists of images captured from drone and satellite viewpoints. What differentiates SUES-200 from earlier datasets is its incorporation of tilted images from the drone perspective, complete with labeled flight heights of 150 m, 200 m, 250 m and 300 m, making it more representative of real-world conditions. Additionally, this dataset encompasses a wide array of scene types, extending beyond campus buildings to include parks, schools, lakes and other public structures.

Evaluation metrics

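In cross-view geo-localization, Recall@K (R@K) and Average Precision (AP) are the standard evaluation metrics. R@K measures how often a correct match appears within the top K retrieved results, and a higher R@K indicates better performance. For a single query, it is defined as:
\[
\text{R@K} = \begin{cases} 1, & \text{if } o_{true} \le K, \\ 0, & \text{otherwise}, \end{cases}
\]
where \(o_{true}\) denotes the rank of the first true match in the gallery; the reported R@K is averaged over all queries.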
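The retrieval protocol can be sketched as follows: the gallery is ranked by cosine similarity to the query (the similarity named in the implementation details), and R@K checks whether a true match falls in the top K. `rank_by_cosine` and `recall_at_k` are hypothetical helper names; the dataset-level R@K would be the mean of the per-query values.

```python
import numpy as np


def rank_by_cosine(query: np.ndarray, gallery: np.ndarray) -> np.ndarray:
    """Return gallery indices sorted from most to least similar to the query."""
    q = query / np.linalg.norm(query)
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    return np.argsort(-(g @ q), kind="stable")


def recall_at_k(ranked: np.ndarray, true_indices, k: int) -> int:
    """1 if any true match appears among the top-k gallery items, else 0."""
    return int(np.isin(ranked[:k], true_indices).any())


gallery = np.array([[1.0, 0.0], [0.0, 1.0], [0.7, 0.7], [-1.0, 0.0]])
query = np.array([0.6, 0.8])
ranked = rank_by_cosine(query, gallery)   # [2, 1, 0, 3]
print(recall_at_k(ranked, [1], 1), recall_at_k(ranked, [1], 2))   # 0 1
```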

AP measures the area under the precision-recall curve, accounting for all true matches, and is formulated as:
\[
\text{AP} = \frac{1}{N}\sum_{i=1}^{N}\frac{p_{i-1}+p_i}{2},
\]
where \(p_0 = 1\) and
\[
p_i = \frac{T_s}{T_s + F_s}.
\]

Here, N represents the number of true matches for a query, while \(T_s\) and \(F_s\) denote the counts of true and false matches before the \((i+1)\)-th true match.
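The trapezoidal AP can be sketched as below. This version is modeled on the evaluation routine in the public University-1652 baseline code (linked in the Fig. 1 caption), in which each true match contributes the average of the precision just before it and the precision at it, with the "before" precision taken as 1 when the match is ranked first. Whether the numbers reported here were computed with exactly this routine is an assumption, and `average_precision` is a hypothetical name; `ranked` is a gallery ranking such as one produced by cosine similarity.

```python
import numpy as np


def average_precision(ranked, true_indices) -> float:
    """Trapezoidal AP over all true matches of one query (0.0 if there are none)."""
    hits = np.flatnonzero(np.isin(ranked, true_indices))   # 0-based ranks of true matches
    n_true = len(hits)
    ap = 0.0
    for i, r in enumerate(hits):
        precision = (i + 1) / (r + 1)                      # precision at the (i+1)-th true match
        prev_precision = i / r if r != 0 else 1.0          # precision just before it (1 if ranked first)
        ap += (prev_precision + precision) / 2 / n_true
    return float(ap)


print(average_precision([0, 1, 2, 3], [0, 1]))   # 1.0: both true matches ranked first
print(average_precision([3, 0, 1, 2], [0]))      # 0.25: single true match at rank 2
```

Note that the trapezoidal rule scores a lone true match at rank 2 as 0.25, stricter than the 0.5 that non-interpolated AP would give.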

Implementation details

We adopt ConvNeXt-Tiny, pre-trained on ImageNet, as the backbone to extract visual features. To match the input requirements of the pre-trained model, we resize the input images to \(256 \times 256\) pixels for both training and testing. The parameter n is set to 8.

For parameter initialization, we apply the Kaiming initialization method63 to the classifier block. For optimization, we use stochastic gradient descent (SGD) with a momentum of 0.9, a weight decay of 0.0005, and a batch size of 32. The learning rate is set to 0.003 for the backbone parameters and 0.01 for the remaining layers. We train the model for 200 epochs, decaying the learning rate by a factor of 0.1 after the 80th and 120th epochs. To measure the similarity between query and gallery images, we use the cosine distance between the extracted features.
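The optimization schedule above amounts to two parameter groups (backbone at 0.003, remaining layers at 0.01) whose learning rates are multiplied by 0.1 after epochs 80 and 120. A plain-Python sketch of that step schedule follows; `learning_rate` is a hypothetical helper, and `epoch` is assumed to count completed epochs. In PyTorch, the same behavior is commonly obtained with `torch.optim.SGD` over two parameter groups together with `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[80, 120], gamma=0.1)`, although the authors' exact code is not given.

```python
def learning_rate(base_lr: float, epoch: int, milestones=(80, 120), gamma: float = 0.1) -> float:
    """Multiply base_lr by gamma once for each milestone that has been reached."""
    num_decays = sum(epoch >= m for m in milestones)
    return base_lr * gamma ** num_decays


BASE_LRS = {"backbone": 0.003, "other_layers": 0.01}

for epoch in (0, 79, 80, 120, 199):
    rates = {name: learning_rate(lr, epoch) for name, lr in BASE_LRS.items()}
    print(epoch, rates)
```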

Comparison with state-of-the-art methods

The comparison results presented in Tables 1 and 2 demonstrate that the proposed GLQINet is superior to many state-of-the-art cross-view geo-localization methods, including CNN-based methods and Transformer-based methods. To provide better context for these comparisons, we briefly summarize the key characteristics of the representative methods.

CNN-based methods

U-baseline13 utilizes a standard CNN backbone to extract global descriptors for cross-view matching. DWDR55 introduces dual-weighted discriminative regularization to improve feature distinctiveness. MuSe-Net56 extracts multiple semantic embeddings to enhance retrieval performance. LPN21 employs local patch aggregation to capture fine-grained part-level features. LDRVSD58 reduces viewpoint and scale variations via variational self-distillation. LCM64 enhances robustness to large viewpoint differences through local context matching and part-level attention. PCL59 applies part-based contrastive learning to refine local descriptors. PAAN60 integrates part-aware attention for enhanced feature learning. MSBA44 adopts multi-scale bidirectional attention to strengthen robustness under perspective shifts. MBF61 leverages multi-branch fusion to align features across views. MCCG62 incorporates graph-based reasoning to capture cross-view structural correspondences.

Transformer-based methods

ViT34 adopts the Vision Transformer architecture to globally model spatial dependencies. SwinV2-T65 introduces hierarchical shifted windows and improved normalization techniques to capture local-global context with reduced computational cost. SAIG57 employs lightweight self-attention to model global dependencies efficiently. FSRA18 uses hierarchical self-attention for contextual feature alignment. SGM26 introduces semantic-guided region alignment to focus on discriminative areas during matching.

Comparisons on University-1652

To evaluate GLQINet against state-of-the-art methods on the University-1652 dataset, we compare it with both CNN-based and Transformer-based techniques. The CNN-based methods include MCCG62, MBF61, PAAN60, MSBA44 and LPN21, while the Transformer-based methods include SAIG57, SGM26 and FSRA18. We also report the baseline model provided with the University-1652 dataset13 (U-baseline). The quantitative results are presented in Table 1, where bold values indicate the best performance. The University-1652 benchmark comprises two primary tasks: Drone-to-Satellite target localization and Satellite-to-Drone navigation. In the Drone \(\rightarrow\) Satellite task, GLQINet achieves an R@1 of \(91.66\%\) and an AP of \(92.94\%\). In the Satellite \(\rightarrow\) Drone navigation task, it attains an R@1 of \(94.58\%\) and an AP of \(91.11\%\). These results show that GLQINet consistently surpasses the compared state-of-the-art models in both retrieval directions. Notably, GLQINet outperforms the strong CNN-based model MCCG62 by \(2.02\%\) in R@1 on the Drone \(\rightarrow\) Satellite task, a clear improvement in cross-domain matching. Compared with Transformer-based methods such as FSRA18 and SGM26, GLQINet exhibits better robustness and generalization, especially under large viewpoint variations and complex background interference. We attribute this gain to GLQINet’s design, which combines global-local quadrant interaction with directional pooling. These modules enhance the model’s capacity to capture cross-view spatial dependencies and to align local structures between aerial and ground views. In particular, the multi-directional feature aggregation helps preserve spatial cues that are often lost in traditional CNNs or diluted in pure Transformer architectures.

Furthermore, the experimental results indicate that GLQINet handles the semantic gap between the drone and satellite perspectives well, which is a central challenge of the University-1652 dataset. By combining global context modeling with fine-grained feature interaction, GLQINet produces a representation that is both discriminative and robust. Overall, this comparison shows that GLQINet improves results on both the localization and navigation tasks. These findings suggest that GLQINet is promising for real-world drone applications that require reliable target matching and navigation in large-scale remote sensing scenarios.
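Both comparison tables report R@1 (Recall@1) and AP. As a rough illustration of how these retrieval metrics are usually computed, the sketch below scores each query from the rank-ordered location labels of the whole gallery. It assumes every gallery item is ranked, so all true matches appear in the list. It uses non-interpolated AP, which is the mean precision at each rank holding a true match. The official University-1652 evaluation code may compute AP with a slightly different rule (for example, trapezoidal), so this sketch is not guaranteed to reproduce Table 1 exactly. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def recall_at_k(ranked_labels: Sequence[int], query_label: int, k: int) -> float:
    """1.0 if at least one true match is among the top-k results, else 0.0."""
    return 1.0 if query_label in ranked_labels[:k] else 0.0


def average_precision(ranked_labels: Sequence[int], query_label: int) -> float:
    """Non-interpolated AP: mean of precision@rank over ranks holding a true match.

    Assumes the full gallery is ranked, so every true match appears in the list.
    """
    hits = 0
    precision_sum = 0.0
    for rank, label in enumerate(ranked_labels, start=1):
        if label == query_label:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0


def evaluate(queries: List[Tuple[Sequence[int], int]]) -> Tuple[float, float]:
    """Mean R@1 and mean AP, in percent, over (ranked_labels, query_label) pairs."""
    r1 = sum(recall_at_k(ranked, label, 1) for ranked, label in queries)
    ap = sum(average_precision(ranked, label) for ranked, label in queries)
    return 100.0 * r1 / len(queries), 100.0 * ap / len(queries)


# Drone -> Satellite style: one true satellite image per query (AP = 1 / rank).
# Satellite -> Drone style: several true drone images per query.
print(evaluate([([3, 7, 5], 7), ([7, 2, 7, 7], 7)]))
```

When a query has a single true match, AP reduces to the reciprocal rank of that match. This explains why R@1 and AP tend to be close for Drone \(\rightarrow\) Satellite. With many true matches, AP also penalizes true matches that are ranked late.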

Comparisons on SUES-200

To further evaluate the effectiveness and generalization of GLQINet, we conduct experiments on the SUES-200 dataset34. This dataset poses additional challenges because of its multiple flight altitudes and complex urban scenes. The detailed results are summarized in Table 2. In the drone-view target localization task (Drone \(\rightarrow\) Satellite), GLQINet achieves R@1 scores of \(82.07\%\), \(91.50\%\), \(96.72\%\) and \(96.82\%\) at the four drone heights (150 m, 200 m, 250 m and 300 m), with corresponding AP values of \(85.55\%\), \(93.33\%\), \(97.49\%\) and \(97.42\%\). These results show a clear advantage over the representative CNN-based model LPN21, whose R@1 ranges only from \(61.58\%\) to \(81.47\%\) at the same altitudes. This substantial improvement indicates that GLQINet maintains high retrieval accuracy despite variations in altitude and viewpoint. In the drone navigation task (Satellite \(\rightarrow\) Drone), GLQINet records R@1 scores of \(95.00\%\), \(98.75\%\), \(98.75\%\) and \(98.75\%\), with AP values of \(85.44\%\), \(95.26\%\), \(97.14\%\) and \(97.98\%\) across the four heights. These results indicate that GLQINet localizes drone-view targets accurately and also delivers stable navigation accuracy across altitudes.

Furthermore, compared with the Transformer-based baselines SwinV2-T and FSRA, GLQINet improves R@1 by nearly 7 and 5 percentage points, respectively. This highlights the benefit of the global-local quadrant interaction design, which helps align features under large viewpoint changes and against complex urban backgrounds. Overall, these results show that GLQINet outperforms the compared methods in both localization and navigation. Its robustness across flight altitudes further supports its applicability to real-world drone-based remote sensing and geo-localization.

Ablation studies and analysis

In this section, we analyze GLQINet from six aspects to understand the sources of its performance. Specifically, we conduct ablation experiments to evaluate (1) the role of the proposed QIM and IGLAM, (2) the impact of the number of stripes, (3) the choice of attention mechanism in IGLAM, (4) the effect of the backbone network, (5) the effect of input image size and (6) the effect of loss weighting.

(1) Role of QIM and IGLAM. We first evaluate the effectiveness of the proposed components, QIM and IGLAM, on both the University-1652 and SUES-200 datasets, as shown in Fig. 4. Introducing QIM and IGLAM together yields significant improvements over the baseline. Specifically, on University-1652 the complete model raises R@1 from \(76.74\%\) to \(91.66\%\) (+14.92 percentage points) on the Drone \(\rightarrow\) Satellite task and from \(88.45\%\) to \(94.58\%\) (+6.13 percentage points) on the Satellite \(\rightarrow\) Drone task. We draw three conclusions from these results. (i) Removing all components results in notably poorer matching performance on both datasets. (ii) Each proposed module alone yields a significant improvement. QIM contributes the most, which highlights the value of leveraging diverse directional features. (iii) Using all components together achieves the best performance on every metric, which shows that the proposed modules work collaboratively. Together, these findings confirm the efficacy of QIM and IGLAM.
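The gains quoted in this ablation are absolute differences between two percentages, that is, percentage points. The small helper below reproduces them from the reported R@1 values and, for comparison, also gives the corresponding relative gains. It is a hypothetical illustration, not part of GLQINet.

```python
from typing import Tuple


def gains(baseline: float, improved: float) -> Tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = improved - baseline
    relative = 100.0 * absolute / baseline
    return round(absolute, 2), round(relative, 2)


# R@1 on University-1652 as reported above: baseline -> full model.
print("Drone -> Satellite:", gains(76.74, 91.66))  # (14.92, 19.44)
print("Satellite -> Drone:", gains(88.45, 94.58))  # (6.13, 6.93)
```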

Error cases of our method and the baseline. Blue boxes denote correct matches and red boxes denote false matches. The satellite images shown in this figure are derived from the University-1652 dataset13, which can be accessed at: https://github.com/layumi/University1652-Baseline.

(2) Impact of the number of stripes. In the following experiments, we vary only the parameter t, which denotes the number of stripes along each direction. This parameter is central to the stripe partitioning strategy, and we set \(t = 4\) by default. To assess the impact of t on R@1 and AP, we run experiments with t ranging from 1 to 8. The results are illustrated in Fig. 5. For the drone navigation task, both R@1 and AP improve as t increases, peaking at \(94.58\%\) and \(91.11\%\) when \(t = 4\), after which they decline slightly. For the drone-view target localization task, R@1 and AP likewise rise while t is less than 4 and then decrease gradually as t grows further. Because performance changes only moderately around \(t = 4\), the method appears relatively robust to the number of stripes.
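To make the role of t concrete, the following NumPy sketch splits a feature map into t stripes along each of two axis-aligned directions and average-pools every stripe into one descriptor. Several details are assumptions made for illustration: the horizontal and vertical directions, average pooling, the (C, H, W) layout and the 768-channel example input. The actual stripe construction in QIM/IGLAM is defined in the method section and may differ, for instance in the number of directions. `directional_stripes` is a hypothetical name.

```python
import numpy as np


def directional_stripes(feat: np.ndarray, t: int) -> np.ndarray:
    """Average-pool t horizontal and t vertical stripes of a (C, H, W) map.

    Returns an array of shape (2 * t, C): rows 0..t-1 are horizontal stripes
    (top to bottom), rows t..2t-1 are vertical stripes (left to right).
    """
    _, h, w = feat.shape
    if not 1 <= t <= min(h, w):
        raise ValueError("t must lie between 1 and min(H, W)")
    # np.array_split allows H or W not divisible by t (stripe sizes differ by 1).
    row_groups = np.array_split(np.arange(h), t)
    col_groups = np.array_split(np.arange(w), t)
    horizontal = [feat[:, rows, :].mean(axis=(1, 2)) for rows in row_groups]
    vertical = [feat[:, :, cols].mean(axis=(1, 2)) for cols in col_groups]
    return np.stack(horizontal + vertical)


# Hypothetical input: 768 channels on an 8 x 8 grid.
feat = np.random.default_rng(0).standard_normal((768, 8, 8))
print(directional_stripes(feat, t=4).shape)  # (8, 768)
```

Larger t yields thinner stripes that capture finer local detail but average over fewer positions. This is one plausible reason why accuracy saturates at a moderate t.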

(3) Analysis of the attention mechanism in IGLAM. Among the attention mechanisms in the literature, we adopt Merged Attention because it enhances feature representation while incurring minimal additional computational cost. To assess how the attention mechanism in IGLAM affects the final results, we compare it with Self-Attention, Cross-Attention and Co-Attention, training each IGLAM variant under identical conditions. As detailed in Table 3, all alternatives perform worse than Merged Attention. Relative to the Co-Attention-based IGLAM, the Merged Attention-based IGLAM improves R@1 and AP by 0.29 and 0.81 percentage points, respectively, on the Satellite \(\rightarrow\) Drone task. On the Drone \(\rightarrow\) Satellite task, the corresponding improvements are 0.54 and 0.40 percentage points. We hypothesize that the attention weights produced by Merged Attention effectively emphasize the most informative components of the feature maps, which benefits the final retrieval results.
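The difference between the fusion styles compared in Table 3 can be sketched in NumPy. In the vision-and-language literature (e.g. Dou et al.51), merged attention concatenates the two token groups and processes them with a single self-attention, so every token can attend to both groups. The co-attention variant below is simplified to cross-attention only: each group attends solely to the other, and the per-stream self-attention of full co-attention blocks is omitted. Several choices are assumptions, not the exact IGLAM implementation: single-head attention, shared projections `wq`, `wk` and `wv`, and treating one global descriptor plus the stripe descriptors as tokens.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(q: np.ndarray, k: np.ndarray, v: np.ndarray):
    """Single-head scaled dot-product attention; returns (output, weights)."""
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]))
    return weights @ v, weights


def merged_attention(global_tokens, local_tokens, wq, wk, wv):
    """Concatenate global and local tokens and run ONE self-attention over them."""
    x = np.concatenate([global_tokens, local_tokens], axis=0)  # (Ng + Nl, D)
    out, weights = attention(x @ wq, x @ wk, x @ wv)
    ng = global_tokens.shape[0]
    return out[:ng], out[ng:], weights  # weights: (Ng + Nl, Ng + Nl)


def co_attention(global_tokens, local_tokens, wq, wk, wv):
    """Simplified co-attention: each group attends only to the other group."""
    g_out, _ = attention(global_tokens @ wq, local_tokens @ wk, local_tokens @ wv)
    l_out, _ = attention(local_tokens @ wq, global_tokens @ wk, global_tokens @ wv)
    return g_out, l_out


rng = np.random.default_rng(0)
d = 16
global_tok = rng.standard_normal((1, d))  # one global descriptor (hypothetical)
local_tok = rng.standard_normal((8, d))   # e.g. 2 * t stripe descriptors, t = 4
wq, wk, wv = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))
g_m, l_m, w = merged_attention(global_tok, local_tok, wq, wk, wv)
print(w.shape)  # (9, 9): each of the 9 tokens attends to all 9 tokens
```

Under merged attention, a stripe token can draw on other stripes and on the global token within one softmax. Under the simplified co-attention, a stripe token sees only the global token, and with a single global token its attention weight is trivially 1.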

(4) Effect of the backbone network. The methods compared in Table 1 and Table 2 use various backbones for feature extraction. To enable a fair comparison and to assess the generalization of the proposed QIM and IGLAM, Table 4 reports the performance of our model with different backbones: ConvNeXt-Tiny 50, ConvNeXt-Small 50, ResNet-50 66 and ResNet-101 66. Applying GLQINet on these four backbones improves the average R@1 by 17.16 percentage points on the Drone \(\rightarrow\) Satellite task and by 7.13 percentage points on the Satellite \(\rightarrow\) Drone task. Notably, on the Drone \(\rightarrow\) Satellite task, our method raises R@1 from \(59.56\%\) to \(83.01\%\) (+23.45 percentage points) for ResNet-101 and from \(82.43\%\) to \(92.40\%\) (+9.97 percentage points) for ConvNeXt-Small. These findings underscore the generalization capability of GLQINet across backbones. Furthermore, ConvNeXt-Tiny outperforms ResNet-50 by at least 5.99 percentage points in both R@1 and AP on the two cross-view tasks. ConvNeXt-Small, the deeper ConvNeXt variant, yields a further slight gain, likely owing to its greater capacity. Therefore, to balance accuracy and complexity, we adopt ConvNeXt-Tiny as the backbone for the remainder of this paper.

(5) Effect of input image size. Using a smaller resolution reduces computational cost but may suppress fine-grained spatial cues, while larger inputs improve feature expressiveness at the expense of memory and training time. To evaluate this trade-off, we tested multiple resolutions ranging from \(128\times 128\) to \(512\times 512\). As shown in Table 5, GLQINet maintains strong performance across all settings, demonstrating robustness to image scale. Notably, increasing the resolution to \(384\times 384\) yields a slight performance gain, but at a higher computational cost. Therefore, we select \(256\times 256\) as the default setting in the main experiments to balance efficiency and accuracy.
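A back-of-the-envelope calculation illustrates this trade-off. The sketch makes two assumptions. First, the stripes are taken from the final stage of the ConvNeXt-Tiny backbone, whose total downsampling factor is 32. Second, convolutional cost grows roughly with the number of input pixels. Under these assumptions, it lists the final feature-map size and the approximate cost relative to \(256\times 256\) for each tested resolution. These are rough estimates that ignore fixed overheads and the attention module; they are not measurements from Table 5.

```python
BACKBONE_STRIDE = 32  # ConvNeXt-Tiny downsamples by 4 (stem) x 2 x 2 x 2


def feature_side(input_side: int, stride: int = BACKBONE_STRIDE) -> int:
    """Side length of the final-stage feature map for a square input."""
    return input_side // stride


def relative_cost(input_side: int, reference_side: int = 256) -> float:
    """Approximate conv cost relative to the reference: scales with H * W."""
    return (input_side / reference_side) ** 2


for side in (128, 256, 384, 512):
    print(f"{side}x{side}: feature map {feature_side(side)}x{feature_side(side)}, "
          f"~{relative_cost(side):.2f}x the cost of 256x256")
```

Under these assumptions, \(384\times 384\) costs about 2.25 times as much as \(256\times 256\). The resulting \(8\times 8\) map at \(256\times 256\) would also be consistent with the stripe ablation testing t up to 8, since each stripe then still covers at least one feature row or column.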

(6) Effect of loss weighting. Our total loss combines global and local supervision terms to jointly optimize high-level semantics and directional local cues. To assess their relative contributions, we vary the weight of the local feature loss while keeping the global term fixed. As shown in Table 6, performance drops significantly when either term dominates, confirming that both components are essential and complementary. The best performance is obtained with a weighting of 0.05 : 0.8, which we adopt in all experiments.
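A minimal sketch of such a weighted objective follows. It assumes the local term is the mean of per-stripe losses and that the two reported weights multiply the global and local terms. This section does not state which of 0.05 and 0.8 applies to which term, so the assignment in the usage line is a placeholder. In practice, the loss values would come from the losses defined in the method section; here they are plain numbers. `total_loss` is a hypothetical name.

```python
from typing import Sequence


def total_loss(global_loss: float, local_losses: Sequence[float],
               w_global: float, w_local: float) -> float:
    """Weighted sum of the global loss and the mean of the per-stripe local losses."""
    if not local_losses:
        raise ValueError("expected at least one local (stripe) loss")
    local_mean = sum(local_losses) / len(local_losses)
    return w_global * global_loss + w_local * local_mean


# Placeholder weight assignment (hypothetical): global 0.8, local 0.05.
print(total_loss(2.0, [1.0, 3.0], w_global=0.8, w_local=0.05))
```

Averaging the stripe losses keeps the local term on the same scale as t changes. If the per-stripe losses were summed instead, the effective local weight would grow with the number of stripes.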

Visualization

As a qualitative assessment, we visualize the retrieval results of GLQINet on the University-1652 dataset. Figure 6 shows the top-5 retrieved images (R@1 to R@5) for both tasks, where blue boxes indicate correct matches and red boxes indicate incorrect matches. The model retrieves correct matches even for randomly selected queries that differ from the gallery in domain and viewpoint. In the Drone \(\rightarrow\) Satellite task, it successfully distinguishes negative samples that closely resemble the positive ones. This visual evaluation supports the effectiveness of GLQINet in discerning and aligning complex imagery and its potential for advanced geo-localization applications.
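Ranked lists like those in Fig. 6 are obtained by sorting gallery images by their similarity to the query embedding. As an illustration only, the sketch below ranks L2-normalized embeddings by cosine similarity. It then flags each top-k result as correct (blue box) or incorrect (red box) by comparing location labels. The use of cosine similarity and the name `top_k_matches` are assumptions, not details confirmed by this section.

```python
import numpy as np


def top_k_matches(query, gallery, gallery_labels, query_label, k=5):
    """Return [(gallery_index, is_correct), ...] for the k most similar gallery items."""
    q = query / np.linalg.norm(query)
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    scores = g @ q                    # cosine similarity of each gallery item, shape (N,)
    order = np.argsort(-scores)[:k]   # indices sorted from most to least similar
    return [(int(i), bool(gallery_labels[i] == query_label)) for i in order]


gallery = np.array([[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]])
labels = [0, 1, 0, 2]
print(top_k_matches(np.array([1.0, 0.0]), gallery, labels, query_label=0, k=3))
# [(0, True), (2, True), (1, False)]
```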

To better understand the model's limitations, we analyze its failure cases in detail. As shown in Fig. 6, GLQINet is clearly more discriminative than the baseline. However, failures occur when drone-view images are highly similar to one another. In such cases, imprecise texture mapping in the early-layer features makes it difficult for the model to retrieve the correct match.

Limitations

While GLQINet demonstrates strong performance on multiple cross-view geo-localization benchmarks, it still has certain limitations. First, the model relies on large-scale annotated datasets to learn robust and view-invariant representations, which may limit its effectiveness in low-resource or unlabeled environments. Second, the dual-stream architecture combined with multi-directional pooling introduces additional computational and memory overhead, which may hinder real-time deployment on edge devices or UAV platforms with constrained resources. To mitigate these limitations, future work will explore semi-supervised and self-supervised learning paradigms to reduce dependence on manual annotations. We also plan to investigate model compression techniques and lightweight architectural variants to improve efficiency. Additionally, incorporating domain adaptation strategies may further enhance the generalization capability of GLQINet across unseen regions or diverse geographic conditions. Lastly, we aim to evaluate and adapt the model on real UAV imagery to better support practical applications.

Conclusion

With the rapid advancement of UAV technology, there is increasing demand for autonomous UAV control, particularly for navigation without GPS signals. Image-based localization has emerged as a crucial solution to this challenge. This paper addresses cross-view image matching for geo-localization. We identified the limitations of current CNN- and Transformer-based approaches and introduced a method that builds a comprehensive feature representation through a Quadrant Insight Module (QIM) and an Integrated Global-Local Attention Module (IGLAM). Recent progress in other computer vision tasks offers transferable insights into robust feature learning across modalities and representations. For example, frequency-to-spectrum mapping GANs 67,68 use adversarial training to translate data between the frequency and spectral domains, reducing domain discrepancies and enabling robust alignment. This principle of cross-domain transformation matches our motivation of bridging the visual gap between drone and satellite perspectives. Fountain Fusion Networks 69,70 demonstrate the efficacy of multi-level feature fusion for scene understanding and emphasize the need to jointly leverage global context and fine-grained structure. Inspired by this, GLQINet employs quadrant-based decomposition and an integrated global-local attention mechanism to capture complementary spatial cues. In addition, co-segmentation assisted cross-modality person re-identification 71,72 shows how structural priors and shared segmentation masks can guide feature alignment across heterogeneous domains. Analogously, our design incorporates directional structural priors to enhance local discriminability and spatial alignment, which conventional geo-localization frameworks often overlook.
By drawing on insights from these domains, GLQINet departs from traditional pipelines that treat global and local features independently. Instead, it unifies their modeling within a merged attention framework, which improves robustness under challenging cross-view conditions. We validated the proposed method on two benchmark datasets, University-1652 and SUES-200, where it outperformed existing state-of-the-art methods. To exploit spatial correlations across the image, GLQINet captures diverse local stripe patterns and fosters mutual reinforcement between global and local semantics, an approach that may also benefit future Vision Transformer research. In future work, we will focus on further improving matching accuracy, especially on real UAV imagery, and on optimizing the model for practical UAV applications.

Data availability

The data utilized in this study can be obtained from the corresponding author upon reasonable request.

References

Huang, G. et al. DINO-Mix: Enhancing visual place recognition with foundational vision model and feature mixing. Sci. Rep. 14, 22100 (2024).

Fockert, A. et al. Assessing the detection of floating plastic litter with advanced remote sensing technologies in a hydrodynamic test facility. Sci. Rep. 14, 25902 (2024).

Yang, J. et al. GLE-Net: Global-local information enhancement for semantic segmentation of remote sensing images. Sci. Rep. 14, 25282 (2024).

Bai, C., Bai, X., Wu, K. & Ye, Y. Adaptive condition-aware high-dimensional decoupling remote sensing image object detection algorithm. Sci. Rep. 14, 20090 (2024).

Shahabi, H. & Hashim, M. Landslide susceptibility mapping using GIS-based statistical models and remote sensing data in tropical environment. Sci. Rep. 5, 9899 (2015).

Xu, Q. et al. Heterogeneous graph transformer for multiple tiny object tracking in RGB-T videos. IEEE Trans. Multimed. 26, 9383–9397 (2024).

Middelberg, S., Sattler, T., Untzelmann, O. & Kobbelt, L. Scalable 6-DoF localization on mobile devices. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part II. 268–283 (Springer, 2014).

An, Z., Wang, X., Li, B., Xiang, Z. & Zhang, B. Robust visual tracking for UAVs with dynamic feature weight selection. Appl. Intell. 53, 3836–3849 (2023).

Xu, Q., Wang, L., Wang, Y., Sheng, W. & Deng, X. Deep bilateral learning for stereo image super-resolution. IEEE Signal Process. Lett. 28, 613–617 (2021).

Krylov, V. A., Kenny, E. & Dahyot, R. Automatic discovery and geotagging of objects from street view imagery. Remote Sens. 10, 661 (2018).

Nassar, A. S., Lefèvre, S. & Wegner, J. D. Simultaneous multi-view instance detection with learned geometric soft-constraints. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 6559–6568 (2019).

Chaabane, M., Gueguen, L., Trabelsi, A., Beveridge, R. & O’Hara, S. End-to-end learning improves static object geo-localization from video. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. 2063–2072 (2021).

Zheng, Z., Wei, Y. & Yang, Y. University-1652: A multi-view multi-source benchmark for drone-based geo-localization. In Proceedings of the 28th ACM International Conference on Multimedia. 1395–1403 (2020).

Lin, T.-Y., Belongie, S. & Hays, J. Cross-view image geolocalization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 891–898 (2013).

Castaldo, F., Zamir, A., Angst, R., Palmieri, F. & Savarese, S. Semantic cross-view matching. In Proceedings of the IEEE International Conference on Computer Vision Workshops. 9–17 (2015).

Liu, Z. et al. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 10012–10022 (2021).

Dosovitskiy, A. et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929 (2020).

Dai, M., Hu, J., Zhuang, J. & Zheng, E. A transformer-based feature segmentation and region alignment method for UAV-view geo-localization. IEEE Trans. Circuits Syst. Video Technol. 32, 4376–4389 (2021).

Zhu, S., Shah, M. & Chen, C. TransGeo: Transformer is all you need for cross-view image geo-localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 1162–1171 (2022).

Yang, H., Lu, X. & Zhu, Y. Cross-view geo-localization with layer-to-layer transformer. Adv. Neural Inf. Process. Syst. 34, 29009–29020 (2021).

Wang, T. et al. Each part matters: Local patterns facilitate cross-view geo-localization. IEEE Trans. Circuits Syst. Video Technol. 32, 867–879 (2021).

Lin, J. et al. Joint representation learning and keypoint detection for cross-view geo-localization. IEEE Trans. Image Process. 31, 3780–3792 (2022).

Li, H., Chen, Q., Yang, Z. & Yin, J. Drone satellite matching based on multi-scale local pattern network. In Proceedings of the 2023 Workshop on UAVs in Multimedia: Capturing the World from a New Perspective. 51–55 (2023).

Vaswani, A. et al. Attention is all you need. Adv. Neural Inf. Process. Syst. 30 (2017).

Peng, J., Wang, H., Xu, F. & Fu, X. Cross domain knowledge learning with dual-branch adversarial network for vehicle re-identification. Neurocomputing 401, 133–144 (2020).

Zhuang, J. et al. A semantic guidance and transformer-based matching method for UAVs and satellite images for UAV geo-localization. IEEE Access 10, 34277–34287 (2022).

Kuma, R., Weill, E., Aghdasi, F. & Sriram, P. Vehicle re-identification: An efficient baseline using triplet embedding. In 2019 International Joint Conference on Neural Networks (IJCNN). 1–9 (IEEE, 2019).

Tian, Y., Chen, C. & Shah, M. Cross-view image matching for geo-localization in urban environments. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 3608–3616 (2017).

Lin, T.-Y., Cui, Y., Belongie, S. & Hays, J. Learning deep representations for ground-to-aerial geolocalization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 5007–5015 (2015).

Workman, S., Souvenir, R. & Jacobs, N. Wide-area image geolocalization with aerial reference imagery. In Proceedings of the IEEE International Conference on Computer Vision. 3961–3969 (2015).

Liu, L. & Li, H. Lending orientation to neural networks for cross-view geo-localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 5624–5633 (2019).

Zhu, S., Yang, T. & Chen, C. VIGOR: Cross-view image geo-localization beyond one-to-one retrieval. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 3640–3649 (2021).

Sun, Y. et al. Cross-view object geo-localization in a local region with satellite imagery. IEEE Trans. Geosci. Remote Sens. (2023).

Zhu, R. et al. SUES-200: A multi-height multi-scene cross-view image benchmark across drone and satellite. IEEE Trans. Circuits Syst. Video Technol. 33, 4825–4839 (2023).

Workman, S. & Jacobs, N. On the location dependence of convolutional neural network features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. 70–78 (2015).

Shi, Y., Liu, L., Yu, X. & Li, H. Spatial-aware feature aggregation for image based cross-view geo-localization. Adv. Neural Inf. Process. Syst. 32 (2019).

Shen, F., Shu, X., Du, X. & Tang, J. Pedestrian-specific bipartite-aware similarity learning for text-based person retrieval. In Proceedings of the 31st ACM International Conference on Multimedia (2023).

Shen, F., Du, X., Zhang, L. & Tang, J. Triplet contrastive learning for unsupervised vehicle re-identification. arXiv preprint arXiv:2301.09498 (2023).

Shen, F., Xie, Y., Zhu, J., Zhu, X. & Zeng, H. GiT: Graph interactive transformer for vehicle re-identification. IEEE Trans. Image Process. (2023).

Sun, Y., Zheng, L., Yang, Y., Tian, Q. & Wang, S. Beyond part models: Person retrieval with refined part pooling (and a strong convolutional baseline). In Proceedings of the European Conference on Computer Vision (ECCV). 480–496 (2018).

Wang, G., Yuan, Y., Chen, X., Li, J. & Zhou, X. Learning discriminative features with multiple granularities for person re-identification. In Proceedings of the 26th ACM International Conference on Multimedia. 274–282 (2018).

Zhang, X. et al. AlignedReID: Surpassing human-level performance in person re-identification. arXiv preprint arXiv:1711.08184 (2017).

Luo, H. et al. AlignedReID++: Dynamically matching local information for person re-identification. Pattern Recognit. 94, 53–61 (2019).

Zhuang, J., Dai, M., Chen, X. & Zheng, E. A faster and more effective cross-view matching method of UAV and satellite images for UAV geolocalization. Remote Sens. 13, 3979 (2021).

Shen, F. & Tang, J. IMAGPose: A unified conditional framework for pose-guided person generation. Adv. Neural Inf. Process. Syst. 37 (2024).

Shen, F. et al. IMAGDressing-v1: Customizable virtual dressing. arXiv preprint arXiv:2407.12705 (2024).

Shen, F. et al. Advancing pose-guided image synthesis with progressive conditional diffusion models. arXiv preprint arXiv:2310.06313 (2023).

Shen, F. et al. Boosting consistency in story visualization with rich-contextual conditional diffusion models. arXiv preprint arXiv:2407.02482 (2024).

Shen, F. et al. Long-term talkingface generation via motion-prior conditional diffusion model. arXiv preprint arXiv:2502.09533 (2025).

Liu, Z. et al. A ConvNet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 11976–11986 (2022).

Dou, Z.-Y. et al. An empirical study of training end-to-end vision-and-language transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 18166–18176 (2022).

Hendricks, L. A., Mellor, J., Schneider, R., Alayrac, J.-B. & Nematzadeh, A. Decoupling the role of data, attention, and losses in multimodal transformers. Trans. Assoc. Comput. Linguist. 9, 570–585 (2021).

Zeng, W., Wang, T., Cao, J., Wang, J. & Zeng, H. Clustering-guided pairwise metric triplet loss for person reidentification. IEEE Internet Things J. 9, 15150–15160 (2022).

Chen, K., Lei, W., Zhao, S., Zheng, W.-S. & Wang, R. PCCT: Progressive class-center triplet loss for imbalanced medical image classification. IEEE J. Biomed. Health Inform. 27, 2026–2036 (2023).

Wang, T. et al. Learning cross-view geo-localization embeddings via dynamic weighted decorrelation regularization. arXiv preprint arXiv:2211.05296 (2022).

Wang, T. et al. Multiple-environment self-adaptive network for aerial-view geo-localization. Pattern Recognit. 152, 110363 (2024).

Zhu, Y., Yang, H., Lu, Y. & Huang, Q. Simple, effective and general: A new backbone for cross-view image geo-localization. arXiv preprint arXiv:2302.01572 (2023).

Hu, Q., Li, W., Xu, X., Liu, N. & Wang, L. Learning discriminative representations via variational self-distillation for cross-view geo-localization. Comput. Electr. Eng. 103, 108335 (2022).

Tian, X., Shao, J., Ouyang, D. & Shen, H. T. UAV-satellite view synthesis for cross-view geo-localization. IEEE Trans. Circuits Syst. Video Technol. 32, 4804–4815 (2021).

Bui, D. V., Kubo, M. & Sato, H. A part-aware attention neural network for cross-view geo-localization between UAV and satellite. J. Robot. Netw. Artif. Life 9, 275–284 (2022).

Zhu, R., Yang, M., Yin, L., Wu, F. & Yang, Y. UAV’s status is worth considering: A fusion representations matching method for geo-localization. Sensors 23, 720 (2023).

Shen, T., Wei, Y., Kang, L., Wan, S. & Yang, Y.-H. MCCG: A ConvNeXt-based multiple-classifier method for cross-view geo-localization. IEEE Trans. Circuits Syst. Video Technol. (2023).

He, K., Zhang, X., Ren, S. & Sun, J. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the IEEE International Conference on Computer Vision. 1026–1034 (2015).

Ding, L., Zhou, J., Meng, L. & Long, Z. A practical cross-view image matching method between UAV and satellite for UAV-based geo-localization. Remote Sens. 13, 47 (2020).

Liu, Z. et al. Swin transformer v2: Scaling up capacity and resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 12009–12019 (2022).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 770–778 (2016).

Wang, D., Gao, L., Qu, Y., Sun, X. & Liao, W. Frequency-to-spectrum mapping GAN for semisupervised hyperspectral anomaly detection. CAAI Trans. Intell. Technol. 8, 1258–1273 (2023).

Zhang, W. et al. GACNet: Generate adversarial-driven cross-aware network for hyperspectral wheat variety identification. IEEE Trans. Geosci. Remote Sens. 62, 1–14 (2023).

Zhang, T. et al. FFN: Fountain fusion net for arbitrary-oriented object detection. IEEE Trans. Geosci. Remote Sens. 61, 1–13 (2023).

Wang, C. et al. MLFFusion: Multi-level feature fusion network with region illumination retention for infrared and visible image fusion. Infrared Phys. Technol. 134, 104916 (2023).

Lu, Y. et al. Cross-modality person re-identification with shared-specific feature transfer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 13379–13389 (2020).

Huang, N., Xing, B., Zhang, Q., Han, J. & Huang, J. Co-segmentation assisted cross-modality person re-identification. Inf. Fusion 104, 102194 (2024).

Funding

This research is partially funded by the Major Program of the National Natural Science Foundation of China (Nos. 12292980, 12292984, NSFC12031016 and NSFC12426529), the National Key R&D Program of China (2023YFA1009000, 2023YFA1009004, 2020YFA0712203 and 2020YFA0712201), the Beijing Natural Science Foundation (BNSF-Z210003) and the Department of Science, Technology and Information of the Ministry of Education (No. 8091B042240).

Author information

Contributions

Conceptualization, Xu Jin; Formal analysis, Xu Jin; Investigation, Xu Jin; Methodology, Xu Jin; Software, Xu Jin and Gao Tianyan; Validation, Xu Jin; Writing – original draft, Xu Jin; Writing – review & editing, Yin Junping and Zhang Juan. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Jin, X., Junping, Y., Juan, Z. et al. Enhancing cross view geo localization through global local quadrant interaction network. Sci Rep 15, 33431 (2025). https://doi.org/10.1038/s41598-025-18935-6

DOI: https://doi.org/10.1038/s41598-025-18935-6