Abstract

With the increasing complexity and automation of port logistics systems, ensuring the operational safety and real-time perception capability of ship unloaders has become a pressing issue in intelligent port equipment research. This study proposes a machine vision-based method for dynamic 3D coordinate system construction to optimize safety during unloader operations. A comprehensive system integrating RGB-D cameras, LiDAR sensors, IMU units, and SLAM algorithms is designed to achieve real-time 3D mapping and risk perception. The point cloud environment is enhanced through Voronoi-based grab bucket skeleton recognition and trajectory prediction. Experimental validation was conducted in laboratory and actual port environments. The 3D mapping module achieved a maximum root mean square error (RMSE) of 6.1 cm and a real-time frame rate of 14 FPS under night conditions. Collision prediction accuracy reached 91.2% with an average response time of 1.4 s. Over a 7-day continuous test, only 3 false alarms were recorded out of 68 warning events, confirming the system’s robustness and reliability. The results demonstrate that the proposed method significantly enhances spatial awareness and operational safety of unloaders, offering potential for broader applications in large-scale port equipment automation.

Similar content being viewed by others

Introduction

With the increasing complexity of port logistics, automation and safety in handling equipment have become critical challenges in both research and engineering applications1,2,3. As the core machinery for bulk cargo handling, the unloader performs essential tasks such as grabbing, transferring, and placing heavy materials. However, it often operates in open and unstructured environments with strong lighting variation, dense dust, and irregular movement patterns4,5. These conditions create significant risks for both equipment and personnel, raising operational costs and compromising efficiency.

Traditional safety monitoring methods mainly rely on three approaches6,7,8: physical devices such as limiters and laser sensors to prevent overtravel and collisions, PLC-based control logic to constrain movement, and manual video surveillance by dispatchers. While useful, these methods are inherently limited. For instance, Zhang et al.9 developed a real-time monitoring system combining multi-source sensing and finite element analysis, but such systems struggle with adaptability in fast-changing environments. Kara et al.10 applied fuzzy analytical hierarchy methods for risk assessment, yet these remain qualitative and lack real-time responsiveness. Chen et al.11 explored a vision-based system for gantry cranes, demonstrating accurate target tracking, but vision-only methods are vulnerable to occlusion and poor visibility. Overall, existing approaches cannot robustly handle dynamic obstacles or maintain perception stability under challenging port conditions12,13.

Recent advances in artificial intelligence and machine vision have opened new opportunities for smart ports14,15,16. For example, Shen et al.17 proposed a monocular vision system to locate unloader buckets, reducing dependence on costly LiDAR, while Wang et al.18 used CNNs and GANs to generate a 3D coordinate system, showing promising accuracy in simulations. Sunwoo Hwang et al.19 improved object detection by combining YOLO and Mask R-CNN with fuzzy clustering, achieving high recognition accuracy at real-time speeds. In parallel, Visual SLAM and point cloud reconstruction techniques have enabled non-contact 3D perception and rich spatial awareness20,21. However, most of these methods remain isolated, either focusing on vision-only perception or simulation-based demonstrations, with limited integration into dynamic unloader operations. Key gaps persist: maintaining spatial consistency under structural changes, detecting obstacles in real time, and transforming perception into actionable safety warnings.

To address these challenges, this paper proposes a machine vision–based framework for dynamic 3D coordinate system construction and unloader safety optimization. By fusing multi-view vision and IMU data with LiDAR point clouds through a sliding window nonlinear optimization method, the system reduces drift, maintains spatiotemporal consistency, and improves adaptability in disturbed environments. The main contributions are as follows:

-

Construction of a stable, dynamic 3D coordinate system adapted to structural changes;

-

Real-time 3D reconstruction of the unloader’s workspace;

-

Collision risk detection and a safety warning mechanism;

-

Experimental validation in real port environments with high accuracy and low false alarms.

In this study, “optimization” refers to systematically improving unloader safety and operational performance under defined objectives and constraints. The objective is to minimize collision risk and mapping error while maximizing real-time performance; the constraints include mechanical movement limits, environmental variability such as dust and lighting, and computational capacity of embedded hardware. The solution is realized by integrating LiDAR-based point cloud reconstruction, visual SLAM, and Voronoi skeleton extraction into a unified framework. This definition provides a clear foundation for the technical developments and evaluations presented in the following sections. Compared with existing approaches, our method addresses key weaknesses. IMU-only or visual odometry systems suffer from drift and lose consistency under structural changes or occlusions. Vision-based methods are sensitive to lighting, dust, and obstruction, while LiDAR-only frameworks, though geometrically accurate, lack semantic depth for risk prediction. Our approach fuses LiDAR, multi-view vision, and IMU data through sliding window nonlinear optimization, reducing drift, improving robustness, and enabling more accurate real-time obstacle detection with fewer false alarms.

System approach

To ensure reliable object localization and environment perception in complex port scenarios, this study adopts LiDAR-based point cloud data rather than relying solely on RGB or thermal imaging. RGB cameras, though capable of capturing rich texture, are highly susceptible to environmental noise such as variable lighting, heavy dust, and occlusion—common challenges in crane operations. Thermal imaging, while useful for detecting temperature-differentiated targets, lacks the spatial resolution and structural detail required for accurate 3D modeling and collision risk prediction. In contrast, LiDAR provides dense and lighting-independent point cloud data with sub-centimeter accuracy, enabling precise spatial structure acquisition, robust object contour mapping, and real-time depth perception. These capabilities are especially critical for capturing the dynamic geometry of grab buckets, material piles, and hull edges. Furthermore, LiDAR exhibits strong resilience to nighttime conditions, rapid mechanical movement, and interference from multiple obstacles, making it a robust and indispensable sensing modality for unstructured and highly dynamic port environments.

LiDAR-based intelligent environmental sensing system

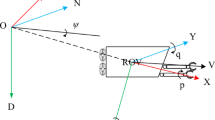

A mechanical surround-type LiDAR is selected as the environmental sensing device to scan the grab crane’s operating environment and ship cargo. It offers the operator intuitive spatial awareness and reliable data support for efficient path planning. This type of LiDAR provides 360° panoramic coverage by rotating at high speed through mechanical components such as rotating platforms or lenses. It features high spatial resolution, enabling accurate detection of both large structures and small objects across wide operating areas—an essential capability for port unloading scenarios22,23,24. Structurally, the system comprises three main modules: a transmission module that emits laser beams, a scanning module that directs the beams, and a receiving module that captures reflected pulses. The distance to objects is calculated by measuring the time delay between laser emission and return. The working principle is illustrated in Fig. 1.

Figure 1 illustrates the working principle of LiDAR distance measurement. A laser source emits pulses that are directed by the optical system and scanning mechanism toward the object. The reflected signals are collected by the optical and electrical systems, then amplified and processed. By measuring the time delay between emission and detection, the system calculates the distance to the object, enabling accurate 3D spatial mapping.

To perceive the grab crane’s operational environment, the LiDAR device is mounted in a fixed position to ensure proper alignment with the material pile. Prior to scanning, calibration is performed by adjusting the device’s horizontal and vertical orientation, ensuring accurate positioning relative to the cargo in the ship’s hold.

Global calibration involves four coordinate systems reflecting the degrees of freedom of the unloader’s movement: the stationary track coordinate system \(O_{{{\text{rail}}}} - X_{{{\text{rail}}}} Y_{{{\text{rail}}}} Z_{{{\text{rail}}}}\), which also serves as the yard reference frame; the yaw coordinate system \(O_{{{\text{yaw}}}} - X_{{{\text{yaw}}}} Y_{{{\text{yaw}}}} Z_{{{\text{yaw}}}}\); the pitch coordinate system \(O_{{{\text{pitch}}}} - X_{{{\text{pitch}}}} Y_{{{\text{pitch}}}} Z_{{{\text{pitch}}}}\); and the LiDAR’s intrinsic coordinate system \(O_{{{\text{laser}}}} - X_{{{\text{laser}}}} Y_{{{\text{laser}}}} Z_{{{\text{laser}}}}\). Except for the track system, all coordinate frames shift dynamically during operations.

Once activated, the LiDAR emits laser pulses, capturing the time delay of reflected signals to compute spatial distances and generate 3D positional data for the material pile. The resulting point cloud undergoes outlier removal, noise filtering, and error correction. Multiple scans are then fused to reconstruct a comprehensive point cloud of the pile and surrounding environment. This visual representation supports real-time situational awareness, helping operators detect obstacles and operational hazards more intuitively (see Fig. 2)25,26.

Voronoi-based grab skeleton recognition algorithm

Voronoi diagrams, also known as Thiessen polygons or Dirichlet diagrams, are composed of continuous polygons formed by vertical or bisecting curves connecting adjacent points. Characteristics of Voronoi diagrams: A fundamental generator in the Voronoi diagram is a point inside a V-shaped polygon, where the distance from this point to the generator is shorter than to any other generator27; the inner endpoints on the polygon’s edges are equidistant from the generator that created that edge; the Voronoi boundary line between adjacent diagrams takes the subset of the original adjacent boundary28.

The most critical part of the Voronoi algorithm is constructing the Delaunay triangulation. A triangle is automatically constructed from a discrete set of points, forming the Delaunay triangulation. The discrete points and the triangles they form are numbered, and the triangles made by three discrete points are recorded. Once the traversal process is complete, all Voronoi edges are identified, and a Voronoi diagram is drawn based on these edges. The specific process is shown in Fig. 3.

Figure 3 illustrates the visual recognition process of the Voronoi algorithm. The procedure begins with generating a Delaunay triangulation and calculating the circumcenters of each triangle. Neighboring triangles are then searched and, if found, the shared edge is recorded as a Voronoi edge. If no neighboring triangle exists, the outer bisector line is recorded. This iterative process produces the Voronoi diagram, which forms the basis for skeleton extraction and path recognition. The key to constructing a Voronoi diagram is the generation of the Delaunay triangulation. The characteristics of the Delaunay triangulation29,30 are:

① Empty circle property; ② Closest proximity; ③ Uniqueness; ④ Optimality; ⑤ Regularity; ⑥ Locality; ⑦ The convex polygon shell.

Delaunay triangulation is a standard form of triangular division, and there are several algorithms to achieve it. In this case, the Bowyer-Watson algorithm is used. The basic steps of the algorithm are:

-

(1)

Construct a super triangle that contains all the scattered points and place it into a triangle list;

-

(2)

Insert the scattered points of the point set in sequence, find the triangle in the triangle list whose circumcircle contains the inserted point (called the influencing triangle for that point), delete the common edges of the influencing triangle, and connect the inserted point with all the vertices of the influencing triangle to complete the insertion of a point into the Delaunay triangle list;

-

(3)

Perform comprehensive optimization on the locally newly formed triangles based on optimization criteria, and place the newly formed triangles into the Delaunay triangle list31.

Repeat step 2 above until all the scattered points are inserted, as shown in Fig. 4. The Bowyer–Watson algorithm was adopted for Delaunay triangulation due to its robustness, topological consistency, and computational efficiency when processing unstructured 2D spatial data derived from LiDAR point clouds. The algorithm operates based on an incremental construction strategy within an enclosing super-triangle, repeatedly removing triangles whose circumcircles contain the new point, and retriangulating the resulting cavity. This process guarantees that the resulting mesh satisfies the empty circumcircle property fundamental to Delaunay triangulation, ensuring non-overlapping triangles with maximized minimum angles—an essential feature for preserving geometric fidelity in LiDAR-derived environments. Compared to the incremental insertion method, Bowyer–Watson offers improved clarity in conflict zone identification and better cache coherence, especially beneficial when handling millions of spatial points. Furthermore, while the divide-and-conquer approach achieves optimal asymptotic complexity \((O(n\log n))\), its recursive structure introduces significant overhead in real-time or streaming scenarios, and its implementation becomes less tractable in non-uniform point distributions often observed in port unloading scenes.

The Bowyer–Watson method, in contrast, supports dynamic point set updates with localized triangulation repair, making it more adaptive and scalable. This local adaptability is particularly advantageous in safety–critical environments like port unloading, where moving machinery and cargo generate frequent updates in the point cloud. Thus, the Bowyer–Watson algorithm offers a theoretically sound and practically efficient foundation for Delaunay-based Voronoi diagram generation under real-world operational constraints.

The technical solution for grab skeleton machine vision in the fully automated unloader system is built upon a grid map generated via SLAM software, which is passed to a global planner. This planner applies search algorithms—such as A*, dynamic A*, the framework method, and the jump point method—under specific constraints to derive a smooth and visually efficient path structure. The resulting Voronoi skeleton map offers strong edge-pruning capabilities and supports robust global path planning. For deeper recognition, the algorithm identifies the main framework of the grab skeleton by selecting N nodes counterclockwise or by defining N side edges. Based on Voronoi rules, the system generates the skeleton and guiding points through a multi-step process: first, LiDAR and software collaboratively map the environment and define the initial skeleton shape. Then, Voronoi points are extracted, filtered by proximity to obstacles, and traversed to identify and connect valid intersection points. Guiding points are established from the spatial relationship between intersection lines and obstacles, and this information is exported and compared with the pre-processed grid map. If a potential collision is detected, immediate braking is triggered; otherwise, the system loops back for continuous scanning. Each full cycle takes approximately 15 ms (1/60 of a second), enabling real-time safety assurance and high-efficiency operation. To further enhance algorithmic transparency, pseudocode is provided to illustrate the core logic: a 2D projection of the point cloud is computed, followed by Delaunay triangulation and Voronoi diagram generation, forming the foundation for dynamic skeleton extraction and real-time monitoring. The skeleton is obtained by filtering Voronoi edges that pass through obstacle-free areas. Finally, redundant branches are pruned based on connectivity and angle heuristics to retain the main path, as shown in Table1. To improve clarity, the Voronoi-based skeleton extraction is described step by step, with each stage linked to its function in the system. First, the 2D projection of the point cloud is computed and transformed into a Delaunay triangulation, which provides the geometric foundation for Voronoi generation. Second, Voronoi edges are derived from the triangulation to represent potential medial axes. Third, edges intersecting obstacles are removed so that only safe candidate paths remain. Fourth, redundant or noisy branches are pruned, leaving a simplified skeleton that highlights the main structural paths of the grab and its environment. Finally, the extracted skeleton is directly fed into the global planner for collision detection and safety warning. This sequence ensures that each algorithmic step serves a specific purpose, from geometric construction to real-time safety decision-making.

3D mapping module design

To achieve real-time spatial perception of the unloader’s operational area, this paper constructs a 3D mapping module based on Visual SLAM. The system utilizes multiple RGB-D cameras placed on the grab, trolley, and track structure to capture continuous image frames and depth information. The ORB-SLAM2 framework is used to perform keyframe extraction, feature point matching, and map optimization. After feature points are extracted from the image frames by the visual frontend, they are matched with historical keyframes to calculate pose transformations, forming a continuous trajectory that is then reprojected into 3D space to generate a dense point cloud. The system then combines IMU information for tightly coupled optimization, effectively eliminating trajectory drift caused by mechanical vibrations and lighting changes. The generated point cloud map is mapped in real-time to the unloader’s operation interface, enabling dynamic modeling of structures such as the grab, material hopper, and ship’s hold32. This module supports heterogeneous sensor fusion and can be extended to incorporate LiDAR data for calibration and correction, ultimately outputting high-precision 3D mapping results in a unified coordinate system. These results provide spatial support for subsequent collision prediction and task scheduling, as shown in Fig. 5. In this module, optimization refers to the joint refinement of camera poses, IMU states, and point cloud alignment within a unified factor graph framework. The goal is to minimize reprojection errors of visual landmarks and integration errors of inertial measurements, thereby reducing trajectory drift and ensuring spatial consistency of the reconstructed environment. This process directly improves the accuracy of 3D mapping and provides a stable coordinate reference for subsequent collision detection and task scheduling.

To effectively integrate multi-source information such as RGB-D data, inertial measurements, and visual keyframes, the back-end of the system employs a nonlinear optimization model based on a factor graph representation. The objective is to minimize the following cost function:

where, X is the set of all state variables including camera poses, velocities, and IMU biases; \({\mathbf{r}}_{{{\text{reproj}}}}^{(i)}\) denotes the reprojection residual of visual landmark iii, computed as the difference between observed pixel coordinates and those projected from estimated 3D landmarks; \({\mathbf{r}}_{{{\text{imu}}}}^{(j)}\) represents the IMU residual computed via pre-integration between two frames j and j + 1; \({\Sigma }_{r}\), \({\Sigma }_{i}\) are the associated covariance matrices (information matrices) that weight the confidence of each measurement type. The optimization is solved iteratively using the Levenberg–Marquardt algorithm, within a sliding window of recent keyframes to maintain computational efficiency.

Experiments and results analysis

The LiDAR calibration diagram illustrates the intrinsic and extrinsic calibration procedure involving geometric alignment with known reference markers. The Voronoi skeleton extraction diagram presents a step-by-step representation of spatial segmentation, medial axis extraction, and topology simplification, as shown in Fig. 6.

Evaluation of 3D mapping performance

The use of point cloud data in this study is essential for capturing accurate spatial and geometric information of the unloading environment, which cannot be reliably obtained through conventional RGB or night vision cameras. Unlike 2D imaging, point cloud data provides dense three-dimensional measurements that are critical for modeling the structure of the grab crane, its surroundings, and dynamic obstacles with high precision. This spatial fidelity enables real-time depth estimation, volumetric analysis, and trajectory planning for the unloading process. Moreover, in dusty or low-light port environments where RGB imaging may suffer from occlusion or poor visibility, LiDAR-generated point clouds remain robust and unaffected by lighting conditions. These advantages justify the adoption of 3D mapping techniques for ensuring operational safety and automation reliability. To validate the feasibility and effectiveness of the machine vision-based dynamic 3D coordinate system construction method in the unloader operation process, typical experimental platforms were set up in both laboratory and actual port environments. Data collection and system operation tests were conducted in multiple scenarios. The experimental environments include three operational conditions: model laboratory, daytime operations at the port, and nighttime operations at the port. These cover typical conditions ranging from ideal controlled environments to complex dynamic field conditions, ensuring that the experimental results are representative and generalizable.

In terms of hardware configuration, the core of the 3D mapping system adopts a dual-vision sensor structure that combines the Intel RealSense D455 RGB-D camera and the Livox Mid-70 LiDAR. The former is responsible for capturing image and depth information, while the latter provides high-density point cloud support. Additionally, to further improve the robustness of pose estimation, the system integrates an Xsens MTi-30 IMU unit, which provides acceleration and angular velocity data for auxiliary optimization. These devices are installed at key structural locations of the unloader, including the grab beam, pitch platform, and the main frame of the trolley, and are fixed using aluminum alloy mounting brackets to ensure stability of the viewpoint and consistency of the coordinate system. In the port environment (Fig. 7), to avoid the impact of day-night lighting differences on recognition performance, the RGB image channel is equipped with an automatic exposure and median filtering enhancement module.

In terms of data collection, the system continuously acquires raw image and IMU data at a frequency of 20 frames per second and uses the ROS platform to perform timestamp alignment and buffering, ensuring consistency in the input for subsequent visual SLAM processing. During the experiment, the system automatically records the grab’s motion trajectory, changes in the grabbing position, and environmental point cloud data, while using GNSS and LiDAR as external reference sources to evaluate the accuracy of the mapping trajectory. A total of over 4 h of sample data was collected, covering real-world factors such as day-night lighting changes, wind speed interference, and non-uniform trolley movement, providing abundant data support for subsequent 3D mapping and safety performance analysis. To visually demonstrate the system’s 3D mapping performance in a typical port operational environment, Fig. 8 presents a spatial point cloud image obtained through the fusion of LiDAR and RGB-D camera sensing. The various structural areas in the image are presented with different color distributions based on distance information. The boundaries of the grab, trolley track, and material hopper are accurately reconstructed, with the boundary of the ship’s hold clearly reflected in the red region, verifying the system’s spatial recognition ability in complex industrial scenarios. The white rectangular box further highlights the grab operation target area, facilitating precise constraints for subsequent collision detection and path planning modules, as shown in Fig. 8.

To improve the scientific rigor of the system evaluation, this paper uses the RMSE (Root Mean Square Error) metric to quantify the mapping accuracy, while the frame rate (FPS) is collected to measure the system’s real-time performance33. The evaluation results for the three experimental scenarios are shown in Table 2.

From Table 2, it can be seen that the system performs most stably in the model laboratory environment, with the mapping error controlled within 2.8 cm and a frame rate of up to 20 FPS. Under the daytime conditions at the port, the system maintains good stability, with the mapping error slightly increasing to 4.3 cm. However, in the nighttime environment with insufficient lighting and increased background interference, the RMSE rises to 6.1 cm, but it remains within the allowable range of the system. To further evaluate the feasibility of real-time deployment on embedded platforms, a detailed system performance analysis was conducted. In addition to RMSE and FPS, we measured the processing latency breakdown, memory usage, and CPU/GPU computational load on an NVIDIA Jetson Xavier NX. As shown in Table 3, the total processing time per frame is approximately 86.2 ms, with LiDAR point cloud pre-processing consuming 24.7 ms, coordinate system transformation 15.4 ms, Voronoi diagram construction 19.1 ms, and visualization output 27.0 ms. The system occupies approximately 1.3 GB of memory during operation and maintains an average CPU utilization of 48.7% and GPU utilization of 38.4%, indicating sufficient headroom for real-time performance.

Furthermore, to visually demonstrate the system’s mapping performance in different environments, Fig. 9 presents a bar comparison chart of the mapping error RMSE and frame rate FPS. It can be observed that although complex environments cause some performance fluctuations, the overall mapping accuracy and real-time performance still exhibit strong adaptability.

Figure 9 presents the comparison of mapping error RMSE and frame rate FPS across three scenarios: laboratory, port daytime, and port nighttime. The results indicate that the laboratory condition achieves the lowest RMSE of about 2.8 cm with a frame rate close to 20 FPS, showing the most stable performance. Under port daytime conditions, the RMSE increases to approximately 4.3 cm while the frame rate remains around 17 FPS, reflecting good adaptability in real environments. During nighttime operations, the RMSE rises to about 6.1 cm and the frame rate drops to nearly 14 FPS, which demonstrates weaker performance due to lighting and environmental interference. The combined results confirm that the system maintains acceptable accuracy and real-time capability across varying scenarios, ensuring reliable slope stability and vertical displacement monitoring.

Safety detection performance metrics

During the operation of the unloader’s grab, factors such as the large operating radius, irregular material pile shapes, and frequent spatial obstructions result in a high risk of collision and a probability of operational errors. Therefore, to verify the perception ability of the proposed 3D vision system in detecting collision risks for key structures and its system response efficiency during actual operation, this paper conducted multiple rounds of safety detection experiments under typical unloading conditions to assess the system’s performance in collision prediction, false alarm control, and response latency. The safety detection module primarily relies on point cloud space analysis and grab motion trajectory prediction algorithms. By capturing the relative positional relationship between the grab and the surrounding environmental model in real-time, potential contact risks are identified in advance, and early warning signals are sent to the operational system. In the experimental tests, 82 actual grab motion trajectories were recorded, including those involving close interactions with the edges of material hoppers, ship hull panels, and other structures. To further validate the practical performance of the proposed safety detection module in unloading operations, this study visually demonstrated the dynamic spatial relationship between the grab’s path and the ship’s structure through 3D point cloud mapping and trajectory tracking experiments of typical grab operation scenarios. During the tests, the system recorded the grab’s motion trajectory in real-time and, combined with the actual unloading environment at the port, achieved intelligent recognition of potential collision risks with the ship’s boundaries and cargo hold contours. Figure 10 shows the visualization results of two typical test samples, comparing error paths with safe paths from dimensions such as spatial structure, trajectory shape, and relative positioning.

As shown in Fig. 10, the point cloud map accurately reconstructs the boundaries of the cargo hold, the hull panels, and the grab’s suspension structure at the unloading site. The red curve indicates the grab’s actual motion trajectory during a specific operation, which exhibits a clear tendency to deviate toward the left boundary of the cargo hold. As this segment overlaps increasingly with the boundary point cloud, the system identifies it as a high-risk zone and promptly issues an early warning signal, thereby preventing a potential collision. In contrast, the green curve represents the system-recommended safe trajectory, and the red curve reflects the originally planned path by the operator. Comparative analysis reveals that the original path poses significant proximity risks, especially during the final descent and near the right rear boundary, whereas the system-generated path effectively maintains a minimum safe distance from structural boundaries while ensuring efficient unloading operations. The point cloud visualization of the grab’s posture and movement not only enhances situational awareness but also provides a reliable foundation for refining safety strategies. Additionally, the system’s collision detection results were rigorously validated against manual judgments, achieving a high prediction accuracy of 91.2%. The false alarm rate was limited to 4.7%, ensuring minimal disruption to regular operations, and the average early warning response time was 1.4 s—sufficient for executing obstacle avoidance or emergency stopping within operational constraints. Figure 11 illustrates a comparative bar chart summarizing the three core safety metrics, clearly demonstrating that the system sustains over 90% prediction accuracy while keeping both the false alarm rate and response latency within acceptable engineering thresholds, thus confirming its robust risk perception performance under multi-source data fusion conditions.

To visually demonstrate the system’s spatial risk recognition performance under specific operating conditions, Fig. 12 shows a 3D point cloud image of the scenario where the grab approaches the edge of the material hold during unloading. In the image, the grab structure and the boundary wall outline are clearly visible. Through dynamic envelope analysis and trajectory fitting, the system successfully identifies the area where the grab is about to make contact and provides an early warning signal in advance. This highlights the system’s strong structural recognition and temporal judgment capabilities in a multi-target spatial environment.

System operational stability analysis

To further validate the stability and anti-interference capability of the proposed system during long-term operation, a continuous 7-day operational test was conducted in the actual port working environment. The core performance indicators of the system were recorded and analyzed for fluctuations under different time periods and operating conditions. During the test, the unloader operated for an average of 8 h per day, with the system running continuously online. The built-in log module automatically recorded key parameters such as mapping frame rate, error drift, warning frequency, and visual perception integrity.

Figure 13 shows the fluctuation curve of the mapping frame rate (FPS) during continuous operation. The results indicate that, despite strong sunlight during the day and insufficient lighting at night causing some interference with the visual image quality, the overall frame rate fluctuations remain within a ± 3 FPS range, demonstrating that the SLAM module has strong image adaptability and optimization capabilities. Table 4 summarizes the comparison of the total number of early warning triggers and false alarm frequencies during the entire testing period. It can be seen that, out of a total of 68 early warning events, 3 false alarms were recorded, accounting for only 4.4%. All false alarms were related to short-term occlusion misrecognition, indicating that the system still maintains a high level of stability and reliability in the context of frequent grab movements and multi-target interactions.

Discussion

The proposed machine vision-based system for dynamic 3D coordinate frame construction has demonstrated its effectiveness in spatial awareness and risk detection during ship unloader operations. Experimental data validate the system’s robust performance across various working conditions, maintaining low mapping errors and stable frame rates. The integration of visual SLAM and LiDAR point cloud data proved effective, especially in low-light nighttime environments at the port, where the system sustained a frame rate of 14 FPS and a mapping error under 6.1 cm. Although various studies have explored SLAM or LiDAR independently for localization or obstacle detection, and Voronoi-based strategies have been used for path planning, there is limited evidence in the literature of integrated frameworks combining all three specifically for unloader safety optimization. The proposed hybrid approach—fusing SLAM-based environmental mapping, LiDAR-driven spatial data acquisition, and Voronoi skeleton extraction—represents a novel direction for enhancing real-time perception and collision avoidance during grab crane operations. By explicitly integrating these modules, the system achieves both geometric precision and path-aware decision-making, which existing siloed solutions often fail to address holistically. This highlights the adaptability of the auto-exposure and filtering modules to challenging illumination changes.

From the safety monitoring perspective, the adoption of collision analysis based on dynamic envelope prediction and motion trajectory fitting allows early detection of potential contact between the grab bucket and critical structures like the cargo hold edge and hull walls. Unlike traditional threshold-based methods, this approach models spatial relationships among multiple targets across frames, effectively identifying nonlinear dynamic hazards. The experimental results showed a high collision prediction accuracy of 91.2%. Moreover, the system maintained stable performance during a seven-day deployment under real port conditions, demonstrating resilience to lighting variation, vibration, and electromagnetic interference.

Nonetheless, certain technical limitations remain. Despite the promising performance, these limitations may be further amplified under large-scale or multi-unloader operations. Specifically, point cloud sparsity caused by rapid mechanical movement can lead to incomplete spatial representation, which may hinder the accurate detection of adjacent unloaders, structural boundaries, or overlapping operational zones. Similarly, SLAM’s vulnerability to occlusion becomes more pronounced when multiple unloaders operate simultaneously within confined visual fields, increasing the risk of pose drift and coordinate frame misalignment. Moreover, the current system does not implement inter-device communication, which limits collaborative spatial awareness among multiple units. Without a shared coordinate framework or synchronized trajectory prediction, collision avoidance may become suboptimal in densely populated unloading environments. Addressing these limitations will be essential for scaling up the system towards multi-agent coordination and real-time risk management at intelligent ports. For instance, during high-speed acceleration phases of the grab bucket, localized sparsity in the point cloud can compromise edge detection accuracy. In addition, the SLAM algorithm is sensitive to occlusions, and substantial visual blockage in the operation zone can lead to pose drift and coordinate inconsistency. The system currently relies on embedded computing platforms, which restrict scalability for more complex deep neural networks such as GCNs or Transformers. While the experimental validation in both laboratory and port environments demonstrates promising results, it should be noted that the scale of trials remains limited. The reported performance indicators—such as mapping error, frame rate, and collision prediction accuracy—confirm the feasibility of the proposed approach under the tested conditions, but further large-scale deployment and extended testing across diverse operational scenarios will be necessary to fully establish its robustness and reliability. Several limitations should be acknowledged. The system shows weaker performance at night, with increased mapping errors and lower frame rates. Environmental factors such as dust and lighting changes may also affect perception stability. In addition, the cost and complexity of integrating multiple sensors may hinder large-scale deployment. Future work will focus on improving robustness, reducing cost, and validating the system in broader applications.

Future developments should focus on three key aspects: first, integrating advanced object recognition models based on Transformer or Graph Convolutional Networks to enhance multi-target recognition under complex conditions. Transformer models offer strong global context modeling capabilities, which can enhance the system’s ability to recognize overlapping or partially occluded objects in cluttered port environments. Their attention mechanisms allow the system to weigh spatial dependencies across frames, improving accuracy in identifying cargo holds, structural edges, and dynamic targets such as grab buckets. Meanwhile, Graph Convolutional Networks (GCNs) are well-suited for relational modeling among multiple moving entities, enabling improved spatial reasoning in multi-unloader coordination scenarios. However, these advanced architectures require significantly more memory and computation, which may exceed the capabilities of current embedded platforms. This trade-off necessitates the integration of lightweight model compression strategies, such as quantization or pruning, and may call for the use of dedicated edge AI chips or offloading inference tasks to centralized servers under 5G networks; Secondly, the adoption of edge computing and 5G communication modules can address the latency and bandwidth bottlenecks often encountered in multi-unloader collaborative tasks. By deploying lightweight computing nodes directly on or near the unloader, the system can perform real-time perception, collision prediction, and decision-making locally, reducing reliance on centralized servers and improving system responsiveness. The integration of 5G enables ultra-low latency data transmission and high-speed uplink/downlink throughput, allowing spatial coordinates, risk alerts, and operational status to be rapidly shared among different units. This capability is particularly critical when multiple unloaders operate in close proximity within a confined port environment, as it facilitates synchronized motion planning and conflict avoidance. Moreover, edge computing reduces data transmission costs and mitigates privacy concerns, making it more suitable for industrial-scale deployment.

Conclusion

This study presents a comprehensive framework for dynamic 3D coordinate system construction and safety optimization in ship unloader operations under complex port environments. By integrating LiDAR-based point cloud reconstruction, visual SLAM, and Voronoi skeleton recognition, the proposed system establishes a robust spatial reference adaptable to the unloader’s dynamic structure. Furthermore, a multi-modal sensing and risk prediction module is incorporated to achieve real-time collision avoidance and intelligent path planning. Experimental validations across laboratory and real port scenarios confirm the system’s strong performance, with mapping errors consistently under 6.1 cm, frame rates up to 20 FPS, and collision prediction accuracy reaching 91.2%. The system also maintained a low false alarm rate (< 5%) and short average response time (≤ 1.4 s). A seven-day field deployment further demonstrated its operational stability, with a false alarm rate limited to 4.4%. These results affirm the system’s potential for real-world applications in large-scale port machinery. Future work will focus on integrating deep learning-based multi-object detection models and digital twin simulation platforms to enhance remote coordination and safety resilience in automated unloading operations. To further improve real-world applicability, the system was designed with consideration for adverse environmental conditions such as fog or rain. Although extreme weather scenarios were not the focus of this study, future experiments will include tests under such conditions to fully evaluate sensing robustness.

Data availability

All data generated or analyzed during this study are available from the corresponding author upon reasonable request.

References

Yang, Y. et al. Research on the multi-equipment cooperative scheduling method of sea-rail automated container terminals under the loading and unloading mode. J. Mar. Sci. Eng. 11(10), 1975 (2023).

Carlan, V. et al. Automation in cargo loading/unloading processes: Do unmanned loading technologies bring benefits when both purchase and operational cost are considered?. J. Ship. Trade 8(1), 20 (2023).

Marsudi, S., Saputra, T. D., Lazaro, C. Comparative study of ship loading and unloading methods to increase productivity in large ports. Maritime Park: Journal of Maritime Technology and Society, 2025: 23–29.

Xu, Y., Wang, L., Ouyang, S., et al. Research on automatic loading and unloading system compatible with multiple materials. In: 2021 9th International Conference on Traffic and Logistic Engineering (ICTLE). IEEE, 2021: 27–30.

Toni, P. R. J., Saputra, D. P., Soekirmaan, A. Availability of loading and unloading equipment, berthing docks, loading and unloading speed on improving port operational performance through service digitalization. Dinasti International Journal of Digital Business Management (DIJDBM), 2024, 5(6).

Li, C. X. et al. Design and implementation of an automated crane system for container loading and unloading in ports. J. Drive Control 22(1), 16–26 (2025).

Chen, H. et al. Research on train loading and unloading mode and scheduling optimization in automated container terminals. J. Mar. Sci. Eng. 12(8), 1415 (2024).

Pan, X., Li, J., Qing, B., et al. An automatic loading and unloading storage control system and equipment. In: International Conference on Automation Control, Algorithm, and Intelligent Bionics (ACAIB 2024). SPIE, 2024, 13259: 154–162.

Panpan, Z., Jing, L., Chaoyu, S., et al. Design and application of online monitoring system for the grab-type ship unloader based on multi-source sensing. In: 2024 Global Reliability and Prognostics and Health Management Conference (PHM-Beijing). IEEE, 2024: 1–6.

Huang, X., Zhang, M., Sun, J., et al. Optimizing equipment configuration of handling technology in an ore terminal based on fuzzy rule. In: 2017 13th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery (ICNC-FSKD). IEEE, 2017: 1497–1504.

Chen, C., Zhang, Y., Mi, C. Vision-based measurement: the application and research in gantry cranes at bulk cargo terminals. In: 2024 International Symposium on Sensing and Instrumentation in 5G and IoT Era (ISSI). IEEE, 2024, 1: 1-6.

Hadiningrat, K. P. S. S., Asmoro, R. S. & Silalahi, V. A. J. M. Improving ship loading and unloading efficiency through optimizing cargo control room operations. Jurnal Cakrawala Akademika 1(6), 1890–1907 (2025).

Yuwono, Y., Barata, F. A., Sijabat, E. A. S., et al. The influence of loading and unloading facilities, development of port technology, and Hr quality on service user satisfaction which is mediated by service performance In Parit Rempak Port. SEIKO: Journal of Management & Business, 2024, 7(1.1).

Zhao, Y., Wang, Z., Liu, C., et al. Automatic loading and unloading control system of cable materials based on artificial immune algorithm remote sensing image recognition technology. In: 2023 2nd International Conference on 3D Immersion, Interaction and Multi-sensory Experiences (ICDIIME). IEEE, 2023: 146–149.

Luo, Y., Zhang, J. Research on the automatic loading and unloading control technology of industrial robots. In: 2024 International Seminar on Artificial Intelligence, Computer Technology and Control Engineering (ACTCE). IEEE, 2024: 163–166.

Su, C., Yuan, J. Bulk car loading and unloading machinery. In: Handbook of Port Machinery. Singapore: Springer Nature Singapore, 2024: 1631–1669.

Shen, Z., Wang, J., Zhang, Y., et al. Identification and positioning method of bulk cargo terminal unloading hopper based on monocular vision three-dimensional measurement. Journal of Marine Science & Engineering, 2024, 12(8).

Wang, L. F., Li, Q., Fu, W., et al. Enhancing automated loading and unloading of ship unloaders through dynamic 3D coordinate system with deep learning. International Journal of Computers, Communications & Control, 2024, 19(2).

Hwang, S., Park, J., Won, J., et al. Object detection for cargo unloading system based on fuzzy C means. Computers, Materials & Continua, 2022, 71(2).

Ou, H., Liu, J., Tan, Z., et al. Optimizing Production through Digitalization: A Case Study on Smart Manufacturing in Port Machinery. In: 2024 IEEE International Conference on Advanced Information, Mechanical Engineering, Robotics and Automation (AIMERA). IEEE, 2024: 343–350.

Rashidi, H., Habibi Ehsaee, F. & Parand, F. A. Integrated management of equipment in automated container terminals. Int. J. Maritime Technol. 16, 13–27 (2021).

Zhou, W., Liu, B. Research on PLC Communication Technology in Intelligent Loading Control System. In: International Conference on Intelligent Information Hiding and Multimedia Signal Processing. Singapore: Springer Nature Singapore, 2022: 37–48.

Matsumoto, T., Premachandra, C. Depth sensor application in ground unevenness estimation for UAV emergency landing[C]//2023 IEEE Sensors Applications Symposium (SAS). IEEE, 2023: 1–6.

Amarathunga, W. L. D. S. The determination of port automation levels using an analytic hierarchical process: a case study for the Port of Colombo. 2022.

Ngo, C. H. et al. Design of an AI model for a fully automatic grab-type ship unloader system. J. Mar. Sci. Eng. 12(2), 326 (2024).

Liu, P. et al. A multifunctional ship sorting and clearance robot implementation model. Acad. J. Eng. Technol. Sci. 7(2), 56–62 (2024).

Han, Y. et al. A 5G-based VR application for efficient port management. World Electric Vehicle Journal 13(6), 101 (2022).

Liu, M. et al. Intelligent monitoring method of tridimensional storage system based on deep learning. Environ. Sci. Pollut. Res. 29(46), 70464–70478 (2022).

Bashir, M. O. I. Application of artificial intelligence (AI) in dredging efficiency in Bangladesh. Ann. Emerg. Technol. Comput. 6(1), 74–88 (2022).

Huairui, T. et al. Robot’s automatic strategy for fermented grains scooping based on machine vision. Food Mach. 40(3), 110–119 (2024).

Song, L., Chen, M., Xu, Z. 5G Private Local Area Network Scenarios and Solutions[M]//5GtoB From Theory to Practice. Singapore: Springer Nature Singapore, 2024: 173–277.

Zhang, J., Song, J., Liu, J., et al. Bridge Type Grab Ship Unloaders[M]//Handbook of Port Machinery. Singapore: Springer Nature Singapore, 2024: 363–450.

Premachandra, C. & Funahashi, Y. Depth-gyro sensor-based extended face orientation estimation using deep learning[J]. IEEE Sens. J. 23(17), 20199–20206 (2023).

Funding

This research was supported by the General Program of Natural Science Foundation of Chongqing: Research on the safety of automatic loading and unloading of lifting ship unloader through dynamic construction of three-dimensional coordinate system (CSTB2023NSCQ-MSX0981), and the Science and Technology Research Program of Chongqing Education Commission: Research on key technologies of predictive maintenance of industrial robots based on operation big data (KJZD-M202203201).

Author information

Authors and Affiliations

Contributions

Lufeng Wang contributed to conceptualization, methodology design, and manuscript writing. Qu Li participated in system integration, field testing, and data validation. Qiang Zhang supported software implementation and experimental setup. Fei Jiang contributed to sensor deployment and technical debugging. Tianxing Song assisted in data processing and visualization. Guangbo Pi was responsible for hardware support and system calibration. Shijie Sun* (corresponding author) supervised the overall project, coordinated technical tasks, and reviewed and edited the final manuscript. All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, L., Li, Q., Zhang, Q. et al. Safety optimization of grab unloaders based on machine vision and 3D coordinate system reconstruction. Sci Rep 15, 36559 (2025). https://doi.org/10.1038/s41598-025-19944-1

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-19944-1