Abstract

Resting-state electroencephalography (EEG) metrics are influenced by task instructions and momentary factors such as cognitive state, complicating their use as biomarkers. We assessed how strongly three classes of resting-state EEG metrics depend on time and state: functional connectivity (FC; amplitude-envelope correlation, AECc, and phase-lag index, PLI), signal complexity (inverted joint permutation entropy, JPEINV, and permutation entropy, PE) and network topology derived from minimum spanning trees (MST). Sixty-four-channel EEG was recorded in healthy adults during two sessions six weeks apart (n = 42) and during semi-resting-state epochs embedded in a P50-gating task (n = 24). Reliability for repeated-resting recordings and resting-state versus semi-resting-state comparisons was quantified with intraclass correlation coefficients (ICC) at sensor and source level. PE showed consistently good-to-excellent reliability (ICC > 0.75–0.90). FC metrics ranged from poor to excellent, and MST metrics from poor to good. Across analyses, theta and alpha bands outperformed delta and beta bands. Alpha and theta PE and alpha PLI were the most robust, whereas MST and AECc require caution, especially outside theta and alpha bands. Our results identify theta and alpha PE and alpha PLI as robust measures suitable for biomarker development, while urging caution for MST and AECc due to their limited stability across time and state.

Introduction

Resting-state electroencephalography (EEG) measures intrinsic brain activity in the absence of explicit tasks or stimuli1. While resting-state EEG can be characterized by various aspects of brain function, from local oscillations to network-level organization2, its utility as a biomarker depends on measurement reliability. Subject heterogeneity, varying cognitive states and differences in measurement instructions can influence resting-state EEG recordings. These influences can lead to changes in amplitude, frequency distribution, oscillatory patterns and other EEG features3,4. This variability poses a challenge for biomarker development, where the goal is to reliably identify (alterations in) brain activity regardless of momentary state fluctuations. Resting-state EEG measures should demonstrate reliability across different conditions to serve as clinical biomarkers, indicating relative state independence while capturing the underlying trait5,6.

One way to assess EEG biomarker reliability is through measurement of their stability within subjects over time in repeated measures under similar conditions. Different EEG measures show varying degrees of temporal stability, which is high for power spectral characteristics, but variable for different functional connectivity (FC) measures7, where stability decreases with increasing retest intervals from hours to days8,9,10,11 to approximately 1 month12,13 or longer10,14. Among FC measures studied in EEG, phase-based measures such as phase lag index (PLI) show moderate to good reliability, while amplitude-based FC measures remain less thoroughly studied and show inconsistent reliability10,13,15. Complexity-based EEG measures have received limited attention, with one study reporting moderate reliability over a two-month period in a limited sample for several entropy measures16. Nevertheless, local signal complexity is an emerging type of EEG measure, possibly relating to balance between neuronal excitation and inhibition17. Expanding on this, an EEG marker combining local signal complexity and long-range FC is joint permutation entropy (JPE)18. Although JPE is a promising biomarker19, specifically inverted JPE (JPEINV), its temporal reliability remains unexplored in EEG.

Besides the functional interactions themselves, the networks formed by interacting brain regions can also be characterized, although this often comes with arbitrary methodological choices20. Analyzing the core of such networks using Minimum Spanning Trees (MST) offers an alternative approach to characterize brain networks that avoids such choices21. This avoidance of arbitrary thresholds potentially leads to more consistent characterizations of core network topology across different studies, potentially reducing the heterogeneity observed in the literature on network alterations in brain disorders22. However, the temporal reliability of MST characteristics remains unclear. Nevertheless, three studies using a non-MST graph theory approach report heterogeneous outcomes ranging from poor to good reliability9,14,23.

The reliability of EEG measures may be improved through source reconstruction, which can reduce volume conduction effects while more directly capturing neuronal activity3, aid interpretation of results24 and increase signal-to-noise ratios25. Additionally, source reconstruction can lead to faster stabilization of MST measures26. Evidence showing reliability of measures based on source-reconstructed EEG is limited, with heterogeneous results ranging from poor to good9,13,15. Altogether, while power spectral measures show robust temporal reliability, evidence for the reliability of FC, complexity-based, and MST measures remains limited, with source reconstruction showing mixed benefits for measurement reliability.

Apart from these technical aspects, a complementary approach to assess biomarker stability is to investigate reliability of EEG measures through comparison of task-related resting periods, or semi-resting-state EEG27, with resting-state EEG. The periods surrounding task execution are characterized by heightened attention, anticipation, and cognitive preparation, potentially altering brain activity even in the absence of stimuli28,29,30,31. Evidence suggests that oscillatory neural fingerprints remain stable across resting-state EEG and semi-resting-state EEG in the context of a motor task27, indicating that resting-state EEG characteristics can maintain reliability despite different resting conditions. The extent to which more high-order measures such as FC, complexity and network characteristics remain stable across different resting conditions is yet to be investigated.

The present study used both repeated resting-state EEG measurements and semi-resting-state EEG to evaluate the reliability of various quantitative EEG measures across both time and different resting-state contexts in healthy subjects. The semi-resting-state data consisted of EEG recorded during the rest periods of a P50 gating paradigm, which measures sensory gating, the preattentional filtering of irrelevant stimuli32. EEG offers diverse measures such as phase-, amplitude- and complexity-based measures, which fundamentally differ in the characteristics they capture3, alongside network topology methods like the widely applied Minimum Spanning Tree (MST) to characterize brain organization22. To cover these categories, we assessed phase-based (PLI), amplitude-based (corrected amplitude envelope correlation or AECc), and complexity-based (JPEINV) FC measures, alongside local signal complexity (PE) and network topology characteristics (MST), at both sensor and source level. This selection provides a diverse sample of common, relatively interpretable measures, aligning with prior applications in clinical contexts19,33,34,35. Given previous heterogeneous findings7, we hypothesized that (1) connectivity and complexity measures would demonstrate moderate reliability across both time and resting conditions and (2) MST measures would show comparable reliability, as they are based on a methodologically robust approach to network characterization.

Methods

EEG recordings from healthy adults aged 18 to 40 years were acquired as part of the Pan European Collaboration Antipsychotic-Naïve Studies (PECANS; ClinicalTrials.gov Identifier: NCT01154829)36 and the OPTIMISE study (ClinicalTrials.gov Identifier: NCT01555814)37,38. Matching inclusion criteria and site allowed for the combination of these two datasets. Exclusion criteria included a presence or history of head injury or mental health conditions, physical illness and/or first-degree relatives with mental health conditions. Data were collected according to the Declaration of Helsinki and both studies were approved by the Danish Ethical Committee. Participants signed informed consent before participating.

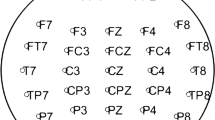

EEG recordings

EEG recordings were acquired using BioSemi hardware (BioSemi, Amsterdam, The Netherlands). A cap with 64 active electrodes in an extended 10–20 layout was used. Sampling rate was 2048 Hz. Participants were seated in a comfortable chair in a sound-insulated room with a sound level of < 40 dB. Participants were instructed not to drink caffeine-containing beverages and/or smoke cigarettes 1 h before the recording. Participants were instructed to stay awake. EEG recordings took place between 9 AM and noon. An event-related potential (ERP) battery was recorded followed by ten minutes of eyes-closed resting-state EEG, ensuring a constant setting39,40,41,42,43. The ERP battery, also known as the Copenhagen Psychophysiology Test Battery (CPTB), includes the startle reflex paradigm (PPI), P50 suppression, mismatch negativity (MMN), and selective attention paradigms. EEG recordings were made twice with a 6-week interval, allowing for quantification of temporal reliability of the resting-state EEG characteristics described below. We analyzed epochs obtained during the rest periods of a P50 gating task in addition to true resting-state EEG. In this paper, we refer to the former as semi-resting-state EEG. We chose the P50 task for this semi-resting-state analysis, as there are periods of 10 s between sets of stimuli. Participants listened to three identical blocks of 40 auditory stimuli and were instructed to focus their gaze on a fixed point. These stimuli consisted of 1.5 ms-long bursts of white noise with an interstimulus interval of 500 ms. The analyses of the P50 paradigm have previously been published39,40; in the current project we made use of the EEG data recorded in the time between the trials. Using this paradigm in addition to resting-state EEG allowed us to study the effect of different brain states affected by measurement instruction and attention level. We compared these EEG epochs with the resting-state EEG recordings, both of which were acquired on the same day. In Fig. 1, an overview of the study design is provided.

EEG preprocessing

The EEG data were preprocessed using functions from version 1.8.0 of the open-source MNE-Python package44. This implementation is openly accessible via: https://github.com/yorbenlodema/EEG-Pype. In short, we manually selected bad channels for interpolation and selected 15 artifact-free 4-s-long epochs for further quantitative analysis. See supplement S1 for a full explanation of the approach used.

EEG measures

Data were filtered in four frequency bands: delta (0.5–4 Hz), theta (4–8 Hz), alpha (8–13 Hz), and beta (13–20 Hz)2,45. We excluded frequency content above 20 Hz to reduce muscle activity45. EEG features were calculated using in-house developed software in Python, using functions from the SciPy package (available via https://github.com/yorbenlodema/EEG-Pype). The reliability of FC measures (PLI and AECc)46,47,48, MST characteristics based on these FC estimates using a maximum spanning tree approach21,49 (see supplement S1 for a full explanation), and entropy or complexity-based measures (PE and JPEINV)18,50 were studied. As a supplementary analysis, the AECc was calculated both on separate epochs and one-minute concatenated epochs, which could result in higher reliability15. See supplement S2 for a full explanation of this analysis. For the MST measures, we included both conventional quantitative measures and MST overlap. The MST overlap provides an additional way of quantifying MST reliability by looking at the overlap of the network backbone during different recordings and conditions and is similar to the approach by Govaarts et al.51. See Table 1 for an overview of the included EEG measures and their description. For more details concerning the EEG preprocessing and analysis see supplement S1.
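To make two of these measures concrete, the following is a minimal Python sketch of band-pass filtering, PLI and PE using NumPy and SciPy. It is not the EEG-Pype implementation: the filter design (fourth-order zero-phase Butterworth), the PE embedding order and delay, and all function names are assumptions chosen for illustration. PLI follows the standard definition as the absolute mean sign of the instantaneous phase difference, and PE the standard ordinal-pattern entropy normalized to the range 0 to 1.

```python
import math

import numpy as np
from scipy.signal import butter, hilbert, sosfiltfilt


def bandpass(x, fs, lo, hi, order=4):
    """Zero-phase Butterworth band-pass (filter choice is an assumption)."""
    sos = butter(order, [lo, hi], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, x, axis=-1)


def pli(x, y):
    """Phase lag index between two band-limited 1-D signals."""
    # Instantaneous phases from the analytic (Hilbert) signal.
    dphi = np.angle(hilbert(x)) - np.angle(hilbert(y))
    # Consistent sign of the phase difference gives values near 1;
    # zero-lag or symmetric phase differences give values near 0.
    return float(np.abs(np.mean(np.sign(np.sin(dphi)))))


def permutation_entropy(x, order=4, delay=1):
    """Normalized permutation entropy (order and delay are assumed values)."""
    x = np.asarray(x, dtype=float)
    n = len(x) - (order - 1) * delay
    # Each row holds one embedded vector of `order` samples.
    windows = np.stack(
        [x[i * delay : i * delay + n] for i in range(order)], axis=1
    )
    # The ordinal pattern of a vector is the rank order of its samples.
    patterns = np.argsort(windows, axis=1)
    _, counts = np.unique(patterns, axis=0, return_counts=True)
    p = counts / counts.sum()
    # Shannon entropy of the pattern distribution, scaled by log(order!).
    return float(-np.sum(p * np.log(p)) / math.log(math.factorial(order)))


# Usage on synthetic data: two channels, 4 s at an assumed 256 Hz.
fs = 256
rng = np.random.default_rng(0)
raw = rng.normal(size=(2, 4 * fs))
alpha = bandpass(raw, fs, 8.0, 13.0)
alpha_pli = pli(alpha[0], alpha[1])
alpha_pe = permutation_entropy(alpha[0])
```

In practice such values would be computed per epoch and channel (or channel pair) and then averaged, but the averaging scheme is described in supplement S1 rather than here.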

Quantification of reliability

In this paper, the term “reliability” refers to test–retest reliability. A method that is commonly used to assess reliability is the Intraclass Correlation Coefficient (ICC). This test was first introduced by Fisher52 and modern versions are often used for clinical purposes, including assessing the reliability of clinical measurements like EEG recordings53,54. Compared to Pearson’s correlation, ICC is less sensitive to bias54. We used a 2-way mixed-effects ICC model54. The level of reliability was based on the 95% confidence interval of the ICC: reliability scores lower than 0.50 were considered ‘poor’, scores from 0.50 to 0.75 ‘moderate’, scores from 0.76 to 0.90 ‘good’, and scores above 0.90 ‘excellent’54. ICC scores were calculated for all EEG measures except MST overlap. This comparison was made over time for the repeated resting-state EEG, using the resting-state and follow-up after six weeks. We also calculated the ICC scores across resting-state conditions, comparing resting-state and semi-resting-state data. To assess whether resting-state EEG is stable and relatively state-independent, we interpreted the four different possible combinations of high and low reliability together for the two comparisons. The following combinations are possible:

- High state reliability and high temporal reliability: possible biomarker.
- Low state reliability and high temporal reliability: possible biomarker, sensitive to resting-state instructions.
- High state reliability and low temporal reliability: possible cross-sectional marker, sensitive to time-variable factors.
- Low state reliability and low temporal reliability: poor reliability.
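The reliability quantification and the four-way interpretation above can be sketched in Python as follows. This is an illustration under stated assumptions, not the analysis code: the text specifies a two-way mixed-effects ICC but not its exact form, so the single-measurement consistency form, often written ICC(3,1), is assumed here. The cutoff separating "high" from "low" reliability is likewise a hypothetical parameter, and the labels are applied to a single ICC value for simplicity.

```python
import numpy as np


def icc_3_1(data):
    """ICC(3,1): two-way mixed effects, consistency, single measurement.

    `data` has shape (n_subjects, k_sessions). The exact ICC form is an
    assumption; the paper only states a two-way mixed-effects model.
    """
    data = np.asarray(data, dtype=float)
    n, k = data.shape
    grand = data.mean()
    # Two-way ANOVA sums of squares: subjects (rows), sessions (columns).
    ss_rows = k * np.sum((data.mean(axis=1) - grand) ** 2)
    ss_cols = n * np.sum((data.mean(axis=0) - grand) ** 2)
    ss_err = np.sum((data - grand) ** 2) - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err)


def reliability_label(icc):
    """Koo & Li style categories as quoted in the text."""
    if icc < 0.50:
        return "poor"
    if icc <= 0.75:
        return "moderate"
    if icc <= 0.90:
        return "good"
    return "excellent"


def interpret(icc_time, icc_state, cutoff=0.75):
    """Map the two ICCs onto the four combinations listed above.

    `cutoff` is a hypothetical threshold for 'high' reliability.
    """
    high_time, high_state = icc_time > cutoff, icc_state > cutoff
    if high_state and high_time:
        return "possible biomarker"
    if high_time:
        return "possible biomarker, sensitive to resting-state instructions"
    if high_state:
        return "possible cross-sectional marker, sensitive to time-variable factors"
    return "poor reliability"
```

Because the consistency form ignores a constant offset between sessions, a measure that shifts by the same amount in every participant would still score an ICC of 1; an absolute-agreement form would penalize such a shift.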

Results

The reliability analysis over time included 42 participants, while 24 participants were included for the reliability analysis across resting-states. The mean age of participants was 24.2 years (SD 5.4) for the group that completed the repeated resting-state measurement and 26.0 years (SD 6.5) for the group that additionally completed the semi-resting-state condition. In the resting-state group 61.9% (N = 26) were male compared to 70.8% (N = 17) in the semi-resting-state group. See supplement S3 for 95% confidence intervals of all measures based on non-parametric bootstrapping with 1000 resamples. Note that these intervals vary across the different measures and tend to be wider for the across states comparison due to the smaller sample size.
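As an illustration of how such bootstrap intervals can be obtained, the sketch below resamples participants with replacement and takes percentiles of the recomputed statistic. The percentile method, the function names and the synthetic data are assumptions; the procedure actually used is documented in supplement S3. Any reliability statistic, such as an ICC function, can be passed in. Pearson's r is used here only as a stand-in so that the example stays self-contained.

```python
import numpy as np


def bootstrap_ci(data, statistic, n_resamples=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI, resampling subjects (rows) with replacement."""
    data = np.asarray(data, dtype=float)
    rng = np.random.default_rng(seed)
    n = data.shape[0]
    stats = np.empty(n_resamples)
    for b in range(n_resamples):
        # Draw n subjects with replacement, keeping each subject's sessions paired.
        idx = rng.integers(0, n, size=n)
        stats[b] = statistic(data[idx])
    lo, hi = np.percentile(stats, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return float(lo), float(hi)


def pearson_r(data):
    """Stand-in statistic: correlation between session 1 and session 2."""
    return np.corrcoef(data[:, 0], data[:, 1])[0, 1]


# Synthetic example: 42 subjects, a stable trait plus session-specific noise.
rng = np.random.default_rng(1)
trait = rng.normal(size=42)
sessions = np.column_stack(
    [trait + 0.5 * rng.normal(size=42), trait + 0.5 * rng.normal(size=42)]
)
ci_low, ci_high = bootstrap_ci(sessions, pearson_r)
```

With fewer participants, the resampled statistics spread more widely, which is the mechanism behind the wider intervals for the across-state comparison.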

Phase lag index

At sensor level, the PLI showed moderate reliability in the theta and alpha bands across time. The PLI showed poor delta and beta band reliability. When compared between resting-state and semi-resting-state conditions, the PLI showed excellent reliability in the theta band, good reliability in the alpha band and moderate reliability in the delta and beta band.

At source level, the PLI showed moderate reliability in the alpha and beta band when compared across time. In the delta and theta band the PLI showed poor reliability for this comparison. The PLI showed moderate to good reliability in the theta, alpha and beta bands and poor reliability in the delta band when compared across resting-states at source level. See Figs. 2, 3 and 4 for an overview of the results.

Temporal and state reliability of EEG measures at sensor and source level. Intraclass correlation coefficients (ICC) for EEG measures across time (6 week interval) and states (resting state vs. semi-resting-state) at both sensor level (A) and source level (B). Reliability scores lower than 0.50 were considered ‘poor’, scores from 0.50 to 0.75 as ‘moderate’, scores from 0.76 to 0.90 as ‘good’, and scores above 0.90 were considered ‘excellent’ (Koo & Li, 2016). Measures include phase lag index (PLI), corrected amplitude envelope correlation (AECc), permutation entropy (PE), and joint permutation entropy (JPEINV) across different frequency bands (delta: 0.5–4 Hz, theta: 4–8 Hz, alpha: 8–13 Hz, beta: 13–20 Hz). MST minimum spanning tree.

Scatterplot of measures across state and across time at sensor level. The ICCs for all MST measures, except for those for the PLI and AECc MST, were averaged (‘overall’), as they all showed poor reliability. Delta: 0.5–4 Hz, theta: 4–8 Hz, alpha: 8–13 Hz, beta: 13–20 Hz. PLI phase lag index, AECc corrected amplitude envelope correlation, MST minimum spanning tree, PE permutation entropy, JPE joint permutation entropy, ICC intraclass correlation coefficients, inv inverted.

Scatterplot of measures across state and across time at source level. The ICCs for all MST measures, except for those for the PLI and AECc MST, were averaged (‘overall’), as they all, except for MST leaf fraction (based on alpha AECc), showed poor reliability. Delta: 0.5–4 Hz, theta: 4–8 Hz, alpha: 8–13 Hz, beta: 13–20 Hz. PLI phase lag index, AECc corrected amplitude envelope correlation, MST minimum spanning tree, PE permutation entropy, JPE joint permutation entropy, ICC intraclass correlation coefficients, inv inverted.

Temporal and state reliability of minimum spanning tree measures at sensor and source level. Intraclass correlation coefficients (ICC) for minimum spanning tree (MST) measures across time (6 week interval) and states (resting state vs. semi-resting-state) at both sensor level (A) and source level (B). Reliability scores lower than 0.50 were considered ‘poor’, scores from 0.50 to 0.75 as ‘moderate’, scores from 0.76 to 0.90 as ‘good’, and scores above 0.90 were considered ‘excellent’ (Koo & Li, 2016). MST measures were derived from both PLI and AECc connectivity matrices and include leaf fraction (LF), maximum degree (k), diameter (D), maximum betweenness centrality (BC), eccentricity (ECC), and tree hierarchy (Th) across different frequency bands (delta: 0.5–4 Hz, theta: 4–8 Hz, alpha: 8–13 Hz, beta: 13–20 Hz). PLI phase lag index, AECc corrected amplitude envelope correlation.

Corrected amplitude envelope correlation

The AECc showed moderate reliability in the beta band compared across time at sensor level, while all other AECc frequency bands demonstrated poor reliability. Across-states comparisons at sensor level showed moderate AECc reliability in the alpha band, and poor reliability in the delta, theta, and beta bands.

At source level, the AECc demonstrated moderate reliability over time in the theta, alpha and beta bands, and poor reliability in the delta band. The reliability across states was moderate-to-good in the alpha and beta bands, but poor in the delta and theta bands. See Figs. 2, 3 and 4 for an overview of the results. Concatenating epochs before calculating AECc yielded mixed results and did not provide a substantial overall improvement. While concatenation may slightly increase reliability for state-level analyses in sensor space, it often performed comparably or significantly worse in other contexts, particularly at the source level. See supplement S2 for full results.

Permutation entropy and joint permutation entropy (inverted)

At sensor level, the PE showed good reliability over time in the theta and alpha bands, moderate in the beta band, and poor in the delta band. For JPEINV, reliability over time was moderate in the alpha band, but poor in the theta, beta, and delta bands. Across states, the reliability of PE was good in the theta and alpha bands, moderate in the beta band, and poor in the delta band. In contrast, JPEINV showed poor reliability across states in all four frequency bands.

At source level, the PE demonstrated good reliability in the theta and alpha bands, moderate reliability in the beta band and poor reliability in the delta band when compared across time. The JPEINV showed moderate reliability when compared across time in the theta, alpha, and beta bands, and poor reliability in the delta band. When compared across resting-states at source level, the PE showed moderate reliability in the delta and beta bands and good reliability in the theta and alpha bands. The JPEINV showed poor reliability across states for all frequency bands at source level. See Figs. 2, 3 and 4 for an overview of the results.

Minimum spanning tree

Several MST measures showed moderate reliability at sensor level. When compared across time, this included the AECc degree (k) in the alpha band and PLI Leaf fraction (LF) in the theta band. All other variables showed poor reliability for this comparison. When comparing across resting-states, some measures, mostly in the theta and alpha band, showed moderate to good reliability. This included the following measures: Leaf fraction (based on theta PLI, delta AECc, theta AECc, alpha PLI, alpha AECc, beta AECc), the maximum degree (based on theta PLI and alpha PLI), the diameter (based on delta AECc, theta PLI and alpha PLI) and eccentricity (based on delta AECc, theta PLI and alpha PLI) and tree hierarchy (based on beta AECc). See Figs. 3, 4 and 5 for a complete overview of these results.

At source level, the MST leaf fraction (based on alpha AECc) showed moderate reliability when compared across time. All other features showed poor reliability for this analysis.

MST overlap

The MST overlap analysis generally showed low percentages of overlap between the MST matrix elements of AECc and PLI MST matrices for both comparisons. The overlap ranged from 2.7 to 10.8%, with the highest overlap in the alpha band, indicating that on average, no more than 6.8 edges were consistently present in this backbone network in repeated EEG registrations. Network reconstruction in source space led to less overlap between networks with a maximum overlap of 4.7% or 3.0 edges. See Table 2 for a complete overview of these results.
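The relation between overlap percentage and edge count follows from the fact that a spanning tree over N nodes has N − 1 edges: with 64 sensors the tree has 63 edges, so an overlap of 10.8% corresponds to roughly 0.108 × 63 ≈ 6.8 edges. The Python sketch below shows one way to compute such an overlap. It assumes that overlap is defined as the fraction of tree edges shared between two recordings, and the function names are hypothetical; the definition actually used is given in Table 1 and supplement S1. The maximum spanning tree is obtained by running SciPy's minimum spanning tree routine on order-reversed, strictly positive edge costs.

```python
import numpy as np
from scipy.sparse.csgraph import minimum_spanning_tree


def max_spanning_tree_edges(fc):
    """Edges of the maximum spanning tree of a symmetric FC matrix."""
    fc = np.asarray(fc, dtype=float)
    # Reverse the weight order and keep costs strictly positive, so the
    # minimum spanning tree of `cost` is the maximum spanning tree of `fc`.
    cost = fc.max() - fc + 1.0
    # Zeros in a dense csgraph input mean "no edge": remove self-loops.
    np.fill_diagonal(cost, 0.0)
    tree = minimum_spanning_tree(cost).tocoo()
    return {(int(min(i, j)), int(max(i, j))) for i, j in zip(tree.row, tree.col)}


def mst_overlap(fc_a, fc_b):
    """Fraction of tree edges present in both maximum spanning trees."""
    edges_a = max_spanning_tree_edges(fc_a)
    edges_b = max_spanning_tree_edges(fc_b)
    return len(edges_a & edges_b) / len(edges_a)


# Usage on two synthetic 64-node connectivity matrices.
rng = np.random.default_rng(0)


def random_fc(n=64):
    w = rng.uniform(0.0, 1.0, size=(n, n))
    w = (w + w.T) / 2.0  # symmetric, as for PLI or AECc matrices
    np.fill_diagonal(w, 0.0)
    return w


fc_session_1, fc_session_2 = random_fc(), random_fc()
overlap = mst_overlap(fc_session_1, fc_session_2)
shared_edges = overlap * 63  # 64 nodes give 63 tree edges
```

Two unrelated random matrices share only a small fraction of tree edges by chance, so an observed overlap is best read against such a baseline rather than against zero.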

Discussion

The present study investigated the reliability of quantitative FC, complexity and MST EEG measures. Frequency-dependent reliability was assessed between repeated resting-state EEG measurements over time and between resting-state and semi-resting-state EEG recordings at the same time point, both for sensor-level data and after source reconstruction. The reliability of FC measures varied depending on the measure, frequency band, and application of source reconstruction. Overall, we found moderate to good reliability for FC and complexity measures, where the PE showed highest reliability, but low reliability for MST measures. The results for FC measures were in line with our hypothesis, while PE outperformed our expectation and MST measures performed worse than hypothesized.

In sensor‐level analyses, PLI exhibited somewhat higher ICC values than AECc in the theta and alpha bands, though this pattern varied in other frequency bands and across different states. Additionally, measures in the theta and alpha bands showed higher reliability than in the delta and beta bands. Overall, when comparing ICCs across time and across resting-states, neither was clearly higher than the other. ICCs were slightly higher at sensor level than at source level.

Combining findings of reliability across time and across states, we can categorize measures in terms of their relative state-dependence. The theta and alpha PE showed high reliability across time and states, at both sensor and source level. This indicates PE exhibits high stability across different resting-state conditions, which include both cognitive factors and eye state, essential properties for a potential biomarker. Notably, JPEINV showed lower reliability than PE in all comparisons. It is conceivable that JPEINV is sensitive to non-stationary, metastable brain dynamics18,55 which could manifest as lower test-retest reliability. Additionally, it is worth noting that JPEINV is a relatively new FC measure, which means little is known about the influence of factors such as epoch length, signal-to-noise ratio and different parameter settings on reliability. Current evidence does not support the use of JPEINV as a reliable disease marker, though this result can be considered preliminary. Sensor-level theta and alpha PLI showed moderate temporal reliability combined with high reliability across states, implicating PLI as relatively state-independent. All remaining MST measures at source-level showed poor reliability, with low reliability across time and state. It is noteworthy that the task paradigm included eyes open EEG compared to the eyes closed resting-state recordings. Relating to this difference, it has been found that spectral measures in eyes closed resting-state EEG are more stable than in eyes open resting-state EEG56,57 and the spatial pattern of oscillatory activity is different between these conditions4. Nevertheless, eyes open and eyes closed resting-state EEG differ only marginally in terms of reliability when looking at functional connectivity15. Despite this, there is a possibility that some of the EEG measures included in the current study are more sensitive to changes related to eyes open resting-state EEG than others.

There is limited literature to which we can relate our current findings regarding reliability of these EEG measures, but Hommelsen et al. were able to identify an individual neural fingerprint with high accuracy using a machine learning model based on power spectral density27. Interestingly, this was possible both for longitudinal resting-state data and for resting-state versus semi-resting-state data, indicating relative state-independence of the power spectral density when using a machine learning approach. Although not directly comparable to our experiment, previous studies compared reliability of resting-state and task EEG (though not based on a P50 task), reporting heterogeneous results46,58.

An additional finding of our study concerns the temporal reliability of EEG measures, which is a fundamental requirement of EEG measures in many applications. At the sensor level, PE in the theta and alpha bands had the highest temporal reliability. PLI in the theta and alpha band together with beta AECc are other, albeit slightly inferior, options. For the same comparison after source reconstruction, PE in the theta and alpha bands was most reliable, with the alpha PLI and beta AECc as a slightly less reliable alternative. From these results, it follows that a general recommendation for longitudinal EEG analyses would be to analyze the theta, alpha and beta bands, favoring PE over more traditional connectivity measures. Although limited evidence exists on the temporal reliability of complexity-based connectivity measures, the finding that alpha PLI demonstrates relatively high temporal reliability in healthy subjects aligns with previous research8,12,14. Additionally, the alpha band is known for its robustness and reliability in EEG recordings, and is associated with resting-state neural activity and attentional processes59. Regarding reliability of AECc, previous studies explored either magnetoencephalography data60,61, simulated data62, or looked primarily at reliability between disease states63. When looking at the corrected alpha power envelope connectivity, which can be considered equivalent to AECc, our temporal reliability with an ICC of around 0.5 for source-level data is comparable to findings of Rolle and colleagues for source-level EEG data15. Concerning the relatively lower reliability of AECc compared to the other measures, we have not quantified the effect of orthogonalization on this result. By comparing reliability of AECc with AEC, this effect could be explored. 
The finding that the delta band generally showed poor temporal reliability is consistent with its reduced signal-to-noise ratio, which arises from limited delta activity in healthy, awake adults and also from the substantial contamination by artifacts such as eye-blinks and eye movements. Even though MST measures have been found to be promising disease markers22, our results indicate that MST EEG measures should be interpreted with caution. The magnitude of MST overlap was similar to or slightly higher than in previous research in ALS patients51, though it remained relatively low. MST using a maximum spanning tree approach is stable and relatively insensitive to small variations in edge weights of the underlying FC matrix21. Therefore, both the low MST overlap and relatively low ICC values found for MST measures point towards significant fluctuations in underlying FC matrices between conditions. Interestingly, these fluctuations, especially for PLI, do not seem to result in poor ICC values after averaging the matrix. Together, these findings do not yet support the use of PLI and AECc MST-based EEG measures as possible biomarkers.

A secondary motivation for this study was to compare pure resting‐state data with semi‐resting-state epochs from a P50 gating task. Our findings imply that, for certain measures, there is little penalty in substituting semi‐resting-state for pure resting‐state data. This observation has practical implications, as it suggests that some studies could omit a separate resting-state EEG recording if the semi‐resting-state intervals in a task provide sufficient reliability. On the other hand, for measures that had lower across‐state reliability—such as MST features, FC in delta or beta bands, or certain amplitude‐based metrics—this might not hold true.

In this study, source reconstruction using an LCMV beamforming approach with a template MRI-based Boundary Element Method (BEM) head model did not clearly improve reliability of a wide range of EEG measures. Some considerations are important in this regard. Methods such as beamforming, which attempt to reconstruct source activity by focusing on specific locations within the brain, have been employed with standard head models but still face limitations due to model inaccuracies and assumptions64. Alternative source reconstruction approaches incorporate different assumptions about the source activity, leading to different source estimates. For example, Exact Low-Resolution Electromagnetic Tomography (eLORETA) provides smooth solutions for the distribution of neuronal electrical activity65. Notably, some studies suggest that Minimum Norm Estimation (MNE) may outperform beamforming techniques in certain contexts, offering better localization accuracy and reliability15,66,67. Another comparison between LCMV beamforming, eLORETA and MNE showed comparable between-cohort and within-patient consistency, though LCMV beamforming produced distinctly different FC estimates compared to the other two inverse methods68. Incorporating different source reconstruction methods could influence and potentially enhance the reliability of source-level EEG measures.

A strength of this study is that the methodological framework incorporates a wide array of metrics, including PLI, AECc, PE, JPEINV and MST measures, examined at both sensor and source levels, allowing for direct cross‐comparison of reliability. Second, by including both resting‐state and semi‐resting-state data, we could explore whether semi‐resting-state epochs can substitute for purely resting‐state measurements in biomarker‐focused research. Third, our findings of higher reliability in theta and alpha frequencies, especially for PE and PLI, complement earlier reports describing the potential of these bands for identifying stable neural signatures27. Finally, the reliability of resting-state EEG across different contexts has been studied sparingly and indirectly. Providing evidence that supports this reliability is of value for the development of clinically relevant biomarkers.

As mentioned previously, the fact that the across-state comparison is based on eyes-open EEG is a possible physiological confound that complicates interpretation. Another possible limitation of this study is that we did not take into account factors influencing EEG reliability other than time and state differences, such as sensitivity to noise, coupling strength, spatial leakage, or synchronization transitions62. Furthermore, reliability represents only one aspect of what makes an EEG measure valuable and does not address its discriminatory power, despite evidence supporting its ability to discriminate illness effects. In addition, EEG measurement length may have influenced our results, since EEG measures might become more reliable when using longer data segments compared to one minute of data15. We made use of a template head model for the beamforming analysis, which is a valid approach for magnetoencephalography. However, this type of evidence does not exist for EEG analyses, and the use of a template head model might influence MST results more than the results for other metrics69. We used different sample sizes for the time (n = 42) and state (n = 24) comparisons; ICC values may be under- or overestimated for smaller samples70. This can also be observed in the wider confidence intervals for the across-state comparisons. Therefore, these results should be interpreted with some caution. An additional limitation is the fact that our cohort consisted of a healthy, relatively young, mostly male population. This limits generalizability of these reliability findings to clinical populations. Age and illness might influence the reliability of measures like PLI71, making the translation to a clinical population uncertain. The effect of sex on our findings is still unknown.

In conclusion, our findings demonstrate that PE in the theta and alpha bands is relatively stable both over time and across different resting‐state contexts, aligning with the requirements of a potential biomarker. To a slightly lesser degree, this also holds true for alpha PLI. For other measures, notably JPEINV and many MST and FC measures in the delta and beta bands, caution is warranted given their lower reliability. Future research is necessary to translate these findings to patient cohorts and to expand the analyses to a more diverse healthy cohort to explore the impact of sex and age.

Data and code availability

The data that support the findings of this study are available on reasonable request from the corresponding author (l.s.dominicus-2@umcutrecht.nl). The data are not publicly available because they contain information that could compromise the privacy of research participants. All preprocessing and analysis scripts (built with version 1.8.0 of the open-source MNE-Python package; Gramfort et al. 2013) are openly available at https://github.com/yorbenlodema/EEG-Pype.

References

Engel, A. K., Gerloff, C., Hilgetag, C. C. & Nolte, G. Intrinsic coupling modes: Multiscale interactions in ongoing brain activity. Neuron 80(4), 867–886. https://doi.org/10.1016/j.neuron.2013.09.038 (2013).

da Silva, F. L. EEG and MEG: Relevance to neuroscience. Neuron 80(5), 1112–1128 (2013).

van Diessen, E. et al. Opportunities and methodological challenges in EEG and MEG resting state functional brain network research. Clin. Neurophysiol. 126(8), 1468–1481. https://doi.org/10.1016/j.clinph.2014.11.018 (2015).

Barry, R. J., Clarke, A. R., Johnstone, S. J., Magee, C. A. & Rushby, J. A. EEG differences between eyes-closed and eyes-open resting conditions. Clin. Neurophysiol. 118(12), 2765. https://doi.org/10.1016/j.clinph.2007.07.028 (2007).

Gemein, L. A. W., Schirrmeister, R. T., Boedecker, J. & Ball, T. Brain age revisited: Investigating the state vs. trait hypotheses of EEG-derived brain-age dynamics with deep learning. Imaging Neurosci. 2, 1–22. https://doi.org/10.1162/imag_a_00210 (2024).

Coan, J. A. & Allen, J. J. B. The state and trait nature of frontal EEG asymmetry in emotion. Asymmetrical Brain https://doi.org/10.7551/mitpress/1463.003.0023 (2018).

Lopez, K. L., Monachino, A. D., Vincent, K. M., Peck, F. C. & Gabard-Durnam, L. J. Stability, change, and reliable individual differences in electroencephalography measures: A lifespan perspective on progress and opportunities. Neuroimage 275, 120116. https://doi.org/10.1016/j.neuroimage.2023.120116 (2023).

Kuntzelman, K. & Miskovic, V. Reliability of graph metrics derived from resting-state human EEG. Psychophysiology https://doi.org/10.1111/psyp.12600 (2017).

Moezzi, B., Hordacre, B., Berryman, C., Ridding, M. C. & Goldsworthy, M. R. Test-retest reliability of functional brain network characteristics using resting-state EEG and graph theory. bioRxiv https://doi.org/10.1101/385302 (2018).

Cox, R., Schapiro, A. C. & Stickgold, R. Variability and stability of large-scale cortical oscillation patterns. Netw. Neurosci. 2(4), 481–512 (2018).

Büchel, D., Lehmann, T., Sandbakk, Ø. & Baumeister, J. EEG-derived brain graphs are reliable measures for exploring exercise-induced changes in brain networks. Sci. Rep. 11(1), 20803. https://doi.org/10.1038/s41598-021-00371-x (2021).

Cannon, R. L. et al. Reliability of quantitative EEG (qEEG) measures and LORETA current source density at 30 days. Neurosci. Lett. 518(1), 27–31. https://doi.org/10.1016/j.neulet.2012.04.035 (2012).

Duan, W. et al. Reproducibility of power spectrum, functional connectivity and network construction in resting-state EEG. J. Neurosci. Methods 348, 108985. https://doi.org/10.1016/j.jneumeth.2020.108985 (2021).

Hardmeier, M. et al. Reproducibility of functional connectivity and graph measures based on the phase lag index (PLI) and weighted phase lag index (wPLI) derived from high resolution EEG. PLoS ONE 9(10), e108648. https://doi.org/10.1371/journal.pone.0108648 (2014).

Rolle, C. E. et al. Functional connectivity using high density EEG shows competitive reliability and agreement across test/retest sessions. J. Neurosci. Methods 367, 109424. https://doi.org/10.1016/j.jneumeth.2021.109424 (2022).

Gudmundsson, S., Runarsson, T. P., Sigurdsson, S., Eiriksdottir, G. & Johnsen, K. Reliability of quantitative EEG features. Clin. Neurophysiol. 118(10), 2162–2171. https://doi.org/10.1016/j.clinph.2007.06.018 (2007).

Donoghue, T. et al. Evaluating and comparing measures of aperiodic neural activity. bioRxiv https://doi.org/10.1101/2024.09.15.613114 (2024).

Yin, Y., Shang, P., Ahn, A. C. & Peng, C. K. Multiscale joint permutation entropy for complex time series. Phys. A: Stat. Mech. Appl. 515, 388–402. https://doi.org/10.1016/j.physa.2018.09.179 (2019).

Scheijbeler, E. P. et al. Network-level permutation entropy of resting-state MEG recordings: A novel biomarker for early-stage Alzheimer’s disease?. Netw. Neurosci. 6(2), 382–400. https://doi.org/10.1162/netn_a_00224 (2022).

Van Wijk, B. C., Stam, C. J. & Daffertshofer, A. Comparing brain networks of different size and connectivity density using graph theory. PLoS ONE 5(10), e13701. https://doi.org/10.1371/journal.pone.0013701 (2010).

Tewarie, P., van Dellen, E., Hillebrand, A. & Stam, C. J. The minimum spanning tree: An unbiased method for brain network analysis. Neuroimage https://doi.org/10.1016/j.neuroimage.2014.10.015 (2015).

Blomsma, N. et al. Minimum spanning tree analysis of brain networks: A systematic review of network size effects, sensitivity for neuropsychiatric pathology, and disorder specificity. Netw. Neurosci. 6(2), 301. https://doi.org/10.1162/netn_a_00245 (2022).

Hatz, F. et al. Microstate connectivity alterations in patients with early Alzheimer’s disease. Alzheimers Res. Ther. 7(1), 78. https://doi.org/10.1186/s13195-015-0163-9 (2015).

Antiqueira, L., Rodrigues, F. A., van Wijk, B. C., Costa, L. D. F. & Daffertshofer, A. Estimating complex cortical networks via surface recordings—a critical note. Neuroimage 53(2), 439–449. https://doi.org/10.1016/j.neuroimage.2010.06.018 (2010).

Adjamian, P. et al. Effective electromagnetic noise cancellation with beamformers and synthetic gradiometry in shielded and partly shielded environments. J. Neurosci. Methods 178(1), 120–127. https://doi.org/10.1016/j.jneumeth.2008.12.006 (2009).

Fraschini, M. et al. The effect of epoch length on estimated EEG functional connectivity and brain network organisation. J. Neural Eng. 13(3), 036015. https://doi.org/10.1088/1741-2560/13/3/036015 (2016).

Hommelsen, M., Viswanathan, S. & Daun, S. Robustness of individualized inferences from longitudinal resting state EEG dynamics. Eur. J. Neurosci. 56(1), 3613. https://doi.org/10.1111/ejn.15673 (2022).

Rogala, J., Kublik, E., Krauz, R. & Wróbel, A. Resting-state EEG activity predicts frontoparietal network reconfiguration and improved attentional performance. Sci. Rep. 10(1), 5064. https://doi.org/10.1038/s41598-020-61866-7 (2020).

Meredith Weiss, S. & Marshall, P. J. Anticipation across modalities in children and adults: Relating anticipatory alpha rhythm lateralization, reaction time, and executive function. Dev. Sci. 26(1), e13277. https://doi.org/10.1111/desc.13277 (2023).

Ülkü, S., Getzmann, S., Wascher, E. & Schneider, D. Be prepared for interruptions: EEG correlates of anticipation when dealing with task interruptions and the role of aging. Sci. Rep. 14(1), 5679. https://doi.org/10.1038/s41598-024-56400-y (2024).

Clements, G. M. et al. Spontaneous alpha and theta oscillations are related to complementary aspects of cognitive control in younger and older adults. Front. Hum. Neurosci. 15, 621620. https://doi.org/10.3389/fnhum.2021.621620 (2021).

Wan, L., Friedman, B. H., Boutros, N. N. & Crawford, H. J. P50 sensory gating and attentional performance. Int. J. Psychophysiol. 67(2), 91–100. https://doi.org/10.1016/j.ijpsycho.2007.10.008 (2008).

Dauwan, M. et al. Random forest to differentiate dementia with Lewy bodies from Alzheimer’s disease. Alzheimer’s Dementia: Diagn. Assess. Dis. Monit. https://doi.org/10.1016/j.dadm.2016.07.003 (2016).

Dominicus, L. et al. Advancing treatment response prediction in first-episode psychosis: Integrating clinical and electroencephalography features. Psychiatry Clin. Neurosci. https://doi.org/10.1111/pcn.13791 (2025).

Numan, T. et al. Functional connectivity and network analysis during hypoactive delirium and recovery from anesthesia. Clin. Neurophysiol. https://doi.org/10.1016/j.clinph.2017.02.022 (2017).

Nielsen, M. O. et al. Improvement of brain reward abnormalities by antipsychotic monotherapy in schizophrenia. Arch. Gen. Psychiatry https://doi.org/10.1001/archgenpsychiatry.2012.847 (2012).

Bojesen, K. B. et al. Treatment response after 6 and 26 weeks is related to baseline glutamate and GABA levels in antipsychotic-naïve patients with psychosis. Psychol. Med. https://doi.org/10.1017/S0033291719002277 (2020).

Kahn, R. S. et al. Amisulpride and olanzapine followed by open-label treatment with clozapine in first-episode schizophrenia and schizophreniform disorder (OPTiMiSE): A three-phase switching study. Lancet Psychiatry https://doi.org/10.1016/S2215-0366(18)30252-9 (2018).

Düring, S., Glenthøj, B. Y., Andersen, G. S. & Oranje, B. Effects of dopamine D2/D3 blockade on human sensory and sensorimotor gating in initially antipsychotic-naive, first-episode schizophrenia patients. Neuropsychopharmacology 39(13), 3000–3008. https://doi.org/10.1038/npp.2014.152 (2014).

Düring, S., Glenthøj, B. Y. & Oranje, B. Effects of blocking D2/D3 receptors on mismatch negativity and P3a amplitude of initially antipsychotic naïve, first episode schizophrenia patients. Int. J. Neuropsychopharmacol. 19(3), 109. https://doi.org/10.1093/ijnp/pyv109 (2016).

Jensen, K. S., Oranje, B., Wienberg, M. & Glenthøj, B. Y. The effects of increased serotonergic activity on human sensory gating and its neural generators. Psychopharmacology 196(4), 631–641. https://doi.org/10.1007/s00213-007-1001-y (2008).

Oranje, B. & Glenthøj, B. Y. Clonidine normalizes sensorimotor gating deficits in patients with schizophrenia on stable medication. Schizophr. Bull. 39(3), 684–691. https://doi.org/10.1093/schbul/sbs071 (2013).

Wienberg, M., Glenthoj, B. Y., Jensen, K. S. & Oranje, B. A single high dose of escitalopram increases mismatch negativity without affecting processing negativity or P300 amplitude in healthy volunteers. J. Psychopharmacol. 24(8), 1183–1192. https://doi.org/10.1177/0269881109102606 (2010).

Gramfort, A. et al. MEG and EEG data analysis with MNE-Python. Front. Neuroinf. 26(7), 267. https://doi.org/10.3389/fnins.2013.00267 (2013).

Whitham, E. M. et al. Scalp electrical recording during paralysis: Quantitative evidence that EEG frequencies above 20 Hz are contaminated by EMG. Clin. Neurophysiol. https://doi.org/10.1016/j.clinph.2007.04.027 (2007).

Fraschini, M., Pani, S. M., Didaci, L. & Marcialis, G. L. Robustness of functional connectivity metrics for EEG-based personal identification over task-induced intra-class and inter-class variations. Pattern Recognit. Lett. https://doi.org/10.1016/j.patrec.2019.03.025 (2019).

Hipp, J. F., Hawellek, D. J., Corbetta, M., Siegel, M. & Engel, A. K. Large-scale cortical correlation structure of spontaneous oscillatory activity. Nat. Neurosci. https://doi.org/10.1038/nn.3101 (2012).

Stam, C. J., Nolte, G. & Daffertshofer, A. Phase lag index: Assessment of functional connectivity from multi channel EEG and MEG with diminished bias from common sources. Hum. Brain Mapp. https://doi.org/10.1002/hbm.20346 (2007).

Kruskal, J. B. On the shortest spanning subtree of a graph and the traveling salesman problem (1956). Ideas that Created the Future https://doi.org/10.7551/mitpress/12274.003.0019 (2021).

Bandt, C. & Pompe, B. Permutation entropy: A natural complexity measure for time series. Phys. Rev. Lett. 88(17), 174102. https://doi.org/10.1103/PhysRevLett.88.174102 (2002).

Govaarts, R. et al. Longitudinal changes in MEG-based brain network topology of ALS patients with cognitive/behavioural impairment, an exploratory study. Netw. Neurosci. 9, 824–841. https://doi.org/10.1162/netn_a_00450 (2025).

Fisher, R. A. Statistical methods for research workers. In Breakthroughs in Statistics: Methodology and Distribution 66–70 (Springer, New York, NY, 1970).

Brunner, J. F. et al. Long-term test-retest reliability of the P3 NoGo wave and two independent components decomposed from the P3 NoGo wave in a visual Go/NoGo task. Int. J. Psychophysiol. 89(1), 106–114. https://doi.org/10.1016/j.ijpsycho.2013.06.005 (2013).

Koo, T. K. & Li, M. Y. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J. Chiropr. Med. 15(2), 155–163. https://doi.org/10.1016/j.jcm.2016.02.012 (2016).

Tognoli, E. & Kelso, J. S. The metastable brain. Neuron 81(1), 35–48. https://doi.org/10.1016/j.neuron.2013.12.022 (2014).

Corsi-Cabrera, M., Galindo-Vilchis, L., Del-Río-Portilla, Y., Arce, C. & Ramos-Loyo, J. Within-subject reliability and inter-session stability of EEG power and coherent activity in women evaluated monthly over nine months. Clin. Neurophysiol. 118(1), 9–21. https://doi.org/10.1016/j.clinph.2006.08.013 (2007).

Rogers, J. M., Johnstone, S. J., Aminov, A., Donnelly, J. & Wilson, P. H. Test-retest reliability of a single-channel, wireless EEG system. Int. J. Psychophysiol. 106, 87–96. https://doi.org/10.1016/j.ijpsycho.2016.06.006 (2016).

Pani, S. M. et al. Subject, session and task effects on power, connectivity and network centrality: A source-based EEG study. Biomed. Signal Process. Control. 59, 101891. https://doi.org/10.1016/j.bspc.2020.101891 (2020).

Klimesch, W. Alpha-band oscillations, attention, and controlled access to stored information. Trends Cognit. Sci. 16(12), 606–617. https://doi.org/10.1016/j.tics.2012.10.007 (2012).

Colclough, G. L. et al. How reliable are MEG resting-state connectivity metrics?. Neuroimage 138, 284–293. https://doi.org/10.1016/j.neuroimage.2016.05.070 (2016).

Schoonhoven, D. N. et al. Sensitive and reproducible MEG resting-state metrics of functional connectivity in Alzheimer’s disease. Alzheimer’s Res. Ther. 14(1), 38. https://doi.org/10.1186/s13195-022-00970-4 (2022).

Yu, M. Benchmarking metrics for inferring functional connectivity from multi-channel EEG and MEG: A simulation study. Chaos 30(12), 123124. https://doi.org/10.1063/5.0018826 (2020).

Briels, C. T. et al. In pursuit of a sensitive EEG functional connectivity outcome measure for clinical trials in Alzheimer’s disease. Clin. Neurophysiol. 131(1), 88–95. https://doi.org/10.1016/j.clinph.2019.09.014 (2020).

Van Veen, B. D., Van Drongelen, W., Yuchtman, M. & Suzuki, A. Localization of brain electrical activity via linearly constrained minimum variance spatial filtering. IEEE Trans. Biomed. Eng. 44(9), 867–880. https://doi.org/10.1109/10.623056 (1997).

Pascual-Marqui, R. D., Michel, C. M. & Lehmann, D. Low resolution electromagnetic tomography: a new method for localizing electrical activity in the brain. Int. J. Psychophysiol. 18(1), 49–65. https://doi.org/10.1016/0167-8760(84)90014-X (1994).

Hauk, O. Keep it simple: A case for using classical minimum norm estimation in the analysis of EEG and MEG data. Neuroimage 21(4), 1612–1621. https://doi.org/10.1016/j.neuroimage.2003.12.018 (2004).

Hincapié, A. S. et al. The impact of MEG source reconstruction method on source-space connectivity estimation: A comparison between minimum-norm solution and beamforming. Neuroimage 156, 29. https://doi.org/10.1016/j.neuroimage.2017.04.038 (2017).

Mahjoory, K. et al. Consistency of EEG source localization and connectivity estimates. Neuroimage 152, 590–601. https://doi.org/10.1016/j.neuroimage.2017.02.076 (2017).

Douw, L., Nieboer, D., Stam, C. J., Tewarie, P. & Hillebrand, A. Consistency of magnetoencephalographic functional connectivity and network reconstruction using a template versus native MRI for co-registration. Hum. Brain Mapping 39(1), 104–119. https://doi.org/10.1002/hbm.23827 (2018).

Zou, G. Sample size formulas for estimating intraclass correlation coefficients with precision and assurance. Stat. Med. 31(29), 3972–3981. https://doi.org/10.1002/sim.5466 (2012).

Briels, C. T. et al. Reproducibility of EEG functional connectivity in Alzheimer’s disease. Alzheimers Res. Ther. 12(1), 68. https://doi.org/10.1186/s13195-020-00632-3 (2020).

Funding

No project-specific funding was used. E. van Dellen received funding for a part of his research time via an Organization for Health Research and Development (ZonMW) GGZ fellowship, identifier 60-63600-98-711 and UMC Utrecht Clinical Research Talent Fellowship, award ID: NA.

Author information

Contributions

K. Ambrosen: Writing—review & editing; E. van Dellen: Conceptualization, Methodology, Writing—review & editing; L. S. Dominicus: Formal analysis, Project administration, Visualization, Writing—original draft; B. H. Ebdrup: Conceptualization, Data curation, Writing—review & editing; A. P. C. Hermans: Formal analysis, Project administration; A. Hillebrand: Software, Writing—review & editing; L. Imhof: Formal analysis, Project administration; D. Y. Lodema: Formal analysis, Software, Visualization, Writing—original draft; B. Oranje: Conceptualization, Data curation, Writing—review & editing; W. M. Otte: Writing—review & editing; C. J. Stam: Conceptualization, Methodology; M. G. Zandstra: Writing—review & editing.

Ethics declarations

Competing interests

B.E. serves on the advisory boards of Boehringer Ingelheim, Lundbeck Pharma A/S, and Orion Pharma A/S, and has received lecture honoraria from Boehringer Ingelheim, Otsuka Pharma Scandinavia AB, and Lundbeck Pharma A/S. All other authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dominicus, L.S., Lodema, D.Y., Oranje, B. et al. Reliability and state-dependency of EEG connectivity, complexity and network characteristics. Sci Rep 15, 38454 (2025). https://doi.org/10.1038/s41598-025-23662-z

DOI: https://doi.org/10.1038/s41598-025-23662-z