Abstract

To identify various oil well working conditions more accurately and practically from massive image data collected by multiple measured information sources of sucker-rod pumping wells, this paper proposes a working condition recognition method with three key aspects: curvelet pooling optimization technology, multi-source attention mechanism fusion feature extraction technology, and multi-source semi-supervised classification deep learning. Specifically: (a) Curvelet pooling optimization technology. We introduce the second-generation curvelet transform into the ResNet-50 pooling layer and adopt a collaborative learning pooling strategy of low-frequency and high-frequency information from the raw data decomposed via curvelet transform instead of max-pooling. This enhances the neural network’s capability to capture detailed features of complex image data. (b) Multi-source attention mechanism fusion feature extraction technology. We selected two information sources: measured ground dynamometer cards and measured electrical power cards. The multi-head self-attention mechanism enables interactive complementarity between curvelet-decomposed image data from each information source, while achieving dynamic weighted fusion of the interactive complementary data via the adaptive attention mechanism. This process yields optimal global feature representations of multi-source fused data. (c) Multi-source semi-supervised classification deep learning. By integrating multi-source fused feature data with a semi-supervised classification algorithm based on the dual strategy of dynamic adjustment of pseudo-label confidence and self-adaptive class fairness regularization, the method leverages abundant multi-source unlabeled samples to improve model classification performance and generalization ability under limited labeled training samples. This further enhances the accuracy and practicality of condition recognition. Experimental data were collected from a high-pressure, low-permeability, thin oil reservoir block in an oilfield in China. Extensive experiments demonstrate that the proposed method efficiently processes measured information source data in the sucker-rod pumping production system, improves the performance of traditional deep learning frameworks, explores the intrinsic correlations among multiple measured information source data of oil wells, and utilizes massive unlabeled working condition data to enhance the working condition recognition effect and engineering practicability with a minimal number of labeled samples. Code is available at https://github.com/Yoick/AMMFFECP.

Similar content being viewed by others

Introduction

In the context of the Internet of Things for oil and gas production, the sucker-rod pumping well production system can acquire massive measured working condition data, derived from multiple information sources such as ground dynamometer cards, electrical parameters et al., and also obtain a large amount of unlabeled working condition data. These data from diverse measured information sources are characterized by strong noise, high dimensionality, and significant randomness, yet they realistically and comprehensively reflect the operational status of pumping wells. Traditional signal processing techniques can no longer effectively handle such high-dimensional and complex data1,2. Deep learning technology excels at extracting deep feature representations from high-dimensional complex data by constructing machine learning models with multiple hidden layers, thereby enhancing model classification or prediction performance. However, the requirement for a large number of labeled training samples3 and the neglect of valuable unlabeled samples4 have constrained the engineering application of deep learning. The sucker-rod pumping well production system is a complex nonlinear system with the mechanic-electronic-liquid coupling, making it impossible for single-source information or traditional intelligent information processing techniques to efficiently identify working conditions in an accurate manner5,6. Thus, exploring how to effectively utilize multiple measured information sources at the pumping well site, leverage deep learning technology, and integrate massive unlabeled samples with a minimal number of existing labeled samples to enhance the performance and practicality of working condition recognition models holds significant theory and engineering value.

Currently, most deep learning-based working condition recognition methods for sucker-rod pumping wells rely on convolutional neural network architectures7. While these methods have demonstrated promising results, they suffer from a significant limitation: the loss of local key information during signal processing8. Specifically, classical CNNs typically employ max-pooling or average pooling to reduce the data dimensionality9. This technique overlooks the subtle and multi-scale characteristics of complex signals, inevitably leading to the loss of local key information within the working condition data. To address this issue, researchers in related fields have proposed integrating multi-scale transformation techniques into the CNN pooling layer to replace traditional pooling methods. Wavelet transform, renowned for its superior multi-scale decomposition capabilities, has been widely adopted in pooling layer enhancements. By preserving image details, reducing noise, and improving the model’s ability to capture multi-scale features, wavelet transform offers notable improvements10,11. However, it exhibits limitations when handling directional and complex edge features. With its low directional resolution, wavelet transform can only decompose signals in horizontal, vertical, and diagonal directions, rendering it ineffective in capturing curved edges and intricate curve structures in images. This shortcoming is particularly pronounced in tasks requiring precise multi-directional and detailed information extraction. In contrast, the curvelet transform12,13 not only inherits multi-scale properties but also enables multi-directional decomposition, allowing for more accurate capture of curve and edge information in images. The curvelet pooling technique, grounded in curvelet transform14, demonstrates significant advantages in retaining complex structural features and fine details of images, making it highly suitable for image processing tasks with stringent geometric feature requirements. Therefore, incorporating curvelet pooling into CNN-based working condition recognition methods can effectively mitigate the loss of local feature information in working condition data.

Most of the existing research on the working condition recognition of sucker-rod pumping wells primarily relies on single-source data analysis, such as dynamometer cards15 or electrical parameters16. However, these methods often yield inaccurate results when applied to complex and dynamic oil well conditions, failing to meet the urgent demand for efficient and precise identification in the context of accelerating oilfield digitalization. To address this challenge, recent studies have shifted focus toward multi-source fusion technologies, aiming to leverage complementary advantages among diverse data sources to enhance recognition performance. For example, researchers have combined dynamometer cards, electrical parameters, and oil production statistics through multi-feature concatenation17,18. While these approaches mitigate the limitations of single-source analysis, they introduce new challenges: (a) Robustness Issues. Uncertainties in production statistics can compromise model stability. (b) Inefficient Feature Fusion. The multi-feature concatenation struggles to capture deep inter-source correlations and often introduces redundant information under high-dimensional conditions, degrading both efficiency and accuracy. Weighted fusion methods19 attempt to improve integration by assigning fixed weights to different sources. However, these weights are typically determined via prior knowledge and fail to adapt dynamically to complex data relationships, limiting model generalization. In contrast, attention mechanisms in deep learning offer two key advantages: (a) Latent Relationship Capture: By enabling interactive learning across information sources, attention mechanisms uncover hidden correlations20. (b) Dynamic Weight Adjustment: They dynamically prioritize relevant features, enhancing model performance21. Thus, integrating attention mechanisms can effectively overcome the limitations of current multi-source fusion approaches for working condition recognition.

The requirement for a large number of labeled working condition samples has severely restricted the engineering application of existing sucker-rod pumping well working condition recognition technologies22. To address this issue, some scholars have proposed using a small amount of labeled dynamometer card data combined with meta-learning methods for the working condition recognition4, while others have suggested leveraging a small amount of labeled dynamometer card data and a large amount of unlabeled dynamometer card data in conjunction with semi-supervised generative adversarial networks23. Although these studies have effectively tackled the problem of requiring a large number of labeled training samples, their engineering practicality is limited by the constraints of single-source recognition and the weak generalization ability of the models.

In the field of semi-supervised deep learning, pseudo-label learning offers high flexibility and strong generalization capabilities, making it suitable for various practical application scenarios. Combining pseudo-label learning with consistency regularization can further enhance the performance of semi-supervised learning models24,25. Pseudo-label learning based on a dynamic confidence adjustment strategy can efficiently filter out low-quality pseudo-labels, while self-adaptive class fairness regularization learning can dynamically balance the prediction distributions of different categories. Integrating these two techniques with multi-source feature fusion technology can effectively overcome the limitations of single-source-based semi-supervised deep learning for the working condition recognition in the complex sucker-rod pumping well system, thereby improving the generalization performance and robustness of working condition recognition models in semi-supervised scenarios.

In summary, this paper proposes a measured multi-source semi-supervised working condition recognition method based on curvelet pooling and attention mechanism learning, addressing the limitations of existing sucker-rod pumping well working condition recognition research while integrating the characteristics of big data oil production. Specifically, we first introduce the second-generation curvelet transform into the ResNet-50 pooling layer, replacing max-pooling with a collaborative learning pooling strategy that utilizes low-frequency and high-frequency information from curvelet-decomposed raw data. We then select two information sources: measured ground dynamometer cards and measured electrical power cards, and employ a multi-head self-attention mechanism to enable interactive complementarity between curvelet-decomposed image data of each source. An adaptive attention mechanism further achieves a dynamic weighted fusion of the interactive complementary data, thereby obtaining the optimal global feature representation of multi-source fused data. Finally, we integrate the multi-source fused feature data with a semi-supervised classification algorithm based on a dual strategy of pseudo-label confidence dynamic adjustment and self-adaptive class fairness regularization. This approach leverages limited multi-source labeled training samples alongside abundant unlabeled samples to enhance model classification performance and generalization ability, ultimately improving the accuracy and practicality of working condition recognition.

The contributions of this paper are as follows:

-

We propose an efficient curvelet pooling optimization technology. Based on the ResNet-50 architecture, we introduce the second-generation curvelet transform into the ResNet-50 pooling layer, replacing the original max-pooling with a collaborative learning pooling strategy that utilizes low-frequency and high-frequency information from curvelet-decomposed raw data. This enhancement enables the neural network to capture detailed features of complex image data, overcoming the limitation of local key information loss in traditional CNNs during signal processing;

-

We propose an attention mechanism-based multi-source fusion feature extraction technology. By selecting two information sources: measured ground dynamometer cards and measured electrical power cards, we employ a multi-head self-attention mechanism to enable interactive learning across sources, effectively capturing latent relationships between multi-source features. An adaptive attention mechanism then achieves dynamic weighted fusion across sources, yielding optimal global feature representations of multi-source fused data;

-

We propose an efficient multi-source semi-supervised deep learning method for working condition recognition. This method integrates the curvelet pooling optimization and attention-based multi-source feature fusion with a semi-supervised classification algorithm featuring dynamic pseudo-label confidence adjustment and self-adaptive class fairness regularization. The integration allows effective utilization of limited labeled data and abundant unlabeled data to enhance recognition accuracy and practicality;

-

Extensive experiments validate the proposed method’s effectiveness. Compared with traditional single/multi-source approaches, different pooling techniques, and semi-supervised methods under varying labeling ratios, the proposed method demonstrates superior performance—especially with only 10% labeled data. Hyperparameter sensitivity and ablation studies further confirm its robustness.

Related work

At present, the working condition recognition technologies for sucker-rod pumping wells can be categorized into three types: dynamometer card-based methods, electrical parameter-based methods, and multi-source data-based methods.

Research status of dynamometer card-based working condition recognition

Traditional dynamometer card-based techniques construct classification models by integrating specific feature extraction strategies with machine learning methods to achieve accurate recognition of working conditions. For example, Wu et al.26 proposed a three-level wavelet packet decomposition method, which normalized dynamometer card data, extracted feature vectors, and classified working conditions using an RBF neural network. Li et al.27 partitioned dynamometer cards via the ’four-point method’, extracted moment-invariant features, and employed a PSO-optimized SVM for recognition. Zhang and Gao28 utilized the fast discrete curvelet transform for feature extraction and a sparse multi-graph regularized extreme learning machine for classification.

Although these traditional methods have achieved moderate success, they suffer from three key limitations: reliance on manual feature design, insufficient generalization capability, and the need for large labeled samples. Deep learning technologies based on convolutional neural networks (CNNs) have emerged as a research focus due to their powerful feature extraction capabilities. Wang et al.29 proposed using CNNs to extract dynamometer card features, enabling intelligent diagnosis of pumping well conditions without manual intervention or prior knowledge, but this approach relied heavily on abundant labeled samples. Zhang et al.30 applied transfer learning with CNNs to significantly improve the working condition diagnosis performance on small-sample datasets and alleviated the generalization limitations of shallow networks. He et al.4 developed a working condition recognition method based on 4-dimensional time-frequency signatures and a meta-learning convolutional shrinkage neural network, enhancing recognition accuracy through time-frequency feature extraction and low-frequency feature ablation while addressing few-shot problems via a meta-learning framework.

These methods still require performance improvements and overlook the value of abundant unlabeled samples. Additionally, to address the demand for large labeled samples, Moussa et al.23 proposed semi-supervised generative adversarial networks, which generated synthetic dynamometer cards by combining labeled and unlabeled data to enable the working condition recognition with minimal labeled samples. However, classification accuracy under low labeling ratios remains suboptimal. Notable Limitations: (a) Single-source vulnerability. Sole reliance on dynamometer cards causes misdiagnosis for complex conditions. (b) Labeling bottleneck. Most methods require a plentiful supply of labeled samples, unfitting to the actual engineering requirements. (c) CNN feature degradation. Standard pooling loses local critical features for subtle fault detection.

Research status of electrical parameter-based working condition recognition

Electrical parameters have garnered sustained attention due to their easy acquisition and cost-effectiveness. Zheng et al.31 proposed extracting seven novel features from electrical power data, combining key parameters such as valve opening/closing points and pump working cycles, and using multiple hidden conditional random fields for the working condition recognition. Chen et al.16 extracted 16 time-domain and frequency-domain features from power curves based on the working mechanism of sucker-rod pumps, employing XGBoost for classification. Tecle et al.32 generated motor power curves considering subsurface condition influences through a sucker-rod pump simulation model, using these curves with SVM for classification.

While these studies have achieved certain results, traditional feature extraction and machine learning methods show inadequacies when facing massive data. Thus, research on electrical parameter-based working condition recognition has gradually shifted to deep learning approaches. Wei and Gao33 proposed using CNN to extract electrical power features, fusing them with manually engineered features via mechanical analysis, and then using a generalized learning system as a classifier for the working condition recognition. These electrical parameter-based methods have made progress, but still have limitations: the “division by zero” issue, which originates from the up-and-down reciprocating mechanical motion characteristics of the sucker-rod pumping system’s four-bar linkage. Specifically, the torque coefficient is zero at the upper and lower dead centers of the suspension point of the polish rod. When it is used as a divisor, a large error will occur, thus affecting the calculation precision of the feature parameters; single-source data is prone to misjudgments; the demand for large labeled samples hinders engineering applications; and CNN-based methods suffer from key information loss.

Research status of multi-source data-based working condition recognition

The complex field environment makes single-source data insufficient to comprehensively represent pumping well conditions, driving multi-source working condition recognition to become an important research direction. Liu et al.34 proposed AdaBNet (Adaptive Weighted Ensemble Bayesian Network) for fault monitoring by integrating features such as dynamometer card area, maximum/minimum load, daily operation time, and production statistics. Zhang et al.35 established a comprehensive diagnostic model based on feature recognition by extracting features from dynamometer cards and power vs. position plots under different working conditions. Xu et al.36 proposed a machine learning diagnostic model fusing electrical parameters and dynamometer cards, setting multi-stage constraints and optimizing parameters via an adaptive genetic algorithm for precise condition assessment.

The above methods fail to fully exploit the potentiality among information sources, still relying on manually designed feature extraction. Traditional multi-feature concatenation has reached a bottleneck, and the use of statistical features weakens model robustness, limiting adaptability under complex conditions. Zhou et al.37 developed a multi-source p-Laplacian regularized kernel logistic regression model using dynamometer cards, electrical power signals, and wellhead temperature signals for measured multi-source semi-supervised working condition recognition, but its performance in multi-classification needs improvement.

To more efficiently utilize multi-source information, researchers have explored deep learning-based methods. Li et al.38 fused Fourier descriptor and graphical features of dynamometer cards to exploit complementary advantages, enhancing the diagnostic model performance. Abdurakipov et al.18 built a working condition recognition model by integrating dynamometer cards, pump inlet pressure and temperature data with Transformer. Although these deep learning methods have achieved results, they still underutilize latent correlations between multi-source features and perform poorly with few labeled samples, restricting practical application.

Based on the above analysis, CNN-based single-source deep learning methods have shown significant effectiveness, and extending them to multi-source learning is expected to improve the model performance. However, the inherent pooling design of traditional CNNs limits multi-source recognition. While wavelet pooling39 alleviates this issue, it struggles with images rich in edge details and texture features. By contrast, curvelet pooling14 more effectively mitigates feature loss caused by traditional CNN pooling. Additionally, existing multi-source learning methods rely on manual weight setting for information sources, weakening synergy. Li et al.40 proposed an adaptive data fusion strategy using deep learning, implementing fault diagnosis via adaptive convolution kernels matching multi-source channels. Liu et al.21 developed an attention-based adaptive multi-scale module to dynamically adjust feature weights across sources, providing references for pumping well multi-source learning. Meanwhile, working condition recognition based on multi-source learning is constrained by large labeled sample requirements. Semi-supervised techniques based on pseudo-label learning and consistency regularization have matured in semi-supervised deep learning, although lacking multi-source interactive learning, their semi-supervised concepts effectively address the over-reliance on labeled samples in current research.

Methodology

Problem definition

The key symbols used in this paper and their detailed descriptions are shown in Table 1.

This paper proposes a multi-source semi-supervised working condition recognition method for sucker-rod pumping wells based on curvelet pooling and attention mechanism learning. We construct a multi-source dataset D containing V different information sources, where each information source contains C categories. Dataset D is divided into two components: the labeled dataset L and the unlabeled dataset U, that is, \(D=\{L,U\}\). The labeled dataset L is defined as \(L=\{L^v\}=\{(x_i^v, y_i)\}_{i=1}^k\), where \(L^v\) represents the labeled dataset of the v-th information source, \(x_i^v\) is the i-th sample in the v-th information source, \(y_i\in \{1,2,\ldots ,C\}\) is the sample label, and k is the total number of labeled samples. The unlabeled dataset U is defined as \(U=\{U^v\}=\{x_j^v\}_{j=k+1}^{k+m}\), where \(U^v\) is the unlabeled dataset of the v-th information source, \(x_j^v\) is the j-th unlabeled sample from the v-th information source, and m is the total number of unlabeled samples.

Model overview

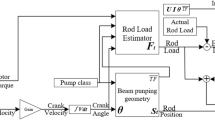

The model framework is illustrated in Fig. 1, primarily composed of two submodules. The multi-source feature extraction submodule based on curvelet pooling and attention mechanism learning first employs ResNet-50 as the basic architecture, introducing the curvelet transform to replace the max-pooling layers with curvelet pooling layers. This enhances the network’s capability to capture fine-grained features from complex images. The multi-head self-attention mechanism then processes multi-source feature representations in parallel, capturing cross-source dependencies across different subspaces to enable efficient feature interaction. A cosine similarity-based multi-source feature interaction constraint loss function is introduced to quantify feature similarity, further enhancing the cross-source interaction effect by maximizing this similarity. An adaptive attention mechanism finally learns weight coefficients for features from different sources, fusing multi-source representations to generate optimal global feature vectors for each sample. The multi-source semi-supervised classification submodule draws inspiration from the FreeMatch framework, leveraging abundant unlabeled samples in multi-source datasets under limited labeled conditions. It introduces self-adaptive confidence thresholding to dynamically filter high-quality pseudo-labels and self-adaptive class fairness regularization to balance class-wise prediction distributions. These mechanisms collectively improve the model’s generalization ability and classification performance in semi-supervised scenarios, effectively addressing the challenge of limited labeled data in practical applications.

A multi-source feature extraction submodule based on curvelet pooling and attention mechanism learning

Curvelet pooling

In the field of sucker-rod pumping well working condition recognition, signals such as dynamometer cards and electrical power cards exhibit rich texture and edge features, which are crucial for identifying operational states and detecting potential faults. However, traditional convolutional neural networks often weaken or lose key edge information when reducing the spatial dimension of feature maps via max-pooling layers, degrading overall model performance.

Compared with the wavelet transform, the curvelet transform preserves edge and texture information more effectively due to its multi-scale and multi-directional properties. This enables neural networks to learn richer feature representations, improving the model’s capability to recognize complex images. In this paper, ResNet-50 serves as the base network architecture, with traditional max-pooling layers replaced by curvelet pooling layers based on curvelet transform. This optimization enhances the network’s feature extraction efficiency, improves the model’s sensitivity to fine-grained features, and boosts performance in complex condition recognition tasks.

Specifically, for the input sample \({x}_{i}^{v}\), the curvelet pooling layer is defined in Eq. 1:

where \(C_J\) represents the low-frequency component, and \(D_j\) represents the high-frequency component of different scales \(j ( j =1,2,\ldots ,J )\). The low-frequency component \(C_J\) and high-frequency component \(D_j\) are aggregated through direct element-wise summation. This additive fusion method preserves both the global contextual information captured by \(C_J\) and the discriminative multi-scale details retained in \(D_j\). In terms of tensor dimensions, the input \({x}_{i}^{v}\) has a shape of \(B\) \(\times\) \(C\) \(\times\) \(H\) \(\times\) \(W\), \(C_J\) is of size \(B\) \(\times\) \(C\) \(\times\) \(H_{J}\) \(\times\) \(W_{J}\), while each component \(D_j\) at scale \(j ( j =1,2,\ldots ,J )\) has dimensions \(B\) \(\times\) \(C\) \(\times\) \(H_{j}\) \(\times\) \(W_{j}\). The resulting output \(I\), obtained through element-wise aggregation of these components, has a shape of \(B\) \(\times\) \(C\) \(\times\) \(H^{\prime }\) \(\times\) \(W^{\prime }\).

The curvelet pooling layer is integrated into the network to replace the max-pooling layer, as expressed in Eq. 2.

where \(I_{\text{ conv } }^v\)denotes the output of the convolutional layer in ResNet-50, and \(I_{\text{ curve } }^v\) represents the output of the curvelet pooling.

The architecture of the curvelet pooling layer is illustrated in Fig. 2. In the diagram, FDCT-USFFT (fast discrete curvelet transforms based on unequally-spaced fast Fourier transform) represents the curvelet transform method based on USFFT, where ’Coarse Scale’ corresponds to the coarsest scale of low-frequency information after transformation, ’Fine Scale’ corresponds to the finest scale of high-frequency information, and ’Middle Scale’ represents other high-frequency information.

By incorporating the curvelet pooling layer, the enhanced ResNet-50 model can more effectively capture the key edge features of sucker-rod pumping well working condition samples. It guides the model training process by jointly leveraging low-frequency and high-frequency information, thereby improving the accuracy and robustness of working condition recognition.

Multi-source feature interaction learning based on multi-head self-attention mechanism

Inspired by the transformer41 coding architecture, this component employs a multi-head self-attention mechanism and feedforward neural network to process multi-source feature representations in parallel. It captures cross-source feature dependencies across different subspaces and performs nonlinear transformations to enable multi-source feature interaction. A cosine similarity-based multi-source feature interaction constraint loss function is introduced to ensure maximum consistency of multi-source feature representations in the feature space, thereby enhancing model generalization.

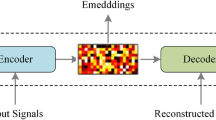

The schematic diagram of multi-source feature interaction learning based on multi-head self-attention mechanism is shown in Fig. 3. First, multi-source features \(F(D)\) outputted by curvelet pooling optimized ResNet-50 are flattened and concatenated, then fed into the multi-head self-attention module (mhsa). MHSA captures cross-source correlation information across different subspaces, effectively enhancing multi-source feature expressiveness. Training stability is maintained via residual connections and layer normalization. Next, the feedforward network (ffn) performs nonlinear transformations to extract deeper features, with residual connections and layer normalization again ensuring training stability, as defined in Eq. (3):

where \(N(F(D))\) denotes multi-source features after interaction, and the ffn consists of two linear mapping layers with a ReLU activation function.

To fully exploit the intrinsic interactions between multi-source data and improve model generalization, a cosine similarity-based multi-source feature interaction constraint loss is proposed. This loss maximizes cosine similarity between cross-source feature representations to ensure their consistency in the feature space. Specifically, first, for the cross-source feature representations: (a) Normalize via \(L_2\) norms to eliminate magnitude differences. (b) Calculate the cosine similarity of the v-th source feature, as in Eq. (4):

where \(S^v\) denotes the cosine similarity of the v-th source feature, \({N\left( F\left( D^v\right) \right) }\) denotes the feature representation of the v-th source after the multi-source interaction, and \((\cdot )^T\) denotes vector transposition.

Then, compute the difference matrix between any two source similarity matrices, and the multi-source feature interaction constraint loss \({L}_{c}\) is defined as the square of the norm of the difference matrix, ensuring cross-source feature consistency in the feature space, as shown in Eq. (5):

Multi-source feature fusion based on adaptive attention mechanism

This component introduces an adaptive attention mechanism to dynamically learn representation weights from different source features. By assigning differentiated weights, the model enhances its capability to capture key information, enabling effective fusion of interacted multi-source feature representations. The mechanism consists of the fully connected layer, the tanh activation layer, the fully connected layer, and the softmax layer in sequence, as illustrated in Fig. 4.

For the feature representation \(N\left( F\left( {D}^{v}\right) \right)\) of the v-th source data, the process unfolds as follows: first, the feature is transformed via the fully connected layer, followed by non-linear mapping through the tanh activation function. The second fully connected layer and softmax function then compute the attention score \(\omega ^v\). The calculated weight \(\omega ^v\) is multiplied by \(N(F(D^v))\) to weight the feature, and finally, weighted feature representations from all sources are fused to obtain the global feature representation, as shown in Eq. (6):

where \(G(D)\) represents the fused global feature representation, which provides a richer feature representation for subsequent classification

Multi-source semi-supervised classification submodule

This submodule performs semi-supervised multi-source classification on the global feature representation \(G(D)\). Inspired by the FreeMatch algorithm, it optimizes model training under limited labeled samples by introducing a self-adaptive confidence threshold and self-adaptive class fairness regularization for the multi-source feature representation \(G(U)\) of unlabeled samples. These mechanisms enhance the classification performance and generalization ability of the model.

The self-adaptive confidence threshold dynamically adjusts based on the model’s prediction confidence for unlabeled samples. A lower threshold in the early training phase enables full utilization of unlabeled data, while the threshold adjusts synchronously as the model confidence improves to suppress the negative impact of false labeling. The global threshold \(T_t\) is updated via exponential moving average (EMA), as shown in Eq. (7):

where \(\lambda\) is the EMA momentum decay coefficient, \(B\) is the batch size, and \({q}_{b}\) is the model’s prediction confidence for unlabeled samples.

The update of the local threshold \({\tilde{\theta }}_t(c)\) is shown in Eq. (8):

where c represents the category corresponding to the current threshold, and \(q_b(c)\) represents the confidence level predicted for that category. This threshold is independently and dynamically adjusted based on the predicted confidence distribution of each category, thereby effectively suppressing noise interference in categories with high uncertainty during the early training stage and progressively refining the screening precision in later phases. This mechanism effectively mitigates the issue of pseudo-label quality degradation.

The self-adaptive confidence threshold at different times can be calculated based on the dynamic update formula of the global threshold and the local threshold, as shown in Eq. (9):

As shown in Fig. 5, the adaptive threshold improves pseudo-label utilization in early training and reduces false pseudo-label effects in late training compared to the fixed threshold.

Self-adaptive class fairness regularization encourages the model to make diverse predictions in all categories, which helps to generate diverse predictions in the early training phase and improves the performance of the model with fewer data. Self-adaptive class fairness regularization is achieved by adjusting the histogram distribution of the expected output probability, and the \(L_f\) calculation of the loss of self-adaptive class fairness regularization is shown in Eq. (10):

where \(H\) is the entropy function used to calculate the difference between the two probability distributions, \(\widetilde{\theta _t}\) is the EMA of the predicted probabilities of the model for each category in the t-th iteration, \(\widetilde{h_t}\) is the EMA of the histogram distribution in the t-th iteration, and \({\bar{\theta }}\) and \({\bar{h}}\) are the probability distributions being performed histogram normalization processing to \(\widetilde{\theta _t}\) and \(\widetilde{h_t}\), respectively.

The calculation \(L_u\) of the loss of unsupervised learning training for the t-th iteration is shown in Eq. (11):

where \(q_b\) and \(Q_b\) represent the prediction probability distributions of the model for weakly enhanced unlabeled samples and strongly enhanced unlabeled samples, respectively. \(\widehat{{q}_{b}}\) represents the one-hot label converted from the \(q_b\). \(T_p\) represents the prediction probability of the unlabeled sample, \(T_t\) represents the confidence threshold, and \(\mathbbm {1}(\cdot )\) represents the indicator function.

Total loss function

The total loss function of the proposed method is composed of supervised learning loss \(L_s\), multi-source feature interaction constraint loss \(L_c\), unsupervised learning loss \(L_u\), and self-adaptive class fairness regularization loss \(L_f\), which are calculated as shown in Eq. (12):

where \({{w}}_{c}\), \({{w}}_{u}\) and \({{w}}_{f}\) are the corresponding weights of multi-source feature interaction constraint loss \({{L}}_{c}\), unsupervised learning loss \({{L}}_{u}\) and self-adaptive class fairness regularization loss \({{L}}_{f}\), respectively.

Experiments

Considering the influence of the damping coefficient and the ’division by zero’ issue on the accuracy of working condition recognition, this paper selects measured ground dynamometer cards and measured electrical power cards (both are binary images) as the main information sources to analyze the working status of sucker-rod pumping wells comprehensively. The measured ground dynamometer card is plotted based on the displacement and load data of the polished rod. The measured electrical power card is drawn by the displacement of the polished rod and the effective electrical power of the motor (without relying on the specific feature parameter calculation through electrical signals, thus avoiding the ’division by zero’ issue).

The experimental working condition dataset is derived from a high-pressure, low-permeability thin oil reservoir block in an oilfield in China. The working condition samples used in the experiment are from 60 different oil wells over three consecutive calendar years of sucker-rod pumping well production in the above reservoir conditions, and are strictly selected according to the operation records of the oil wells. Each working condition sample is composed of data points collected at the actual corresponding acquisition time in the production site for both the measured ground dynamometer card and the measured electrical power card. The dataset includes 11 typical working conditions, with 150 samples for each condition, totaling 1, 650 samples. The 11 typical working conditions are normal, lack of supply liquid, pump leakage, tubing leakage, standing valve leakage, traveling valve leakage, assist-blowing, rod cutting, stuck pump, traveling valve failing, and wax precipitation.

This paper uses PyTorch 1.13.0 as the basic development framework, with the operating environment being Ubuntu 20.04, and NVIDIA RTX 3090 GPU. The training dataset and the test dataset contain 825 samples respectively, covering 11 working conditions, with 75 samples for each condition. All samples undergo identical preprocessing with color inversion and normalization. The experimental results are calculated as the averages of 10 runs. All comparative methods are evaluated under identical experimental conditions. The accuracy and F1-Score (Macro F1) are selected as the evaluation metrics.

To verify the effectiveness and practicability of the proposed method, this paper designs a series of experiments, which are divided into the following five parts: (a) Comparison of recognition results of different methods based on curvelet pooling and wavelet pooling; (b) Comparison of recognition results of different methods based on single-source and multi-source; (c) Comparison of different multi-source semi-supervised condition recognition methods under different labeled sample ratios; (d) Sensitivity analysis of key hyperparameters, analyzing the influence of key hyperparameters on model performance and determining the optimal parameter configuration; (e) Ablation experiments, evaluating the contribution of key components in the proposed method to model performance and verifying the rationality of the method design.

Comparison of recognition results of different methods based on curvelet pooling and wavelet pooling

To verify the superiority of curvelet pooling implemented based on USFFT in supervised scenarios, different types of wavelet pooling are introduced into recognition methods based on single-source(S-ResNet) and attention mechanism-based multi-source feature fusion extraction(AMMFFE-ResNet), and then compared with the proposed method in this paper (AMMFFE-CP-ResNet-50). Specifically, these include single-source working condition recognition methods optimized by wavelet pooling based on different basis functions (S-WP-db4-ResNet-50, S-WP-bior3.3-ResNet-50), lifting wavelet pooling (S-LWP-db4-ResNet-50, S-LWP-bior3.3-ResNet-50), dual-tree complex wavelet pooling (S-DTCWP-ResNet-50), and USFFT-based curvelet pooling (S-CP-ResNet-50), and attention mechanism-based multi-source feature fusion extraction working condition recognition methods optimized by wavelet pooling based on different basis functions (AMMFFE-WP-db4-ResNet-50, AMMFFE-WP-bior3.3-ResNet-50), lifting wavelet pooling (AMMFFE-LWP-db4-ResNet-50, AMMFFE-LWP-bior3.3-ResNet-50), and dual-tree complex wavelet pooling (AMMFFE-DTCWP-ResNet-50). The experimental results are shown in Table 2.

As can be seen from Table 2: (a) In the pooling layer optimization experiments based on single-source and multi-source, the optimization effect of curvelet pooling is better than that of wavelet pooling. (b) Compared with the optimal multi-source working condition recognition method optimized by basis wavelet pooling, the accuracy and F1-Score of the proposed method are improved by 0.16% and 0.0017, respectively; compared with the optimal multi-source working condition recognition method optimized by lifting wavelet pooling, the accuracy and F1-Score are improved by 0.13% and 0.0015, respectively. (c) Compared with the multi-source working condition recognition method optimized by dual-tree complex wavelet pooling, the accuracy and F1-Score of the proposed method are improved by 0.55% and 0.0055, respectively. In supervised scenarios, although the improvement in accuracy of our method is modest, the enhancement in F1-Score further demonstrates the robustness of the performance improvement achieved by the proposed method. Through the above analysis, the curvelet pooling can effectively mitigate the problem that the inherent pooling design of traditional CNN affects the model recognition performance.

Comparison of recognition results of different methods based on single-source and multi-source

To verify the effectiveness and superiority of the proposed attention mechanism-based multi-source fusion feature extraction method in supervised scenarios, ResNet series models and ViT (Vision Transformer)42 models are selected for experiments. The experimental methods include single-source working condition recognition methods (SResNet-18/34/50/101/152, SViT), attention mechanism-based multi-source fusion feature extraction working condition recognition methods (AMMFFEResNet-18/34/50/101/152, AMMFFE-LWP-bior3.3-ResNet-50, AMMFFEViT, AMMFFE-ResNet-50_SE, AMMFFE-ResNet-50_SELF, and the proposed method AMMFFE-CP-ResNet-50). In addition, the traditional multi-source feature connection-based working condition recognition method (MResNet-50), the interpretable multi-view graph convolutional neural network (IMvGCN)43, and two semi-supervised recognition methods based on attention mechanism-based multi-source fusion feature extraction ( AMMFFE-InfoMatch-ResNet-5044, AMMFFE-SequenceMatch-ResNet-5045) are introduced as comparisons. The experimental results are shown in Table 3.

From Table 3, it can be seen that: (a) Among the single-source and multi-source feature attention fusion methods without pooling improvements, AMMFFEResNet-50 achieves the best recognition performance, verifying the effectiveness of the proposed attention mechanism-based multi-source fusion feature extraction. (b) The proposed AMMFFE-CP-ResNet-50 outperforms the optimal wavelet pooling method, further validating the effectiveness of curvelet pooling in optimizing CNNs for collaborative learning of low-frequency and high-frequency information. (c) Under the premise of multi-source feature interaction via multi-head self-attention, the adaptive attention-based fusion method, AMMFFEResNet-50 improves accuracy and F1-Score by 0.45% and 0.0045 compared to the SE attention mechanism, and by 0.51% and 0.0052 compared to the self-attention mechanism, and it is worth noting that in supervised scenarios, the consistent improvements in both accuracy and F1-Score further demonstrate the robustness of the performance enhancement attained by the proposed method, confirming the superiority of adaptive attention-based fusion.

Comparison of different multi-source semi-supervised condition recognition methods under different labeled sample ratios

To validate the proposed method’s superiority in semi-supervised scenarios, multi-source working condition recognition results under different semi-supervised ratios (1%, 10%, 30%, 50%, corresponding to labeled samples n=11, n=77, n=242, n=407) are compared based on the first set and the second set of experiments. For all settings, the splits of labeled and unlabeled samples are kept consistent across different sources to ensure fair comparisons. The results are shown in Table 4, with comparisons to different semi-supervised learning methods illustrated in Fig. 6.

From Table 4, it can be seen that: (a) The proposed AMMFFE-CP-ResNet-50 outperforms state-of-the-art semi-supervised methods in multi-source condition recognition.Specifically, compared with IMvGCN, at 1% labeled sample ratio, (accuracy/F1-Score +5.26%/0.0448); at 10% (accuracy/F1-Score +4.24%/0.0413); at 30% (accuracy/F1-Score +3.79%/0.0377); and at 50% (accuracy/F1-Score +3.20%/0.0318). Compared with AMMFFE-InfoMatch, at 1% (accuracy/F1-Score +10.45%/0.1233); at 10% (accuracy/F1-Score +1.59%/0.0145); at 30% (accuracy/F1-Score +1.46%/0.0131); and at 50% (accuracy/F1-Score +1.49%/0.0149). Compared with AMMFFE-SequenceMatch, at 1% (accuracy/F1-Score +2.61%/0.0339); at 10% (accuracy/F1-Score +0.29%/0.002); at 30% (accuracy/F1-Score +0.7%/0.0062); and at 50% (accuracy/F1-Score +0.36%/0.0037). (b) AMMFFE-ResNet-50 outperforms MResNet-50 under all semi-supervised ratios, particularly at 1% (accuracy and F1-Score improved by 1.15% and 0.0116), verifying the effectiveness of multi-source feature interaction and fusion in semi-supervised scenarios. (c) The proposed AMMFFE-CP-ResNet-50 surpasses the optimal pooling method (AMMFFE-LWP-bior3.3-ResNet-50) under all ratios, especially at 1% (accuracy/F1-Score +1.72%/0.017) and 10% (accuracy/F1-Score +0.46%/0.0037), confirming the superiority of curvelet pooling-based CNN optimization in semi-supervised settings.

Sensitivity analysis of key hyperparameters

To determine optimal configurations, sensitivity analysis is conducted on key hyperparameters (multi-source feature interaction constraint loss weight \(w_c\) (Fig. 7(i)), self-adaptive class fairness regularization weight \(w_f\) (Fig. 7(ii)), and number of multi-head self-attention heads (Fig. 7(iii)) in the Transformer encoder) at a 1% labeled sample ratio. The tuning ranges are \(w_c \in [0.1, 0.5, 1, 2, 5, 10]\), \(w_f \in [0.0001, 0.0005, 0.001, 0.005, 0.01, 0.1]\), and heads \(\in [1, 2, 4, 8, 16]\).

As shown in Fig. 7: (a) The analysis results for \(\omega _c\) and \(\omega _f\) exhibit similar patterns, with accuracy showing a distinct nonlinear trend: initially increasing to a peak value before declining. The peak accuracy is achieved at \(\omega _c = 2\) and \(\omega _f = 0.001\), respectively. (b) \(\omega _c\) and \(\omega _f\) serve as constraints for different loss terms in the objective function. Insufficient weighting may lead to inadequate constraint, while excessive weighting may restrict model learning capacity. Both scenarios can impair the model’s fitting ability. Appropriate weighting balances regularization and loss functions, thereby optimizing model performance. (c) Accuracy demonstrates a clear inverted-U trend as the number of attention heads increases, first rising and then declining. The model achieves peak accuracy with 4 attention heads, indicating optimal multi-source feature interaction learning. In summary, based on the experimental results of the sensitivity analysis, our key hyperparameters are set as follows: \(\omega _c\) = 2, \(\omega _f\) = 0.001, and the number of heads in the multi-head self-attention mechanism is 4.

Analysis of model inference time

In consideration of computational cost, inference efficiency is an important factor for the practical deployment of deep learning models. Therefore, we evaluate the inference time of the proposed model using different pooling strategies, and the results are presented in Table 5. The reported values are averaged over 100 runs to ensure reliability, and the evaluation is conducted under the setting with 1% labeled sample ratio.

Although the proposed curvelet pooling increases the inference time compared with max-pooling, this additional cost mainly stems from the multi-scale and directional transforms inherent in curvelet pooling. However, comprehensively considering the important actual oil extraction production indicators, such as enhancing the recovery rate of oil wells, the further improvement of the recognition accuracy of oil well working conditions is far more important than the increase in its computational cost.

Ablation study

Ablation tests at 1% labeled samples verify key module contributions:

-

Comparing the method without multi-source feature interaction (AMMFFE-CP-ResNet-50(w/o FI)) to the proposed method.

-

Replacing the adaptive attention-based fusion with average weight/fixed weight strategies (AMMFFE-CP-ResNet-50(w/FI-AVE), AMMFFE-CP-ResNet-50(w/FI-0.5FIX)).

Experimental results are presented in Table 6 and Fig. 8.

As shown in Table 6 and Fig. 8: (a) AMMFFE-CP-ResNet-50(w/o FI) shows 1.82% lower accuracy, confirming that multi-head self-attention-driven feature interaction enhances cross-source information learning. (b) AMMFFE-CP-ResNet-50(w/ FI-AVE) and AMMFFE-CP-ResNet-50(w/ FI-0.5FIX) exhibit 1.58% and 4% accuracy drop respectively, validating that adaptive attention-based fusion improves global feature representation and recognition performance of the model.

In addition to the key module ablation, we conducted a sample size ablation experiment to evaluate the robustness of the proposed method under reduced training samples. Specifically, for each class, the number of labeled samples was randomly reduced to 50 and 30, and the model performance was recorded. The results are presented in Table 7. As the training sample size decreases, AMMFFE-CP-ResNet-50 maintains comparatively good performance across different scales, indicating its robustness with limited training data.

Conclusion and future work

This paper proposes a working condition recognition method featuring three key characteristics: curvelet pooling, attention mechanism-based measured multi-source fusion feature extraction, and multi-source semi-supervised classification deep learning. Specifically, the measured ground dynamometer cards and measured electrical power cards are selected as major information sources for working condition recognition. The curvelet pooling layer, built upon the second-generation curvelet transform, is introduced to replace the max-pooling layer in ResNet-50, which helps alleviate certain limitations of conventional convolutional neural networks in feature representation. Further, an attention-based multi-source fusion feature extraction framework is designed to enable feature interaction through multi-head self-attention and achieve feature fusion via an adaptive attention mechanism. On this basis, the multi-source fused features are processed with a semi-supervised classification algorithm that incorporates a pseudo-label confidence dynamic adjustment strategy and adaptive class fairness regularization, forming an integrated approach for multi-source semi-supervised working condition recognition. Extensive experiments validate the effectiveness of the proposed method.

However, this research also has certain limitations: (a) Although the proposed multi-source semi-supervised learning method has shown effectiveness, its performance under extremely few labeled samples requires further improvement, which may not be sufficient to fully meet the demands of real-world industrial applications; (b) The research primarily focuses on theoretical aspects and improving recognition performance, without considering the computational and memory constraints of embedded devices, which may limit the immediate applicability of the method in resource-constrained industrial environments.

In order to improve the technical level and application effect in the field of oil extraction production, the following directions can be further explored: (a) Considering the lack of a general framework in this field that simultaneously addresses multiple practical challenges, future work will aim to enhance the generalization of the proposed method across different oil reservoir geologic condition. This would involve integrating techniques such as imbalanced learning46 to handle skewed data distributions, incremental learning47 to continuously incorporate new data without retraining, domain adaptation48 to mitigate distribution shifts between different operating site, etc., ultimately building a more robust and versatile working condition recognition system. (b) Focus on the improvement of semi-supervised learning algorithms for working condition recognition in sucker-rod pumping wells, such as enhancing the quality of pseudo-labels to improve the model performance with very few labeled samples. (c) Explore the lightweight deployment strategies of the proposed multi-source semi-supervised deep learning model on edge devices of sucker-rod pumping wells, such as curvelet transform optimization technology, deep model quantization compression technology, to further improve the practicability and promotion value of the working condition recognition system for sucker-rod pumping wells in complex oil extraction production sites, and provide efficient and stable edge intelligent solutions for intelligent oilfield construction.

Data availability

The datasets used and/or analysed during the current study available from the corresponding author on reasonable request.

References

Nascimento, J. et al. Diagnostic of operation conditions and sensor faults using machine learning in sucker-rod pumping wells. Sensors 21, 4546. https://doi.org/10.3390/s21134546 (2021).

Wang, X. et al. Fault diagnosis for sucker rod pumping systems: A parallel system approach. IEEE J. Radio Freq. Ident. 6, 743–747. https://doi.org/10.1109/JRFID.2022.3202276 (2022).

He, Y. et al. A hybrid approach of the deep learning method and rule-based method for fault diagnosis of sucker rod pumping wells. Energies 16, 3170. https://doi.org/10.3390/en16073170 (2023).

He, Y. P. et al. Few-shot working condition recognition of a sucker-rod pumping system based on a 4-dimensional time-frequency signature and meta learning convolutional shrinkage neural network. Pet. Sci. 20, 1142–1154. https://doi.org/10.1007/s12182-023-00689-x (2023).

Sreenivasan, H. & Krishna, S. Automatic sucker rod pump fault diagnostics by transfer learning using googlenet integrated machine learning classifiers. Process Saf. Environ. Prot. 191, 14–26. https://doi.org/10.1016/j.psep.2023.07.016 (2024).

Yin, C. et al. Imbalanced working states recognition of sucker rod well dynamometer cards based on data generation and diversity augmentation. SPE J. 28, 1925–1944. https://doi.org/10.2118/209012-PA (2023).

He, Y. P. et al. Working condition recognition of sucker rod pumping system based on 4-segment time-frequency signature matrix and deep learning. Pet. Sci. 21, 641–653. https://doi.org/10.1007/s12182-024-00906-9 (2024).

Martinović, B. et al. Unveiling deep learning insights: A specialized analysis of sucker rod pump dynamographs, emphasizing visualizations and human insight. Mathematics 11, 4782. https://doi.org/10.3390/math11234782 (2023).

Alzubaidi, L. et al. Review of deep learning: concepts, CNN architectures, challenges, applications, future directions. J. Big Data 8, 1–74. https://doi.org/10.1186/s40537-020-00334-x (2021).

Williams, T. & Li, R. Wavelet pooling for convolutional neural networks. In International Conference on Learning Representations (2018).

Rodriguez, M. X. B., Gruson, A., & Polania, L. et al. Deep adaptive wavelet network. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision 3111–3119 (2020). https://doi.org/10.1109/WACV45572.2020.9093267.

Starck, J. L., Candès, E. J. & Donoho, D. L. The curvelet transform for image denoising. IEEE Trans. Image Process. 11, 670–684. https://doi.org/10.1109/TIP.2002.801063 (2002).

Candes, E. et al. Fast discrete curvelet transforms. Multiscale Model. Simul. 5, 861–899. https://doi.org/10.1137/S1540345905438782 (2006).

Jia, M. et al. Synthetic aperture radar image change detection based on convolutional-curvelet neural network and partial graph-cut. Electron. Lett. 57, 876–878. https://doi.org/10.1049/el.2021.2471 (2021).

Cai, X., Chen, & F. Fault. diagnosis with imbalanced datasets for sucker rod pumping system based on dynamometer card.,. CAA symposium on fault detection. In Supervision and Safety for Technical Processes1–6 (2023). https://doi.org/10.1109/SAFEPROCESS55091.2023.1003209.

Chen, L., Gao, X. & Li, X. Using the motor power and XGboost to diagnose working states of a sucker rod pump. J. Petrol. Sci. Eng. 199, 108329. https://doi.org/10.1016/j.petrol.2020.108329 (2021).

Zheng, B., Gao, X. & Li, X. Diagnosis of sucker rod pump based on generating dynamometer cards. J. Process Control 77, 76–88. https://doi.org/10.1016/j.jprocont.2019.05.004 (2019).

Abdurakipov, S. S. et al. Diagnostics of oil well pumping equipment by using machine learning. J. Eng. Thermophys. 33, 39–54. https://doi.org/10.1007/s10973-023-01401-2 (2024).

Zhou, B. et al. Hessian-regularized weighted multi-view canonical correlation analysis for working condition recognition of sucker-rod pumping wells. Syst. Sci. Control Eng. 6, 215–226. https://doi.org/10.1080/21642583.2018.1506366 (2018).

Wang, Z., Xuan, J. & Shi, T. Multi-source information fusion deep self-attention reinforcement learning framework for multi-label compound fault recognition. Mech. Mach. Theory 179, 105090. https://doi.org/10.1016/j.mechmachtheory.2023.105090 (2023).

Liu, Y. et al. High-resolution remote sensing image segmentation framework based on attention mechanism and adaptive weighting. ISPRS Int. J. Geo Inf. 10, 241. https://doi.org/10.3390/ijgi10040241 (2021).

Fakher, S. et al. A comprehensive review of sucker rod pumps’ components, diagnostics, mathematical models, and common failures and mitigations. J. Petrol. Explor. Prod. Technol. 11, 3815–3839. https://doi.org/10.1007/s10944-021-00517-w (2021).

Moussa, A. et al. Deep learning approach based on generative adversarial network for automatic diagnosis of sucker-rod pump operational conditions. In Mediterranean Offshore Conference (2024). https://doi.org/10.2118/21144-MS.

Sohn, K. et al. Fixmatch: Simplifying semi-supervised learning with consistency and confidence. Adv. Neural. Inf. Process. Syst. 33, 596–608. https://doi.org/10.5555/3442177.3442273 (2020).

Wang, Y., Chen, H., & Heng, Q. et al. FreeMatch: Self-adaptive thresholding for semi-supervised learning. In The 11th International Conference on Learning Representations (2023).

Wu, W., Sun, W. L. & Wei, H. X. A fault diagnosis of suck rod pumping system based on wavelet packet and RBF network. Adv. Mater. Res. 189–193, 2665–2669. https://doi.org/10.4028/www.scientific.net/AMR.189-193.2665 (2011).

Li, K. et al. Using the curve moment and the PSO-SYM method to diagnose downhole conditions of a sucker rod pumping unit. Pet. Sci. 10, 73–80. https://doi.org/10.1007/s12182-013-0304-3 (2013).

Zhang, A. & Gao, X. W. Fault diagnosis of sucker rod pumping systems based on curvelet transform and sparse multi-graph regularized extreme learning machine. Int. J. Computat. Intell. Syst. 11, 428–437. https://doi.org/10.1080/18756891.2018.1424197 (2018).

Wang, X. et al. A working condition diagnosis model of sucker rod pumping wells based on deep learning. SPE Prod. Operat. 36, 317–326. https://doi.org/10.2118/207842-PA (2021).

Zhang, R., Wang, L. & Chen, D. An intelligent diagnosis method of the working conditions in sucker-rod pump wells based on convolutional neural networks and transfer learning. Energy Eng. 118, 1069–1082. https://doi.org/10.1080/01403489.2020.1813843 (2021).

Zheng, B., Gao, X. & Pan, R. Sucker rod pump working state diagnosis using motor data and hidden conditional random fields. IEEE Trans. Industr. Electron. 67, 743–750. https://doi.org/10.1109/TIE.2019.2944081 (2020).

Tecle, S. I., Ziuzev, A. & Spiridonov, V. Development of fault diagnosis model for sucker rod pump using motor power curve. In 2021 XVIII International Scientific Technical Conference Alternating Current Electric Drives 1–6 (2021). https://doi.org/10.1109/ACED52610.2021.9598489.

Wei, J. & Gao, X. Fault diagnosis of sucker rod pump based on deep-broad learning using motor data. IEEE Access 8, 222562–222571. https://doi.org/10.1109/ACCESS.2020.2991212 (2020).

Liu, S., Raghavendra, C. S., & Liu, Y. et al. Automatic early fault detection for rod pump systems. In SPE Annual Technical Conference and Exhibition SPE–146038–MS (2011). https://doi.org/10.2118/146038-MS.

Zhang, R. et al. A real-time diagnosis method of reservoir-wellbore-surface conditions in sucker-rod pump wells based on multidata combination analysis. J. Petrol. Sci. Eng. 198, 108254. https://doi.org/10.1016/j.petrol.2020.108254 (2021).

Xu, L., Su, R., & Ali, A. et al. Fault diagnosis of pumping units based on extended-fusion machine learning. In 2023 18th International Conference on Intelligent Systems and Knowledge Engineering 302–308 (2023). https://doi.org/10.1109/ISKE53374.2023.1010362.

Zhou, B. et al. Multi-source working condition recognition via nonlinear kernel learning and p-laplacian manifold learning. Heliyon 10, E26436. https://doi.org/10.1016/j.heliyon.2024.e26436 (2024).

Li, J. et al. An evolutional deep learning method based on multi-feature fusion for fault diagnosis in sucker rod pumping system. Alex. Eng. J. 66, 343–355. https://doi.org/10.1016/j.aej.2022.09.010 (2023).

Wolter, M. & Garcke, J. Adaptive wavelet pooling for convolutional neural networks. In International Conference on Artificial Intelligence and Statistics 1936–1944 (2021). https://doi.org/10.5555/3442327.3442337.

Li, S. et al. An adaptive data fusion strategy for fault diagnosis based on the convolutional neural network. Measurement 165, 108122. https://doi.org/10.1016/j.measurement.2020.108122 (2020).

Vaswani, A., Shazeer, N., & Parmar, N. et al. Attention is all you need. Advances in Neural Information Processing Systems 30, (2017).

Dosovitskiy, A., Beyer, L., & Kolesnikov, A. et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. In International Conference on Learning Representations (2021).

Wu, Z. et al. Interpretable graph convolutional network for multi-view semi-supervised learning. IEEE Trans. Multimedia 25, 8593–8606. https://doi.org/10.1109/TMM.2023.3157892 (2023).

Han, Q., Tian, Z., & Xia, C. et al. InfoMatch: entropy neural estimation for semi-supervised image classification. In Proceedings of the Thirty-Third International Joint Conference on Artificial Intelligence 4089–4097 (2024). https://doi.org/10.24963/ijcai.2024/567.

Nguyen, K. B. SequenceMatch: revisiting the design of weak-strong augmentations for semi-supervised learning. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision 96–106 (2024). https://doi.org/10.1109/WACV.2024.00042.

Ren, Z. et al. Adaptive cost-sensitive learning: Improving the convergence of intelligent diagnosis models under imbalanced data. Knowl.-Based Syst. 241, 108296. https://doi.org/10.1016/j.knosys.2022.108296 (2022).

Sun, H. L. et al. Mos: Model surgery for pre-trained model-based class-incremental learning. Proceed. AAAI Conf. Artif. Intell. 39, 20699–20707. https://doi.org/10.1609/aaai.v39i19.31999 (2025).

Ngo, B. H., Chae, Y. J., & Kwon, J. E. et al. Improved knowledge transfer for semi-supervised domain adaptation via trico training strategy. In Proceedings of the IEEE/CVF International Conference on Computer Vision 19214–19223 (2023). https://doi.org/10.1109/ICCV.2023.01842.

Funding

The research is supported by the National Natural Science Foundation of China under Grant No. 62072468 and the Natural Science Foundation of Shandong Province under Grant No. ZR2021MF031.

Author information

Authors and Affiliations

Contributions

Shuo Yang and Bin Zhou wrote the main manuscript text. Yanjiang Wang and Weifeng Liu reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yang, S., Zhou, B., Wang, Y. et al. Measured multi-source semi-supervised working condition recognition based on curvelet pooling and attention mechanism learning. Sci Rep 15, 40021 (2025). https://doi.org/10.1038/s41598-025-23732-2

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-23732-2