Abstract

In recent years, single image super-resolution (SISR) based on deep learning has achieved excellent results. However, the resulting computational and storage costs limit its practicality in real-world applications. Researchers therefore seek lightweight SISR networks that reduce computational load while maintaining high performance. To address this challenge, we introduce a general lightweight image super-resolution network with sharpening enhancement and double attention (ESDAN), which balances model complexity and performance through two components: the Sharpening Enhancement Module (SEM) and the Dual Attention Upsampling module (DAU). Specifically, SEM integrates the Attention-Driven Feature Sharpening module (ADFS), which enhances feature contrast, and the Multi-Way Feature Enhancement module (MWFE), which reinforces key information; together they improve both the representation ability of composite features and the nonlinear mapping ability of the model. Moreover, DAU dynamically fuses shallow and deep features to enhance the model’s reconstruction capability. Extensive experimental results demonstrate that the proposed network surpasses contemporary state-of-the-art lightweight SISR methods. Additionally, we explore the potential of ESDAN in other SISR-related tasks, such as super-resolution of Alzheimer’s disease brain MRI, stereo endoscopic images, and surveillance images, and the results demonstrate the high versatility of the proposed network. The source code is available at https://github.com/Czs138/ESDAN.


Introduction

Throughout image formation, transmission, and recording, image quality degrades because of imperfections in the imaging system, the transmission medium, and associated equipment. This phenomenon is commonly referred to as image degradation, and it results from factors such as low imaging-system performance, limited film sensitivity, lossy compression, and improper storage. Image super-resolution (SR) is a crucial method for addressing this problem: its objective is to restore a high-resolution (HR) image from a low-resolution (LR) image with blurred details, thereby enhancing visual clarity.

Over the past 20 years, deep learning has found widespread application across many domains1,2. The VDSR proposed by Kim et al.4 introduced global residual learning into an SISR network for the first time and increased the network depth to 20 layers; its reconstruction performance markedly surpasses that of SRCNN3. To further improve performance, the SAN proposed by Dai et al.5 integrates a non-local mechanism with a novel second-order channel attention mechanism. The DAT proposed by Chen et al.7 combines two dimensions (spatial and channel) of the Transformer by alternately applying spatial and channel self-attention in consecutive Transformer blocks, which achieves inter-block feature aggregation and excellent reconstruction results. More advanced classical algorithms are listed in Table 1. However, the growing number of layers significantly raises model complexity and inference time, which limits deployment on edge devices. Designing a lightweight image SR model therefore holds significant practical importance.

Several established techniques reduce model size and computational complexity, such as parameter sharing, information distillation, and model pruning. The DRCN proposed by Kim et al.15 implements parameter sharing by constructing a very deep recursive layer. The RFDN proposed by Liu et al.18 aggregates various features to distill more valuable information within its sequential blocks. Guo et al.7 proposed the VLESR model, which reduces model size through a lightweight convolutional block, group convolution, and other techniques. More advanced lightweight algorithms are shown in Table 1. However, most of these algorithms still yield limited SR reconstruction quality. A major contributing factor is the insufficient nonlinear mapping capability of most lightweight image SR models, which is critical for recovering lost image details such as edges and textures. To summarize the current progress in super-resolution, Table 1 lists the algorithms mentioned above and their characteristics.

To tackle these problems, this paper proposes ESDAN. As the nonlinear mapping block of ESDAN, the SEM is designed to improve the detail recovery and nonlinear mapping abilities of the network by combining attention, contrast enhancement, and feature fusion. In SEM, the ADFS integrates an attention branch with a feature processing branch to enhance feature contrast and highlight edges. The attention branch uses the selective mutual attention module (SMAM) to weight features at different locations accurately and selectively through mutual attention and regulation of the input features, while the feature processing branch uses two simple convolution layers to extract deep features. The MWFE uses a three-branch structure to examine input features from different perspectives, capturing key features, enhancing important information, and preserving local details. In addition, to better integrate shallow and deep features, this paper replaces the traditional upsampling module with the DAU to enhance the model’s representation capability.

The contributions of our work can be summarized as follows:

1) This paper proposes a general lightweight image super-resolution with sharpening enhancement and double attention network (ESDAN). The network not only strikes a better balance between model complexity and performance but also has high versatility.

2) This paper proposes a sharpening enhancement module (SEM), which can organically combine attention-driven feature sharpening module (ADFS) and multi-way feature enhancement module (MWFE) to optimize the representation ability of composite features.

3) This paper proposes a dual attention upsampling module (DAU) that better integrates shallow and deep features.

Related works

Lightweight SISR methods

In recent years, deep learning-based SISR methods have focused on designing novel network structures and have achieved remarkable SR reconstruction results. The SRCNN proposed by Dong et al.3 was the first application of CNNs in the SR field. SRCNN contains only three convolution layers that learn an end-to-end nonlinear mapping from LR to HR images, and its reconstruction quality surpasses that of traditional SISR methods. Huang et al.9 introduced an improved Hessian filter into a deep CNN to achieve high detail fidelity and proposed the DeFiAN model. Guo et al.10 designed a dual regression network (DRN), which alleviates, to a certain extent, the ill-posed mapping problem and the difficulty of real application scenarios in SR. Ledig et al.12 proposed the super-resolution generative adversarial network (SRGAN), which is trained with a perceptual loss consisting of an adversarial loss and a content loss; this loss allows SRGAN to make significant progress in perceptual quality. Liang et al.13 introduced the Transformer architecture into image SR and proposed SwinIR, whose deep feature extraction module uses multiple Swin Transformer layers to enable local attention and cross-window interaction, significantly improving SR reconstruction. Li et al.56 proposed a deep adaptive information filtering network (FilterNet), which adaptively filters redundant low-frequency information and enhances high-frequency details through dilated residual groups and adaptive information fusion. Zhang et al.58 proposed SRMamba-T, a hybrid model that combines Mamba and Transformer to balance efficiency and performance. The network employs a multi-directional selective scanning module to reduce parameters and a feature fusion module to enhance representation, achieving superior results on multiple benchmarks at lower computational cost than SOTA methods.

However, such complex network structures impose high computational and memory costs, which makes them difficult to deploy on edge devices, so the demand for lightweight SISR networks is pressing. Dong et al.14 proposed FSRCNN, a compact hourglass-shaped CNN architecture that places a transposed convolution layer at the end of the network, in place of interpolation, to generate the SR image. DRCN, proposed by Kim et al.15, introduces a recursive structure into SISR, which increases network depth without introducing additional parameters. CARN, proposed by Ahn et al.16, is a cascading residual network that combines residual blocks and recursive networks and uses a limited set of parameters and operations to achieve competitive results. The IMDN proposed by Hui et al.17 first adopted an information distillation strategy in SISR, consisting of splitting and aggregation operations. In addition, IMDN proposes an adaptive cropping strategy so that images of arbitrary size can be super-resolved. Lv et al.53 proposed the Enhanced Local Multi-windows Attention Network (ELMA), which incorporates a Multi-windows Self-Attention mechanism to mitigate redundant computation and a Spatial Gated Network to enhance spatial feature representation; ELMA achieves competitive performance with fewer parameters and lower computational overhead than state-of-the-art approaches. Li et al.57 proposed SRConvNet, a lightweight super-resolution network that integrates Fourier modulated attention to model global dependencies efficiently and a dynamic mixing layer to enhance multi-scale contextual information, achieving a better trade-off between accuracy and efficiency.

Attention mechanism

The attention mechanism19 simulates human attention behavior, allowing a model to concentrate on specific segments of the input data in order to perform tasks better. It can be used in different deep learning models, enabling them to focus selectively on specific parts of the input during information processing rather than treating all information equally; this strengthens the model’s processing of important information and thereby improves its performance. Hu et al.20 proposed the squeeze-and-excitation (SE) network, a typical attention network that works in two steps: squeeze and excitation. In the squeeze step, global average pooling produces a global descriptor for each channel feature map, which helps the network assess the relative importance of each channel. In the excitation step, this descriptor is mapped to per-channel weights that reweight the channels and emphasize useful information. Woo et al.21 proposed CBAM, which combines channel attention and spatial attention. In CBAM, the channel attention module captures the statistics of each channel through global average pooling and global max pooling and then generates channel attention weights with a fully connected network. The spatial attention module takes the output of the channel attention module as input and applies max pooling and average pooling along the channel axis to derive an attention weight for each position of the feature map. An et al.22 proposed MSMGA-Net for competitive fine-grained visual classification; it learns the subtle differences between classes by localizing key discriminative image regions through a Multi-Granularity Attention (MGA) module at the corresponding stages of the network.

In addition to these general-purpose attention networks, attention mechanisms have also been applied to domain-specific tasks such as fringe projection profilometry for single-shot phase recovery. For instance, a recent study55 proposed a deep learning-based single-shot composite fringe phase recovery method that uses multi-attention modules to extract phase information efficiently from a single composite fringe image. The network combines parallel phase-shifting theory with attention-guided feature extraction to focus on the spatial regions and channel features most relevant to accurate phase reconstruction. This shows that attention mechanisms can improve not only general visual tasks but also the efficiency and accuracy of specialized imaging applications.
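As a minimal sketch of the SE block described above, the NumPy code below applies the squeeze step (global average pooling) and the excitation step (two fully connected layers with a ReLU and a sigmoid) to one feature map of shape (C, H, W). The reduction ratio `r`, the random weights, and the omission of biases are illustrative assumptions, not details taken from the cited work.

```python
import numpy as np

def squeeze_excitation(x, w1, w2):
    """SE reweighting of one feature map x with shape (C, H, W).

    w1 has shape (C // r, C) and w2 has shape (C, C // r) for a reduction ratio r;
    biases are omitted to keep the sketch short.
    """
    z = x.mean(axis=(1, 2))                       # squeeze: one descriptor per channel
    s = np.maximum(w1 @ z, 0.0)                   # excitation: FC + ReLU
    weights = 1.0 / (1.0 + np.exp(-(w2 @ s)))     # FC + sigmoid -> weights in (0, 1)
    return x * weights[:, None, None], weights    # rescale each channel

rng = np.random.default_rng(0)
C, r = 8, 2
x = rng.standard_normal((C, 16, 16))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
y, weights = squeeze_excitation(x, w1, w2)
print(y.shape, weights.round(3))
```

Because the weights depend only on the pooled descriptor, every pixel of a channel is scaled by the same factor; CBAM adds a spatial map on top of this to vary the weighting across positions.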

SISR-related vision tasks

In recent years, amid the rapid evolution of image SR, researchers have shown great interest in application scenarios closely related to image super-resolution, including medical image reconstruction and surveillance image reconstruction. Among these, medical image reconstruction is one of the most attractive. On the one hand, medical imaging offers distinct advantages, including low radiation exposure, minimal injury, low latency, and non-invasiveness. On the other hand, most medical images have inherent limitations such as low definition, low resolution, low contrast, low gray values, and low quality. Therefore, medical image super-resolution (MISR) reconstruction23 is an essential step before the subsequent processing, analysis, classification, recognition, and interpretation of medical images. Super-resolution reconstruction of magnetic resonance (MR) images is an attractive research direction within medical image reconstruction.

Traditional magnetic resonance imaging (MRI) faces challenges such as low acceleration factors, long scanning times, difficult parameter selection, and high computational complexity. To improve MR image quality, Wang et al.24 first introduced deep learning into fast MRI reconstruction, training a convolutional neural network offline on high-quality MR images; this approach has attracted wide attention and recognition in the academic community. Schlemper et al.25 proposed an end-to-end deep network composed of CNN cascades. The network reconstructs the undersampled input MR image with CNN modules and incorporates k-space information into the network through a data consistency module to aid reconstruction. These two modules are connected alternately in a cascade to achieve high-speed offline reconstruction. Wang et al.26 constructed DeepcomplexMRI, a multi-channel image reconstruction model based on a residual complex convolutional neural network. The model uses real images as training data, removing the need for prior information such as coil sensitivity. By exploiting the correlation between the real and imaginary parts of MR images and continuously enforcing k-space data consistency, it achieves multi-channel image reconstruction that is more accurate than traditional methods. Shi et al.27 proposed a progressive wide residual network with fixed skip connections (FSCWRN), which combines global residual learning with local residual learning on a shallow network. Compared with deep network structures, FSCWRN effectively mitigates structural degradation by gradually increasing the network width. Its fixed connection mechanism transfers high-frequency local details from the shallow layers to subsequent layers, providing sufficient information for medical image SR reconstruction.

In this study, we further demonstrate the versatility of our ESDAN by applying it to various SISR-related vision tasks.

Methods

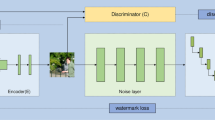

The overall framework of ESDAN. The images are sourced from a publicly available dataset: DIV2K (https://data.vision.ee.ethz.ch/cvl/DIV2K/). The dataset is available for non-commercial use and requires only citation of the corresponding papers.

Network architecture

The ESDAN architecture proposed in this paper is shown in Fig. 1. It is mainly composed of a \(3 \times 3\) convolution layer, a deep feature extraction block (DFEB) built from stacked SEMs, and a DAU. Let the input LR image be \({I_{LR}}\). \({I_{LR}}\) first passes through a \(3 \times 3\) convolution layer:

\[{F_0} = {f_{{\rm{SFE}}}}({I_{LR}})\]

where \({f_{{\rm{SFE}}}}( \cdot )\) is the first convolution layer and \({F_0}\) is the shallow feature map extracted by this \(3\times3\) convolution layer. \({F_0}\) is then sent to the DFEB to extract deep features. The DFEB contains \(I\) SEMs, and the output of the \(i\)th SEM can be described as:

\[{F_i} = f_{SEM}^i({F_{i - 1}}),\quad i = 1,2,...,I\]

where \(f_{SEM}^i( \cdot )\) represents the \(i\)th SEM, and \({F_{i - 1}}\) and \(F_i\) are the output features of the \((i - 1){\rm{th}}\) and \(i\)th SEMs, respectively. The shallow feature map \(F_0\) and the deep feature map \(F_I\) produced by the last SEM are then fed into the DAU to reconstruct the target residual image \({I_{S{R^\prime }}}\):

\[{I_{S{R^\prime }}} = {f_{DAU}}({F_0},{F_I})\]

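where \({f_{DAU}}( \cdot )\) stands for the DAU. The final output of the model is the target SR image \({I_{S{R}}}\), obtained by adding the residual image to the bilinearly upsampled input:

\[{I_{SR}} = {I_{S{R^\prime }}} + {f_{Bi}}({I_{LR}})\]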

where \({f_{Bi}}( \cdot )\) is the bilinear interpolation function and \({I_{L{R}}}\) is the input LR image of the model.
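The top-level data flow (shallow \(3 \times 3\) convolution, \(I\) stacked SEMs, DAU, and the bilinear global residual) can be traced end to end with the NumPy sketch below. Bilinear upsampling uses half-pixel centres, the common `align_corners=False` convention; that ESDAN uses this convention is an assumption. The SEM and DAU used here are hypothetical stand-ins that only exercise the data flow; they are not the paper’s modules.

```python
import numpy as np

def conv3x3(x, w):
    """Zero-padded ('same') 3x3 convolution. x: (C_in, H, W), w: (C_out, C_in, 3, 3)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for i in range(3):
        for j in range(3):
            # Contribution of kernel tap (i, j), summed over input channels.
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out

def bilinear_matrix(n_in, s):
    """(n_in * s, n_in) interpolation matrix with half-pixel centres (align_corners=False)."""
    n_out = n_in * s
    src = np.clip((np.arange(n_out) + 0.5) / s - 0.5, 0, n_in - 1)
    lo = np.floor(src).astype(int)
    hi = np.minimum(lo + 1, n_in - 1)
    frac = src - lo
    m = np.zeros((n_out, n_in))
    m[np.arange(n_out), lo] += 1 - frac
    m[np.arange(n_out), hi] += frac
    return m

def bilinear_upsample(x, s):
    """f_Bi: upsample every channel of x (C, H, W) by an integer factor s."""
    mh, mw = bilinear_matrix(x.shape[1], s), bilinear_matrix(x.shape[2], s)
    return np.einsum("ph,chw,qw->cpq", mh, x, mw)

def esdan_forward(i_lr, w_sfe, sem_blocks, dau, s):
    f0 = conv3x3(i_lr, w_sfe)                     # F_0 = f_SFE(I_LR)
    f = f0
    for sem in sem_blocks:                        # F_i = f_SEM^i(F_{i-1}), i = 1..I
        f = sem(f)
    i_sr_res = dau(f0, f)                         # I_SR' = f_DAU(F_0, F_I)
    return i_sr_res + bilinear_upsample(i_lr, s)  # I_SR = I_SR' + f_Bi(I_LR)

# Hypothetical stand-ins: each "SEM" is a residual 3x3 conv, and the "DAU" is a
# 1x1 projection to 3*s*s channels followed by a pixel shuffle.
rng = np.random.default_rng(0)
C, s = 16, 2
w_sfe = rng.standard_normal((C, 3, 3, 3)) * 0.1
sem_ws = [rng.standard_normal((C, C, 3, 3)) * 0.01 for _ in range(4)]
sems = [lambda f, w=w: f + conv3x3(f, w) for w in sem_ws]
w_proj = rng.standard_normal((3 * s * s, C)) * 0.01

def toy_dau(f0, f_deep):
    y = np.einsum("oc,chw->ohw", w_proj, f0 + f_deep)
    _, h, wd = y.shape
    return y.reshape(3, s, s, h, wd).transpose(0, 3, 1, 4, 2).reshape(3, h * s, wd * s)

i_lr = rng.random((3, 8, 8))
i_sr = esdan_forward(i_lr, w_sfe, sems, toy_dau, s)
print(i_sr.shape)  # (3, 16, 16)
```

If the DAU output were identically zero, the model would reduce to plain bilinear upsampling, which is why the network only has to learn the residual detail.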

For ease of understanding, the pseudocode of the algorithm is given in Table 2, divided into Module 1, Module 2, and Total Framework 3. For brevity, we abbreviate the convolution operation as CO, the selective mutual attention module as SM, the concatenation operation as CON, global average pooling as GA, global max pooling as GM, the sigmoid operation as SIG, the ReLU operation as RE, sub-pixel convolution as SPC, and the bilinear operation as BI.

Sharpening enhancement module

SEM consists of an ADFS, an MWFE, a \(3 \times 3\) convolution, and a skip connection, as depicted in Fig. 1.

To evaluate the effectiveness of the SEM, we compare it with the IMDB module in IMDN and the SC-PA module in PAN, as illustrated in Fig. 2. IMDB achieves a lightweight design through channel splitting; however, its feature interaction is relatively limited and it lacks global context modeling. SC-PA enhances texture details via pixel attention, but it focuses primarily on local features and has limited capability to capture global structural information. In contrast, the SEM incorporates the multi-way feature enhancement module (MWFE), which integrates local convolutional features with global statistical information (GAP and GMP), along with multiple feature re-calibration operations that enrich feature interactions. By remaining compact while considering both global and local information, SEM achieves improved reconstruction performance in complex scenarios.

Attention-driven feature sharpening module (ADFS). As depicted in Fig. 1, the ADFS contains two branches. The upper branch consists of two \(3 \times 3\) convolutions with an SMAM between them. Based on an adaptive selective attention mechanism, SMAM weights features at different locations accurately and selectively through mutual attention and regulation of the input features, which improves the ability of ADFS to extract and represent features. The lower branch consists of two \(3 \times 3\) convolutions and extracts high-level feature representations from the input features. Finally, the outputs of the two branches are combined to increase the contrast of the feature map and highlight edge features, which indirectly realizes feature sharpening.

To keep the model lightweight, SMAM introduces no convolution operations and instead uses simple statistics to compute the correlations between different locations within the feature tensor. Let the input feature map be \(X \in {{\rm{R}}^{{\rm{B}} \times C \times H \times W}}\). First, the spatial mean \(\mu\) of each channel and the squared deviation \(\Delta\) of each pixel from that mean are calculated:

Then the channel variance is approximated as:

A small regularization term \(\epsilon\) is introduced for numerical stability, and the attention weight is formulated as:

where \(\sigma ( \cdot )\) represents a sigmoid function.

Finally, the output \(Z\) of SMAM is obtained by multiplying the attention weighting coefficient with the input \(X\):

where \({f_{3 \times 3}}( \cdot )\) represents a \(3 \times 3\) convolutional function and \(\odot\) represents element-wise multiplication.
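The exact SMAM formulas are not reproduced in this text. As a hedged illustration only, the sketch below assumes a parameter-free form in the style of SimAM (a published parameter-free attention module) that matches the description: a per-channel spatial mean \(\mu\), a squared deviation \(\Delta\), a variance estimate normalized by \(HW - 1\), an energy term regularized by \(\epsilon\), and a sigmoid gate multiplied with \(X\). The constants 4 and 0.5, the value of `eps`, and the omission of the \(3 \times 3\) convolution mentioned above are all assumptions.

```python
import numpy as np

def smam_sketch(x, eps=1e-4):
    """Parameter-free, SimAM-style attention for x of shape (B, C, H, W). Assumed form."""
    n = x.shape[2] * x.shape[3] - 1
    mu = x.mean(axis=(2, 3), keepdims=True)            # spatial mean per channel
    delta = (x - mu) ** 2                              # squared deviation of each pixel
    var = delta.sum(axis=(2, 3), keepdims=True) / n    # channel variance estimate
    energy = delta / (4.0 * (var + eps)) + 0.5         # larger for pixels far from the mean
    attn = 1.0 / (1.0 + np.exp(-energy))               # sigmoid gate
    return x * attn, attn                              # Z = X (element-wise) attention

x = np.zeros((1, 1, 4, 4))
x[0, 0, 1, 1] = 5.0   # a single pixel that stands out from its channel
z, attn = smam_sketch(x)
print(attn[0, 0].round(3))
```

Pixels that deviate strongly from their channel mean, such as edges, receive the largest gate values, which is consistent with the contrast-enhancing role the text assigns to SMAM.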

The output of the ADFS in the \(i\)th (\(i = 1,2,...,I\)) SEM can be described as:

where \({f_{1 \times 1}}( \cdot )\) represents a \(1 \times 1\) convolutional function used to adjust the number of channels of the output \({F_{i - 1}}\) of the \((i - 1){\rm{th}}\) SEM, \({f_{SMAM}}( \cdot )\) stands for SMAM, and \({f_{3 \times 3}}( \cdot )\) represents a \(3 \times 3\) convolutional function. \({F_{i,ADFS}}\) is the output of the ADFS module and the input of the MWFE module in the \(i\)th SEM.

Multi-way feature enhancement module (MWFE). The structure of the MWFE is shown in Fig. 3. MWFE uses three branches that focus on the global and local characteristics of the input features, allowing the module to examine the input features from multiple perspectives. The outputs of the three branches are then added to obtain a weight for each channel of the input feature. These weights are used to capture key features and enhance important information, providing richer and more accurate information to the rest of the network.

For the MWFE module in the \(i\)th (\(i = 1,2,...,I\)) SEM, the weight \(W\) of the three branches is:

where \(\sigma ( \cdot )\) represents a sigmoid function, \({f_{1 \times 1}}( \cdot )\) represents a \(1 \times 1\) convolutional function, \({f_{GAP}}( \cdot )\) represents a global average pooling function, and \({f_{GMP}}( \cdot )\) represents a global max pooling function. The output \({F_{i,MWFE}}\) of the \(i\)th MWFE module can be described as:

where \(\odot\) represents element-wise multiplication.
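Since the weight equation is not reproduced here, the sketch below assumes one plausible reading of the three-branch design: a position-wise \(1 \times 1\) convolution as the local branch, and \(1 \times 1\) convolutions (here \(C \times C\) matrices) applied to the GAP and GMP descriptors as the two global branches. The three outputs are summed with broadcasting, passed through a sigmoid, and multiplied element-wise with the input. The branch composition, weight shapes, and random values are hypothetical.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def mwfe_sketch(f, w_local, w_avg, w_max):
    """f: (C, H, W); each w_* is a (C, C) matrix acting as a 1x1 convolution."""
    local = np.einsum("oc,chw->ohw", w_local, f)          # local branch, keeps H x W
    avg = (w_avg @ f.mean(axis=(1, 2)))[:, None, None]    # global branch on the GAP descriptor
    mx = (w_max @ f.max(axis=(1, 2)))[:, None, None]      # global branch on the GMP descriptor
    w = sigmoid(local + avg + mx)                         # summed branch outputs -> weights
    return f * w, w                                       # F_MWFE = F (element-wise) W

rng = np.random.default_rng(1)
C = 8
f = rng.standard_normal((C, 12, 12))
w_local, w_avg, w_max = (rng.standard_normal((C, C)) * 0.1 for _ in range(3))
out, w = mwfe_sketch(f, w_local, w_avg, w_max)
print(out.shape, float(w.min()), float(w.max()))
```

Combining a spatially varying local term with per-channel global terms is one way to "capture key features" while "preserving local details", as the text describes.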

Dual attention upsampling module

Popular upsampling modules consist of a \(3 \times 3\) convolutional layer and a sub-pixel convolutional layer, where the \(3 \times 3\) convolutional layer changes the number of feature channels to \(3 \times {s^2}\) (\(s\) is the scale factor of the model) for the subsequent sub-pixel convolutional layer. Although this design has achieved remarkable results in current lightweight methods11,17, this paper proposes the DAU, shown in Fig. 4, to better exploit the shallow and deep features extracted by the model.
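For reference, the conventional upsampler described above can be sketched in NumPy: a \(3 \times 3\) convolution maps the features to \(3 \times s^2\) channels, and a sub-pixel (pixel-shuffle) rearrangement turns each group of \(s^2\) channels into an \(s \times s\) block of output pixels. The channel ordering follows PyTorch’s `torch.nn.PixelShuffle`; the feature width and random weights are illustrative.

```python
import numpy as np

def conv3x3(x, w):
    """Zero-padded 3x3 convolution. x: (C_in, H, W), w: (C_out, C_in, 3, 3)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for i in range(3):
        for j in range(3):
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out

def pixel_shuffle(x, s):
    """(C*s*s, H, W) -> (C, H*s, W*s) with out[c, h*s+i, w*s+j] = x[c*s*s + i*s + j, h, w]."""
    cs2, h, wd = x.shape
    c = cs2 // (s * s)
    return x.reshape(c, s, s, h, wd).transpose(0, 3, 1, 4, 2).reshape(c, h * s, wd * s)

def plain_upsampler(feat, w, s):
    # 3x3 conv to 3*s*s channels, then rearrange into 3 channels at s times the size.
    return pixel_shuffle(conv3x3(feat, w), s)

rng = np.random.default_rng(2)
s, C = 3, 16
feat = rng.standard_normal((C, 10, 10))
w = rng.standard_normal((3 * s * s, C, 3, 3)) * 0.05
print(plain_upsampler(feat, w, s).shape)  # (3, 30, 30)
```

The rearrangement itself has no parameters; all learning happens in the preceding convolution, which is why the module is cheap but cannot adaptively weight shallow against deep features.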

To demonstrate the effectiveness of the proposed DAU module, we conduct a comparative analysis with representative upsampling structures from IMDN and PAN, as illustrated in Fig. 5. The IMDN module, based on 3 × 3 convolutions and sub-pixel rearrangement, achieves high efficiency but lacks adaptive feature fusion and attention guidance, limiting its ability to recover high-frequency details. The PAN module enhances feature refinement via pixel attention; however, its static weighting scheme and considerable computational overhead impede its deployment in lightweight scenarios. In contrast, the DAU module employs a dynamic attention mechanism to adaptively integrate shallow and deep features, and leverages global average pooling for multi-scale information aggregation, thereby significantly enhancing feature representation and reconstruction fidelity.

In DAU, the input shallow feature \({F_0}\) and deep feature \({F_I}\) are first summed to obtain the fusion feature \(F\). \(F\) then passes through a local attention branch and a global attention branch to obtain the local channel attention \({F_l}\) and the global channel attention \({F_g}\). The local and global channel attention are fused, and an activation function is applied to obtain the weight \(W\). The feature \({F_{up}}\) is then computed as a weighted combination of \({F_I}\) and \({F_0}\) using \(W\). Finally, \({F_{up}}\) is passed through the sub-pixel convolutional layer to obtain the output \({I_{S{R^\prime }}}\). The process of DAU can be described as follows:

where \({f_{1 \times 1}}( \cdot )\) represents a \(1 \times 1\) convolution, \({f_{GAP}}( \cdot )\) represents a global average pooling operation, \(\sigma ( \cdot )\) is a sigmoid function, and \({f_{up}}( \cdot )\) represents a sub-pixel convolutional layer.
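The DAU equations are not reproduced in this text, so the following is only a sketch under stated assumptions: the local branch is a pointwise stack (\(1 \times 1\) convolution, ReLU, \(1 \times 1\) convolution) that keeps the spatial size, the global branch applies the same kind of stack to the GAP descriptor, the fused weight is \(W = \sigma(F_l + F_g)\), and \(F_{up} = W \odot F_I + (1 - W) \odot F_0\), a complementary weighting in the style of attentional feature fusion. A \(1 \times 1\) projection to \(3s^2\) channels stands in for the convolution inside the sub-pixel layer, and the reduction ratio `r` is hypothetical.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def pointwise(w, x):
    """1x1 convolution: w (C_out, C_in) applied at every pixel of x (C_in, H, W)."""
    return np.einsum("oc,chw->ohw", w, x)

def pixel_shuffle(x, s):
    cs2, h, wd = x.shape
    c = cs2 // (s * s)
    return x.reshape(c, s, s, h, wd).transpose(0, 3, 1, 4, 2).reshape(c, h * s, wd * s)

def dau_sketch(f0, f_deep, p, s):
    f = f0 + f_deep                                                    # fusion feature F
    f_l = pointwise(p["l2"], np.maximum(pointwise(p["l1"], f), 0.0))   # local attention F_l
    g = f.mean(axis=(1, 2))                                            # GAP descriptor, (C,)
    f_g = (p["g2"] @ np.maximum(p["g1"] @ g, 0.0))[:, None, None]      # global attention F_g
    w = sigmoid(f_l + f_g)                                             # fused weight W
    f_up = w * f_deep + (1.0 - w) * f0                                 # assumed combination
    return pixel_shuffle(pointwise(p["up"], f_up), s), w               # stand-in sub-pixel layer

rng = np.random.default_rng(3)
C, r, s = 16, 4, 2
p = {
    "l1": rng.standard_normal((C // r, C)) * 0.1, "l2": rng.standard_normal((C, C // r)) * 0.1,
    "g1": rng.standard_normal((C // r, C)) * 0.1, "g2": rng.standard_normal((C, C // r)) * 0.1,
    "up": rng.standard_normal((3 * s * s, C)) * 0.1,
}
f0, f_deep = rng.standard_normal((2, C, 8, 8))
out, w = dau_sketch(f0, f_deep, p, s)
print(out.shape)  # (3, 16, 16)
```

Unlike the fixed upsampler, the weight \(W\) here varies per channel and per pixel, so the sketch can lean on shallow features in some regions and deep features in others.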

Experiments

Experiment datasets

The public DIV2K and Flickr2K28 datasets were used for training: 3450 images in total at 2K resolution, of which DIV2K contributes 800 and Flickr2K 2650, covering natural scenery, animals, people, daily-life scenes, works of art, and other content. In addition, 20 images were selected from the DIV2K validation set to form the Test-20 validation set used in subsequent experiments. Bicubic interpolation was used to downsample the HR images in these datasets to obtain LR images at different scale factors. The training set was augmented with 90-degree, 180-degree, and 270-degree rotations and horizontal flips. For testing, five classic benchmark datasets were used: Set529, Set1430, B10031, Urban10032, and Manga10933. Model performance was evaluated with two common criteria, peak signal-to-noise ratio (PSNR) and the structural similarity index (SSIM), both computed on the luminance (Y) channel of the YCbCr color space.
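The Y-channel evaluation described above can be sketched as follows. The RGB-to-Y conversion uses the ITU-R BT.601 coefficients commonly used in SR benchmarks (Y in [16, 235] for 8-bit input). The optional `border` crop, often set to the scale factor in SR evaluation, is an assumption, because the text does not state whether image borders are excluded. SSIM is omitted for brevity.

```python
import numpy as np

def rgb_to_y(img):
    """Luma of ITU-R BT.601 YCbCr for an (H, W, 3) RGB array in [0, 255]; Y lies in [16, 235]."""
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    return 16.0 + (65.481 * r + 128.553 * g + 24.966 * b) / 255.0

def psnr_y(sr, hr, border=0):
    """PSNR (dB) between two RGB images, computed on the Y channel only."""
    y_sr = rgb_to_y(sr.astype(np.float64))
    y_hr = rgb_to_y(hr.astype(np.float64))
    if border > 0:
        # Optional: ignore `border` pixels on each side (assumed convention, e.g. border = scale).
        y_sr = y_sr[border:-border, border:-border]
        y_hr = y_hr[border:-border, border:-border]
    mse = np.mean((y_sr - y_hr) ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(255.0 ** 2 / mse))

rng = np.random.default_rng(4)
hr = rng.integers(0, 246, size=(32, 32, 3)).astype(np.float64)
sr = hr + 10.0  # every RGB value off by 10 grey levels
print(round(psnr_y(sr, hr), 2), psnr_y(hr, hr))
```

Because the Y coefficients sum to 219/255, an error of 10 grey levels in every RGB channel becomes an error of about 8.6 in Y, so Y-channel PSNR is not the same as RGB PSNR for the same images.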

Experiment settings

During training, the output (ground-truth) patch size GT_size was set to 256 × 256 and the batch size batch_size to 32. The model was trained with the L1 loss function34 and the Adam optimizer35, with \({\beta _1} = 0.9\), \({\beta _2} = 0.99\), and \(\varepsilon = {10^{ - 8}}\). A cosine annealing strategy was used to accelerate training by adjusting the learning rate dynamically, starting from an initial maximum of 7e-4; as iterations progress, the learning rate decays along a cosine curve rather than in fixed steps. The minimum learning rate was 10e-7, and the cosine period was 250K iterations. The algorithm was implemented in PyTorch on an NVIDIA GeForce RTX 3090 GPU under Ubuntu, with development carried out in the PyCharm integrated development environment (IDE).
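The learning-rate curve described above follows the standard cosine-annealing formula \(\eta_t = \eta_{min} + \tfrac{1}{2}(\eta_{max} - \eta_{min})(1 + \cos(\pi t / T))\). The minimal sketch below covers one 250K-iteration period, takes the minimum rate as a parameter (note that `10e-7`, read literally as written in the text, equals \(10^{-6}\)), and holds the minimum after one period, since whether warm restarts follow is not stated. Within one period this is the same closed-form curve documented for PyTorch’s `torch.optim.lr_scheduler.CosineAnnealingLR` with `T_max` and `eta_min`.

```python
import math

def cosine_lr(step, lr_max, lr_min, period):
    """Cosine-annealed learning rate: lr_max at step 0, lr_min at step >= period."""
    t = min(step, period) / period       # fraction of the period completed, clamped to 1
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * t))

# Values from the text: maximum 7e-4, minimum written as 10e-7, period 250K iterations.
for step in (0, 62_500, 125_000, 250_000):
    print(step, cosine_lr(step, lr_max=7e-4, lr_min=10e-7, period=250_000))
```

The rate stays near its maximum early on, falls fastest around the middle of the period, and flattens out as it approaches the minimum.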

Ablation analysis

To demonstrate the validity of ADFS, MWFE, and DAU, eight ablation experiments were designed. For comparison, we first designed simplified versions of the ADFS and DAU modules, called ADFS_S and DAU_S: in ADFS_S, SMAM is replaced by pixel attention36, and in DAU_S, the dual attention fusion is replaced by pixel-wise summation. The baseline is ESDAN with MWFE removed and with ADFS and DAU replaced by ADFS_S and DAU_S. The other variants add MWFE to the baseline, replace ADFS_S with ADFS, replace DAU_S with DAU, or combine these modifications. Table 3 presents the ×4 PSNR results of the different models on the Test-20 validation set. The baseline PSNR, listed in column 2 of Table 3, is only 29.311 dB. As columns 3 to 5 of Table 3 show, adding ADFS, DAU, or MWFE individually to the baseline improves the PSNR. As columns 6 to 8 show, adding any two of ADFS, DAU, and MWFE improves the PSNR further. The highest PSNR (29.391 dB), in column 9 of Table 3, results from integrating all three of ADFS, DAU, and MWFE into the baseline, an increase of 0.08 dB over the baseline model.
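The eight ablation variants form a full \(2^3\) factorial design over three switches (ADFS_S or ADFS, DAU_S or DAU, and MWFE absent or present). The short sketch below enumerates that design and checks the reported gain; its enumeration order is illustrative and need not match the column order of Table 3.

```python
from itertools import product

modules = ("ADFS", "DAU", "MWFE")
# False keeps the simplified/absent component (ADFS_S, DAU_S, no MWFE); True uses the full one.
variants = [dict(zip(modules, flags)) for flags in product((False, True), repeat=len(modules))]
for v in variants:
    print(" + ".join(m for m in modules if v[m]) or "baseline")

gain_db = 29.391 - 29.311    # full model minus baseline on Test-20 (x4), from Table 3
print(f"gain: {gain_db:.2f} dB")
```

The design yields one baseline, three single-module variants (columns 3 to 5), three two-module variants (columns 6 to 8), and the full model (column 9).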

Experiments on SEM

Experiments on ADFS

To substantiate the effectiveness of the ADFS module, four comparative experiments were conducted. In the first, ESDAN did not include ADFS, giving a model called ADFS_0. In the second, the SMAM in ADFS was replaced with channel attention20, giving ADFS_1. In the third, the SMAM in ADFS was replaced with pixel attention36, giving ADFS_2. The fourth is the full ESDAN model with ADFS, referred to as ADFS (ESDAN). Table 4 presents the ×4 PSNR results of these models on the Test-20 validation set. The results show that the proposed ADFS is effective and that, within ADFS, SMAM outperforms the commonly used channel attention and pixel attention.

To show the effectiveness of ADFS more intuitively, we further analyzed heat maps of the feature maps; the results are shown in Fig. 6. The highlighted areas in the heat maps show the ability of ADFS to enhance feature contrast and highlight edges, further substantiating the validity of the proposed ADFS.

ADFS heatmaps. The first and fourth columns show the input images, the second and fifth columns show the heatmaps without the ADFS module, and the third and sixth columns show the heatmaps after applying ADFS. These images are from benchmark datasets including Set5, Set14, and Urban100 and correspond to the ×2 super-resolution process. The images are sourced from publicly available datasets: DIV2K (https://data.vision.ee.ethz.ch/cvl/DIV2K/) and Flickr2K (https://github.com/limbee/NTIRE2017). All datasets are available for non-commercial use and require only citation of the corresponding papers.

Experiments on MWFE

To substantiate the effectiveness of the MWFE module, three comparative experiments were conducted. In the first, MWFE was not included in the SEM of ESDAN, giving a model named MWFE_0. In the second, MWFE was replaced with the channel attention module20, giving MWFE_1. In the third, MWFE was incorporated into SEM, and this model was called MWFE (ESDAN). Table 5 shows the ×4 PSNR results of these models on the Test-20 validation set. The MWFE proposed in this paper is effective and outperforms the widely used channel attention.

To show the effectiveness of MWFE more intuitively, we further analyzed heat maps of the feature maps; the results are shown in Fig. 7. The highlighted areas in the heat maps show MWFE’s ability to enhance key information and retain local details, further substantiating the effectiveness of the proposed MWFE.

MWFE heatmaps. The first and fourth columns show the input images, the second and fifth columns show the heatmaps without the MWFE module, and the third and sixth columns show the heatmaps after applying MWFE. These images are from benchmark datasets including Set5, Set14, and Urban100 and correspond to the ×2 super-resolution process. The images are sourced from publicly available datasets: DIV2K (https://data.vision.ee.ethz.ch/cvl/DIV2K/) and Flickr2K (https://github.com/limbee/NTIRE2017). All datasets are available for non-commercial use and require only citation of the corresponding papers.

Comparison with state-of-the-art methods

To assess the capability of ESDAN, we compared it with several classical and advanced lightweight SR methods in terms of both objective quantitative metrics and subjective visual quality. For the objective evaluation, ESDAN was compared with 14 algorithms, including SRCNN3, DRRN6, LapSRN37, IDN38, IMDN17, DRCN15, CARN16, ShuffleMixer40, MLRN44, SAFMN51, CFM39, and DAFEN52, at magnification factors of ×2, ×3, and ×4 on five standard benchmark test datasets. The resulting PSNR and SSIM values are listed in Table 6. Table 6 also lists Params and Mult-Adds for each method: Params is the number of model parameters, which reflects memory consumption, and Mult-Adds is the number of multiply–accumulate operations, which reflects computational complexity. For the subjective evaluation, ESDAN was compared with seven other algorithms, SRCNN3, FSRCNN14, IDN38, IMDN17, PAN36, AAF-SD42, and LAPAR-A43, at ×2, ×3, and ×4 on the standard benchmark test datasets. The results are presented in Figs. 8, 9, and 10, which show four representative images selected from the Manga109 and Urban100 datasets.
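PSNR, the main objective metric in Table 6, is defined as 10·log10(MAX²/MSE) between the reference HR image and the reconstruction. The sketch below computes it for grayscale images held in nested lists. SR benchmarks commonly evaluate on the luminance (Y) channel and crop a border of `scale` pixels before comparison; the `border` parameter models that convention, but whether ESDAN's evaluation used exactly these settings is an assumption here.

```python
import math


def psnr(reference, estimate, max_val=255.0, border=0):
    """PSNR in dB between two equally sized 2-D images given as nested lists.

    `border` pixels are cropped on every side first; setting it to the scale
    factor mirrors a common SR evaluation convention (assumed, not confirmed).
    """
    h, w = len(reference), len(reference[0])
    squared_error, count = 0.0, 0
    for i in range(border, h - border):
        for j in range(border, w - border):
            d = reference[i][j] - estimate[i][j]
            squared_error += d * d
            count += 1
    mse = squared_error / count
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_val ** 2 / mse)
```

Because PSNR is logarithmic in MSE, the 1.03 dB gain over IDN quoted for ×4 SR corresponds to roughly a 21% lower mean squared error (10^(-0.103) ≈ 0.79).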

Table 6 compares the objective quantitative results for ×2, ×3, and ×4 super-resolution. The proposed model attained among the highest PSNR and SSIM scores while keeping Mult-Adds and Params low. Compared with IDN, ESDAN improved PSNR by up to 1.03 dB and SSIM by up to 0.01 for ×4 super-resolution, while reducing Params by 2% and Mult-Adds by 42%. For ×3 SR, the maximum improvements were 1.14 dB in PSNR and 0.0188 in SSIM; for ×2 SR, they were 1.31 dB in PSNR and 0.0265 in SSIM. MLRN and ESDAN have similar Params and Mult-Adds, yet ESDAN consistently outperformed MLRN in PSNR and SSIM at every scale factor. For ×4 SR, ESDAN achieved a maximum PSNR gain of 0.16 dB and a maximum SSIM gain of 0.003; for ×3 SR, PSNR increased by 0.19 dB and SSIM by 0.0014; and for ×2 SR, PSNR increased by 0.26 dB and SSIM by 0.0004. Compared with KRGN59, ESDAN achieves slightly lower PSNR and SSIM, but with fewer Params and Mult-Adds: for ×4 SR, KRGN requires 621 Params and 59 Mult-Adds, whereas ESDAN requires 554 Params and 26.1 Mult-Adds (in the units reported in Table 6). These data indicate that ESDAN is an effective lightweight SR model.
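The KRGN comparison above can be expressed as relative savings. The short calculation below uses only the ×4 figures quoted in the text; the helper name `relative_reduction` is illustrative.

```python
def relative_reduction(baseline, ours):
    """Percentage by which `ours` is smaller than `baseline`."""
    return 100.0 * (baseline - ours) / baseline


# x4 SR complexity figures quoted in the text (units as reported in Table 6).
krgn = {"Params": 621, "Mult-Adds": 59.0}
esdan = {"Params": 554, "Mult-Adds": 26.1}

for metric in ("Params", "Mult-Adds"):
    print(f"{metric}: {relative_reduction(krgn[metric], esdan[metric]):.1f}% lower")
```

This gives about 10.8% fewer Params and 55.8% fewer Mult-Adds than KRGN, which is the trade made in exchange for KRGN's slightly higher PSNR and SSIM.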

Figures 8, 9, and 10 show visual comparisons between the proposed method and leading lightweight super-resolution models on the benchmark datasets. Specifically, we selected a series of images from the Urban100 dataset and compared ESDAN with other advanced algorithms at magnification factors of ×2, ×3, and ×4. The results indicate that ESDAN produces fewer reconstruction artifacts and recovers details such as buildings and clothing more accurately.

Visual comparison of ×2 super-resolution. The images are sourced from publicly available datasets: DIV2K (https://data.vision.ee.ethz.ch/cvl/DIV2K/) and Flickr2K (https://github.com/limbee/NTIRE2017). All datasets are available for non-commercial use and require only citation of the corresponding papers.

Visual comparison of ×3 super-resolution. The images are sourced from publicly available datasets: DIV2K (https://data.vision.ee.ethz.ch/cvl/DIV2K/) and Flickr2K (https://github.com/limbee/NTIRE2017). All datasets are available for non-commercial use and require only citation of the corresponding papers.

Visual comparison of ×4 super-resolution. The images are sourced from publicly available datasets: DIV2K (https://data.vision.ee.ethz.ch/cvl/DIV2K/) and Flickr2K (https://github.com/limbee/NTIRE2017). All datasets are available for non-commercial use and require only citation of the corresponding papers.

Analysis of network computational complexity

Four key metrics for assessing model complexity are multiply–accumulate operations (Mult-Adds), the number of parameters, floating-point operations (FLOPs), and inference time. Table 7 compares the proposed model with two other advanced lightweight models on these four metrics. FLOPs were computed for an input image resolution of 256 × 256, and inference time was measured on two large test sets, B100 and Urban100.
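Params and Mult-Adds of a convolutional network are usually obtained by summing per-layer counts. The sketch below does this for 2-D convolutions, assuming "same" padding. Conventions differ between papers: some report FLOPs as 2 × MACs (one multiply plus one add), while others use "FLOPs" for the MAC count itself, so the factor of 2 printed here is an assumption, not necessarily the convention used in Table 7. The three-layer network is hypothetical and is not ESDAN.

```python
def conv2d_cost(h, w, c_in, c_out, k, stride=1, groups=1, bias=True):
    """Parameters and multiply-accumulate operations (MACs) of one 2-D convolution.

    Assumes 'same' padding, so the output is ceil(h / stride) x ceil(w / stride).
    """
    h_out = -(-h // stride)  # ceiling division
    w_out = -(-w // stride)
    weights = c_out * (c_in // groups) * k * k
    params = weights + (c_out if bias else 0)
    macs = h_out * w_out * weights  # one MAC per weight per output position
    return params, macs


# Hypothetical three-layer body evaluated at the 256 x 256 input size used for Table 7.
layers = [(3, 48, 3, 1), (48, 48, 3, 48), (48, 48, 1, 1)]  # (c_in, c_out, k, groups)
total_params = total_macs = 0
for c_in, c_out, k, g in layers:
    p, m = conv2d_cost(256, 256, c_in, c_out, k, groups=g)
    total_params += p
    total_macs += m
print(f"params={total_params}, MACs={total_macs / 1e9:.3f} G, "
      f"FLOPs~{2 * total_macs / 1e9:.3f} G (2 x MACs convention)")
```

The second layer is depthwise (`groups=48`), which illustrates why lightweight designs favor grouped and 1 × 1 convolutions: its cost is a small fraction of that of a dense 3 × 3 layer of the same width.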

As shown in Table 7 (for detailed training settings and strategies, see Sect. Experiment Settings), although ESDAN does not have the shortest inference time, it requires fewer multiply–accumulate operations, parameters, and FLOPs than RFDN-L18 and MRDN-L41. The slightly longer inference time of ESDAN may be attributed to the multi-branch lightweight architecture and efficient attention mechanism it uses to tightly limit parameter count and FLOPs while remaining hardware-friendly: branching and attention introduce additional memory-bound operations whose latency is not reflected in FLOPs. Taken together, Tables 6 and 7 show that ESDAN achieves higher PSNR at comparable multiply–accumulate operations, parameter count, FLOPs, and inference time. This indicates that ESDAN is an effective lightweight SR model.
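Because inference time depends on hardware and measurement protocol, a reproducible timing harness matters when comparing models. The sketch below shows one common protocol under stated assumptions: a few warm-up calls, repeated timed runs over every test input, and the median as a robust summary. The function name and parameters are hypothetical, and the workload is a stand-in for a model. When timing a GPU model, the device queue must be drained (for example with `torch.cuda.synchronize()` in PyTorch) before each clock reading, because kernels launch asynchronously.

```python
import statistics
import time


def median_latency(run, inputs, warmup=2, repeats=5):
    """Median wall-clock seconds of `run(x)` over every x in `inputs`, repeated."""
    for x in inputs[:warmup]:
        run(x)  # warm-up calls absorb one-off costs (caching, lazy initialization)
    samples = []
    for _ in range(repeats):
        for x in inputs:
            start = time.perf_counter()
            run(x)
            samples.append(time.perf_counter() - start)
    return statistics.median(samples)


# Hypothetical stand-in for a model: any callable can be timed the same way.
latency = median_latency(lambda n: sum(i * i for i in range(n)), [10_000, 20_000])
print(f"median latency: {latency * 1e3:.3f} ms")
```

A FLOP count cannot capture effects such as memory traffic or the serialization of branches, which is why a harness like this can rank models differently from Table 7's FLOPs column.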

Application to SR-related vision tasks

Application to Alzheimer’s disease MR image super-resolution

Alzheimer’s Disease (AD) is a neurodegenerative disorder marked by cognitive and intellectual impairment, as well as decreased behavioral abilities. Currently, its exact cause is unknown, and there is a lack of effective treatment options. The imaging of brain structure or function provided by medical images is beneficial for the early diagnosis and exploration of the pathogenesis of AD. Super-resolution technology can enhance the resolution of existing medical images, aiding the diagnosis and research of AD.

In this experiment, we used the OASIS dataset45. We randomly selected data from 42 patients in the OASIS dataset (30 for training, 3 for validation, and 9 for testing) for ×4 SR reconstruction experiments. Each patient’s data comprise three or four T1-weighted magnetic resonance imaging scans, along with corresponding segmentation labels, of an individual in the early stages of AD. Only one scanned image (T88-111) was used for training in this experiment. To assess the versatility of the proposed model, ESDAN was compared with five popular and representative SISR methods: EDSR46, RDN54, RCAN47, SwinIR13, and RDST-E48.
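The 30/3/9 partition described above can be reproduced with a seeded random split at the patient level. Splitting by patient rather than by scan keeps all scans of one subject in a single subset and so avoids train/test leakage; this is an assumption about how the split was organized, as are the seed and the placeholder ID format.

```python
import random


def split_patients(patient_ids, n_train=30, n_val=3, n_test=9, seed=0):
    """Randomly partition patient IDs into disjoint train/val/test lists.

    Splitting by patient (not by scan or slice) keeps every scan of a subject
    in one subset. The seed value is a hypothetical choice for reproducibility.
    """
    if n_train + n_val + n_test > len(patient_ids):
        raise ValueError("not enough patients for the requested split")
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    chosen = rng.sample(list(patient_ids), n_train + n_val + n_test)
    return (chosen[:n_train],
            chosen[n_train:n_train + n_val],
            chosen[n_train + n_val:])


# Placeholder IDs; real OASIS subject identifiers would be used in practice.
train, val, test = split_patients([f"patient_{i:03d}" for i in range(1, 101)])
print(len(train), len(val), len(test))  # 30 3 9
```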

Table 8 lists the quantitative evaluation metrics of ESDAN and the other methods. ESDAN achieved the highest SSIM and the second-best PSNR while using the fewest parameters. In addition, Fig. 11 (a) shows ESDAN’s ×4 SR reconstruction results, which indicate that the proposed method effectively restores the details of brain MR images of AD patients.
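SSIM, the metric on which ESDAN ranks first in Table 8, combines luminance, contrast, and structure terms with stabilizing constants C1 = (0.01·L)² and C2 = (0.03·L)², where L is the dynamic range. Standard implementations evaluate it in sliding 11 × 11 Gaussian windows and average the local values; the sketch below simplifies this to a single window covering the whole image, so its numbers will not match Table 8 exactly.

```python
def global_ssim(x, y, max_val=255.0):
    """SSIM of two equally sized grayscale images, using one window over the whole image.

    A simplification: standard SSIM averages local values from Gaussian windows.
    """
    xs = [v for row in x for v in row]
    ys = [v for row in y for v in row]
    n = len(xs)
    mu_x, mu_y = sum(xs) / n, sum(ys) / n
    # Unbiased (N - 1) estimates, as in Wang et al.'s definition of the statistics.
    var_x = sum((a - mu_x) * (a - mu_x) for a in xs) / (n - 1)
    var_y = sum((b - mu_y) * (b - mu_y) for b in ys) / (n - 1)
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(xs, ys)) / (n - 1)
    c1 = (0.01 * max_val) ** 2  # K1 = 0.01
    c2 = (0.03 * max_val) ** 2  # K2 = 0.03
    return ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))
```

Unlike PSNR, SSIM is sensitive to structural agreement rather than raw pixel error, which is why a method can rank first on SSIM while placing second on PSNR, as reported for ESDAN.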

Application to stereo endoscopic image super-resolution

Endoscopic technology provides a visual representation of the internal structures of the human body, but sometimes the images can be limited in resolution and detail. The application of super-resolution technology allows these images to present tissue structures, lesion details, and anatomical sites more clearly, providing more precise and detailed information for medical research and clinical diagnosis.

In this experiment, we used the da Vinci dataset49, which contains 6300 pairs of stereo laparoscopic images, each 512 × 512 pixels in size. We randomly selected 80% of the samples for training and used the remaining 20% for testing. Figure 11 (b) shows visual examples of ESDAN’s SR reconstruction results. Compared with the ground-truth and corresponding low-resolution images, ESDAN reconstructs high-quality images.

Application to surveillance image super-resolution

While medical image SR focuses on accurately reconstructing single targets and their details, surveillance image SR focuses on clearly reconstructing the overall structure of scenes with rich content and multiple targets. Surveillance images are an important part of urban security. However, due to factors such as device limitations, weather, and distance, surveillance images sometimes lack sufficient detail. Applying super-resolution to these images can enhance their detail and clarity, helping staff identify key information and supporting urban security.

In this experiment, we selected images with rich scenes from the WIDER Attribute dataset50 to simulate surveillance scenarios and to validate the effectiveness of the proposed SR method in complex environments. The HR images were downsampled with bicubic interpolation to generate the corresponding LR images. An example of ESDAN’s super-resolution results is shown in Fig. 11 (c). Compared with the ground-truth and corresponding low-resolution images, the reconstructions produced by the proposed model show high clarity.
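The LR inputs here are generated by bicubic downsampling of the HR images, but the exact implementation (MATLAB `imresize`, Pillow, OpenCV) is not stated, and these libraries give slightly different results. The sketch below is a simplified, pure-Python version of MATLAB-style antialiased bicubic downsampling for grayscale images: it uses the Keys kernel with a = -0.5, stretches the kernel by the scale factor to low-pass filter, and normalizes the weights. Unlike MATLAB, it replicates border pixels instead of mirroring them, so values near the edges will differ slightly.

```python
import math


def cubic(x, a=-0.5):
    """Keys cubic convolution kernel; a = -0.5 matches MATLAB's imresize."""
    x = abs(x)
    if x <= 1:
        return (a + 2) * x ** 3 - (a + 3) * x ** 2 + 1
    if x < 2:
        return a * x ** 3 - 5 * a * x ** 2 + 8 * a * x - 4 * a
    return 0.0


def downsample_1d(signal, scale):
    """Antialiased bicubic downsampling of a 1-D list by an integer factor."""
    n_in = len(signal)
    out = []
    for i in range(n_in // scale):
        # Centre of output sample i expressed in input pixel-index coordinates.
        center = (i + 0.5) * scale - 0.5
        acc = wsum = 0.0
        # The kernel is stretched by `scale`, so its support grows to 4 * scale pixels.
        for k in range(math.floor(center - 2 * scale), math.ceil(center + 2 * scale) + 1):
            w = cubic((center - k) / scale)
            acc += w * signal[min(max(k, 0), n_in - 1)]  # replicate the border pixel
            wsum += w
        out.append(acc / wsum)  # normalize so the weights sum to 1
    return out


def bicubic_downsample(image, scale):
    """Separable downsampling of an H x W grayscale image (nested lists)."""
    rows = [downsample_1d(row, scale) for row in image]          # along the width
    cols = [downsample_1d(list(c), scale) for c in zip(*rows)]   # along the height
    return [list(r) for r in zip(*cols)]


# Hypothetical 16 x 16 HR patch reduced to 4 x 4 for x4 SR.
hr = [[float((r * 7 + c * 3) % 256) for c in range(16)] for r in range(16)]
lr = bicubic_downsample(hr, 4)
```

Matching the degradation used at test time to the one used in training matters in practice: a model trained on one bicubic implementation can lose accuracy when evaluated on LR images produced by another.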

Examples of SR reconstruction by the proposed method on datasets from three SR-related vision tasks. (a) OASIS dataset, ×4 SR. (b) da Vinci dataset, ×2 SR. (c) Surveillance images, ×4 SR. From top to bottom: ground-truth images (first row), corresponding low-resolution images (second row), and the SR reconstruction results of the proposed method (third row). The images are sourced from publicly available datasets: OASIS (https://sites.wustl.edu/oasisbrains/), da Vinci (https://github.com/hgfe/DCSSR), and WIDER Attribute (https://opendatalab.org.cn/OpenDataLab/WIDER_ATTRIBUTE). All datasets are available for non-commercial use and require only citation of the corresponding papers.

Conclusions

This paper proposes a general lightweight image super-resolution network named ESDAN. The Attention-Driven Feature Sharpening module (ADFS) optimizes feature representation; the Multi-Way Feature Enhancement module (MWFE) leverages different cues in the features to improve their expressive ability; and the Dual Attention Upsampling module (DAU) makes better use of shallow and deep features to improve the quality of SR reconstruction. Extensive experimental results demonstrate that the proposed method is both effective and competitive. ESDAN outperforms state-of-the-art lightweight methods of comparable complexity in both objective quantitative metrics and subjective visual quality, and achieves a new balance between the complexity and performance of SR reconstruction. Additionally, the model demonstrates high versatility across SR applications such as Alzheimer’s disease brain MR image SR, endoscopic image SR, and surveillance image SR.

However, the proposed model still has several limitations. First, compared with MLRN, the performance improvement is relatively modest, which indicates that there remains room for further enhancement in reconstruction quality. Second, although the model achieves a favorable trade-off between accuracy and efficiency on standard benchmarks, its scalability to ultra-high-resolution inputs may be constrained due to the increasing computational and memory costs. Finally, while the model is relatively lightweight, there is still potential for reducing model size and inference time to make it more suitable for deployment on resource-constrained devices. Future research will therefore focus on improving reconstruction capability, enhancing scalability, and further optimizing the model for real-world applications.

Data availability

The data are available from the corresponding author (zhangchuanhao981@163.co) on reasonable request.

References

Thies, J. et al. Face2face: Real-time face capture and reenactment of RGB videos. Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR). (2016).

Li, D. et al. Multi-branch-feature fusion super-resolution network. Digit. Signal Proc. 145, 104332 (2024).

Dong, C. et al. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 38 (2), 295–307 (2015).

Kim, J., Lee, J. K. & Lee, K. M. Accurate image super-resolution using very deep convolutional networks. Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR). (2016).

Dai, T. et al. Second-order attention network for single image super-resolution. Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR). (2019).

Tai, Y., Yang, J. & Liu, X. Image super-resolution via deep recursive residual network. Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR). (2017).

Chen, Z. et al. Dual aggregation transformer for image super-resolution. Proceedings of the IEEE/CVF international conference on computer vision. (2023).

Gao, D. & Zhou, D. A very lightweight and efficient image super-resolution network. Expert Syst. Appl. 213, 118898 (2023).

Huang, Y. et al. Interpretable detail-fidelity attention network for single image super-resolution. IEEE Trans. Image Process. 30, 2325–2339 (2021).

Guo, Y. et al. Closed-loop matters: Dual regression networks for single image super-resolution. Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR). (2020).

Liu, J. et al. From coarse to fine: Hierarchical pixel integration for lightweight image super-resolution. Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 37. No. 2. (2023).

Ledig, C. et al. Photo-realistic single image super-resolution using a generative adversarial network. Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR). (2017).

Liang, J. et al. SwinIR: Image restoration using swin transformer. Proceedings of the IEEE/CVF international conference on computer vision workshops (ICCVW). (2021).

Dong, C., Loy, C. C. & Tang, X. Accelerating the super-resolution convolutional neural network. Proceedings of the European conference on computer vision (ECCV). (2016).

Kim, J., Lee, J. K. & Lee, K. M. Deeply-recursive convolutional network for image super-resolution. Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR). (2016).

Ahn, N., Kang, B. & Sohn, K.-A. Fast, accurate, and lightweight super-resolution with cascading residual network. Proceedings of the European conference on computer vision (ECCV). (2018).

Hui, Z. et al. Lightweight image super-resolution with information multi-distillation network. Proceedings of the 27th ACM international conference on multimedia. (2019).

Liu, J., Tang, J. & Wu, G. Residual feature distillation network for lightweight image super-resolution. Computer vision–ECCV 2020 workshops: Glasgow, UK, August 23–28, 2020, proceedings, part III 16. Springer International Publishing, (2020).

Itti, L., Koch, C. & Niebur, E. A model of saliency-based visual attention for rapid scene analysis. IEEE Trans. Pattern Anal. Mach. Intell. 20 (11), 1254–1259 (1998).

Hu, J., Shen, L. & Sun, G. Squeeze-and-excitation networks. Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR). (2018).

Woo, S. et al. CBAM: Convolutional block attention module. Proceedings of the European conference on computer vision (ECCV). (2018).

An, C. et al. Multi-scale network via progressive multi-granularity attention for fine-grained visual classification. Appl. Soft Comput. 146, 110588 (2023).

Qiu, D., Cheng, Y. & Wang, X. Medical image super-resolution reconstruction algorithms based on deep learning: A survey. Comput. Methods Programs Biomed. 238, 107590 (2023).

Wang, S. et al. Accelerating magnetic resonance imaging via deep learning. 2016 IEEE 13th international symposium on biomedical imaging (ISBI). (2016).

Schlemper, J. et al. A deep cascade of convolutional neural networks for dynamic MR image reconstruction. IEEE Trans. Med. Imaging. 37 (2), 491–503 (2017).

Wang, S. et al. DeepcomplexMRI: exploiting deep residual network for fast parallel MR imaging with complex Convolution. Magn. Reson. Imaging. 68, 136–147 (2020).

Shi, J. et al. MR image super-resolution via wide residual networks with fixed skip connection. IEEE J. Biomedical Health Inf. 23 (3), 1129–1140 (2018).

Agustsson, E. & Timofte, R. Ntire 2017 challenge on single image super-resolution: Dataset and study. Proceedings of the IEEE conference on computer vision and pattern recognition workshops. (2017).

Bevilacqua, M. et al. Low-Complexity Single Image Super-Resolution Based on Nonnegative Neighbor Embedding. British Machine Vision Conference (BMVC), (2012).

Yang, J. et al. Image super-resolution via sparse representation. IEEE Trans. Image Process. 19 (11), 2861–2873 (2010).

Martin, D. et al. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. Proceedings Eighth IEEE International Conference on Computer Vision (ICCV). (2001).

Huang, J. B., Singh, A. & Ahuja, N. Single image super-resolution from transformed self-exemplars. Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR). (2015).

Matsui, Y. et al. Sketch-based manga retrieval using manga109 dataset. Multimedia Tools Appl. 76, 21811–21838 (2017).

Wang, Z. et al. Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13 (4), 600–612 (2004).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. International Conference on Learning Representations (ICLR), (2015).

Zhao, H. et al. Efficient image super-resolution using pixel attention. Computer Vision–ECCV 2020 Workshops: Glasgow, UK, August 23–28, 2020, Proceedings, Part III 16. Springer International Publishing, (2020).

Lai, W. S. et al. Deep laplacian pyramid networks for fast and accurate super-resolution. Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR). (2017).

Hui, Z., Wang, X. & Gao, X. Fast and Accurate Single Image Super-Resolution via Information Distillation Network. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (2018).

Wu, Z., Liu, W. & Huang, D. When Handcrafted Filter Meets CNN: A Lightweight Conv-Filter Mixer Network for Efficient Image Super-Resolution. Proceedings of the 2024 International Conference on Multimedia Retrieval. (2024).

Sun, L., Pan, J. & Tang, J. ShuffleMixer: an efficient Convnet for image super-resolution. Adv. Neural. Inf. Process. Syst. 35, 17314–17326 (2022).

Yang, X. et al. MRDN: A lightweight multi-stage residual distillation network for image Super-Resolution. Expert Syst. Appl. 204, 117594 (2022).

Wang, X. et al. Lightweight single-image super-resolution network with attentive auxiliary feature learning. Proceedings of the Asian conference on computer vision. (2020).

Li, W. et al. LAPAR: Linearly-assembled pixel-adaptive regression network for single image super-resolution and beyond. Adv. Neural. Inf. Process. Syst. 33, 20343–20355 (2020).

Gendy, G. et al. Mixer-Based Local Residual Network for Lightweight Image Super-Resolution. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). (2023).

Marcus, D. S. et al. Open access series of imaging studies (OASIS): cross-sectional MRI data in young, middle aged, nondemented, and demented older adults. J. Cogn. Neurosci. 19, 1498–1507 (2007).

Lim, B. et al. Enhanced deep residual networks for single image super-resolution. Proceedings of the IEEE conference on computer vision and pattern recognition workshops. (2017).

Zhang, Y. et al. Image super-resolution using very deep residual channel attention networks. Proceedings of the European conference on computer vision (ECCV). (2018).

Zhu, J., Yang, G. & Liò, P. A residual dense vision transformer for medical image super-resolution with segmentation-based perceptual loss fine-tuning. Preprint at https://doi.org/10.48550/ARXIV.2302.11184 (2023).

Zhang, T. et al. Disparity-constrained stereo endoscopic image super-resolution. Int. J. Comput. Assist. Radiol. Surg. 17 (5), 867–875 (2022).

Li, Y. et al. Human attribute recognition by deep hierarchical contexts. Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part VI 14. Springer International Publishing, (2016).

Sun, L. et al. Spatially-adaptive feature modulation for efficient image super-resolution. Proceedings of the IEEE/CVF International Conference on Computer Vision. (2023).

Chen, W. et al. Dual attention fusion enhancement network for lightweight Remote-Sensing image Super-Resolution. Remote Sens. 17 (6), 1078 (2025).

Lv, Y. et al. Enhanced local multi-windows attention network for lightweight image super-resolution. Comput. Vis. Image Underst. 250, 104217 (2025).

Zhang, Y. et al. Residual dense network for image super-resolution. Proceedings of the IEEE conference on computer vision and pattern recognition. (2018).

Qin, J. et al. Single-shot phase-shifting composition fringe projection profilometry by multi-attention fringe restoration network. Neurocomputing 634, 129908 (2025).

Li, F., Bai, H. & Zhao, Y. FilterNet: adaptive information filtering network for accurate and fast image super-resolution. IEEE Trans. Circuits Syst. Video Technol. 30 (6), 1511–1523 (2019).

Li, F. et al. Srconvnet: A transformer-style Convnet for lightweight image super-resolution. Int. J. Comput. Vision. 133 (1), 173–189 (2025).

Liu et al. SRMamba-T: exploring the hybrid Mamba-Transformer network for single image Super-Resolution. Neurocomputing 624, 129488 (2025).

Zhang, L. et al. Toward lightweight image super-resolution via re-parameterized kernel recalibration. Knowledge-Based Syst. 325, 113876 (2025).

Acknowledgements

This work is partially supported by the Henan Province Science and Technology Research Project (252102210147, 252102210083), the Fundamental Research Funds for the Central Universities (2025TJJBKY008), and the Key Research Projects of Higher Education Institutions in Henan Province (23A520042).

Funding

This work is partially supported by the Henan Province Science and Technology Research Project (252102210147, 252102210083), the Fundamental Research Funds for the Central Universities (2025TJJBKY008), and the Key Research Projects of Higher Education Institutions in Henan Province (23A520042).

Author information

Contributions

Chuanhao Zhang: Writing - original draft, Validation, Data curation. Xiaohan Tu: Software, Investigation, Data curation. Zhisheng Cui: Writing - review & editing, Validation. Xuehui Gu: Visualization, Software, Investigation. Kunming Li: Supervision, Data curation. Yuncong Lu: Supervision, Methodology, Conceptualization.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhang, C., Tu, X., Cui, Z. et al. A general lightweight image super-resolution with sharpening enhancement and double attention network. Sci Rep 15, 40848 (2025). https://doi.org/10.1038/s41598-025-24493-8
