Abstract

The majority of India’s coal is mined using opencast methods, which causes more waste dumps to be formed and stability problems. There is a higher chance of dump instability because to the 1148 million cubic meters of overburden that Coal India Limited (CIL) has removed in the past few years. Complex calculations make dump slope stability studies complicated and time-consuming. Analytical and numerical methods are needed to calculate factor of safety (FOS) of dump slope. This research bridges traditional geotechnical methods with emerging computational approaches by integrating advanced ML techniques with rigorous statistical evaluation and a comprehensive dataset to improve dump slope stability prediction accuracy, reliability, and applicability. With so many available options, picking the best ML model can be a challenge. Consequently, for the purpose of this research, the authors selected models using the Lazy predict AutoML algorithm. Using six base models—Gradient Boosting (GBM), Light Gradient Boosting Machine (LGBM), Extreme Gradient Boosting (XGB), Histogram Gradient Boosting (HGB), Nu-Support Vector Regressor (NuSVR), Extra Tree Regressor (ETR), with Stacking Ensemble, and H2OAutoML—this study proposes an effective method for analysing dump slope stability. In preparation for model calibration and evaluation, databases of 2250 datasets were created. The output is the SLIDE computed factor of safety, and the inputs are six influential parameters such as cohesion (c), angle of internal friction (ϕ), unit weight (γ), overall bench height (H), natural moisture content (m), and overall slope angle (β). The coefficient of determination (R squared or R2), mean square error (MSE), mean absolute percentage error (MAPE), root mean square error (RMSE), and mean absolute error(MAE) were used for evaluating the performance of all models. The H2O Auto ML performed best model in comparison to other ensemble models. This research also makes use of the Shapley additive explanations (SHAP) technique to determine which of the six inputs is most crucial. This study shows that sophisticated ML approaches improve dump slope stability prediction in Indian opencast coal mines.

Similar content being viewed by others

Introduction

Opencast coal mining accounts for 93% of coal production in India, leading to an increase in waste dump formations and associated stability issues. CIL has removed over 1148 million cubic meters of overburden in recent years, increasing the risk of dump instability1. Maintaining a stable overburden dump (OB) is critical in mining regions, and effective dump management is required to prevent slope failure and associated hazards2. The dump becomes steeper, and the slope becomes more dangerous due to an increase in the stripping ratio and limitations on available land. There have been multiple reports of catastrophic human and property losses due to dump failure. It has an impact on the surrounding environment and impedes the continuing mining operations, which leads to decreased productivity and higher expenses associated with rehandling waste, large dump trucks, and excavators3.

In 2016, a catastrophic dump failure in Rajmahal coalfield resulted in 23 fatalities1. Similarly, Jayant opencast mine experienced a severe dump failure in December 2008, burying five mine workers4. Slope and dump failures have recently begun to exhibit an increasing tendency, according to a review of accidents in opencast mines (DGMS 2020) Accordingly, comprehensive evaluation of dump stability is currently receiving global attentions5,6,7.

Adding benches to the dump, rather in forming a single heap, is the standard technique for waste dump stabilisation in mining locations. This increases the dump’s FOS. Cohesion and friction angle, two strength controlling characteristics, are frequently thought of as crucial governing factors in dump stability2,8. To generalize and evaluate the slope’s overall strength and weakness, many slope stability studies used the FOS9,10,11. Slope stability evaluations are notoriously difficult and time-consuming due to the complexity of the calculations involved. Finding the FOS of a given slope requires a combination of analytical and numerical techniques12,13. Failures are often triggered by excessive load, hydrostatic pressure, poor dump configuration, and lack of space for proper disposal1. Consequently, a viable alternative to limit equilibrium or numerical methods that provide fast and reliable findings is urgently required5.

Machine learning, a subfield of AI, provides a different way to approach slope stability analysis by learning from data and making predictions without the need for explicit rules or equations. The majority of slope stability analyses used the data-driven technique14. The classification predictive models were used by most researchers for slope stability analysis whereas few studies were available on safety factor prediction15,16. The effectiveness of different machine learning models in predicting the safety factor was also determined to be higher for the artificial dump slope compared to the residual soil slope that occurs naturally17.While ML models offer significant advantages in slope stability analysis, they are not without limitations. The accuracy of these models heavily depends on the quality and quantity of the input data, and their performance can vary with different soil conditions and slope characteristics. Additionally, traditional methods like numerical simulations and expert-based models still play a crucial role in validating and complementing ML predictions, ensuring a comprehensive approach to slope stability assessment18.Combining machine learning algorithms with more traditional approaches like finite element methods or limit equilibrium methods (LEM) allow more precise and efficient analysis using hybrid methods14,19. Hybrid models were adopted by various researchers, and it showed that the machine learning models were performed well by the datasets generated from limit equilibrium analysis and finite element analysis17,19,20,21,22,23,24,25,26,27,28.

However, there are always going to be constraints when employing machine learning techniques. While models based on machine learning have seen extensive application, certain studies have relied on a limited sample size as given in Table 1, potentially impacting the ML model’s ability to generalize29. The database is the most critical constraint for machine learning algorithms. The learning rate is enhanced with a larger database. Due to the wide range of possible values for the input parameters used in geotechnical engineering, it is essential that the database used to build highly accurate models have ample coverage in both the training and testing sets30. Furthermore, the majority of machine learning models have been crafted through the expertise of individuals with specialized knowledge, employing a method of experimentation and refinement. Indeed, comprehensive procedures, such as data pre-processing18, feature engineering31, ML algorithm selection32 and hyperparameter tuning, are integral to the practical implementation of ML. Model selection and hyperparameter tuning continue to pose challenges for achieving success in ML-based modelling33. According to the no-free-lunch theorem34, no algorithm can be superior to all others across every problem.

The latest advancements in AI for evaluating slope stability should make use of hybrid intelligence or other AI methods with appropriate performance, minimal governing factors, and easy operation15. One major advantage is that they can effectively manage big and diverse datasets, combine a plethora of influencing factors, and capture intricate data connections18,31,32. Demir and Sahin34 found that data increases model performance, with random forest (RF) performing best out of five machine learning methods for predicting slope stability. In their investigation of machine learning methods for slope stability analysis, Kurnaz et al.42 discovered that the Auto Gluon package’s weighted ensemble learning approach produced the most precise outcomes33. Ma et al.40, found that AutoML (H2O-AutoML) offers a robust automated approach for the development of machine learning (ML) models and the analysis of slope stability concerning circular mode failure. The performance of tree-based ensemble models demonstrated greater accuracy in predictions compared to traditional machine learning methods17,25,26,29,30,43,44. Gao and Ge suggested that the use of novel hybrid ensemble methods would be a better option to assess the stability of complex rock slopes15.

This paper’s significant contribution is emphasized as follows:

A comprehensive database comprising 2250 datasets has been developed through parametric studies aimed at predicting dump slope stability. An AutoML approach has been proposed for model selection in predicting dump slope stability, utilizing the updated dataset. The six top-performing models were optimized using Bayesian optimization with a Gaussian process to identify the best version of each individual model and these optimized models were employed for the stacking ensemble learning model. A different AutoML method was introduced for stability prediction, eliminating the necessity for manual trial and error. It was noted that this AutoML approach surpassed both the stacked random forest metamodel and the individual base models in performance.

This paper is structured as follows (Fig. 1): Sects. “Database preparation” and “Exploratory data analysis” provide an overview of the updated database and the exploratory data analysis. Sects. “Methodology” and “Model interpretation” provide methodology and model interpretation. Sect. “Results and discussions” provide the results and discussions. In Sect. “Conclusions”, the conclusions of the study are presented and outlined potential avenues for further work.

Database preparation

It is well-known that limiting equilibrium methods can be used numerically to estimate the stability of both natural and artificial slopes. The shear strength along a sliding surface is computed using the Mohr–Coulomb strength formula. The shear stress upon failure is the material’s shear strength. To achieve a condition of limit equilibrium, the mobilized shear stress must be less than or equal to the shear strength45. In LEM, FOS is calculated as given formula in Eq. (1),

where, the mobilized shear stress, shear strength, cohesion, normal stress, and friction angle produced at the sliding surface are represented by τf, τ, c’, σ’ and ϕ’, respectively.

With the advent of the conventional technique in 1936, numerous LEMs were created for the purpose of analyzing slope stability. Among these advancements include the Generalized LE (GLE) approach created by Chugh46, as well as Bishops, Janbu, Morgenstern-Price, and Spencer. Though all LEMs rely on assumptions about the interslice normal and shear forces, the main distinction among them is in how these forces are computed. The FOS is found via horizontal force equilibrium, and Janbu’s simplified technique is commonly used for both circular and non-circular failure surfaces47.

However, probabilistic analysis provides a good understanding of the overburden material properties, which are very varied. The FOS of soil/rockfill embankments, such as ore stockpiles and waste rock dumps, can be calculated using limit equilibrium (LE) analysis48,49. Mine tailing dumps at Enyigba, Southeastern Nigeria, were the subject of a limit equilibrium (LE) slope stability analysis conducted by Igwe and Chukwu50. At various points along the dump’s slope, which ranged from 30° to 60°, a constant shear strength parameter was measured. When it was dry, FOS was 1.27–1.33; when it was rainy, it dropped to 1.10–1.29. It is possible that the sample disruption led to inaccurate laboratory determinations of cohesion and friction angle values in this investigation. Therefore, taking the probabilistic analysis into consideration could have explained the attributes’ associated fluctuation. Probabilistic analysis can manage the uncertainties in important factors like shear strength (cohesion, c, in kPa), friction angle (ϕ, in degrees), unit weight (γ, in kN/m3), and hydraulic properties51.

Based on limit equilibrium, the five factors that were used to determine the FOS of the slope were as follows: overall slope angle (β), overall bench height (H), cohesion (c), angle of internal friction (ϕ) and unit weight of soil material (γ). The evaluation was conducted using Slide software. For parametric study Janbu’s simplified method with 50 number of slices was adopted in SLIDE software. According to Table 2, a grand total of 2250 parametric scenarios covering a variety of slope parameter ranges were used to construct the datasets. In order to get the complete database ready for additional investigation utilizing ML algorithms, the FOS that was obtained for several parametric features was utilized. Table 2 also provides a statistical summary of all input and outcome factors that were used in this investigation. The dump slope stability prediction model’s parameter ranges (see Table 3)were established after thorough literature research which were based on real case studies on dump slopes in different Indian coal mines3,5,47,49,51,52.

Table 1 indicates different factors like overall slope angle, overall bench height, angle of internal friction, unit weight and cohesion are important for slope stability. The inherent moisture content of coal mine dump material which is the natural water content influenced the shear strength values and dump slope stability52. Hence in this study all machine learning models took moisture content as an input parameter, along with five parameters.

Exploratory data analysis

One way to see the probability density of continuous variables in detail is with a Kernel Density Estimate (KDE) graphic. The article’s data visualization is based on the dump dataset using KDE Plot. The probability density of a continuous variable was demonstrated using the KDE Plot, which is sometimes called the Kernel Density Estimate. For a continuous variable, it displays the probability density at different levels. It takes a dataset’s underlying distribution and smooths it out. Figure 2 shows a KDE plot, which is a visual representation of data distribution that sheds light on its shape, central tendency, and dispersion. The plots indicate peaks and distribution ranges that aid in identifying underlying patterns and trends in the dataset, offering a smooth and thorough picture. This is achieved by applying kernel density estimations.

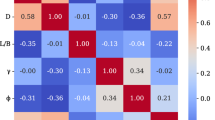

Data distributions for independent and dependent variables are shown using density curves in a violin plot, as seen in Fig. 3. Each curve’s width represents the relative frequency of data points in that area. More data points are suggested by a greater breadth, which suggests higher density. In a violin plot, the median line is the line that runs through the centre. It is useful for figuring out general trends. As illustrated in Fig. 4. a correlation matrix analyses and interprets interactions between variables in a dataset all at once.

Methodology

Lazy predict for model selection

One popular Python package, Lazy Predict, provides 42 ML models and automates ML. Because of the size of this dataset, the authors were able to do extensive testing to determine which forecasting models collaborate best with it53. There isn’t a “one size fits all” model because data variability impacts the models’ applicability. It can be challenging to choose the finest ML model from the many that are available. This self-sufficient ML method speeds up the process of finding solutions.

This AutoML model made use of both the training set, which comprised 80% of the dataset, and the testing set, which comprised 20% of the dataset. The R2 scores and runtimes of the models are evaluated by the lazy prediction mechanism after they get these datasets. One can find the top models in Table 4. The dataset takes six inputs and utilizes FOS as an output. The R2 value was used to choose the best six models. Automatic hyperparameter calibration model suggestions are provided by this algorithm. Afterwards, ML models were hyperparameter tweaked based on this algorithm’s recommendations. The authors were able to create reliable ML models for the research by following this procedure. The stability of dump slopes based on prediction of FOS was the primary goal of optimizing these ML models. (See Fig. 5).

Hyperparameter tuning for model optimisation

A machine learning algorithm usually turns a problem into an optimization problem and uses optimization techniques to solve it. The optimization function defines numerous hyperparameters before learning that affect how the machine learning algorithm aligns the model with the data. Hyperparameters are different from internal model parameters like neural network weights, which are learned from data during model training. Before training, the goal was to find hyperparameter settings that optimize data performance in a realistic timeframe. Hyperparameter optimization or tuning is this process. This greatly affects ML algorithm prediction accuracy54.

Complex hyperparameter tuning is an optimization problem with an unknown or black-box objective function. Traditional optimization methods like Newton or gradient descent are inapplicable. Bayesian optimization is an efficient algorithm for this type of optimization55. By combining prior information about the unknown function with data from samples, this method applies Bayesian principles to obtain posterior information on the distribution of the function. Subsequently, utilizing this posterior information, we can infer the points at which the function achieves its optimal value. The findings indicate that the Bayesian optimization algorithm surpasses other global optimization algorithms56. Consequently, the authors utilized Bayesian optimization grounded in Gaussian processes to adjust the hyperparameters of machine learning models.

Table 4 lists six base ML models which hyperparameters are optimised by Bayesian search—Gaussian process with fivefold cross-validation. Resampling through cross-validation is used for model evaluation and hyperparameter selection. According to fivefold cross-validation, the dataset is divided into five equal-sized folds. All aspects of the training and evaluation procedure are double-checked five times. A more comprehensive evaluation of the model’s performance is within reach. Model performance and hyperparameter tuning efficiency estimation are both enhanced by averaging the coefficient of determination and mean square error (MSE) across five iterations with five folds.

Model performance

This study considers model evaluators such as R2, MSE, RMSE, MAE, and MAPE to determine the prediction of FOS of dump slope. In Eqs. (2–6), one can see the formulae that were used to evaluate these performance measures.

where,

SSR = sum of squared residuals.

SST = total sum of squares

Machine learning models

The four primary steps for implementing the suggested six base machine models are as given Fig. 6 and stacked random forest ensemble model for FOS prediction have been highlighted. Partitioning the database into training (80%) and test sets (20%) was the initial step. Second, Bayesian optimisation-Gaussian process was used to determine the best model hyperparameters when training. Additionally, five-fold cross-validation (CV) was used to reduce the possibility of discrepancy that may occur from randomly picking the dataset. Thirdly, five widely-used performance metrics—R2, MAE, MSE, MAPE, and RMSE—were used to assess the trained model’s efficacy. The model prediction outcomes were subsequently explained using SHAP.

Base ML models

Nusupport vector regressor (NuSVR)

NuSupport Vector Regression is a regression-focused SVM. It extends Support Vector Regression (SVR). NuSVR manages the model complexity-prediction accuracy trade-off differently than normal SVR. The “nu” parameter in NuSVR lets users set the number of support vectors and error tolerance for training57. The statistical learning theory-based SVM reduces structured risk, experience risk, and confidence range. This improves the learning machine’s generalization and model accuracy with less samples58.

Here, the authors go over how to build a dump slope stability model in Python using the NuSVR methodology. The authors used Bayesian Optimization and fivefold cross-validation to find the best hyperparameters. The optimal values of the Nu-SVR-BO model’s hyperparameters are shown in Table 5.

Light GBM (LGBM)

Another method developed by Microsoft Research Asia59 that made use of the gradient boosting decision trees (GBDT) framework was LGBM. The purpose of this endeavour is to improve the efficiency of computations, which will ultimately result in improved solutions for prediction problems that include vast amounts of data. By employing a pre-sorting technique, the GBDT algorithm selects and partitions indicators. This method can exactly determine the separation point, but it requires more time and memory. LGBM saves memory and speeds up training with a histogram-based technique and a tree leaf-wise growth approach with a maximum depth constraint. LGBM consistently outperforms traditional methods and even some other machine learning models. LGBM is known for its speed and efficiency, making it a strong choice for large datasets60.

Here the authors detail the steps used to create a Python-based dump slope stability model with the LGBM methodology. With the help of Bayesian Optimization and fivefold cross-validation, the hyperparameters were found. Table 6 displays the hyperparameter values that work best for the LGBM -BO model.

Gradient boosting (GBM)

A popular integrated learning technique, the GBM model builds many trees with different attributes to help with decision-making. To minimize loss, each tree considers the most current residuals and the conclusions of all trees that came before it before learning on its own. In both regression and classification settings, gradient tree boosting is useful since it is a generalization of the lifting approach that works with any differentiable loss function. It seems that the GBM model’s slope stability predictions have produced promising results38,61.

GBM uses numerical optimization to find a loss-function-minimizing prediction model. To minimize the loss function, the GBM rule iteratively adds a “weak learner,” or tree, at each level. After creating the model with an initial estimate, regression minimizes loss function with a decision tree. Each stage refines residuals from the previous model with a new decision tree. Exploiting a buyer, the person repeats rules until they reach the highest level. Each phase cannot amend the selection criteria specified in earlier phases since the procedures are final. GBM works best when a shrinkage parameter, or learning rate, minimizes choice tree size at each iteration cycle. Shrink operation in the GBM reveals that smaller stages are more accurate. Lower distances may improve model accuracy. Arguments return zero to one. The value is inversely proportional to iteration variability; thus, a smaller shrink requires more iterations to converge. Feature organization caused the GBM rule’s recent prophetic increase62. A carefully selected subsample informs the selection tree each iteration instead of the entire educational dataset. When there were enough observations, each generation consumed 50% of the dataset through knowledge evasion on average. A sub-sample fraction’s diverse values must be investigated to discover how lowering statistical parameters affects model quality. The sub-sampling strategy increases GBM model performance and reduces algorithm compute cost by a similar ratio63.

Using Bayesian Optimization and fivefold cross-validation methods, this section describes how the GBM approach is applied in a Python environment to build a dump slope stability model. The goal is to discover the ideal hyperparameters. The optimal settings of the GBM -BO model’s hyperparameters are shown in Table 7.

Extreme gradient boosting (XGB)

Chen and Guestrin’s64 XGB ruleset offer a novel approach to implementing the Gradient Boosting technique, specifically K Regression and classification trees. A “sturdy” learner can be produced through additive education methods by combining all of the predictions of a group of “vulnerable” starters, according to the set of rules. To start with, XGB optimizes the processing resources while attempting to prevent overfitting. This is frequently accomplished by reducing the complexity of the target capabilities in order to combine predictive and regularization phrases while maintaining the highest possible processing rate. All the way through education level, XGB functions often undergo parallel calculations63.

In order to create a dump slope stability model, this section describes how the XGB approach was applied in a Python environment. The hyperparameters were determined using Bayesian Optimization and fivefold cross-validation procedures. Table 8 shows the optimal settings of the XGB -BO model’s hyperparameters.

Histogram gradient boosting (HGB)

The proposed method leverages histogram-based techniques to optimize gradient boosting ensembles, reducing computational complexity. Instead of processing individual data points, histogram-based methods group continuous features into discrete bins. This significantly reduces the number of calculations required for each iteration. By discretizing features into histograms, the algorithm lowers memory usage, making it feasible to train models on large datasets without excessive resource consumption. Since fewer computations are needed per iteration, HGB trains faster while maintaining high predictive accuracy. HGB scales well with high-dimensional data, making it suitable for complex machine learning tasks65.

Here the authors show how to build a dump slope stability model in Python using the HGB technique, finding the best hyperparameters with Bayesian Optimization and fivefold cross-validation. The optimal settings of the HGB-BO model’s hyperparameters are displayed in Table 9.

Extra tree regressor (ETR)

To increase prediction accuracy and decrease the likelihood of overfitting, ETR makes use of a cluster of random decision trees that have been fitted to different parts of the dataset. By combining the results of each tree, this clever method creates a final model that is more powerful than the sum of its parts66,67. This method is an example of a learning algorithm, and it has proven repeatedly that even a basic algorithm may provide good results. This finding, meanwhile, is very dependent on the details of the data that were provided67,68.

In order to create a dump slope stability model, this section describes how the ETR methodology was applied in a Python environment. The hyperparameters were determined using Bayesian Optimization and fivefold cross-validation procedures. Table 10 shows the optimal settings of the ETR -BO model’s hyperparameters.

Convergence plot

A convergence plot is a graphical representation of the evolution of a time step or error estimate in machine learning. This tool allows for the visualization of algorithm performance, helping to assess whether the results are stabilizing at constant values. Figure 7a–f shows how the error minimized with increasing the iterations in optimisation algorithm for different machine learning models.

Dependence plot

Figure 8a, b show the evolution of the model’s hyperparameter variables and their optimal values with red star in a dependency plot.

Learning curve

A machine learning model’s learning curve illustrates how the model’s prediction error evolves with changes to the size of the training set. It is evident from the learning curves for different ML models(as shown in Fig. 9a–f that the training score curve and the validation score curve converge as the size of the training set increases. This is something that can be seen clearly. Adding more training data improves the validation score.

Stacking ensemble

By utilizing a meta-learner, a stacking ensemble integrates the forecasts of several base learners69. Training numerous base learners is the first step, and training a meta-learner to optimally aggregate their outputs is the second70. The first step is to gather the predictions made by the base learners after they have trained independently on the same dataset using various techniques or hyperparameter setups. Training a meta-learner with the basis learners’ predictions as features is the second stage. In order to arrive at final predictions, this meta-model (Random Forest) figures out how to integrate or weight the results of the foundation models. Improving prediction accuracy by merging heterogeneous base learners through a meta-learner and so minimizing generalization mistakes is the underlying premise of stacking ensemble71 (Fig. 10). By combining the best features of several base models and reducing the impact of their flaws, stacking EL (as shown in Fig. 10) can achieve better results than any one model in the ensemble72.

Figure 11 learning curve makes it very evident that, as the size of the training set increases, both the training score curve and the validation score curve converge. Adding more training data improves the validation score. Figure 11 shows that with a cross-validation score of 0.9989, the R2 value converged.

H20 AutoML

In order to make AutoML more accessible, several open-source systems have been created, including Auto-Keras, Auto-PyTorch, Auto-Sklearn, Auto-Gluon, and H2O AutoML73. H2O AutoML’s strength in processing big and complex datasets has been highlighted in previous research40,74,75 through its ability to swiftly search for the ideal model without relying on manual trial and error. In addition, H2O AutoML includes a user interface that even those without technical knowledge can use to automatically train and fine-tune models, as well as import and split datasets, find the answer column, and more. Therefore, the current study employed H2O AutoML to analyze the stability of dump slopes.

To build ML models for dump slope stability regression, this work used the H2O AutoML method. The first step was to randomly split the database into half, with 80% going into training and 20% into testing. A variety of ML models were created and programmed, including GLM, DRF, XGB, Deep Learning, and GBM. The authors used the standard fivefold CV to boost performance and dependability and built stacked ensembles using the top-performing models and every tuned model. Mode performance accuracy could be leader boarded. The leader models were tested on the testing dataset after storage. The best model was gradient boosting regressor.

Model interpretation

Sensitivity analysis

For a 120 m overall bench height and 35° overall slope angle, Fig. 12 shows how the FOS is affected by slope parameters. A material attribute’s normalized fluctuation from its minimum to maximum value, spanning from 0 to 100%, is represented by the percentage of range (%) on the x-axis and the factor of safety (FOS) on the y-axis. The influence of input factors on slope stability can be better understood through sensitivity analysis. The combination of slope inclination and height was the subject of a sensitivity study, as shown in Table 11. This study’s dataset was developed using the sensitivity analysis results, which were based on the provided overall slope angle (β) and overall bench height (H).

For a 120 m overall bench height and 35° overall slope angle, Monte Carlo simulation was used to spread the uncertainties in the input across the dump slope stability model. Dump material properties were represented as random variables characterized by designated probability distributions, as seen in Table 12.

The mean of the resulting distribution of FOS was 0.914, and the standard deviation was 0.22, as seen in Fig. 13. The authors figured out that the probability of failure (PoF), which is the percentage of realizations with FoS < 1.0, was 63.33%.

Model interpretation using SHAP

This study used the Shapley additive explanations (SHAP) approach to assess the impact of features on machine learning model prediction due to its rapid implementation for tree-based models. In coalitional game theory, Shapley values determined how much each attribute contributed to the prediction76,77. Qualities with higher positive SHAP ratings usually affect the forecast more. Figure 14 shows SHAP values for six input features. Each scattering point on the diagram represents a sample, and the red dots indicate elevated relevant characteristic values. However, blue represents low feature values. Red sample points near positive SHAP values show that the angle of internal friction (ϕ) significantly affects the dump slope’s factor of safety (FOS). Higher friction angle increases dump slope stability. However, several red-marked sample locations are in the area with negative SHAP values for the overall slope angle. All evidence indicated the dump slope’s stability is threatened by the slope angle.

The friction angle ϕ is the primary factor in predicting the factor of Safety (FOS), followed by overall slope angle, cohesion, and overall bench height. Higher bench geometry can affect dump slope stability; however, the shear strength parameters (ϕ and c) have a positive impact among the six features. Figure 14 also states the six features’ relevance. The order of these six features reveals that each is valuable in its own way. To anticipate FOS, the friction angle ϕ is the most important element, followed by slope angle, cohesion, and bench height.

Figure 15 confirms that bench geometry(overall bench height and overall slope angle) and shear strength parameters (ϕ and c) are crucial for assessing dump slope stability.

One helpful visual for comprehending the impact of a specific characteristic on the model’s predictions is the dependence plot, as seen in Fig. 16 of SHAP. The correlation between the value (x-axis) and Shap value (y-axis) of a feature, which represents the feature’s influence on the prediction, is shown in a SHAP dependence plot. Alterations to that feature’s impact on model predictions are illustrated by each point, which stands in for a row in the dataset. The non-linear interactions between features and predictions can be better detected with its help. It colours the dots depending on another characteristic to highlight how features interact with one other. It reveals which characteristic values aid in prediction and which ones hinder it. In Fig. 16a, f once can see that SHAP values grow in relation to feature values, which implies that larger feature values lead to the value of output to be more, and in Fig. 16c, d it can be seen that SHAP values fall in relation to feature values, which means that larger feature values lead to the value of output to be declined. Complex, non-linear interactions are indicated by a curved or scattered relationship (see Fig. 16b, e).

Results and discussions

Comparative study between individual ensemble, stacking ensemble and H2O AutoML

Table 13 provides a summary of the eight models’ prediction performance. For predicting dump slope stability, the H2O AutoML and stacking ensemble usually outperforms standalone ensemble models. Enhancing prediction accuracy by merging varied base learners through a meta-learner and so limiting generalization mistakes is the main advantage of stacking ensemble models over other ensemble models. In addition, H2O AutoML’s user interface enables non-technical users to accomplish tasks such as importing and splitting datasets, finding the answer column, and automatically training and fine-tuning models. An issue that arises often during model training is overfitting, which weakens the models’ ability to generalize as the training data has significantly greater predictive performance than the testing data78. If the prediction accuracies of the training and testing data are significantly different, overfitting may happen. Table 13, fourth column, shows that for all eight models, there are less than 0.1 absolute differences between training and testing R squared prediction accuracies. Despite the lack of a universally accepted definition of overfitting in engineering practice79, this study found that the eight models had relatively small differences (less than 0.1) in their forecast accuracies80.

Now that each ML model has been fine-tuned on the training datasets, one can evaluate its effectiveness. It is possible to compare the performance of several ML models using these quantitative parameters(see Table 14). To evaluate the performance of ML models, number of additional statistical metrics, like MSE, MAPE, RMSE, and MAE were used. Table 14 displays the statistical metrics for all of the models. To measure how well the models work, many different statistical measures are employed. Compared to other ensemble models, these results point to H2O AutoML as the most accurate.

In Fig. 17a–h the x axis represents the real values of 50 observations, and the y axis represent the predicted values of FOS. Figure 17a–h compares the predicted and actual outcomes produced by each of the ML models. It is clear that all the models have produced quite accurate FOS estimates. Consequently, the XGB model achieved the worst accuracy (R2 = 0.7453). Additionally, with an R2 value of 0.998937, the H2O AutoML model delivered the best accuracy. Accuracy between these two models has been provided by other models.

To determine if a regression model is suitable, regression analysts employ residual plots, which are graphical tools. They are useful for seeing the differences between the expected and actual values (the residuals) and for finding issues with the model. Whether the model’s assumptions are satisfied and the model fits the data well can be ascertained by analysing the distribution and patterns of residuals. The gradient boosting model was more suitable regression model than XGB model for this study as shown Fig. 18a, b. The error in prediction was less in case GBM model.

Conclusions

First of all, the authors conducted the data preprocessing of 2250 raw data to transform into a clean, structured and usable format in exploratory data analysis for further processing. In order to predict the FOS of dump slopes in opencast coal mines, this study examined the use of advanced ML models such as GBM, LGBM, XGB, HGB, NuSVR, ETR, with stacked random forest meta model, and H2O AutoML. In order to build the stacking ensemble model, the authors first employed Bayesian optimization—a Gaussian process-to fine-tune the hyperparameters of six foundational machine learning models. The fivefold CV method enhanced the generalizability of the regression models and six potentially significant indicators like cohesion (c), internal friction angle (ϕ), unit weight (γ), overall bench height (H), natural moisture content (m), and overall slope angle (β) were chosen. One last step was to use the SHAP method to do the sensitivity analysis. The main conclusions are as follows:

-

It was suggested that an AutoML method for building ML models and selecting the optimal model for each dataset. With AutoML, one need not worry about establishing a pipeline by hand for ML algorithm selection and hyperparameter tweaking; the system can handle model development and pipeline searches with ease. Traditional models that were manually tweaked and models that were optimized using metaheuristics were beaten by the trained H2O AutoML model. Automated ML model building, model selection and dump slope stability tests were all successfully accomplished with using AutoML.

-

Lazy predict Auto-ML’s predictions are comparable to those of standalone ML models, with the exception of XGB regressor and NuSVR, where they diverge.

-

Compared to other base learners, the stacking ensemble model has a high accuracy value. This study demonstrated the importance of ensemble ML approaches for evaluating and predicting complex dump slope stability. In order to improve computational efficiency, it is required to integrate the super learner function in practical applications due to the huge number of computations and long training period of the stacking model.

-

SHAP study found that factors such cohesiveness (c), unit weight (γ), total bench height (H), and natural moisture content (m) were important in determining dump slope stability, whereas the internal friction angle (ϕ) and overall slope angle (β) were crucial. The stability of the dump slope is more affected by the prediction variables, such as internal friction angle (ϕ), overall slope angle (β), cohesion (c), and overall bench height (H), which are geotechnical material variables and slope geometry, with relevance scores of 0.2, 0.15, 0.06, and 0.03 respectively.

-

The non-linear interactions between input features and predictions can be better detected with the help of SHAP analysis. It colours the dots depending on another characteristic to highlight how features interact with one other. It reveals which characteristic values aid in prediction and which ones hinder it.

-

The standard modelling approaches have a hard time dealing with the complicated, nonlinear, high-dimensional relationship between slope stability and influencing factors. Because of its adept handling of this relationship and its capacity to generate reliable prediction outcomes, the ensemble learning method is a good fit for assessing dump slope stability.

To build an AutoML approach and enhance its generalizability and dependability, future research should incorporate samples and parameters that are crucial, such as the distribution of grain size, rainfall and other external or trigger factors.

Data availability

The dataset used and/ or analysed in this paper is available in a supplement file at **[** [https://data.mendeley.com/datasets/459cbkwwdr/1]].

Abbreviations

- XGB:

-

Extreme gradient boost

- ANN:

-

Artificial neural network

- GPR :

-

Gaussian process regression

- SVR:

-

Support vector regression

- LSTM:

-

Long-short term memory

- DNN:

-

Deep neural networks

- KNN:

-

K-nearest neighbours

- MLP:

-

Multilayer perceptron

- SVM:

-

Support vector machines

- DT:

-

Decision tree

- RF:

-

Random forest

- GBM:

-

Gradient boosting

- DL:

-

Deep learning

- GA-XGB:

-

Genetic algorithm-extreme gradient boosting

- MLR:

-

Multiple linear regression

- SLR:

-

Simple linear regression

- XGB-RF:

-

Extreme gradient boosting-random forest

- LGBM:

-

Light gradient boosting machine

- HGB:

-

Histogram gradient boosting

- Nu-SVR:

-

Nu-support vector regressor

- ETR:

-

Extra tree regressor

- FOS:

-

Factor of safety

- RMSE:

-

Root mean square error

- MSE:

-

Mean square error

- MAE:

-

Mean absolute error

- R squared or R2 :

-

Coefficient of determination

- MAPE:

-

Mean absolute percentage error

- AutoML:

-

Automated machine learning model

- ML:

-

Machine learning

- c:

-

Cohesion

- ϕ:

-

Angle of internal friction

- γ:

-

Unit weight

- H:

-

Overall bench height

- m:

-

Natural moisture content

- β:

-

Overall slope angle

- H’:

-

Bench height

- β’ :

-

Slope angle

- ϑ:

-

Porewater pressure

- SHAP:

-

Shapley additive explanations technique

- CIL:

-

Coal India Limited

- OB:

-

Overburden dump

- AI:

-

Artificial intelligence

- LEM:

-

Limit equilibrium methods

- KDE:

-

Kernel density estimate

- CV:

-

Cross-validation

- BO:

-

Bayesian optimization

- BD:

-

Bulk density

- Hs:

-

Height of the bench at dragline sitting level

- Hcd:

-

Height of the bench between the coal-rib roof and dragline sitting level

- BW:

-

Berm width at dragline sitting level

- A:

-

Angle of the face of the bench at dragline sitting level

- B:

-

Slope angle of the bench between the coal-rib roof and the dragline sitting level

- Hcr:

-

Coal-rib height

References

Dash, A. K. Analysis of accidents due to slope failure in Indian opencast coal mines. Curr. Sci. 117(2), 304. https://doi.org/10.18520/cs/v117/i2/304-308 (2019).

Rajhans, P., Ekbote, A. G. & Bhatt, G. Stability analysis of mine overburden dump and improvement by soil nailing. Mat. Today Proc. 65, 735–740 (2022).

Kainthola, A., Verma, D., Gupte, S. S. & Singh, T. N. A coal mine dump stability analysis—A case study. Geomaterials 01(01), 1–13. https://doi.org/10.4236/gm.2011.11001 (2011).

Sharma, S. & Roy, I. Slope failure of waste rock dump at Jayant opencast mine, India: A case study. Int. J. Appl. Eng. Res. 10(13), 33006–33012 (2015).

Khandelwal, R., Rai, M. & Shrivastva, R. Evaluation of dump slope stability of a coal mine using artificial neural network. Geomech. Geophys. Geo Energy Geo Res. 1, 69–77 (2015).

Gupte, S. S. Optimisation of internal dump capacity and stability analysis in a coal mine-a case study. In: APSSIM 2016: Proc. of the First Asia Pacific Slope Stability in Mining Conference. pp. 557–570, (2016).

DGMS(Tech) Circular No 03 of 2020 dated 16–01–2020—Guideline for Scientific Study under Regulation 106 of Coal Mines. (2017). Directorate General of Mines Safety, 03, (2020).

Koner, R., & Chakravarty, D. Evaluation of seismic response of external mine overburden dumps. In: Fifth International Conferences on Recent Advances in Geotechnical Earthquake Engineering and Soil Dynamics; Missouri university of Science and Technology. pp. 4–63, (2010).

Garg, A., Garg, A., Tai, K. & Sreedeep, S. Estimation of factor of safety of rooted slope using an evolutionary approach. Ecol. Eng. 64, 314–324. https://doi.org/10.1016/j.ecoleng.2013.12.047 (2014).

Shiferaw, H. M Study on the influence of slope height and angle on the factor of safety and shape of failure of slopes based on strength reduction method of analysis. Beni Suef Univ. J. Basic Appl. Sci. 10(1), 31. https://doi.org/10.1186/s43088-021-00115-w (2021).

Mojtahedi, S. F. F. et al. A novel probabilistic simulation approach for forecasting the safety factor of slopes: a case study. Eng. Comput. 35(2), 637–646. https://doi.org/10.1007/s00366-018-0623-5 (2019).

Maedeh, P. A. et al. A new approach to estimate the factor of safety for rooted slopes with an emphasis on the soil property, geometry and vegetated coverage. Adv. Comput. Des. 3(3), 269–288 (2018).

Rawat, S. & Gupta, A. K. Analysis of a nailed soil slope using limit equilibrium and finite element methods. Int. J. Geosynth. Ground Eng. 2(4), 34. https://doi.org/10.1007/s40891-016-0076-0 (2016).

Sahoo, A. K., Tripathy, D. P. & Jayanthu, S. Application of machine learning techniques in slope stability analysis: A comprehensive overview. J. Min. Environ. 15(3), 907–921 (2024).

Gao, W. & Ge, S. A comprehensive review of slope stability analysis based on artificial intelligence methods. Expert Syst. Appl. 239(122400), 122400. https://doi.org/10.1016/j.eswa.2023.122400 (2024).

Cai, Y., Yuan, Y. & Zhou, A. Predictive slope stability early warning model based on CatBoost. Sci. Rep. 14(1), 25727 (2024).

Karir, D. et al. Stability prediction of a natural and man-made slope using various machine learning algorithms. Transp. Geotech. 34(100745), 100745. https://doi.org/10.1016/j.trgeo.2022.100745 (2022).

Aminpour, M. et al. Slope stability machine learning predictions on spatially variable random fields with and without factor of safety calculations. Comput. Geotech. 153(105094), 105094. https://doi.org/10.1016/j.compgeo.2022.105094 (2023).

Singh, S. K., & Chakravarty, D. Assessment of slope stability using classification and regression algorithms subjected to internal and external factors. Arch. Mining Sci. 68(1) (2023).

Paliwal, M. et al. Stability prediction of residual soil and rock slope using artificial neural network. Adv. Civ. Eng. 2022(1), 1–14. https://doi.org/10.1155/2022/4121193 (2022).

Mahmoodzadeh, A. et al. Prediction of safety factors for slope stability: comparison of machine learning techniques. Nat. Hazards 111, 1–29 (2022).

Ahangari Nanehkaran, Y. et al. Application of machine learning techniques for the estimation of the safety factor in slope stability analysis. Water 14(22), 3743. https://doi.org/10.3390/w14223743 (2022).

Azmoon, B., Biniyaz, A. & Liu, Z. Evaluation of deep learning against conventional limit equilibrium methods for slope stability analysis. Appl. Sci. 11(13), 6060. https://doi.org/10.3390/app11136060 (2021).

Meng, J., Mattsson, H. & Laue, J. Three-dimensional slope stability predictions using artificial neural networks. Int. J. Numer. Anal. Meth. Geomech. 45(13), 1988–2000. https://doi.org/10.1002/nag.3252 (2021).

Asteris, P. G. et al. Slope stability classification under seismic conditions using several tree-based intelligent techniques. Appl. Sci. 12(3), 1753. https://doi.org/10.3390/app12031753 (2022).

Fatty, A., Li, A.-J. & Qian, Z.-G. An interpretable evolutionary extreme gradient boosting algorithm for rock slope stability assessment. Multimed. Tools Appl. 83(16), 46851–46874. https://doi.org/10.1007/s11042-023-17445-9 (2023).

Waris, K. A., Fayaz, S. J., Reddy, A. H. & Basha, B. M. Pseudo-static slope stability analysis using explainable machine learning techniques. Nat. Hazards 121(1), 485–517. https://doi.org/10.1007/s11069-024-06839-z (2025).

Bui, T., Moayedi, D., Gör, H., Jaafari, M. & Foong, A. Predicting slope stability failure through machine learning paradigms. ISPRS Int. J. Geo. Inf. 8(9), 395 (2019).

Duc, N. D., Nguyen, M. D., Prakash, I., Van, H. N., Van Le, H., & Thai, P. B. Prediction of safety factor for slope stability using machine learning models. Vietnam J. Earth Sci. (2025).

Nanehkaran, Y. A. et al. Comparative analysis for slope stability by using machine learning methods. Appl. Sci. 13(3), 1555. https://doi.org/10.3390/app13031555 (2023).

Liu, Z. et al. Modelling of shallow landslides with machine learning algorithms. Geosci. Front. 12, 385–393 (2021).

Qi, C. & Tang, X. Slope stability prediction using integrated metaheuristic and machine learning approaches: A comparative study. Comput. Ind. Eng. 118, 112–122. https://doi.org/10.1016/j.cie.2018.02.028 (2018).

Rajan, K. C. et al. Development of a framework for the prediction of slope stability using machine learning paradigms. Nat. Hazards 121, 83–107 (2025).

Demir, S. & Sahin, E. K. Application of state-of-the-art machine learning algorithms for slope stability prediction by handling outliers of the dataset. Earth Sci. Inf. 16(3), 2497–2509 (2023).

Bai, G. et al. Performance evaluation and engineering verification of machine learning based prediction models for slope stability. Appl. Sci. 12(15), 7890. https://doi.org/10.3390/app12157890 (2022).

Bharati, A. K., Ray, A., Khandelwal, M., Rai, R. & Jaiswal, A. Stability evaluation of dump slope using artificial neural network and multiple regression. Eng. Comput. 38(S3), 1835–1843. https://doi.org/10.1007/s00366-021-01358-y (2022).

Jiang, S. et al. Landslide risk prediction by using GBRT algorithm: Application of artificial intelligence in disaster prevention of energy mining. Proc. Safety Environ. Prot. Trans. Inst. Chem. Eng. Part B 166, 384–392. https://doi.org/10.1016/j.psep.2022.08.043 (2022).

Yang, Y. et al. Slope stability prediction method based on intelligent optimization and machine learning algorithms. Sustainability 15(2), 1169 (2023).

Li, X., Nishio, M., Sugawara, K., Iwanaga, S., & Chun, P. J. Surrogate model development for slope stability analysis using machine learning. Sustainability. 15, (2023).

Ma, J. et al. Machine learning models for slope stability classification of circular mode failure: an updated database and automated machine learning (AutoML) approach. Sensors 22(23), 9166 (2022).

Ragam, P. et al. Estimation of slope stability using ensemble-based hybrid machine learning approaches. Front. Mat. 11, 1330609. https://doi.org/10.3389/fmats.2024.1330609 (2024).

Kurnaz, T. F., Erden, C., Dağdeviren, U., Demir, A. S. & Kökçam, A. H. Comparison of machine learning algorithms for slope stability prediction using an automated machine learning approach. Nat. Hazards 120, 6991–7014 (2024).

Barkhordari, M. S., Barkhordari, M. M., Armaghani, D. J., Mohamad, E. T., & Gordan, B. Straightforward Slope Stability Prediction Under Seismic Conditions Using Machine Learning Algorithms. (2023).

Lin, S. et al. A comprehensive evaluation of ensemble machine learning in geotechnical stability analysis and explainability. Int. J. Mech. Mater. Des. 20(2), 331–352 (2024).

Aryal, K. P. Slope Stability Evaluations by Limit Equilibrium and Finite Element Methods. Doctoral dissertation. (2006).

Chugh, A. K. Variable interslice force inclination in slope stability analysis. Soils Found. 26, 115–121 (1986).

Poulsen, B., Khanal, M., Rao, A. M., Adhikary, D. & Balusu, R. Mine overburden dump failure: A case study. Geotech. Geol. Eng. 32(2), 297–309. https://doi.org/10.1007/s10706-013-9714-7 (2014).

Hawley, M. Guidelines for Mine Waste Dump and Stockpile Design (CSIRO Publishing, 2017).

Geete, S. S., Singh, K. H., Verma, A. K., Singh, T. N., & Dahale, P. P. Stability analysis of overburden dump slope-a case study of Marki Mangli-I Coal Mine. In: Indian Geotechnical Conference. Springer Nature. (2021).

Igwe, O. & Chukwu, C. Slope stability analysis of mine waste dumps at a mine site in southeastern Nigeria. Bull. Eng. Geol. Env. 78(4), 2503–2517. https://doi.org/10.1007/s10064-018-1304-8 (2019).

Kumar, A., Das, S. K., Nainegali, L., Raviteja, K. V. & Reddy, K. R. Probabilistic slope stability analysis of coal mine waste rock dump. Geotech. Geol. Eng. 41, 4707–4724 (2023).

Dewangan, P. K., Pradhan, M. & Ramtekkar, G. D. Effect of fragment size, uniformity coefficient and moisture content on compaction and shear strength behavior of coal mine overburden dump material. Eur. J. Adv. Eng. Technol. 2(12), 1–1 (2015).

Putra, M. I. & Alexander, V. Comparison of machine learning land use-land cover supervised classifiers performance on satellite imagery sentinel 2 using lazy predict library. Indones. J. Data Sci. 4(3), 183–189 (2023).

Wu, J. et al. Hyperparameter optimization for machine learning models based on Bayesian optimization. J. Electron. Sci. Technol. 17(1), 26–40 (2019).

Betrò, B. Bayesian methods in global optimization. In: Operations Research ’91 (pp. 16–18). Physica-Verlag HD. (1992).

Jones, D. R. A taxonomy of global optimization methods based on response surfaces. J. Global. Optim. 21, 345–383 (2001).

Mahmoodzadeh, A. et al. Comprehensive analysis of multiple machine learning techniques for rock slope failure prediction. J. Rock Mech. Geotech. Eng. 16(11), 4386–4398. https://doi.org/10.1016/j.jrmge.2023.08.023 (2024).

Moayedi, H., Tien Bui, D., Kalantar, B. & Kok Foong, L. Machine-learning-based classification approaches toward recognizing slope stability failure. Appl. Sci. 9(21), 4638. https://doi.org/10.3390/app9214638 (2019).

Ke, G. et al. Lightgbm: A highly efficient gradient boosting decision tree. Adv. Neural Inf. Process. Syst. 30 (2017).

Liang, W., Luo, S., Zhao, G. & Wu, H. Predicting hard rock pillar stability using GBDT, XGBoost, and LightGBM algorithms. Mathematics 8(5), 765. https://doi.org/10.3390/math8050765 (2020).

Zhou, J. et al. Slope stability prediction for circular mode failure using gradient boosting machine approach based on an updated database of case histories. Saf. Sci. 118, 505–518. https://doi.org/10.1016/j.ssci.2019.05.046 (2019).

Friedman, J. H. Stochastic gradient boosting. Comput. Stat. Data Anal. 38(4), 367–378. https://doi.org/10.1016/s0167-9473(01)00065-2 (2002).

Bharti, J. P. et al. Slope stability analysis using Rf, gbm, cart, bt and xgboost. Geotech. Geol. Eng. https://doi.org/10.1007/s10706-021-01721-2 (2021).

Chen, T. & Guestrin, C. A scalable tree boosting system. In: Proc. of the 22nd Acm Sigkdd International Conference on Knowledge Discovery and Data Mining. pp. 785–794 (2016).

Guryanov, A. Histogram-based algorithm for building gradient boosting ensembles of piecewise linear decision trees. In: Analysis of Images, Social Networks and Texts: 8th International Conference, AIST 2019 (pp. 39–50). Springer International Publishing. (2019).

Friedman, J. H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 29(5), 1189–1232. https://doi.org/10.1214/aos/1013203451 (2001).

Piryonesi, S. M. & El-Diraby, T. E. Data analytics in asset management: Cost-effective prediction of the pavement condition index. J. Infrastruct. Syst. 26(1), 04019036. https://doi.org/10.1061/(asce)is.1943-555x.0000512 (2020).

Hastie, T., Tibshirani, R., Friedman, J. H. & Friedman, J. H. The Elements of Statistical Learning: Data Mining, Inference, and Prediction (Springer, 2009).

Wolpert, D. H. Stacked generalization. Neural Netw. 5, 241–259 (1992).

Sahin, E. K. & Demir, S. Greedy-AutoML: A novel greedy-based stacking ensemble learning framework for assessing soil liquefaction potential. Eng. Appl. Artif. Intell. 119, 105732 (2023).

Pernía-Espinoza, A., Fernandez-Ceniceros, J., Antonanzas, J., Urraca, R. & Martinez-de-Pison, F. J. Stacking ensemble with parsimonious base models to improve generalization capability in the characterization of steel bolted components. Appl. Soft Comput. 70, 737–750. https://doi.org/10.1016/j.asoc.2018.06.005 (2018).

Brownlee, J. Ensemble learning algorithms with Python: Make better predictions with bagging, boosting, and stacking. In: Machine Learning Mastery. (2021).

Ferreira, L., Pilastri, A., Martins, C. M., Pires, P. M., & Cortez, P. A comparison of AutoML tools for machine learning, deep learning and XGBoost. In: 2021 International Joint Conference on Neural Networks (IJCNN). (2021).

Sun, A. Y., Scanlon, B. R., Save, H. & Rateb, A. Reconstruction of GRACE total water storage through automated machine learning. Water Resour. Res. 57(2), e2020WR028666. https://doi.org/10.1029/2020wr028666 (2021).

Babaeian, E., Paheding, S., Siddique, N., Devabhaktuni, V. K. & Tuller, M. Estimation of root zone soil moisture from ground and remotely sensed soil information with multisensor data fusion and automated machine learning. Remote Sens. Environ. 260(112434), 112434. https://doi.org/10.1016/j.rse.2021.112434 (2021).

Lundberg, S., & Lee, S.-I. A unified approach to interpreting model predictions. In arXiv [cs.AI]. http://arxiv.org/abs/1705.07874 (2017).

Guo, D., Chen, H., Tang, L., Chen, Z. & Samui, P. Assessment of rockburst risk using multivariate adaptive regression splines and deep forest model. Acta Geotech. 17, 1–23 (2022).

Cawley, G. C. & Talbot, N. L. On over-fitting in model selection and subsequent selection bias in performance evaluation. J. Mach. Learn. Res. 11, 2079–2107 (2010).

Hou, S., Liu, Y. & Yang, Q. Real-time prediction of rock mass classification based on TBM operation big data and stacking technique of ensemble learning. J. Rock Mech. Geotech. Eng. 14(1), 123–143. https://doi.org/10.1016/j.jrmge.2021.05.004 (2022).

Zhang, W., Li, H., Han, L., Chen, L. & Wang, L. Slope stability prediction using ensemble learning techniques: A case study in Yunyang county, Chongqing, China. J. Rock Mech. Geotech. Eng. 14(4), 1089–1099. https://doi.org/10.1016/j.jrmge.2021.12.011 (2022).

Author information

Authors and Affiliations

Contributions

AK Sahoo wrote the main manuscript text, conceptualisation and analysis. DP Tripathy reviewed the manuscript, conceptualisation and analysis. S Jayanthu reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Sahoo, A.K., Tripathy, D.P. & Jayanthu, S. Advanced machine learning techniques for predicting dump slope stability in Indian opencast coal mines. Sci Rep 15, 40985 (2025). https://doi.org/10.1038/s41598-025-24680-7

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-24680-7