Abstract

With the rapid expansion of smart grids and renewable energy integration, efficient monitoring and data communication have become critical tasks. Traditional techniques lack adaptability, leading to inefficient path selection, increased communication overhead and high latency. This research aims to develop a Smart Grid Monitoring System (SGMS) that improves the efficiency of data collection, processing and transmission in Internet of Things (IoT) based smart grids. The proposed SGMS employs sensors to collect data from photovoltaic (PV) systems, wind systems, the grid and battery systems. The gathered data is processed and stored using an ESP8266 NodeMCU microcontroller, facilitating monitoring and analysis. The monitored data undergoes preprocessing steps, including one-hot encoding and dimensionality reduction through Linear Discriminant Analysis (LDA). One-hot encoding makes categorical data compatible with machine learning algorithms, while LDA reduces the data dimensionality while preserving class discriminability. To improve the efficiency of data transmission and minimize latency, a Hybrid Updated Gazelle-Random Forest (HUG-RF) classifier is proposed for identifying the shortest path from the NodeMCU to an IoT webpage for data visualization. The processed data is then transmitted via the identified shortest path, enhancing the system’s responsiveness and scalability. The proposed SGMS offers comprehensive monitoring of critical parameters such as voltage, current and state-of-charge in the smart grid ecosystem, together with efficient data processing and transmission and reduced computational complexity. The simulation outcomes establish the effectiveness of the developed approach in achieving 96.8% accuracy and 0.36% of energy consumption. The study thus presents a robust and intelligent monitoring system with an IoT platform for remote accessibility and control.

Introduction

Smart grid and communication technologies are key components of the modern power sector, which is going through substantial growth and evolution. These technologies are intended to enhance the safety, efficiency, and environmental responsibility of power generation, transmission, and distribution operations1,2,3. They enable improved energy management and may lessen environmental impact by encouraging the use of clean energy sources. Therefore, smart grid and communication technologies are critical to the energy sector’s ongoing change. The rapid progress of technological innovation and cloud computing has resulted in a surge in data generation via a variety of sources such as smartphones, computers, and human interactions. An efficient evaluation of these data can add tremendous value and benefits to people and businesses4,5. Nevertheless, the volume and complexity of data are continuously expanding, and the concept of “big data” has turned data processing and analysis into both a new challenge and an opportunity for this century6. Data analysis enables grid operators to determine energy consumption patterns, optimize energy flow in cases of excessive demand, and optimize the incorporation of Renewable Energy (RE) sources7. In addition, data evaluation is employed for tracking the efficiency of communication technologies, including devices and smart grid sensors. This simplifies critical procedures like defect detection and prevention, as well as lowering energy losses8. Finally, this study emphasizes the necessity of data analysis for smart grids and communication technologies, which collect and analyze more data than traditional energy grids.

IoT in smart grids brings numerous advantages, including real-time monitoring, automation and efficient energy management. However, it also introduces significant security challenges that impact the reliability and stability of the power infrastructure. These grids rely on a vast network of interconnected devices, sensors, and communication protocols, making them susceptible to data interception, man-in-the-middle attacks and spoofing9,10. Additionally, privacy concerns arise due to the continuous collection and transmission of consumer energy consumption data, which, if compromised, can be exploited for malicious purposes. Therefore, implementing robust encryption techniques, intrusion detection systems and security mechanisms is essential to safeguard data integrity and grid resilience. Addressing these security issues is necessary in IoT-enabled smart grids while minimizing potential risks and vulnerabilities11,12.

Identifying the efficient path for transmitting data in IoT-based smart grids significantly mitigates security threats and enhances grid resilience. Shorter paths optimize bandwidth usage, reduce energy consumption in communication devices, and minimize network congestion, ensuring smooth and secure data flow. A well-optimized data transmission path reduces the number of intermediate nodes and communication links through which data passes. Traditional grid monitoring systems often rely on rule-based algorithms to recognize the best possible paths for data transmission. However, smart grids become more complex as the number of interconnected nodes rises and load patterns grow more dynamic. As a result, conventional approaches can become inefficient and struggle to keep up with the rapid changes in the grid infrastructure. Machine learning approaches learn complex patterns and make accurate predictions, offering a solution to this challenge. By applying these methods to grid data, including voltage levels, power flow, and grid topology, monitoring systems can efficiently identify the shortest and most reliable paths for data and control signal transmission.

For instance, an adaptive routing based13,14 method is proposed to preserve the source location privacy for data transmission. However, it requires a more complex routing mechanism and decision-making process. In the Exhaustive Routing Path (ERP) allocation method15, the legitimate node is selected for complete broadcast of the data packets. It minimizes the communication overhead and improves the throughput. Despite efforts, achieving outstanding service results remains challenging. The author of16 established a Neuro-Fuzzy routing technique for IoT network data transmission. Efficient routing in IoT networks improves network quality of service (QoS). However, this technique needs to be enhanced for an ensemble algorithm in the IoT environment. In17, a Crossover-based Neural Network is implemented to conduct optimal route detection. This approach can potentially outperform traditional routing algorithms in terms of finding the most efficient paths. However, this model may overfit to the training data, resulting in poor generalization. The research presents18,19,20,21 a resilient routing strategy that improves IoT network routing. It efficiently captures topological properties under routing table attacks, which cause varying degrees of harm to local link communities. However, the quality of training data can have a substantial impact on the model’s performance and the accuracy of routing decisions. A proposed trusted routing system using Markov Decision Processes (MDPs) and deep-chain technology enhances routing efficiency and security22. It effectively transfers messages swiftly and safely by selecting the best path. However, it poses substantial challenges for routing applications, such as high computational complexity.

In23,24, an energy-efficient routing technique known as the fuzzy based classifier is presented to extend network lifetime. However, as the network size and complexity rise, the computational needs of the approach may become excessive. Euclidean distance is used in a shortest path identification technique to lower the energy consumed, as proposed in25. This method extends the longevity and total energy of the network. This approach might make it more difficult for the algorithm to adapt in real time to network changes, which could result in less-than-ideal routing choices. In26, Fuzzy-based node selection is introduced to enhance the service quality in data transmission. This method improves routing efficiency and effectiveness. However, it has limited scalability. In addition, Ant Colony Optimization (ACO)27 and African buffalo optimization (ABO)28 are introduced to select optimal path for data transmission. However, the performance of these algorithms can be highly sensitive to the choice of the parameters, which can make the deployment and optimization process challenging.

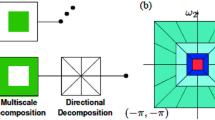

Dimensionality reduction plays a crucial role in IoT-based smart grid systems owing to the massive volume of data generated by sensors monitoring various grid components. Efficient data handling is essential to ensure performance, reduce computational complexity, and optimize energy usage in resource-constrained IoT devices29. Principal Component Analysis (PCA) transforms the original dataset into a lower dimensional space by maximizing variance and identifying the principal components that capture the most information. However, PCA does not consider class labels or the discriminative nature of the data, which can lead to a suboptimal feature representation for supervised learning. This drawback is addressed by Linear Discriminant Analysis, which is used instead of PCA because it not only reduces dimensionality but also preserves class discriminability, a property that is crucial for classification tasks in smart grid monitoring. LDA finds the projection that increases the separation between different classes while reducing intra-class variance, so that the features most relevant for classification are retained30. Table 1 represents the literature survey of existing methodologies.

Motivation of the work

In general, existing systems have used optimization-based techniques or other methodologies to achieve optimal routing or path selection. However, none of the surveyed articles combined pre-processing techniques with advanced, optimization-tuned algorithms. Moreover, traditional path selection algorithms struggle to adapt to variations in the network, which in turn leads to inefficient decision making. Machine learning approaches have demonstrated potential in optimizing routing decisions but face challenges related to complexity and scalability. Conventional approaches for dimensionality reduction do not effectively preserve class discriminability, making them suboptimal for data processing. As a result, this work provides efficient pre-processing techniques with a new updated gazelle RF classifier for optimal routing path selection. This strategy enhances network longevity, enabling the system to function continuously.

Contributions of the work

The primary contribution of the work lies in optimal path selection for effective data transmission in IoT-based smart grid systems.

- The proposed smart grid monitoring system collects data from multiple energy sources in an efficient manner. It provides uninterrupted monitoring and analysis, aiding in the design of an adaptive and resilient energy infrastructure.

- The research introduces a novel preprocessing technique that integrates one-hot encoding with Linear Discriminant Analysis for dimension reduction, enabling data optimization before transmission. One-hot encoding makes categorical data compatible with machine learning approaches to improve classification accuracy.

- An advanced path selection mechanism using an Updated Gazelle optimized RF classifier is proposed for selecting the optimal path and ensuring low-latency data transmission. With this method, the shortest and most reliable communication path is determined, reducing latency and enhancing the reliability of data communication within the IoT-based smart grid system.

The remainder of the manuscript is organized as follows: Sect. 2 describes the proposed system and details its architecture, Sect. 3 presents the results and discussion with the performance evaluation, and Sect. 4 concludes by summarizing the key findings.

Proposed system description

The innovative system begins by leveraging the power of IoT technology to monitor the critical parameters like voltage, current and state-of-charge of the various energy sources, including the battery, PV system, wind turbine, and grid as seen in Fig. 1. These measurements are transmitted to the system’s pre-processing stage through an ESP8266 Wi-Fi module, enabling seamless integration of IoT capabilities. The pre-processing stage then applies two crucial techniques to prepare the data for the subsequent classification step. One-hot encoding is used to transform categorical variables into a machine-learning format. This is followed by the application of LDA, a statistical dimension reduction technique that preserves the most important features of the data while reducing its dimensionality. This stage assures that input is optimized for efficient and accurate decision-making in the next step.

The core of the system lies in the HUG-RF classifier, a novel approach that combines the strengths of the Gazelle optimization algorithm and the RF machine learning algorithm. This hybrid classifier is responsible for selecting the shortest and most efficient path for the system’s operation, drawing upon the complementary capabilities of the two methods. Finally, the system integrates an IoT-based webpage that serves as a centralized hub for data visualization, allowing users to monitor the system’s performance and make informed decisions. By integrating IoT, machine learning, and advanced optimization techniques, this system aims to deliver a solution for managing the complex energy landscape of the future. To assess the effectiveness of the proposed HUG-RF classifier, a comparative evaluation is conducted against conventional machine learning models, specifically the RF and Support Vector Machine (SVM) approaches. The performance of these traditional classifiers is analyzed and benchmarked to highlight the improvements brought by the HUG-RF classifier. This comparison aims to provide a detailed understanding of how the HUG-RF model outperforms SVM and RF in terms of accuracy, latency, packet delivery ratio and energy consumption. The results and insights from this evaluation are discussed in the forthcoming sections.

IoT based smart grid monitoring system

An IoT-based smart grid monitoring system provides a comprehensive and integrated approach to managing and optimizing the various energy generation and storage components within a modern power grid. At the heart of this system is the integration of clean energy sources, including PV panels and wind turbines, along with energy storage solutions such as batteries. The IoT connectivity permits control and monitoring of these distributed energy resources, enabling the system to adapt to changing energy requirements and environmental conditions.

The PV and wind generation units are continuously monitored for their output power, voltage, current, and other critical parameters, allowing the system to track the energy harvesting performance and identify any anomalies or degradation in the renewable energy sources. Figure 2 showcases the IoT based smart grid monitoring system architecture. The battery system is also closely monitored, with data on the state of charge, charging and discharging rates, and overall battery health being transmitted to the central control system. This information is then used to optimize the discharging and charging cycles, ensuring the efficient utilization of the stored energy and increasing the battery’s lifetime. Additionally, the IoT-enabled smart grid monitoring system integrates with the main utility grid, providing visibility into the grid’s power flow, voltage levels, and any potential disturbances. This holistic approach allows the system to make informed decisions on when to draw power from the grid, when to utilize the renewable energy sources, and when to discharge the battery storage. This ultimately enhances the overall reliability, efficiency, and sustainability of the power grid.

Pre-processing stage

In the preprocessing stage, the data undergoes two key transformations to prepare it for the subsequent analysis and modeling. Initially, the categorical variables in the dataset are encoded using the one-hot encoding technique. Following the one-hot encoding, the next preprocessing step involves dimensionality reduction using the LDA method. LDA is selected for preprocessing in the SGMS due to its ability to reduce data dimensionality while preserving class separability, which is essential for efficient machine learning processing. In IoT-based smart grids, large volumes of heterogeneous data are generated from various sources, including PV systems, wind systems, batteries and the grid. Directly processing high-dimensional data leads to increased computational complexity and slower response times. LDA addresses this issue by projecting the data onto a lower-dimensional space where class distinctions remain maximized. This improves the efficiency of the machine learning model and classification accuracy by minimizing redundancy and noise in the dataset. Consequently, LDA contributes to optimizing data transmission and monitoring, making it a suitable choice for preprocessing in the proposed SGMS.

One-hot encoding

In an IoT-based smart grid system, one-hot encoding is an essential preprocessing technique used to convert categorical data into a numerical format suitable for machine learning algorithms. Each unique category of a categorical variable is transformed into a binary column, where 1 indicates the presence of that category and 0 indicates its absence. For a device-type variable, for example, each column reflects the presence of the respective device type in the original dataset. The first phase is to encode the n states: each state is assigned one digit, so n digits are necessary to represent n states. When a state is attained, the related digit is 1 and the remaining digits are 0. Consequently, any feature with m possible values is converted into m binary features. Only one of these features can be active at a time, so they are mutually exclusive, and the resulting data are sparse. In addition to making categorical attributes usable, one-hot encoding generates sparse label variables with matching dimensions from the many labels in the experimental data set.
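The encoding described above can be illustrated with a minimal Python sketch. The source labels (`pv`, `wind`, `battery`) and the helper name `one_hot_encode` are hypothetical and chosen only for illustration; the manuscript does not specify its implementation.

```python
def one_hot_encode(values):
    """Return (categories, rows); each row contains exactly one 1."""
    categories = sorted(set(values))            # fixed, reproducible column order
    index = {cat: i for i, cat in enumerate(categories)}
    rows = []
    for v in values:
        row = [0] * len(categories)             # m possible values -> m binary features
        row[index[v]] = 1                       # only the matching category is active
        rows.append(row)
    return categories, rows


# Hypothetical source labels attached to four sensor readings
sources = ["pv", "wind", "battery", "pv"]
categories, encoded = one_hot_encode(sources)
print(categories)  # ['battery', 'pv', 'wind']
print(encoded)     # [[0, 1, 0], [0, 0, 1], [1, 0, 0], [0, 1, 0]]
```

Each row sums to 1, which reflects the statement that only one of the binary features can be active at a time.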

Dimensionality reduction using LDA method

LDA is a supervised dimensionality reduction approach that uses a linear projection. The method minimizes within-class scatter while maximizing between-class scatter. LDA is also known as Fisher’s linear discriminant analysis. It is mainly used for feature extraction prior to classification, reducing the dimension of the feature vectors without sacrificing class-relevant information. The primary goal of LDA is to project a dataset with a large number of attributes onto a lower-dimensional space with adequate class separation, resulting in lower computing costs39. Two scatter matrices are established in LDA from the samples of all classes:

1. Within-class scatter matrix.

$$S_{w}=\sum_{j=1}^{c}\sum_{i=1}^{N_{j}}(x_{i}^{j}-\mu_{j})(x_{i}^{j}-\mu_{j})^{T} \tag{1}$$

where \(c\) is the number of classes, \(x_{i}^{j}\) is the \(i\)th sample of class \(j\), \(\mu_{j}\) is the mean of class \(j\), and \(N_{j}\) is the number of samples in class \(j\).

2. Between-class scatter matrix.

$$S_{b}=\sum_{j=1}^{c}(\mu_{j}-\mu)(\mu_{j}-\mu)^{T} \tag{2}$$

where \(\mu\) denotes the mean of all classes. The LDA approach then applies the linear discriminant principle to these two matrices.
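As a worked illustration of Eqs. (1) and (2), the following sketch computes both scatter matrices in plain Python for a tiny two-class dataset. The class names and sample values are hypothetical, and \(\mu\) is taken here as the mean over all samples, which is an assumption (for equally sized classes it coincides with the average of the class means).

```python
def mean_vector(rows):
    """Column-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def outer(u, v):
    """Outer product u v^T as a nested list."""
    return [[a * b for b in v] for a in u]


def add_matrix(acc, m):
    """Element-wise sum of two matrices of the same shape."""
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(acc, m)]


def scatter_matrices(samples_by_class):
    all_rows = [x for rows in samples_by_class.values() for x in rows]
    d = len(all_rows[0])
    mu = mean_vector(all_rows)                      # overall mean (assumption, see lead-in)
    s_w = [[0.0] * d for _ in range(d)]
    s_b = [[0.0] * d for _ in range(d)]
    for rows in samples_by_class.values():
        mu_j = mean_vector(rows)                    # class mean
        for x in rows:                              # Eq. (1): within-class scatter
            diff = [xi - mi for xi, mi in zip(x, mu_j)]
            s_w = add_matrix(s_w, outer(diff, diff))
        gap = [mj - m for mj, m in zip(mu_j, mu)]   # Eq. (2): between-class scatter
        s_b = add_matrix(s_b, outer(gap, gap))
    return s_w, s_b


# Hypothetical two-feature readings for two operating states
data = {"normal": [[1.0, 2.0], [3.0, 4.0]], "fault": [[5.0, 6.0], [7.0, 8.0]]}
s_w, s_b = scatter_matrices(data)
print(s_w)  # [[4.0, 4.0], [4.0, 4.0]]
print(s_b)  # [[8.0, 8.0], [8.0, 8.0]]
```

The discriminant directions are then obtained from these two matrices; that eigen-decomposition step is omitted here to keep the sketch short.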

The missing values in the dataset were imputed using mean values for numerical features and mode values for categorical features to maintain data consistency. When the share of missing data in a feature exceeds a predefined threshold, the feature is removed to avoid introducing bias into the model. Z-score analysis is used to identify outliers, that is, extreme values that distort the learning process of the model. One-hot encoding is applied to categorical variables to convert them into multiple binary columns, improving interpretability and compatibility with machine learning models. This transformation reduces potential biases in feature importance by assigning equal weights to all categories. LDA retains the most discriminative features, maintaining predictive power with reduced computational overhead. This process supports efficient and robust classification of the various operational states in smart grids, aiding monitoring and decision-making. Min-max scaling brings the numerical features to consistent ranges, which prevents the dominance of high-value attributes.
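The numerical cleaning steps in this paragraph (mean imputation, z-score outlier detection and min-max scaling) can be sketched as follows. This is a minimal illustration using only the standard library; the z-score threshold of 3 and the sample voltage values are assumptions, since the manuscript does not state them.

```python
import statistics


def impute_mean(column):
    """Replace missing entries (None) with the mean of the observed values."""
    observed = [v for v in column if v is not None]
    mean = statistics.fmean(observed)
    return [mean if v is None else v for v in column]


def zscore_outliers(column, threshold=3.0):
    """Flag values whose absolute z-score exceeds the (assumed) threshold."""
    mean = statistics.fmean(column)
    std = statistics.pstdev(column)
    if std == 0:
        return [False] * len(column)
    return [abs((v - mean) / std) > threshold for v in column]


def min_max_scale(column):
    """Rescale values to the range [0, 1]."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0] * len(column)
    return [(v - lo) / (hi - lo) for v in column]


# Hypothetical grid voltage readings with one missing sample
voltages = impute_mean([230.0, None, 232.0, 228.0])
print(voltages)                 # [230.0, 230.0, 232.0, 228.0]
print(min_max_scale(voltages))  # [0.5, 0.5, 1.0, 0.0]
```

Mode imputation for categorical features follows the same pattern with `statistics.mode` in place of the mean.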

Shortest path detection using optimized RF classifier

The HUG-RF classifier combines the strengths of multiple techniques to improve the detection of the shortest path in a smart grid system. This approach pairs the Gazelle algorithm’s optimization capabilities with the robustness of the random forest classifier, providing a powerful tool for path detection. Unlike traditional classifiers, which rely on static decision-making models, HUG-RF integrates the Gazelle Optimization Algorithm with an enhanced RF model to dynamically identify the efficient path. The algorithm inherits the adaptive behavior of gazelles, supporting optimal path selection while reducing communication overhead.

The integration of the Updated Gazelle Optimization Algorithm with the Random Forest classifier in the HUG-RF framework is realized through a tightly coupled training–optimization cycle. Initially, sensor data from IoT-enabled smart grid nodes undergoes preprocessing before being fed into the RF training module. The RF is constructed using a predefined number of unpruned decision trees, with both pre-pruning and post-pruning strategies applied to prevent overfitting. While the RF classifier generates candidate routing decisions based on learned patterns from training data, the optimization layer dynamically refines these decisions by adjusting key RF parameters. These include the number of features considered at each split, the maximum depth of trees, and the threshold for leaf node creation. The UGOA evaluates the performance of the RF outputs using multi-objective criteria including latency, energy consumption and packet loss. It guides the RF toward configurations that maximize classification accuracy while minimizing computational overhead. Additionally, by perturbing and re-evaluating elite solutions, the optimizer ensures that the RF avoids overfitting to static conditions and remains adaptive to IoT grid dynamics. Thus, optimization not only fine-tunes the RF model’s hyperparameters but also provides feedback-driven adjustments that improve its predictive robustness and routing efficiency in dynamic smart grid environments.
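One way to picture the coupling between the optimizer and the RF is to treat each gazelle position as an encoded hyperparameter set. The sketch below decodes a point in the unit cube into the three parameters named above. The function name `decode_position` and the numeric bounds are hypothetical; the actual ranges used by the authors are those listed in their parameter table.

```python
def decode_position(position, n_total_features):
    """Map a point in [0, 1]^3 to RF hyperparameters (illustrative bounds only)."""

    def scale(u, lo, hi):
        u = min(max(u, 0.0), 1.0)        # clamp so perturbed positions stay valid
        return lo + round(u * (hi - lo))

    return {
        "max_features": scale(position[0], 1, n_total_features),  # features per split
        "max_depth": scale(position[1], 2, 20),                   # assumed depth range
        "min_samples_leaf": scale(position[2], 1, 10),            # assumed leaf threshold
    }


print(decode_position([0.0, 0.0, 0.0], n_total_features=8))
# {'max_features': 1, 'max_depth': 2, 'min_samples_leaf': 1}
print(decode_position([1.0, 1.0, 1.0], n_total_features=8))
# {'max_features': 8, 'max_depth': 20, 'min_samples_leaf': 10}
```

With such a mapping, the optimizer can search a continuous space while the RF is always trained with valid integer settings.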

During runtime, the trained RF predicts potential candidate paths for data transmission based on grid conditions. These candidate paths are then optimized using the Gazelle Optimization Algorithm, which dynamically updates path weights through logistic mapping and Gaussian mutation coefficients to ensure global exploration and fast convergence. The elite solutions from the gazelle population correspond to optimal routing decisions, which are periodically refined by perturbation strategies to avoid stagnation. The optimized path output is then re-evaluated against network parameters and feedback is used to retrain the RF ensemble at fixed intervals, ensuring adaptability to changing grid dynamics. The implementation integrates the RF’s parallelizable architecture for distributed decision-tree processing and the lightweight computation of the Gazelle algorithm. This results in a scalable and energy-efficient shortest path detection mechanism for IoT-based smart grids.
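The re-evaluation of candidate paths against network parameters can be sketched as a weighted multi-objective cost over latency, energy consumption and packet loss. The weights, normalization constants and the example paths below are assumptions made for illustration only; the manuscript does not give the exact objective function.

```python
from dataclasses import dataclass


@dataclass
class CandidatePath:
    name: str
    latency_ms: float
    energy_mj: float
    packet_loss: float  # fraction between 0 and 1


def path_cost(path, w_latency=0.4, w_energy=0.3, w_loss=0.3,
              max_latency_ms=100.0, max_energy_mj=10.0):
    """Weighted sum of normalized objectives; lower is better (assumed weights)."""
    return (w_latency * path.latency_ms / max_latency_ms
            + w_energy * path.energy_mj / max_energy_mj
            + w_loss * path.packet_loss)


def select_path(paths):
    """Return the candidate with the smallest combined cost."""
    return min(paths, key=path_cost)


# Hypothetical candidates, e.g. as proposed by the trained classifier
candidates = [
    CandidatePath("A", latency_ms=20.0, energy_mj=2.0, packet_loss=0.01),
    CandidatePath("B", latency_ms=10.0, energy_mj=5.0, packet_loss=0.05),
    CandidatePath("C", latency_ms=40.0, energy_mj=1.0, packet_loss=0.00),
]
best = select_path(candidates)
print(best.name)  # A
```

Path B has the lowest latency and path C the lowest energy use, yet path A wins on the combined cost, which illustrates why a multi-objective criterion can differ from a pure shortest-delay choice.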

Additionally, the updated RF module enhances prediction accuracy by integrating an ensemble learning strategy, mitigating overfitting and improving classification robustness. The classifier’s multi-objective optimization capability targets minimal latency, reduced energy consumption and reduced packet loss, making it superior to conventional RF models, which suffer from high computational complexity.

Random forest classifier

In ensemble learning, random forests build multiple decision trees during training and return the mode of the predicted classes (classification) or the mean of the predictions (regression). The approach is particularly effective for handling large datasets with high dimensionality, which is typical in IoT-based systems. RF is an ensemble of tree-structured base classifiers. Such datasets frequently have a large number of dimensions, many of which are uninformative; only a few crucial attributes are useful for classifier models. The RF algorithm selects the most relevant feature based on a predefined probability. It maps random samples of feature subspaces to multiple decision trees. \(K\) unpruned trees (\(h_{1}(X)\), \(h_{2}(X)\), etc.) are merged into a random forest, and the class with the highest likelihood value is selected for classification (d).

Pseudocode of RF classifier

1. Randomly choose \(n\) features from a total of \(k\) features, where \(n \ll k\).

2. Among the \(n\) features, compute the node using the optimum split point.

3. Split the node into daughter nodes using the optimal split.

4. Repeat steps 1 to 3 until \(l\) nodes have been reached.

5. Construct a forest by repeating steps 1 through 4 \(K\) times to generate \(K\) trees.
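The steps above can be sketched in a few lines of Python. To stay short, this illustration grows depth-one trees (decision stumps) instead of full trees, so steps 2 to 4 collapse into a single split per tree; that simplification, the bootstrap sampling and all names are assumptions of the sketch, not the authors' implementation.

```python
import random
from collections import Counter


def majority(labels):
    """Most frequent label in a non-empty list."""
    return Counter(labels).most_common(1)[0][0]


def fit_stump(X, y, feature_ids):
    """Steps 2-3: pick the (feature, threshold) split with the fewest training errors."""
    best = None
    for f in feature_ids:
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            l_lab, r_lab = majority(left), majority(right)
            errors = (sum(lab != l_lab for lab in left)
                      + sum(lab != r_lab for lab in right))
            if best is None or errors < best[0]:
                best = (errors, f, t, l_lab, r_lab)
    if best is None:                       # no valid split: always predict the majority
        m = majority(y)
        return (0, float("inf"), m, m)
    return best[1:]


def fit_forest(X, y, n_trees=25, n_features=1, seed=0):
    """Step 5: repeat the tree construction to obtain the forest."""
    rng = random.Random(seed)
    k = len(X[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in X]      # bootstrap sample of the rows
        feats = rng.sample(range(k), n_features)      # step 1: n of the k features
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feats))
    return forest


def predict(forest, row):
    """Majority vote over the trees (mode of the class predictions)."""
    votes = [l if row[f] <= t else r for f, t, l, r in forest]
    return majority(votes)


# Hypothetical two-feature samples for two well-separated classes
X = [[1, 1], [2, 1], [1, 2], [2, 2], [8, 9], [9, 8], [8, 8], [9, 9]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
forest = fit_forest(X, y)
print(predict(forest, [1, 1]), predict(forest, [9, 9]))
```

Using an odd number of trees avoids tied votes in the two-class case.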

To obtain the best routing selection for efficient data transmission, the RF parameters are tuned using the updated gazelle optimization algorithm.

Gazelle algorithm optimization

The Gazelle algorithm is known for its efficiency in solving optimization problems and is chosen for its ability to both explore and exploit the search space. As a bio-inspired metaheuristic, the algorithm mimics the adaptive movement and survival strategies of gazelles, which are known for their swift decision-making in response to dynamic environmental changes. This characteristic makes the Gazelle algorithm particularly suitable for optimizing routing paths in IoT-based smart grid systems, where network conditions and data flow patterns frequently change. Unlike traditional optimization techniques, which may suffer from local optima stagnation or slow convergence, the Gazelle algorithm balances exploration and exploitation to achieve an efficient routing strategy. In the context of a smart grid, it is used to optimize the selection of paths based on parameters such as latency, bandwidth, and energy consumption. The updated Gazelle algorithm incorporates data from IoT devices, so that the optimization process is dynamic and responsive to current grid conditions. The optimization process is based on gazelle survival behavior, which alternates between foraging and evading a hunter as the candidate solutions are updated. During the exploitation stage, when there is no hunter, foraging occurs; during the exploration stage, escaping happens. Figure 3 illustrates the flowchart of the HUG-RF classifier for shortest path detection.

Exploitation stage

Gazelles forage in the absence of predators, and this process is based on the idea that each gazelle’s location follows Brownian motion. The procedure for updating a gazelle’s position is outlined below:

where \(v\) denotes the foraging velocity; \(x_{i}^{p1}\) and \(x_{i}\) reflect the locations of the \(i\)th gazelle prior to and following the initial location update step; \(Elite\) denotes the location of the best gazelle; the update is scaled by a random number that ranges between \(0\) and \(1\); and \(R_{b}\) is a vector of random numbers representing Brownian motion.
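A foraging step of this kind can be sketched in Python as follows. The update rule used here follows the form in which the Gazelle Optimization Algorithm is commonly published (a Brownian step biased toward the elite position); treating it as the rule intended in this section is an assumption, as are the function name and the use of a single uniform random number per step.

```python
import random


def exploitation_step(position, elite, velocity, rng):
    """One Brownian foraging move toward the elite gazelle (assumed GOA form)."""
    r = rng.random()                      # uniform random number in [0, 1)
    new_position = []
    for x, e in zip(position, elite):
        rb = rng.gauss(0.0, 1.0)          # Brownian component: standard normal draw
        new_position.append(x + velocity * r * rb * (e - rb * x))
    return new_position


rng = random.Random(42)
moved = exploitation_step([1.0, 2.0], elite=[0.5, 0.5], velocity=0.88, rng=rng)
print(moved)
```

With a velocity of zero the position is unchanged, so the foraging velocity directly controls how far each gazelle moves during exploitation.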

Exploration stage

The gazelles respond to the presence of a hunter, and their positions are updated using the Lévy flight mode. It is hypothesized that the escape begins in Brownian motion and later transitions to Lévy flight mode. The gazelle’s current position update formula is as follows:

In this equation, \(x_{i}^{p2,1/2}\) is the current location of the \(i\)th gazelle in the \(j\)th dimension after the subsequent location update step, \(\mu_{g}\) is the directional feature parameter, and \(r_{2}\) is a randomized value ranging from \(0\) to \(1\)40.

Updated gazelle optimization

The Updated Gazelle Optimization Algorithm enhances the conventional Gazelle Optimization Algorithm by introducing additional mechanisms that improve convergence speed, exploration capability and solution stability. The conventional GOA primarily relies on the adaptive movement patterns of gazelles modeled through Brownian motion and Lévy flight for exploration and exploitation. The updated version incorporates three major improvements. First, logistic mapping is applied during initialization to generate a more diverse and uniformly distributed population, reducing the risk of premature convergence. Second, a Gaussian mutation coefficient and logarithmic inertia weight are integrated into the position update equations, enabling a better balance between global search and local refinement. This modification prevents stagnation in local optima and enhances adaptability to dynamic environments. Finally, a local optimal perturbation strategy is introduced, where elite solutions are periodically perturbed and re-evaluated, ensuring that only the most promising candidates are retained. These updates collectively make the UGOA more robust, lightweight, and responsive compared to the conventional GOA, which may suffer from slower convergence and local optima entrapment.

Initialization of logistic mapping

This work uses logistic mapping to update the conventional gazelle optimization, increasing the unpredictability and uniformity of the initial population in the gazelle optimization method. The logistic map is outlined as follows:

where \(\psi\) is the logistic factor and ranges from \(0\) to \(4\).
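For illustration, the standard logistic map iterates \(x_{n+1}=\psi x_{n}(1-x_{n})\). The sketch below uses it to seed an initial population within given bounds. The starting value `x0`, the choice \(\psi = 4\) and the mapping of the chaotic sequence onto the search bounds are assumptions; the manuscript's own initialization equation may differ in detail.

```python
def logistic_sequence(x0, psi, length):
    """Iterate the logistic map x <- psi * x * (1 - x) and collect the values."""
    if not 0.0 < x0 < 1.0:
        raise ValueError("x0 must lie strictly between 0 and 1")
    if not 0.0 < psi <= 4.0:
        raise ValueError("psi must lie in (0, 4]")
    seq = []
    x = x0
    for _ in range(length):
        x = psi * x * (1.0 - x)
        seq.append(x)
    return seq


def init_population(n_gazelles, dim, lower, upper, x0=0.37, psi=4.0):
    """Spread the chaotic sequence over [lower, upper] in every dimension."""
    chaos = logistic_sequence(x0, psi, n_gazelles * dim)
    return [
        [lower + chaos[i * dim + j] * (upper - lower) for j in range(dim)]
        for i in range(n_gazelles)
    ]


population = init_population(n_gazelles=5, dim=3, lower=-10.0, upper=10.0)
for gazelle in population:
    print(gazelle)
```

For \(\psi\) close to 4 the sequence behaves chaotically, which is the property used to diversify the starting positions.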

Gaussian mutation coefficient and logarithmic inertia weight

Integrating the Gaussian mutation factor and logarithmic inertia weight to the gazelle’s location updating formula in the initial and subsequent phases of the gazelle optimization method can improve the global search capability in the iterative procedure.

where \(\omega\) is the logarithmic inertia factor; \(w_{max}\) and \(w_{min}\) are the maximum and minimum inertia weights; \(\epsilon\) is a randomized parameter associated with the present iteration number; \(k\) is the index of the Gaussian mutation; and \(r_{5}\), \(r_{6}\) are two random values ranging from \(0\) to \(1\).

The modified location update formula for gazelles is as follows:

Here, \(\upsilon\) is the foraging velocity, \(x_{i}\) is the updated position of the \(i\)th gazelle, and \(r_{1}\), \(r_{2}\) are random values.

Local optimal perturbation strategy

The local perturbation of the optimal individual within its neighborhood is stated as follows:

The optimal individual’s locations prior to and following the perturbation are represented by \(Elite\) and \(Elite'\), with \(r_{5}\) being a random number ranging from \(0\) to \(1\).

\(Elite^{*}\) indicates the updated location, while \(F(Elite)\) and \(F(Elite')\) are the corresponding objective function values.
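The perturb-then-select idea can be sketched as below: the elite is nudged at random and the new point is kept only if it improves the objective. The perturbation form (a uniform shift within a fixed radius), the radius value and the minimization convention are assumptions for illustration; only the greedy comparison of \(F(Elite)\) and \(F(Elite')\) is taken from the text.

```python
import random


def perturb_elite(elite, objective, radius=0.1, rng=None):
    """Return the perturbed elite if it is better, otherwise the original elite."""
    rng = rng or random.Random()
    # Candidate Elite': each coordinate shifted by a random amount in [-radius, radius)
    candidate = [x + radius * (2.0 * rng.random() - 1.0) for x in elite]
    # Greedy selection (minimization): keep whichever has the lower objective value
    if objective(candidate) < objective(elite):
        return candidate
    return elite


def sphere(x):
    """Simple test objective with its minimum at the origin."""
    return sum(v * v for v in x)


elite = [1.0, -2.0]
updated = perturb_elite(elite, sphere, rng=random.Random(7))
print(sphere(updated) <= sphere(elite))  # True
```

Because the original elite is returned whenever the candidate is worse, the step can never degrade the best solution found so far.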

Pseudocode of updated Gazelle optimized RF classifier.

Pseudocode for the full model pipeline.

Table 2 provides the parameters of the HUG-RF classifier. To avoid overfitting, two pruning approaches are adopted. Pre-pruning restricts tree growth by limiting the depth and requiring a minimum number of samples per leaf. Stopping tree expansion before it captures noise in the training data prevents the excessive branching that leads to memorization and helps maintain generalization. Post-pruning further refines the model by evaluating each decision node's contribution to the overall classification accuracy and removing nodes that contribute minimally or increase variance, so that the final model retains only the most essential decision paths. In the HUG-RF classifier, these techniques are applied across multiple trees, reducing the likelihood of individual trees overfitting while maintaining ensemble diversity. Together, pre-pruning and post-pruning reduce variance and lead to more stable and accurate predictions on unseen data.

To check that the HUG-RF classifier generalizes to new, unseen data, testing is conducted using cross-validation and evaluation on an external dataset. A 10-fold cross-validation strategy is employed: the dataset is divided into 10 subsets, and in each fold the model is trained on 9 subsets and tested on the remaining one, so that every sample is used for both training and validation. This approach assesses the model's consistency across different data splits and helps reduce the risk of overfitting. Additionally, the model's robustness is evaluated on an independent external dataset collected from a separate IoT-based smart grid system. The classification accuracy on this dataset remains high, demonstrating the model's ability to adapt to unseen patterns without significant performance degradation. In conclusion, the HUG-RF classifier provides a robust and efficient solution for shortest path detection in IoT-monitored smart grid systems, supporting efficient data transmission and resource utilization.
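The 10-fold procedure described above can be illustrated with a small index-splitting sketch. The helper name `k_fold_indices`, the shuffling seed and the round-robin fold assignment are assumptions for illustration; only the fold count and the sample total come from the text.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Assign samples round-robin so the folds are as even as possible.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        val_idx = folds[i]
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, val_idx


# 10,000 samples, as in the dataset described in this work.
splits = list(k_fold_indices(n_samples=10_000, k=10))
```

Each sample index appears in exactly one validation fold and in the training set of the other nine folds.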

The proposed work employs LDA for dimensionality reduction, which significantly decreases computational burden by eliminating irrelevant features while preserving class discriminability. Additionally, the Gazelle Optimization Algorithm is lightweight compared to more computationally intensive nature-inspired optimization algorithms, as it requires fewer iterations and minimal computational resources to converge to an optimal solution. The updated RF component further enhances efficiency by integrating an optimized decision tree structure, reducing redundancy and unnecessary computations that typically occur in traditional RF implementations. Moreover, the parallelizable nature of RF allows for distributed processing, reducing latency and improving responsiveness. The streamlined shortest path identification process in HUG-RF also minimizes communication overhead, ensuring faster and more energy-efficient data transmission. Compared to hybrid classifiers, HUG-RF offers a balance between computational efficiency and predictive accuracy, making it ideal for IoT-based smart grid applications with constrained processing capabilities.

Results and discussion

This work proposes an innovative optimized RF classifier for IoT based smart grid monitoring system. The proposed concept is evaluated using the MATLAB R2022a environment combined with an Arduino/ESP8266 NodeMCU IoT test bed for data acquisition and transmission. The IoT environment is configured with PV modules rated at 250 W, a small-scale wind turbine emulator of 300 W, a 24 V/50 Ah lithium-ion battery storage system and grid interface units operating at 230 V, 50 Hz. The communication layer employed ESP8266 Wi-Fi modules transmitting to a local IoT server with an average bandwidth of 10 Mbps and latency of 50 ms. For statistical reliability, each experiment is repeated for 10 independent runs and the reported results are averaged across these runs. Furthermore, paired t-tests are conducted to verify the statistical significance of improvements over conventional routing approaches.
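As a reminder of what the paired t-test computes, the sketch below evaluates the t statistic on per-run differences between two methods. The delay values are hypothetical placeholders, not measurements from this study, and the helper name `paired_t_statistic` is an assumption.

```python
import math
import statistics


def paired_t_statistic(a, b):
    """Return (t, degrees_of_freedom) for paired samples a and b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    return mean_d / (sd_d / math.sqrt(n)), n - 1


# Hypothetical average delays (ms) over 10 independent runs.
baseline = [6.1, 5.9, 6.3, 6.0, 6.2, 5.8, 6.1, 6.0, 6.2, 5.9]
proposed = [4.1, 4.0, 4.2, 3.9, 4.1, 4.0, 4.0, 3.9, 4.2, 4.0]
t_stat, dof = paired_t_statistic(baseline, proposed)
```

The resulting statistic is compared against the t distribution with `dof` degrees of freedom (9 for 10 runs) to obtain a p-value.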

The dataset used for developing and evaluating the proposed HUG-RF classifier is generated from an IoT-enabled smart grid test bed consisting of PV panels, wind turbine systems, grid supply units and battery storage modules. A total of 10,000 samples are collected over a continuous monitoring period, where each sample corresponds to a time-stamped observation of grid operating conditions. The dataset features include electrical parameters such as voltage, current, power, frequency and state of charge of batteries. The class labels for supervised learning correspond to routing path categories, indicating whether a transmission route is optimal, sub-optimal, or inefficient under the given operating conditions. To ensure generalizability, the dataset is divided into 70% training, 15% validation and 15% testing subsets.
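For the 10,000 collected samples, the 70/15/15 division corresponds to 7,000 training, 1,500 validation and 1,500 testing samples. A minimal sketch of that arithmetic follows; the helper name `split_sizes` is an assumption, and assigning any rounding remainder to the test subset is a design choice of this sketch.

```python
def split_sizes(n_samples, fractions=(0.70, 0.15, 0.15)):
    """Return (train, validation, test) subset sizes."""
    train = round(n_samples * fractions[0])
    val = round(n_samples * fractions[1])
    # Give any rounding remainder to the test subset.
    test = n_samples - train - val
    return train, val, test


train_n, val_n, test_n = split_sizes(10_000)
```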

The collected data is fed into the pre-processing stage, where one-hot encoding is applied to enhance data compatibility. LDA then lowers the data dimensionality while preserving class discriminability. The pre-processed data is transmitted to an IoT webpage for data visualization using the Hybrid Updated Gazelle-Random Forest classifier. The simulation results are discussed in this section. The monitored parameters of the IoT-based smart grid, including solar panel voltage and current, battery SOC, DC link voltage, and grid voltage and current, are displayed in Fig. 4. The proposed smart grid system utilizes a single bus system for easy validation, meaning that all the components of the grid are connected to a common point. The parameter values of the developed system are presented in Table 3.
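The one-hot encoding step can be sketched in a few lines. The categorical feature shown here (the energy source of each sample) and the helper name `one_hot_encode` are hypothetical, since the text does not list which fields are categorical.

```python
def one_hot_encode(values, categories=None):
    """Map each categorical value to a binary indicator vector."""
    if categories is None:
        categories = sorted(set(values))
    index = {cat: i for i, cat in enumerate(categories)}
    encoded = []
    for v in values:
        row = [0] * len(categories)
        row[index[v]] = 1  # exactly one active position per sample
        encoded.append(row)
    return categories, encoded


# Hypothetical categorical column: the source that produced each sample.
sources = ["pv", "wind", "grid", "battery", "pv"]
cats, rows = one_hot_encode(sources, categories=["pv", "wind", "grid", "battery"])
```

Each category becomes its own binary column, so no artificial ordering is imposed on the categories before the data reaches LDA and the classifier.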

Performance metrics

The efficacy of the proposed HUG-RF approach is evaluated using five performance indices: average delay, routing overhead ratio, average throughput, packet delivery ratio (PDR), and maximum energy consumption. Unlike existing algorithms, the proposed HUG-RF algorithm is evaluated for packet sizes ranging from \(128\) to \(1024\) bytes, with \(50\) nodes. Throughput measures the data transfer rate, indicating overall network capacity. PDR reflects reliability by assessing the successful delivery of packets. Delay quantifies latency, which is crucial for time-sensitive applications, while energy consumption determines the sustainability of the system, particularly in resource-constrained environments. The trade-offs between these metrics are significant; for instance, increasing throughput may lead to higher energy consumption, while minimizing delay could reduce PDR due to potential packet loss in faster transmissions. PDR is often the most critical metric, as it directly reflects the network's reliability and whether data transmission remains accurate and consistent, which is crucial for decision-making in networked systems.
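The metrics above are commonly computed from simple packet counters. The sketch below uses widely used textbook definitions, which are assumptions here because the exact formulas are not given in the text; in particular, definitions of routing overhead vary between studies. All input numbers are illustrative placeholders.

```python
def network_metrics(sent, received, control_packets, payload_bytes,
                    duration_s, delays_ms):
    """Compute PDR, throughput, average delay and routing overhead.

    Assumed definitions:
      PDR (%)           = received / sent * 100
      throughput (kbps) = received payload bits / duration / 1000
      overhead (%)      = control / (control + received data packets) * 100
    """
    pdr = 100.0 * received / sent
    throughput_kbps = received * payload_bytes * 8 / duration_s / 1000.0
    avg_delay_ms = sum(delays_ms) / len(delays_ms)
    overhead_pct = 100.0 * control_packets / (control_packets + received)
    return {
        "pdr_pct": pdr,
        "throughput_kbps": throughput_kbps,
        "avg_delay_ms": avg_delay_ms,
        "overhead_pct": overhead_pct,
    }


# Illustrative inputs only: 1000 packets of 512 bytes sent over 20 s.
metrics = network_metrics(sent=1000, received=990, control_packets=10,
                          payload_bytes=512, duration_s=20.0,
                          delays_ms=[4.0, 5.0, 6.0])
```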

To ensure a fair and unbiased comparison, both Support Vector Machine (SVM) and Random Forest (RF) baselines are carefully tuned using standard hyperparameter optimization strategies prior to evaluation. For the SVM, kernel functions (linear, polynomial, radial basis function (RBF)) are tested and the best-performing kernel (RBF) with an optimized penalty parameter 10 and kernel coefficient 0.01 is selected. For the RF baseline, the number of trees, maximum tree depth and feature subset size are varied, with the optimal configuration determined as 100 trees, maximum depth of 15, ensuring the model is not underfitted or overfitted. Both models are trained and validated under the same dataset splits, preprocessing pipeline and evaluation metrics as the proposed HUG-RF method. Moreover, cross-validation is applied to confirm stability across multiple runs.

Table 4 shows that the proposed HUG-RF outperforms SVM and RF with regard to throughput, delay, packet delivery ratio, routing overhead and energy consumption, with values of 299.1 \(\:kbps\), 4.0 \(\:ms\), 99.96%, 2.3%, and 0.36%, respectively.

According to Fig. 5, the throughput of SVM, RF and HUG-RF varies with packet size. For all three approaches, the maximum throughput rises as the packet size increases, and the proposed HUG-RF algorithm consistently attains the highest throughput. This advantage is attributed to the HUG approach, which optimizes how packets are grouped and forwarded so that they reach their destination more reliably and efficiently than with the standard SVM and RF methods.

The average end-to-end delay of the SVM, RF and HUG-RF classifiers varies with packet length, as shown in Fig. 6. For all three techniques, the average latency increases with packet size. HUG-RF shows the lowest latency of the three because it combines the HUG scheduling technique with the least-hop-count route to speed up packet processing at each node. Traditional SVM and RF methods do not incorporate such scheduling and routing strategies, so their average latency is significantly higher.

Figure 7 indicates that the packet delivery ratio of each of the three approaches changes with packet size. A greater PDR is achieved as the packet size increases, because larger packets carry more data over the network. The results show that the HUG-RF classifier outperforms the SVM and RF routing schemes. HUG-RF dynamically adapts to network conditions and selects the most reliable and shortest communication paths, which reduces congestion, minimizes delays and speeds up packet delivery to target nodes. By effectively managing high node density, it also maintains strong network connectivity and lowers the likelihood of packet loss. Larger packets benefit from this optimized routing, as more data is transmitted with fewer disruptions, further increasing PDR. This ability to balance network load and keep connectivity stable makes HUG-RF well suited to smart grid monitoring systems, where reliable communication is essential.

Figure 8 shows how the routing overhead of the three algorithms is affected by changing packet sizes. For all three techniques, routing overhead increases with packet size. The proposed method has a lower routing overhead than SVM and RF because the HUG-based packet scheduling mechanism significantly raises the packet delivery ratio along the selected path. A higher delivery ratio reduces the overhead associated with managing and re-establishing routes, even as packet sizes grow, so less network bandwidth and fewer computational resources are spent on routing tasks.

Figure 9 shows how packet size affects the average energy usage of the three methods. As packet sizes rise, the average energy consumption of all algorithms increases, because more energy is needed for broadcasts and packet transfers when network demand rises. The HUG-RF classifier uses less energy than SVM and RF because it selects the data transmission path with the least energy cost, using the minimal hop count route and the HUG technique. The optimised routing method also permits ongoing monitoring of IoT parameters, which supports enhanced cyber security for smart grid applications. The energy used for data reception and transmission is computed in this section. Here, the network lifespan is defined as the number of rounds elapsed before the first sensor node's residual energy reaches zero.
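The lifetime definition can be illustrated by counting rounds until the first node is depleted. The constant per-round energy cost and the numeric values below are simplifying assumptions for this sketch, not the energy model used in the simulations.

```python
def rounds_until_first_node_dies(initial_energy_j, cost_per_round_j):
    """Return the round in which the first node's residual energy reaches zero.

    Assumption: every node spends a fixed, positive amount of energy
    per round on reception and transmission.
    """
    if any(c <= 0 for c in cost_per_round_j):
        raise ValueError("per-round costs must be positive")
    residual = list(initial_energy_j)
    rounds = 0
    while all(e > 0 for e in residual):
        residual = [e - c for e, c in zip(residual, cost_per_round_j)]
        rounds += 1
    return rounds


# Three hypothetical nodes with 2 J each and different per-round costs.
lifetime = rounds_until_first_node_dies([2.0, 2.0, 2.0], [0.5, 0.25, 0.125])
```

In this example the most heavily loaded node determines the lifetime, which is why routing that balances load across nodes tends to extend it.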

Figure 10 presents the network lifetime comparison of HUG-RF, RF and SVM classifiers under different data generation patterns. In all three cases the proposed HUG-RF consistently achieves the longest network lifespan, maintaining stability even as the number of nodes increases. While RF shows moderate performance, SVM exhibits the shortest lifetime across all scenarios. These results highlight that HUG-RF effectively balances load and energy utilization, thereby prolonging network operation in diverse traffic conditions.

Figure 11 illustrates the energy performance of HUG-RF in comparison with RF and SVM. Figure 11a shows that HUG-RF maintains higher average residual energy across different network sizes, ensuring efficient resource preservation. Figure 11b demonstrates that HUG-RF consumes significantly less energy than RF and SVM, reflecting its optimization capability in reducing redundant transmissions and minimizing packet losses. Together, these results confirm that HUG-RF not only enhances network lifetime but also improves energy efficiency in IoT-enabled smart grid environments.

The time complexity of the proposed Updated Gazelle Optimization (UGO) is compared with that of other optimization algorithms, including Grey Wolf Optimization (GWO), Genetic Algorithm (GA), and Particle Swarm Optimization (PSO), in Fig. 12. The proposed UGO algorithm has lower time complexity than the other methods. The proposed SGMS thus enhances reliability in smart grid data transmission through a combination of advanced data processing and optimized path selection. By adopting the HUG-RF classifier, the system identifies the shortest and most reliable communication paths, reducing latency and minimizing the risk of data loss or congestion. Additionally, preprocessing techniques such as one-hot encoding and LDA enhance data integrity by ensuring compatibility and reducing dimensionality, thereby mitigating the risk of data inconsistencies. Security can be further reinforced by integrating encryption protocols and authentication mechanisms within the IoT framework to prevent unauthorized access and cyber threats.

From Table 5, it is evident that the proposed HUG-RF classifier achieves the lowest execution time (60 s) and memory usage (160 MB) compared to conventional SVM (95 s, 220 MB) and RF (80 s, 190 MB) models. These results highlight that HUG-RF not only improves classification accuracy but also enhances computational efficiency.
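Expressed as relative savings, the Table 5 figures quoted above correspond to execution-time reductions of about 36.8% against SVM and 25.0% against RF, and memory reductions of about 27.3% and 15.8%, respectively. The short sketch below reproduces that arithmetic; the helper name `percent_reduction` is an assumption.

```python
def percent_reduction(baseline, proposed):
    """Relative reduction of `proposed` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - proposed) / baseline


# (execution time in s, memory in MB) as quoted from Table 5.
baselines = {"SVM": (95, 220), "RF": (80, 190)}
hug_rf_time, hug_rf_mem = 60, 160

reductions = {
    name: (percent_reduction(t, hug_rf_time), percent_reduction(m, hug_rf_mem))
    for name, (t, m) in baselines.items()
}
for name, (time_red, mem_red) in reductions.items():
    print(f"vs {name}: time -{time_red:.1f}%, memory -{mem_red:.1f}%")
```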

Results in Table 6 highlight that HUG-RF consistently surpasses existing hybrid and baseline approaches, with its 96.8% accuracy, 99.96% PDR and reduced delay and energy usage.

Limitations and future research directions

IoT applications in smart grid systems face many technical challenges that need to be addressed in future research.

- While the proposed smart grid monitoring system performs well in simulations, its scalability in real-world environments with a significantly higher number of sensors and data points may pose challenges. The increased data volume could affect processing speed and energy efficiency.

- The performance of the HUG-RF classifier may be influenced by network conditions and sensor accuracy. Variability in these parameters can affect data quality and transmission efficiency, potentially leading to suboptimal decision-making.

- The system’s reliance on stable Wi-Fi connectivity through the ESP8266 NodeMCU may limit its effectiveness in regions with poor network infrastructure. Any interruptions in connectivity could hinder monitoring and data transmission.

- The integration of various techniques, such as one-hot encoding, LDA, and the HUG-RF classifier, adds complexity to the system’s design and implementation. This could pose challenges in troubleshooting and system maintenance, particularly for users without technical expertise.

- While the system aims to be energy-efficient, the energy consumption of the IoT devices, especially under high data generation rates, may lead to battery depletion, affecting the longevity of the monitoring system.

Future research will focus on deploying the proposed system in real-world smart grid environments to evaluate its performance under practical conditions. This will help identify and address scalability and connectivity issues. In addition, integrating more advanced algorithms could further improve the efficiency and adaptability of path selection in dynamic environments. As IoT devices are often vulnerable to cybersecurity threats, future work will also investigate robust security measures to protect the data, which is essential for maintaining the integrity and reliability of the energy infrastructure. Although the present study focuses on MATLAB-based simulations, the system parameters and runtime analysis are aligned with practical IoT-enabled smart grid conditions. Future work will involve FPGA- and microcontroller-based implementation to validate the proposed HUG-RF classifier in real-world environments.

Conclusion

In this paper, a smart grid monitoring system is presented, which uses sensors for gathering data from PV, wind, grid, and battery systems. The collected data is analysed and stored on an ESP8266 NodeMCU microcontroller, allowing for monitoring and evaluation. The monitored data is pre-processed using one-hot encoding and dimensionality reduction with LDA. To improve data transmission efficiency and reduce latency, a HUG-RF classifier is presented to identify the shortest path from the NodeMCU to an IoT webpage for data visualisation. The processed data is then delivered via the shortest path, increasing the system’s responsiveness and scalability. The proposed smart grid monitoring system has various advantages, including continuous monitoring of crucial parameters in the smart grid ecosystem, efficient data processing and transmission, and seamless interaction with IoT platforms for remote access and control. The simulation findings illustrate that the proposed approach provides dependable and low-latency data transmission, thereby aiding the transition to a more intelligent and sustainable energy infrastructure. In addition, the proposed HUG-RF classifier achieves superior performance in terms of throughput, delay, packet delivery ratio, routing overhead, and energy consumption, with values of 299.1 kbps, 4.0 ms, 99.96%, 2.3%, and 0.36%, respectively. Future work will concentrate on the practical implementation of the proposed concept in a real-time environment.

Data availability

Data sharing is not applicable.

Abbreviations

- \(\:c\): Number of classes

- \(\:\mu\:\): Average of all classes

- \(\:v\): Gazelle’s foraging velocity

- \(\:{R}_{b}\): Vector representing the position of the Brownian motion

- \(\:\psi\:\): Logistic factor

- \(\:\omega\:\): Logarithmic inertia factor

- \(\:\epsilon\:\): Randomized parameter connected to present iteration number

- \(\:k\): Index of Gaussian mutation

- \(\:{x}_{i}\): Location


Author information

Contributions

Authors’ contributions. Conceptualization: T. Saravana Kumar. Data curation: T. Saravana Kumar. Methodology: D. Kesavaraja, T. Saravana Kumar. Project administration: D. Kesavaraja. Supervision: D. Kesavaraja. Validation: D. Kesavaraja. Writing, original draft: T. Saravana Kumar. Writing, review and editing: D. Kesavaraja, T. Saravana Kumar.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Kumar, T.S., Kesavaraja, D. Improving efficiency in smart grid monitoring using hybrid classification and dimensionality reduction. Sci Rep 15, 42052 (2025). https://doi.org/10.1038/s41598-025-26009-w

DOI: https://doi.org/10.1038/s41598-025-26009-w