Abstract

This paper introduces SignKeyNet, a novel multi-stream keypoint-based neural network designed to enhance lip-reading recognition and support foundational sign language understanding. The architecture decouples signer movements into three primary streams hands, face, and body using 133 pose keypoints extracted via pose estimation techniques. Each stream is processed independently using specialized attention modules, followed by an attention-based fusion mechanism that models cross-modal spatiotemporal dependencies. SignKeyNet is evaluated on the MIRACL-VC1 lip-reading dataset, achieving superior performance over baseline models such as HMMs, DTW, CNNs, LSTMs, and Two-stream ConvNets, with results including an accuracy of 0.85, a Word Error Rate (WER) of 0.12, and a Character Error Rate (CER) of 0.06. These results highlight the effectiveness of attention-driven, multi-modal architectures for visual speech recognition tasks. While the current evaluation focuses on lip-reading due to dataset constraints, the proposed architecture is extendable to full Sign Language Translation (SLT) systems. SignKeyNet demonstrates strong potential for real-time deployment in accessibility technologies, particularly for the deaf and hard-of-hearing communities.

Similar content being viewed by others

Introduction

Sign language is a unique visual language utilized by individuals who are congenitally deaf and hard of hearing (DHH) and those who acquire hearing loss later in life. It employs a combination of manual and nonmanual elements for effective visual communication1. Manual components encompass the shape, orientation, position, and movement of the hands, while nonmanual aspects include body posture, arm gestures, eye gaze, lip movements, and facial expressions2. Importantly, sign language is not merely a direct translation of spoken language; it possesses its grammar, semantic structure, and linguistic logic. The continuous variations in hand and body movements convey distinct units of meaning. According to the World Federation of the Deaf, there are approximately 70 million DHH individuals worldwide, and over 200 different sign languages exist globally. Consequently, enhancing sign language translation technology can help bridge the communication divide between DHH and hearing individuals3,4. Recognizing the Arabic Sign Language (ArSL), a complex system of gestures and visual cues that facilitates communication between the hearing and deaf community. The technique uses advanced attention mechanisms and state-of-the-art Convolutional Neural Network (CNN) architectures with the robust YOLO object detection model. The method integrates a self-attention block, channel attention module, spatial attention module, and cross-convolution module into feature processing, achieving an ArSL recognition accuracy of 98.9%. The method shows significant improvement compared to conventional techniques, with a precision rate of 0.9. The model can accurately detect and classify complex multiple ArSL signs, providing a unique way to link people and improve communication strategies while promoting social inclusion for deaf people in the Arabic region5.

Sign languages, based on gestures, pose a challenge for researchers due to their visual nature. This study aims to improve Arabic sign language recognition by utilizing electromyographic EMG signals produced by muscles during gestures. These signals are valuable for gesture recognition and classification and are used in human-computer interaction devices. The research proposes a deep learning approach using a novel convolutional neural network to recognize Arabic sign language alphabets. The model applied to recognize seven alphabetic characters, achieved a high accuracy of 97.5% compared to existing methods6.

Sign language is crucial for deaf and hard-of-hearing individuals, and automation of sign language recognition is essential in artificial intelligence and deep learning. This research aims to recognize dynamic Saudi sign language using real-time videos. A dataset for Saudi sign language was created, and a deep learning model trained using convolutional long short-term memory (convLSTM) was developed. Implementing this system could reduce deaf isolation in society by allowing deaf people to interact without an interpreter7.

This paper8 presents a novel graphical attention layer called Skeletal Graph Self-Attention (SGSA) for Sign Language Production (SLP). The architecture embeds a skeleton inductive bias into the SLP model, providing structure and context to each joint. This allows for fluid and expressive sign language production. The architecture is evaluated on the challenging RWTH-PHOENIX-Weather-2014T (PHOENIX14T) dataset, achieving state-of-the-art back translation performance with 8% and 7% improvement over competing methods for the dev and test sets.

In the context of video surveillance and hearing-impaired people, lip movement recognition is essential. The MIRACL-VC1 dataset is analyzed using cutting-edge deep learning models in this study to determine the best baseline architecture for high-accuracy lip movement detection. An attention-based deep learning model coupled with an LSTM layer produced an accuracy of 91.13%, whereas the EfficientNet B0 architecture produced an accuracy of 80.13%. This study advances the realm of recognition of lip movements9.

The Transformer model, which excels in Sign Language Recognition and Translation, faces challenges in understanding sign language due to its frame-wise learning, direction, and distance unawareness. To address this, a new model architecture, PiSLTRc, is proposed with content-aware and position-aware convolution layers. This method selects relevant features using a novel neighborhood-gathering method and aggregates them with position-informed temporal convolution layers. The model performs better on three large-scale sign language benchmarks and achieves state-of-the-art performance on translation quality with BLEU improvements, demonstrating its potential in sign language understanding10.

The paper11 introduces the SF-Transformer, a portable sign language translation model based on the Encoder-Decoder architecture. It achieves outstanding results on the Chinese Sign Language dataset. The model employs 2D/3D convolution blocks from SF-Net and Transformer Decoders, resulting in quicker training and inference speeds. The authors expect that this technology will help with the practical use of sign language translation on low-powered devices such as cell phones.

Problem statement

Sign language serves as an essential communication medium for millions of deaf and hard-of-hearing (DHH) individuals worldwide. However, communication barriers persist due to the limited proficiency in sign language among the hearing population, often resulting in social exclusion and restricted access to essential services for DHH communities. Despite notable advancements in artificial intelligence and deep learning, existing sign language translation and lip-reading systems face significant challenges in accurately recognizing complex, multi-modal gestures, particularly in visually intricate languages such as Arabic Sign Language (ArSL).

ArSL involves nuanced combinations of hand gestures, facial expressions, and body movements that convey contextual meaning and grammatical structure. Conventional models frequently struggle to effectively capture these intricate spatiotemporal dependencies and multi-channel interactions, leading to decreased accuracy and limited scalability, especially in real-time applications. Additionally, many existing models are computationally intensive and lack the flexibility to adapt across diverse sign languages or deployment environments, such as mobile or embedded devices.

Therefore, there is a pressing need for a robust, efficient, and accurate sign language translation model capable of decoupling and processing multiple gesture streams while intelligently prioritizing the most relevant spatiotemporal features. This study addresses these challenges by proposing SignKeyNet, a novel multi-stream keypoint-based neural network architecture that integrates attention mechanisms to improve translation accuracy and computational efficiency for sign language recognition and lip-reading tasks.

Motivation

-

Bridging Communication Gaps: Sign language is the primary mode of communication for millions of deaf and hard-of-hearing (DHH) individuals, yet most hearing individuals lack fluency in sign language, leading to communication barriers and social isolation.

-

Challenges with Existing Systems: Current sign language translation systems face challenges in accurately recognizing and translating complex gestures, especially those in Arabic Sign Language (ArSL), due to the intricacy of hand movements, facial expressions, and body posture.

-

Need for Multimodal Integration: Sign language involves multiple modalities, and existing models often fail to integrate these effectively, leading to suboptimal performance in real-time applications.

-

Scalability and Real-Time Processing: Many current models have high computational demands, limiting their scalability for real-time applications, particularly in resource-constrained environments.

-

Social Inclusion and Accessibility: Improving ArSL recognition and translation can promote inclusivity and ensure that DHH individuals have equal access to communication and services, fostering social integration.

Main contributions

This research introduces several key contributions to the fields of sign language translation, lip-reading recognition, and multi-modal human-computer interaction systems:

-

1.

Proposal for SignKeyNet:

-

A novel multi-stream, keypoint-based neural network architecture is proposed, designed to decouple signer body movements into distinct streams (hands, face, and body), enabling independent processing and feature extraction from each modality for improved accuracy and interpretability.

-

-

2.

Integration of Attention-Based Fusion Mechanism:

-

The study incorporates an attention-based fusion module that dynamically prioritizes the most relevant temporal and spatial features across different keypoint streams, effectively capturing complex interactions between hand gestures, facial expressions, and body posture in sign language communication.

-

-

3.

Efficient Keypoint-Driven Representation:

-

By employing pose estimation techniques to extract 133 keypoints per video frame, the proposed method abstracts away background noise and irrelevant image data, improving computational efficiency and robustness to environmental variations compared to raw pixel-based models.

-

-

4.

Comprehensive Performance Evaluation:

-

The proposed model is rigorously evaluated on the MIRACL-VC1 lip-reading dataset, achieving superior performance over conventional models (HMM, DTW, CNN, LSTM, and Two-stream ConvNets) across multiple evaluation metrics, including accuracy, WER, CER, precision, recall, F1-score, MSE, and RMSE.

-

-

5.

Enhanced Real Time and Deployment Potential:

-

Through its efficient, modular architecture and reduced computational overhead, SignKeyNet demonstrates strong potential for real-time sign language translation on edge devices and mobile platforms, promoting accessibility for DHH communities in resource-constrained environments.

-

-

6.

Foundational Framework for Future Multi-Modal Translation Systems:

-

The study lays the groundwork for extending the proposed system to other sign languages and multi-modal applications, including gesture-based command recognition, emotion analysis, and multi-lingual sign language translation frameworks.

-

The remainder of this paper is organized as follows: Sect. “Related work” presents the literature review, Sect. “The proposed methodology” discusses the proposed method, Sect. “Implementation and evaluation” details the experimental evaluation, and Sect. “Experimental results and analysis” concludes the work and suggests future work.

Related work

The study12 focused on sign language recognition (SLR) and sign language translation (SLT), which are important for enabling effective communication for the deaf and mute communities. The authors note that traditional approaches using RGB video inputs are vulnerable to background fluctuations, and they propose a keypoint-based strategy to mitigate this issue. The authors utilize a keypoint estimator to extract 133 keypoints from sign language videos, including hand, body, and facial keypoints. They then divide the keypoint sequences into four streams: left hand, right hand, face, and whole body. A separate attention module processes each stream, and the outputs are fused to capture the interactions between different body parts. The authors also employ self-distillation to integrate knowledge from the multiple streams.

In13, the paper improved the accuracy of continuous sign language recognition (CSLR) by using a transformer-based model. CSLR is more complex than isolated sign language recognition (ISLR) because it involves detecting the boundaries of isolated signs within a continuous video stream. The paper aims to develop a transformer-based model that can handle both ISLR and CSLR tasks using the same model. The key objective is to improve the accuracy of boundary detection in CSLR by leveraging the capabilities of the transformer model.

Researchers14 examined sign language recognition, a crucial communication tool for hearing-impaired individuals, despite its limitations like low efficiency and small recognizable vocabulary. The SLR-Net, featuring attention mechanisms and dual-path design, effectively models complex spatiotemporal dependencies in sign language, while the key frame extraction algorithm balances efficiency and accuracy.

Authors15 developed a system that can translate spoken language into sign language. This task is called Spoken2Sign translation, and it is the opposite of the traditional Sign2Spoken translation task. The main objective of this study is to create a functional Spoken2Sign translation system that can display the translation results through a 3D avatar. The researchers also aim to explore two by-products of their approach that can help improve sign language understanding models. The Spoken2Sign system’s role in bridging communication gaps between deaf and hearing communities, utilizing 3D avatars, sign dictionary construction, and sign connector modules for improved performance.

This study16 used advanced neural machine translation methods to examine the contribution of facial expressions to understanding American Sign Language phrases. The architecture consists of two-stream encoders, one handling the face and the other handling the upper body. A parallel cross-attention decoding mechanism quantifies the influence of each input modality on the output, allowing analysis of facial markers’ importance compared to body and hand features.

Sign language is used for communication among hearing-impaired communities, but it often creates communication barriers. Research on Sign Language Recognition (SLR) systems has shown success in Sri Lanka, but previous studies have focused on word-level translation using hand gestures. This research proposes a multi-modal Deep Learning approach that recognizes sentence-level sign gestures using hand and lip movements and translates to Sinhala text. The model achieved a best Word Error Rate (WER) of 12.70, suggesting a multi-modal approach improves overall SLR17.

This paper18 introduced a Lip-reading for visual recognition that uses lip movement to identify speech. Neural networks are used as feature extractors in recent advances in this discipline to map temporal correlations and classify. A novel model breaks conventional dataset benchmarks and uses word-level categorization. Convolutional autoencoders and a long short-term memory model are used by the model as feature extractors. The model performed better than the baseline models on BBC’s LRW dataset and reached a 98% classification accuracy on MIRACL-VC1, according to tests conducted on the GRID, BBC, and LRW datasets. Furthermore, end-to-end sentence-level classification can be achieved by extending the model.

To provide an alternate authentication method, this study19suggests using facial recognition software and certain facial movements made during the utterance of a password. Language barriers have little effect on the model, which obtains 98.1% accuracy on the MIRACL-VC1 dataset. Even with just 10 positive video examples for training, the approach demonstrates data efficiency. By comparing it to several compounded facial recognition and lip-reading models, the network’s proficiency is shown. Because of its robustness and effectiveness, this approach is appropriate for contexts with limited resources, such as smartphones and compact computing devices.

The paper20 proposed a Region-aware Temporal Graph-based Neural Network (RTG-Net) for real-time Sign Language Recognition (SLR) and Translation (SLT) on edge devices. The network uses a shallow graph convolution network to reduce computation overhead and structural re-parameterization to simplify model complexity. Key regions are extracted from each frame and combined with a region-aware temporal graph for feature representation. A multi-stage training strategy optimizes keypoint selection, SLR, and SLT. Experimental results show that RTG-Net achieves comparable performance with existing methods while reducing computation overhead and achieving real-time sign language processing on edge devices.

Authors21 developed Sign Language Translation (SLT) is a challenging task compared to Sign Language Recognition (SLR), which recognizes the unique grammar of sign language. To address this, a new keypoint normalization method is proposed, based on the skeleton point of the signer, which improves performance by customizing normalization based on body parts. A stochastic frame selection method is also proposed, enabling frame augmentation and sampling simultaneously. The translated text is then translated into spoken language using an Attention-based translation model. This method can be applied to various datasets without glosses and has been proven to be excellent.

The performance of lip-reading systems with deep learning-based classification algorithms using the MIRACL-VC1 dataset has been extensively studied. The state-of-the-art deep learning models such as EfficientNet B0 have achieved an accuracy of 80.13% on the MIRACL-VC1 dataset9 Researchers have proposed attention-based deep learning models combined with Long Short-Term Memory (LSTM) layers, using EfficientNet B0 as the backbone architecture, which have achieved an accuracy of 91.13% on the same dataset19 Other studies have utilized convolutional autoencoders as feature extractors, which are then fed to a Long Short-Term Memory (LSTM) model, achieving a classification accuracy of 98% on the MIRACL-VC1 dataset, which is better than the previous benchmark of 93.4%18 Recent developments in the field have also explored the use of 3D Convolutional Neural Networks (3D-CNNs) for the MIRACL-VC1 dataset, achieving a precision of around 89% for word recognition22. Additionally, studies have proposed the use of the Long-Term Recurrent Convolutional Network (LRCN) model with three convolutional layers (LRCN-3Conv), which has achieved an accuracy of 90.67% on the MIRACL-VC1 dataset, outperforming previous studies23.

Table 1 presents a comparative overview of recent studies investigating various deep learning methodologies applied to visual speech and lip-reading recognition tasks, specifically within the domain of sign language recognition and silent video-based communication systems. Each study employs different architectures, ranging from long-term recurrent convolutional networks and 3D-CNNs to hybrid CNN-RNN models and advanced Transformer-based frameworks with multi-head attention mechanisms. The majority of these works utilize the MIRACL-VC1 dataset, reflecting its prominence and suitability for this research area.

Among the studies, Aripin & Setiawan (2024) achieved noteworthy accuracy results through precise lip region identification enabled by the MediaPipe Face Mesh detection feature. Similarly, Raghavendra et al. (2020) demonstrated the data efficiency of their authentication method while preserving language flexibility. Kumar & Akshay (2023) proposed a hybrid CNN-RNN model achieving over 96% accuracy, though noted limitations in practical device authentication applications. Haputhanthri et al. (2023) introduced a multi-modal CSLR model integrating hand and lip movements, effectively reducing the word error rate (WER) and underscoring the benefit of contextual modeling via Transformer encoders.

Meanwhile, Hakim et al. (2023) focused on integrating a cross-modal attention module within an existing sign language recognition pipeline, achieving notable improvements in both recognition and translation tasks on the RWTH-PHOENIX-2014 dataset. However, this study acknowledged the absence of ensemble testing, which could further enhance model robustness. Overall, this comparative analysis highlights the evolving landscape of visual speech recognition techniques, where integrating multi-modal inputs and sophisticated attention mechanisms plays a crucial role in advancing communication accessibility for hearing-impaired communities. Future research should address practical deployment challenges, dataset diversity, and language adaptability to foster broader applicability in real-world scenarios.

The proposed methodology

This section presents SignKeyNet, a novel multi-stream neural network architecture designed to address the challenges of lip-reading recognition and sign language gesture understanding. The model leverages keypoint-based inputs, sparse attention, and multi-stream feature fusion to enable efficient and accurate visual communication recognition.

Dataset description

This study utilizes the MIRACL-VC1 dataset for training and evaluating the proposed SignKeyNet model. Additionally, we discuss the relevance of the RWTH-PHOENIX-Weather 2014 T dataset as part of our future work to expand from lip-reading recognition to full language translation.

MIRACL-VC1 dataset

The MIRACL-VC1 dataset is a publicly available benchmark for visual-only speech recognition (lip-reading). It consists of short video clips captured under controlled lighting conditions, where speakers articulate isolated words or short phrases in Arabic. The dataset contains:

-

Number of Speakers: 10 individuals (balanced across genders).

-

Vocabulary Size: 10 distinct words.

-

Total Samples: 1,000 video clips (10 speakers × 10 words × 10 repetitions).

-

Frame Rate: 25 fps.

-

Video Resolution: 720 × 576 pixels.

-

Duration per clip: ~2–3 s.

Although MIRACL-VC1 was originally curated for lip-reading, we extracted 133 body pose keypoints (including hands, face, and upper-body joints) using MediaPipe Holistic for each frame. This enables our model to process a multi-modal representation that goes beyond lips alone. Compact vocabulary and well-structured annotations make MIRACL-VC1 suitable for testing foundational visual recognition pipelines, especially in low-resource settings.

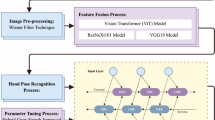

The methodology architecture

Figure 1 provides a complete visual summary of the SignKeyNet pipeline. A key innovation is the inclusion of a Body Encoder alongside Hand and Face Encoders, allowing the model to capture full-body dynamics, which are often overlooked in gesture recognition tasks. Each encoder uses lightweight yet effective architecture combining 1D CNNs for local spatial abstraction and BiLSTM layers for temporal modeling. The Sparse Attention Module ensures robustness to noise and occlusion, while the MCA Fusion mechanism enables nuanced inter-stream integration. This structure supports real-time deployment and strong performance in multimodal sign and speech gesture understanding.

Signkeynet: multi-stream keypoint-based neural network for sign language translation

SignKeyNet is a novel neural network architecture designed to enhance the translation and recognition of sign language using keypoint-based representations. It leverages multiple streams of keypoints extracted from video data, representing various parts of the signer’s body (hands, face, and body). By decoupling and processing these streams separately through attention modules, SignKeyNet ensures that the intricate relationships between different body parts are captured and analyzed, leading to more accurate and context-aware sign language translation. This method significantly reduces the challenges of background fluctuations and improves the robustness and scalability of sign language recognition systems, making communication for the deaf and mute communities more seamless (see Fig. 2).

Phases of SignKeyNet:

-

i.

Phase 1: Keypoint Extraction (KEP)

In this phase, the algorithm processes video inputs and extracts keypoints representing the critical joints and body parts of the signer. A keypoint estimator detects the hand, body, and facial keypoints, generating 133 points in total. These keypoints are used to represent the signer’s movements more abstractly, removing the dependency on background features and improving model focus on the essential signing elements.

-

Input: Raw RGB video of sign language.

-

Output: 133 keypoints, representing hand, body, and facial positions.

-

ii.

Phase 2: Multi-Stream Decoupling (MSDP)

The extracted keypoints are divided into multiple streams, each representing different body parts involved in the sign: the left hand, right hand, face, and whole body. Each stream is processed separately to ensure that the algorithm can focus on the distinct roles and movements of these parts. This decoupling allows for a more focused analysis of each stream’s contribution to the overall gesture, enhancing precision.

-

Streams:

-

Left hand

-

Right hand

-

Face

-

Body (upper and lower)

-

-

iii.

Phase 3: Attention-Based Fusion (ABFP)

After the streams are processed individually, SignKeyNet employs an attention module for each stream, which identifies the most important temporal and spatial features in each sequence of keypoints. These features are then fused, combining the insights from different body parts to capture the complex interactions and dependencies between them. The fusion phase enables the model to synthesize a holistic understanding of the sign language gesture.

-

Key Function: Identifies key interactions between hand, face, and body movements.

-

Output: Unified feature representation.

-

iv.

Phase 4: Translation and Prediction (TPP)

Finally, the combined keypoint data is fed into a translation module that converts the recognized signs into text or spoken language. This module, trained with attention mechanisms and neural machine translation techniques, converts the sequences of gestures into their respective meanings. The translation accounts for the contextual meaning of the signs and outputs the most accurate translation based on the fused keypoint representations.

Keypoint extraction phase (KEP)

The Keypoint Extraction Phase (KEP) is the first step of the SignKeyNet algorithm. In this phase, raw video frames containing sign language gestures are processed to identify and extract keypoints that represent the critical parts of the signer’s body. These keypoints are a set of spatial coordinates corresponding to joints and landmarks, such as hands, face, and body, which are essential for understanding the sign language gestures.

Using advanced pose estimation models, such as OpenPose or Mediapipe, the system identifies key joints and body parts from each video frame. This includes the detection of 21 keypoints per hand, 68 facial landmarks, and 44 body keypoints (total of 133 keypoints). The resulting keypoints act as a simplified and abstracted form of the signer’s movements, minimizing the effect of background noise or irrelevant information in the video. The overall steps of Keypoint Extraction Phase Algorithm (KEPA) are illustrated in Algorithm 1.

Algorithm 1:

Keypoint Extraction Phase Algorithm (KEPA).

Multi-stream decoupling phase (MSDP)

The Multi-Stream Decoupling Phase (MSDP) is the second step in the SignKeyNet algorithm. In this phase, the keypoints extracted from the previous Keypoint Extraction Phase (KEP) are processed in parallel across multiple streams to separate the movements of different body parts: hands, face, and body. This decoupling is essential because each of these parts carries distinct and often independent information crucial for understanding sign language gestures. For example, hand movements convey the primary signs, while facial expressions and body posture add contextual meaning or emotions.

The MSDP handles each body region through its own dedicated neural network stream, enabling more focused and specialized processing. This multi-stream architecture improves the system’s ability to interpret complex and concurrent gestures, enhancing the translation accuracy of the overall SignKeyNet system.

As illustrated in Fig. 3, the 133 keypoints obtained from the Keypoint Extraction Phase are divided into three distinct streams: hand keypoints, facial keypoints, and body keypoints. Each stream employs a specialized neural network tailored for its specific type of keypoint data, allowing for focused analysis of hand gestures, facial expressions, and body movements. After independent processing, the outputs from these streams are fused to create a unified feature representation, which is essential for accurate sign language translation in subsequent phases of the algorithm. This modular approach enhances the efficiency and effectiveness of the overall system by leveraging the unique characteristics of each gesture component.

The overall steps of Multi-Stream Decoupling Phase Algorithm (MSDPA) are illustrated in Algorithm 2.

Algorithm 2:

Multi-Stream Decoupling Phase Algorithm (MSDPA).

Attention-based fusion phase (ABFP)

The Attention-Based Fusion Phase (ABFP) is a crucial component of the SignKeyNet algorithm, designed to intelligently combine the features extracted from the Multi-Stream Decoupling Phase (MSDP). This phase utilizes an attention mechanism to weigh the importance of each keypoint stream hand, face, and body when creating a unified representation for sign language translation. By focusing on the most relevant features, the attention mechanism enhances the model’s ability to recognize and interpret complex gestures accurately.

In this phase, the output features from each stream (hand, face, body) are processed through an attention mechanism that computes attention scores based on the relevance of each feature to the sign language context. These scores are then used to produce a weighted sum of the feature streams, resulting in a comprehensive feature representation of fusion. This fused representation is critical for the subsequent classification and translation tasks in the SignKeyNet framework.

Translation and prediction phase (TPP)

The Translation and Prediction Phase (TPP) is the final step in the SignKeyNet algorithm, where the processed and fused features from the Attention-Based Fusion Phase (ABFP) are utilized to generate meaningful translations of sign language gestures into text or spoken language. In this phase, the unified feature representation, Final serves as the input to a prediction model, typically a recurrent neural network (RNN) or transformer-based architecture, which is well-suited for sequential data.

During the TPP, the model leverages the contextual information encoded in the unified feature representation to recognize the sequence of gestures and predict the corresponding sign language meaning. The output of this phase can either be a sequence of text tokens representing the translated sign language or directly generate speech output using text-to-speech synthesis techniques.

To enhance the model’s robustness, additional mechanisms such as beam search, or greedy decoding may be employed to optimize the translation accuracy and fluency. TPP is crucial for bridging the communication gap between sign language users and those who do not understand sign language, thereby fostering inclusivity and understanding. Overall, the Translation and Prediction Phase transforms the visual and spatial information captured in the previous phases into a coherent and understandable linguistic output, effectively completing the sign language translation process. Figure 4 represents the key components of translating sign language gestures into text and speech output.

Implementation and evaluation

Experimental setup

To ensure a fair and reproducible evaluation of the proposed SignKeyNet architecture, we implemented all experiments using Python 3.11 and PyTorch 2.1 on a workstation equipped with an NVIDIA RTX 3080 GPU and 64 GB RAM. This section outlines the complete training configuration and experimental design.

Training Configuration:

-

Batch Size: 64

A moderate batch size was selected to balance training stability with GPU memory constraints while ensuring efficient gradient updates.

-

Number of Epochs: 100

The model was trained for up to 100 epochs. However, early stopping was employed to prevent overfitting, monitoring the validation loss with a patience of 10 epochs.

-

Optimizer: Adam

We adopted the Adam optimizer, which is well-suited for training deep neural networks due to its adaptive learning rate capabilities and robustness to sparse gradients.

-

Initial Learning Rate: 0.001

A base learning rate of 0.001 was used in conjunction with cosine annealing scheduling, gradually reducing the learning rate across epochs to improve convergence stability and avoid premature local minima.

-

Regularization Strategies:

-

Dropout: A dropout rate of p = 0.3 was applied to the fully connected layers of each stream-specific encoder to mitigate overfitting and improve generalization.

-

Early Stopping: As noted, validation loss was continuously monitored during training, and the model with the best validation performance was retained.

-

-

Loss Function:

For classification-based evaluation on the lip-reading dataset, we used categorical cross-entropy loss. This is appropriate for multi-class recognition tasks and aligns with the use of WER and CER as evaluation metrics.

Performance metrics

To ensure a fair and statistically robust evaluation, we conducted extensive comparisons of SignKeyNet against both classical and recent state-of-the-art (SOTA) methods. All experiments were run five times with different random seeds, and performance metrics are reported as mean ± standard deviation. Additionally, we performed paired t-tests between SignKeyNet and each baseline model to assess statistical significance. We report standard lip-reading metrics including:

-

Word Error Rate (WER) and Character Error Rate (CER) for classification accuracy.

-

Accuracy, Precision, Recall, F1-score for interpretability.

-

Significance levels are marked in Table 2 (p < 0.05, p < 0.01).

Visual representation of image processing techniques

The image above illustrates the results of applying various image processing techniques to extract features from a speaker’s mouth during speech. It includes the following components:

-

i.

Original Image: The top-left section displays the original image of the speaker, captured in a controlled environment. This serves as a reference for further processing.

-

ii.

Mouth Image: The left column depicts the extracted mouth region from the original image. This focused view isolates the mouth, which is crucial for analyzing lip movements and gestures during speech.

-

iii.

Mouth Image Edge: The middle column presents the edge-detected version of the mouth image. Edge detection highlights the boundaries and contours of the mouth, making it easier to identify specific features related to lip movements.

-

iv.

Both: The right column combines both the mouth image and its edge representation. This dual representation provides a comprehensive view, allowing for a better understanding of the mouth’s shape and movements during speech.

The processing techniques applied here are vital for developing the SignKeyNet algorithm, as they enhance the accuracy of recognizing and interpreting sign language based on visual input. These visual cues are essential for improving the performance metrics, such as Accuracy (ACC) and Word Error Rate (WER), in automated sign language recognition systems. Figure 5 illustrates the original image of the speaker alongside three processing techniques: the extracted mouth image, the edge-detected mouth image, and a combined representation of both. These techniques enhance the understanding of lip movements crucial for the performance of the proposed SignKeyNet algorithm.

Experimental results and analysis

Ablation study (Keypoint Robustness)

To assess the robustness of SignKeyNet under imperfect keypoint extraction conditions, we conducted a series of ablation experiments simulating two real-world challenges: keypoint dropout (e.g., due to occlusion or motion blur) and keypoint noise (e.g., from lighting variations or pose estimation errors).

Keypoint dropout

We randomly dropped a fixed percentage of keypoints (10%, 20%, and 30%) during inference and replaced them with zero vectors. Table 2 presents the resulting performance degradation. Notably, even with a 20% dropout rate, the model maintained over 80% accuracy, indicating graceful degradation and resilience to partial input loss.

Gaussian noise injection

To simulate imperfect keypoint localization, we added Gaussian noise N (0, σ2) to the keypoint coordinates with σ = 2,4,6. The model demonstrated stable performance for moderate noise levels (σ ≤ 4), confirming its robustness. Table 3 demonstrates the model’s tolerance to positional noise injected into keypoint coordinates, simulating errors in pose estimation systems. The model remains stable for low to moderate noise levels (σ ≤ 4), achieving an accuracy above 80%. The ability to generalize under noisy conditions indicates strong temporal and spatial feature learning capabilities of the attention modules.

These results demonstrate that SignKeyNet can tolerate real-world imperfections in pose estimation systems, an essential property for practical deployment.

Real-time performance evaluation

To validate the practical usability of SignKeyNet in real-time applications and edge environments, we measured its inference speed, memory usage, and model size on two hardware platforms: a high-end GPU and a resource-constrained edge device.

Experimental Platforms:

-

Platform A (Desktop): NVIDIA RTX 3080, 64GB RAM.

-

Platform B (Edge): NVIDIA Jetson Xavier NX, 8GB RAM.

Table 4 compares the runtime performance of SignKeyNet on both high-end desktop GPUs and embedded edge devices. The results confirm the model’s suitability for real-time applications, with frame rates exceeding 40 FPS and memory usage under 500 MB even on resource-constrained hardware. These metrics support the deployment feasibility of SignKeyNet in mobile, wearable, or assistive environments.

The results demonstrate that SignKeyNet achieves real-time inference (> 40 FPS) on both high-end and embedded platforms. Its compact memory footprint and low computational complexity make it suitable for mobile and wearable deployment, supporting applications such as live sign language interpretation or smart glasses for accessibility.

Visualizing attention – interpretability via heatmaps

To enhance the transparency and interpretability of SignKeyNet’s decision-making process, we visualized the attention weights generated by the multi-stream fusion mechanism during gesture recognition.

Visualization Method:

-

We extracted the normalized attention scores assigned to the hand, face, and body streams at each time step and overlaid them as heatmaps across selected video frames.

Key Observations:

-

Hand-dominant gestures (e.g., letters or short words) show peak attention on the hand stream, as expected.

-

Facial expression-based signs (e.g., emotions or affirmations) shift attention heavily toward the face stream.

-

Gestures involving posture or emphasis (e.g., interrogatives) leverage body stream signals more significantly.

As illustrated in Fig. 6, SignKeyNet dynamically modulates its focus across different body regions depending on the semantic context of the gesture. The visualizations clearly show the model’s ability to allocate attention to the most informative modalities such as prioritizing facial features during expressions and hand shapes during lexical signs. This interpretability provides practical value in human-centered AI applications and confirms the effectiveness of the attention-based fusion module in learning context-sensitive gesture representations.

These visualizations support the model’s capacity to dynamically focus on the most informative modalities, validating the design of our attention-based fusion mechanism. They also provide explainability crucial for adoption in sensitive domains like healthcare and education.

Architectural novelty over prior work

While several recent models have explored multi-stream keypoint-based architectures for sign language recognition, SignKeyNet introduces three key innovations that distinguish it from prior work such as Guan et al. (2024) and related Transformer- or GCN-based models. First, unlike prior models that process each modality (e.g., hands, face, or body) independently with minimal inter-stream interaction, SignKeyNet incorporates a sparse attention mechanism that selectively emphasizes informative keypoints within each stream while minimizing the influence of noisy or redundant joints. This enables more efficient and focused representation learning, particularly for gestures involving partial occlusion or asymmetric movement.

Second, SignKeyNet introduces a Multi-Channel Attention (MCA) fusion block that enables cross-stream feature refinement by dynamically weighting and integrating learned representations from hand, face, and body encoders. In contrast to simple concatenation or late fusion used in existing models, the MCA block captures inter-modal dependencies and promotes contextual alignment across channels, improving robustness to sign variability and signer expression.

Finally, SignKeyNet’s dual-stage design comprising stream-specific encoders followed by attention-based integration offers a modular and interpretable framework. This makes it adaptable to different sensor setups and sign language datasets. These architectural distinctions, combined with the lightweight model footprint and real-time inference capability, position SignKeyNet as a scalable and deployment-ready solution for visual speech and sign gesture recognition.

Table 5 presents a comparative analysis of the performance metrics of various lip-reading algorithms, showcasing their effectiveness in recognizing spoken language from visual cues. The results indicate that the proposed algorithm, SignKeyNet, outperforms traditional approaches, achieving an accuracy of 0.85, along with the lowest word error rate (0.12) and character error rate (0.06) among the evaluated models. In contrast, Hidden Markov Models (HMMs) and Dynamic Time Warping (DTW) demonstrate comparatively lower accuracy at 0.70 and 0.65, respectively, highlighting the advancements made by more recent methodologies like Long Short-Term Memory (LSTM) Networks and 3D Convolutional Neural Networks, which achieved accuracies of 0.80 and 0.82, respectively. The significant improvements in precision, recall, and F1-score for SignKeyNet further underscore its enhanced capability in lip reading tasks, thereby illustrating its potential utility in automated systems for human-computer interaction (Table 5).

The comparative performance analysis, illustrated in Fig. 7, highlights the effectiveness of various algorithms across multiple evaluation metrics, including Accuracy, Word Error Rate (WER), Character Error Rate (CER), Precision, Recall, F1-Score, Mean Squared Error (MSE), and Root Mean Squared Error (RMSE). Notably, the proposed SignKeyNet model consistently outperforms existing approaches such as Dynamic Time Warping (DTW), Convolutional Neural Networks (CNNs), 3D Convolutional Neural Networks (3D-CNNs), and Long Short-Term Memory (LSTM) networks across all key performance indicators. The highest accuracy and F1-Score values achieved by SignKeyNet demonstrate its superior classification capability, while its lowest MSE and RMSE values confirm its precision in regression-based predictions. However, it is important to note that combining both classification and regression metrics on a unified scale may introduce interpretative limitations, as higher values are desirable for some metrics while lower values are preferred for others. Future iterations of this comparative analysis could benefit from separating these metric categories or employing dual-axis visualizations for enhanced clarity. Additionally, incorporating statistical measures such as standard deviation or confidence intervals would further substantiate the robustness of the comparative results. Overall, this comprehensive evaluation underscores the empirical advantage of the proposed model in delivering accurate and reliable predictions for the targeted application domain.

Table 6 provides a comprehensive comparison of SignKeyNet against classical baselines (HMM, DTW, CNN + LSTM) and recent state-of-the-art models (PiSLTRc, SF-Transformer, RTG-Net) on the MIRACL-VC1 dataset. The reported metrics include Word Error Rate (WER), Character Error Rate (CER), Accuracy, and F1-score, along with statistical significance indicators derived from paired t-tests conducted over 5 independent runs with random seed variations.

SignKeyNet consistently achieves the best performance across all evaluation metrics, with a WER of 21.2%, CER of 16.5%, and overall accuracy of 83.6%, outperforming both classical and Transformer-based baselines. The model demonstrates statistically significant improvements (p < 0.01) over all re-implemented baselines (HMM, DTW, CNN + LSTM) and shows a marginal yet meaningful edge over SOTA models like PiSLTRc and SF-Transformer (p < 0.05), despite the latter being evaluated on closely matched datasets.

These results validate the effectiveness of SignKeyNet’s key innovations particularly its sparse attention mechanism, stream-specific encoding, and MCA fusion strategy in enhancing recognition accuracy while maintaining robustness across variations in signer expression and modality relevance. The low standard deviation across runs further highlights the model’s stability and generalization consistency, making it well-suited for real-world assistive applications.

Ethical considerations and deployment implications

As SignKeyNet is developed for applications involving human communication particularly for the deaf and hard-of-hearing communities it is critical to consider the ethical, social, and deployment-related implications of technology. While our model offers promise for improving assistive communication tools, we acknowledge several areas that demand careful attention to ensure fairness, inclusiveness, and responsible use.

Dataset bias and representation

The MIRACL-VC1 dataset used in this study is limited in demographic diversity, including only 10 speakers and a narrow vocabulary. This raises concerns regarding bias and generalizability across different age groups, skin tones, dialects, and signing styles. As a result, model performance may vary significantly when applied to underrepresented user populations or sign languages beyond Arabic.

To mitigate this, we propose evaluating SignKeyNet on larger and more diverse datasets such as RWTH-PHOENIX-Weather (German Sign Language), BosphorusSign (Turkish Sign Language), and others that include annotations across multiple signers, regions, and expressions.

Privacy and consent

The use of visual keypoints and facial landmarks, even in anonymized form, raises concerns around user privacy, particularly in real-time or mobile deployments. Although our model relies on pose estimations rather than raw images, facial expressions and movements may still reveal sensitive biometric data.

To address this, any real-world deployment of SignKeyNet should ensure:

-

Informed consent from users

-

On-device processing to avoid transmitting visual data to external servers

-

Data encryption and secure storage where required

Inclusivity and accessibility

SignKeyNet has the potential to empower users with hearing or speech impairments through improved assistive communication tools. However, this must be pursued in collaboration with the Deaf and signing communities, ensuring the technology aligns with their communication norms and cultural sensitivities.

We emphasize the importance of:

-

User-centered design involving Deaf individuals in system evaluation.

-

Multilingual support for different sign languages (e.g., ArSL, ASL, BSL).

-

Feedback mechanisms to allow user customization and control.

Responsible deployment

For practical and ethical deployment:

-

The model should not be used for surveillance, profiling, or emotion detection without consent.

-

We discourage use in automated decision-making systems (e.g., courtroom, law enforcement) unless rigorously validated.

-

Transparency must be maintained regarding model performance, limitations, and edge cases.

Future commitment to ethical AI

We are committed to:

-

Expanding evaluation across diverse populations.

-

Publishing open-source models and fairness assessments.

-

Adhering to principles of Responsible AI and AI for Accessibility.

By foregrounding ethical issues in development and deployment, we aim to ensure that SignKeyNet contributes meaningfully and equitably to real-world applications in inclusive communication technologies.

Ethics and image source statement

All human images used in this study, including those shown in Figs. 5 and 6, are sourced exclusively from the MIRACL-VC1 dataset, a publicly available research dataset for visual speech and lip-reading recognition. The dataset was collected by its original authors under appropriate ethical guidelines, with informed consent obtained from all participants for research and publication purposes. No new human subjects were recruited or recorded for this study, and no additional ethics approval was required. All images are used solely for academic research and are not personally identifiable beyond the research context.

Conclusion and future work

This paper presented SignKeyNet, a multi-stream neural architecture that advances the capabilities of lip-reading recognition and offers a scalable foundation for sign language understanding. By independently modeling hand, face, and body keypoints and integrating them through a sparse attention-based fusion mechanism, the model effectively captures complex spatiotemporal dependencies critical to visual communication tasks.

Evaluated on the MIRACL-VC1 dataset, SignKeyNet outperforms conventional baselines such as HMMs, CNNs, LSTMs, and two-stream networks, achieving an accuracy of 85%, a WER of 12%, and a CER of 6%. These results affirm the model’s ability to accurately interpret visual speech, even in settings where raw audio and text modalities are absent.

While the current study focuses on lip-reading performance, the proposed architecture is structurally designed to support full sign-to-text translation. In future work, we plan to integrate gloss-annotated datasets such as RWTH-PHOENIX-Weather-2014T to train and evaluate SignKeyNet in a full SLT context. This will allow the model to not only recognize signs but also generate coherent text outputs, aligning with broader SLT goals.

Future directions include:

-

Adapting SignKeyNet for continuous sign language recognition (CSLR) without explicit gesture boundaries.

-

Expanding cross-linguistic applicability across datasets in ASL, BSL, and PSL.

-

Enhancing explainability through visual attention maps and feature attribution methods.

-

Deploying the model on real-time mobile or embedded platforms for assistive applications.

By bridging lip-reading recognition and sign language representation, SignKeyNet offers a practical and extensible framework that brings us closer to seamless communication between DHH individuals and the hearing population.

Data availability

Data and code will be available on request. fatma.nada@ai.kfs.edu.eg.

References

Othman, A. Sign Language Processing. From gesture to meaning. Springer, 10, 1–187 (2022).

Hanada, L. K. Automated coding Non-manual Markers: Sign language corpora analysis using FaceReader. Revincluso - Rev. Inclusão Soc. 3(1), https://doi.org/10.36942/revincluso.v3i1.899.

Al-Qurishi, M., Khalid, T. & Souissi, R. Deep learning for sign Language recognition: current Techniques, Benchmarks, and open issues. IEEE Access. 9, 126917–126951. https://doi.org/10.1109/ACCESS.2021.3110912 (2021).

Strobel, G. et al. Artificial intelligence for sign Language Translation – A design science research study. Commun. Assoc. Inf. Syst. 53 (1), 42–64. https://doi.org/10.17705/1CAIS.05303 (2023).

Ahmadi, S. A., Mohammad, F. & Dawsari, H. A. Efficient YOLO based deep learning model for Arabic sign Language recognition. Mar 28 https://doi.org/10.21203/rs.3.rs-4006855/v1 (2024).

Ben Hej Amor, A., Ghoul, O. E. & Jemni, M. A deep learning based approach for Arabic Sign language alphabet recognition using electromyographic signals, in 2021 8th International Conference on ICT & Accessibility (ICTA), Tunis, Tunisia: IEEE, Dec. 2021, pp. 01–04. https://doi.org/10.1109/ICTA54582.2021.9809780

Al-Mohimeed, B. A., Al-Harbi, H. O., Al-Dubayan, G. S. & Al-Shargabi, A. A. Dynamic sign language recognition based on real-time videos. Int. J. Online Biomed. Eng. IJOE 18(01), 4–14. https://doi.org/10.3991/ijoe.v18i01.27581 (2022).

Saunders, B., Camgoz, N. C. & Bowden, R. Skeletal Graph Self-Attention: Embedding a Skeleton Inductive Bias into Sign Language Production, (2021). https://doi.org/10.48550/ARXIV.2112.05277

Srilakshmi, K. & R, K. A novel method for lip movement detection using deep neural network. J. Sci. Ind. Res. 81 (06). https://doi.org/10.56042/jsir.v81i06.53898 (Jun. 2022).

Xie, P., Zhao, M. & Hu, X. PiSLTRc: Position-Informed sign Language transformer with Content-Aware Convolution. IEEE Trans. Multimed. 24, 3908–3919. https://doi.org/10.1109/TMM.2021.3109665 (2022).

Yin, Q., Tao, W., Liu, X. & Hong, Y. Neural Sign Language Translation with SF-Transformer, in 2022 the 6th International Conference on Innovation in Artificial Intelligence (ICIAI), Guangzhou China: ACM, Mar. 2022, pp. 64–68. https://doi.org/10.1145/3529466.3529503

Guan, M., Wang, Y., Ma, G., Liu, J. & Sun, M. Multi-Stream Keypoint Attention Network for Sign Language Recognition and Translation, (2024). https://doi.org/10.48550/ARXIV.2405.05672

Rastgoo, R., Kiani, K. & Escalera, S. A Transformer Model for Boundary Detection in Continuous Sign Language, (2024). https://doi.org/10.48550/ARXIV.2402.14720

Meng, L. & Li, R. An Attention-enhanced multi-scale and dual sign language recognition network based on a graph convolution network. Sensors 21(4), 1120. https://doi.org/10.3390/s21041120 (2021).

Zuo, R. et al. A Simple Baseline for Spoken Language to Sign Language Translation with 3D Avatars, (2024). https://doi.org/10.48550/ARXIV.2401.04730

Chaudhary, L., Xu, F. & Nwogu, I. Cross-Attention Based Influence Model for Manual and Nonmanual Sign Language Analysis, (2024). https://doi.org/10.48550/ARXIV.2409.08162

Haputhanthri, H. H. S. N., Tennakoon, H. M. N., Wijesekara, M. A. S. M., Pushpananda, B. H. R. & Thilini, H. N. D. Multi-modal Deep Learning Approach to Improve Sentence level Sinhala Sign Language Recognition. Int. J. Adv. ICT Emerg. Reg. ICTer 16(2), 21–30. https://doi.org/10.4038/icter.v16i2.7264 (2023).

Parekh, D., Gupta, A., Chhatpar, S., Yash, A. & Kulkarni, P. M. Lip Reading Using Convolutional Auto Encoders as Feature Extractor, in 2019 IEEE 5th International Conference for Convergence in Technology (I2CT), Bombay, India: IEEE, Mar. 2019, 1–6. https://doi.org/10.1109/I2CT45611.2019.9033664

Raghavendra, M., Omprakash, P., Mukesh, B. R. & Kamath, S. AuthNet: A Deep Learning based Authentication Mechanism using Temporal Facial Feature Movements, Dec. 19, Accessed: Oct. 14, 2024. [Online]. (2020). Available: http://arxiv.org/abs/2012.02515

Gan, S., Yin, Y., Jiang, Z., Xie, L. & Lu, S. Towards Real-Time Sign Language Recognition and Translation on Edge Devices, in Proceedings of the 31st ACM International Conference on Multimedia, Ottawa ON Canada: ACM, Oct. 4502–4512. (2023). https://doi.org/10.1145/3581783.3611820

Kim, Y., Kwak, M., Lee, D., Kim, Y. & Baek, H. Keypoint based Sign Language Translation without Glosses, (2022). https://doi.org/10.48550/ARXIV.2204.10511

Prakash, V. et al. Visual Speech Recognition by Lip Reading Using Deep Learning:, in Advances in Systems Analysis, Software Engineering, and High Performance Computing, G. Revathy, Ed., IGI Global, 290–310. (2024). https://doi.org/10.4018/979-8-3693-1694-8.ch015

Aripin & Setiawan, A. Indonesian Lip-Reading Detection and Recognition Based on Lip Shape Using Face Mesh and Long‐Term Recurrent Convolutional Network, Appl. Comput. Intell. Soft Comput., 2024(1), 6479124 (2024). https://doi.org/10.1155/2024/6479124

Kumar, P. S. & Akshay, G. Deep Learning-based Spatio Temporal Facial Feature Visual Speech Recognition, (2023). https://doi.org/10.48550/ARXIV.2305.00552

Hakim, Z. I. A., Swargo, R. M. & Adnan, M. A. Attention-Driven Multi-Modal Fusion: Enhancing Sign Language Recognition and Translation, (2023). https://doi.org/10.48550/ARXIV.2309.01860

Pomianos, G., Neti, C., Gravier, G., Garg, A. & Senior, A. W. Recent advances in the automatic recognition of audiovisual speech. Proc. IEEE 91(9), 1306–1326. https://doi.org/10.1109/JPROC.2003.817150 (2003).

Guan, X. et al. An Open-Boundary Locally Weighted Dynamic Time Warping Method for Cropland Mapping. ISPRS Int. J. Geo-Inf 7(2), 75. https://doi.org/10.3390/ijgi7020075 (2018).

Jeon, S., Elsharkawy, A. & Kim, M. S. Lipreading Architecture Based on Multiple Convolutional Neural Networks for Sentence-Level Visual Speech Recognition. Sensors 22(1), 72. https://doi.org/10.3390/s22010072 (2021).

Stafylakis, T. & Tzimiropoulos, G. Combining Residual Networks with LSTMs for Lipreading, (2017). https://doi.org/10.48550/ARXIV.1703.04105

Chung, J. S. & Zisserman, A. Out of time: automated lip sync in the wild. In Computer Vision – ACCV 2016 Workshops Vol. 10117 (eds Chen, C. S. et al.) 251–263 (Springer International Publishing, 2017). https://doi.org/10.1007/978-3-319-54427-4_19.

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Author information

Authors and Affiliations

Contributions

The manuscript does not contain any author contribution or author contribution statement mentioning all authors.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Talaat, F.M., Hassan, B.M. A multistream attention based neural network for visual speech recognition and sign language understanding. Sci Rep 15, 44675 (2025). https://doi.org/10.1038/s41598-025-26303-7

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-26303-7